1. Introduction

Estimating the probability of bankruptcy is a critical component of financial risk assessment, providing valuable insights for different stakeholders, particularly creditors and investors. Predicting the likelihood of business failure enables creditors to minimize potential financial losses and to establish risk-adjusted credit terms.

Various quantitative models, such as Altman’s Z-score [1], logistic regression [2], and, more recently, machine learning (ML) and deep learning techniques, have been developed for predicting the probability of default or firm failure using financial ratios, as well as industry and macroeconomic indicators.

Machine learning models have been extensively compared with more traditional approaches and found to outperform them in bankruptcy prediction [3]. Random forests [4] and gradient boosting methods, such as XGBoost [5], have been shown to perform well in bankruptcy prediction [3,6]. Indeed, Alanis et al. find that XGBoost outperforms other ML methods [6].

Deep learning methods have also been studied in bankruptcy prediction. For example, Mai et al. [7] show that when textual data from financial reports are incorporated alongside financial ratios and market data, deep learning methods “can further improve the prediction accuracy”. However, Grinsztajn et al. [8] compare deep learning models with XGBoost and random forest and conclude that “tree-based models remain state-of-the-art on medium-sized data (~10K samples) even without accounting for their superior speed”. These findings suggest that while deep learning may offer benefits when large amounts of data or unstructured data are available, its applicability in practical bankruptcy prediction is limited, because the available data are usually structured, tabular, and of medium size.

With such a diverse array of options, model selection becomes crucial. Besides choosing between model classes, the practitioner must also optimize a model’s hyperparameters to achieve the best possible performance when used with new, unseen data. While all models are susceptible to overfitting the training set, ML models—being both more powerful and more complex—are particularly prone to this risk.

Overfitting the training sample leads to poor generalization performance and also model instability.

To address this problem and improve generalization performance, practitioners often employ k-fold cross-validation for model selection. The available dataset is split into k disjoint sets (folds). Models are iteratively trained on k-1 folds and tested on the remaining fold. This allows for out-of-fold predictions and performance metrics to be calculated for the entire dataset. The model’s performance calculated using this approach is referred to as cross-validation (CV) performance.

Cawley and Talbot [9] demonstrate that, on a finite sample of data, it is possible to overfit any model selection criterion. Specifically, they show that tuning hyperparameters and choosing the model with the best CV performance improves out-of-sample (OOS) performance, defined as the performance on an unseen test set, only up to a point. Beyond that point, continued tuning may degrade OOS performance. This effect is primarily the result of the variance of the CV performance metric rather than its bias [9]. As a result, Cawley and Talbot [9] suggest using a nested cross-validation approach for model selection.
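The role of variance can be illustrated with a toy simulation. It assumes, hypothetically, that all candidate models share the same true AUROC and differ only in Gaussian estimation noise on their CV scores; the noise level, number of candidates, and the function name `selection_bias` are illustrative choices, not values from the study. Picking the candidate with the highest CV score then rewards noise, so the winner's CV score overstates its real performance.

```python
import random
import statistics


def selection_bias(n_models: int = 50, true_auc: float = 0.90, noise_sd: float = 0.01,
                   n_trials: int = 200, seed: int = 0) -> float:
    """Average optimism of the winning CV score when every candidate is equally good.

    Hypothetical setup: each model's CV AUROC = true_auc + Gaussian noise.
    The selected model's real performance is still true_auc, so any gap
    between its CV score and true_auc comes purely from selecting on noise.
    """
    rng = random.Random(seed)
    gaps = []
    for _ in range(n_trials):
        cv_scores = [true_auc + rng.gauss(0.0, noise_sd) for _ in range(n_models)]
        gaps.append(max(cv_scores) - true_auc)  # optimism of the selected model
    return statistics.mean(gaps)


for n in (1, 10, 100):
    print(n, round(selection_bias(n_models=n), 4))
```

With a single candidate the gap averages close to zero; it grows as more candidates are compared, even though none of them is truly better than the others.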

However, Wainer and Cawley [10] later argue that, in practical applications, k-fold cross-validation is sufficiently accurate and that the benefits of nested cross-validation do not justify its significantly higher computational cost.

In this research, we use a publicly available database of Taiwanese listed companies [11] and try to identify the model with the best generalization performance for bankruptcy prediction. We follow Teodorescu and Toader [12], who use the same database and investigate logistic regression with regularization, random forests, and XGBoost. They tune hyperparameters and use k-fold cross-validation for model selection. In the present paper, we extend their analysis by employing a nested cross-validation approach.

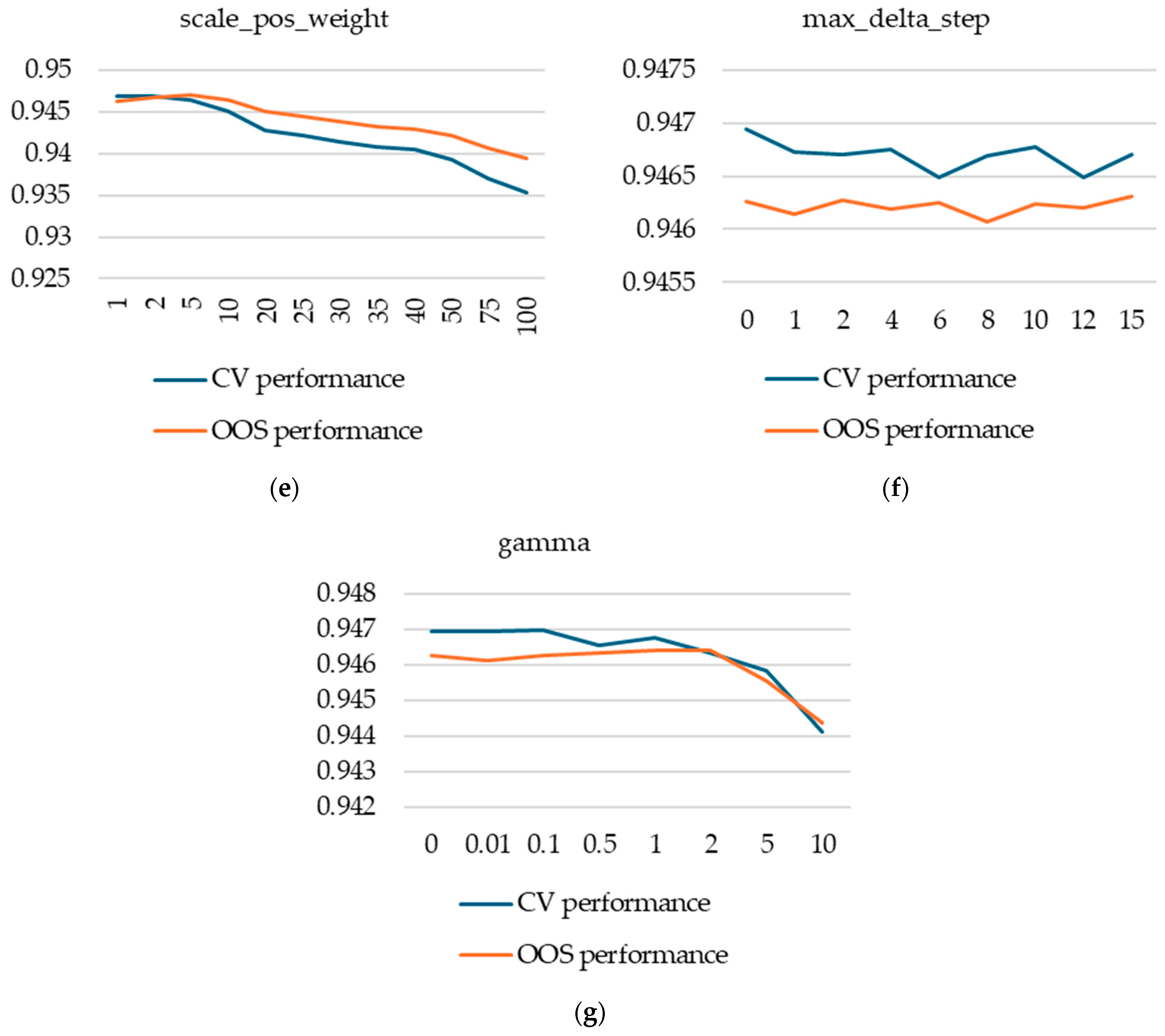

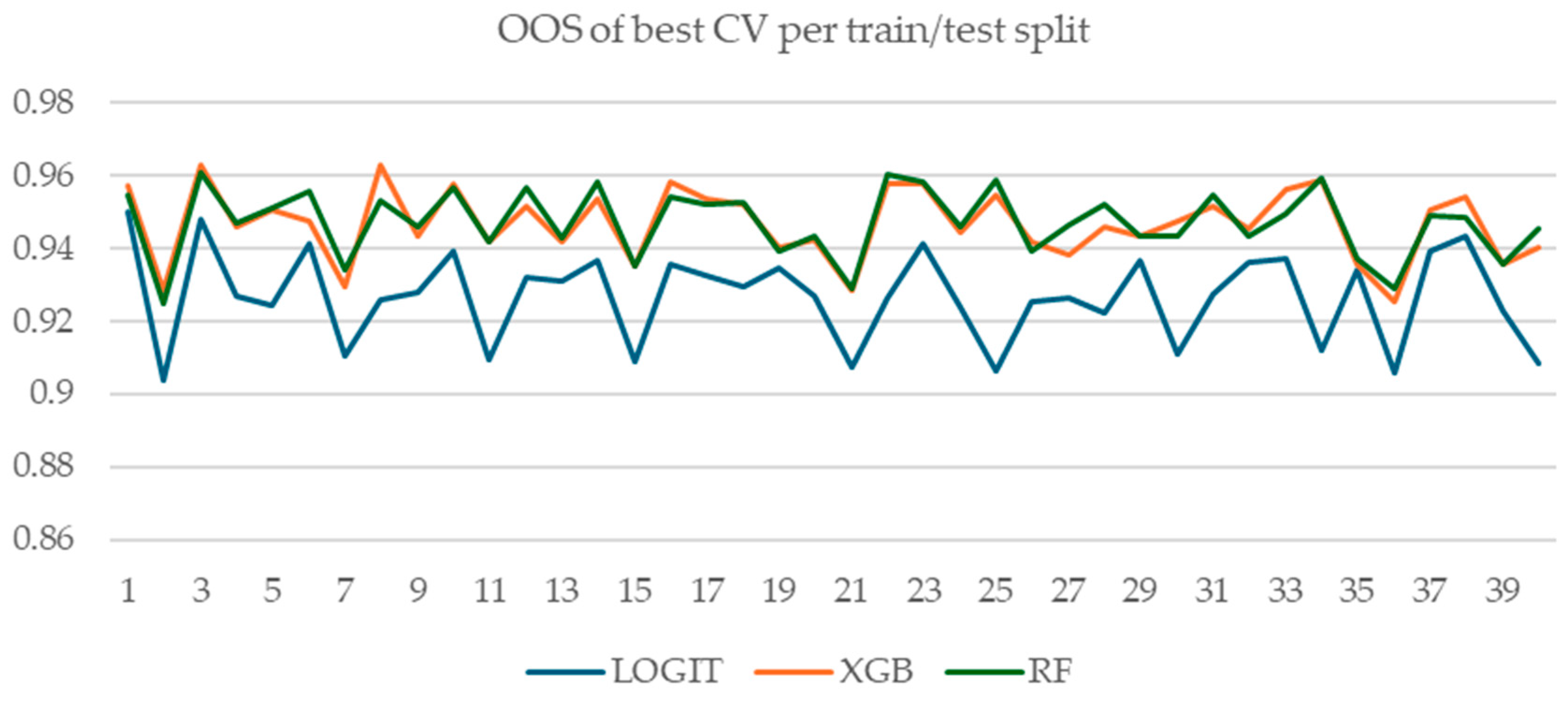

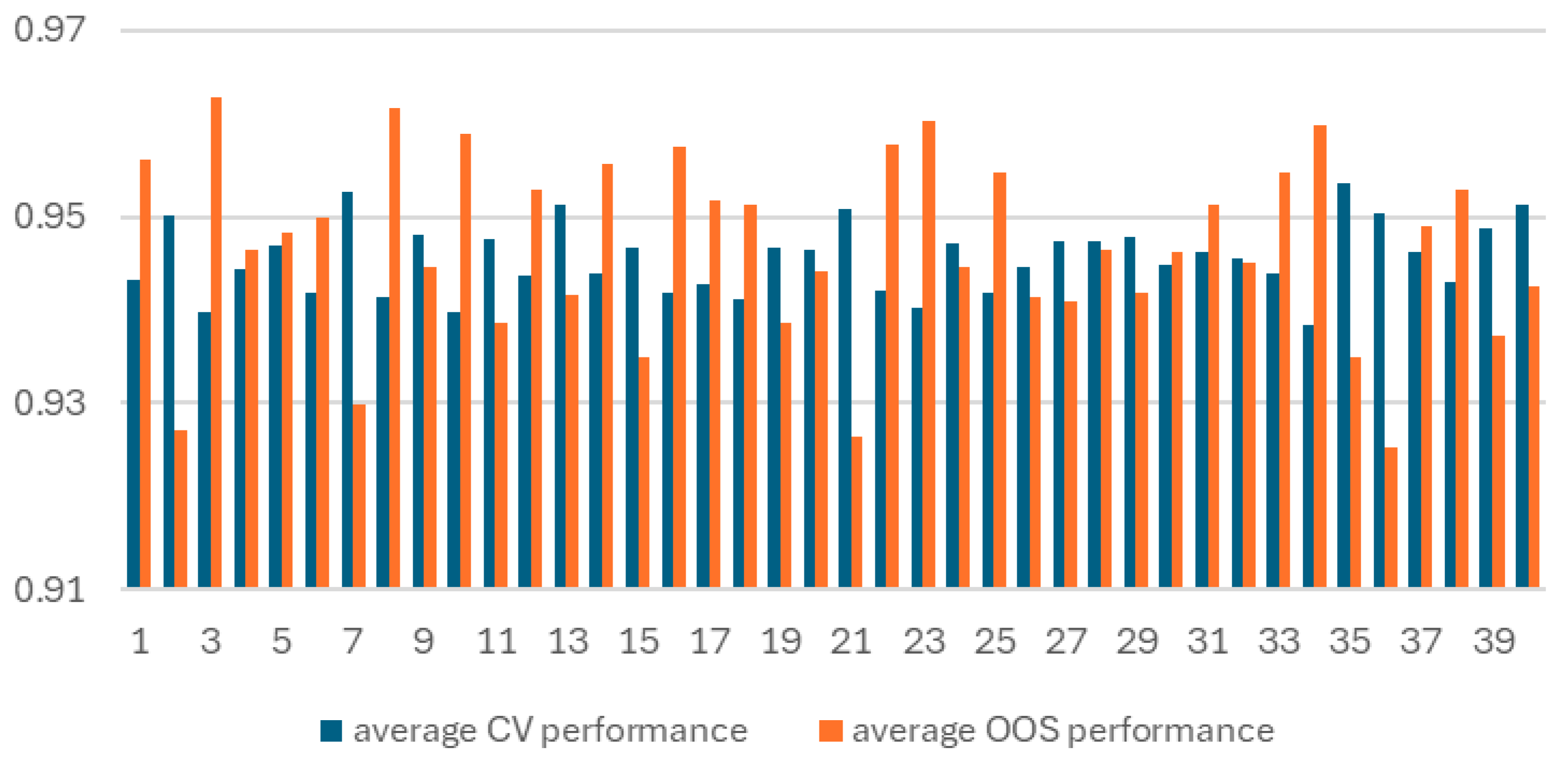

Our main goal is to evaluate whether k-fold cross-validation is a valid methodology for selecting the model that generalizes best to new, unseen data. To simulate real-world deployment scenarios, we evaluate model selection reliability across 40 different random train/test splits, derived using a nested cross-validation approach.

Our main finding is that k-fold cross-validation is, on average, a valid technique for selecting the best-performing model on new data. However, on specific train/test pairs, the method may fail to produce the best results, selecting models with poor OOS performance. We define regret as the loss in OOS performance from selecting the model with the best CV performance relative to the one with the best OOS performance. Using variance decomposition, we show that 67% of the variability in the model selection outcome (regret) is due to statistical differences between the training and test datasets.

Furthermore, we observe that both regret and the correlation between CV and OOS performance are stable across multiple runs using the same model type (XGBoost) and the same train/test split. This stability reinforces our key finding that the success of model selection depends mostly on the relationship between training and test data rather than on the selection procedure itself. Further support for our conclusion comes from the observation that the correlation between OOS performance measures on different test sets is positive on average but can be negative for some pairs of test sets.

A puzzling finding, which requires further investigation, is that the correlations between CV and OOS performance for random forest models differ from those for XGBoost on the same train/test splits. It therefore seems that the predictive power of CV performance is weakened both by differences between data samples and by differences between model types.

We investigate random forest and XGBoost models and use grid search to tune hyperparameters and to study the relationship between CV and OOS performance. We present some key insights regarding important hyperparameters, such as eta for XGBoost and splitrule for random forest.

We also investigate and present some important findings regarding the practical implementation of k-fold cross-validation, such as setting the value of k or the way predictions could be calculated for new data (as the average of all k models’ predictions or as predictions of a single model, retrained on the full dataset). Our results indicate that large values of k may overfit the test fold for XGBoost models, leading to improvements in CV performance with no corresponding gains in OOS performance.

2. Materials and Methods

2.1. Dataset

Our database consists of a publicly available dataset compiled by Liang, Lu, Tsai, and Shih [11]. This database contains financial data covering the period 1999–2009 for Taiwanese nonfinancial listed companies that had at least three years of available data, spanning both firms that experienced bankruptcy or financial distress and firms that remained healthy. It includes 6819 firm-year observations, with 220 bankruptcies (an aggregate bankruptcy rate of 3.23%). It provides 95 independent variables representing financial ratios from 7 categories: solvency, capital structure, profitability, turnover, cash flow, growth, and other.

We selected this dataset for several reasons. First, it contains a large number of predictors, some of which are correlated, making it particularly suitable for investigating the behavior of various machine learning algorithms.

Second, the dataset has a relatively modest size for machine learning models, allowing for extensive investigation of alternative methodologies.

Third, it is a high-quality database, having little noise and no missing data.

Overall, our choice of this database was motivated by its public availability, its quality, and its manageable size, which made it appropriate for experiments requiring repeated resampling and careful model tuning.

2.2. Performance Metric

Various performance metrics can be used to evaluate bankruptcy prediction models [13]: sensitivity, specificity, Type I and Type II errors, AUROC, and F1 score. We chose AUROC for three reasons: (1) it is a widely used metric [13], (2) it is directly implemented in XGBoost as an evaluation metric that can be monitored during training, and (3) it does not require setting an additional hyperparameter, the classification threshold.
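Point (3) can be made concrete. AUROC equals the probability that a randomly chosen bankrupt firm receives a higher score than a randomly chosen healthy one, which can be computed from ranks alone (the Mann-Whitney formulation), so no threshold is ever chosen. The following is a minimal plain-Python sketch of that formulation, counting tied scores as half a win; the function name is illustrative.

```python
from typing import Sequence


def auroc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """AUROC as P(score of a random positive > score of a random negative).

    Uses average ranks so that tied scores count as half a win
    (Mann-Whitney U); no classification threshold is involved.
    """
    pairs = sorted(zip(scores, y_true))
    ranks = [0.0] * len(pairs)
    i = 0
    while i < len(pairs):
        j = i
        while j + 1 < len(pairs) and pairs[j + 1][0] == pairs[i][0]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # 1-based average rank of the tie group
        for k in range(i, j + 1):
            ranks[k] = avg_rank
        i = j + 1
    n_pos = sum(1 for _, y in pairs if y == 1)
    n_neg = len(pairs) - n_pos
    rank_sum_pos = sum(r for r, (_, y) in zip(ranks, pairs) if y == 1)
    u = rank_sum_pos - n_pos * (n_pos + 1) / 2  # number of positive-over-negative wins
    return u / (n_pos * n_neg)


print(auroc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75
```

Because only the ordering of the scores matters, any monotone transformation of the predictions leaves AUROC unchanged.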

2.3. k-Fold Cross-Validation

The k-fold cross-validation technique is implemented by randomly splitting the available data into k folds. Models are trained on k-1 folds and tested on the remaining (kth) fold. By iterating over all k folds, the practitioner obtains out-of-fold predictions and performance for the entire dataset. This is referred to as cross-validation (CV) performance. k-fold cross-validation is widely used by practitioners for model selection, both for choosing between model classes and for tuning hyperparameters [13].
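The procedure above can be sketched in plain Python. The sketch assumes a generic `fit` callable that takes training rows and labels and returns a scoring function; `centroid_fit` is a hypothetical toy model standing in for random forest or XGBoost, used only to make the example runnable.

```python
import random
from typing import Callable, List, Sequence

Row = Sequence[float]
Predictor = Callable[[Row], float]
Fitter = Callable[[List[Row], List[int]], Predictor]


def kfold_indices(n: int, k: int, seed: int = 0) -> List[List[int]]:
    """Randomly partition row indices 0..n-1 into k disjoint folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[f::k] for f in range(k)]


def out_of_fold_predictions(X: List[Row], y: List[int], k: int, fit: Fitter,
                            seed: int = 0) -> List[float]:
    """Train on k-1 folds, score the held-out fold, and repeat for every fold."""
    oof = [0.0] * len(y)
    for test_idx in kfold_indices(len(y), k, seed):
        held_out = set(test_idx)
        train_idx = [i for i in range(len(y)) if i not in held_out]
        model = fit([X[i] for i in train_idx], [y[i] for i in train_idx])
        for i in test_idx:
            oof[i] = model(X[i])  # every row is scored once, by a model that never saw it
    return oof


def centroid_fit(X_train: List[Row], y_train: List[int]) -> Predictor:
    """Hypothetical toy model: score = feature 0 minus the midpoint of the class means."""
    pos = [x[0] for x, t in zip(X_train, y_train) if t == 1]
    neg = [x[0] for x, t in zip(X_train, y_train) if t == 0]
    mid = (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2
    return lambda x: x[0] - mid


X = [[float(i)] for i in range(20)]
y = [0] * 10 + [1] * 10
oof = out_of_fold_predictions(X, y, k=5, fit=centroid_fit)
```

The resulting out-of-fold scores can be passed to any performance metric (here, AUROC) to obtain the CV performance for the whole dataset.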

2.4. Machine Learning Models

We evaluate two tree-based ML ensemble classifiers: random forests [4] and XGBoost [5]. Both models have been shown to perform well in bankruptcy prediction [3,6].

To implement random forest, we use the ranger library in R [14].

In random forest, trees grow independently of each other. In contrast, XGBoost is an iterative model: successive trees are added to the existing ensemble so as to improve on its past performance. As a result, XGBoost can keep improving its fit to the training data over many iterations. However, such improvements translate into out-of-sample gains only up to a point, so the algorithm needs to be stopped at some iteration. One way of choosing the iteration at which to stop is to use a separate test set: training stops when performance on the test set has not improved for a specified number of iterations. In the context of k-fold cross-validation, the kth fold can also be used for stopping the algorithm. This implies that CV performance is no longer completely out of sample, since the test fold is used during training, albeit only for stopping, which can lead to an optimistic bias. This optimistic bias is problematic in two situations: when comparing models from different classes (for example, XGBoost with random forest, which does not use the kth fold at all during training), and when the magnitude of the bias depends on hyperparameter values, because it then distorts comparisons between hyperparameter sets. It is therefore worth investigating in detail the behavior of the CV performance metric for XGBoost and its potential overfitting.
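The source of the optimistic bias is visible in a generic patience-based stopping rule. The sketch below is similar in spirit to XGBoost's early-stopping option but is not the library's implementation; the evaluation curve is made up. The reported score is the best value the curve reached on the same fold that chose the stopping round.

```python
from typing import Sequence, Tuple


def early_stopping(scores: Sequence[float], patience: int) -> Tuple[int, float]:
    """Return (best_iteration, best_score) under a patience rule.

    scores[t] is the evaluation AUROC on the held-out fold after boosting
    round t. Training halts once `patience` rounds pass without a new best.
    """
    best_iter, best_score = 0, scores[0]
    for t in range(1, len(scores)):
        if scores[t] > best_score:
            best_iter, best_score = t, scores[t]
        elif t - best_iter >= patience:
            break  # no improvement for `patience` rounds: stop training
    return best_iter, best_score


# Hypothetical evaluation curve on one held-out fold.
curve = [0.80, 0.84, 0.86, 0.85, 0.87, 0.86, 0.85, 0.84, 0.90]
print(early_stopping(curve, patience=3))  # (4, 0.87)
```

Since the returned score is a maximum over a noisy curve measured on the test fold itself, it tends to overstate what the stopped model would achieve on genuinely new data.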

2.5. Experimental Design

To assess the validity of the k-fold cross-validation technique for selecting the best-performing model on new data, we employ a nested cross-validation technique.

We first divide the data into four equal outer folds. We use three of these folds for training and model selection based on CV performance, while the fourth is used to compute out-of-sample (OOS) performance. We repeat the process iteratively using each of the four outer folds as the test fold, while the remaining three serve as training folds. Our objective is to analyze the relationship between CV performance (computed within the three training outer folds) and OOS performance (computed on the fourth outer fold). A diagram and pseudocode for the nested cross-validation technique are presented in Appendix B.

Our experimental design simulates the real-world use case where a practitioner uses k-fold cross-validation to train and select a model (on three outer folds) and then uses it with new data (the fourth outer fold).

To thoroughly study the relationship between cross-validation performance and out-of-sample performance, we repeat this process on 10 different data splits, each comprising 4 outer folds. As a result, for each type of model tested and for each hyperparameter set, we have a total of 10 runs × 4 outer folds = 40 train/test data splits, corresponding to 40 pairs of cross-validation and out-of-sample performance measures (each computed using the k models required by the k-fold cross-validation technique).

We perform both the outer split and the inner split using a stratified sampling approach over the target value Y. As such, the class balance is maintained in all outer and inner folds.
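The resulting design can be sketched as follows. The sketch assumes rows are addressed by integer index, uses the dataset's class counts (220 bankruptcies and 6599 healthy firm-years), and takes a hypothetical inner k of 5, since the study varies k. Each class is dealt out separately across folds, so every outer and inner fold keeps the overall class balance. This is an illustration, not the authors' R code.

```python
import random
from typing import Dict, List


def stratified_folds(indices: List[int], y: List[int], k: int,
                     rng: random.Random) -> List[List[int]]:
    """Split `indices` into k folds, dealing each class out separately."""
    folds: List[List[int]] = [[] for _ in range(k)]
    offset = 0  # carry the fold pointer across classes so fold sizes stay balanced
    for label in sorted({y[i] for i in indices}):
        members = [i for i in indices if y[i] == label]
        rng.shuffle(members)
        for pos, i in enumerate(members):
            folds[(offset + pos) % k].append(i)
        offset += len(members)
    return folds


def nested_splits(y: List[int], n_repeats: int = 10, k_outer: int = 4,
                  k_inner: int = 5, seed: int = 0) -> List[Dict]:
    """Repeated stratified outer splits, each with stratified inner CV folds."""
    rng = random.Random(seed)
    all_idx = list(range(len(y)))
    splits = []
    for r in range(n_repeats):
        outer = stratified_folds(all_idx, y, k_outer, rng)
        for o, test_idx in enumerate(outer):
            train_idx = [i for f, fold in enumerate(outer) if f != o for i in fold]
            inner = stratified_folds(train_idx, y, k_inner, rng)  # used only for CV
            splits.append({"repeat": r, "outer": o, "train": train_idx,
                           "test": test_idx, "inner": inner})
    return splits


splits = nested_splits([1] * 220 + [0] * 6599)
print(len(splits))  # 40
```

With these counts, each outer test fold receives exactly 55 bankruptcies and each inner fold 33.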

Our dataset has an imbalance ratio of 30:1. López et al. [15] and Moreno-Torres et al. [16] show that, when the dataset is imbalanced, k-fold cross-validation may lead to folds with different data distributions. Even if stratified sampling is performed, resulting in folds with the same class balance, various clusters in the space of explanatory variables may not be uniformly represented across the resulting folds. On the other hand, Rodriguez et al. [17] show that the variance of the k-fold cross-validation error is mainly attributable to training set variability rather than to changes in the folds, a result consistent with ours. Our choice of plain stratified cross-validation (SCV) is further supported by the finding of Fontanari et al. [18] that, for imbalanced datasets, “SCV remained the most frequent winner” for performance estimation when compared to more elaborate CV strategies.

When applying k-fold cross-validation, as Forman and Scholz [19] point out, there are two options for calculating CV performance: as the average of the k performance measures calculated on each of the k folds ($\mathrm{CV}_{\text{avg}}$), or as a single measure calculated across all k folds using the pooled out-of-fold predictions ($\mathrm{CV}_{\text{agg}}$). Forman and Scholz [19] show that, although the latter option is less common in practice, it is a valid approach when stratified sampling is used. We also employ this approach, for several reasons. In many machine learning pipelines, there are multiple layers, and at each layer, predictions from previous layers are used as inputs; as such, predictions need to be well calibrated across folds. Moreover, random forests and XGBoost models produce well-calibrated results. Indeed, our results show little difference between $\mathrm{CV}_{\text{avg}}$ and $\mathrm{CV}_{\text{agg}}$ in our case.
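The difference between the two options, and why calibration across folds matters for the pooled version, can be shown with a deliberately extreme, made-up example. The per-fold average (CV_avg) and the pooled AUROC (CV_agg) are computed below with a simple pairwise AUROC; function names are illustrative.

```python
import statistics
from typing import List, Sequence


def auroc(y: Sequence[int], s: Sequence[float]) -> float:
    """Pairwise AUROC: share of (positive, negative) pairs ranked correctly, ties = 0.5."""
    pos = [si for yi, si in zip(y, s) if yi == 1]
    neg = [si for yi, si in zip(y, s) if yi == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def cv_avg(y: Sequence[int], oof: Sequence[float], folds: List[List[int]]) -> float:
    """Average of the k per-fold AUROC values."""
    return statistics.mean(auroc([y[i] for i in f], [oof[i] for i in f]) for f in folds)


def cv_agg(y: Sequence[int], oof: Sequence[float], folds: List[List[int]]) -> float:
    """One AUROC over the pooled out-of-fold predictions of all folds."""
    pooled = [i for f in folds for i in f]
    return auroc([y[i] for i in pooled], [oof[i] for i in pooled])


# Each fold is ranked perfectly, but fold 2's scores sit on a different scale.
y = [0, 1, 0, 1]
oof = [0.1, 0.2, 0.8, 0.9]
folds = [[0, 1], [2, 3]]
print(cv_avg(y, oof, folds), cv_agg(y, oof, folds))  # 1.0 0.75
```

When the models trained on different folds produce predictions on a comparable scale, the two measures should be close; a large gap signals that pooled predictions are not well calibrated across folds.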

2.6. Oversampling

An important aspect when modelling imbalanced datasets is investigating whether oversampling techniques may lead to an improvement in performance. Santos et al. [20] investigate a number of oversampling approaches and conclude that oversampling should be applied after the data are split into training, test, and validation sets, and only to the training set. Applying oversampling to the whole dataset leads to label leakage and overoptimistic performance metrics. The same result is noted by Neunhoeffer and Sternberg [21], who show that incorrectly applying oversampling to the whole dataset before partitioning it may inflate the CV performance measure to the point of completely invalidating an article’s results.

Santos et al. [20] also conclude that a number of techniques, especially random oversampling (ROS), may lead to overfitting. They note that oversampling may have opposite effects: on the one hand, it may exacerbate the effect of noise, but on the other hand, it may also increase the importance of rare cases. One of the findings of Santos et al. [20] is that oversampling techniques that generate new, synthetic observations of the minority class (such as SMOTE, the synthetic minority oversampling technique) may offer superior performance when combined with cleaning procedures such as Tomek links (TL) or edited nearest neighbors (ENN).

Indeed, Gnip et al. [22] use 15 publicly available databases for bankruptcy prediction (including the Taiwanese database used here) to assess the performance of various oversampling approaches. They find that SMOTE+ENN outperforms other techniques on the Taiwan bankruptcy prediction database when using XGBoost models. However, they do not benchmark their results against the same datasets without oversampling.

We briefly investigate the performance of ROS, SMOTE, SMOTE + TL, and SMOTE + ENN on 8 of the 40 train/test splits. We apply each oversampling technique to the training set only (at each iteration, the k-1 folds used for training). Since we use AUROC as the performance metric, which depends only on the ranking of the predictions, we do not need to recalibrate the predictions on the test sets.
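The rule of oversampling only the training folds can be sketched with the simplest technique, ROS. This is a minimal illustration with a hypothetical function name and toy labels; SMOTE and its cleaning variants generate synthetic rows instead of duplicates and are not shown.

```python
import random
from typing import List


def random_oversample(train_idx: List[int], y: List[int], seed: int = 0) -> List[int]:
    """Random oversampling (ROS) of the training indices only.

    Minority-class training rows are duplicated (sampled with replacement)
    until both classes are equally frequent. Rows outside train_idx, i.e. the
    held-out fold, are never touched, so no test information leaks into training.
    """
    rng = random.Random(seed)
    pos = [i for i in train_idx if y[i] == 1]
    neg = [i for i in train_idx if y[i] == 0]
    minority, majority = (pos, neg) if len(pos) < len(neg) else (neg, pos)
    if not minority:
        raise ValueError("training folds contain only one class")
    extra = [rng.choice(minority) for _ in range(len(majority) - len(minority))]
    return train_idx + extra


# Toy labels: 3 positives, 27 negatives; one positive sits in the held-out fold.
y = [1] * 3 + [0] * 27
test_idx = [0, 10, 20]
train_idx = [i for i in range(len(y)) if i not in test_idx]
balanced = random_oversample(train_idx, y)
```

The test fold keeps its original, imbalanced composition, which is what the model will face on new data.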

2.7. Hyperparameter Tuning

For both random forest and XGBoost models, the grid search was performed manually based on a predefined list of values for each hyperparameter. We selected values covering the whole range of values considered appropriate for each hyperparameter. No special software or library was used.

2.7.1. Random Forest

We used the ranger [14] library in R and tuned the following hyperparameters: ntree, mtry, min.node.size, sample.fraction, and splitrule (with values “gini” and “hellinger”). We used a complete grid search approach for these hyperparameters.
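A complete grid is the Cartesian product of the candidate values for each hyperparameter. The sketch below uses the hyperparameter names listed above, but the candidate values are hypothetical placeholders, not the grid used in the study.

```python
from itertools import product
from typing import Dict, List

# Hypothetical candidate values, for illustration only.
rf_grid: Dict[str, list] = {
    "ntree": [500, 1000],
    "mtry": [5, 10, 20],
    "min.node.size": [1, 5, 10],
    "sample.fraction": [0.5, 1.0],
    "splitrule": ["gini", "hellinger"],
}


def complete_grid(grid: Dict[str, list]) -> List[dict]:
    """Enumerate every combination of the listed values (full Cartesian product)."""
    names = list(grid)
    return [dict(zip(names, values)) for values in product(*(grid[n] for n in names))]


configs = complete_grid(rf_grid)
print(len(configs))  # 2 * 3 * 3 * 2 * 2 = 72
```

Every configuration is then evaluated on each of the 40 train/test pairs, so the number of model fits grows multiplicatively with each added hyperparameter value.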

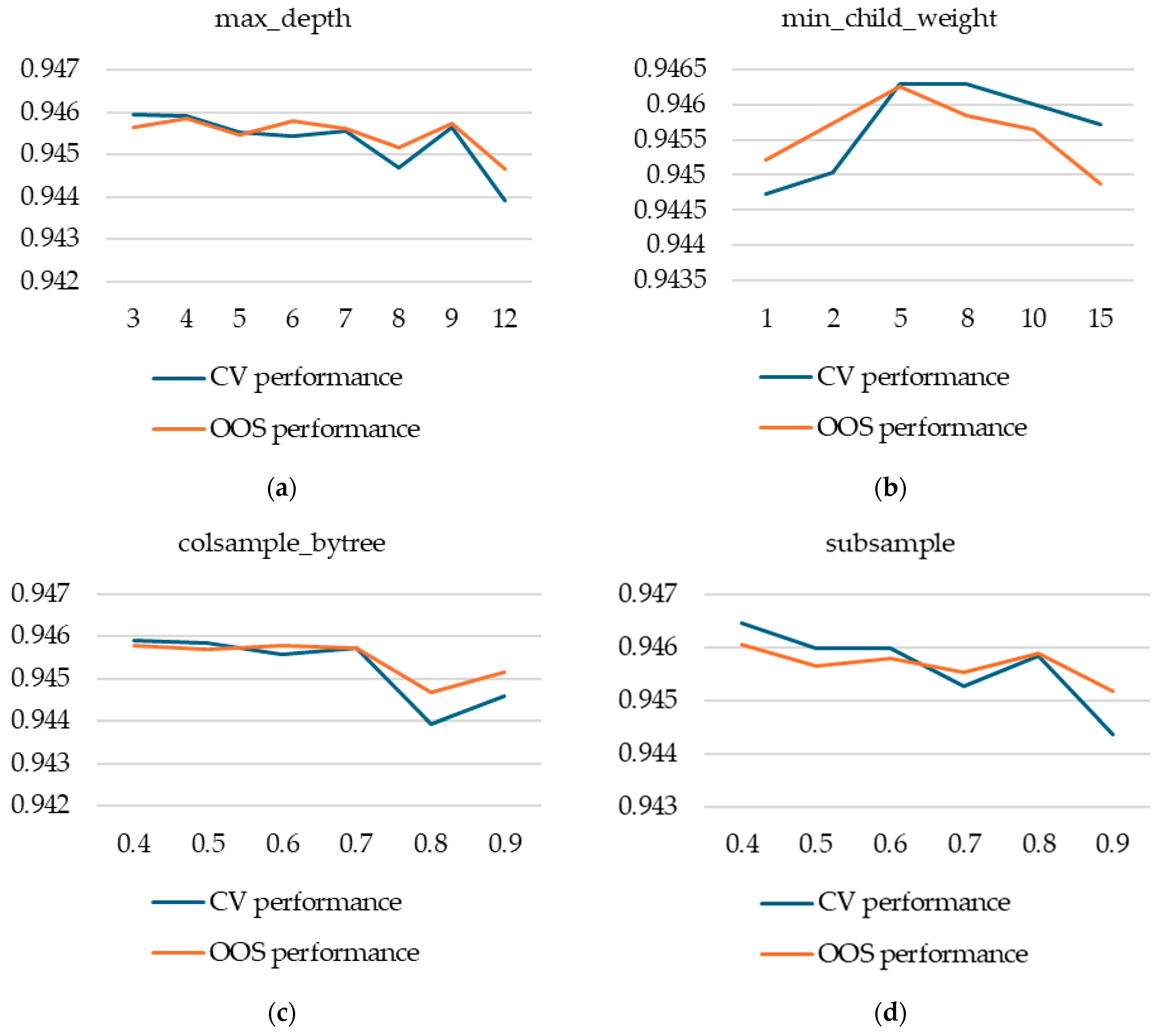

2.7.2. XGBoost

We used the xgboost library in R and tuned the following hyperparameters: eta, min_child_weight, max_depth, subsample, colsample_bytree, gamma, scale_pos_weight, and max_delta_step. Because of the larger number of hyperparameters and the higher computational effort required to train a single model, we used a guided grid search approach: we first tried a few well-established values for each hyperparameter and then refined our search around those with the best CV performance.

2.8. Stability and Correlation Metrics

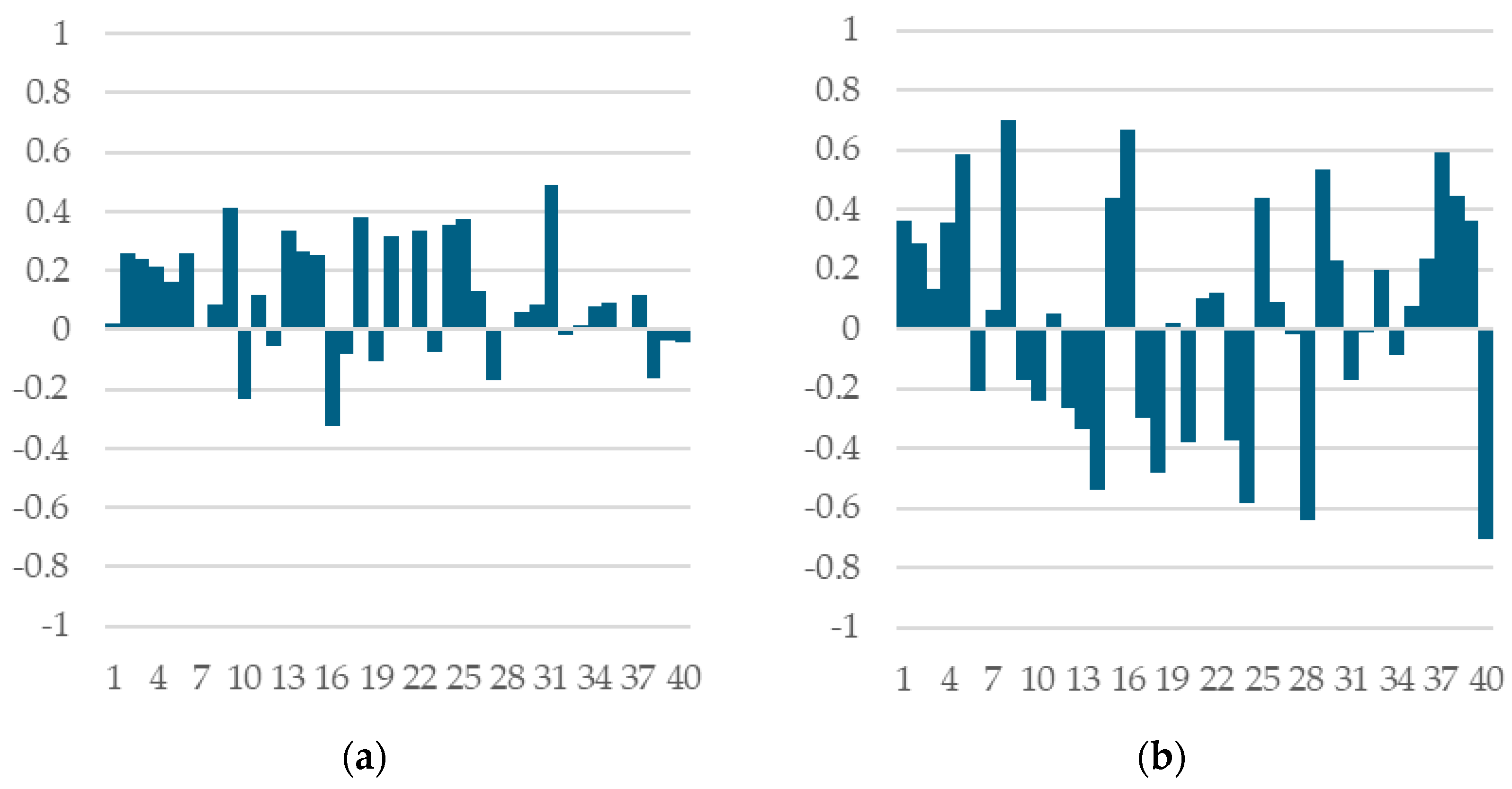

To investigate the validity of the k-fold cross-validation technique for model selection, we calculated, for each of the 40 train/test pairs, the Spearman correlation coefficient ρ between the CV performance and the OOS performance of the evaluated models.

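In the same context of model selection, we defined the model selection regret δ. Let $\{M_1, M_2, \dots, M_n\}$ be a set of alternative models trained on a training dataset $D_{\text{training}}$ and to be evaluated on a test dataset $D_{\text{test}}$. Furthermore, let $\mathrm{CV}(M)$ be the CV performance of model $M$ on $D_{\text{training}}$ and $\mathrm{OOS}(M)$ the out-of-sample performance of model $M$ on $D_{\text{test}}$. We were ultimately interested in maximizing $\mathrm{OOS}(M)$ over $M \in \{M_1, M_2, \dots, M_n\}$. Let $M_{\mathrm{CV}}$ be the model with the highest CV performance and $M_{\mathrm{OOS}}$ the model with the highest OOS performance. We define regret as follows:

$$\delta = \mathrm{OOS}(M_{\mathrm{OOS}}) - \mathrm{OOS}(M_{\mathrm{CV}})$$

By construction, δ ≥ 0, with δ = 0 when the model with the best CV performance is also the best out of sample.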
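For a single train/test pair, both metrics can be computed from two lists holding the CV and OOS AUROC of each candidate model, with regret taken as the OOS performance of the best model out of sample minus that of the CV-selected model. The sketch below implements Spearman's ρ as the Pearson correlation of average ranks (which handles ties); the four AUROC values are made up for illustration.

```python
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho: Pearson correlation computed on average ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    vx = sum((a - mx) ** 2 for a in rx)
    vy = sum((b - my) ** 2 for b in ry)
    return cov / (vx * vy) ** 0.5


def regret(cv: Sequence[float], oos: Sequence[float]) -> float:
    """OOS of the best model out of sample minus OOS of the model picked by CV."""
    m_cv = max(range(len(cv)), key=lambda i: cv[i])
    return max(oos) - oos[m_cv]


# Hypothetical AUROC values for four candidate models on one train/test pair.
cv = [0.930, 0.935, 0.941, 0.938]
oos = [0.925, 0.931, 0.922, 0.934]
print(round(regret(cv, oos), 3), round(spearman(cv, oos), 3))  # 0.012 -0.2
```

In this toy pair the CV ranking is slightly anti-correlated with the OOS ranking, and selecting by CV costs 0.012 AUROC relative to the best model out of sample.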

To understand the sources of model selection regret variability, we performed a variance decomposition, attributing it to train/test splits, inner CV fold assignments, and model training stochasticity.
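One simple way to carry out such a decomposition is the law of total variance, assuming a balanced, fully nested design in which every train/test split has the same number of inner fold assignments and every assignment the same number of training seeds. This is an illustration of the idea, not necessarily the estimator used in the study; the function name and input layout are hypothetical.

```python
from statistics import mean, pvariance
from typing import Dict, List


def nested_variance_shares(regret: List[List[List[float]]]) -> Dict[str, float]:
    """Split the (population) variance of regret across three nested sources.

    regret[s][f][r] is the regret for train/test split s, inner-fold
    assignment f and training seed r. The design must be balanced for the
    three shares to add up to one.
    """
    values = [v for split in regret for assignment in split for v in assignment]
    total = pvariance(values)
    # Between splits: variability of the per-split mean regret.
    between_split = pvariance([mean([v for a in split for v in a]) for split in regret])
    # Between fold assignments within a split, averaged over splits.
    between_fold = mean(pvariance([mean(a) for a in split]) for split in regret)
    # Within an assignment: the remaining training-seed variability.
    within = mean(pvariance(a) for split in regret for a in split)
    return {"split": between_split / total, "fold": between_fold / total,
            "seed": within / total}


# Hypothetical regret values: 4 splits x 2 fold assignments x 3 seeds.
toy = [[[s * 0.01 + f * 0.001 + r * 0.0001 for r in range(3)] for f in range(2)]
       for s in range(4)]
print(nested_variance_shares(toy))
```

In this toy input the split-level share dominates by construction; with real regret values, the split share is the quantity that the study reports as roughly two thirds.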

4. Discussion

Our results provide empirical evidence on the effectiveness of k-fold cross-validation as a model selection methodology within the same model class.

We find that although k-fold cross-validation performs well as a model selection tool on average, it may fail in specific cases. Our findings suggest that such failures are largely attributable to the inherent properties of the dataset available to the practitioner during training and of the data on which the model will be deployed. These properties are outside of the practitioner’s control and are a given in any real-world modelling exercise. This conclusion is supported by our variance decomposition analysis, which shows that almost 67% of the variance in model selection regret is attributable to the train/test split.

Three key observations lead to our conclusion:

(1) The model is not at capacity; hence, it will benefit from being trained on the full dataset (result from Section 3.2.1).

(2) Retraining a single model on the whole dataset produces practically the same results on new data as using all k models trained during the k-fold cross-validation model selection phase (result from Section 3.2.2).

(3) Pairwise correlations between the OOS performance measures of single models retrained on the full training set for each of the 40 runs are sometimes negative (result from Section 3.1.3).

Observations 1 and 2 lead us to conclude that, regardless of which model a selection process selects, a practitioner will ultimately arrive at a situation equivalent to having a single (selected) model trained on the full training dataset and used in practice with new data. Combined with observation 3, this implies that the outcome of any model selection procedure a practitioner may implement will be largely determined by the characteristics of the training and deployment datasets. From a practical perspective, this conclusion is in line with Wainer and Cawley [10], who argue that more complex model selection techniques do not provide sufficient benefits to justify their increased computational cost.

These insights are further reinforced by the stability of the Spearman correlation between CV and OOS performance and also of the model selection regret within a fixed train/test split: when the inner k-folds or the model training seeds are varied, correlations between CV and OOS performance remain stable in both sign and magnitude.

As noted, our results indicate that k-fold cross-validation may fail to select the best model across model classes (random forest and XGBoost), which suggests that the interaction between data properties and model type is more complex. This observation warrants further research.

As shown, k-fold cross-validation can overfit the CV performance metric if care is not taken, with no corresponding OOS performance gains. For XGBoost models, for example, setting k too high while using the best iteration, using a small eta, or trying out multiple alternative splits into k folds may entail significant computational cost and artificially increase CV performance with no impact on OOS performance.

A limitation of our study stems from not having access to actual new data. Other studies split the data by time: models are trained on data from earlier periods and tested on data from later periods. Such a setup would simulate the arrival of new data from the real world, but it could not be implemented in our case because the data sample, although it spans multiple years, does not include time stamps.

It is important to note that our study is an empirical in-depth analysis based on a single dataset. Although our findings offer valuable insights into the use of k-fold cross-validation for model selection and also into hyperparameter tuning for random forest and XGBoost models, they may not fully generalize to other datasets.

Our findings are relevant beyond bankruptcy prediction, offering insights for practitioners using k-fold cross-validation as a model selection strategy in various financial and risk modeling tasks.