AraEyebility: Eye-Tracking Data for Arabic Text Readability

Abstract

1. Introduction

2. Background

2.1. Arabic Language

2.2. Eye Tracking

2.2.1. Eye Tracking and Reading

2.2.2. Eye-Tracking Visualization

2.2.3. Eye Tracking and Arabic Language

- The informational density of Arabic makes reading it more time-intensive than reading Latin languages and makes word identification more challenging [30].

- The direction of reading influences the perceptual span’s asymmetrical extension. In Arabic, this focus area extends more to the left, while in Latin languages, it extends more to the right, impacting visual processing during reading [30].

- The length and familiarity of words affect eye movement decisions in both Arabic and Latin languages. However, word frequency has less influence on word skipping in Arabic, where skipping rates differ little between low- and high-frequency words [30].

- Studies suggest that words in Semitic languages are best recognized when the eyes fixate on the middle of the word, whereas in Latin languages the best fixation position lies between the beginning and the middle. This difference is attributed to the morphological structure of Semitic words, which carries their core meaning [30].

- Arabic writing is more complex because many letters carry mandatory dots above or below them, whereas in Latin languages only two lowercase letters (i and j) have dots [25].

- Numbers in Arabic are read from left to right, unlike text, which is read from right to left. This can cause inversion errors during reading [48].

- Arabic text is more challenging to read than Latin text due to its cursive nature, context-dependent characters, diverse writing styles, and unique letter positioning, such as in the word “محمد” (Muhammad), for which some letters can be placed above others [49].

3. Literature Review

3.1. Eye Tracking in Reading Studies

3.2. Corpora

3.2.1. Arabic Readability Corpora

3.2.2. Eye-Tracking Corpora

3.3. Discussion

4. Methodology

4.1. Corpus Preparation

4.1.1. Identifying Participants’ Criteria

4.1.2. Defining Different Aspects of the Participants’ Tasks

4.1.3. Collecting and Testing Corpus Texts

4.1.4. Paragraph Segmentation

4.1.5. Extracting and Testing Arabic Readability Guidelines

4.2. Data Collection

4.2.1. Pilot Testing the Eye-Tracking Experiment

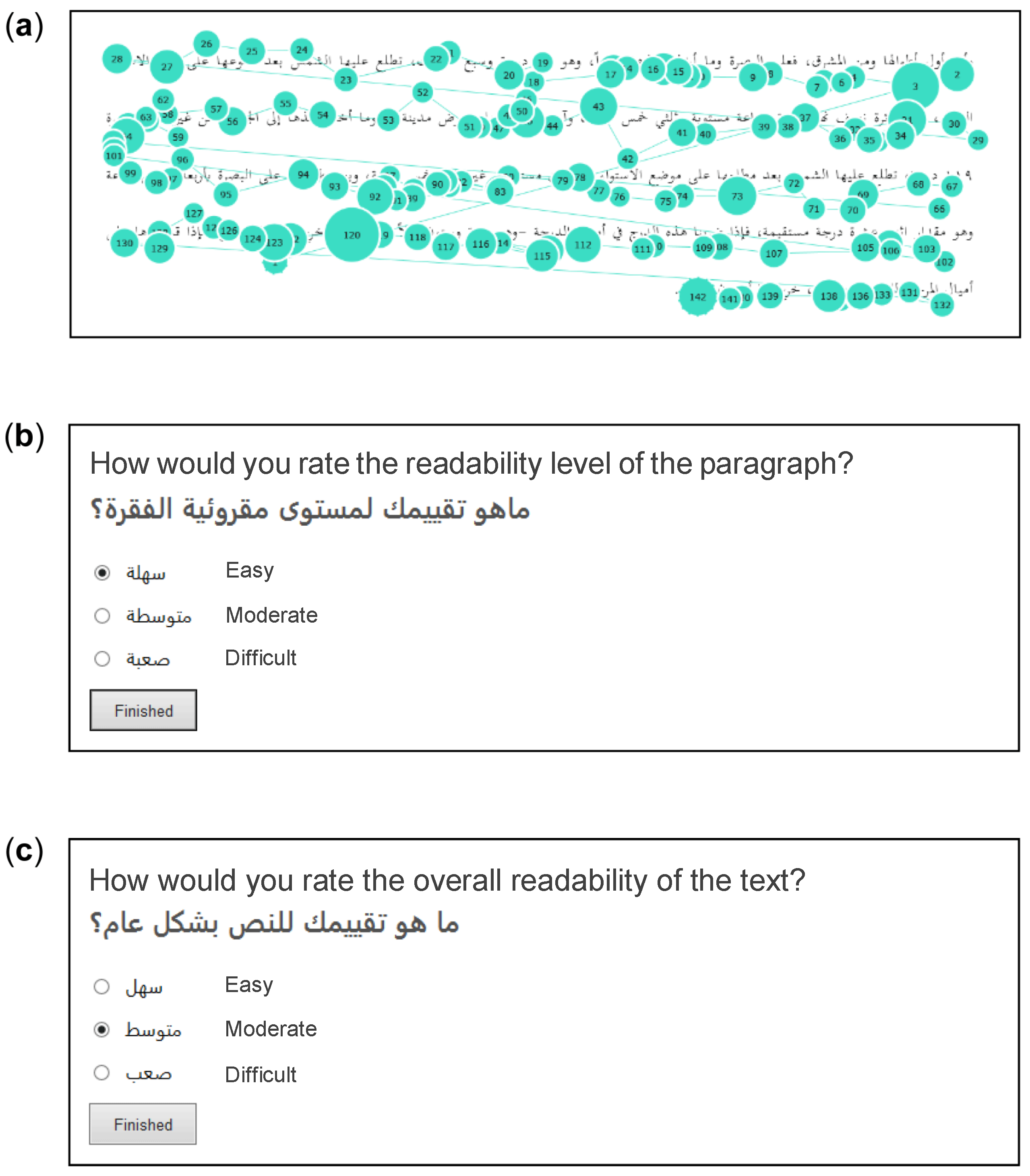

4.2.2. Designing the Eye-Tracking Experiment

Apparatus and Setup

Materials

4.2.3. Setting Up the Eye-Tracking Experiment

4.2.4. Conducting the Eye-Tracking Experiment

4.2.5. Participants

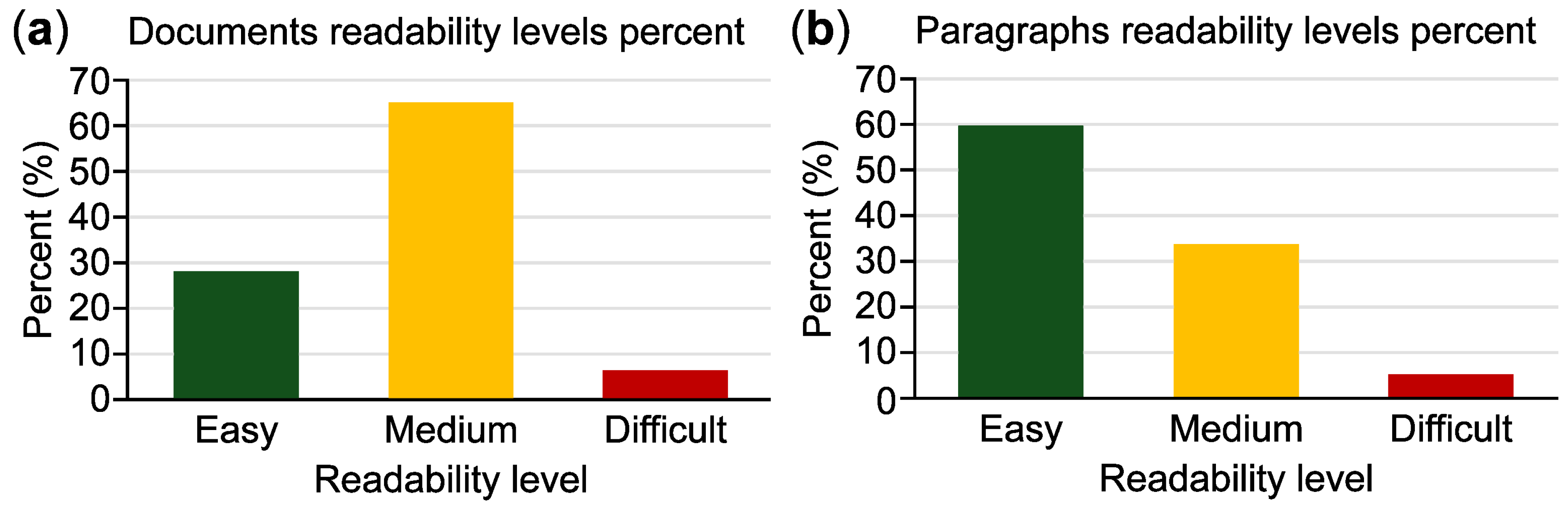

4.2.6. Results

Sessions 1 and 2 for MSA Texts

Session 3 for CA Texts

4.2.7. Quality Control

4.3. Data Preparation

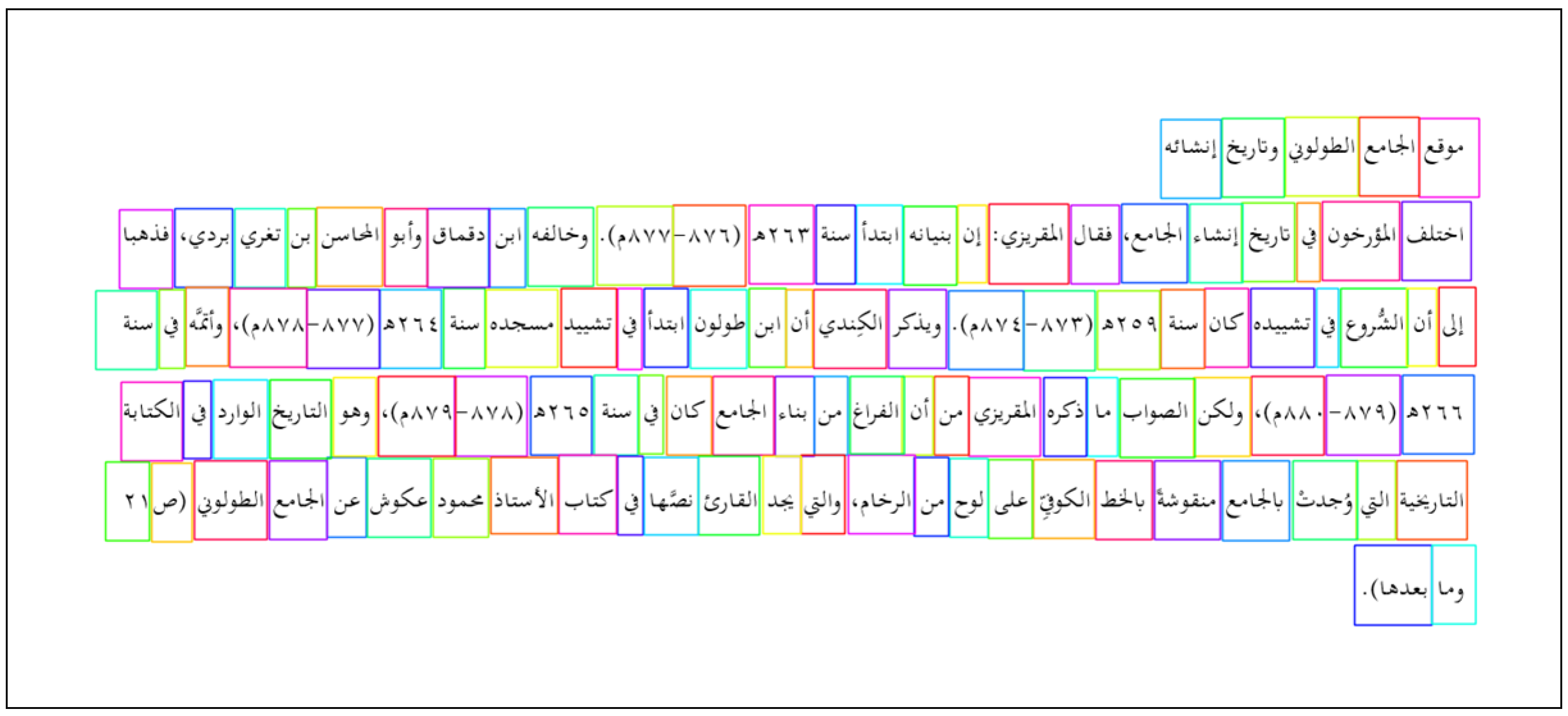

4.3.1. Tokenization

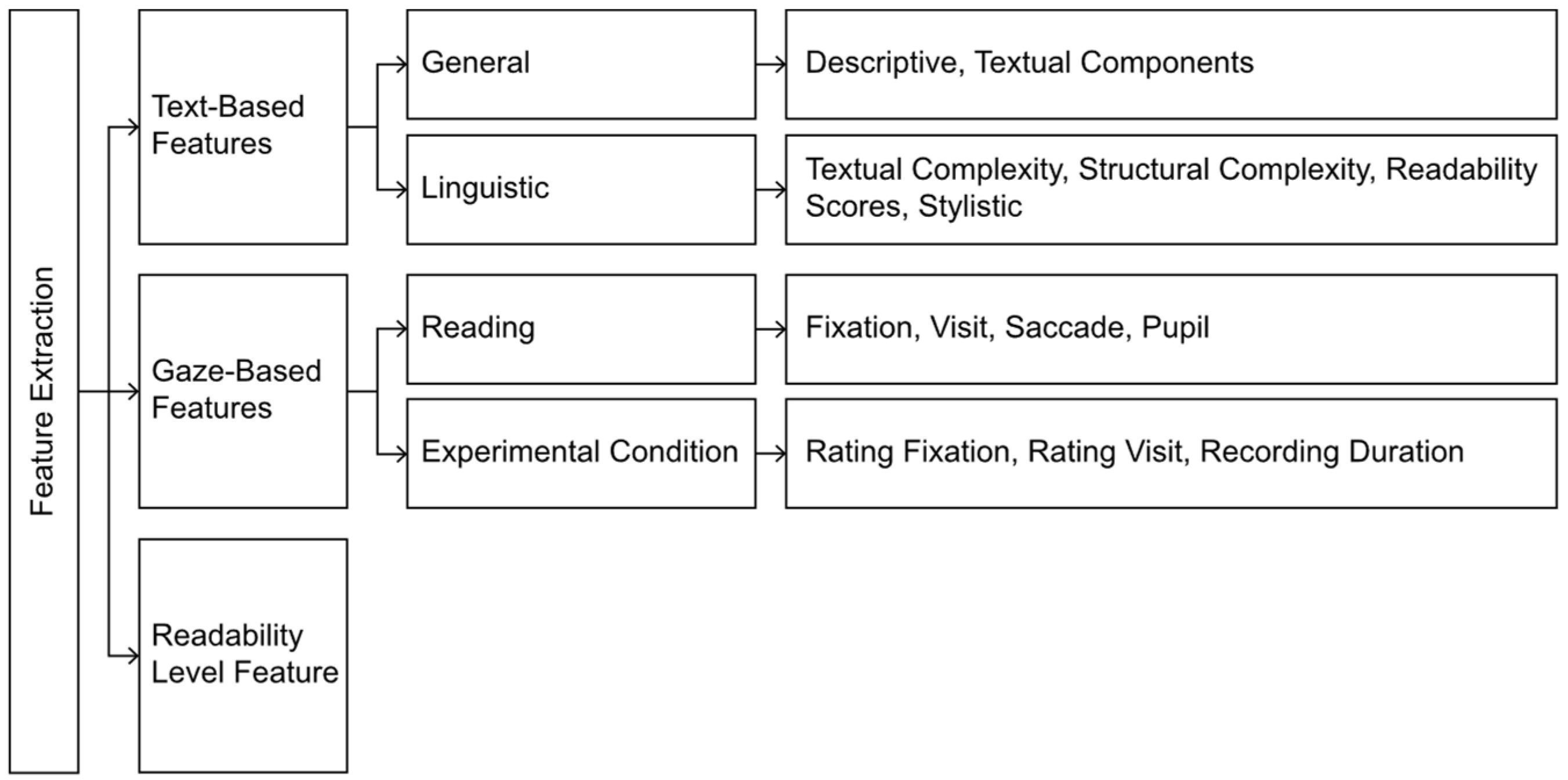

4.3.2. Feature Extraction

1. Text-based features represent the general characteristics or linguistic complexity of the selected texts.

2. Gaze-based features, derived from eye-tracking experiments, reflect cognitive processing and comprehension through established eye-tracking metrics.

3. The readability level feature represents the combined subjective readability ratings of paragraphs and documents, as provided by participants during the eye-tracking experiments.

Text-Based Features

Gaze-Based Features

Readability Level Features

4.3.3. Data Preprocessing

Encoding of Categorical Features

Data Formatting

Data Cleaning

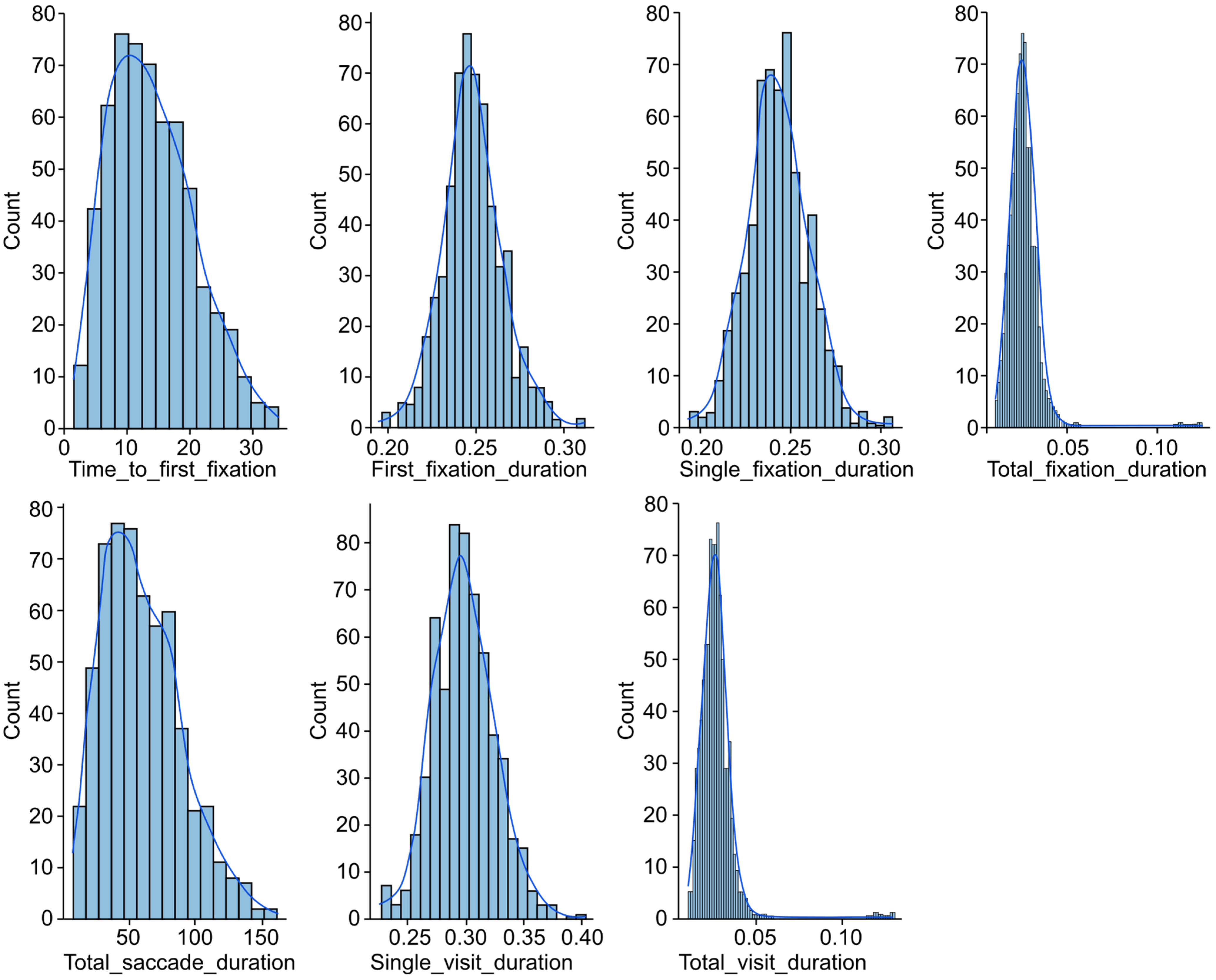

4.3.4. Corpus Evaluation

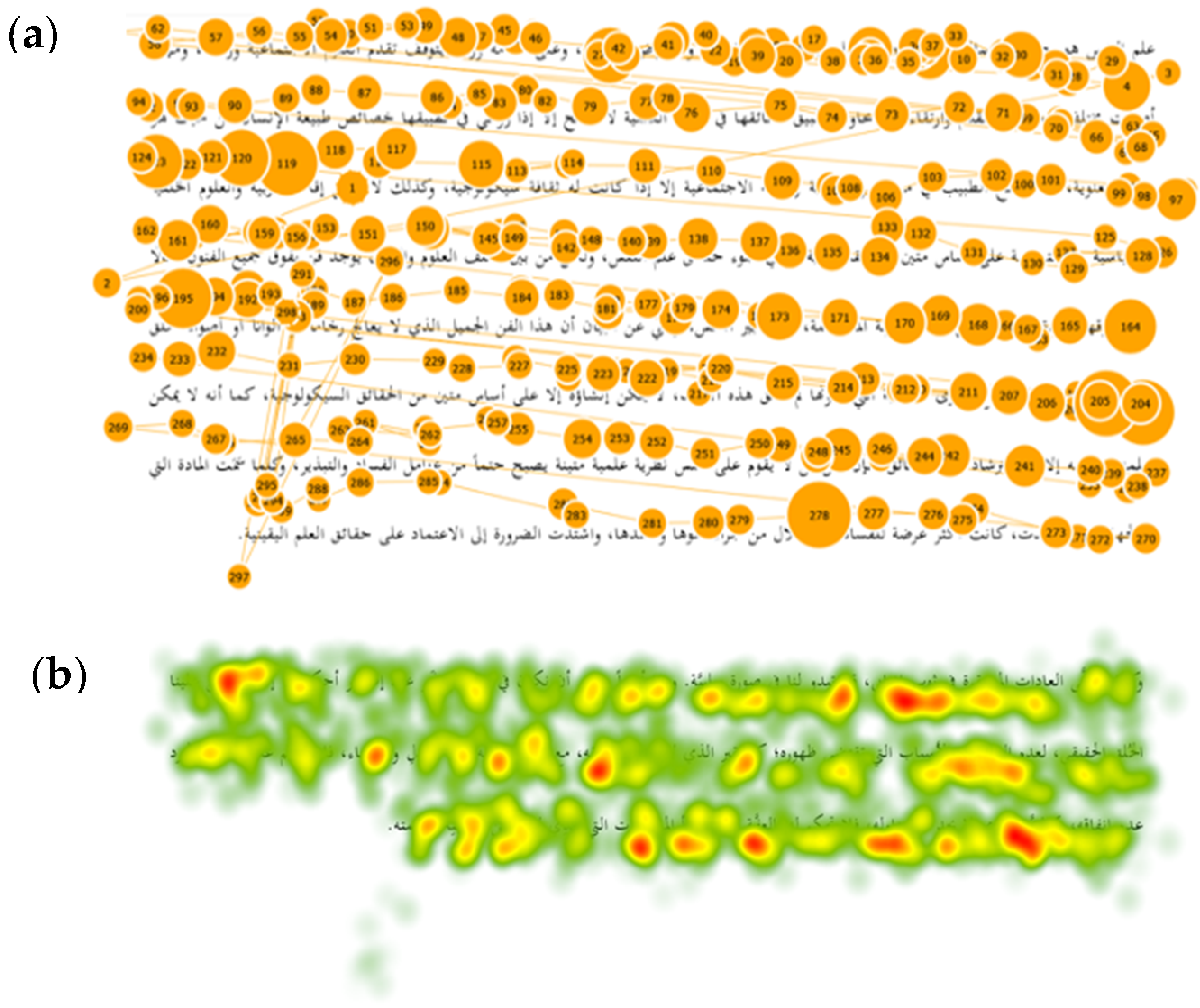

Visualization of Gaze Plots

Interpersonal Consistency in Reading Times

Association Between OSMAN and Other Features

5. Conclusions, Limitations, and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Corpus | Description | Used or Compiled |

|---|---|---|

| Targeting Arabic L1 Learners or Readers | ||

| Saudi Curriculum Texts | 60 Arabic texts, 20 each from the 3rd and 6th grade elementary and 3rd grade intermediate levels, with each text being around 100 words. | [5] |

| Saudi Curriculum Texts | 150 Arabic curriculum texts, with 50 each from elementary, intermediate, and secondary levels, totaling 57,089 tokens. | [3] |

| King Abdulaziz City for Science and Technology Arabic Corpus | Over 700 million words spanning a period of more than 1500 years; the materials are organized according to period, geographical area, format, field, and subject, allowing for search and exploration based on these categories. | [73] |

| United Nations Corpus | 73,000 corresponding English and Arabic paragraph pairs sourced from the United Nations corpus. | [72] |

| Jordanian Curriculum and Saudi Articles Dataset | 600 Saudi news articles and 866 Jordanian curriculum lessons, totaling 1200 records and 307,238 tokens, categorized into school and advanced readability levels. | [109] |

| Open-Source Corpus | 75,630 Arabic web pages not tailored to language learners; a subset of 8627 longer sentences was selected. | [123] |

| Jordanian Curriculum Texts | 1196 Arabic texts from the Jordanian elementary curriculum, covering different subjects. | [7] |

| Medicine Information Leaflets | 1112 Arabic medicine information leaflets, acquired from the King Abdullah Arabic Health Encyclopedia and the Saudi Food and Drug Authority. | [74,75] |

| Modern Standard Arabic Readability Corpus | 644 curriculum texts from Moroccan primary books, categorized into 7 difficulty levels ranging from kindergarten (Level 0) to the 6th grade (the final primary grade). | [76,102,124] |

| Targeting Arabic L2 Learners | ||

| GLOSS | Created by the Defense Language Institute Foreign Language Center; offers public access to over 7000 reading and listening lessons in 40 languages and dialects sorted into 11 difficulty levels based on the Interagency Language Roundtable proficiency scale. | [4,102,104,107,108,123,125,126,127,128,129] |

| Aljazeera Learning | Instructional Arabic texts on the Aljazeera website are categorized into 5 difficulty levels, from beginner to advanced. | [102,104,128,129] |

| Malaysian Curriculum Texts | 313 reading texts sourced from 13 religious curriculum textbooks for grades 1–5 in Malaysia. | [130] |

| Al-Kitaab fii Taʿallum al-ʿArabiyya | Textbook series commonly used to teach MSA as a second language. | [106,123] |

| Saaq al-Bambuu | An Arabic novel with an approved condensed edition for learners of Arabic as a foreign language. | [123] |

| Collected Web Texts | 39,792 documents manually sourced from the Web on various topics categorized into 4 readability levels: easy, medium, difficult, and very difficult. | [70] |

| Targeting both Arabic L1 and L2 Learners | ||

| A Leveled Reading Corpus of Modern Standard Arabic | Constructed from Arabic curriculum (grades 1–12) and adult fiction, categorized into 4 levels with a total of 22,240 documents. | [126] |

| Arabic Learner Corpus | Comprises Arabic texts by Saudi Arabian students, divided into non-native learners of Arabic and native speakers improving their written proficiency. | [123] |

Appendix B

| Level | Characteristics |

|---|---|

| Easy Paragraph |

|

| Moderate Paragraph |

|

| Difficult Paragraph |

|

| Level | Characteristics |

|---|---|

| Easy Document |

|

| Moderate Document |

|

| Difficult Document |

|

| Level | Characteristics |

|---|---|

| Easy Paragraph |

|

| Moderate Paragraph |

|

| Difficult Paragraph |

|

| Level | Characteristics |

|---|---|

| Easy Document |

|

| Moderate Document |

|

| Difficult Document |

|

Appendix C

Appendix C.1. Text-Based Metrics

Appendix C.1.1. Descriptive Features

| Feature | Description |

|---|---|

| Book Name and Author | The complete name of a book and its author(s). |

| Document Code | Includes the topic of the text from a book and a unique identifier for the text. |

| Paragraph Code | Includes the topic of the paragraph, a unique identifier for the text, and the sequence number of the paragraph in its source text. |

| Book Language | The language of the text, whether MSA or CA. Although MSA originated from CA, it has evolved and led to differences in structure and word complexity from CA [26]. |

| Book Topic | The topic of the book from which the text was chosen. It was assumed that texts on different topics would have different readability levels. The possible values of this feature are grammar and morphology, literature and eloquence, history, geography and travel, health and nutrition, philosophy, politics, biography, sociology, technology, psychology, commerce, and arts. |

| Publication Century | The century in which the book containing the text was published. The publication period was found to influence a text’s readability level [26]. The possible values for this feature are 8, 9, 10, 11, 12, 14, 20, and 21. |

| Authorship Type | The gender of the text’s author(s) was assumed to affect the text’s writing style, perspective, and reading experience. The possible values of this feature are single-gender (male) book, single-gender (female) book, and mixed-gender book. |

| Translation Type | The gender of the text’s translator(s). Like the gender of the author(s), it was assumed to affect the text’s readability level. The possible values of this feature are single-gender (male) translation, single-gender (female) translation, mixed-gender translation, and no translation. |

| Author Count | The number of authors of the book from which the text was taken was also assumed to affect text readability because each author has a different writing style, perspective, and experience. |

| Text Source | This indicates the part of the book from which the text was taken. It was assumed that texts taken from the author’s introduction at the start of a book would be easier to read than other book contents, which are usually deeper and more detailed. The possible values of this feature are introductory content and other book content. |

Appendix C.1.2. Textual Complexity Features

| Feature | Description |

|---|---|

| Listing Count | The number of all lists in a text, including bulleted, numbered, lettered, and number-word (e.g., first, second) lists. |

| Parenthesis Count | The number of all parenthesis pairs containing additional information or abbreviations in a text, including all parenthesis pairs for textual content [e.g., ( التجمُّد) and (العالم العربي)] and parenthesis pairs for numerical content [i.e., numbers, dates, times, years, and percentages; e.g., (١٩٦٠م)], and excluding parenthesis pairs used in numbered lists [e.g., (1) and (2)] and lettered lists [e.g., (أ) and (ب)] because they will be accounted for in the Listing Count feature. |

| Parenthetical Expression Count | The number of all parenthetical expressions between two dashes in a text (e.g., “- بما فيها من قوة الحياة -”). |

| Numerical Content Count | The number of all numerical content items, such as numbers, dates, times, years, and percentages, in a text (e.g., ٢٥٠٠ عام). Numbered lists [e.g., “-١”, “-٢”, “(١)”, and “(٢)”] are excluded because they are part of the Listing Count feature and are not considered numerical content. Sequences of numbers with attached characters are also counted (e.g., “١٦٤٨م” and “٨٩٣ م -٨٩٤”). |

| Religious Text Count | The number of all verses (“Ayah”) of the Holy Qur’an and of the Hadith, a statement of the Prophet Muhammad (peace be upon him), in the text. |

| Poem Verse Count | The number of verses (e.g., “وخالدٌ يَحْمَدُ أصحابُهُ ... بالحَقِّ لا يُحمَدُ بالباطلِ”) of Arabic poems in the text. One verse in an Arabic poem has two hemistichs (parts), which are separated by ellipses (“…”). However, some texts contain ellipses, such as “ومنها ما هو عارضٌ كالأديان والغَزَوات... إلخ”, which required manual revision. |
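
The exclusion rule described for the Parenthesis Count feature can be illustrated with a regular expression. This is a minimal sketch, not the procedure used to build the corpus: the function name `parenthesis_count` and the list-marker heuristic (one or two digits, or a single letter, between the parentheses) are assumptions introduced here for illustration.

```python
import re

# Content of every non-nested "( ... )" pair.
PAREN_CONTENT = re.compile(r"\(([^()]*)\)")

# Assumed heuristic for list markers such as (1), (٢), or (أ):
# one or two digits, or exactly one letter.
# In Python str patterns, "\d" also matches Arabic-Indic digits.
LIST_MARKER = re.compile(r"\d{1,2}|[^\W\d_]")


def parenthesis_count(text: str) -> int:
    """Count parenthesis pairs, skipping those that look like list markers."""
    count = 0
    for content in PAREN_CONTENT.findall(text):
        if not LIST_MARKER.fullmatch(content.strip()):
            count += 1
    return count
```

A heuristic like this cannot tell a short parenthesized number from a list marker in every case, so borderline matches would still need the kind of manual revision the appendix mentions for other features.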

Appendix C.1.3. Structural Complexity Features

| Feature | Description |

|---|---|

| Character Count | The number of characters in a text, excluding punctuation [7,87,109] and diacritics [72]. While certain studies [5,7,109] have linked this feature to Arabic text difficulty, other studies, such as [106], indicate that it may not substantially impact word complexity. |

| Word Count | The number of words in a text. This represents the text length in tokens using white space as a token separator in a text [105,106,109,125]. |

| Average Word Length | The average length of a word in characters per text [3,107]. This is calculated as follows [3,7,74,109]: Average Word Length = Character Count per Text/Word Count per Text. This feature has been used in a great deal of readability research to show the density of a text, as a denser text with longer words tends to be more difficult to read than a less dense text [3,7,125]. |

| Syllable Count | The total number of syllables in a text. Some studies indicate that in Arabic, words with more syllables do not significantly impact readability [26], as words with over three syllables can still be simple [3,72], contrary to studies suggesting that having more syllables in words affects readability [3,106]. |

| Average Syllables per Word | The average number of syllables per word is calculated as follows [3,107]: Average Syllables per Word = Syllable Count per Text/Word Count per Text. |

| Difficult Word Count | The number of difficult words in a text [7]. Scholars continue to debate the definition of “difficult words”, but several studies have defined them as words with six or more letters [7,108,109]. In this study, difficult words were defined as OSMAN Faseeh words, following [72]: words that have six or more characters and end with any of the following letters: ء, ئ, وء, ذ, ظ, وا, or ون. |

| Average Difficult Word Count | The average number of difficult words in a text is calculated as follows [7,109]: Average Number of Difficult Words = Difficult Word Count per Text/Word Count per Text. |

| Unique Loan Word Count | The number of loan words used in a text, excluding repetitions. |

| Total Loan Word Count | The total number of loan words used in a text, counting repetitions. This is the same as the previous feature, except that in this feature, every occurrence of a loan word is counted. |

| Unique Foreign Word Count | The number of foreign words used in a text (e.g., herbalists, Thomas More, and apothecary), excluding repetitions. |

| Total Foreign Word Count | The total number of foreign words, including repetitions, in a text. This is the same as the previous feature, except that in this feature, each occurrence of a word is counted. |

| Foreign-Words-to-Token Ratio | The percentage of foreign words in a text [104] is calculated as follows: Foreign-Words-to-Token Ratio = Total Foreign Word Count per Text/Word Count per Text. |

| Loan-Words-to-Token Ratio | The percentage of loan words in a text [104] is calculated as follows: Loan-Words-to-Token Ratio = Total Loan Word Count per Text/Word Count per Text. |
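
The word-level formulas in the table above can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions, not the authors' extraction pipeline: the names `strip_marks` and `word_level_features` are hypothetical, punctuation and diacritics are identified through Unicode general categories (P* and M*), and tokens that become empty after cleaning are dropped from the word count.

```python
import unicodedata

# Endings that mark an OSMAN Faseeh word when the word has six or more characters.
FASEEH_ENDINGS = ("ء", "ئ", "وء", "ذ", "ظ", "وا", "ون")


def strip_marks(token: str) -> str:
    """Remove punctuation (category P*) and diacritics (category M*)."""
    return "".join(
        ch for ch in token
        if not unicodedata.category(ch).startswith(("M", "P"))
    )


def word_level_features(text: str) -> dict:
    # Whitespace tokenization; tokens left empty after cleaning are dropped.
    words = [w for w in map(strip_marks, text.split()) if w]
    if not words:
        raise ValueError("text contains no words")
    char_count = sum(len(w) for w in words)
    difficult = sum(
        1 for w in words if len(w) >= 6 and w.endswith(FASEEH_ENDINGS)
    )
    return {
        "character_count": char_count,
        "word_count": len(words),
        "average_word_length": char_count / len(words),
        "difficult_word_count": difficult,
        "average_difficult_word_count": difficult / len(words),
    }
```

The loan-word and foreign-word ratios follow the same pattern (a count divided by `word_count`), but they require word lists that are not reproduced here.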

| Feature | Description |

|---|---|

| Sentence Count | The number of sentences in a text. This feature suggests that sentence length and structure affect text difficulty [7,109]. For an accurate readability assessment, sentences are counted based on meaning, focusing on complete, meaningful units rather than merely punctuation [105,108]. |

| Average Sentence Length in Words | The average number of words in a sentence [3,5,107,125] is calculated as follows [3,7,74,109]: Average Sentence Length in Words = Word Count per Text/Sentence Count per Text. This feature is widely considered a key measure of readability in readability formulas and studies [3,5,7,106,125,128] due to the belief that longer sentences are harder to read and understand [5,75]. |

| Average Sentence Length in Characters | The average number of characters per sentence in the text is calculated as follows: Average Sentence Length in Characters = Character Count per Text/Sentence Count per Text. This feature indicates the density of a text. Denser texts, or those with higher average sentence lengths in characters, tend to be more difficult to read than less dense texts [3,7]. |

| Paragraph Count | The number of paragraphs in a text. This might affect a text’s organization and how easily readers can digest the information. |
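
The two sentence-length averages can be computed once a text has been segmented. Because the table states that sentences are counted by meaning rather than by punctuation alone, the sketch below assumes the segmentation has already been done and takes a list of sentences as input; the function name `sentence_level_features` and the Unicode-category filter for punctuation and diacritics are assumptions made for illustration.

```python
import unicodedata
from typing import List


def sentence_level_features(sentences: List[str]) -> dict:
    """Average sentence length in words and in characters for one text."""
    if not sentences:
        raise ValueError("at least one sentence is required")
    word_count = 0
    char_count = 0
    for sentence in sentences:
        for token in sentence.split():
            # Keep only characters that are neither punctuation nor diacritics.
            cleaned = [
                ch for ch in token
                if not unicodedata.category(ch).startswith(("M", "P"))
            ]
            if cleaned:
                word_count += 1
                char_count += len(cleaned)
    return {
        "sentence_count": len(sentences),
        "average_sentence_length_in_words": word_count / len(sentences),
        "average_sentence_length_in_characters": char_count / len(sentences),
    }
```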

Appendix C.1.4. Readability Scores

Appendix C.1.5. Stylistic Features

| Feature | Description |

|---|---|

| Text Style | The method of choosing and composing words to express meanings for the purpose of clarification and influence. Possible values for this feature include scientific, literary, literary scientific, and social scientific. |

| Script Style | The method that the text writer used to prepare, organize, and produce the text. Possible values for this feature include argumentative, expository, guideline, narrative, informative, and demonstrative. |

| Linguistic Style | The approach that the text writer followed in creating vocabulary and structures to express meanings. Possible values for this feature include informative, structural, and mixed informative and structural. |

| Writing Technique | An expression mechanism innovated by the text writer. Possible values for this feature include critic, mentor and educator, objective researcher, the narrator, and subjective. |

Appendix D

Appendix D.1. Fixation Metrics

| Metric | Description |

|---|---|

| Time to First Fixation | The period between the onset of a trial containing an AOI and the moment a participant fixated inside the AOI. It measures how long it takes participants to notice and fixate on the AOI. A longer time to first fixation suggests a longer task completion time [41,43,132]. |

| Fixations Before | The number of fixations a participant made in a trial before first fixating on the AOI. The fixation count begins when the medium that contains the AOI is presented for the first time and ends with the participant’s first fixation on the AOI [41]. |

| First Fixation Duration | The duration of a participant’s first fixation inside an AOI [16,43,111]. In reading studies, a higher first fixation duration indicates difficulty in processing the text by reflecting both syntactic processing and low-level lexical access [16]. |

| Single Fixation Duration | The average duration of each (single) fixation inside an AOI [19,41,77]. While there is an assumption that this duration reflects a reader’s engagement in reading [97], longer fixations are believed to reflect increased cognitive effort during reading [13,132,133]. |

| Total Fixation Duration | The total time a participant spent fixating inside an AOI in a trial, including regressions to that AOI (refixations after the AOI was left) [13,17,36,40,41,43,44,95,110,111]. A longer total duration could indicate higher interest in, or perceived importance of, the AOI; alternatively, it could indicate deeper processing, possibly because the AOI is confusing [13,16,110]. |

| Average Fixation Duration | The average duration of all of a participant’s fixations inside an AOI [13,17,19,43,133]. It is calculated as follows: Average Fixation Duration = Total Fixation Duration/Total Fixation Count. This measure can distinguish AOIs that receive more attention, and it correlates strongly with text difficulty. Prolonged durations on certain words may indicate their complexity for the reader [43]. |

| Total Fixation Count | The total number of fixations on a specific AOI of a trial [43,110,111]. A higher total fixation count suggests that the AOI was either attractive to the participant or required greater visual effort [43,44,133]. Increased fixations are associated with comprehension difficulties and text complexity in reading studies [13,17,132]. |

| Percentage Fixated | The percentage of eye-tracking recordings in which the participants fixated on an AOI at least once [41]. |

| Average Number of Fixations per Word | When working with text, the fixation count can be adjusted based on the text length by calculating the normalized number of fixations for each trial [17,133]. This metric is calculated as follows [17]: Average Number of Fixations per Word = Total Fixation Count/Word Count. |

| Fixation Rate | The number of fixations per second. For comprehension tasks, a high fixation rate indicates either participant interest or AOI difficulty. This metric is calculated as follows [134]: Fixation Rate = Total Fixation Count/Total Fixation Duration. Measuring both the fixation count and the fixation duration is crucial as they can vary independently. Thus, the fixation rate provides insight into how frequently an AOI is fixated on relative to the overall time spent fixating on it [133,134]. |
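
To make the definitions above concrete, the following sketch derives several of the fixation metrics for one AOI from an ordered list of fixations. It is a minimal illustration under stated assumptions, not the export logic of any eye-tracking software: the `Fixation` record, its field names, and the function `fixation_metrics` are hypothetical, onsets are assumed to be measured from trial onset, and the fixation rate is expressed in fixations per second of fixation time.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Fixation:
    onset_ms: float      # fixation start, relative to trial onset
    duration_ms: float
    aoi: Optional[str]   # AOI label, or None when outside every AOI


def fixation_metrics(fixations: List[Fixation], aoi: str) -> Optional[dict]:
    """Summarize fixations on one AOI; `fixations` must be in temporal order."""
    hits = [(i, f) for i, f in enumerate(fixations) if f.aoi == aoi]
    if not hits:
        return None  # the AOI was never fixated in this trial
    first_index, first = hits[0]
    total_ms = sum(f.duration_ms for _, f in hits)
    count = len(hits)
    return {
        "time_to_first_fixation_ms": first.onset_ms,
        "fixations_before": first_index,
        "first_fixation_duration_ms": first.duration_ms,
        "total_fixation_duration_ms": total_ms,
        "total_fixation_count": count,
        "average_fixation_duration_ms": total_ms / count,
        "fixation_rate_per_s": count / (total_ms / 1000.0),
    }
```

Percentage Fixated would then be the share of recordings for which this function returns a result rather than `None`, and dividing `total_fixation_count` by the word count of the AOI gives the Average Number of Fixations per Word.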

Appendix D.2. Saccade Metrics

| Metric | Description |

|---|---|

| Total Saccade Count | The number of saccades that occurred in a trial [43]. A higher count indicates increased searching and mental workload, providing insight into how eye movements are affected by material difficulty [133]. |

| Total Saccade Duration | The time taken between the start and the end of the search path [44]. Shorter saccades indicate comprehension difficulties and higher mental workload [13,44,133], whereas longer saccades are associated with more readable texts and shorter reading times [38]. |

| Average Saccade Duration | The estimated speed of processing information. A longer duration suggests increased cognitive effort, indicating that more time is spent on comprehending the content [97,110]. This is calculated as follows: Average Saccade Duration = Total Saccade Duration/Total Saccade Count. |

| Saccadic Amplitude | The saccade size is measured in degrees (the angular distance) [132]. This metric represents the distance spanned by the eyes during a saccade [43]. This distance tends to decrease as task difficulty and cognitive load increase, indicating the focused, detailed visual exploration that is commonly used to deal with complex, information-rich material requiring careful analysis [132]. |

| Saccade-to-Fixation Ratio | The ratio of the time spent on information search (saccades) to the time spent on cognitive information processing (fixations) [44]. A higher value of this metric indicates more searching compared to processing (more saccades and fewer fixations) [133]. This is calculated as follows: Saccade-to-Fixation Ratio = Total Saccade Duration/Total Fixation Duration. |

| Absolute Saccadic Direction | The angle between the horizontal axis and the current fixation point, with the prior fixation location serving as the origin of the coordinate system, calculated using a unit circle. The direction of a participant’s gaze implicitly reflects the participant’s area of attention [132]. |

| Relative Saccadic Direction | The change in angle between the current saccade and the prior saccade. It is calculated using the difference between the absolute directions of two consecutive saccades [132]. |
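
The two direction metrics can be sketched from consecutive fixation positions. The code below is an illustration under stated assumptions rather than the computation performed by a particular eye tracker: the function names are hypothetical, positions are assumed to be screen coordinates whose y axis grows downward (so y is flipped before taking the angle), and both angles are wrapped to the range [0, 360) degrees.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def absolute_directions(points: List[Point]) -> List[float]:
    """Angle of each saccade in degrees, counterclockwise from the +x axis."""
    angles = []
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        dx = x1 - x0
        dy = -(y1 - y0)  # assumed: screen y grows downward, so flip it
        angles.append(math.degrees(math.atan2(dy, dx)) % 360.0)
    return angles


def relative_directions(angles: List[float]) -> List[float]:
    """Difference between consecutive absolute directions, wrapped to [0, 360)."""
    return [(b - a) % 360.0 for a, b in zip(angles, angles[1:])]
```

Under these conventions, a forward saccade along a right-to-left line of Arabic text has an absolute direction near 180 degrees, whereas the same movement in left-to-right text would be near 0 degrees.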

Appendix D.3. Visit Metrics

| Metric | Description |

|---|---|

| Total Visit Count | The number of visits a participant made to an AOI, reflecting how many times the participant entered the AOI and fixated within it [41,43]. This metric helps identify areas that captured a participant’s attention frequently [132], indicating their importance or the participant’s need to revisit them for understanding or memory [13,43,44]. |

| Single Visit Duration | The average duration of each (single) visit to an AOI [41]. |

| Total Visit Duration | The duration of all visits that occurred in an AOI, starting from the first fixation in this AOI until a further fixation occurred in a subsequent AOI [41,43]. Longer durations typically indicate greater difficulty in processing the text within the AOI [16]. |

| Average Visit Duration | The average duration of all visits to an AOI, indicating the average time spent fixating on an AOI [43]. It is calculated as follows: Average Visit Duration = Total Visit Duration/Total Visit Count. |

| Average Number of Visits per Word | For text analysis, visit counts can be normalized as follows to account for varying word counts in different texts [17,133]: Average Number of Visits per Word = Total Visit Count/Word Count. |
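
Visit metrics differ from fixation metrics in that consecutive fixations inside the same AOI are merged into one visit. The sketch below shows one way to do this grouping; it is an illustration, not the vendor's algorithm. The tuple layout `(onset_ms, duration_ms, aoi)`, the function name `visit_metrics`, and the choice to measure a visit from the onset of its first fixation to the end of its last fixation are assumptions made here.

```python
from itertools import groupby
from typing import List, Optional, Tuple

# (onset_ms, duration_ms, aoi) for each fixation, in temporal order
FixationRow = Tuple[float, float, Optional[str]]


def visit_metrics(fixations: List[FixationRow], aoi: str) -> Optional[dict]:
    """Count and time the visits to one AOI."""
    durations = []
    # groupby yields runs of consecutive fixations that share an AOI label.
    for label, run in groupby(fixations, key=lambda row: row[2]):
        if label != aoi:
            continue
        rows = list(run)
        start = rows[0][0]
        end = rows[-1][0] + rows[-1][1]
        durations.append(end - start)
    if not durations:
        return None  # the AOI was never visited
    total = sum(durations)
    return {
        "total_visit_count": len(durations),
        "total_visit_duration_ms": total,
        "average_visit_duration_ms": total / len(durations),
    }
```

Dividing `total_visit_count` by the number of words in the AOI gives the Average Number of Visits per Word.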

Appendix D.4. Pupil Metrics

Appendix D.5. Experimental Condition Metrics

| Metrics | Submetrics |

|---|---|

| Rating Fixation | Rating Total Fixation Duration, Rating Total Fixation Count, Rating Percentage Fixated |

| Rating Visit | Rating Total Visit Count, Rating Total Visit Duration |

| Recording Duration | Recording Duration |

Appendix D.6. Recording of General Information Metrics

References

- Balyan, R.; McCarthy, K.S.; McNamara, D.S. Comparing Machine Learning Classification Approaches for Predicting Expository Text Difficulty. In Proceedings of the Thirty-First International Flairs Conference, Melbourne, FL, USA, 21–23 May 2018; pp. 421–426.

- Collins-Thompson, K. Computational assessment of text readability: A survey of current and future research. ITL Int. J. Appl. Linguist. 2014, 165, 97–135.

- Al-Khalifa, H.S.; Al-Ajlan, A.A. Automatic readability measurements of the Arabic text: An exploratory study. Arab. J. Sci. Eng. 2010, 35, 103–124.

- Nassiri, N.; Lakhouaja, A.; Cavalli-Sforza, V. Modern Standard Arabic Readability Prediction. In Proceedings of the Arabic Language Processing: From Theory to Practice (ICALP 2017), Fez, Morocco, 11–12 October 2017; pp. 120–133.

- Al-Ajlan, A.A.; Al-Khalifa, H.S.; Al-Salman, A.S. Towards the development of an automatic readability measurements for Arabic language. In Proceedings of the Third International Conference on Digital Information Management, London, UK, 13–16 November 2008; pp. 506–511.

- Dale, E.; Chall, J.S. The Concept of Readability. Elem. Engl. 1949, 26, 19–26.

- Al Tamimi, A.K.; Jaradat, M.; Al-Jarrah, N.; Ghanem, S. AARI: Automatic Arabic readability index. Int. Arab J. Inf. Technol. 2014, 11, 370–378.

- Baazeem, I. Analysing the Effects of Latent Semantic Analysis Parameters on Plain Language Visualisation. Master’s Thesis, Queensland University, Brisbane, Australia, 2015.

- Mesgar, M.; Strube, M. Graph-based coherence modeling for assessing readability. In Proceedings of the Fourth Joint Conference on Lexical and Computational Semantics, Denver, CO, USA, 4–5 June 2015; pp. 309–318.

- Cavalli-Sforza, V.; Saddiki, H.; Nassiri, N. Arabic Readability Research: Current State and Future Directions. Procedia Comp. Sci. 2018, 142, 38–49.

- Feng, L.; Elhadad, N.M.; Huenerfauth, M. Cognitively motivated features for readability assessment. In Proceedings of the 12th Conference of the European Chapter of the Association for Computational Linguistics, Athens, Greece, 30 March–3 April 2009; pp. 229–237.

- Balakrishna, S.V. Analyzing Text Complexity and Text Simplification: Connecting Linguistics, Processing and Educational Applications. Ph.D. Thesis, der Eberhard Karls Universität Tübingen, Tübingen, Germany, 2015.

- Vajjala, S.; Meurers, D.; Eitel, A.; Scheiter, K. Towards grounding computational linguistic approaches to readability: Modeling reader-text interaction for easy and difficult texts. In Proceedings of the Workshop on Computational Linguistics for Linguistic Complexity (CL4LC), Osaka, Japan, 11 December 2016; pp. 38–48.

- Vajjala, S.; Lucic, I. On understanding the relation between expert annotations of text readability and target reader comprehension. In Proceedings of the Fourteenth Workshop on Innovative Use of NLP for Building Educational Applications, Florence, Italy, 2 August 2019; pp. 349–359.

- Mathias, S.; Kanojia, D.; Mishra, A.; Bhattacharyya, P. A Survey on Using Gaze Behaviour for Natural Language Processing. In Proceedings of the Twenty-Ninth International Joint Conference on Artificial Intelligence (IJCAI-20) Survey Track, Yokohama, Japan, 7–15 January 2021; pp. 4907–4913.

- Singh, A.D.; Mehta, P.; Husain, S.; Rajkumar, R. Quantifying sentence complexity based on eye-tracking measures. In Proceedings of the Workshop on Computational Linguistics for Linguistic Complexity (CL4LC), Osaka, Japan, 11 December 2016; pp. 202–212.

- Copeland, L.; Gedeon, T.; Caldwell, S. Effects of text difficulty and readers on predicting reading comprehension from eye movements. In Proceedings of the 6th IEEE International Conference on Cognitive Infocommunications (CogInfoCom), Gyor, Hungary, 19–21 October 2015; pp. 407–412.

- Kennedy, A.; Hill, R.; Pynte, J.E. The Dundee Corpus. In Proceedings of the 12th European Conference on Eye Movements, Dundee, Scotland, 20–24 August 2003.

- Hollenstein, N. Leveraging Cognitive Processing Signals for Natural Language Understanding. Ph.D. Thesis, ETH Zurich, Zürich, Switzerland, 2021.

- Cop, U.; Dirix, N.; Drieghe, D.; Duyck, W. Presenting GECO: An eyetracking corpus of monolingual and bilingual sentence reading. Behav. Res. Methods 2017, 49, 602–615.

- Mathias, S.; Murthy, R.; Kanojia, D.; Mishra, A.; Bhattacharyya, P. Happy are those who grade without seeing: A multi-task learning approach to grade essays using gaze behaviour. In Proceedings of the 1st Conference of the Asia-Pacific Chapter of the Association for Computational Linguistics and the 10th International Joint Conference on Natural Language Processing, Suzhou, China, 4–7 December 2020; pp. 858–872.

- Hollenstein, N.; Rotsztejn, J.; Troendle, M.; Pedroni, A.; Zhang, C.; Langer, N. ZuCo, a simultaneous EEG and eye-tracking resource for natural sentence reading. Sci. Data 2018, 5, 13.

- Hermena, E.W.; Drieghe, D.; Hellmuth, S.; Liversedge, S.P. Processing of Arabic diacritical marks: Phonological–syntactic disambiguation of homographic verbs and visual crowding effects. J. Exp. Psychol. Hum. Percept. Perform. 2015, 41, 494.

- Al-Samarraie, H.; Sarsam, S.M.; Alzahrani, A.I.; Alalwan, N. Reading text with and without diacritics alters brain activation: The case of Arabic. Curr. Psychol. 2020, 39, 1189–1198.

- Paterson, K.B.; Almabruk, A.A.A.; McGowan, V.A.; White, S.J.; Jordan, T.R. Effects of word length on eye movement control: The evidence from Arabic. Psychon. Bull. Rev. 2015, 22, 1443–1450.

- Baazeem, I.; Al-Khalifa, H.; Al-Salman, A. Cognitively Driven Arabic Text Readability Assessment Using Eye-Tracking. Appl. Sci. 2021, 11, 8607.

- El-Haj, M.; Kruschwitz, U.; Fox, C. Creating language resources for under-resourced languages: Methodologies, and experiments with Arabic. Lang. Resour. Eval. 2015, 49, 549–580.

- Alnefaie, R.; Azmi, A.M. Automatic minimal diacritization of Arabic texts. Procedia Comput. Sci. 2017, 117, 169–174.

- Hermena, E.W.; Bouamama, S.; Liversedge, S.P.; Drieghe, D. Does diacritics-based lexical disambiguation modulate word frequency, length, and predictability effects? An eye-movements investigation of processing Arabic diacritics. PLoS ONE 2021, 16, e0259987.

- AlJassmi, M.A.; Hermena, E.W.; Paterson, K.B. Eye movements in Arabic reading. Exp. Arab. Linguist. 2021, 10, 85–108.

- Roman, G.; Pavard, B. A comparative study: How we read in Arabic and French. In Eye Movements from Physiology to Cognition; Elsevier: Amsterdam, The Netherlands, 1987; pp. 431–440.

- Azmi, A.M.; Alnefaie, R.M.; Aboalsamh, H.A. Light Diacritic Restoration to Disambiguate Homographs in Modern Arabic Texts. ACM Trans. Asian Low-Resour. Lang. Inf. Process. 2021, 21, 1–14.

- Bouamor, H.; Zaghouani, W.; Diab, M.; Obeid, O.; Oflazer, K.; Ghoneim, M.; Hawwari, A. A pilot study on Arabic multi-genre corpus diacritization. In Proceedings of the Second Workshop on Arabic Natural Language Processing, Beijing, China, 26–31 July 2015; pp. 80–88.

- Klaib, A.F.; Alsrehin, N.O.; Melhem, W.Y.; Bashtawi, H.O.; Magableh, A.A. Eye tracking algorithms, techniques, tools, and applications with an emphasis on machine learning and Internet of Things technologies. Expert Syst. Appl. 2021, 166, 114037.

- Singh, H.; Singh, J. Human eye tracking and related issues: A review. Int. J. Sci. Res. Publ. 2012, 2, 1–9.

- Conklin, K.; Pellicer-Sánchez, A. Using eye-tracking in applied linguistics and second language research. Sec. Lang. Res. 2016, 32, 453–467.

- Just, M.A.; Carpenter, P.A. A theory of reading: From eye fixations to comprehension. Psychol. Rev. 1980, 87, 329.

- Grabar, N.; Farce, E.; Sparrow, L. Study of readability of health documents with eye-tracking approaches. In Proceedings of the 1st Workshop on Automatic Text Adaptation (ATA), Tilburg, The Netherlands, 8 November 2018.

- Mathias, S.; Kanojia, D.; Patel, K.; Agarwal, S.; Mishra, A.; Bhattacharyya, P. Eyes are the windows to the soul: Predicting the rating of text quality using gaze behaviour. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Long Papers), Melbourne, Australia, 15–20 July 2018; pp. 2352–2362.

- Gonzalez-Garduno, A.V.; Søgaard, A. Using gaze to predict text readability. In Proceedings of the 12th Workshop on Innovative Use of NLP for Building Educational Applications, Copenhagen, Denmark, 8 September 2017; pp. 438–443.

- Tobii Technology AB. Tobii Studio User’s Manual (Version 3.4.8); Tobii Technology AB: Danderyd, Sweden, 2017; p. 172.

- Tobii Technology AB. Fundamentals. Available online: https://developer.tobii.com/xr/learn/analytics/fundamentals/ (accessed on 7 July 2023).

- Tobii Technology AB. Tobii Pro Lab User’s Manual (Version 1.130); Tobii Technology AB: Danderyd, Sweden, 2019.

- Wu, X.; Xue, C.; Zhou, F. An Experimental Study on Visual Search Factors of Information Features in a Task Monitoring Interface. In Proceedings of the Human-Computer Interaction: Users and Contexts, Los Angeles, CA, USA, 2–7 August 2015; pp. 525–536.

- Al-Wabil, A.; Al-Sheaha, M. Towards an interactive screening program for developmental dyslexia: Eye movement analysis in reading Arabic texts. In Proceedings of the 12th International Conference on Computers Helping People with Special Needs, Vienna, Austria, 14–16 July 2010; pp. 25–32. [Google Scholar]

- Al-Edaily, A.; Al-Wabil, A.; Al-Ohali, Y. Dyslexia Explorer: A Screening System for Learning Difficulties in the Arabic Language Using Eye Tracking. In Proceedings of the Human Factors in Computing and Informatics, Maribor, Slovenia, 1–3 July 2013; pp. 831–834. [Google Scholar]

- Al-Edaily, A.; Al-Wabil, A.; Al-Ohali, Y. Interactive Screening for Learning Difficulties: Analyzing Visual Patterns of Reading Arabic Scripts with Eye Tracking. In Proceedings of the HCI International 2013—Posters’ Extended Abstracts, Las Vegas, NV, USA, 21–26 July 2013; pp. 3–7. [Google Scholar]

- Blanken, G.; Dorn, M.; Sinn, H. Inversion errors in Arabic number reading: Is there a nonsemantic route? Brain Cogn. 1997, 34, 404–423. [Google Scholar] [CrossRef] [PubMed]

- Naz, S.; Razzak, M.I.; Hayat, K.; Anwar, M.W.; Khan, S.Z. Challenges in baseline detection of Arabic script based languages. In Proceedings of the Intelligent Systems for Science and Information: Extended and Selected Results from the Science and Information Conference, London, UK, 7–9 October 2013; pp. 181–196. [Google Scholar]

- Wiechmann, D.; Qiao, Y.; Kerz, E.; Mattern, J. Measuring the impact of (psycho-) linguistic and readability features and their spill over effects on the prediction of eye movement patterns. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics, Dublin, Ireland, 22–27 May 2022; pp. 5276–5290. [Google Scholar]

- Frazier, L.; Rayner, K. Making and correcting errors during sentence comprehension: Eye movements in the analysis of structurally ambiguous sentences. Cogn. Psychol. 1982, 14, 178–210. [Google Scholar] [CrossRef]

- Rayner, K.; Chace, K.H.; Slattery, T.J.; Ashby, J. Eye movements as reflections of comprehension processes in reading. Sci. Stud. Read. 2006, 10, 241–255. [Google Scholar] [CrossRef]

- Liversedge, S.P.; Paterson, K.B.; Pickering, M.J. Chapter 3—Eye Movements and Measures of Reading Time. In Eye Guidance in Reading and Scene Perception; Underwood, G., Ed.; Elsevier Science Ltd.: Amsterdam, The Netherlands, 1998; pp. 55–75. [Google Scholar]

- Raney, G.E.; Campbell, S.J.; Bovee, J.C. Using eye movements to evaluate the cognitive processes involved in text comprehension. J. Vis. Exp. 2014, 10, 50780. [Google Scholar] [CrossRef]

- Schroeder, S.; Hyönä, J.; Liversedge, S.P. Developmental eye-tracking research in reading: Introduction to the special issue. J. Cogn. Psychol. 2015, 27, 500–510. [Google Scholar] [CrossRef]

- Atvars, A. Eye movement analyses for obtaining Readability Formula for Latvian texts for primary school. Procedia Comp. Sci. 2017, 104, 477–484. [Google Scholar] [CrossRef]

- Sinha, A.; Roy, D.; Chaki, R.; De, B.K.; Saha, S.K. Readability analysis based on cognitive assessment using physiological sensing. IEEE Sens. J. 2019, 19, 8127–8135. [Google Scholar] [CrossRef]

- Zubov, V.I.; Petrova, T.E. Lexically or grammatically adapted texts: What is easier to process for secondary school children? In Proceedings of the 24th International Conference on Knowledge-Based and Intelligent Information & Engineering Systems, Petersburg, Russia, 16–18 September 2020; pp. 2117–2124. [Google Scholar]

- Nassiri, N.; Cavalli-Sforza, V.; Lakhouaja, A. Approaches, Methods, and Resources for Assessing the Readability of Arabic Texts. ACM Trans. Asian Low Resour. Lang. Inf. Process. 2023, 22, 1–30. [Google Scholar] [CrossRef]

- Bensoltana, D.; Asselah, B. Exploration of Arabic reading, in terms of the vocalization of the text form by registering the eyes movements of pupils. World J. Neurosci. 2013, 3, 263–268. [Google Scholar] [CrossRef]

- Maroun, M.; Hanley, J.R. Are alternative meanings of an Arabic homograph activated even when it is disambiguated by vowel diacritics? Writ. Syst. Res. 2020, 11, 203–211. [Google Scholar] [CrossRef]

- Awadh, F.H.; Zoubrinetzky, R.; Zaher, A.; Valdois, S. Visual attention span as a predictor of reading fluency and reading comprehension in Arabic. Front. Psychol. 2022, 13, 868530. [Google Scholar] [CrossRef] [PubMed]

- Hallberg, A.; Niehorster, D.C. Parsing written language with non-standard grammar: An eye-tracking study of case marking in Arabic. Read. Writ. 2021, 34, 27–48. [Google Scholar] [CrossRef]

- Leung, T.; Boush, F.; Chen, Q.; Al Kaabi, M. Eye movements when reading spaced and unspaced texts in Arabic. In Proceedings of the Annual Meeting of the Cognitive Science Society, Vienna, Austria, 26–29 July 2021; pp. 439–444. [Google Scholar]

- Khateb, A.; Asadi, I.A.; Habashi, S.; Korinth, S.P. Role of morphology in visual word recognition: A parafoveal preview study in Arabic using eye-tracking. Theory Pract. Lang. Stud. 2022, 12, 1030–1038. [Google Scholar] [CrossRef]

- Hermena, E.W.; Juma, E.J.; AlJassmi, M. Parafoveal processing of orthographic, morphological, and semantic information during reading Arabic: A boundary paradigm investigation. PLoS ONE 2021, 16, e0254745. [Google Scholar] [CrossRef]

- Al-Khalefah, K.; Al-Khalifa, H.S. Reading Process of Arab Children: An Eye-Tracking Study on Saudi Elementary Students. Int. J. Asian Lang. Process. 2021, 31, 2150003. [Google Scholar] [CrossRef]

- Hermena, E.W.; Reichle, E.D. Insights from the study of Arabic reading. Lang. Linguist. Compass. 2020, 14, 1–26. [Google Scholar] [CrossRef]

- Lahoud, H.; Eviatar, Z.; Kreiner, H. Eye-movement patterns in skilled Arabic readers: Effects of specific features of Arabic versus universal factors. Read. Writ. 2023, 37, 1079–1108. [Google Scholar] [CrossRef]

- Bessou, S.; Chenni, G. Efficient measuring of readability to improve documents accessibility for arabic language learners. J. Digit. Inf. Manag. 2021, 19, 75–82. [Google Scholar] [CrossRef]

- Nassiri, N.; Cavalli-Sforza, V.; Lakhouaja, A. MoSAR: Modern Standard Arabic Readability Corpus for L1 Learners. In Proceedings of the 4th International Conference on Big Data and Internet of Things (BDIoT’19), Rabat, Morocco, 23–24 October 2019; pp. 1–7. [Google Scholar]

- El-Haj, M.; Rayson, P. OSMAN―A Novel Arabic Readability Metric. In Proceedings of the Tenth International Conference on Language Resources and Evaluation (LREC’16), Portorož, Slovenia, 23–28 May 2016; pp. 250–255. [Google Scholar]

- Daud, N.M.; Hassan, H.; Aziz, N.A. A corpus-based readability formula for estimate of Arabic texts reading difficulty. World Appl. Sci. J. 2013, 21, 168–173. [Google Scholar]

- Al Aqeel, S.; Abanmy, N.; Aldayel, A.; Al-Khalifa, H.; Al-Yahya, M.; Diab, M. Readability of written medicine information materials in Arabic language: Expert and consumer evaluation. BMC Health Serv. Res. 2018, 18, 139. [Google Scholar] [CrossRef]

- Alotaibi, S.; Alyahya, M.; Al-Khalifa, H.; Alageel, S.; Abanmy, N. Readability of Arabic Medicine Information Leaflets: A Machine Learning Approach. Procedia Comp. Sci. 2016, 82, 122–126. [Google Scholar] [CrossRef]

- Nassiri, N.; Lakhouaja, A.; Cavalli-Sforza, V. Combining Classical and Non-classical Features to Improve Readability Measures for Arabic First Language Texts. In Proceedings of the International Conference on Advanced Intelligent Systems for Sustainable Development, Tangier, Morocco, 21–26 December 2020; pp. 463–470. [Google Scholar]

- Barrett, M. Improving Natural Language Processing with Human Data: Eye Tracking and Other Data Sources Reflecting Cognitive Text Processing. Ph.D. Thesis, University of Copenhagen, Copenhagen, Denmark, 2018. [Google Scholar]

- Luke, S.G.; Christianson, K. The Provo Corpus: A large eye-tracking corpus with predictability norms. Behav. Res. Methods. 2018, 50, 826–833. [Google Scholar] [CrossRef] [PubMed]

- Salicchi, L.; Chersoni, E.; Lenci, A. A study on surprisal and semantic relatedness for eye-tracking data prediction. Front. Psychol. 2023, 14, 1112365. [Google Scholar] [CrossRef] [PubMed]

- Hollenstein, N.; Troendle, M.; Zhang, C.; Langer, N. ZuCo 2.0: A dataset of physiological recordings during natural reading and annotation. In Proceedings of the Twelfth Language Resources and Evaluation Conference, Marseille, France, 11–16 May 2020; pp. 138–146. [Google Scholar]

- Leal, S.E.; Duran, M.S.; Aluísio, S. A nontrivial sentence corpus for the task of sentence readability assessment in Portuguese. In Proceedings of the 27th International Conference on Computational Linguistics, Santa Fe, NM, USA, 20–26 August 2018; pp. 401–413. [Google Scholar]

- Leal, S.E.; Lukasova, K.; Carthery-Goulart, M.T.; Aluísio, S.M. RastrOS Project: Natural Language Processing contributions to the development of an eye-tracking corpus with predictability norms for Brazilian Portuguese. Lang. Resour. Eval. 2022, 56, 1333–1372. [Google Scholar] [CrossRef]

- Zhang, G.; Yao, P.; Ma, G.; Wang, J.; Zhou, J.; Huang, L.; Xu, P.; Chen, L.; Chen, S.; Gu, J.; et al. The database of eye-movement measures on words in Chinese reading. Sci. Data 2022, 9, 411. [Google Scholar] [CrossRef]

- Hollenstein, N.; Barrett, M.; Björnsdóttir, M. The Copenhagen Corpus of Eye Tracking Recordings from Natural Reading of Danish Texts. In Proceedings of the Thirteenth Language Resources and Evaluation Conference, Marseille, France, 20–25 June 2022; pp. 1712–1720. [Google Scholar]

- Kazai, G.; Kamps, J.; Koolen, M.; Milic-Frayling, N. Crowdsourcing for book search evaluation: Impact of hit design on comparative system ranking. In Proceedings of the 34th International ACM SIGIR Conference on Research and development in Information Retrieval, Beijing, China, 24–28 July 2011; pp. 205–214. [Google Scholar]

- Aker, A.; El-Haj, M.; Albakour, M.-D.; Kruschwitz, U. Assessing Crowdsourcing Quality through Objective Tasks. In Proceedings of the Eighth International Conference on Language Resources and Evaluation (LREC’12), Istanbul, Turkey, 21–27 May 2012; pp. 1456–1461. [Google Scholar]

- Vajjala, S.; Majumder, B.; Gupta, A.; Surana, H. Practical Natural Language Processing: A Comprehensive Guide to Building Real-World NLP Systems; O’Reilly Media Inc.: Sebastopol, CA, USA, 2020. [Google Scholar]

- Hindawi Foundation. Available online: http://www.hindawi.org/ (accessed on 27 June 2021).

- Alrabiah, M.; Alsalman, A.; Atwell, E. The design and construction of the 50 million words KSUCCA King Saud University Corpus of Classical Arabic. In Proceedings of the WACL’2 Second Workshop on Arabic Corpus Linguistics, Lancaster University, UK, 22 July 2013; pp. 5–8. [Google Scholar]

- Fouad, M.M.; Atyah, M.A. MLAR: Machine Learning based System for Measuring the Readability of Online Arabic News. Int. J. Comput. Appl. 2016, 154, 29–33. [Google Scholar] [CrossRef]

- Chung-Fat-Yim, A.; Peterson, J.B.; Mar, R.A. Validating self-paced sentence-by-sentence reading: Story comprehension, recall, and narrative transportation. Read. Writ. 2017, 30, 857–869. [Google Scholar] [CrossRef]

- Aldayel, A.; Al-Khalifa, H.; Alaqeel, S.; Abanmy, N.; Al-Yahya, M.; Diab, M. ARC-WMI: Towards Building Arabic Readability Corpus for Written Medicine Information. In Proceedings of the 3rd Workshop on Open-Source Arabic Corpora and Processing Tools, Miyazaki, Japan, 8 May 2018; p. 14. [Google Scholar]

- Al Khalil, M.; Habash, N.; Jiang, Z. A large-scale leveled readability lexicon for Standard Arabic. In Proceedings of the 12th Language Resources and Evaluation Conference, Marseille, France, 11–16 May 2020; pp. 3053–3062. [Google Scholar]

- Nielsen, J. Thinking Aloud: The #1 Usability Tool. Available online: https://www.nngroup.com/articles/thinking-aloud-the-1-usability-tool/ (accessed on 15 December 2022).

- Leal, S.E.; Vieira, J.M.M.; dos Santos Rodrigues, E.; Teixeira, E.N.; Aluísio, S. Using eye-tracking data to predict the readability of Brazilian Portuguese sentences in single-task, multi-task and sequential transfer learning approaches. In Proceedings of the 28th International Conference on Computational Linguistics, Barcelona, Spain, 8–13 December 2020; pp. 5821–5831. [Google Scholar]

- Callison-Burch, C. Fast, cheap, and creative: Evaluating translation quality using Amazon’s Mechanical Turk. In Proceedings of the 2009 Conference on Empirical Methods in Natural Language Processing, Singapore, 6–7 August 2009; pp. 286–295. [Google Scholar]

- Chen, Y.; Zhang, W.; Song, D.; Zhang, P.; Ren, Q.; Hou, Y. Inferring Document Readability by Integrating Text and Eye Movement Features. In Proceedings of the SIGIR2015 Workshop on Neuro-Physiological Methods in IR Research, Santiago, Chile, 2 December 2015. [Google Scholar]

- Biedert, R.; Dengel, A.; Elshamy, M.; Buscher, G. Towards robust gaze-based objective quality measures for text. In Proceedings of the Symposium on Eye Tracking Research and Applications, Santa Barbara, CA, USA, 28–30 March 2012; pp. 201–204. [Google Scholar]

- SR Research Ltd. SR Research Experiment Builder User Manual (Version 2.3.1); SR Research Ltd.: Ottawa, ON, Canada, 2020. [Google Scholar]

- Bhandari, P. Central Tendency | Understanding the Mean, Median & Mode. Available online: https://www.scribbr.com/statistics/central-tendency/ (accessed on 28 December 2022).

- Measures of Central Tendency. Available online: https://statistics.laerd.com/statistical-guides/measures-central-tendency-mean-mode-median.php (accessed on 20 August 2023).

- Nassiri, N.; Lakhouaja, A.; Cavalli-Sforza, V. Evaluating the Impact of Oversampling on Arabic L1 and L2 Readability Prediction Performances. In Networking, Intelligent Systems and Security; Springer International Publishing: Singapore, 2022; Volume 237, pp. 763–774. [Google Scholar]

- Jian, L.; Xiang, H.; Le, G. English Text Readability Measurement Based on Convolutional Neural Network: A Hybrid Network Model. Comput. Intell. Neurosci. 2022, 2022, 1–9. [Google Scholar] [CrossRef]

- Nassiri, N.; Lakhouaja, A.; Cavalli-Sforza, V. Arabic L2 readability assessment: Dimensionality reduction study. J. King Saud Univ. Comput. Inf. Sci. 2022, 34, 3789–3799. [Google Scholar] [CrossRef]

- Al Khalil, M.; Saddiki, H.; Habash, N.; Alfalasi, L. A leveled reading corpus of modern standard Arabic. In Proceedings of the Eleventh International Conference on Language Resources and Evaluation (LREC 2018), Miyazaki, Japan, 7–12 May 2018. [Google Scholar]

- Cavalli-Sforza, V.; El Mezouar, M.; Saddiki, H. Matching an Arabic text to a learners’ curriculum. In Proceedings of the 2014 Fifth International Conference on Arabic Language Processing (CITALA 2014), Oujda, Morocco, 26–27 November 2014; pp. 79–88. [Google Scholar]

- Salesky, E.; Shen, W. Exploiting Morphological, Grammatical, and Semantic Correlates for Improved Text Difficulty Assessment. In Proceedings of the Ninth Workshop on Innovative Use of NLP for Building Educational Applications, Baltimore, MD, USA, 16 June 2014; pp. 155–162. [Google Scholar]

- Saddiki, H.; Bouzoubaa, K.; Cavalli-Sforza, V. Text readability for Arabic as a foreign language. In Proceedings of the 2015 IEEE/ACS 12th International Conference of Computer Systems and Applications (AICCSA), Marrakech, Morocco, 17–20 November 2015; pp. 1–8. [Google Scholar]

- Al Jarrah, E.Q. Using Language Features to Enhance Measuring the Readability of Arabic Text. Master’s Thesis, Yarmouk University, Irbid, Jordan, 2017. [Google Scholar]

- Mishra, A.; Bhattacharyya, P. Scanpath Complexity: Modeling Reading Effort Using Gaze Information. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; pp. 77–98. [Google Scholar]

- SR Research Ltd. EyeLink Data Viewer User’s Manual (Version 3.1.97); SR Research Ltd.: Ottawa, ON, Canada, 2017. [Google Scholar]

- Scikit-Learn. Scikit-Learn Machine Learning in Python. Available online: https://scikit-learn.org/stable/ (accessed on 5 October 2023).

- Brownlee, J. Ordinal and One-Hot Encodings for Categorical Data. Available online: https://machinelearningmastery.com/one-hot-encoding-for-categorical-data/ (accessed on 20 December 2022).

- Rayner, K. Eye movements in reading and information processing: 20 years of research. Psychol. Bull. 1998, 124, 372–422. [Google Scholar] [CrossRef]

- Ghosh, S.; Dhall, A.; Hayat, M.; Knibbe, J.; Ji, Q. Automatic Gaze Analysis: A Survey of Deep Learning Based Approaches. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 61–84. [Google Scholar] [CrossRef] [PubMed]

- Makowski, S.; Jäger, L.A.; Abdelwahab, A.; Landwehr, N.; Scheffer, T. A discriminative model for identifying readers and assessing text comprehension from eye movements. In Proceedings of the Joint European Conference on Machine Learning and Knowledge Discovery in Databases, Dublin, Ireland, 10–14 September 2018; pp. 209–225. [Google Scholar]

- Caruso, M.; Peacock, C.E.; Southwell, R.; Zhou, G.; D'Mello, S.K. Going Deep and Far: Gaze-Based Models Predict Multiple Depths of Comprehension during and One Week Following Reading. In Proceedings of the 15th International Conference on Educational Data Mining, International Educational Data Mining Society, Durham, UK, 24–27 July 2022; pp. 145–157. [Google Scholar]

- Copeland, L.; Gedeon, T. Measuring reading comprehension using eye movements. In Proceedings of the IEEE 4th International Conference on Cognitive Infocommunications (CogInfoCom), Budapest, Hungary, 2–5 December 2013; pp. 791–796. [Google Scholar]

- Copeland, L.; Gedeon, T.; Mendis, B.S.U. Predicting reading comprehension scores from eye movements using artificial neural networks and fuzzy output error. Artif. Intell. Res. 2014, 3, 35–48. [Google Scholar] [CrossRef]

- Sanches, C.L.; Augereau, O.; Kise, K. Using the Eye Gaze to Predict Document Reading Subjective Understanding. In Proceedings of the 14th IAPR International Conference on Document Analysis and Recognition (ICDAR), Kyoto, Japan, 9–15 November 2017; pp. 28–31. [Google Scholar]

- Gonzalez-Garduno, A.; Søgaard, A. Learning to predict readability using eye-movement data from natives and learners. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; pp. 5118–5124. [Google Scholar]

- Sarti, G.; Brunato, D.; Dell’Orletta, F. That looks hard: Characterizing linguistic complexity in humans and language models. In Proceedings of the Workshop on Cognitive Modeling and Computational Linguistics, Virtual, 10 June 2021; pp. 48–60. [Google Scholar]

- Khallaf, N.; Sharoff, S. Automatic Difficulty Classification of Arabic Sentences. In Proceedings of the Sixth Arabic Natural Language Processing Workshop (WANLP), Virtual, Kyiv, Ukraine, 19 April 2021; pp. 105–114. [Google Scholar]

- Berrichi, S.; Nassiri, N.; Mazroui, A.; Lakhouaja, A. Exploring the Impact of Deep Learning Techniques on Evaluating Arabic L1 Readability. In Artificial Intelligence, Data Science and Applications; Springer: Cham, Switzerland, 2024; pp. 1–7. [Google Scholar]

- Shen, W.; Williams, J.; Marius, T.; Salesky, E. A language-independent approach to automatic text difficulty assessment for second-language learners. In Proceedings of the 2nd Workshop on Predicting and Improving Text Readability for Target Reader Populations, Sofia, Bulgaria, 8 August 2013; pp. 30–38. [Google Scholar]

- Saddiki, H.; Habash, N.; Cavalli-Sforza, V.; Al Khalil, M. Feature optimization for predicting readability of Arabic L1 and L2. In Proceedings of the 5th Workshop on Natural Language Processing Techniques for Educational Applications, Melbourne, Australia, 19 July 2018; pp. 20–29. [Google Scholar]

- Forsyth, J.N. Automatic Readability Detection for Modern Standard Arabic. Master’s Thesis, Department of Linguistics and English Language, Brigham Young University, Provo, UT, USA, 2014. [Google Scholar]

- Berrichi, S.; Nassiri, N.; Mazroui, A.; Lakhouaja, A. Interpreting the Relevance of Readability Prediction Features. Jordanian J. Comput. Inf. Technol. 2023, 9, 36–52. [Google Scholar] [CrossRef]

- Berrichi, S.; Nassiri, N.; Mazroui, A.; Lakhouaja, A. Impact of Feature Vectorization Methods on Arabic Text Readability Assessment. In Artificial Intelligence and Smart Environment (ICAISE 2022); Springer: Cham, Switzerland, 2023; Volume 635, pp. 504–510. [Google Scholar]

- Ghani, K.A.; Noh, A.S.; Yusoff, N.M.R.N.; Hussein, N.H. Developing Readability Computational Formula for Arabic Reading Materials Among Non-native Students in Malaysia. In The Importance of New Technologies and Entrepreneurship in Business Development: In The Context of Economic Diversity in Developing Countries: The Impact of New Technologies and Entrepreneurship on Business Development; Springer: Cham, Switzerland, 2021; Volume 194, pp. 2041–2057. [Google Scholar]

- Brooke, J.; Tsang, V.; Jacob, D.; Shein, F.; Hirst, G. Building readability lexicons with unannotated corpora. In Proceedings of the First Workshop on Predicting and Improving Text Readability for target reader populations, Montréal, Canada, 7 June 2012; pp. 33–39. [Google Scholar]

- Mahanama, B.; Jayawardana, Y.; Rengarajan, S.; Jayawardena, G.; Chukoskie, L.; Snider, J.; Jayarathna, S. Eye movement and pupil measures: A review. Front. Comput. Sci. 2022, 3, 733531. [Google Scholar] [CrossRef]

- Sharafi, Z.; Shaffer, T.; Sharif, B.; Guéhéneuc, Y.-G. Eye-tracking metrics in software engineering. In Proceedings of the 2015 Asia-Pacific Software Engineering Conference (APSEC), New Delhi, India, 1–4 December 2015; pp. 96–103. [Google Scholar]

- Mohamad Shahimin, M.; Razali, A. An eye tracking analysis on diagnostic performance of digital fundus photography images between ophthalmologists and optometrists. Int. J. Environ. Res. Public Health 2019, 17, 30. [Google Scholar] [CrossRef]

| Book Language | Document Count | Paragraph Count | Sentence Count | Word Count |
|---|---|---|---|---|
| MSA | 60 | 442 | 2835 | 37,486 |
| CA | 32 | 145 | 1936 | 20,559 |
| Total | 92 | 587 | 4771 | 58,045 |

| Participant Code | APT Score | Gender | Age Range | Country of Origin | Academic Level | Major |
|---|---|---|---|---|---|---|
| P01 | 9 | F | 25–30 | Saudi Arabia | Master’s | Biochemistry |
| P02 | 9 | F | 25–30 | Sudan | Bachelor’s | Business Administration |
| P03 | 9 | F | 40–45 | Egypt | Bachelor’s | Arabic Linguistics |
| P04 | 10 | M | 30–35 | Saudi Arabia | Master’s | Information Systems |
| P05 | 9 | F | 35–40 | Sudan | Master’s | Biotechnology |
| P06 | 9 | F | 35–40 | Saudi Arabia | Doctoral | Arabic Literature |
| P07 | 9 | M | 35–40 | Saudi Arabia | Bachelor’s | Information Systems |
| P08 | 9 | F | 30–35 | Jordan | Master’s | Mathematics |
| P09 | 10 | F | 30–35 | Sudan | Bachelor’s | Computer Engineering |
| P10 | 9 | M | 40–45 | Saudi Arabia | Master’s | Internet Communication |
| P11 | 9 | M | 25–30 | Palestine | Bachelor’s | General Management |
| P12 | 9 | M | 40–45 | Saudi Arabia | Bachelor’s | Computer Engineering |
| P13 | 9 | M | 30–35 | Syria | Bachelor’s | Healthcare Management |
| P14 | 9 | M | 30–35 | Saudi Arabia | Doctoral | Dentistry |
| P15 | 9 | F | 20–25 | Yemen | Bachelor’s | Chemistry |

| Readability Level | Documents | Paragraphs |
|---|---|---|
| Easy | 22 | 297 |
| Medium | 38 | 139 |
| Difficult | 0 | 6 |
| Total | 60 | 442 |

| Readability Level | MSA | CA | Total | Percent (%) |
|---|---|---|---|---|
| Easy | 22 | 4 | 26 | 28.26 |
| Medium | 38 | 22 | 60 | 65.22 |
| Difficult | 0 | 6 | 6 | 6.52 |
| Total | 60 | 32 | 92 | 100 |

| Readability Level | MSA | CA | Total | Percent (%) |
|---|---|---|---|---|
| Easy | 297 | 59 | 356 | 60.65 |
| Medium | 139 | 60 | 199 | 33.90 |
| Difficult | 6 | 26 | 32 | 5.45 |
| Total | 442 | 145 | 587 | 100 |
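
The Total and Percent columns of the paragraph-level table above follow directly from the MSA and CA counts. The short Python sketch below reproduces that arithmetic as a consistency check; the names `paragraph_counts` and `level_distribution` are illustrative assumptions and do not come from the paper.

```python
# Paragraph counts per readability level, copied from the table above.
paragraph_counts = {
    "Easy": {"MSA": 297, "CA": 59},
    "Medium": {"MSA": 139, "CA": 60},
    "Difficult": {"MSA": 6, "CA": 26},
}


def level_distribution(counts):
    """Return {level: (total, percent)}; percent is rounded to two decimals."""
    # Sum MSA and CA paragraphs within each readability level.
    totals = {level: sum(by_lang.values()) for level, by_lang in counts.items()}
    # The denominator is the number of paragraphs across all levels.
    grand_total = sum(totals.values())
    return {
        level: (total, round(100 * total / grand_total, 2))
        for level, total in totals.items()
    }


distribution = level_distribution(paragraph_counts)
for level, (total, percent) in distribution.items():
    print(f"{level}: {total} paragraphs ({percent:.2f}%)")
```

The same function can be applied to the document-level counts by swapping in the corresponding MSA and CA values.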

| Participant Code | No. of Correctly Answered Questions (CA) | No. of Correctly Answered Questions (MSA) | Total | Percent (%) |
|---|---|---|---|---|
| P01 | 14 | 28 | 42 | 77.78 |
| P02 | 17 | 27 | 44 | 81.48 |
| P03 | 15 | 32 | 47 | 87.04 |
| P04 | 12 | 25 | 37 | 68.52 |
| P05 | 15 | 34 | 49 | 90.74 |
| P07 | 11 | 23 | 34 | 62.96 |
| P08 | 12 | 25 | 37 | 68.52 |
| P09 | 14 | 25 | 39 | 72.22 |
| P10 | 16 | 31 | 47 | 87.04 |
| P12 | 13 | 29 | 42 | 77.78 |
| P15 | 16 | 26 | 42 | 77.78 |
| P16 | 14 | 28 | 42 | 77.78 |
| P17 | 14 | 26 | 40 | 74.07 |
| P18 | 12 | 31 | 43 | 79.63 |
| P19 | 14 | 28 | 42 | 77.78 |

| Features | Subfeatures | |
|---|---|---|
| General | Descriptive | Book Name and Author, Document Code, Paragraph Code, Book Language, Book Topic, Publication Century, Authorship Type, Translation Type, Author Count, Text Source |
| General | Textual Components | Parenthesis Count, Parenthetical Expression Count, Numerical Content Count, Listing Count, Religious Text Count, Poem Verse Count |
| Linguistic | Textual Complexity | Character Count, Word Count, Average Word Length, Syllable Count, Average Syllables Per Word, Difficult Word Count, Average Difficult Words Count, Unique Loan Word Count, Total Loan Word Count, Unique Foreign Word Count, Total Foreign Word Count, Foreign-Word-to-Token Ratio, Loan-Word-to-Token Ratio |
| Linguistic | Structural Complexity | Sentence Count, Average Sentence Length in Words, Average Sentence Length in Characters, Paragraph Count |
| Linguistic | Readability Scores | OSMAN Score, Lasbarhets Index Score, Automated Readability Index Score, Flesch Reading Ease Score, Flesch–Kincaid Score, Gunning Fog Score |
| Linguistic | Stylistic | Text Style, Script Style, Linguistic Style, Writing Technique |

| Metrics | Submetrics |
|---|---|
| Fixation | Time to First Fixation, Fixations Before, First Fixation Duration, Single Fixation Duration, Total Fixation Duration, Total Fixation Count, Percentage Fixated, Average Fixation Duration, Average Number of Fixations per Word, Fixation Rate |
| Visit | Total Visit Count, Single Visit Duration, Total Visit Duration, Average Visit Duration, Average Number of Visits per Word |
| Saccade | Total Saccade Count, Total Saccade Duration, Saccadic Amplitude, Absolute Saccadic Direction, Relative Saccadic Direction, Average Saccade Duration, Saccade-to-Fixation Ratio |
| Pupil | Pupil Size |

| Reading Time Metrics | Coefficient of Skewness (G) |
|---|---|
| Time to First Fixation | 0.512 |
| First Fixation Duration | 0.248 |
| Single Fixation Duration | 0.190 |
| Total Fixation Duration | 4.796 |
| Total Saccade Duration | 0.676 |
| Single Visit Duration | 0.231 |
| Total Visit Duration | 4.881 |
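
The table above reports a coefficient of skewness (G) for each reading time metric without restating the formula at this point. As a rough illustration only, the sketch below assumes G is the adjusted Fisher-Pearson sample skewness, computed from the second and third central moments; that choice, the function name `sample_skewness`, and the toy duration values are all assumptions rather than the authors' procedure or data.

```python
import math


def sample_skewness(values):
    """Adjusted Fisher-Pearson skewness (assumed definition of G)."""
    n = len(values)
    if n < 3:
        raise ValueError("need at least three observations")
    mean = sum(values) / n
    # Second and third central moments (biased, divided by n).
    m2 = sum((x - mean) ** 2 for x in values) / n
    m3 = sum((x - mean) ** 3 for x in values) / n
    if m2 == 0:
        raise ValueError("skewness is undefined for constant data")
    g1 = m3 / m2 ** 1.5
    # Small-sample correction factor applied to the moment-based estimate.
    return math.sqrt(n * (n - 1)) / (n - 2) * g1


# Hypothetical durations in seconds, NOT taken from the dataset.
symmetric = [1.0, 2.0, 3.0, 4.0, 5.0]
right_skewed = [0.2, 0.2, 0.3, 0.3, 2.5]

print(sample_skewness(symmetric))
print(sample_skewness(right_skewed))
```

Under this definition, a value near zero indicates a roughly symmetric distribution, while a large positive value, such as those reported for Total Fixation Duration and Total Visit Duration, indicates a long right tail.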

| Reading Time Metrics | Easy | Medium | Difficult | Maximum/Minimum |
|---|---|---|---|---|
| Time to First Fixation | 10.990 | 18.271 | 20.535 | 20.535 |
| First Fixation Duration | 0.247 | 0.249 | 0.259 | 0.259 |
| Single Fixation Duration | 0.242 | 0.244 | 0.252 | 0.252 |
| Total Fixation Duration | 0.025 | 0.026 | 0.031 | 0.031 |
| Total Saccade Duration | 47.263 | 79.051 | 84.421 | 84.421 |
| Single Visit Duration | 0.295 | 0.301 | 0.315 | 0.315 |
| Total Visit Duration | 0.026 | 0.027 | 0.032 | 0.032 |
| OSMAN Score | 129.586 | 127.694 | 125.482 | 125.482 |
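
In the table above, the last column holds the maximum for every reading time metric and the minimum for the OSMAN score, which suggests that reading times rise and OSMAN falls from Easy to Difficult. The sketch below checks that ordering on the tabulated values; `level_values` and `trend` are illustrative names, not part of the published dataset or tooling.

```python
# Per-level values (Easy, Medium, Difficult) copied from the table above.
level_values = {
    "Time to First Fixation": (10.990, 18.271, 20.535),
    "First Fixation Duration": (0.247, 0.249, 0.259),
    "Single Fixation Duration": (0.242, 0.244, 0.252),
    "Total Fixation Duration": (0.025, 0.026, 0.031),
    "Total Saccade Duration": (47.263, 79.051, 84.421),
    "Single Visit Duration": (0.295, 0.301, 0.315),
    "Total Visit Duration": (0.026, 0.027, 0.032),
    "OSMAN Score": (129.586, 127.694, 125.482),
}


def trend(values):
    """Classify a sequence as strictly increasing, strictly decreasing, or mixed."""
    pairs = list(zip(values, values[1:]))
    if all(a < b for a, b in pairs):
        return "increasing"
    if all(a > b for a, b in pairs):
        return "decreasing"
    return "mixed"


trends = {metric: trend(values) for metric, values in level_values.items()}
for metric, direction in trends.items():
    print(f"{metric}: {direction}")
```

A strictly increasing row has its maximum in the Difficult column and a strictly decreasing row has its minimum there, which matches the Maximum/Minimum column.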

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Baazeem, I.; Al-Khalifa, H.; Al-Salman, A. AraEyebility: Eye-Tracking Data for Arabic Text Readability. Computation 2025, 13, 108. https://doi.org/10.3390/computation13050108
