1. Introduction

Analytical techniques and numerical simulations of nonlinear partial differential equations (PDEs) have been extensively studied across various fields, including mathematics, physics, chemistry, and engineering. The complexity of nonlinear PDEs continues to grow as more real-world factors influencing engineering systems are incorporated. Among these, the FitzHugh–Nagumo (FHN) equation plays a crucial role. It is widely used in the study of diverse engineering problems, fiber optics, and neurophysiology [1]. The FHN model effectively describes phenomena such as solitary pulses and periodic wave trains in systems like photonic crystals, semiconductors, and fiber optic communication networks [2]. Since analytical solutions for the FHN equation are rare, there is intensive research on numerical methods. Thus, a variety of numerical methods have been applied to solve the FHN equation, including the Haar-scale-3 wavelet collocation method [3], Rabotnov’s fractional exponential function approach [4], the two-step hybrid block method [5], the local meshless method [6], the Chebyshev polynomial-based collocation method [7], the compact finite difference method [8], the spectral Galerkin method [9], semi-analytical methods [10], the cubic B-spline-based one-step optimal hybrid block method [11], the fully discrete implicit-explicit finite element method [12], and the sixth-order compact finite difference method [13].

One should note that the classical FHN model consists of two coupled fields: an activator, which evolves rapidly and includes a cubic nonlinear reaction term, and an inhibitor (recovery variable), which evolves on a slower timescale and provides negative feedback. The one-field PDE with a cubic reaction term, which we solve in this work, corresponds to the fast subsystem of the FHN model, obtained by assuming that the inhibitor variable equilibrates instantaneously (i.e., adiabatic or singular perturbation limit). Mathematically, this corresponds to taking the limit where the inhibitor relaxation time tends to zero, so that the inhibitor variable can be expressed algebraically as a function of the activator and eliminated. This reduction is valid in the regime where (i) timescale separation is extreme, and the inhibitor recovers much faster than the activator evolves, (ii) spatial coupling of the inhibitor is negligible, or its diffusion is either vanishingly small or homogenized, and (iii) the dominant dynamics of interest arise from wave propagation shaped primarily by the cubic reaction term. This reduced model is therefore appropriate for studying front propagation, bistability, and excitable pulse initiation in regimes where recovery is effectively instantaneous. By eliminating the inhibitor variable, the features of the full FHN system, such as slow recovery dynamics, inhibitor diffusion, relaxation oscillations, and spike frequency adaptation, are no longer captured.

Most traditional numerical methods for solving PDEs rely on discretizing the problem domain using a mesh (or grid) of finite points. This approach requires the problem to be formulated in terms of discrete spatial and temporal variables. While such mesh-based techniques are widely used and effective in many scenarios, they face significant challenges in high-dimensional problems. As the number of dimensions increases, the number of grid points grows exponentially, which sharply increases the processing power and memory required. This scaling problem becomes especially pronounced in fields like fluid dynamics, material science, and complex biological systems, where the number of variables and their interactions can be vast.

In response to these limitations, a new class of modeling approaches has emerged, driven by advancements in artificial intelligence and computational power. One such technique, physics-informed neural networks (PINNs), represents a significant departure from traditional mesh-based methods. PINNs use neural networks to learn the solution to a PDE directly from the governing equations and data, without the need for a predefined mesh. By incorporating physical laws into the loss function of the neural network, PINNs can solve complex nonlinear PDEs, even in high-dimensional spaces, without the computational burden associated with grid refinement. Raissi et al. [14] highlighted the power and versatility of PINNs in solving a range of challenging nonlinear PDEs. Their research demonstrated that PINNs can effectively tackle problems such as nonlinear Schrödinger equations, Allen–Cahn equations, Navier–Stokes equations, Korteweg–de Vries equations, and Burgers’ equation. Furthermore, PINNs have proven to be a valuable tool for addressing high-dimensional inverse problems, where traditional methods often struggle with scalability and efficiency. These developments mark a promising shift towards more flexible and scalable techniques for solving complex, real-world problems across various scientific disciplines.

In this study, we compare an improved PINN approach with the standard explicit finite difference method (EFDM) to solve the FHN equation. The finite difference method (FDM) approximates derivatives at discrete points by constructing a grid and calculating differences between nearby values, with accuracy depending on the grid step size and the order of the derivative approximation. In contrast, a PINN is a machine learning-based method in which a neural network is trained to minimize the residuals of the governing equation and of the initial and boundary conditions, which allows it to handle complex boundary conditions and geometries. However, PINNs can be computationally expensive and sensitive to hyperparameters like network architecture.

Although promising, the accuracy of PINNs compared to conventional methods like FDM remains largely unexplored. In our previous work, EFDM outperformed PINN in solving the Burgers’ and Sine-Gordon equations [15,16]. To make further progress in this regard, in this work, we present the first comparison, to the best of our knowledge, of EFDM and improved PINN for solving the FHN equation, using two test problems with different initial and boundary conditions. This contrasts with other studies focused primarily on comparisons between conventional numerical methods, like explicit and implicit time-stepping methods for the FHN equation [17].

2. The FitzHugh–Nagumo Equation

We considered the FHN equation, which is a nonlinear PDE given by [8]

We note that in Equation (1a), α(t) multiplies the second spatial derivative (diffusion term), whereas β(t) multiplies the first spatial derivative (advection term). In the case of

[11]:

The initial condition for Equation (1a,b) is given as follows:

and boundary conditions read as

where μ is a positive parameter and x and t are the space and time variables, respectively.

3. Explicit Finite Difference Method

Using the EFDM, where the forward FD scheme is used to represent the time derivative $\partial u/\partial t$ and central FD schemes are used to represent the spatial derivatives $\partial u/\partial x$ and $\partial^2 u/\partial x^2$, Equation (1a) is written in the following form:

and Equation (1b) is given as follows:

where

, and indexes i and j refer to the discrete step lengths Δx and Δt for the coordinate x and time t, respectively. The grid dimensions in the x and t directions are

and

, respectively. Using the FD scheme, the initial condition (2) and boundary conditions (3) are given as follows:

Equation (4b) represents a formula for the unknown value $u_{i,j+1}$ at the (i, j+1)th mesh point in terms of the known values along the jth time row. The truncation error can be reduced by using small enough values of Δt and Δx until the precision attained is within the error tolerance.
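The explicit update described above can be sketched in a few lines of Python. This is a minimal illustration and not the authors' script: it assumes the classical constant-coefficient form $u_t = u_{xx} + u(1-u)(u-\mu)$ and its well-known travelling-wave front, and the domain $[-10, 10]$, the value μ = 0.75, and the function names `exact_front` and `efdm_fhn` are hypothetical choices made only for this example.

```python
import math


def exact_front(x, t, mu):
    """Travelling-wave front of u_t = u_xx + u(1-u)(u-mu) (assumed model form)."""
    speed = (1.0 - 2.0 * mu) / math.sqrt(2.0)
    return 0.5 + 0.5 * math.tanh((x + speed * t) / (2.0 * math.sqrt(2.0)))


def efdm_fhn(mu=0.75, x_min=-10.0, x_max=10.0, dx=0.5, dt=1e-4, t_end=0.1):
    """Forward-in-time, central-in-space scheme with Dirichlet boundaries.

    Domain, mu, and t_end are illustrative assumptions, not values from the paper.
    """
    n = int(round((x_max - x_min) / dx)) + 1
    xs = [x_min + i * dx for i in range(n)]
    steps = int(round(t_end / dt))
    u = [exact_front(x, 0.0, mu) for x in xs]  # initial condition
    r = dt / dx**2
    for j in range(steps):
        t_next = (j + 1) * dt
        new = u[:]
        for i in range(1, n - 1):
            diffusion = r * (u[i + 1] - 2.0 * u[i] + u[i - 1])
            reaction = dt * u[i] * (1.0 - u[i]) * (u[i] - mu)
            new[i] = u[i] + diffusion + reaction  # explicit update at (i, j+1)
        # Boundary values are taken from the assumed analytical front.
        new[0] = exact_front(xs[0], t_next, mu)
        new[-1] = exact_front(xs[-1], t_next, mu)
        u = new
    return xs, u


if __name__ == "__main__":
    xs, u = efdm_fhn()
    worst = max(abs(ui - exact_front(x, 0.1, 0.75)) for x, ui in zip(xs, u))
    print("max abs error at t = 0.1:", worst)
```

Each new time row depends only on the previous one, which is why the scheme is cheap per step but restricted in the admissible time step.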

4. Physics-Informed Neural Networks

4.1. The Basic Concept of PINNs in Solving PDEs

A machine learning method called the PINN can be used to approximately solve PDEs. A general form of PDEs with corresponding initial and boundary conditions is

Here, N is a differential operator, x and t represent the spatial and temporal dimensions, respectively, Ω is the computational domain, ∂Ω is the part of its boundary on which the boundary conditions are imposed, and u(x, t) is the solution of the PDEs subject to the given initial and boundary conditions.

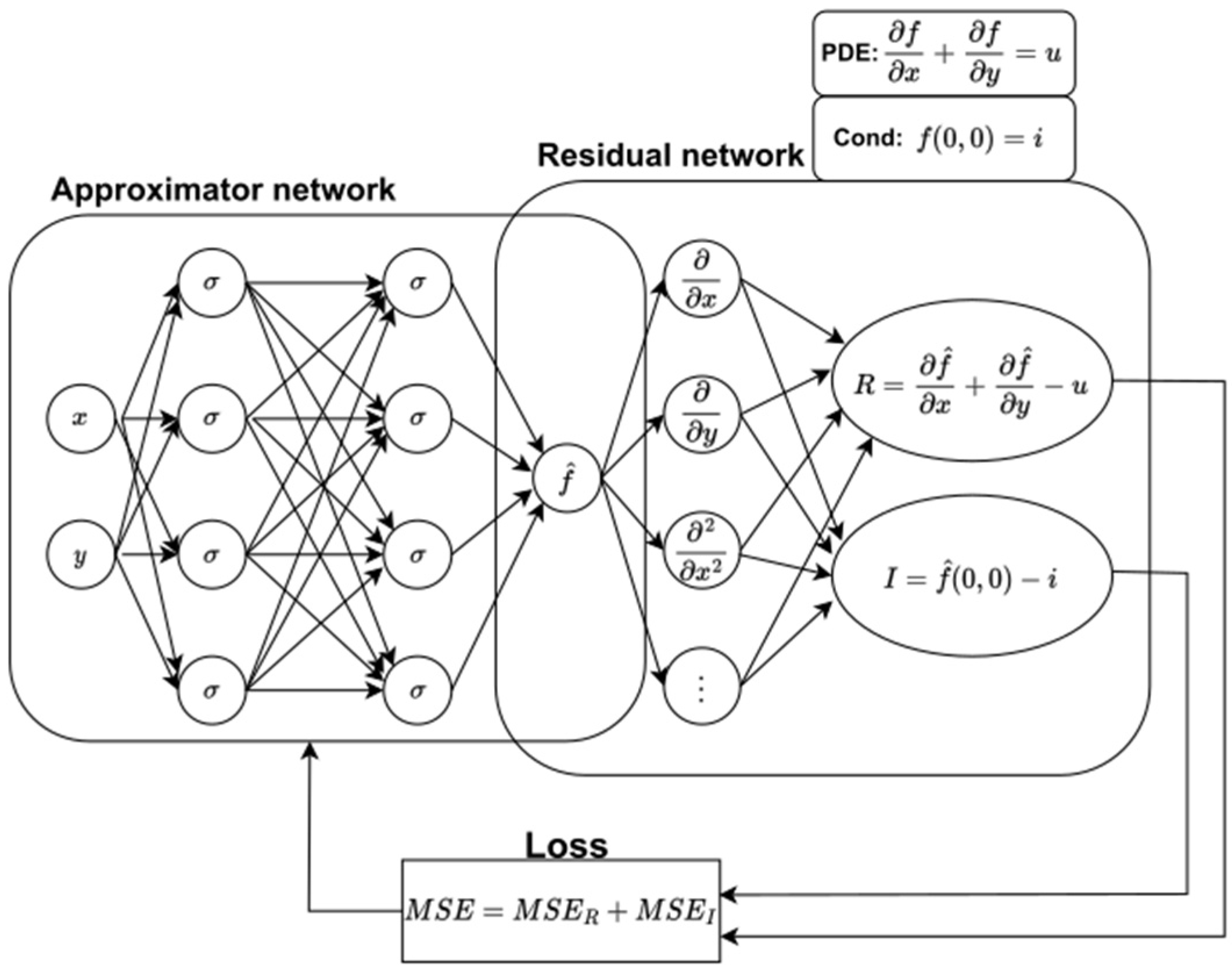

The approximator network and the residual network are the two subnets that make up PINN in its original construction [18]. The approximator network receives the input (x, t), undergoes the training process, and provides an approximate solution as an output. The approximator network is trained using a grid of collocation points, which are sampled randomly or regularly from the simulation domain. To optimize the network’s weights and biases, a composite loss function is minimized:

where

Here, the three terms of the loss represent the mean squared residuals of the governing equations, the initial conditions, and the boundary conditions, respectively, each averaged over the corresponding number of collocation points in the computational domain, on the initial line, and on the boundary. The residuals are computed by the residual network, a non-trainable component of the PINN model. To calculate these residuals, the PINN requires derivatives of the outputs with respect to the inputs x and t. Automatic differentiation (AD) computes these derivatives by combining the derivatives of individual operations through the chain rule, which is a key feature distinguishing PINNs from earlier methods that relied on manually derived back-propagation rules. Only first- and second-order spatial derivatives appear in the residual. AD computes these exactly and directly, and no hidden higher-order derivatives or regularization terms are introduced through the AD graph. The script can be checked at GitHub Repository. Deep learning frameworks like TensorFlow 2.13.0 and PyTorch 2.1.0 provide robust AD, enabling efficient calculation of derivatives of all orders in space–time without the need for manual derivation or numerical discretization.

We use default unity weights for the loss components of the PDE, the boundary conditions, and the initial conditions. To check the impact of the weighting on the result, we tested three different weight distributions, each emphasizing one of the PDE, boundary condition (BC), and initial condition (IC) terms. The findings show that, for this specific problem, there is no statistically significant impact, bearing in mind the variance introduced by different seeds.
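For orientation, a composite PINN loss with unity weights is commonly written in the form below. This is a sketch of the standard formulation, not necessarily the exact notation of this work: the symbols $R$ (PDE residual of the network output $\hat{u}$), $u_0$ (initial data), $u_b$ (boundary data), and the point counts $N_R$, $N_I$, $N_B$ are assumed names introduced only for this illustration.

```latex
\mathcal{L} = \mathrm{MSE}_R + \mathrm{MSE}_I + \mathrm{MSE}_B,
\qquad
\mathrm{MSE}_R = \frac{1}{N_R}\sum_{k=1}^{N_R} \left| R(x_k, t_k) \right|^2,
\quad
\mathrm{MSE}_I = \frac{1}{N_I}\sum_{k=1}^{N_I} \left| \hat{u}(x_k, 0) - u_0(x_k) \right|^2,
\quad
\mathrm{MSE}_B = \frac{1}{N_B}\sum_{k=1}^{N_B} \left| \hat{u}(x_k, t_k) - u_b(x_k, t_k) \right|^2
```

Non-unity weights would simply multiply the three terms before summation.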

A schematic of the PINN is shown in Figure 1, in which a simple PDE is used as an example. The approximator network approximates the solution, which is then passed to the residual network to calculate the residual loss, the boundary condition loss, and the initial condition loss. The weights and biases of the approximator network are trained on a composite loss function built from these residuals, using gradient descent based on back-propagation. For the simple PDE shown at the top of Figure 1, two residuals are defined: one for the PDE and one for its initial or boundary condition. The total loss function combines the mean squared errors of these two terms, ensuring both the PDE and its conditions are satisfied: $\mathrm{MSE} = \mathrm{MSE}_R + \mathrm{MSE}_I$, where $\mathrm{MSE}_R$ represents the mean squared residual of the PDE and $\mathrm{MSE}_I$ enforces the initial or boundary conditions. During training, the network parameters are optimized to minimize this combined loss, leading to a solution that simultaneously approximates f(x, y) and adheres to the underlying physical constraints. This approach enables the PINN to solve PDEs in a mesh-free manner while integrating physical laws directly into the learning process, making it highly effective for modeling complex systems in fluid dynamics, heat transfer, and other physics-based domains.

It is also interesting to note that a previous research study focused on the synthesis of a four-bar mechanism for the trajectory planning of a point belonging to the connecting rod [19]. The objective was to generate a tool that synthesizes the mechanism topology given the desired trajectory. This preliminary study has shown how PINNs are suitable to automate the synthesis of mechanisms, where regular NNs would generally fail. Numerical analyses also demonstrate that a PINN learns relations from a physical numerical model in a more efficient way than a traditional NN.

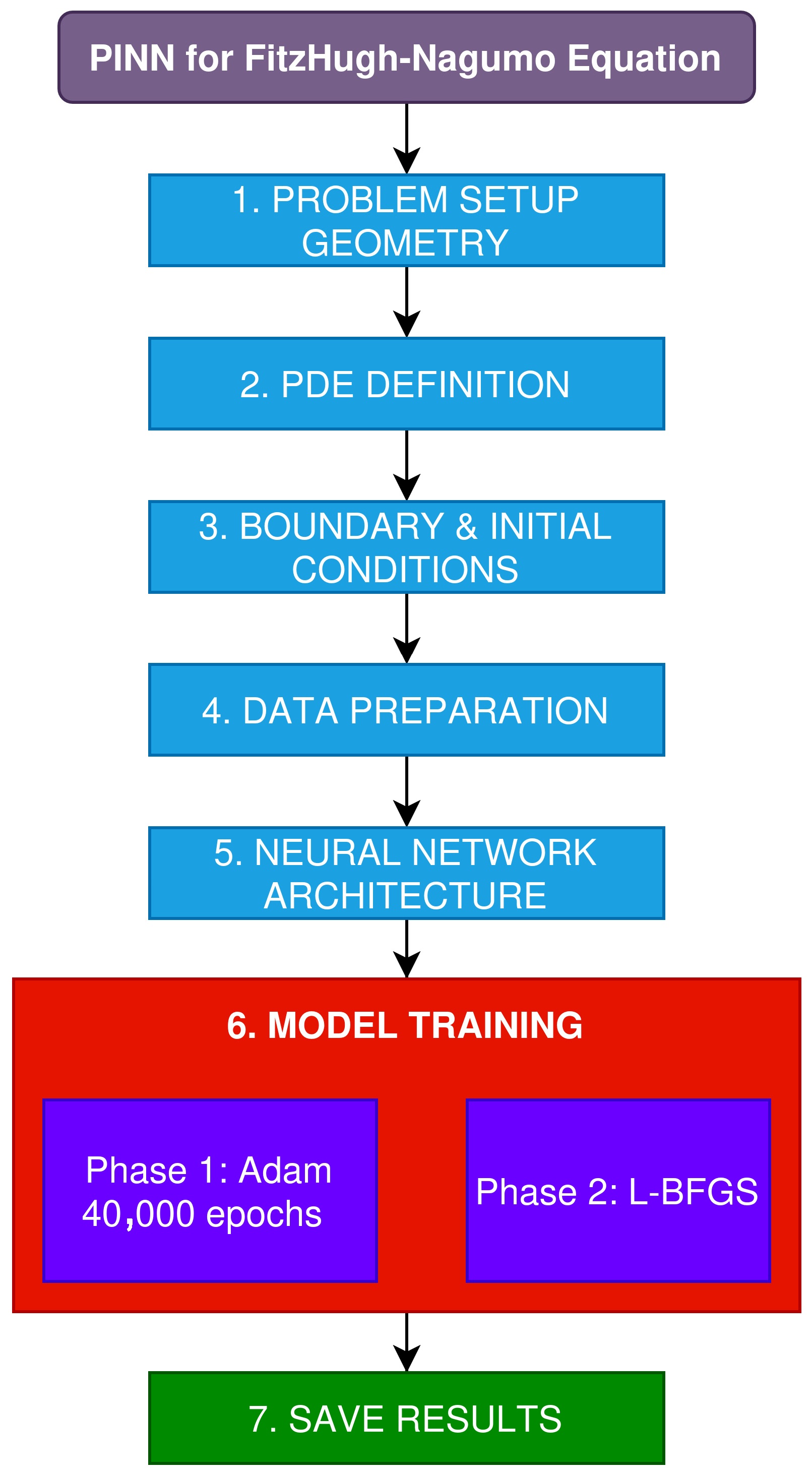

4.2. Implementation of PINN in Solving the FitzHugh–Nagumo Equation

We used the DeepXDE 1.14.0 library to construct the PINN model for solving the FHN equation [20]. Our PINN has two inputs (x, t) and three layers, and each layer has 30 neurons. All neurons use a Tanh activation function. The collocation points are divided into three subsets: the largest (8000 points) covers the problem domain, while the second and third subsets (400 and 800 points) impose boundary and initial conditions, respectively. Both test cases share the same conditions. The PINN training consists of two phases: in the first, weights and biases are optimized using the Adam algorithm with a learning rate of $10^{-3}$. In the second phase, the limited-memory Broyden–Fletcher–Goldfarb–Shanno (L-BFGS) algorithm is used to refine the solution after the “global” search phase, as described in [21]. The entire training process takes about 85 s on the NVIDIA Tesla T4 GPU. Due to lost implementation details, we approximated the training as follows: Adam was run for ~40,000 epochs until the loss stabilized, followed by L-BFGS for ~200 iterations, with early stopping criteria activated. The stopping criterion was defined as either the loss dropping below $1 \times 10^{-8}$ or minimal change between iterations. Sample points were chosen using uniform random sampling in the domain. The assumed boundary and initial conditions are given in Equation (7).

While different hyperparameters, activation functions, training methods, and PINN topologies could yield better solutions, we chose the most common values from the FHN literature, as hyperparameter tuning is a time-consuming process beyond the scope of this work. We conducted a brief hyperparameter analysis, changing one kind of hyperparameter at a time and monitoring the change in RMSE:

Various activation functions: tanh—0.000039; swish—0.000040; sin—0.000035; sigmoid—0.000070.

Various PINN architectures (layers × neurons per layer): 3 × 10—0.000046; 3 × 30—0.000039; 3 × 50—0.000020; 4 × 30—0.000018; 4 × 50—0.000014.

Various numbers of collocation points (PDE, BC, IC): (2000, 100, 200)—0.000059; (4000, 200, 400)—0.000055; (8000, 400, 800)—0.000039; (16,000, 800, 1600)—0.000017.

On the other hand, the RMSE variability across 10 random seeds is $1.5 \times 10^{-5}$, which is approximately 35% in relative RMSE terms, so the RMSE differences between the tested hyperparameter settings are not statistically significant. This supports our choice of a PINN with 3 × 30 neurons and tanh activation.

It is worth noting that prior studies [22,23] have used hybrid neural network–metaheuristic frameworks for reliability and survival analysis. In contrast, our study introduces a PINN formulation tailored to the FHN reaction–diffusion system. The hyperparameter exploration above covered activation functions, network architectures, and collocation point densities: the RMSE decreased monotonically with increasing network depth and collocation density, although these differences are comparable to the seed-to-seed variability. The final model of three layers with 30 neurons balances accuracy and computational cost, requiring only approximately 85 s of GPU training, which shows that moderately sized PINNs can deliver accurate solutions without extensive resources. The two-stage optimization strategy, with Adam providing the global search and L-BFGS refining the solution near a local minimum, was used to improve convergence. Robustness was checked across ten random seeds, which gave a relative RMSE variability of about 35%. Comparative validation against both analytical solutions and the EFDM confirmed that the PINN reproduces the expected dynamics of the FHN system. These observations may be useful for extensions to higher-dimensional or more complex reaction–diffusion systems.

In this work, the accuracy of the numerical results for two FHN test problems, obtained using the EFDM and the PINN approach, is assessed against analytical solutions from the literature.

5. Numerical Results and Discussion

To illustrate the accuracy of the EFD scheme and PINN approach, several numerical computations are carried out for two test problems.

Test Problem 1: Consider the FHN Equation (1b) with the initial condition

and boundary conditions

The analytical solution of the problem is given as [11]

Equation (12) represents a traveling-wave solution, where the hyperbolic tangent describes a wavefront moving through space over time. Such a solution is characteristic of excitable media, where a localized disturbance propagates as a pulse or wave—exactly what the FHN model captures in nerve impulse transmission or cardiac excitation.

Equation (4b) represents the EFD solution of this test problem; the initial condition (10) in terms of finite differences becomes

and boundary conditions (11) are given as

The comparison of the numerical solutions to Test Problem 1 obtained by the EFDM (step lengths are Δx = 0.5 and Δt = 0.0001) and PINN with the analytical solution (12) at different times T, by taking the parameter

is shown in Table 1 (for T = 0.1), Table 2 (for T = 3), and Table 3 (for T = 5).

Table 4 displays the accuracy of the EFDM and PINN in solving Test Problem 1 at various points in time, where the shown root mean square error is calculated as

where K is the total number of observed points along the x axis.
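The error measure can be sketched in code as follows, assuming the standard root mean square definition (the square root of the mean squared difference between numerical and analytical values over the K observed points). The function name `rmse` and the sample values in the usage lines are hypothetical.

```python
import math


def rmse(numerical, analytical):
    """Root mean square error over K paired sample points (standard definition, assumed)."""
    if len(numerical) != len(analytical) or not numerical:
        raise ValueError("inputs must be non-empty and of equal length")
    k = len(numerical)
    return math.sqrt(sum((n - a) ** 2 for n, a in zip(numerical, analytical)) / k)


if __name__ == "__main__":
    # Illustrative values only, not results from the tables.
    print(rmse([0.50, 0.62, 0.73], [0.50, 0.60, 0.75]))
```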

For FHN Equation (1a), an explicit scheme is subject to three Courant–Friedrichs–Lewy (CFL)-like constraints on the time step: (i) a diffusion constraint, (ii) an advection constraint, and (iii) a reaction constraint. The step lengths Δx = 0.5 and Δt = 0.0001 used here lie within all three bounds. Beyond these stability limits, the numerical solution blows up, often preceded by high-frequency spatial oscillations.

In the EFDM calculation, the spatial step Δx = 0.5 and time step Δt = 0.0001 were chosen to ensure both accuracy and stability. The small spatial step allows the steep wavefront to be resolved precisely, while the time step satisfies the CFL condition for explicit schemes, preventing numerical instability. These values ensure that the numerical solution converges to the true solution without introducing significant errors, while still keeping computational costs manageable, providing a good balance between resolution, stability, and efficiency. Further reducing Δt and Δx did not lead to an improvement in the accuracy of the numerical solution.
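As a rough illustration of how such time-step limits can be evaluated, the sketch below uses commonly quoted textbook forms: $\Delta t \le \Delta x^2 / (2\alpha_{\max})$ for diffusion, $\Delta t \le \Delta x / |\beta|_{\max}$ for advection, and $\Delta t \le 2 / L$ for the reaction term, where $L$ bounds the magnitude of the reaction-term derivative. These forms, the function name `explicit_step_bounds`, and the sample coefficient values are assumptions for illustration and are not necessarily the bounds used in this work.

```python
def explicit_step_bounds(dx, alpha_max, beta_max, reaction_rate_max):
    """Heuristic upper bounds on dt for an explicit scheme (textbook forms, assumed)."""
    diffusion = dx**2 / (2.0 * alpha_max)
    advection = dx / beta_max if beta_max > 0 else float("inf")
    reaction = 2.0 / reaction_rate_max if reaction_rate_max > 0 else float("inf")
    return diffusion, advection, reaction


if __name__ == "__main__":
    # Hypothetical coefficients: alpha = 1, beta = 1, reaction rate bound 0.75.
    bounds = explicit_step_bounds(dx=0.5, alpha_max=1.0, beta_max=1.0, reaction_rate_max=0.75)
    dt = 1e-4
    print("bounds (diffusion, advection, reaction):", bounds)
    print("dt within all bounds:", dt <= min(bounds))
```

With these sample values the diffusion bound is the most restrictive of the three, which is typical for explicit schemes applied to reaction–diffusion problems.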

In order to make the PINN implementation clearer, we give here the exact equation for the loss function:

Although a good agreement between our numerical solutions and analytical solution can be seen, it is important to emphasize that the PINN provides a better fit with the analytical solution.

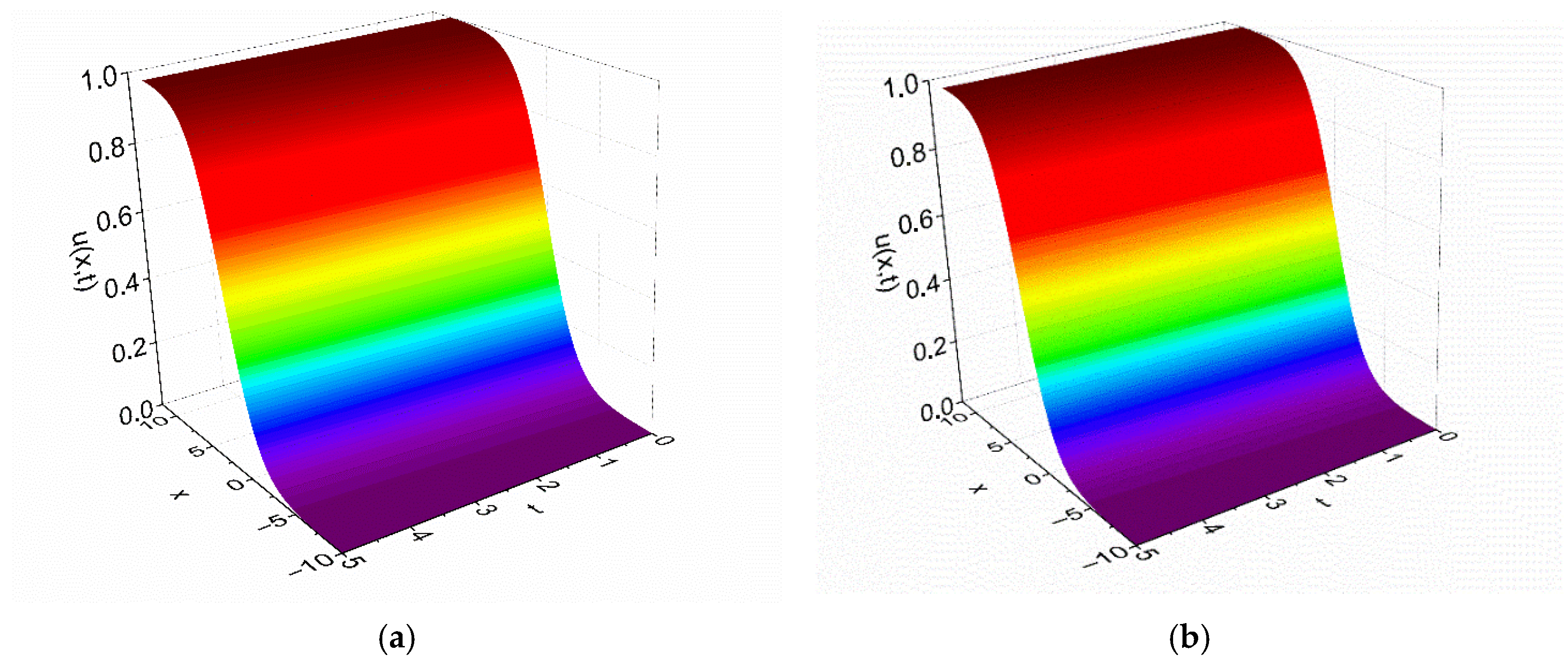

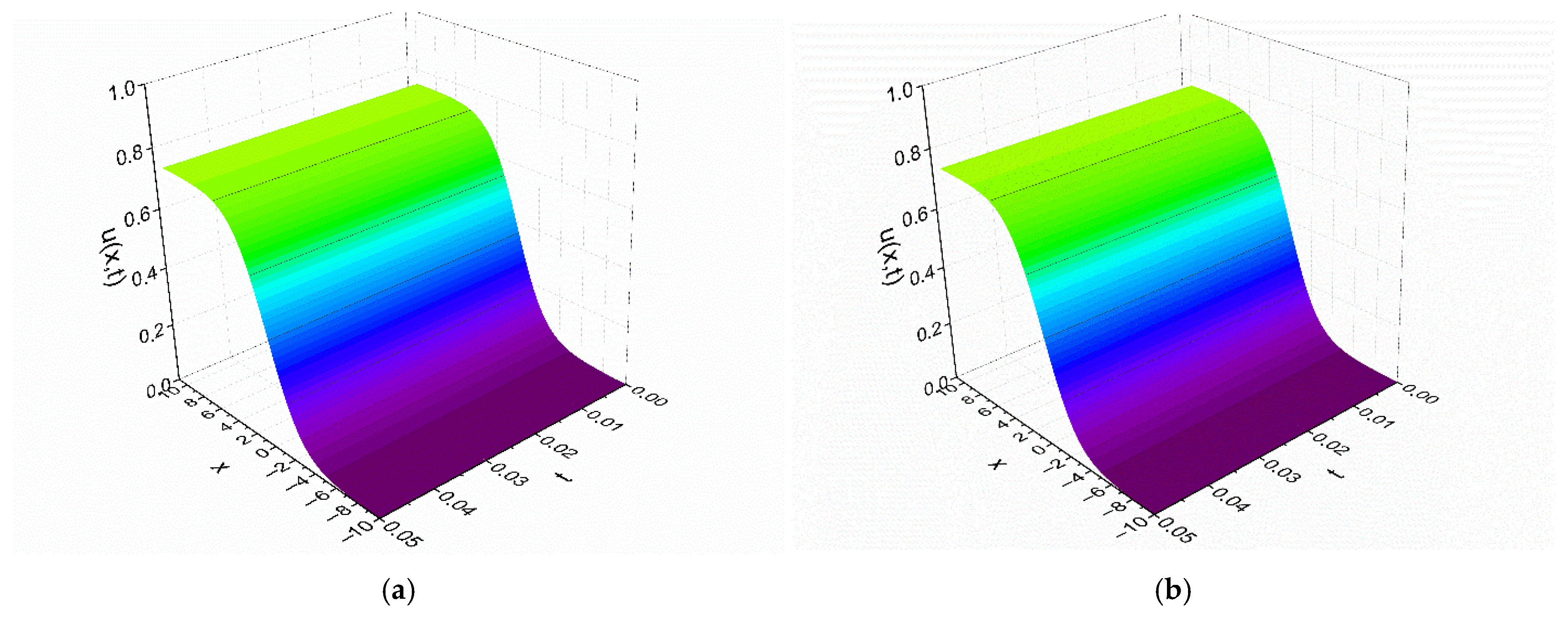

Figure 2 provides a further demonstration of the physical behavior of the EFD and PINN solutions to Test Problem 1 in three dimensions.

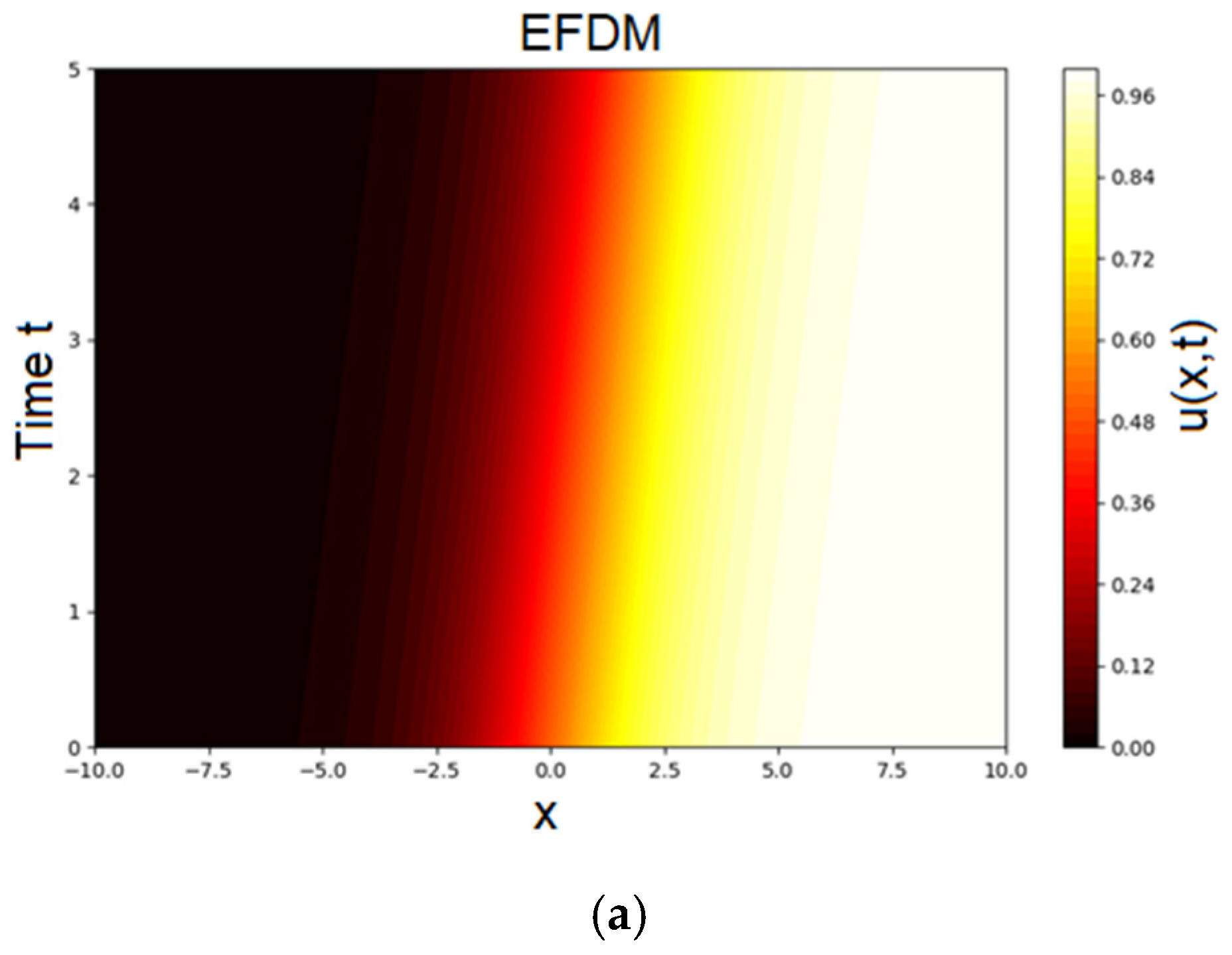

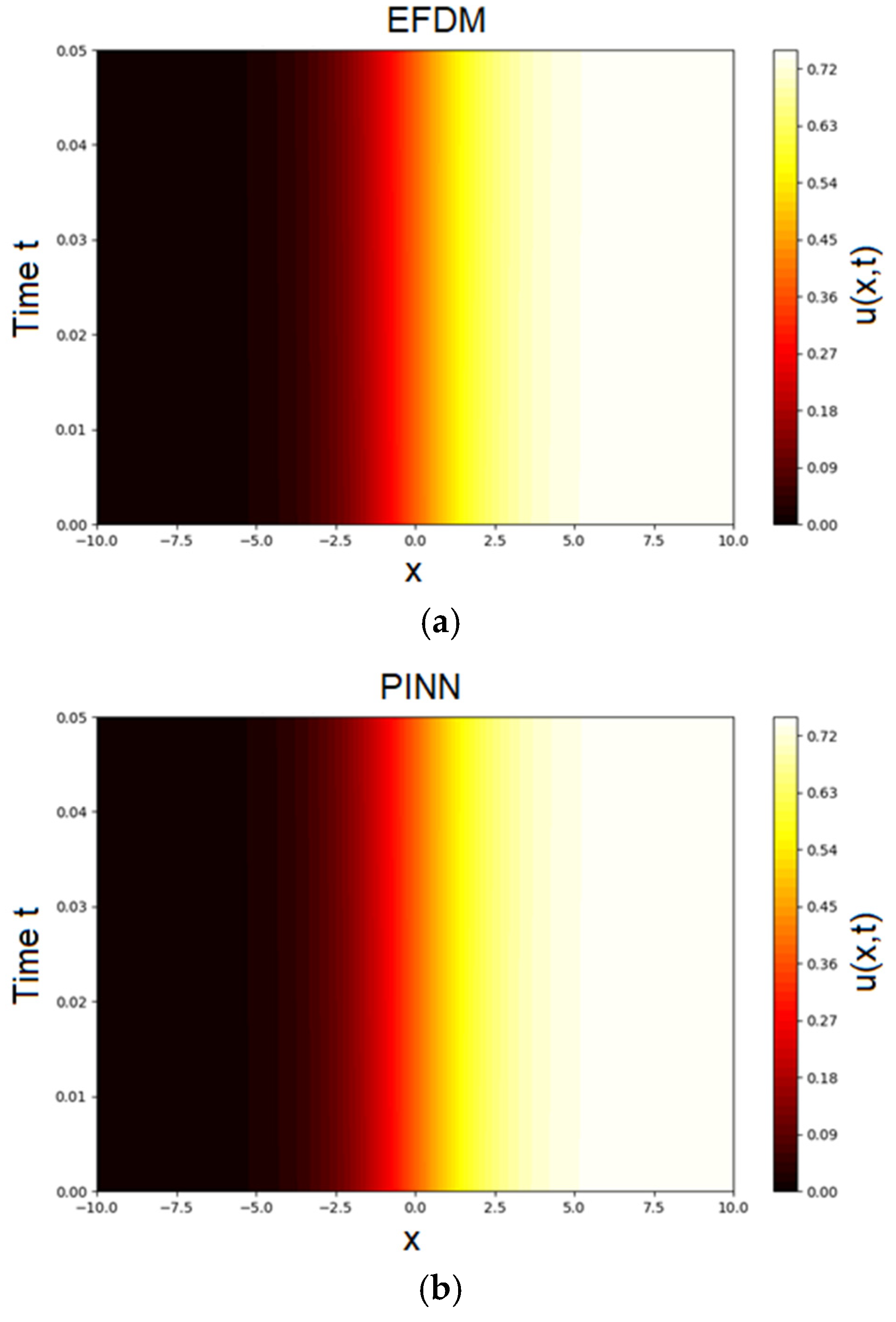

Figure 3 shows a heatmap of the predicted solution u(x, t) over the full space–time domain for Test Problem 1.

Test Problem 2: Consider the FHN Equation (1a) with

the initial condition

and boundary conditions

The analytical solution of the problem is given as [8]

Test Problem 2 models a traveling wave in an excitable medium that oscillates periodically over time, capturing how signals or disturbances can speed up, slow down, or reverse under rhythmic forcing. This behavior appears across many real-world systems: in neuroscience, it reflects neurons responding to periodic inputs, influencing spike timing and synchronization; in cardiac tissue, it represents pacemaker-driven excitation waves and helps study arrhythmias; in chemistry, it describes oscillating reaction fronts under external forcing, such as in Belousov–Zhabotinsky reactions; in ecology, it models population waves affected by seasonal or cyclical environmental changes; and in engineering and materials science, it illustrates nonlinear wave propagation in semiconductors, plasmas, or memristive devices under periodic driving. Overall, the problem provides a controlled setting to test numerical and machine learning methods on non-stationary, periodically forced waves, linking mathematical theory to practical excitable systems.

The EFD solution of this test problem is given as

The initial condition (16) in terms of finite differences becomes

and boundary conditions (17) are given as

One should mention that we used one-sided second-order differences for the advection term at two boundaries, in the following form:

and

where

and

are given in Equation (20a) and Equation (20b), respectively. Compared to the alternative choice of ghost nodes, one-sided second-order differences provide a much better propagation, preserving amplitude and speed. The truncation error for the difference Equation (21a,b) is $O((\Delta x)^2)$.

A comparison of the numerical solutions to Test Problem 2 obtained by the EFDM (step lengths are Δx = 0.5 and Δt = 0.0001) and PINN with the analytical solution (18) at different times T, by taking the parameter

is shown in Table 5 (for T = 0.001), Table 6 (for T = 0.01), and Table 7 (for T = 0.05). Further reducing Δt and Δx did not lead to an improvement in the accuracy of the numerical solution.

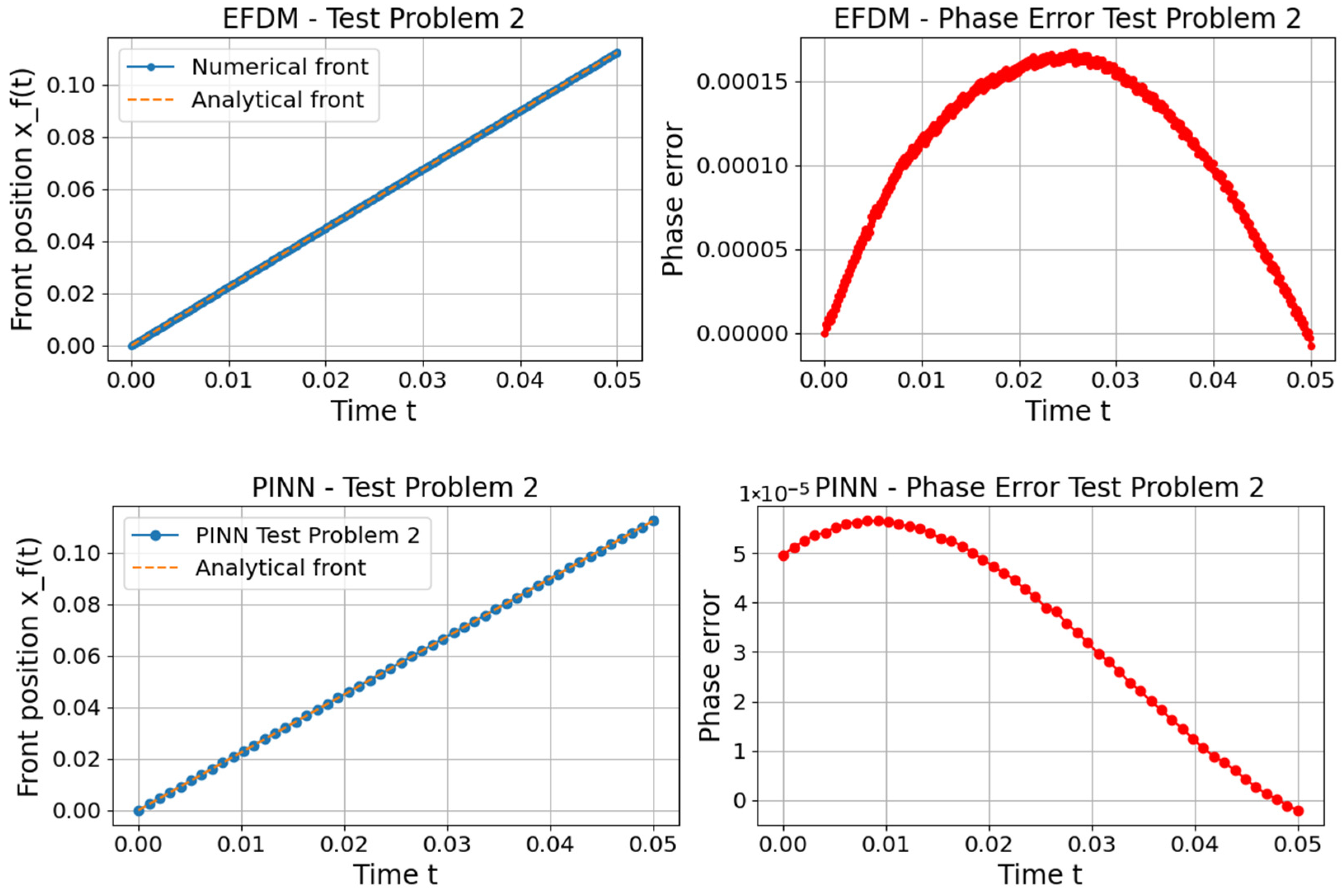

Table 8 displays the accuracy of the EFDM and PINN in solving Test Problem 2 at various points in time. Although a good agreement between our numerical solutions and analytical solution can be seen, it is important to emphasize that the EFDM provides a better fit with the analytical solution.

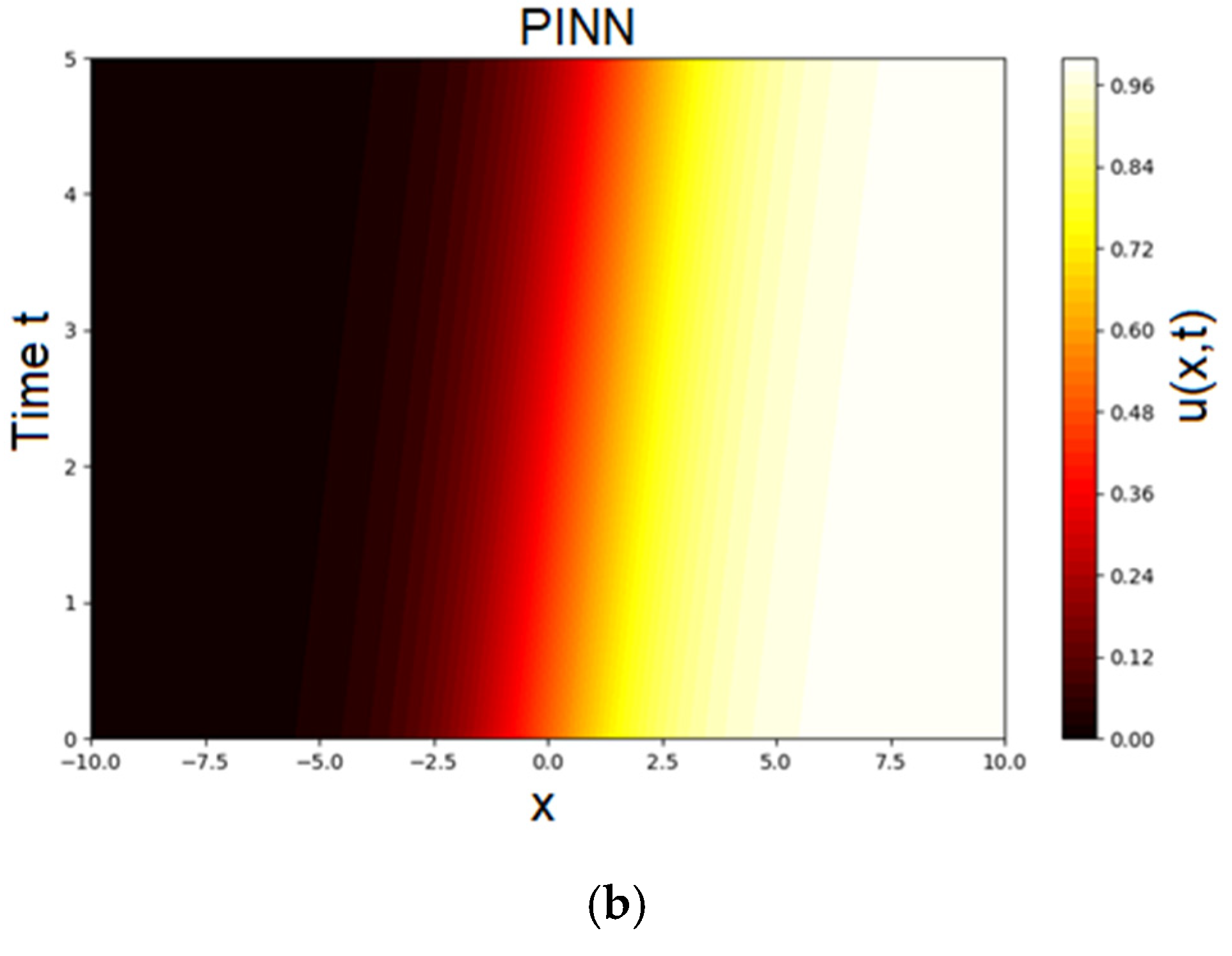

Figure 4 provides a further demonstration of the physical behavior of the EFD and PINN solutions to Test Problem 2 in three dimensions.

Figure 5 shows a heatmap of the predicted solution u(x, t) over the full space–time domain for Test Problem 2.

6. Accuracy Assessment of EFDM and PINN

We conducted a brief grid/time refinement study for EFDM, using the L2 error as a measure of accuracy:

In both test problems, the observed convergence rates are approximately 2, as expected from the order of accuracy of the scheme (see Table 9 and Table 10).
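An observed convergence rate of this kind is usually estimated from errors on two successive grids as $p = \log(e_1/e_2) / \log(h_1/h_2)$. The sketch below shows that arithmetic; the function name `observed_order` and the error values in the usage lines are hypothetical and are not taken from Table 9 or Table 10.

```python
import math


def observed_order(err_coarse, err_fine, h_coarse, h_fine):
    """Observed convergence rate from errors on two grids with step sizes h_coarse > h_fine."""
    return math.log(err_coarse / err_fine) / math.log(h_coarse / h_fine)


if __name__ == "__main__":
    # Illustrative numbers: halving the step reduces the error fourfold.
    print(observed_order(4.0e-4, 1.0e-4, 0.5, 0.25))
```

A fourfold error reduction under step halving corresponds to a rate of 2, which is the signature of a second-order scheme.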

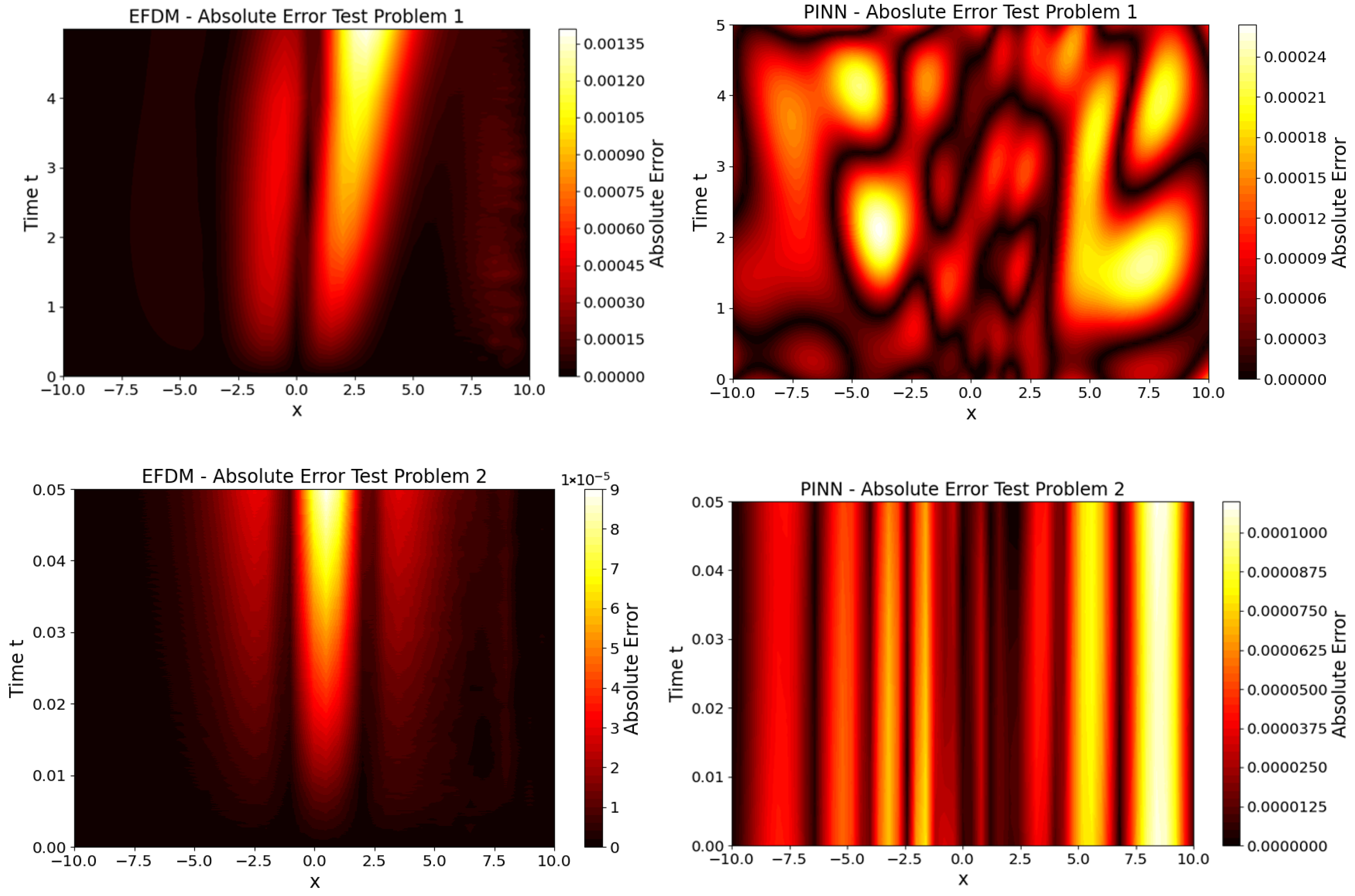

Figure 6 illustrates the integrated space–time L2 error for EFDM and PINN. The two methods show quite different space–time L2 error patterns. For EFDM, areas with higher absolute error are concentrated towards higher values of t, while for PINN, the errors are evenly distributed. This happens because, in EFDM, the error accumulates through the explicit time-stepping, while PINN involves no classical time-stepping.

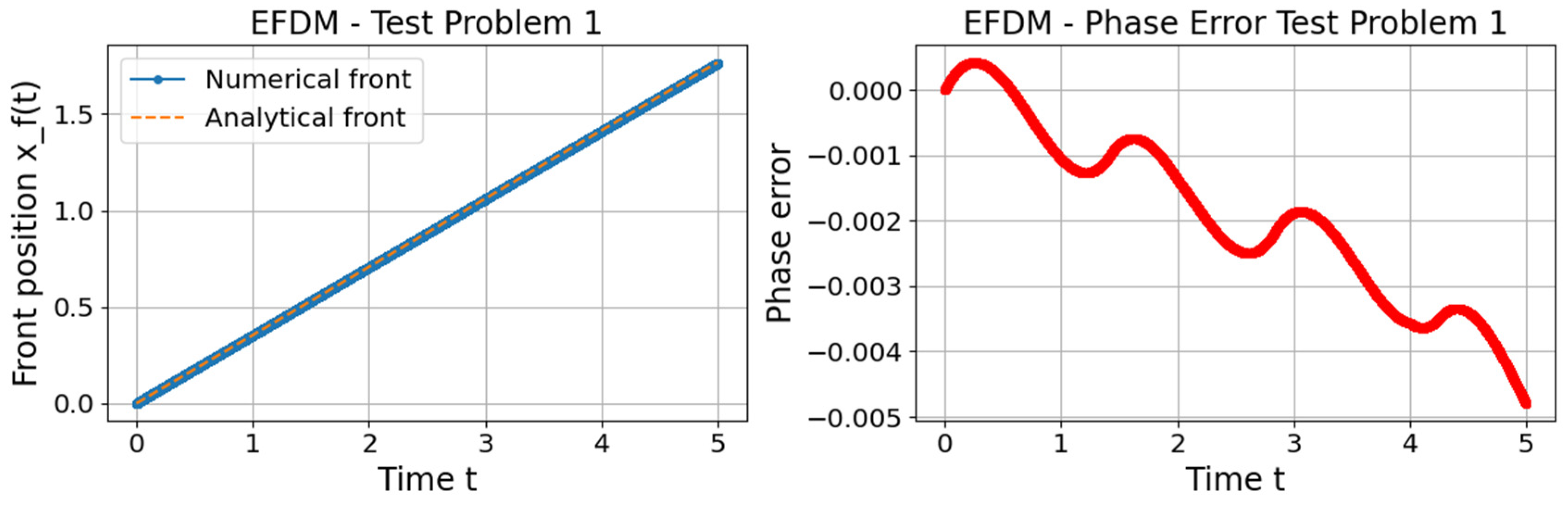

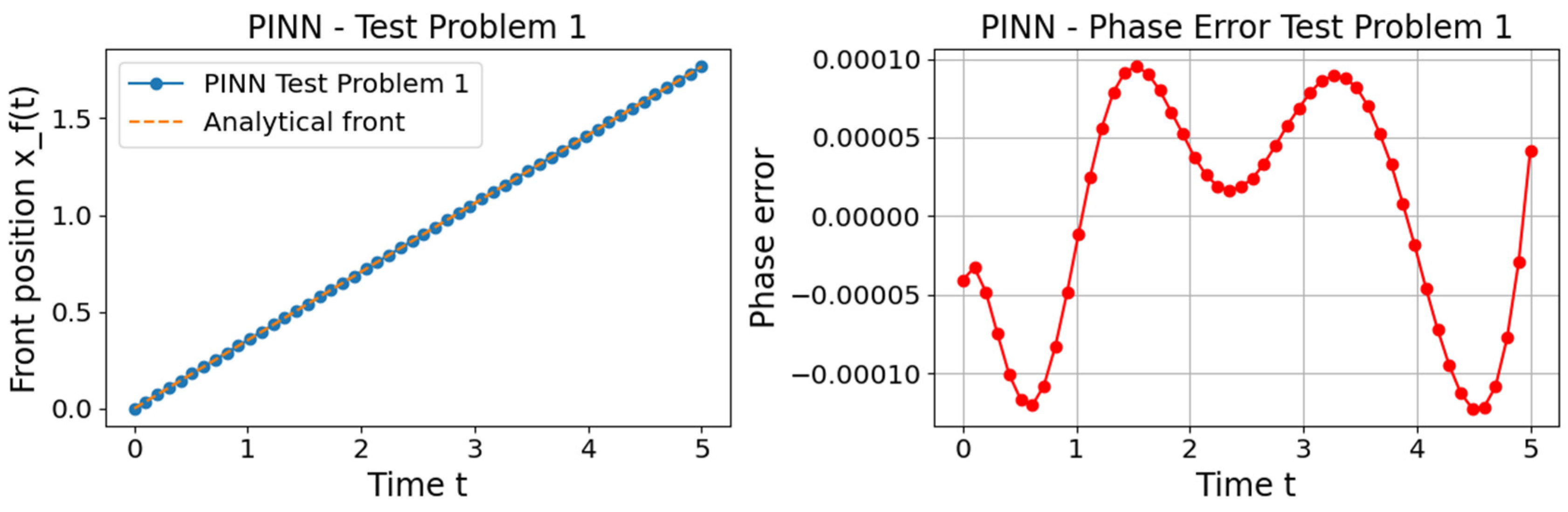

The phase error measures the timing/distance discrepancy in wave propagation.

Figure 7 and Figure 8 show phase errors for traveling waves with front position

, for Test Problems 1 and 2, respectively, calculated using the following equation:

The EFDM requires 21 s to solve Test Problem 1 (T = 5) and 1.1 s to solve Test Problem 2 (T = 0.05). These computation times are significantly shorter than the 85 s required by the PINN approach. Unlike EFDM, the PINN framework does not employ a classical time-stepping scheme; therefore, its total computational time corresponds solely to the training time of the PINN.

In Test Problem 2, although we admit time-varying coefficients α(t), β(t), and γ(t), the tests still follow smooth traveling-wave tanh profiles. In general, using smooth traveling-wave profiles (e.g., tanh functions) with time-varying coefficients α(t), β(t), and γ(t) simplifies the FHN model, focusing on core dynamics like wave propagation and the effects of time-varying parameters, while avoiding the complexities of pulse formation and front interactions. Stiffer, multi-scale, or pattern-forming dynamics require more advanced numerical methods and greater computational resources, so avoiding them helps reduce complexity and computational cost. In many real-world applications, such as real-time simulations or large-scale modeling, smoother, simpler profiles are more practical. While pattern formation and front interactions are relevant in some biological contexts, many systems do not exhibit these behaviors unless under specific conditions. By starting with smoother dynamics, one aligns with more controlled experimental setups or theoretical models where the system behaves in a simpler, steady-state regime. Early testing with simpler cases ensures the accuracy of numerical methods, allowing one to scale up to more complex systems later. In cases like neural or cardiac modeling, where wave propagation is often smooth, focusing on smoother dynamics better represents these scenarios.

To the best of our knowledge, this work presents the first comparison of the accuracy of the EFDM and the improved PINN approach for solving the FHN equation. We found that the PINN approach is more accurate than the EFDM in Test Problem 1, whereas the EFDM is more accurate in Test Problem 2. It should be noted that the PINN approach is up to almost two orders of magnitude more computationally demanding than the EFDM. In our previous work, we demonstrated that the EFDM outperforms the PINN approach in terms of accuracy when solving Burgers’ equation [15] and the Sine-Gordon equation [16]. A plausible reason why the PINN can outperform the EFDM for the FHN equation is that the smooth, dissipative, and stiff nature of this equation aligns with the strengths of NN-based smooth approximations and global optimization. In contrast, Burgers’ and Sine-Gordon equations involve shocks or oscillatory conservative dynamics, where traditional numerical schemes better preserve sharp or oscillatory structures, while PINNs’ smooth approximators struggle [15,16]. The results of this study are particularly important for assessing the performance of the PINN in solving the FHN equation, especially for various nonlinear physical processes. These processes include phenomena such as pulse propagation in optical fibers, geophysical fluid dynamics, and long-wave propagation in both shallow and deep ocean environments. This highlights the potential of PINNs to handle complex, nonlinear systems, despite their initial limitations compared to EFDM. The PINN flowchart used in this work is shown in Figure 9.

Finally, while the classical FHN model captures the basic excitability of neurons and other excitable media, real-world systems are often far more complex. In such systems, singularities naturally arise due to the interplay of multiple timescales, strong nonlinearities, and spatial or network interactions. Fast–slow dynamics, for instance, create regions where the state variables change abruptly over very short spatial or temporal scales, producing boundary layers or spike bursts that cannot be captured by the simple smooth FHN equations. Discontinuous or piecewise nonlinearities further introduce singular points in the system, where the derivative of the response changes suddenly, modeling threshold-like behavior observed in real neurons, cardiac tissue, and chemical reactions. High-dimensional extensions of FHN, such as coupled networks or spatially extended reaction–diffusion systems, produce singular wave phenomena including spiral cores, wave collisions, and reentrant patterns, all of which play a central role in arrhythmias, seizure dynamics, and pattern formation in chemical media. In addition, stochastic perturbations or external forcing can amplify these singular behaviors, leading to complex phenomena like chaotic bursting or wavefront breakup. From a numerical standpoint, singularities present significant challenges. Standard explicit methods may become unstable or fail to resolve sharp gradients, while naive discretization can smear essential features of the solution. Accurate simulation requires adaptive time-stepping, mesh refinement, or specialized singular perturbation techniques, and these approaches must balance computational efficiency with the need to capture critical dynamical features. 
Ultimately, studying singular FHN-type models provides deeper insight into the rich dynamics of excitable systems, revealing mechanisms behind rhythmic bursting, conduction block, pattern formation, and nonlinear wave interactions that the classical smooth FHN model cannot represent. By incorporating singularities, these models bridge the gap between idealized theory and the complex behaviors observed in biology, chemistry, and materials science.

7. Conclusions

We compared numerical solutions of the nonlinear parabolic FHN equation, obtained using the improved PINN and the standard EFDM, with analytical solutions previously reported in the literature. While both approaches provide good agreement with the analytical solutions, our findings reveal that the PINN method aligns more closely with the analytical solution in Test Problem 1, whereas the EFDM achieves higher accuracy in Test Problem 2. This study contributes to assessing the PINN’s effectiveness in solving the FHN equation and its application to nonlinear phenomena, such as dissipative pulse dynamics in optical fibers, drug delivery, neural behavior, geophysical fluid dynamics, and long-wave propagation in oceans, and it illustrates the potential of PINNs for tackling complex systems. The principles underlying the solution methods employed in this study may be used to create numerical models for nonlinear PDEs that describe a wide range of nonlinear processes in physical and engineering problems.