Solving a System of One-Dimensional Hyperbolic Delay Differential Equations Using the Method of Lines and Runge-Kutta Methods

Abstract

1. Introduction

2. Problem Statement

3. Stability Analysis

3.1. Propagation of Discontinuities

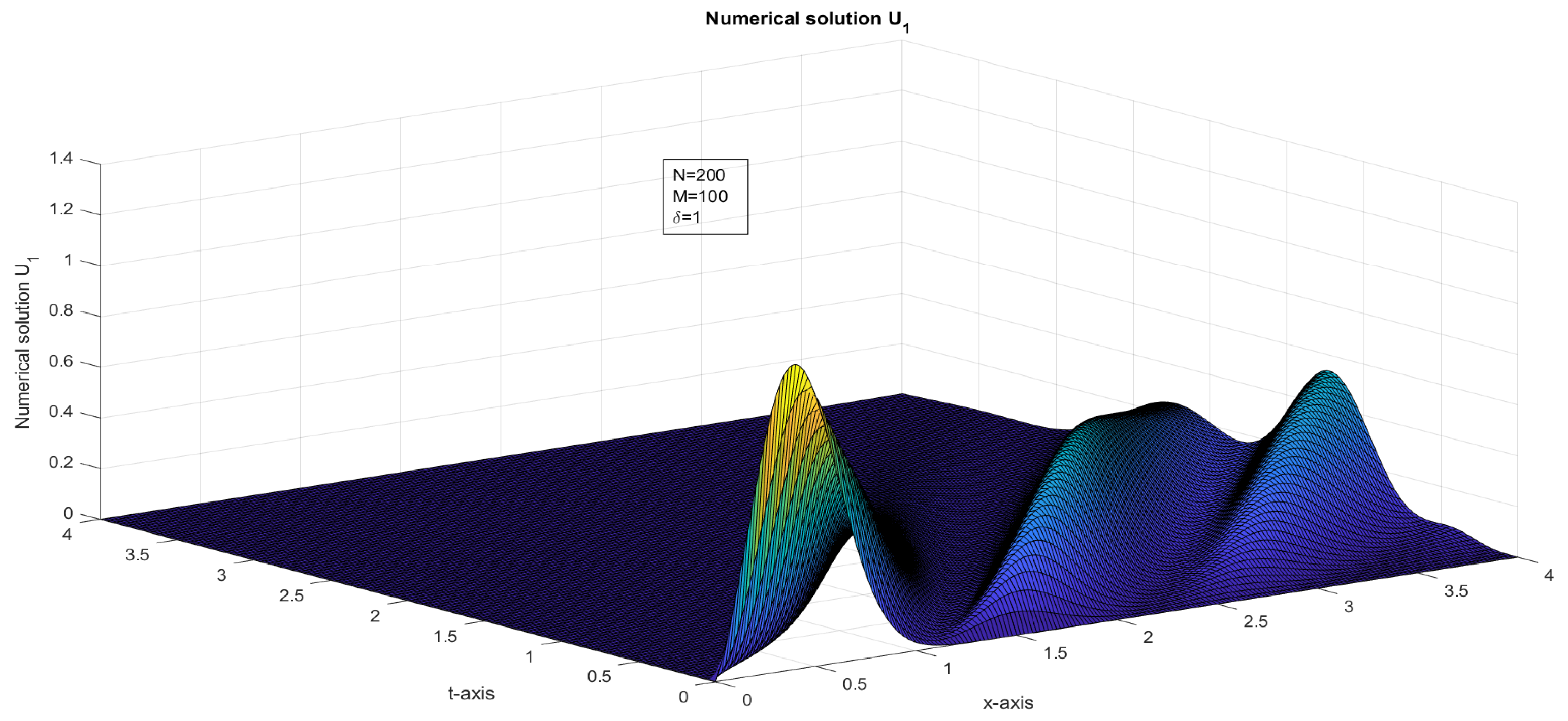

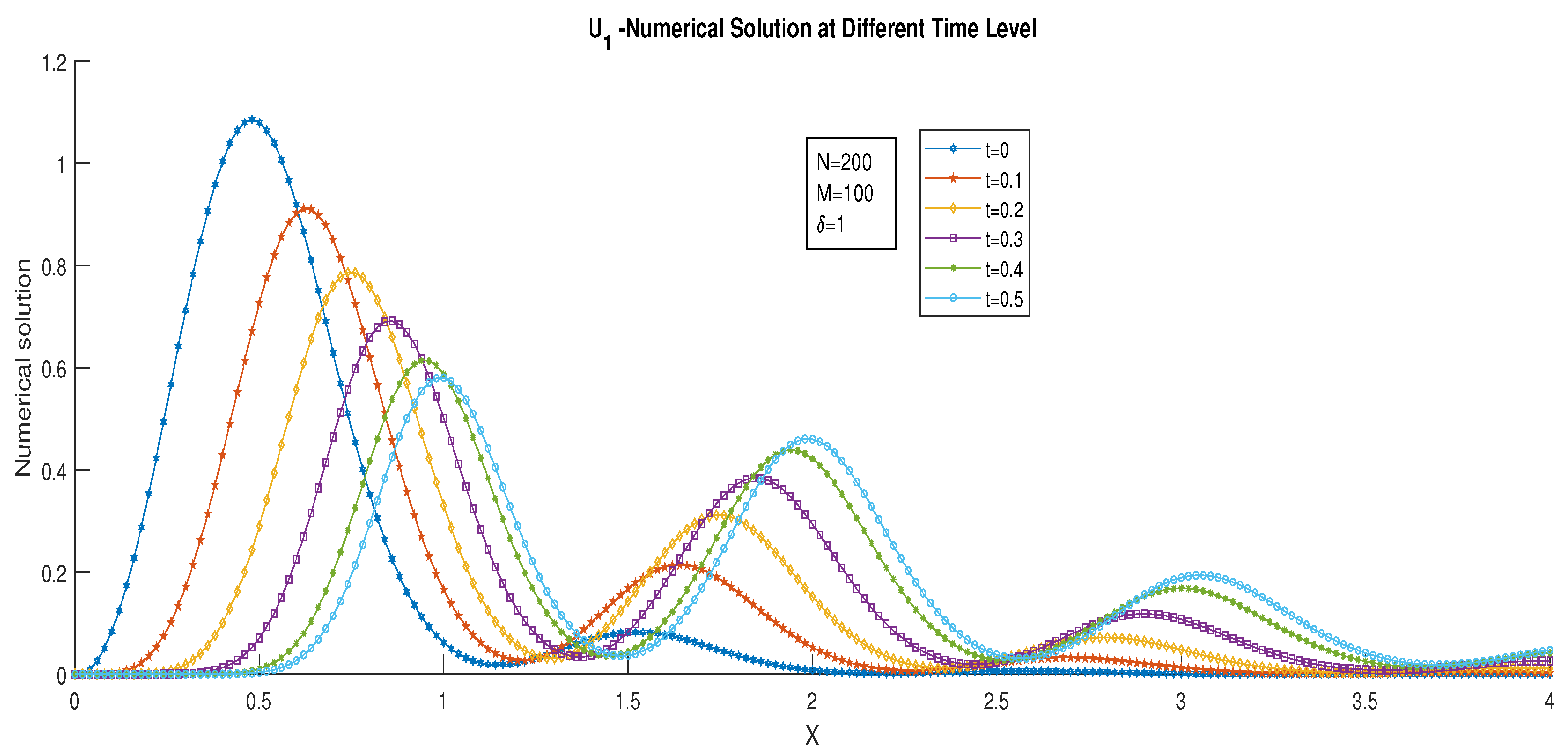

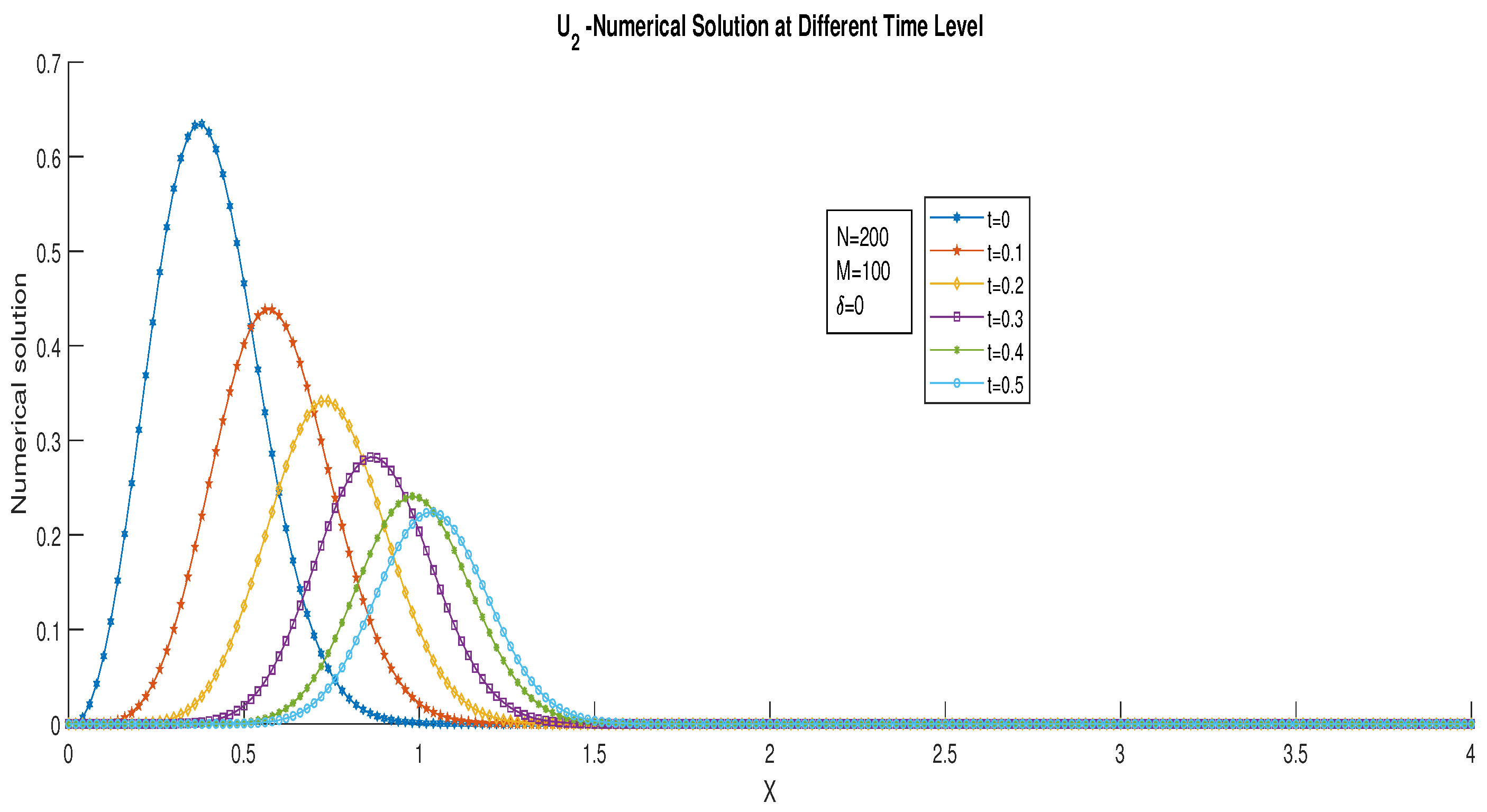

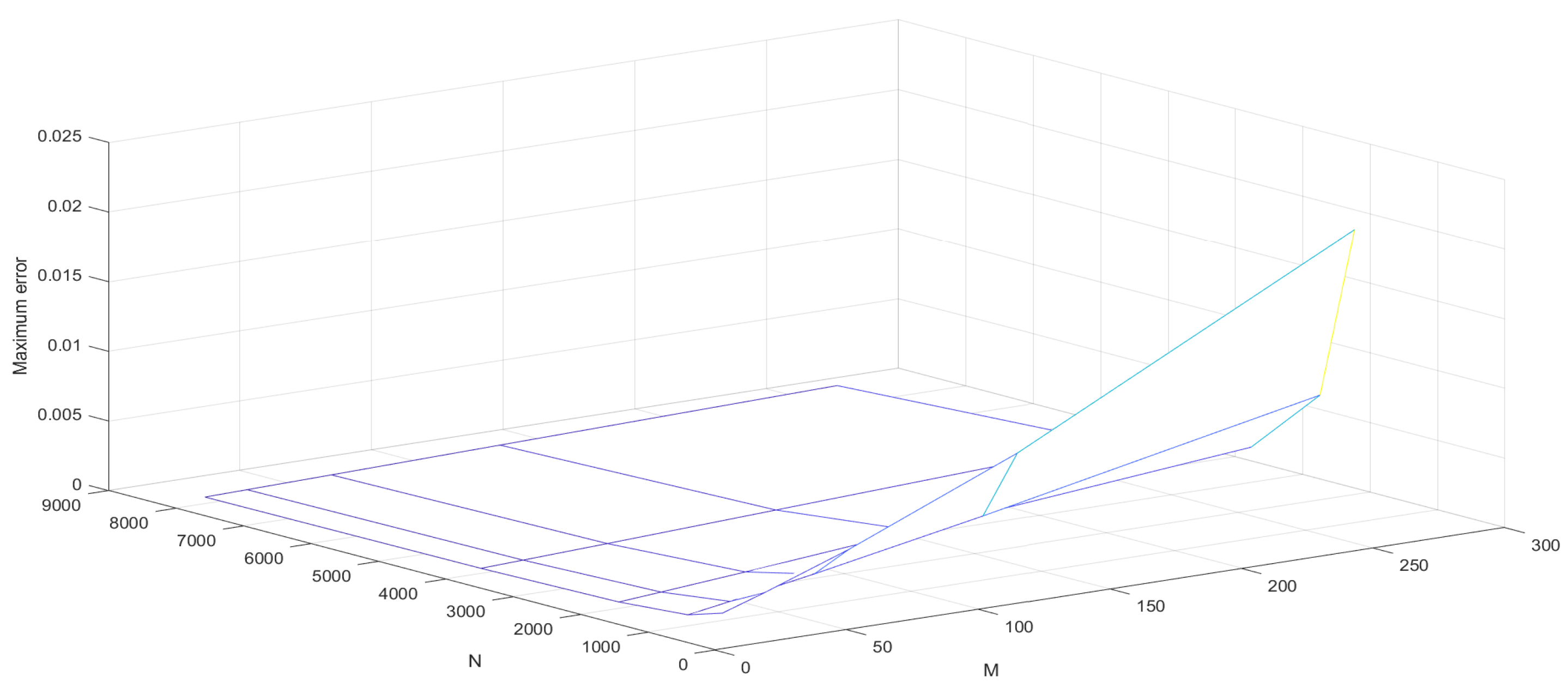

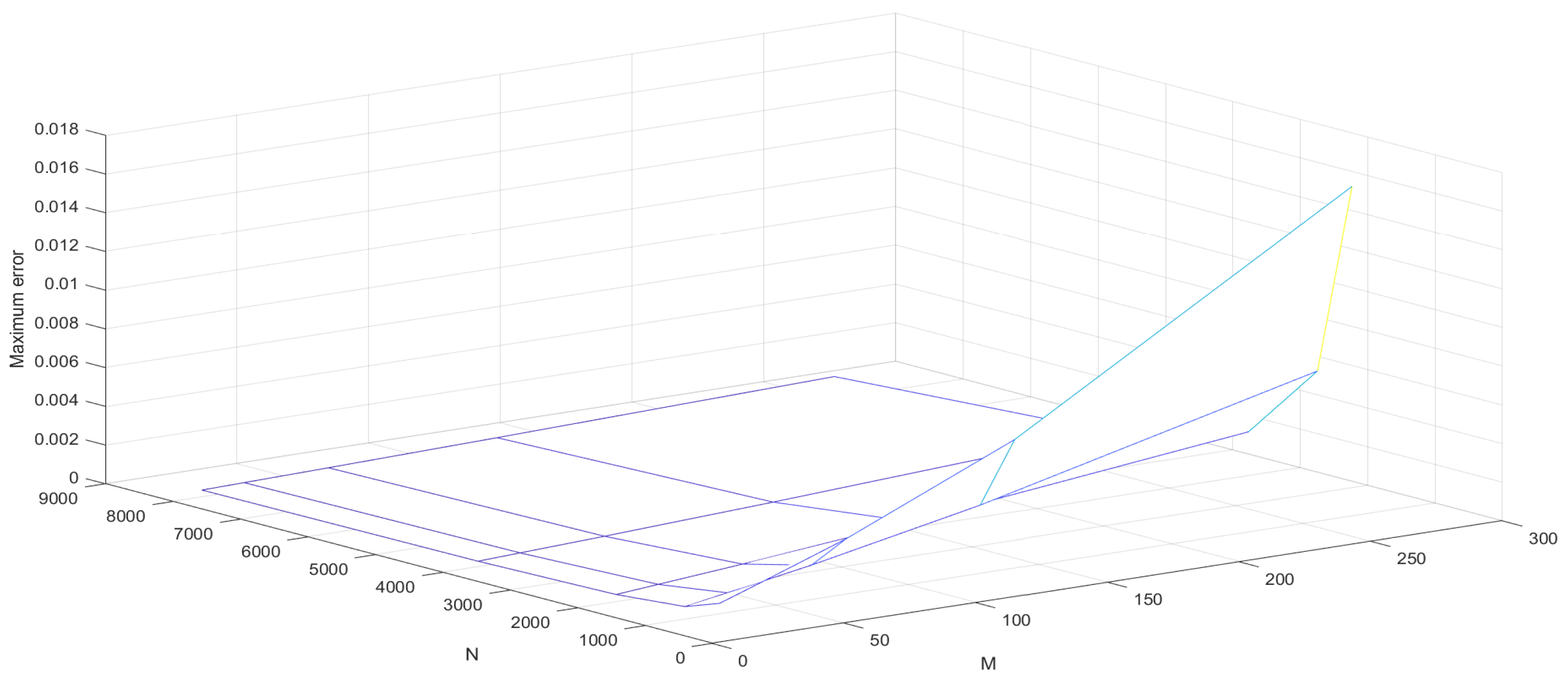

3.2. Derivative Bounds

4. Semi-Discretization in Temporal Direction

5. Fully Discretized Problem

Spatial Mesh Points

6. Numerical Examples

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| N and | | | | | | |
|---|---|---|---|---|---|---|
| M ↓ | 64 | 128 | 256 | 512 | 1024 | |
| 64 | 2.3551 × 10 | 1.1683 × 10 | 5.8188 × 10 | 2.9037 × 10 | 1.4505 × 10 | 5.8188 × 10 |
| 128 | 3.9796 × 10 | 1.9611 × 10 | 9.7352 × 10 | 4.8503 × 10 | 2.4209 × 10 | 9.7352 × 10 |
| 256 | 8.9886 × 10 | 4.2439 × 10 | 2.0649 × 10 | 1.0186 × 10 | 5.0590 × 10 | 8.9886 × 10 |
| 512 | 2.0254 × 10 | 9.2206 × 10 | 4.4098 × 10 | 2.1579 × 10 | 1.0675 × 10 | 9.2206 × 10 |
| 1024 | 4.3110 × 10 | 1.8317 × 10 | 8.5104 × 10 | 4.1089 × 10 | 2.0196 × 10 | 8.5104 × 10 |
| | 8.9886 × 10 | 9.2206 × 10 | 9.7352 × 10 | 4.8503 × 10 | 5.0590 × 10 | - |

| N and | | | | | | |
|---|---|---|---|---|---|---|
| M ↓ | 64 | 128 | 256 | 512 | 1024 | |
| 64 | 3.7129 × 10 | 1.8074 × 10 | 8.9174 × 10 | 4.4293 × 10 | 2.2074 × 10 | 8.9174 × 10 |
| 128 | 7.2100 × 10 | 3.4536 × 10 | 1.6906 × 10 | 8.3643 × 10 | 4.1602 × 10 | 8.3643 × 10 |
| 256 | 1.3467 × 10 | 6.3518 × 10 | 3.0862 × 10 | 1.5217 × 10 | 7.5559 × 10 | 7.5559 × 10 |
| 512 | 2.2033 × 10 | 1.0136 × 10 | 4.8746 × 10 | 2.3920 × 10 | 1.1850 × 10 | 4.8746 × 10 |
| 1024 | 3.4673 × 10 | 1.4911 × 10 | 7.0017 × 10 | 3.4002 × 10 | 1.6763 × 10 | 7.0017 × 10 |
| | 7.2100 × 10 | 6.3518 × 10 | 8.9174 × 10 | 8.3643 × 10 | 7.5559 × 10 | - |

| N and | | | | | | |
|---|---|---|---|---|---|---|
| M ↓ | 64 | 128 | 256 | 512 | 1024 | |
| 64 | 1.5319 × 10 | 7.5986 × 10 | 3.7843 × 10 | 1.8885 × 10 | 9.4332 × 10 | 9.4332 × 10 |
| 128 | 2.5359 × 10 | 1.2540 × 10 | 6.2355 × 10 | 3.1093 × 10 | 1.5525 × 10 | 6.2355 × 10 |
| 256 | 4.7023 × 10 | 2.2893 × 10 | 1.1298 × 10 | 5.6127 × 10 | 2.7973 × 10 | 5.6127 × 10 |
| 512 | 9.7474 × 10 | 4.5751 × 10 | 2.2226 × 10 | 1.0958 × 10 | 5.4407 × 10 | 9.7474 × 10 |
| 1024 | 2.2033 × 10 | 9.5061 × 10 | 4.4622 × 10 | 2.1665 × 10 | 1.0678 × 10 | 9.5061 × 10 |
| | 9.7474 × 10 | 9.5061 × 10 | 6.2355 × 10 | 5.6127 × 10 | 9.4332 × 10 | - |

| N and | | | | | | |
|---|---|---|---|---|---|---|
| M ↓ | 64 | 128 | 256 | 512 | 1024 | |
| 64 | 1.2625 × 10 | 6.2681 × 10 | 3.1231 × 10 | 1.5588 × 10 | 7.7871 × 10 | 7.7871 × 10 |
| 128 | 2.0230 × 10 | 1.0021 × 10 | 4.9875 × 10 | 2.4881 × 10 | 1.2426 × 10 | 4.9875 × 10 |
| 256 | 3.5823 × 10 | 1.7576 × 10 | 8.7066 × 10 | 4.3331 × 10 | 2.1616 × 10 | 8.7066 × 10 |
| 512 | 7.3619 × 10 | 3.5035 × 10 | 1.7114 × 10 | 8.4594 × 10 | 4.2067 × 10 | 8.4594 × 10 |
| 1024 | 1.7733 × 10 | 7.7339 × 10 | 3.6528 × 10 | 1.7789 × 10 | 8.7813 × 10 | 8.7813 × 10 |
| | 7.3619 × 10 | 7.7339 × 10 | 8.7066 × 10 | 8.4594 × 10 | 8.7813 × 10 | - |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Karthick, S.; Subburayan, V.; Agarwal, R.P. Solving a System of One-Dimensional Hyperbolic Delay Differential Equations Using the Method of Lines and Runge-Kutta Methods. Computation 2024, 12, 64. https://doi.org/10.3390/computation12040064
