Abstract

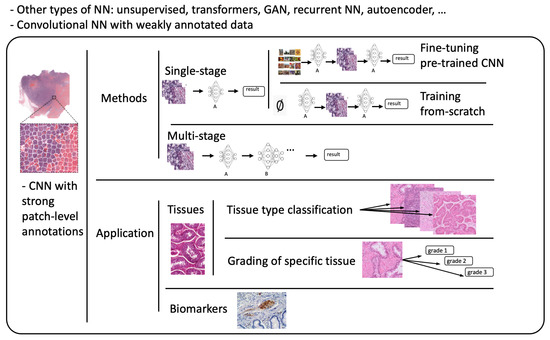

Deep learning (DL) and convolutional neural networks (CNNs) have achieved state-of-the-art performance in many medical image analysis tasks. Histopathological images contain valuable information that can be used to diagnose diseases and plan treatment. Consequently, the application of DL to the classification of histological images is a rapidly expanding field of research, and the popularity of CNNs has led to rapid growth in the number of CNN-based works in histopathology. This paper aims to provide a clear overview that makes this literature easier to navigate. We review recent DL-based classification studies in histopathology that use strongly annotated data and categorize them from two points of view. First, according to the training approach and model construction, the studies are divided into three groups: (1) fine-tuning of pre-trained networks for one-stage classification, (2) training networks from scratch for one-stage classification, and (3) multi-stage classification. Second, since the reviewed papers cover a wide range of applications (e.g., breast, lung, colon, brain, and kidney), they are further grouped into tissue classification, tissue grading, and biomarker identification.

1. Introduction

Traditionally, cancer has been diagnosed by a pathologist examining stained tumor specimens on glass slides under a microscope. In recent years, deep learning has developed rapidly, and a growing number of entire tissue slides are digitized by scanners and stored as whole-slide images (WSIs) [1]. With so many WSIs now available in digital form, it is natural that many attempts have been made to explore the potential of deep learning for histopathological image analysis. Histological images and tasks have unique characteristics, and specific processing techniques are often required [2]. The authors of [3] provided an extensive overview of deep neural network models developed for computational histopathology image analysis, covering the period up to December 2019. Because the volume of research in this domain is growing rapidly, this review aims to complement their overview with papers published since 2020. In contrast to their survey, this review focuses on one specific area of supervised learning: classification using strongly annotated data.

The rest of this paper is organized as follows. In Section 2, a basic overview of neural networks used in the context of computational histopathology is presented. Section 3 discusses in detail supervised deep learning models and approaches used in digital pathology for classification tasks. These approaches have been grouped into three main categories: one-stage classification using fine-tuning, one-stage classification training models from scratch, and the multi-stage classification approach. In Section 4, we discuss the histopathological point of view by classifying the methods according to their area of application. In Section 5, we conclude the paper.

2. Materials and Methods—Convolutional Neural Network

For this survey, only papers that classified histological images with common convolutional neural network models and used strongly annotated datasets were selected. Articles that used more complex deep learning models or weak annotations were not included. The literature search was carried out mainly in PubMed and additionally in arXiv, looking for articles containing deep learning (DL) keywords such as “convolutional neural networks”, “classification”, and “deep learning”, together with histology keywords such as “hematoxylin and eosin”, “H&E”, and “histopathology” in the title or abstract. To narrow down the selection, combinations of deep learning keywords with histology keywords were used, for example, “CNN hematoxylin and eosin”. The combination “deep learning histopathology” was omitted because both terms are too general. Moreover, only articles published since 2020 were considered. The subsequent filtering process consisted of four steps. The first two steps were designed to quickly remove articles that were obviously irrelevant to the topic of this review, thereby minimizing the number of articles that had to be analyzed in more detail in the remaining two steps. In the first step, articles were filtered based on the title, and papers that were obviously unrelated to the application of CNNs to histological image classification were excluded. This resulted in approximately 700 papers. In the second step, articles that could not be unambiguously excluded based on the title were filtered by reading the abstract. In the third step, the introduction was analyzed, mainly to exclude studies that did not meet the criteria of this review, such as papers using deep learning approaches more complex than convolutional neural networks or datasets not consisting solely of histological images. In the last step, approximately 100 articles were read in full. This step mainly focused on retaining only studies that worked with strongly annotated datasets. We also included some papers that were missing from the initial search but were cross-referenced in the selected articles.

The purpose of this section is to explain the concepts and models of deep neural networks (DNNs) used for classification tasks in digital pathology. Machine learning is a branch of artificial intelligence that allows computers to learn and adapt their behavior based on training data [4]. Supervised learning methods, in which the dataset consists of input features and corresponding labels, are the most commonly used. In classification, the label represents one of a fixed number of classes. The algorithm learns patterns and relationships in the data to find a suitable function that maps inputs to outputs, producing a model that captures hidden properties of the data and can predict outputs for new inputs. Training a model means finding the model parameters that minimize a defined loss function on the training data [5,6].

Neural networks, the foundation of most DNN algorithms, consist of interconnected units called neurons that are organized into layers: an input layer, hidden layers, and an output layer. DNNs have multiple hidden layers. A neuron’s output, or activation, is obtained by applying an activation function to a linear combination of its inputs and parameters (weights and bias). Common activation functions include the sigmoid, hyperbolic tangent, and ReLU functions. In the final output layer, the activations are mapped to a probability distribution over the classes using the softmax function [6,7].
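To make the neuron description concrete, the following minimal sketch (standard library only) computes each neuron's activation as an activation function applied to a weighted sum plus bias, maps the output-layer scores to class probabilities with softmax, and evaluates the cross-entropy loss that supervised training would minimize. The layer sizes, weights, inputs, and the choice of ReLU for the hidden layer are illustrative assumptions, not values taken from any reviewed study.

```python
import math

def relu(z):
    """Rectified linear unit: returns max(0, z)."""
    return max(0.0, z)

def dense(inputs, weights, biases, activation=None):
    """Fully connected layer: each neuron computes w . x + b, then an optional activation."""
    outputs = []
    for w_row, b in zip(weights, biases):
        z = sum(w * x for w, x in zip(w_row, inputs)) + b
        outputs.append(activation(z) if activation else z)
    return outputs

def softmax(logits):
    """Map raw output-layer scores to a probability distribution over classes."""
    m = max(logits)  # subtracting the maximum avoids overflow in exp()
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(probs, true_class):
    """Negative log-probability of the correct class: the loss a supervised classifier minimizes."""
    return -math.log(probs[true_class])

# Toy network: 3 input features -> 2 hidden ReLU neurons -> 3 output classes.
x = [0.5, -1.0, 2.0]
hidden = dense(x, weights=[[0.2, -0.4, 0.1], [0.7, 0.3, -0.5]], biases=[0.0, 0.1], activation=relu)
logits = dense(hidden, weights=[[1.0, -1.0], [0.5, 0.5], [-1.0, 1.0]], biases=[0.0, 0.0, 0.0])
probs = softmax(logits)
loss = cross_entropy(probs, true_class=0)
```

Here the second hidden neuron's weighted sum is negative, so ReLU sets its activation to zero; training would adjust the weights to lower `loss` over many labeled examples.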

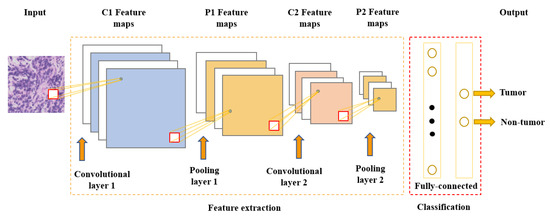

CNNs are among the most popular supervised deep learning networks and are often employed to process visual data such as images and video sequences [8,9,10]. CNNs consist of three types of layers: convolutional layers, pooling layers, and fully connected layers, as shown in Figure 1. The convolutional layer is the most important component of the CNN architecture. It consists of several filters, also called kernels, each represented as a grid of discrete values. These values are referred to as kernel weights and are tuned during training. In the convolution operation, the kernel slides over the whole image horizontally and vertically; at each position, the dot product between the kernel and the underlying image region is computed by multiplying corresponding values and summing them into a single scalar. Convolving each kernel over the input matrix in this way yields a feature map. The feature maps generated by the convolutional layers are then sub-sampled in the pooling layers. Together, the convolutional and pooling layers form the feature extraction pipeline. Finally, the fully connected layers combine the features extracted by the previous layers to perform the classification [8,11,12].
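The sliding dot product and the pooling step described above can be sketched in a few lines of plain Python. This is a minimal single-channel illustration assuming stride 1, no padding, no bias, and 2 × 2 max pooling; real CNN layers additionally handle multiple channels, padding, strides, and learned biases. As in most deep learning libraries, the kernel is not flipped, so strictly speaking this is cross-correlation.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (stride 1, no padding) and take the dot
    product at each position, producing one feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            for u in range(kh):
                for v in range(kw):
                    acc += image[i + u][j + v] * kernel[u][v]
            row.append(acc)
        feature_map.append(row)
    return feature_map

def max_pool(feature_map, size=2):
    """Sub-sample by keeping the maximum of each non-overlapping size x size window."""
    pooled = []
    for i in range(0, len(feature_map) - size + 1, size):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, size):
            row.append(max(feature_map[i + u][j + v] for u in range(size) for v in range(size)))
        pooled.append(row)
    return pooled

image = [
    [1, 2, 0, 1, 3],
    [0, 1, 3, 2, 1],
    [2, 0, 1, 0, 2],
    [1, 3, 2, 1, 0],
    [0, 1, 0, 2, 1],
]
kernel = [[1, 0], [0, -1]]    # illustrative weights: a diagonal-difference filter
fmap = conv2d(image, kernel)  # 4 x 4 feature map
pooled = max_pool(fmap)       # 2 x 2 after max pooling
```

In a trained CNN the kernel values are learned rather than hand-set, and many kernels are applied in parallel, each producing its own feature map.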

Figure 1.

Convolutional neural network architecture.

3. Classification of Histopathology Images

This section provides a general overview of recent publications using deep learning and CNNs in digital pathology. The focus of this work is solely on supervised learning applied to the classification of histological images. This category includes models that perform image-level classification, such as tumor subtype classification and grading, as well as models that use a sliding-window approach to identify tissue types. Most deep learning approaches do not use the whole-slide image (WSI) directly as input because its high dimensionality would make this computationally expensive. Instead, they extract small square patches and assign a label to each of them. Based on the level of annotation used for training, existing methods can be divided into two subcategories: the strong-annotations approach (patch-level annotations) and the weak-annotations approach (slide-level annotations) [13]. The former relies on certified pathologists identifying regions of interest and precisely localizing tumors, whereas for the latter it is sufficient to assign a class to each whole-slide image. This work surveys the strong-annotations approach.

3.1. Strong-Annotations Approach (Patch-Level Annotation)

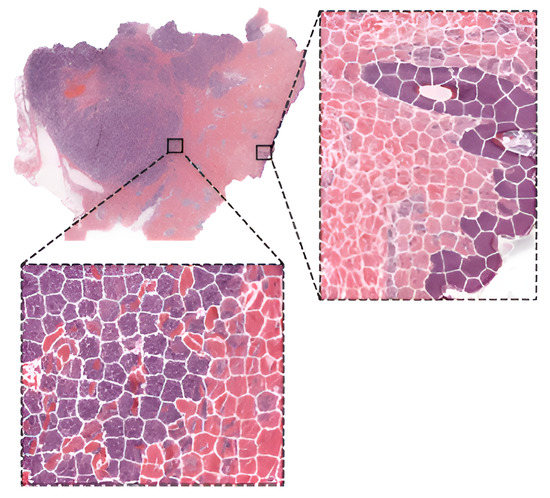

Patch-level annotations are referred to as strong because every extracted patch has its own class label. Typically, patch labels are derived from pixel-level annotations, as shown in Figure 2. Manually annotating pixels is very time-consuming and laborious work that requires expert knowledge: for instance, pathologists have to localize and annotate all relevant pixels or cells in a WSI by contouring the whole tumor. Consequently, very few strongly annotated histological datasets are currently available. Besides whole-slide image classification, pixel-wise or patch-wise predictions obtained with the sliding-window method enable spatial predictions such as the localization and detection of cancerous cells or tissue. In addition, placing the patch predictions next to each other builds a WSI heatmap, which makes the model's output easier to interpret. Many studies apply CNNs to patch classification in a single-stage approach, in which each patch is classified by one CNN architecture. In contrast, several approaches use a multi-stage workflow, in which the output of one CNN is typically fed into another CNN that delivers the final decision; such workflows, which may include even more CNN models, are labeled here as multi-stage classification. For the one-stage approach, one can distinguish between models trained from scratch with randomly initialized weights and models that use CNN architectures pre-trained on data that is often unrelated to the original problem. For multi-stage workflows, such a distinction is difficult because of the many possible combinations: some CNNs in the workflow may be trained from scratch, while others may be pre-trained. The top graphic in Figure 3 shows the categorization of CNN methods used in this section.

Figure 2.

Construction of patches from pixel-level annotations of WSI.
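The patch construction in Figure 2 and the heatmap assembly described above can be illustrated with a short sketch. It assumes a binary pixel mask (1 = annotated tumor), non-overlapping square patches, and a hypothetical rule that a patch is labeled tumor when at least half of its pixels are annotated; reviewed studies may use other thresholds, strides, or overlapping windows. The patch scores used for the heatmap are made-up stand-ins for CNN outputs.

```python
def extract_patch_labels(mask, patch_size, min_tumor_fraction=0.5):
    """Tile a pixel-level annotation mask into non-overlapping square patches and
    label each patch from its fraction of annotated pixels.
    The 0.5 threshold is an illustrative assumption."""
    labels = {}
    height, width = len(mask), len(mask[0])
    for top in range(0, height - patch_size + 1, patch_size):
        for left in range(0, width - patch_size + 1, patch_size):
            pixels = [mask[top + u][left + v] for u in range(patch_size) for v in range(patch_size)]
            fraction = sum(pixels) / len(pixels)
            labels[(top // patch_size, left // patch_size)] = 1 if fraction >= min_tumor_fraction else 0
    return labels

def build_heatmap(patch_scores, n_rows, n_cols):
    """Place per-patch predictions back at their grid positions to form a slide heatmap."""
    heatmap = [[0.0] * n_cols for _ in range(n_rows)]
    for (row, col), score in patch_scores.items():
        heatmap[row][col] = score
    return heatmap

mask = [
    [1, 1, 0, 0],
    [1, 1, 0, 0],
    [0, 0, 0, 1],
    [0, 0, 1, 1],
]
labels = extract_patch_labels(mask, patch_size=2)
# Hypothetical CNN tumor probabilities for the same four patch positions.
scores = {(0, 0): 0.9, (0, 1): 0.2, (1, 0): 0.1, (1, 1): 0.7}
heatmap = build_heatmap(scores, n_rows=2, n_cols=2)
```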

3.2. Fine-Tuning

The easiest way to train CNNs with a limited amount of data is to use one of the well-known pre-trained architectures. Typically, models are initialized with weights pre-trained on ImageNet and fine-tuned on histopathological images. Papers using this approach are summarized in Table 1. In [14], the authors fine-tuned VGGNet [15], ResNet [16], and InceptionV4 [17] models to estimate the probability that small patches (100 × 100 pixels) extracted from WSIs of 23 cancer types are tumor-infiltrating lymphocyte (TIL)-positive or TIL-negative. To evaluate region-level classification, they extracted larger super-patches (800 × 800 pixels) and annotated them with three categories (Low TIL, Medium TIL, or High TIL) based on the ratio of TIL-positive area. To predict the category, each super-patch was divided into an 8 × 8 grid, and each square (a 100 × 100 pixel patch) was classified as TIL-positive or TIL-negative. The correlation between the CNN score (the number of positive patches in a super-patch) and the pathologists’ annotations was then assessed. In [18], the authors developed a deep learning-based six-type classifier to identify a wider spectrum of lung lesions, including lung cancer. They also included pulmonary tuberculosis and organizing pneumonia, which often need to be surgically inspected to be differentiated from cancer. EfficientNet [19] and ResNet were employed for patch-level classification. To aggregate patch predictions into a slide-level classification, two methods were compared: majority voting and mean pooling. Moreover, a two-stage aggregation was implemented to prioritize cancer tissue in slides.
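The two slide-level aggregation rules compared in [18], and the super-patch score of [14], can be sketched as follows. This is a minimal illustration under stated assumptions: patch outputs are per-class probability lists, ties in the majority vote are broken by first occurrence, and all numbers are invented. The two-stage, cancer-prioritizing aggregation of [18] is not reproduced because its details are not given here.

```python
from collections import Counter

def majority_vote(patch_probs):
    """Slide label = most frequent patch-level argmax class."""
    votes = [max(range(len(p)), key=p.__getitem__) for p in patch_probs]
    return Counter(votes).most_common(1)[0][0]

def mean_pooling(patch_probs):
    """Slide label = argmax of the class probabilities averaged over all patches."""
    n_classes = len(patch_probs[0])
    means = [sum(p[c] for p in patch_probs) / len(patch_probs) for c in range(n_classes)]
    return max(range(n_classes), key=means.__getitem__)

def til_super_patch_score(patch_is_positive):
    """CNN score of an 800 x 800 super-patch: number of TIL-positive
    100 x 100 patches in its 8 x 8 grid."""
    if len(patch_is_positive) != 8 or any(len(row) != 8 for row in patch_is_positive):
        raise ValueError("expected an 8 x 8 grid of 0/1 patch predictions")
    return sum(sum(row) for row in patch_is_positive)

# Two uncertain patches favour class 0, one confident patch favours class 1.
patch_probs = [
    [0.40, 0.35, 0.25],
    [0.40, 0.35, 0.25],
    [0.10, 0.85, 0.05],
]
# Hypothetical super-patch with 10 TIL-positive patches.
grid = [[1 if r * 8 + c < 10 else 0 for c in range(8)] for r in range(8)]
```

With these invented values the two rules disagree: `majority_vote(patch_probs)` returns class 0, while `mean_pooling(patch_probs)` returns class 1, because averaging lets one confident patch outweigh two uncertain ones.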

In [20], the authors proposed a three-step AI-based screening method for lymph node metastases. First, they trained a segmentation model to extract lymph node tissue from the WSI and divided it into patches. Next, they used a fine-tuned Xception model to classify the patches as metastasis-positive or metastasis-negative. Finally, the presence or absence of two connected patches classified as positive determined the final result for the WSI. In [21], the authors compared the accuracy of stand-alone VGG-16 and VGG-19 models with that of ensemble models combining both architectures in classifying breast cancer histopathological images as carcinoma or non-carcinoma. In [22], the authors compared the performance of the VGG19 architecture with weakly supervised learning methods in classifying ovarian carcinoma histotypes. The authors of [23] used a modified EfficientNetV2 architecture on images from the BreakHis dataset to classify lesions as benign or malignant and to further divide them into eight subtypes. Similarly, Xception was employed in [24] to subtype breast cancer into four categories. Binary subtype classification of eyelid carcinoma was performed in [25]: the authors used DenseNet-161 to make a prediction for every patch in a WSI and then used a patch voting strategy to decide the WSI subtype. In [26], the authors used AlexNet [27], GoogLeNet [28], and VGG-16 to detect histopathology images containing cancer cells and to classify ovarian cancer grade. Because neural networks behave like black-box models, the authors employed the Grad-CAM method to demonstrate that the CNN models attended to cancer cell organization patterns when differentiating tumor images of different grades. Grad-CAM was also employed in [29] to provide interpretability and an approximate visual diagnosis when presenting the model’s results to pathologists. Their model consisted of three neural networks fine-tuned on a custom dataset to classify H&E-stained tissue patches into five types of liver lesions, cirrhosis, and nearly normal tissue. A decision algorithm consisting of three networks was also proposed in [30] to detect odontogenic cyst recurrence using binary classifiers: the first two models made predictions, and if their predictions did not match, a third model was loaded to obtain the final decision. Another example is [31], where Grad-CAM was used to visualize the classification results of the VGG16 network in grading non-invasive bladder carcinoma.
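The two decision rules described above, the slide-level rule of [20] and the three-model cascade of [30], are simple enough to sketch directly. The models are passed in as hypothetical callables returning 0 or 1, and "connected" is assumed to mean 4-neighbour adjacency on the patch grid; the source does not specify the connectivity.

```python
def three_model_decision(model_a, model_b, model_c, x):
    """Cascade of binary classifiers: run the first two models and consult
    the third only when they disagree."""
    pred_a, pred_b = model_a(x), model_b(x)
    if pred_a == pred_b:
        return pred_a
    return model_c(x)  # tie-breaker, loaded and run only when needed

def has_two_connected_positives(grid):
    """Flag a WSI as positive if at least two adjacent patches (4-neighbourhood,
    an assumption) are classified as metastasis-positive."""
    rows, cols = len(grid), len(grid[0])
    for r in range(rows):
        for c in range(cols):
            if grid[r][c]:
                # Checking the right and lower neighbours covers every adjacent pair once.
                if (r + 1 < rows and grid[r + 1][c]) or (c + 1 < cols and grid[r][c + 1]):
                    return True
    return False

isolated = [[1, 0, 0], [0, 0, 1], [0, 0, 0]]   # two positives, not adjacent
connected = [[0, 1, 0], [0, 1, 0], [0, 0, 0]]  # two vertically adjacent positives
```

Because all three classifiers are binary, using the third model's output whenever the first two disagree gives the same result as a majority vote over all three, while saving the cost of running the third model in the agreeing cases.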

Hematoxylin and eosin (H&E) staining is considered the gold standard for evaluating many cancer types. However, it provides only basic morphological information. In clinical practice, immunohistochemical (IHC) staining is often employed to obtain molecular information, since it can visualize the expression of specific proteins (e.g., Ki67) on the cell membrane or in the nucleus. This approach is referred to as double staining. Many recent studies have shown that there is a correlation between H&E and IHC staining [32,33,34].

In [35], the authors addressed the problem of double staining in determining the number of Ki67-positive cells for cancer treatment. They used matching pairs of IHC- and H&E-stained images and fine-tuned ResNet-18 for cell-level classification on the H&E images. To create a heat map, they then transformed the CNN into a fully convolutional network without fully connected layers. As a result, the fine-tuned ResNet-18 could take a whole WSI as input and produce a heat map as output.
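A common way to turn a patch classifier into a fully convolutional network is to reinterpret the final fully connected layer as a 1 × 1 convolution, so that the same weights are applied at every spatial position of the last feature map. The sketch below assumes a head made of global average pooling followed by one linear layer (the layout of the standard ResNet-18); it illustrates the general technique and is not necessarily how the authors of [35] implemented the conversion. All feature values and weights are invented.

```python
def linear(features, weights, biases):
    """Fully connected layer: one score per class from a channel vector."""
    return [sum(w * f for w, f in zip(w_row, features)) + b for w_row, b in zip(weights, biases)]

def global_average_pool(feature_map):
    """feature_map[channel][row][col] -> one mean value per channel."""
    return [sum(sum(r) for r in ch) / (len(ch) * len(ch[0])) for ch in feature_map]

def as_1x1_convolution(feature_map, weights, biases):
    """Apply the FC weights at every spatial position (a 1 x 1 convolution),
    giving a class-score map[class][row][col] instead of a single vector."""
    n_rows, n_cols = len(feature_map[0]), len(feature_map[0][0])
    score_map = [[[0.0] * n_cols for _ in range(n_rows)] for _ in weights]
    for r in range(n_rows):
        for c in range(n_cols):
            pixel_features = [ch[r][c] for ch in feature_map]
            for k, score in enumerate(linear(pixel_features, weights, biases)):
                score_map[k][r][c] = score
    return score_map

# Hypothetical last-layer feature map: 2 channels over a 2 x 3 spatial grid.
fm = [
    [[1.0, 2.0, 0.0], [0.5, 1.5, 3.0]],
    [[0.0, 1.0, 2.0], [1.0, 0.0, 0.5]],
]
W = [[1.0, -1.0], [0.5, 2.0]]  # 2 classes, e.g. Ki67-positive / Ki67-negative
b = [0.1, -0.2]
patch_logits = linear(global_average_pool(fm), W, b)  # original classifier output
score_map = as_1x1_convolution(fm, W, b)              # spatial "heat map" of scores
```

Because pooling and the linear layer are both linear, averaging `score_map` over all positions recovers `patch_logits` exactly; the score map is therefore a spatially resolved version of the patch prediction, and on a larger input it simply grows with the image.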

In [36], the authors proposed a modified Xception network called HE-HER2Net by adding global average pooling, batch normalization, dropout, and dense layers with a Swish activation function. The network was designed to classify H&E images into four categories according to human epidermal growth factor receptor 2 (HER2) status, from 0 to 3+. In addition to a routine model evaluation, the authors compared their modified network with other existing architectures and claimed that HE-HER2Net surpassed all existing models in terms of accuracy, precision, recall, and AUC score.
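The layer types added in HE-HER2Net can be illustrated with a small inference-time classification head. The source lists only the layer types, so the order (global average pooling, batch normalization, a Swish dense layer, dropout, then a four-way softmax), the layer sizes, and all parameter values below are assumptions made for illustration; in the real network this head sits on top of Xception feature maps.

```python
import math

HER2_CLASSES = ["0", "1+", "2+", "3+"]

def swish(z):
    """Swish activation: z * sigmoid(z)."""
    return z * (1.0 / (1.0 + math.exp(-z)))

def global_average_pool(feature_map):
    """feature_map[channel][row][col] -> per-channel mean."""
    return [sum(sum(r) for r in ch) / (len(ch) * len(ch[0])) for ch in feature_map]

def batch_norm(x, mean, var, gamma, beta, eps=1e-3):
    """Batch normalization in inference mode, using stored running statistics."""
    return [g * (xi - m) / math.sqrt(v + eps) + bt
            for xi, m, v, g, bt in zip(x, mean, var, gamma, beta)]

def dense(x, weights, biases, activation=lambda z: z):
    """Fully connected layer with an optional activation (identity by default)."""
    return [activation(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(weights, biases)]

def softmax(logits):
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    s = sum(exps)
    return [e / s for e in exps]

def her2_head(feature_map, params):
    """Assumed order: GAP -> BatchNorm -> Dense(Swish) -> Dropout -> Dense -> softmax."""
    x = global_average_pool(feature_map)
    x = batch_norm(x, *params["bn"])
    x = dense(x, *params["hidden"], activation=swish)
    # Dropout only zeroes random units during training; at inference it is the identity.
    return softmax(dense(x, *params["out"]))

# Hypothetical 2-channel backbone output and hand-set parameters.
fm = [[[0.2, 0.4], [0.6, 0.8]], [[1.0, 0.0], [0.5, 0.5]]]
params = {
    "bn": ([0.5, 0.5], [0.1, 0.1], [1.0, 1.0], [0.0, 0.0]),  # mean, var, gamma, beta
    "hidden": ([[1.0, -0.5], [0.3, 0.8], [-0.7, 0.2]], [0.0, 0.1, -0.1]),
    "out": ([[0.5, -0.2, 0.1], [0.2, 0.4, -0.3], [-0.1, 0.3, 0.6], [0.0, -0.5, 0.4]],
            [0.0, 0.0, 0.0, 0.0]),
}
probs = her2_head(fm, params)
predicted = HER2_CLASSES[probs.index(max(probs))]
```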

To produce accurate models capable of generalization, it is essential to obtain large amounts of diverse data. Typically, this is addressed by pooling all necessary data in a centralized location. However, given the nature of medical data, this approach faces many obstacles regarding privacy and data ownership, as well as various regulatory policies (e.g., the European Union's General Data Protection Regulation (GDPR) [37]). The authors of [38] simulated a federated learning (FL) environment to train a deep learning model that classifies cells and nuclei to identify TILs in WSIs. They generated a dataset from WSIs of cancers from 12 anatomical sites and partitioned it into eight different nodes. To evaluate the performance of FL, they also trained a CNN using a centralized approach and compared the results. The study showed that the FL approach achieved performance similar to that of the model trained on data pooled at a centralized location.
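The core idea of federated learning, that nodes share model parameters rather than patient data, can be sketched with one round of federated averaging (FedAvg). FedAvg is assumed here as a common aggregation rule; the source does not state which rule [38] used. Parameters are flattened into plain lists, the gradients are invented, and only three nodes are shown instead of eight for brevity.

```python
def local_update(weights, gradient, lr=0.1):
    """One local SGD step, computed on a node's private data; only the
    resulting weights leave the node."""
    return [w - lr * g for w, g in zip(weights, gradient)]

def federated_average(node_weights, node_sizes):
    """FedAvg-style aggregation: average each parameter across nodes,
    weighting every node by its number of local training samples."""
    total = sum(node_sizes)
    n_params = len(node_weights[0])
    return [sum(w[i] * n for w, n in zip(node_weights, node_sizes)) / total
            for i in range(n_params)]

# One communication round with three hypothetical nodes.
global_weights = [0.5, -0.5]
local_gradients = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]  # computed on-site
node_sizes = [100, 300, 100]                               # local sample counts
local_models = [local_update(global_weights, g) for g in local_gradients]
new_global = federated_average(local_models, node_sizes)
```

In a full training run, `new_global` would be sent back to every node and the round repeated; the centralized baseline in [38] corresponds to training the same model on the union of all nodes' data.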

Table 1.

Summary of fine-tuning papers.

| Reference | Cancer Types | Staining | Dataset | Neural Networks in Models | Method |
|---|---|---|---|---|---|
| Abousamra et al. (2022) [14] | 23 cancer types | H&E | The Cancer Genome Atlas (TCGA) | VGG-16, ResNet-34, InceptionV4 | Patch-level classification of tumor-infiltrating lymphocytes (TILs) |
| Yang et al. (2021) [18] | Lung cancer | H&E | Custom dataset of 1271 WSIs and 422 WSIs from TCGA | ResNet-50, EfficientNet-B5 | Six-type classification of lung lesions, including pulmonary tuberculosis and organizing pneumonia |
| Hameed et al. (2020) [21] | Breast cancer | H&E | Custom dataset of 544 WSIs | VGG-16, VGG-19 | Ensemble of neural networks to classify carcinoma and non-carcinoma images |
| Yu et al. (2020) [26] | Ovarian cancer | H&E | TCGA | AlexNet, GoogLeNet, VGG-16 | Identification of cancerous regions and classification of grades |
| Liu et al. (2020) [35] | Different types of cancer | H&E, IHC (Ki67) | Custom dataset from 300 regions of interest | ResNet-18 | Classification of Ki67-positive and Ki67-negative cells |
| Baid et al. (2022) [38] | 12 types | H&E | TCGA | VGG-16 | Federated learning for classification of tumor-infiltrating lymphocytes |
| Cheng et al. (2022) [29] | Liver cancer | H&E | Custom dataset | ResNet50, InceptionV3, Xception | Ensemble of three networks pre-trained on ImageNet to differentiate hepatocellular nodular lesions (5 types), nodular cirrhosis, and nearly normal liver tissue |
| Shovon et al. (2022) [36] | Breast cancer | H&E | BCI dataset | Modified Xception | Four-class HER2 classification with a modified Xception model pre-trained on ImageNet |
| Rao et al. (2022) [30] | Odontogenic cysts | H&E | Custom dataset | Inception-V3, DenseNet-121, Inception-ResNet-V2 | Binary classification of cyst recurrence based on a decision algorithm consisting of three models |
| Farahani et al. (2022) [22] | Ovarian cancer | H&E | Custom dataset | VGG19 | Comparison of four models for ovarian carcinoma histotype classification |
| Sarker et al. (2023) [23] | Breast cancer | H&E | BreakHis dataset | Modified EfficientNetV2 | Binary classification of malignant and benign tissue and multi-class subtyping using fused mobile inverted bottleneck convolutions and mobile inverted bottleneck convolutions with a dual squeeze-and-excitation network, with EfficientNetV2 as the backbone |
| Luo et al. (2022) [25] | Eyelid carcinoma | H&E | Custom dataset | DenseNet161 | Differential diagnosis of eyelid basal cell carcinoma and sebaceous carcinoma based on patch predictions by DenseNet161 and WSI-level differentiation by an average-probability integration module |
| Mundhada et al. (2023) [31] | Bladder cancer | H&E | Custom dataset | VGG16 | Grading of non-invasive carcinoma |
| Khan et al. (2023) [20] | Breast and colon cancer | H&E | PatchCamelyon | Xception | Segmentation of lymph node tissue with subsequent classification to detect metastases |
| Hameed et al. (2022) [24] | Breast cancer | H&E | Colsanitas dataset | Xception | Xception networks used as feature extractors to classify breast cancer into four categories: normal tissue, benign lesion, in situ carcinoma, and invasive carcinoma |

3.3. Training from Scratch

As stated above, fine-tuning is a promising method for training deep neural networks. However, it can only be applied to well-known architectures for which pre-trained weights are available; a custom CNN architecture must be trained from scratch. Table 2 summarizes studies in which neural networks were trained from scratch. In [39], the authors proposed a CNN-based method with residual blocks (ResNet), referred to as DeepLRHE, to predict lung cancer recurrence and the risk of metastasis. Later, in [40], the authors established the DeepIMHL model, consisting of a CNN and ResNet, to predict mutated genes as biomarkers for targeted drug therapy of lung cancer. The authors of [41] trained and optimized EfficientNet models on images of non-Hodgkin lymphoma and evaluated their potential to classify tumor-free reference lymph nodes, nodal small lymphocytic lymphoma/chronic lymphocytic leukemia, and nodal diffuse large B-cell lymphoma. In [42], the authors proposed three ResNet architectures, differing in the construction of their residual blocks, and trained them from scratch. Their proposed model achieved accuracy comparable to other state-of-the-art approaches in classifying oral cancer histological images into three stages. To classify kidney cancer subtypes, the authors of [43] developed an ensemble-pyramidal model consisting of three CNNs that process images of different sizes. The authors of [44] demonstrated that CNN-based DL can predict the gBRCA mutation status from H&E-stained WSIs in breast cancer. According to the researchers in [45], a CNN can be employed to differentiate non-squamous non-small cell lung cancer from squamous cell carcinoma. To classify a tumor slide, they pooled tile-level information using a max-pooling strategy. Moreover, they added a quality check that applied a threshold to the predictions so that only tiles with high prediction confidence were selected. Additionally, to improve the prediction, they used a virtual tissue microarray (a circular region around the centroid of the pathologist’s hand-drawn tumor annotations) instead of the whole WSI.
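The thresholded max-pooling aggregation described for [45] can be sketched as follows. The threshold value of 0.9, the per-class max over tiles, and the class names are assumptions for illustration; the source does not give the exact threshold or pooling details.

```python
def classify_slide(tile_probs, classes, threshold=0.9):
    """Quality check, then max pooling:
    1. keep only confident tiles (top class probability >= threshold, an assumed value);
    2. max-pool each class probability over the kept tiles;
    3. return the class with the highest pooled probability."""
    confident = [p for p in tile_probs if max(p) >= threshold]
    if not confident:
        return None  # no tile passed the quality check
    pooled = [max(p[c] for p in confident) for c in range(len(classes))]
    return classes[pooled.index(max(pooled))]

classes = ["non-squamous NSCLC", "squamous cell carcinoma"]
# Hypothetical per-tile probabilities for one slide.
tiles = [[0.55, 0.45], [0.95, 0.05], [0.08, 0.92], [0.97, 0.03], [0.60, 0.40]]
slide_label = classify_slide(tiles, classes)
```

Here the two low-confidence tiles are discarded before pooling; raising `threshold` trades coverage for reliability, and a slide may end up unclassified if no tile is confident enough.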

To compare the performance of pre-trained networks with that of custom networks trained from scratch, the researchers in [46] used images of three cancer types: melanoma, breast cancer, and neuroblastoma. Unlike other studies that use patches, the authors applied simple linear iterative clustering (SLIC) to segment images into superpixels, which group together similar neighboring pixels, as shown in Figure 4. These superpixels were then classified into multiple tissue categories specific to each cancer type. To make WSI-level predictions, they used several specific quantification metrics, such as the stroma-to-tumor ratio. Although the custom network achieved comparable results, the pre-trained networks performed better on all three cancer types. A similar comparison was carried out in [47] for subtype classification in lung cancer biopsy slides. There, the results showed that a CNN built from scratch and tailored to the specific pathological task could outperform fine-tuned pre-trained CNNs.
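A WSI-level quantification metric such as the stroma-to-tumor ratio can be computed from classified superpixels. The sketch below assumes the ratio is taken over total pixel areas and that each superpixel is represented as a (predicted class, area) pair; the exact definition used in [46] may differ, and the numbers are invented.

```python
def area_by_class(superpixels):
    """Sum the pixel area of classified superpixels per tissue class."""
    totals = {}
    for tissue_class, area in superpixels:
        totals[tissue_class] = totals.get(tissue_class, 0) + area
    return totals

def stroma_to_tumor_ratio(superpixels):
    """Ratio of total stroma area to total tumor area (area-based definition assumed)."""
    totals = area_by_class(superpixels)
    tumor = totals.get("tumor", 0)
    if tumor == 0:
        raise ValueError("slide contains no tumor superpixels")
    return totals.get("stroma", 0) / tumor

# Hypothetical classified superpixels: (predicted class, area in pixels).
superpixels = [("tumor", 1200), ("stroma", 300), ("tumor", 800), ("stroma", 500), ("fat", 400)]
ratio = stroma_to_tumor_ratio(superpixels)
```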

A comparison of training from scratch versus transfer learning was performed in [48]. The authors compared three approaches for training the VGG16 network: training from scratch, transfer learning as a feature extractor, and fine-tuning on images of breast cancer to detect Invasive Ductal Carcinoma. According to the results, the model trained from scratch achieved better results in terms of accuracy (0.85). However, using transfer learning, they were able to train a comparable model (accuracy 0.81) ten times faster. Furthermore, among the transfer learning approaches, transfer learning via feature extraction (accuracy 0.81), which involved retraining some of the convolutional blocks, yielded better results in less time compared to transfer learning via fine-tuning (accuracy 0.51).

Table 2.

Summary of papers training neural networks from scratch.

| Reference | Cancer Types | Staining | Dataset | Neural Networks in Models | Method |
|---|---|---|---|---|---|
| Wu et al. (2020) [39] | Lung cancer | H&E | 211 samples from TCGA | Custom CNN with residual blocks | Prediction of lung cancer recurrence |
| Huang et al. (2021) [40] | Lung cancer | H&E | TCGA | Custom CNN with residual blocks | Identification of lung cancer biomarkers |
| Steinbuss et al. (2021) [41] | Blood cancer | H&E | Custom dataset from 629 patients | EfficientNet | Classification of tumor-free lymph nodes, nodal small lymphocytic lymphoma/chronic lymphocytic leukemia, and nodal diffuse large B-cell lymphoma |
| Panigrahi et al. (2022) [42] | Oral cancer | H&E | Custom dataset | Three ResNet architectures | Classification into 3 grades |
| Wang et al. (2021) [44] | Breast cancer | H&E | Custom dataset of 222 images | ResNet-18 | Prediction of BRCA gene mutations |
| Le Page et al. (2021) [45] | Lung cancer | H&E | Custom dataset of 197 images and 60 images from TCGA | InceptionV3 | Classification of patches (tiles) into cancer subtypes; final case classification by majority vote or highest-probability class |
| Zormpas-Petridis et al. (2021) [46] | Melanoma, breast cancer, and childhood neuroblastoma | H&E | Custom dataset | Custom CNN | Classification of melanoma (tumor tissue, stroma, clusters of lymphocytes, normal epidermis, fat, and empty/white space); breast cancer (tumor, necrosis, stroma, clusters of lymphocytes, fat, and lumen/empty space); and neuroblastoma (undifferentiated neuroblasts, tissue damage (necrosis/apoptosis), areas of differentiation, clusters of lymphocytes, hemorrhage, muscle, kidney, and empty/white space) |
| Abdolahi et al. (2020) [48] | Breast cancer | H&E | Kaggle | Custom CNN, VGG-16 | Classification of invasive ductal carcinoma |
| Yang et al. (2022) [47] | Lung cancer | H&E | Custom dataset | Custom CNN | Comparison of lung cancer classification by fine-tuned models and models trained from scratch |
| Abdeltawab et al. (2022) [43] | Kidney cancer | H&E | Custom dataset | Custom CNN | Ensemble-pyramidal deep learning model of three CNNs processing different image sizes to differentiate 4 tissue subtypes |

Figure 4.

WSI image segmentation using the SLIC superpixels algorithm. Reprinted from [46], with permission according to Creative Commons Attribution License.

3.4. Multi-Stage Classification

In [49], scholars tackled the complex problem of computer-aided disease diagnosis by designing a two-stage system to determine the Tumor Mutation Burden (TMB) status, which is an important biomarker for predicting the response to immunotherapy in lung cancer. For the first stage, they developed a CNN based on InceptionV3 [50] to classify known histologic features for individual patches across H&E-stained WSIs. In the second stage, the patch-level CNN predictions were aggregated over the entire slide and combined with clinical features such as smoking status, age, stage, and sex to classify the TMB status. The final model was obtained by ensembling 10 independently trained networks.
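The two-stage structure of [49] can be sketched as follows: stage-1 patch predictions are summarized into slide-level features, concatenated with clinical variables, and scored by an ensemble whose probabilities are averaged. The aggregation rule (per-class patch fractions), the clinical encoding, and the toy logistic "models" are all hypothetical stand-ins; the source states only that predictions were aggregated, combined with clinical features, and ensembled over 10 networks.

```python
import math

def histology_fractions(patch_classes, n_classes):
    """Stage-2 slide features: fraction of patches assigned to each histologic class."""
    counts = [0] * n_classes
    for c in patch_classes:
        counts[c] += 1
    return [n / len(patch_classes) for n in counts]

def slide_feature_vector(fractions, smoking, age, stage, sex):
    """Concatenate histology fractions with clinical covariates (encoding is an assumption)."""
    return fractions + [float(smoking), age / 100.0, float(stage), float(sex)]

def make_toy_model(bias):
    """Hypothetical stand-in for one trained stage-2 network: a logistic score."""
    return lambda features: 1.0 / (1.0 + math.exp(-(sum(features) + bias)))

def ensemble_probability(models, features):
    """Average the TMB-high probability over independently trained models."""
    return sum(model(features) for model in models) / len(models)

# Stage-1 output (hypothetical): histologic class index predicted for each patch.
patch_classes = [0, 1, 1, 2, 1, 0, 2, 1]
features = slide_feature_vector(histology_fractions(patch_classes, n_classes=3),
                                smoking=1, age=64, stage=2, sex=0)
models = [make_toy_model(bias=-3.0 + 0.1 * i) for i in range(10)]  # 10 ensemble members
p_tmb_high = ensemble_probability(models, features)
```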

In [51], the authors proposed a diagnostic framework for generating a whole-case report consisting of the detection of renal cancer regions, classification of cancer subtypes, and cancer grades. From every stain-normalized WSI, patches were selected from tumor and non-tumor regions to form a dataset. For tumor region classification, they fine-tuned several different architectures and identified InceptionV3 as the most suitable one. Thus, they also used this architecture for the remaining tasks. Patches classified as containing a tumor were further classified into three tumor subtypes and four grade classes.

CNNs have proven to be successful classifiers in histology; nevertheless, they can also be used in conjunction with other machine learning (ML) classifiers. The authors of [52] developed a CNN model for the automated classification of glioma (brain tumor) pathology images into six subtypes. The images are first passed through the CNN to obtain patch-level categories. These patch labels are then passed through a hierarchical decision tree that makes a patient-level diagnosis based on the amounts and proportions of tumor types. The output thus includes both patch-level and patient-level labels. In [53], researchers developed a three-step approach to HER2 status classification of breast cancer tissue. First, they used a pre-trained UNet-based nucleus detector [54] to create patches. Second, they trained a CNN to identify tumor nuclei, which were further classified as HER2-positive or HER2-negative. In [55], the authors proposed a method for subtype differentiation of liver cancer based on a stacking classifier with deep neural networks as feature extractors. They used four pre-trained deep convolutional neural networks, ResNet50, VGG16, DenseNet201 [56], and InceptionResNetV2 [17], to extract deep features from histopathological images. After fusing the deep features extracted by the different architectures, they applied multiple ML classifiers (support vector machines (SVMs), k-nearest neighbors (k-NN), and random forest (RF)) to the fused feature vector to obtain the final classification.
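The fusion-then-classify pattern of [55] can be sketched with plain Python. Fusion is assumed to be simple concatenation of the per-backbone feature vectors, and k-NN is shown as one of the listed classifiers; an SVM or random forest would be trained on the same fused vectors. The tiny "deep features" and subtype names are invented; real backbone features have hundreds or thousands of dimensions. `math.dist` requires Python 3.8 or later.

```python
import math
from collections import Counter

def fuse(*feature_vectors):
    """Early fusion by concatenating deep features from several backbones (assumed rule)."""
    fused = []
    for v in feature_vectors:
        fused.extend(v)
    return fused

def knn_predict(train_x, train_y, query, k=3):
    """k-nearest-neighbour vote using Euclidean distance on fused feature vectors."""
    nearest = sorted(range(len(train_x)), key=lambda i: math.dist(train_x[i], query))[:k]
    return Counter(train_y[i] for i in nearest).most_common(1)[0][0]

# Hypothetical features: 2 values from "backbone A" and 1 from "backbone B" per image.
train_x = [
    fuse([0.1, 0.2], [0.0]),
    fuse([0.2, 0.1], [0.1]),
    fuse([0.9, 0.8], [1.0]),
    fuse([0.8, 0.9], [0.9]),
]
train_y = ["subtype A", "subtype A", "subtype B", "subtype B"]
query = fuse([0.85, 0.85], [0.95])
prediction = knn_predict(train_x, train_y, query)
```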

To predict 5-year overall survival in renal cell carcinoma, the authors of [57] fine-tuned a ResNet18 pre-trained on the ImageNet dataset. The CNN assigned a probability score to every patch, and to classify an entire WSI, the scores of all its patches were averaged and the WSI was classified based on this mean score. Beyond this single-stage classification, they also combined the CNN prediction with other clinicopathological variables in a multivariable logistic regression analysis. The authors of [58] presented an approach that combines a deep convolutional neural network as a patch-level classifier with XGBoost [59] as a WSI-level classifier to automatically classify H&E-stained breast digital pathology images into four classes: normal tissue, benign lesion, ductal carcinoma in situ, and invasive carcinoma. InceptionV3 was trained as the patch-level classifier to generate four predicted probability values per patch, which were combined into a heatmap. After comparing the classification accuracy of different classifiers, they chose XGBoost as the WSI-level classifier.

In [60], researchers trained a deep learning classifier and applied it to classify lung tumor samples into nine tissue classes. From the classifier output, they computed spatial features that describe the composition of the tumor microenvironment and combined them with clinical data to predict patient survival as well as tumor mutations. The authors of [61] claim to be the first to propose a CNN-based method for detecting pancreatic ductal adenocarcinoma in WSIs. They employed InceptionV3 as a patch-level classifier and combined the patch predictions into a malignancy probability heatmap. Statistical features were then extracted from the WSI heatmaps and used to train a Light Gradient Boosting Machine [62] for slide-level classification. A similar approach was taken by the researchers in [63], who performed both binary and multi-class classification on histological images of gastric cancer (GC). First, InceptionV3 was used both to classify patches as malignant or benign and to discriminate between normal mucosa, gastritis, and gastric cancer. Second, they divided all WSIs into two categories, "complete normal WSIs" and "mixture WSIs" containing gastritis or GC, and used 44 features extracted from the CNN-generated malignancy probability heatmaps to train and fine-tune an RF classifier.
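To make the heatmap-to-features step of [61] and [63] concrete, the sketch below condenses a patch-level malignancy probability heatmap into a handful of summary statistics. The specific features and the 0.5 threshold are illustrative assumptions; the original studies used their own feature sets (44 features in [63]), which are not reproduced here.

```python
from statistics import mean, pstdev


def heatmap_features(heatmap, threshold=0.5):
    """Summarize a 2D grid of patch malignancy probabilities as a flat feature dict."""
    values = [p for row in heatmap for p in row]
    if not values:
        raise ValueError("empty heatmap")
    positive = [p for p in values if p >= threshold]
    return {
        "mean_prob": mean(values),
        "max_prob": max(values),
        "std_prob": pstdev(values),
        "positive_fraction": len(positive) / len(values),
        "positive_mean_prob": mean(positive) if positive else 0.0,
    }


heatmap = [
    [0.1, 0.2, 0.9],
    [0.0, 0.8, 0.7],
]  # hypothetical 2 x 3 heatmap
print(heatmap_features(heatmap))
```

Each slide's feature dict would become one row of the training table for the slide-level model (LightGBM in [61], RF in [63]).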

Adding attention mechanisms to CNNs to improve performance has become increasingly popular. In [64], the Divide-and-Attention Network (DANet) was proposed for breast cancer classification and for grading both breast and colorectal cancers. This network has three inputs: the original pathological image, the nuclei image, and the non-nuclei image. The nuclei and non-nuclei images are derived from the output of a nuclei segmentation model. A similar approach was used in [65], where the authors developed the Nuclei-Guided Network (NGNet) for grading breast invasive ductal carcinoma. Unlike DANet, NGNet takes only two input images: the original image and the nuclei image obtained from segmentation.
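One plausible way to form the nuclei and non-nuclei inputs described for DANet and NGNet is to mask the original image with a binary nuclei segmentation map, as sketched below. This is an assumption for illustration, not the published preprocessing: pixels outside each region are simply set to black, and images are plain nested lists of RGB tuples so the example runs without extra libraries.

```python
BLACK = (0, 0, 0)


def split_by_mask(image, mask):
    """Split an RGB image into (nuclei_image, non_nuclei_image) using a 0/1 nuclei mask."""
    if len(image) != len(mask) or any(len(r) != len(m) for r, m in zip(image, mask)):
        raise ValueError("image and mask must have the same height and width")
    # Keep nuclei pixels in the first image and blank them out in the second.
    nuclei = [[px if m else BLACK for px, m in zip(row, mrow)]
              for row, mrow in zip(image, mask)]
    non_nuclei = [[BLACK if m else px for px, m in zip(row, mrow)]
                  for row, mrow in zip(image, mask)]
    return nuclei, non_nuclei


# Hypothetical 2 x 2 H&E patch and the mask a segmentation model might output for it.
image = [[(120, 60, 160), (230, 200, 220)],
         [(240, 210, 225), (110, 50, 150)]]
mask = [[1, 0],
        [0, 1]]
nuclei_img, non_nuclei_img = split_by_mask(image, mask)
print(nuclei_img)
print(non_nuclei_img)
```

Under this scheme, DANet would receive all three images (original, nuclei, non-nuclei), whereas NGNet would receive only the original and the nuclei image.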

Medulloblastoma (MB) is a malignant pediatric brain tumor that can be fatal [66]. In [67], the author proposed MB-AI-His, a combination of deep learning and machine learning methods for the automatic diagnosis of pediatric MB and the classification of its four subtypes. Diagnosis is performed at two levels. The first level classifies the images as normal or abnormal (binary classification level), while the second level classifies the abnormal images containing MB tumor into the four subtypes of childhood MB (multi-class classification level). Three pre-trained deep CNNs (ResNet-50, DenseNet-201, and MobileNet [68]) are used via transfer learning to extract spatial features. These features are combined with time-frequency features extracted using the discrete wavelet transform (DWT). Finally, five popular classifiers, including SVM, k-NN, linear discriminant analysis, and ensemble subspace discriminant, are applied to the combined features to perform the classification. A similar approach was introduced by the same author in [69], which focuses on the multi-class classification of the four childhood MB subtypes. This task is much more complicated than binary classification, and few studies have investigated it. The pipeline consists of spatial DL feature extraction from 10 fine-tuned CNN architectures, feature fusion and reduction using the DWT, and subsequent feature selection. Classification is performed by a bidirectional long short-term memory classifier. All papers using multi-stage classification are listed in Table 3.
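The DWT-based reduction step mentioned for [67] and [69] can be illustrated with a single-level Haar transform applied to a fused feature vector: keeping only the approximation coefficients halves the vector length. The choice of the Haar wavelet, a single decomposition level, and discarding the detail coefficients are assumptions for this sketch; the wavelet settings of the original pipelines are not given here.

```python
import math


def haar_dwt(signal):
    """One-level orthonormal Haar DWT of an even-length vector -> (approximation, detail)."""
    if len(signal) % 2:
        raise ValueError("this sketch expects an even-length vector")
    scale = 1 / math.sqrt(2)
    pairs = list(zip(signal[0::2], signal[1::2]))
    approx = [(a + b) * scale for a, b in pairs]  # low-pass: local averages
    detail = [(a - b) * scale for a, b in pairs]  # high-pass: local differences
    return approx, detail


fused = [4.0, 2.0, 6.0, 6.0]  # hypothetical fused CNN features
approx, detail = haar_dwt(fused)
print([round(c, 4) for c in approx], [round(c, 4) for c in detail])
```

Because this Haar transform is orthonormal, the squared norm of the input equals that of all coefficients together; the reduction comes only from keeping the approximation half.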

Table 3.

Summary of studies using multi-stage classification.


| Reference | Cancer Types | Staining | Dataset | Neural Networks in Models | Method |
|---|---|---|---|---|---|
| Sadhwani et al. (2021) [49] | Lung cancer | H&E | TCGA and custom dataset of 50 WSIs | Custom CNN | Multi-class classification into subtypes and binary classification of tumor mutation burden |
| Wu et al. (2021) [51] | Renal cell cancer (RCC) | H&E | 667 WSIs from TCGA + new RCC dataset of 632 WSIs | InceptionV3 | Identification of tumor regions and classification into tumor subtypes and different grades |
| Jin et al. (2021) [52] | Brain cancer | H&E | Slides of 323 patients from the Central Nervous System Disease Biobank | Custom CNN based on DenseNet | Classification into 5 subtypes of glioma |
| Anand et al. (2020) [53] | Breast cancer | H&E, IHC | Dataset from the University of Warwick and TCGA | Custom neural network | Identification of tumor patches and classification of HER2 status as positive or negative |
| Dong et al. (2022) [55] | Liver cancer | H&E | Custom dataset of 73 images | ResNet-50, VGG-16, DenseNet-201, InceptionResNetV2 | Classification of three differentiation states |
| Mi et al. (2021) [58] | Breast cancer | H&E | Custom dataset of 540 WSIs | InceptionV3 | Multi-class classification of normal tissue, benign lesion, ductal carcinoma in situ, and invasive carcinoma |
| Fu et al. (2021) [61] | Pancreatic cancer | H&E | Custom dataset of 231 WSIs | InceptionV3 | Classification of patches as cancerous or normal |
| Ma et al. (2020) [63] | Gastric cancer | H&E | Custom dataset of 763 WSIs | InceptionV3 | Classification of normal mucosa, chronic gastritis, and intestinal-type gastric cancer |
| Attallah (2021) [67] | Brain cancer | H&E | Custom dataset of 204 images | ResNet-50, DenseNet-201, MobileNet | Binary classification of normal vs. abnormal images and multi-class classification of 4 medulloblastoma subtypes |
| Attallah (2021) [69] | Brain cancer | H&E | Custom dataset of 204 images | 10 CNN architectures | Multi-class classification of 4 medulloblastoma subtypes |
| Yan et al. (2022) [64] | Breast and colorectal cancer | H&E | BACH dataset and datasets available from other publications | Xception | Breast cancer classification and colorectal and breast cancer grading with the Divide-and-Attention Network, using Xception as the backbone |
| Yan et al. (2022) [65] | Breast cancer | H&E | Custom dataset | NGNet | Grading of breast cancer using attention modules and segmentation; classification uses two images: the original image and the corresponding nuclei image |
| Rączkowski et al. (2022) [60] | Lung cancer | H&E | Custom dataset | ARA-CNN | CNN classification of patches into 9 tissue classes; prediction of tumor mutations from tissue prevalence and tumor microenvironment composition computed from the ARA-CNN output |
| Wessels et al. (2022) [57] | Kidney cancer | H&E | TCGA | ResNet18 | Pre-trained ResNet18 used to predict 5-year overall survival in renal cell carcinoma; the CNN-based classification was also an independent predictor in a multivariable clinicopathological model |

4. Discussion

The studies described in the previous section show that neural networks can be applied successfully, in many different ways, to a wide range of classification tasks in histology and to a variety of cancer types. DL has most commonly been applied to lung and breast cancer. Breast cancer is a leading cause of cancer-related deaths in women worldwide, and lung cancer was the second most commonly diagnosed cancer worldwide in 2020, behind female breast cancer [24,47]. From a histological point of view, the tasks in which neural networks were successfully applied have been divided into the following three groups: tissue types, grading, and biomarker classification. The articles mentioned in this review are arranged according to this categorization in Table 4.

Table 4.

Overview of all studies classified according to the application area.

4.1. Tissue Types

One of the most fundamental tasks in histology is the classification of tissue types. This task can be viewed from two perspectives. The first, and most basic, is to distinguish tumor tissue from other tissue types. This may involve a binary division into tumor and non-tumor tissue (as in [21]) or the multi-class detection of tumor, stroma, lymphocytes, fat, necrosis, and other tissues. In [46], the authors demonstrated that their SuperHistopath framework performed multi-class tissue classification in three different cancer types with high accuracy (98.8% in melanoma, 93.1% in breast cancer, and 98.3% in childhood neuroblastoma).

The second perspective is the classification of tumor tissue into cancer subtypes. This could be the distinction between benign and malignant tumors, between invasive and non-invasive carcinoma, or between various subtypes of a particular cancer type. Subtyping is an important part of determining a treatment plan; however, it often requires special IHC staining. Therefore, the ability to perform subtype classification directly from H&E images could be of great clinical benefit. The authors of [23] proposed a method for classifying breast tumors into eight subtypes, four benign (adenosis, fibroadenoma, phyllodes tumor, and tubular adenoma) and four malignant (ductal carcinoma, lobular carcinoma, mucinous carcinoma, and papillary carcinoma). They reported that their model compared favorably with the other state-of-the-art models considered in the study.

The two perspectives are not always clearly separable, and the classification of tissue type is often combined with the classification of tumor subtypes. This was demonstrated in [24], where breast tissue was categorized as normal tissue, benign lesion, in situ carcinoma, or invasive carcinoma. Another example is [29], where researchers obtained models with an accuracy of over 95% in classifying five types of liver lesions, cirrhosis, and nearly normal tissue.

4.2. Tissue Grading

Cancer grading has its origins in 1914, when the pathologist Albert Broders began collecting data showing that cancers of the same histologic type behaved differently. By the late 1930s, tumor grading was considered a state-of-the-art prognostic technique in scientific cancer care. Today, there are hundreds of grading schemes for various types of cancer [70]. Compared with subtype classification, grading of pathological images is considered a more fine-grained task [64,65].

Researchers in [42] used residual networks to grade images of oral squamous cell carcinoma, which accounts for about 90% of oral cancers. To demonstrate that deep learning can grade different cancer types, the authors of [64] developed a model with average classification accuracies of 95% and 91% for colorectal and breast cancer grading, respectively. Breast cancer grading was also addressed in [65].

4.3. Biomarker Classification

A biomarker is a biological molecule found in tissues that is a sign of a normal or abnormal process, or of a condition or disease such as cancer. Typically, biomarkers distinguish a person without disease from an affected patient. There is a tremendous variety of biomarkers, including proteins, antibodies, nucleic acids, gene expression, and others. In clinical practice, they can be used for multiple tasks, such as estimating the risk of disease, differential diagnosis, predicting response to therapy, and determining the prognosis of the disease [71].

In [36], the authors presented the HE-HER2Net architecture, which surpassed other common architectures in accuracy for the multi-class classification of HER2 status into four categories. In [40], researchers developed a CNN to predict mutated genes that are potential candidates for targeted-drug therapy in lung cancer. The model outputs the average probability for each lung cancer biomarker, and the highest accuracy achieved was 86.3%.

Ki67 is a nuclear protein that is present only in cells that are actively growing and dividing, which is typical of cancer cells. Therefore, Ki67 is sometimes considered a good marker of proliferation (a rapid increase in the number of cells) [72]. In [35], the authors fine-tuned a neural network to classify cell images as Ki67-positive, Ki67-negative, or background, achieving an accuracy of 93%.
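Cell-level predictions such as those in [35] are commonly summarized as a Ki67 labeling index, the percentage of positive cells among all counted cells. The sketch below computes it from a list of predicted labels while excluding background; the label strings are hypothetical, and this aggregation step is an illustration rather than part of the method reported in [35].

```python
from collections import Counter


def ki67_index(predictions):
    """Percentage of Ki67-positive cells among all classified cells; background is ignored."""
    counts = Counter(predictions)
    cells = counts["positive"] + counts["negative"]
    if cells == 0:
        raise ValueError("no cells were classified")
    return 100.0 * counts["positive"] / cells


labels = ["positive", "negative", "negative", "background", "positive", "negative"]
print(ki67_index(labels))  # prints 40.0
```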

5. Conclusions

This article presents a detailed survey of recent neural-network-based DL models for classification tasks in the analysis of histological images. The analysis of approximately 70 articles published in the last three years shows that automated processing and classification of histopathological images by deep learning methods have been applied to a wide range of histological tasks, such as tumor tissue classification or biomarker evaluation for treatment planning. The survey leads to several conclusions:

- Application Areas: Deep learning has been applied to several types of cancer (e.g., breast, lung, colon, brain, kidney) and has proven capable of assisting pathologists with visual tasks in the diagnosis and treatment of various diseases. The reviewed works fall into three groups of specific tasks: classification of tissue type, grading of specific tissue, and identification of the presence of biomarkers.

- Single- and Multi-Stage Approaches: Convolutional neural networks can be applied either as stand-alone classifiers or as feature extractors whose outputs are passed to another machine learning model that performs the final classification.

- Pre-Training: Training networks from scratch requires a large dataset and considerable computing time. Therefore, it is advisable to start by experimenting with well-known architectures pre-trained on ImageNet. If the results are not sufficient, a custom network can be designed and trained from scratch.

Author Contributions

Conceptualization, D.P. and I.C.; resources, D.P.; writing—original draft preparation, D.P.; writing—review and editing, D.P. and I.C.; visualization, D.P.; supervision, I.C.; funding acquisition, I.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Operational Program “Integrated Infrastructure” of the project “Integrated strategy in the development of personalized medicine of selected malignant tumor diseases and its impact on life quality”, ITMS code: 313011V446, co-financed by resources of European Regional Development Fund.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| AUC | Area Under Curve |
| BCI | Breast Cancer Immunohistochemical |
| DL | Deep Learning |
| CNNs | Convolutional Neural Networks |
| WSIs | Whole Slide Images |
| NNs | Neural Networks |
| TIL | Tumor Infiltrating Lymphocytes |
| H&E | Hematoxylin and Eosin |
| IHC | Immunohistochemical |
| FL | Federated Learning |
| GDPR | General Data Protection Regulation |
| SLIC | Simple Linear Iterative Clustering |
| TMB | Tumor Mutation Burden |
| ML | Machine Learning |
| HER2 | Human Epidermal Growth Factor Receptor 2 |
| SVM | Support Vector Machine |
| k-NN | k-Nearest Neighbors |
| RF | Random Forest |
| GC | Gastric Cancer |
| MB | Medulloblastoma |
| DWT | Discrete Wavelet Transform |
| RCC | Renal Cell Cancer |

References

- Pantanowitz, L. Digital images and the future of digital pathology: From the 1st Digital Pathology Summit, New Frontiers in Digital Pathology, University of Nebraska Medical Center, Omaha, Nebraska 14–15 May 2010. J. Pathol. Inform. 2010, 1, 15.

- Komura, D.; Ishikawa, S. Machine Learning Methods for Histopathological Image Analysis. Comput. Struct. Biotechnol. J. 2018, 16, 34–42.

- Srinidhi, C.L.; Ciga, O.; Martel, A.L. Deep neural network models for computational histopathology: A survey. Med. Image Anal. 2021, 67, 101813.

- Alzubi, J.; Nayyar, A.; Kumar, A. Machine Learning from Theory to Algorithms: An Overview. J. Phys. Conf. Ser. 2018, 1142, 012012.

- Jordan, M.I.; Mitchell, T.M. Machine learning: Trends, perspectives, and prospects. Science 2015, 349, 255–260.

- Litjens, G.; Kooi, T.; Bejnordi, B.E.; Setio, A.A.A.; Ciompi, F.; Ghafoorian, M.; van der Laak, J.A.; van Ginneken, B.; Sánchez, C.I. A survey on deep learning in medical image analysis. Med. Image Anal. 2017, 42, 60–88.

- Wang, M.; Lu, S.; Zhu, D.; Lin, J.; Wang, Z. A High-Speed and Low-Complexity Architecture for Softmax Function in Deep Learning. In Proceedings of the 2018 IEEE Asia Pacific Conference on Circuits and Systems (APCCAS), Chengdu, China, 26–30 October 2018; pp. 223–226.

- Ahmad, J.; Farman, H.; Jan, Z. Deep Learning Methods and Applications. In Deep Learning: Convergence to Big Data Analytics; Springer: Singapore, 2019; pp. 31–42.

- Yao, G.; Lei, T.; Zhong, J. A review of Convolutional-Neural-Network-based action recognition. Pattern Recognit. Lett. 2019, 118, 14–22.

- Dhillon, A.; Verma, G.K. Convolutional neural network: A review of models, methodologies and applications to object detection. Prog. Artif. Intell. 2019, 9, 85–112.

- Alzubaidi, L.; Zhang, J.; Humaidi, A.J.; Al-Dujaili, A.; Duan, Y.; Al-Shamma, O.; Santamaría, J.; Fadhel, M.A.; Al-Amidie, M.; Farhan, L. Review of deep learning: Concepts, CNN architectures, challenges, applications, future directions. J. Big Data 2021, 8, 53.

- O’Shea, K.; Nash, R. An Introduction to Convolutional Neural Networks. arXiv 2015, arXiv:1511.08458.

- Dimitriou, N.; Arandjelović, O.; Caie, P.D. Deep Learning for Whole Slide Image Analysis: An Overview. Front. Med. 2019, 6, 00264.

- Abousamra, S.; Gupta, R.; Hou, L.; Batiste, R.; Zhao, T.; Shankar, A.; Rao, A.; Chen, C.; Samaras, D.; Kurc, T.; et al. Deep Learning-Based Mapping of Tumor Infiltrating Lymphocytes in Whole Slide Images of 23 Types of Cancer. Front. Oncol. 2022, 11, 806603.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. Computational and Biological Learning Society. arXiv 2015, arXiv:1409.1556.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Los Alamitos, CA, USA, 27–30 June 2016; pp. 770–778.

- Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A. Inception-v4, Inception-ResNet and the Impact of Residual Connections on Learning. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; Volume 31.

- Yang, H.; Chen, L.; Cheng, Z.; Yang, M.; Wang, J.; Lin, C.; Wang, Y.; Huang, L.; Chen, Y.; Peng, S.; et al. Deep learning-based six-type classifier for lung cancer and mimics from histopathological whole slide images: A retrospective study. BMC Med. 2021, 19, 80.

- Tan, M.; Le, Q. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the 36th International Conference on Machine Learning, PMLR, Long Beach, CA, USA, 9–15 June 2019; Chaudhuri, K., Salakhutdinov, R., Eds.; JMLR: Cambridge, MA, USA, 2019; Volume 97, pp. 6105–6114.

- Khan, A.; Brouwer, N.; Blank, A.; Müller, F.; Soldini, D.; Noske, A.; Gaus, E.; Brandt, S.; Nagtegaal, I.; Dawson, H.; et al. Computer-assisted diagnosis of lymph node metastases in colorectal cancers using transfer learning with an ensemble model. Mod. Pathol. 2023, 36, 100118.

- Hameed, Z.; Zahia, S.; Garcia-Zapirain, B.; Javier Aguirre, J.; María Vanegas, A. Breast Cancer Histopathology Image Classification Using an Ensemble of Deep Learning Models. Sensors 2020, 20, 4373.

- Farahani, H.; Boschman, J.; Farnell, D.; Darbandsari, A.; Zhang, A.; Ahmadvand, P.; Jones, S.J.M.; Huntsman, D.; Köbel, M.; Gilks, C.B.; et al. Deep learning-based histotype diagnosis of ovarian carcinoma whole-slide pathology images. Mod. Pathol. 2022, 35, 1983–1990.

- Sarker, M.M.K.; Akram, F.; Alsharid, M.; Singh, V.K.; Yasrab, R.; Elyan, E. Efficient Breast Cancer Classification Network with Dual Squeeze and Excitation in Histopathological Images. Diagnostics 2023, 13, 103.

- Hameed, Z.; Garcia-Zapirain, B.; Aguirre, J.J.; Isaza-Ruget, M.A. Multiclass classification of breast cancer histopathology images using multilevel features of deep convolutional neural network. Sci. Rep. 2022, 12, 15600.

- Luo, Y.; Zhang, J.; Yang, Y.; Rao, Y.; Chen, X.; Shi, T.; Xu, S.; Jia, R.; Gao, X. Deep learning-based fully automated differential diagnosis of eyelid basal cell and sebaceous carcinoma using whole slide images. Quant. Imaging Med. Surg. 2022, 4166–4175.

- Yu, K.H.; Hu, V.; Wang, F.; Matulonis, U.A.; Mutter, G.L.; Golden, J.A.; Kohane, I.S. Deciphering serous ovarian carcinoma histopathology and platinum response by convolutional neural networks. BMC Med. 2020, 18, 236.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Proceedings of the Advances in Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; Pereira, F., Burges, C., Bottou, L., Weinberger, K., Eds.; Curran Associates, Inc.: New York, NY, USA, 2012; Volume 25.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Cheng, N.; Ren, Y.; Zhou, J.; Zhang, Y.; Wang, D.; Zhang, X.; Chen, B.; Liu, F.; Lv, J.; Cao, Q.; et al. Deep learning-based classification of hepatocellular nodular lesions on whole-slide histopathologic images. Gastroenterology 2022, 162, 1948–1961.e7.

- Rao, R.S.; Shivanna, D.B.; Lakshminarayana, S.; Mahadevpur, K.S.; Alhazmi, Y.A.; Bakri, M.M.H.; Alharbi, H.S.; Alzahrani, K.J.; Alsharif, K.F.; Banjer, H.J.; et al. Ensemble Deep-Learning-Based Prognostic and Prediction for Recurrence of Sporadic Odontogenic Keratocysts on Hematoxylin and Eosin Stained Pathological Images of Incisional Biopsies. J. Pers. Med. 2022, 12, 1220.

- Mundhada, A.; Sundaram, S.; Swaminathan, R.; D’ Cruze, L.; Govindarajan, S.; Makaram, N. Differentiation of urothelial carcinoma in histopathology images using deep learning and visualization. J. Pathol. Inform. 2023, 14, 100155.

- Naik, N.; Madani, A.; Esteva, A.; Keskar, N.S.; Press, M.F.; Ruderman, D.; Agus, D.B.; Socher, R. Deep learning-enabled breast cancer hormonal receptor status determination from base-level H&E stains. Nat. Commun. 2020, 11, 5727.

- Seegerer, P.; Binder, A.; Saitenmacher, R.; Bockmayr, M.; Alber, M.; Jurmeister, P.; Klauschen, F.; Müller, K.R. Interpretable Deep Neural Network to Predict Estrogen Receptor Status from Haematoxylin-Eosin Images. In Artificial Intelligence and Machine Learning for Digital Pathology: State-of-the-Art and Future Challenges; Holzinger, A., Goebel, R., Mengel, M., Müller, H., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 16–37.

- Rawat, R.R.; Ortega, I.; Roy, P.; Sha, F.; Shibata, D.; Ruderman, D.; Agus, D.B. Deep learned tissue “fingerprints” classify breast cancers by ER/PR/Her2 status from H&E images. Sci. Rep. 2020, 10, 7275.

- Liu, Y.; Li, X.; Zheng, A.; Zhu, X.; Liu, S.; Hu, M.; Luo, Q.; Liao, H.; Liu, M.; He, Y.; et al. Predict Ki-67 Positive Cells in H&E-Stained Images Using Deep Learning Independently From IHC-Stained Images. Front. Mol. Biosci. 2020, 7, 00183.

- Shovon, M.S.H.; Islam, M.J.; Nabil, M.N.A.K.; Molla, M.M.; Jony, A.I.; Mridha, M.F. Strategies for Enhancing the Multi-Stage Classification Performances of HER2 Breast Cancer from Hematoxylin and Eosin Images. Diagnostics 2022, 12, 2825.

- Voigt, P.; von dem Bussche, A. The EU General Data Protection Regulation (GDPR), 1st ed.; Springer International Publishing: Cham, Switzerland, 2017.

- Baid, U.; Pati, S.; Kurc, T.M.; Gupta, R.; Bremer, E.; Abousamra, S.; Thakur, S.P.; Saltz, J.H.; Bakas, S. Federated Learning for the Classification of Tumor Infiltrating Lymphocytes. arXiv 2022, arXiv:2203.16622.

- Wu, Z.; Wang, L.; Li, C.; Cai, Y.; Liang, Y.; Mo, X.; Lu, Q.; Dong, L.; Liu, Y. DeepLRHE: A deep convolutional neural network framework to evaluate the risk of lung cancer recurrence and metastasis from histopathology images. Front. Genet. 2020, 11, 768.

- Huang, K.; Mo, Z.; Zhu, W.; Liao, B.; Yang, Y.; Wu, F.X. Prediction of Target-Drug Therapy by Identifying Gene Mutations in Lung Cancer With Histopathological Stained Image and Deep Learning Techniques. Front. Oncol. 2021, 11, 642945.

- Steinbuss, G.; Kriegsmann, M.; Zgorzelski, C.; Brobeil, A.; Goeppert, B.; Dietrich, S.; Mechtersheimer, G.; Kriegsmann, K. Deep Learning for the Classification of Non-Hodgkin Lymphoma on Histopathological Images. Cancers 2021, 13, 2419.

- Panigrahi, S.; Bhuyan, R.; Kumar, K.; Nayak, J.; Swarnkar, T. Multistage classification of oral histopathological images using improved residual network. Math. Biosci. Eng. 2022, 19, 1909–1925.

- Abdeltawab, H.A.; Khalifa, F.A.; Ghazal, M.A.; Cheng, L.; El-Baz, A.S.; Gondim, D.D. A deep learning framework for automated classification of histopathological kidney whole-slide images. J. Pathol. Inform. 2022, 13, 100093.

- Wang, X.; Zou, C.; Zhang, Y.; Li, X.; Wang, C.; Ke, F.; Chen, J.; Wang, W.; Wang, D.; Xu, X.; et al. Prediction of BRCA Gene Mutation in Breast Cancer Based on Deep Learning and Histopathology Images. Front. Genet. 2021, 12, 661109.

- Le Page, A.L.; Ballot, E.; Truntzer, C.; Derangère, V.; Ilie, A.; Rageot, D.; Bibeau, F.; Ghiringhelli, F. Using a convolutional neural network for classification of squamous and non-squamous non-small cell lung cancer based on diagnostic histopathology HES images. Sci. Rep. 2021, 11, 23912.

- Zormpas-Petridis, K.; Noguera, R.; Ivankovic, D.K.; Roxanis, I.; Jamin, Y.; Yuan, Y. SuperHistopath: A Deep Learning Pipeline for Mapping Tumor Heterogeneity on Low-Resolution Whole-Slide Digital Histopathology Images. Front. Oncol. 2021, 10, 586292.

- Yang, J.W.; Song, D.H.; An, H.J.; Seo, S.B. Classification of subtypes including LCNEC in lung cancer biopsy slides using convolutional neural network from scratch. Sci. Rep. 2022, 12, 1830.

- Abdolahi, M.; Salehi, M.; Shokatian, I.; Reiazi, R. Artificial intelligence in automatic classification of invasive ductal carcinoma breast cancer in digital pathology images. Med. J. Islam. Repub. Iran 2020, 34, 140.

- Sadhwani, A.; Chang, H.W.; Behrooz, A.; Brown, T.; Auvigne-Flament, I.; Patel, H.; Findlater, R.; Velez, V.; Tan, F.; Tekiela, K.; et al. Comparative analysis of machine learning approaches to classify tumor mutation burden in lung adenocarcinoma using histopathology images. Sci. Rep. 2021, 11, 16605.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 2818–2826.

- Wu, J.; Zhang, R.; Gong, T.; Bao, X.; Gao, Z.; Zhang, H.; Wang, C.; Li, C. A Precision Diagnostic Framework of Renal Cell Carcinoma on Whole-Slide Images using Deep Learning. In Proceedings of the 2021 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Houston, TX, USA, 9–12 December 2021; pp. 2104–2111.

- Jin, L.; Shi, F.; Chun, Q.; Chen, H.; Ma, Y.; Wu, S.; Hameed, N.U.F.; Mei, C.; Lu, J.; Zhang, J.; et al. Artificial intelligence neuropathologist for glioma classification using deep learning on hematoxylin and eosin stained slide images and molecular markers. Neuro. Oncol. 2021, 23, 44–52.

- Anand, D.; Kurian, N.C.; Dhage, S.; Kumar, N.; Rane, S.; Gann, P.H.; Sethi, A. Deep Learning to Estimate Human Epidermal Growth Factor Receptor 2 Status from Hematoxylin and Eosin-Stained Breast Tissue Images. J. Pathol. Inform. 2020, 11, 19.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. arXiv 2015, arXiv:1505.04597.

- Dong, X.; Li, M.; Zhou, P.; Deng, X.; Li, S.; Zhao, X.; Wu, Y.; Qin, J.; Guo, W. Fusing pre-trained convolutional neural networks features for multi-differentiated subtypes of liver cancer on histopathological images. BMC Med. Inform. Decis. Mak. 2022, 22, 122.

- Huang, G.; Liu, Z.; van der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. arXiv 2016, arXiv:1608.06993.

- Wessels, F.; Schmitt, M.; Krieghoff-Henning, E.; Kather, J.N.; Nientiedt, M.; Kriegmair, M.C.; Worst, T.S.; Neuberger, M.; Steeg, M.; Popovic, Z.V.; et al. Deep learning can predict survival directly from histology in clear cell renal cell carcinoma. PLoS ONE 2022, 17, e0272656.

- Mi, W.; Li, J.; Guo, Y.; Ren, X.; Liang, Z.; Zhang, T.; Zou, H. Deep learning-based multi-class classification of breast digital pathology images. Cancer Manag. Res. 2021, 13, 4605–4617.

- Chen, T.; Guestrin, C. XGBoost. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, ACM, San Francisco, CA, USA, 13–17 August 2016.

- Rączkowski, Ł.; Paśnik, I.; Kukiełka, M.; Nicoś, M.; Budzinska, M.A.; Kucharczyk, T.; Szumiło, J.; Krawczyk, P.; Crosetto, N.; Szczurek, E. Deep learning-based tumor microenvironment segmentation is predictive of tumor mutations and patient survival in non-small-cell lung cancer. BMC Cancer 2022, 22, 1001.

- Fu, H.; Mi, W.; Pan, B.; Guo, Y.; Li, J.; Xu, R.; Zheng, J.; Zou, C.; Zhang, T.; Liang, Z.; et al. Automatic Pancreatic Ductal Adenocarcinoma Detection in Whole Slide Images Using Deep Convolutional Neural Networks. Front. Oncol. 2021, 11, 665929.

- Ke, G.; Meng, Q.; Finley, T.; Wang, T.; Chen, W.; Ma, W.; Ye, Q.; Liu, T.Y. LightGBM: A Highly Efficient Gradient Boosting Decision Tree. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: New York, NY, USA, 2017; Volume 30.

- Ma, B.; Guo, Y.; Hu, W.; Yuan, F.; Zhu, Z.; Yu, Y.; Zou, H. Artificial Intelligence-Based Multiclass Classification of Benign or Malignant Mucosal Lesions of the Stomach. Front. Pharmacol. 2020, 11, 572372.

- Yan, R.; Yang, Z.; Li, J.; Zheng, C.; Zhang, F. Divide-and-Attention Network for HE-Stained Pathological Image Classification. Biology 2022, 11, 982.

- Yan, R.; Ren, F.; Li, J.; Rao, X.; Lv, Z.; Zheng, C.; Zhang, F. Nuclei-Guided Network for Breast Cancer Grading in HE-Stained Pathological Images. Sensors 2022, 22, 4061.

- Grist, J.T.; Withey, S.; MacPherson, L.; Oates, A.; Powell, S.; Novak, J.; Abernethy, L.; Pizer, B.; Grundy, R.; Bailey, S.; et al. Distinguishing between paediatric brain tumour types using multi-parametric magnetic resonance imaging and machine learning: A multi-site study. arXiv 2019, arXiv:1910.09247.

- Attallah, O. MB-AI-His: Histopathological Diagnosis of Pediatric Medulloblastoma and its Subtypes via AI. Diagnostics 2021, 11, 359.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

- Attallah, O. CoMB-Deep: Composite Deep Learning-Based Pipeline for Classifying Childhood Medulloblastoma and Its Classes. Front. Neuroinform. 2021, 15, 663592.

- Wright, J.R., Jr. Albert C. Broders, tumor grading, and the origin of the long road to personalized cancer care. Cancer Med. 2020, 9, 4490–4494.

- Henry, N.L.; Hayes, D.F. Cancer biomarkers. Mol. Oncol. 2012, 6, 140–146.

- Kos, Z.; Dabbs, D.J. Biomarker assessment and molecular testing for prognostication in breast cancer. Histopathology 2016, 68, 70–85.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).