Modeling and Forecasting of nanoFeCu Treated Sewage Quality Using Recurrent Neural Network (RNN)

Abstract

1. Introduction

2. Methodology

2.1. Data Collection and Processing

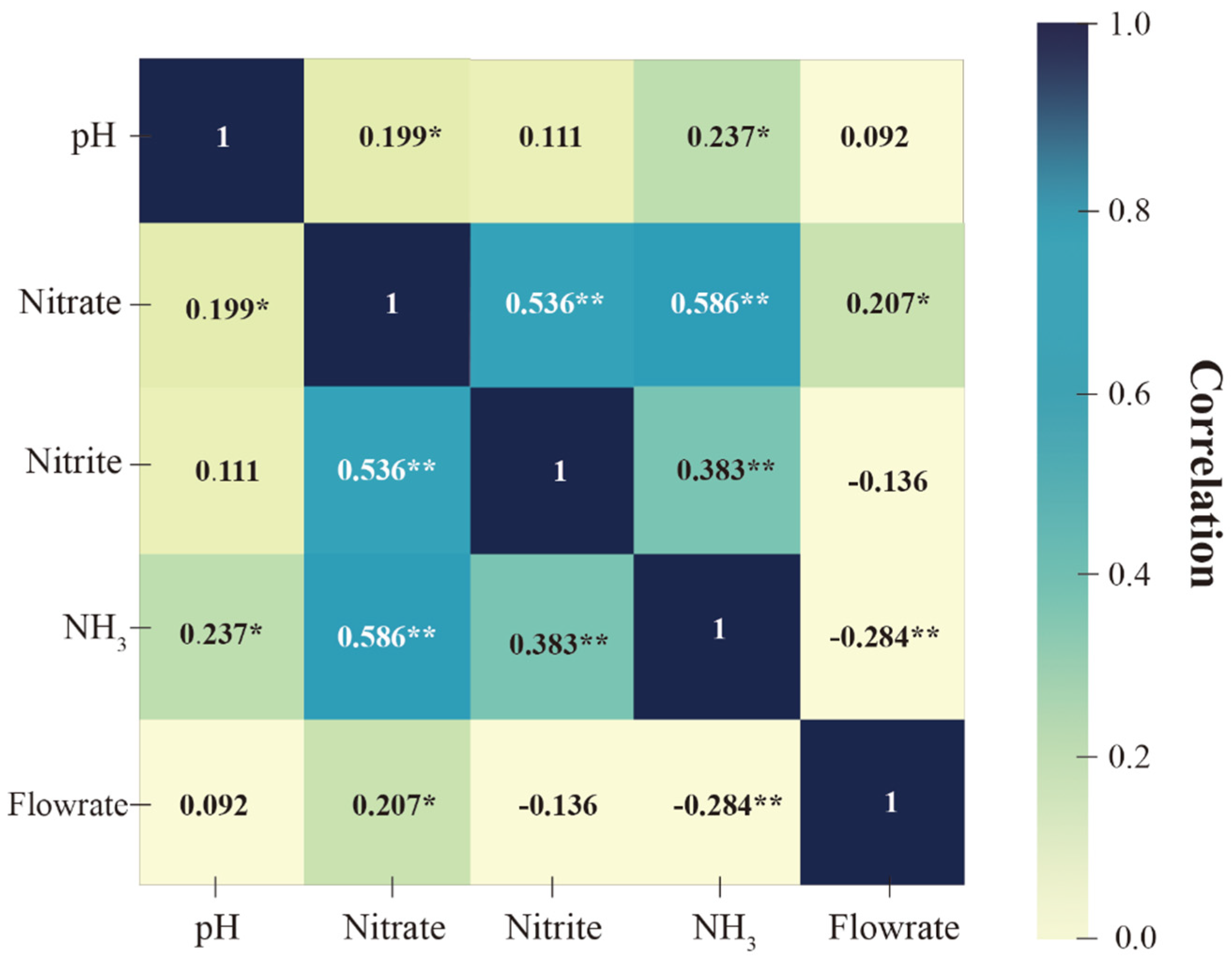

2.2. Pearson Correlation Coefficient

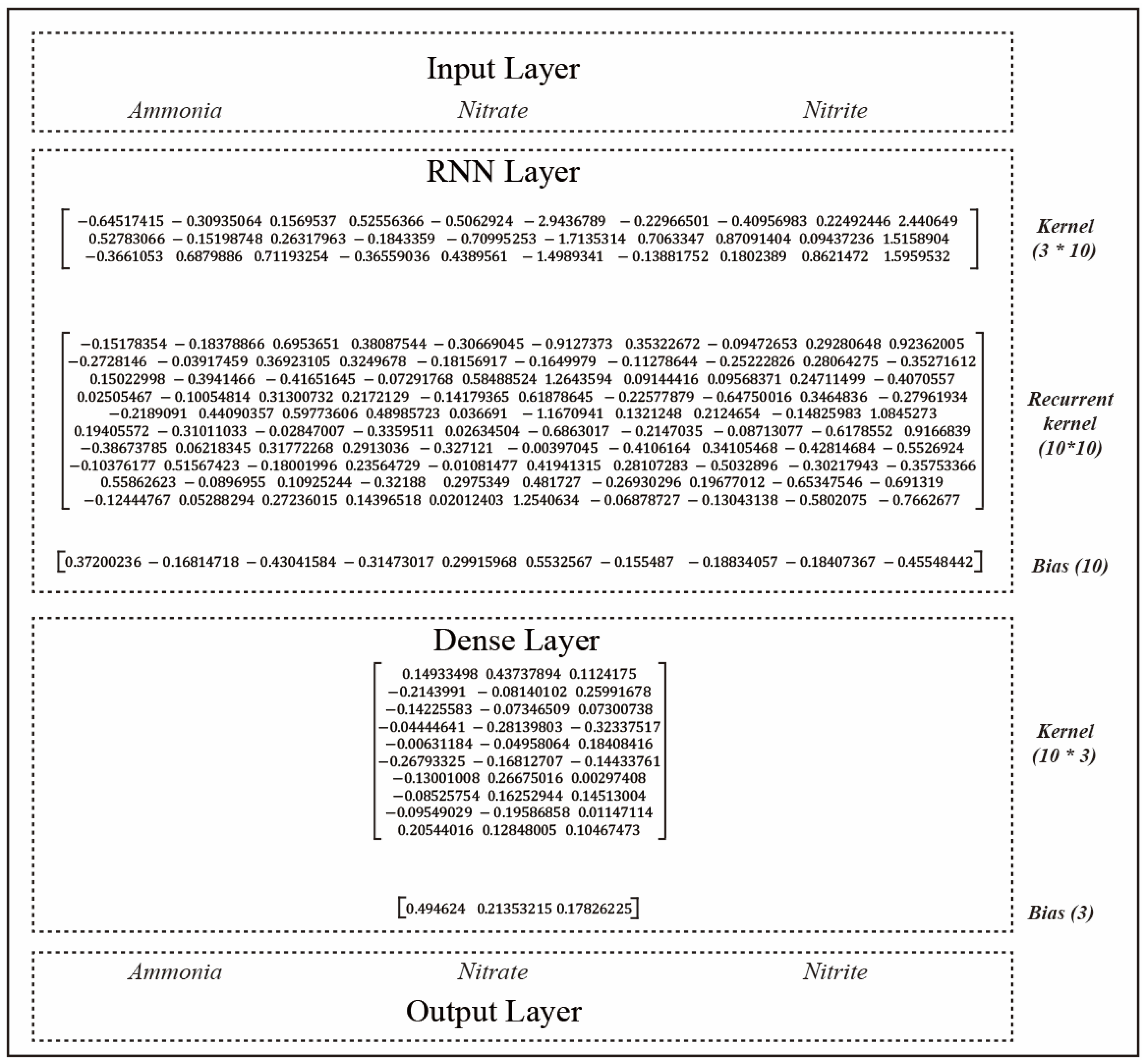
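As a reminder of what this section's measure computes, the Pearson correlation coefficient between two series is their covariance divided by the product of their standard deviations, giving a value between -1 and 1. The following is a minimal pure-Python sketch of that standard definition. The function name `pearson_r` and the sample readings are hypothetical and are not taken from the study.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Deviations of each observation from its series mean.
    dx = [a - mean_x for a in x]
    dy = [b - mean_y for b in y]
    covariance_sum = sum(a * b for a, b in zip(dx, dy))
    denominator = math.sqrt(sum(a * a for a in dx) * sum(b * b for b in dy))
    if denominator == 0:
        raise ValueError("correlation is undefined for a constant series")
    return covariance_sum / denominator


# Hypothetical readings in mg/L, for illustration only (not the study's data).
ammonia = [30.0, 25.0, 20.0, 15.0, 10.0]
nitrate = [2.0, 4.0, 6.0, 8.0, 10.0]
print(pearson_r(ammonia, nitrate))
```

In this made-up example nitrate rises by a fixed amount for every fixed drop in ammonia, so the two series are perfectly negatively correlated.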

2.3. Model Setup and Implementation

3. Results and Discussion

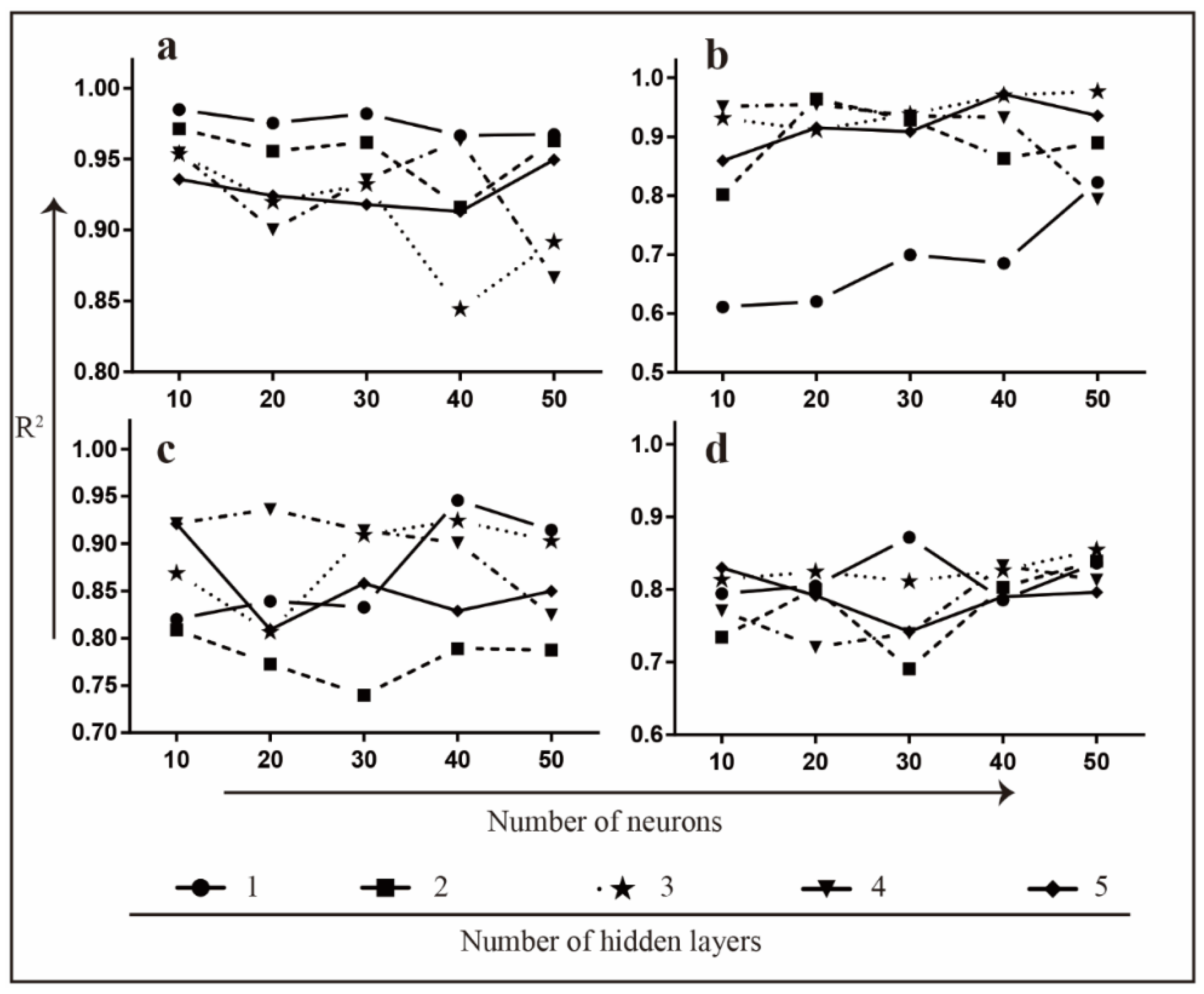

3.1. The Performance of One-to-One Model in Ammonia, Nitrate, Nitrite and pH Prediction

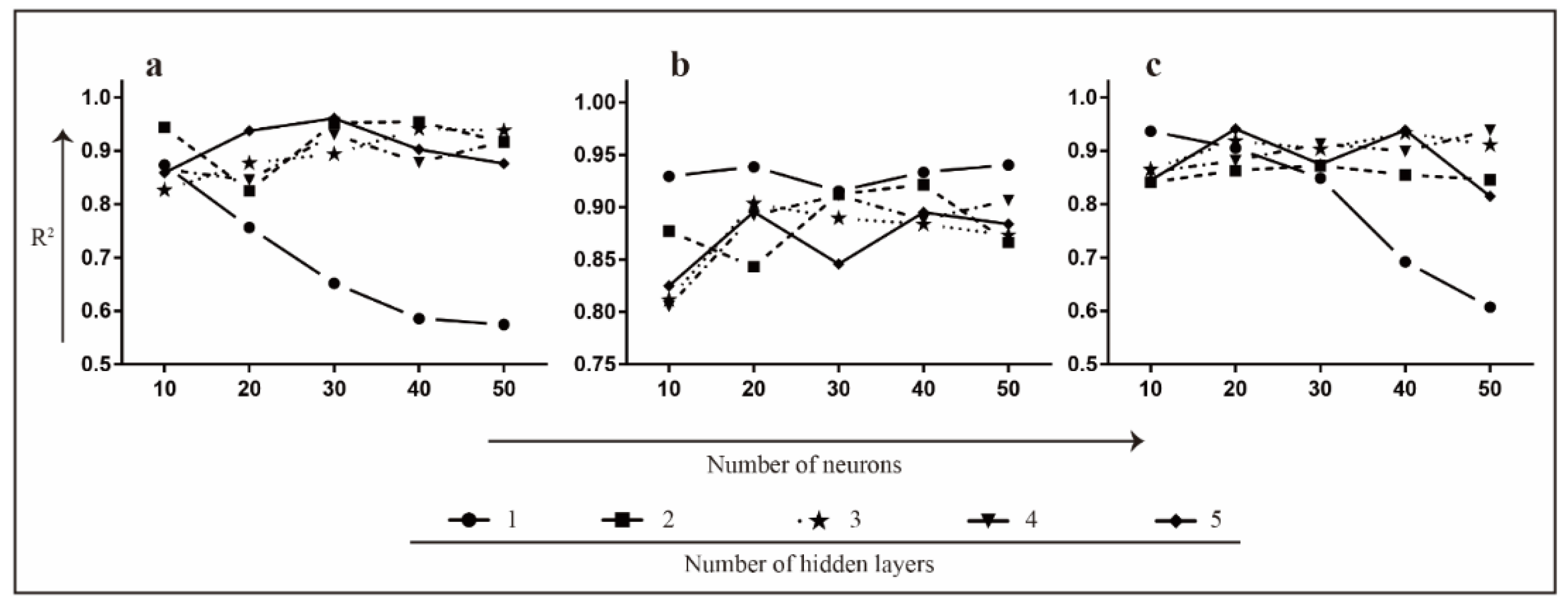

3.2. The Performance of Three-to-Three Model in Ammonia, Nitrate and Nitrite Prediction

3.3. The Comparison of One-to-One and Three-to-Three Models in Ammonia, Nitrate and Nitrite Estimation

4. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Piesse, M. Global Water Supply and Demand Trends Point towards Rising Water Insecurity. Analysis and Policy Observatory. 2020. Available online: https://apo.org.au/node/276976 (accessed on 27 February 2020).

2. Ma, T.; Sun, S.; Fu, G.; Hall, J.W.; Ni, Y.; He, L.; Yi, J.; Zhao, N.; Du, Y.; Pei, T.; et al. Pollution Exacerbates China’s Water Scarcity and Its Regional Inequality. Nat. Commun. 2020, 11, 650.

3. Jiang, J.; Han, F.; Zheng, Y.; Wang, N.; Yuan, Y. Inverse Uncertainty Characteristics of Pollution Source Identification for River Chemical Spill Incidents by Stochastic Analysis. Front. Environ. Sci. Eng. 2018, 12, 6.

4. Mukate, S.; Wagh, V.; Panaskar, D.; Jacobs, J.A.; Sawant, A. Development of New Integrated Water Quality Index (IWQI) Model to Evaluate the Drinking Suitability of Water. Ecol. Indic. 2019, 101, 348–354.

5. Yi, X.; Lin, D.; Li, J.; Zeng, J.; Wang, D.; Yang, F. Ecological Treatment Technology for Agricultural Non-Point Source Pollution in Remote Rural Areas of China. Environ. Sci. Pollut. Res. 2021, 28, 40075–40087.

6. Nsenga Kumwimba, M.; Meng, F.; Iseyemi, O.; Moore, M.T.; Zhu, B.; Tao, W.; Liang, T.J.; Ilunga, L. Removal of Non-Point Source Pollutants from Domestic Sewage and Agricultural Runoff by Vegetated Drainage Ditches (VDDs): Design, Mechanism, Management Strategies, and Future Directions. Sci. Total Environ. 2018, 639, 742–759.

7. Tuholske, C.; Halpern, B.S.; Blasco, G.; Villasenor, J.C.; Frazier, M.; Caylor, K. Mapping Global Inputs and Impacts from of Human Sewage in Coastal Ecosystems. PLoS ONE 2021, 16, e0258898.

8. Littman, R.A.; Fiorenza, E.A.; Wenger, A.S.; Berry, K.L.E.; van de Water, J.A.J.M.; Nguyen, L.; Aung, S.T.; Parker, D.M.; Rader, D.N.; Harvell, C.D.; et al. Coastal Urbanization Influences Human Pathogens and Microdebris Contamination in Seafood. Sci. Total Environ. 2020, 736, 139081.

9. Shen, Y.; Linville, J.L.; Urgun-Demirtas, M.; Mintz, M.M.; Snyder, S.W. An Overview of Biogas Production and Utilization at Full-Scale Wastewater Treatment Plants (WWTPs) in the United States: Challenges and Opportunities towards Energy-Neutral WWTPs. Renew. Sustain. Energy Rev. 2015, 50, 346–362.

10. Garcia-Segura, S.; Lanzarini-Lopes, M.; Hristovski, K.; Westerhoff, P. Electrocatalytic Reduction of Nitrate: Fundamentals to Full-Scale Water Treatment Applications. Appl. Catal. B 2018, 236, 546–568.

11. Wear, S.L.; Acuña, V.; McDonald, R.; Font, C. Sewage Pollution, Declining Ecosystem Health, and Cross-Sector Collaboration. Biol. Conserv. 2021, 255, 109010.

12. Chan, M.K.; Abdullah, N.; Rageh, E.H.A.; Kumaran, P.; Tee, Y.S. Oxidation of Ammonia Using Immobilised FeCu for Water Treatment. Sep. Purif. Technol. 2021, 254, 117612.

13. Kee, C.M.; Mun, N.K.; Kumaran, P.; Selvam, R.; Kumaran, R.; Raja, S.D.; Shen, T.Y. The Impact of Ammonia Concentration and Reducing Agents on the Ammonia Oxidation Performance of Embedded Nano-FeCu. Mater. Chem. Phys. 2021, 274, 125189.

14. Chan, M.K.; Kumaran, P.; Thomas, X.V.; Natasha, E.; Tee, Y.S.; Mohd Aris, A.; Ho, Y.P.; Khor, B.C. Embedded nanoFeCu for Sewage Treatment: Laboratory-scale and Pilot Studies. Can. J. Chem. Eng. 2022, 1, 1–8.

15. Gauch, M.; Kratzert, F.; Klotz, D.; Nearing, G.; Lin, J.; Hochreiter, S. Rainfall-Runoff Prediction at Multiple Timescales with a Single Long Short-Term Memory Network. Hydrol. Earth Syst. Sci. 2020, 25, 2045–2062.

16. Tran Anh, D.; Duc Dang, T.; Pham Van, S. Improved Rainfall Prediction Using Combined Pre-Processing Methods and Feed-Forward Neural Networks. J 2019, 2, 65–83.

17. Saravanan, K.; Anusuya, E.; Kumar, R.; Son, L.H. Real-Time Water Quality Monitoring Using Internet of Things in SCADA. Environ. Monit. Assess. 2018, 190, 556.

18. Sagan, V.; Peterson, K.T.; Maimaitijiang, M.; Sidike, P.; Sloan, J.; Greeling, B.A.; Maalouf, S.; Adams, C. Monitoring Inland Water Quality Using Remote Sensing: Potential and Limitations of Spectral Indices, Bio-Optical Simulations, Machine Learning, and Cloud Computing. Earth Sci. Rev. 2020, 205, 103187.

19. Wu, J.; Zhang, J.; Tan, W.; Lan, H.; Zhang, S.; Xiao, K.; Wang, L.; Lin, H.; Sun, G.; Guo, P. Application of Time Serial Model in Water Quality Predicting. Comput. Mater. Contin. 2023, 74, 67–82.

20. Tan, W.; Zhang, J.; Wu, J.; Lan, H.; Liu, X.; Xiao, K.; Wang, L.; Lin, H.; Sun, G.; Guo, P. Application of CNN and Long Short-Term Memory Network in Water Quality Predicting. Intell. Autom. Soft Comput. 2022, 34, 1943–1958.

21. Li, T.; Lu, J.; Wu, J.; Zhang, Z.; Chen, L. Predicting Aquaculture Water Quality Using Machine Learning Approaches. Water 2022, 14, 2836.

22. Qi, C.; Huang, S.; Wang, X. Monitoring Water Quality Parameters of Taihu Lake Based on Remote Sensing Images and LSTM-RNN. IEEE Access 2020, 8, 188068–188081.

23. Jiang, Y.; Li, C.; Sun, L.; Guo, D.; Zhang, Y.; Wang, W. A Deep Learning Algorithm for Multi-Source Data Fusion to Predict Water Quality of Urban Sewer Networks. J. Clean. Prod. 2021, 318, 128533.

24. Zhang, Y.-F.; Thorburn, P.J.; Fitch, P. Multi-Task Temporal Convolutional Network for Predicting Water Quality Sensor Data. In Proceedings of the 26th International Conference, ICONIP 2019, Sydney, NSW, Australia, 12–15 December 2019; pp. 122–130.

25. Antanasijević, D.; Pocajt, V.; Povrenović, D.; Perić-Grujić, A.; Ristić, M. Modelling of Dissolved Oxygen Content Using Artificial Neural Networks: Danube River, North Serbia, Case Study. Environ. Sci. Pollut. Res. 2013, 20, 9006–9013.

26. Daw, A.; Karpatne, A.; Watkins, W.; Read, J.; Kumar, V. Physics-Guided Neural Networks (PGNN): An Application in Lake Temperature Modeling. arXiv 2017, arXiv:1710.11431.

27. Agatonovic-Kustrin, S.; Beresford, R. Basic Concepts of Artificial Neural Network (ANN) Modeling and Its Application in Pharmaceutical Research. J. Pharm. Biomed. Anal. 2000, 22, 717–727.

28. Berner, J.; Grohs, P.; Jentzen, A. Analysis of the Generalization Error: Empirical Risk Minimization over Deep Artificial Neural Networks Overcomes the Curse of Dimensionality in the Numerical Approximation of Black–Scholes Partial Differential Equations. SIAM J. Math. Data Sci. 2020, 2, 631–657.

29. Abiodun, O.I.; Jantan, A.; Omolara, A.E.; Dada, K.V.; Mohamed, N.A.; Arshad, H. State-of-the-Art in Artificial Neural Network Applications: A Survey. Heliyon 2018, 4, e00938.

30. Dumont, T.M.; Rughani, A.I.; Tranmer, B.I. Prediction of Symptomatic Cerebral Vasospasm after Aneurysmal Subarachnoid Hemorrhage with an Artificial Neural Network: Feasibility and Comparison with Logistic Regression Models. World Neurosurg. 2011, 75, 57–63.

31. Ohn, I.; Kim, Y. Smooth Function Approximation by Deep Neural Networks with General Activation Functions. Entropy 2019, 21, 627.

32. Yaseen, Z.M.; El-Shafie, A.; Afan, H.A.; Hameed, M.; Mohtar, W.H.M.W.; Hussain, A. RBFNN versus FFNN for Daily River Flow Forecasting at Johor River, Malaysia. Neural Comput. Appl. 2016, 27, 1533–1542.

33. Sherstinsky, A. Fundamentals of Recurrent Neural Network (RNN) and Long Short-Term Memory (LSTM) Network. Phys. D 2020, 404, 132306.

34. Chowdhury, S.; Saha, P. das Artificial Neural Network (ANN) Modeling of Adsorption of Methylene Blue by NaOH-Modified Rice Husk in a Fixed-Bed Column System. Environ. Sci. Pollut. Res. 2013, 20, 1050–1058.

35. Lazar, M.; Pastravanu, O. A Neural Predictive Controller for Non-Linear Systems. Math. Comput. Simul. 2002, 60, 315–324.

36. Singh, K.P.; Basant, A.; Malik, A.; Jain, G. Artificial Neural Network Modeling of the River Water Quality—A Case Study. Ecol. Modell. 2009, 220, 888–895.

37. Singh, S.P.; Kumar, A.; Darbari, H.; Singh, L.; Rastogi, A.; Jain, S. Machine Translation Using Deep Learning: An Overview. In Proceedings of the 2017 International Conference on Computer, Communications and Electronics (Comptelix), Jaipur, India, 1–2 July 2017; pp. 162–167.

38. Wang, Y.; Zheng, G.; Li, Y.; Zhang, F. Full Waveform Prediction of Blasting Vibration Using Deep Learning. Sustainability 2022, 14, 8200.

39. Gonzalez, J.; Yu, W. Non-Linear System Modeling Using LSTM Neural Networks. IFAC-PapersOnLine 2018, 51, 485–489.

40. Ömer Faruk, D. A Hybrid Neural Network and ARIMA Model for Water Quality Time Series Prediction. Eng. Appl. Artif. Intell. 2010, 23, 586–594.

41. Gallego-Schmid, A.; Tarpani, R.R.Z. Life Cycle Assessment of Wastewater Treatment in Developing Countries: A Review. Water Res. 2019, 153, 63–79.

42. Agyeman, J.K.; Ameyaw, B.; Li, Y.; Appiah-Kubi, J.; Annan, A.; Oppong, A.; Twumasi, M.A. Modeling the Long-Run Drivers of Total Renewable Energy Consumption: Evidence from Top Five Heavily Polluted Countries. J. Clean. Prod. 2020, 277, 123292.

43. Guarascio, M.; Manco, G.; Ritacco, E. Deep Learning. In Encyclopedia of Bioinformatics and Computational Biology; Elsevier: Amsterdam, The Netherlands, 2019; pp. 634–647.

44. Abadi, M.; Barham, P.; Chen, J.; Chen, Z.; Davis, A.; Dean, J.; Devin, M.; Ghemawat, S.; Irving, G.; Isard, M.; et al. TensorFlow: A System for Large-Scale Machine Learning. In Proceedings of the 12th USENIX Symposium on Operating Systems Design and Implementation (OSDI 16), Savannah, GA, USA, 2–4 November 2016; pp. 265–283.

45. Lei, T. Based on the Neural Network Model to Predict Water Quality; Haikou, D., Ed.; Hainan University: Haikou, China, 2015.

46. Gao, Y.; Yang, X.; Lu, X.; Li, M.; Wang, L.; Wang, Y. Kinetics and Mechanisms of Cr(VI) Removal by NZVI: Influencing Parameters and Modification. Catalysts 2022, 12, 999.

47. Liu, X.; Cao, Z.; Yuan, Z.; Zhang, J.; Guo, X.; Yang, Y.; He, F.; Zhao, Y.; Xu, J. Insight into the Kinetics and Mechanism of Removal of Aqueous Chlorinated Nitroaromatic Antibiotic Chloramphenicol by Nanoscale Zero-Valent Iron. Chem. Eng. J. 2018, 334, 508–518.

48. Kissel, D.E.; Cabrera, M.L. Ammonia. In Encyclopedia of Soils in the Environment; Elsevier: Amsterdam, The Netherlands, 2005; pp. 56–64.

49. Hochreiter, S. The Vanishing Gradient Problem During Learning Recurrent Neural Nets and Problem Solutions. Int. J. Uncertain. Fuzziness Knowl.-Based Syst. 1998, 6, 107–116.

50. Amalou, I.; Mouhni, N.; Abdali, A. Multivariate Time Series Prediction by RNN Architectures for Energy Consumption Forecasting. Energy Rep. 2022, 8, 1084–1091.

| Applications | Model Description | Variables | Results | Limitations | References |
|---|---|---|---|---|---|
| River water quality prediction | Combined auto-regressive integrated moving average (ARIMA) and clustering model | Total phosphorus (TP) | Mean absolute error (MAE) = 0.0082 | Inaccurate rainfall data affect the model’s prediction accuracy. | [19] |
| Predicting water quality data (obtained from the water quality monitoring platform) | Combined CNN and long short-term memory (LSTM) network | Dissolved oxygen (DO) | RMSE = 0.8909 | Multiple hidden layers were not explored; few input variables. | [20] |
| Predicting aquaculture water quality | BPNN, RBFNN, SVM, and least squares support vector machine (LSSVM) | DO, pH, NH3-N, NO3-N, NO2-N | SVM obtained the most accurate and stable prediction results. | Hyperparameter tuning was not performed in detail. | [21] |
| Monitoring water quality parameters | LSTM-RNN | pH, DO, chemical oxygen demand (COD), NH3-N | R² = 0.83; mean relative error (MRE) = 0.18 | The number of hidden layers can be further adjusted. | [22] |
| Predicting the water quality of urban sewer networks | Multiple linear regression (MLR), multilayer perceptron (MLP), RNN, LSTM, and gated recurrent unit (GRU) | Biological oxygen demand (BOD), COD, -N, total nitrogen (TN), TP | GRU achieved a 0.82–5.07% higher R² than RNN and LSTM. | The contribution of each input indicator to the model predictions needs to be explored. | [23] |
| Predicting water quality data | Multi-task temporal convolution network (MTCN) | DO and temperature | Temperature: RMSE = 0.59; DO: RMSE = 0.49 | Long training time (9 h 58 min) | [24] |
| Prediction of DO in river waters | General regression neural network (GRNN), BPNN, RNN | Water flow, temperature, pH, and electrical conductivity | RNN > GRNN > BPNN | The structure and parameters of the individual models were not adjusted. | [25] |
| Lake temperature modeling | Physics-guided neural network (PGNN) | 11 meteorological drivers | Compared with SVM, least squares boosted regression trees (LSBoost), and ANN models, PGNN gives better generalizability and scientific consistency of results. | The spatial and temporal nature of the data is not taken into account. | [26] |

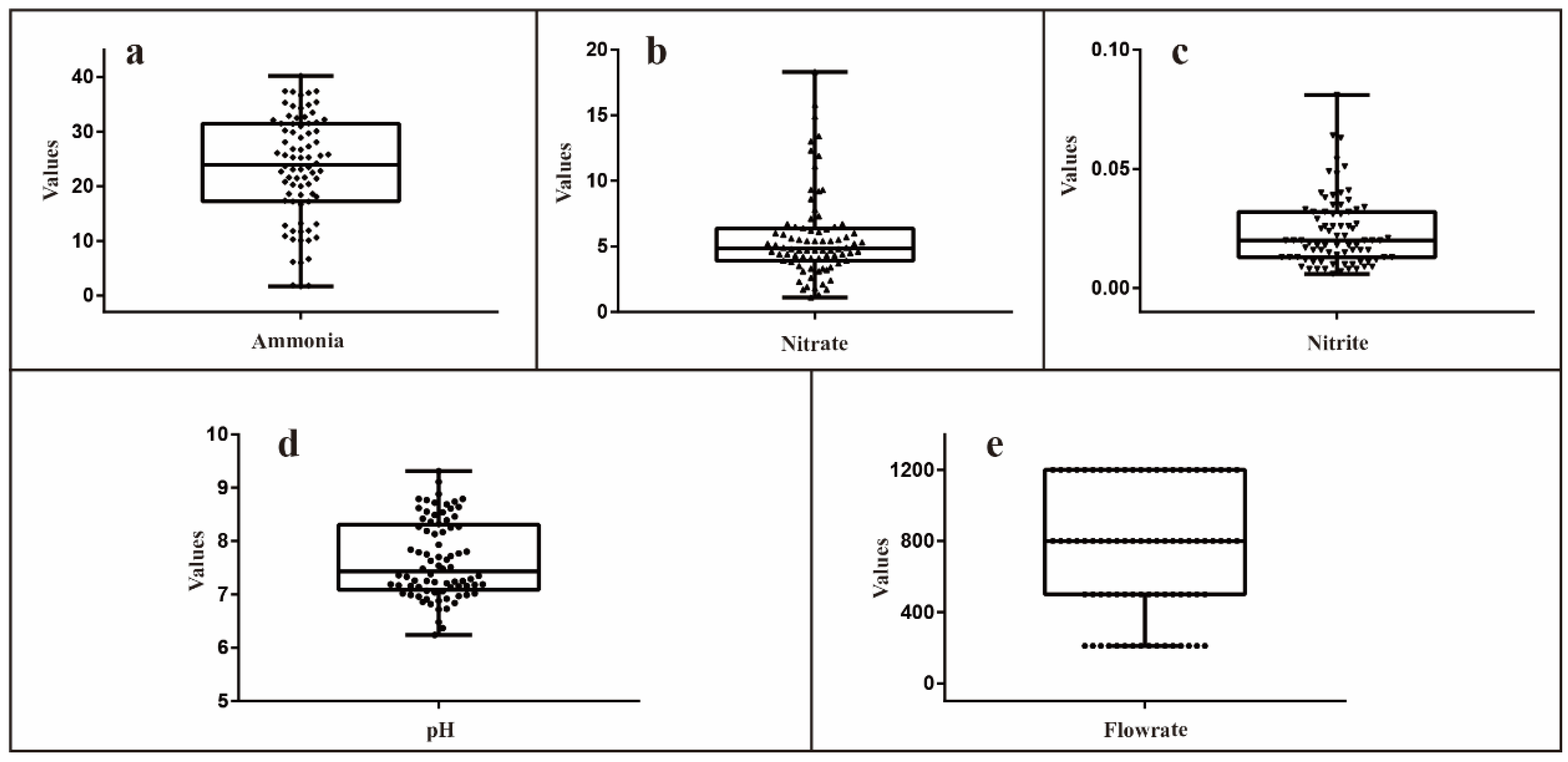

| Data Set | Unit | Count | Mean | Min | Max | Std Dev |
|---|---|---|---|---|---|---|
| pH | - | 80 | 7.600 | 6.240 | 9.310 | 0.816 |
| Nitrate | mg/L | 80 | 5.694 | 1.100 | 18.300 | 3.247 |
| Nitrite | mg/L | 80 | 0.02284 | 0.006 | 0.081 | 0.014 |
| Ammonia | mg/L | 80 | 23.434 | 1.700 | 47.400 | 9.731 |
| Flowrate | mL/min | 80 | 742.00 | 210.000 | 1200.000 | 374.788 |

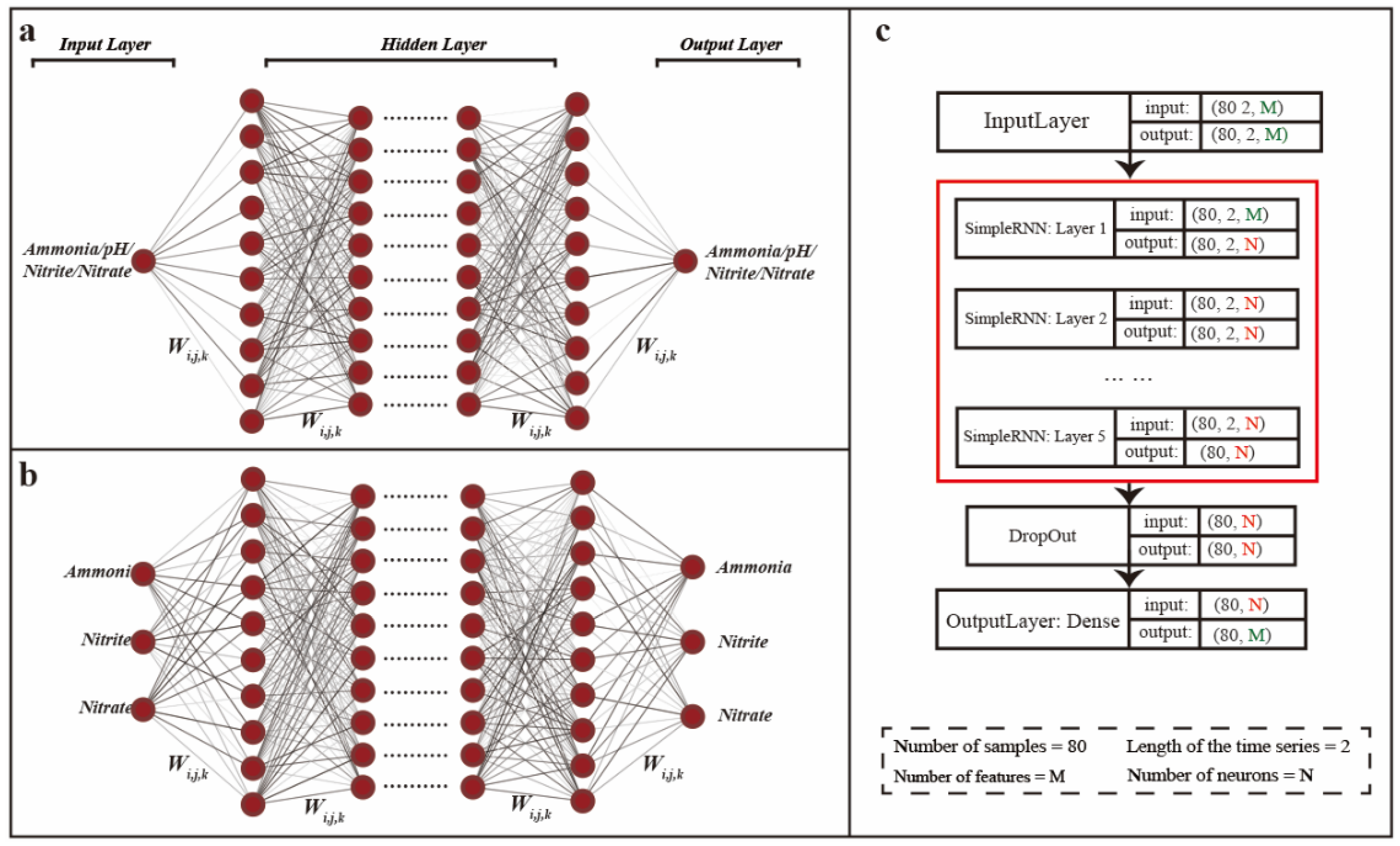

| Parameter | Setting |
|---|---|
| Inputs at t = 0 h to 7 h | Ammonia, Nitrite, Nitrate, pH |
| Outputs at t = 0 h to 7 h | Ammonia, Nitrite, Nitrate, pH |
| Number of neurons | 10, 20, 30, 40, 50 |
| Number of hidden layers | 1–5 |
| Window size | 2 |
| Activation function | ReLU |
| Number of iterations | 1000 |
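The settings above can be made concrete with a short sketch. It assumes that a window size of 2 means the two most recent readings are used to predict the next one; this extract does not confirm how the authors applied the window. The helper `make_windows` and the sample readings are hypothetical. The block also enumerates the candidate architectures implied by the listed neuron counts and hidden-layer depths.

```python
from itertools import product


def make_windows(series, window=2):
    """Split a series into (input window, next value) training pairs."""
    if window < 1 or len(series) <= window:
        raise ValueError("series must be longer than the window")
    inputs, targets = [], []
    for start in range(len(series) - window):
        inputs.append(series[start:start + window])  # the `window` most recent readings
        targets.append(series[start + window])       # the reading that follows them
    return inputs, targets


# Hypothetical hourly ammonia readings (mg/L) for t = 0 h to 7 h; not the study's data.
ammonia = [30.1, 27.4, 25.0, 22.8, 20.9, 19.3, 18.0, 17.1]
X, y = make_windows(ammonia, window=2)

# Candidate architectures from the settings table: 5 depths x 5 neuron counts.
neuron_options = [10, 20, 30, 40, 50]
layer_options = range(1, 6)
grid = list(product(layer_options, neuron_options))

print(len(X), len(grid))
```

Eight readings with a window of 2 give six training pairs, and the two hyperparameter lists give 25 layer and neuron combinations to compare.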

| Parameter | Hidden Layers | One-to-One R² | Three-to-Three R² |
|---|---|---|---|
| Ammonia | Single: 10 neurons | 0.6110 | 0.8736 |
| Nitrate | Single: 10 neurons | 0.8201 | 0.9295 |
| Nitrite | Single: 10 neurons | 0.7943 | 0.9366 |
| Average R² | - | - | 0.9132 |
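The reported average can be checked directly from the three three-to-three values in the table. The sketch below also includes a generic coefficient-of-determination helper, assuming the usual definition of one minus the ratio of the residual sum of squares to the total sum of squares. This extract does not state which formula the authors used, and the function name `r_squared` is hypothetical.

```python
def r_squared(observed, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    if len(observed) != len(predicted) or not observed:
        raise ValueError("observed and predicted must be non-empty and equal in length")
    mean_observed = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_observed) ** 2 for o in observed)
    if ss_tot == 0:
        raise ValueError("R2 is undefined when the observed values are constant")
    return 1 - ss_res / ss_tot


# Three-to-three R2 values copied from the table above.
three_to_three = {"Ammonia": 0.8736, "Nitrate": 0.9295, "Nitrite": 0.9366}
average_r2 = sum(three_to_three.values()) / len(three_to_three)
print(round(average_r2, 4))  # 0.9132, matching the table's average
```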


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cao, D.; Chan, M.; Ng, S. Modeling and Forecasting of nanoFeCu Treated Sewage Quality Using Recurrent Neural Network (RNN). Computation 2023, 11, 39. https://doi.org/10.3390/computation11020039
