Abstract

Dynamic mode decomposition (DMD) is a data-driven, modal decomposition technique that describes spatiotemporal features of high-dimensional dynamic data. The method is equation-free in the sense that it does not require knowledge of the underlying governing equations. The main purpose of this article is to introduce new alternatives to the currently accepted algorithm for calculating the dynamic mode decomposition. We present two new algorithms which are more economical from a computational point of view, which is an advantage when working with large data. With a few illustrative examples, we demonstrate the applicability of the introduced algorithms.

1. Introduction

Dynamic mode decomposition (DMD) was first introduced by Schmid [1] as a method for analyzing data from numerical simulations and laboratory experiments in the field of fluid dynamics. The method constitutes a mathematical technique for identifying spatiotemporal coherent structures from high-dimensional data. It can be considered to be a numerical approximation to Koopman spectral analysis, and in this sense, it is applicable to nonlinear dynamical systems (see [2,3,4]). The DMD method combines the favorable features from two of the most powerful data analytic tools: proper orthogonal decomposition (POD) in space and Fourier transforms in time. DMD has gained popularity and has been applied to a variety of dynamical systems in many different fields such as video processing [5], epidemiology [6], neuroscience [7], financial trading [8,9,10], robotics [11], cavity flows [12,13] and various jets [2,14]. For a review of the DMD literature, we refer the reader to [15,16,17,18]. Since its initial introduction, along with its wide application in various fields, the DMD method has undergone various modifications and improvements. For some recent results on DMD for non-uniformly sampled data, higher-order DMD, parallel implementations of DMD and some derivative DMD techniques, we refer the reader to [19,20,21,22,23,24,25,26]; see also [27,28,29,30,31,32,33].

Our goal in the present work is to introduce alternative algorithms for calculating DMD. The new approaches calculate the DMD modes of the Koopman operator using a simpler formula compared to the standard DMD algorithm. The remainder of this work is organized as follows: in the rest of Section 1, we briefly describe the DMD algorithm; in Section 2, we propose and discuss the new approaches for DMD computation; in Section 3, we present numerical results; and the conclusion is in Section 4.

1.1. Description of the Standard DMD Algorithm

Originally, the DMD technique was formulated in terms of a companion matrix [1,2], emphasizing its connections to the Arnoldi algorithm and Koopman operator theory. Later, an SVD-based algorithm was presented in [12]. This algorithm is more numerically stable and is now a commonly accepted approach for performing DMD decomposition. We describe this algorithm in the following. Throughout the paper, we use the following notations: uppercase Latin letters for matrices, lowercase Latin or Greek letters for scalars, and lowercase bold letters for vectors.

Consider a sequential set of data arranged in an $n \times (m+1)$ matrix

$$Z = [\mathbf{z}_0, \mathbf{z}_1, \ldots, \mathbf{z}_m], \tag{1}$$

where $n$ is the number of state variables and $m+1$ is the number of observations (snapshots). The data could be from measurements, experiments, or simulations collected at times $t_0, t_1, \ldots, t_m$ from a given dynamical system; we assume that the data are equispaced in time, with a time step $\Delta t$. We assume that the data Z are generated by linear dynamics, i.e., that there exists a linear operator A such that

$$\mathbf{z}_{k+1} = A\mathbf{z}_k, \quad k = 0, \ldots, m-1. \tag{2}$$

The goal of the DMD method is to find an eigendecomposition of the (unknown) operator A. To proceed, we use an arrangement of the data set into two large data matrices

$$X = [\mathbf{z}_0, \ldots, \mathbf{z}_{m-1}], \quad Y = [\mathbf{z}_1, \ldots, \mathbf{z}_m]. \tag{3}$$

Therefore, the expression (2) is equivalent to

$$Y = AX. \tag{4}$$

Then the DMD of the data matrix Z is given by the eigendecomposition of A. We can approximate operator A by using the singular value decomposition (SVD) of the data matrix, $X = U\Sigma V^*$, where U is an $n \times n$ unitary matrix, $\Sigma$ is an $n \times m$ rectangular diagonal matrix with non-negative real numbers on the diagonal, V is an $m \times m$ unitary matrix, and $V^*$ is the conjugate transpose of V; see [34]. Then from (4), we obtain

$$A = YX^{\dagger} = YV\Sigma^{\dagger}U^*, \tag{5}$$

where $X^{\dagger}$ is the pseudoinverse of X (and $\Sigma^{\dagger}$ that of $\Sigma$); see [35]. It should be noted that calculating the eigendecomposition of the matrix A can be prohibitively expensive if n is large, i.e., if $n \gg m$. As a result, the goal is to compute eigenvectors and eigenvalues without explicitly representing or manipulating A. A low-rank approximation matrix $\tilde{A}$ is constructed for this purpose, and its eigendecomposition is calculated to obtain the DMD modes and eigenvalues. The DMD modes and DMD eigenvalues are intended to approximate the eigenvectors and eigenvalues of A.

A reduced SVD $X = U_r\Sigma_rV_r^*$ can be used to obtain the low-rank approximation matrix $\tilde{A}$, where $U_r$ is $n \times r$, $\Sigma_r$ is $r \times r$ diagonal, $V_r$ is $m \times r$, and r is the rank of X ($r \le m$). Then, using (5), we obtain the low-dimensional representation

$$\tilde{A} = U_r^*AU_r = U_r^*YV_r\Sigma_r^{-1}. \tag{6}$$

The following algorithm (Algorithm 1) provides a robust method for computing DMD modes and eigenvalues.

In its original form [1], the algorithm of the DMD method differs slightly from the one described above. The only difference is that the DMD modes (at step 5) are computed by the formula

$$\Phi = U_rW, \tag{7}$$

where W is the eigenvector matrix of $\tilde{A}$. The DMD modes calculated by Algorithm 1 are called exact DMD modes, because Tu et al. in [16] prove that these are exact eigenvectors of matrix A. The modes computed by (7) are referred to as projected DMD modes. It is worth noting that the DMD method is generalized and extended to a larger class of data sets in [16], where the assumption of evenly spaced measurements was relaxed.

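Algorithm 1 Exact DMD

Input: Data matrices X and Y, and rank r.
Output: DMD modes $\Phi$ and eigenvalues $\Lambda$.
1: Procedure DMD(X, Y, r)
2: $[U_r, \Sigma_r, V_r] = \mathrm{SVD}(X, r)$ (Reduced r-rank SVD of X)
3: $\tilde{A} = U_r^*YV_r\Sigma_r^{-1}$ (Low-rank approximation of A)
4: $[W, \Lambda] = \mathrm{EIG}(\tilde{A})$ (Eigen-decomposition of $\tilde{A}$)
5: $\Phi = YV_r\Sigma_r^{-1}W$ (DMD modes of A)
6: End Procedure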
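As an illustration only, the steps of the exact DMD procedure can be sketched in Python with NumPy. This is not the implementation used for the experiments in this paper (those were run in MATLAB); the function name `exact_dmd` and the toy linear system `A_true` are assumptions introduced here to make the example checkable.

```python
import numpy as np


def exact_dmd(X, Y, r):
    """Sketch of exact DMD: returns the mode matrix Phi and eigenvalues lam."""
    # Reduced rank-r SVD of X.
    U, s, Vh = np.linalg.svd(X, full_matrices=False)
    U, s, V = U[:, :r], s[:r], Vh[:r, :].conj().T
    # Y V Sigma^{-1}: dividing by s scales column j by 1 / s[j].
    YVS = (Y @ V) / s
    # Low-rank approximation of A: U^* Y V Sigma^{-1}.
    A_tilde = U.conj().T @ YVS
    # Eigendecomposition of the reduced matrix.
    lam, W = np.linalg.eig(A_tilde)
    # Exact DMD modes: Y V Sigma^{-1} W.
    Phi = YVS @ W
    return Phi, lam


# Hypothetical toy data: a rank-3 linear map with known eigenvalues.
rng = np.random.default_rng(0)
n, m, r = 8, 6, 3
B = rng.standard_normal((n, r))
A_true = B @ np.diag([0.9, 0.5, -0.3]) @ np.linalg.pinv(B)
snapshots = [B @ np.ones(r)]
for _ in range(m):
    snapshots.append(A_true @ snapshots[-1])
Z = np.column_stack(snapshots)
X, Y = Z[:, :-1], Z[:, 1:]

Phi, lam = exact_dmd(X, Y, r)
# Each column of Phi should satisfy A_true @ phi = lam * phi.
residual = np.linalg.norm(A_true @ Phi - Phi * lam)
```

For this toy system the snapshots span the range of `B`, so the computed eigenvalues should recover those placed on the diagonal and `residual` should be at round-off level.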

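Finally, knowing the DMD modes $\Phi$ and eigenvalues $\Lambda$, we can reconstruct the time series of data set Z in (1) by the expression

$$\mathbf{z}_k = \Phi\Lambda^k\mathbf{b}, \tag{8}$$

where $\mathbf{b} = \Phi^{\dagger}\mathbf{z}_0$ is the vector of the initial amplitudes of DMD modes.

The DMD discrete-time eigenvalues $\lambda_i$ can also be converted to continuous-time eigenvalues (Fourier modes)

$$\omega_i = \frac{\ln(\lambda_i)}{\Delta t}.$$

A continuous-time dynamical system can be reconstructed as a function of time by the expression

$$\mathbf{z}(t) \approx \Phi\exp(\Omega t)\mathbf{b}, \tag{9}$$

where $\Omega = \operatorname{diag}(\omega_1, \ldots, \omega_r)$. A prediction of the future state of the system is obtained from the expression (9) for any time t.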
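The reconstruction step can be sketched as follows. The helper names and the toy modes and eigenvalues below are hypothetical and chosen only for illustration; the sketch assumes the amplitudes are obtained from the first snapshot via the pseudoinverse of the mode matrix, and that continuous-time eigenvalues are the logarithms of the discrete-time ones divided by the time step.

```python
import numpy as np


def dmd_amplitudes(Phi, z0):
    """Initial amplitudes b: least-squares fit of the first snapshot by the modes."""
    return np.linalg.pinv(Phi) @ z0


def reconstruct_discrete(Phi, lam, b, k):
    """Snapshot k from the discrete-time eigenvalues: Phi Lambda^k b."""
    return Phi @ (lam**k * b)


def reconstruct_continuous(Phi, lam, b, t, dt):
    """State at time t from the continuous-time eigenvalues omega = ln(lam) / dt."""
    omega = np.log(lam) / dt
    return Phi @ (np.exp(omega * t) * b)


# Hypothetical modes and eigenvalues (a damped oscillating pair).
rng = np.random.default_rng(1)
Phi = rng.standard_normal((5, 2)) + 0j
lam = np.array([0.95 * np.exp(0.4j), 0.95 * np.exp(-0.4j)])
dt = 0.1
z0 = Phi @ np.array([1.0, 1.0])

b = dmd_amplitudes(Phi, z0)
z3_discrete = reconstruct_discrete(Phi, lam, b, 3)
z3_continuous = reconstruct_continuous(Phi, lam, b, 3 * dt, dt)
```

At the sampling instants the two formulas agree, so `z3_discrete` and `z3_continuous` should coincide up to round-off; the continuous form additionally allows evaluation at times between or beyond the snapshots.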

1.2. Matrix Similarity

Here, we will briefly describe an important matrix technique called similarity transformation, which we will use in the next section.

Definition 1.

Let A and B be square matrices of the same size. If there exists a non-singular matrix P such that

$$B = P^{-1}AP,$$

then we say that A and B are similar to each other.

We will state some well-known properties of similar matrices; see [36].

Lemma 1.

If A and B are similar, then they have the same rank.

Lemma 2.

If A and B are similar, then they have the same eigenvalues.

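It is easy to show that if $B = P^{-1}AP$ and $\mathbf{w}$ is an eigenvector of B with eigenvalue $\lambda$, then $P\mathbf{w}$ is an eigenvector of A with the same eigenvalue, since $A(P\mathbf{w}) = PB\mathbf{w} = \lambda P\mathbf{w}$.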

2. New DMD Algorithms

In this section, we introduce two new alternatives to the standard DMD algorithm.

2.1. An Alternative to the Exact DMD Algorithm

The DMD algorithms presented in the previous section take advantage of low dimensionality in the data to make a low-rank approximation of the operator A that best approximates the nonlinear dynamics of the data set.

We suggest that the modal structures can be extracted from the following matrix

$$\hat{A} = \Sigma_r^{-1}U_r^*YV_r, \tag{10}$$

rather than the matrix $\tilde{A}$ defined by (6). The two matrices $\tilde{A}$ and $\hat{A}$ are similar, with transformation matrix $\Sigma_r$:

$$\hat{A} = \Sigma_r^{-1}\tilde{A}\Sigma_r; \tag{11}$$

they therefore have the same eigenvalues. As a result, the eigenvectors of the matrix $\tilde{A}$ can be expressed in terms of the eigenvectors of $\hat{A}$.

Let

$$\hat{A} = \hat{W}\Lambda\hat{W}^{-1} \tag{12}$$

be the eigendecomposition of matrix $\hat{A}$. Then, using relations (7), (11) and (12) we can easily obtain the following expression:

$$\tilde{A}\,\Sigma_r\hat{W} = \Sigma_r\hat{W}\Lambda, \quad \text{i.e.,} \quad W = \Sigma_r\hat{W},$$

which yields the formula

$$\Phi = U_r\Sigma_r\hat{W} \tag{13}$$

for the DMD modes. Expression (13) corresponds to projected DMD modes defined by (7). To be thorough, we will prove that matrix

$$\Phi = YV_r\hat{W} \tag{14}$$

corresponds to exact DMD modes defined at Step 5 in Algorithm 1; see [37].

Theorem 1.

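Let $(\lambda, \mathbf{w})$, with $\lambda \neq 0$, be an eigenpair of $\hat{A}$ defined by (10); then the corresponding eigenpair of A is $(\lambda, \boldsymbol{\varphi})$, where

$$\boldsymbol{\varphi} = YV_r\mathbf{w}.$$

Proof.

By using the reduced SVD and the pseudoinverse of X,

$$X^{\dagger} = V_r\Sigma_r^{-1}U_r^*,$$

we obtain the expression

$$A\boldsymbol{\varphi} = YX^{\dagger}YV_r\mathbf{w} = YV_r\left(\Sigma_r^{-1}U_r^*YV_r\right)\mathbf{w} = YV_r\hat{A}\mathbf{w} = \lambda YV_r\mathbf{w} = \lambda\boldsymbol{\varphi}.$$

In addition, $\boldsymbol{\varphi} \neq 0$, since if $YV_r\mathbf{w} = 0$, then $\hat{A}\mathbf{w} = \Sigma_r^{-1}U_r^*YV_r\mathbf{w} = 0$. This implies that $\lambda = 0$, which contradicts the assumption $\lambda \neq 0$. Hence, $\boldsymbol{\varphi}$ is an eigenvector of A with eigenvalue $\lambda$. The proof is completed. □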

Now, we are ready to formulate an alternative to the exact DMD method (Algorithm 1); see Algorithm 2 below.

According to Theorem 1, the modes in (14) generated by Algorithm 2 are eigenvectors of the matrix A. The matrices $\tilde{A}$ and $\hat{A}$ cost about the same to compute, because they are products of the same factors taken in a different order; nevertheless, the calculation of DMD modes in Algorithm 2 is more economical than the calculation of modes in Algorithm 1.

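Algorithm 2 Alternative exact DMD

Input: Data matrices X and Y, and rank r.
Output: DMD modes $\Phi$ and eigenvalues $\Lambda$.
1: Procedure DMD(X, Y, r)
2: $[U_r, \Sigma_r, V_r] = \mathrm{SVD}(X, r)$ (Reduced r-rank SVD of X)
3: $\hat{A} = \Sigma_r^{-1}U_r^*YV_r$ (Low-rank approximation of A)
4: $[\hat{W}, \Lambda] = \mathrm{EIG}(\hat{A})$ (Eigen-decomposition of $\hat{A}$)
5: $\Phi = YV_r\hat{W}$ (DMD modes of A)
6: End Procedure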
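A minimal NumPy sketch of the alternative procedure is given below, assuming the reduced matrix is formed as the inverse singular values times the projected data and the modes are obtained without the extra scaling by inverse singular values. The function names and the random test data are hypothetical. The check compares the eigenvalues with those of the standard reduced matrix and verifies the eigenvector property against the rank-r operator built from the truncated SVD.

```python
import numpy as np


def reduced_svd(X, r):
    """Rank-r truncated SVD returning U_r, the singular values, and V_r."""
    U, s, Vh = np.linalg.svd(X, full_matrices=False)
    return U[:, :r], s[:r], Vh[:r, :].conj().T


def alternative_exact_dmd(X, Y, r):
    """Sketch of the alternative exact DMD: modes Y V W_hat and eigenvalues."""
    U, s, V = reduced_svd(X, r)
    YV = Y @ V
    # Sigma^{-1} U^* Y V: dividing by s[:, None] scales row i by 1 / s[i].
    A_hat = (U.conj().T @ YV) / s[:, None]
    lam, W_hat = np.linalg.eig(A_hat)
    # Modes need only one product with the already computed Y V.
    Phi = YV @ W_hat
    return Phi, lam


# Hypothetical random snapshots, used only to exercise the code.
rng = np.random.default_rng(2)
Z = rng.standard_normal((10, 7))
X, Y = Z[:, :-1], Z[:, 1:]
r = 4

Phi, lam = alternative_exact_dmd(X, Y, r)

# Reference quantities from the standard formulation.
U, s, V = reduced_svd(X, r)
A_tilde = (U.conj().T @ (Y @ V)) / s          # U^* Y V Sigma^{-1}
A_r = ((Y @ V) / s) @ U.conj().T              # rank-r operator Y V Sigma^{-1} U^*
lam_tilde = np.linalg.eigvals(A_tilde)

eig_gap = max(np.min(np.abs(l - lam_tilde)) for l in lam)
residual = np.linalg.norm(A_r @ Phi - Phi * lam)
```

Both `eig_gap` and `residual` should be at round-off level, which is consistent with the similarity of the two reduced matrices and with Theorem 1.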

2.2. A New DMD Algorithm for Full Rank Dataset

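We will assume in this section that the $n \times m$ matrix $X$ is a full rank matrix, i.e., $\operatorname{rank}(X) = m$, where $m \le n$. Our goal is to obtain a more efficient algorithm for calculating DMD modes and eigenvalues in this particular case.

We suggest that the modal structures can be extracted from the following matrix

$$\bar{A} = V\hat{A}V^*, \tag{15}$$

where $V$ is the unitary matrix from the SVD of $X = U\Sigma V^*$ (the reduced SVD with $r = m$). Matrices $\bar{A}$ and $\hat{A}$ are obviously similar. From (10) and (15), we obtain the expression

$$\bar{A} = V\Sigma^{-1}U^*Y, \tag{16}$$

which yields

$$\bar{A} = X^{\dagger}Y, \tag{17}$$

where $X^{\dagger}$ is the Moore–Penrose pseudoinverse of X. Denote the eigendecomposition of $\bar{A}$ by

$$\bar{A} = \bar{W}\Lambda\bar{W}^{-1}, \tag{18}$$

where the columns of $\bar{W}$ are eigenvectors and $\Lambda$ is a diagonal matrix containing the corresponding eigenvalues. From the definition of $\hat{A}$, and relations (15) and (18), we deduce

$$V\hat{A}V^*\bar{W} = \bar{W}\Lambda, \tag{19}$$

or equivalently

$$\hat{A}\,V^*\bar{W} = V^*\bar{W}\Lambda. \tag{20}$$

Hence $V^*\bar{W}$ is an eigenvector matrix of $\hat{A}$, and by (13) we show that

$$\Phi = U\Sigma V^*\bar{W} = X\bar{W} \tag{21}$$

is the matrix of DMD modes. These are the projected DMD modes (see Theorem 3 below). We will express the exact DMD modes in the next Theorem.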

Theorem 2.

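Let $(\lambda, \mathbf{w})$, with $\lambda \neq 0$, be an eigenpair of $\bar{A}$ defined by (17). Then, the corresponding eigenpair of A is $(\lambda, \boldsymbol{\varphi})$, where

$$\boldsymbol{\varphi} = Y\mathbf{w}. \tag{22}$$

Proof.

Let us express $A$ by using (4)

$$A = YX^{\dagger}.$$

From the last relation and (16), we get

$$A\boldsymbol{\varphi} = YX^{\dagger}Y\mathbf{w} = Y\bar{A}\mathbf{w} = \lambda Y\mathbf{w} = \lambda\boldsymbol{\varphi}.$$

Furthermore, $\boldsymbol{\varphi} \neq 0$, because if $Y\mathbf{w} = 0$, then $\bar{A}\mathbf{w} = X^{\dagger}Y\mathbf{w} = 0$, implying $\lambda = 0$, which contradicts the assumption $\lambda \neq 0$. Hence, $\boldsymbol{\varphi}$ is an eigenvector of A with an eigenvalue $\lambda$. □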

Next, we summarize the results from above in the form of an algorithm.

We intentionally omitted the SVD step of Algorithm 1 (or Algorithm 2) in Algorithm 3, because the pseudo-inverse matrix of a full-rank matrix X can be calculated not only by SVD but also by the formula

$$X^{\dagger} = (X^*X)^{-1}X^*.$$

In the case of full-rank data, Algorithm 3 is the most economical of the described algorithms from a computational point of view. We will now prove that modes in expression (21) are projected DMD modes.

Algorithm 3 DMD Algorithm for full rank dataset

Input: Data matrices X and Y.
Output: DMD modes $\Phi$ and eigenvalues $\Lambda$.
1: Procedure DMD(X, Y)
2: $\bar{A} = X^{\dagger}Y$ (Low-rank approximation of A)
3: $[\bar{W}, \Lambda] = \mathrm{EIG}(\bar{A})$ (Eigen-decomposition of $\bar{A}$)
4: $\Phi = Y\bar{W}$ (DMD modes of A)
5: End Procedure
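The full-rank variant can be sketched in a few lines of NumPy. The function name and the random data are hypothetical; the sketch assumes X has full column rank and uses the normal-equations form of the pseudoinverse, which avoids the SVD but can lose accuracy when X is ill-conditioned (in that situation `np.linalg.pinv` would be the safer choice).

```python
import numpy as np


def full_rank_dmd(X, Y):
    """Sketch of DMD for full column rank X: modes Y W_bar and eigenvalues."""
    # Pseudoinverse via (X^* X)^{-1} X^*, valid only for full column rank X.
    Xh = X.conj().T
    X_pinv = np.linalg.solve(Xh @ X, Xh)
    # Reduced matrix X^+ Y (m x m).
    A_bar = X_pinv @ Y
    lam, W_bar = np.linalg.eig(A_bar)
    # Exact modes: a single matrix product.
    Phi = Y @ W_bar
    return Phi, lam


# Hypothetical random snapshots; a random tall matrix has full column rank
# with probability one.
rng = np.random.default_rng(3)
Z = rng.standard_normal((10, 7))
X, Y = Z[:, :-1], Z[:, 1:]

Phi, lam = full_rank_dmd(X, Y)

# Check the eigenvector property against A = Y X^+.
A = Y @ np.linalg.pinv(X)
residual = np.linalg.norm(A @ Phi - Phi * lam)
```

The value of `residual` should be at round-off level, in line with Theorem 2.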

Theorem 3.

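Let $(\lambda, \mathbf{w})$, with $\lambda \neq 0$, be an eigenpair of $\bar{A}$ defined by (15), and let $P_X$ denote the orthogonal projection matrix onto the column space of X. Then, the vector

$$\hat{\boldsymbol{\varphi}} = X\mathbf{w} \tag{23}$$

is an eigenvector of $P_XA$ with an eigenvalue λ. Furthermore, if $\boldsymbol{\varphi}$ is given by (22), then $P_X\boldsymbol{\varphi} = \lambda\hat{\boldsymbol{\varphi}}$.

Proof.

From the reduced SVD $X = U\Sigma V^*$, we obtain the orthogonal projection onto the column space of X by $P_X = UU^* = XX^{\dagger}$. From (17) and the relation $AX = Y$, we get

$$P_XA\hat{\boldsymbol{\varphi}} = P_XAX\mathbf{w} = P_XY\mathbf{w} = XX^{\dagger}Y\mathbf{w} = X\bar{A}\mathbf{w},$$

which implies

$$P_XA\hat{\boldsymbol{\varphi}} = \lambda X\mathbf{w} = \lambda\hat{\boldsymbol{\varphi}}.$$

According to the previous expression, $\hat{\boldsymbol{\varphi}}$ is an eigenvector of $P_XA$ with an eigenvalue $\lambda$. Let us now express

$$P_X\boldsymbol{\varphi} = XX^{\dagger}Y\mathbf{w} = X\bar{A}\mathbf{w} = \lambda X\mathbf{w} = \lambda\hat{\boldsymbol{\varphi}},$$

which proves the statement of the Theorem. □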

2.3. In Terms of Companion Matrix

Let us consider the case where the last snapshot $\mathbf{z}_m$ in the data set (1) is in the column space of X, i.e., $\mathbf{z}_m$ is a linear combination of $\mathbf{z}_0, \ldots, \mathbf{z}_{m-1}$. Therefore

In this case, matrix $\bar{A}$ defined by (16) is a Frobenius companion matrix and it relates the data sets exactly, $Y = X\bar{A}$, even if the data are generated by nonlinear dynamics. Moreover, in this case, the projected DMD modes (23) and exact DMD modes (22) are identical.

Theorem 4.

Proof.

From the statement of the Theorem, it follows

where is the orthogonal projection onto the image of X. We obtain from the previous relation and the reduced SVD of X

Finally, we can show that defined by (23) is an eigenvector of A. The following relations are fulfilled

which proves the Theorem. □

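In this case, a reconstruction of the data matrix Y, using relation $Y = X\bar{A}$, yields

$$Y = X\bar{W}\Lambda\bar{W}^{-1},$$

where $\bar{A} = \bar{W}\Lambda\bar{W}^{-1}$ is the eigendecomposition of $\bar{A}$ defined by (18). Using that $\bar{A}$ is a Frobenius companion matrix, and notation (21), we obtain

$$Y = \Phi\Lambda T, \tag{24}$$

where $T$ is a Vandermonde matrix, i.e.

$$T = \begin{pmatrix} 1 & \lambda_1 & \lambda_1^2 & \cdots & \lambda_1^{m-1} \\ 1 & \lambda_2 & \lambda_2^2 & \cdots & \lambda_2^{m-1} \\ \vdots & \vdots & \vdots & \ddots & \vdots \\ 1 & \lambda_m & \lambda_m^2 & \cdots & \lambda_m^{m-1} \end{pmatrix}, \tag{25}$$

where $\boldsymbol{\varphi}_i$ (the columns of $\Phi$) and $\lambda_i$ are DMD modes and eigenvalues, respectively. In this formulation, each mode $\boldsymbol{\varphi}_i$ is scaled with the associated $\lambda_i$. The Vandermonde matrix captures the iterative exponentiation of the DMD eigenvalues. The representation (24) and (25) gives us a factorization of the data into spatial modes, amplitudes, and temporal dynamics. Moreover, the amplitudes in this case coincide with the DMD eigenvalues and do not depend on the initial condition.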

2.4. Computational Cost and Memory Requirement

Table 1 gives a brief summary of the main matrices in the three algorithms considered. The representations of the corresponding reduced order approximations of the Koopman operator are shown, as well as the formulas for calculating the DMD modes in three cases.

Table 1.

Reduced matrices and DMD modes.

Although the three low-rank approximation matrices $\tilde{A}$, $\hat{A}$ and $\bar{A}$ have similar structures, the DMD mode formulas of Algorithms 2 and 3 have a simpler form than that of Algorithm 1. In Algorithm 2, three matrices need to be stored and two matrix multiplications performed, while in Algorithm 3, it is necessary to store only two matrices and perform one matrix multiplication.

Since the reduced matrix $\tilde{A}$ in Algorithm 1 is of the same size as the corresponding matrices $\hat{A}$ and $\bar{A}$ in the alternative algorithms, their spectral decompositions require the same resources. To estimate the computational cost for the three algorithms considered, we will ignore the comparable computations and focus on the different ones. While in Algorithms 1 and 2, the calculation of the corresponding reduced matrices $\tilde{A}$ and $\hat{A}$ involves the SVD of X and matrix multiplication, in Algorithm 3, matrix $\bar{A}$ is calculated by the pseudo-inverse matrix of X. The DMD modes for the three algorithms are calculated by the corresponding matrix multiplications, as indicated in Table 1. The computational costs are shown in Table 2; see Golub and Van Loan [38].

Table 2.

Computational costs.

From the memory point of view, the corresponding matrices that require the same amount of memory for all three algorithms are: the data matrix Y, the reduced matrix ($\tilde{A}$, $\hat{A}$ or $\bar{A}$), and the eigenvector matrix ($W$, $\hat{W}$ or $\bar{W}$). The number of floating point numbers to be stored for the corresponding reduced-order matrix and eigenvector matrix is the same in all three algorithms. The difference in the required memory for the three algorithms is determined by the matrices needed to calculate the DMD modes. The number of floating point numbers that must be stored for the DMD calculations is shown in Table 3.

Table 3.

Memory requirements for DMD mode matrices.

3. Numerical Illustrative Examples

In this section, we compare the results obtained by the standard DMD algorithm and the new algorithms (Algorithms 2 and 3) introduced in Section 2. All considered examples are well known in the literature. All numerical experiments and simulations were performed on Windows 7 with MATLAB release R2013a on an Acer Aspire 571G laptop with an Intel(R) Core(TM) i3-2328M CPU @2.2GHz processor and 4 GB RAM.

We should note that all three algorithms that we consider in the present work require the use of some of the most expensive functions from a computational point of view: svd and eig, respectively, for calculating the SVD and the spectral decomposition of matrices.

3.1. Example 1: Spatiotemporal Dynamics of Two Signals

We consider an illustrative example of two mixed spatiotemporal signals

and the mixed signal

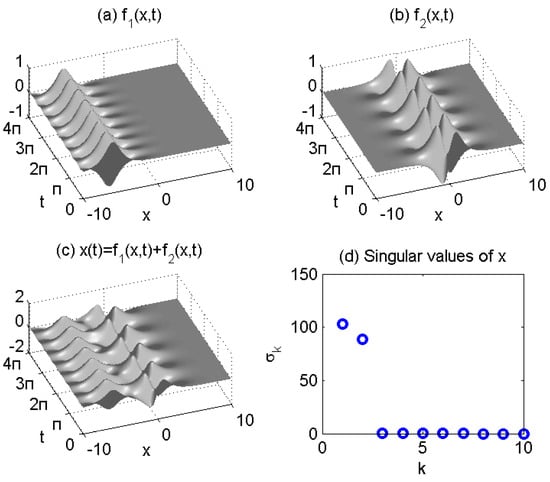

The two signals , and mixed signal X are illustrated in Figure 1a–c.

Figure 1.

Spatiotemporal dynamics of two signals (a) , (b) , and mixed signal in (c) . Singular values of X are shown in (d).

Figure 1d depicts the singular values of data matrix X, indicating that the data can be adequately represented by the rank $r = 2$ approximation.

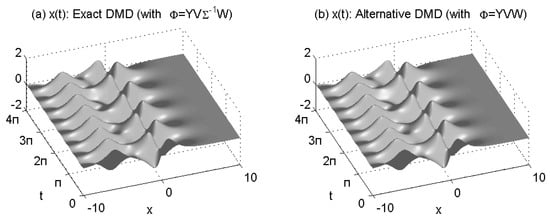

We perform a rank-2 DMD reconstruction of data by using standard DMD (Algorithm 1) and Alternative DMD (Algorithm 2). These reconstructions are shown in Figure 2a,b.

Figure 2.

Rank−2 reconstructions of the signal X by: standard DMD (a) and Alternative DMD (b).

The two reconstructions are nearly exact approximations, with the DMD modes and eigenvalues matching those of the two underlying signals. Both algorithms reproduce the same pair of continuous-time DMD eigenvalues, whose imaginary components correspond to the frequencies of oscillation.

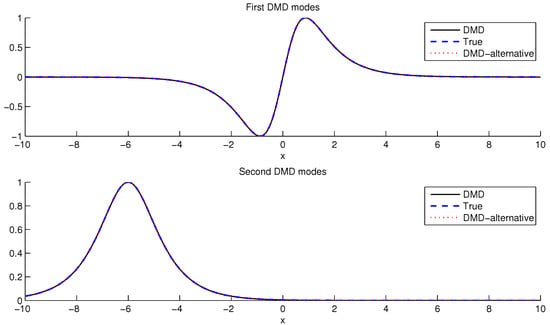

The panels of Figure 3 compare the first two DMD modes, with true modes plotted alongside modes extracted by standard DMD (Algorithm 1) and Alternative DMD (Algorithm 2). The DMD modes produced by the two algorithms agree to nearly machine precision.

Figure 3.

First two DMD modes: true modes, modes extracted by standard DMD and modes extracted by Alternative DMD.

Table 4 compares the execution time results of simulations using Algorithms 1 and 2.

Table 4.

Execution time (in sec.) by Algorithms 1 and 2.

3.2. Example 2: Re = 100 Flow around a Cylinder Wake

We consider a time series of fluid vorticity fields for the wake behind a round cylinder at a Reynolds number Re = 100. The Reynolds number is defined as $Re = DU_{\infty}/\nu$, where D is the cylinder diameter, $U_{\infty}$ is the free-stream velocity, and $\nu$ is the kinematic fluid viscosity. It quantifies the ratio of inertial to viscous forces.

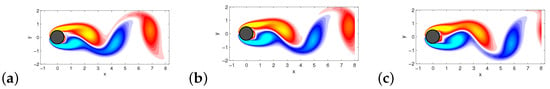

This example is taken from [17]; see also [39]. We use the same data set, which is publicly available at ‘www.siam.org/books/ot149/flowdata’. The collected data consist of snapshots taken at regular intervals in time, sampling five periods of vortex shedding. An example of a vorticity field is shown in Figure 4.

Figure 4.

Some vorticity field snapshots for the wake behind a cylinder at Re = 100 are shown in (a–c).

We applied Algorithms 1 and 2 to obtain the DMD decomposition and reconstruction of the data. The two algorithms reproduce the same DMD eigenvalues and modes.

The quality of low-rank approximations is measured by the relative error

where is DMD reconstruction of data by using expression (9). Actually, both standard DMD and alternative DMD reconstructions have the same error, see Table 5.
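A relative reconstruction error of this kind can be computed as in the following sketch. The choice of the Frobenius norm is an assumption made here for illustration (the norm is not restated at this point in the text), and the function name and test matrices are hypothetical.

```python
import numpy as np


def relative_error(X, X_dmd):
    """Relative error ||X - X_dmd|| / ||X||, using the Frobenius norm (assumed)."""
    return np.linalg.norm(X - X_dmd) / np.linalg.norm(X)


# Hypothetical data: a "reconstruction" that is uniformly 10% too small.
rng = np.random.default_rng(4)
X = rng.standard_normal((6, 5))
err_exact = relative_error(X, X)
err_scaled = relative_error(X, 0.9 * X)
```

For a perfect reconstruction the error is zero, and for the uniformly scaled copy it equals the scaling deficit, here about 0.1.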

Table 5.

Relative errors for DMD reconstructions by Algorithms 1 and 2.

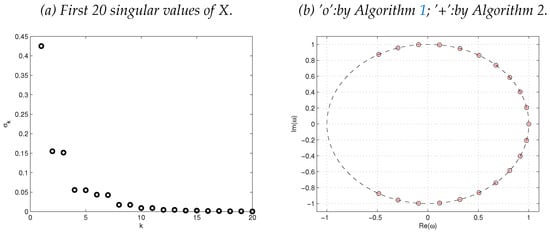

See Figure 5 for DMD eigenvalues and singular values of the data matrix X.

Figure 5.

Singular values of X (a) and DMD eigenvalues computed by Algorithms 1 and 2 (b).

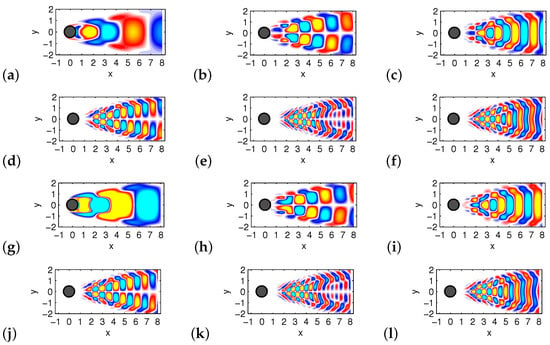

Figure 6 shows the first six DMD modes computed by Algorithms 1 and 2, respectively. The only difference is in the visualization, with contour lines added to the DMD modes obtained by Algorithm 2 (otherwise we obtain the same picture).

Figure 6.

The first six DMD modes computed by Algorithm 1 are shown in (a–f). Corresponding DMD modes computed by Algorithm 2 are in (g–l).

It can be seen from Figure 5 and Figure 6 that the two algorithms produce the same DMD eigenvalues and DMD modes.

Table 6 shows the execution time of this task by Algorithms 1 and 2.

Table 6.

Execution time (in sec.) by Algorithms 1 and 2.

3.3. Example 3: DMD with Different Koopman Observables

We consider the nonlinear Schrödinger (NLS) equation

where is a function of space and time. This equation can be rewritten in the equivalent form

Fourier transforming in gives the differential equation in the Fourier domain variables

By discretizing in the spatial variable we can generate a numerical approximation to the solution of the NLS equation; see [17].

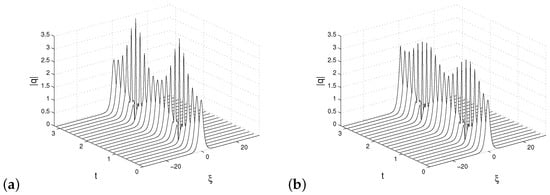

The following parameters were used in the simulation: there is a total of 21 slices of time data over the interval , the state variables are an n-dimensional discretization of , so that , where . The resulting are the columns of the generated data matrix. We analyze the two-soliton solution that has the initial condition . The result of this simulation is shown in Figure 7a.

Figure 7.

The full simulation of the NLS equation (a) and its DMD reconstruction by the standard DMD algorithm, with the state itself used as the observable (b).

We performed low-rank DMD approximation (r = 10) with standard DMD method as shown in Figure 7b. In this case, by standard DMD approximation, it is meant that the state vectors coincide with the Koopman quantities

The obtained approximation is not satisfactory.

To improve the approximation, we can use another Koopman observable

which is based on the NLS nonlinearity; see also [17].

In this case, we define new input data matrices corresponding to X and Y defined by (3), as follows

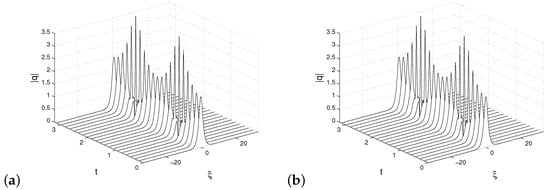

respectively. Following that, the DMD approach is used in the usual way with the new matrices instead of X and Y. The new approximation gives a superior reconstruction, which is evident from Figure 8. We have performed DMD reconstructions using both Algorithms 1 and 2.

Figure 8.

DMD reconstructions, based on new observable defined by (31). Reconstruction by Algorithm 1 in (a) and by Algorithm 2 in (b).

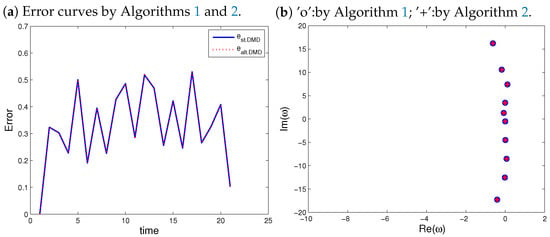

It can be seen from Figure 9b that both algorithms reproduce the same DMD eigenvalues. To measure the quality of approximations, the relative error formula defined by (27) is used. Both reconstructions, by standard DMD (Algorithm 1) and alternative DMD (Algorithm 2), have the same error curve; see Figure 9a.

Figure 9.

Relative errors (a) and DMD eigenvalues (b).

Algorithms 1 and 2 are compared in terms of execution times; the results are included in Table 7.

Table 7.

Execution time (in sec.) by Algorithms 1 and 2.

3.4. Example 4: Standing Wave

It is known that the standard DMD algorithm is not able to represent a standing wave in the data [16]. For example, if measurements of a single sine wave are collected, DMD fails to capture periodic oscillations in the data.

In this case, the data matrix X contains a single row

where each is a scalar and DMD fails to reconstruct the data. There is a simple solution to this rank deficiency problem, which involves stacking multiple time-shifted copies of the data into augmented data matrices

Thus, using delay coordinates increases the rank of the data matrix. In fact, we can increase the number s of delay coordinates until the augmented data matrix reaches full rank numerically. Then, we perform the DMD technique on the augmented matrices.
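The delay-coordinate construction can be illustrated with a short NumPy sketch for a sampled sine wave. The function name `delay_embed`, the sampling frequency 0.5 and the series length are hypothetical choices; the sketch assumes the augmented matrices are built by stacking s time-shifted copies of the scalar series, and it computes the DMD eigenvalues directly from the pseudoinverse for brevity.

```python
import numpy as np


def delay_embed(x, s):
    """Stack s time-shifted copies of a scalar series into augmented X and Y."""
    x = np.asarray(x)
    cols = len(x) - s                      # columns in each augmented matrix
    # Row i holds x[i], x[i+1], ..., x[i+cols]: an s x (cols + 1) Hankel matrix.
    H = np.array([x[i:i + cols + 1] for i in range(s)])
    return H[:, :-1], H[:, 1:]


# A single sine wave sampled at unit time steps (hypothetical example).
k = np.arange(40)
x = np.sin(0.5 * k)

# Without delays the data matrix is a single row of rank one.
rank_single = np.linalg.matrix_rank(x[None, :-1])

# Two delay coordinates are enough to capture the oscillation.
X_aug, Y_aug = delay_embed(x, 2)
rank_aug = np.linalg.matrix_rank(X_aug)
lam = np.linalg.eigvals(Y_aug @ np.linalg.pinv(X_aug))
angles = np.sort(np.angle(lam))
```

With two delay coordinates the eigenvalues should lie on the unit circle at angles close to plus and minus 0.5, i.e., the frequency of the sampled sine wave, which a rank-one data matrix cannot represent.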

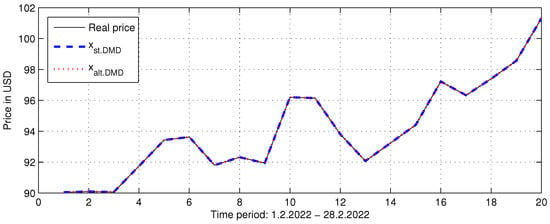

We can demonstrate the rank mismatch issue with an example from finance by considering the evolution in the price of only one type of commodity. In fact, this problem is quite similar to the standing wave problem. Let us consider the price evolution of the Brent Crude Oil for the period 1 February 2022–28 February 2022, containing 20 trading days; see Figure 10.

Figure 10.

Two approximations of Brent Crude Oil price for the period 1 February 2022–28 February 2022 by Standard DMD and Alternative DMD approaches.

The data matrix X is a single row as in (32) containing elements, where each is the closing price on the corresponding day. We construct the augmented matrices and as in (33). In this case, we can choose , which ensures that matrices and will have more rows than columns. For each s in this interval, we obtain a full rank matrix .

Therefore, in this case, we can use the alternative DMD algorithm for full rank data matrices, Algorithm 3. We performed Algorithms 1 and 3 on augmented data matrices and for each . The results show that the best approximation of the measured data is obtained at the highest rank of , , with . In each case, the two algorithms reproduce the same approximation. Figure 10 shows the two approximations for , where it can be seen that both algorithms perfectly approximate the actual price.

Execution times for Algorithms 1 and 3 are computed with the dataset of this example. Table 8 presents a comparison between the two algorithms.

Table 8.

Execution time (in sec.) by Algorithms 1 and 3.

4. Conclusions

The purpose of this study was to introduce two new algorithms for computing approximate DMD modes and eigenvalues. We proved that each pair generated by Algorithms 2 and 3 is an eigenvalue/eigenvector pair of the Koopman operator A (Theorems 1 and 2). The matrices of DMD modes from Algorithms 2 and 3 have a simpler form than the DMD mode matrix from Algorithm 1. They need less memory and require fewer matrix multiplications.

We demonstrated the performance of the presented algorithms with numerical examples from different fields of application. From the obtained results, we can conclude that the introduced approaches give identical results to those of the exact DMD method. Comparison of execution times shows that the new algorithms are more efficient. The presented results show that the introduced algorithms are alternatives to the standard DMD algorithm and can be used in various fields of application.

This study motivates several further investigations. Future work on the use of the proposed algorithms will consist of their application to a wider class of dynamical systems, particularly those dealing with full-rank data. Applications to other known methods that use approximate linear dynamics, such as embedding with Kalman filters, will be sought. It may be possible to develop alternatives to some known variants of the DMD method, such as DMD with control and higher-order DMD.

An interesting direction for future work is the optimization of the introduced algorithms in relation to the required computing resources. One line of work is to implement these algorithms using parallel computing.

Funding

Paper written with financial support of Shumen University under Grant RD-08-144/01.03.2022.

Conflicts of Interest

The author declares no conflict of interest.

References

1. Schmid, P.J.; Sesterhenn, J. Dynamic mode decomposition of numerical and experimental data. In Proceedings of the 61st Annual Meeting of the APS Division of Fluid Dynamics, San Antonio, TX, USA, 23–25 November 2008; American Physical Society: Washington, DC, USA, 2008.

2. Rowley, C.W.; Mezić, I.; Bagheri, S.; Schlatter, P.; Henningson, D.S. Spectral analysis of nonlinear flows. J. Fluid Mech. 2009, 641, 115–127.

3. Mezić, I. Spectral properties of dynamical systems, model reduction and decompositions. Nonlinear Dyn. 2005, 41, 309–325.

4. Chen, K.K.; Tu, J.H.; Rowley, C.W. Variants of dynamic mode decomposition: Boundary condition, Koopman, and Fourier analyses. J. Nonlinear Sci. 2012, 22, 887–915.

5. Grosek, J.; Kutz, J.N. Dynamic Mode Decomposition for Real-Time Background/Foreground Separation in Video. arXiv 2014, arXiv:1404.7592.

6. Proctor, J.L.; Eckhoff, P.A. Discovering dynamic patterns from infectious disease data using dynamic mode decomposition. Int. Health 2015, 7, 139–145.

7. Brunton, B.W.; Johnson, L.A.; Ojemann, J.G.; Kutz, J.N. Extracting spatial–temporal coherent patterns in large-scale neural recordings using dynamic mode decomposition. J. Neurosci. Methods 2016, 258, 1–15.

8. Mann, J.; Kutz, J.N. Dynamic mode decomposition for financial trading strategies. Quant. Financ. 2016, 16, 1643–1655.

9. Cui, L.X.; Long, W. Trading Strategy Based on Dynamic Mode Decomposition: Tested in Chinese Stock Market. Phys. A Stat. Mech. Its Appl. 2016, 461, 498–508.

10. Kuttichira, D.P.; Gopalakrishnan, E.A.; Menon, V.K.; Soman, K.P. Stock price prediction using dynamic mode decomposition. In Proceedings of the 2017 International Conference on Advances in Computing, Communications and Informatics (ICACCI), Udupi, India, 13–16 September 2017; pp. 55–60.

11. Berger, E.; Sastuba, M.; Vogt, D.; Jung, B.; Ben Amor, H. Estimation of perturbations in robotic behavior using dynamic mode decomposition. J. Adv. Robot. 2015, 29, 331–343.

12. Schmid, P.J. Dynamic mode decomposition of numerical and experimental data. J. Fluid Mech. 2010, 656, 5–28.

13. Seena, A.; Sung, H.J. Dynamic mode decomposition of turbulent cavity flows for self-sustained oscillations. Int. J. Heat Fluid Flow 2011, 32, 1098–1110.

14. Schmid, P.J. Application of the dynamic mode decomposition to experimental data. Exp. Fluids 2011, 50, 1123–1130.

15. Mezić, I. Analysis of fluid flows via spectral properties of the Koopman operator. Annu. Rev. Fluid Mech. 2013, 45, 357–378.

16. Tu, J.H.; Rowley, C.W.; Luchtenburg, D.M.; Brunton, S.L.; Kutz, J.N. On dynamic mode decomposition: Theory and applications. J. Comput. Dyn. 2014, 1, 391–421.

17. Kutz, J.N.; Brunton, S.L.; Brunton, B.W.; Proctor, J.L. Dynamic Mode Decomposition: Data-Driven Modeling of Complex Systems; Society for Industrial and Applied Mathematics: Philadelphia, PA, USA, 2016; pp. 1–234. ISBN 978-1-611-97449-2.

18. Bai, Z.; Kaiser, E.; Proctor, J.L.; Kutz, J.N.; Brunton, S.L. Dynamic Mode Decomposition for Compressive System Identification. AIAA J. 2020, 58, 561–574.

19. Le Clainche, S.; Vega, J.M.; Soria, J. Higher order dynamic mode decomposition of noisy experimental data: The flow structure of a zero-net-mass-flux jet. Exp. Therm. Fluid Sci. 2017, 88, 336–353.

20. Anantharamu, S.; Mahesh, K. A parallel and streaming Dynamic Mode Decomposition algorithm with finite precision error analysis for large data. J. Comput. Phys. 2019, 380, 355–377.

21. Sayadi, T.; Schmid, P.J. Parallel data-driven decomposition algorithm for large-scale datasets: With application to transitional boundary layers. Theor. Comput. Fluid Dyn. 2016, 30, 415–428.

22. Maryada, K.R.; Norris, S.E. Reduced-communication parallel dynamic mode decomposition. J. Comput. Sci. 2022, 61, 101599.

23. Li, B.; Garicano-Mena, J.; Valero, E. A dynamic mode decomposition technique for the analysis of non–uniformly sampled flow data. J. Comput. Phys. 2022, 468, 111495.

24. Smith, E.; Variansyah, I.; McClarren, R. Variable Dynamic Mode Decomposition for Estimating Time Eigenvalues in Nuclear Systems. arXiv 2022, arXiv:2208.10942.

25. Jovanović, M.R.; Schmid, P.J.; Nichols, J.W. Sparsity-promoting dynamic mode decomposition. Phys. Fluids 2014, 26, 024103.

26. Guéniat, F.; Mathelin, L.; Pastur, L.R. A dynamic mode decomposition approach for large and arbitrarily sampled systems. Phys. Fluids 2015, 27, 025113.

27. Cassamo, N.; van Wingerden, J.W. On the Potential of Reduced Order Models for Wind Farm Control: A Koopman Dynamic Mode Decomposition Approach. Energies 2020, 13, 6513.

28. Ngo, T.T.; Nguyen, V.; Pham, X.Q.; Hossain, M.A.; Huh, E.N. Motion Saliency Detection for Surveillance Systems Using Streaming Dynamic Mode Decomposition. Symmetry 2020, 12, 1397.

29. Babalola, O.P.; Balyan, V. WiFi Fingerprinting Indoor Localization Based on Dynamic Mode Decomposition Feature Selection with Hidden Markov Model. Sensors 2021, 21, 6778.

30. Lopez-Martin, M.; Sanchez-Esguevillas, A.; Hernandez-Callejo, L.; Arribas, J.I.; Carro, B. Novel Data-Driven Models Applied to Short-Term Electric Load Forecasting. Appl. Sci. 2021, 11, 5708.

31. Surasinghe, S.; Bollt, E.M. Randomized Projection Learning Method for Dynamic Mode Decomposition. Mathematics 2021, 9, 2803.

32. Li, C.Y.; Chen, Z.; Tse, T.K.; Weerasuriya, A.U.; Zhang, X.; Fu, Y.; Lin, X. A parametric and feasibility study for data sampling of the dynamic mode decomposition: Range, resolution, and universal convergence states. Nonlinear Dyn. 2022, 107, 3683–3707.

33. Mezic, I. On Numerical Approximations of the Koopman Operator. Mathematics 2022, 10, 1180.

34. Trefethen, L.; Bau, D. Numerical Linear Algebra; Society for Industrial and Applied Mathematics: Philadelphia, PA, USA, 1997.

35. Golub, G.H.; Van Loan, C.F. Matrix Computations, 3rd ed.; The Johns Hopkins University Press: Baltimore, MD, USA, 1996.

36. Lancaster, P.; Tismenetsky, M. The Theory of Matrices; Academic Press Inc.: San Diego, CA, USA, 1985.

37. Nedzhibov, G. Dynamic Mode Decomposition: A new approach for computing the DMD modes and eigenvalues. Ann. Acad. Rom. Sci. Ser. Math. Appl. 2022, 14, 5–16.

38. Golub, G.H.; Van Loan, C.F. Matrix Computations; JHU Press: Baltimore, MD, USA, 2012; Volume 3.

39. Bagheri, S. Koopman-mode decomposition of the cylinder wake. J. Fluid Mech. 2013, 726, 596–623.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).