Precision Calibration of Omnidirectional Camera Using a Statistical Approach

Abstract

1. Introduction

2. Methodology

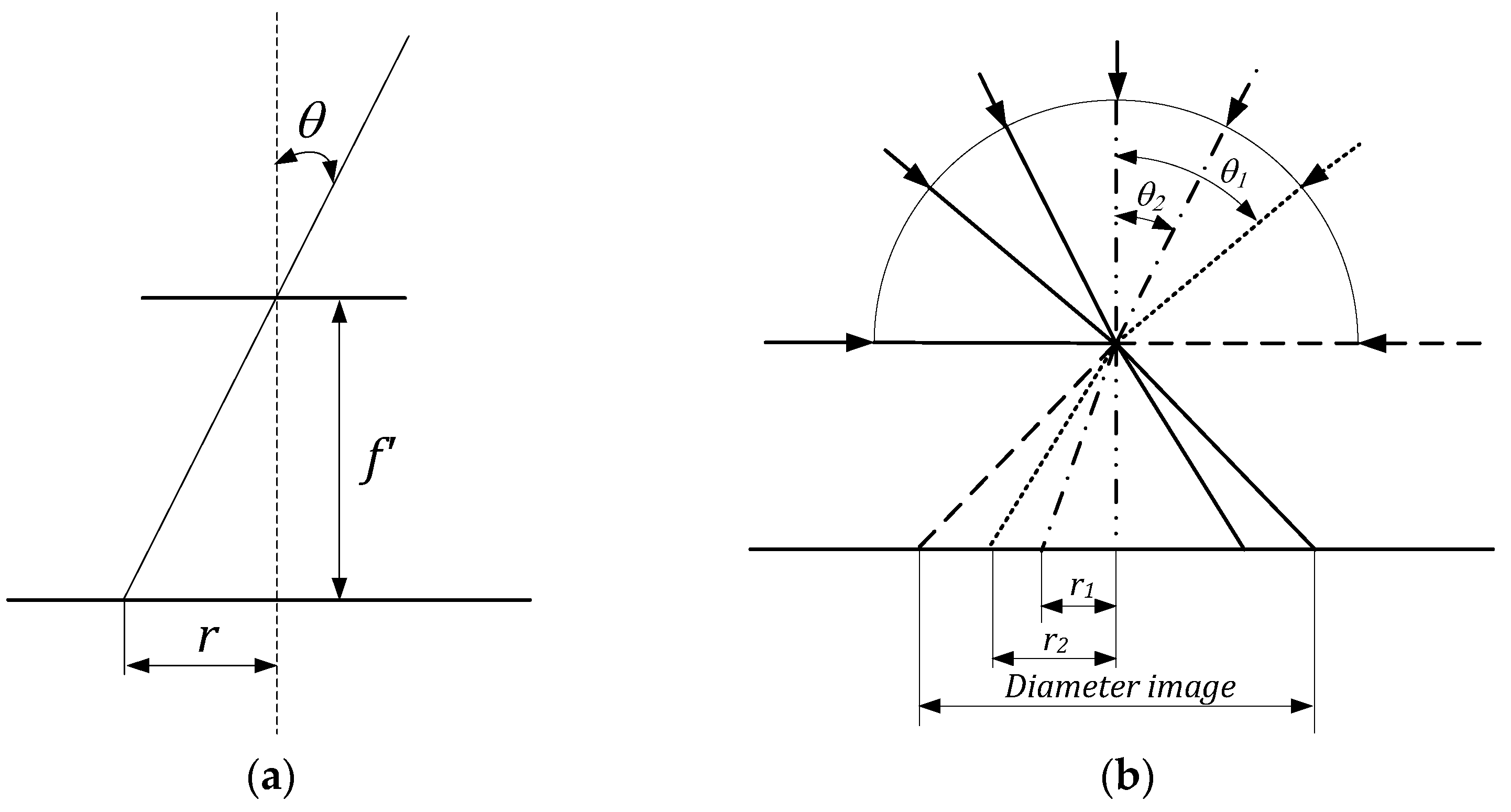

2.1. The Perspective Geometric Model

- Optical systems with super-wide fisheye lenses whose view angle is no less than 180°, so that they capture at least a hemisphere of the surrounding space.

- Mirror-lens (catadioptric) optical systems: cameras with a conventional lens fitted with a mirror attachment that has rotational symmetry. The shape of the mirror surface can vary from conical to ellipsoidal.

- Multi-camera systems, whose large field of view is achieved using several cameras with overlapping fields of view.

- It should work with omnidirectional cameras with a fisheye lens, as well as with catadioptric optical systems.

- The calibration process should be accessible to users without special training and should not require special equipment.

2.2. Methods for Calibration of Omnidirectional Optical Systems

- Stereographic for .

- Perspective for .

- Fisheye for .

- Linear transformation to the camera coordinate system from the world coordinate system.

- Nonlinear transformation from the camera coordinate system to the image plane. A Taylor polynomial is used to approximate the fisheye lens model [7].
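The two steps listed above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: this section does not give the exact form of the Taylor polynomial, so the sketch assumes a commonly used formulation in which an image point at radius ρ from the image center corresponds to the ray (u, v, f(ρ)) with f(ρ) = a0 + a1·ρ + a2·ρ² + ... The function names, the coefficient ordering, and the sample values are hypothetical.

```python
import numpy as np

def world_to_camera(points_world, R, t):
    """Linear step: rigid transform of N x 3 world points into the camera frame."""
    return np.asarray(points_world, dtype=float) @ np.asarray(R).T + np.asarray(t)

def camera_to_image(points_cam, coeffs, center):
    """Nonlinear step (assumed model): a camera-frame point (x, y, z) lies on the
    ray (u, v, f(rho)), where rho = sqrt(u^2 + v^2) and
    f(rho) = coeffs[0] + coeffs[1]*rho + coeffs[2]*rho^2 + ...
    """
    pixels = []
    for x, y, z in np.asarray(points_cam, dtype=float):
        r = np.hypot(x, y)
        if r < 1e-12:
            # A point on the optical axis maps to the image center.
            pixels.append((center[0], center[1]))
            continue
        # The ray condition f(rho) / rho = z / r gives the polynomial equation
        # f(rho) - (z / r) * rho = 0, solved here for rho.
        poly = np.array(coeffs, dtype=float)   # ascending powers of rho
        poly[1] -= z / r
        roots = np.roots(poly[::-1])           # np.roots expects descending powers
        real = roots[np.abs(roots.imag) < 1e-9].real
        positive = real[real > 0]
        if positive.size == 0:
            pixels.append((np.nan, np.nan))    # point is outside the modeled field of view
            continue
        rho = positive.min()                   # smallest positive radius
        pixels.append((center[0] + rho * x / r, center[1] + rho * y / r))
    return np.array(pixels)

# Hypothetical values, chosen only to exercise the functions.
coeffs = [100.0, 0.0, -0.01]
center = (320.0, 240.0)
points = world_to_camera([[2.0, 0.0, 3.0]], np.eye(3), np.zeros(3))
print(camera_to_image(points, coeffs, center))
```

Finding ρ requires solving a polynomial equation, so the forward projection is more expensive than the reverse mapping from a pixel to its ray, which only needs one evaluation of f(ρ).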

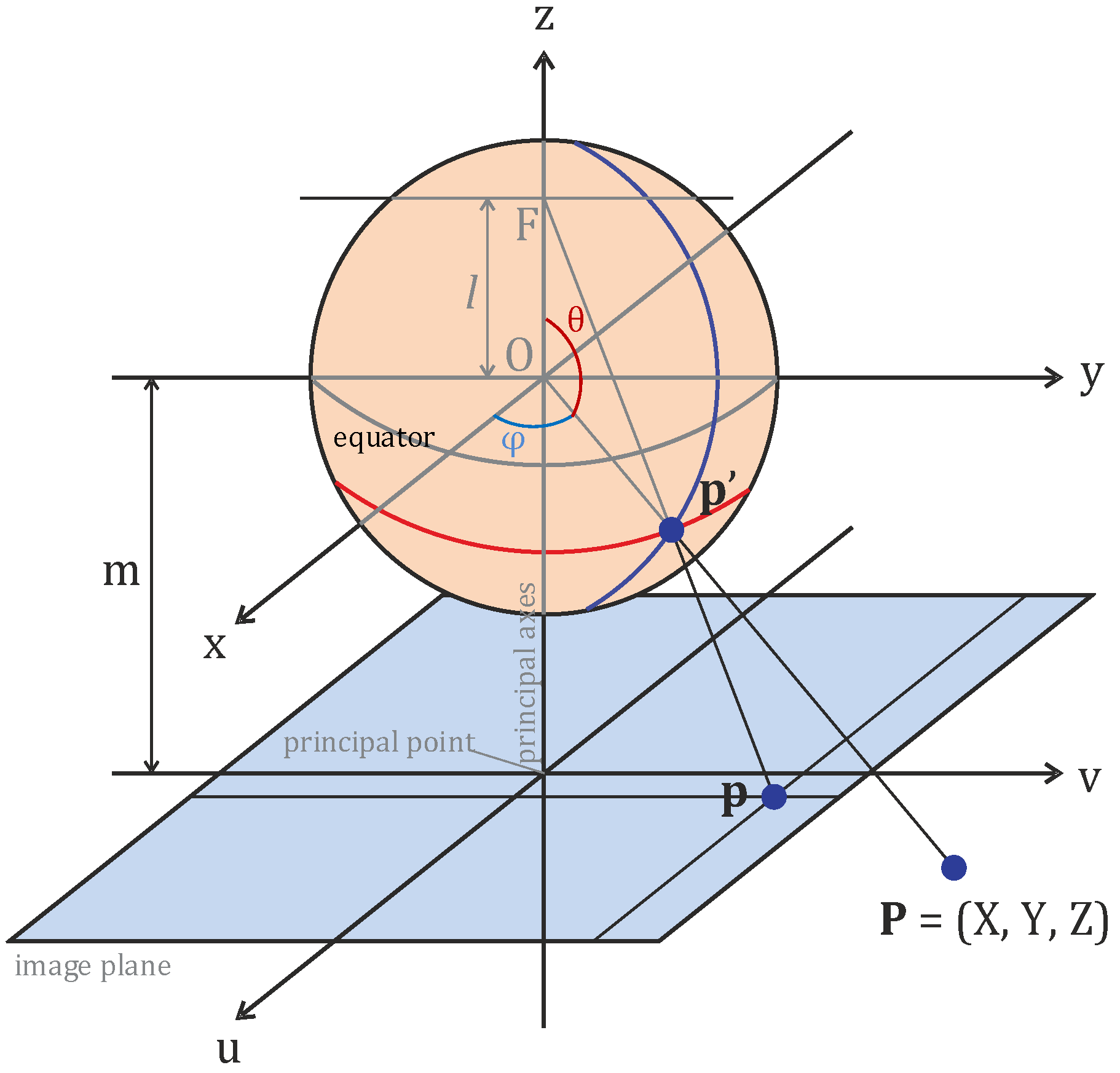

3. Proposed Geometric Projection Approach for Omnidirectional Optical System Calibration

- The catadioptric camera is a centered optical system; therefore, there is a point at which all the reflected rays intersect. This point, [0,0,0], is the origin of the coordinate system.

- The focal plane of the optical system has to coincide with the plane of the image sensor; only minor deviations are permissible.

- The mirror has rotational symmetry about the optical axis.

- Lens distortion is not considered in the model: the mirror used in an omnidirectional camera requires a lens with a long focal length, so the distortion of that lens can be neglected. In the case of a fisheye lens, however, the distortion must be included in the calculated projection function.

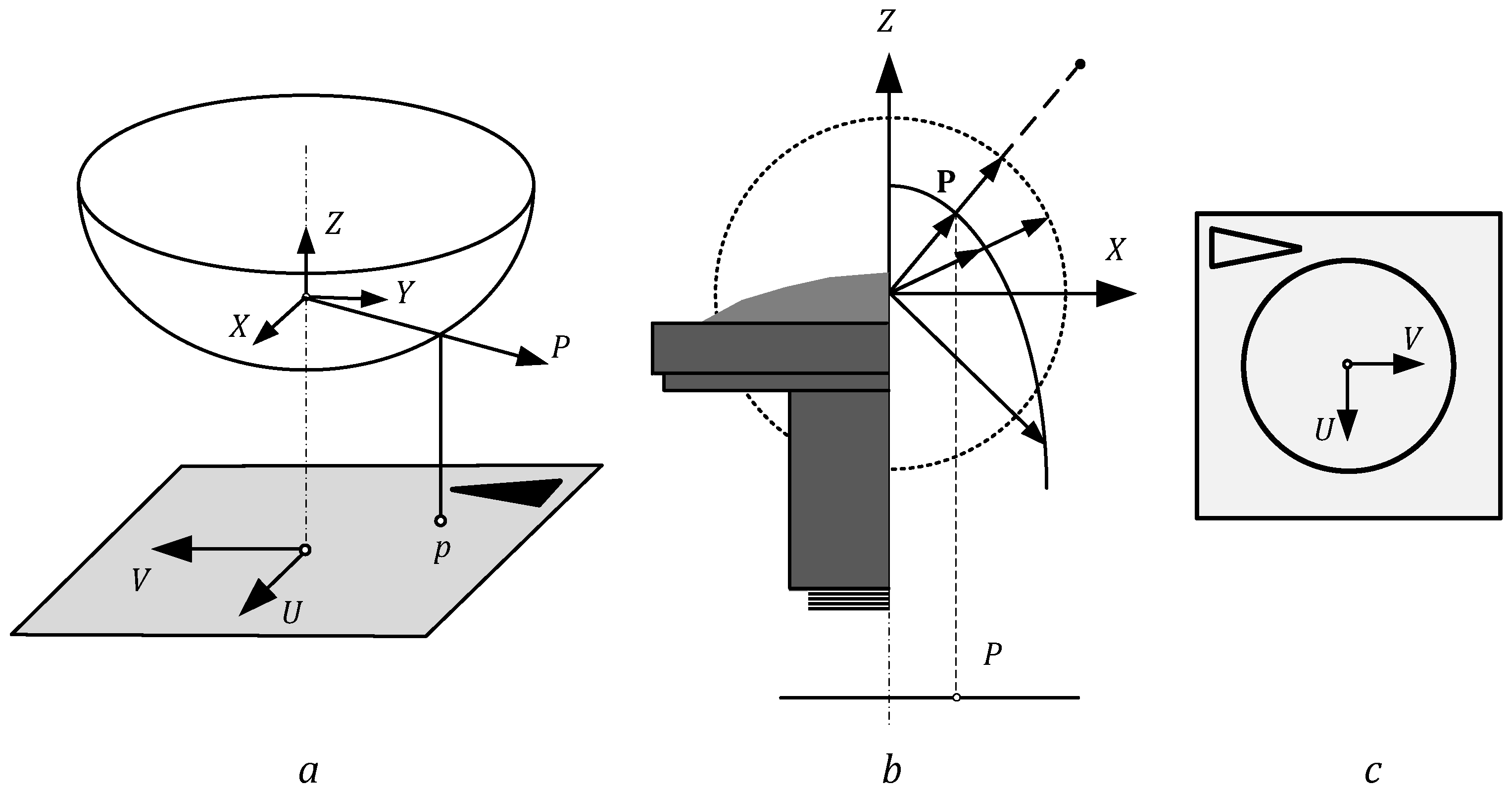

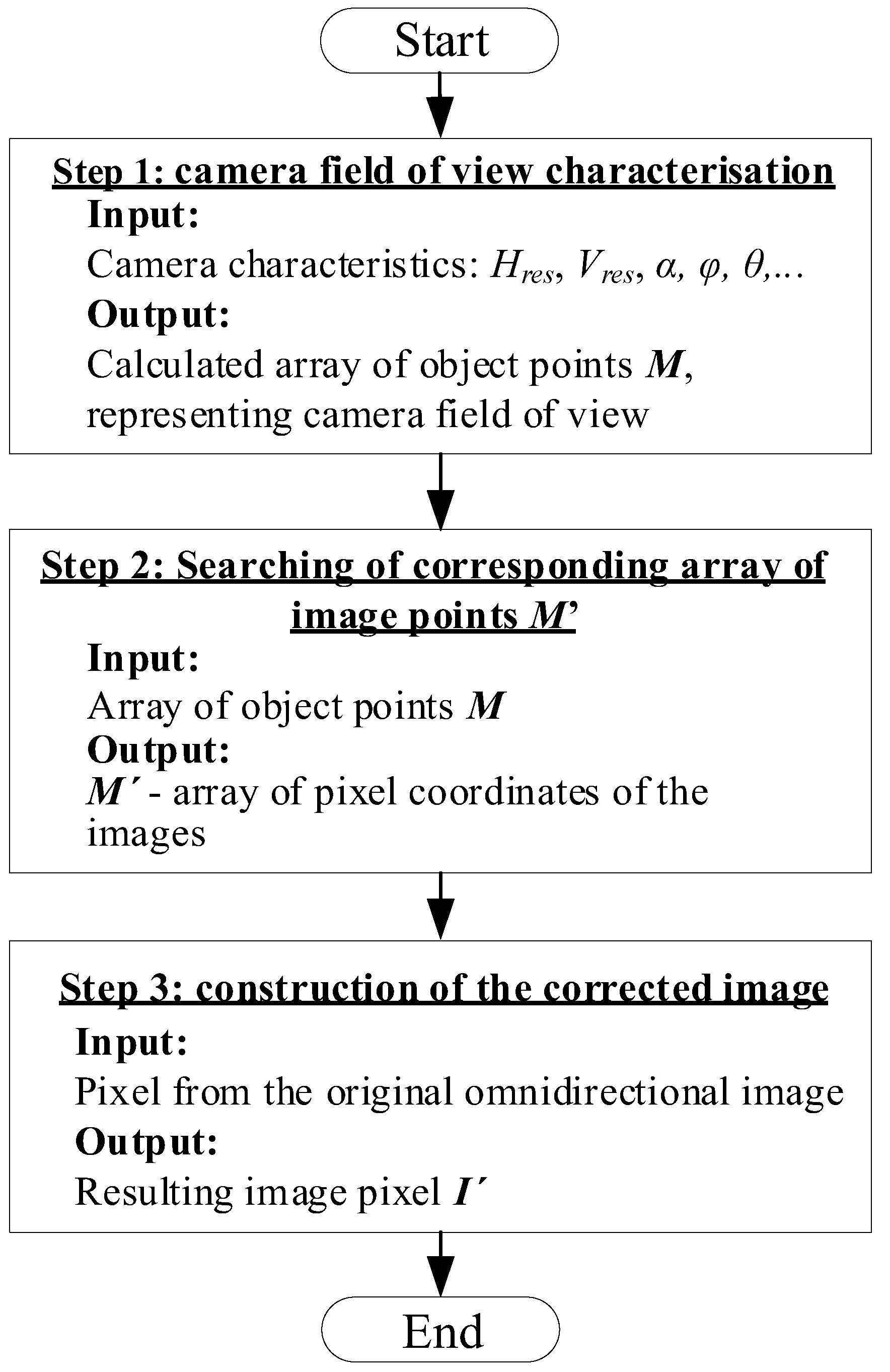

4. Algorithm of Image Conversion for Omnidirectional Optoelectronic Systems

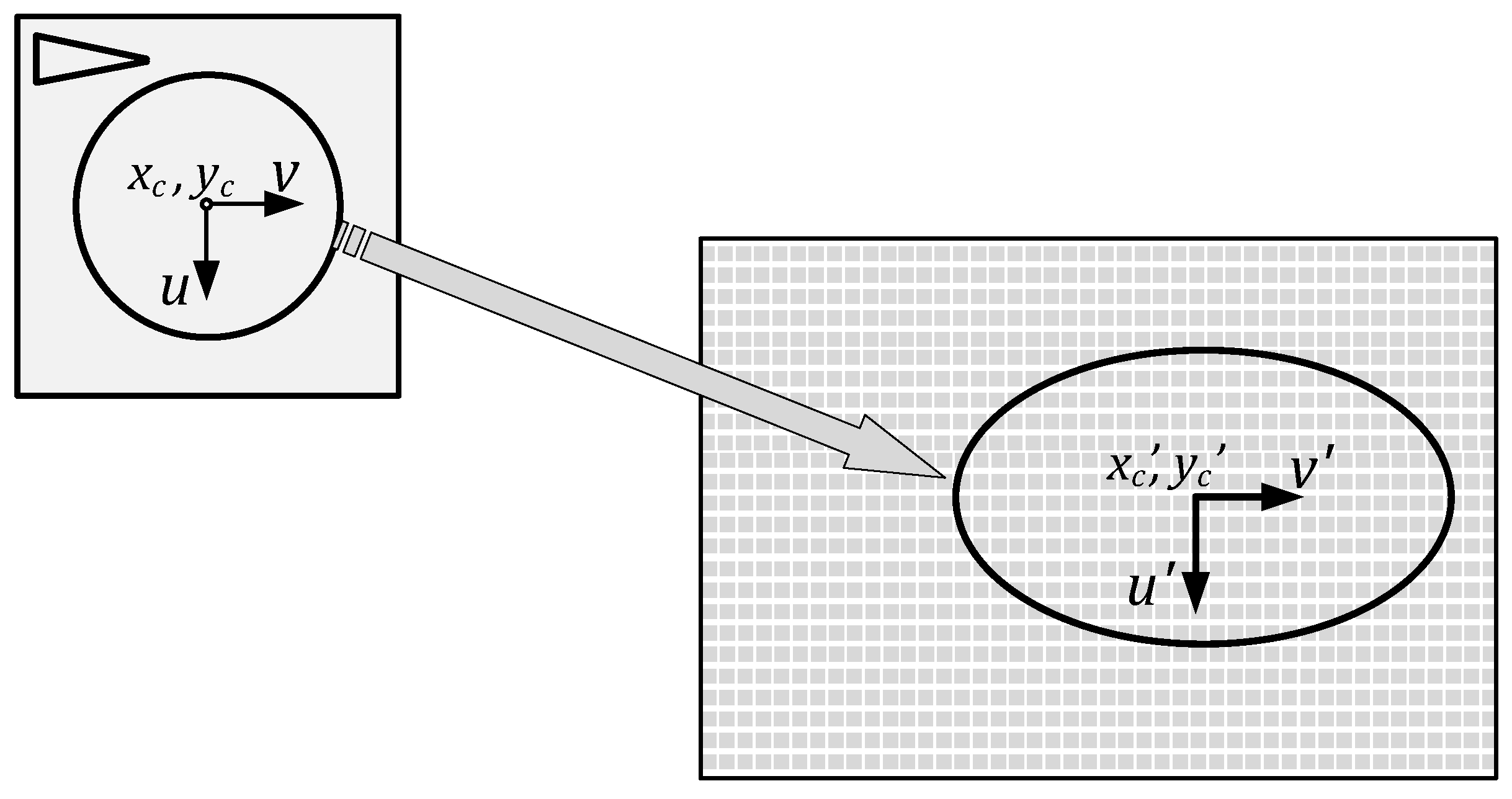

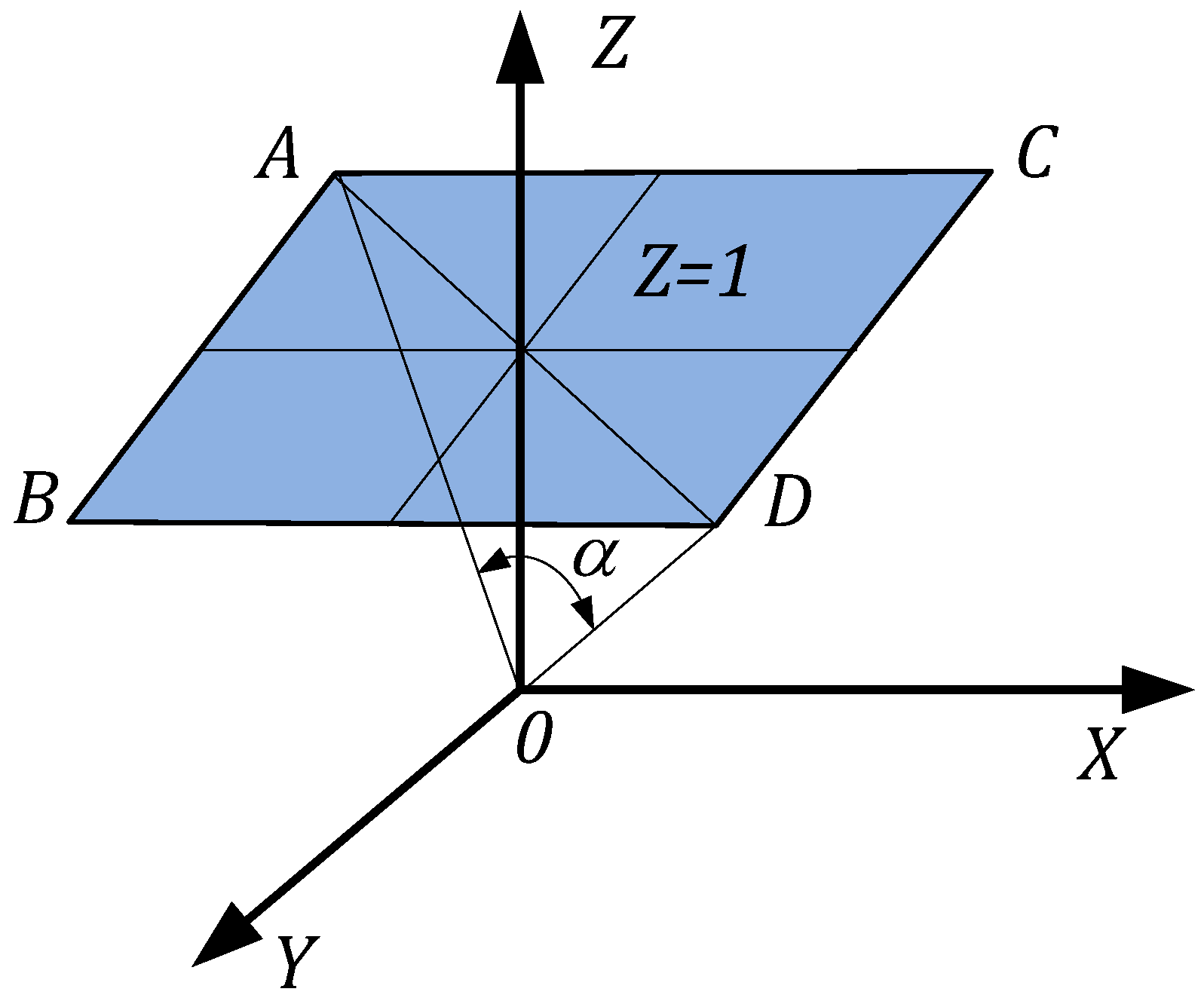

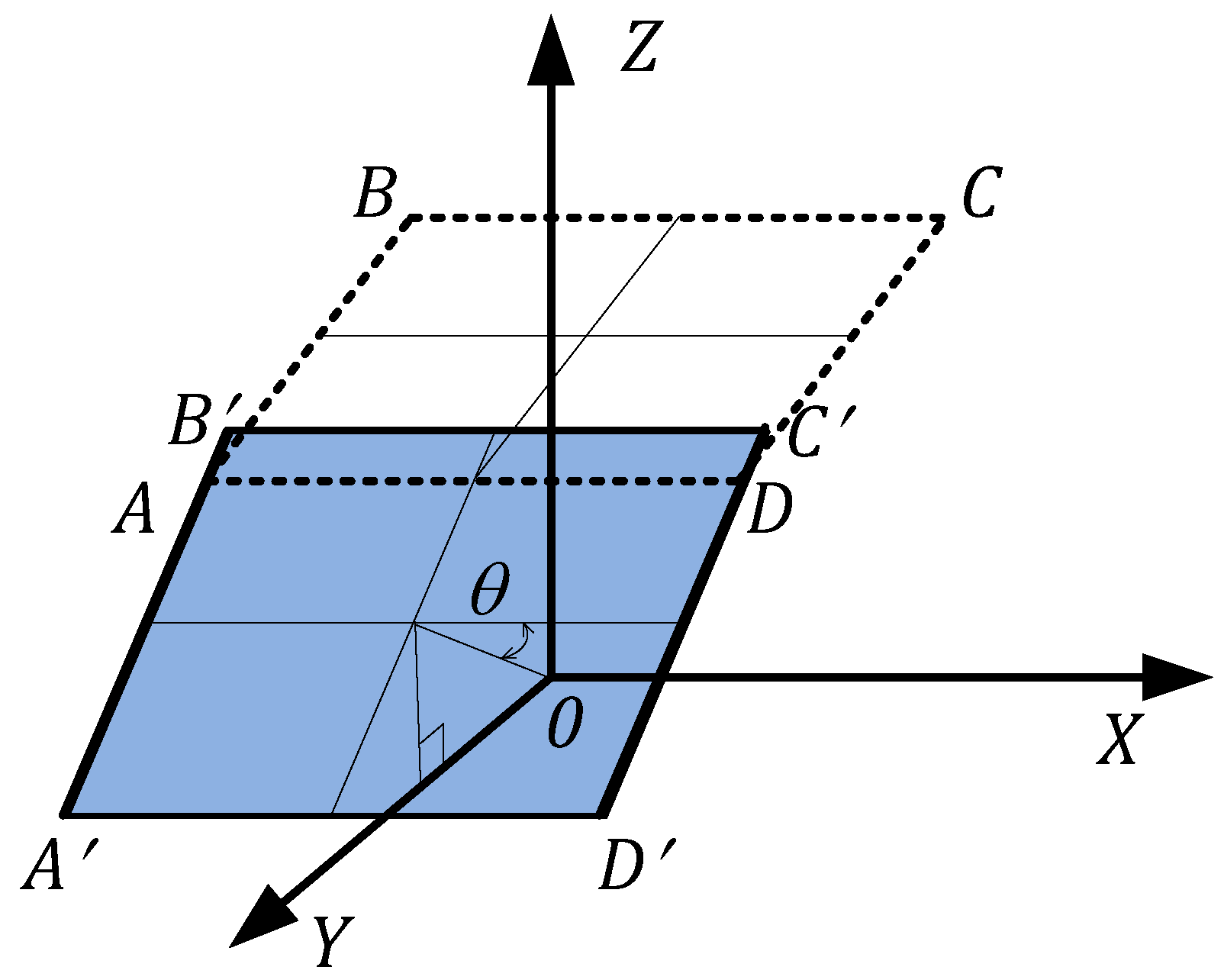

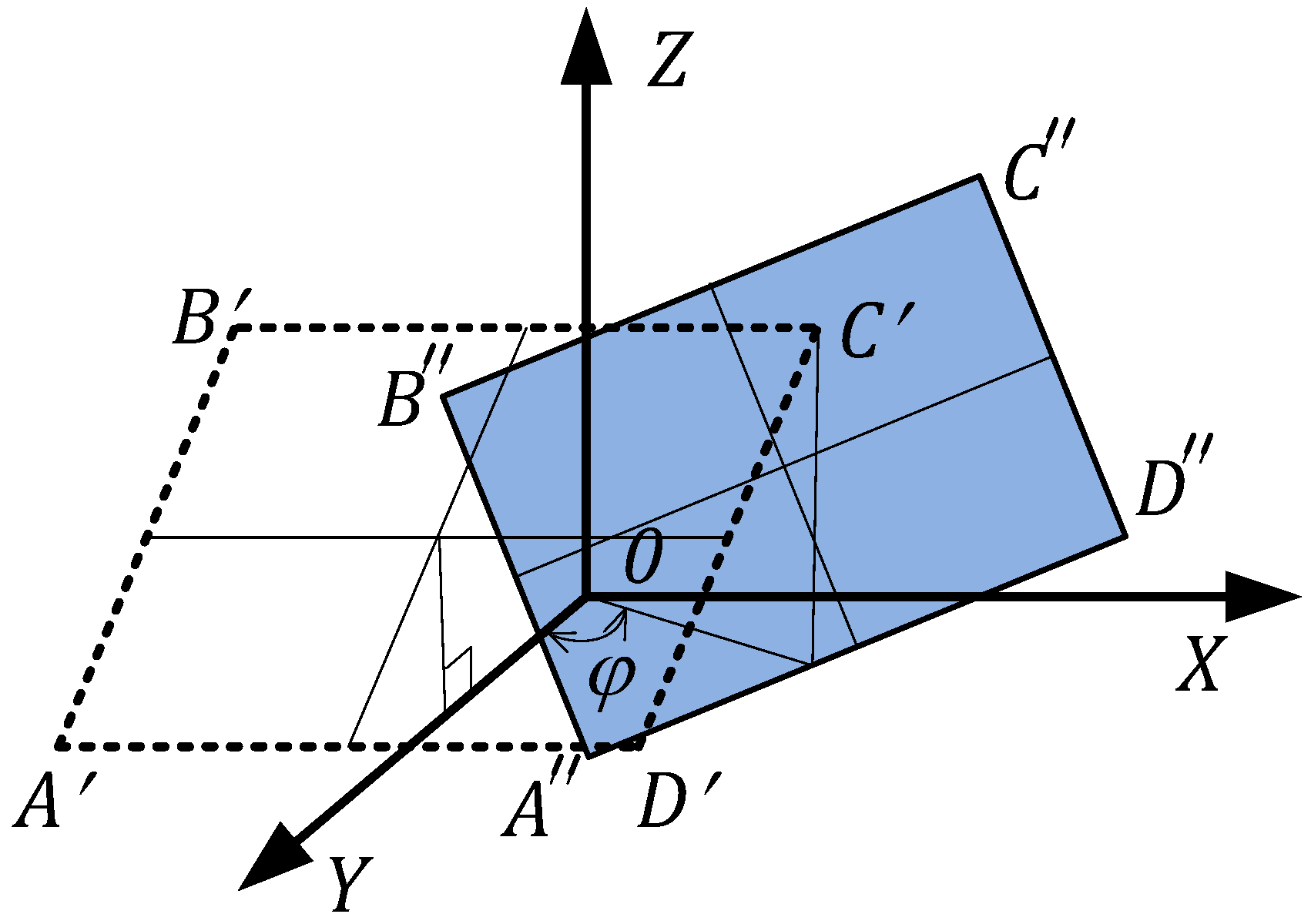

4.1. The First Stage: Formation of a Cloud of Points Characterizing the Field of View of the Virtual Perspective Camera

4.2. The Second Stage: Search for the Coordinates of the Point Images

4.3. The Third Stage: Construction of the Corrected Image
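The three stages named in Sections 4.1 to 4.3 can be sketched as follows. This is an illustrative outline only: the projection function used in the second stage is an equidistant fisheye model (ρ = k·θ) chosen as a stand-in, not the calibrated projection function of the paper, and all function names and parameter values are hypothetical.

```python
import numpy as np

def virtual_camera_points(width, height, fov_deg):
    """Stage 1: one 3D point per output pixel, on the plane z = 1 of a virtual
    perspective camera with the given horizontal field of view."""
    f = (width / 2) / np.tan(np.radians(fov_deg) / 2)   # focal length in pixels
    u, v = np.meshgrid(np.arange(width) - (width - 1) / 2,
                       np.arange(height) - (height - 1) / 2)
    return np.stack([u / f, v / f, np.ones_like(u)], axis=-1)   # H x W x 3

def project_equidistant(points, center, pixels_per_radian):
    """Stage 2: coordinates of the point images in the source image, using the
    assumed stand-in model rho = pixels_per_radian * theta."""
    x, y, z = points[..., 0], points[..., 1], points[..., 2]
    r = np.hypot(x, y)
    theta = np.arctan2(r, z)                 # angle from the optical axis
    rho = pixels_per_radian * theta
    scale = np.divide(rho, r, out=np.zeros_like(r), where=r > 0)
    return center[0] + scale * x, center[1] + scale * y

def build_corrected_image(source, map_x, map_y):
    """Stage 3: nearest-neighbor sampling of the source image; output pixels
    whose source coordinates fall outside the image stay zero."""
    h, w = source.shape[:2]
    xi = np.rint(map_x).astype(int)
    yi = np.rint(map_y).astype(int)
    valid = (xi >= 0) & (xi < w) & (yi >= 0) & (yi < h)
    out = np.zeros(map_x.shape + source.shape[2:], dtype=source.dtype)
    out[valid] = source[yi[valid], xi[valid]]
    return out

# Hypothetical usage with a synthetic 101 x 101 source image.
source = np.arange(101 * 101, dtype=np.int64).reshape(101, 101)
cloud = virtual_camera_points(width=11, height=11, fov_deg=60.0)
map_x, map_y = project_equidistant(cloud, center=(50.0, 50.0), pixels_per_radian=100.0)
corrected = build_corrected_image(source, map_x, map_y)
```

Nearest-neighbor sampling keeps the sketch short; an interpolating lookup would normally give a smoother corrected image. Because the two coordinate maps depend only on the camera model and the virtual view, they can be computed once and reused for every frame.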

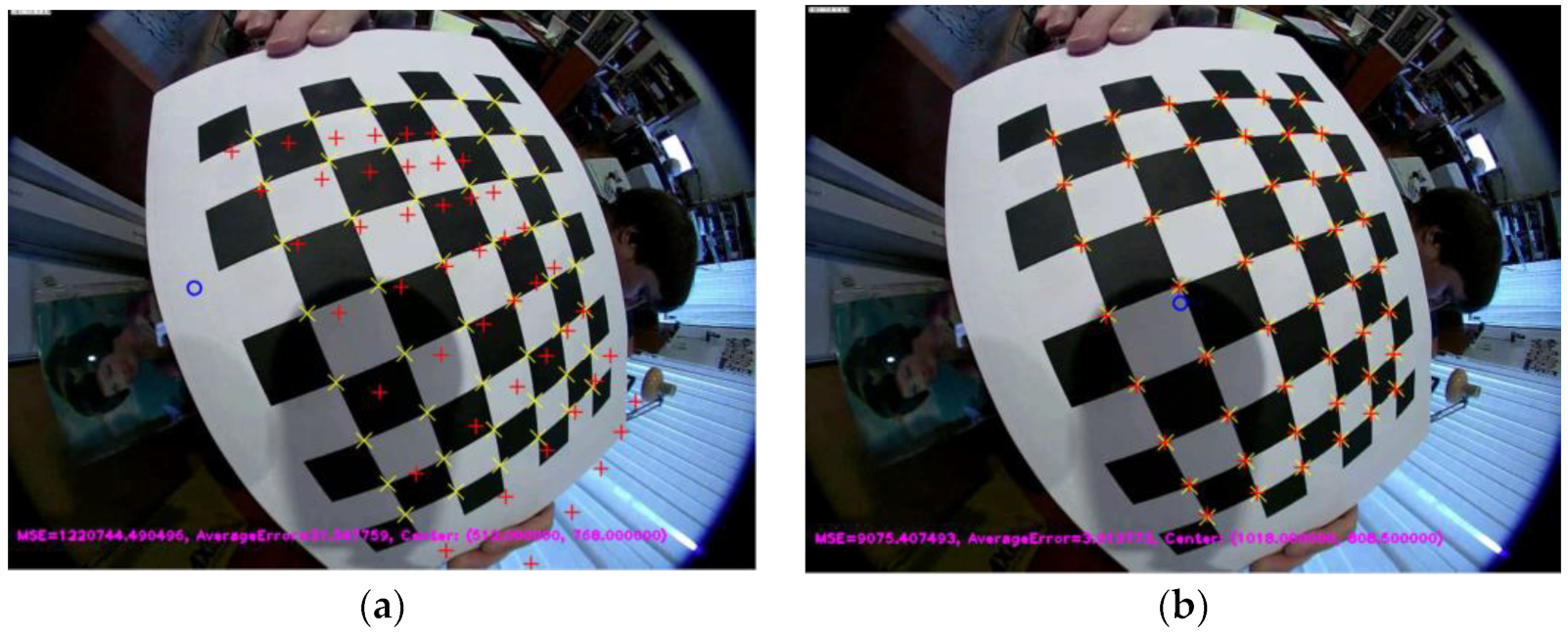

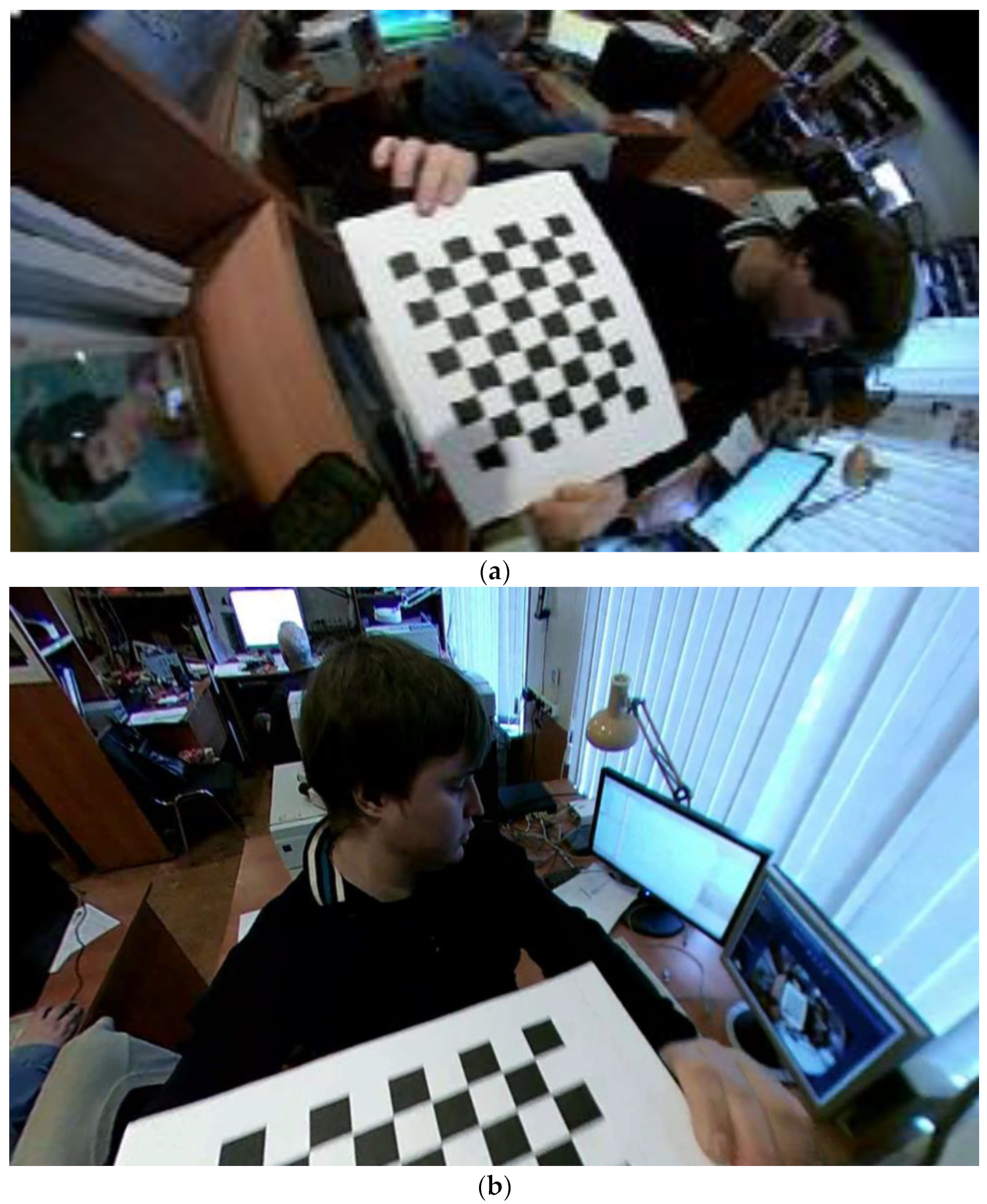

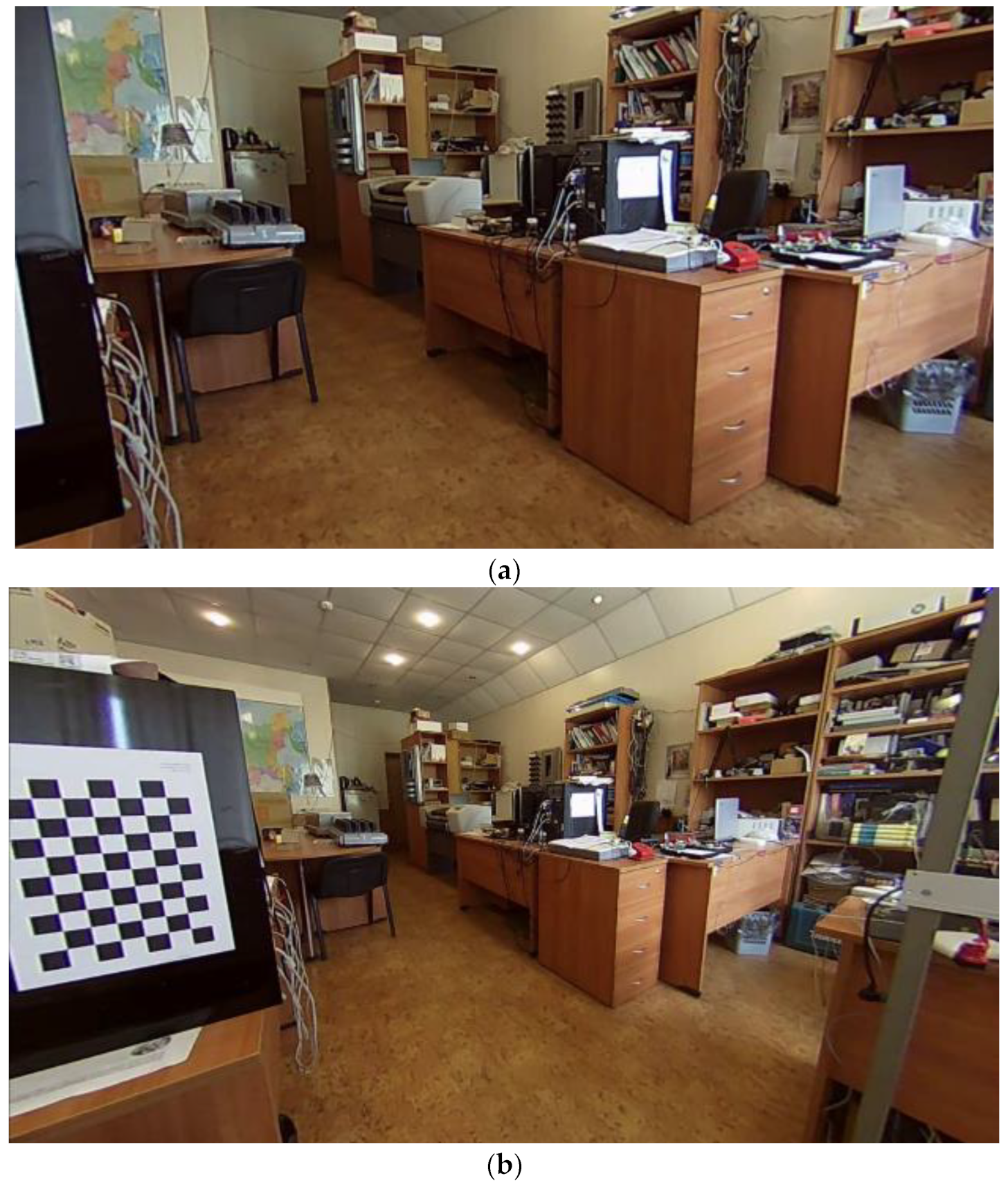

5. Experimental Results

- center of the circular image (pixel) coordinates:

- standard deviation of re-projection (pixel):

- affine coefficients:

- polynomial coefficients:

- input image size ;

- center of the circular image (pixel) coordinates: ;

- standard deviation of re-projection (pixel): 2.476;

- average error = 2.476 pixel;

- polynomial coefficients:
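The re-projection figures listed above summarize the distances between detected calibration points and their re-projected positions. A minimal sketch of how such statistics can be computed is given below; it assumes Euclidean pixel distances and a population standard deviation, which may differ from the exact definitions used by the authors, and the function name and sample points are hypothetical.

```python
import numpy as np

def reprojection_stats(detected, reprojected):
    """Return (mean error, standard deviation, RMS error) in pixels for two
    N x 2 arrays of corresponding image points."""
    detected = np.asarray(detected, dtype=float)
    reprojected = np.asarray(reprojected, dtype=float)
    errors = np.linalg.norm(detected - reprojected, axis=1)   # per-point distance
    return errors.mean(), errors.std(), np.sqrt(np.mean(errors ** 2))

# Hypothetical points, for illustration only.
mean_err, std_err, rms_err = reprojection_stats([[0.0, 0.0], [3.0, 4.0]],
                                                [[0.0, 0.0], [0.0, 0.0]])
print(mean_err, std_err, rms_err)
```

Reporting the mean and the spread together helps distinguish a systematic offset from a few poorly detected points.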

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Yarishev, S.; Konyahin, I.; Timofeev, A. Universal optoelectronic measuring modules in distributed measuring systems. In Proceedings of the Fifth International Symposium on Instrumentation Science and Technology, Shenyang, China, 12 January 2009; SPIE—The International Society for Optical Engineering: Bellingham, WA, USA, 2009.

- Konyahin, I.; Timofeev, A.; Yarishev, S. High precision angular and linear measurements using universal optoelectronic measuring modules in distributed measuring systems. Key Eng. Mater. 2010, 437, 160–164.

- Korotaev, V.; Konyahin, I.; Timofeev, A.; Yarishev, S. High precision multimatrix optic-electronic modules for distributed measuring systems. In Proceedings of the Sixth International Symposium on Precision Engineering Measurements and Instrumentation, Hangzhou, China, 28 December 2010; SPIE—The International Society for Optical Engineering: Bellingham, WA, USA, 2010.

- Berenguer, Y.; Payá, L.; Valiente, D.; Peidró, A.; Reinoso, O. Relative Altitude Estimation Using Omnidirectional Imaging and Holistic Descriptors. Remote Sens. 2019, 11, 323.

- Caruso, D.; Engel, J.; Cremers, D. Large-scale direct slam for omnidirectional cameras. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015.

- Garcia-Fidalgo, E.; Ortiz, A. Vision-based topological mapping and localization methods: A survey. Robot. Auton. Syst. 2014, 64, 1–20.

- Corke, P. Robotics, Vision and Control: Fundamental Algorithms in MATLAB, 2nd ed.; Springer International Publishing AG: Cham, Switzerland, 2017.

- Valiente, D.; Payá, L.; Jiménez, L.M.; Sebastián, J.M.; Reinoso, O. Visual Information Fusion through Bayesian Inference for Adaptive Probability-Oriented Feature Matching. Sensors 2018, 18, 2041.

- Oriolo, G.; Paolillo, A.; Rosa, L.; Vendittelli, M. Humanoid odometric localization integrating kinematic, inertial and visual information. Auton. Robot. 2016, 40, 867–887.

- Puig, L.; Guerrero, J. Scale-space for central catadioptric systems: Towards a generic camera feature extractor. In Proceedings of the 2011 IEEE International Conference on Computer Vision (ICCV), Barcelona, Spain, 12 January 2012.

- Wong, W.K.; Fo, J.S.-T. Omnidirectional Surveillance System Featuring Trespasser and Faint Detection for i-Habit. Int. J. Pattern Recognit. Artif. Intell. 2015, 29, 1559012.

- Morel, J.-M.; Yu, G. ASIFT: A New Framework for Fully Affine Invariant Image Comparison. SIAM J. Imaging Sci. 2009, 2, 438–469.

- Dimitrov, K.; Shterev, V.; Valkovski, T. Low-cost system for recognizing people through infrared arrays in smart home systems. In Proceedings of the 2020 XXIX International Scientific Conference Electronics (ET), Sozopol, Bulgaria, 16–18 September 2020.

- Onoe, Y.; Yokoya, N.; Yamazawa, K.T.H. Visual surveillance and monitoring system using an omnidirectional video camera. In Proceedings of the Fourteenth International Conference on Pattern Recognition (Cat. No.98EX170), Brisbane, QLD, Australia, 20 August 1998.

- Shah, S.; Aggarwal, J. A simple calibration procedure for fish-eye (high distortion) lens camera. In Proceedings of the 1994 IEEE International Conference on Robotics and Automation, San Diego, CA, USA, 8–13 May 1994.

- Nayar, S.; Peri, V. Folded catadioptric cameras. In Proceedings of the 1999 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (Cat. No PR00149), Fort Collins, CO, USA, 23–25 June 1999.

- Ng, K.C.; Ishiguro, H.; Trivedi, M.; Sogo, T. An integrated surveillance system—Human tracking and view synthesis using multiple omni-directional vision sensors. Image Vis. Comput. 2004, 22, 551–561.

- Wong, W.; Liew, J.; Loo, C. Omnidirectional surveillance system for digital home security. In Proceedings of the 2009 International Conference on Signal Acquisition and Processing, Kuala Lumpur, Malaysia, 3–5 April 2009.

- Lorenz, E.; Hurka, J.; Heinemann, D.; Beyer, H.G. Irradiance Forecasting for the Power Prediction of Grid-Connected Photovoltaic Systems. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2009, 2, 2–10.

- Marquez, R.; Gueorguiev, V.G.; Coimbra, C.F.M. Forecasting of Global Horizontal Irradiance Using Sky Cover Indices. J. Sol. Energy Eng. 2012, 135, 011017.

- Ghonima, M.S.; Urquhart, B.; Chow, C.W.; Shields, J.E.; Cazorla, A.; Kleissl, J. A method for cloud detection and opacity classification based on ground-based sky imagery. Atmospheric Meas. Technol. 2012, 5, 2881–2892.

- Hensel, S.; Marinov, M. Comparison of Algorithms for Short-term Cloud Coverage Prediction. In Proceedings of the 2018 IX National Conference with International Participation (ELECTRONICA), Sofia, Bulgaria, 17–18 May 2018.

- Rusinov, M. Technical Optics; Librocom: Saint-Petersburg, Russia, 2011.

- Lazarenko, V.; Yarishev, S. The algorithm for transforming a hemispherical field-of-view image. In Proceedings of the 3rd International Meeting on Optical Sensing and Artificial Vision, OSAV’2012, St. Petersburg, Russia, 2012.

- Schwalbe, E. Geometric modeling and calibration of fisheye lens camera systems. In Proceedings of the Panoramic Photogrammetry Workshop, Berlin, Germany, 24–25 February 2005.

- Tsudikov, M. Reduction of the Image from the Type Chamber “Fisheye” to the Standard Television; Tula State University: Tula, Russia, 2011; pp. 232–237.

- Hensel, S.; Marinov, M.B.; Schwarz, R. Fisheye Camera Calibration and Distortion Correction for Ground-Based Sky Imagery. In Proceedings of the XXVII International Scientific Conference Electronics—ET2018, Sozopol, Bulgaria, 13–15 September 2018.

- Mei, C.; Rives, P. Single viewpoint omnidirectional camera calibration from planar grids. In Proceedings of the IEEE International Conference on Robotics and Automation, ICRA’07, Rome, Italy, 10–14 April 2007.

- Geyer, C.; Daniilidis, K. A Unifying Theory for Central Panoramic Systems and Practical Implications. In Computer Vision—ECCV 2000; Springer: Berlin, Heidelberg, 2000.

- Ying, X.; Hu, Z. Can We Consider Central Catadioptric Cameras and Fisheye Cameras within a Unified Imaging Model? In Computer Vision—ECCV 2004; Springer: Berlin, Heidelberg, 2004.

- Puig, L.; Bastanlar, Y.; Sturm, P.; Guerrero, J.; Barreto, J. Calibration of Central Catadioptric Cameras Using a DLT-Like Approach. Int. J. Comput. Vis. 2011, 93, 101–114.

- Scaramuzza, D.; Martinelli, A.; Siegwart, R. A flexible technique for accurate omnidirectional camera calibration and structure from motion. In Proceedings of the 4th IEEE International Conference on Computer Vision Systems, ICVS’06, New York, NY, USA, 4–7 January 2006.

- Scaramuzza, D.; Martinelli, A.; Siegwart, R. A toolbox for easily calibrating omnidirectional cameras. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, IROS 2006, Beijing, China, 9–15 October 2006.

- Juarez-Salazar, R.; Zheng, J.; Diaz-Ramirez, V.H. Distorted pinhole camera modeling and calibration. Appl. Opt. 2020, 59, 11310–11318.

- Fujinon CCTV Lens. 2018. Available online: https://www.fujifilm.com/products/optical_devices/pdf/cctv/fa/fisheye/fe185c046ha-1.pdf (accessed on 3 October 2022).

- Golushko, M.; Yaryshev, S. Optoelectronic Observing System “Typhoon” [Optiko-elektronnaya sistema nablyudeniya “Typhoon”]. Vopr. Radioelektron. 2014, 1, 38–42.

- cnAICO-Fisheye Lens/1/2. Available online: https://aico-lens.com/product/1-4mm-c-fisheye-lens-acf12fm014ircmm/ (accessed on 4 October 2022).

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lazarenko, V.P.; Korotaev, V.V.; Yaryshev, S.N.; Marinov, M.B.; Djamiykov, T.S. Precision Calibration of Omnidirectional Camera Using a Statistical Approach. Computation 2022, 10, 209. https://doi.org/10.3390/computation10120209
