Chinese Knowledge Base Question Answering by Attention-Based Multi-Granularity Model

Abstract

1. Introduction

- We propose a Chinese entity linker based on the Levenshtein Ratio. The entity linker effectively handles abbreviated, wrongly labeled, and miswritten entity mentions.

- We propose an attention-based multi-granularity interaction model (ABMGIM). Text embeddings are built at multiple granularities: a nested character-level and word-level scheme concatenates the pre-trained embedding of each character with its corresponding word-level embedding. In addition, a two-layer Bi-GRU with element-wise connections yields better hidden representations of the given question, and an attention mechanism provides a fine-grained alignment between characters for relation selection.
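The Levenshtein Ratio behind the entity linker in the first contribution can be illustrated with a short sketch. This is not the authors' implementation: it assumes one common definition of the ratio, (len(a) + len(b) - d) / (len(a) + len(b)), where d is an edit distance in which a substitution costs 2. The helper names `levenshtein_distance`, `levenshtein_ratio`, and `link_entity` are hypothetical.

```python
from typing import Iterable


def levenshtein_distance(a: str, b: str, sub_cost: int = 1) -> int:
    """Edit distance with unit insert/delete cost and a configurable substitution cost."""
    prev = list(range(len(b) + 1))  # distances from the empty prefix of a
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else sub_cost
            curr.append(min(prev[j] + 1,          # delete ca
                            curr[j - 1] + 1,      # insert cb
                            prev[j - 1] + cost))  # match or substitute
        prev = curr
    return prev[-1]


def levenshtein_ratio(a: str, b: str) -> float:
    """Similarity in [0, 1]; assumes substitution cost 2 (an assumption, see lead-in)."""
    total = len(a) + len(b)
    if total == 0:
        return 1.0
    return (total - levenshtein_distance(a, b, sub_cost=2)) / total


def link_entity(mention: str, candidates: Iterable[str]) -> str:
    """Pick the candidate entity name most similar to the detected mention."""
    return max(candidates, key=lambda name: levenshtein_ratio(mention, name))


if __name__ == "__main__":
    # An abbreviated mention is still linked to the closest knowledge-base entity.
    print(link_entity("北京大", ["北京大学", "南京大学", "清华大学"]))
```

Because the ratio is normalized by the combined length, a short abbreviation and a long entity name can still score highly when most characters are shared, which is the property an entity linker for abbreviated or miswritten mentions needs.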

2. Related Work

3. Our Approach

3.1. Task Definition

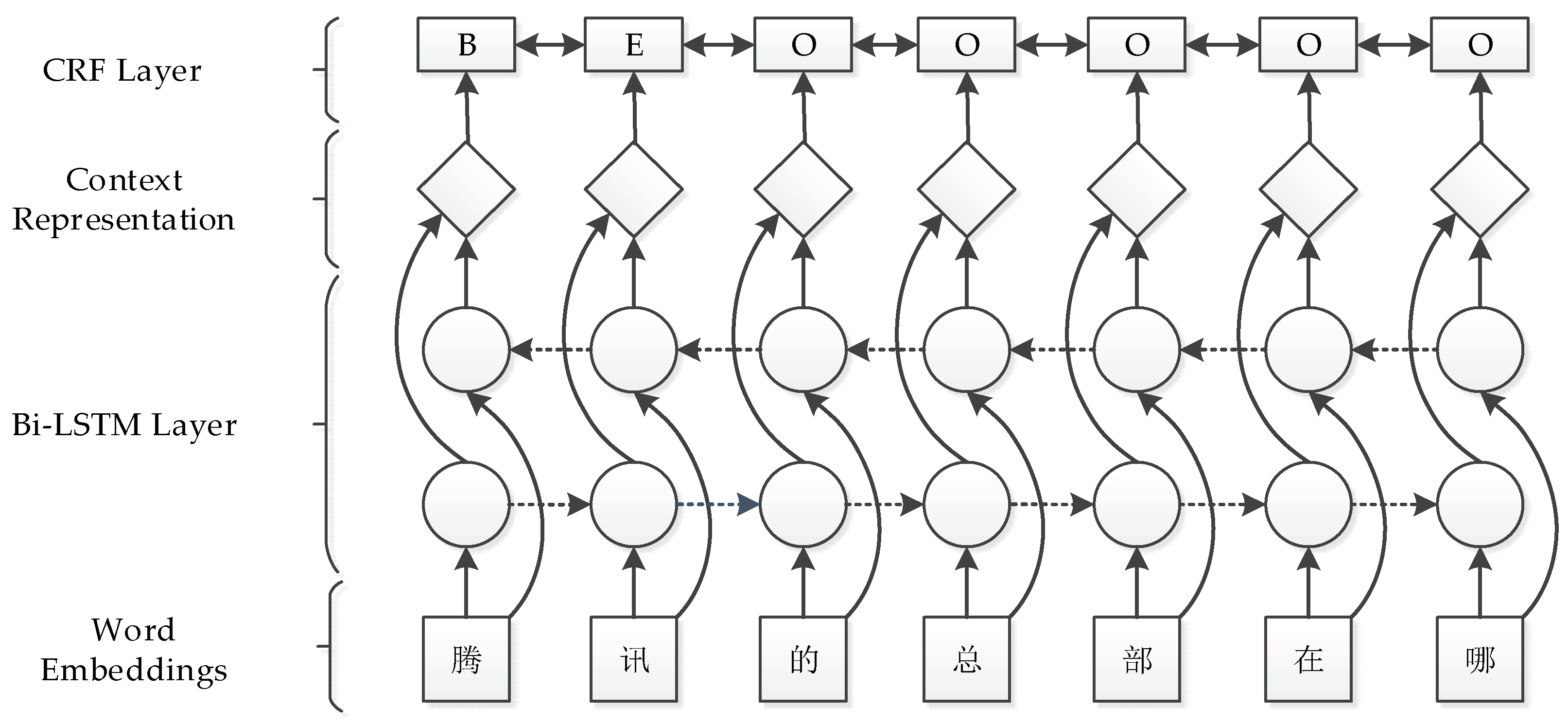

3.2. Topic Entity Extraction

3.2.1. Entity Detection Model

3.2.2. Entity Linking Model

3.3. Relation Selection

3.3.1. Embedding Layer

3.3.2. Relation Representation Layer

3.3.3. Question Representation Layer

3.3.4. Attention Layer

3.3.5. Output Layer

4. Experiments

4.1. Datasets

4.2. Training and Inference

4.3. Evaluation Metrics

4.4. Experiment Setup

4.4.1. Topic Entity Extraction Model

4.4.2. Relation Selection Model

4.5. Result

4.5.1. Topic Entity Extraction

4.5.2. Relation Selection

- Single-layer Bi-GRU question encoder: composite embeddings are still used. A single-layer Bi-GRU produces the context-aware question representation in place of our two-layer hierarchical matching framework, and the question and relation representations are merged into vectors by the attention mechanism.

- Two-layer Bi-GRUs without element-wise connections: composite embeddings are adopted. A two-layer deep Bi-GRU network still produces the hidden representation of the question, but without element-wise connections. The attention mechanism is applied to the hidden representation of the second Bi-GRU layer.

- Two single-layer Bi-GRUs with element-wise connections: we replace the deep Bi-GRU question encoder with two single-layer Bi-GRUs, with element-wise connections between their hidden states. The rest of the network, including the composite embeddings and the attention mechanism, remains the same.

- Model without attention: relations are represented with Bi-GRU layers and questions are represented with two-layer hierarchical Bi-GRUs. The semantic similarity is measured by the cosine similarity between the final hidden representations of the question and the relation.

- Replace Bi-GRU with Bi-LSTM: the Bi-GRU layers for the question and the relation are replaced with Bi-LSTM layers; all other structures remain the same.

- Replace Bi-GRU with CNN: unlike a GRU, which depends on the computation of the previous time step, a CNN can process every element of a sequence in parallel, so it can make full use of the parallel architecture of GPUs. We study the performance of a fully convolutional network on relation selection for KBQA. The GRU layer for question and relation preprocessing is replaced with a multi-kernel CNN layer whose output dimension matches that of the original GRU layer.

- SPE & Pattern Rule [23]: a subject predicate extraction algorithm with several pattern rules. A linear combination of pattern rules, including answer patterns, core of questions, a question classification method, and post-processing rules for alternative questions, is employed to select the gold answers.

- NBSVM & CNN [18]: an NBSVM-based ranking model combined with CNN-based ranking. N-gram co-occurrence features are extracted to train an SVM model with Naive Bayes features, while the CNN-based ranker first maps the question and the relation to vectors with a CNN and then merges the two output vectors to obtain a score. A stacking method ensembles the two models to produce the final result.

- DSSM Combination [19]: a combination of a CNN-based deep structured semantic model (DSSM) and its variants, Bi-LSTM-DSSM and Bi-LSTM-CNN-DSSM. Bi-LSTM-DSSM extends DSSM with a bi-directional LSTM, while Bi-LSTM-CNN-DSSM integrates a CNN with the Bi-LSTM layer. Cosine similarity measures the degree of matching between the question and the candidate predicates. The three models are assigned different weights to give a composite lexical matching score.
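Several of the variants and baselines above (the model without attention and the DSSM combination) score a question against candidate relations by cosine similarity. The following is a minimal sketch of that scoring step only, assuming the question and each relation have already been encoded as fixed-length vectors; the function names and the toy vectors are hypothetical and do not come from the paper.

```python
import math
from typing import Dict, Sequence


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same dimension")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def select_relation(question_vec: Sequence[float],
                    relation_vecs: Dict[str, Sequence[float]]) -> str:
    """Return the candidate relation whose vector is most similar to the question vector."""
    return max(relation_vecs,
               key=lambda name: cosine_similarity(question_vec, relation_vecs[name]))


if __name__ == "__main__":
    # Toy 2-dimensional encodings standing in for final hidden representations.
    candidates = {"别名": [0.9, 0.1], "人口": [0.0, 1.0]}
    print(select_relation([1.0, 0.2], candidates))
```

In the full model, the attention layer takes the place of this single-vector comparison, aligning the question with the relation at a finer granularity before the score is computed.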

4.6. Error Analysis and Discussion

5. Conclusions

Author Contributions

Conflicts of Interest

References

1. Bollacker, K.; Evans, C.; Paritosh, P.; Sturge, T.; Taylor, J. Freebase: A collaboratively created graph database for structuring human knowledge. In Proceedings of the 2008 ACM SIGMOD International Conference on Management of Data, Vancouver, BC, Canada, 9–12 June 2008; pp. 1247–1250.

2. Auer, S.; Bizer, C.; Kobilarov, G.; Lehmann, J.; Cyganiak, R.; Ives, Z. DBpedia: A nucleus for a web of open data. In Proceedings of the 6th International Semantic Web and 2nd Asian Conference on Asian Semantic Web Conference, Busan, Korea, 11–15 November 2007; pp. 722–735.

3. Niu, X.; Sun, X.; Wang, H.; Rong, S.; Qi, G.; Yu, Y. Zhishi.Me: Weaving Chinese linking open data. In Proceedings of the 10th International Conference on The Semantic Web, Volume Part II, Bonn, Germany, 23–27 October 2011; pp. 205–220.

4. Wang, Z.-C.; Wang, Z.-G.; Li, J.-Z.; Pan, J.Z. Knowledge extraction from Chinese wiki encyclopedias. J. Zhejiang Univ. Sci. C 2012, 13, 268–280.

5. Berant, J.; Chou, A.; Frostig, R.; Liang, P. Semantic parsing on Freebase from question-answer pairs. In Proceedings of the 2013 Conference on Empirical Methods in Natural Language Processing, Association for Computational Linguistics, Seattle, WA, USA, 18–21 October 2013; pp. 1533–1544.

6. Dong, L.; Wei, F.; Zhou, M.; Xu, K. Question answering over Freebase with multi-column convolutional neural networks. In Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing, Beijing, China, 26–31 July 2015; pp. 260–269.

7. Yu, L.; Hermann, K.M.; Blunsom, P.; Pulman, S. Deep learning for answer sentence selection. arXiv, 2014; arXiv:1412.1632.

8. Bordes, A.; Usunier, N.; Chopra, S.; Weston, J. Large-scale simple question answering with memory networks. arXiv, 2015; arXiv:1506.02075.

9. Golub, D.; He, X. Character-level question answering with attention. In Proceedings of the 2016 Conference on Empirical Methods in Natural Language Processing, Austin, TX, USA, 1–5 November 2016; pp. 1598–1607.

10. Zhang, Y.; Liu, K.; He, S.; Ji, G.; Liu, Z.; Wu, H.; Zhao, J. Question answering over knowledge base with neural attention combining global knowledge information. arXiv, 2016; arXiv:1606.00979.

11. Yih, W.-T.; Chang, M.-W.; He, X.; Gao, J. Semantic parsing via staged query graph generation: Question answering with knowledge base. In Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing, Beijing, China, 26–31 July 2015; pp. 1321–1331.

12. Yin, W.; Yu, M.; Xiang, B.; Zhou, B.; Schütze, H. Simple question answering by attentive convolutional neural network. arXiv, 2016; arXiv:1606.03391.

13. Yu, M.; Yin, W.; Hasan, K.S.; dos Santos, C.; Xiang, B.; Zhou, B. Improved neural relation detection for knowledge base question answering. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics, Vancouver, BC, Canada, 30 July–4 August 2017; pp. 571–581.

14. Cai, Q.; Yates, A. Large-scale semantic parsing via schema matching and lexicon extension. In Proceedings of the 51st Annual Meeting of the Association for Computational Linguistics, Sofia, Bulgaria, 4–9 August 2013; pp. 423–433.

15. Liang, P.; Jordan, M.I.; Klein, D. Learning dependency-based compositional semantics. In Proceedings of the 49th Annual Meeting of the Association for Computational Linguistics: Human Language Technologies, Portland, OR, USA, 19–24 June 2011; pp. 590–599.

16. Yao, X.; Van Durme, B. Information extraction over structured data: Question answering with Freebase. In Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Baltimore, MD, USA, 22–27 June 2014; pp. 956–966.

17. Dai, Z.; Li, L.; Xu, W. CFO: Conditional focused neural question answering with large-scale knowledge bases. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Berlin, Germany, 7–12 August 2016; pp. 800–810.

18. Yang, F.; Gan, L.; Li, A.; Huang, D.; Chou, X.; Liu, H. Combining deep learning with information retrieval for question answering. In Natural Language Understanding and Intelligent Applications; Springer International Publishing: Cham, Switzerland, 2016; pp. 917–925.

19. Xie, Z.; Zeng, Z.; Zhou, G.; He, T. Knowledge base question answering based on deep learning models. In Natural Language Understanding and Intelligent Applications; Springer International Publishing: Cham, Switzerland, 2016; pp. 300–311.

20. Bordes, A.; Weston, J.; Usunier, N. Open question answering with weakly supervised embedding models. In Joint European Conference on Machine Learning and Knowledge Discovery in Databases, Volume 8724; Springer: Berlin/Heidelberg, Germany, 2014; pp. 165–180.

21. Bordes, A.; Usunier, N.; Garcia-Durán, A.; Weston, J.; Yakhnenko, O. Translating embeddings for modeling multi-relational data. In Advances in Neural Information Processing Systems; Curran Associates Inc.: Lake Tahoe, NV, USA, 2013; pp. 2787–2795.

22. Jain, S. Question answering over knowledge base using factual memory networks. In Proceedings of the NAACL Student Research Workshop, San Diego, CA, USA, 13–15 June 2016; pp. 109–115.

23. Lai, Y.; Lin, Y.; Chen, J.; Feng, Y.; Zhao, D. Open domain question answering system based on knowledge base. In Natural Language Understanding and Intelligent Applications; Springer International Publishing: Cham, Switzerland, 2016; pp. 722–733.

24. Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

25. Bahdanau, D.; Cho, K.; Bengio, Y. Neural machine translation by jointly learning to align and translate. arXiv, 2014; arXiv:1409.0473.

26. Lafferty, J.D.; McCallum, A.; Pereira, F.C.N. Conditional random fields: Probabilistic models for segmenting and labeling sequence data. In Proceedings of the 18th International Conference on Machine Learning, San Francisco, CA, USA, 28 June–1 July 2001; pp. 282–289.

27. Levenshtein, V.I. Binary codes capable of correcting deletions, insertions, and reversals. Sov. Phys. Doklady 1966, 10, 707–710.

28. Mikolov, T.; Sutskever, I.; Chen, K.; Corrado, G.; Dean, J. Distributed representations of words and phrases and their compositionality. In Proceedings of the 26th International Conference on Neural Information Processing Systems, Lake Tahoe, NV, USA, 5–10 December 2013; pp. 3111–3119.

29. Ling, W.; Luís, T.; Marujo, L.; Astudillo, R.F.; Amir, S.; Dyer, C.; Black, A.W.; Trancoso, I. Finding function in form: Compositional character models for open vocabulary word representation. arXiv, 2015; arXiv:1508.02096.

30. Cho, K.; Merrienboer, B.V.; Bahdanau, D.; Bengio, Y. On the properties of neural machine translation: Encoder-decoder approaches. arXiv, 2014; arXiv:1409.1259.

31. Hochreiter, S.; Schmidhuber, J. LSTM can solve hard long time lag problems. In Proceedings of the 9th International Conference on Neural Information Processing Systems, Singapore, 18–22 November 1996; pp. 473–479.

32. Cui, Y.; Chen, Z.; Wei, S.; Wang, S.; Liu, T.; Hu, G. Attention-over-attention neural networks for reading comprehension. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics, Vancouver, BC, Canada, 30 July–4 August 2017; pp. 593–602.

33. Zhang, H.; Xu, G.; Liang, X.; Huang, T. An attention-based word-level interaction model: Relation detection for knowledge base question answering. arXiv, 2018; arXiv:1801.09893.

34. Zeiler, M.D. Adadelta: An adaptive learning rate method. arXiv, 2012; arXiv:1212.5701.

35. Lample, G.; Ballesteros, M.; Subramanian, S.; Kawakami, K.; Dyer, C. Neural architectures for named entity recognition. In Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, San Diego, CA, USA, 13–15 June 2016; pp. 260–270.

36. Dong, C.; Zhang, J.; Zong, C.; Hattori, M.; Di, H. Character-based LSTM-CRF with radical-level features for Chinese named entity recognition. In Natural Language Understanding and Intelligent Applications; Springer: Berlin/Heidelberg, Germany, 2016; pp. 239–250.

37. Greff, K.; Srivastava, R.K.; Koutník, J.; Steunebrink, B.R.; Schmidhuber, J. LSTM: A search space odyssey. IEEE Trans. Neural Netw. Learn. Syst. 2017, 28, 2222–2232.

38. Reimers, N.; Gurevych, I. Optimal hyperparameters for deep LSTM-networks for sequence labeling tasks. arXiv, 2017; arXiv:1707.06799.

| Type | Times | Instance | Disposal |
|---|---|---|---|
| Space in predicate between Chinese characters | 367,218 | 别 名/Alias | Delete space |
| Predicate prefixes “-” or “·” | 163,285 | - 行政村数/- Number of administrative villages | Delete prefixes |
| Appendix labels in predicate | 9,110 | 人口 (2009) [1]/Population (2009) [1] | Delete appendix labels |
| Predicate is the same as object | 193,716 | 陈祝龄旧居\|\|\|天津市文物保护单位\|\|\|天津市文物保护单位/Former Residence of Chen Zhuling\|\|\|Tianjin heritage conservation unit\|\|\|Tianjin heritage conservation unit | Delete the record |
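The four cleaning rules in the table above can be sketched as a small preprocessing routine. This is an illustrative sketch rather than the authors' code: it assumes each knowledge-base record is a `subject|||predicate|||object` line, as in the table's last example, that "appendix labels" are a parenthesized four-digit year or a bracketed number, and that "Chinese characters" means the CJK Unified Ideographs block. The function names are hypothetical.

```python
import re
from typing import Optional, Tuple

CJK = r"\u4e00-\u9fff"  # assumption: CJK Unified Ideographs block only
_SPACE_BETWEEN_CJK = re.compile(rf"(?<=[{CJK}])\s+(?=[{CJK}])")
_PREFIX = re.compile(r"^[-·]\s*")
_APPENDIX = re.compile(r"\s*(\(\d{4}\)|\[\d+\])")  # e.g. " (2009)" or " [1]"


def clean_predicate(predicate: str) -> str:
    """Apply the three predicate-level rules: prefixes, appendix labels, inner spaces."""
    predicate = predicate.strip()
    predicate = _PREFIX.sub("", predicate)
    predicate = _APPENDIX.sub("", predicate)
    predicate = _SPACE_BETWEEN_CJK.sub("", predicate)
    return predicate.strip()


def clean_triple(line: str) -> Optional[Tuple[str, str, str]]:
    """Return the cleaned (subject, predicate, object), or None if the record is dropped."""
    parts = [part.strip() for part in line.split("|||", 2)]
    if len(parts) != 3:
        return None  # malformed line; dropping it is a choice made for this sketch
    subject, predicate, obj = parts
    predicate = clean_predicate(predicate)
    if predicate == obj:
        return None  # rule 4: predicate is the same as object
    return subject, predicate, obj


if __name__ == "__main__":
    print(clean_predicate("别 名"))
    print(clean_predicate("- 行政村数"))
    print(clean_predicate("人口 (2009) [1]"))
    print(clean_triple("陈祝龄旧居|||天津市文物保护单位|||天津市文物保护单位"))
```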

| Hyper Parameter | Batch Size | Gradient Clip | Embedding Size | Dropout Rate | Learning Rate |
|---|---|---|---|---|---|
| Value | 20 | 5 | 100 | 0.5 | 0.001 |

| Parameter | Search Space | Value |
|---|---|---|
| Embedding dim. d | 200 | |
| Dim. of GRU | 150 | |
| Dropout rate | 0.35 | |
| Batch size | 256 | |

| Entity Detection | Entity Linking | Overall Entity Extraction | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| Raw | | | | | | | | | |
| 97.41 | 97.32 | 97.36 | 96.56 | 98.72 | 99.05 | 99.41 | 96.16 | 96.07 | 96.11 |

| Analysis Content | Model | Acc. |
|---|---|---|
| Hierarchical matching framework | replace hierarchical matching framework with single-layer Bi-GRU question encoder | 80.39 |
| | replace hierarchical matching framework with two-layer Bi-GRUs without element-wise connections | 79.26 |
| | replace hierarchical matching framework with two single-layer Bi-GRUs with element-wise connections | 76.54 |
| Structure unit | model without attention | 79.92 |
| | replace Bi-GRU with Bi-LSTM | 81.51 |
| | replace Bi-GRU with CNN | 79.03 |
| Text embeddings | replace composite embeddings with word embeddings | 78.36 |
| | replace composite embeddings with character embeddings | 79.58 |
| ABMGIM (Our approach) | | 81.74 |

| Model | Acc. |
|---|---|
| SPE & Pattern Rule (Lai et al., 2016) | 82.47 |
| NBSVM & CNN (Yang et al., 2016) | 81.59 |
| DSSM Combination (Xie et al., 2016) | 79.57 |
| ABMGIM (Our approach) | 81.74 |

| Cause of Error | Counts |
|---|---|
| Missing entities | 2 |
| Wrong entities | 5 |
| Wrong predicates | 34 |
| Ambiguity | 16 |
| Dataset caused errors | 43 |
| Total | 100 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Shen, C.; Huang, T.; Liang, X.; Li, F.; Fu, K. Chinese Knowledge Base Question Answering by Attention-Based Multi-Granularity Model. Information 2018, 9, 98. https://doi.org/10.3390/info9040098
