2. The Fascination with the Future: Foresight Studies Require an Appropriate Methodology

Confronted with the challenge of correctly understanding, representing, and managing the future(s) of humankind and, especially, future discoveries in science and breakthroughs in technology, we must first deal correctly with our perplexities and expectations about them, and then identify the most probable behavior of such complex systems as the human brain/mind, human society, science, and technology for our best future(s).

Let us remember that, when “the simple yet infinitely complex question of where is technology taking us?” [5] was asked, our times were described as the “Age of Surprise.”

It is a “concept originally described by the U.S. Air Force Center for Strategy and Technology at The Air University” [5], a part of the Blue Horizons project [6], writes Reuven Cohen.

In fact, the Age of Surprise is a situation in which “the exponential advancement of technology” has just reached “a critical point where not even governments can project the direction humanity is headed” [5].

What is interesting for this paper’s theme is the forecast made by some researchers of “an eventual Singularity where the lines between humans and machines are blurred” [5].

There is increasing awareness related to technological changes and breakthroughs.

Under this metaphor, but also considering the necessity of finding the best solutions so as not to expose humankind to catastrophic, global, and existential risks [7] and, more specifically, to existential technological risks, it is essential, from a scientific standpoint, not to accept that it is natural to find what we expect to find, nor to present our expected findings as sound scientific results. Yet, it is also important not to reduce the complexity of the analyzed systems (TS or AGI) to the linearity of a predictable use of data when dealing with the future(s).

In fact, to date, there is no such scientific field as future studies [8]/foresight studies, even though there are such claims [9].

Because future-related reasoning is probabilistic, a hard science of the future is problematic, as we cannot decide with any accuracy about the truth or falsehood of our judgments on future actions, or on the existence of future beings and future artifacts. As one of the reviewers of this paper rightly observed, in science we make predictions that can be “evaluated according to available experience” and this is a sign that “nevertheless, the science is possible.” Indeed, a probabilistic truth engages a re-evaluation of the classic two-dimensional models of reasoning in favor of a discussion related to a unified model of reasoning [10].

The sense of the above revised statement is related to some of the observations made by Samuel Arbesman in his book The Half-life of Facts: Why Everything We Know Has an Expiration Date [11]. Even though I could not access the contents of the book, I have understood, from the review by Roger M. Stein, that in science, acquired knowledge has a pace of overturn [12], and, from the paper of James A. Evans on “Future Science,” that innovation has been decreasing, instead of accelerating, in the last several decades [13].

This is why it is difficult and problematic to claim the status of a “hard” science for foresight studies.

Perhaps the explanation for such a problematic status could be related not only to the complexity of the future-related models of the evolution of science and technology (as proven in “The Age of Surprise” report), but, also, to Aristotle’s legacy [14] regarding the problem of future contingents [15] and/or to Charles Sanders Peirce’s observation that, in order to reach, through inquiry, an objective scientific understanding, we have to eliminate such human factors as expectations and bias ([16], pp. 111–112).

A major issue in the study of future(s) is reaching the understanding that is most probably correct. For such an accomplishment, we have to accept both the complexity of the world and the complexity of the human being(s).

This is why, first of all, we need to reject any reductionist perspective.

There is a powerful reductionist tendency in scientific studies, as “the world is still dominated by a mechanistic, cause and effect mindset with origins in the Industrial Revolution and the Newtonian scientific philosophy” [17].

Beyond the radical claim in the sentence quoted above, the existence of such reductionist tendencies is of importance for TS research, as such tendencies are detectable in various perspectives on AC, AGI, and TS as well. This is why, as one of the reviewers concluded, this paper is focused on “criticism of the TS/AC/AGI claims based on reductionism and extrapolation.”

In the following pages, I will briefly consider some examples of the perverse power of our expectations related to the future(s) of technology and, especially, to TS. I will also engage in a short discussion based on the observation that there are few studies on TS deployed from a complexity perspective.

3. The Apophenic Face(s) of Our Technology- and Science-Related Expectations: The “Law of Accelerating Returns” (LAR)

Across the timeline of humankind, whenever change is perceived as affecting both societies and individuals, debates and controversies about the future flourish until a new equilibrium and a new status quo are reached regarding the perception(s) and understanding(s) of the possible future(s), which are not necessarily the ones predicted in those discussions.

a. Is the Change in Science and Technology Accelerating?

One of the most appealing ideas in the debates of our times is the so-called “accelerating change” (AC)/the “law of accelerating returns” (LAR).

When considering the idea of AC, one should go back to the idea of “progress itself” [18].

This is not only about AC in technology, as claimed, e.g., by Ray Kurzweil [19]. It is also about AC in science, as claimed, e.g., by John M. Smart, the Foresight U, and the FERN teams [18].

For some researchers, AC is an ontological feature, almost a law of nature, as it “is one of the most future-important, pervasive, and puzzling features of our universe,” everywhere observable, including “the twenty-thousand year history of human civilization” [18].

Meanwhile, for other researchers it emerges universally [20].

In general terms, AC is just a perceived change of rhythm in the regularity of the advances of technology and science through the ages.

The challenge it brings with it, observes Richard Paul, is “to trace the general implications of what are identified as the two central characteristics of the future: accelerating change and intensifying complexity” [21]. The major concerns are related to the pace of AC and to the perception of an increasing complexity: “how are we to understand how this change and complexity will play itself out? How are we to prepare for it?” [21].

The fascination with AC is an expression of our interest in the study of the future, in so-called “foresight studies” [22].

In fact, it is such a rapidly growing topic that, using the simplest Google search, on 4 October 2018, I found not only about 16,800,000 results for “foresight,” about 11,700,000 results for “foresight studies,” and about 14,900,000 results for “foresight study,” but also 20,400 results for “foresight science.”

At the same time, the perceived AC seems to be more likely just a subjective projection of our assumptions/presuppositions, even when discovering the gap(s) between our linear and predictable expectations about the pace(s) of changes in technology and science and our own minds’ power to process the complexity of the information related to the new developments in science and new breakthroughs in technology.

Some critics of AC (Theodore Modis [23]; Jonathan Huebner [24]; Andrey Korotayev, Artemy Malkov, and Daria Khaltourina [25]; James A. Evans [13]; Julia Lane [26]; and bloggers such as Richard Jones [27] and David Moschella [28], among others) argued that “the rate of technological innovation has not only ceased to rise, but is actually now declining” [29] and/or argued that there are other possible projections of the LAR (Law of Accelerating Returns) besides the one proposed by Kurzweil.

To date, there is neither general acceptance of AC’s existence, nor a final rejection of it.

Under these circumstances, a statement such as “one can criticize ‘proofs’ of AC for a subjective selection of technologies, but no one can claim that within the selective set of technologies there is no AC” (made by one of the reviewers of this paper) is most likely a subjective one.

My main concerns are: How is it/could it be/should it be established that a “selective set of technologies” should be generally accepted? and Is it permitted to extrapolate those particular findings to a universal set of technologies or even to the status of a universal phenomenon?

In the meantime, it is well known that there are several models of Singularity and of TS, such as those presented in the taxonomies of John Smart [30] and Anders Sandberg [31], for example.

In John Smart’s classification, all three types of Singularity (computational, developmental, and technological) “assume the proposition of the universe-as-a-computing-system” [30]. In fact, it is an “assumption, also known as the ‘infopomorphic’ paradigm,” that “proposes that information processing is both a process and purpose intrinsic to all physical structures,” when “the interrelationships between certain information processing structures can occasionally undergo irreversible, accelerating discontinuities” [30].

I think this is why Amnon H. Eden, James H. Moor, Johnny H. Søraker, and Eric Steinhart chose as the title of the book they co-edited on TS: Singularity Hypotheses: A Scientific and Philosophical Assessment [32].

Because, for now, the “infopomorphic” paradigm/assumption is still debated and because it is the ground/ultimate source of the TS hypothesis, the results of the debates on TS cannot, consequently, be boldly and clearly related to truth or falsehood.

As TS is deeply related to AC—it is not possible without it!—a good method for an appropriate TS study is to remember the claims of one of the most well-known defenders of TS, Kurzweil.

John Smart is considered among the “prominent explorers and advocates of the technological Singularity idea,” along with brilliant researchers such as John Von Neumann, I.J. Good, Hans Moravec, Vernor Vinge, Danny Hillis, Eliezer Yudkowsky, Damien Broderick, Ben Goertzel and “a small number of other futurists, and most eloquently to date, Ray Kurzweil” [30].

For that “eloquence,” I choose to refer here, especially, to Kurzweil.

(In the meantime, the “infopomorphic” assumption remains active for TS’s defenses).

One of Kurzweil’s main ideas is that change is exponential and not “intuitively linear,” as is the case, he thinks, even with “sophisticated commentators” who “extrapolate the current pace of change over the next ten years or one hundred years to determine their expectations.” Meanwhile, “a serious assessment of the history of technology” will reveal that “technological change is exponential” for “a wide variety of technologies, ranging from electronic to biological … the acceleration of progress and growth applies to each of them” [33].

However, despite Kurzweil’s belief/beliefs (a “natural” result of his own expectations), it was observed that our world is changing, but not as fast as we would be tempted to think, based on our own perceptions: “the world is changing. But change is not accelerating.” So, the very idea of an exponential growth of change is disputable [34].

b. Has the Road toward TS a Single Shape/Route?

A second main idea of Kurzweil’s is related to the inevitability of TS. It is based on a subjective extension, following Moravec, of the so-called “Moore’s law” of exponential growth in computing power, having the shape of an asymptote, as a graphic representation of AC, toward a human-like AGI’s existence and accelerated growth.

Even in popular science it was observed that the so-called LAR has “altered public perception of Moore’s law,” because, contrary to the common belief promoted by Kurzweil and Moravec, Moore is only making predictions related to the performance(s) of “semiconductor circuits” and not “predictions regarding all forms of technology” [29].

In fact, there were numerous and various debates on the very existence of Kurzweil’s extension of Moore’s law [35,36]. In the references we cite just four of them.

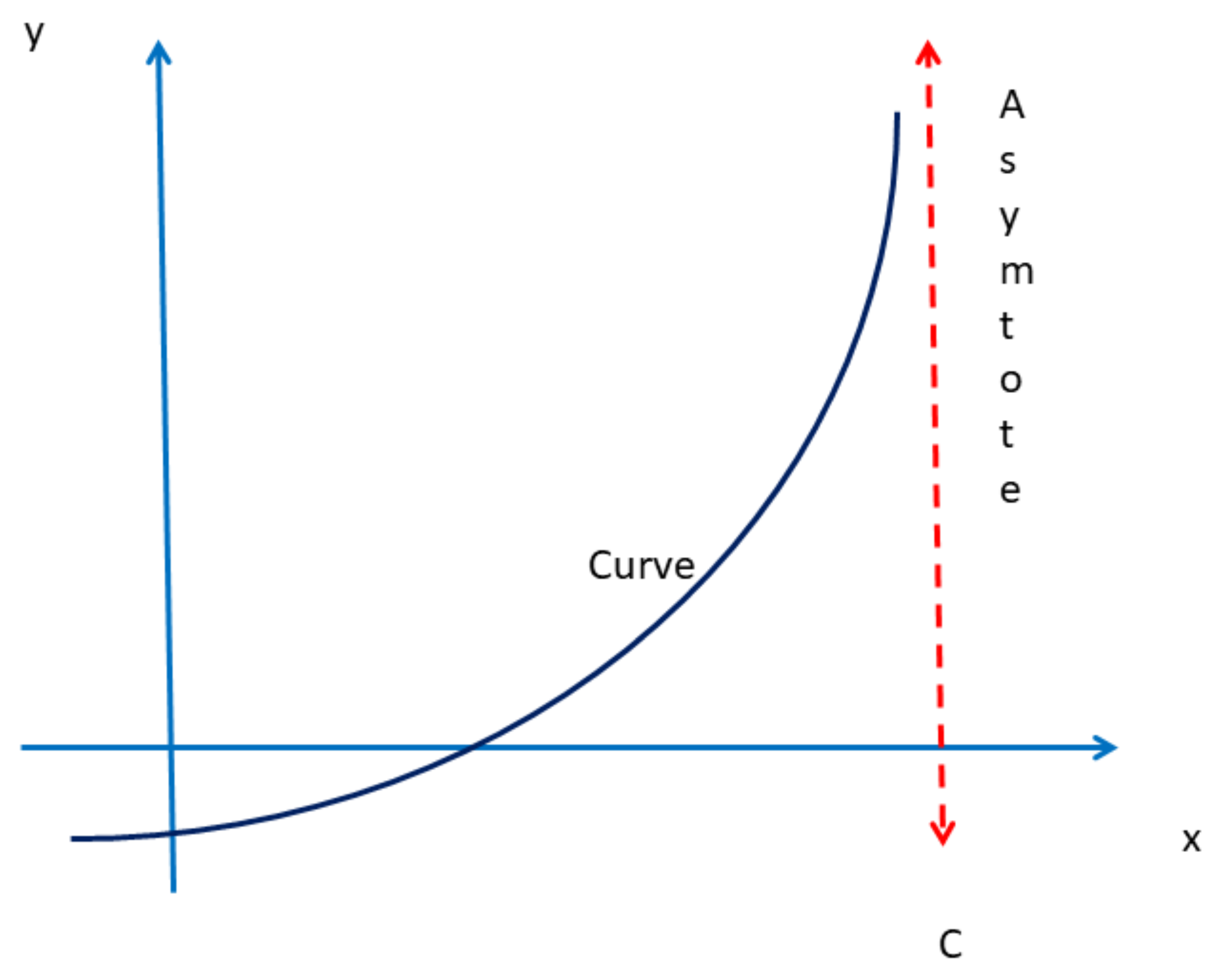

Most likely, it is not possible to claim exponential growth in the form of a vertical-like asymptote where the curve approaches +∞ [

37] (

Figure 1).

(One of this paper’s reviewers noted “the exponential growth doesn’t have a vertical asymptote.” Indeed, but

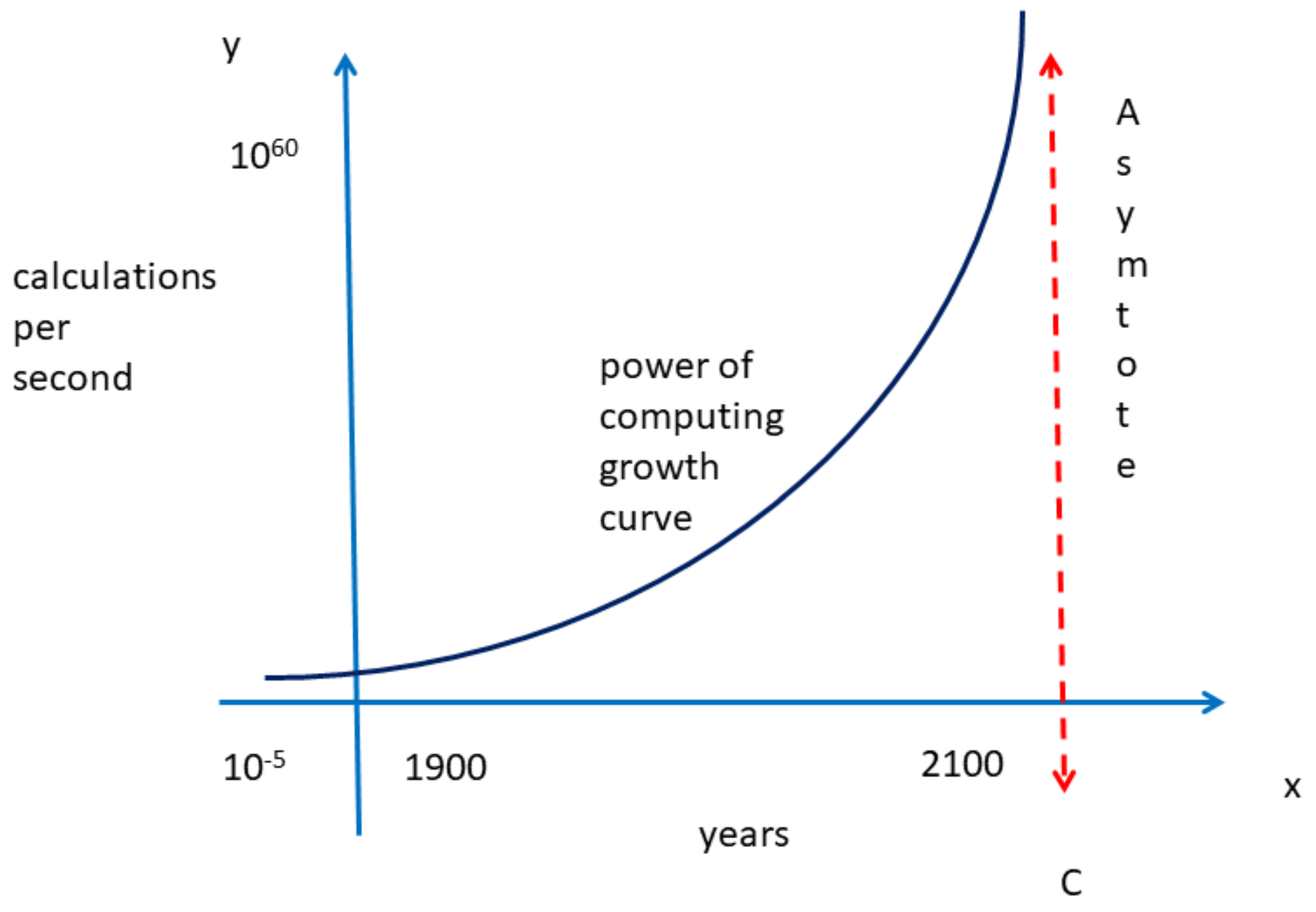

this asymptotic-like shape seems to be used by Kurzweil in order to represent the growth of computing power as in

Figure 2 toward a vertical endless growth. More likely the cause is quite simple: it is better at fitting

his expectations and shows the patterns

he was looking for. As Korotayev observes, Kurzweil uses

three graphs to illustrate the countdown to Singularity [

38] (page 75). Yet he argues that Kurzweil did not know about the mathematical Singularity, nor was he aware of the differences between exponential and hyperbolic growth, etc.

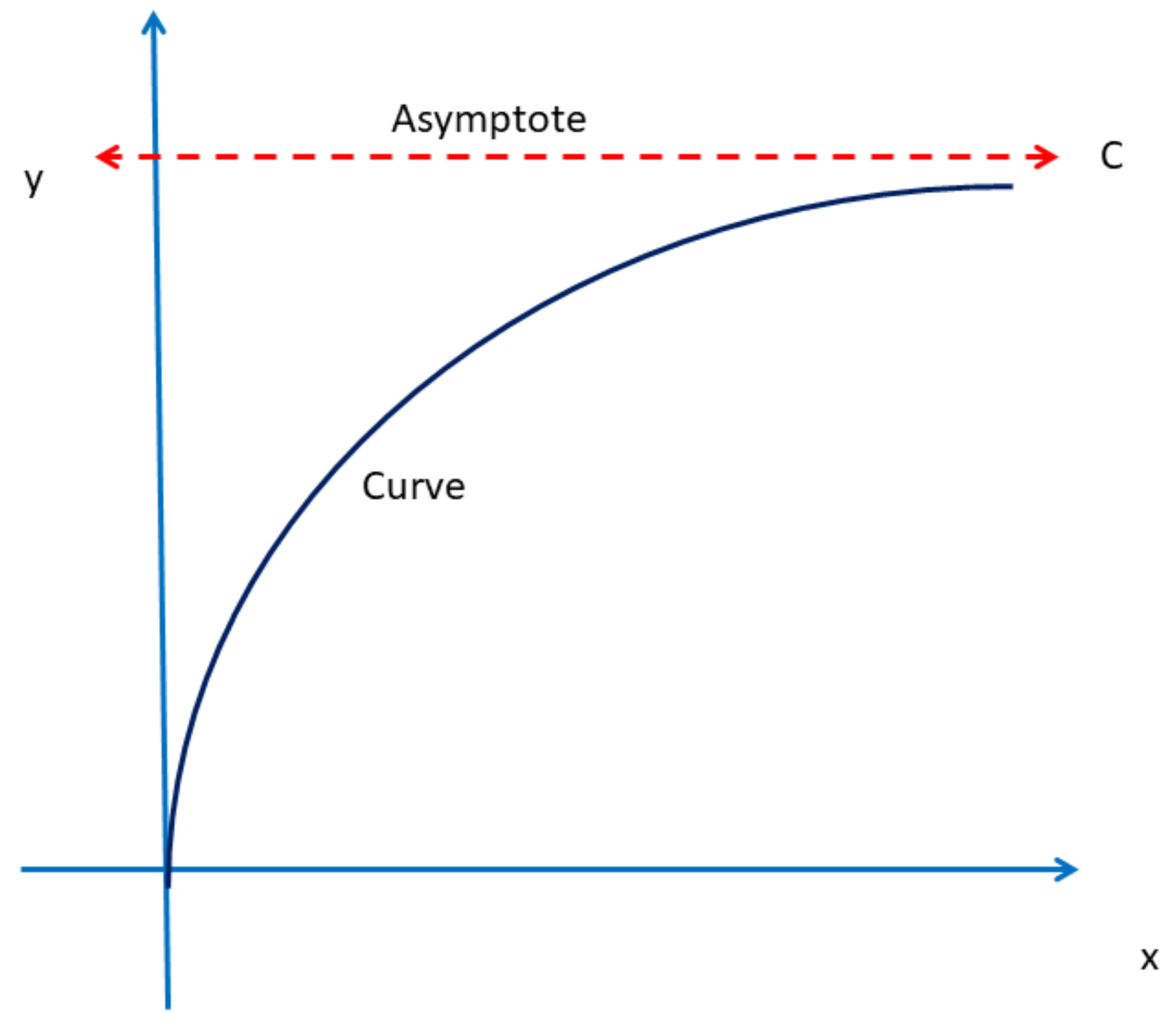

But, instead, in the form of a horizontal one (

Figure 3),

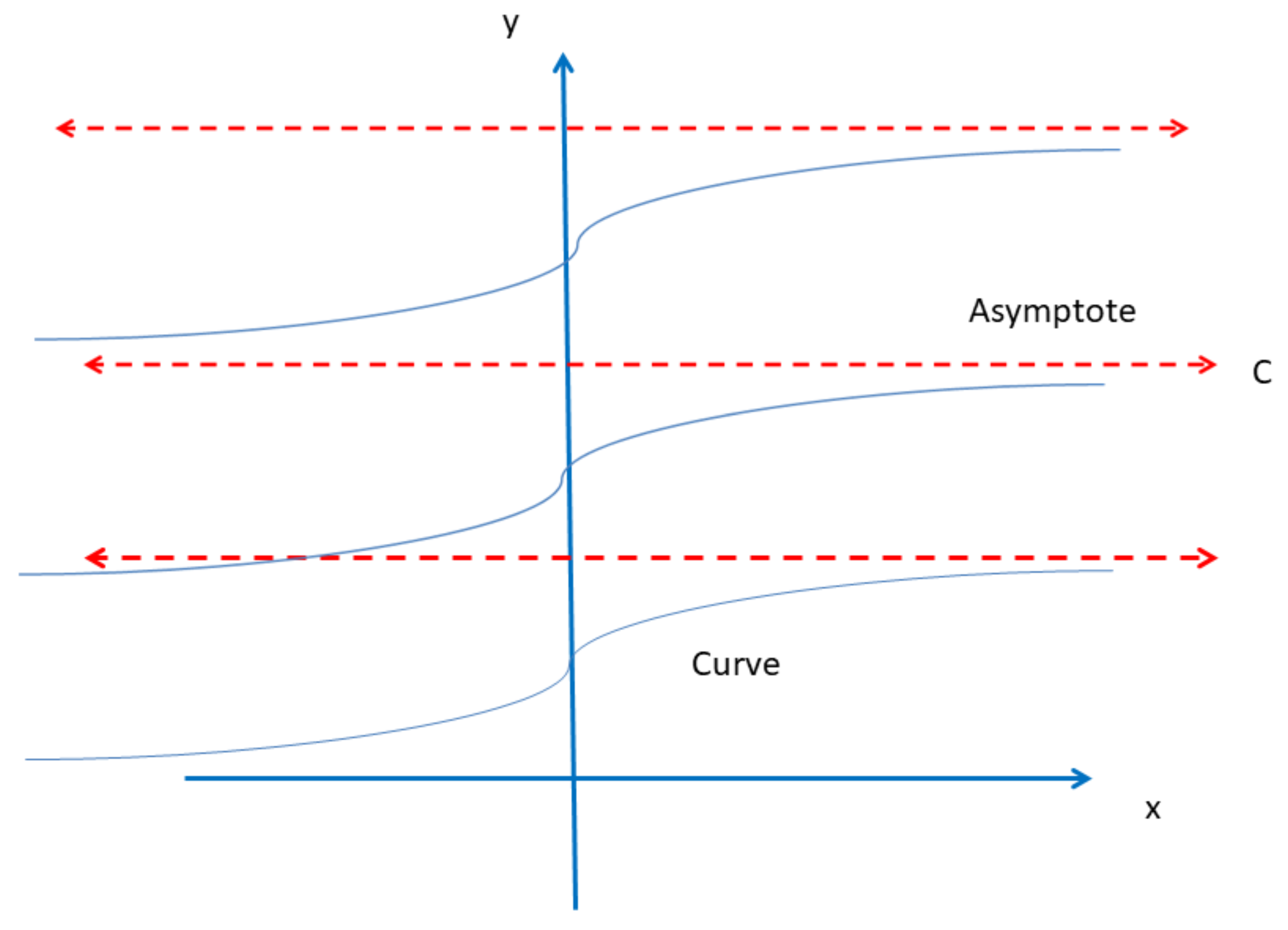

Or even, more naturally, in the form of successive horizontal asymptotes, but vertically arranged (

Figure 4),

I think the

Figure 4 fits more naturally the history of breakthroughs in science and technology, as it evokes a succession of jumps in the evolution towards TS.

Indeed, two asymptotes will constitute a hyperbola under certain conditions.

One of the reviewers observed, “The asymptote in TS appears, because each next type of cybernetic systems develops with a higher exponential growth rate, and the time between metasystem transactions to novel cybernetic systems becomes smaller.”

However, Kurzweil’s TS is related to the growth of computing power, which is related to his extrapolation of Moore’s Law. Under these circumstances, let us remember Tom Simonite’s paper from the MIT Technology Review, “Moore’s Law Is Dead. Now What?”, where he quotes Horst Simon, deputy director of the Lawrence Berkeley National Laboratory, saying “the world’s most powerful calculating machines appear to be already feeling the effects of Moore’s Law’s end times. The world’s top supercomputers aren’t getting better at the rate they used to” [40].

The reviewer continues: “consequently, it doesn’t really matter if the exponential growth of individual technologies continues infinitely or becomes S-shaped curve with a horizontal asymptote if new technologies outperform older technologies with higher growth rate.”

I have some difficulties in understanding the extrapolation made above.

A first one is related to the observation made by Andrey Korotayev, who states: “let us stress again that the mathematical analysis demonstrates rather rigorously that the development acceleration pattern within Kurzweil’s series is NOT exponential (as is claimed by Kurzweil), but hyperexponential, or, to be more exact, hyperbolic” [38] (p. 84).
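The distinction Korotayev draws can be made concrete with a minimal sketch. It assumes the textbook forms dx/dt = r·x (exponential) and dx/dt = r·x² (hyperbolic); the function names and the rate r = 1 are illustrative assumptions and are not taken from Kurzweil’s or Korotayev’s data. Under exponential growth the doubling time is constant, whereas under hyperbolic growth each doubling takes half as long as the previous one, so the total time needed for unboundedly many doublings stays finite.

```python
import math

def exponential_doubling_time(r=1.0):
    """Under dx/dt = r*x the doubling time is ln(2)/r, whatever the current level."""
    return math.log(2.0) / r

def hyperbolic_doubling_time(x, r=1.0):
    """Under dx/dt = r*x**2, going from x to 2x takes 1/(2*r*x): it shrinks as x grows."""
    return 1.0 / (2.0 * r * x)

level = 1.0
elapsed = 0.0
for _ in range(6):
    step = hyperbolic_doubling_time(level)
    elapsed += step
    level *= 2.0
    print(f"level={level:5.0f}  hyperbolic step={step:.4f}  "
          f"elapsed={elapsed:.4f}  exponential step={exponential_doubling_time():.4f}")
```

In this toy setting the hyperbolic steps sum toward a finite limit (here 1/(r·x0) = 1), which is the mathematical sense of a “singularity”; the exponential steps never shorten.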

This is why some researchers, studying the macro-regularities in the evolution of humankind, came to different conclusions about the possible evolution of AC. A second perplexity is related to the observation that it really matters if the exponential growth of individual technologies is an S-shaped curve [23].

Andrey Korotayev’s conclusions, in his re-analysis of the 21st-century Singularity, are: “the existence of sufficiently rigorous global macroevolutionary regularities (describing the evolution of complexity on our planet for a few billions of years),” which are “surprisingly accurately described by extremely simple mathematical functions.” Moreover, he thinks, there is no reason “to expect an unprecedented (many orders of magnitude) acceleration of the rates of technological development” near/in the region of the so-called “Singularity point.” Instead, “there are more grounds for interpreting this point as an indication of an inflection point, after which the pace of global evolution will begin to slow down systematically in the long term” [38].

This is why I used the representation within Figure 4 (above). The main idea is not to correctly represent the succession of the exponential growth, but to highlight the discontinuities in the evolution of technology. I agree with Richard Jones, who observes: “the key mistake here is to think that ‘Technology’ is a single thing, that by itself can have a rate of change, whether that’s fast or slow.” Indeed, “there are many technologies, and at any given time some will be advancing fast, some will be in a state of stasis, and some may even be regressing.” Moreover, “it’s very common for technologies to have a period of rapid development, with a roughly constant fractional rate of improvement, until physical or economic constraints cause progress to level off” [27].
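The picture of successive plateaus evoked by Figure 4 and by Jones’s remark can be sketched numerically. This is only an illustration under stated assumptions: each hypothetical technology generation is modeled as a logistic (S-shaped) curve, and the midpoints, ceiling, and rate below are invented values, not measurements of any real technology.

```python
import math

def s_curve(t, midpoint, ceiling=1.0, rate=2.0):
    """Logistic curve: slow start, rapid middle phase, saturation at `ceiling`."""
    return ceiling / (1.0 + math.exp(-rate * (t - midpoint)))

def stacked_capability(t, midpoints=(5.0, 15.0, 25.0)):
    """Sum of S-curves, one per hypothetical technology generation."""
    return sum(s_curve(t, m) for m in midpoints)

# Between two generations the total sits on a plateau (a horizontal asymptote
# of the older curve); each new generation lifts it to the next plateau.
for t in range(0, 31, 5):
    print(t, round(stacked_capability(t), 3))
```

The total grows in jumps separated by near-flat stretches, so neither a single exponential nor a single S-curve describes it, which is the discontinuity the figure is meant to highlight.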

So, the mathematical representation of the AC, as leading to TS, could have different perspectives beyond the graphic defended by Kurzweil, and his expectations related to the road toward TS are problematic.

c. Is There an Explanation for Kurzweil’s “Discoveries”?

One could ask: Why have Kurzweil and the defenders of AC reached the idea of exponential growth?

An appealing but unexpected answer is quite simple: they have searched for data to confirm their expectations.

As Michael Shermer observed, human beings are “pattern-seeking story-telling animals” [41].

This is why “we are quite adept at telling stories about patterns, whether they exist or not” [41].

He named this tendency “patternicity” [42].

Observing and evaluating, we are looking for and finding “patterns in our world and in our lives”; then, we “weave narratives around those patterns to bring them to life and give them meaning.” Michael Shermer concludes: “such is the stuff of which myth, religion, history, and science are made” [43].

In Kurzweil’s “discoveries,” we have both a subjective extension of an expectation (AC) and an alteration of the data in order to fit the “discovered” model (the exponential growth of the returns toward the Singularity point).

Kurzweil’s LAR is an example of a connection created by his own expectations.

Yet, as one of the reviewers underlined, Kurzweil is neither the one who discovered TS nor the only one who is promoting it. However, again, due to his “eloquence,” he is exemplary for the “infopomorphic” paradigm. While his assumption remains controversial, some clever works, such as Valentin F. Turchin’s [44], mentioned by one of the reviewers of this paper, found a cybernetic approach in human evolution, while others promoted an entire cybernetic philosophy, as was the case with Mihai Draganescu’s works [45,46,47], or have even questioned if we are living in a computer simulation [48]. Examples of this flourishing debate and controversy are Ken Wharton’s paper dismissing the computer simulation theory [49] and the conclusions of Zohar Ringel and Dmitry L. Kovrizhin [50] and of Andrew Masterson [51] on the same subject.

Those “infopomorphic” paradigm-related theories of everything are, very probably, just examples of the (false) perceptions (and beliefs) created by our expectations.

As already underlined above, the status of AC as an objective tendency is still under debate. So is the status of LAR.

These are perceptions (and beliefs) created by our expectations—examples of patternicity. AC (and LAR) are just examples of a specific patternicity case: apophenia.

Apophenia is defined by the Merriam-Webster Dictionary as “the tendency to perceive a connection or meaningful pattern between unrelated or random things (such as objects or ideas)” [2], by the RationalWiki as “the experience of seeing meaningful patterns or connections in random or meaningless data” [52] and by The Skeptic’s Dictionary as “the spontaneous perception of connections and meaningfulness of unrelated phenomena” [53].

Until now, several types of apophenia have been studied:

clustering illusion (“the cognitive bias of seeing a pattern in what is actually a random sequence of numbers or events” [54]);

confirmation bias (“the tendency for people to (consciously or unconsciously) seek out information that conforms to their pre-existing view points, and subsequently ignore information that goes against them, both positive and negative” [55]);

gambler’s fallacy (“the logical fallacy that a random process becomes less random, and more predictable, as it is repeated” [56]); and

pareidolia (“the phenomenon of recognizing patterns, shapes, and familiar objects in a vague and sometimes random stimulus” [57]).

AC and LAR seem to be just cognitive biases related to the representation of future-related expectations.

4. There Is More than Apophenia in Kurzweil’s TS; It Is Pareidolia

My two hypotheses about Kurzweil’s famous “law of accelerating returns” (LAR) as undoubtedly leading to TS are the following.

1. LAR is more likely just a new case of apophenia [58,59], as it shows “the spontaneous perception of connections and meaningfulness of unrelated phenomena” [53] and for centuries people have been perceiving the changes in science and technology as accelerating [23,58,60].

One of the reviewers of this paper wrote, “one absolutely cannot agree that exponential growth is a false pattern observed in random data as supposed by the notion of apophenia.”

This is Kurzweil’s opinion, too.

However, it is a false pattern not only because he manipulated data [23], but also, and more importantly, because exponential growth is just one of the possible models of growth [61] and it cannot continue indefinitely: sometimes it reaches an inflection point and becomes an exponential decay [61,62] or just a slowdown [38].

The models of growth could have several types of representation, not only the exponential one.

“There is a whole hierarchy of conceivable growth rates that are slower than exponential and faster than linear (in the long run)” [61] and “growth rates may also be faster than exponential.” In extreme cases, “when growth increases without bound in finite time, it is called hyperbolic growth. In between exponential and hyperbolic growth lie more classes of growth behavior” [38,61]. Ideally, growth continues “without bound in finite time” [38,61]. Sometimes exponential growth simply slows down [38,63].
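The growth classes named above can be compared side by side in a short sketch. It assumes the standard closed-form solutions of three simple differential equations; the function names, the starting value x0 = 1, the rate r = 1, and the ceiling k = 100 are illustrative assumptions rather than fitted values.

```python
import math

def exponential(t, x0=1.0, r=1.0):
    """Solution of dx/dt = r*x: large, but finite for every finite t."""
    return x0 * math.exp(r * t)

def hyperbolic(t, x0=1.0, r=1.0):
    """Solution of dx/dt = r*x**2: unbounded at the finite time t* = 1/(r*x0)."""
    t_star = 1.0 / (r * x0)
    if t >= t_star:
        return math.inf  # past the vertical asymptote the model has no finite value
    return x0 / (1.0 - r * x0 * t)

def logistic(t, x0=1.0, r=1.0, k=100.0):
    """Solution of dx/dt = r*x*(1 - x/k): levels off at the ceiling k."""
    return k / (1.0 + (k / x0 - 1.0) * math.exp(-r * t))

for t in (0.0, 0.5, 0.9, 0.99, 5.0, 20.0):
    print(f"t={t:5.2f}  exp={exponential(t):12.4g}  "
          f"hyp={hyperbolic(t):12.4g}  log={logistic(t):8.4g}")
```

Only the hyperbolic curve has a vertical asymptote; the exponential curve has none, and the logistic curve has a horizontal one. Which of these a given data series follows is an empirical question, not something the shape of a chart settles by itself.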

When rejecting exponential growth as a most likely false pattern, we come up against the following problem: it is based on the evolutionary acquisition of patternicity as a specific human adaptive behavior, in order to ensure or facilitate individual or collective survival.

Indeed, “the search for pertinent patterns in the world is ubiquitous among animals, is one of the main brain tasks and is crucial for survival and reproduction.” In the meantime, “it leads to the occurrence of false positives, known as patternicity: the general tendency to find meaningful/familiar patterns in meaningless noise or suggestive cluster” [64].

When claiming AC and consequently LAR and TS are objective tendencies, we are assuming everything is eventually explainable in a Mendeleevian-like table, in a solid, monolithic, and somehow mechanical explanation, so that every other possibility should be rejected. Yet the world, human society, human beings, and the very evolution of science and technology are complex and hardly predictable, as demonstrated in “The Age of Surprise” report [6].

Claiming AC/LAR/TS are objective tendencies leads, necessarily, to a subjective selection of data considered trustworthy because it fits our expectations. And this is a new form of apophenia.

So, how can we trust the claims related to TS’s possibility or even inevitability? Under these circumstances, as one of the reviewers correctly observed, when, maybe, “we can easily trust the claims related to TS’s possibility,” “we cannot so easily trust the claims related to TS’s inevitability.” I would add here: our trust is also a patternicity result.

2. TS could be considered more likely as a new case of pareidolia [41], because TS is AGI-based, and AGI is commonly and uncritically understood as a human-like intelligence.

The specificity of LAR’s apophenia and TS’s pareidolia (through AGI) is related to the direction of our perceptions and expectations—they are both future-related.

The arguments for such claims will be deployed in the following pages.

a. The Perfect New World of TS

As we saw, Kurzweil’s expectations (and beliefs) are the following: “technological change is exponential, contrary to the common-sense ‘intuitive linear’ view”; the “returns” are increasing exponentially; there is “exponential growth in the rate of exponential growth”; machine intelligence will surpass, within just a few decades, human intelligence, “leading to The Singularity,” that is, “technological change so rapid and profound it represents a rupture in the fabric of human history” based on “the merger of biological and nonbiological intelligence, immortal software-based humans, and ultra-high levels of intelligence that expand outward in the universe at the speed of light” [19].

For Kurzweil and the Singularitarians [65] (the adepts, the defenders, and those who promote Singularitarianism [66] in almost a religious way [67,68]), these expectations (and beliefs) seem to be confirmed by the pace of progress in science and technology.

Yet there is no single pace of progress, but rather several paces of progress.

Some critics of LAR observed that there are not only different rates of AC in technical innovation and scientific discovery, but also very different phenomena and processes appropriate to be mathematically included in graphical representation(s) of the accelerating change [38].

Meanwhile, for other researchers, TS is just intellectual fraud [69].

From such a perspective, Kurzweil’s LAR and Richard W. Paul’s plea for AC are just unnecessary reductions of the complexity of the tendencies in the evolution of science and technology to the linearity and predictability of our expectations.

Unifying and reducing all the rhythms and paces of progress to one perspective and equation is an exercise of the imagination, under the question: How will the perfect future world be?

b. (Again) “Accelerating Change” (AC) as Apophenia

Considering apophenia and pareidolia, let us remember, once more, some of their characteristics.

They can occur simultaneously, observes Robert Todd Carroll, as in the case of “seeing a birthmark pattern on a goat as the Arabic word for Allah and thinking you’ve received a message from God” or, as when seeing not only “the Virgin Mary in tree bark but believing the appearance is a divine sign” [58]. Here he discovers both apophenia and pareidolia.

Yet, “seeing an alien spaceship in a pattern of lights in the sky is an example of pareidolia,” but it becomes apophenia if you believe the aliens have picked you as their special envoy [62].

Moreover, continues Carroll, commonly, “apophenia provides a psychological explanation for many delusions based on sense perception” (“UFO sightings”; “hearing of sinister messages on records played backwards”), whereas “pareidolia explains Elvis, Bigfoot, and Loch Ness Monster sightings” [58].

Seeing the pattern of exponential acceleration in the pace of technological change and representing the exponential growth as an asymptote is apophenia, because there is no general consensus about the objective existence of AC (and LAR) and, in an attempt to break the ultimate unpredictability of the complexity of future evolution of technology and science, unrelated and/or unclearly related phenomena and/or processes are connected and considered meaningful based on our profound need for order [70].

It is a semiotic situation as “any fact becomes important when it’s connected to another” (Eco).

Even though it is a model that seems to work for particular cases, it still proves the ultimate weakness of a reductionist approach, as was true of the Ptolemaic model, too. Let us remember that from a false statement we can reach either a true or a false conclusion; in this case, from a reductionist subjective model we could reach both falsehood and truth in the idea of change in the evolution of technology. Because of this truth-falsehood status of the observation-based idea that there is sometimes accelerating change in some technologies’ evolution, we cannot extrapolate to AC (as a general objective tendency), nor consider the model we use (based on a positivist assumption of technology’s progress) to be necessarily true.
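The logical point recalled above, that a false premise is compatible with either a true or a false conclusion, can be checked with a small truth table. This is a minimal sketch of classical material implication; the helper name `implies` is an illustrative choice.

```python
from itertools import product

def implies(p, q):
    """Material implication: false only when p is true and q is false."""
    return (not p) or q

for p, q in product((True, False), repeat=2):
    print(f"premise={p!s:5}  conclusion={q!s:5}  implication holds={implies(p, q)}")
```

Both rows with a false premise satisfy the implication, so the occasional success of a model’s predictions does not, by itself, establish that the model’s assumptions are true.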

It is a special case of patternicity, an apophenia, when, based on subjective selection and arbitrary inferences [71], various evolutionary tendencies, from various fields, are merged into a single evolutionary pattern.

The graphic representation Kurzweil used to illustrate the growth of computing power is Figure 2 (above).

The data related to technological change and advancement have been altered, manipulated, and adjusted in order to fit his expectations related to AC, LAR, and TS.

Observing Kurzweil’s “methodology,” Nathan Pensky concluded, “Thus, ‘evolution’ can mean whatever Kurzweil wants it to mean.” It requires joining “disparate types of ‘evolution’” [72].

Under these conditions, the graph takes an exponential curve not because humans have moved inexorably along a track of “accelerating returns,” but because Kurzweil has “ordered” data points in order to “reflect the narrative he likes” [72].

The future-related TS’s apophenia [73] is a good example of the intentionality fallacy described by David Christopher Lane [74] and explained by Sandra L. Hubscher in her article on apophenia from The Skeptic’s Dictionary [59].

c. TS (through AGI) as Pareidolia

As a new type of pareidolia, TS could be described as a wrong, subjective visual representation of the expectation related to human future(s), under a human-like AGI presupposition/assumption, also using arbitrary inferences [71].

The best example is this common and uncritical expectation: AGI, leading, inexorably, to TS, will be human-like, at least in its first stages.

In this Information special issue, serious arguments have been deployed against such an idea [75].

Let us illustrate the enthusiastic defense of human-like AGI based on a short survey of bombastic headlines in the media: “One step closer to having human emotions: Scientists teach computers to feel ‘regret’ to improve their performance” [76], “Daydreaming simulated by computer model” [77], “Kimera Systems Delivers Nigel—World’s First Artificial General Intelligence” [78], “Meet the world’s ‘first psychopath AI’” [79], “Human-like A.I. will emerge in 5 to 10 years, say experts” [80], etc.

The expectation that AI/AGI is/will be human-like or will be congruent with human intelligence(s) and emotion(s) is the ground for a narcissistic and future-related TS pareidolia. In fact, AI/AGI could have different characteristics than human intelligence, even though we human beings have created the so-called “intelligent software” [81].

One cause of the anthropocentric claims of the Singularitarians could be this: we often forget that, from a false assumption based on an apophenic/pareidolic future-related hope (in this case, that there is AC/LAR and that AGI will necessarily be a human-like intelligence), one can deduce anything.

An example is Roman Yampolskiy’s rejection of Toby Walsh’s idea that the Singularity may not be near [82], in this special issue of Information.

TS’s pareidolia cannot be validated as true or false, even by the most brilliant minds.

5. The TS-Related “Complexity Fallacy”

Another cause of such anthropocentric claims could be related to a wrong understanding and management of complexity, not only in the research on TS, but in the very way we are creating our hardware and software.

All these discussions, debates, and controversies related to AC, AI/AGI, or TS resemble the uncoordinated songs of birds in a wood rather than a symphony. The number of different perspectives, definitions, and claims related to them keeps rising.

Under these circumstances, systematizations and classifications have been proposed in papers and/or books/collected papers signed/edited by various researchers: Anders Sandberg [31], Nick Bostrom [83], Nikola Danaylov (Socrates) [84], Amnon H. Eden, James H. Moor, Johnny H. Søraker and Eric Steinhart [32], Eliezer S. Yudkowsky [85], IEEE’s Spectrum. Special Report on Singularity [86], John Brockman [87], Adriana Braga and Robert K. Logan [75], etc.

In fact, the history of science and technology is full of attempts to reduce the richness of the facts, phenomena, entities, and beings to a Mendeleevian-like table. “This is the Faustian knowledge management philosophy assumed by the Wizard Apprentice” [88].

This is “a sign of a deep belief in the power of the taxonomy.” It is also “an effect of the so-called presupposition of the ‘generic (=linear and fully predictable) universality’—one of the best expressions of a mechanistic perspective on the world.” It is about “claiming that we could fully reverse a deduction,” usually “through strait abduction, in an attempt to rebuild the so called unity of the unbroken original mirror of the human knowledge using its fragments” [88].

This amounts to dismissing complexity in favor of reductionist simplicity.

I would call the complexity fallacy this reduction of what is complex (and so nonlinear and unpredictable, though also partially predictable) to what is linear and naturally predictable, that is, to what is just complicated. There are many AC, LAR, AI/AGI, and TS approaches deploying such a fallacy as an effect of an unconscious patternicity.

This situation suggests that AC, LAR, AI/AGI, and TS have to be studied from a perspective really based on complexity, as was already suggested by Paul Allen, with his “complexity brake” argument against TS [89], and by Viorel Guliciuc, with his examples of differences between the functioning of computers and of human networked minds [88].

Paul Allen observes, “the amazing intricacy of human cognition should serve as caution for those who claim that the Singularity is close,” as “without having a scientifically deep understanding of the cognition, we cannot create the software that could spark the Singularity.” So, “rather than the ever-accelerating advancement predicted by Kurzweil,” it is more likely “that progress towards this understanding is fundamentally slowed by the complexity brake” [89].

The complexity brake is described in these words: “as we go deeper and deeper in our understanding of natural systems,” we find we need “more and more specialized knowledge to characterize them, and we are forced to continuously expand our scientific theories in more and more complex ways.” So, “understanding the detailed mechanisms of human cognition is a task that is subject to this complexity brake” [89].

As quoted in this special issue of Information, “human minds are incredibly complex” [76] and the way humans think in patterns is very different from AI/AGI data processing [90]. So, an AGI leading to TS should necessarily embody human-like emotions in cognition [91].

Moreover, AI researchers (and let us assume that the same is applicable to AGI and TS researchers) “are only just beginning to theorize about how to effectively model the complex phenomena that give human cognition its unique flexibility: uncertainty, contextual sensitivity, rules of thumb, self-reflection, and the flashes of insight that are essential to higher level thought” [89].

As Robert K. Logan and Adriana Braga argued in their essay on the weakness of the AGI hypothesis, there is a real danger in devaluing “aspects of human intelligence,” as one cannot ignore, or consider in a reductionist way, “imagination, aesthetics, altruism, creativity, and wisdom” [75].

There is no need to revisit here the discussion about the strong AGI hypothesis and the dangers that misunderstanding AGI as human-like could bring with it, already detailed in their paper and/or in other papers from this special issue of Information [90,91,92].

Instead, what is important for this paper is the conclusion of one of the papers from this special issue that even if “it is possible to build a computer system that follows the same laws of thought and shows similar properties as the human mind,” “such an AGI will have neither a human body nor human experience, it will not behave exactly like a human, nor will it be ‘smarter than a human’ on all tasks” [93]. A similar conclusion, that the evolution of AGI will be something other than fully human-like, is underlined by other researchers, too [81].

Accepting these observations, let us add the findings of Thomas W. Meeks and Dilip V. Jeste in the neurobiology of wisdom when dealing with uncertainty: “prosocial attitudes/behaviors, social decision making/pragmatic knowledge of life, emotional homeostasis, reflection/self-understanding, value relativism/tolerance” [94].

The strong AGI hypothesis is not just “very complicated,” as noted by one of the reviewers of this paper, but complex, and complexity cannot be reduced to complicatedness.

However, the next observation of the reviewer can be fully accepted: “The author may want to revise the conclusion ‘AGI is impossible’ to ‘The possibility of AGI cannot be established by the arguments provided via TS and AC’.”

Indeed, we do not have, for now, enough evidence to decide if (human-like) AGI is possible or impossible, nor enough arguments to sustain the truth of the claim that AC, through AGI, will necessarily lead to TS.

This is why most of the current perspectives on AGI and TS seem to be unprepared to really deal with their complexities, and this is “why they are facing so many difficulties, uncertainties and so much haziness in the full and appropriate understanding of the TS” [88].

6. Conclusions

a. The appropriate study of AC, AI/AGI, and/or TS requires the complexity of networked minds in order to manage the complexity brake and avoid the complexity fallacy and different forms of wrong patternicity.

The argument for such a claim is, somewhat unexpectedly, offered by the very functioning of social networks specialized in and focused on research.

CrowdForge [95], EteRNA [96], and other experiments [97], for example, proved the power of networked minds given a research task to obtain, each time and despite missing data, “impossible” correct results where the most powerful computers and software were not able to reach any correct result.

EteRNA players, for example, were “extremely good at designing RNA’s.” Their results were most surprising “because the top algorithms published by scientists are not nearly so good. The gap is pretty dramatic.” This chasm was attributed to the fact that “humans are much better than machines at thinking through the scientific process and making intelligent decisions based on past results.” This conclusion is of the greatest importance for AGI and/or TS studies, as “a computer is completely flummoxed by not knowing the rules,” whereas human “players are unfazed: they look at the data, they make their designs, and they do phenomenally” [96].

What could explain the clear gap between human networked minds and computers’ results?

I think the answer is this: our intelligent artifacts are built on linear and predictable reasoning, not on complex, nonlinear, partially predictable, and unpredictable reasoning.

“Linear and predictable” in the above claim means without “imagination, aesthetics, altruism, creativity, and wisdom” [75]. Our intelligent artifacts are executing sets of logical steps, that is, algorithms. They cannot imagine, create, feel, or be wise. Everything they do is measurable and predictable.

Human reasoning is so complex that it cannot be reduced to a single logical rule/type of reasoning or to any set of logical rules/types of reasoning covering all possibilities. Human reasoning is more than “complicated”: it is complex and so irreducible to a machine-like model. In most cases, no unique logical rule is compulsory for obtaining a result in human reasoning, since from a false claim/sentence/proposition we are always in a position to obtain both truth and falsehood. Human logical “machines” have holes in their functioning. There is some predictability in human reasoning, but it is never fully predictable.

Yet, when it comes to the future, and especially TS, considered as a “rupture in the fabric of human history” [19], we cannot have enough reliable information about what it would be like, because we do not know what, why, and how exactly TS will be or even, more to the point, if TS will be at all. It is therefore unpredictable.

For example, even the merging of humans with machines is complicated, as there are many meanings, types, and grades of merging [98].

So, any number of networked computers will retain this weakness: they cannot find a result if some data is missing, whereas socially networked minds can, as in the examples above.

We have to keep in mind “the power of the human mind to collectively surpass the power of computation of our ‘smartest’ machines just because the machine (=AI/AGI), being created using a linear reasoning, cannot deal with the complexity” [88].

b. TS would require redesigning AGI based on complexity—which we are not sure is possible.

Reaching TS (through AGI) seems to not be possible without reaching real complexity (not complicatedness!) in designing our “intelligent” artifacts. Redesigning hardware‒software systems based on nonlinearity and unpredictability is not yet possible without fully understanding the complexity of our human, not-machine, minds. Maybe it will never be possible. Until then, TS is more likely a creation of our best expectations, an example of pareidolia, based on reductionism, subjective extrapolation, and imagination.

So, let us think (digitally) wisely and wait for the surprises the future(s) is/are preparing for us already!