3. The Partial-Knowledge Ising Model

Analyzing systems without the use of dynamical equations may be counter-intuitive, but it is done routinely; one can analyze 3D systems for which there are no dynamics, by definition. A particularly useful approach is found in classical statistical mechanics (CSM), because in that case one never knows the exact microscopic details, providing a well-defined framework in which to calculate probabilities that result from partial knowledge.

A specific example of this CSM-style logic can be found in the classical Ising model. Specifically, one can imagine agents with partial knowledge about the exact connections of the Ising model system, and then reveal knowledge to those agents in stages. The resulting probability-updates will be seen to have exactly the features thought to be impossible in dynamical systems. The fact that this updating is natural in a classical, dynamics-free system such as the Ising model will demonstrate that it is also natural in a dynamics-free (“all at once”) analysis of spacetime systems.

The classical Ising model considered here is very small to ensure simplicity: 3 or 4 lattice sites labeled by the index $j$, with each site associated with some discrete variable $\sigma_j = \pm 1$. The total energy of the system is taken to be proportional to

$$H(\sigma) = -\sum_{\langle i,j \rangle} \sigma_i \sigma_j, \tag{1}$$

where the sum $\langle i,j \rangle$ is over neighboring lattice sites. If the system is known to be in equilibrium at an inverse temperature $\beta = 1/(k_B T)$, the (joint) probability of any complete configuration $\sigma$ is proportional to $e^{-\beta H(\sigma)}$. These relative probabilities can be transformed into absolute probabilities by the usual normalization procedure, dividing by the partition function

$$Z = \sum_{\sigma} e^{-\beta H(\sigma)}, \tag{2}$$

where the sum is over all allowable configurations. Therefore, the probability for any specific configuration is given by

$$p(\sigma) = \frac{1}{Z}\, e^{-\beta H(\sigma)}. \tag{3}$$

Several obvious features should be stressed here, for later reference. Most importantly, there is exactly one actual state of the system $\sigma$ at any instant; other configurations are possible, but only one is real. This implies that (at any instant) the probabilities are subjective, in that they represent degrees of belief but not any feature of reality. Therefore, one could learn information about the system (for example, that $\sigma_1 = +1$) that would change $p(\sigma)$ without changing the system itself. (Indeed, learning such knowledge would also change $Z$, as it would restrict the sum to only global configurations where $\sigma_1 = +1$.) Finally, note that the probabilities do not take the form of local conditional probabilities, but are more naturally viewed as joint probabilities over all lattice points that make up the complete configuration $\sigma$.

The analysis below will work for any finite value of $\beta$, but it is convenient to take $\beta = \ln\sqrt{2}$. At this temperature, for a minimal system of two connected lattice sites, the lattice values are twice as likely to have the same sign as they are to have opposite signs: $p(+1,+1) = p(-1,-1) = 2\,p(+1,-1) = 2\,p(-1,+1)$.
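The two-to-one ratio can be checked by direct enumeration. The following is a minimal sketch, assuming unit coupling, $\beta = \tfrac{1}{2}\ln 2$ (the value that produces the stated ratio for a single bond), and zero-based site indices; the function name `ising_probabilities` and its parameters are hypothetical and chosen only for illustration.

```python
import itertools
import math


def ising_probabilities(n_sites, edges, beta, fixed=None):
    """Brute-force Boltzmann probabilities for a small Ising lattice.

    edges : list of (i, j) pairs of neighboring sites (zero-based indices)
    fixed : optional {site: value} dict encoding what the agent already knows
    """
    fixed = fixed or {}
    weights = {}
    for sigma in itertools.product((+1, -1), repeat=n_sites):
        # Skip configurations ruled out by the agent's knowledge.
        if any(sigma[j] != value for j, value in fixed.items()):
            continue
        # Energy: minus the sum of sigma_i * sigma_j over neighboring pairs.
        energy = -sum(sigma[i] * sigma[j] for i, j in edges)
        weights[sigma] = math.exp(-beta * energy)
    # The partition function only sums over the allowable configurations.
    z = sum(weights.values())
    return {sigma: w / z for sigma, w in weights.items()}


BETA = 0.5 * math.log(2.0)  # assumed value: makes aligned pairs twice as likely

# Two connected sites, nothing known in advance.
two_site = ising_probabilities(2, [(0, 1)], BETA)

# Same system after learning that site 0 is +1: the probabilities and Z change,
# but nothing about the lattice itself has been altered.
after_learning = ising_probabilities(2, [(0, 1)], BETA, fixed={0: +1})

for sigma, prob in two_site.items():
    print(sigma, round(prob, 4))
```

Passing `fixed` restricts the sum in the partition function to the configurations that remain allowable, which is how this sketch represents an update of knowledge rather than a change to the system.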

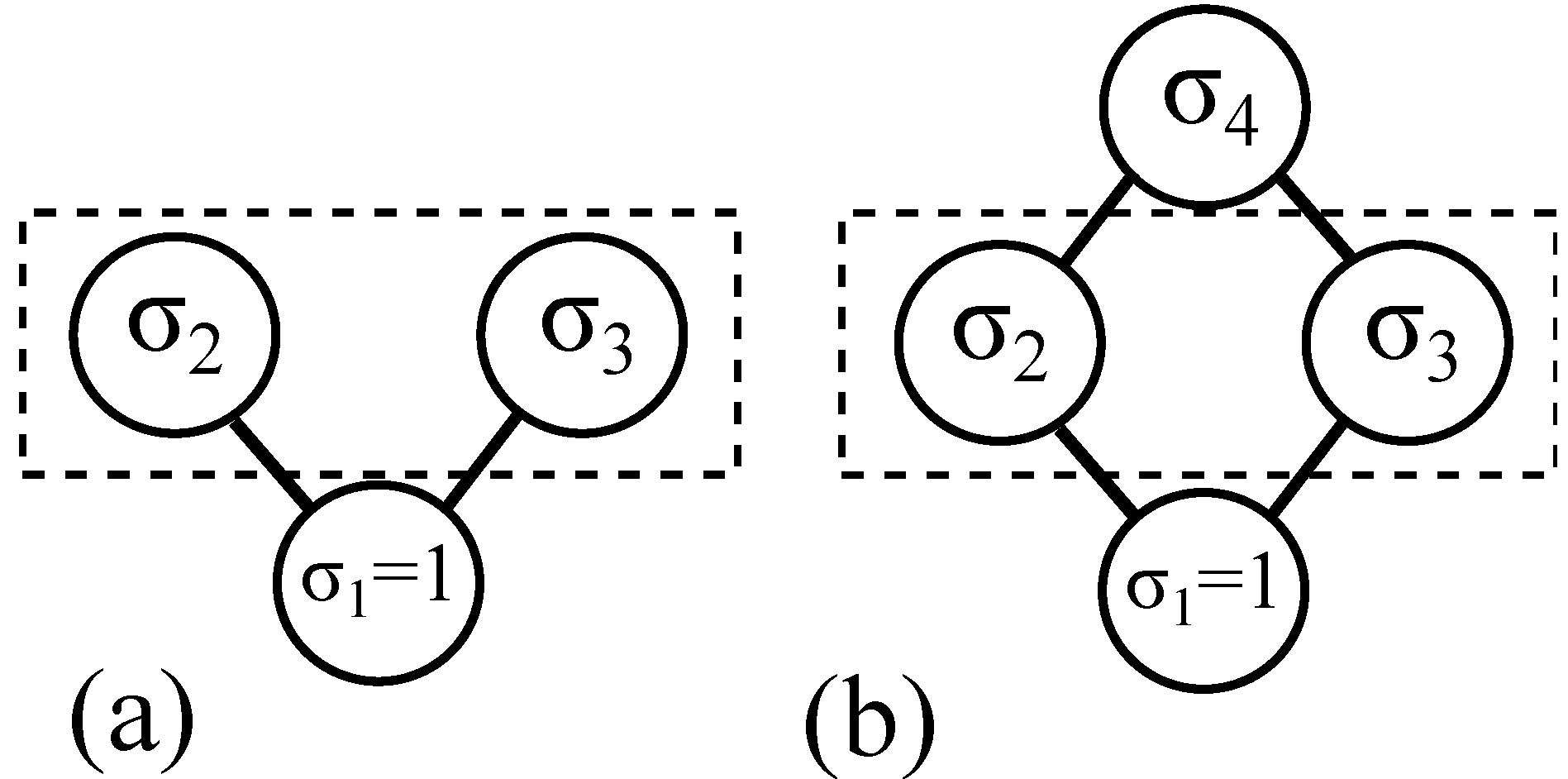

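For a simple lattice that will prove particularly relevant to quantum theory, consider Figure 1a. Each circle is a lattice site, and the lines show which sites are adjacent. With only three lattice sites, and knowledge that $\sigma_1 = +1$, it is a simple matter to calculate the probabilities $p_A(\sigma_2, \sigma_3)$. For $\beta = \ln\sqrt{2}$, the above equations yield probabilities:

$$p_A(+1,+1) = \frac{4}{9}, \qquad p_A(+1,-1) = p_A(-1,+1) = \frac{2}{9}, \qquad p_A(-1,-1) = \frac{1}{9}. \tag{4}$$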

Figure 1. Two geometries of an Ising model; in both cases one knows the bottom lattice site is $\sigma_1 = +1$. A dashed box indicates the subsystem of interest. The central example is where one does not know whether the geometry is that of Figure 1a or 1b.

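For Figure 1b, the probabilities require a slightly more involved calculation because the fourth lattice site changes the global geometry (from a 1D to a 2D Ising model). In this case, the joint probability $p_B(\sigma_2, \sigma_3)$ can be found by first calculating $p_B(\sigma_2, \sigma_3, \sigma_4)$ and then summing over both possibilities $\sigma_4 = \pm 1$. This process yields different probabilities:

$$p_B(+1,+1) = \frac{20}{41}, \qquad p_B(+1,-1) = p_B(-1,+1) = \frac{8}{41}, \qquad p_B(-1,-1) = \frac{5}{41}. \tag{5}$$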

The most interesting example is a further restriction where an agent does not know whether the actual geometry is that of Figure 1a or 1b. Specifically, one knows that $\sigma_1 = +1$, and that $\sigma_2$ and $\sigma_3$ are both adjacent to $\sigma_1$. But one does not know whether $\sigma_4$ is also adjacent to $\sigma_2$ and $\sigma_3$ (Figure 1b), or whether $\sigma_4$ is not even a lattice site (Figure 1a).

This is not to say there is no fact of the matter; this example presumes that there is some particular geometry, and it is merely unknown. This is not quite the same as the unknown values of a lattice site (which also have some particular state at any given instant), because the Ising model provides no clues as to how to calculate the probability of a geometry. All allowable states may have a known value of $H(\sigma)$ from Equation (1), but without knowledge of which states are allowable one cannot calculate $Z$ from Equation (2), and therefore one cannot calculate probabilities in the configuration space $\sigma$.

The obvious solution to such a dilemma is to use a configuration space conditional on the unknown geometry $G = A$ or $G = B$, assigning probabilities $p(\sigma|G)$. Indeed, this has already been done in the above analysis using the notation $p_G(\sigma_2, \sigma_3)$. Note that one cannot include $G$ in the configuration space as $p(\sigma, G)$ because (unlike $p(\sigma|G)$) such a distribution would have to be normalized over $G$ as well, and there is no information as to how to apportion the probabilities between the $G = A$ and $G = B$ cases.
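The geometry-conditional bookkeeping described above can be sketched numerically. The block below is an illustration only, assuming unit coupling, $\beta = \tfrac{1}{2}\ln 2$, and zero-based indices in which site 0 is the known bottom site, sites 1 and 2 are the two sites in the dashed box, and site 3 is the extra site present only in the four-site geometry. The names `marginal`, `GEOMETRIES`, and `p_given_G` are hypothetical.

```python
import itertools
import math


def marginal(n_sites, edges, beta, fixed, keep):
    """Marginal Boltzmann probabilities over the sites listed in `keep`.

    Configurations inconsistent with `fixed` are excluded; all sites not in
    `keep` are summed over.
    """
    weights = {}
    for sigma in itertools.product((+1, -1), repeat=n_sites):
        if any(sigma[j] != value for j, value in fixed.items()):
            continue
        energy = -sum(sigma[i] * sigma[j] for i, j in edges)
        key = tuple(sigma[j] for j in keep)
        weights[key] = weights.get(key, 0.0) + math.exp(-beta * energy)
    z = sum(weights.values())
    return {key: w / z for key, w in weights.items()}


BETA = 0.5 * math.log(2.0)  # assumed temperature

# Each geometry: (number of sites, list of neighboring pairs).
GEOMETRIES = {
    "A": (3, [(0, 1), (0, 2)]),                  # three-site lattice
    "B": (4, [(0, 1), (0, 2), (1, 3), (2, 3)]),  # extra site closes the loop
}

# One separately normalized distribution per geometry. No weight is assigned
# to the geometries themselves, since the model gives no rule for that.
p_given_G = {
    g: marginal(n, edges, BETA, fixed={0: +1}, keep=(1, 2))
    for g, (n, edges) in GEOMETRIES.items()
}

# Staged updating: learn the geometry (pick one entry), then learn one
# lattice value and update again within that geometry.
n_b, edges_b = GEOMETRIES["B"]
p_after_outcome = marginal(n_b, edges_b, BETA, fixed={0: +1, 1: +1}, keep=(2,))

for g, dist in p_given_G.items():
    print(g, {k: round(v, 4) for k, v in dist.items()})
```

Keeping the result as a dictionary keyed by geometry, rather than as a single joint table, mirrors the point made in the text: each conditional distribution is normalized on its own, and no prior over geometries is required.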

Given this natural and (presumably) unobjectionable response to such a lack of knowledge, the next section will explore a crucial mistake that would lead one to conclude that no underlying reality exists for this CSM-based model, despite the fact that an underlying reality does indeed exist (by construction). Then, by applying the above logic to a dynamics-free scenario in space and time, one can find a strong analogy to probabilities in quantum theory with an unknown future measurement.

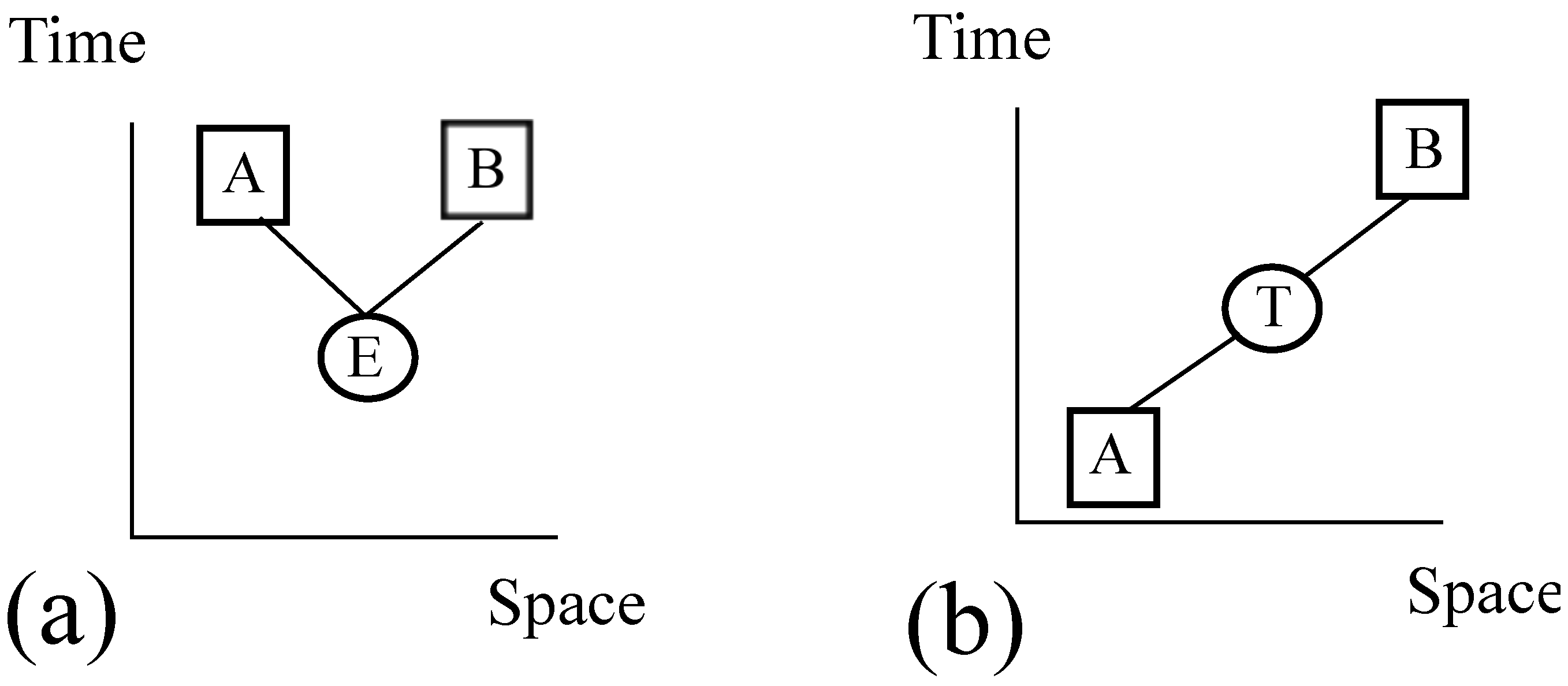

6. Lessons for the Quantum State

While the analysis in the preceding sections can inform a realistic interpretation of quantum theory, it also implies that assigning probabilities to instantaneous configuration spaces is not the most natural perspective. The above CSM-style analysis is performed on the entire system “at once”, rather than one time-slice at a time, in keeping with the spirit of the Lagrangian Schema discussed in Section 2. The implication is that the best way to understand quantum phenomena is not to think in terms of instantaneous states, but rather in terms of entire histories.

Restricting one’s attention to instantaneous subsystems is necessary if one would like to compare the above story to that of traditional quantum theory, but it is certainly not necessary in general. Indeed, general relativity tells us that global foliations of spacetime regions into spacelike hypersurfaces may not even always be possible or meaningful. Moreover, if the foliation is subjective, the objective reality lies in the 4D spacetime block. It is here where the histories reside, and to be realistic, one particular microhistory must really be there: say, some multicomponent covariant field $\mu$. A physics experiment is then about learning which microhistory $\mu$ actually occurs, via information-based updating; we gain relevant information upon preparation, measurement setting, and measurement itself.

Given this notion of an underlying reality, one can then work backwards and deduce what the traditional quantum state must correspond to. Consider a typical experimenter as an agent informed about a system’s preparation, but uninformed about that system’s eventual measurement. With no information about the future measurement geometry, the agent would like to keep track of all possible eventual measurements, or $p(\mu|G)$ for all possible values of $G$ (including all possible times when measurement(s) will be made). This must correspond to the quantum state, $\psi$.

We can check this result in a variety of ways. First of all, as the number of possible measurements increases, the dimensionality of the quantum state must naturally increase. This is correct, in that as particle number increases, the number of experimental options also increases (one can perform different measurements on each particle, and joint measurements as well). This increase in the space of G with particle number therefore explains the increase of the dimensionality of Hilbert space with particle number.

This perspective also naturally explains the reduction of the Hilbert space dimension for the case of identical particles. For two distinguishable particles, the experimenter can make a choice between (A,B)—measurement A on particle 1 and measurement B on particle 2—or (B,A), the opposite. But for two identical particles, the space of G (and the experimenter’s freedom) is reduced; there is no longer a difference between (A,B) and (B,A). And with a smaller space of G, the quantum state need not contain as much information, even if it encodes all possible future measurements.

Further insights can be gained by considering the (standard, apparent) wavefunction collapse when a measurement is finally made. Even if the experimenter refuses to update their prior probabilities upon learning the choice of measurement setting (the traditional mistake, motivated by the Independence Assumption), it would be even more incoherent for that agent to not update upon gaining direct new information about $\mu$ itself. So we do update our description of the system upon measurement, and apply a discontinuity to $\psi$.

However, through the above analysis, it should be clear that no physically discontinuous event need correspond to the collapse of $\psi$. After all, when one learns about the value of a lattice site in the Ising model, one simply updates one’s assignment of configuration-space probabilities; no one would suggest that this change of $p(\sigma)$ corresponds to a discontinuous change of the classical system. (The system might certainly be altered by the measurement procedure, but in a continuous manner, not as a sudden collapse.) Similarly, for a history-counting derivation of probabilities in a spacetime system, learning about an outcome would lead to an analogous updating. This picture is therefore consistent with the epistemic view of collapse propounded by Spekkens and others [1,2].

From this perspective, the quantum state represents a collection of (classical) information that a limited-knowledge agent has about a spacetime system at any one time. And it is not our best-possible description. In many cases we actually do know what measurement will be made on the system, or can at least vastly reduce the space of possible measurements. This indicates that a lower-dimensional quantum state is typically available. Indeed, quantum chemists are already aware of this, as they have developed techniques to compute the energies of complex quantum systems without using the full dimensionality of the Hilbert space implied by a strict reading of quantum physics (e.g., density functional theory).

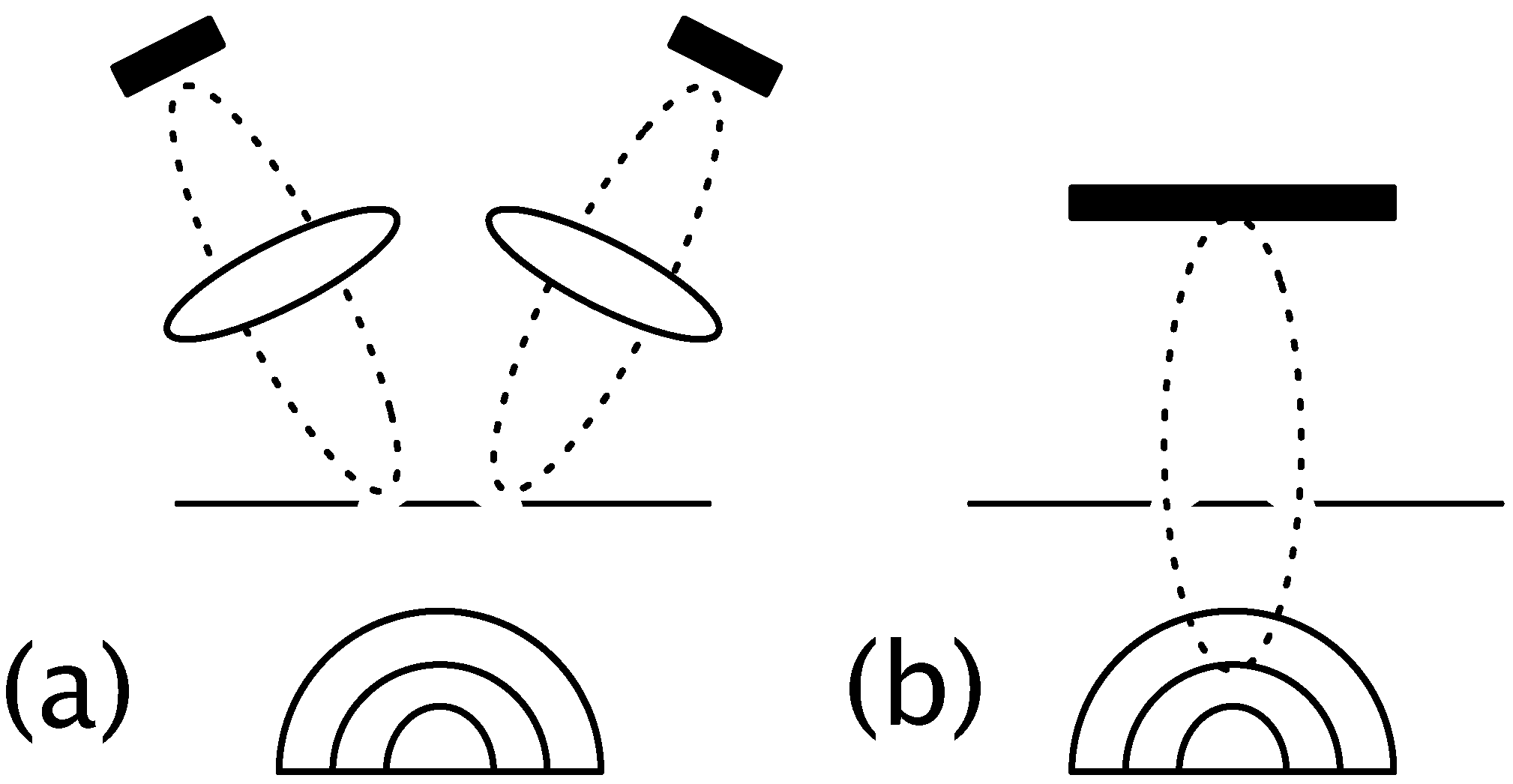

If we could determine exactly how to write $\psi$ in terms of $p(\mu|G)$, then it would be perfectly natural to update $\psi$ twice: once upon learning the type of measurement $G$, and then again upon learning the outcome. It seems evident that this two-step process would make the collapse seem far less unsettling, as the first update would reduce the possibilities to those that could exist in spacetime. For example, in the which-slit experiment depicted in Figure 2a, if one’s possibility-space of histories had already been reduced to a photon on one detector or a photon on the other detector, there would be no need to talk about superluminal influences upon measurement.

7. Conclusions

Before quantum experiments were ever performed, it may have seemed possible that the Independence Fallacy would have turned out to be harmless. In classical scenarios, dynamic laws do seem to accurately describe our universe, and refusing to update the past based on knowledge of future experimental geometry does seem to be justified. And yet, in the quantum case, this assumption prevents one from ascribing probability distributions to everything that happens when we don’t look. If the ultimate source of the Independence Fallacy lies in the dynamical laws of the Newtonian Schema, then before giving up on a spacetime-based reality, we should perhaps first consider the Lagrangian Schema as an alternative. The above analysis demonstrates that this alternative looks quite promising.

Still, the qualitative analogy between the Ising model and the double-slit experiment can only be pushed so far. And one can go too far in the no-dynamics direction: considering all histories, as in the path integral, would lead to the conclusion that the future would be almost completely uncorrelated with the past, contradicting macroscopic observations. But with the above framework in mind, and the path integral as a starting point, there are promising quantitative approaches. One intriguing option [16] is to limit the space of possible spacetime histories (perhaps those for which the total Lagrangian density is always zero). Such a limitation can be seen to cluster the remaining histories around classical (action-extremized) solutions, recovering Euler-Lagrange dynamics as a general guideline in the many-particle limit. Better yet, for at least one model, Schulman’s ansatz from Section 5 can be derived from a history-counting approach on an arbitrary spin state. And as with any deeper-level theory that purports to explain higher-level behavior, intriguing new predictions are also indicated.

Even before the technical project of developing such models is complete, the above analysis strongly indicates that one can have a spacetime-based reality to underlie and explain quantum theory. If this is the case, the “It from Bit” idea would lose its strongest arguments; quantum information could be about something real rather than the other way around. It is true that this approach requires one to give up the intuitive NSU story of dynamical state evolution, so one may still choose to cling to dynamics, voiding the above analysis. But to this author, at least, losing a spacetime-based reality to dynamics does not seem like a fair trade-off. After all, if maintaining dynamics as a deep principle leads one into some nebulous “informational immaterialism” [8], one gives up on any reality on which dynamics might operate in the first place.

More common than “It from Bit” is the view that maintains the Independence Fallacy by elevating configuration space into some new high-dimensional reality in its own right [21]. Ironically, that resulting state is interdependent across the entire 3N-dimensional configuration space, with the lone exception that it remains independent of its future. While this is certainly a possibility, the alternative proposed here is simply to link everything together in standard 4D spacetime, with full histories as the most natural entities on which to assign probability distributions. So long as one does not additionally impose dynamical laws, there is no theorem that one of these histories cannot be real. Furthermore, by analyzing entire 4D histories rather than states in a 3N-dimensional configuration space, one seems less likely to make conceptual mistakes based on pre-relativistic intuitions wherein time and space are completely distinct.

The central lesson of the above analysis is that, whether or not one wants to give up NSU-style dynamics, action principles provide us with a logical framework in which we can give up dynamics. The promise, if we do so, is a solution to the quantum reality problem [3], where quantum states can be ordinary information about something that really does happen in spacetime. This would not only motivate better descriptions of what is happening when we don’t look (incorporating our knowledge of future measurements), but would formally put quantum theory on the same dimensional footing as classical general relativity. After nearly a century of efforts to quantize gravity, perhaps it is time to acknowledge the possibility of an alternate strategy: casting the quantum state as information about a generally-covariant, spacetime-based reality.