3. Benchmark Datasets and Evaluation Metrics

This section presents and discusses benchmark datasets used for story generation and evaluation metrics that assess the performance of story generation models.

Table 1 provides a compilation of the datasets documented in the literature for VSG. Among these datasets, VIST [19] is the most widely used by the research community: it serves as the primary benchmark, and most publications in this field report their experimental results on it. The VIST-Edit dataset [20] provides human-edited versions of machine-generated stories, offering insights into human preferences for story quality. The Visual Writing Prompts dataset [21] is noteworthy for its focus on stories that are visually grounded in the characters and objects present in the images. Datasets of this kind allow for the exploration of more complex and imaginative narrative structures and serve as a resource for training models to generate fictional stories.

The evaluation metrics used to assess the performance of VSG models provide quantitative measures of the quality of generated narratives. Most of these metrics were originally developed for machine translation or summarization but have been adopted by the community for other tasks such as image captioning and story generation: instead of comparing a generated text with a reference translation or summary, the generated text is compared with a reference caption in the case of image captioning, or with a reference story in the case of story generation. These metrics include Bilingual Evaluation Understudy (BLEU) [29], Self-BLEU [30], Metric for Evaluation of Translation with Explicit ORdering (METEOR) [31], Consensus-based Image Description Evaluation (CIDEr) [32], Recall-Oriented Understudy for Gisting Evaluation (ROUGE) [33], Perplexity [3], and BERTScore [34]. These metrics guide the development and refinement of VSG models by offering insights into linguistic fluency (Perplexity), textual diversity (Self-BLEU), and, above all, lexical overlap with reference texts. They focus on specific linguistic and content-related aspects, providing quantitative measures of precision, recall, and consensus. However, they are limited by their focus on surface-level textual similarity rather than deep narrative alignment: since a story can be retold in many ways, from different perspectives, while preserving the original message, a valid generated story may differ substantially from the reference in its specific wording and still be penalized.
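To make the notion of lexical overlap concrete, the following minimal Python sketch computes sentence-level BLEU (clipped n-gram precision combined with a brevity penalty) and Self-BLEU (the average BLEU of each generated text against all the other generated texts, so higher values indicate less diversity). It is an illustration under stated assumptions, not a reference implementation: whitespace tokenization and add-one smoothing for n > 1 are choices made here, not part of the original BLEU definition. The example shows how a paraphrase describing the same event scores far below an exact copy of the reference.

```python
import math
from collections import Counter
from typing import List, Sequence


def ngram_counts(tokens: Sequence[str], n: int) -> Counter:
    """Count the n-grams (as tuples) occurring in a token sequence."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(hypothesis: List[str], references: List[List[str]], max_n: int = 4) -> float:
    """Sentence-level BLEU with uniform n-gram weights.

    Assumption: add-one smoothing for n > 1, so a missing 4-gram does not
    force the score to zero; the original corpus-level BLEU does not smooth.
    """
    if not hypothesis or not references:
        return 0.0
    log_precision_sum = 0.0
    for n in range(1, max_n + 1):
        hyp_counts = ngram_counts(hypothesis, n)
        # Clip each hypothesis n-gram by its maximum count in any single reference.
        max_ref_counts: Counter = Counter()
        for ref in references:
            for gram, count in ngram_counts(ref, n).items():
                max_ref_counts[gram] = max(max_ref_counts[gram], count)
        matched = sum(min(count, max_ref_counts[gram]) for gram, count in hyp_counts.items())
        total = sum(hyp_counts.values())
        if n > 1:
            matched, total = matched + 1, total + 1
        if matched == 0:
            return 0.0  # no unigram overlap at all
        log_precision_sum += math.log(matched / total)
    # Brevity penalty against the reference length closest to the hypothesis length.
    c = len(hypothesis)
    r = min((len(ref) for ref in references), key=lambda length: (abs(length - c), length))
    brevity_penalty = 1.0 if c > r else math.exp(1.0 - r / c)
    return brevity_penalty * math.exp(log_precision_sum / max_n)


def self_bleu(texts: List[List[str]], max_n: int = 4) -> float:
    """Average BLEU of each text against all the others (higher means less diverse)."""
    if len(texts) < 2:
        raise ValueError("Self-BLEU needs at least two texts")
    scores = [sentence_bleu(text, texts[:i] + texts[i + 1:], max_n) for i, text in enumerate(texts)]
    return sum(scores) / len(scores)


reference = "the family gathered at the beach for a picnic".split()
paraphrase = "everyone in the family went to the seaside to eat outdoors".split()
print(sentence_bleu(reference, [reference]))   # identical wording: 1.0
print(sentence_bleu(paraphrase, [reference]))  # same event, different wording: about 0.15
```

Although the paraphrase conveys essentially the same event, it shares only a handful of n-grams with the reference, so its score is a small fraction of the exact copy's.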

Huang et al. [19] analyzed how well BLEU and METEOR correlate with human judgment in the context of VSG, using several correlation measures. The results in Table 2 show that METEOR, which places a strong emphasis on paraphrasing, correlates more strongly with human judgment than BLEU. Nevertheless, even METEOR's correlation, the highest among the evaluated metrics, remains low, suggesting that a large gap still exists between automatic metrics and human judgment.
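An analysis of this kind pairs each story's automatic score with its human rating and computes a correlation coefficient over all stories. The sketch below implements two common choices, Pearson (linear) and Spearman (rank-based, with tied values sharing the average rank), in plain Python. The scores in the example are hypothetical and are not the values reported in Table 2.

```python
import math
from typing import List, Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equally long score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equally long lists with at least two items")
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined for constant scores")
    return cov / (spread_x * spread_y)


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for k in range(start, end + 1):
            ranks[order[k]] = (start + end) / 2 + 1
        start = end + 1
    return ranks


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman rank correlation: Pearson correlation of the tie-averaged ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical per-story scores, for illustration only.
metric_scores = [0.21, 0.35, 0.18, 0.40, 0.27]
human_ratings = [3, 4, 2, 3, 5]
print(pearson(metric_scores, human_ratings), spearman(metric_scores, human_ratings))
```

Spearman only asks whether the metric orders stories the way humans do, which is often the more relevant question when a metric is used to rank systems.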

To evaluate VSG models, additional metrics that capture the creative aspects of storytelling may be necessary. These metrics might assess the uniqueness, novelty, and diversity of generated narratives, ensuring that models produce stories that go beyond mere data memorization. Coherence metrics could delve deeper into the logical flow of events, ensuring that generated narratives maintain a consistent and plausible storyline.

The proliferation of diverse Large Language Models (LLMs), such as the GPT [35,36,37,38], Llama [39,40], Mistral [41,42], and Claude [43,44,45,46] families, has led to the development of systematic scoring systems that aim for objective assessments across a wide array of language understanding tasks. These scoring systems include Multi-turn Benchmark (MT-Bench) [47] and Chatbot Arena [47], briefly described below. Although they were initially developed for the evaluation of chatbots by human evaluators, they have been adapted to use an LLM as the evaluator, achieving results close to those obtained by human evaluators.

As LLMs continue to improve, they show potential to replace human annotators in many tasks [48]. Metrics to evaluate stories could be developed based on LLMs [47], possibly following the underlying principles discussed above.

MT-Bench is a benchmark tailored to test the multi-turn conversation and instruction-following capabilities of LLM-based chat assistants. It includes 80 high-quality multi-turn questions distributed across diverse categories such as writing, roleplay, reasoning, and knowledge domains. In the MT-Bench evaluation process, the evaluator is presented with two conversations, each generated by a different LLM-based chat assistant, and must decide which assistant (A or B) better followed the instructions and answered the questions, or declare a tie. For VSG evaluation, this framework could be adapted by using an LLM as an automated judge to compare stories generated by different models, evaluating them on criteria such as narrative coherence, visual grounding, and creativity.
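As a rough illustration of how such an adaptation might look, the sketch below builds a pairwise prompt for two stories written for the same image sequence and queries a judge twice with the story order swapped. A win is counted only when both verdicts agree, and inconsistent verdicts become ties, a conservative way to reduce position bias. The `Judge` callable, the prompt wording, and the use of textual image descriptions instead of the images themselves are hypothetical; no particular LLM API is assumed, and the toy judge merely stands in for a real model.

```python
from collections import Counter
from typing import Callable, Sequence, Tuple

# Hypothetical judge interface: any function mapping a prompt to "A", "B", or "tie".
Judge = Callable[[str], str]

# Illustrative VSG criteria taken from the discussion of MT-Bench adaptation.
CRITERIA = ("narrative coherence", "visual grounding", "creativity")


def build_prompt(image_descriptions: Sequence[str], story_a: str, story_b: str) -> str:
    """Assemble a pairwise-comparison prompt (wording is an assumption, not MT-Bench's template)."""
    images = "\n".join(f"Image {k + 1}: {text}" for k, text in enumerate(image_descriptions))
    return (
        "Two stories were written for the same image sequence.\n"
        f"{images}\n\n"
        f"Story A:\n{story_a}\n\n"
        f"Story B:\n{story_b}\n\n"
        f"Judge which story is better in terms of {', '.join(CRITERIA)}. "
        "Answer with exactly one of: A, B, tie."
    )


def compare(judge: Judge, image_descriptions: Sequence[str], story_x: str, story_y: str) -> str:
    """Return 'x', 'y', or 'tie', querying the judge in both presentation orders."""
    first = judge(build_prompt(image_descriptions, story_x, story_y))
    second = judge(build_prompt(image_descriptions, story_y, story_x))
    first_winner = {"A": "x", "B": "y"}.get(first, "tie")
    second_winner = {"A": "y", "B": "x"}.get(second, "tie")
    return first_winner if first_winner == second_winner else "tie"


def win_rate(judge: Judge, samples: Sequence[Tuple[Sequence[str], str, str]]) -> float:
    """Fraction of samples won by story_x, counting ties as half a win."""
    outcomes = Counter(compare(judge, *sample) for sample in samples)
    return (outcomes["x"] + 0.5 * outcomes["tie"]) / len(samples)


def dragon_fan(prompt: str) -> str:
    # Toy stand-in for an LLM judge: prefers whichever story mentions a dragon.
    return "A" if prompt.index("dragon") < prompt.index("Story B:") else "B"


images = ["a dark cave", "a knight with a torch"]
story_x = "A dragon slept in the cave until the knight arrived."
story_y = "The knight explored the cave and went home."
print(compare(dragon_fan, images, story_x, story_y))
```

A judge that always answers "A" regardless of content would produce only ties under this scheme, which is the intended effect of the order swap.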

Chatbot Arena introduces a crowd-sourcing approach based on anonymous battles between LLM-based chatbot models. Users interact with two anonymous models simultaneously, pose identical questions to both, and then vote for the model that provides the preferred response. The Elo rating system [47] is employed to rank the models based on their performance in these battles. This rating system assigns a numerical score to each model and, after each encounter, adjusts the scores up or down to reflect each model's relative ability to satisfy user queries compared with its opponents. A similar evaluation system for VSG could present pairs of stories generated from the same image sequence to an LLM judge and rank models by story quality across multiple dimensions, such as visual grounding, narrative coherence, and creativity, without requiring human annotators.
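The Elo update can be written in a few lines. In this sketch, the expected score of model A against model B is 1 / (1 + 10^((R_B − R_A)/400)), and each battle moves both ratings by K times the difference between the actual and the expected outcome. K = 32 and an initial rating of 1000 are illustrative choices, not necessarily the values used by Chatbot Arena, and the model names and verdicts are hypothetical.

```python
from typing import Dict, Iterable, Tuple


def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A beats B under the Elo model (logistic, base 10, scale 400)."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def update_elo(
    ratings: Dict[str, float],
    battles: Iterable[Tuple[str, str, float]],
    k: float = 32.0,
    initial: float = 1000.0,
) -> Dict[str, float]:
    """Apply battles in order; outcome is 1.0 if A wins, 0.0 if B wins, 0.5 for a tie."""
    ratings = dict(ratings)  # do not mutate the caller's dictionary
    for model_a, model_b, outcome in battles:
        rating_a = ratings.setdefault(model_a, initial)
        rating_b = ratings.setdefault(model_b, initial)
        expected_a = expected_score(rating_a, rating_b)
        ratings[model_a] = rating_a + k * (outcome - expected_a)
        ratings[model_b] = rating_b + k * ((1.0 - outcome) - (1.0 - expected_a))
    return ratings


# Hypothetical story-pair verdicts between three VSG models.
battles = [("model_x", "model_y", 1.0), ("model_y", "model_z", 0.5), ("model_x", "model_z", 1.0)]
print(update_elo({}, battles))
```

Because updates are applied one battle at a time, the final ratings depend on the order in which battles are processed.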

In summary, while current metrics provide insights into specific dimensions of VSG, the development of new metrics may be necessary to holistically evaluate creativity, coherence, and emotional engagement, ensuring a more comprehensive understanding of the capabilities and limitations of these models. While LLMs show promise as automated evaluators, their use requires addressing several challenges. Prompt sensitivity can affect consistency, model updates may change evaluation criteria, and training data biases may favor certain narrative styles. Subjective dimensions like creativity remain difficult to calibrate. Rigorous validation is needed, including correlation studies with human judgments, inter-annotator reliability testing, and robustness evaluation. Despite these limitations, LLMs offer potential for scalable evaluation frameworks if properly validated.

5. Visual Story Generation

VSG combines computer vision and NLP to create coherent narratives from sequences of images or videos. This task extends beyond simple image captioning by requiring models to understand temporal relationships, infer causal connections between visual scenes, and generate engaging stories that capture the underlying narrative flow. Unlike video captioning, which focuses on the objective description of visual content, VSG can also speculate on the motivations and emotions of characters, as long as such interpretations do not contradict the visual content.

The field has evolved from early encoder–decoder architectures based on CNNs and RNNs to Transformer-based models that leverage large-scale pretraining. These approaches must address challenges including visual understanding, temporal coherence, and narrative creativity while remaining grounded in the provided visual content. The following subsections present the key methodologies and their contributions to advancing VSG capabilities.

5.3. Transitional Adaptation of Pretrained Models for Visual Storytelling

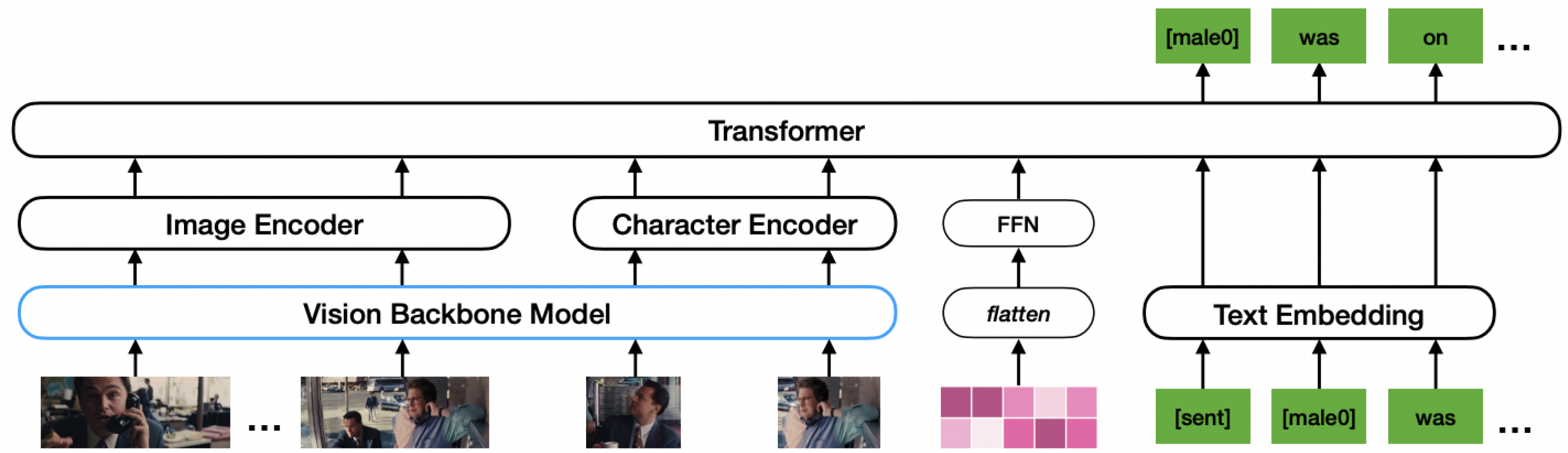

Yu et al. [24] proposed Transitional Adaptation of Pretrained Model (TAPM), which aims to improve the generation of textual descriptions for visual content, particularly in VSG tasks, by bridging the gap between pretrained language models and visual encoders. Previous models for vision-to-language generation typically pretrain a visual encoder and a language generator separately and then jointly fine-tune them for the target task. In contrast, TAPM proposes a transitional adaptation task that harmonizes the visual encoder and the language model for downstream tasks such as VSG, mitigating the discord between visual specificity and language fluency that arises from training the two components separately on large corpora of visual and text data. This adaptation is a simpler alignment task that relies solely on visual inputs, eliminating the need for text labels.

TAPM's components are designed to enhance the quality of textual descriptions for visual content in storytelling tasks. They comprise a visual encoder, a language generator, adaptation training with a sequential coherence loss, training with the adaptation loss, and a fine-tuning and inference process. The visual encoder, a pretrained model, extracts features from images or videos. In TAPM, it plays a central role during the adaptation phase, where it is combined with the language generator to fuse visual and textual information. The pretrained language generator is responsible for converting visual information into textual descriptions. During the adaptation phase, it generates video and text embeddings and aligns the textual representations with the corresponding visual features using a sequential coherence loss.

The loss function divides sequential coherence into three components: past, current, and future matching losses. The past matching loss uses a Fully Connected (FC) layer to project the text representation of video i, drawing it nearer to the visual representation of the preceding video (i − 1) and pushing it away from those of non-sequential videos. The future matching loss projects the same text representation through a distinct FC layer and aligns it with the visual representation of the subsequent video (i + 1). The current matching loss, in turn, aligns the visual representation of video i with its text representation through a third FC layer. All three components thus operate on FC projections into the corresponding visual space, pulling the embeddings of correct matches closer and pushing incorrect matches further apart. This is implemented with margin ranking losses that contrast correct matches with incorrect ones. The final sequential coherence loss for a given video i is formulated as shown in Equation (1), where cos is the cosine similarity and j represents indices of incorrect matches.
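Equation (1) is not reproduced here; the sketch below encodes one reading of the description above. For each of the past, current, and future projections, it sums over every incorrect match j a margin ranking term max(0, margin − cos(W h_i, v_k) + cos(W h_i, v_j)), where h_i is the text representation of video i, v_k is the visual representation of the target video (k = i − 1, i, or i + 1), and W is the corresponding FC layer. The notation, the unit margin, the bias-free FC layers, and the skipping of terms at sequence boundaries are assumptions made for illustration; the exact formulation is given in [24].

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]
Vector = List[float]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0


def fc(weight: Matrix, x: Sequence[float]) -> Vector:
    """Bias-free fully connected layer y = W x (stand-in for a learned projection)."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weight]


def margin_ranking(anchor: Vector, positive: Vector, negatives: List[Vector], margin: float) -> float:
    """Sum over negatives of max(0, margin - cos(anchor, positive) + cos(anchor, negative))."""
    positive_sim = cosine(anchor, positive)
    return sum(max(0.0, margin - positive_sim + cosine(anchor, neg)) for neg in negatives)


def sequential_coherence_loss(
    text_reps: List[Vector],
    visual_reps: List[Vector],
    i: int,
    fc_past: Matrix,
    fc_current: Matrix,
    fc_future: Matrix,
    negative_indices: List[int],
    margin: float = 1.0,
) -> float:
    """Past, current, and future matching losses for video i.

    negative_indices: indices j of non-sequential videos used as incorrect matches.
    Terms whose target (i - 1 or i + 1) falls outside the sequence are skipped.
    """
    negatives = [visual_reps[j] for j in negative_indices]
    loss = 0.0
    for weight, target in ((fc_past, i - 1), (fc_current, i), (fc_future, i + 1)):
        if 0 <= target < len(visual_reps):
            projected = fc(weight, text_reps[i])
            loss += margin_ranking(projected, visual_reps[target], negatives, margin)
    return loss


# Toy 4-D example: videos 0-2 are sequential, video 3 is a non-sequential negative.
visual = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]
text = [[0.0] * 4, [1.0, 1.0, 1.0, 0.0], [0.0] * 4, [0.0] * 4]
identity = [[1.0 if r == c else 0.0 for c in range(4)] for r in range(4)]
print(sequential_coherence_loss(text, visual, 1, identity, identity, identity, [3]))
```

With identity projections, the text representation of video 1 is only partially aligned with each neighbour, so every term stays positive; learned FC layers that map it exactly onto v_0, v_1, and v_2 would drive the loss to zero.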

TAPM uses a split-training strategy to optimize model performance. Initially, the visual encoder undergoes adaptation training with the adaptation loss, while the text encoder and language generator remain fixed. Subsequently, all components are jointly updated with the generation loss, allowing the model to optimize the adaptation task before addressing the more challenging generation objective. After the adaptation and split-training phases, TAPM undergoes fine-tuning. The model is then ready for the inference phase, generating captions for unseen visual inputs.
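The two-stage schedule can be illustrated with a toy example in which each component is reduced to a single scalar parameter and updated by plain gradient descent. The component names, the quadratic toy losses, and the learning rate are hypothetical; the sketch only shows that the first stage updates the visual encoder alone while the second stage updates all components jointly.

```python
from typing import Callable, Dict, Set

Params = Dict[str, float]
GradFn = Callable[[Params], Dict[str, float]]


def sgd_step(params: Params, grads: Dict[str, float], trainable: Set[str], lr: float) -> Params:
    """One gradient-descent step that leaves frozen components unchanged."""
    return {
        name: (value - lr * grads.get(name, 0.0)) if name in trainable else value
        for name, value in params.items()
    }


def split_training(
    params: Params,
    adaptation_grad: GradFn,
    generation_grad: GradFn,
    adaptation_steps: int,
    generation_steps: int,
    lr: float = 0.1,
) -> Params:
    # Stage 1: only the visual encoder is trained, using the adaptation loss;
    # the text encoder and language generator stay frozen.
    for _ in range(adaptation_steps):
        params = sgd_step(params, adaptation_grad(params), {"visual_encoder"}, lr)
    # Stage 2: every component is updated jointly with the generation loss.
    for _ in range(generation_steps):
        params = sgd_step(params, generation_grad(params), set(params), lr)
    return params


def quadratic_grad(target: float) -> GradFn:
    # Gradient of the toy loss (p - target)^2 for every component.
    return lambda p: {name: 2.0 * (value - target) for name, value in p.items()}


start = {"visual_encoder": 0.0, "text_encoder": 0.0, "language_generator": 0.0}
print(split_training(start, quadratic_grad(1.0), quadratic_grad(1.0), adaptation_steps=1, generation_steps=0))
```

Even though the adaptation gradient is nonzero for every component, only the visual encoder moves during the first stage.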

Table 5 and Table 6 show results for TAPM against selected baselines on the LSMDC 2019 and VIST datasets. Table 7 shows human evaluation results in which TAPM surpasses adversarial baselines on LSMDC 2019. On VIST, it surpasses the XE [25] and AREL [25] baselines in relevance, expressiveness, and concreteness, as shown in Table 8. These results highlight its strengths in word choice and contextual accuracy, suggesting an ability to capture causal relationships between images. However, its scores remain far from human performance, indicating that there is still room for improvement.

Author Contributions

Investigation, D.A.P.O.; writing—original draft preparation, D.A.P.O.; writing—review and editing, D.A.P.O., E.R. and D.M.d.M.; supervision, E.R. and D.M.d.M.; funding acquisition, D.A.P.O. and D.M.d.M. All authors have read and agreed to the published version of the manuscript.

Funding

Daniel A. P. Oliveira is supported by a scholarship granted by Fundação para a Ciência e Tecnologia (FCT), with reference 2021.06750.BD. Additionally, this work was supported by Portuguese national funds through FCT, with reference UIDB/50021/2020.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article as it is a survey paper reviewing existing literature.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Hühn, P.; Meister, J.; Pier, J.; Schmid, W. Handbook of Narratology; De Gruyter Handbook; De Gruyter: Berlin, Germany, 2014.

- Abbott, H.P. The Cambridge Introduction to Narrative, 2nd ed.; Cambridge Introductions to Literature, Cambridge University Press: Cambridge, UK, 2008.

- Jurafsky, D.; Martin, J.H. Speech and Language Processing: An Introduction to Natural Language Processing, Computational Linguistics, and Speech Recognition; Pearson Prentice Hall: Upper Saddle River, NJ, USA, 2009.

- Szeliski, R. Computer Vision: Algorithms and Applications; Texts in Computer Science; Springer: London, UK, 2010.

- Gervas, P. Computational Approaches to Storytelling and Creativity. AI Mag. 2009, 30, 49.

- Krishna, R.; Hata, K.; Ren, F.; Fei-Fei, L.; Niebles, J.C. Dense-Captioning Events in Videos. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 706–715.

- Xu, K.; Ba, J.; Kiros, R.; Cho, K.; Courville, A.C.; Salakhutdinov, R.; Zemel, R.S.; Bengio, Y. Show, Attend and Tell: Neural Image Caption Generation with Visual Attention. In Proceedings of the International Conference on Machine Learning, Lille, France, 7–9 July 2015.

- Fan, A.; Lewis, M.; Dauphin, Y. Hierarchical Neural Story Generation. In Proceedings of the Annual Meeting of the Association for Computational Linguistics, Melbourne, Australia, 15–20 July 2018.

- Peng, N.; Ghazvininejad, M.; May, J.; Knight, K. Towards Controllable Story Generation. In Proceedings of the First Workshop on Storytelling, New Orleans, LA, USA, 5 June 2018; pp. 43–49.

- Simpson, J. Oxford English Dictionary: Version 3.0: Upgrade Version; Oxford University Press: Oxford, UK, 2002.

- Lau, S.Y.; Chen, C.J. Designing a Virtual Reality (VR) Storytelling System for Educational Purposes. In Technological Developments in Education and Automation; Springer: Dordrecht, The Netherlands, 2008; pp. 135–138.

- Mitchell, D. Cloud Atlas: A Novel; Random House Publishing Group: New York, NY, USA, 2008.

- DiBattista, M. Novel Characters: A Genealogy; Wiley: Hoboken, NJ, USA, 2011; pp. 14–20.

- Griffith, K. Writing Essays About Literature; Cengage Learning: Boston, MA, USA, 2010; p. 40.

- Truby, J. The Anatomy of Story: 22 Steps to Becoming a Master Storyteller; Faber & Faber: London, UK, 2007; p. 145.

- Rowling, J. Harry Potter and the Sorcerer’s Stone; Harry Potter, Pottermore Publishing: London, UK, 2015.

- Dibell, A. Elements of Fiction Writing—Plot; F+W Media: Cincinnati, OH, USA, 1999; pp. 5–6.

- Pinault, D. Story-Telling Techniques in the Arabian Nights; Studies in Arabic Literature; Brill: Leiden, The Netherlands, 1992; Volume 15.

- Huang, T.H.K.; Ferraro, F.; Mostafazadeh, N.; Misra, I.; Agrawal, A.; Devlin, J.; Girshick, R.; He, X.; Kohli, P.; Batra, D.; et al. Visual Storytelling. In Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, San Diego, CA, USA, 12–17 June 2016; pp. 1233–1239.

- Hsu, T.Y.; Huang, C.Y.; Hsu, Y.C.; Huang, T.H. Visual Story Post-Editing. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; Korhonen, A., Traum, D., Màrquez, L., Eds.; Association for Computational Linguistics: Florence, Italy, 2019; pp. 6581–6586.

- Hong, X.; Sayeed, A.; Mehra, K.; Demberg, V.; Schiele, B. Visual Writing Prompts: Character-Grounded Story Generation with Curated Image Sequences. Trans. Assoc. Comput. Linguist. 2023, 11, 565–581.

- Park, C.C.; Kim, G. Expressing an Image Stream with a Sequence of Natural Sentences. In Proceedings of the Advances in Neural Information Processing Systems; Cortes, C., Lawrence, N., Lee, D., Sugiyama, M., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2015; Volume 28.

- Rohrbach, A.; Torabi, A.; Rohrbach, M.; Tandon, N.; Pal, C.J.; Larochelle, H.; Courville, A.C.; Schiele, B. Movie Description. Int. J. Comput. Vis. 2016, 123, 94–120.

- Yu, Y.; Chung, J.; Yun, H.; Kim, J.; Kim, G. Transitional Adaptation of Pretrained Models for Visual Storytelling. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 15–19 June 2021; pp. 12658–12668.

- Wang, X.; Chen, W.; Wang, Y.F.; Wang, W.Y. No Metrics Are Perfect: Adversarial Reward Learning for Visual Storytelling. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Melbourne, Australia, 15–20 July 2018; Gurevych, I., Miyao, Y., Eds.; Association for Computational Linguistics: Melbourne, Australia, 2018; pp. 899–909.

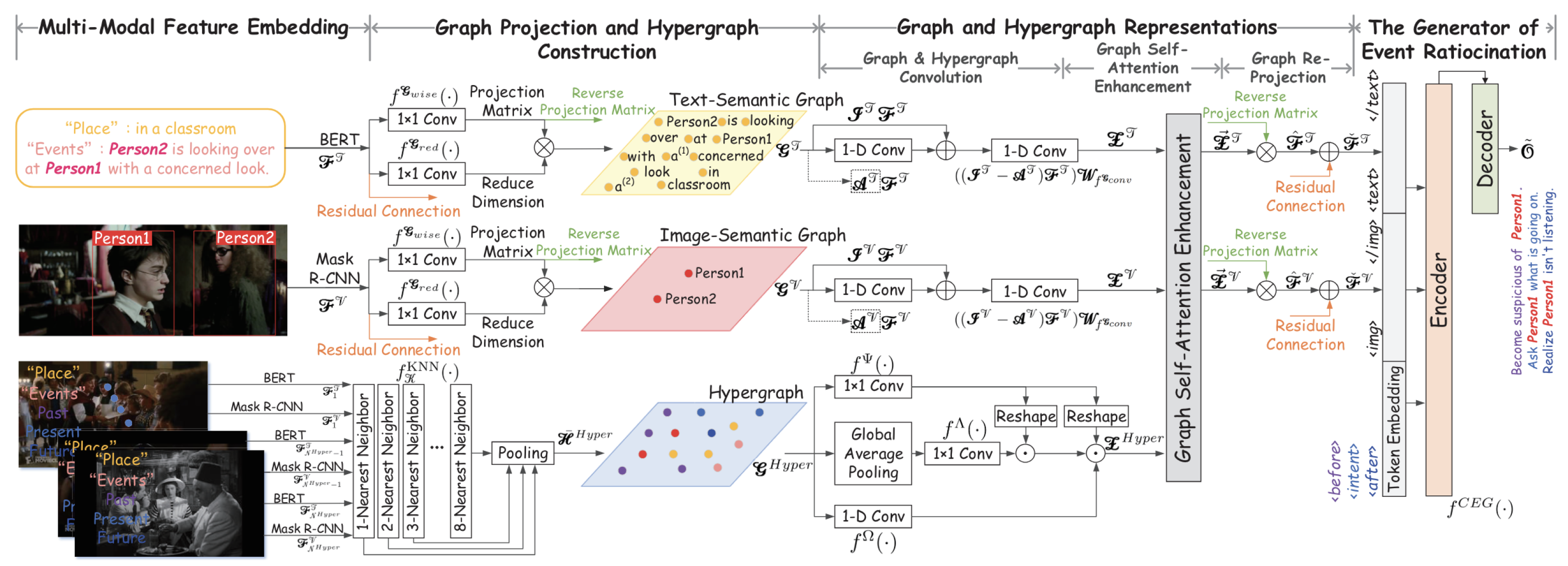

- Zheng, W.; Yan, L.; Gou, C.; Wang, F.Y. Two Heads are Better Than One: Hypergraph-Enhanced Graph Reasoning for Visual Event Ratiocination. In Proceedings of the 38th International Conference on Machine Learning, Virtual Event, 18–24 July 2021; Meila, M., Zhang, T., Eds.; Machine Learning Research. 2021; Volume 139, pp. 12747–12760.

- Kim, K.M.; Heo, M.O.; Choi, S.H.; Zhang, B.T. DeepStory: Video story QA by deep embedded memory networks. In Proceedings of the 26th International Joint Conference on Artificial Intelligence, Melbourne, Australia, 19–25 August 2017; pp. 2016–2022.

- Das, P.; Xu, C.; Doell, R.; Corso, J. A Thousand Frames in Just a Few Words: Lingual Description of Videos through Latent Topics and Sparse Object Stitching. In Proceedings of the 2013 IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 2634–2641.

- Papineni, K.; Roukos, S.; Ward, T.; Zhu, W.J. Bleu: A Method for Automatic Evaluation of Machine Translation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics, Philadelphia, PA, USA, 6–12 July 2002; Isabelle, P., Charniak, E., Lin, D., Eds.; Association for Computational Linguistics: Philadelphia, PA, USA, 2002; pp. 311–318.

- Zhu, Y.; Lu, S.; Zheng, L.; Guo, J.; Zhang, W.; Wang, J.; Yu, Y. Texygen: A Benchmarking Platform for Text Generation Models. In Proceedings of the 41st International ACM SIGIR Conference on Research & Development in Information Retrieval, Ann Arbor, MI, USA, 8–12 July 2018.

- Banerjee, S.; Lavie, A. METEOR: An Automatic Metric for MT Evaluation with Improved Correlation with Human Judgments. In Proceedings of the ACL Workshop on Intrinsic and Extrinsic Evaluation Measures for Machine Translation and/or Summarization; Goldstein, J., Lavie, A., Lin, C.Y., Voss, C., Eds.; Association for Computational Linguistics: Ann Arbor, MI, USA, 2005; pp. 65–72.

- Vedantam, R.; Zitnick, C.L.; Parikh, D. CIDEr: Consensus-based image description evaluation. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 4566–4575.

- Lin, C.Y. ROUGE: A Package for Automatic Evaluation of Summaries. In Proceedings of the Text Summarization Branches Out, Barcelona, Spain, 25–26 July 2004; pp. 74–81.

- Zhang, T.; Kishore, V.; Wu, F.; Weinberger, K.Q.; Artzi, Y. BERTScore: Evaluating Text Generation with BERT. arXiv 2019, arXiv:1904.09675.

- OpenAI. Hello GPT-4o. 2024. Available online: https://openai.com/index/hello-gpt-4o (accessed on 9 February 2025).

- OpenAI. New Models and Developer Products Announced at DevDay. 2024. Available online: https://openai.com/blog/new-models-and-developer-products-announced-at-devday (accessed on 3 January 2024).

- OpenAI. GPT-4 and GPT-4 Turbo: Models Documentation. 2024. Available online: https://platform.openai.com/docs/models/gpt-4-and-gpt-4-turbo (accessed on 4 March 2024).

- OpenAI. GPT-3.5: Models Documentation. 2024. Available online: https://platform.openai.com/docs/models/gpt-3-5 (accessed on 3 January 2024).

- Touvron, H.; Martin, L.; Stone, K.R.; Albert, P.; Almahairi, A.; Babaei, Y.; Bashlykov, N.; Batra, S.; Bhargava, P.; Bhosale, S.; et al. Llama 2: Open Foundation and Fine-Tuned Chat Models. arXiv 2023, arXiv:2307.09288.

- Meta. Introducing Meta Llama 3: The Most Capable Openly Available LLM to Date. 2024. Available online: https://ai.meta.com/blog/meta-llama-3/ (accessed on 28 May 2024).

- Mistral AI team. Mixtral of Experts. Mistral AI Continues Its Mission to Deliver Open Models to the Developer Community, Introducing Mixtral 8x7B, a High-Quality Sparse Mixture of Experts Model. 2023. Available online: https://mistral.ai/news/mixtral-of-experts/ (accessed on 10 September 2025).

- Mistral AI team. Mistral NeMo: Our New Best Small Model. 2024. Available online: https://mistral.ai/news/mistral-nemo (accessed on 27 July 2025).

- Anthropic. Introducing Claude 4. 2025. Available online: https://www.anthropic.com/news/claude-4 (accessed on 6 August 2025).

- Anthropic. Claude-2.1: Overview and Specifications. 2024. Available online: https://www.anthropic.com/index/claude-2-1 (accessed on 3 January 2024).

- Anthropic. Claude-2: Overview and Specifications. 2024. Available online: https://www.anthropic.com/index/claude-2 (accessed on 3 January 2024).

- Anthropic. Introducing Claude. 2024. Available online: https://www.anthropic.com/index/introducing-claude (accessed on 3 January 2024).

- Zheng, L.; Chiang, W.L.; Sheng, Y.; Zhuang, S.; Wu, Z.; Zhuang, Y.; Lin, Z.; Li, Z.; Li, D.; Xing, E.P.; et al. Judging LLM-as-a-judge with MT-Bench and Chatbot Arena. arXiv 2023, arXiv:2306.05685.

- Gilardi, F.; Alizadeh, M.; Kubli, M. ChatGPT outperforms crowd workers for text-annotation tasks. Proc. Natl. Acad. Sci. USA 2023, 120, e2305016120.

- Vinyals, O.; Toshev, A.; Bengio, S.; Erhan, D. Show and tell: A neural image caption generator. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2014; pp. 3156–3164. [Google Scholar]

- Yao, L.; Torabi, A.; Cho, K.; Ballas, N.; Pal, C.; Larochelle, H.; Courville, A. Describing Videos by Exploiting Temporal Structure. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Los Alamitos, CA, USA, 7–13 December 2015; pp. 4507–4515. [Google Scholar]

- Anderson, P.; He, X.; Buehler, C.; Teney, D.; Johnson, M.; Gould, S.; Zhang, L. Bottom-Up and Top-Down Attention for Image Captioning and Visual Question Answering. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2017; pp. 6077–6086. [Google Scholar]

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780. [Google Scholar] [CrossRef] [PubMed]

- Chung, J.; Gülçehre, Ç.; Cho, K.; Bengio, Y. Empirical Evaluation of Gated Recurrent Neural Networks on Sequence Modeling. arXiv 2014, arXiv:1412.3555. [Google Scholar] [CrossRef]

- Vaswani, A.; Shazeer, N.M.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is All you Need. In Proceedings of the Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017. [Google Scholar]

- Olimov, F.; Dubey, S.; Shrestha, L.; Tin, T.T.; Jeon, M. Image Captioning using Multiple Transformers for Self-Attention Mechanism. arXiv 2021, arXiv:2103.05103. [Google Scholar] [CrossRef]

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning Transferable Visual Models From Natural Language Supervision. In Proceedings of the International Conference on Machine Learning, Virtual, 18–24 July 2021. [Google Scholar]

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer using Shifted Windows. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 9992–10002. [Google Scholar]

- Yu, J.; Li, J.; Yu, Z.; Huang, Q. Multimodal Transformer With Multi-View Visual Representation for Image Captioning. IEEE Trans. Circuits Syst. Video Technol. 2019, 30, 4467–4480. [Google Scholar] [CrossRef]

- Liu, Z.; Ning, J.; Cao, Y.; Wei, Y.; Zhang, Z.; Lin, S.; Hu, H. Video Swin Transformer. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2021; pp. 3192–3201. [Google Scholar]

- Lin, K.; Li, L.; Lin, C.C.; Ahmed, F.; Gan, Z.; Liu, Z.; Lu, Y.; Wang, L. SwinBERT: End-to-End Transformers with Sparse Attention for Video Captioning. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2021; pp. 17928–17937. [Google Scholar]

- Kazemi, V.; Elqursh, A. Show, Ask, Attend, and Answer: A Strong Baseline For Visual Question Answering. arXiv 2017, arXiv:1704.03162. [Google Scholar] [CrossRef]

- Bao, H.; Wang, W.; Dong, L.; Liu, Q.; Mohammed, O.K.; Aggarwal, K.; Som, S.; Piao, S.; Wei, F. VLMo: Unified Vision-Language Pre-Training with Mixture-of-Modality-Experts. In Proceedings of the Advances in Neural Information Processing Systems; Koyejo, S., Mohamed, S., Agarwal, A., Belgrave, D., Cho, K., Oh, A., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2022; Volume 35, pp. 32897–32912. [Google Scholar]

- Wang, W.; Bao, H.; Dong, L.; Bjorck, J.; Peng, Z.; Liu, Q.; Aggarwal, K.; Mohammed, O.K.; Singhal, S.; Som, S.; et al. Image as a Foreign Language: BEIT Pretraining for Vision and Vision-Language Tasks. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 19175–19186. [Google Scholar]

- Wang, P.; Yang, A.; Men, R.; Lin, J.; Bai, S.; Li, Z.; Ma, J.; Zhou, C.; Zhou, J.; Yang, H. OFA: Unifying Architectures, Tasks, and Modalities Through a Simple Sequence-to-Sequence Learning Framework. In Proceedings of the International Conference on Machine Learning, Baltimore, MD, USA, 17–23 July 2022. [Google Scholar]

- Wang, P.; Wang, S.; Lin, J.; Bai, S.; Zhou, X.; Zhou, J.; Wang, X.; Zhou, C. ONE-PEACE: Exploring One General Representation Model Toward Unlimited Modalities. arXiv 2023, arXiv:2305.11172. [Google Scholar] [CrossRef]

- Chen, X.; Wang, X.; Changpinyo, S.; Piergiovanni, A.J.; Padlewski, P.; Salz, D.M.; Goodman, S.; Grycner, A.; Mustafa, B.; Beyer, L.; et al. PaLI: A Jointly-Scaled Multilingual Language-Image Model. arXiv 2022, arXiv:2209.06794. [Google Scholar]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Proceedings of the Advances in Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; Pereira, F., Burges, C., Bottou, L., Weinberger, K., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2012; Volume 25. [Google Scholar]

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, 7–9 May 2015; Conference Track Proceedings. Bengio, Y., LeCun, Y., Eds.; ICLR: San Diego, CA, USA, 2015. [Google Scholar]

- Modi, Y.; Parde, N. The Steep Road to Happily Ever after: An Analysis of Current Visual Storytelling Models. In Proceedings of the Second Workshop on Shortcomings in Vision and Language, Minneapolis, MN, USA, 6 June 2019; Bernardi, R., Fernandez, R., Gella, S., Kafle, K., Kanan, C., Lee, S., Nabi, M., Eds.; Association for Computational Linguistics: Minneapolis, MN, USA, 2019; pp. 47–57. [Google Scholar]

- Huang, Q.; Gan, Z.; Celikyilmaz, A.; Wu, D.O.; Wang, J.; He, X. Hierarchically Structured Reinforcement Learning for Topically Coherent Visual Story Generation. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018. [Google Scholar]

- Jung, Y.; Kim, D.; Woo, S.; Kim, K.; Kim, S.; Kweon, I.S. Hide-and-Tell: Learning to Bridge Photo Streams for Visual Storytelling. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12February 2020. [Google Scholar]

- Park, J.S.; Rohrbach, M.; Darrell, T.; Rohrbach, A. Adversarial Inference for Multi-Sentence Video Description. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2018; pp. 6591–6601. [Google Scholar]

- Lukin, S.; Hobbs, R.; Voss, C. A Pipeline for Creative Visual Storytelling. In Proceedings of the First Workshop on Storytelling, New Orleans, LA, USA, 5 June 2018; Mitchell, M., Huang, T.H.K., Ferraro, F., Misra, I., Eds.; Association for Computational Linguistics: New Orleans, LA, USA, 2018; pp. 20–32. [Google Scholar]

- Halperin, B.A.; Lukin, S.M. Envisioning Narrative Intelligence: A Creative Visual Storytelling Anthology. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, New York, NY, USA, 23–28 April 2023. CHI ’23. [Google Scholar]

- Halperin, B.A.; Lukin, S.M. Artificial Dreams: Surreal Visual Storytelling as Inquiry Into AI ’Hallucination’. In Proceedings of the 2024 ACM Designing Interactive Systems Conference, New York, NY, USA, 1–5 July 2024; pp. 619–637. [Google Scholar]

- Hsu, C.C.; Chen, Z.Y.; Hsu, C.Y.; Li, C.C.; Lin, T.Y.; Huang, T.H.; Ku, L.W. Knowledge-Enriched Visual Storytelling. AAAI Conf. Artif. Intell. 2020, 34, 7952–7960. [Google Scholar] [CrossRef]

- Hsu, C.C.; Chen, Y.H.; Chen, Z.Y.; Lin, H.Y.; Huang, T.H.K.; Ku, L.W. Dixit: Interactive Visual Storytelling via Term Manipulation. In Proceedings of the World Wide Web Conference, New York, NY, USA, 13–17 May 2019; pp. 3531–3535. [Google Scholar]

- Hsu, C.y.; Chu, Y.W.; Huang, T.H.; Ku, L.W. Plot and Rework: Modeling Storylines for Visual Storytelling. In Proceedings of the Findings of the Association for Computational Linguistics: ACL-IJCNLP 2021, Online, 1–6 August 2021; Zong, C., Xia, F., Li, W., Navigli, R., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2021; pp. 4443–4453. [Google Scholar]

- Holtzman, A.; Buys, J.; Du, L.; Forbes, M.; Choi, Y. The Curious Case of Neural Text Degeneration. arXiv 2019, arXiv:1904.09751. [Google Scholar]

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R.B. Mask R-CNN. arXiv 2017, arXiv:1703.06870. [Google Scholar]

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the North American Chapter of the Association for Computational Linguistics, Minneapolis, MN, USA, 2–7 June 2019. [Google Scholar]

- Lewis, M.; Liu, Y.; Goyal, N.; Ghazvininejad, M.; Mohamed, A.; Levy, O.; Stoyanov, V.; Zettlemoyer, L. BART: Denoising Sequence-to-Sequence Pre-training for Natural Language Generation, Translation, and Comprehension. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020. [Google Scholar]

- Ren, S.; He, K.; Girshick, R.B.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149. [Google Scholar] [CrossRef]

- Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language Models are Unsupervised Multitask Learners. OpenAI Technical Report. 2019. Available online: https://cdn.openai.com/better-language-models/language_models_are_unsupervised_multitask_learners.pdf (accessed on 15 September 2025).

- Gonzalez-Rico, D.; Pineda, G.F. Contextualize, Show and Tell: A Neural Visual Storyteller. arXiv 2018, arXiv:1806.00738. [Google Scholar] [CrossRef]

- Caba Heilbron, F.; Escorcia, V.; Ghanem, B.; Niebles, J.C. ActivityNet: A Large-Scale Video Benchmark for Human Activity Understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 961–970. [Google Scholar]

- Yu, L.; Bansal, M.; Berg, T.L. Hierarchically-Attentive RNN for Album Summarization and Storytelling. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, Copenhagen, Denmark, 9–11 September 2017; Palmer, M., Hwa, R., Riedel, S., Eds.; Association for Computational Linguistics: Copenhagen, Denmark, 2017; pp. 966–971. [Google Scholar]

- Kim, T.; Heo, M.O.; Son, S.; Park, K.W.; Zhang, B.T. GLAC Net: GLocal Attention Cascading Networks for Multi-image Cued Story Generation. arXiv 2018, arXiv:1805.10973. [Google Scholar]

- Liu, H.; Yang, J.; Chang, C.H.; Wang, W.; Zheng, H.T.; Jiang, Y.; Wang, H.; Xie, R.; Wu, W. AOG-LSTM: An adaptive attention neural network for visual storytelling. Neurocomputing 2023, 552, 126486. [Google Scholar] [CrossRef]

- Gu, J.; Wang, H.; Fan, R. Coherent Visual Storytelling via Parallel Top-Down Visual and Topic Attention. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 257–268. [Google Scholar] [CrossRef]

- Suwono, N.; Chen, J.; Hung, T.; Huang, T.H.; Liao, I.B.; Li, Y.H.; Ku, L.W.; Sun, S.H. Location-Aware Visual Question Generation with Lightweight Models. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, Singapore, 6–10 December 2023; Bouamor, H., Pino, J., Bali, K., Eds.; Association for Computational Linguistics: Singapore, 2023; pp. 1415–1432. [Google Scholar]

- Belz, J.H.; Weilke, L.M.; Winter, A.; Hallgarten, P.; Rukzio, E.; Grosse-Puppendahl, T. Story-Driven: Exploring the Impact of Providing Real-time Context Information on Automated Storytelling. In Proceedings of the 37th Annual ACM Symposium on User Interface Software and Technology, New York, NY, USA, 13–16 October 2024. UIST ’24. [Google Scholar]

- Yang, A.; Li, A.; Yang, B.; Zhang, B.; Hui, B.; Zheng, B.; Yu, B.; Gao, C.; Huang, C.; Lv, C.; et al. Qwen3 Technical Report. arXiv 2025, arXiv:2505.09388. [Google Scholar] [CrossRef]

- Comanici, G.; Bieber, E.; Schaekermann, M.; Pasupat, I.; Sachdeva, N.; Dhillon, I.; Blistein, M.; Ram, O.; Zhang, D.; Rosen, E.; et al. Gemini 2.5: Pushing the Frontier with Advanced Reasoning, Multimodality, Long Context, and Next Generation Agentic Capabilities. arXiv 2025, arXiv:2507.06261. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).