Smartphone-Based Seamless Scene and Object Recognition for Visually Impaired Persons

Abstract

1. Introduction

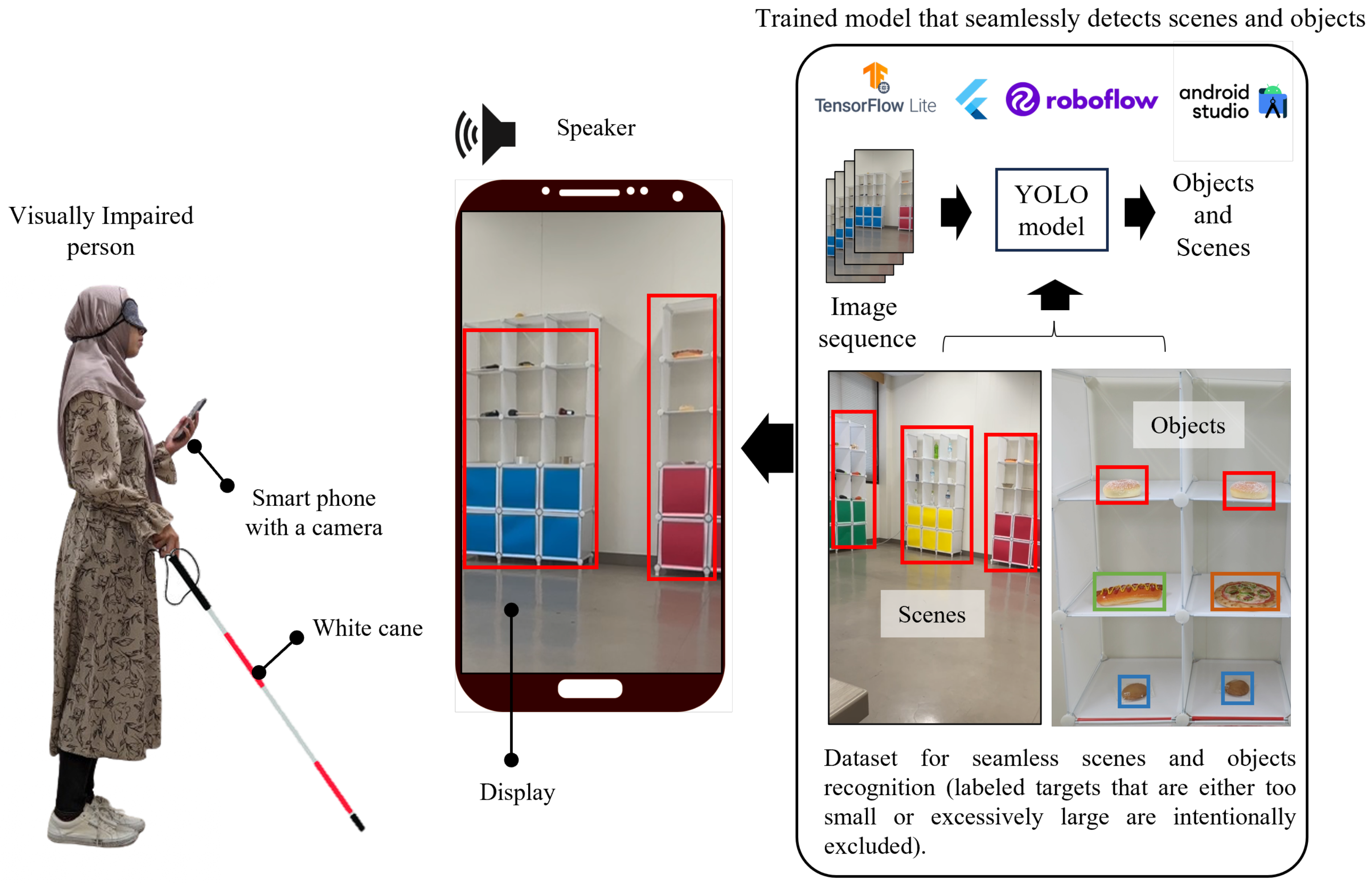

1.1. Background

1.2. Related Work

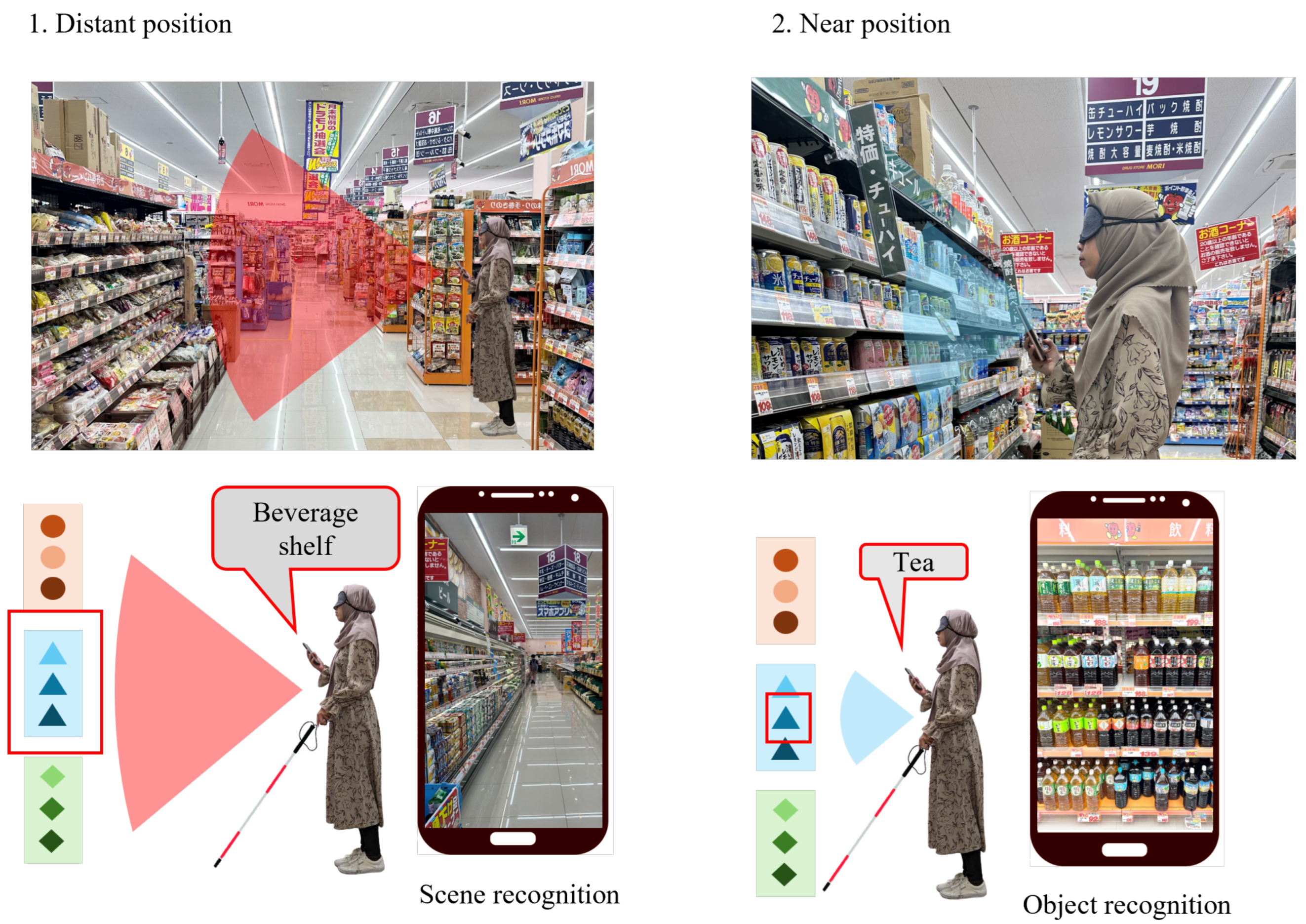

2. System Overview

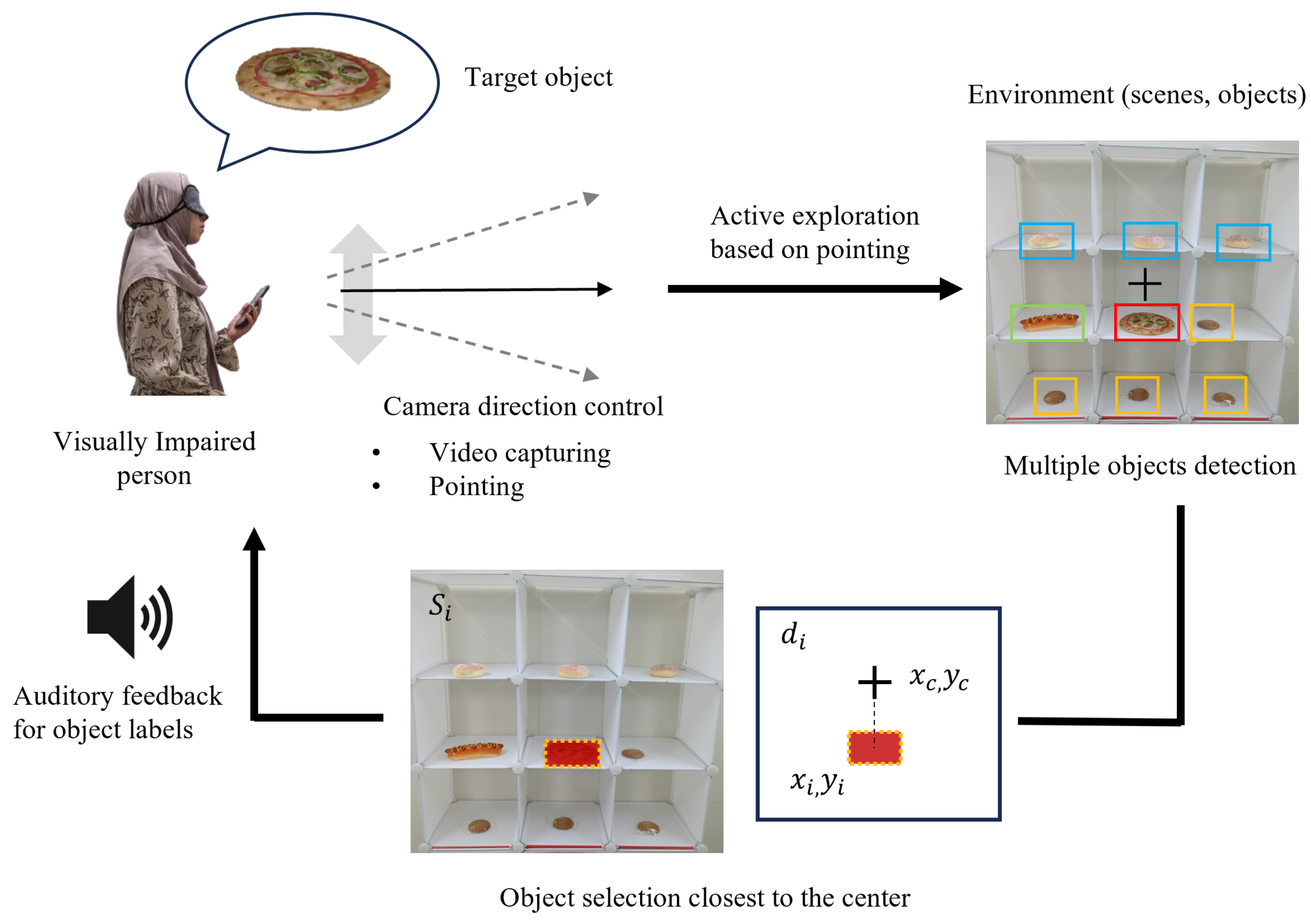

2.1. Human-in-the-Loop Design

2.2. Seamless Scene-Object Recognition

2.3. Multiple Scenes and Object Recognition

3. Tested Smartphone Application for Supporting Visually Impaired Persons

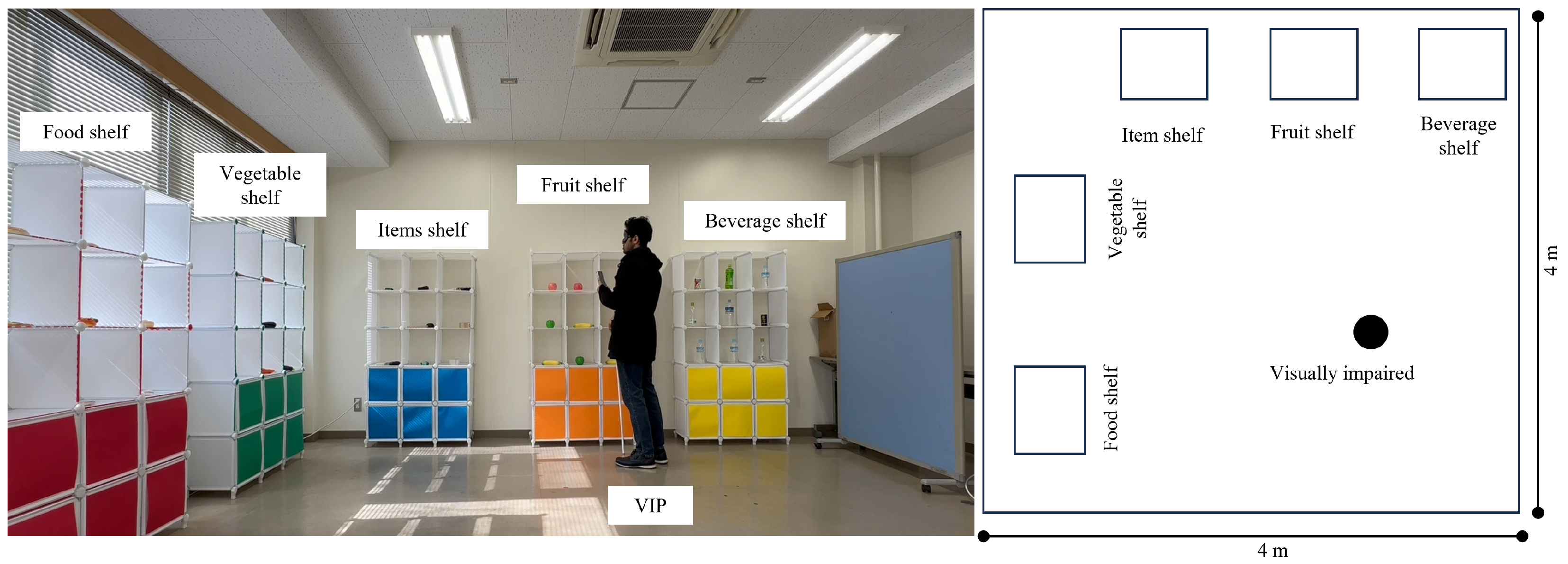

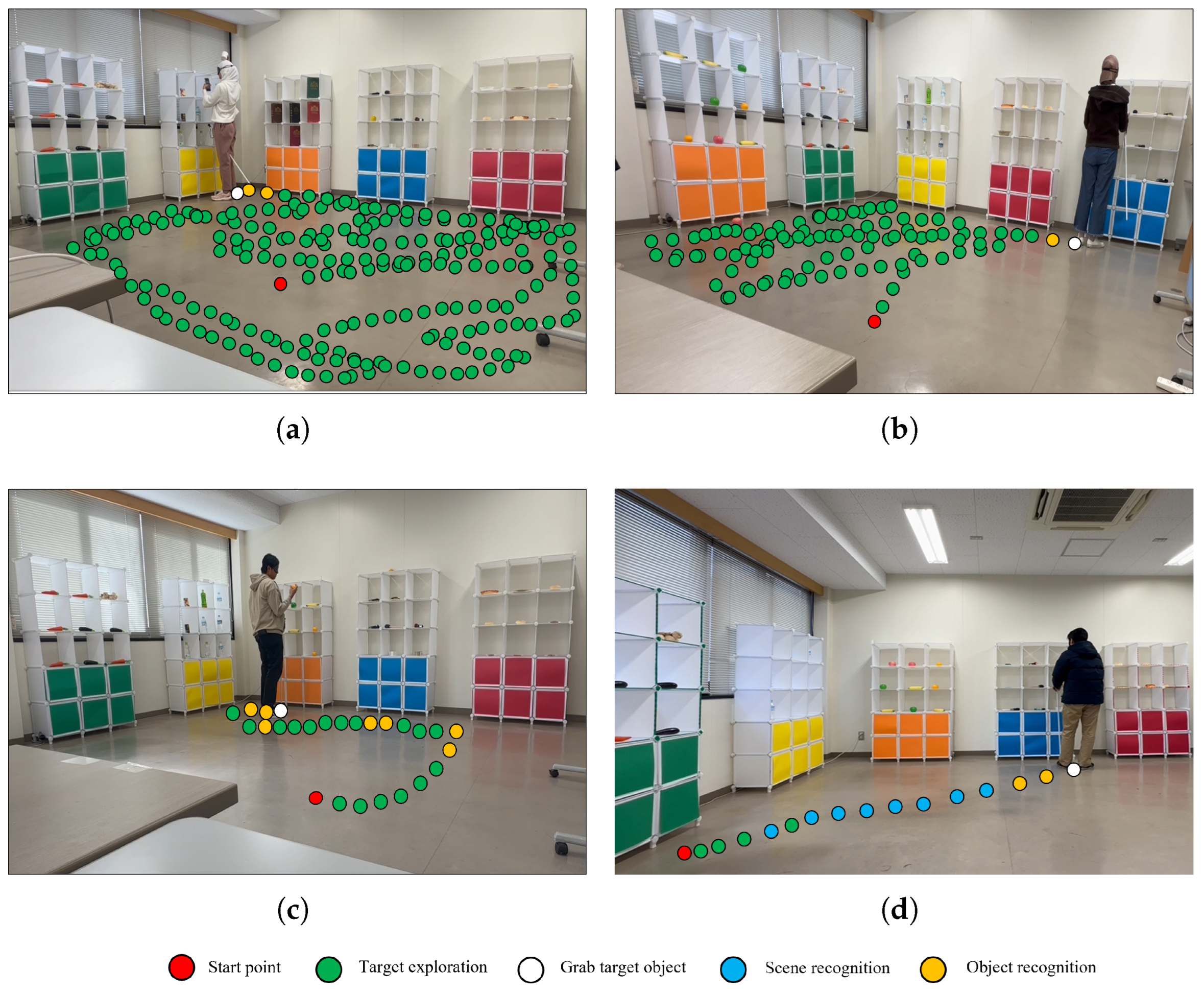

4. Experiments

4.1. Training Process

4.2. Participants and Instructions

4.3. Experimental Conditions

4.4. Evaluation Metrics

5. Results and Evaluation

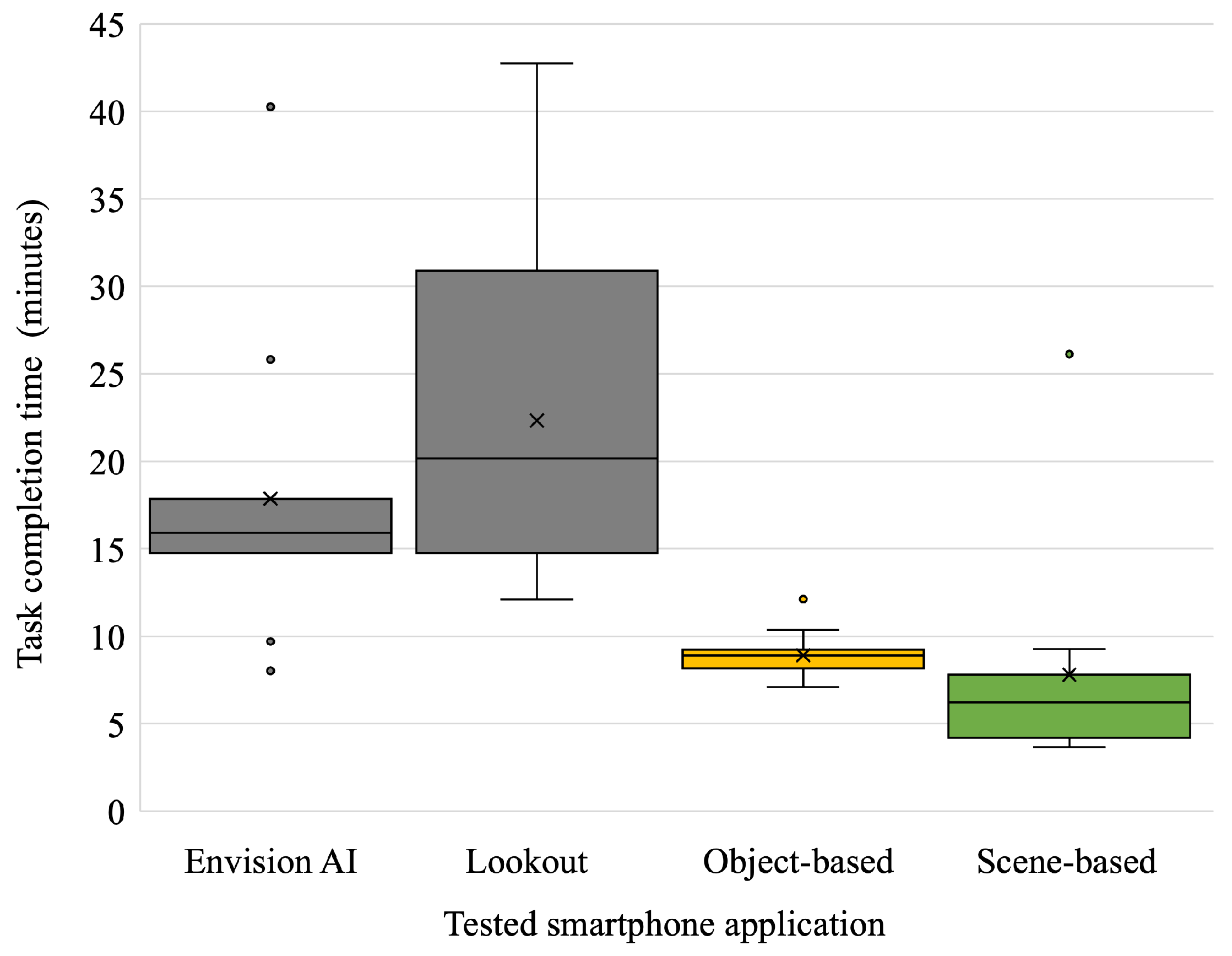

5.1. Time Consumption

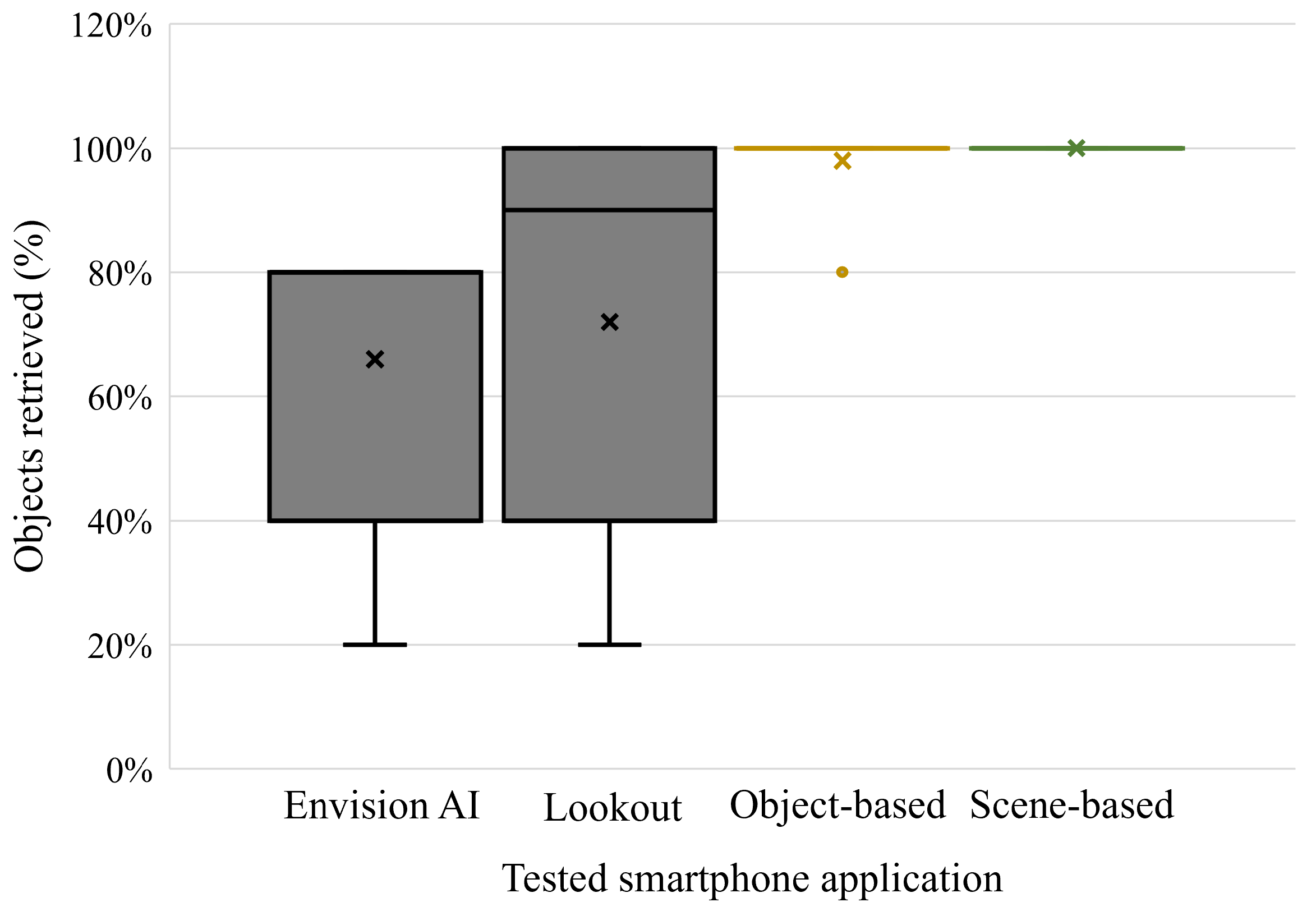

5.2. Number of Recognized Targets

6. Limitations and Future Work

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| VIP | Visually impaired person |
| YOLO | You Only Look Once |
| OCR | Optical Character Recognition |
| SD | Standard deviation |


| Smartphone App | Envision AI | Lookout | Object-Based | Scene-Based |
|---|---|---|---|---|
| Display sample |  |  |  |  |
| Search target | One object specified in advance | One object specified in advance | Any multiple objects | Any multiple objects and scenes |
| Feedback type | Detection sound (no feedback for undetected items) | Detection sound and object name (no feedback for undetected items) | Object names | Object and scene names |
| UI/UX | Many buttons for setting targets | Many buttons for setting targets | Ready to recognize multiple targets | Ready to recognize multiple targets |

| Parameter | Value |
|---|---|
| Image size | 640 × 640 pixels |
| Learning rate | 0.01 |
| Batch size | 16 |
| Epochs | 100 |
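The hyperparameters in the table above can be collected into a small configuration object. The following is a minimal sketch, not the authors' training script: the `TrainingConfig` class and its `as_trainer_kwargs` method are hypothetical names, and the keyword names `imgsz`, `lr0`, `batch`, and `epochs` assume an Ultralytics-style YOLO trainer, which the table itself does not specify.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingConfig:
    """Hypothetical container mirroring the training parameter table."""

    image_size: int = 640        # square input, 640 x 640 pixels
    learning_rate: float = 0.01  # taken from the "Learning rate" row
    batch_size: int = 16
    epochs: int = 100

    def as_trainer_kwargs(self) -> dict:
        # Keyword names follow the Ultralytics YOLO convention.
        # This is an assumption; the source does not name the trainer.
        return {
            "imgsz": self.image_size,
            "lr0": self.learning_rate,
            "batch": self.batch_size,
            "epochs": self.epochs,
        }


config = TrainingConfig()
print(config.as_trainer_kwargs())
```

Keeping the values in one frozen object makes it harder for a later run to drift from the reported settings without the change being visible.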

| Class | mAP@50 | mAP@50:95 | Precision | Recall |
|---|---|---|---|---|
| Beverage Shelf | 0.991 | 0.896 | 0.939 | 0.965 |
| Coffee | 0.981 | 0.854 | 0.922 | 0.947 |
| Juice | 0.978 | 0.862 | 0.873 | 0.955 |
| Tea | 0.991 | 0.867 | 0.917 | 0.947 |
| Mineral Water | 0.989 | 0.879 | 0.959 | 0.950 |
| Food Shelf | 0.986 | 0.879 | 0.929 | 0.962 |
| Pizza | 0.990 | 0.892 | 0.951 | 0.965 |
| Donut | 0.990 | 0.887 | 0.933 | 0.967 |
| Sweet | 0.995 | 0.898 | 0.946 | 0.973 |
| Hot Dog | 0.990 | 0.863 | 0.951 | 0.960 |
| Fruit Shelf | 0.988 | 0.890 | 0.966 | 0.978 |
| Banana | 0.995 | 0.897 | 0.975 | 0.986 |
| Green Apple | 0.994 | 0.913 | 0.938 | 0.964 |
| Red Apple | 0.988 | 0.880 | 0.933 | 0.985 |
| Orange | 0.993 | 0.930 | 0.957 | 0.992 |
| Vegetable Shelf | 0.992 | 0.921 | 0.968 | 0.970 |
| Carrot | 0.993 | 0.911 | 0.977 | 0.980 |
| Eggplant | 0.983 | 0.897 | 0.958 | 0.977 |
| Garlic | 0.990 | 0.920 | 0.967 | 0.969 |
| Items Shelf | 0.993 | 0.923 | 0.979 | 0.984 |
| Flashlight | 0.993 | 0.921 | 0.975 | 0.969 |
| Glove | 0.991 | 0.923 | 0.970 | 0.978 |
| Hammer | 0.993 | 0.925 | 0.969 | 0.981 |
| Measuring Tape | 0.990 | 0.926 | 0.974 | 0.991 |
| Scissors | 0.991 | 0.921 | 0.980 | 0.982 |
| Tape | 0.991 | 0.920 | 0.953 | 0.981 |
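Precision and recall from the table above can be combined into a single per-class F1 score, the harmonic mean of the two. The sketch below is illustrative only: F1 is not reported in the table, the `f1_score` helper is a hypothetical name, and only three rows are copied in to keep the example short.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall) pairs copied from three rows of the table above.
rows = {
    "Beverage Shelf": (0.939, 0.965),
    "Juice": (0.873, 0.955),
    "Orange": (0.957, 0.992),
}

f1_by_class = {name: f1_score(p, r) for name, (p, r) in rows.items()}

for name, f1 in f1_by_class.items():
    print(f"{name}: F1 = {f1:.3f}")
```

Because the harmonic mean is pulled toward the smaller of its two inputs, a class such as Juice, whose precision is noticeably lower than its recall, ends up with an F1 closer to its precision.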

| Subject | Envision AI | Lookout | Object-Based | Scene-Based |
|---|---|---|---|---|
| Subject 1 | 16:10 | 14:45 | 10:21 | 09:15 |
| Subject 2 | 15:40 | 15:36 | 08:43 | 04:07 |
| Subject 3 | 08:02 | 16:20 | 07:06 | 03:39 |
| Subject 4 | 25:49 | 20:09 | 07:12 | 06:06 |
| Subject 5 | 40:15 | 30:53 | 09:14 | 06:14 |
| Subject 6 | 17:30 | 12:06 | 08:54 | 04:33 |
| Subject 7 | 14:45 | 13:55 | 08:18 | 07:05 |
| Subject 8 | 14:46 | 42:44 | 12:07 | 04:12 |
| Subject 9 | 09:42 | 36:12 | 09:01 | 06:43 |
| Subject 10 | 15:55 | 20:35 | 08:10 | 26:07 |
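The per-subject times above can be summarized per condition as a mean and standard deviation (SD). The sketch below makes two assumptions that the table does not state: the entries are read as minutes:seconds, and the SD is the sample standard deviation (`statistics.stdev`). The `to_seconds` helper is a hypothetical name.

```python
from statistics import mean, stdev


def to_seconds(mmss: str) -> int:
    """Convert an 'mm:ss' string to seconds (format is an assumption)."""
    minutes, seconds = mmss.split(":")
    return int(minutes) * 60 + int(seconds)


# Values copied from the table above, Subject 1 through Subject 10.
times = {
    "Envision AI": ["16:10", "15:40", "08:02", "25:49", "40:15",
                    "17:30", "14:45", "14:46", "09:42", "15:55"],
    "Lookout": ["14:45", "15:36", "16:20", "20:09", "30:53",
                "12:06", "13:55", "42:44", "36:12", "20:35"],
    "Object-Based": ["10:21", "08:43", "07:06", "07:12", "09:14",
                     "08:54", "08:18", "12:07", "09:01", "08:10"],
    "Scene-Based": ["09:15", "04:07", "03:39", "06:06", "06:14",
                    "04:33", "07:05", "04:12", "06:43", "26:07"],
}

summary = {}
for condition, values in times.items():
    secs = [to_seconds(v) for v in values]
    # Sample SD (n - 1 denominator); the source does not say which SD it uses.
    summary[condition] = (mean(secs), stdev(secs))

for condition, (avg, sd) in summary.items():
    print(f"{condition}: mean = {avg:.1f} s, SD = {sd:.1f} s")
```

With only ten subjects per condition, a single long run such as Subject 10 in the Scene-Based column (26:07) has a visible effect on both the mean and the SD, so a median may be worth reporting alongside them.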

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Rahman, F.A.; Pusparani, F.A.; Yeoh, W.L.; Fukuda, O. Smartphone-Based Seamless Scene and Object Recognition for Visually Impaired Persons. Information 2025, 16, 808. https://doi.org/10.3390/info16090808
