Enhancing Ancient Ceramic Knowledge Services: A Question Answering System Using Fine-Tuned Models and GraphRAG

Abstract

1. Introduction

- To address the prevalence of ceramic imagery in ancient ceramic literature, this study employs the GLM-4V-9B vision-language model. Given contextually informed prompts, the model generates textual descriptions of the images that preserve much of the original information in ancient ceramic pictures. This approach provides a more comprehensive knowledge source for research on ancient ceramics.

- This study uses ERNIE 4.0 Turbo, which supports multi-document input, to generate QA pairs through global and local prompt engineering. After duplicate and low-quality content is removed manually, a final set of 2143 representative QA pairs is retained. This curated dataset captures knowledge of the ancient ceramics field and provides data support for subsequent research.
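The contextually informed prompting described in the first item can be sketched as follows. This is a minimal illustration, assuming each extracted figure is stored with its caption and the surrounding paragraph; the `FigureRecord` schema, the `build_image_prompt` helper, and the instruction wording are hypothetical and are not the prompt actually used with GLM-4V-9B in this study. The VLM call itself is left out.

```python
from dataclasses import dataclass


@dataclass
class FigureRecord:
    """A figure extracted from a ceramic document (hypothetical schema)."""
    image_path: str
    caption: str
    context: str  # paragraph(s) surrounding the figure in the source text


def build_image_prompt(fig: FigureRecord, max_context_chars: int = 500) -> str:
    """Combine the caption and surrounding text into a single VLM prompt.

    The surrounding text is truncated so the prompt stays short.
    """
    context = fig.context.strip()[:max_context_chars]
    return (
        "You are an expert on ancient Chinese ceramics. "
        "Describe the ceramic object in the image in detail, covering shape, "
        "glaze colour, decoration, and any visible inscriptions.\n"
        f"Figure caption: {fig.caption.strip()}\n"
        f"Surrounding text: {context}\n"
        "Only describe what is visible or supported by the text above."
    )


if __name__ == "__main__":
    fig = FigureRecord(
        image_path="figures/vase_01.png",  # hypothetical path
        caption="Blue-and-white vase, Jingdezhen",
        context="The vase is decorated with cobalt-blue floral scrolls.",
    )
    print(build_image_prompt(fig))
```

Grounding the prompt in the caption and nearby text is meant to steer the model toward domain terminology and away from descriptions the document does not support.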
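The study removes duplicates manually. One way to shorten that manual pass, shown here only as a hypothetical pre-filter and not as the authors' procedure, is to flag near-duplicate questions automatically and send only the flagged pairs to a reviewer. The sketch compares questions by character-bigram Jaccard similarity, which needs no word segmentation and therefore also works on Chinese text; the 0.8 threshold is an assumption.

```python
def char_bigrams(text: str):
    """Character bigrams of lower-cased text with all whitespace removed."""
    s = "".join(text.lower().split())
    if len(s) < 2:
        return {s} if s else set()
    return {s[i:i + 2] for i in range(len(s) - 1)}


def jaccard(a, b) -> float:
    """Jaccard similarity of two sets (1.0 for two empty sets)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def flag_near_duplicates(qa_pairs, threshold=0.8):
    """Split (question, answer) pairs into (kept, flagged).

    A pair is flagged when its question is at least `threshold` similar to
    a question already kept; flagged pairs go to manual review rather than
    being discarded automatically.
    """
    kept, flagged, kept_grams = [], [], []
    for question, answer in qa_pairs:
        grams = char_bigrams(question)
        if any(jaccard(grams, g) >= threshold for g in kept_grams):
            flagged.append((question, answer))
        else:
            kept.append((question, answer))
            kept_grams.append(grams)
    return kept, flagged
```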

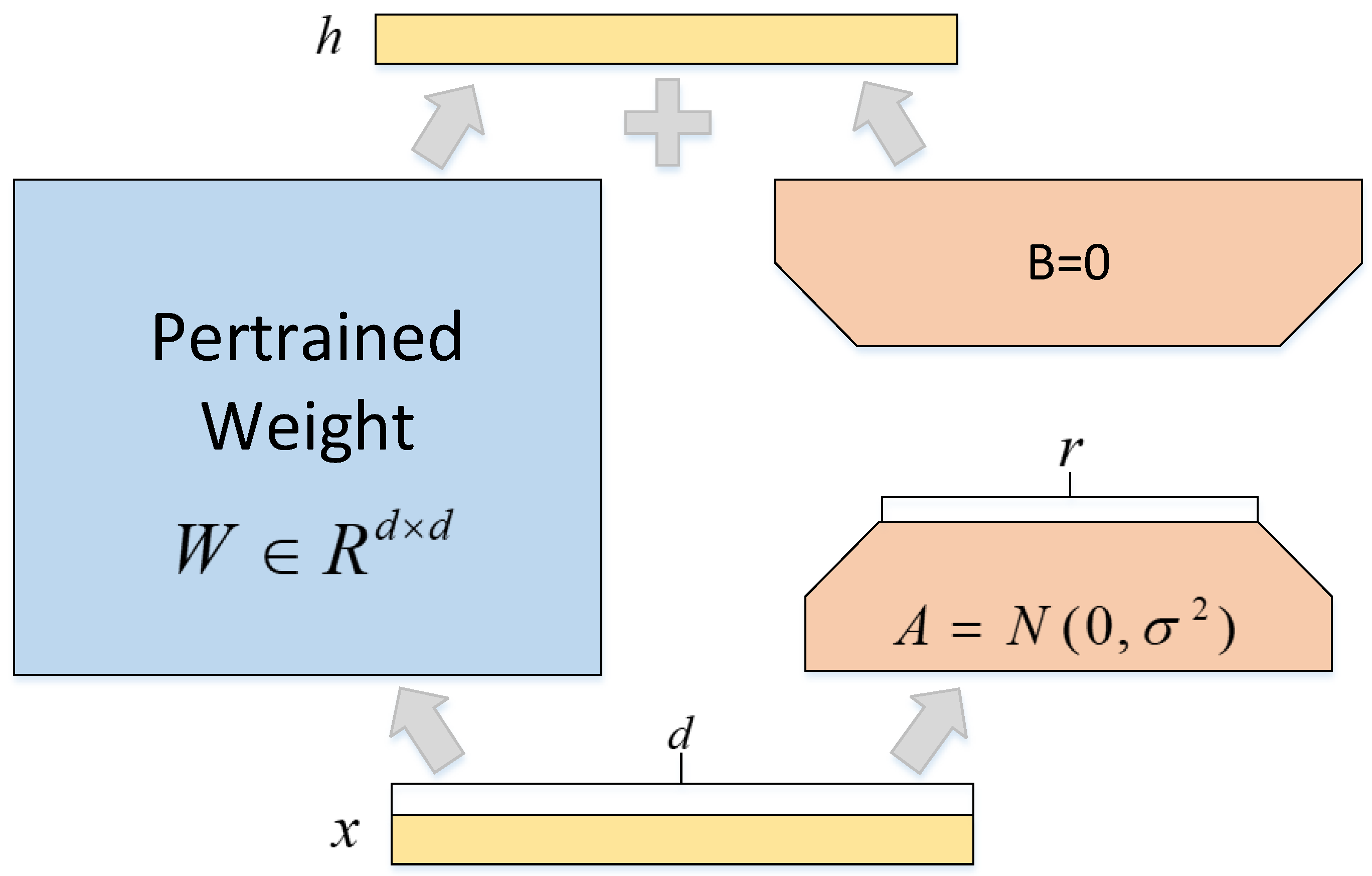

- Qwen2.5-7B-Instruct serves as the base model in this research. It is fine-tuned with LoRA on the generated QA pair dataset, and the resulting model, Qwen2.5-LoRA, is deployed for answer generation. Experimental results show that fine-tuning substantially improves question-answering performance in the ancient ceramics domain: in the ablation experiment, ROUGE-L rises from 22.39% to 47.85% and BERTScore F1 from 89.16% to 92.79%.
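LoRA keeps the pretrained weight W₀ frozen and learns a low-rank update, so an adapted layer computes h = W₀x + (α/r)·BAx. The pure-Python sketch below shows this for a single linear layer. The toy dimensions and the Gaussian initialisation scale are assumptions for illustration, while r = 8 and α = 32 match the training parameter table. It is not LLaMA-Factory's implementation.

```python
import random


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class LoRALinear:
    """Frozen weight W0 (d_out x d_in) plus a trainable low-rank update B @ A.

    h = W0 x + (alpha / r) * B (A x), with A: r x d_in and B: d_out x r.
    B starts at zero, so the adapted layer initially equals the base layer.
    """

    def __init__(self, w0, r=8, alpha=32, seed=0):
        d_out, d_in = len(w0), len(w0[0])
        rng = random.Random(seed)
        self.w0 = w0  # frozen during fine-tuning
        self.a = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(r)]
        self.b = [[0.0] * r for _ in range(d_out)]
        self.scale = alpha / r

    def forward(self, x):
        base = matvec(self.w0, x)
        update = matvec(self.b, matvec(self.a, x))
        return [h + self.scale * u for h, u in zip(base, update)]

    def trainable_params(self):
        """Only A and B are trained: r * d_in + d_out * r values."""
        return len(self.a) * len(self.a[0]) + len(self.b) * len(self.b[0])
```

Because B is zero-initialised, fine-tuning starts exactly from the base model's behaviour, and only A and B receive gradient updates.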

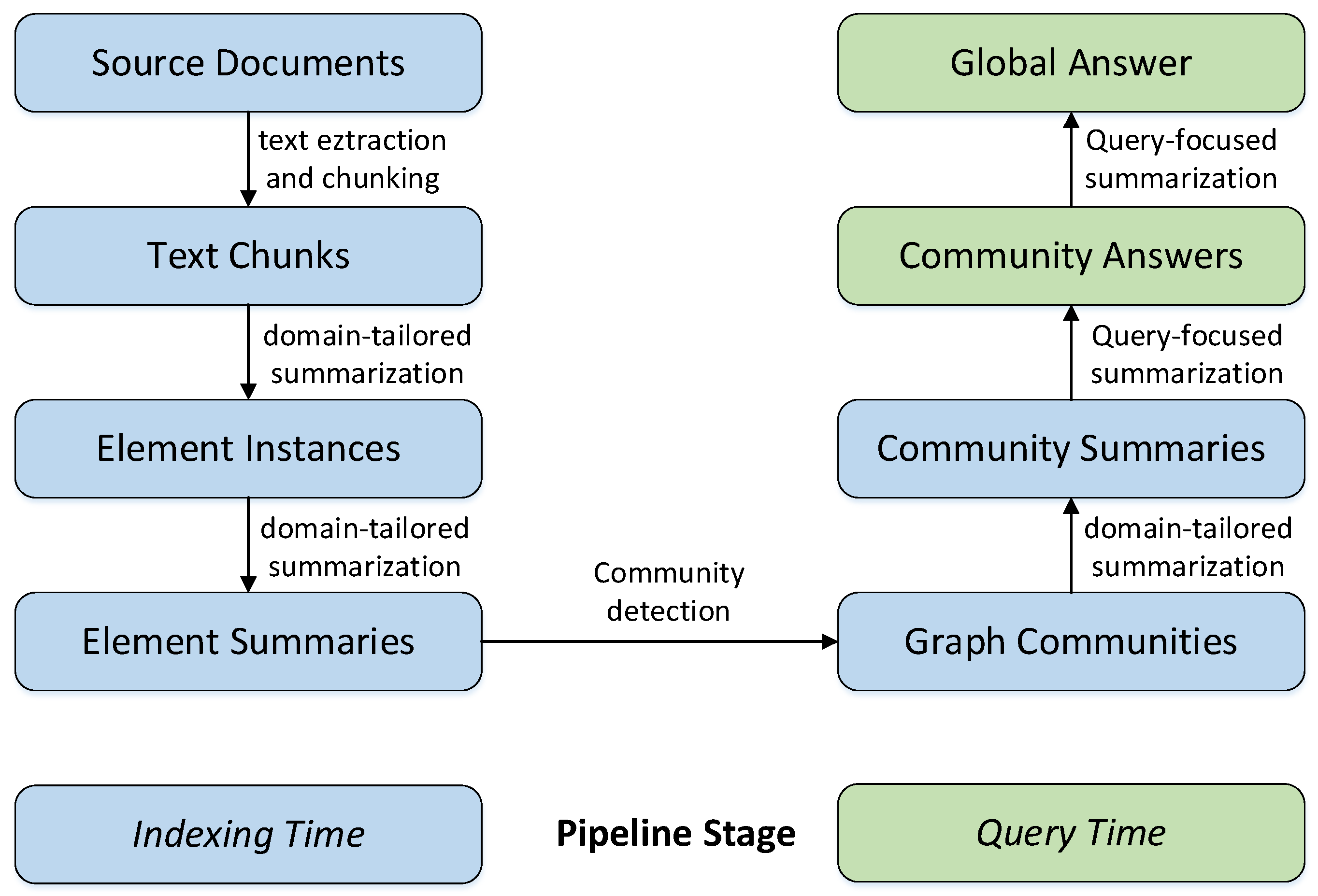

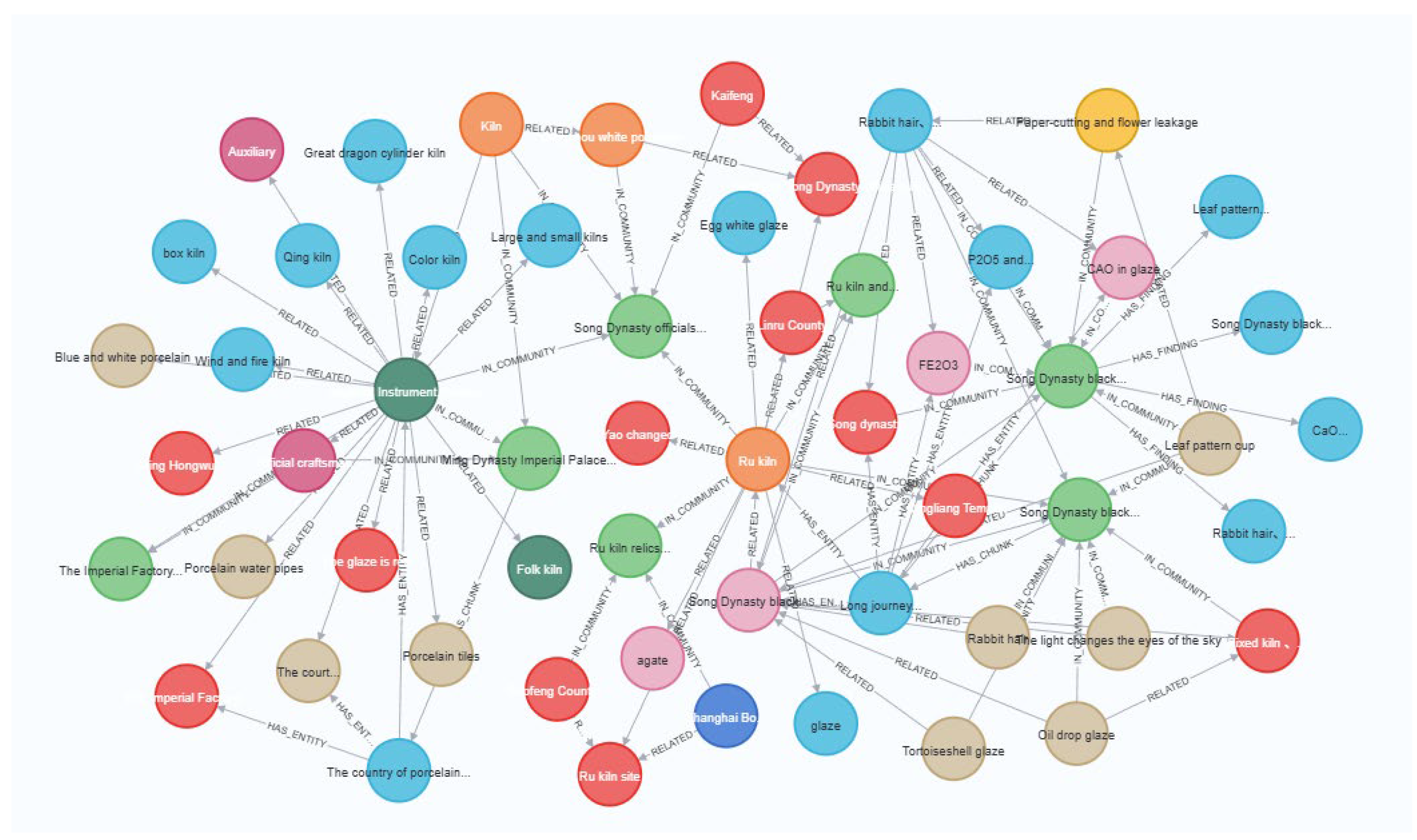

- This study integrates the GraphRAG framework with external knowledge bases to organize heterogeneous knowledge into a structured graph. For questions requiring multi-hop reasoning, graph path analysis supplies the large language model with precise, structured context. This retrieval architecture improves the accuracy of the question-answering system, particularly on complex queries; combined with the fine-tuned model, it raises ROUGE-L from 47.85% to 52.17%.
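The multi-hop idea in the last item can be illustrated with a simplified path-retrieval sketch. It is not the GraphRAG library, which additionally extracts entities with an LLM and builds community summaries. Here a breadth-first search finds the shortest relation path between two entities in a small graph of triples, and the path is rendered as plain-text facts for the prompt. The triples are toy data chosen for illustration, not drawn from this study's knowledge graph, and the helper names are hypothetical.

```python
from collections import deque

# Toy (subject, relation, object) triples for illustration only.
TRIPLES = [
    ("Jingdezhen kilns", "produced", "blue-and-white porcelain"),
    ("blue-and-white porcelain", "decorated with", "cobalt blue pigment"),
    ("Longquan kilns", "produced", "celadon"),
]


def build_graph(triples):
    """Adjacency list; each edge is also stored in reverse for traversal."""
    graph = {}
    for s, r, o in triples:
        graph.setdefault(s, []).append((r, o))
        graph.setdefault(o, []).append((f"{r} (inverse)", s))
    return graph


def find_path(graph, start, goal, max_hops=3):
    """Breadth-first search for the shortest relation path of <= max_hops."""
    queue = deque([(start, [])])
    visited = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        if len(path) >= max_hops:
            continue
        for rel, nxt in graph.get(node, []):
            if nxt not in visited:
                visited.add(nxt)
                queue.append((nxt, path + [(node, rel, nxt)]))
    return None


def path_to_context(path):
    """Render a path as plain-text facts to place in the LLM prompt."""
    return "\n".join(f"{s} {r} {o}." for s, r, o in path)


if __name__ == "__main__":
    g = build_graph(TRIPLES)
    print(path_to_context(find_path(g, "Jingdezhen kilns", "cobalt blue pigment")))
```

A question such as "Which pigment decorates the porcelain that Jingdezhen kilns are known for?" needs both hops; retrieving the connecting path rather than isolated text chunks gives the model the chain it must reason over.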

2. Related Work

3. Research Methods

3.1. Applications of Vision-Language Models

3.2. Question–Answer Pair Dataset Construction

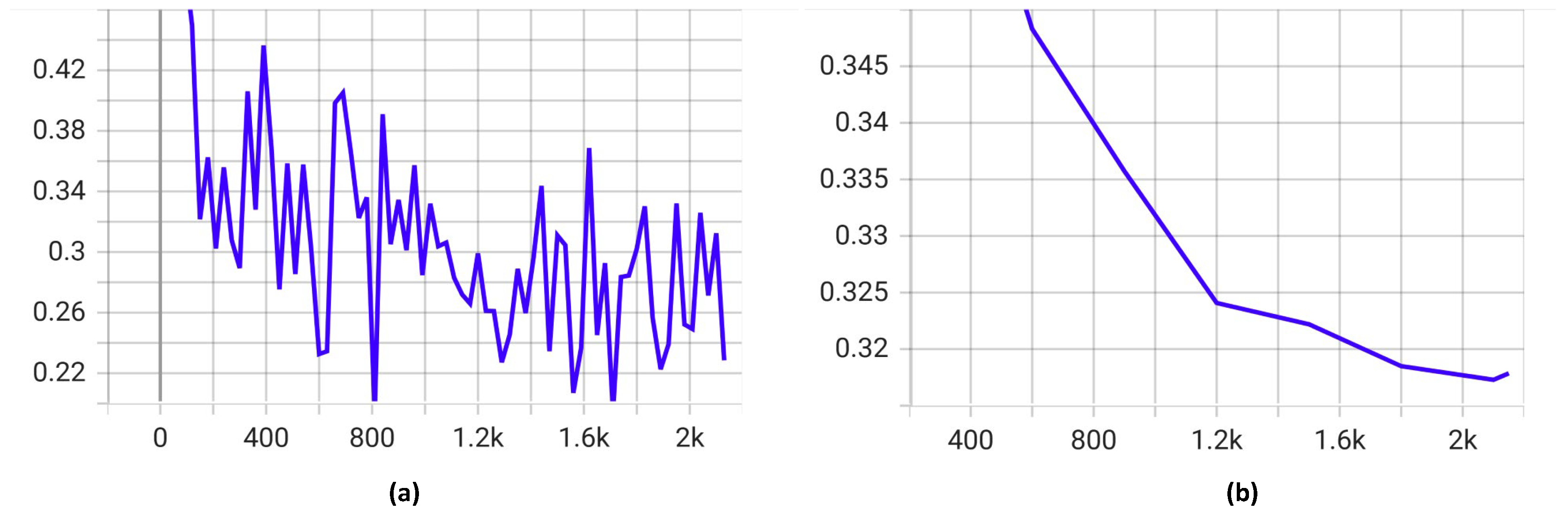

3.3. Large Language Model Fine-Tuning

3.3.1. LLaMA-Factory Framework

3.3.2. LoRA Fine-Tuning

3.3.3. Large Language Model Selection

3.4. GraphRAG

4. Results and Analysis

4.1. Evaluation Indicators

4.2. Experimental Environment and Results

4.3. Comparison with Other Models

4.4. Ablation Experiment

4.5. Manual Evaluation Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| LoRA | Low-Rank Adaptation |
| GraphRAG | Graph Retrieval-Augmented Generation |
| AI | Artificial Intelligence |
| GANs | Generative Adversarial Networks |
| LLMs | Large Language Models |
| VLMs | Vision-Language Models |
| ROUGE | Recall-Oriented Understudy for Gisting Evaluation |
| BERTScore | Bidirectional Encoder Representations from Transformers Score |
| LCS | Longest Common Subsequence |
| GPU | Graphics Processing Unit |
| CPU | Central Processing Unit |


| QA Type | Prompt Template |
|---|---|
| Global Synthesis | You are an expert in large language model question–answer generation. Thoroughly review all information across {all documents}, integrate insights comprehensively, and consider from the user’s perspective: What questions would users likely pose to the AI Ancient Ceramics Technology and Culture Knowledge System? Ensure questions have definitive answers and must not fabricate inquiries. Present output in QA pair format. |
| Local Fine-grained | You are an expert in large language model question–answer generation. Meticulously examine the complete content within {single document}, adopt the user’s viewpoint, and deduce: What specific questions would users address to the AI Ancient Ceramics Technology and Culture Knowledge System? Ensure questions have definitive answers and must not fabricate inquiries. Present output in QA pair format. |
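The two templates differ only in scope: the global template sees every document at once, while the local template is applied to one document at a time. A minimal sketch of how the prompts could be assembled follows, assuming document text is substituted inline for the `{all documents}` and `{single document}` placeholders (the study may instead have attached files through ERNIE 4.0 Turbo's multi-document input). The helper names and the `---` separator are hypothetical.

```python
GLOBAL_TEMPLATE = (
    "You are an expert in large language model question–answer generation. "
    "Thoroughly review all information across {all documents}, integrate insights "
    "comprehensively, and consider from the user’s perspective: What questions would "
    "users likely pose to the AI Ancient Ceramics Technology and Culture Knowledge "
    "System? Ensure questions have definitive answers and must not fabricate "
    "inquiries. Present output in QA pair format."
)
LOCAL_TEMPLATE = (
    "You are an expert in large language model question–answer generation. "
    "Meticulously examine the complete content within {single document}, adopt the "
    "user’s viewpoint, and deduce: What specific questions would users address to the "
    "AI Ancient Ceramics Technology and Culture Knowledge System? Ensure questions "
    "have definitive answers and must not fabricate inquiries. Present output in QA "
    "pair format."
)


def fill(template, placeholder, text):
    """Substitute one literal placeholder (str.replace, not str.format)."""
    if placeholder not in template:
        raise ValueError(f"placeholder {placeholder!r} not found")
    return template.replace(placeholder, text)


def build_prompts(documents, sep="\n\n---\n\n"):
    """Return one global prompt over all documents and one local prompt per document."""
    global_prompt = fill(GLOBAL_TEMPLATE, "{all documents}", sep.join(documents))
    local_prompts = [fill(LOCAL_TEMPLATE, "{single document}", d) for d in documents]
    return global_prompt, local_prompts
```

Global prompts tend to yield cross-document synthesis questions, and local prompts yield fine-grained factual ones; the union of both outputs is what then goes through duplicate and quality filtering.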

| Ranking | Model Name | Overall Score | Hard Score | Science Score | Liberal Arts Score |
|---|---|---|---|---|---|
| 1 | Qwen2.5-7B-Instruct | 60.61 | 33.92 | 74.63 | 73.28 |
| 2 | GLM-4-9B-Chat | 56.83 | 29.33 | 69.22 | 71.94 |
| 3 | Gemma-2-9b-it | 55.48 | 29.03 | 67.78 | 69.63 |
| 4 | MiniCPM3-4B | 53.16 | 26.56 | 63.04 | 69.87 |
| 5 | Llama-3.1-8B-Instruct | 51.42 | 25.67 | 63.27 | 65.30 |
| 6 | Yi-1.5-6B-Chat | 48.69 | 25.16 | 57.03 | 63.89 |

| Training Parameter | Parameter Value |
|---|---|
| learning_rate | 1 × 10⁻⁴ |
| num_train_epochs | 3 |
| gradient_accumulation_steps | 4 |
| per_device_train_batch_size | 1 |
| lora_rank | 8 |
| lora_alpha | 32 |
| lora_dropout | 0.05 |
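A few quantities follow directly from these settings: the LoRA update is scaled by lora_alpha / lora_rank = 32 / 8 = 4, each optimizer step sees per_device_train_batch_size × gradient_accumulation_steps = 4 examples per device, and an adapted d_out × d_in matrix gains only r·(d_in + d_out) trainable parameters. The sketch below computes these; the 3584 × 3584 example assumes a square projection such as the query projection at Qwen2.5-7B's hidden size (check the model config), and `num_devices = 1` is an assumption since the GPU count is not given here.

```python
def lora_summary(d_in, d_out, rank=8, alpha=32,
                 per_device_batch=1, grad_accum=4, num_devices=1):
    """Derived quantities for one LoRA-adapted d_out x d_in weight matrix."""
    full = d_in * d_out            # parameters in the frozen matrix
    lora = rank * (d_in + d_out)   # parameters in A (r x d_in) and B (d_out x r)
    return {
        "scaling": alpha / rank,
        "effective_batch_size": per_device_batch * grad_accum * num_devices,
        "full_params": full,
        "lora_params": lora,
        "fraction": lora / full,
    }


if __name__ == "__main__":
    s = lora_summary(3584, 3584)  # assumed square projection size
    print(s["scaling"], s["effective_batch_size"], f"{s['fraction']:.3%}")
```

For the assumed 3584 × 3584 matrix this gives 57,344 trainable parameters against 12,845,056 frozen ones, i.e. exactly 1/224 (about 0.45%) of the matrix.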

| Method | ROUGE-1/% | ROUGE-2/% | ROUGE-L/% | BERTScore_F1/% |
|---|---|---|---|---|
| Llama-3.1-8B-Instruct | 34.29 | 11.64 | 19.35 | 87.61 |
| Gemma-2-9b-it | 31.73 | 13.75 | 20.59 | 88.24 |
| GLM-4-9B-Chat | 28.39 | 13.67 | 18.48 | 87.93 |
| Qwen2.5-7B-Instruct | 33.84 | 13.71 | 22.39 | 89.16 |
| Qwen2.5-LoRA + GraphRAG | 57.92 | 48.46 | 52.17 | 93.68 |
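ROUGE-N counts overlapping n-grams between a generated answer and the reference, and ROUGE-L uses their Longest Common Subsequence (LCS). The sketch below computes the balanced F1 variants over token lists. Tokenization is an assumption: the paper does not specify it here, and for Chinese answers character-level tokens (passing plain strings) are a common choice. Reference implementations such as the original ROUGE package differ in details like stemming and the F-measure β.

```python
from collections import Counter


def ngrams(tokens, n):
    """Multiset of n-grams in a token sequence."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def f1(overlap, cand_total, ref_total):
    """Balanced F-measure from an overlap count and the two totals."""
    if overlap == 0:
        return 0.0
    precision, recall = overlap / cand_total, overlap / ref_total
    return 2 * precision * recall / (precision + recall)


def rouge_n(candidate, reference, n=1):
    c, r = ngrams(candidate, n), ngrams(reference, n)
    overlap = sum((c & r).values())  # clipped n-gram matches
    return f1(overlap, sum(c.values()), sum(r.values()))


def lcs_length(a, b):
    """O(len(a) * len(b)) dynamic programme for the LCS length."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l(candidate, reference):
    return f1(lcs_length(candidate, reference), len(candidate), len(reference))


if __name__ == "__main__":
    ref = "the cat sat on the mat".split()
    cand = "the cat is on the mat".split()
    print(rouge_n(cand, ref, 1), rouge_n(cand, ref, 2), rouge_l(cand, ref))
```

In the example, five of six unigrams match (ROUGE-1 = 5/6), three of five bigrams match (ROUGE-2 = 0.6), and the LCS "the cat on the mat" has length 5 (ROUGE-L = 5/6).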
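BERTScore instead compares contextual token embeddings: each candidate token is greedily matched to its most similar reference token (precision), each reference token to its most similar candidate token (recall), and the two are combined into F1. The sketch below shows only that matching step on precomputed vectors; producing the embeddings requires a pretrained encoder, and which encoder and options the study used is not stated here. IDF weighting and baseline rescaling are omitted.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def bertscore(cand_vecs, ref_vecs):
    """Greedy-matching precision, recall and F1 over token embeddings."""
    precision = sum(max(cosine(c, r) for r in ref_vecs) for c in cand_vecs) / len(cand_vecs)
    recall = sum(max(cosine(r, c) for c in cand_vecs) for r in ref_vecs) / len(ref_vecs)
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1
```

Because matching is by embedding similarity rather than exact tokens, paraphrases still score well, which is one reason BERTScore values in the tables are much closer together than the ROUGE values.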

| Method | ROUGE-1/% | ROUGE-2/% | ROUGE-L/% | BERTScore_F1/% |
|---|---|---|---|---|
| Qwen2.5-7B-Instruct | 33.84 | 13.71 | 22.39 | 89.16 |
| Qwen2.5-7B-Instruct + GraphRAG | 38.24 | 17.37 | 26.54 | 91.24 |
| Qwen2.5-LoRA | 53.61 | 43.93 | 47.85 | 92.79 |
| Qwen2.5-LoRA + GraphRAG | 57.92 | 48.46 | 52.17 | 93.68 |
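The contribution of each component can be read off the ablation table as percentage-point differences. The short script below does that arithmetic on the values copied from the table, naming the fine-tuned model Qwen2.5-LoRA; it adds no new measurements.

```python
# Scores (%) from the ablation table: ROUGE-1, ROUGE-2, ROUGE-L, BERTScore F1.
METRICS = ("ROUGE-1", "ROUGE-2", "ROUGE-L", "BERTScore_F1")
ABLATION = {
    "Qwen2.5-7B-Instruct": (33.84, 13.71, 22.39, 89.16),
    "Qwen2.5-7B-Instruct + GraphRAG": (38.24, 17.37, 26.54, 91.24),
    "Qwen2.5-LoRA": (53.61, 43.93, 47.85, 92.79),
    "Qwen2.5-LoRA + GraphRAG": (57.92, 48.46, 52.17, 93.68),
}


def gains(base, variant):
    """Per-metric difference (percentage points) of variant over base."""
    return {
        m: round(v - b, 2)
        for m, b, v in zip(METRICS, ABLATION[base], ABLATION[variant])
    }


if __name__ == "__main__":
    for base, variant in [
        ("Qwen2.5-7B-Instruct", "Qwen2.5-7B-Instruct + GraphRAG"),
        ("Qwen2.5-7B-Instruct", "Qwen2.5-LoRA"),
        ("Qwen2.5-LoRA", "Qwen2.5-LoRA + GraphRAG"),
    ]:
        print(f"{variant} vs {base}: {gains(base, variant)}")
```

LoRA fine-tuning accounts for the largest share of the improvement (+19.77 ROUGE-1 and +25.46 ROUGE-L points over the base model). GraphRAG adds a similar ROUGE increment with or without fine-tuning (+4.40 and +4.31 ROUGE-1 points), while its BERTScore gain shrinks from 2.08 to 0.89 points once the model is fine-tuned.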

| Method | Factual Accuracy | Terminological Rigor | Information Completeness | Linguistic Coherence | Logical Consistency |
|---|---|---|---|---|---|
| Llama-3.1-8B-Instruct | 3.6 | 3.8 | 3.8 | 4.0 | 4.2 |
| Gemma-2-9b-it | 3.8 | 4.2 | 3.8 | 3.8 | 4.0 |
| GLM-4-9B-Chat | 4.0 | 3.8 | 4.0 | 4.2 | 4.2 |
| Qwen2.5-7B-Instruct | 4.4 | 4.2 | 4.6 | 4.2 | 4.4 |
| Qwen2.5-7B-Instruct + GraphRAG | 6.4 | 6.2 | 6.6 | 6.0 | 6.2 |
| Qwen2.5-LoRA | 7.6 | 7.8 | 8.0 | 8.2 | 8.2 |
| Qwen2.5-LoRA + GraphRAG | 9.6 | 9.4 | 9.6 | 9.4 | 9.6 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chen, Z.; Liu, B. Enhancing Ancient Ceramic Knowledge Services: A Question Answering System Using Fine-Tuned Models and GraphRAG. Information 2025, 16, 792. https://doi.org/10.3390/info16090792

Chen Z, Liu B. Enhancing Ancient Ceramic Knowledge Services: A Question Answering System Using Fine-Tuned Models and GraphRAG. Information. 2025; 16(9):792. https://doi.org/10.3390/info16090792

Chicago/Turabian Style: Chen, Zhi, and Bingxiang Liu. 2025. "Enhancing Ancient Ceramic Knowledge Services: A Question Answering System Using Fine-Tuned Models and GraphRAG" Information 16, no. 9: 792. https://doi.org/10.3390/info16090792

APA Style: Chen, Z., & Liu, B. (2025). Enhancing Ancient Ceramic Knowledge Services: A Question Answering System Using Fine-Tuned Models and GraphRAG. Information, 16(9), 792. https://doi.org/10.3390/info16090792