HiPC-QR: Hierarchical Prompt Chaining for Query Reformulation

Abstract

1. Introduction

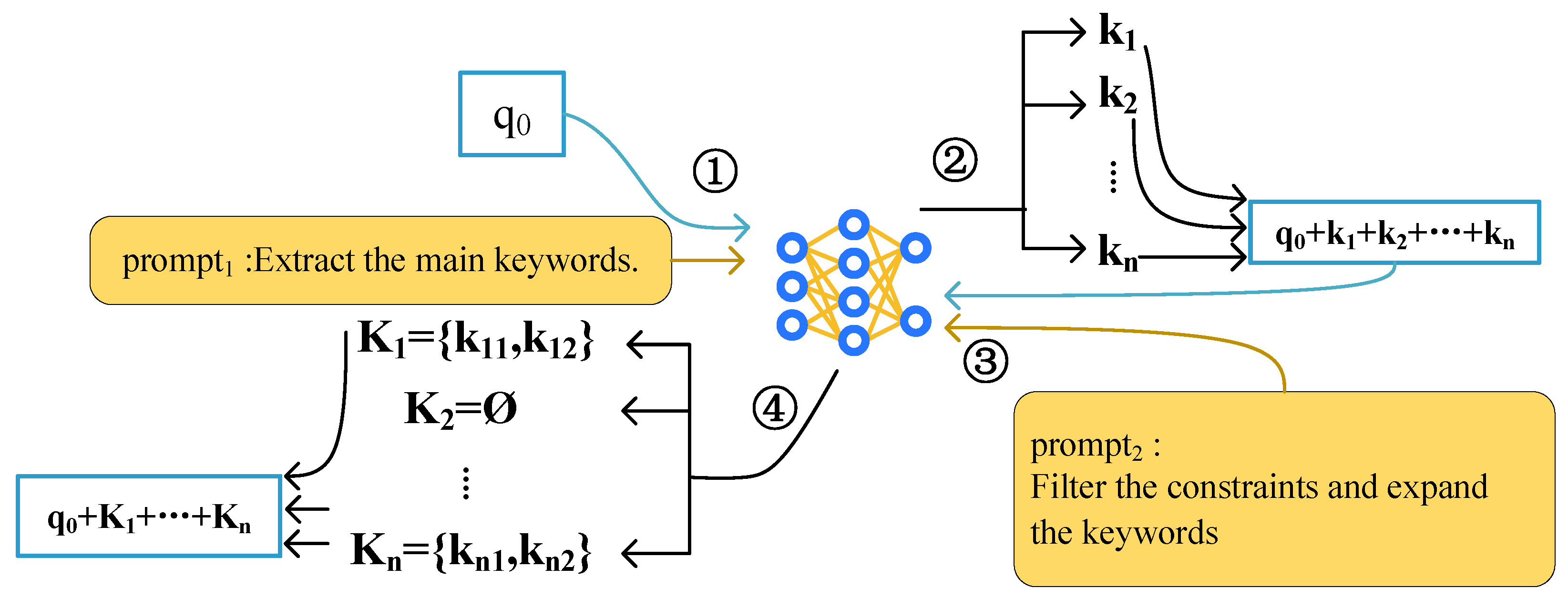

- A novel strategy for controlled query reformulation: We introduce HiPC-QR, a conceptual approach that addresses the limitations of complex queries by distinguishing between constraining and non-constraining keywords. This strategy enables precise simplification (via removal of overly restrictive constraints) and targeted enhancement (via expansion of flexible keywords).

- A practical two-step prompt chaining framework to realize HiPC-QR: Building on the above strategy, we design a concrete two-stage LLM-driven framework. In the first step, the model extracts query keywords and their semantic roles; in the second step, it selectively filters or expands keywords based on these roles. This framework ensures that the conceptual strategy can be effectively implemented in practice without causing semantic drift.

2. Related Work

2.1. Traditional Query Reformulation Methods

2.2. Query Reformulation Using Large Language Models

3. Methodology

3.1. Query Reformulation Framework Based on Prompt Chaining

3.2. Keyword Extraction

3.3. Keyword Filtering and Expansion

- Constraint Detection: Identify and relax overly specific spatiotemporal or numerical constraints (e.g., precise timestamps, narrow location ranges) that may unnecessarily limit the retrieval scope.

- Semantic Expansion: Recognize terms with high semantic relevance to the original query and suggest meaningful synonyms or related expressions that preserve the intent while increasing coverage.

4. Experimental Setup

4.1. Datasets and Evaluation Metrics

- R@1K: This metric measures the proportion of all relevant documents for a query that are retrieved within the top 1000 results, as defined in Equation (5). A higher R@1K indicates better coverage by the retrieval system.

- MRR@10: This metric evaluates ranking quality by averaging the reciprocal rank of the first relevant document within the top 10 retrieved results, as defined in Equation (6): $$\mathrm{MRR@10} = \frac{1}{|Q|}\sum_{q \in Q}\frac{1}{\mathrm{rank}_q}, \tag{6}$$ where $\mathrm{rank}_q$ denotes the rank position of the first relevant document for query q within the top 10 results and $|Q|$ is the total number of queries. If no relevant document is found within the top 10, $1/\mathrm{rank}_q$ is taken as 0. A higher MRR@10 score indicates better ranking performance.

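- MAP (Mean Average Precision): MAP is defined as the mean of the average precision (AP) values across all queries: $$\mathrm{MAP} = \frac{1}{|Q|}\sum_{q \in Q}\mathrm{AP}(q), \tag{7}$$ where $|Q|$ is the total number of queries and $\mathrm{AP}(q)$ is the average precision for query q: $$\mathrm{AP}(q) = \frac{1}{R_q}\sum_{i=1}^{n} P(i)\,\mathrm{rel}(i). \tag{8}$$ In Equation (8), $P(i)$ denotes the precision at rank i and $\mathrm{rel}(i)$ is an indicator function equal to 1 if the document at position i is relevant (otherwise, it is equal to 0); $R_q$ is the number of relevant documents for q and n is the number of retrieved documents.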

- NDCG@10 (Normalized Discounted Cumulative Gain at 10): This metric evaluates ranking quality by considering both the relevance and the position of each retrieved document. It is calculated using two equations: Equation (9) defines DCG@10, while Equation (10) gives NDCG@10 as the ratio of DCG@10 to the ideal IDCG@10. In Equation (9), $rel_i$ is the relevance score of the document at rank i, and in Equation (10), IDCG@10 is the ideal DCG value obtained from a perfect ranking. The NDCG@10 metric is particularly effective for datasets with multilevel relevance annotations (e.g., DL19 and DL20), where documents are assigned graded relevance scores (e.g., 0: not relevant; 1: marginally relevant; 2: relevant; 3: highly relevant).
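The four metrics above can be sketched for a single query as follows. This is an illustrative implementation, not the evaluation code used in the paper: the function names and the toy data are hypothetical, and the DCG uses a linear gain (`rel / log2(i + 1)`), which is an assumption because an exponential gain is also common. MRR@10 and MAP are then the means of the per-query reciprocal rank and average precision.

```python
import math
from typing import Dict, Sequence, Set


def recall_at_k(ranked: Sequence[str], relevant: Set[str], k: int = 1000) -> float:
    """Fraction of all relevant documents that appear in the top k results."""
    if not relevant:
        return 0.0
    return len(set(ranked[:k]) & relevant) / len(relevant)


def reciprocal_rank_at_k(ranked: Sequence[str], relevant: Set[str], k: int = 10) -> float:
    """1 / rank of the first relevant document in the top k, or 0 if there is none."""
    for rank, doc_id in enumerate(ranked[:k], start=1):
        if doc_id in relevant:
            return 1.0 / rank
    return 0.0


def average_precision(ranked: Sequence[str], relevant: Set[str]) -> float:
    """Sum of precision values at relevant ranks, divided by the number of relevant documents."""
    if not relevant:
        return 0.0
    hits, total = 0, 0.0
    for rank, doc_id in enumerate(ranked, start=1):
        if doc_id in relevant:
            hits += 1
            total += hits / rank  # precision at this rank
    return total / len(relevant)


def dcg_at_k(gains: Sequence[float], k: int = 10) -> float:
    """Discounted cumulative gain with linear gain (assumed form)."""
    return sum(g / math.log2(i + 1) for i, g in enumerate(gains[:k], start=1))


def ndcg_at_k(ranked: Sequence[str], grades: Dict[str, int], k: int = 10) -> float:
    """DCG@k of the ranking divided by the DCG@k of an ideal ordering."""
    gains = [grades.get(doc_id, 0) for doc_id in ranked]
    ideal = sorted(grades.values(), reverse=True)
    idcg = dcg_at_k(ideal, k)
    return dcg_at_k(gains, k) / idcg if idcg > 0 else 0.0


# Toy example with hypothetical document IDs and graded judgments.
ranked = ["d3", "d1", "d7", "d2"]
grades = {"d1": 3, "d2": 1}
relevant = {doc_id for doc_id, grade in grades.items() if grade > 0}

print(recall_at_k(ranked, relevant))
print(reciprocal_rank_at_k(ranked, relevant))
print(average_precision(ranked, relevant))
print(ndcg_at_k(ranked, grades))
```

In the toy ranking the first relevant document sits at rank 2, so the reciprocal rank is 0.5; both relevant documents are retrieved, so recall is 1.0.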

4.2. LLM Deployment and Retrieval Configuration

4.3. Comparison Methods

- The number of candidate expansion terms (search range: 5–95, step size: 5);

- The number of feedback documents (search range: 5–50, step size: 5);

- The weight of the original query in the expanded version (search range: 0.2–0.8, step size: 0.1).
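To give a sense of the size of this search space, the sketch below enumerates every combination of the three ranges listed above. The variable names are hypothetical, and sweeping the full Cartesian product is an assumption; the text only states the ranges and step sizes.

```python
from itertools import product

# Hypothetical names for the three tuned parameters.
expansion_terms_grid = list(range(5, 100, 5))  # 5, 10, ..., 95
feedback_docs_grid = list(range(5, 55, 5))     # 5, 10, ..., 50
# Build the weights from integers to avoid floating-point drift.
original_weight_grid = [round(0.2 + 0.1 * i, 1) for i in range(7)]  # 0.2, ..., 0.8

# Assumption: every combination is evaluated (exhaustive grid search).
grid = list(product(expansion_terms_grid, feedback_docs_grid, original_weight_grid))

print(len(expansion_terms_grid), len(feedback_docs_grid), len(original_weight_grid))
print(len(grid))
```

Under that assumption the grid has 19 × 10 × 7 = 1330 configurations per baseline and dataset.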

5. Experimental Results and Analysis

5.1. Analysis of Experimental Results

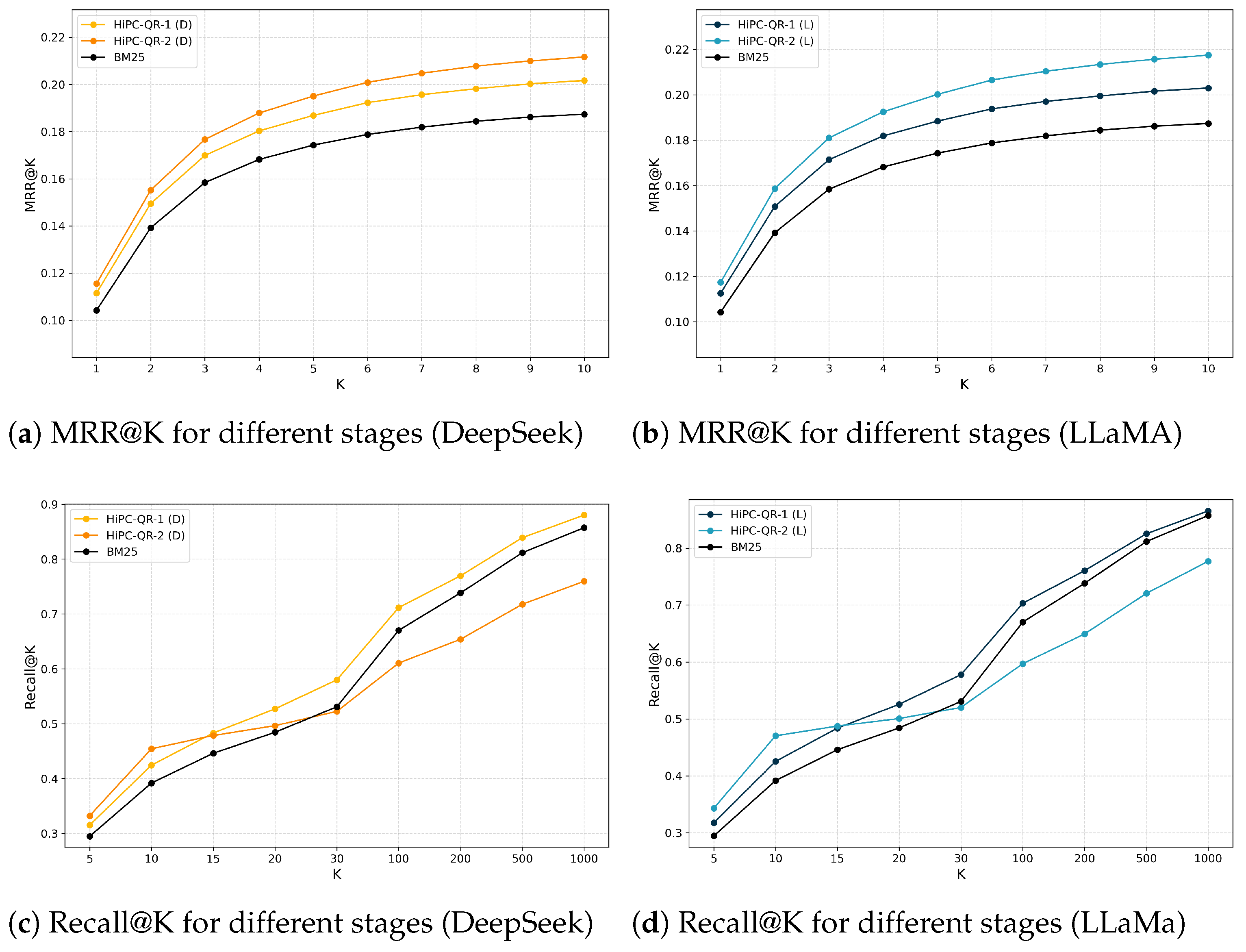

5.2. Ablation Study

5.3. Comparison with Generative Methods

5.4. Handling Constraints in Query Reformulation

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References

1. Robertson, S.E. On term selection for query expansion. J. Doc. 1990, 46, 359–364.
2. Lavrenko, V.; Croft, W.B. Relevance-based language models. In Proceedings of the ACM SIGIR Forum; ACM: New York, NY, USA, 2017; Volume 51, pp. 260–267.
3. Kuzi, S.; Shtok, A.; Kurland, O. Query expansion using word embeddings. In Proceedings of the 25th ACM International on Conference on Information and Knowledge Management, Indianapolis, IN, USA, 24–28 October 2016; pp. 1929–1932.
4. Roy, D.; Paul, D.; Mitra, M.; Garain, U. Using word embeddings for automatic query expansion. arXiv 2016, arXiv:1606.07608.
5. Zamani, H.; Croft, W.B. Embedding-based query language models. In Proceedings of the 2016 ACM International Conference on the Theory of Information Retrieval, Newark, DE, USA, 12–16 September 2016; pp. 147–156.
6. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186.
7. Khattab, O.; Zaharia, M. ColBERT: Efficient and effective passage search via contextualized late interaction over BERT. In Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval, Virtual, 25–30 July 2020; pp. 39–48.
8. MacAvaney, S.; Yates, A.; Cohan, A.; Goharian, N. CEDR: Contextualized embeddings for document ranking. In Proceedings of the 42nd International ACM SIGIR Conference on Research and Development in Information Retrieval, Paris, France, 21–25 July 2019; pp. 1101–1104.
9. Nogueira, R.; Yang, W.; Cho, K.; Lin, J. Multi-stage document ranking with BERT. arXiv 2019, arXiv:1910.14424.
10. Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language models are few-shot learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901.
11. Peng, B.; Li, C.; He, P.; Galley, M.; Gao, J. Instruction tuning with GPT-4. arXiv 2023, arXiv:2304.03277.
12. Jagerman, R.; Zhuang, H.; Qin, Z.; Wang, X.; Bendersky, M. Query expansion by prompting large language models. arXiv 2023, arXiv:2305.03653.
13. Nogueira, R.; Lin, J. From doc2query to docTTTTTquery. Online Preprint 2019, 6.
14. Wang, X.; MacAvaney, S.; Macdonald, C.; Ounis, I. Generative query reformulation for effective adhoc search. arXiv 2023, arXiv:2308.00415.
15. Wang, L.; Yang, N.; Wei, F. Query2doc: Query expansion with large language models. arXiv 2023, arXiv:2303.07678.
16. Vemuru, S.; John, E.; Rao, S. Handling Complex Queries Using Query Trees. Authorea Preprints 2023.
17. Azad, H.K.; Deepak, A. Query expansion techniques for information retrieval: A survey. Inf. Process. Manag. 2019, 56, 1698–1735.
18. Bajaj, P.; Campos, D.; Craswell, N.; Deng, L.; Gao, J.; Liu, X.; Majumder, R.; McNamara, A.; Mitra, B.; Nguyen, T.; et al. MS MARCO: A human generated machine reading comprehension dataset. arXiv 2016, arXiv:1611.09268.
19. Craswell, N.; Mitra, B.; Yilmaz, E.; Campos, D.; Voorhees, E.M. Overview of the TREC 2019 deep learning track. arXiv 2020, arXiv:2003.07820.
20. Carpineto, C.; Romano, G. A survey of automatic query expansion in information retrieval. ACM Comput. Surv. (CSUR) 2012, 44, 1–50.
21. Robertson, S.E.; Walker, S.; Jones, S.; Hancock-Beaulieu, M.M.; Gatford, M. Okapi at TREC-3. In Proceedings of the Third Text REtrieval Conference (TREC 1994), Gaithersburg, MD, USA, 2–4 November 1994.
22. Abdul-Jaleel, N.; Allan, J.; Croft, W.B.; Diaz, F.; Larkey, L.; Li, X.; Smucker, M.D.; Wade, C. UMass at TREC 2004: Novelty and HARD. In Proceedings of the TREC-13, Gaithersburg, MD, USA, 16–19 November 2004; pp. 715–725.
23. Croft, W.B.; Metzler, D.; Strohman, T. Search Engines: Information Retrieval in Practice; Addison-Wesley Reading: Boston, MA, USA, 2010; Volume 520.
24. Amati, G.; Van Rijsbergen, C.J. Probabilistic models of information retrieval based on measuring the divergence from randomness. ACM Trans. Inf. Syst. (TOIS) 2002, 20, 357–389.
25. Nogueira, R.; Yang, W.; Lin, J.; Cho, K. Document expansion by query prediction. arXiv 2019, arXiv:1904.08375.
26. Formal, T.; Piwowarski, B.; Clinchant, S. SPLADE: Sparse lexical and expansion model for first stage ranking. In Proceedings of the 44th International ACM SIGIR Conference on Research and Development in Information Retrieval, Montréal, QC, Canada, 11–15 July 2021; pp. 2288–2292.
27. Lin, J.; Ma, X. A few brief notes on DeepImpact, COIL, and a conceptual framework for information retrieval techniques. arXiv 2021, arXiv:2106.14807.
28. Xiong, L.; Xiong, C.; Li, Y.; Tang, K.F.; Liu, J.; Bennett, P.; Ahmed, J.; Overwijk, A. Approximate nearest neighbor negative contrastive learning for dense text retrieval. arXiv 2020, arXiv:2007.00808.
29. Zheng, Z.; Hui, K.; He, B.; Han, X.; Sun, L.; Yates, A. BERT-QE: Contextualized query expansion for document re-ranking. arXiv 2020, arXiv:2009.07258.
30. Wang, X.; Macdonald, C.; Tonellotto, N.; Ounis, I. ColBERT-PRF: Semantic pseudo-relevance feedback for dense passage and document retrieval. ACM Trans. Web 2023, 17, 1–39.
31. Wang, X.; Macdonald, C.; Tonellotto, N.; Ounis, I. Pseudo-relevance feedback for multiple representation dense retrieval. In Proceedings of the 2021 ACM SIGIR International Conference on Theory of Information Retrieval, New York, NY, USA, 11–15 July 2021; pp. 297–306.
32. Yu, H.; Xiong, C.; Callan, J. Improving query representations for dense retrieval with pseudo relevance feedback. In Proceedings of the 30th ACM International Conference on Information & Knowledge Management, Virtual, 1–5 November 2021; pp. 3592–3596.
33. Li, H.; Mourad, A.; Zhuang, S.; Koopman, B.; Zuccon, G. Pseudo relevance feedback with deep language models and dense retrievers: Successes and pitfalls. ACM Trans. Inf. Syst. 2023, 41, 1–40.
34. Feng, J.; Tao, C.; Geng, X.; Shen, T.; Xu, C.; Long, G.; Zhao, D.; Jiang, D. Synergistic Interplay between Search and Large Language Models for Information Retrieval. arXiv 2023, arXiv:2305.07402.
35. Gao, L.; Ma, X.; Lin, J.; Callan, J. Precise zero-shot dense retrieval without relevance labels. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Toronto, ON, Canada, 9–14 July 2023; pp. 1762–1777.
36. Mackie, I.; Sekulic, I.; Chatterjee, S.; Dalton, J.; Crestani, F. GRM: Generative relevance modeling using relevance-aware sample estimation for document retrieval. arXiv 2023, arXiv:2306.09938.
37. Shen, T.; Long, G.; Geng, X.; Tao, C.; Zhou, T.; Jiang, D. Large language models are strong zero-shot retriever. arXiv 2023, arXiv:2304.14233.
38. Mackie, I.; Chatterjee, S.; Dalton, J. Generative relevance feedback with large language models. In Proceedings of the 46th International ACM SIGIR Conference on Research and Development in Information Retrieval, Taipei, Taiwan, 23–27 July 2023; pp. 2026–2031.
39. Dhole, K.D.; Agichtein, E. GenQREnsemble: Zero-shot LLM ensemble prompting for generative query reformulation. In Proceedings of the European Conference on Information Retrieval; Springer: Berlin/Heidelberg, Germany, 2024; pp. 326–335.
40. Wei, J.; Wang, X.; Schuurmans, D.; Bosma, M.; Ichter, B.; Xia, F.; Chi, E.H.; Le, Q.V.; Zhou, D. Chain-of-thought prompting elicits reasoning in large language models. Adv. Neural Inf. Process. Syst. 2022, 35, 24824–24837.
41. Saravia, E. Prompt Engineering Guide. GitHub. 2022. Available online: https://github.com/dair-ai/Prompt-Engineering-Guide (accessed on 1 June 2023).
42. Grattafiori, A.; Dubey, A.; Jauhri, A.; Pandey, A.; Kadian, A.; Al-Dahle, A.; Letman, A.; Mathur, A.; Schelten, A.; Vaughan, A.; et al. The Llama 3 herd of models. arXiv 2024, arXiv:2407.21783.
43. Guo, D.; Yang, D.; Zhang, H.; Song, J.; Zhang, R.; Xu, R.; Zhu, Q.; Ma, S.; Wang, P.; Bi, X.; et al. DeepSeek-R1: Incentivizing reasoning capability in LLMs via reinforcement learning. arXiv 2025, arXiv:2501.12948.
44. Lin, J.; Ma, X.; Lin, S.C.; Yang, J.H.; Pradeep, R.; Nogueira, R. Pyserini: A Python toolkit for reproducible information retrieval research with sparse and dense representations. In Proceedings of the 44th International ACM SIGIR Conference on Research and Development in Information Retrieval, New York, NY, USA, 11–15 July 2021; pp. 2356–2362.
45. Thakur, N.; Reimers, N.; Rücklé, A.; Srivastava, A.; Gurevych, I. BEIR: A heterogenous benchmark for zero-shot evaluation of information retrieval models. arXiv 2021, arXiv:2104.08663.

| Component | Content | Purpose |
|---|---|---|
| Instruction | “Extract the main key terms” | Direct the model to identify semantically central elements in the query. |
| Output Format | “Keywords: <keywords>” | Ensure structured output, facilitating further processing and analysis. |

| Component | Content | Purpose |
|---|---|---|
| Instruction 1 | “Perform rigorous constraint detection on the query to identify and optimize overly specific spatiotemporal/numerical constraints (e.g., excessively precise temporal or spatial limitations) while preserving essential core conditions.” | Constraint detection. |
| Instruction 2 | “Identify any key terms that can be replaced with synonyms or related terms, considering the original intent of the query.” | Semantic expansion. |
| Output Format | “Reformulated query: <reformulated query>” | Ensure structured output, facilitating further processing and analysis. |

| Item | Content |
|---|---|
| Original query | US stock price fluctuations between 3:15 PM and 3:30 PM yesterday |
| Step 1 prompt | Given the original query: {original_query}, extract the main key terms. Return a list of the key terms or important concepts from the query. Keywords: <keywords> |
| Keywords | [US, stock price, fluctuations, yesterday, 3:15 PM–3:30 PM] |
| Step 2 prompt | Given the original query: {original_query} and the extracted key terms: {keywords}, perform the following tasks: 1. Perform rigorous constraint detection on the query to identify and optimize overly specific spatiotemporal/numerical constraints (e.g., excessively precise temporal or spatial limitations) while preserving essential core conditions. 2. Identify any key terms that can be replaced with synonyms or related terms, considering the original intent of the query. Reformulated query: <reformulated query> |
| Reformulated query | US stock price (performance) fluctuations (upward/downward trends) yesterday (last trading day). |
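The two prompts in the example above can be chained as in the following minimal sketch. The prompt wording is taken from the table; everything else is an assumption made for illustration: the `llm` callable stands in for whatever model client is used, `parse_field` and `reformulate` are hypothetical helper names, and `fake_llm` returns the canned outputs from the table so that the sketch runs without a model.

```python
import re
from typing import Callable, Tuple

STEP1_TEMPLATE = (
    "Given the original query: {original_query}, extract the main key terms. "
    "Return a list of the key terms or important concepts from the query. "
    "Keywords: <keywords>"
)

STEP2_TEMPLATE = (
    "Given the original query: {original_query} and the extracted key terms: "
    "{keywords}, perform the following tasks: "
    "1. Perform rigorous constraint detection on the query to identify and "
    "optimize overly specific spatiotemporal/numerical constraints (e.g., "
    "excessively precise temporal or spatial limitations) while preserving "
    "essential core conditions. "
    "2. Identify any key terms that can be replaced with synonyms or related "
    "terms, considering the original intent of the query. "
    "Reformulated query: <reformulated query>"
)


def parse_field(text: str, label: str) -> str:
    """Return the text after 'label:'; fall back to the whole output if absent."""
    match = re.search(rf"{re.escape(label)}\s*:\s*(.+)", text, flags=re.DOTALL)
    return (match.group(1) if match else text).strip()


def reformulate(query: str, llm: Callable[[str], str]) -> Tuple[str, str]:
    """Run step 1 (keyword extraction), then feed its output into step 2."""
    step1_output = llm(STEP1_TEMPLATE.format(original_query=query))
    keywords = parse_field(step1_output, "Keywords")
    step2_output = llm(STEP2_TEMPLATE.format(original_query=query, keywords=keywords))
    return keywords, parse_field(step2_output, "Reformulated query")


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model: replays the outputs shown in the example table."""
    if "perform the following tasks" in prompt:
        return ("Reformulated query: US stock price (performance) fluctuations "
                "(upward/downward trends) yesterday (last trading day).")
    return "Keywords: [US, stock price, fluctuations, yesterday, 3:15 PM–3:30 PM]"


QUERY = "US stock price fluctuations between 3:15 PM and 3:30 PM yesterday"
keywords, reformulated = reformulate(QUERY, fake_llm)
print(keywords)
print(reformulated)
```

Keeping the model behind a plain callable means the chain can be tested with canned responses and reused with any deployment; the fallback in `parse_field` is one possible way to tolerate outputs that omit the expected label.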

| Model | DL19 MAP | DL19 NDCG@10 | DL19 R@1K | DL20 MAP | DL20 NDCG@10 | DL20 R@1K | Dev MRR@10 | Dev R@1K |
|---|---|---|---|---|---|---|---|---|
| Retrieval Baseline | | | | | | | | |
| BM25 | 0.290 | 0.497 | 0.745 | 0.288 | 0.488 | 0.803 | 0.187 | 0.857 |
| Query Reformulation Methods | | | | | | | | |
| RM3 [22] | 0.334 | 0.515 | 0.795 | 0.302 | 0.492 | 0.829 | 0.165 | 0.870 |
| Rocchio [23] | 0.340 | 0.528 | 0.795 | 0.312 | 0.491 | 0.833 | 0.168 | 0.873 |
| GRF_Queries [38] | 0.272 | 0.455 | 0.729 | 0.231 | 0.373 | 0.637 | | |
| GRF_Keywords [38] | 0.190 | 0.349 | 0.648 | 0.201 | 0.314 | 0.572 | | |
| GenQREnsemble (L) | 0.222 | 0.375 | 0.667 | 0.203 | 0.349 | 0.646 | | |
| GenQREnsemble (D) | 0.327 | 0.513 | 0.807 | 0.279 | 0.465 | 0.833 | | |
| HiPC-QR-1 (L) | 0.302 | 0.509 | 0.750 | 0.307 | 0.513 | 0.821 | 0.203 | 0.865 |
| HiPC-QR-2 (L) | 0.289 | 0.485 | 0.756 | 0.282 | 0.477 | 0.793 | 0.218 | 0.777 |
| HiPC-QR-1 (D) | 0.324 | 0.542 | 0.801 | 0.312 | 0.511 | 0.816 | 0.202 | 0.880 |
| HiPC-QR-2 (D) | 0.311 | 0.500 | 0.783 | 0.299 | 0.477 | 0.819 | 0.212 | 0.760 |

| Method | MRR@10 | R@1K |
|---|---|---|
| Baseline BM25 | 0.1874 | 0.8573 |
| HiPC-QR-1 (L) | 0.2030 | 0.8654 |
| HiPC-QR-2 (L | 0.1551 | 0.7981 |
| HiPC-QR-2 (L | 0.2175 | 0.7770 |
| HiPC-QR-1 (D) | 0.2017 | 0.8803 |
| HiPC-QR-2 (D | 0.1106 | 0.6491 |
| HiPC-QR-2 (D | 0.2117 | 0.7596 |

| Method | NDCG@10 | Improvement over GRF_Keywords | Outperformed Queries (Count/%) | Std Dev |
|---|---|---|---|---|
| GRF_Keywords | 0.348714 | - | - | 0.273745 |
| HiPC-QR-1 (L) | 0.509351 | 46.1% | 28/65.1% | 0.26014 |
| HiPC-QR-1 (D) | 0.542188 | 55.5% | 31/72.1% | 0.230846 |

| Method | NDCG@10 | Improvement over GRF_Queries | Outperformed Queries (Count/%) | Std Dev |
|---|---|---|---|---|
| GRF_Queries | 0.455084 | - | - | 0.306530 |
| HiPC-QR-2 (L) | 0.484772 | 6.5% | 24/55.8% | 0.266856 |
| HiPC-QR-2 (D) | 0.499916 | 9.9% | 22/51.2% | 0.266682 |
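The improvement and win-rate columns in the two comparison tables above follow from simple arithmetic, which the short check below reproduces. The helper names are hypothetical, and the total of 43 queries is inferred from the reported counts and percentages (for example, 28 queries at 65.1%) rather than stated in the tables.

```python
def relative_improvement(score: float, baseline: float) -> float:
    """Relative gain over the baseline, in percent."""
    return (score - baseline) / baseline * 100.0


def win_rate(wins: int, total_queries: int) -> float:
    """Share of queries on which the method beats the baseline, in percent."""
    return wins / total_queries * 100.0


TOTAL_QUERIES = 43  # assumption: inferred from the count/percentage pairs

# NDCG@10 values copied from the tables above.
grf_keywords, grf_queries = 0.348714, 0.455084

print(round(relative_improvement(0.509351, grf_keywords), 1))  # HiPC-QR-1 (L)
print(round(relative_improvement(0.542188, grf_keywords), 1))  # HiPC-QR-1 (D)
print(round(relative_improvement(0.484772, grf_queries), 1))   # HiPC-QR-2 (L)
print(round(relative_improvement(0.499916, grf_queries), 1))   # HiPC-QR-2 (D)
print(round(win_rate(28, TOTAL_QUERIES), 1))
```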

| Method | Query (ID:833860) | NDCG@10 |
|---|---|---|
| Original Query | what is the most popular food in switzerland | 0.4827 |
| GRF_Keywords | Switzerland, Food, Popular, List, Relevant, Documents | 0.2900 |
| GRF_Query | Swiss food, Swiss cuisine, Traditional Swiss food, Popular Swiss food, Most popular Swiss food, famous Swiss food, best Swiss food | 0.7744 |
| HiPC-QR-1 (L) | what is the most popular food in switzerland \| popular food, Switzerland | 0.6170 |
| HiPC-QR-2 (L) | what is the most popular (food, dish) in (Switzerland, Swiss cuisine)? | 0.9202 |
| HiPC-QR-1 (D) | what is the most popular food in switzerland \| Switzerland, popular food | 0.6170 |
| HiPC-QR-2 (D) | What are the most famous foods (popular food) in Switzerland? | 0.9355 |

| Method | Query (ID:1116380) | MAP@1000 |
|---|---|---|
| Original Query | what is a nonconformity? earth science | 0.0043 |
| HiPC-QR-1 (L) | what is a nonconformity? earth science \| nonconformity, earth science | 0.0051 |
| HiPC-QR-2 (L) | what is a nonconformity (geologic nonconformity)? | 0.5283 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yang, H.; Li, H.; Gonçalves, T. HiPC-QR: Hierarchical Prompt Chaining for Query Reformulation. Information 2025, 16, 790. https://doi.org/10.3390/info16090790
