A Review on Gas Pipeline Leak Detection: Acoustic-Based, OGI-Based, and Multimodal Fusion Methods

Abstract

1. Introduction

- (1)

- It provides a comprehensive review of gas leak detection methodologies based on acoustic sensing, OGI, and multimodal fusion, along with a comparative analysis between the conventional algorithms and SOTA approaches;

- (2)

- It synthesizes critical datasets for infrared visual recognition methods and evaluates the performance metrics of the SOTA models in this domain;

- (3)

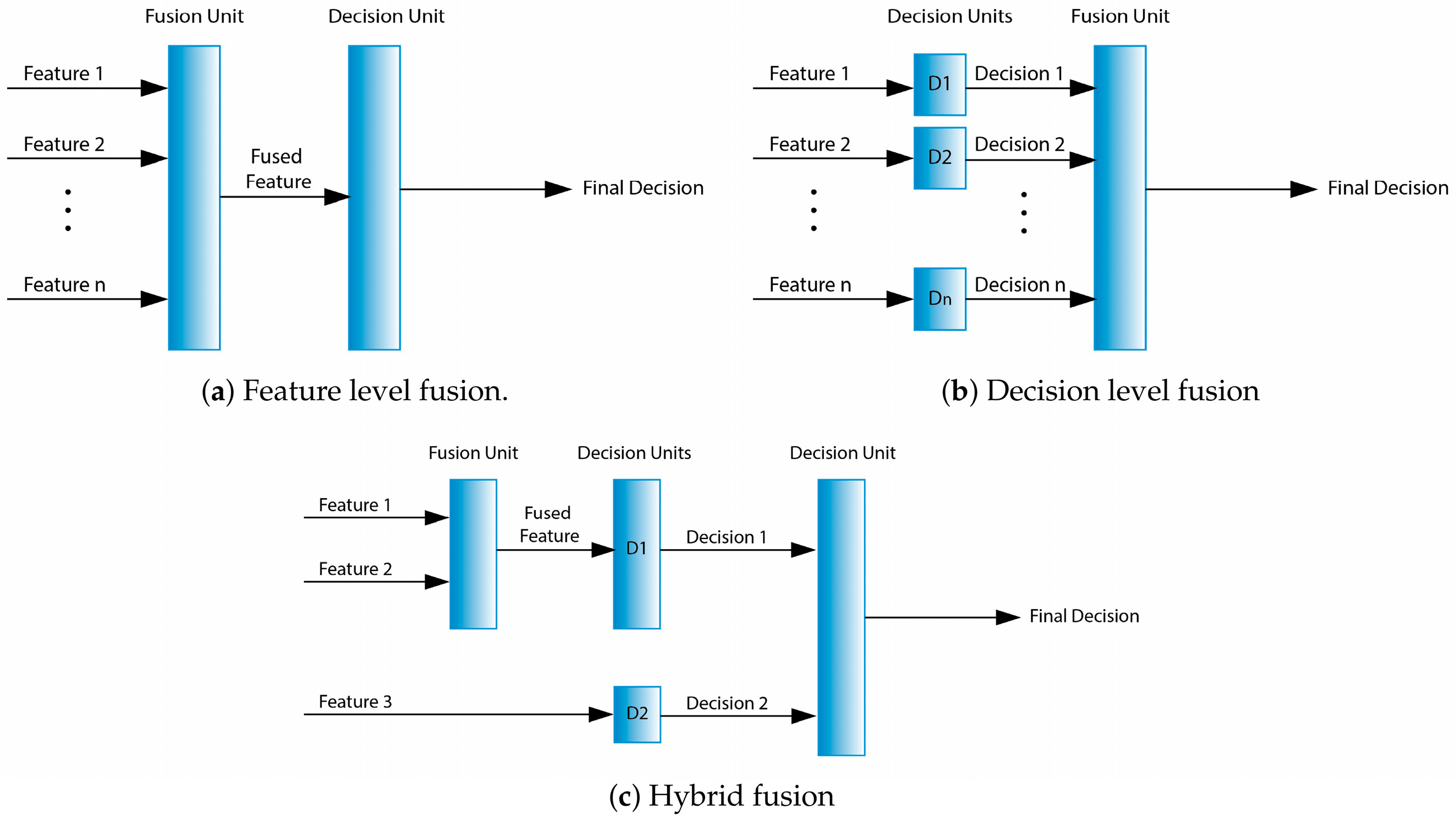

- It elucidates the comparative advantages and limitations of feature-level versus decision-level multimodal fusion, analyzes the underlying causes for the absence of graph neural network (GNN) integration in current frameworks, and proposes future research trajectories for multimodal fusion technologies.

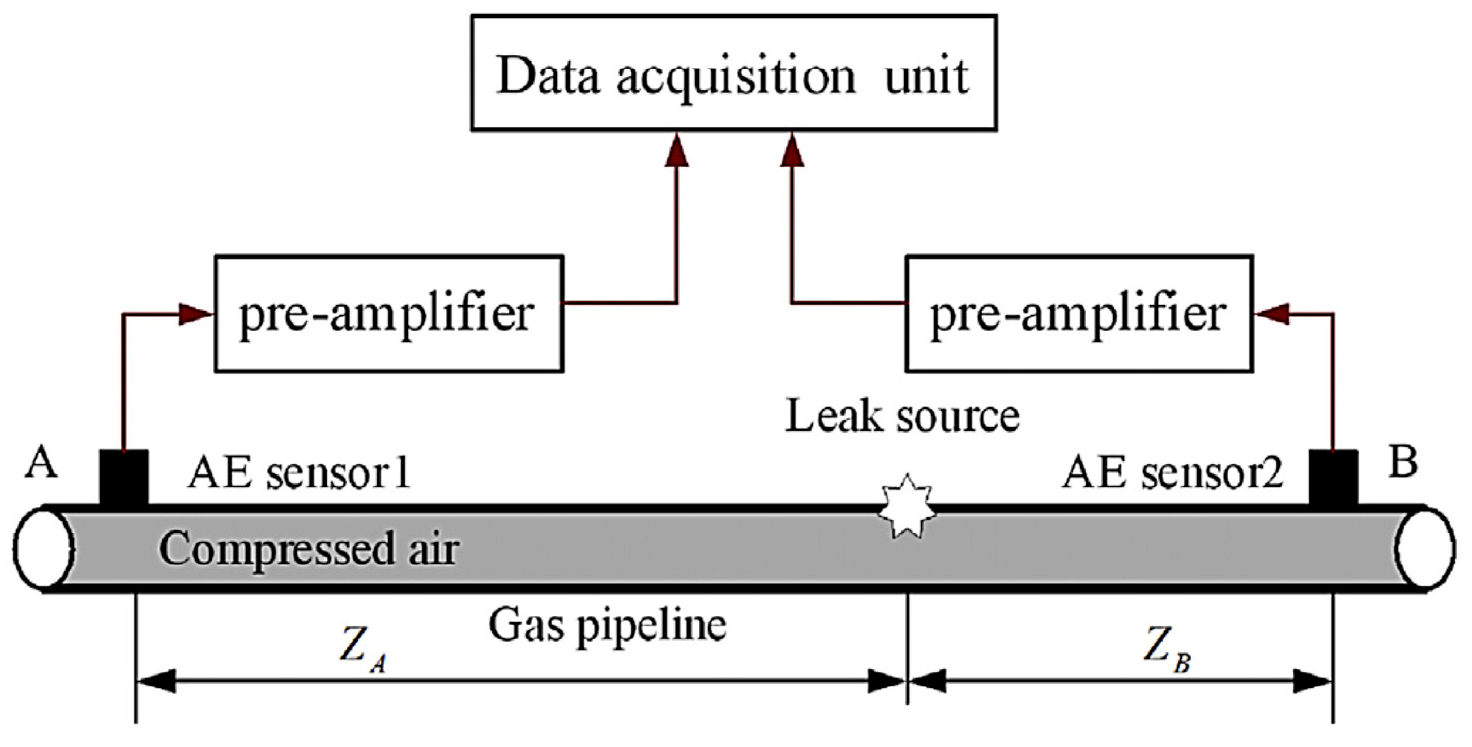

2. Acoustic-Based Leakage Detection System

2.1. Technical Framework and Implementation

2.1.1. Principle and Classification of Acoustic Detection

2.1.2. Acoustic Sensors

2.1.3. Acoustic Detection Process

- Signal acquisition: The system collects raw acoustic signals from the target object using broadband microphones, piezoelectric sensors, or similar transducers;

- Feature engineering: Time-domain and frequency-domain analyses are typically used to extract features;

- Pattern recognition: Leaks are ultimately identified through energy-threshold detection, machine learning, or end-to-end recognition with deep learning.
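The three stages above can be sketched as a toy pipeline. This is a minimal illustration in Python with NumPy, not a method from the cited studies. The 20–40 kHz "leak band", the 0.5 energy-ratio threshold, and the synthetic signals (a 500 Hz machinery hum plus band-limited noise standing in for leak noise) are all hypothetical choices. The features are RMS and kurtosis (time domain) and the fraction of spectral energy in the assumed leak band (frequency domain). Pattern recognition is a plain energy threshold.

```python
import numpy as np

LEAK_BAND_HZ = (20_000.0, 40_000.0)  # hypothetical leak band; real bands depend on pipe, gas, and orifice


def extract_features(frame, fs, band=LEAK_BAND_HZ):
    """Feature engineering: a few time- and frequency-domain features of one frame."""
    frame = np.asarray(frame, dtype=float)
    rms = np.sqrt(np.mean(frame ** 2))                                # time domain: signal energy
    centered = frame - frame.mean()
    kurtosis = np.mean(centered ** 4) / np.mean(centered ** 2) ** 2  # time domain: impulsiveness
    power = np.abs(np.fft.rfft(frame)) ** 2                           # frequency domain: power spectrum
    freqs = np.fft.rfftfreq(frame.size, d=1.0 / fs)
    in_band = (freqs >= band[0]) & (freqs <= band[1])
    band_ratio = power[in_band].sum() / power.sum()                   # share of energy in the leak band
    return {"rms": rms, "kurtosis": kurtosis, "band_ratio": band_ratio}


def detect_leak(features, threshold=0.5):
    """Pattern recognition: the simplest option, a fixed energy-ratio threshold."""
    return bool(features["band_ratio"] > threshold)


def synthetic_frame(leak, fs=100_000.0, n=10_000, seed=0):
    """Hypothetical frame: 500 Hz hum + sensor noise, optionally plus band-limited 'leak' noise."""
    rng = np.random.default_rng(seed)
    t = np.arange(n) / fs
    frame = np.sin(2 * np.pi * 500.0 * t) + 0.05 * rng.standard_normal(n)
    if leak:
        spectrum = np.fft.rfft(rng.standard_normal(n))
        freqs = np.fft.rfftfreq(n, d=1.0 / fs)
        spectrum[(freqs < LEAK_BAND_HZ[0]) | (freqs > LEAK_BAND_HZ[1])] = 0.0  # keep only the leak band
        leak_noise = np.fft.irfft(spectrum, n=n)
        frame = frame + leak_noise / leak_noise.std()  # unit-variance leak noise
    return frame


fs = 100_000.0
for leak in (False, True):
    feats = extract_features(synthetic_frame(leak, fs), fs)
    print(leak, round(feats["band_ratio"], 3), detect_leak(feats))
```

In practice, the fixed threshold is the stage that machine learning or deep learning models replace, usually together with richer feature sets.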

2.1.4. Key Challenges and Trade-Offs

- Micro-leakage or small-leakage detection: Acoustic signals generated by leaks are easily masked by ambient noise, which can lead to false alarms or missed detections [63]. Small leaks may also fail to cause a significant pressure drop in the system [9], making timely identification difficult. According to acoustic detection principles, smaller leak orifices produce higher-frequency signals that can be detected only over shorter distances. Higher-frequency signals also require higher sampling rates, because the Nyquist–Shannon theorem requires the sampling rate to exceed twice the highest frequency of interest, and this increases computational requirements [23,60,61,62];

- Signal distortion and attenuation: Reflections of acoustic waves from the pipeline surface can distort the signal and reduce leak localization accuracy. Leak-induced acoustic signals are non-stationary in both the time and frequency domains; that is, their amplitude, frequency, and phase may vary over time. This non-stationarity makes it difficult for conventional signal-processing methods to identify and analyze leak signals, especially under low signal-to-noise ratio (SNR) conditions, where leak signatures are weak. Noise reduction algorithms must therefore balance noise suppression against preservation of the target signal [12,13]. In addition, high-frequency components attenuate strongly as they propagate through the pipeline, resulting in weak correlation of leak signals across a wide frequency band [24].
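The sampling-rate point in the first challenge can be made concrete. The sketch below samples a pure tone and reports where its spectral peak lands. It uses Python with NumPy, and the 60 kHz tone and both sampling rates are illustrative assumptions, not values from the cited studies. When the sampling rate is too low, the high-frequency component does not simply vanish. It aliases to a spurious lower frequency, so the highest leak-band frequency sets a hard lower bound on the acquisition rate.

```python
import numpy as np


def min_sampling_rate(f_max_hz):
    """Nyquist-Shannon bound: sample faster than twice the highest frequency of interest."""
    return 2.0 * f_max_hz


def apparent_frequency(f_tone_hz, fs_hz, n=1000):
    """Sample a pure tone at fs_hz and return the frequency of its largest spectral peak."""
    t = np.arange(n) / fs_hz
    x = np.sin(2 * np.pi * f_tone_hz * t)
    spectrum = np.abs(np.fft.rfft(x))
    freqs = np.fft.rfftfreq(n, d=1.0 / fs_hz)
    return float(freqs[np.argmax(spectrum)])


f_leak = 60_000.0                             # hypothetical high-frequency leak component
print(min_sampling_rate(f_leak))              # lower bound on fs for this component
print(apparent_frequency(f_leak, 200_000.0))  # adequately sampled: peak at 60 kHz
print(apparent_frequency(f_leak, 100_000.0))  # undersampled: peak aliases to 40 kHz
```

Doubling the sampling rate doubles the raw data volume per channel, which is the computational cost the text refers to.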

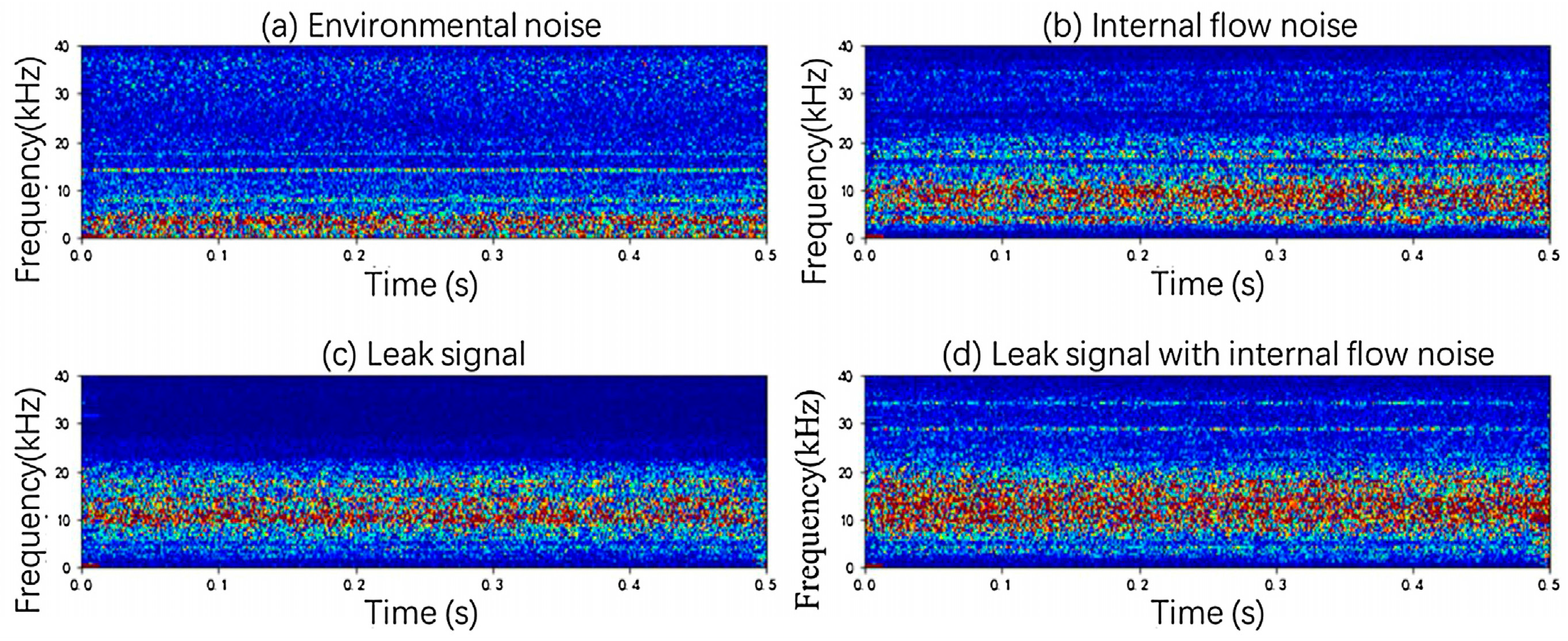
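Both challenges above ultimately affect leak localization. In acoustic systems, localization is commonly based on the time delay between two sensors. The following is a minimal sketch of the classical cross-correlation time-delay approach, not the specific method of any cited study. It assumes the following setup:

- sensor A sits at 0 m and sensor B at distance L;
- the leak is at distance x from A;
- the propagation speed c is constant;
- the leak signal is synthetic and broadband.

The 100 m spacing, 400 m/s speed, and 10 kHz sampling rate are hypothetical. With Δt = t_A − t_B = (2x − L)/c, the leak position follows as x = (L + cΔt)/2.

```python
import numpy as np


def estimate_delay(sig_a, sig_b, fs):
    """Return t_A - t_B in seconds from the peak of the full cross-correlation."""
    corr = np.correlate(sig_a, sig_b, mode="full")
    lag = np.argmax(corr) - (len(sig_b) - 1)  # output index 0 corresponds to lag -(len(b) - 1)
    return lag / fs


def locate_leak(delay_s, spacing_m, wave_speed_mps):
    """Distance of the leak from sensor A: x = (L + c * dt) / 2, with dt = t_A - t_B."""
    return 0.5 * (spacing_m + wave_speed_mps * delay_s)


def simulate_sensors(leak_pos_m, spacing_m, wave_speed_mps, fs, n=4096, noise=0.5, seed=0):
    """Hypothetical broadband leak noise arriving at A (0 m) and B (spacing_m) with sensor noise."""
    rng = np.random.default_rng(seed)
    d_a = int(round(leak_pos_m / wave_speed_mps * fs))                # travel time to A in samples
    d_b = int(round((spacing_m - leak_pos_m) / wave_speed_mps * fs))  # travel time to B in samples
    pad = max(d_a, d_b)
    source = rng.standard_normal(n + pad)
    sig_a = source[pad - d_a: pad - d_a + n] + noise * rng.standard_normal(n)
    sig_b = source[pad - d_b: pad - d_b + n] + noise * rng.standard_normal(n)
    return sig_a, sig_b


spacing, speed, fs = 100.0, 400.0, 10_000.0  # hypothetical baseline, wave speed, sampling rate
sig_a, sig_b = simulate_sensors(30.0, spacing, speed, fs)
dt = estimate_delay(sig_a, sig_b, fs)
print(dt, locate_leak(dt, spacing, speed))  # expected: about -0.1 s and 30 m
```

The sharp correlation peak here depends on the signal remaining broadband and coherent between the two sensors. The high-frequency attenuation described above weakens exactly this coherence. For that reason, practical systems often band-limit or whiten the signals before correlating them.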

2.2. Spectral Analysis

2.3. Visualization of Acoustic Signature

3. OGI-Based Leakage Detection System

3.1. Technical Framework and Implementation

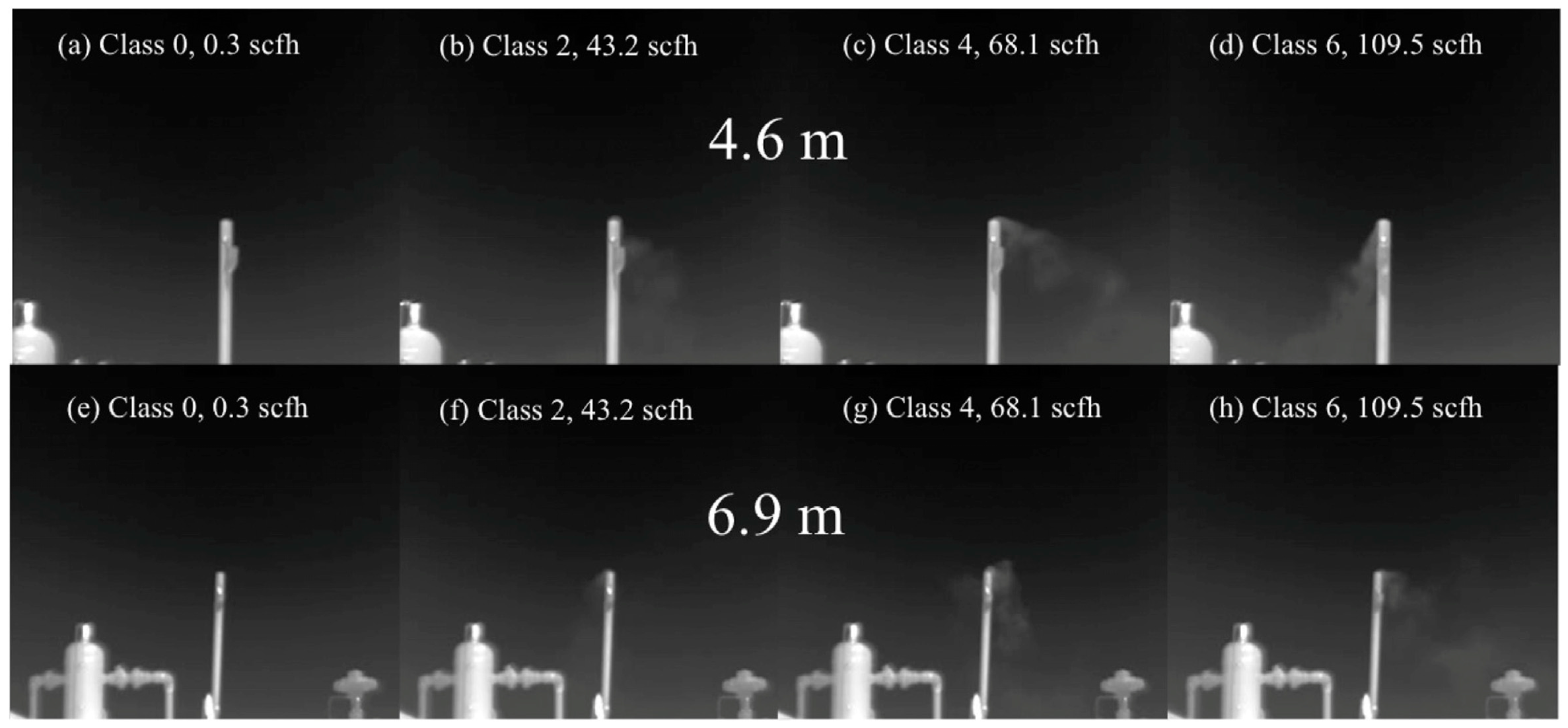

3.1.1. Infrared Imaging, Thermal Imaging, and Optical Gas Imaging

3.1.2. Principle and Classification of OGI Technologies

3.1.3. OGI-Based Leakage Detection Process

3.1.4. Key Challenges of OGI-Based Gas Leak Detection

- Low image resolution, low contrast, and lack of visible texture [28,29,30,34]. Infrared cameras capture single-channel grayscale video in which the gas shows no significant color or texture contrast against the background, and the blurred boundaries of the gas further increase detection difficulty;

- Complex background interference [31]. In complex environments such as chemical plants, non-leak factors such as background heat sources, lighting variations, and ambient temperature fluctuations act as interference. These factors can hinder the identification and localization of leak sources, further complicating detection;

- Gas is a non-rigid target that is difficult to detect and annotate, and large-scale standardized benchmark datasets are lacking [28,38,73]. Gas plumes have no fixed shape and evolve over time, which makes manual annotation standards difficult to unify. Detecting such plumes is therefore harder than detecting rigid objects such as vehicles, pedestrians, or animals. No industry-wide image or video benchmark dataset is currently available, which limits model reproducibility and comparability across studies.
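The annotation difficulty described in the last challenge can be quantified with IoU, which appears in this review's list of abbreviations. The sketch below uses Python with NumPy to compute the IoU of two binary masks. The Gaussian "plume" and the two boundary levels (0.5 and 0.3 of peak intensity) are hypothetical. They stand in for two annotators who disagree about where a diffuse plume ends and are not taken from any benchmark.

```python
import numpy as np


def mask_iou(mask_a, mask_b):
    """Intersection over Union (IoU) of two binary masks of the same shape."""
    a = np.asarray(mask_a, dtype=bool)
    b = np.asarray(mask_b, dtype=bool)
    union = np.logical_or(a, b).sum()
    if union == 0:
        return 1.0  # convention assumed here: two empty masks agree perfectly
    return float(np.logical_and(a, b).sum() / union)


# Hypothetical diffuse "plume": a 2-D Gaussian intensity blob on a 64 x 64 frame.
yy, xx = np.mgrid[0:64, 0:64]
plume = np.exp(-((xx - 32) ** 2 + (yy - 32) ** 2) / (2 * 10.0 ** 2))

# Two annotators who place the plume boundary at different intensity levels.
annotator_1 = plume >= 0.5
annotator_2 = plume >= 0.3
print(mask_iou(annotator_1, annotator_2))
```

Both masks outline the same plume, yet their IoU is well below 1 (roughly 0.6 in this setup). Because plume boundaries are this sensitive to the chosen cut-off, scores reported on differently annotated datasets are hard to compare directly.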

3.2. Background Subtraction

3.3. Optical Flow Estimation

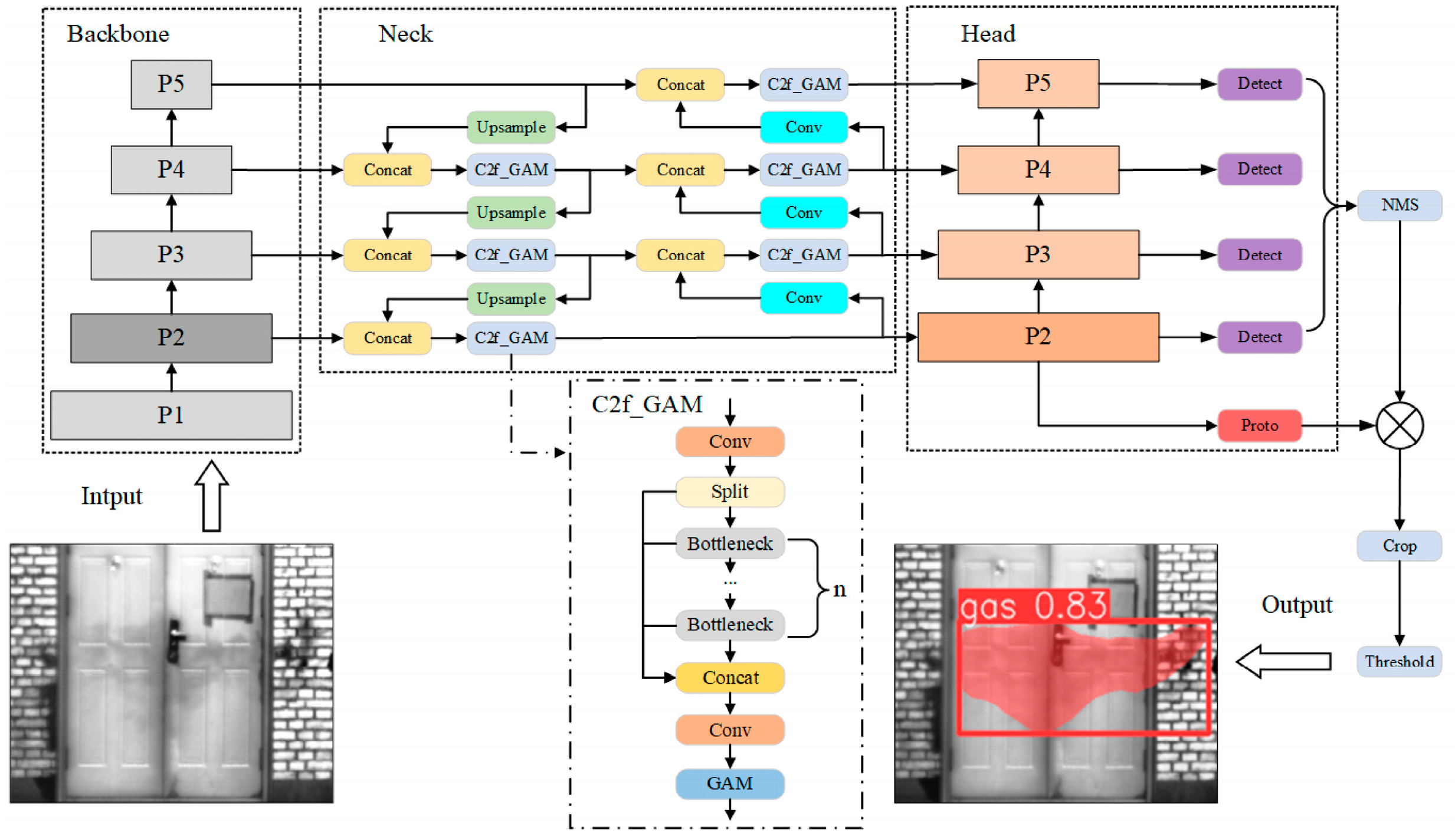

3.4. Convolutional Neural Network

3.4.1. Fully Connected Convolutional Networks

3.4.2. Fully Convolutional Networks

3.5. Vision Transformer

3.6. Non-Visual Methods

4. Multimodal Fusion Detection System

4.1. Consistency, Complementarity, and Compatibility

4.2. Classification of Multimodal Fusion

4.3. Feature-Level Fusion

4.3.1. Encoder–Decoder Fusion

4.3.2. Attention Mechanism Fusion

4.3.3. Generative Fusion

4.4. Decision-Level Fusion

5. Discussion

- (1) In engineering practice, “acoustic signal visualization” is often loosely termed “acoustic imaging” [20,123]. Strictly defined, however, acoustic imaging requires spatial resolution, i.e., the ability to map sound sources to physical locations [124]. For terminological precision, this study distinguishes the two concepts: acoustic imaging refers specifically to sound field reconstruction techniques with spatial resolution, whereas acoustic signal visualization covers broader methods for transforming and representing signals;

- (2) This review does not distinguish between real-time monitoring and shutdown maintenance. Acoustic detection technologies illustrate the difference:

  - Acoustic emission (AE) is a passive monitoring technique that relies on elastic waves released by the structural damage itself. It is suitable for real-time condition monitoring during equipment operation, but its localization accuracy is constrained by errors in wave velocity estimation and by sensor placement [7,14,18,19,22,23,66];

  - Ultrasonic guided wave (UGW) testing actively excites low-frequency ultrasonic waves and offers sub-millimeter detection sensitivity to internal defects such as pipeline cracks or corrosion. However, because the excitation energy must be controlled and interference avoided, UGW testing is typically performed during shutdown maintenance [8,16,17].

- (3) Monitoring key thermodynamic and state parameters (e.g., temperature, flow rate, and pressure or negative pressure waves) is effective for identifying large-diameter leaks, although localization is typically limited to a pipeline segment [125]. However, these methods, especially conventional threshold-based approaches, are generally inadequate or unreliable for micro- and small-diameter leaks because the resulting signal changes are subtle relative to system noise. Detecting micro-leaks therefore often requires more advanced, sensitive, and costly techniques [126];

- (4) As noted in [106], graph neural networks (GNNs) can serve as effective tools for feature fusion. However, no relevant research has yet been reported on pipeline gas leak detection. The existing literature has applied GNNs only to the topological modeling of complex pipeline networks in negative-pressure wave (NPW) detection scenarios [127]. This gap may stem from an inherent modeling constraint of GNNs: they rely on “node-edge” structures to define relationships between entities. Multimodal gas leak detection requires fusing heterogeneous data sources, and conventional graph structures struggle to represent the resulting cross-modal feature interactions directly. Moving beyond node-centric modeling paradigms and exploring new graph representation frameworks for unstructured data will therefore be a key research direction for applying GNNs to multimodal leak detection.
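Item (2) notes that AE localization accuracy is limited by errors in the assumed wave velocity. A short derivation shows how a velocity error δc propagates into the location estimate. It assumes the common two-sensor time-difference-of-arrival arrangement:

- sensors A and B are a distance L apart;
- the leak is at distance x from A;
- c is the propagation speed;
- Δt = t_A − t_B is the measured delay, assumed exact.

```latex
\begin{aligned}
t_A &= \frac{x}{c}, \qquad t_B = \frac{L - x}{c}, \qquad
\Delta t = t_A - t_B = \frac{2x - L}{c}
\;\Longrightarrow\; x = \frac{L + c\,\Delta t}{2}, \\
\hat{x} &= \frac{L + (c + \delta c)\,\Delta t}{2}
\;\Longrightarrow\; \hat{x} - x = \frac{\delta c\,\Delta t}{2}
= \frac{\delta c}{c}\left(x - \frac{L}{2}\right).
\end{aligned}
```

For example, take L = 100 m, a leak at x = 10 m, and a 5% velocity error. The estimate is then off by 0.05 × (10 − 50) = −2 m. A leak midway between the sensors, by contrast, is located without error from this source alone. Sensor placement therefore interacts with velocity uncertainty: the error grows linearly as the leak moves away from the midpoint.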

6. Conclusions

- Advanced fusion architectures: Transformer-based networks, self-supervised learning paradigms, and digital-twin-integrated systems;

- Foundation development: Large-scale benchmark datasets, optimized feature extraction, and multi-sensor diagnostics;

- Deployment optimization: Hardware-aware model lightweighting and cross-modal calibration frameworks.

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AE | Acoustic Emission |
| AI | Artificial Intelligence |
| AM | Attention Module |
| BiFPN | Bidirectional Feature Pyramid Network |
| BiGRU | Bidirectional Gated Recurrent Unit |
| BiLSTM | Bidirectional Long Short-Term Memory |
| CBAM | Convolutional Block Attention Module |
| CNN | Convolutional Neural Network |
| CNR | Contrast-to-Noise Ratio |
| ConvLSTM | Convolutional Long Short-Term Memory |
| CR | Cow Rumen |
| CWT | Continuous Wavelet Transform |
| DCN | Deformable Convolutional Network |
| DCT | Discrete Cosine Transform |
| DenseASPP | Dense Atrous Spatial Pyramid Pooling |
| DWT | Discrete Wavelet Transform |
| ECA | Efficient Channel Attention |
| FCN | Fully Convolutional Network |
| FCNN | Fully Convolutional Neural Network |
| FPA | Focal Plane Array |
| FPN | Feature Pyramid Network |
| FPS | Frames Per Second |
| FRFT | Fractional Fourier Transform |
| GADF | Gramian Angular Difference Field |
| GAN | Generative Adversarial Network |
| GNN | Graph Neural Network |
| GSP | Galvanized Steel Pipe |
| IGS | Infrared Gas Segmentation |
| IIG | Industrial Invisible Gas |
| IoU | Intersection over Union |
| IR | Infrared |
| LBB | Leak Before Break |
| LLE | Locally Linear Embedding |
| LNG | Liquefied Natural Gas |
| LWIR | Long-Wave Infrared |
| mAP | Mean Average Precision |
| MEKF | Markov Extended Kalman Filter |
| MhLSa | Multi-head Linear Self-Attention |
| MOS | Metal Oxide Semiconductor |
| MR | Methane Release |
| MsFFA | Multi-scale Fusion Feature Attention |
| MTF | Markov Transition Field |
| MWIR | Mid-Wave Infrared |
| NI | National Instruments |
| NLP | Natural Language Processing |
| NPP | Nuclear Power Plant |
| OGI | Optical Gas Imaging |
| ppm | Parts per Million |
| PSO | Particle Swarm Optimization |
| QOGI | Quantitative Optical Gas Imaging |
| RBFN | Radial Basis Function Network |
| ROI | Region of Interest |
| RP | Recurrence Plot |
| SE | Spectral Enhancement |
| SENet | Squeeze-and-Excitation Network |
| SIFT | Scale-Invariant Feature Transform |
| SNR | Signal-to-Noise Ratio |
| SOTA | State of the Art |
| STFT | Short-Time Fourier Transform |
| S-transform | Stockwell Transform |
| SVM | Support Vector Machine |
| SWIR | Short-Wave Infrared |
| UAV | Unmanned Aerial Vehicle |
| UGW | Ultrasonic Guided Waves |
| VGGNet | Visual Geometry Group Network |
| ViBe | Visual Background Extractor |
| ViT | Vision Transformer |
| VMD | Variational Mode Decomposition |
| VOC | Volatile Organic Compound |
| WOA | Whale Optimization Algorithm |
| WT | Wavelet Transform |
| YOLO | You Only Look Once |

References

- Ding, Q.; Yang, Z.G.; Zheng, H.L.; Lou, X. Failure analysis on abnormal leakage of TP321 stainless steel pipe in hydrogen-eliminated system of nuclear power plant. Eng. Fail. Anal. 2018, 89, 286–292. [Google Scholar] [CrossRef]

- Meribout, M.; Khezzar, L.; Azzi, A.; Ghendour, N. Leak detection systems in oil and gas fields: Present trends and future prospects. Flow Meas. Instrum. 2020, 75, 101772. [Google Scholar] [CrossRef]

- Zou, S. Panjin Officials Punished After Chemical Plant Explosion. China Daily. 2024. Available online: https://www.chinadaily.com.cn/a/202410/28/WS671f593ca310f1265a1ca0aa.html (accessed on 17 August 2025).

- Ray, B. Malaysia Gas Pipeline Fire: An In-Depth Look at the Puchong Blaze. Breslin Media. 2025. Available online: https://breslin.media/safety-lessons-puchong-gas-fire (accessed on 17 August 2025).

- Li, C.; Yang, M. Applicability of Leak-Before-Break (LBB) Technology for the Primary Coolant Piping System to CPR1000 Nuclear Power Plants in China. In Proceedings of the 20th International Conference on Structural Mechanics in Reactor Technology (SMiRT 20), Espoo, Finland, 9–14 August 2009. [Google Scholar]

- Fiates, J.; Vianna, S.S. Numerical modelling of gas dispersion using OpenFOAM. Process Saf. Environ. Prot. 2016, 104, 277–293. [Google Scholar] [CrossRef]

- Zhang, Z.; Xu, C.; Xie, J.; Zhang, Y.; Liu, P.; Liu, Z. MFCC-LSTM framework for leak detection and leak size identification in gas-liquid two-phase flow pipelines based on acoustic emission. Measurement 2023, 219, 113238. [Google Scholar] [CrossRef]

- Jiang, Q.; Qu, W.; Xiao, L. Pipeline damage identification in nuclear industry using a particle swarm optimization-enhanced machine learning approach. Eng. Appl. Artif. Intell. 2024, 133, 108467. [Google Scholar] [CrossRef]

- Cai, B.P.; Yang, C.; Liu, Y.H.; Kong, X.D.; Gao, C.T.; Tang, A.B.; Liu, Z.K.; Ji, R.J. A data-driven early micro-leakage detection and localization approach of hydraulic systems. J. Cent. South Univ. 2021, 28, 1390–1401. [Google Scholar] [CrossRef]

- Lu, J.; Fu, Y.; Yue, J.; Zhu, L.; Wang, D.; Hu, Z. Natural gas pipeline leak diagnosis based on improved variational modal decomposition and locally linear embedding feature extraction method. Process Saf. Environ. Prot. 2022, 164, 857–867. [Google Scholar] [CrossRef]

- Xiao, Q.; Li, J.; Sun, J.; Feng, H.; Jin, S. Natural-gas pipeline leak location using variational mode decomposition analysis and cross-time–frequency spectrum. Measurement 2018, 124, 163–172. [Google Scholar] [CrossRef]

- Yao, L.; Zhang, Y.; He, T.; Luo, H. Natural gas pipeline leak detection based on acoustic signal analysis and feature reconstruction. Appl. Energy 2023, 352, 121975. [Google Scholar] [CrossRef]

- Yao, L.; Zhang, Y.; Wang, L.; Li, R.; He, T. Natural Gas Pipeline Leak Detection Based on Dual Feature Drift in Acoustic Signals. IEEE Trans. Ind. Inform. 2024, 21, 1950–1959. [Google Scholar] [CrossRef]

- Han, X.; Zhao, S.; Cui, X.; Yan, Y. Localization of CO2 gas leakages through acoustic emission multi-sensor fusion based on wavelet-RBFN modeling. Meas. Sci. Technol. 2019, 30, 085007. [Google Scholar] [CrossRef]

- Xiao, R.; Hu, Q.; Li, J. Leak detection of gas pipelines using acoustic signals based on wavelet transform and Support Vector Machine. Measurement 2019, 146, 479–489. [Google Scholar] [CrossRef]

- Li, S.; Zhang, J.; Yan, D.; Wang, P.; Huang, Q.; Zhao, X.; Cheng, Y.; Zhou, Q.; Xiang, N.; Dong, T. Leak detection and location in gas pipelines by extraction of cross spectrum of single non-dispersive guided wave modes. J. Loss Prev. Process Ind. 2016, 44, 255–262. [Google Scholar] [CrossRef]

- Li, S.; Han, M.; Cheng, Z.; Xia, C. Multi-modal identification of leakage-induced acoustic vibration in gas-filled pipelines by selection of coherent frequency band. Int. J. Press. Vessel. Pip. 2020, 188, 104193. [Google Scholar] [CrossRef]

- Zhang, Z.; Huang, J.; Yu, Y.; Qin, R.; Wang, J.; Zhang, S.; Su, Y.; Wen, G.; Cheng, W.; Chen, X. Microleakage acoustic emission monitoring of pipeline weld cracks under complex noise interference: A feasible framework. J. Sound Vib. 2025, 604, 118980. [Google Scholar] [CrossRef]

- Huang, Q.; Shi, X.; Hu, W.; Luo, Y. Acoustic emission-based leakage detection for gas safety valves: Leveraging a multi-domain encoding learning algorithm. Measurement 2025, 242, 116011. [Google Scholar] [CrossRef]

- Yuan, Y.; Cui, X.; Han, X.; Gao, Y.; Lu, F.; Liu, X. Multi-condition pipeline leak diagnosis based on acoustic image fusion and whale-optimized evolutionary convolutional neural network. Eng. Appl. Artif. Intell. 2025, 153, 110886. [Google Scholar] [CrossRef]

- Yan, W.; Liu, W.; Zhang, Q.; Bi, H.; Jiang, C.; Liu, H.; Wang, T.; Dong, T.; Ye, X. Multisource multimodal feature fusion for small leak detection in gas pipelines. IEEE Sens. J. 2023, 24, 1857–1865. [Google Scholar] [CrossRef]

- Saleem, F.; Ahmad, Z.; Siddique, M.F.; Umar, M.; Kim, J.M. Acoustic Emission-Based pipeline leak detection and size identification using a customized One-Dimensional densenet. Sensors 2025, 25, 1112. [Google Scholar] [CrossRef]

- Quy, T.B.; Kim, J.M. Leak detection in a gas pipeline using spectral portrait of acoustic emission signals. Measurement 2020, 152, 107403. [Google Scholar] [CrossRef]

- Zuo, J.; Zhang, Y.; Xu, H.; Zhu, X.; Zhao, Z.; Wei, X.; Wang, X. Pipeline leak detection technology based on distributed optical fiber acoustic sensing system. IEEE Access 2020, 8, 30789–30796. [Google Scholar] [CrossRef]

- Lu, Q.; Li, Q.; Hu, L.; Huang, L. An effective Low-Contrast SF6 gas leakage detection method for infrared imaging. IEEE Trans. Instrum. Meas. 2021, 70, 5009009. [Google Scholar] [CrossRef]

- Shen, Z.; Schmoll, R.; Kroll, A. Measurement of fluid flow velocity by using infrared and visual cameras: Comparison and evaluation of optical flow estimation algorithms. In Proceedings of the 2023 IEEE Sensors, Vienna, Austria, 29 October–1 November 2023; IEEE: New York, NY, USA, 2023; pp. 1–4. [Google Scholar]

- Rawat, W.; Wang, Z. Deep convolutional neural networks for image classification: A comprehensive review. Neural Comput. 2017, 29, 2352–2449. [Google Scholar] [CrossRef]

- Wang, J.; Tchapmi, L.P.; Ravikumar, A.P.; McGuire, M.; Bell, C.S.; Zimmerle, D.; Savarese, S.; Brandt, A.R. Machine vision for natural gas methane emissions detection using an infrared camera. Appl. Energy 2020, 257, 113998. [Google Scholar] [CrossRef]

- Shi, J.; Chang, Y.; Xu, C.; Khan, F.; Chen, G.; Li, C. Real-time leak detection using an infrared camera and Faster R-CNN technique. Comput. Chem. Eng. 2020, 135, 106780. [Google Scholar] [CrossRef]

- Wang, J.; Ji, J.; Ravikumar, A.P.; Savarese, S.; Brandt, A.R. VideoGasNet: Deep learning for natural gas methane leak classification using an infrared camera. Energy 2022, 238, 121516. [Google Scholar] [CrossRef]

- Bin, J.; Bahrami, Z.; Rahman, C.A.; Du, S.; Rogers, S.; Liu, Z. Foreground fusion-based liquefied natural gas leak detection framework from surveillance thermal imaging. IEEE Trans. Emerg. Top. Comput. Intell. 2022, 7, 1151–1162. [Google Scholar] [CrossRef]

- Bhatt, R.; Gokhan Uzunbas, M.; Hoang, T.; Whiting, O.C. Segmentation of low-level temporal plume patterns from IR video. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 15–20 June 2019. [Google Scholar]

- Lin, H.; Gu, X.; Hu, J.; Gu, X. Gas leakage segmentation in industrial plants. In Proceedings of the 2020 Chinese Automation Congress (CAC), Shanghai, China, 6–8 November 2020; IEEE: New York, NY, USA, 2020; pp. 1639–1644. [Google Scholar]

- Xu, S.; Wang, X.; Sun, Q.; Dong, K. MWIRGas-YOLO: Gas Leakage Detection Based on Mid-Wave Infrared Imaging. Sensors 2024, 24, 4345. [Google Scholar] [CrossRef]

- Xiaojing, G.U.; Haoqi, L.; Dewu, D.; Xingsheng, G.U. An infrared gas imaging and instance segmentation based gas leakage detection method. J. East China Univ. Sci. Technol. 2023, 49, 76–86. [Google Scholar]

- Wang, Q.; Xing, M.; Sun, Y.; Pan, X.; Jing, Y. Optical gas imaging for leak detection based on improved deeplabv3+ model. Opt. Lasers Eng. 2024, 175, 108058. [Google Scholar] [CrossRef]

- Yu, H.; Wang, J.; Wang, Z.; Yang, J.; Huang, K.; Lu, G.; Deng, F.; Zhou, Y. A lightweight network based on local–global feature fusion for real-time industrial invisible gas detection with infrared thermography. Appl. Soft Comput. 2024, 152, 111138. [Google Scholar] [CrossRef]

- Sarker, T.T.; Embaby, M.G.; Ahmed, K.R.; AbuGhazaleh, A. Gasformer: A transformer-based architecture for segmenting methane emissions from livestock in optical gas imaging. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 5489–5497. [Google Scholar]

- Jing, W.; Pan, Y.; Minghe, W.; Li, L.; Weiqi, J.; Wei, C.; Bingcai, S. Thermal imaging detection method of leak gas clouds based on support vector machine. Acta Opt. Sin. 2022, 42, 0911002. [Google Scholar]

- Bin, J.; Rogers, S.; Liu, Z. Vision Fourier transformer empowered multi-modal imaging system for ethane leakage detection. Inf. Fusion 2024, 106, 102266. [Google Scholar] [CrossRef]

- Kang, Y.; Shi, K.; Tan, J.; Cao, Y.; Zhao, L.; Xu, Z. Multimodal Fusion Induced Attention Network for Industrial VOCs Detection. IEEE Trans. Artif. Intell. 2024, 5, 6385–6398. [Google Scholar] [CrossRef]

- Li, K.; Xiong, K.; Jiang, J.; Wang, X. A convolutional block multi-attentive fusion network for underground natural gas micro-leakage detection of hyperspectral and thermal data. Energy 2025, 319, 134870. [Google Scholar] [CrossRef]

- Wang, Y.; Huang, L.; Cheng, Z.; Xu, J.; Li, Q. Flow Faster RCNN: Deep Learning Approach for Infrared Gas Leak Detection in Complex Chemical Plant Surroundings. In Proceedings of the 2023 42nd Chinese Control Conference (CCC), Tianjin, China, 24–26 July 2023; IEEE: New York, NY, USA, 2023; pp. 7823–7830. [Google Scholar]

- Jocher, G.; Stoken, A.; Borovec, J.; Changyu, L.; Hogan, A.; Diaconu, L.; Poznanski, J.; Yu, L.; Rai, P.; Ferriday, R.; et al. Ultralytics/yolov5: v3.0; Zenodo: Geneva, Switzerland, 2020. [Google Scholar]

- Isola, P.; Zhu, J.Y.; Zhou, T.; Efros, A.A. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1125–1134. [Google Scholar]

- Hwang, S.; Park, J.; Kim, N.; Choi, Y.; So Kweon, I. Multispectral pedestrian detection: Benchmark dataset and baseline. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1037–1045. [Google Scholar]

- Narkhede, P.; Walambe, R.; Mandaokar, S.; Chandel, P.; Kotecha, K.; Ghinea, G. Gas detection and identification using multimodal artificial intelligence based sensor fusion. Appl. Syst. Innov. 2021, 4, 3. [Google Scholar] [CrossRef]

- Narkhede, P.; Walambe, R.; Chandel, P.; Mandaokar, S.; Kotecha, K. MultimodalGasData: Multimodal dataset for gas detection and classification. Data 2022, 7, 112. [Google Scholar] [CrossRef]

- Zhang, E.; Zhang, E. Development of A Multimodal Deep Feature Fusion with Ensemble Learning Architecture for Real-Time Gas Leak Detection. In Proceedings of the 2024 IEEE 3rd International Conference on Computing and Machine Intelligence (ICMI), Mt Pleasant, MI, USA, 13–14 April 2024; IEEE: New York, NY, USA, 2024; pp. 1–6. [Google Scholar]

- El Barkani, M.; Benamar, N.; Talei, H.; Bagaa, M. Gas Leakage Detection Using Tiny Machine Learning. Electronics 2024, 13, 4768. [Google Scholar] [CrossRef]

- Sharma, A.; Khullar, V.; Kansal, I.; Chhabra, G.; Arora, P.; Popli, R.; Kumar, R. Gas Detection and Classification Using Multimodal Data Based on Federated Learning. Sensors 2024, 24, 5904. [Google Scholar] [CrossRef]

- Rahate, A.; Mandaokar, S.; Chandel, P.; Walambe, R.; Ramanna, S.; Kotecha, K. Employing multimodal co-learning to evaluate the robustness of sensor fusion for industry 5.0 tasks. Soft Comput. 2023, 27, 4139–4155. [Google Scholar] [CrossRef]

- Attallah, O. Multitask deep learning-based pipeline for gas leakage detection via E-nose and thermal imaging multimodal fusion. Chemosensors 2023, 11, 364. [Google Scholar] [CrossRef]

- Blackstock, D.T. Fundamentals of Physical Acoustics; John Wiley & Sons: Hoboken, NJ, USA, 2000; pp. 2–4. [Google Scholar]

- Rathod, V.T. A review of acoustic impedance matching techniques for piezoelectric sensors and transducers. Sensors 2020, 20, 4051. [Google Scholar] [CrossRef]

- Yang, D.; Hou, N.; Lu, J.; Ji, D. Novel leakage detection by ensemble 1DCNN-VAPSO-SVM in oil and gas pipeline systems. Appl. Soft Comput. 2022, 115, 108212. [Google Scholar] [CrossRef]

- Shimanskiy, S.; Iijima, T.; Naoi, Y. Development of acoustic leak detection and localization methods for inlet piping of Fugen nuclear power plant. J. Nucl. Sci. Technol. 2004, 41, 183–195. [Google Scholar] [CrossRef]

- Tariq, S.; Bakhtawar, B.; Zayed, T. Data-driven application of MEMS-based accelerometers for leak detection in water distribution networks. Sci. Total Environ. 2022, 809, 151110. [Google Scholar] [CrossRef]

- Song, Y.; Li, S. Gas leak detection in galvanised steel pipe with internal flow noise using convolutional neural network. Process Saf. Environ. Prot. 2021, 146, 736–744. [Google Scholar] [CrossRef]

- Zhang, J.; Lian, Z.; Zhou, Z.; Xiong, M.; Lian, M.; Zheng, J. Acoustic method of high-pressure natural gas pipelines leakage detection: Numerical and applications. Int. J. Press. Vessel. Pip. 2021, 194, 104540. [Google Scholar] [CrossRef]

- da Cruz, R.P.; da Silva, F.V.; Fileti, A.M.F. Machine learning and acoustic method applied to leak detection and location in low-pressure gas pipelines. Clean Technol. Environ. Policy 2020, 22, 627–638. [Google Scholar] [CrossRef]

- Ning, F.; Cheng, Z.; Meng, D.; Wei, J. A framework combining acoustic features extraction method and random forest algorithm for gas pipeline leak detection and classification. Appl. Acoust. 2021, 182, 108255. [Google Scholar] [CrossRef]

- Zaman, D.; Tiwari, M.K.; Gupta, A.K.; Sen, D. A review of leakage detection strategies for pressurised pipeline in steady-state. Eng. Fail. Anal. 2020, 109, 104264. [Google Scholar] [CrossRef]

- Ning, F.; Cheng, Z.; Meng, D.; Duan, S.; Wei, J. Enhanced spectrum convolutional neural architecture: An intelligent leak detection method for gas pipeline. Process Saf. Environ. Prot. 2021, 146, 726–735. [Google Scholar] [CrossRef]

- Siddique, M.F.; Ahmad, Z.; Ullah, N.; Kim, J. A hybrid deep learning approach: Integrating short-time fourier transform and continuous wavelet transform for improved pipeline leak detection. Sensors 2023, 23, 8079. [Google Scholar] [CrossRef]

- Saleem, F.; Ahmad, Z.; Kim, J.M. Real-Time Pipeline Leak Detection: A Hybrid Deep Learning Approach Using Acoustic Emission Signals. Appl. Sci. 2024, 15, 185. [Google Scholar] [CrossRef]

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556. [Google Scholar]

- Wehling, R.L. Infrared spectroscopy. In Food Analysis; Springer: Boston, MA, USA, 2010; pp. 407–420. [Google Scholar]

- Hodgkinson, J.; Tatam, R.P. Optical gas sensing: A review. Meas. Sci. Technol. 2012, 24, 012004. [Google Scholar] [CrossRef]

- Olbrycht, R.; Kałuża, M. Optical gas imaging with uncooled thermal imaging camera-impact of warm filters and elevated background temperature. IEEE Trans. Ind. Electron. 2019, 67, 9824–9832. [Google Scholar] [CrossRef]

- Gibson, G.M.; Sun, B.; Edgar, M.P.; Phillips, D.B.; Hempler, N.; Maker, G.T.; Malcolm, G.P.; Padgett, M.J. Real-time imaging of methane gas leaks using a single-pixel camera. Opt. Express 2017, 25, 2998–3005. [Google Scholar] [CrossRef]

- Ilonze, C.; Wang, J.; Ravikumar, A.P.; Zimmerle, D. Methane quantification performance of the quantitative optical gas imaging (QOGI) system using single-blind controlled release assessment. Sensors 2024, 24, 4044. [Google Scholar] [CrossRef]

- Wang, J.; Lin, Y.; Zhao, Q.; Luo, D.; Chen, S.; Chen, W.; Peng, X. Invisible gas detection: An RGB-thermal cross attention network and a new benchmark. Comput. Vis. Image Underst. 2024, 248, 104099. [Google Scholar] [CrossRef]

- Sutskever, I.; Vinyals, O.; Le, Q.V. Sequence to sequence learning with neural networks. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2014; Volume 27. [Google Scholar]

- Guo, W.; Du, Y.; Du, S. LangGas: Introducing Language in Selective Zero-Shot Background Subtraction for Semi-Transparent Gas Leak Detection with a New Dataset. In Proceedings of the Computer Vision and Pattern Recognition Conference, Nashville TN, USA, 11–15 June 2025; pp. 4490–4500. [Google Scholar]

- Yu, H.; Wang, J.; Yang, J.; Huang, K.; Zhou, Y.; Deng, F.; Lu, G.; He, S. GasSeg: A lightweight real-time infrared gas segmentation network for edge devices. Pattern Recognit. 2025, 170, 111931. [Google Scholar] [CrossRef]

- Zivkovic, Z. Improved adaptive Gaussian mixture model for background subtraction. In Proceedings of the 17th International Conference on Pattern Recognition (ICPR 2004), Cambridge, UK, 26 August 2004; IEEE: New York, NY, USA, 2004; Volume 2, pp. 28–31. [Google Scholar]

- Qasim, S.; Khan, K.N.; Yu, M.; Khan, M.S. Performance evaluation of background subtraction techniques for video frames. In Proceedings of the 2021 International Conference on Artificial Intelligence (ICAI), Islamabad, Pakistan, 5–7 April 2021; IEEE: New York, NY, USA, 2021; pp. 102–107. [Google Scholar]

- DeCuir-Gunby, J.T.; Marshall, P.L.; McCulloch, A.W. Developing and using a codebook for the analysis of interview data: An example from a professional development research project. Field Methods 2011, 23, 136–155. [Google Scholar] [CrossRef]

- Wang, Z.; Shen, X.; Sun, J.; Qiu, B.; Yu, Q. Improved Visual Background Extractor Based on Motion Saliency. In Proceedings of the 2020 IEEE International Conference on Information Technology, Big Data and Artificial Intelligence (ICIBA), Chongqing, China, 6–8 November 2020; IEEE: New York, NY, USA, 2020; Volume 1, pp. 209–212. [Google Scholar]

- Fleet, D.; Weiss, Y. Optical flow estimation. In Handbook of Mathematical Models in Computer Vision; Springer: Boston, MA, USA, 2006; pp. 237–257. [Google Scholar]

- Bruhn, A.; Weickert, J.; Schnörr, C. Lucas/Kanade meets Horn/Schunck: Combining local and global optic flow methods. Int. J. Comput. Vis. 2005, 61, 211–231. [Google Scholar] [CrossRef]

- Brox, T.; Bruhn, A.; Papenberg, N.; Weickert, J. High accuracy optical flow estimation based on a theory for warping. In Proceedings of the Computer Vision—ECCV 2004: 8th European Conference on Computer Vision, Prague, Czech Republic, 11–14 May 2004; Proceedings, Part IV 8; Springer: Berlin/Heidelberg, Germany, 2004; pp. 25–36. [Google Scholar]

- Farnebäck, G. Two-frame motion estimation based on polynomial expansion. In Proceedings of the Image Analysis: 13th Scandinavian Conference, SCIA 2003, Halmstad, Sweden, 29 June–2 July 2003; Proceedings 13; Springer: Berlin/Heidelberg, Germany, 2003; pp. 363–370. [Google Scholar]

- Goodfellow, I.; Bengio, Y.; Courville, A.; Bengio, Y. Deep Learning; MIT Press: Cambridge, MA, USA, 2016; Volume 1. [Google Scholar]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2015; Volume 28. [Google Scholar]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788. [Google Scholar]

- Tran, D.; Bourdev, L.; Fergus, R.; Torresani, L.; Paluri, M. Learning spatiotemporal features with 3d convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 4489–4497. [Google Scholar]

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440. [Google Scholar]

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Proceedings, Part III 18; Springer: Cham, Switzerland, 2015; pp. 234–241. [Google Scholar]

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848.

- Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid scene parsing network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2881–2890.

- Romera, E.; Alvarez, J.M.; Bergasa, L.M.; Arroyo, R. ERFNet: Efficient residual factorized ConvNet for real-time semantic segmentation. IEEE Trans. Intell. Transp. Syst. 2017, 19, 263–272.

- Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818.

- Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4510–4520.

- Yang, M.; Yu, K.; Zhang, C.; Li, Z.; Yang, K. DenseASPP for semantic segmentation in street scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 3684–3692.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16 × 16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Siddiqui, M.F.H.; Javaid, A.Y. A multimodal facial emotion recognition framework through the fusion of speech with visible and infrared images. Multimodal Technol. Interact. 2020, 4, 46.

- Mehta, S.; Rastegari, M. MobileViT: Light-weight, general-purpose, and mobile-friendly vision transformer. arXiv 2021, arXiv:2110.02178.

- Tan, M.; Le, Q. EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning (PMLR), Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.

- Woo, S.; Debnath, S.; Hu, R.; Chen, X.; Liu, Z.; Kweon, I.S.; Xie, S. ConvNeXt V2: Co-designing and scaling ConvNets with masked autoencoders. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 16133–16142.

- Tang, Q.; Liang, J.; Zhu, F. A comparative review on multi-modal sensors fusion based on deep learning. Signal Process. 2023, 213, 109165.

- Cui, C.; Yang, H.; Wang, Y.; Zhao, S.; Asad, Z.; Coburn, L.A.; Wilson, K.T.; Landman, B.A.; Huo, Y. Deep multimodal fusion of image and non-image data in disease diagnosis and prognosis: A review. Prog. Biomed. Eng. 2023, 5, 022001.

- Zhao, F.; Zhang, C.; Geng, B. Deep multimodal data fusion. ACM Comput. Surv. 2024, 56, 216.

- Schmidt, R.M. Recurrent neural networks (RNNs): A gentle introduction and overview. arXiv 2019, arXiv:1912.05911.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2017; Volume 30.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Dai, J.; Qi, H.; Xiong, Y.; Li, Y.; Zhang, G.; Hu, H.; Wei, Y. Deformable convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 764–773.

- Chen, Y.; Dai, X.; Liu, M.; Chen, D.; Yuan, L.; Liu, Z. Dynamic convolution: Attention over convolution kernels. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11030–11039.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Yu, F.; Koltun, V. Multi-scale context aggregation by dilated convolutions. arXiv 2015, arXiv:1511.07122.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

- Wang, X.; Girshick, R.; Gupta, A.; He, K. Non-local neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7794–7803.

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- Wang, Z.; Oates, T. Imaging time-series to improve classification and imputation. arXiv 2015, arXiv:1506.00327.

- Liang, J.; Liang, S.; Ma, L.; Zhang, H.; Dai, J.; Zhou, H. Leak detection for natural gas gathering pipeline using spatio-temporal fusion of practical operation data. Eng. Appl. Artif. Intell. 2024, 133, 108360.

- Wang, Q.; Sun, Y.; Jing, Y.; Pan, X.; Xing, M. YOLOGAS: An Intelligent Gas Leakage Source Detection Method Based on Optical Gas Imaging. IEEE Sens. J. 2024, 24, 35621–35627.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

- Tan, M.; Le, Q. EfficientNetV2: Smaller models and faster training. In Proceedings of the International Conference on Machine Learning (PMLR), Virtual, 18–24 July 2021; pp. 10096–10106.

- Ahmad, S.; Ahmad, Z.; Kim, C.H.; Kim, J.M. A method for pipeline leak detection based on acoustic imaging and deep learning. Sensors 2022, 22, 1562.

- Norton, S.J. Theory of Acoustic Imaging; Stanford University: Stanford, CA, USA, 1977; pp. 1–2.

- Ling, K.; Han, G.; Ni, X.; Xu, C.; He, J.; Pei, P.; Ge, J. A new method for leak detection in gas pipelines. Oil Gas Facil. 2015, 4, 97–106.

- Ukil, A.; Libo, W.; Ai, G. Leak detection in natural gas distribution pipeline using distributed temperature sensing. In Proceedings of the IECON 2016—42nd Annual Conference of the IEEE Industrial Electronics Society, Florence, Italy, 23–26 October 2016; IEEE: New York, NY, USA, 2016; pp. 417–422.

- Zhang, X.; Shi, J.; Huang, X.; Xiao, F.; Yang, M.; Huang, J.; Yin, X.; Usmani, A.S.; Chen, G. Towards deep probabilistic graph neural network for natural gas leak detection and localization without labeled anomaly data. Expert Syst. Appl. 2023, 231, 120542.

| Method | Characteristic | Temporal/Frequency Resolution | Complexity | Real-Time Capability | Leak Size (mm)/Leak Rate |
|---|---|---|---|---|---|
| STFT [20,59,64,65] | Linear time–frequency analysis | Low/Low | Low | Yes, 1.04 s/sample [64] | |
| WT [14,15,53,66] | Multiscale analysis | Dynamic time–frequency resolution; scale reciprocity | Medium | No; depends on hardware acceleration, with implementation potential [14,15] | |
| FRFT [18] | Rotated time–frequency plane | Medium/Medium | Medium | Yes, but requires GPU acceleration | 1.006–1.288 L/min |
| VMD [10,11] | Constrained optimization | High/High | High | No; depends on FPGA acceleration for feature extraction | Leak or not |
| MTF [19] | Time-series-to-probability matrix | - | Low | Yes, 17.97 ms/sample | Leak or not |
| S-Transform [20] | Adaptive-window STFT | Dynamic time–frequency resolution; frequency reciprocity | High | No; requires the complete signal segment to generate time–frequency representations | |
| PR [20] | Phase-space visualization | - | High | No; computational complexity makes processing time grow exponentially with signal length | |
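
Among the transforms compared above, MTF [19] is the one listed as both low-complexity and real-time capable. To make the "time-series-to-probability matrix" idea concrete, the following is a minimal pure-Python sketch of the Markov transition field as defined by Wang and Oates: quantile binning, a first-order transition matrix, then expansion of that matrix over every pair of time indices. It is not the implementation used in [19]; the bin count `n_bins`, the quantile cut rule, the function names, and the toy frame values are illustrative assumptions, and the downsampling usually applied to long signals is omitted.

```python
from bisect import bisect_right


def quantile_bins(series, n_bins):
    """Assign each sample to one of n_bins empirical-quantile bins (0 .. n_bins-1)."""
    ordered = sorted(series)
    n = len(ordered)
    # n_bins - 1 cut points taken at the empirical k/n_bins quantiles (assumed rule)
    cuts = [ordered[(k * n) // n_bins] for k in range(1, n_bins)]
    return [bisect_right(cuts, x) for x in series]


def markov_transition_matrix(bins, n_bins):
    """First-order transition probabilities W[i][j] = P(next bin = j | current bin = i)."""
    counts = [[0] * n_bins for _ in range(n_bins)]
    for current, nxt in zip(bins, bins[1:]):
        counts[current][nxt] += 1
    matrix = []
    for row in counts:
        total = sum(row)
        # A bin with no outgoing transition keeps an all-zero row
        matrix.append([c / total if total else 0.0 for c in row])
    return matrix


def markov_transition_field(series, n_bins=4):
    """n x n field: M[i][j] = W[bin(x_i)][bin(x_j)] for every pair of time indices."""
    bins = quantile_bins(series, n_bins)
    w = markov_transition_matrix(bins, n_bins)
    return [[w[bi][bj] for bj in bins] for bi in bins]


if __name__ == "__main__":
    # Hypothetical short acoustic frame (made-up values for illustration)
    frame = [0.0, 0.8, -0.5, 1.2, 0.1, -0.9, 0.7, 0.3]
    for row in markov_transition_field(frame, n_bins=4):
        print(" ".join(f"{v:.2f}" for v in row))
```

Because the field is n × n, its memory cost grows quadratically with frame length, which is why long acoustic frames are typically aggregated before the transform when the result is fed to an image classifier.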

| Dataset | Type | Description | Ref. | Task | Accuracy | mAP50 | IoU | Model Size/Speed | Edge Device |
|---|---|---|---|---|---|---|---|---|---|
| GasVid | IR videos | 669,600 frames of eight size classes | [28] | Binary classification | 91–99% | - | - | - | - |
| GasVid | | | [30] | Eight-class classification | 39.1% | - | - | - | - |
| Gas-DB | IR/RGB images | Over 1000 RGB-T images | [74] | Semantic segmentation | - | - | 56.52% | - | - |
| IIG | IR images | 11,186 images with bounding boxes | [37] | Object detection | - | 82.7% | - | 7.7 M, 81.75 FPS | Snapdragon 865 (Qualcomm, San Diego, CA, USA)/IMX6Quad (NXP, Eindhoven, The Netherlands) |
| SimGas | IR videos | Synthetically generated, 12,000 frames with pixel-level segmentation masks | [75] | Semantic segmentation | - | - | 69% | 0.5 FPS | NVIDIA RTX 3090 (NVIDIA, Santa Clara, CA, USA) |
| IGS | IR images | 6426 images | [76] | Semantic segmentation | - | - | 90.68% (mIoU) | 6.79 M, 62.1 FPS | IMX6Quad (NXP, Eindhoven, The Netherlands) |
| CR/MR | IR images | MR: ~6800 images; CR: ~340 images | [38] | Semantic segmentation | - | - | 88.56% (mIoU) | 3.65 M, 97.45 FPS | CPU: Intel Core i9-11900F (2.50 GHz/32 GB) (Intel, Santa Clara, CA, USA); GPU: NVIDIA RTX 3090 |
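
The segmentation results in the dataset table are reported as IoU or mIoU. As a reminder of how these overlap metrics are computed, here is a minimal pure-Python sketch for integer label masks, assuming 0 = background and 1 = gas plume; the function names and the 2 × 2 masks are hypothetical. Skipping classes that are absent from both masks when averaging is one common convention, not necessarily the one used in [38], [74], [75], or [76]; benchmark code often accumulates intersections and unions over the whole test set rather than per image, so values from different papers may not be directly comparable.

```python
import math


def class_iou(pred, target, cls):
    """IoU of one class between two equally sized 2-D label masks (lists of lists)."""
    intersection = union = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            in_pred, in_target = p == cls, t == cls
            intersection += int(in_pred and in_target)
            union += int(in_pred or in_target)
    # Class absent from both masks: IoU is undefined, signalled with NaN
    return intersection / union if union else math.nan


def mean_iou(pred, target, num_classes):
    """mIoU: average of per-class IoU, skipping classes absent from both masks."""
    scores = [class_iou(pred, target, c) for c in range(num_classes)]
    valid = [s for s in scores if not math.isnan(s)]
    return sum(valid) / len(valid) if valid else math.nan


if __name__ == "__main__":
    # 0 = background, 1 = gas plume (hypothetical 2 x 2 masks)
    prediction = [[0, 1], [1, 1]]
    ground_truth = [[0, 1], [0, 1]]
    print(class_iou(prediction, ground_truth, 1))  # gas-class IoU = 2/3
    print(mean_iou(prediction, ground_truth, 2))   # (1/2 + 2/3) / 2
```

For a binary gas/background task, the single "IoU" figure usually refers to the gas class alone, whereas mIoU also averages in the background class, which tends to inflate the score because background pixels dominate infrared frames.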

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gong, Y.; Bao, C.; He, Z.; Jian, Y.; Wang, X.; Huang, H.; Song, X. A Review on Gas Pipeline Leak Detection: Acoustic-Based, OGI-Based, and Multimodal Fusion Methods. Information 2025, 16, 731. https://doi.org/10.3390/info16090731
