Education, Neuroscience, and Technology: A Review of Applied Models

Abstract

1. Introduction

2. Materials and Methods

- P (Participants): students and teachers in formal education (primary, secondary, higher education, and teacher training);

- I (Intervention): implementation of neuroeducational models in teaching;

- C (Comparison): traditional teaching methods vs. neuroeducation-based approaches in different educational populations;

- O (Outcomes): impact on learning, conceptual understanding, academic performance, and teacher training and perception.

2.1. Study Selection Criteria

2.2. Search Strategy

2.2.1. PubMed

2.2.2. Web of Science

2.2.3. LILACS

2.2.4. ScienceDirect

2.3. Inclusion and Exclusion Criteria

2.4. Data Extraction

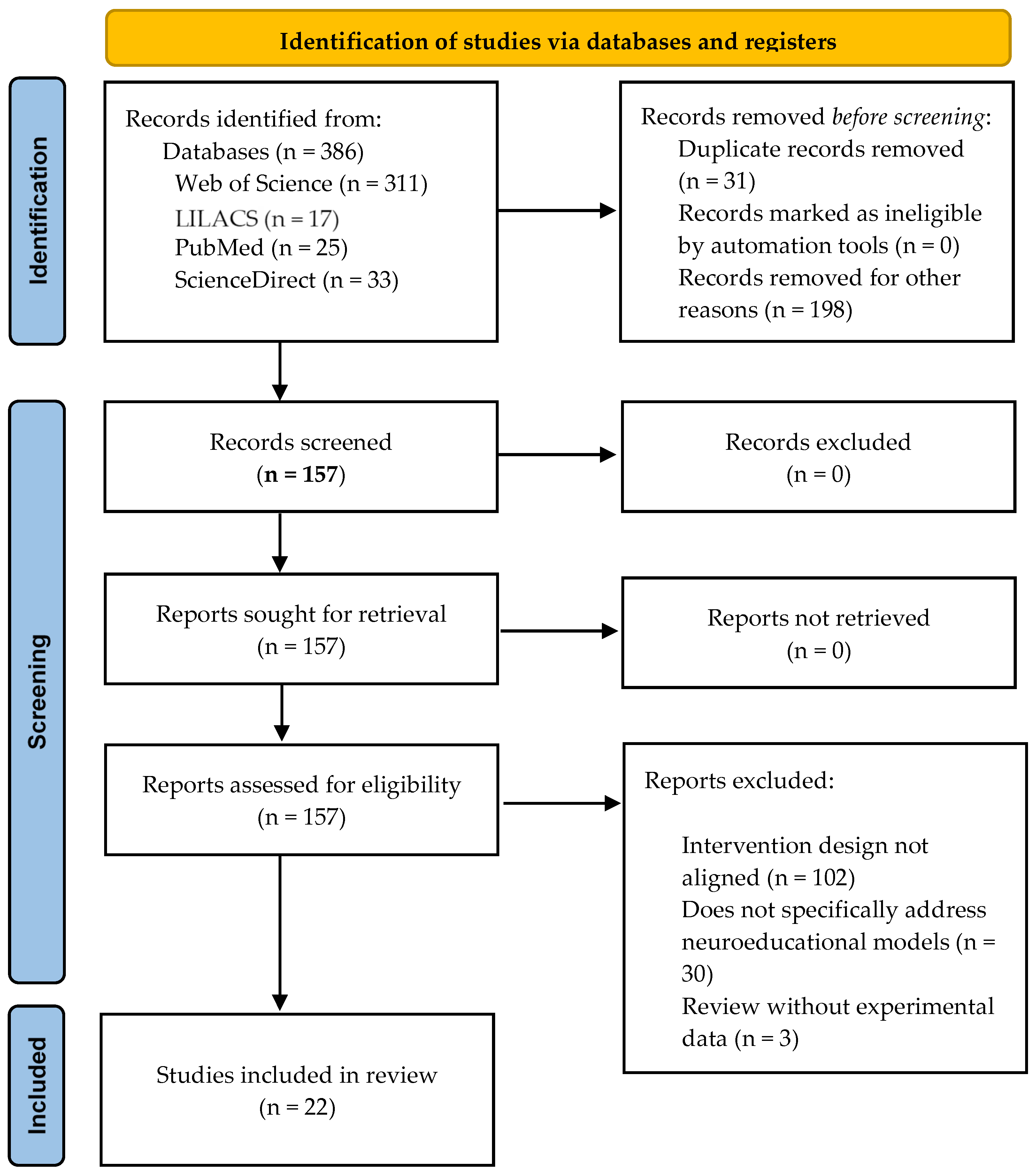

2.5. Presentation of Results: Adherence to the PRISMA Quality Initiative

2.6. Quality Assessment

3. Results

3.1. Study Selection and Data Extraction Process

3.2. Study Characteristics: Summary of Results

3.3. Relationship Between Neuroeducational Interventions and Learning Outcomes

4. Discussion

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Immordino-Yang, M.H.; Damasio, A. We feel, therefore we learn: The relevance of affective and social neuroscience to education. Mind Brain Educ. 2007, 1, 3–10.

- Tokuhama-Espinosa, T. Neuroeducación: Solo se Puede Aprender Aquello que se Ama, 3rd ed.; Editorial Kairós: Barcelona, Spain, 2021.

- Doidge, N. The Brain’s Way of Healing: Remarkable Discoveries and Recoveries from the Frontiers of Neuroplasticity; Penguin Life: New York, NY, USA, 2015.

- Gazzaniga, M.S. The Cognitive Neuroscience of Mind: A Tribute to Michael S. Gazzaniga; MIT Press: Cambridge, MA, USA, 2020.

- Maguire, E.A. Neuroeducation: The bridge between neuroscience and education. In Neuroscience in Education; Della Sala, S., Anderson, M., Eds.; Oxford University Press: Oxford, UK, 2018; pp. 21–36.

- Howard-Jones, P.A. Neuroscience and education: Myths and messages. Nat. Rev. Neurosci. 2014, 15, 817–824.

- Dekker, S.; Lee, N.C.; Howard-Jones, P.; Jolles, J. Neuromyths in education: Prevalence and predictors of misconceptions among teachers. Front. Psychol. 2012, 3, 429.

- Ansari, D.; De Smedt, B.; Grabner, R.H. Neuroeducation—A critical overview of an emerging field. Neuroethics 2017, 10, 105–117.

- Sousa, D.A. How the Brain Learns; Corwin Press: Thousand Oaks, CA, USA, 2017.

- Immordino-Yang, M.H. Emotions, Learning, and the Brain: Exploring the Educational Implications of Affective Neuroscience; W. W. Norton & Company: New York, NY, USA, 2016.

- Hattie, J.; Yates, G. Visible Learning and the Science of How We Learn; Routledge: New York, NY, USA, 2014.

- Thomas, M.S.C.; Ansari, D.; Knowland, V.C.P. Annual research review: Educational neuroscience—Progress and prospects. J. Child Psychol. Psychiatry 2019, 60, 477–492.

- Mayer, R.E. The Cambridge Handbook of Multimedia Learning, 3rd ed.; Cambridge University Press: Cambridge, UK, 2020.

- Luckin, R.; Holmes, W.; Griffiths, M.; Forcier, L.B. Artificial Intelligence and Education: Promise and Implications for Teaching and Learning; UNESCO: Paris, France, 2021; Available online: https://unesdoc.unesco.org/ark:/48223/pf0000377071 (accessed on 10 July 2020).

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Moher, D. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

- Dhungel, S.; Mahat, B.; Limbu, P.; Thapa, S.; Awasthi, J.R.; Thapaliya, S.; Jha, M.K.; Kunwar, A.J. Advantage of neuroeducation in managing mass psychogenic illness among rural school children in Nepal. IBRO Neurosci. Rep. 2023, 14, 435–440.

- Ballesta-Claver, J.; Sosa Medrano, I.; Gómez Pérez, I.A.; Ayllón Blanco, M.F. Propuesta neuroeducativa para un aprendizaje tecno-activo de la enseñanza de las ciencias: Un cambio universitario necesario. Rev. Electrón. Interuniv. Form. Profr. 2024, 27, 35–50.

- Zheng, K.; Shen, Z.; Chen, Z.; Che, C.; Zhu, H. Application of AI-empowered scenario-based simulation teaching mode in cardiovascular disease education. BMC Med. Educ. 2024, 24, 1003.

- Wang, H.; Zhang, W.; Kong, W.; Zhang, G.; Pu, H.; Wang, Y.; Ye, L.W.; Shang, L. The effects of ‘small private online course + flipped classroom’ teaching on job competency of nuclear medicine training trainees. BMC Med. Educ. 2024, 24, 1542.

- Dehghani, A.; Fakhravari, F.; Hojat, M. The effect of the team members teaching design vs. regular lectures method on the self-efficacy of multiple sclerosis patients in Iran. Randomised Controlled Trial. Investig. Educ. Enferm. 2024, 42, e13.

- Syväoja, H.J.; Sneck, S.; Kukko, T.; Asunta, P.; Räsänen, P.; Viholainen, H.; Kulmala, J.; Hakonen, H.; Tammelin, T.H. Effects of physically active maths lessons on children’s maths performance and maths-related affective factors: Multi-arm cluster randomized controlled trial. Br. J. Educ. Psychol. 2024, 94, 839–861.

- Drollinger-Vetter, B.; Buff, A.; Lipowsky, F.; Philipp, K.; Vogel, S. Fostering pedagogical content knowledge on “probability” in preservice primary teachers within formal teacher education: A longitudinal experimental field study. Teach. Teach. Educ. 2025, 105, 104953.

- Vetter, M.; O’Connor, H.T.; O’Dwyer, N.; Chau, J.; Orr, R. ‘Maths on the move’: Effectiveness of physically-active lessons for learning maths and increasing physical activity in primary school students. J. Sci. Med. Sport 2020, 23, 735–739.

- Rodríguez-López, E.S.; Calvo-Moreno, S.O.; Cimadevilla Fernández-Pola, E.; Fernández-Rodríguez, T.; Guodemar-Pérez, J.; Ruiz-López, M. Aprendizaje de la anatomía musculoesquelética a través de las nuevas tecnologías: Ensayo clínico aleatorizado. Rev. Lat. Am. Enferm. 2020, 28, e3237.

- Coelho, M.D.M.F.; Miranda, K.C.L.; Melo, R.C.D.O.; Gomes, L.F.D.S.; Monteiro, A.R.M.; Moreira, T.M.M. Use of a therapeutic communication application in the nursing undergraduate program: Randomized clinical trial. Rev. Lat. Am. Enferm. 2021, 29, e3456.

- Brügge, E.; Ricchizzi, S.; Arenbeck, M.; Keller, M.N.; Schur, L.; Stummer, W.; Holling, M.; Lu, M.H.; Darici, D. Large language models improve clinical decision making of medical students through patient simulation and structured feedback: A randomized controlled trial. BMC Med. Educ. 2024, 24, 1391.

- Kliziene, I.; Cizauskas, G.; Sipaviciene, S.; Aleksandraviciene, R.; Zaicenkoviene, K. Effects of a physical education program on physical activity and emotional well-being among primary school children. Int. J. Environ. Res. Public Health 2021, 18, 7536.

- Di Lieto, M.C.; Pecini, C.; Castro, E.; Inguaggiato, E.; Cecchi, F.; Dario, P.; Cioni, G.; Sgandurra, G. Empowering executive functions in 5- and 6-year-old typically developing children through educational robotics: An RCT study. Front. Psychol. 2020, 10, 3084.

- Faure, T.; Weyers, I.; Voltmer, J.B.; Westermann, J.; Voltmer, E. Test-reduced teaching for stimulation of intrinsic motivation (TRUST): A randomized controlled intervention study. BMC Med. Educ. 2024, 24, 718.

- Mugo, A.M.; Nyaga, M.N.; Ndwiga, Z.N.; Atitwa, E.B. Evaluating learning outcomes of Christian religious education learners: A comparison of constructive simulation and conventional method. Heliyon 2024, 10, e32632.

- Zhang, Y.; Yang, X.; Sun, X. The reciprocal relationship among Chinese senior secondary students’ intrinsic and extrinsic motivation and cognitive engagement in learning mathematics: A three-wave longitudinal study. ZDM Math. Educ. 2023, 55, 399–412.

- Zhao, W.; Cao, Y.; Hu, L.; Lu, C.; Liu, G.; Gong, M.; He, J. A randomized controlled trial comparison of PTEBL and traditional teaching methods in “Stop the Bleed” training. BMC Med. Educ. 2024, 24, 462.

- Hirt, C.N.; Eberli, T.D.; Jud, J.T.; Rosenthal, A.; Karlen, Y. One step ahead: Effects of a professional development program on teachers’ professional competencies in self-regulated learning. Teach. Teach. Educ. 2025, 159, 104977.

- Wang, L.; Zhao, Y.; Wang, P.; Qian, A.; Hong, H.; Xu, S. Application of clinical thinking training system based on entrustable professional activities in emergency teaching. BMC Med. Educ. 2024, 24, 1294.

- Channegowda, N.Y.; Pai, D.R.; Manivasakan, S. Simulation-based teaching versus traditional small group teaching for first-year medical students among high and low scorers in respiratory physiology: A randomized controlled trial. J. Educ. Eval. Health Prof. 2025, 22, 8.

- Chen, C.-Y.; Shi, T.-L.; Wang, R.-Y.; Li, N.; Hao, Y.-H.; Zhang, J.-L.; Tang, M.; Liu, S.; Qin, G.-M.; Mi, W. Implementation and evaluation of the three action teaching model with learning plan guidance in preventive medicine course. Front. Psychol. 2024, 15, 1508432.

- Thomas, B.H.; Ciliska, D.; Dobbins, M.; Micucci, S. A process for systematically reviewing the literature: Providing the research evidence for public health nursing interventions. Worldviews Evid. Based Nurs. 2004, 1, 176–184.

| Inclusion Criteria | Exclusion Criteria |
|---|---|
| Studies published between 2020 and 2025. | Studies published prior to 2020. |
| Open-access or freely accessible full-text studies. | Articles without full-text access. |
| Experimental studies, case studies, or meta-analyses. | Research focused solely on clinical contexts without educational application. |
| Focus on neuroeducational models applied in formal educational settings (schools, universities, and teacher training). | Studies discussing neuromyths unrelated to applied neuroeducation. |
| Methodologies including neuroscientific measures (e.g., neuroimaging and cognitive assessments) or practical applications of neuroscience-based models in education. | Studies lacking empirical evidence on neuroeducational model implementation. |
| Studies written in English, Spanish, or Portuguese (languages in which the review team is proficient). | Studies published in other languages due to linguistic limitations of the review team. |
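As an illustration only, the criteria in the table above could be applied to a screening log along the following lines. This is a minimal sketch, not the procedure used in the review: the `Record` structure, its field names, and the wording of the exclusion reasons are all hypothetical, and the neuromyth criterion is omitted because it requires a reviewer's judgment rather than a simple check.

```python
from dataclasses import dataclass
from typing import List

# Values taken from the inclusion criteria; the lower-case spelling is an assumption.
ALLOWED_LANGUAGES = {"english", "spanish", "portuguese"}
ALLOWED_DESIGNS = {"experimental", "case study", "meta-analysis"}


@dataclass
class Record:
    """Hypothetical screening record; field names are illustrative only."""
    year: int
    language: str
    design: str
    full_text_available: bool
    formal_education_setting: bool
    empirical_evidence: bool


def exclusion_reasons(record: Record) -> List[str]:
    """Return every criterion the record fails; an empty list means eligible."""
    reasons = []
    if not 2020 <= record.year <= 2025:
        reasons.append("published outside 2020-2025")
    if not record.full_text_available:
        reasons.append("no full-text access")
    if record.design.lower() not in ALLOWED_DESIGNS:
        reasons.append("study design not eligible")
    if not record.formal_education_setting:
        reasons.append("no formal educational application")
    if not record.empirical_evidence:
        reasons.append("no empirical evidence on model implementation")
    if record.language.lower() not in ALLOWED_LANGUAGES:
        reasons.append("language outside English, Spanish, or Portuguese")
    return reasons


# Made-up example record, not a study from the review.
candidate = Record(2019, "German", "experimental", True, True, True)
print(exclusion_reasons(candidate))
```

Returning the full list of failed criteria, rather than a single yes/no flag, keeps the reason for each exclusion available when the selection flow is reported.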

| Author (Year) | Country | Study Design | Comparisons and Intervention Characteristics | Study Objectives | Participants | Measured Variables and Scales | Intervention | Results |

|---|---|---|---|---|---|---|---|---|

| Dhungel et al. (2023) [16] | Nepal | Quasi-experimental pre-test–post-test design | Comparison between students from schools affected and not affected by mass hysteria; 4-week intervention including educational theater, anatomical models, and classes on neurology and stress; data collected pre- and post-intervention using questionnaires | To evaluate the impact of neuroeducational tools on awareness of mass hysteria | N = 234 (female students, grades 6–10) | Structured questionnaire developed by the research team to assess knowledge of the nervous system and stress. Content validated by a panel of neuroscience and psychology experts; no formal psychometric testing (e.g., reliability or statistical validity) was conducted. | 4-week intervention (1 session/week); included educational theater, anatomical models, and classes on the nervous system and stress; data collected pre- and post-intervention. | Improved awareness of stress, but no significant change in understanding of the nervous system. |

| Ballesta Claver et al. (2024) [17] | Spain | Pre-experimental pre-test–post-test design | Intervention applied to a single group without a control group; the same participants were assessed before and after the implementation of a neuroeducational intervention in a university teacher training setting | To evaluate the impact of neuroeducation in higher education teaching | N = 77 (prospective primary school teachers) | Two ad hoc content questionnaires developed by the research team were used, along with a validated Likert-type neuroeducational scale (Cronbach’s α = 0.968; KMO = 0.934) measuring dimensions related to learning, attention, emotions, and the development of executive functions. The scale was specifically designed for this study and does not have a commercial or previously published version. | Neuroeducational intervention with a constructivist approach, focused on active learning and the use of neuroeducational resources (technology, visual aids, debates, etc.). Duration: 6 weeks. Data collected before and after the intervention. | A significant effect on learning was observed, with an average 27% increase in acquired knowledge. |

| Zheng et al. (2024) [18] | China | Experimental | Comparison between scenario-based simulation teaching (experimental group) and traditional cardiology instruction (control group) | To evaluate the impact of simulation-based teaching on learning about cardiovascular diseases | N = 66 medical students: control group (N = 32); experimental group (N = 34) | Performance evaluations in cardiovascular diagnosis, focusing on measuring the accuracy of diagnoses made by medical students and assessing their ability to apply knowledge in practical scenarios; specifically, post-tests, Mini-CEX, clinical critical thinking scale, satisfaction surveys (experimental group), and semi-structured interviews. Rubrics are not specified, but standardized and qualitative mixed instruments were used. | Scenario-based simulation teaching using AI. Experimental group: AI-supported simulation in cardiovascular diagnostics. Control group: traditional instruction (no simulation). Intervention duration: 5 weeks. | Simulation significantly improved diagnostic performance, with a 15% increase in diagnostic accuracy. |

| Wang et al. (2024) [19] | China | Experimental | Comparison between a small private online course (SPOC) + flipped classroom (experimental group) and traditional teaching (control group) | To evaluate the impact of the “small private online course + flipped classroom” model on professional competencies in nuclear medicine | N = 103 first-year residents: experimental group (N = 52); control group (N = 51) | Questionnaires (pre- and post-class, 20 questions, maximum score: 100); final exam (theoretical and practical, maximum score: 100). Course satisfaction: 5-domain questionnaire. Course effectiveness: assessment of 6 competency and skill areas. Knowledge assessment: objective questionnaires before each class (20 questions), theoretical and practical tests after each session, and a final exam (50% theoretical/50% practical) on equipment handling. Performance was assessed using standardized faculty criteria. Satisfaction and perceived course effectiveness were measured in areas such as professional skills, patient care, communication, teamwork, teaching, and learning. Questionnaire validation is not reported, though detailed content tables (S3 and S4) are referenced. | Experimental group: blended learning combining a small private online course (SPOC) with a flipped classroom approach, designed to improve workplace competencies among nuclear medicine residents. Participants accessed online content before attending in-person sessions to apply their knowledge. Control group: traditional lecture-based instruction. Intervention duration: 10 weeks. | The implementation of the blended learning model (SPOC + flipped classroom) significantly improved residents’ workplace competencies, with a 22% increase in performance scores. Results also showed higher overall satisfaction and perceived educational effectiveness among residents. |

| Dehghani et al. (2024) [20] | Iran | Randomized controlled trial (RCT) | Comparison between team-based teaching, lecture-based teaching, and a control group in patients with multiple sclerosis | To evaluate the impact of team-based teaching on patients’ self-efficacy | N = 48 patients with multiple sclerosis, randomly assigned to three groups: TMTD (n = 16), lecture-based (n = 16), and control (n = 16) | Rigby et al.’s validated Self-Efficacy Scale. Measured variables: self-efficacy related to daily activities, motor skills, participation in health-related decision making, and emotional regulation. | All three groups received six training sessions over a period of 12 weeks (two sessions per week). TMTD (team members teaching design) group: Intervention based on teamwork, including group dynamics, interactive discussions, and collaborative problem-solving, aligned with neuroeducational models that promote social collaboration and activate brain areas associated with memory and decision making. Lecture-based group: Traditional lectures focused on individual, passive learning, with no significant interaction between participants. Control group: Received no educational intervention. Intervention duration: 12 weeks. | Team-based teaching improved patient self-efficacy by 21%. Significant improvements were observed across all self-efficacy dimensions in the intervention groups, including health decision making, motor skills, and emotional regulation. |

| Syväoja et al. (2024) [21] | Finland | Randomized controlled trial (RCT) | Comparison between physically active math instruction (experimental group) and traditional teaching methods (control group) | To evaluate the impact of physical activity during math instruction | Students with signed parental consent (N = 397, mean age: 9.3 years): experimental group: n = 265; control group: n = 132 | Curriculum-Based Mathematics Test: To assess mathematical performance, an adapted test battery was used, including tasks on multiplication, division, geometry, time, column methods, and problem-solving. Results were measured by the total number of correct answers. Self-Reported Questionnaire on Affective Factors Related to Mathematics: To measure enjoyment and self-perception, a modified version of the Fennema–Sherman Mathematics Attitude Scale was used, adapted for Finnish third-grade students, employing a 5-point Likert scale. The Modified Abbreviated Math Anxiety Scale (mAMAS) was used to assess math anxiety in both learning and testing situations. Motor Skills Assessment: To measure baseline motor skills, validated batteries such as the Körperkoordinationstest für Kinder (KTK), the Movement Assessment Battery for Children, Second Edition (MABC-2), and the Eurofit protocol were used. Educational Support Needs Questionnaire: Teachers completed a questionnaire to assess students’ need for educational support (intensified or special), which was included as a confounding covariate. Physical Activity (PA) Monitoring: An accelerometer was used to measure the amount and intensity of physical activity during math lessons in a subsample of 172 children. Data Imputation and Statistical Analysis: Linear mixed-effects models (LMEs), adjusted for gender, educational support needs, and arithmetic fluency, were used for data analysis. Multiple imputation (MI) models were also applied to manage missing data. All instruments used were validated and widely applied in previous educational research. No self-developed instruments were reported, as the scales and tests used were adaptations or established tools. | Experimental group: physically active math lessons based on neuroeducational models. Control group: traditional teaching without physical activity. Intervention duration: 12 weeks. | The active teaching group showed a significant improvement in math performance (+14%) and motivation (+0.8 on the Likert scale). No improvements were observed in the control group. |

| Drollinger-Vetter et al. (2025) [22] | Switzerland | Pre-test–post-test experimental study | Evaluation of changes in pre-service teachers’ pedagogical content knowledge (PCK) in probability; single experimental group | To assess the impact of formal education on the development of pedagogical content knowledge in probability | N = 512 (pre-service primary school teachers) | Two main instruments were used to assess pedagogical content knowledge (PCK) in probability: Mathematical Knowledge Questionnaire: This instrument assessed conceptual mastery of probability. While it was designed specifically for this study, no detailed information about its validation process was provided. Pedagogical Content Knowledge Scale (PCK Scale): This scale measured teachers’ ability to effectively teach probabilistic concepts. It was adapted from previously validated tools used in similar contexts, though reliability or validity metrics for this study were not specified. | Mathematics education training program with a focus on probability over the course of one academic year. The intervention is considered neuroeducational, as it actively stimulates cognition and neural plasticity through problem solving, promotes meaningful learning by linking new knowledge to prior experience, fosters social interaction that activates brain regions associated with motivation and cognitive processing, and uses spaced repetition to support long-term memory consolidation. Intervention duration: one academic year. | Significant improvement in pedagogical content knowledge, with better performance in probabilistic problem-solving tasks. |

| Vetter et al. (2020) [23] | Australia | Randomized controlled trial (RCT); pre-test–post-test design | Comparison between two groups: physically active math lessons (playground); traditional classroom-based math lessons (classroom) | To evaluate the effectiveness of physically active lessons for learning mathematics and increasing physical activity among primary school students | N = 172 primary school students: experimental group: n = 86; control group: n = 86 | Multiplication test (designed by the authors), general mathematics test (standardized). Physical activity was measured using accelerometers. | 3 × 30-min lessons per week for 6 weeks, delivered either in a physically active environment (playground) or traditional classroom setting (classroom). Data were collected before and after the intervention. The intervention leveraged the principle of neuroplasticity, whereby physical activity may foster brain connectivity that facilitates learning. Additionally, integrating movement with academic content (in this case, mathematics) is known to enhance intrinsic motivation and attention, key factors in the neuroscience of learning. | Significant improvement in multiplication scores in the playground group. No significant differences were found in general math performance. Total physical activity and moderate-to-vigorous physical activity levels were significantly higher in the playground group. |

| Rodríguez-López et al. (2020) [24] | Brazil | Randomized clinical trial | Comparison between traditional teaching (control group) and teaching using interactive technologies (augmented reality and 3D models) (experimental group) | To evaluate the effectiveness of interactive technologies in anatomy education | N = 62 physiotherapy students: control group: N = 43; experimental group: N = 19 | Theoretical test (knowledge), practical test (application), and perception questionnaire (satisfaction and motivation). Variables measured: academic performance and learning perception. All assessments were developed by the authors; no formal statistical validation was reported. | The experimental group used augmented reality applications and digital 3D models to study musculoskeletal anatomy during lessons, while the control group followed traditional lecture-based instruction. Intervention duration: 8 weeks. | The experimental group achieved higher scores in both theoretical and practical tests, and reported greater satisfaction and motivation toward learning. |

| Coelho et al. (2021) [25] | Brazil | Randomized clinical trial | Comparison between an experimental group using an educational mobile app on therapeutic communication and a control group receiving traditional instruction without the app | To evaluate the effect of using a mobile app on communication skills in nursing education | N = 68 nursing students (randomized): analyzed: N = 60 (30 in the experimental group and 30 in the control group) | Questionário de Conhecimento sobre Comunicação Terapêutica (QCCoT) and the Therapeutic Communication Skills Self-Assessment Scale. Measured variables: theoretical knowledge and perceived communication skills. | The intervention involved the use of an interactive mobile application specifically developed to improve therapeutic communication. The app included clinical simulations, practical exercises, real-case analysis, immediate feedback, and self-assessment modules. It was used over a 6-week period as a complement to theoretical instruction. | The experimental group showed a significant improvement in theoretical knowledge and self-assessed communication skills compared to the control group. |

| Brügge et al. (2024) [26] | USA | Randomized clinical trial | Comparison between an experimental group using large language models (LLMs) during clinical simulations and a control group completing clinical simulations without LLM assistance | To evaluate the impact of large language models (LLMs) on clinical decision making in medical students using simulated patient interviews and structured feedback | N = 21 participants who completed the study: control group: 11 participants; feedback group (experimental): 10 participants | Clinical Reasoning Indicator—History-Taking Inventory (CRI-HTI): assessed clinical decision making based on simulated history-taking interactions. | Control group: participated in simulated patient interviews without feedback. Experimental group: participated in simulated history-taking exercises with AI-generated performance feedback. Intervention duration: 3 months, 4 sessions. | The feedback group showed significantly greater improvement in scores compared to the control group, particularly in contextualization and information gathering during simulated clinical interviews. |

| Kliziene et al. (2021) [27] | Lithuania | Quasi-experimental pre-test–post-test design | Intra-group comparison before and after the implementation of a 20-week structured physical education program conducted during school hours for primary school children | To evaluate the effect of structured physical education on emotional well-being and physical activity levels in children | N = 162 primary school students aged 10–12 years (85 boys and 77 girls) | Well-being: assessed using the Strengths and Difficulties Questionnaire (SDQ). Physical activity level: measured with the Physical Activity Questionnaire for Older Children (PAQ-C), validated for pediatric populations. | Emotional structured physical education program: 45-min sessions twice per week for 20 weeks, focused on physical and psychosocial development. The intervention is considered neuroeducational as it targets emotional and physical well-being, two key factors in optimizing learning from a neuroscience perspective. Emotional well-being and physical activity are fundamental components in brain stimulation and enhancement, directly impacting students’ learning capacity. Data were collected pre- and post-intervention. | Significant improvements in emotional well-being and physical activity levels were observed, with positive effects noted in both boys and girls. |

| Di Lieto et al. (2020) [28] | Italy | Randomized controlled trial (RCT) | Comparison between: experimental group: educational robotics intervention to enhance executive functions; control group: no intervention | To evaluate the impact of educational robotics on the development of executive functions in typically developing 5- to 6-year-old children | N = 128 children (ages 5–6): experimental group (educational robotics): N = 64; control group (no intervention): N = 64 | Executive function assessment: A task battery was used to evaluate planning, inhibition, and working memory. Perception questionnaires: Included scales measuring children’s motivation and enjoyment of the activities, completed by children and parents. All instruments used were validated and showed high reliability (α > 0.75). | Intervention duration: 8 weeks. Experimental group: participated in an educational robotics program, consisting of two 40-min sessions per week. Activities were designed to promote planning, problem-solving, and decision-making skills. Control group: engaged in traditional pedagogical activities with no robotics component. Data were collected before and after the intervention. | The experimental group showed significant improvements in all executive function tasks, especially in working memory and inhibitory control. |

| Faure et al. (2024) [29] | Germany | Randomized controlled trial (RCT) | Comparison among two intervention groups: Stress Management Intervention (IVSM) and Friendly Feedback Intervention (IVFF) vs. a control group (CG) during an anatomy dissection course | To evaluate the impact of friendly feedback and stress management on intrinsic motivation and stress reduction during an anatomy dissection course in medical students | N = 166 medical students (85% of those enrolled in the course): Group 1 (IVFF): N = 55; Group 2 (IVSM): N = 55; control group: N = 56 | Perceived Stress Scale (PSS): standardized instrument for measuring stress levels. Anxiety Scale: questionnaire to assess anxiety (e.g., Beck Anxiety Inventory). Intrinsic and Extrinsic Motivation Scale: questionnaire such as the Academic Motivation Scale (AMS). Self-Efficacy Scale: measure of confidence in one’s abilities, similar to the General Self-Efficacy Scale. Positive and Negative Affect Scale (e.g., PANAS): used to assess emotional states. | Group IVFF: formal assessments replaced with frequent, friendly feedback. Group IVSM: stress management intervention. Intervention duration: two academic semesters with measurements at nine time points. | The friendly feedback group (IVFF) showed significant reductions in stress, anxiety, and negative affect, as well as improvements in intrinsic motivation, positive affect, and self-efficacy. Perceived academic performance was not affected. |

| Mugo et al. (2024) [30] | Kenya | Experimental, randomized group design | Comparison between a group using constructive simulation (experimental group) and a group using conventional methods in Christian Religious Education (control group) | To compare learning outcomes in Christian Religious Education using constructive simulation versus conventional teaching methods | N = 90 secondary school students: constructive simulation group: N = 50; conventional method group: N = 40 | Academic performance exams: written and oral tests validated by subject-matter experts. Intrinsic Motivation Scale for Religious Learning: validated Likert-type scale (α > 0.80). | Experimental group (constructive simulation): instruction through virtual environments and hands-on activities. Control group (conventional method): content-centered doctrinal classes. Intervention duration: 8 weeks. Pre- and post-intervention data collection. | The constructive simulation group outperformed the conventional group in academic achievement, intrinsic motivation, and understanding of religious content. |

| Zhang et al. (2023) [31] | China | Moderated mediation model | Comparison of different teaching strategies (interactive strategies, experimental group vs. traditional strategies, control group) and their impact on students’ learning engagement | To analyze the impact of teachers’ teaching strategies on students’ learning engagement, considering mediating factors such as motivation and emotional support | N = 300 secondary school students: experimental group: N = 150; control group: N = 150 | Academic Engagement Scale (Student Engagement Scale): measures students’ participation and interest in academic activities. Intrinsic and Extrinsic Motivation Scale: assesses students’ levels of motivation to learn, both internal and external. | Teaching strategies: Experimental group: interactive teaching strategies that promote active participation and student motivation. Control group: traditional strategies with more passive teaching. The study was conducted over the course of one school semester. | Interactive teaching strategies significantly increased students’ learning engagement compared to traditional strategies. Intrinsic motivation and emotional support mediated the relationship between teaching strategies and student engagement. |

| Zhao et al. (2024) [32] | China | Randomized controlled trial | Comparison between the experimental group using PTEBL teaching (Problems, Teamwork, and Evidence-Based Learning) and the control group using traditional teaching in the “Stop the Bleed” (STB) training course | To evaluate the effectiveness of PTEBL on hemostasis skills, emergency preparedness, and teamwork | N = 153 third-year medical students: PTEBL group (N = 77) and traditional group (N = 76) | Ad hoc questionnaire with items on mastery of STB techniques, emergency preparedness, and teamwork. Likert-type response scale (psychometric validation not specified). | Four-hour STB course using PTEBL methodology vs. traditional method. Pre-test–post-test evaluation through questionnaires. | PTEBL was equally effective in STB skills, significantly improved teamwork (94.8% vs. 81.6%), and correlated with clinical reasoning; no significant differences were found in overall preparedness or practical skills. |

| Hirt et al. (2025) [33] | Switzerland | Quasi-experimental study with control group (pre-test–post-test) | Comparison between the experimental group (SRL training) and control group with no intervention | To examine the impact of a professional development program on teachers’ competencies in SRL as promoters and self-regulated learners | N = 54 lower secondary school teachers: experimental group: N = 31; control group: N = 23 | Instrument: SRL-QuTA Questionnaire (Self-Regulated Learning Questionnaire for Teachers Agency). Variables: teachers’ self-efficacy in SRL, knowledge about SRL, promotion of SRL practices, and application of SRL in teaching; 5-point Likert scales (1 = strongly disagree, 5 = strongly agree). | Intervention in the experimental group consisted of a multi-day professional development program including theoretical sessions, practical exercises, self-assessment, and reflection on strategies to implement SRL in the classroom. Duration: 5 sessions, each lasting 5.5 to 6 h. | Significant positive effects on competencies as SRL promoters. No significant changes observed as self-regulated learners. Initial competencies did not influence development. |

| Wang et al. (2024) [34] | China | Experimental (quasi-experimental) study | Comparison between a training system based on Entrustable Professional Activities (EPAs) (experimental group) and traditional emergency medicine teaching (control group) | To evaluate the impact of an EPA-based training system on students’ clinical thinking | N = 210 medical students (106 in the experimental group and 104 in the control group) | Clinical skills scale adapted for emergency medicine. Variables included: clinical reasoning, decision making, clinical judgment, and integrated clinical skills. Evaluation was conducted using specific rubrics and structured practical exams (OSCEs). Psychometric validation was not specified. | Experimental group: training based on EPAs, clinical simulations, and performance-oriented assessments. Control group: traditional theoretical teaching without the use of EPAs. Duration: 6 months. | The EPA group showed statistically significant improvements in clinical reasoning and decision making, with a higher level of overall clinical competence compared to the control group. |

| Channegowda et al. (2025) [35] | India | Randomized controlled trial (RCT) | Comparison between traditional small-group teaching (control group) and simulation-based teaching for first-year medical students (experimental group), further differentiated by high and low academic performance | To evaluate the effectiveness of simulation-based teaching in learning respiratory physiology compared to traditional methods | N = 250 first-year medical students. Some were excluded for not completing the intervention; final sample: N = 107 (control group: N = 52; experimental group: N = 55) | Validated multiple-choice knowledge test (MCQ) reviewed by experts; academic performance assessed before and after the intervention. | Simulation-based teaching using interactive clinical case scenarios with simulators vs. traditional small-group teaching. Duration: 4 weeks. Pre- and post-intervention data collection to assess learning outcomes. | Low-performing students who received simulation-based teaching showed significant improvement in knowledge compared to those receiving traditional teaching. No significant differences were observed among high-performing students. |

| Chen et al. (2024) [36] | China | Quasi-experimental pre-test–post-test design | Comparison between an experimental group (Three-Actions Model + learning plan guide) and a control group (traditional teaching) | To evaluate the effect of the Three-Actions Model on student satisfaction, academic performance, and engagement | N = 95 medical students: experimental group: N = 47; control group: N = 48 | Subjective Evaluation System (SES): measures learning, emotions, engagement, and achievement (validated). Biggs’ Study Process Questionnaire: measures deep and surface learning approaches. Course exams: objective knowledge tests. | The experimental group received an intervention that included personalized study planning, continuous reflection throughout the course, and periodic evaluations with feedback. The control group received traditional teaching without these elements. The study is considered neuroeducational because it promotes self-regulation, metacognition, and active student engagement, stimulating executive functions such as planning and attentional control. Additionally, it integrates emotional and motivational factors, which are essential to learning from a brain-based perspective. | The experimental group, which used the Three-Actions Teaching Model with a learning plan guide, showed significantly higher academic performance than the control group (mean exam score of 79.44 vs. 70.00). Students in the experimental group also had a better overall experience in terms of learning methods, emotions, engagement, and performance. |

| Articles | 1 | 2 | 3 | 4 | 5 | 6 | Overall Score |
|---|---|---|---|---|---|---|---|
| Dhungel et al. (2023) [16] | M | M | L | L | H | H | L |
| Ballesta Claver et al. (2024) [17] | M | M | L | L | H | H | L |
| Zheng et al. (2024) [18] | H | H | H | M | H | H | H |
| Wang et al. (2024) [19] | M | M | M | L | H | H | M |
| Dehghani et al. (2024) [20] | H | H | M | L | H | H | M |
| Syväoja et al. (2024) [21] | M | H | M | L | H | M | M |
| Drollinger-Vetter et al. (2025) [22] | M | H | M | L | H | M | M |
| Vetter et al. (2025) [23] | M | H | M | L | H | M | M |
| Rodríguez-López et al. (2020) [24] | M | H | L | L | H | M | M |
| Coelho et al. (2021) [25] | M | H | M | L | H | M | M |
| Brügge et al. (2024) [26] | H | H | M | L | H | M | M |
| Kliziene et al. (2021) [27] | M | H | M | L | H | M | M |
| Di Lieto et al. (2020) [28] | M | H | M | L | H | M | M |
| Faure et al. (2024) [29] | M | H | M | L | H | M | M |
| Mugo et al. (2024) [30] | M | H | M | L | H | M | M |
| Zhang et al. (2023) [31] | M | H | M | L | H | M | M |
| Zhao et al. (2024) [32] | M | H | M | L | H | M | M |
| Hirt et al. (2025) [33] | M | H | M | L | H | M | M |
| Wang et al. (2024) [34] | M | H | M | L | H | M | M |
| Channegowda et al. (2025) [35] | H | H | M | M | H | M | M |
| Chen et al. (2024) [36] | M | H | M | L | H | M | M |
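
The overall ratings in the table above can be tallied with a few lines of Python. This is only an illustrative sketch: the dictionary `overall` and the helper `tally` are hypothetical names introduced here, the letters are transcribed as printed (keyed by reference number), and no interpretation of the H/M/L legend is assumed beyond what the table shows.

```python
from collections import Counter

# Overall ratings transcribed from the table above, keyed by reference number.
# (Hypothetical variable name; the letters are copied as printed.)
overall = {
    16: "L", 17: "L", 18: "H", 19: "M", 20: "M", 21: "M", 22: "M",
    23: "M", 24: "M", 25: "M", 26: "M", 27: "M", 28: "M", 29: "M",
    30: "M", 31: "M", 32: "M", 33: "M", 34: "M", 35: "M", 36: "M",
}


def tally(ratings):
    """Count how many studies carry each overall rating letter."""
    return Counter(ratings.values())


counts = tally(overall)
for level in ("H", "M", "L"):
    print(f"{level}: {counts[level]} of {len(overall)} studies")
```

Run as written, this reports 1 study rated H, 18 rated M, and 2 rated L out of the 21 listed.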

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Granado De la Cruz, E.; Gago-Valiente, F.J.; Gavín-Chocano, Ó.; Pérez-Navío, E. Education, Neuroscience, and Technology: A Review of Applied Models. Information 2025, 16, 664. https://doi.org/10.3390/info16080664
