1. Introduction

The digital transformation of education has significantly reshaped both the delivery of knowledge and the assessment of learning outcomes. In higher education, the rapid adoption of online platforms for teaching, assessment, and evaluation has faced growing challenges related to access, affordability, academic integrity, and quality assurance [

1,

2]. These challenges are further intensified by the emergence of generative artificial intelligence (AI), which is transforming educational practices at a fundamental level and reshaping the entire learning and evaluation ecosystem [

3]. Recent advancements in large language models (LLMs) have enabled the development of powerful content generating AI tools that can interpret user intent, engage in natural language dialogue, and offer interactive, personalised, and scalable support. In higher education, these technologies are no longer limited to tutoring functions, as they are increasingly being embedded into assessment design, feedback generation, and academic support.

While generative AI introduces significant opportunities for personalised learning experience and adaptive assessment process [

4], it also raises concerns around the assessment accuracy [

5]. Such developments have prompted renewed demands for updated quality standards from organizations such as the European Association for Quality Assurance in Higher Education (ENQA) [

6], particularly in response to the growing integration of AI technologies in assessment practices.

At the same time, higher education accreditation procedures place strong emphasis on the quality of student assessment as a key indicator of educational effectiveness, requiring modern, transparent, and multi-component evaluation methods that align with intended learning outcomes. For example, under certain criteria [

7], lecturers are expected to demonstrate the use of varied assessment formats, including interactive and digital tools, while ensuring academic integrity, and alignment with course objectives.

Given the rapid adoption of AI-based tools into educational practice, along with increasing regulatory demands, there is a growing imperative to rethink and redesign assessment models to ensure that they remain relevant, reliable, and equitable within digitally mediated, AI-enhanced learning environments [

8]. Despite the proliferation of AI-powered instruments in higher education, existing assessment frameworks often lack structured guidance on how to incorporate such technologies in a pedagogically appropriate and responsible manner.

Our study addresses this gap by proposing and validating a structured framework for integrating generative AI into assessment processes in higher education. It takes into account the roles, responsibilities, and expectations of three key stakeholder groups: instructors, students, and higher education quality assurance bodies. To verify the framework, a case study was conducted within the context of a university-level course on business data analysis. A comparative evaluation was performed between assessments graded by a team of academic lecturers and those generated by ChatGPT. This comparison between human- and AI-based assessments reveals key challenges and opportunities, contributing to the refinement of the framework application in line with both quality assurance standards and pedagogical objectives.

The main objectives of this study are as follows:

To identify the needs, expectations, challenges, and perspectives of key actors regarding the integration of AI-based tools for assessment purposes;

To propose quality assurance measures and practical guidelines for the responsible use of generative AI in formal assessment settings;

To design a new conceptual framework that enables a systematic and stakeholder-sensitive integration of generative AI into assessment practices in higher education;

To support the development of transparent, scalable, and learner-centred evaluations that maintain academic integrity while embracing technological innovation;

To examine the technological and pedagogical implications of deploying AI-powered systems for designing, administering, and evaluating academic assessments.

The primary contribution of this paper is the development of a conceptual framework for innovative assessment in higher education, offering a holistic approach for embedding AI-based instruments across different stages of the evaluation cycle. The proposed model supports both formative and summative assessments, emphasising transparency and fairness. By proactively addressing potential challenges, including overdependence on automation and improper AI tool usage, the framework facilitates a more robust, accountable, and inclusive assessment environment aligned with contemporary educational objectives.

The structure of the paper is as follows:

Section 2 presents an overview of recent advancements in generative AI technologies, focusing particularly on LLM-based platforms and their educational applications.

Section 3 reviews existing research on the integration of generative AI in academic assessment, covering its benefits, limitations, and institutional responses.

Section 4 introduces the proposed conceptual assessment framework, describing its three branches (teaching staff, learners, and quality assurance authorities), their interactions, and practical implementation aspects. The paper concludes by summarising key findings, outlining implications for academic policy and practice, and providing recommendations for future research directions.

2. State-of-the-Art Review of Generative AI Tools and Their Assessment Applications

Generative AI tools, particularly those built upon advanced LLMs and next-generation intelligent chatbots, originated as software applications to facilitate human-computer interaction through natural language. Initially used in customer service and business communication to automate routine tasks, such as answering frequently asked questions, these tools have quickly grown in complexity and capability, now offering sophisticated solutions in multiple domains, including education.

Within higher education contexts, generative AI has evolved to become integral not only to instructional support but also to the design, delivery, and evaluation of academic assessments. Generative assessment tools specifically refer to software platforms that leverage AI to create educational content, streamline grading, and assist learners in achieving targeted learning outcomes. By integrating capabilities such as natural language processing, machine vision, and multimodal data interpretation, these tools enhance instructional efficiency and allow for greater personalisation in educational practice [

9,

10]. Nevertheless, despite the expanding role of generative AI in education, many lecturers, administrators, and policymakers continue to face challenges in fully understanding the capabilities and implications of these emerging technologies. As generative AI becomes more deeply embedded within academic environments, exploring its transformative potential for assessment practices becomes essential.

This section examines the current state of generative AI tools and their relevance to academic assessment. The first subsection begins with a classification of assessment types in higher education based on key criteria. Next, we explore the emerging use of generative AI to support both instructors and students, with a focus on instructional efficiency and enhancing personalisation. The second subsection reviews established metrics for quality evaluation in higher education, followed in the third subsection by an overview of the technical capabilities of leading generative AI tools. Finally, we present a comparative analysis of these tools in terms of their functionalities within educational assessment settings.

2.2. Standards, Policies and Procedures for Assessment Quality in Higher Education

Quality assessment in higher education requires that assessment practices be valid, reliable, fair, and aligned with the intended learning outcomes. High-quality assessment accurately reflects what students are expected to learn and demonstrates whether those outcomes have been achieved. Validity refers to the degree to which an assessment measures what it is intended to measure, while reliability relates to the consistency of results across different assessors, contexts, and student cohorts. Fairness entails that all students are assessed under comparable conditions, with appropriate accommodation to support equity and inclusion. Additional indicators of assessment quality include alignment with curriculum objectives, transparency of assessment criteria, and the provision of constructive feedback [

13].

To uphold these standards, institutions typically use internal moderation processes, external examiner reviews, student feedback, benchmarking against national qualification frameworks, and systematic analyses of grade distributions. Increasingly, digital tools and analytics enhance institutions’ capacity to monitor assessment effectiveness and maintain academic integrity.

Internationally, frameworks such as ISO 21001:2018 [

14] provide structured guidance for educational organisations, emphasising learner satisfaction, outcome alignment, and continuous improvement in assessment practices. Although its predecessor, ISO 29990:2010, [

15] specifically targeted non-formal learning, its key principles, such as needs analysis and outcome-based evaluation, remain integral within current guidelines.

Within Europe, the Standards and Guidelines for Quality Assurance (ESG), established by the ENQA, serve as a key regional benchmark. ESG Standard 1.3 focuses specifically on student-centred learning and assessment, underscoring the importance of fairness, transparency, alignment with learning objectives, and timely feedback [

16]. These principles have shaped quality assurance systems across Europe and influence institutional practice at national and institutional levels.

National agencies such as the UK’s Quality Assurance Agency (QAA) provide detailed guidance on assessment practices [

17]. Their frameworks cover areas such as the reliability of marking, moderation protocols, assessment-objective alignment, and the balanced integration of formative and summative methods. These guidelines promote transparency, support diverse assessment types, and ensure that feedback is timely and meaningful.

At the institutional level, internal quality assurance policies typically define assessment expectations in terms of validity, fairness, and consistency. Tools such as grading rubrics, standardised criteria, and peer moderation are used to ensure alignment with programme-level outcomes and to enhance the reliability of evaluation. Institutions often conduct regular reviews, audits, and data-driven evaluations to track assessment performance and drive continuous improvement in teaching and curriculum design [

18].

Collectively, these international standards, national regulations, and institutional policies provide a comprehensive structure for ensuring robust assessment practices. They ensure that assessment not only serves as a tool for evaluation but also supports meaningful student learning, accountability, and educational enhancement within contemporary higher education systems.

2.3. Generative AI Tools for Assessment in Higher Education

Assessment tools in higher education have evolved significantly, transitioning from traditional paper-based examinations and manual grading toward digitally enhanced, personalised evaluation methods. Early developments, such as computer-based testing, increased grading efficiency and objectivity, paving the way for Learning Management Systems (LMSs) that supported online quizzes, automated scoring, and instant feedback [

19]. Subsequently, the integration of learning analytics and adaptive assessment platforms enabled educators to track student progress and tailor instruction dynamically. Massive Open Online Courses (MOOCs) often incorporate peer review systems, automated grading, and interactive tasks, enabling them to accommodate thousands of learners simultaneously [

20]. More recently, AI-driven platforms have emerged, facilitating automated question generation, rubric-based grading, and scalable formative assessment [

21].

The rise of generative AI technologies has further transformed the assessment landscape, offering capabilities for real-time, interactive, and context-aware evaluations. Institutions exploring these advanced tools encounter new opportunities for personalised assessment while addressing critical challenges concerning academic integrity, transparency, and equity. Below is an overview of prominent generative AI-based platforms, each tailored to specific assessment needs within higher education.

ChatGPT (OpenAI, San Francisco, CA, USA;

https://openai.com/chatgpt, accessed on 10 April 2025) is one of the most widely used generative AI tools in education. Based on the GPT-4.5 architecture, ChatGPT enables instructors to generate quiz questions, test prompts, scoring rubrics, and feedback explanations. With a user-friendly interface and conversational capabilities, it supports academic writing, peer simulation, and self-assessment for students. Its ability to provide consistent, context-sensitive responses makes it suitable for both formative and summative assessment support. While the basic version is free, advanced features are available through the ChatGPT Plus subscription.

Copilot (Microsoft, Redmond, WA, USA;

https://copilot.microsoft.com, accessed on 10 April 2025) is embedded into Microsoft 365 suite and enables instructors and students to generate content, summarize documents, create study guides, and automate tasks such as rubric generation and feedback provision. Its integration into Word, Excel, and Teams enhances workflow efficiency in assessment processes.

Eduaide.ai (Eduaide, Annapolis, MD, USA;

https://eduaide.ai, accessed on 10 April 2025) is a dedicated platform for educators that leverages generative AI to support teaching and assessment design. It provides over 100 instructional resource formats, including quiz generators, essay prompts, rubrics, and feedback templates. Instructors can generate differentiated tasks aligned with Bloom’s taxonomy or customize assessments by difficulty, format, or objective. Eduaide.ai also includes alignment tools to ensure curriculum coherence and academic standards, making it suitable for both classroom and online environments.

Gradescope (Turnitin, Oakland, CA, USA;

https://www.gradescope.com, accessed on 10 April 2025) is a grading platform enhanced with AI features such as automatic grouping of similar answers, rubric-based scoring, and feedback standardization. It supports handwritten, typed, and code-based responses and is widely used in STEM courses. While not generative per se, Gradescope’s AI-assisted evaluation streamlines the grading process, improves consistency, and facilitates large-class assessments with reduced instructor workload.

GrammarlyGO (Grammarly, San Francisco, CA, USA;

https://www.grammarly.com, accessed on 10 April 2025) is an AI-based writing assistant that offers contextual feedback on grammar, clarity, and tone. It supports students in developing academic writing skills and can serve as a real-time feedback mechanism during essay composition. For instructors, GrammarlyGO helps in providing formative feedback, especially in large-scale or asynchronous courses. The tool offers suggestions for revision while maintaining a focus on the original intent, making it suitable for self-assessment and peer-review training. GrammarlyGO assists instructors in delivering formative feedback efficiently, especially in large-scale or online courses.

Quizgecko (Quizgecko, London, UK;

https://quizgecko.com, accessed on 10 April 2025) is an AI-powered quiz generator that enables instructors to create assessment items from any text input, such as lecture notes, articles, or web content. It supports multiple-choice, true/false, short answer, and fill-in-the-blank formats. Quizgecko also allows export to LMS platforms and offers analytics on question difficulty and answer patterns. This tool is useful for formative assessments, quick reviews, and automated test creation aligned with instructional content.

Table 1 presents a comparative overview of key characteristics, functionalities, supported platforms, input limits, and access types of the discussed generative AI-based assessment tools.

Depending on their primary function and technological scope, generative AI tools for assessment can be grouped into several distinct categories:

Content-generation tools—these platforms (e.g., ChatGPT, Eduaide.ai, Copilot) focus on the automatic creation of assessment elements such as quiz questions, prompts, rubrics, and feedback. They support instructors in designing assessments aligned with learning taxonomies and learning outcomes.

Interactive quiz generators—tools like Quizgecko use generative algorithms to convert any text into structured assessment formats, such as multiple-choice, fill-in-the-blank, or true/false questions. Their focus is on streamlining formative assessment, particularly in asynchronous or online contexts.

AI-assisted feedback systems—platforms such as GrammarlyGO provide real-time, formative feedback on student writing, helping learners improve clarity, coherence, and argumentation. These tools are particularly useful in large classes and for supporting autonomous learning.

Grading automation systems—tools like Gradescope apply AI models to evaluate student submissions using rubrics, group similar responses, and standardise feedback. They are widely adopted in STEM education and are integrated with major LMSs.

Learning assistants and peer support tools—ChatGPT and similar apps serve as AI tutors that guide students through problem-solving steps and help reinforce foundational concepts, indirectly supporting assessment preparation through personalised coaching.

These AI tools also vary according to their intended users. Eduaide.ai and Copilot primarily serve educators, assisting with assessment design and workflow automation. GrammarlyGO targets students directly, providing immediate, actionable feedback. Tools like ChatGPT and Copilot offer flexible usage scenarios suitable for both instructors and students, depending on instructional contexts and needs.

Deployment options further differentiate these tools. Most operate via web interfaces, ensuring broad accessibility. GrammarlyGO’s mobile deployment accommodates informal or flexible study environments. Gradescope and Quizgecko offer compatibility with institutional LMS platforms, while Microsoft Copilot integrates AI functionalities seamlessly within widely used productivity tools such as Word and Excel.

Different access models also influence the adoption and usage of these tools. Platforms such as ChatGPT, Eduaide.ai, Quizgecko, and GrammarlyGO typically follow freemium models with subscription-based advanced features. Gradescope primarily operates under institutional licenses, managed centrally by universities or departments, and Copilot requires a Microsoft 365 subscription, aligning with enterprise workflows.

Underlying AI models further distinguish these tools. ChatGPT and Copilot leverage advanced LLMs like GPT-4.5, capable of handling complex, contextually nuanced tasks, thus enhancing their effectiveness in supporting comprehensive assessments and feedback. Gradescope’s approach, which focuses on clustering and rubric-based evaluation rather than generative capabilities, prioritises grading efficiency and transparency, making it particularly suited for structured and high-stakes assessments.

Each AI tool also aligns differently with assessment types. GrammarlyGO and Quizgecko are particularly well-suited for formative purposes, providing iterative, low-stakes learning reinforcement. ChatGPT, Eduaide.ai, and Gradescope support both formative and summative assessment contexts, facilitating assessment creation, scoring, and rubric-based feedback. Copilot bridges both categories effectively by aiding material preparation, content summarisation, and feedback provision within institutional workflows.

Alignment with pedagogical frameworks further differentiates these tools. Eduaide.ai explicitly integrates Bloom’s taxonomy, enabling targeted assessments tailored to distinct cognitive levels. ChatGPT, through guided prompts, can foster reflective and metacognitive learning processes. A shared advantage of LLM-based platforms is their inherent adaptability and personalisation, tailoring outputs to specific instructional contexts and individual learner needs.

Despite these benefits, there are important limitations to consider. Generative tools such as ChatGPT and GrammarlyGO can produce outputs that are difficult to verify, raising concerns regarding source traceability and explainability—critical aspects in high-stakes assessments. In contrast, tools like Gradescope emphasise transparent evaluation criteria, structured feedback, and clear grading standards. Additionally, risks such as AI-generated inaccuracies, known as hallucinations, necessitate careful oversight by educators to ensure assessment validity and reliability.

In this subsection, we have explored the evolving role of generative AI tools in reshaping higher education assessment procedures. These tools vary considerably in their functionality, degree of automation, adaptability, and LMS integration. They enable a broad spectrum of assessment activities, from automated content creation and real-time formative feedback to personalised learning analytics, opening new possibilities for designing inclusive, scalable, and pedagogically rich assessment experiences.

The next section discusses related work, outlining existing assessment frameworks within higher education and summarising current research on user attitudes towards AI-driven assessment tools.

4. Framework for Generative AI-Supported Assessment in Higher Education

In this section, we introduce a new conceptual framework that supports the integration of generative AI tools, particularly intelligent chatbots, into university-level assessment practices. It promotes a more personalised, scalable, and explainable assessment ecosystem through the responsible use of AI.

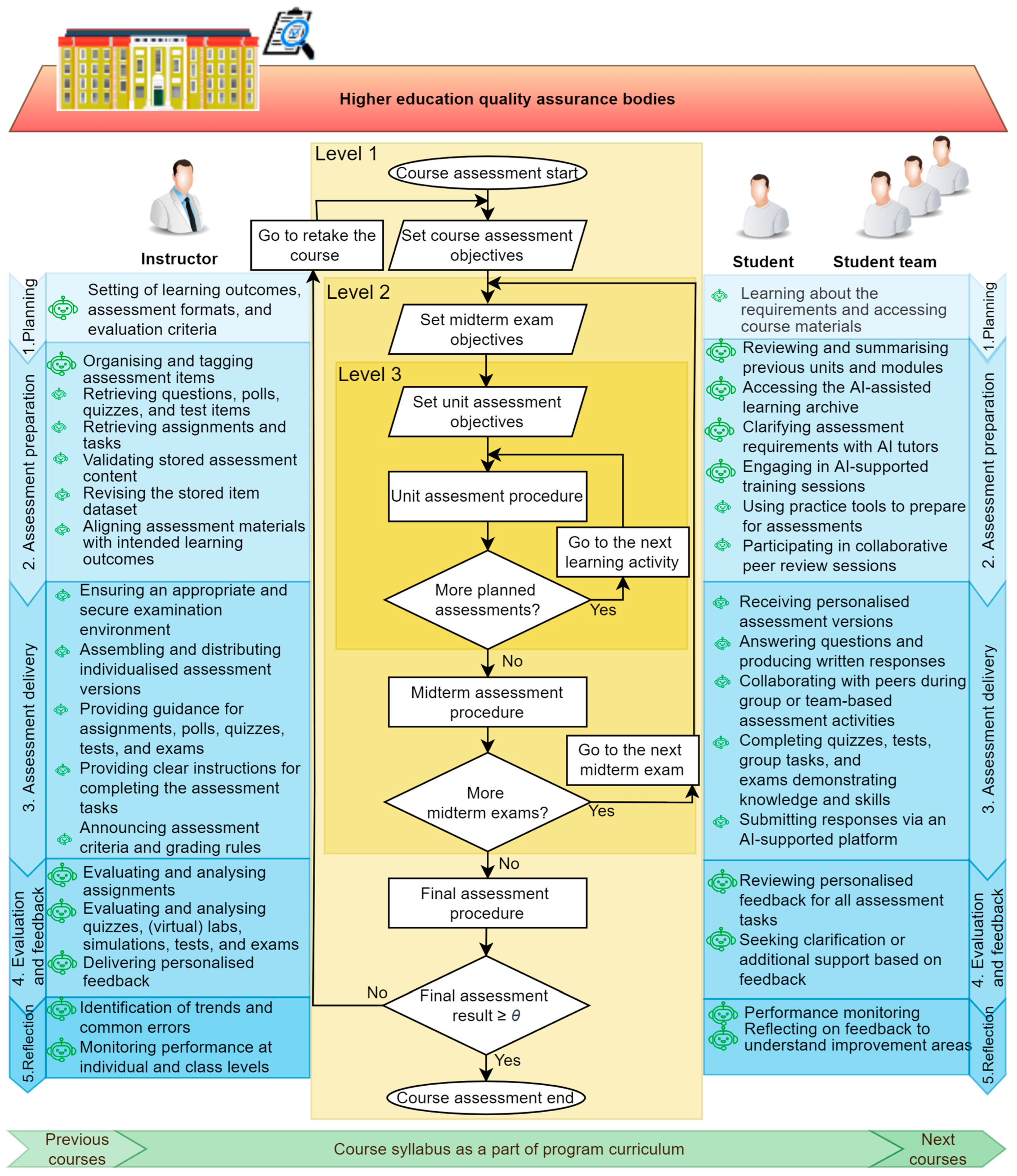

This framework is designed to address the emerging challenges and opportunities in higher education assessment by structuring the interaction between three key participants groups. In the proposed framework (

Figure 1), the stakeholders (represented by the left, right, and upper branches of the flowchart) are supported by generative AI through their distinct functions. The framework has a hierarchical structure, and it is organized into three levels, corresponding to the standard phases of the academic assessment process: (1) course-level assessment, (2) midterm testing, and (3) unit tasks.

Level 1. Course Assessment

At the course assessment level, generative AI supports the final, cumulative evaluation of students’ performance. For lecturers, AI tools assist in designing comprehensive exam formats, generating final exam questions aligned with course outcomes, and producing consistent, rubric-based grading and feedback at scale. For students, intelligent chatbots offer support in reviewing course content, simulating exam environments, and answering last-minute queries with tailored feedback and revision prompts. For control authorities, generative AI enables the systematic monitoring of assessment quality, fairness, and alignment with accreditation standards. AI dashboards can summarise grading patterns and highlight anomalies in final results, ensuring transparency and integrity in the summative assessment process.

Level 2. Midterm Assessment

At the midterm exam level, generative AI enhances the quality and responsiveness of ongoing evaluations conducted mid-course. For lecturers, AI can generate customised midterm questions, assist in balancing question difficulty, and evaluate structured responses using predefined rubrics. Chatbots can also assist in clarifying exam instructions and interpreting student performance trends. For students, AI tools provide personalised practice tasks, simulate exam-style questions, and offer constructive feedback on performance to guide future learning. For control authorities, this stage offers an opportunity to monitor continuous assessment integrity. AI tools can ensure consistency in exam administration, track progress data, and assess the degree to which midterm evaluations align with curriculum benchmarks.

Level 3. Unit Assessments

At the unit assessment level, generative AI facilitates frequent, formative evaluations embedded within the teaching-learning cycle. Lecturers benefit from AI-enabled automation of low-stakes quizzes, knowledge checks, and quick feedback mechanisms, allowing real-time insight into students’ understanding of specific topics. Students interact with AI chatbots to clarify confusing concepts, test their knowledge, and receive immediate feedback to support mastery learning. These interactions also promote critical thinking and self-regulated learning strategies. For control authorities, this level provides granular data on assessment engagement and learning progression. AI tools can help identify early signs of disengagement or learning gaps, enabling timely interventions and supporting evidence-based quality assurance at the micro-curricular level.

The framework is also based on a five-step assessment procedure that reflects the typical phases of the academic assessment process. At each step, the roles of generative AI are illustrated through the supportive functions it provides to key stakeholders.

Step 1. Assessment Planning

The assessment process begins with assessment planning, in which lecturers determine the intended learning outcomes, assessment formats, and criteria for evaluation. During this phase, generative AI plays a significant role by generating example rubrics, suggesting assessment scenarios aligned with curricular goals, and producing sample tasks based on best practices. This ensures that students and accreditation bodies receive clear information about assessment expectations and standards from the outset.

Step 2. Preparation and Training

The next step is dedicated to student readiness and formative learning. Here, students engage with AI-driven self-assessment modules and formative quizzes designed to reinforce key concepts and provide individualised feedback. Lecturers use AI-generated review materials and adaptive question banks to better address areas where students may struggle, while control bodies receive summary metadata for monitoring the transparency and scope of preparatory activities.

Step 3. Assessment Delivery

Moving to assessment delivery, students complete the required assessments under conditions supported by AI tools. Generative AI can help clarify instructions, simulate realistic test scenarios, or, in objective formats, assist in online proctoring to safeguard academic integrity. For lecturers, AI-powered platforms facilitate the scheduling and delivery of assessments and in some cases automate aspects of answer validation or compliance checking. Accreditation and quality assurance units retain oversight through access to digital records and procedural logs.

Step 4. Evaluation and Feedback

Following the administration, the process advances to evaluation and feedback, where AI tools assist lecturers with marking, providing draft feedback, and checking the consistency of scores against predefined rubrics. Students receive detailed, rubric-based feedback in a timely and transparent fashion, while control bodies can audit both the feedback quality and the traceability of the evaluation process through AI-generated reports and explainability tools.

Step 5. Reflection and Improvements

Finally, in the last step, all stakeholders are engaged in reviewing assessment outcomes and identifying opportunities for enhancement. Students receive AI-personalised learning recommendations based on their performance and are encouraged to reflect on their results and set future goals. Lecturers analyse assessment data and student engagement metrics to refine subsequent assessment design and teaching approaches. Control bodies synthesise anonymized results and analytics to ensure that the overall process remains fair, consistent, and effective across cohorts and departments.

This framework supports alignment between technological advancements and academic integrity, aiding all actors within the university ecosystem. It is flexible enough to be adapted for different educational contexts and disciplines, and it encourages a balance between automation and human judgment in the assessment process. In the next section, we validate the effectiveness of the framework through a practical experiment involving final exam evaluation.

According to the proposed framework, generative AI tools play a supportive role throughout the five-step assessment procedure, implemented across the three primary levels of academic evaluation: unit assessments, midterm exams, and final exams. At the unit assessment level, they can assist students during the early stages of the process by offering access to curated learning resources, clarifying task requirements, and providing formative feedback on drafts and quiz attempts. For lecturers, this stage allows chatbots to handle routine queries, automate initial grading of objective tasks, and flag common misconceptions, enabling more targeted instructional interventions.

During the midterm exam phase, intelligent tools can be used to support students through personalised revision tools, practice tasks, and simulated test environments. Lecturers can rely on chatbot-generated analytics to identify learning gaps and adjust mid-semester teaching strategies accordingly. At this level, AI tools can also assist in rubric generation and consistent grading of structured responses, saving time while maintaining assessment quality.

In the final exam stage, generative AI tools continue to provide real-time clarification of exam-related questions and post-assessment feedback. They help students reflect on their performance, guide them through self-assessment prompts, and offer suggestions for improvement. For instructors, intelligent instruments can streamline final grading procedures and generate performance reports that support curriculum review and course evaluation.

While generative AI tools offer significant benefits, such as immediate feedback, reduced administrative workload, and individualised student support, it is essential to emphasise that all critical decisions regarding instructional design, assessment criteria, and course outcomes remain the responsibility of the instructor. The tool function is to complement, not replace, the academic judgment and pedagogical expertise of educators.

For students, these tools enhance the learning experience by reducing wait times, enabling self-paced exploration of concepts, and promoting deeper engagement through interactive dialogue. By posing open-ended questions and encouraging evidence-based responses, they can stimulate critical thinking, support argument development, and present alternative perspectives.

Furthermore, integrating generative AI into this structured assessment model supports key didactic principles such as flexibility, accessibility, consistency, and systematic progression. AI tools help educators identify knowledge gaps, personalise learning trajectories, and reinforce skill development through continuous, tailored practice. Their interactive and adaptive nature allows them to be applied effectively across various assessment contexts—for systematisation, revision, reflection, and mastery—resulting in a more inclusive, engaging, and pedagogically sound assessment environment.

The third branch (top section of

Figure 1) of the proposed framework addresses the role of control authorities, such as quality assurance units, academic oversight committees, and accreditation bodies, in upholding assessment standards and institutional integrity. Generative AI can support these stakeholders through advanced analytics and automated reporting tools that enable real-time monitoring of assessment practices across departments or academic programmes. For example, AI-powered dashboards can consolidate data on grading trends, feedback consistency, and student performance, helping control authorities identify anomalies, evaluate alignment with intended learning outcomes, and verify compliance with institutional and regulatory standards.

Additionally, generative AI can assist in auditing assessment materials for originality, fairness, and alignment with curriculum objectives. Tools based on natural language processing can evaluate the cognitive level of test items (e.g., using Bloom’s taxonomy), check for redundancy or bias in exam questions, and flag potential academic integrity issues. AI-driven analysis of student feedback and peer evaluations can further inform quality reviews. By integrating these capabilities, control authorities can enhance transparency, streamline accreditation processes, and uphold academic standards more efficiently and consistently across the institution.

The implementation of GAI in assessment brings not only opportunities but also challenges that must be carefully managed. The risk of AI bias, where automated systems amplify existing inequalities, remains a significant challenge in educational assessment. Bias can arise from skewed training data, algorithmic design, or lack of regular system audits. To reduce this risk, we recommend ongoing monitoring of AI tools for disparate impact, transparency in model selection and data sources, and periodic human review of automated decisions. Additionally, while generative AI systems can streamline processes and handle large volumes of assessment tasks, it is critical to avoid over-reliance on automation. Human educators should remain actively involved in assessment processes, providing contextual judgment and oversight to ensure fairness and adaptability. Institutional policies should promote a balanced approach, combining the strengths of both human and generative AI-based evaluation.

The proposed framework offers a holistic and multi-level approach to integrating generative AI into assessment processes, balancing innovation with pedagogical integrity, and supporting all key stakeholders in achieving a more transparent and adaptive higher education ecosystem. While the framework emphasises intelligent chatbot-enabled interventions, it also accommodates broader generative AI functionalities, including rubric generation, automated feedback, and analytics dashboards.

The educators can employ the methodology described in the following section to assess the efficiency of generative AI in a specific university course.

5. Verification of the Proposed Generative AI-Based Assessment Framework

To evaluate the effectiveness of the proposed generative-AI-enhanced assessment framework, we conducted an experimental study in a data-analysis course during the winter semester of 2024–2025. The course’s final examination was marked in parallel: first by a team of three subject-expert instructors and then by ChatGPT 4.5, following the same rubric. This pilot comparison allowed us to measure the reliability and validity of the AI-based approach against traditional human assessment.

The case study covered the scripts of fifteen students (S1–S15). The exam comprised sixteen questions designed to test analytical, interpretative, and applied competencies: six open-ended items (Q1–Q6), each worth four or six points, and ten closed-response items (Q7–Q16), each worth one point, for a maximum total of 40 points. The teaching team independently (without AI) evaluates the students’ written submissions and assigns a final grade to each student based on a predefined scale linking total points to corresponding grades. The AI performs the same evaluation, having been provided with the criteria and scale used by the lecturers, and grades the submissions in accordance with these instructions.

For the purposes of this experimental validation, a single open-ended question (Q1) was selected. This question involved a specialised task requiring interpretation of GARCH model output and was intended to assess students’ statistical reasoning and applied econometric skills. It was evaluated on a scale of up to four points. This task was independently assessed by both a team of lecturers and ChatGPT using a structured evaluation rubric specifically developed for this purpose (

Table 3). The rubric comprised seven criteria: understanding of the output table, explanation of the model’s purpose, interpretation of key parameters, use of statistical significance, identification of convergence warnings, structure and clarity of the response, and authenticity or originality. Its design reflects the cognitive levels outlined in Bloom’s taxonomy, incorporating both lower-order skills (e.g., understanding and applying statistical output) and higher-order thinking (e.g., analysing parameter relationships and demonstrating original interpretation), thereby supporting a comprehensive evaluation of students’ cognitive performance. Each criterion was scored on a scale from 0 to 4, resulting in a maximum possible score of 20 points per submission. To align with the final exam format, these rubric scores were proportionally scaled down to a four-point grading system—the maximum allocated for this specific open-ended question.

The results from the comparison of the obtained points showed a reasonable closeness between the two assessments, with ChatGPT successfully replicating human judgement in many instances, particularly when student responses followed structured patterns and demonstrated clear technical accuracy. However, discrepancies emerged in responses that required contextual understanding or critical insight, where lecturer evaluation more effectively captured the depth and nuance of reasoning.

For example, in several cases (Students 1, 4, 6, and 10), both ChatGPT and the lecturers assigned identical or closely aligned scores, reflecting a high degree of agreement in well-structured and technically sound answers. In a further eight cases, the difference between the two assessments was minimal—just one point. Only one case showed a two-point difference. Notably, Student 3 received a score of 1 from ChatGPT but 4 from the lecturers, indicating that the AI may have underestimated the student’s depth of interpretation or originality of reasoning.In this instance, the lecturers awarded additional marks for unconventional thinking, even though the student’s response was inaccurate and incomplete with respect to the specific assignment. In this case, human subjectivity was evident, though positively oriented, whereas the chatbot, by contrast, was unable to deviate from the prescribed criteria.

Overall, these validation results demonstrate that the proposed generative AI-based assessment framework can provide consistent and scalable evaluation for clearly defined open-ended tasks. Nevertheless, the findings also highlight the value of a hybrid assessment approach, whereby AI tools support preliminary scoring and formative feedback, while final grading decisions remain under academic supervision. Such a model ensures both operational efficiency and pedagogical integrity, particularly in high-stakes assessment scenarios.

The remaining open-ended questions were evaluated using the same procedure. The closed-type questions were excluded from the ChatGPT-based assessment, as their scoring relies on fixed, unambiguous answers that are best evaluated using standard automated or manual marking procedures.

To evaluate the consistency between the lecturer-based and GAI-based assessments at the level of the final exam, we compared the total scores and corresponding grades assigned to each of the 15 students (

Table 4). The results indicate a high degree of alignment. In 12 out of 15 cases, the final grades assigned by ChatGPT matched those given by the lecturer team. In one case (Student 1), ChatGPT assigned a grade one level higher than the human assessors did, while in another two cases (Student 3 and Student 15), it assigned a grade one level lower. These minor discrepancies suggest that the GAI-based assessment approach is largely consistent with expert human judgement in grading summative assessments.

To further quantify the difference between the two approaches, we calculated several standard error metrics, mean absolute error (MAE), mean squared error (MSE), and root mean squared error (RMSE), for each open-ended question as well as for the total exam score (

Table 5).

The results show that ChatGPT achieved the closest agreement with lecturer scoring on Q3, with a MAE of just 0.53, suggesting very high consistency. Q1 and Q5 also demonstrated good alignment, each with a MAE of 0.93. These tasks likely involved well-structured, objective responses where ChatGPT performs reliably. Slightly higher error values were observed for Q2 and Q6, where MAEs were 1.07 and 1.00, respectively. This indicates acceptable, though somewhat less precise, agreement. In these cases, discrepancies may be due to borderline answers or interpretative elements that require human judgment. In contrast, Q4 presented the greatest challenge for ChatGPT, with the highest MAE (1.87) and RMSE (2.71). This question required students to submit an Excel file containing solver settings as part of a hands-on optimisation task, making it an example of assessment by doing. At the time of the experiment (December 2024), ChatGPT had limited capabilities for interpreting or evaluating attached spreadsheet files with embedded solver configurations, which likely contributed to the discrepancy in scoring.

When considering the final exam as a whole, the overall MAE was 2.93 points and the RMSE was 3.46 points (on a 40-point scale), indicating that ChatGPT scores generally differ from lecturer scores by approximately one grade level. While this reflects moderate accuracy, it also highlights the limitations of full automation in high-stakes summative assessment.

These findings suggest that generative AI is well suited for evaluating structured tasks and providing formative feedback but still requires human oversight for complex or high-impact assessments. Its integration into the assessment process is most effective when used to complement, rather than replace, academic judgment.

Each student received detailed feedback on their performance in the final exam, including both the assigned grades and targeted recommendations for addressing identified knowledge gaps. This individualised feedback supports the formative dimension of the assessment process and promotes self-directed learning. Based on the overall consistency of results between the generative AI-based and human-led evaluations, the proposed framework can be considered a reliable tool for assessing student performance in higher education settings. It offers a scalable solution for supporting both formative feedback and summative grading. For stakeholders such as academic staff, curriculum developers, and institutional quality assurance bodies, the framework provides a structured approach to integrating generative AI in assessment processes. Its use is especially recommended in contexts where efficiency, consistency, and timely feedback are essential, provided that it is complemented by appropriate human oversight in more interpretive or high-stakes tasks.

Discussion

The results of our pilot evaluation confirm that the proposed generative AI-based framework provides a reliable and scalable approach to assessment in higher education, particularly for structured analytical tasks. The strong alignment between intelligent tools and educator-assigned scores demonstrates its suitability for automating routine evaluation, while remaining limitations in complex tasks reinforce the need for a hybrid human-AI model.

Compared to existing frameworks, our approach offers several advantages. Smolansky et al. [

22] propose a six-dimension model focused on perceptions of quality, but do not operationalise assessment at the task level; our rubric-based approach fills that gap. Similarly, Thanh et al. [

12] show that generative AI performs well at lower levels of Bloom’s taxonomy, an observation echoed in our findings, though we extend it by explicitly aligning rubric criteria with cognitive levels. Agostini and Picasso [

23] emphasise sustainable and scalable feedback, an aspect also incorporated into our model. However, our framework includes full-cycle validation using real exam data. Kolade et al. [

24] advocate rethinking assessment for lifelong learning but offer primarily conceptual insights; our work complements this by providing empirical evidence and practical implementation. Perkins et al. (2024) [

30] stress ethical integration via their AI Assessment Scale; our framework similarly ensures academic oversight and responsible use. Finally, Khlaif et al. [

26] present a model to guide educators in adapting assessments for the generative AI era. Our tested approach provides a ready-to-use structure for those in the “Adopt” or “Explore” phase of that model.

In summary, our framework builds on existing research by combining theoretical foundations with practical validation, supporting the effective and responsible integration of generative AI into assessment processes.

Different stakeholder groups can benefit from our framework for applying generative AI in higher education assessment. Lecturers can use the proposed framework to enhance assessment design by integrating generative AI tools for feedback, grading, and personalised task creation. The model also helps educators in managing workloads and documenting AI-supported innovations for teaching evaluations and institutional reporting. For students, the framework encourages the transparent use of generative AI in self-assessment, assignment revision, and project development. It fosters digital literacy and empowers learners to take an active and responsible role in AI-enhanced educational settings. Faculty quality assurance committees can adopt the framework as a benchmarking instrument to support the internal review of assessment practices. It facilitates structured evaluation of assessment formats, the appropriateness of AI tool integration, and alignment with intended learning outcomes. Furthermore, it provides a foundation for developing faculty-specific policies and training programmes to guide responsible AI use in educational evaluation.

At the institutional level, university-level governance bodies, such as academic boards, digital innovation units, or teaching and learning centres, can use the framework to ensure consistency across programmes and to support strategic planning in AI-supported assessment. It serves as a practical tool for monitoring compliance with institutional standards, promoting pedagogical innovation, and ensuring that AI adoption aligns with academic values and digital transformation goals. National accreditation agencies may also benefit from the framework by incorporating its principles into updated evaluation criteria that reflect the rise of digital and AI-enhanced assessment practices. It offers a structured reference for assessing transparency, fairness, and educational effectiveness at the programme and institutional levels. In this context, the framework supports policy development, informs audit procedures, and highlights best practices for maintaining academic quality in the era of generative AI.

6. Conclusions and Future Research

In this paper, we present a new conceptual framework for integrating generative AI into university-level assessment practices. The framework is organised into three branches and three levels, corresponding to key stakeholder groups and assessment purposes, respectively. It supports the systematic use of generative AI tools across the full assessment cycle within academic courses. By combining traditional methods with emerging technologies, the framework enables consistent evaluation, targeted feedback, and predictive insights into the effectiveness and objectivism of assessments in digitally enhanced higher education environment.

Building on earlier chatbot-based pedagogical models, the framework extends their functionality to include assessment-specific features such as automated task generation, rubric-based scoring, and real-time feedback delivery. It is designed to enhance transparency, personalise the evaluation process, and support institutional compliance and accreditation. Its structure is flexible and scalable, allowing application across diverse academic programmes and disciplines.

Initial experiments highlight the potential of new AI technology in supporting self-assessment, exam preparation, and formative and summative evaluation. Our case study revealed that current AI-based tools can successfully replicate human evaluation practices in accurately assessing student submissions.

We propose several recommendations for university stakeholders aiming to implement this framework: (1) institutional strategies for AI integration should align technological adoption with pedagogical objectives; (2) universities should invest in upskilling both instructors and learners in AI literacy and digital competence; and (3) regulatory and quality assurance bodies should establish clear guidelines for AI-supported assessment practices. Lifelong learning and micro-credentialing systems may also benefit from the framework by offering personalised learning pathways supported by AI analytics.

This study has several constraints. First, our empirical validation is currently restricted to a single academic institution, course, and student group, which limits the generalisability of the findings. Second, real-time testing of AI-assisted assessments in live class settings has not yet been fully implemented. Third, our dataset is static and does not capture changes in students’ assessment behaviour over time. Lastly, the correlation between the level of generative AI adoption and actual learning or teaching outcomes remains unexplored.

Future research will address these limitations by doing the following: (1) expanding the scope of the study to include multiple universities, a broader range of academic disciplines, and a more diverse group of participants, including both educators and learners; (2) evaluating the effectiveness of AI-supported assessments through longitudinal analysis; and (3) benchmarking our framework against best practices in international settings. We also plan to investigate the ethical and psychological dimensions of AI-assisted grading and explore hybrid models where human expertise is combined with machine intelligence for optimal assessment design and delivery.