Abstract

This paper presents a Generalized Sentiment Analytics Framework (GSAF) for understanding public sentiment on key societal issues in real time. The framework uses natural language processing techniques to compute sentiment from publicly available social media data (i.e., posts on X, formerly Twitter) and categorizes it into distinct emotions. As a case study, we used the framework to map, analyze, and visualize public sentiment state-wise across the United States on different societal issues, based on over 3 million tweets. As a key social media platform with a vast user base, X provides real-time insights into emotional responses surrounding key societal and political events. Built using R and the Shiny web framework, the platform offers users interactive visualizations of emotion-specific sentiments, such as anger, joy, and trust, displayed on a U.S. state-level choropleth map. The platform supports keyword-based searches and employs text-processing techniques to filter and clean tweet data for robust analysis. Furthermore, it implements caching mechanisms to improve performance and compares strategies such as LRU and Size-Based Eviction. This research highlights the potential of sentiment analysis for policymaking, marketing, and public discourse, providing a valuable tool for understanding and predicting public sentiment trends.

1. Introduction

In the contemporary digital landscape, social media platforms serve not only as a conduit for communication but also as rich sources of data that reflect public sentiment on a myriad of topics. Social media is pivotal in understanding public sentiment on pressing issues, offering real-time insights into the collective mood. Twitter has emerged as a major social media platform and has generated great interest among sentiment analysis researchers [1]. With its vast user base, Twitter offers a unique opportunity to gauge public opinion and emotional responses in real time. In this work, we have created a generalized and interactive platform for computing sentiment scores from social media data (e.g., tweets), analyzing them, and visualizing the results on a web platform (Project repository: https://github.com/Khanisic/Sentiment-Analysis-Web-Application (accessed on 24 January 2025)). As a case study of the efficacy of our developed model, we computed sentiment scores for over 3 million collected tweets, analyzed them, and visualized shifts in public sentiment on different key societal issues on a U.S. state-wise choropleth map.

Sentiment analysis of Twitter data (tweets) has attracted considerable research interest [1,2,3]. Sentiment analysis is a technique used to determine the emotional tone behind words. Traditional approaches to sentiment analysis can be broadly categorized into lexicon-based methods, machine learning-based methods, and hybrid approaches. Lexicon-based methods, such as those using the NRC Emotion Lexicon [4] or VADER [5], rely on predefined dictionaries of words associated with specific emotions or polarities. These methods are computationally efficient and do not require labeled training data, making them suitable for large-scale analyses [6]. However, they often struggle with context-specific meanings, sarcasm, and irony [7]. Machine learning-based methods, on the other hand, leverage supervised or unsupervised learning techniques to classify sentiment. Supervised approaches, such as Support Vector Machines (SVMs) [8] and Naive Bayes [9], require labeled datasets for training and have been widely used in sentiment analysis tasks. More recently, deep learning models, such as Convolutional Neural Networks (CNNs) [10] and Recurrent Neural Networks (RNNs) [11], have shown remarkable performance in capturing complex linguistic patterns. Transformer-based models like BERT [12] and RoBERTa [13] have further advanced the field by enabling context-aware sentiment analysis with state-of-the-art accuracy.

The number of research projects and apps that use textual analytics, natural language processing (NLP), and other forms of artificial intelligence has grown tremendously [3]. Moreover, Twitter sentiment analysis can be used to predict various phenomena, such as stock market movements. Research has shown that the collective mood states expressed on Twitter can correlate with stock market indices, highlighting the platform’s potential for real-time economic insights [14]. Leveraging Twitter for sentiment analysis not only helps in understanding public sentiment on a range of topics but also provides actionable insights for businesses, policymakers, and researchers [15].

The significance of understanding public sentiment extends beyond mere observation; it influences policymaking, business strategies, and societal norms [16]. Our application, developed in R version 4.3.2 [17] using the Shiny web framework [18], not only aids in visualizing these sentiments at a granular level but also enhances our comprehension of the collective emotional landscape across the United States. This is particularly pertinent in an era where digital discourse is often a precursor to real-world actions and reactions.

This paper details the technical and methodological approaches employed to extract, process, and analyze the sentiment of tweets, culminating in a state-wise sentiment map of the U.S. Our system allows users to filter sentiment by specific emotions, such as anger, joy, and trust, providing insights into the prevailing emotional climate related to various societal issues. Furthermore, to reduce data retrieval latency, we employed several caching techniques in our framework and found that Size-Based Eviction performs best. Lastly, to study the performance of the developed framework, we used over 3 million tweets to map, analyze, and visualize public sentiment across the 50 U.S. states.

The rest of this paper is organized as follows: Section 2 states the motivation of this work. Section 3 presents our contributions, research questions, and hypotheses. Section 4 details the implementation, including data collection, cleaning, sentiment score computation, and visualization. Section 5 describes the machine learning techniques used for sentiment analysis. Section 6 discusses the incorporation of different caching techniques into the proposed interactive model. Section 8 describes the general applicability of our proposed model in different use cases. Finally, Section 9 concludes this paper.

2. Motivation

Despite the abundance of data generated by social media [19] platforms like Twitter (currently X), extracting meaningful insights from this vast stream of information is challenging. A number of studies have used Twitter data to gauge public sentiment in different areas of interest. Samuel et al. [20] investigated the complex emotional landscape during the COVID-19 pandemic by analyzing public sentiment on Twitter. The study focused on the socio-economic impact of lockdown measures, specifically public reactions to the debates around reopening the economy. Using textual analytics, including visualization and statistical validation, the study identified predominantly positive sentiment toward early reopening scenarios. Another study [21] analyzed approximately 1.9 million tweets related to COVID-19, collected from 23 January to 7 March 2020. The researchers used machine learning techniques, including Latent Dirichlet Allocation (LDA), to identify and categorize the main themes and sentiments expressed in the tweets. This approach enabled them to analyze large volumes of unstructured text efficiently, revealing insights into public sentiment and emotional responses during the pandemic. Finally, another relevant paper [22] detects and predicts political leanings through sentiment analysis of tweets, employing natural language processing and machine learning techniques to classify sentiments expressed about political topics. Motivated by these approaches, our study aims to advance sentiment analysis by harnessing a dataset of over 3 million tweets to develop a dynamic and interactive sentiment mapping framework. While previous studies have laid foundational work in analyzing public sentiment during pivotal events, our research integrates these methodologies to offer more granular, real-time insights into state-specific emotional responses across the United States.

By employing R libraries within the Shiny web framework, we provide a platform that allows users not only to view sentiment trends but also to interact with the data through visualizations such as sentiment-mapped U.S. states. Users can filter sentiment by specific emotions and timeframes, gaining a nuanced understanding of how public sentiment evolves in response to ongoing societal and political events. Through this approach, our research contributes to the broader understanding of public sentiment, providing a valuable tool for policymakers, researchers, and the general public to gauge the emotional pulse of the nation effectively.

3. Contribution

Understanding public sentiment on key societal issues in real time is a critical yet challenging task due to the vast and dynamic nature of social media data. Existing sentiment analysis tools often lack granularity, real-time capabilities, and interactive features, making it difficult to capture nuanced emotional responses across different regions and timeframes. This research addresses these limitations by developing a Generalized Sentiment Analytics Framework (GSAF) that leverages machine learning and natural language processing to analyze and visualize public sentiment state-wise across the United States. By harnessing over 3 million tweets, the framework provides actionable insights into emotional trends, enabling policymakers, marketers, and researchers to make data-driven decisions based on real-time public discourse.

3.1. Innovations of GSAF

1. Real-Time State-Level Sentiment Mapping: GSAF uniquely visualizes sentiment at the granular state level in real time, enabling policymakers, researchers, and businesses to quickly identify regional variations and tailor interventions or strategies accordingly.

2. Interactive and User-Driven Exploration: The platform allows users to interactively filter and explore sentiment by specific keywords, emotions, and timeframes, enabling customized insights tailored to user needs.

3. Comprehensive Emotion Classification: Leveraging the NRC Emotion Lexicon, GSAF provides comprehensive categorization of tweets into multiple emotional dimensions, surpassing traditional binary sentiment classification. This richer emotional categorization allows deeper insights into public moods and reactions.

4. Scalable Data Processing and Visualization: With a robust workflow designed to handle millions of tweets efficiently, GSAF is capable of real-time sentiment visualization through scalable algorithms, optimizing both data retrieval and processing speeds.

By addressing the limitations inherent in existing sentiment analytics frameworks, GSAF offers significant innovation in sentiment analytics, providing stakeholders with powerful, actionable, and timely insights into regional variations in public sentiment.

3.2. Research Questions and Hypotheses

To provide a clearer structure and direction for this research, we define the following explicit research questions and hypotheses:

Research Questions:

1. RQ1: How can we develop a generalized framework for real-time sentiment analysis that captures nuanced emotional states (e.g., anger, joy, trust) from social media data?

2. RQ2: What are the most effective caching techniques for reducing computational latency in large-scale sentiment analysis applications?

3. RQ3: How does public sentiment vary across different U.S. states, and what factors contribute to these variations?

Hypotheses:

Hypothesis 1. A lexicon-based sentiment analysis approach, combined with advanced text preprocessing techniques, can effectively capture nuanced emotional states from social media data.

Hypothesis 2. Size-Based Eviction caching will outperform other caching techniques (e.g., LRU, LFU) in reducing computational latency for large-scale sentiment analysis.

Hypothesis 3. Public sentiment will exhibit significant geographical and temporal variations, with specific emotions (e.g., anger, joy) dominating in certain states and timeframes.

In the rest of this paper, we address the above research questions and test these hypotheses.

4. Workflow of Interactive Platform

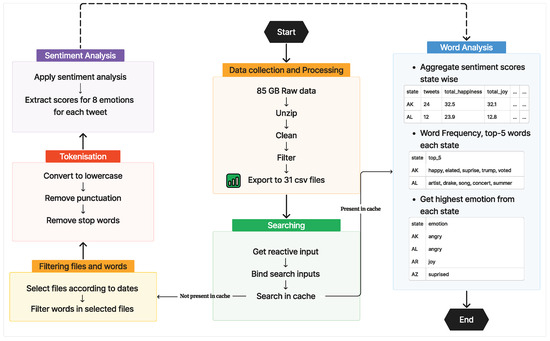

The workflow of developing our interactive platform is shown in Figure 1. This illustrates the stages of data collection, keyword-based search, and visualization of sentiment analysis. In the data collection phase, tweets from October 2022 were gathered from archive.org, processed to focus on U.S.-originating content, and converted into CSV files. The search phase allows users to input keywords and filter the dataset using a caching mechanism to optimize performance. A thorough text-cleaning process is applied, followed by sentiment analysis, which categorizes emotional content such as joy or anger. Finally, the results are visualized through interactive U.S. state-level choropleth maps, offering insights into emotion-specific trends across different regions. The pseudocode of the workflow of our developed interactive platform is shown in Algorithm 1.

Algorithm 1: Pseudo-code of the workflow of our developed interactive platform.

Figure 1.

Workflow of data collection, preprocessing, keyword search, sentiment analysis, and visualization using our developed platform.

The core of our workflow (Algorithm 1) comprises two nested loops: one over the selected date range and one over the user-specified search terms. Within each iteration, the system reads M tweets (per day) of average length L, filters them, and performs text preprocessing (lowercasing, stopword removal) and sentiment analysis. These text-processing operations scale on the order of O(M × L) per iteration. Consequently, for F days and W words, the worst-case complexity is O(F × W × M × L). Because our implementation limits F ≤ 31 (one month of data) and W ≤ 5 (at most five words per search), both are effectively small constants. In practice, therefore, the runtime of our workflow scales primarily as O(M × L).
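Substituting the implementation limits stated above into the worst-case bound makes the hidden constant explicit (T denotes the runtime of a single search; this notation is ours):

```latex
T = O(F \times W \times M \times L), \qquad F \le 31,\; W \le 5
\;\;\Longrightarrow\;\;
T = O(31 \times 5 \times M \times L) = O(155 \times M \times L) = O(M \times L)
```

A single search therefore performs at most 155 (word, day) iterations, each costing O(M × L).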

Moreover, the system employs a caching mechanism to avoid reprocessing. If a particular (word, day) combination, that is, a search word paired with one daily file, has already been analyzed, the results are loaded directly from disk, reducing the overhead of repeated queries. This design ensures that the computationally expensive sentiment analysis steps are performed only once per (word, day) pair, making the system efficient for interactive use across multiple queries.
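The reuse logic can be illustrated with a minimal Python sketch (the platform itself is written in R). Here the cache is an in-memory dictionary rather than a folder of CSV files, and process_pair is a hypothetical placeholder for the filtering, cleaning, and scoring steps; the sketch only shows that each (word, day) pair is processed once, even across overlapping queries.

```python
from datetime import date, timedelta


class PairCache:
    """In-memory stand-in for the on-disk cache, keyed by (word, day)."""

    def __init__(self, process_pair):
        self._process_pair = process_pair  # expensive filter/clean/score step
        self._store = {}
        self.misses = 0  # how many times the expensive step actually ran

    def get(self, word, day):
        key = (word, day)
        if key not in self._store:
            self.misses += 1
            self._store[key] = self._process_pair(word, day)
        return self._store[key]


def run_query(cache, words, start, end, max_words=5):
    """Outer loop over days in [start, end], inner loop over search words."""
    if len(words) > max_words:
        raise ValueError(f"at most {max_words} search words are allowed")
    results = []
    day = start
    while day <= end:
        for word in words:
            results.append(cache.get(word, day))
        day += timedelta(days=1)
    return results


def process_pair(word, day):
    # Hypothetical placeholder for filtering, cleaning, and scoring one daily file.
    return {"word": word, "day": day.isoformat()}


cache = PairCache(process_pair)
first = run_query(cache, ["election", "president"], date(2022, 10, 1), date(2022, 10, 3))
second = run_query(cache, ["election"], date(2022, 10, 2), date(2022, 10, 4))
print(cache.misses)  # 7: six pairs for the first query, one new pair for the second
```

The second query overlaps the first on two days, so only the pair for 4 October triggers new processing.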

4.1. Data Collection and Preprocessing

In the preliminary phase of this research work, the data acquisition involved collecting a comprehensive dataset of tweets archived on archive.org [23], a repository known for its extensive archival capabilities. Specifically, our selection criteria focused on tweets in the month of October 2022. This decision was strategically made due to the incomplete data availability in subsequent months—November and December—where several days’ worth of tweets were missing. Consequently, October 2022 represented the most recent and complete dataset available for our study, ensuring a robust basis for analysis.

After data collection, the data wrangling phase began, as shown in Figure 2. We acquired day-wise zipped files amounting to approximately 83 GB of compressed data. Each daily file comprised 1440 individual zipped JSON [24] files, one per minute of the day, which provided a high-resolution snapshot of daily Twitter activity. We then cleaned the data: each JSON file was extracted and filtered using Node.js and other JavaScript libraries to retain only tweets originating from the United States, in line with our study’s geographical focus. We further streamlined the dataset by preserving only essential attributes such as the tweet content, its timestamp, and the location metadata.
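The filtering step described above (done in Node.js in our pipeline) can be sketched in Python under stated assumptions: each minute file is treated as JSON lines following the Twitter API v1.1 tweet layout, and a tweet counts as U.S.-originating when its place.country_code is "US". These field names and the country test are assumptions for illustration, not details given in this paper.

```python
import json


def filter_us_tweets(lines):
    """Keep tweets whose place.country_code is "US", trimmed to a few fields.

    `lines` is an iterable of JSON strings, one object per line (assumed to
    follow the Twitter API v1.1 tweet layout).
    """
    kept = []
    for line in lines:
        line = line.strip()
        if not line:
            continue
        try:
            tweet = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip truncated or corrupt lines
        if not isinstance(tweet, dict):
            continue
        place = tweet.get("place") or {}  # "place" may be missing or null
        if place.get("country_code") != "US":
            continue  # also drops deletion notices, which have no "place"
        kept.append({
            "text": tweet.get("text", ""),
            "created_at": tweet.get("created_at", ""),
            "location": place.get("full_name", ""),
        })
    return kept


sample = [
    '{"text": "Go vote!", "created_at": "Sat Oct 01 00:00:01 +0000 2022",'
    ' "place": {"country_code": "US", "full_name": "Austin, TX"}}',
    '{"text": "Bonjour", "place": {"country_code": "FR", "full_name": "Paris, France"}}',
    '{"delete": {"status": {"id": 1}}}',
    '{"text": "no place", "place": null}',
    "not json",
]
us_tweets = filter_us_tweets(sample)
```

Only the first sample line survives; the other lines are rejected for a foreign country code, a missing or null place, or invalid JSON.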

Figure 2.

Data collection and processing of tweets.

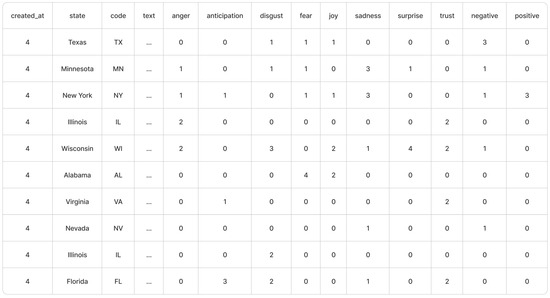

To improve manageability and facilitate further analysis, we consolidated the 1440 files from each day into a single zipped file, yielding 31 consolidated files, one for each day of October. The final transformation stage converted these JSON files into CSV format so that the data would be compatible with our analytical tools in R. The resulting dataset comprised 31 CSV [25] files (an example is shown in Figure 3) containing approximately 3.5 million tweets in total, a substantial empirical foundation for our subsequent analysis.
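A minimal Python sketch of the consolidation step, assuming the per-minute records have already been reduced to the three fields kept above (the field names are our assumption). Writing through the csv module quotes tweet text that contains commas or line breaks, which would otherwise corrupt the row structure when the files are read back in R.

```python
import csv
import io

FIELDS = ["text", "created_at", "location"]


def records_to_csv(minute_batches, out):
    """Concatenate the filtered records of one day's minute files into one CSV.

    `minute_batches` yields one list of records per minute file (up to 1440
    per day); `out` is any writable text stream. Returns the row count.
    """
    writer = csv.DictWriter(out, fieldnames=FIELDS, extrasaction="ignore")
    writer.writeheader()
    count = 0
    for batch in minute_batches:
        for record in batch:
            writer.writerow(record)  # fields with commas/newlines get quoted
            count += 1
    return count


buf = io.StringIO()
n_rows = records_to_csv(
    [
        [{"text": "hello, world", "created_at": "t1", "location": "Austin, TX"}],
        [],  # a minute with no U.S. tweets
        [{"text": "line1\nline2", "created_at": "t2", "location": "Boise, ID"}],
    ],
    buf,
)
rows = list(csv.DictReader(io.StringIO(buf.getvalue())))
```

For a real day, `out` would be a file opened with `open(path, "w", newline="", encoding="utf-8")`, as the csv module documentation recommends.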

Figure 3.

Example of a table in the cache folder.

4.2. Searching Tweets by Keyword

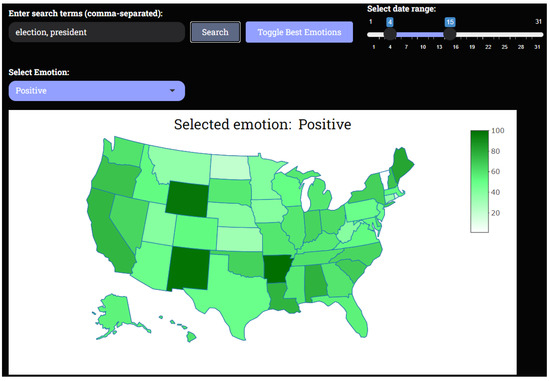

The search process is initiated when the user enters one or more keywords and selects a date range through the user interface, as shown in Figure 4. Clicking the search button (input$searchBtn) triggers an event-driven reactive expression (eventReactive). This key element of our Shiny application’s server-side logic orchestrates the sequence of data processing tasks that follows.

Figure 4.

Search bar with selection of dates.

Upon activation, the script dynamically constructs file paths for the CSV files corresponding to the dates selected by the user. This step is critical because it determines which daily files the subsequent processing handles. The system then checks whether preprocessed data for each file already exists in the cache. If it does, the cached data is used directly, which significantly reduces processing time and computational load; otherwise, the script proceeds with the processing phase.
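The path construction and cache lookup can be sketched in Python as follows. The folder names, the YYYY-MM-DD.csv file naming, and the `<word>_<file name>` cache naming are hypothetical (the last loosely follows the naming rule described in Section 4.3); the `exists` parameter lets the check be tested without touching the disk.

```python
from datetime import date, timedelta
from pathlib import Path

DATA_DIR = Path("data")    # hypothetical folder holding the 31 daily CSVs
CACHE_DIR = Path("cache")  # hypothetical cache folder


def daily_csv_paths(start, end):
    """One CSV path per day in the inclusive range [start, end]."""
    if end < start:
        raise ValueError("end date precedes start date")
    n_days = (end - start).days + 1
    return [DATA_DIR / f"{start + timedelta(days=i):%Y-%m-%d}.csv" for i in range(n_days)]


def plan_work(words, start, end, exists=Path.exists):
    """Split (word, path) pairs into cache hits and pairs still to process."""
    hits, todo = [], []
    for path in daily_csv_paths(start, end):
        for word in words:
            cached = CACHE_DIR / f"{word}_{path.name}"
            (hits if exists(cached) else todo).append((word, path))
    return hits, todo
```

With the default `exists=Path.exists`, the check inspects the real filesystem; only the pairs in `todo` would go through filtering, cleaning, and scoring.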

The next stage filters the tweets. Using the dplyr [26] and stringr [27] packages, the script retains only those tweets that contain the specified search terms. The filtered text then undergoes a cleaning process that converts all text to lowercase, removes punctuation, and excludes stopwords. Stopword removal is a standard component of natural language processing tasks such as information retrieval, indexing, topic modeling, and text classification, including in engineering contexts, because stopwords are uninformative components of the data [28]. These steps are implemented as a series of transformations provided by the tm [29] text mining package, and they normalize the data so that the subsequent analysis operates on clean, consistent text.
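The R implementation uses dplyr and stringr for this step; the matching logic can be sketched in Python as follows. Matching whole words case-insensitively is an assumption made for this sketch (a plain substring match would also keep "reelection"), since the exact matching rule is not stated here.

```python
import re


def filter_by_terms(tweets, terms):
    """Keep tweets whose text contains any search term as a whole word (case-insensitive)."""
    if not terms:
        return []
    alternatives = "|".join(re.escape(term) for term in terms)
    pattern = re.compile(rf"\b(?:{alternatives})\b", re.IGNORECASE)
    return [t for t in tweets if pattern.search(t.get("text") or "")]


tweets = [
    {"text": "Election day!"},
    {"text": "reelection bid"},
    {"text": "The President spoke"},
    {"text": "weather update"},
]
kept = filter_by_terms(tweets, ["election", "president"])
```

`re.escape` keeps user-supplied terms containing characters such as "." or "+" from being interpreted as regular-expression syntax.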

This methodical approach to searching and processing tweets allows for a highly efficient and user-responsive system, enabling the analysis of large datasets in a manner that is both time-effective and resource-efficient. Through this process, users can derive meaningful insights from social media data, tailored specifically to their inputted criteria.

4.3. Applying Sentiment Analysis and Tokenization

Following the initial filtering, the script constructs a text corpus from filtered_tweets$text using the Corpus function from the tm package; the corpus serves as a structured container for the text data. The corpus then undergoes a series of transformations that normalize its content and improve the accuracy and reliability of subsequent analyses. First, the tm_map function applies content_transformer(tolower) to convert all text to lowercase, ensuring uniformity across the dataset. Next, punctuation is removed, since it contributes little to semantic meaning. Finally, common English stopwords (words such as “and”, “the”, and “is”, which are frequent but generally irrelevant to sentiment analysis) are excluded.

After these preprocessing steps, the cleaned text is extracted from the corpus using the sapply function, converting the content back into a character vector format suitable for further processing. The cleaned text replaces the original text in the filtered_tweets dataframe, ensuring that only relevant and cleaned text is retained for analysis. To ensure robustness, the script also filters out any remaining NA values or empty strings that might skew the analysis.
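The corpus transformations and the empty-text filter can be approximated in plain Python as a sketch. The stopword set is a small illustrative subset (tm's stopwords("english") list is much longer), and only ASCII punctuation from string.punctuation is stripped; tm's transformations may differ in detail, for example in how Unicode punctuation and whitespace are handled.

```python
import string

# Small illustrative subset; tm's stopwords("english") list is much longer.
STOPWORDS = {"and", "the", "is", "a", "to", "of", "in", "for", "on", "it"}

_PUNCT_TABLE = str.maketrans("", "", string.punctuation)


def clean_text(text):
    """Lowercase, strip ASCII punctuation, drop stopwords, collapse whitespace."""
    lowered = text.lower()
    no_punct = lowered.translate(_PUNCT_TABLE)
    words = [w for w in no_punct.split() if w not in STOPWORDS]
    return " ".join(words)


def clean_tweets(tweets):
    """Replace each tweet's text with its cleaned form; drop missing or empty results."""
    cleaned = []
    for tweet in tweets:
        text = tweet.get("text")
        if text is None:
            continue  # analogous to dropping NA values
        new_text = clean_text(text)
        if new_text:
            cleaned.append({**tweet, "text": new_text})
    return cleaned
```

For example, `clean_text("The vote is TODAY, and it matters!")` yields `"vote today matters"`.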

Next, sentiment analysis is applied to the cleaned text using the get_nrc_sentiment function from the syuzhet package. This function scores the emotional content of the text using the NRC [30] Word-Emotion Association Lexicon, assigning scores across emotional dimensions such as joy, sadness, and anger. These sentiment scores are then appended row-wise to the row_wise_sentiment_scores_only dataframe, while the filtered_tweets are stored in the just_tweets_text dataframe for potential future use.
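The scoring step can be illustrated with a count-based lexicon sketch in Python: each token contributes one point to every category the lexicon associates it with. The lexicon entries below are invented for illustration and are not the real NRC associations; the category list follows the eight emotions and two sentiments of the NRC lexicon described in Section 5.3.

```python
from collections import Counter

# The ten NRC categories: eight emotions plus two sentiments.
CATEGORIES = ["anger", "anticipation", "disgust", "fear", "joy",
              "sadness", "surprise", "trust", "negative", "positive"]

# Hypothetical toy entries for illustration only; NOT taken from the NRC lexicon.
TOY_LEXICON = {
    "win": {"anticipation", "joy", "positive"},
    "fraud": {"anger", "disgust", "negative"},
    "vote": {"trust", "positive"},
}


def score_text(text, lexicon=TOY_LEXICON):
    """Count, per category, how many tokens of `text` the lexicon links to it."""
    counts = Counter()
    for token in text.split():
        for category in lexicon.get(token, ()):
            counts[category] += 1
    return {c: counts[c] for c in CATEGORIES}


scores = score_text("vote win win fraud")
```

Every category appears in the result, with 0 for categories no token maps to, so each tweet yields a fixed-width row of ten scores.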

To enhance efficiency in data handling and retrieval, the processed tweets and their corresponding sentiment scores are combined using the cbind function, forming a unified dataset (cache_file). This dataset is then named dynamically based on the search term and the original file name, and it is saved to a specified cache directory to facilitate quick access in future queries, thus minimizing the need for re-processing. The caching mechanism is crucial for handling large datasets and speeds up response times for repeated queries. The cached file is written out as a CSV file for durability and ease of access.
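A minimal Python sketch of this cache write, assuming tweets and their scores arrive as equally long lists of row dictionaries. The file-naming rule, which lowercases the search term and replaces characters that are unsafe in file names, is a hypothetical illustration of naming the file after the search term and the original file name.

```python
import csv
import re
from pathlib import Path


def cache_file_name(term, source_name):
    """Hypothetical naming rule: "<term>_<original file name>", filesystem-safe."""
    safe_term = re.sub(r"[^a-z0-9_-]+", "_", term.strip().lower())
    return f"{safe_term}_{source_name}"


def write_cache(cache_dir, term, source_name, tweets, scores):
    """Column-bind tweets with their score rows (like R's cbind) and save as CSV."""
    if len(tweets) != len(scores):
        raise ValueError("tweets and scores must have the same number of rows")
    rows = [{**tweet, **score} for tweet, score in zip(tweets, scores)]
    path = Path(cache_dir) / cache_file_name(term, source_name)
    path.parent.mkdir(parents=True, exist_ok=True)
    fieldnames = list(rows[0]) if rows else []
    with path.open("w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=fieldnames)
        writer.writeheader()
        writer.writerows(rows)
    return path
```

Because the name is derived deterministically from the term and the daily file, a later query for the same pair can find the file with a simple existence check.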

Finally, this newly created cache file is appended to the all_tweets dataframe, which cumulatively stores all tweets processed during the session. This consolidated dataset can be used for comprehensive analysis and reporting, encapsulating all relevant data processed during the user’s session. This workflow not only ensures that the data is thoroughly cleaned and analyzed but also optimally stored for efficient retrieval, embodying a robust approach to data management in text-based sentiment analysis applications.

4.4. Plotting on a Map

The final stage of our application involves visualizing the sentiment analysis results on a state-wise map of the United States. This is achieved using the plot_ly function from the Plotly (version number v5.24.1) library, which enables the creation of interactive choropleth maps.

Once the sentiment analysis has been conducted and the data has been processed, the application generates two types of visualizations depending on the user’s preferences. These visualizations help users interpret the aggregated sentiment data more effectively.

- Emotion-specific choropleth map: Users can select a specific emotion, such as anger, joy, sadness, or trust, from a dropdown menu. Based on this selection, the application generates a choropleth map in which the color intensity of each state represents its sentiment score for that emotion. The z parameter of the plot_ly function is mapped to the score for the chosen emotion, and a custom color scale distinguishes high from low scores: the higher a state's score, the darker its color, giving users an immediate visual representation of the emotional climate across the United States. Examples for positive and negative sentiment are shown in Figure 5 and Figure 6, respectively.

Figure 5. US State-wise choropleth map of positive sentiment for the searched key words ‘election’ and ‘president’.

Figure 6. US state-wise choropleth map of negative sentiment for the searched keywords ‘election’ and ‘president’.

- Dominant emotion map: Users can also view a map that highlights the predominant emotion in each state, allowing them to quickly identify which emotion (anger, joy, fear, etc.) is most prevalent in each region. For each state, the script determines the emotion with the highest sentiment score and displays it using a predefined color scheme that assigns a distinct color to each emotion. This provides a clear and consistent visual cue that makes emotional trends easy to distinguish on a national scale. Examples in which trust, positive, and negative are dominant can be seen in Figure 7.

Figure 7. US state-wise dominant emotions for the key word ‘election’.

- State-wise word frequency and top 8 words: In addition to the maps, two features capture the words used in each state. First, the state-wise word frequency table lists the five most common words in each state, as shown in Figure 8. Inspecting these words can sometimes reveal which event(s) occurred or which emotion is being expressed in a particular state. Second, the platform displays the eight most frequent words overall, which indicates which keywords are currently trending across the states.

Figure 8. US state-wise top 5 frequent words.
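The two word-frequency features can be sketched with Python's collections.Counter, assuming each row carries a state code and already-cleaned text (both assumptions about the data layout). Ties are broken by first appearance, which is how Counter.most_common orders equal counts.

```python
from collections import Counter, defaultdict


def top_words_by_state(rows, k=5):
    """Top-k most frequent tokens per state from cleaned tweet text."""
    per_state = defaultdict(Counter)
    for row in rows:
        per_state[row["state"]].update(row["text"].split())
    return {state: [w for w, _ in counts.most_common(k)]
            for state, counts in per_state.items()}


def top_words_overall(rows, k=8):
    """Top-k most frequent tokens across all states."""
    counts = Counter()
    for row in rows:
        counts.update(row["text"].split())
    return [w for w, _ in counts.most_common(k)]
```

The defaults k=5 and k=8 match the sizes of the per-state table and the overall list described above.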


Both maps are interactive, allowing users to hover over individual states to see detailed sentiment scores or the most dominant emotion. This interactivity, facilitated by Plotly, provides users with a more engaging experience, allowing for deeper exploration of sentiment data.

By visualizing sentiment data in these two formats—emotion-specific and dominant emotion—our application provides users with a comprehensive tool for understanding the emotional landscape of the U.S. on various topics, enabling more informed decisions based on public sentiment.
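The aggregation behind both maps can be sketched in Python as below. How per-tweet scores are combined per state is not specified here, so summing them is an assumption, as is the input layout (one row per tweet with a state code and category scores). The trace is returned as a plain dictionary using attribute names from Plotly's choropleth trace (locationmode "USA-states", locations, z, colorscale) rather than calling R's plot_ly.

```python
from collections import defaultdict

EMOTIONS = ["anger", "anticipation", "disgust", "fear", "joy",
            "sadness", "surprise", "trust", "negative", "positive"]


def state_totals(rows):
    """Sum each category's score per state (summing is an assumption)."""
    totals = defaultdict(lambda: dict.fromkeys(EMOTIONS, 0))
    for row in rows:
        for e in EMOTIONS:
            totals[row["state"]][e] += row.get(e, 0)
    return dict(totals)


def dominant_emotion(totals):
    """Category with the highest total in each state (ties go to the first in EMOTIONS)."""
    return {state: max(EMOTIONS, key=lambda e: scores[e])
            for state, scores in totals.items()}


def choropleth_trace(totals, emotion, colorscale="Reds"):
    """Plotly choropleth trace as a plain dict; z holds each state's score for one emotion."""
    states = sorted(totals)
    return {
        "type": "choropleth",
        "locationmode": "USA-states",
        "locations": states,
        "z": [totals[s][emotion] for s in states],
        "colorscale": colorscale,
    }
```

Wrapped as `{"data": [trace], "layout": {"geo": {"scope": "usa"}}}`, the dictionary can be passed to `plotly.graph_objects.Figure` in Python. A per-state mean instead of a sum would keep states with many tweets from dominating the color scale.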

5. Methodology

This section outlines the methodology used to perform sentiment analysis on a large collection of tweets. We will describe our machine learning strategy, which employs a lexicon-based approach consistent with unsupervised learning techniques. We chose a lexicon-based approach over deep learning methods due to its scalability, efficiency, and interpretability for large-scale, real-time sentiment analysis. Lexicon-based methods, such as the NRC Emotion Lexicon, do not require labeled training data, making them cost-effective and accessible for analyzing diverse societal issues [4]. While deep learning models like BERT offer superior performance in capturing context and nuance, they are computationally intensive and less interpretable, limiting their suitability for real-time applications [12]. Lexicon-based methods provide a lightweight, domain-independent solution for processing large datasets, such as our collection of 3 million tweets, without the need for extensive computational resources [6].

The underlying algorithms and tools, including the NRC Emotion Lexicon and essential R packages like tidytext, will be discussed. Additionally, we will detail the data preprocessing steps taken to prepare the text for analysis, explore the sentiment analysis method using the NRC Emotion Lexicon, and assess the advantages and limitations of our approach. Finally, we will explain how this methodology fits within a broader machine learning framework, paving the way for potential future enhancements.

5.1. Keyword Selection and Analysis

Table 1 gives, for each keyword searched, an example tweet containing that keyword, the time period selected as a filter, and the underlying context or background of the tweet. Each row is only an illustration: in the framework, a keyword typically matches many tweets within the selected time period.

Table 1.

Keywords used in the results analysis section of this paper, with example tweets, time periods, and brief background.

- Keyword: The primary term used to filter tweets for analysis.

- Example Tweet: A representative tweet containing the keyword, illustrating the type of content analyzed.

- Time Period: The timeframe during which tweets were collected and analyzed.

- Background: A brief explanation of the societal or political context surrounding the keyword.

5.2. Machine Learning Approach

Our analysis utilizes principles from the field of machine learning to conduct sentiment analysis on a vast corpus of tweets. Unlike traditional machine learning methods that often require training predictive models with labeled data, we adopt a lexicon-based method aligned with unsupervised learning techniques. This approach enables us to extract emotional insights from textual data without extensive labeled datasets, enhancing scalability and efficiency.

5.3. Underlying Algorithms

We employ a lexicon-based approach for sentiment analysis, utilizing the NRC Emotion Lexicon through the get_nrc_sentiment function from the syuzhet package in R. Lexicon-based methods assign predefined sentiment scores to words based on a sentiment lexicon [31]. The NRC Emotion Lexicon associates words with eight basic emotions (anger, fear, anticipation, trust, surprise, sadness, joy, and disgust) and two sentiments, positive and negative [4].

These approaches are advantageous due to their simplicity and interpretability. They do not require labeled training data, making them suitable for large-scale analyses [6]. However, they may not capture context-specific meanings, sarcasm, or irony, which can limit their effectiveness in certain applications [7]. Despite these limitations, the lexicon-based method is appropriate for our analysis because of its scalability and the comprehensive emotional mapping provided by the NRC Emotion Lexicon.

5.4. Choice of Tools

The analysis was conducted using R, selected for its robust statistical capabilities and extensive ecosystem of packages suited for text mining and data manipulation. The tidytext package facilitates tidy text mining, allowing seamless integration with other tidyverse packages [32]. The tm package offers a comprehensive framework for text mining applications, including text preprocessing and transformations [33]. The dplyr package provides efficient data manipulation verbs essential for handling large datasets [34].

These tools are effective in processing and analyzing large volumes of data, as demonstrated by their ability to handle over 3 million tweets in this study. Their functionalities enable efficient data loading, cleaning, transformation, and analysis, which are critical steps in the text mining workflow.

5.5. Data Preprocessing

Data preprocessing is a vital phase in preparing textual data for analysis. The following techniques were employed:

- Tokenization: We broke down the text into individual words or tokens using the unnest_tokens function from the tidytext package.

- Normalization: To ensure uniformity across the dataset, all text was converted to lowercase using tm_map with the content_transformer(tolower) function.

- Stop Word Removal: Common English stop words that do not carry significant meaning were removed using the removeWords function from the tm package with the stopwords("english") list.

The rationale behind these preprocessing choices was to balance computational efficiency with the need for accurate sentiment analysis. For instance, while stemming or lemmatization could have been applied to reduce words to their base forms, we opted to retain the full words to ensure the emotional context was preserved. This decision was particularly important given the nuanced nature of the NRC Emotion Lexicon, which relies on the specific emotional associations of complete words. Additionally, the removal of punctuation and stopwords streamlined the dataset, making it more manageable for large-scale analysis without sacrificing the richness of the emotional content. These preprocessing steps help reduce noise and focus the analysis on the most informative components of the text data.
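The three preprocessing steps can be sketched as follows. This Python version only mirrors the logic of the R functions named above (unnest_tokens, tm_map with tolower, removeWords); STOP_WORDS is a deliberately short, hypothetical subset of a real English stop-word list, and the regular expression is an assumed tokenization rule that also drops punctuation.

```python
import re

# Hypothetical, abbreviated stop-word list; the study used tm's stopwords("english").
STOP_WORDS = {"a", "about", "am", "an", "and", "are", "i", "in", "is",
              "of", "on", "so", "the", "to"}

# Assumed tokenization rule: runs of lowercase letters, digits, and apostrophes.
TOKEN_PATTERN = re.compile(r"[a-z0-9']+")

def preprocess(text):
    """Normalize, tokenize, and remove stop words; no stemming or lemmatization."""
    lowered = text.lower()                               # normalization
    tokens = TOKEN_PATTERN.findall(lowered)              # tokenization (punctuation dropped)
    return [t for t in tokens if t not in STOP_WORDS]    # stop-word removal

print(preprocess("I am SO happy about the Election!"))  # ['happy', 'election']
```

Because no stemming is applied, "elections" and "election" remain distinct tokens, which keeps exact matches against the lexicon's full-word entries.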

5.6. Sentiment Analysis Approach

The sentiment analysis was conducted using the NRC Emotion Lexicon, a comprehensive list of English words and their associations with emotions and sentiments [4]. Developed through crowdsourcing, this lexicon allows for mapping words to specific emotional categories, facilitating nuanced analysis of textual data.

The NRC Emotion Lexicon is particularly relevant for capturing the emotional content of tweets, as it encompasses a wide range of emotions beyond simple positive or negative sentiments. By applying this lexicon, the analysis can detect subtle emotional cues and variations within the data, providing a more detailed understanding of public sentiment.

5.7. Advantages and Limitations

The lexicon-based approach offers several advantages:

- Simplicity and Efficiency: It is easy to implement and computationally less intensive, making it suitable for large datasets.

- No Training Data Required: Eliminates the need for annotated datasets, which can be costly and time-consuming to produce.

- Interpretability: Provides clear mappings between words and emotions, enhancing the interpretability of results.

However, there are limitations:

- Context Insensitivity: May not account for context, idioms, or colloquial expressions common in social media.

- Negation Handling: Struggles with phrases where negation alters sentiment (e.g., “not happy”).

- Sarcasm and Irony: Ineffective in detecting sarcasm or ironic statements, which can lead to misinterpretation.

Despite these limitations, the lexicon-based method is suitable for our analysis due to its scalability and the broad coverage of emotions, aligning with the study’s objectives.
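The negation limitation is easy to reproduce. In the sketch below, plain lexicon matching credits "not happy" with joy. The negation_aware_scores function is a hypothetical one-token-window heuristic shown only to illustrate one possible mitigation; it is not part of the study's pipeline, and LEXICON and NEGATORS are illustrative assumptions.

```python
# Illustrative mini-lexicon and negator list (assumptions, not NRC data).
LEXICON = {"happy": ("joy", "positive"), "sad": ("sadness", "negative")}
NEGATORS = {"not", "no", "never"}

def naive_scores(tokens):
    """Plain lexicon matching: every matched word counts, regardless of context."""
    scores = {}
    for token in tokens:
        for category in LEXICON.get(token, ()):
            scores[category] = scores.get(category, 0) + 1
    return scores

def negation_aware_scores(tokens, window=1):
    """Skip a lexicon word if any of the `window` preceding tokens is a negator."""
    scores = {}
    for i, token in enumerate(tokens):
        if any(prev in NEGATORS for prev in tokens[max(0, i - window):i]):
            continue  # negated word contributes nothing (a crude simplification)
        for category in LEXICON.get(token, ()):
            scores[category] = scores.get(category, 0) + 1
    return scores

print(naive_scores(["not", "happy"]))           # {'joy': 1, 'positive': 1}
print(negation_aware_scores(["not", "happy"]))  # {}
```

Even this heuristic is fragile: with a one-token window, "not very happy" is still scored as joy, which is why context-aware models are typically needed for robust negation handling.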

5.8. Integration with Machine Learning Framework

While the primary sentiment analysis technique employed is lexicon-based, the overall methodology aligns with the broader machine learning framework through the following aspects:

- Automated Feature Extraction: The processes of tokenization, normalization, and stop word removal automate the extraction of relevant features from textual data, a fundamental aspect of machine learning pipelines.

- Scalability and Efficiency: Managing over 3 million tweets requires an approach that is both scalable and efficient, characteristics inherent to many machine learning algorithms.

- Data-Driven Insights: Aggregating and normalizing sentiment scores across different states facilitates data-driven decision-making and insights, a core objective in machine learning applications.

- Potential for Future ML Integration: The structured sentiment scores and emotional categorizations can serve as input features for more complex supervised or unsupervised machine learning models, such as clustering or classification algorithms, enhancing the depth of analysis.

By situating the lexicon-based sentiment analysis within a machine learning framework, the study ensures that the methodology not only leverages established machine learning principles but also lays the groundwork for potential extensions involving more sophisticated techniques.

6. Cache Techniques Analysis

Caching plays a critical role in the efficiency and responsiveness of data processing, especially in environments that handle large volumes of real-time data, such as sentiment analysis on Twitter. In our analysis of over 3 million tweets, we needed a caching mechanism that could efficiently store and retrieve data, reducing the time and computational resources required to process repeated queries. Assuming a fixed cache budget (for example, 15 MB), we explore several caching techniques to identify the one that best fits our use case.

Caching is not the only performance optimization strategy, and each alternative has its own strengths and limitations. For instance, parallel processing [35] leverages multi-core architectures to distribute computational tasks across multiple processors, significantly speeding up data processing for large-scale datasets. While parallel processing excels at computationally intensive tasks, it requires substantial hardware resources and careful load balancing, unlike caching, which is more lightweight and focused on reducing redundant data access. Another strategy is indexing [36], commonly used in database systems to accelerate query performance by creating data structures that allow faster lookups. Indexing is particularly effective for read-heavy applications but incurs additional storage overhead and can slow down write operations. In contrast, caching does not alter the underlying data structure and is more flexible in handling dynamic queries.

The choice of caching strategy impacts the overall system performance, particularly in reducing latency during data retrieval. By evaluating different caching techniques, such as LRU, LFU, and Size-Based Eviction, we aimed to determine which method best aligns with the dynamic nature of our platform, where both repeated and unique queries occur frequently. Caching ensures that users experience minimal delays when interacting with the sentiment analysis system, allowing for real-time data analysis at scale.

In this section, we analyze five caching techniques: LRU (Least Recently Used) [37], LFU (Least Frequently Used) [37], Size-Based Eviction, MRU (Most Recently Used) [37], and FIFO (First-In-First-Out) [37]. The analysis considers their latency (total execution time), hit count, hit rate, miss count, and removal count to determine which technique best suits the requirements of our project.

Table 2 lists the search queries and date ranges used to evaluate the caching techniques. For each run, we iterate through all search terms with their respective dates and store the resulting files in the cache one by one; when the cache is full, we remove files until the next incoming file fits in the cache folder. This computation is similar to the one described in Section 4.

Table 2.

Search Queries with dates.
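The insert-and-evict procedure described above can be sketched as a small trace-driven simulator. This is a Python illustration under stated assumptions, not the project's R implementation: the class and function names are hypothetical, each request key stands for one search term plus date range, sizes are abstract units (for example, bytes), and Size-Based Eviction is assumed to mean "evict the largest cached file first", since the text does not define it precisely.

```python
from collections import OrderedDict

class CacheSimulator:
    """Size-bounded cache that records hits, misses, and removals for one policy."""

    POLICIES = ("lru", "lfu", "mru", "fifo", "size")

    def __init__(self, capacity, policy="lru"):
        if policy not in self.POLICIES:
            raise ValueError(f"unknown policy: {policy!r}")
        self.capacity = capacity      # total budget, e.g. in bytes
        self.policy = policy
        self.entries = OrderedDict()  # key -> size; insertion order, or recency for LRU/MRU
        self.freq = {}                # key -> access count (used by LFU)
        self.used = 0
        self.hits = self.misses = self.removals = 0

    def _victim(self):
        """Pick the key to evict according to the configured policy."""
        if self.policy in ("lru", "fifo"):
            return next(iter(self.entries))                      # least recent / first inserted
        if self.policy == "mru":
            return next(reversed(self.entries))                  # most recently used or inserted
        if self.policy == "lfu":
            return min(self.entries, key=self.freq.__getitem__)  # ties: oldest entry
        return max(self.entries, key=self.entries.__getitem__)   # "size": largest file

    def request(self, key, size):
        """Serve one query result; returns True on a cache hit."""
        if key in self.entries:
            self.hits += 1
            self.freq[key] += 1
            if self.policy in ("lru", "mru"):
                self.entries.move_to_end(key)    # refresh recency
            return True
        self.misses += 1
        if size > self.capacity:
            return False                         # can never fit; do not cache
        while self.used + size > self.capacity:  # evict until the new file fits
            victim = self._victim()
            self.used -= self.entries.pop(victim)
            del self.freq[victim]
            self.removals += 1
        self.entries[key] = size
        self.freq[key] = 1
        self.used += size
        return False

    @property
    def hit_rate(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0

def run(policy, trace, capacity):
    """Replay (key, size) requests and return (hits, misses, removals)."""
    cache = CacheSimulator(capacity, policy)
    for key, size in trace:
        cache.request(key, size)
    return cache.hits, cache.misses, cache.removals
```

Replaying the same query trace through run() for each policy and several capacities yields hit, miss, and removal counts of the kind compared in the metrics below; latency would additionally require timing the real file operations.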

6.1. Cache Metrics Explanation

To assess the effectiveness of the cache techniques, we used five key performance metrics: hit count, latency, hit rate, miss count, and removal count. Each metric offers insight into the performance and efficiency of a caching strategy, especially when processing large datasets such as the over 3 million tweets in our sentiment analysis project.

6.1.1. Hit Count

Hit count is the total number of times data was successfully retrieved from the cache, avoiding time-consuming data processing. A higher hit count indicates that the cache is efficiently storing and serving frequently accessed data. As shown in Figure 9, the Size-Based Eviction and Least Recently Used (LRU) techniques achieve the highest hit counts, suggesting that these methods are well suited to environments with repeated queries.

Figure 9.

Impact of Hit Count on varying cache sizes.

6.1.2. Latency

Latency is the total time, in seconds, taken to process the entire list of search queries, including removing and inserting files in the cache folder. We measured latency across the five cache techniques and found that Size-Based Eviction performs best: as shown in Figure 10, it takes the least time to access all the required files and to remove and insert new files in the cache folder.

Figure 10.

Total Execution time vs. Cache Memory Size.

6.1.3. Hit Rate

Hit rate is the proportion of requests served from the cache relative to the total number of requests made; it reflects how efficiently the cache serves relevant data. Figure 11 shows that Size-Based Eviction again achieves the highest hit rate, followed closely by LRU. A high hit rate is crucial for fast response times in sentiment analysis applications, where real-time data access is essential.

Figure 11.

Impact of Hit Rate on varying cache sizes.
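For reference, if $H$ denotes the hit count and $M$ the miss count over one pass through the query list, every request is either a hit or a miss, so the hit rate and the complementary miss rate are:

$$
\text{hit rate} = \frac{H}{H + M}, \qquad \text{miss rate} = \frac{M}{H + M} = 1 - \text{hit rate}.
$$

For example, a hypothetical run with $H = 30$ hits and $M = 10$ misses has a hit rate of $30/40 = 0.75$ and a miss rate of $0.25$.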

6.1.4. Miss Count

Miss count measures how often the requested data is not found in the cache, requiring the system to fetch and process the data from the original source. A lower miss count is preferable, as it implies that the cache successfully retains frequently accessed data. Figure 12 shows that Size-Based Eviction has the lowest miss count, indicating fewer cache misses and better overall performance in this scenario.

Figure 12.

Impact of Miss Count on varying cache sizes.

6.1.5. Removal Count

The removal count tracks the number of times data was removed from the cache to make room for new data. This is especially important when dealing with limited cache size, as frequent removal can degrade performance by evicting potentially useful data. In Figure 13, we observe that Size-Based Eviction and LRU have lower removal counts compared with other methods, indicating that they manage cache space more efficiently by retaining frequently used data longer.

Figure 13.

Impact of Removal Count on varying cache sizes.

By breaking down these metrics, we can see that Size-Based Eviction consistently performs best across most of these categories, followed by LRU. These metrics allow us to make informed decisions about which caching techniques are most efficient for our specific needs, particularly in handling large datasets and real-time sentiment analysis queries.

6.2. Discussion

Best Choice: Size-Based Eviction emerges as the most effective caching technique for this project. It consistently outperforms the other techniques in terms of time efficiency, hit rate, and removal count. This method is ideal when cache space is limited and smaller items are more frequently reused, making it well suited to large-scale data processing and sentiment analysis applications.

Runner-up: LRU is a close second, performing well across multiple metrics, particularly in large cache environments. Its consistency in hit rate and reduced removal count makes it a strong candidate when frequently reused data needs to be retained.

Consideration for Simplicity: FIFO offers a simpler, efficient solution when ease of implementation is important. While it does not perform as well as other algorithms, it is suitable for simpler applications where time complexity is less critical.

Not Recommended: MRU and LFU have slower time performance and lower hit rates compared with other techniques. They may be less suitable for high-performance environments like this project, where speed and accuracy in data retrieval are crucial.

6.2.1. Impact of Search Queries on Cache Analysis

Repeated keywords like “voting”, “elon”, and “president” help certain caching strategies perform better. LRU benefits from frequent reuse of these terms, keeping them in cache longer, leading to improved hit rates. LFU also performs well, as it tracks frequency, though with slightly higher overhead. MRU struggles with repeated terms since it evicts recently used items, leading to more cache misses. Size-Based Eviction handles repeated terms effectively due to the small size of search terms, while FIFO doesn’t benefit much, evicting data regardless of reuse.

6.2.2. Effect of Date Ranges on Cache Efficiency

Overlapping date ranges (e.g., 2–6 and 5–10) improve the performance of LRU and LFU, as previously cached data for earlier dates is reused efficiently. MRU performs worse since it evicts recently used data, leading to more misses. Size-Based Eviction remains effective regardless of date range overlap due to its focus on data size rather than recency. FIFO performs poorly with overlapping ranges, as older cached data is evicted without consideration for future reuse.

6.2.3. Analysis of Unique Search Terms

Unique terms like “boat” and “war” result in more cache misses across all strategies, but LRU and LFU perform better when these terms are accessed multiple times. MRU quickly evicts unique terms, making it less effective for one-off queries. Size-Based Eviction handles unique terms well due to their small size, while FIFO evicts them quickly, resulting in higher miss rates for less frequent terms.

6.3. Latency with and Without Cache

Caching is a fundamental technique used to improve the performance of data retrieval operations in web applications. In our sentiment analysis platform, we implemented a caching mechanism to store preprocessed tweet data associated with specific search terms and date ranges. This section examines the impact of the caching mechanism on the application’s latency, measured as the time taken to process a user’s search query and display the results.

We conducted an experiment over four weeks using the search terms “elon”, “musk”, and “twitter”, which were highly popular during that period and therefore represent a substantial volume of data. For each week, we measured the latency with and without the caching mechanism in place. The results are presented in Figure 14.

Figure 14.

Impact of cache on latency over four weeks for search terms “elon”, “musk”, and “twitter”.

As illustrated in the figure, the latency without caching is significantly higher compared with when caching is utilized. Specifically, the average latency without cache ranged from approximately 127.46 s to 257.41 s, whereas with cache, the latency was drastically reduced, ranging from approximately 2.38 s to 4.07 s.

The caching mechanism reduces latency by eliminating the need to re-process and re-analyze tweet data for queries that have been previously executed. When a user submits a search query, the system first checks if the results for the given search terms and date range are already available in the cache. If they are, the system retrieves the preprocessed data, bypassing the time-consuming steps of data cleaning, filtering, and sentiment analysis. This leads to a substantial improvement in response time, enhancing the user experience.
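The check-then-compute flow can be sketched as below. The Python code is an illustration under assumptions: results are stored as one JSON file per query, the file name is a hash of the search terms and date range, terms are lowercased and sorted so that word order does not matter (a hypothetical design choice), and `compute` stands in for the cleaning, filtering, and sentiment-scoring pipeline. Eviction is omitted; it would be handled by one of the policies compared in this section.

```python
import hashlib
import json
import os
import tempfile

# Hypothetical cache location; the platform's real folder layout is not specified here.
DEFAULT_CACHE_DIR = os.path.join(tempfile.gettempdir(), "sentiment_cache_demo")

def cache_key(terms, start_date, end_date):
    """Stable key for a query; terms are lowercased and sorted (an assumed design choice)."""
    query = {"terms": sorted(t.lower() for t in terms),
             "start": start_date, "end": end_date}
    return hashlib.sha256(json.dumps(query, sort_keys=True).encode("utf-8")).hexdigest()

def get_results(terms, start_date, end_date, compute, cache_dir=DEFAULT_CACHE_DIR):
    """Return (results, was_cache_hit), running `compute` only on a cache miss."""
    os.makedirs(cache_dir, exist_ok=True)
    path = os.path.join(cache_dir, cache_key(terms, start_date, end_date) + ".json")
    if os.path.exists(path):                         # hit: skip cleaning, filtering, scoring
        with open(path, encoding="utf-8") as f:
            return json.load(f), True
    results = compute(terms, start_date, end_date)   # miss: run the full pipeline
    with open(path, "w", encoding="utf-8") as f:
        json.dump(results, f)
    return results, False
```

A repeated query with the same terms and dates then costs only a file read, while any change to the date range produces a new key and triggers a fresh computation.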

The observed variations in latency across the weeks can be attributed to fluctuations in the volume of tweets associated with the search terms during those periods. In Week 4, for instance, there was a spike in latency without cache, reaching up to 257.41 s. This increase correlates with heightened Twitter activity surrounding events related to Elon Musk’s acquisition of Twitter, resulting in a larger dataset to process.

Overall, the implementation of caching in our sentiment analysis platform proved to be highly effective in reducing latency. By leveraging cache storage, we achieved an average reduction in latency of over 98 percent, significantly improving the application’s performance and scalability.

6.4. Sentiment Scores for Different Emotions

To analyze the temporal dynamics of public sentiment related to specific topics, we examined the cumulative sentiment scores over four consecutive weeks for the search terms “elon”, “musk”, and “twitter”. These terms were selected due to their high relevance and the significant public discourse surrounding Elon Musk’s acquisition of Twitter during the study period.

Figure 15 displays the normalized cumulative sentiment scores for six key emotions: anger, anticipation, fear, joy, negative, and positive. The scores are normalized by the total number of tweets each week to account for variations in tweet volumes, enabling a consistent comparison over time.

Figure 15.

Shifting of sentiment scores on different emotions over time.
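The normalization step can be sketched as: sum each emotion's per-tweet counts within a week, then divide by that week's tweet count. This Python sketch assumes per-tweet score dictionaries like those produced by a lexicon scorer; the function name and input shape are hypothetical. Because one tweet can match several lexicon words, a normalized score is an average count per tweet and is not bounded by 1.

```python
from collections import defaultdict

EMOTIONS = ("anger", "anticipation", "fear", "joy", "negative", "positive")

def normalized_weekly_scores(rows):
    """rows: iterable of (week, {emotion: count}) pairs, one pair per tweet.

    Returns {week: {emotion: cumulative count / number of tweets that week}}.
    """
    totals = defaultdict(lambda: dict.fromkeys(EMOTIONS, 0))
    tweet_counts = defaultdict(int)
    for week, scores in rows:
        tweet_counts[week] += 1
        for emotion in EMOTIONS:
            totals[week][emotion] += scores.get(emotion, 0)
    return {week: {e: totals[week][e] / tweet_counts[week] for e in EMOTIONS}
            for week in sorted(tweet_counts)}

# Toy input: two tweets in week 1, one tweet in week 2.
rows = [(1, {"joy": 1, "positive": 2}), (1, {"anger": 1}), (2, {"joy": 1})]
weekly = normalized_weekly_scores(rows)
```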

From the figure, we observe that the positive sentiment consistently scores higher than the negative sentiment across all four weeks, although both exhibit fluctuations. The positive sentiment peaks in Week 1 at approximately 0.67 and slightly decreases over the following weeks, stabilizing around 0.64. The negative sentiment shows a slight increase from Week 1 to Week 3, peaking at approximately 0.47, before dropping to 0.40 in Week 4.

The emotions of anticipation and joy are prominent among the specific emotions analyzed. Anticipation starts at approximately 0.36 in Week 1, dips slightly in Week 2 and Week 4, and remains relatively stable throughout the period. This suggests a sustained level of eagerness or expectation among users regarding developments related to Elon Musk and Twitter.

Joy maintains a steady level around 0.28 in the first three weeks but declines to approximately 0.23 in Week 4. This decrease may reflect a reduction in positive feelings as the initial excitement waned or in response to unfolding events that tempered users’ enthusiasm.

The anger and fear emotions display interesting patterns. Anger increases from approximately 0.20 in Week 1 to 0.24 in Week 3, indicating growing frustration or discontent among users during that period. It then decreases to 0.19 in Week 4. Similarly, fear peaks in Week 3 at approximately 0.26, suggesting heightened concern or anxiety, before declining in Week 4.

These peaks in anger and fear during Week 3 coincide with significant events or announcements related to Twitter’s management changes, which may have caused unease among users. The subsequent decrease in these emotions in Week 4 could indicate a settling of initial reactions or adjustments to the new developments.

Overall, the sentiment curves indicate that while positive emotions remain dominant, there is a noticeable interplay between various emotions over time, reflecting the complex nature of public reactions to major events in the tech industry.

By analyzing these sentiment trends, stakeholders such as social media platforms, public relations teams, and policymakers can better comprehend the evolving emotional pulse of the public. This understanding enables them to tailor communication strategies, address public concerns proactively, and foster positive engagement.

6.5. Summary

The structure of our search queries, which include repeated terms and overlapping date ranges, plays a crucial role in determining the effectiveness of different caching techniques:

- Best Performers: Size-Based Eviction and LRU emerge as the best caching strategies for this project. Both techniques handle repeated queries and overlapping date ranges efficiently, leading to higher hit rates and lower cache misses. Size-Based Eviction, in particular, is highly efficient due to the small size of search terms.

- Worst Performers: MRU and FIFO are less efficient for repeated queries, overlapping dates, and unique search terms. These techniques lead to higher miss rates and don’t leverage the frequency or recency of the data effectively.

For our project, Size-Based Eviction and LRU would be optimal choices, particularly when handling large volumes of data that involve repeated keywords and overlapping date ranges.

7. Results

Our sentiment analysis applied to a dataset comprising over 3 million tweets focused on categorizing the emotions expressed across the United States on key societal issues during October 2022. The results provided insights into public sentiment by examining emotions such as anger, joy, trust, fear, and sadness. These patterns were visualized using a state-wise sentiment map, offering a deeper understanding of how emotional responses vary regionally.

7.1. Emotional Breakdown and State-Wise Sentiment

The sentiment analysis revealed that joy and trust were the predominant emotions in tweets related to topics such as education and local governance, particularly in states such as California, New York, and Florida. On the other hand, anger and fear were strongly associated with politically charged terms such as “election” and “president”, with states like Texas, Georgia, and Pennsylvania showing higher levels of negative sentiment.

Interestingly, the emotional responses varied depending on both the time frame and the topic. For example, during the midterm elections, the emotion of trust saw a significant rise, especially in politically active states, reflecting increased public confidence in the electoral process. Conversely, fear and sadness dominated tweets related to economic uncertainty and public health issues in the days preceding major political announcements.

7.2. Dominant Emotion Mapping

A key feature of our analysis was the dominant emotion mapping across U.S. states. This visualization identified the most prevalent emotion in each region based on the discussed topics. For example, joy was dominant in states where conversations focused on community and social initiatives, whereas anger was more prevalent in states with heightened political debate.

These sentiment trends offer insight not only into the emotional climate but also into the topics driving discourse in different regions. For instance, election-related tweets evoked a broader spectrum of emotions, with anger and trust alternating as dominant emotions based on state and time frame.
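A minimal sketch of dominant-emotion mapping: aggregate per-tweet emotion counts by state and pick the emotion with the largest total. This is Python, not the platform's R code; the input shape, the exclusion of the positive/negative sentiments, and the tie-breaking rule are assumptions, and the example tweets and states are made up.

```python
# The eight NRC emotions; the positive/negative sentiments are excluded (an assumption).
EIGHT_EMOTIONS = ("anger", "anticipation", "disgust", "fear",
                  "joy", "sadness", "surprise", "trust")

def dominant_emotions(rows):
    """rows: iterable of (state, {emotion: count}) pairs, one pair per tweet.

    Returns {state: emotion with the largest cumulative score}. Dividing by the
    state's tweet count would not change the winner, so raw sums suffice here.
    """
    totals = {}
    for state, scores in rows:
        acc = totals.setdefault(state, dict.fromkeys(EIGHT_EMOTIONS, 0))
        for emotion in EIGHT_EMOTIONS:
            acc[emotion] += scores.get(emotion, 0)
    # max() keeps the first maximum, so ties resolve in EIGHT_EMOTIONS order.
    return {state: max(EIGHT_EMOTIONS, key=acc.__getitem__)
            for state, acc in totals.items()}

rows = [("TX", {"anger": 2, "fear": 1}), ("TX", {"trust": 1}),
        ("CA", {"joy": 1, "trust": 1})]
print(dominant_emotions(rows))  # {'TX': 'anger', 'CA': 'joy'}
```

The resulting state-to-emotion mapping is what a choropleth layer would color; the arbitrary tie-breaking rule is worth revisiting when two emotions are nearly equal in a state.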

7.3. Sentiment Trends over Time

By segmenting the sentiment analysis by date, notable fluctuations in public sentiment emerged over different periods. During significant political events, such as election debates or policy announcements, negative emotions like anger and fear surged, followed by periods of joy or trust, particularly when positive resolutions were achieved.

Tweets concerning major news events like the economy or pandemic restrictions exhibited consistent levels of sadness and fear, signaling that these concerns remained persistent over time. Conversely, tweets about social topics, such as sports and entertainment, maintained higher levels of joy and trust, indicating a more positive tone in those discussions.

7.4. Keyword-Specific Sentiment

In analyzing specific keywords, such as “election”, “president”, and “voting”, we found that these terms triggered strong emotional responses. The term “election” predominantly elicited trust, particularly in politically stable regions, but also saw pockets of anger and fear in swing states. Keywords related to economic issues, like “inflation” and “jobs”, were mostly associated with fear and sadness, reflecting widespread public concern.

By contrast, non-political terms such as “community” and “sports” evoked overwhelmingly positive sentiments, with joy and trust being the dominant emotions. This suggests that non-contentious, social topics generally elicit a more positive emotional response, regardless of the region.

7.5. Scalability and Limitations

The Generalized Sentiment Analytics Framework (GSAF) demonstrates strong scalability and real-time capabilities but faces certain limitations that warrant discussion.

7.5.1. Scalability

The framework efficiently processes large datasets, as shown by its application to over 3 million tweets. Caching mechanisms, such as Size-Based Eviction, reduce computational overhead by minimizing redundant processing, enabling efficient handling of repeated queries. The modular architecture allows for the integration of additional data sources, while parallel processing ensures low latency for real-time applications. However, as datasets grow, storage requirements for caching become a bottleneck, and unique queries may experience higher latency due to real-time processing demands. Optimizing preprocessing pipelines for such cases remains a future challenge.

7.5.2. Limitations

The framework’s lexicon-based approach struggles with contextual nuances, such as sarcasm and irony, leading to potential misclassifications. For example, a sarcastic tweet like “Great job, another delay!” might be labeled as positive. Additionally, the NRC Emotion Lexicon is limited to English, restricting its applicability to non-English tweets and overlooking cultural variations in emotional expression. Twitter data also introduces demographic bias, as it over-represents younger, tech-savvy users, skewing sentiment results for topics with uneven engagement across demographics.

Temporal sensitivity is another issue, as rapid sentiment fluctuations during breaking news events may not reflect sustained public opinion. The broad emotion categories in the NRC Lexicon (e.g., joy, anger) fail to capture subtle emotional variations, such as distinguishing between “anticipation” and “excitement”. Furthermore, state-level sentiment mapping may mask localized variations within states, particularly in large or diverse regions.

8. Applications of Findings in Real-World Scenarios

The results of our sentiment analysis have significant real-world applications across various sectors, such as policymaking, marketing, and public health campaigns. By understanding the emotional tone of public discourse, stakeholders can make informed decisions that better reflect the public’s feelings, concerns, and expectations.

8.1. Policymaking

Governments and policymakers can use sentiment analysis to gauge public reactions to new policies, political debates, or social issues. For example, during election periods, monitoring emotions such as trust or anger can provide insights into voter confidence or dissatisfaction with current political leaders. In our analysis, states showing increased trust during elections could indicate voter confidence in the electoral process. Policymakers can use these insights to adjust communication strategies, respond to public concerns more proactively, and tailor their campaigns accordingly.

8.2. Marketing and Business Strategy

For companies, understanding public sentiment is crucial for brand reputation management, customer engagement, and product development. Analyzing consumer sentiment towards products or services can inform more effective marketing campaigns. Our findings show that terms related to community and entertainment evoked positive emotions such as joy and trust, indicating that emotionally uplifting content may foster stronger brand associations. Additionally, companies can detect negative sentiment spikes related to economic issues like “inflation” and adjust their marketing messages to better align with the public mood.

8.3. Public Health Campaigns

Public sentiment surrounding health issues, particularly during crises such as the COVID-19 pandemic, can guide public health communication strategies. Heightened emotions of fear and sadness in health-related discourse suggest that campaigns should focus on reassuring the public and providing clear, actionable advice. By tracking real-time sentiment, public health officials can monitor reactions to new health guidelines or vaccines, allowing for more targeted and effective communication to build trust and reduce fear.

8.4. Value for Stakeholders

The insights gained from understanding public sentiment hold great value for different stakeholders:

- Government officials can monitor public sentiment to adjust policies and communication strategies based on real-time feedback.

- Companies and marketers can optimize their marketing strategies, tailor customer engagement, and anticipate potential issues based on consumer sentiment.

- Researchers and social scientists can use sentiment data to study social behaviors, trends, and emotional responses to various societal events, helping to predict future shifts in public opinion.

While Section 7 and Section 8 provide a high-level overview of our sentiment analysis results, a more detailed Exploratory Data Analysis (EDA) is presented in Appendix A. This EDA examines the relationship between tweet length and emotion (Figure A1 and Figure A2), the correlation among different emotional states (Figure A3 and Figure A4), and specific weekly trends observed during Elon Musk’s takeover of Twitter (Figure A5 and Figure A6). These supplementary visualizations offer deeper insights into the nuances of tweet structure, emotional co-occurrence, and geographic sentiment shifts throughout high-impact events.

9. Conclusions and Future Work

The sentiment analysis of over 3 million tweets using our developed interactive platform provided a valuable glimpse into the emotional landscape of the United States during a socially and politically significant period. Emotions such as trust, anger, joy, and fear fluctuated depending on the topic and location, offering a nuanced understanding of public sentiment.

The developed interactive sentiment mapping tool allowed users to filter emotions and time frames, revealing how public sentiment evolves in response to ongoing societal and political events. The findings underscore the importance of sentiment analysis in gauging public emotions and understanding the underlying drivers of discourse on key societal issues. Additionally, the extensive result analysis shows that the interactive platform with the Size-Based Eviction caching technique performs best in reducing overall computation latency when retrieving the sentiment results for a given topic.

For future work, the main goal is to host this sentiment analysis platform as a live, interactive Software as a Service (SaaS), allowing external users to access and explore public sentiment data in real time. By offering the platform as a SaaS, it will provide a scalable solution for researchers, policymakers, businesses, and marketers to gain insights from social media sentiment. The platform will feature subscription-based pricing models, generating revenue while delivering valuable tools for analyzing public discourse, filtering emotional responses by state, topic, and time, and visualizing sentiment trends dynamically.

Author Contributions

Conceptualization, A.M.K.M., G.G.M.N.A. and S.S.K.; Methodology, A.M.K.M. and G.G.M.N.A.; Software, A.M.K.M.; Validation, A.M.K.M. and G.G.M.N.A.; Formal analysis, G.G.M.N.A. and S.S.K.; Investigation, G.G.M.N.A. and S.S.K.; Writing—original draft, A.M.K.M., G.G.M.N.A. and S.S.K.; Writing—review & editing, G.G.M.N.A. and S.S.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Bradley University Student Engagement Award (SEA) grant number SEA #1331464.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are openly available in Sentiment-Analysis-Web-Application https://github.com/Khanisic/Sentiment-Analysis-Web-Application (accessed on 24 January 2025).

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
| --- | --- |
| LRU | Least Recently Used |
| LFU | Least Frequently Used |
| MRU | Most Recently Used |
| FIFO | First-In-First-Out |
| GSAF | Generalized Sentiment Analytics Framework |
| NLP | Natural Language Processing |
| SaaS | Software as a Service |

Appendix A. Exploratory Data Analysis

This appendix analyzes tweet length in relation to emotions, as well as correlations between different emotions. Lastly, we present a narrative of the events that transpired during Elon Musk’s takeover of Twitter (X) [38], using our framework’s ability to generate emotion-specific maps.

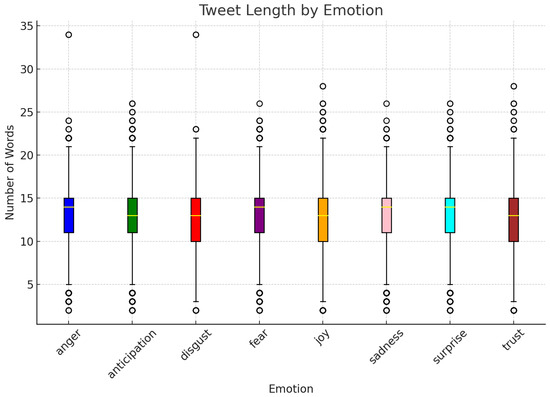

Appendix A.1. Tweet Length vs. Emotion

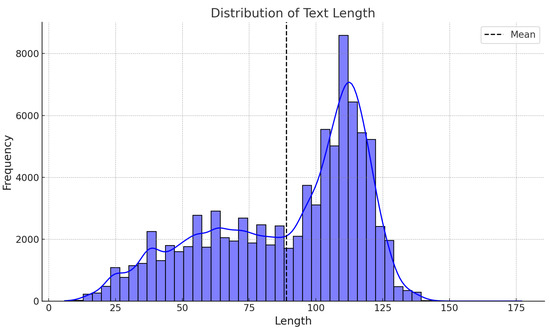

The relationship between tweet length and emotion was analyzed using boxplots and distributions. As seen in Figure A1, tweet lengths vary across emotions. While certain emotions like anticipation and trust are associated with relatively longer tweets, emotions such as sadness and fear tend to exhibit shorter average tweet lengths.

Figure A1. Tweet length vs. emotion.

The distribution of tweet length, shown in Figure A2, peaks around the mean length (dashed line). Most tweets therefore fall within a fairly narrow range of lengths, possibly reflecting typical tweet composition styles across emotions.

Figure A2. Frequency distribution of tweet length.
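Figures A1 and A2 summarize tweet length per emotion and overall. A minimal sketch of that summary follows. It assumes that each tweet has already been assigned a single dominant emotion (for example, the NRC emotion with the highest count, which is an assumption rather than the documented rule) and that length is measured in characters. The framework itself is written in R, and the function names here are hypothetical.

```python
from collections import defaultdict
from statistics import mean, median


def length_by_emotion(tweets):
    """Summarize tweet length (in characters) for each dominant emotion.

    `tweets` is an iterable of (text, emotion) pairs; this input layout is a
    hypothetical stand-in for the framework's cleaned tweet table.
    """
    groups = defaultdict(list)
    for text, emotion in tweets:
        groups[emotion].append(len(text))
    # Count, mean, and median per emotion: the median is the line drawn inside
    # a boxplot, and the mean allows comparison with the overall average.
    return {
        emotion: {"n": len(lengths), "mean": mean(lengths), "median": median(lengths)}
        for emotion, lengths in groups.items()
    }


def overall_mean_length(tweets):
    """Mean length across all tweets, i.e. the dashed reference line in a histogram."""
    return mean(len(text) for text, _ in tweets)


if __name__ == "__main__":
    sample = [
        ("Can't wait for tomorrow's launch, it is going to be great", "anticipation"),
        ("I'm scared", "fear"),
        ("This is horrible!", "sadness"),
    ]
    for emotion, stats in length_by_emotion(sample).items():
        print(emotion, stats)
    print("overall mean length:", overall_mean_length(sample))
```

Reporting the median alongside the mean matters here because tweet lengths are bounded and often skewed, so a boxplot's center line and the histogram's mean line need not coincide.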

Appendix A.2. Correlation of Emotions

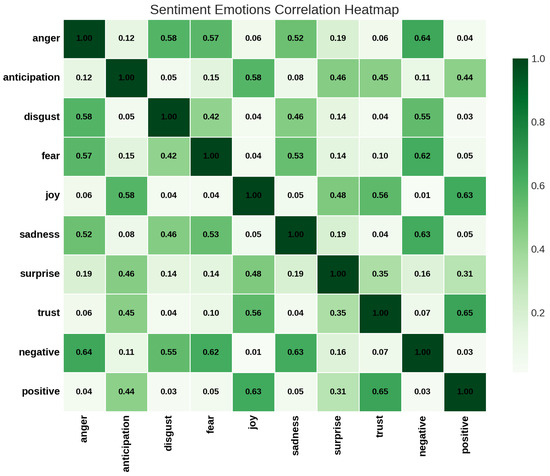

The heatmap in Figure A3 illustrates the correlations between different emotions. Key observations include:

- Strong positive correlations among negative emotions such as anger, fear, and sadness.

- A strong positive correlation between the positive emotions joy and trust, reflecting their co-occurrence in optimistic tweets.
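Each cell of the heatmap is a correlation between two per-tweet emotion scores. The following minimal sketch assumes Pearson correlation over per-tweet NRC emotion scores (the coefficient is not stated here) and a hypothetical `{emotion: [scores]}` input. The framework's own R code may compute this differently.

```python
from itertools import combinations
from math import sqrt


def pearson(x, y):
    """Pearson correlation of two equal-length numeric sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        return float("nan")  # undefined when a column is constant
    return cov / (sx * sy)


def emotion_correlation_matrix(scores):
    """Build a symmetric emotion-by-emotion correlation matrix.

    scores: {emotion: [per-tweet score, ...]} with equal-length lists,
    where position i in every list refers to the same tweet.
    """
    emotions = list(scores)
    # Diagonal set to 1.0 (each score is perfectly correlated with itself).
    matrix = {e: {e: 1.0} for e in emotions}
    for a, b in combinations(emotions, 2):
        r = pearson(scores[a], scores[b])
        matrix[a][b] = matrix[b][a] = r  # correlation is symmetric
    return matrix
```

A near-zero off-diagonal value, as reported below for positive versus negative emotions, means the two scores vary largely independently across tweets.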

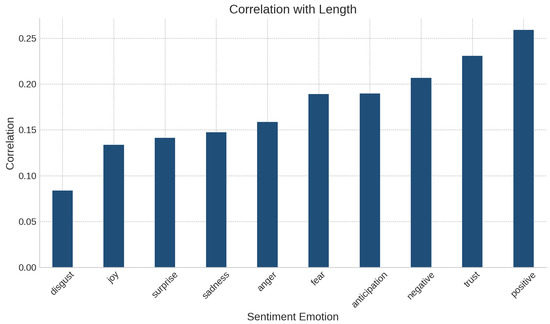

The correlation of each emotion with tweet length, shown in Figure A4, indicates that positive emotions tend to be more strongly associated with longer tweets, while emotions such as disgust show a weaker correlation. This pattern suggests that tweet structure varies with the underlying emotional content.

Figure A3. Heatmap of correlations between emotions.

Figure A4. Correlation of emotions with tweet length.
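Figure A4 reduces the analysis to one correlation per emotion, computed against tweet length. The following minimal sketch assumes Pearson correlation, tweet length in characters, and a hypothetical input layout (the framework itself is implemented in R). It ranks emotions from the most positive to the least positive correlation.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation of two equal-length numeric sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / (sx * sy)


def emotion_length_correlations(lengths, scores):
    """Correlate each emotion's per-tweet score with tweet length.

    lengths: tweet lengths in characters, one per tweet.
    scores:  {emotion: [per-tweet score, ...]}, aligned with `lengths`.
    Returns (emotion, r) pairs sorted from most positive to least positive r.
    """
    pairs = [(emotion, pearson(values, lengths)) for emotion, values in scores.items()]
    return sorted(pairs, key=lambda pair: pair[1], reverse=True)
```

Because raw emotion counts tend to grow with the number of words, part of any positive correlation with length may be mechanical. Normalizing scores by word count before correlating is one way to check this.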

Appendix A.3. Insights from Correlations

The correlations revealed by the heatmap and other analyses provide several key insights into the relationships between emotions, their intensities, and their association with tweet length:

- Strong correlation among negative emotions:

  - Anger, fear, and sadness: These emotions show a strong positive correlation because they often co-occur in tweets expressing distress, frustration, or negativity. For instance, tweets about tragic events may evoke both sadness and fear simultaneously.

  - Disgust and anger: Disgust is highly correlated with anger, reflecting the overlap in contexts where individuals express moral outrage or aversion.

- Positive emotion interconnections:

  - Joy and trust: A strong positive correlation exists between these emotions, likely because both are associated with optimistic or hopeful tweets. For example, joyful tweets often convey trust in others or in a situation, such as during celebrations or positive announcements.

- Emotion intensities and polarization:

  - The correlation between negative and positive emotions is very low (near zero), highlighting the distinct contexts in which these emotions occur. This may indicate that tweets tend to be polarized, expressing either strong negativity or strong positivity rather than a mix of both.

- Tweet length and positive emotions:

  - Positive emotions, such as joy and trust, show a higher correlation with tweet length. This may be because positive sentiments often appear in descriptive, celebratory, or explanatory tweets, which require more words to articulate.

- Negative emotions and conciseness:

  - Negative emotions such as anger, disgust, and fear have weaker correlations with tweet length, suggesting that these sentiments are often expressed more concisely. For example, short and sharp expressions like “This is horrible!” or “I’m scared” might dominate such tweets.

- Anticipation and surprise:

  - Moderate correlations between anticipation and other emotions, such as trust and joy, suggest that anticipation often accompanies optimism.

  - Similarly, surprise correlates moderately with both positive and negative emotions, indicating its versatility across contexts (e.g., “I’m shocked!” can be used positively or negatively).

- General trend between emotions and length:

  - Emotions that require detailed context or explanation (e.g., joy, trust) tend to have higher correlations with length, whereas emotions that are reactive or instinctive (e.g., anger, disgust) often result in shorter tweets.

These insights highlight how emotions are interconnected and how their expression in tweets is shaped by both emotional context and the constraints of the medium. They also emphasize the distinction between positive and negative emotional content in social media communication.

Appendix A.4. Weekly Trends of Emotions

In this subsection, we analyze weekly trends in three emotions (anticipation, disgust, and fear) in tweets containing the keywords “elon”, “musk”, and “twitter” during Elon Musk’s takeover of Twitter (now known as X), using our framework’s emotion-specific maps. These maps show the geographical distribution and intensity of each emotion across the United States during the event.

- Disgust: As shown in Figure A6, the emotion of disgust was highly prevalent in certain regions, likely reflecting strong negative reactions to specific policy changes or controversial announcements made during the transition.

- Fear: The maps in Figure A7 reveal that fear was more geographically widespread, with notable spikes in areas where users expressed uncertainty about the future of the platform or their personal data security.

- Anticipation: Finally, Figure A5 illustrates regions with heightened anticipation, where users expressed eagerness for future updates or changes to the platform under Musk’s leadership.

Figure A5. Weekly sentiment trend of the emotion anticipation for the words “elon”, “musk”, and “twitter”.

Figure A6. Weekly sentiment trend of the emotion disgust for the words “elon”, “musk”, and “twitter”.

Figure A7. Weekly sentiment trend of the emotion fear for the words “elon”, “musk”, and “twitter”.

Through these emotion-specific maps, we can observe how public sentiment varied geographically and temporally, providing a nuanced understanding of the emotional landscape during this significant event.
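The weekly maps aggregate emotion scores by state and week for tweets matching the search keywords. The following minimal sketch illustrates that aggregation under several stated assumptions. Each cleaned tweet record carries a date, a U.S. state code, its text, and per-emotion scores (the field names are hypothetical). A tweet matches if any keyword appears as a whole word, case-insensitively. Weeks are ISO weeks, and each state-week cell is the mean score. The framework's actual R implementation and matching rules may differ.

```python
import re
from collections import defaultdict
from datetime import date


def weekly_state_emotion(tweets, keywords, emotion):
    """Mean score of `emotion` per (ISO year, ISO week, state) for matching tweets.

    tweets: iterable of dicts with hypothetical keys
            'date' (datetime.date), 'state' (e.g. 'IL'), 'text', 'scores'.
    """
    # Whole-word, case-insensitive keyword match; note that a hashtag such as
    # "#elonmusk" would NOT match "elon" under this assumed rule.
    pattern = re.compile(r"\b(" + "|".join(map(re.escape, keywords)) + r")\b", re.IGNORECASE)
    sums, counts = defaultdict(float), defaultdict(int)
    for t in tweets:
        if not pattern.search(t["text"]):
            continue  # keep only tweets that mention a search keyword
        iso_year, iso_week, _ = t["date"].isocalendar()
        key = (iso_year, iso_week, t["state"])
        sums[key] += t["scores"].get(emotion, 0)
        counts[key] += 1
    # One value per map cell: the mean emotion score for that state and week.
    return {key: sums[key] / counts[key] for key in sums}


if __name__ == "__main__":
    sample = [
        {"date": date(2022, 10, 27), "state": "IL", "text": "Elon bought Twitter", "scores": {"fear": 2}},
        {"date": date(2022, 10, 28), "state": "IL", "text": "musk again", "scores": {"fear": 0}},
        {"date": date(2022, 10, 28), "state": "CA", "text": "nice weather", "scores": {"fear": 5}},
        {"date": date(2022, 11, 1), "state": "CA", "text": "twitter is changing", "scores": {"fear": 3}},
    ]
    print(weekly_state_emotion(sample, ["elon", "musk", "twitter"], "fear"))
```

Averaging per tweet, rather than summing, keeps states with many tweets from dominating the color scale. Summing would instead emphasize volume.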

References

1. Zimbra, D.; Abbasi, A.; Zeng, D.; Chen, H. The State-of-the-Art in Twitter Sentiment Analysis: A Review and Benchmark Evaluation. ACM Trans. Manag. Inf. Syst. 2018, 9, 5.

2. Giachanou, A.; Crestani, F. Like It or Not: A Survey of Twitter Sentiment Analysis Methods. ACM Comput. Surv. 2016, 49, 28.

3. Samuel, J.; Ali, G.G.M.N.; Rahman, M.M.; Esawi, E.; Samuel, Y. COVID-19 Public Sentiment Insights and Machine Learning for Tweets Classification. Information 2020, 11.

4. Mohammad, S.M.; Turney, P.D. Crowdsourcing a Word–Emotion Association Lexicon. Comput. Intell. 2013. Available online: https://api.semanticscholar.org/CorpusID:9388645 (accessed on 24 January 2025).

5. Hutto, C.J.; Gilbert, E. VADER: A Parsimonious Rule-Based Model for Sentiment Analysis of Social Media Text. In Proceedings of the International AAAI Conference on Web and Social Media, Ann Arbor, MI, USA, 1–4 June 2014.

6. Taboada, M.; Brooke, J.; Tofiloski, M.; Voll, K.D.; Stede, M. Lexicon-Based Methods for Sentiment Analysis. Comput. Linguist. 2011, 37, 267–307.

7. Medhat, W.; Hassan, A.H.; Korashy, H. Sentiment Analysis Algorithms and Applications: A Survey. Ain Shams Eng. J. 2014, 5, 1093–1113. Available online: https://api.semanticscholar.org/CorpusID:16768404 (accessed on 24 January 2025).

8. Pang, B.; Lee, L.; Vaithyanathan, S. Thumbs up? Sentiment Classification Using Machine Learning Techniques. arXiv 2002, arXiv:cs.CL/0205070.

9. Millennianita, F.; Athiyah, U.; Muhammad, A.W. Comparison of Naïve Bayes Classifier and Support Vector Machine Methods for Sentiment Classification of Responses to Bullying Cases on Twitter. J. Mechatronics Artif. Intell. 2024, 1, 11–26.

10. Kim, Y. Convolutional Neural Networks for Sentence Classification. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, Doha, Qatar, 25–29 October 2014.

11. Aydin, C.R.; Güngör, T. Combination of Recursive and Recurrent Neural Networks for Aspect-Based Sentiment Analysis Using Inter-Aspect Relations. IEEE Access 2020, 8, 77820–77832.

12. Devlin, J.; Chang, M.; Lee, K.; Toutanova, K. BERT: Pre-Training of Deep Bidirectional Transformers for Language Understanding; North American Chapter of the Association for Computational Linguistics: Minneapolis, MN, USA, 2019.

13. Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. RoBERTa: A Robustly Optimized BERT Pretraining Approach. arXiv 2019, arXiv:1907.11692.

14. Bollen, J.; Mao, H.; Zeng, X.-J. Twitter Mood Predicts the Stock Market. arXiv 2010. Available online: https://api.semanticscholar.org/CorpusID:14727513 (accessed on 24 January 2025).

15. Jayalakshmi, V.; Lakshmi, M. Twitter Sentiment Analysis Tweets Using Hugging Face Harnessing NLP for Social Media Insights. In Advancements in Smart Computing and Information Security; Rajagopal, S., Popat, K., Meva, D., Bajeja, S., Eds.; Springer Nature Switzerland: Cham, Switzerland, 2024; pp. 378–389.

16. Srivastava, M.; Khatri, S.K.; Sinha, S.; Ahluwalia, A.; Johri, P. Understanding Relation between Public Sentiments and Government Policy Reforms. In Proceedings of the 2018 International Conference on Recent Innovations in Telecommunications and Internet of Things (ICRITO), Amity University, Noida, India, 30–31 August 2018; pp. 213–218.

17. R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2021. Available online: https://www.R-project.org/ (accessed on 24 January 2025).

18. Chang, W.; Cheng, J.; Allaire, J.J.; Sievert, C.; Schloerke, B.; Xie, Y.; Allen, J.; McPherson, J.; Dipert, A.; Borges, B.; et al. Shiny: Web Application Framework for R, R Package Version 1.7.1. 2021. Available online: https://CRAN.R-project.org/package=shiny (accessed on 24 January 2025).

19. Belcastro, L.; Cantini, R.; Marozzo, F. Knowledge Discovery from Large Amounts of Social Media Data. Appl. Sci. 2022, 12, 1209.

20. Samuel, J.; Rahman, M.M.; Ali, G.G.M.N.; Samuel, Y.; Pelaez, A.; Chong, P.H.J.; Yakubov, M. Feeling Positive About Reopening? New Normal Scenarios From COVID-19 US Reopen Sentiment Analytics. IEEE Access 2020, 8, 142173–142190.

21. Xue, J.; Chen, J.; Chen, C.; Zheng, C.; Li, S.; Zhu, T. Public Discourse and Sentiment during the COVID-19 Pandemic: Using Latent Dirichlet Allocation for Topic Modeling on Twitter. PLoS ONE 2020, 15, e0239441.

22. Kowsik, V.V.S.; Yashwanth, L.; Harish, S.; Kishore, A.; Renji, S.; Jose, A.C.; Dhanyamol, M.V. Sentiment Analysis of Twitter Data to Detect and Predict Political Leniency Using Natural Language Processing. J. Intell. Inf. Syst. 2024, 62, 765–785.

23. Archive.org. Twitter Data Archive for October 2022. 2022. Available online: https://archive.org/details/twitterstream (accessed on 24 January 2025).

24. json.org. JSON. 2001. Available online: https://www.json.org/json-en.html (accessed on 24 January 2025).

25. Wikipedia Contributors. Comma-Separated Values. 2022. Available online: https://en.wikipedia.org/wiki/Comma-separated_values (accessed on 24 January 2025).

26. Wickham, H.; François, R.; Henry, L.; Müller, K. Dplyr: A Grammar of Data Manipulation, R Package Version 1.0.7. 2021. Available online: https://CRAN.R-project.org/package=dplyr (accessed on 24 January 2025).

27. Wickham, H. Stringr: Simple, Consistent Wrappers for Common String Operations, R Package Version 1.4.0. 2021. Available online: https://CRAN.R-project.org/package=stringr (accessed on 24 January 2025).

28. Sarica, S.; Luo, J. Stopwords in Technical Language Processing. PLoS ONE 2021, 16, e0254937.

29. Feinerer, I. Introduction to the tm Package Text Mining in R. 2024. Available online: https://cran.r-project.org/web/packages/tm/tm.pdf (accessed on 24 January 2025).

30. NRC Word-Emotion Association Lexicon. Available online: https://saifmohammad.com/WebPages/NRC-Emotion-Lexicon.htm (accessed on 24 January 2025).

31. Zhang, L.; Liu, B. Sentiment Analysis and Opinion Mining. Synth. Lect. Hum. Lang. Technol. 2012. Available online: https://api.semanticscholar.org/CorpusID:38022159 (accessed on 24 January 2025).

32. Silge, J.; Robinson, D. Tidytext: Text Mining and Analysis Using Tidy Data Principles in R. J. Open Source Softw. 2016, 1, 37. Available online: https://api.semanticscholar.org/CorpusID:53223972 (accessed on 24 January 2025).

33. Feinerer, I.; Hornik, K.; Meyer, D. Text Mining Infrastructure in R. J. Stat. Softw. 2008, 25, 1–54. Available online: https://api.semanticscholar.org/CorpusID:51738608 (accessed on 24 January 2025).

34. Wickham, H.; François, R. A Grammar of Data Manipulation. 2015. Available online: https://api.semanticscholar.org/CorpusID:62077685 (accessed on 24 January 2025).

35. Spiceworks. What Is Parallel Processing? Available online: https://www.spiceworks.com/tech/iot/articles/what-is-parallel-processing (accessed on 10 March 2025).

36. Crown Records Management. Data Indexing Strategies for Faster & Efficient Retrieval. 22 October 2024. Available online: https://www.crownrms.com/insights/data-indexing-strategies/ (accessed on 24 January 2025).

37. Wikipedia Contributors. Cache Replacement Policies. Available online: https://en.wikipedia.org/wiki/Cache_replacement_policies (accessed on 13 September 2024).

38. New York Times. Elon Musk Completes Twitter Deal. 27 October 2022. Available online: https://www.nytimes.com/2022/10/27/technology/elon-musk-twitter-deal-complete.html (accessed on 22 November 2024).