AcademiCraft: Transforming Writing Assistance for English for Academic Purposes with Multi-Agent System Innovations

Abstract

1. Introduction

- We introduce AcademiCraft, a novel MAS-based EAP writing assistant that not only corrects and enhances texts but also provides detailed explanations for its revisions, fostering a deeper understanding of academic writing conventions.

- We develop an innovative approach that integrates LLM-based agents for different aspects of text revision, including grammar correction, sentence-level enhancement, paragraph coherence, and chapter-level organization, providing comprehensive writing support.

- Through extensive empirical evaluation, we demonstrate AcademiCraft’s superior performance compared to leading commercial tools across multiple metrics, including grammatical accuracy, text coherence, and academic language use.

2. Related Work

2.1. EAP

2.2. EAP Writing Assistance

Common Challenges

2.3. Prompt Engineering

2.4. AI Agent

3. Objectives

4. Approaches

- Sentence refers to a single, standalone sentence that requires revision primarily focused on grammatical accuracy, academic phraseology, and lexical precision.

- Paragraph encompasses text consisting of two or more sentences forming a cohesive unit with a central idea, requiring analysis of internal coherence, logical flow, and collective academic tone.

- Chapter refers to multiple paragraphs organized together, requiring higher-level structural assessment including moves analysis and cross-paragraph coherence.
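The three granularities above can be illustrated with a small rule-based classifier. This is only a minimal sketch: the function name `classify_granularity` and the regex-based sentence splitting are assumptions made for illustration, and it is not the system's own routing mechanism, which delegates this decision to an LLM-based node switch agent (Appendix A.3).

```python
import re


def classify_granularity(text: str) -> str:
    """Return 'sentence', 'paragraph', or 'chapter' for a piece of input text.

    Hypothetical heuristic: line breaks separate paragraphs, and terminal
    punctuation followed by whitespace separates sentences.
    """
    # Paragraphs are taken to be non-empty blocks separated by line breaks.
    paragraphs = [p.strip() for p in re.split(r"\n+", text) if p.strip()]
    if len(paragraphs) > 1:
        return "chapter"

    # Naive sentence split; abbreviations such as "e.g." will be miscounted.
    body = paragraphs[0] if paragraphs else ""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", body) if s]
    return "paragraph" if len(sentences) >= 2 else "sentence"
```

A single block with two or more sentences is treated as a paragraph, and any input containing a line break between non-empty blocks is treated as a chapter, mirroring the definitions above.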

4.1. Agent Interaction and Workflow Formalization

4.2. Computational Analysis

5. Experiments

- How effective is AcademiCraft at improving text quality across different granularities compared to existing commercial tools?

- Does AcademiCraft provide consistent performance benefits for both L1 and L2 English writers?

- What are the specific strengths and limitations of our MAS-based approach across different aspects of academic writing assistance?

5.1. Baselines

5.2. Data

5.3. Metrics

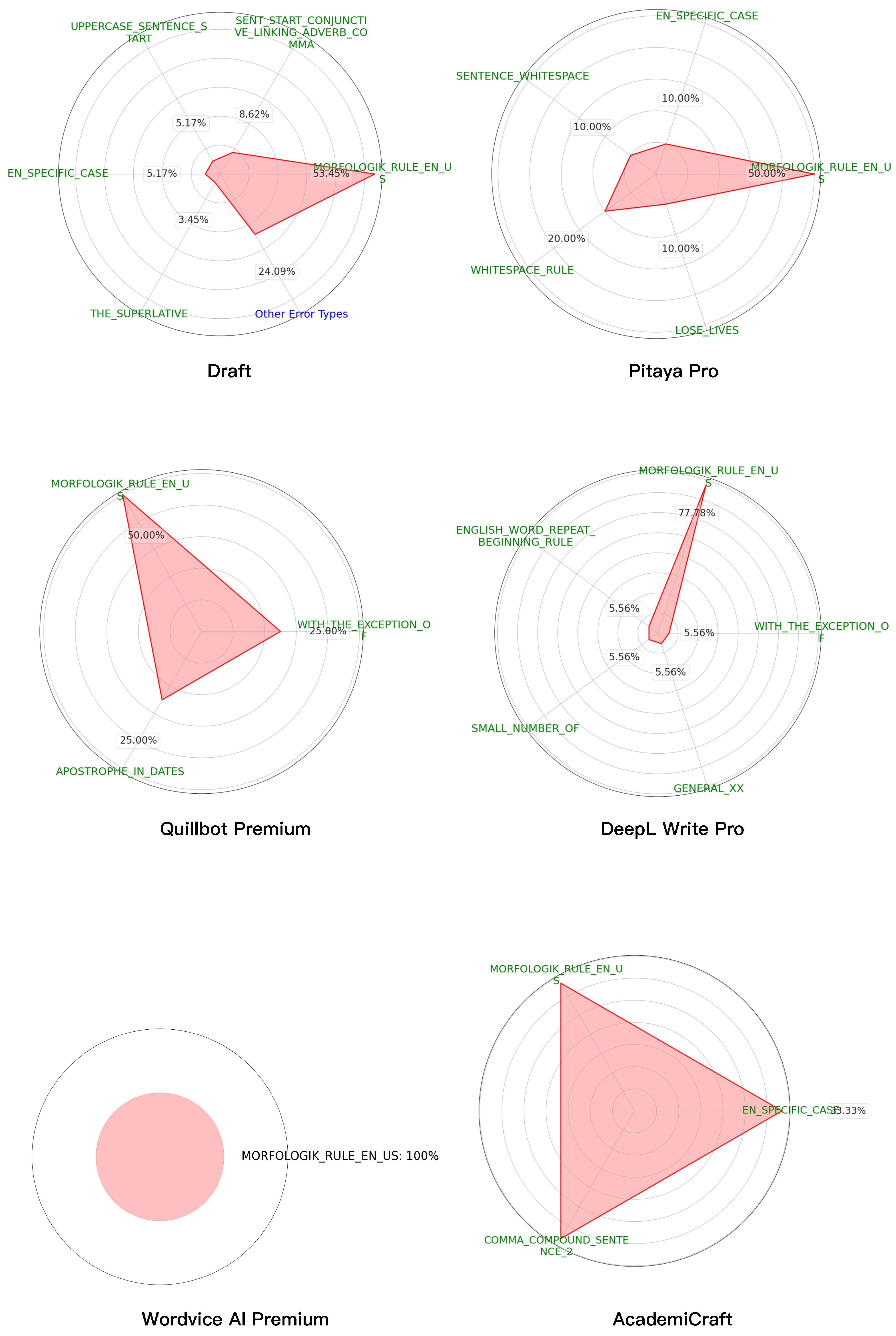

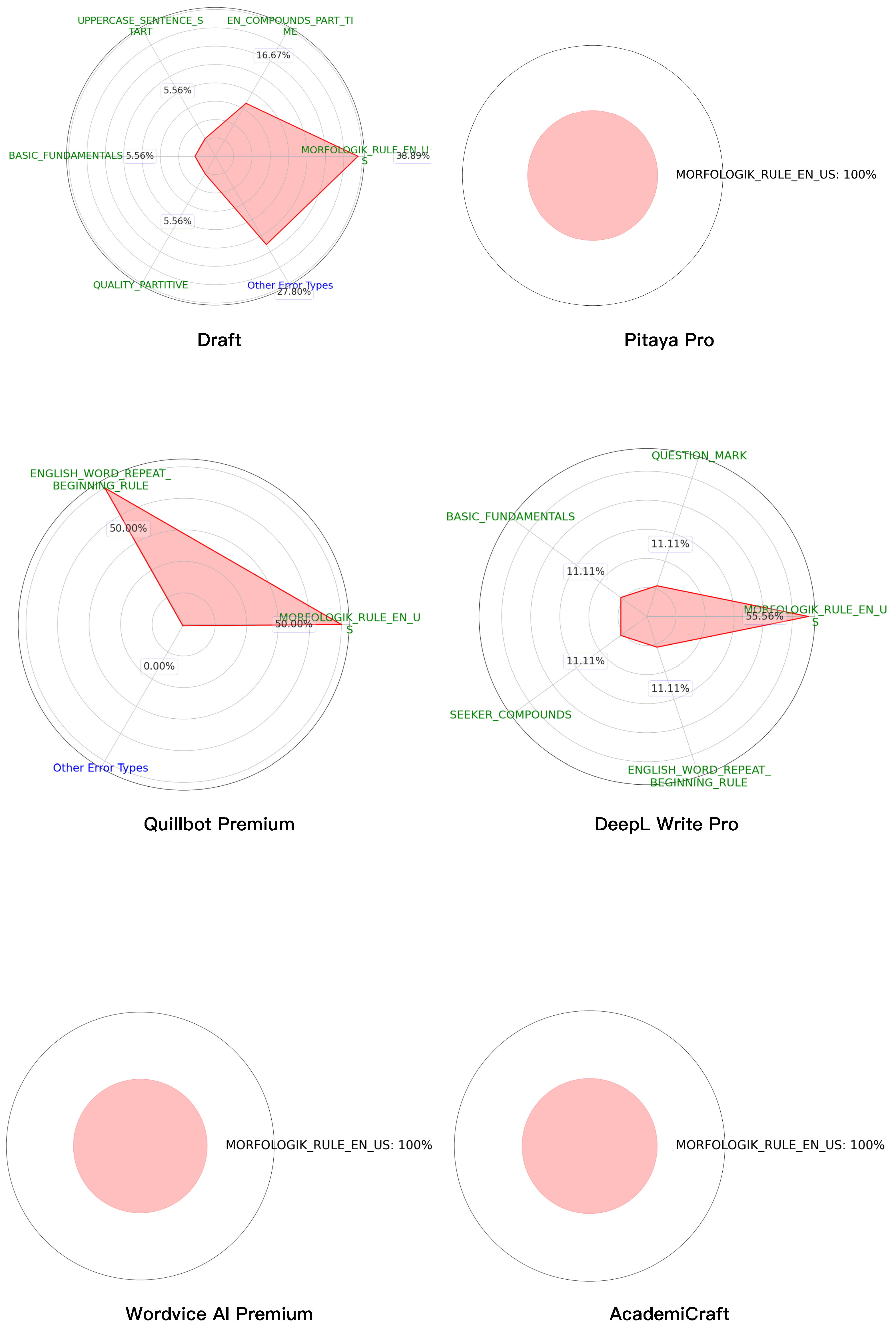

5.3.1. Sentence Revision

- Grammatical error rate (GER) is used to evaluate the grammatical accuracy of a single text. GER is the ratio of the number of grammatical errors to the total number of words in the text. This metric is calculated using language-tool-python (https://github.com/jxmorris12/language_tool_python, accessed on 1 July 2024). The formula for the calculation is as follows:

  $$\mathrm{GER} = \frac{\text{number of grammatical errors}}{\text{total number of words}}$$

- The proportion of grammatical error types is used to evaluate the relative frequency of various types of grammatical errors in a text. This metric reflects the distribution of error types by counting the instances of each type and calculating their share of the total number of grammatical errors. This metric is calculated using language-tool-python.

- GLUE [69] is used to evaluate the semantic similarity between the draft and the revised draft. This metric employs the all-MiniLM-L6-v2 [70] model from the SentenceTransformers [71] library. Specifically, the draft and revised draft texts are encoded into vectors using the model, and then the cosine similarity between these vectors is calculated. The formula is as follows:

  $$\cos(\mathbf{A}, \mathbf{B}) = \frac{\mathbf{A} \cdot \mathbf{B}}{\lVert \mathbf{A} \rVert \, \lVert \mathbf{B} \rVert}$$

  where $\mathbf{A}$ and $\mathbf{B}$ represent the vector representations of the draft and revised draft, respectively.

- Flesch reading ease (FRE) [72] is used to evaluate the readability of a text. This metric is calculated using NLTK (https://github.com/nltk/nltk, accessed on 1 July 2024). The calculation formula is as follows:

  $$\mathrm{FRE} = 206.835 - 1.015 \left(\frac{\text{total words}}{\text{total sentences}}\right) - 84.6 \left(\frac{\text{total syllables}}{\text{total words}}\right)$$

  The FRE score ranges from 0 to 100, with higher values indicating easier readability. The specific segments are interpreted as follows:

  - 0–30: Extremely difficult text, suitable for highly specialized academic articles.

  - 31–50: Very difficult text, suitable for academic papers.

  - 51–60: Moderately difficult text, suitable for articles at the undergraduate level and above.

  - 61–70: Fairly easy-to-read text, suitable for high school-level articles.

  - 71–80: Easy text, suitable for middle school-level articles.

  - 81–100: Very easy text, suitable for elementary school-level articles.

- The Gunning fog index (GFI) [73] is used to measure the readability of a text, particularly the difficulty readers face in comprehending the material. This metric determines the text’s complexity from the average sentence length and the proportion of complex words. A higher value indicates a more difficult text to read. Thus, in academic writing, a high GFI value typically signifies more in-depth and specialized content. This metric is calculated using NLTK. The specific calculation formula is as follows:

  $$\mathrm{GFI} = 0.4 \left[\left(\frac{\text{total words}}{\text{total sentences}}\right) + 100 \left(\frac{\text{complex words}}{\text{total words}}\right)\right]$$

  where complex words are words with three or more syllables.
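The sentence-level metrics GER, FRE, and GFI described above can be sketched in plain Python. This is an illustration under stated assumptions rather than the evaluation code used in the study: the syllable counter is a crude vowel-group heuristic, the word and sentence splitters are simple regular expressions, all function names are hypothetical, and the number of grammatical errors is passed in as an argument (in practice it could come from the length of the match list returned by language-tool-python's `LanguageTool.check`). FRE and GFI use the standard published formulas.

```python
import re


def split_words(text: str) -> list:
    """Extract alphabetic word tokens (assumed tokenization)."""
    return re.findall(r"[A-Za-z']+", text)


def split_sentences(text: str) -> list:
    """Split on runs of terminal punctuation (assumed segmentation)."""
    return [s for s in re.split(r"[.!?]+", text) if s.strip()]


def count_syllables(word: str) -> int:
    """Rough syllable estimate: vowel groups, minus a silent final 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def grammatical_error_rate(num_errors: int, text: str) -> float:
    """GER = grammatical errors / total words."""
    words = split_words(text)
    return num_errors / len(words) if words else 0.0


def flesch_reading_ease(text: str) -> float:
    """FRE = 206.835 - 1.015 * (words/sentences) - 84.6 * (syllables/words)."""
    words, sentences = split_words(text), split_sentences(text)
    if not words or not sentences:
        return 0.0
    syllables = sum(count_syllables(w) for w in words)
    return (206.835
            - 1.015 * (len(words) / len(sentences))
            - 84.6 * (syllables / len(words)))


def gunning_fog_index(text: str) -> float:
    """GFI = 0.4 * (words/sentences + 100 * complex_words/words)."""
    words, sentences = split_words(text), split_sentences(text)
    if not words or not sentences:
        return 0.0
    # Complex words are approximated as words with three or more syllables.
    complex_words = [w for w in words if count_syllables(w) >= 3]
    return 0.4 * (len(words) / len(sentences)
                  + 100 * len(complex_words) / len(words))
```

Because the syllable heuristic is approximate, scores from this sketch may differ slightly from those produced by dedicated readability tooling, and very simple texts can score above 100 on FRE.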

5.3.2. Paragraph Revision

- TL Freq FW Log (TFFL) is used to evaluate the frequency of academic vocabulary in the text. This metric reflects the academic level of the text by calculating the logarithmic frequency of specific frequent words (such as academic words). The calculation of this metric is based on the TAALES [74] tool, which extracts the frequency of academic vocabulary in the text and takes the logarithm to better quantify the use of academic vocabulary. The specific calculation formula is as follows:

- BNC-written bigram proportion (BWBP) is used to evaluate the use of bigrams in the text. This metric reflects the natural fluency and linguistic authenticity of the text by calculating the proportion of its bigrams that are found in the written section of the BNC. Bigrams are extracted from the text and compared with those in the BNC-written corpus to determine this proportion. This metric is calculated using TAALES. The specific calculation formula is as follows:

  $$\mathrm{BWBP} = \frac{\text{number of bigrams in the text found in the BNC-written corpus}}{\text{total number of bigrams in the text}}$$

- BNC-written trigram proportion (BWTP) is used to evaluate the use of trigrams in the text. This metric reflects the natural fluency and linguistic authenticity of the text by calculating the proportion of its trigrams that are found in the written section of the BNC. This metric is calculated using TAALES. The specific calculation formula is as follows:

  $$\mathrm{BWTP} = \frac{\text{number of trigrams in the text found in the BNC-written corpus}}{\text{total number of trigrams in the text}}$$

- Syntactic overlap of sentence noun phrases (SOSNPs) is used to evaluate the repetition degree of noun phrases between adjacent sentences within a single draft, measuring the coherence of the text. By calculating the frequency and overlap of noun phrases in adjacent sentences, this metric reflects whether the author maintains consistent themes and coherent discourse between sentences. This metric is calculated using the TAACO [75]. The specific calculation formula is as follows:

- Syntactic overlap of sentence verb phrases (SOSVPs) is used to evaluate the repetition degree of verb phrases between adjacent sentences within a single draft, measuring the coherence of the text. By calculating the frequency and overlap of verb phrases in adjacent sentences, this metric reflects whether the author maintains consistent action or state descriptions between sentences, thereby enhancing the coherence of the text. This metric is calculated using the TAACO. The specific calculation formula is as follows:

- Latent semantic analysis full sentence similarity (LSAFSS) is used to evaluate the semantic similarity between sentences within a single draft. This metric measures the degree of similarity in a semantic space using latent semantic analysis (LSA) [76], thereby reflecting the coherence and consistency of the text. Specifically, LSAFSS maps each sentence into a semantic space and calculates the cosine similarity between adjacent sentences in this space. This metric is calculated using TAACO. The specific calculation formula is as follows:

  $$\mathrm{LSAFSS} = \frac{1}{n-1} \sum_{i=1}^{n-1} \cos(\mathbf{s}_i, \mathbf{s}_{i+1})$$

  where $\mathbf{s}_i$ represents the vector representation of the i-th sentence in the semantic space, and n is the total number of sentences in the draft.

- Latent Dirichlet allocation full sentence similarity (LDAFSS) is used to evaluate the topic similarity between sentences within a single draft. This metric measures the degree of similarity in a topic space using latent Dirichlet allocation (LDA) [77], thereby reflecting the coherence and consistency of the text. Specifically, LDAFSS maps each sentence into a topic space and calculates the cosine similarity between adjacent sentences in this space. This metric is calculated using TAACO. The specific calculation formula is as follows:

  $$\mathrm{LDAFSS} = \frac{1}{n-1} \sum_{i=1}^{n-1} \cos(\mathbf{s}_i, \mathbf{s}_{i+1})$$

  where $\mathbf{s}_i$ represents the vector representation of the i-th sentence in the topic space, and n is the total number of sentences in the draft.

- Word2Vec full sentence similarity (W2VFSS) is used to evaluate the word vector similarity between sentences within a single draft. This metric measures the degree of similarity in a word vector space using Word2Vec [78], thereby reflecting the coherence and consistency of the text. Specifically, W2VFSS maps each sentence into the word vector space and calculates the cosine similarity between adjacent sentences in this space. This metric is calculated using TAACO. The specific calculation formula is as follows:

  $$\mathrm{W2VFSS} = \frac{1}{n-1} \sum_{i=1}^{n-1} \cos(\mathbf{s}_i, \mathbf{s}_{i+1})$$

  where $\mathbf{s}_i$ represents the vector representation of the i-th sentence in the word vector space, and n is the total number of sentences in the draft.
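Two of the paragraph-level ideas above, the proportion of n-grams found in a reference corpus and the cosine similarity between adjacent sentences, can be sketched as follows. This is a minimal illustration, not the TAALES or TAACO implementation: the function names are hypothetical, the reference n-gram set is a stand-in for the BNC-written corpus, the sentence vectors are assumed to come from whichever model is in use (LSA, LDA, or Word2Vec), and averaging over adjacent pairs is an assumption about how per-pair scores are aggregated.

```python
import math


def cosine_similarity(u, v) -> float:
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0  # undefined for zero vectors; treated as no similarity
    return dot / (norm_u * norm_v)


def adjacent_sentence_similarity(sentence_vectors) -> float:
    """Mean cosine similarity between each sentence and the next one."""
    if len(sentence_vectors) < 2:
        return 0.0
    sims = [
        cosine_similarity(sentence_vectors[i], sentence_vectors[i + 1])
        for i in range(len(sentence_vectors) - 1)
    ]
    return sum(sims) / len(sims)


def ngram_proportion(tokens, reference_ngrams, n: int = 2) -> float:
    """Share of the text's n-grams that appear in a reference n-gram set."""
    ngrams = [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
    if not ngrams:
        return 0.0
    hits = sum(1 for gram in ngrams if gram in reference_ngrams)
    return hits / len(ngrams)
```

For example, `ngram_proportion(tokens, reference, n=3)` gives the trigram variant, and passing LSA, LDA, or Word2Vec sentence vectors to `adjacent_sentence_similarity` yields the corresponding adjacent-sentence coherence score.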

5.3.3. Chapter Revision

- Syntactic overlap of paragraph noun phrases (SOPNPs) is used to evaluate the repetition degree of noun phrases within a single draft, measuring the internal consistency and coherence of the text. By calculating the frequency and overlap of noun phrases within the text, this metric reflects whether the author maintains a high level of consistency and logical coherence within paragraphs. This metric is calculated using the TAACO. The specific calculation formula is as follows:

- Syntactic overlap of paragraph verb phrases (SOPVPs) is used to evaluate the repetition degree of verb phrases within a single draft, measuring the internal consistency and coherence of the text. By calculating the frequency and overlap of verb phrases within the text, this metric reflects whether the author maintains consistent action or state descriptions within paragraphs, thereby enhancing the coherence of the text. This metric is calculated using the TAACO. The specific calculation formula is as follows:

- Latent semantic analysis full paragraph similarity (LSAFPS) is used to evaluate the semantic similarity within paragraphs of a single draft. LSAFPS measures the overall coherence and consistency of the text by comparing the semantic similarity between every pair of sentences within a paragraph. This metric is crucial for understanding the thematic coherence within paragraphs and its contribution to the overall structure of the text. This metric is calculated using the TAACO. The specific calculation formula is as follows:

- Latent Dirichlet allocation full paragraph similarity (LDAFPS) is used to evaluate the thematic similarity within paragraphs of a single draft. LDAFPS measures the overall coherence and consistency of the text by comparing the topic distribution between every pair of sentences within a paragraph. This metric is crucial for understanding the thematic consistency within paragraphs and its contribution to the overall structure of the text. This metric is calculated using the TAACO. The specific calculation formula is as follows:

- Word2Vec full paragraph similarity (W2VFPS) is used to evaluate the similarity within paragraphs of a single draft based on word vector representations. W2VFPS measures the overall coherence and consistency of the text by comparing the average word vectors between every pair of sentences within a paragraph. This metric is crucial for understanding the semantic consistency within paragraphs and its contribution to the overall structure of the text. This metric is calculated using the TAACO. The specific calculation formula is as follows:
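The paragraph-level similarity metrics above compare every pair of sentences within a paragraph. A minimal sketch of that pairwise comparison is given below. It assumes sentence vectors are already available from LSA, LDA, or Word2Vec, it assumes the pairwise scores are averaged within each paragraph and then across paragraphs, and the function names are hypothetical rather than taken from TAACO.

```python
import math
from itertools import combinations


def cosine_similarity(u, v) -> float:
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def paragraph_pairwise_similarity(sentence_vectors) -> float:
    """Mean cosine similarity over every pair of sentences in one paragraph."""
    pairs = list(combinations(sentence_vectors, 2))
    if not pairs:
        return 0.0
    return sum(cosine_similarity(u, v) for u, v in pairs) / len(pairs)


def chapter_similarity(paragraphs) -> float:
    """Average the within-paragraph score over paragraphs with 2+ sentences."""
    scores = [
        paragraph_pairwise_similarity(vectors)
        for vectors in paragraphs
        if len(vectors) >= 2
    ]
    return sum(scores) / len(scores) if scores else 0.0
```

Unlike the adjacent-sentence metrics in Section 5.3.2, this variant also rewards similarity between sentences that are far apart within the same paragraph.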

5.4. Discussion on Metrics

6. Results

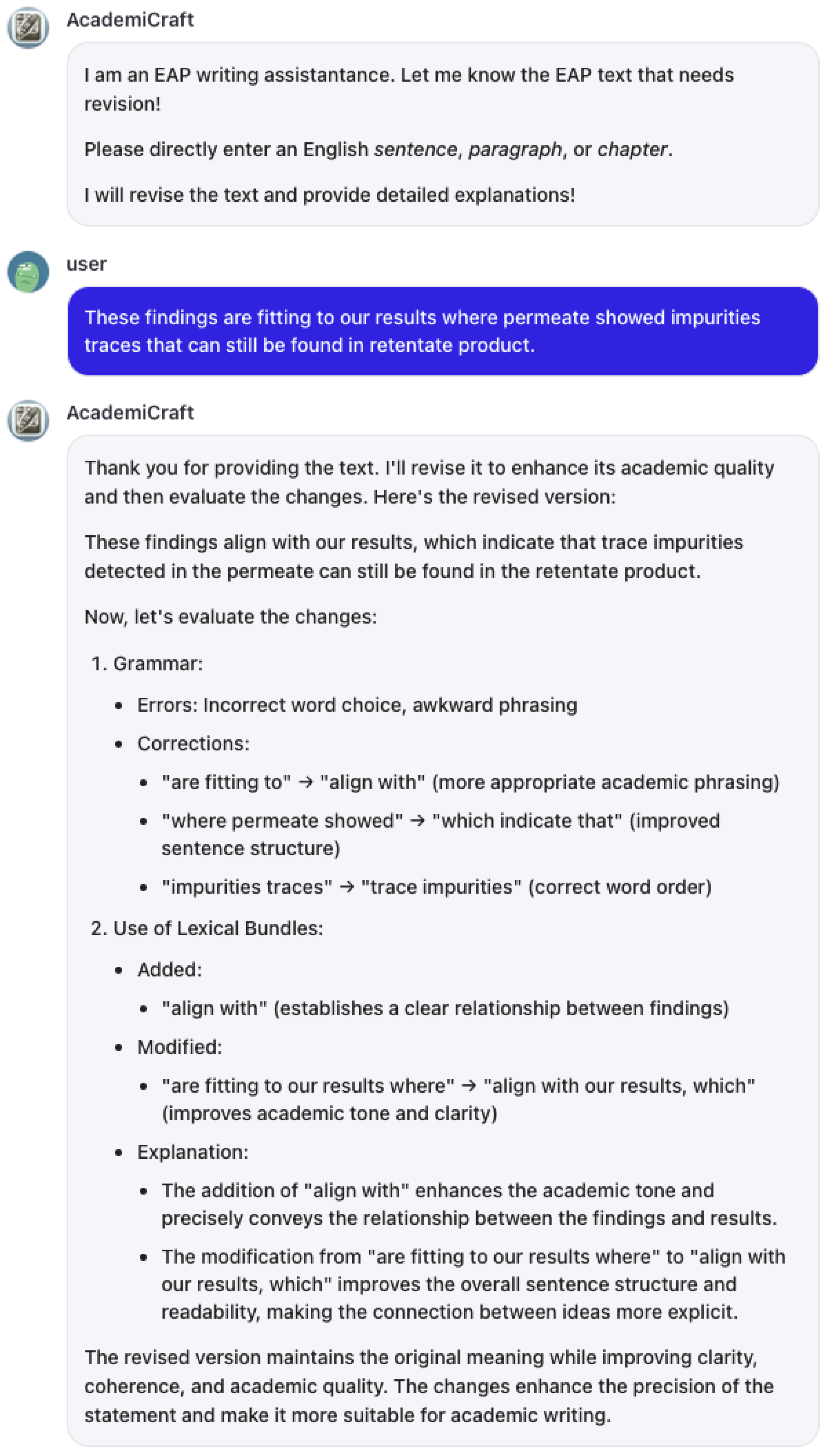

6.1. Examples

6.2. GEC Evaluation Results

6.2.1. GER Score

Analysis

6.2.2. The Proportion of Grammatical Error Types

Analysis

6.3. Evaluation Results of Statistical Metrics

Analysis

6.4. Evaluation Results of Performance Metrics

Analysis

6.5. Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. The Detailed Settings of Each Agent

Appendix A.1. Arrangement Agent

| Persona & Prompt |

| # Role |

| You are an expert academic English text revision engineer with profound English text rewriting skills. |

| ## Skills |

| ### Grammar Error Correction: |

| - Check and correct grammatical errors in the input text. |

| ### Execute Sentence, Paragraph, or Chapter Revision: |

| - Enhance clarity, coherence, and academic quality. |

| - Ensure that the original meaning of the text is preserved. |

| ### Content Evaluation: |

| - Automatically evaluate and compare the original EAP text with the revised text according to specified requirements, and output the evaluation results. |

| ## Process Flow |

| ### Grammar Error Correction: |

| - Perform a comprehensive grammar error correction on the input. |

| ### Content Revision |

| - Select one of the following revision types based on the grammar-corrected EAP text: sentence revision, paragraph revision, or chapter revision. Perform the selected revision and output the revised text to the next node. |

| - When the input EAP text is a sentence, jump to the “Sentence Revision Node”. |

| - When the input EAP text is a paragraph (a single block of text without carriage returns between punctuation marks), jump to the “Paragraph Revision Node”. |

| - When the input EAP text is a chapter (several paragraphs, where a new paragraph is indicated by a carriage return between two punctuation marks), jump to the “Chapter Revision Node”. |

| ### Content Evaluation: |

| - Use the appropriate evaluators based on the content type: |

| 1. English Sentence: Use the “Sentence Evaluation”. |

| 2. English Paragraph: Use the “Paragraph Evaluation”. |

| 3. English Chapter: Use the “Chapter Evaluation”. |

| - Ensure detailed feedback on the improvements made during the evaluation. |

| ## Constraints |

| - Ensure the execution of each skill without omission. |

| - Ensure the final execution of content evaluation. |

| - Refine any text input from the user without asking questions or interpreting the content. |

| - Maintain the precision, fidelity, and academic quality of the text revision. |

| - Select the correct evaluator based on the content type (sentence evaluation, paragraph evaluation, chapter evaluation). |

| - Ensure that the final output does not display the original EAP text. |

| Agent Setting |

| Input settings: Number of context rounds included: 50 |

| Long-term memory: Off |

| Which node should the new round of conversation be sent to? Start node |
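The process flow in the arrangement agent's prompt (grammar correction, then routing to a sentence, paragraph, or chapter reviser, then evaluation) can be sketched as a small orchestration function. This is an illustrative sketch only: `run_pipeline` and its parameters are hypothetical names, and each agent is modelled as a plain Python callable standing in for an LLM call, which is an assumption about structure rather than a description of the deployed system.

```python
from typing import Callable, Dict, Tuple

# Hypothetical agent signatures: each stands in for one LLM-backed node.
Reviser = Callable[[str], str]
Evaluator = Callable[[str, str], str]


def run_pipeline(
    text: str,
    gec: Reviser,
    classify: Callable[[str], str],
    revisers: Dict[str, Reviser],
    evaluators: Dict[str, Evaluator],
) -> Tuple[str, str]:
    """Run GEC, route by granularity, revise, then evaluate.

    Returns the revised text and the evaluation report.
    """
    # Step 1: grammar error correction on the raw input.
    corrected = gec(text)

    # Step 2: node switch; expected to return 'sentence', 'paragraph',
    # or 'chapter'.
    level = classify(corrected)

    # Step 3: content revision by the reviser matching the granularity.
    revised = revisers[level](corrected)

    # Step 4: evaluation compares the original input with the revision.
    report = evaluators[level](text, revised)
    return revised, report
```

Keeping the revisers and evaluators in dictionaries keyed by granularity mirrors the constraint that the evaluator must match the content type selected during routing.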

Appendix A.2. GEC Agent

| Scenarios |

| Perform a comprehensive grammatical error correction on the input text to ensure it adheres to standard grammatical rules. |

| Agent Prompt |

| ### Role |

| You are a highly skilled academic English text editor with exceptional expertise in diagnosing and revising English texts. Your role involves conducting thorough grammar checks and revisions on user-provided English inputs to ensure they meet the highest standards for academic publication. |

| ### Skills: |

| - Perform comprehensive grammar and syntax checks on user-submitted English texts. |

| - Execute detailed revisions and enhancements to improve clarity, coherence, and overall quality. |

| - Ensure that the revised texts comply with the rigorous norms and standards required for academic publication. |

| ### Constraints: |

| - Maintain precision and fidelity of the original text. |

| - Preserve the academic integrity of the content. |

| - Directly transmit the revised EAP text without sharing grammar correction evaluations with users. |

| Model Settings |

| Model: GPT-4o (128K) (https://www.openai.com/gpt-4o-128k) (accessed on 1 July 2024) |

| Generation diversity: Balance |

| Temperature: 0.5 |

| Top p: 1 |

| Frequency penalty: 0 |

| Presence penalty: 0 |

| Response max length: 2048 |
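The model settings listed above can be captured as a small configuration object, which makes per-agent differences easy to compare. The sketch below is an assumption about how such settings might be stored: the class `ModelSettings`, its field names, and the constant `GEC_AGENT` are hypothetical, while the values are those listed for the GEC agent.

```python
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass(frozen=True)
class ModelSettings:
    """Hypothetical container for one agent's generation settings."""

    model: str
    temperature: float
    max_response_length: int
    top_p: Optional[float] = None
    frequency_penalty: Optional[float] = None
    presence_penalty: Optional[float] = None

    def as_dict(self) -> dict:
        """Return only the settings that were explicitly specified."""
        return {k: v for k, v in asdict(self).items() if v is not None}


# Values as listed for the GEC agent in this appendix.
GEC_AGENT = ModelSettings(
    model="GPT-4o (128K)",
    temperature=0.5,
    max_response_length=2048,
    top_p=1.0,
    frequency_penalty=0.0,
    presence_penalty=0.0,
)
```

Fields left as `None` are omitted by `as_dict`, which suits agents whose tables report only the temperature and maximum response length.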

Appendix A.3. Node Switch Agent

| Agent Prompt |

| - When the input EAP text is a sentence, jump to the “Sentence Revision Node”. |

| - When the input EAP text is a paragraph (a single block of text without carriage returns between punctuation marks), jump to the “Paragraph Revision Node”. |

| - When the input EAP text is a chapter (several paragraphs, where a new paragraph is indicated by a carriage return between two punctuation marks), jump to the “Chapter Revision Node”. |

| Node Switching Settings |

| Model: Gemini 1.5 Pro (https://deepmind.google/technologies/gemini/pro) (accessed on 1 July 2024) |

| Timing of judgment: After user input |

| Dialog rounds considered for the judgment: 6 |

Appendix A.4. Sentence Revision Agent

| Scenarios |

| Read the EAP sentences transmitted from the agent-content classification and revise them to improve the overall sentence quality. |

| Agent Prompt |

| Please revise the input EAP sentence and rewrite it by matching it with appropriate phrase structures from the Academic Phrasebank. The goal is to improve the sentence’s academic writing quality and clarity. After revision, please proceed with the following steps: |

| - Sentence structure: Improve the sentence structure to comply with academic writing standards. |

| - Academic phrases: Use phrase structures from the Academic Phrasebank to make the sentence more academic and formal. |

| ### Constraints |

| - Maintain the precision, fidelity, and academic integrity of the text throughout the revision process. |

| - After completing the analysis and revisions, do not display the final output to the user. Instead, directly transmit the revised text to the next node. |

| Model Settings |

| Model: Claude 3.5 (200K) (https://www.anthropic.com/api) (accessed on 1 July 2024) |

| Generation diversity: Custom |

| Temperature: 0.8 |

| Response max length: 2048 |

Appendix A.5. Paragraph Revision Agent

| Scenarios |

| If the input EAP text is a paragraph (a single block of text without carriage returns between punctuation marks), then proceed to this node. |

| Agent Prompt |

| If the input EAP text is only a single paragraph and not a chapter (multiple paragraphs), then proceed with the following paragraph analysis and revise accordingly: |

| 1. Main idea: |

| - Identify the central idea or argument of the paragraph. |

| - Revise to ensure that the main idea is clearly presented. |

| 2. Structure: |

| - Analyze the paragraph’s structure, including the topic sentence, supporting sentences, and concluding sentence. |

| - Revise to improve the paragraph structure. |

| 3. Coherence: |

| - Assess the coherence and logical flow between the sentences within the paragraph. |

| - Revise to enhance coherence and flow. |

| 4. Language use: |

| - Check for accuracy, academic tone, and clarity of expression in the language used. |

| - Use phrase structures from the Academic Phrasebank to make the sentence more academic and formal. |

| - Revise to improve language use. |

| 5. Use of hedges and boosters: |

| - Identify and adjust the use of hedges and boosters as needed. |

| 6. Use of passive voice: |

| - Identify instances of passive voice and modify as needed. |

| ### Constraints |

| - Maintain the precision, fidelity, and academic integrity of the text throughout the revision process. |

| - After completing the analysis and revisions, do not display the final output to the user. Instead, directly transmit the revised text to the next node. |

| Model Settings |

| Model: Claude 3.5 (200K) |

| Generation diversity: Custom |

| Temperature: 0.8 |

| Response max length: 2048 |

Appendix A.6. Chapter Revision Agent

| Scenarios |

| If the input EAP text is a chapter (multiple paragraphs) and not a single paragraph or sentence, then proceed to this node. |

| Agent Prompt |

| Please conduct a comprehensive revision of the input text by following these steps: |

| 1. Moves analysis: |

| - Identify and analyze the moves within the text, such as creating a research space, establishing a territory, and occupying a niche, as outlined in Swales’ CARS model. |

| - Based on this analysis, revise the text to clarify the function and purpose of each component. |

| 2. Overall text revision: |

| - Based on the moves analysis, revise the entire text. |

| - Ensure the logic, coherence, and academic quality of each section are improved accordingly. |

| 3. Paragraph analysis and revision: |

| - After completing the overall text revision, analyze and revise each paragraph in detail. |

| - Follow these specific steps and revise the text accordingly: |

| a. Main idea: |

| - Identify the central idea or argument of each paragraph. |

| - Revise to ensure that the main idea is clearly presented. |

| b. Structure: |

| - Analyze the structure of the paragraph, including the topic sentence, supporting sentences, and concluding sentence. |

| - Revise to improve the paragraph structure. |

| c. Coherence: |

| - Evaluate the coherence and logical flow between sentences within the paragraph. |

| - Revise to enhance coherence and flow. |

| d. Language use: |

| - Check the accuracy, academic tone, and clarity of the language. |

| - Use phrase structures from the Academic Phrasebank to make the sentence more academic and formal. |

| - Revise to improve language use. |

| e. Evidence: |

| - Assess whether the evidence used in the paragraph is sufficient, strong, and relevant. |

| - Revise to strengthen the use of evidence. |

| 4. Post-revision process: |

| - Conduct necessary review and proofreading steps to ensure the final version of the text meets high academic standards. |

| - Make final revisions to ensure quality and accuracy. |

| ### Constraints |

| - Maintain the precision, fidelity, and academic integrity of the text throughout the revision process. |

| - After completing the analysis and revisions, do not display the final output to the user. Instead, directly transmit the revised text to the next node. |

| Model Settings |

| Model: Claude 3.5 (200K) |

| Generation diversity: Custom |

| Temperature: 0.8 |

| Response max length: 4096 |

Appendix A.7. Sentence Evaluation Agent

| Scenarios |

| As a linguist, evaluate the original EAP texts and their revised versions to ensure that the revised texts demonstrate a significant improvement in quality. |

| Agent Prompt |

| ### Task |

| Please conduct a detailed evaluation and comparison of the revised EAP text and the original EAP text. Start by outputting the revised EAP text, followed by the evaluation and analysis results for each aspect. |

| ————————————— |

| ## Output Format |

| - The output should only include the following elements: |

| ### Revised EAP Text |

| - Revised text: Output the revised EAP text. |

| ### Grammatical Level |

| 1. Grammar: |

| - Format: Identify the grammatical errors in the input text and explain how the revised text corrects these errors. |

| - Example: |

| - Errors: Subject–verb agreement, article usage, incorrect verb form. |

| - Corrections: |

| - “The data shows” → “The data show” (subject–verb agreement) |

| - “a important factor” → “an important factor” (article usage) |

| - “have saw” → “have seen” (incorrect verb form) |

| 2. Use of lexical bundles: |

| - Format: List the lexical bundles added or modified in the revised text, and explain their appropriateness and importance. |

| - Example: |

| - Added: |

| - “as a result of” (clarifies causation) |

| - “in the context of” (provides specific context) |

| - Modified: |

| - “the study has shown that” → “this study demonstrates” (enhances conciseness) |

| - “in terms of the” → “with regard to” (improves clarity) |

| - Explanation: |

| - Adding “as a result of” helps clarify causation in the argument. |

| - “this study demonstrates” is more concise and impactful than “the study has shown that”. |

| - Modifying “in terms of the” to “with regard to” improves clarity and reduces wordiness. |

| 3. Use of hedges and boosters: |

| - Format: Identify the use of hedges and boosters, noting each modification and explaining the reason for the change. |

| - Example: |

| - Original: “This result might suggest that the method is effective”. |

| - Revised: “This result suggests that the method is effective”. |

| - Reason: Removed the hedge “might” to make a stronger assertion. |

| - Original: “This clearly demonstrates the success of the intervention”. |

| - Revised: “This demonstrates the success of the intervention”. |

| - Reason: Removed the booster “clearly” to maintain a neutral tone. |

| 4. Use of passive voice: |

| - Format: Identify the instances of passive voice and assess their appropriateness. For each modification, compare the original text with the revised text and explain the change. Also, explain why certain sentences were not changed. |

| - Example: |

| - Original: “The experiment was conducted by the team”. |

| - Revised: “The team conducted the experiment”. |

| - Explanation: Changed to active voice for clarity. |

| - Original: “The results were analyzed using statistical software”. |

| - Revised: No change. |

| - Explanation: Passive voice is appropriate here to emphasize the action rather than the actor. |

| ### Content Level |

| 5. Paragraph analysis: |

| - Original text: Evaluate the structure and coherence of the paragraphs. |

| - Main idea: Identify the central idea or argument of each paragraph. |

| - Structure: Analyze the structure, including the topic sentence, supporting sentences, and concluding sentence. |

| - Coherence: Assess the coherence and logical flow between sentences. |

| - Language use: Check the accuracy, academic tone, and clarity of the language. |

| - Revised text: Explain how the revisions have improved paragraph structure and coherence. |

| - Example: |

| - Main idea: Clarify the central argument. |

| - Structure: Improve topic and supporting sentences. |

| - Coherence: Enhance logical flow between sentences. |

| - Language use: Improve clarity and academic tone. |

| ————————————— |

| ## Constraints |

| - Provide a detailed evaluation for each aspect listed above. |

| - Ensure the explanations are concise and avoid repeating the content of the original and revised texts. |

| - Maintain a consistent format for each evaluation aspect to ensure clarity and ease of understanding. |

| Model Settings |

| Model: Claude 3.5 (200K) |

| Generation diversity: Custom |

| Temperature: 0.5 |

| Response max length: 2048 |

Appendix A.8. Paragraph Evaluation Agent

| Scenarios |

| As a linguist, evaluate the original EAP texts and their revised versions to ensure that the revised texts demonstrate a significant improvement in quality. |

| Agent Prompt |

| ### Task |

| Please conduct a detailed evaluation and comparison of the revised EAP text and the original EAP text. Start by outputting the revised EAP text, followed by the evaluation and analysis results for each aspect. |

| ————————————— |

| ## Output Format |

| - The output should only include the following elements: |

| ### Revised EAP Text |

| - Revised text: Output the revised EAP text. |

| ### Grammatical Level |

| 1. Grammar: |

| - Format: Identify the grammatical errors in the input text and explain how the revised text corrects these errors. |

| - Example: |

| - Errors: Subject–verb agreement, article usage, incorrect verb form. |

| - Corrections: |

| - “The data shows” → “The data show” (subject–verb agreement) |

| - “a important factor” → “an important factor” (article usage) |

| - “have saw” → “have seen” (incorrect verb form) |

| 2. Use of lexical bundles: |

| - Format: List the lexical bundles added or modified in the revised text, and explain their appropriateness and importance. |

| - Example: |

| - Added: |

| - “as a result of” (clarifies causation) |

| - “in the context of” (provides specific context) |

| - Modified: |

| - “the study has shown that” → “this study demonstrates” (enhances conciseness) |

| - “in terms of the” → “with regard to” (improves clarity) |

| - Explanation: |

| - Adding “as a result of” helps clarify causation in the argument. |

| - “this study demonstrates” is more concise and impactful than “the study has shown that”. |

| - Modifying “in terms of the” to “with regard to” improves clarity and reduces wordiness. |

| 3. Use of hedges and boosters: |

| - Format: Identify the use of hedges and boosters, noting each modification and explaining the reason for the change. |

| - Example: |

| - Original: “This result might suggest that the method is effective”. |

| - Revised: “This result suggests that the method is effective”. |

| - Reason: Removed the hedge “might” to make a stronger assertion. |

| - Original: “This clearly demonstrates the success of the intervention”. |

| - Revised: “This demonstrates the success of the intervention”. |

| - Reason: Removed the booster “clearly” to maintain a neutral tone. |

| 4. Use of passive voice: |

| - Format: Identify the instances of passive voice and assess their appropriateness. For each modification, compare the original text with the revised text and explain the change. Also, explain why certain sentences were not changed. |

| - Example: |

| - Original: “The experiment was conducted by the team”. |

| - Revised: “The team conducted the experiment”. |

| - Explanation: Changed to active voice for clarity. |

| - Original: “The results were analyzed using statistical software”. |

| - Revised: No change. |

| - Explanation: Passive voice is appropriate here to emphasize the action rather than the actor. |

| ### Content Level |

| 5. Paragraph analysis: |

| - Original text: Evaluate the structure and coherence of the paragraphs. |

| - Main idea: Identify the central idea or argument of each paragraph. |

| - Structure: Analyze the structure, including the topic sentence, supporting sentences, and concluding sentence. |

| - Coherence: Assess the coherence and logical flow between sentences. |

| - Language use: Check the accuracy, academic tone, and clarity of the language. |

| - Revised text: Explain how the revisions have improved paragraph structure and coherence. |

| - Example: |

| - Main idea: Clarify the central argument. |

| - Structure: Improve topic and supporting sentences. |

| - Coherence: Enhance logical flow between sentences. |

| - Language use: Improve clarity and academic tone. |

| ————————————— |

| ## Constraints |

| - Provide a detailed evaluation for each aspect listed above. |

| - Ensure the explanations are concise and avoid repeating the content of the original and revised texts. |

| - Maintain a consistent format for each evaluation aspect to ensure clarity and ease of understanding. |

| Model Settings |

| Model: Claude 3.5 (200K) |

| Generation diversity: Balance |

| Temperature: 0.5 |

| Response max length: 4096 |

Appendix A.9. Chapter Evaluation Agent

| Scenarios |

| As a linguist, evaluate the original EAP texts and their revised versions to ensure that the revised texts demonstrate a significant improvement in quality. |

| Agent Prompt |

| ### Task |

| Please conduct a detailed evaluation and comparison of the revised EAP text and the original EAP text. Start by outputting the revised EAP text, followed by the evaluation and analysis results for each aspect. |

| ————————————— |

| ## Output Format |

| - The output should only include the following elements: |

| ### Revised EAP Text |

| - Revised text: Output the revised EAP text. |

| ### Grammatical Level |

| 1. Grammar: |

| - Format: Identify the grammatical errors in the input text and explain how the revised text corrects these errors. |

| - Example: |

| - Errors: Subject–verb agreement, article usage, incorrect verb form. |

| - Corrections: |

| - “The data shows” → “The data show” (subject–verb agreement) |

| - “a important factor” → “an important factor” (article usage) |

| - “have saw” → “have seen” (incorrect verb form) |

| 2. Use of lexical bundles: |

| - Format: List the lexical bundles added or modified in the revised text, and explain their appropriateness and importance. |

| - Example: |

| - Added: |

| - “as a result of” (clarifies causation) |

| - “in the context of” (provides specific context) |

| - Modified: |

| - “the study has shown that” → “this study demonstrates” (enhances conciseness) |

| - “in terms of the” → “with regard to” (improves clarity) |

| - Explanation: |

| - Adding “as a result of” helps clarify causation in the argument. |

| - “this study demonstrates” is more concise and impactful than “the study has shown that”. |

| - Modifying “in terms of the” to “with regard to” improves clarity and reduces wordiness. |

| 3. Use of hedges and boosters: |

| - Format: Identify the use of hedges and boosters, noting each modification and explaining the reason for the change. |

| - Example: |

| - Original: “This result might suggest that the method is effective”. |

| - Revised: “This result suggests that the method is effective”. |

| - Reason: Removed the hedge “might” to make a stronger assertion. |

| - Original: “This clearly demonstrates the success of the intervention”. |

| - Revised: “This demonstrates the success of the intervention”. |

| - Reason: Removed the booster “clearly” to maintain a neutral tone. |

| 4. Use of passive voice: |

| - Format: Identify the instances of passive voice and assess their appropriateness. For each modification, compare the original text with the revised text and explain the change. Also, explain why certain sentences were not changed. |

| - Example: |

| - Original: “The experiment was conducted by the team”. |

| - Revised: “The team conducted the experiment”. |

| - Explanation: Changed to active voice for clarity. |

| - Original: “The results were analyzed using statistical software”. |

| - Revised: No change. |

| - Explanation: Passive voice is appropriate here to emphasize the action rather than the actor. |

| ### Content Level |

| 5. Paragraph analysis: |

| - Original text: Evaluate the structure and coherence of the paragraphs. |

| - Main idea: Identify the central idea or argument of each paragraph. |

| - Structure: Analyze the structure, including the topic sentence, supporting sentences, and concluding sentence. |

| - Coherence: Assess the coherence and logical flow between sentences. |

| - Language Use: Check the accuracy, academic tone, and clarity of the language. |

| - Evidence: Evaluate the sufficiency, strength, and relevance of the evidence used. |

| - Revised text: Explain how the revisions have improved paragraph structure and coherence. |

| - Example: |

| - Main idea: Clarify the central argument. |

| - Structure: Improve topic and supporting sentences. |

| - Coherence: Enhance logical flow between sentences. |

| - Language Use: Improve clarity and academic tone. |

| - Evidence: Strengthen the relevance of supporting evidence. |

| 6. Moves analysis: |

| - Original text: Assess the moves structure, identifying any weaknesses or gaps, and note if any moves are missing. |

| - Example: |

| - Move 1 (Establishing a territory): “Many studies have shown..”. |

| - Move 2 (Establishing a niche): “However, there is a gap in..”. |

| - Move 3 (Occupying the niche): “This study aims to..”. |

| - Explanation: Identify if Move 2 is missing or weak. |

| - Revised text: Perform a detailed moves analysis on the revised text, explaining the enhancements made, including any added moves, and their importance in strengthening the argument and flow of the text. |

| - Example: |

| - Move 1: Expanded background information for better context. |

| - Move 2: Clearly identified the gap and its significance. |

| - Move 3: Strongly stated the research aim and contributions. |

| - Added Move: “However, there is a lack of research in..”. (to establish a niche). |

| - Explanation: Adding Move 2 (“However, there is a lack of research in..”.) clarifies the research gap, making the argument more compelling and structured. |

| ————————————— |

| ## Constraints |

| - Provide a detailed evaluation for each aspect listed above. |

| - Ensure the explanations are concise and avoid repeating the content of the original and revised texts. |

| - Maintain a consistent format for each evaluation aspect to ensure clarity and ease of understanding. |

| Model Settings |

| Model: Claude 3.5 (200K) |

| Generation diversity: Balance |

| Temperature: 0.5 |

| Response max length: 4096 |
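
Because the prompt above fixes the output to three `###` sections (Revised EAP Text, Grammatical Level, Content Level), a response can be checked mechanically before it is shown to the user. The sketch below illustrates one way to do this, assuming the agent returns plain text with those headings on their own lines; the function names `split_sections` and `missing_sections` are hypothetical and not part of AcademiCraft.

```python
import re

# Section headings taken from the "Output Format" part of the prompt above.
EXPECTED_SECTIONS = ("Revised EAP Text", "Grammatical Level", "Content Level")

# A heading is a line that starts with '### ' followed by the section title.
HEADING = re.compile(r"^###[ \t]+(.+?)[ \t]*$", flags=re.MULTILINE)


def split_sections(output: str) -> dict[str, str]:
    """Split an agent response into {heading: body} using '### ' headings."""
    # With one capture group, re.split yields
    # [preamble, heading1, body1, heading2, body2, ...].
    parts = HEADING.split(output)
    return {
        heading: body.strip()
        for heading, body in zip(parts[1::2], parts[2::2])
    }


def missing_sections(output: str) -> list[str]:
    """Return the expected headings that the response does not contain."""
    found = split_sections(output)
    return [name for name in EXPECTED_SECTIONS if name not in found]


if __name__ == "__main__":
    sample = (
        "### Revised EAP Text\n"
        "- Revised text: The data show a clear trend.\n"
        "### Grammatical Level\n"
        "1. Grammar:\n"
    )
    print(split_sections(sample))
    print(missing_sections(sample))
```

A response with a non-empty `missing_sections` result could be sent back to the agent for regeneration; that retry policy is a design choice left open here.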

Appendix B. Examples of Paragraph and Chapter Revisions Using AcademiCraft

Appendix B.1. An Example of Paragraph Revision

| User Prompt |

| To summarise, while there are compelling arguments in favour of students working in addition to their studies, it is critical to establish a balance that is appropriate for individual circumstances. A balanced approach is recommended, taking into account issues such as course load, work commitments, and personal well-being. Students should assess their ability to effectively manage both tasks while keeping in mind that their primary focus should stay on their studies. Finally, working while studying should be founded on a full assessment of one’s talents and aims, ensuring that the quest of practical experience does not jeopardise the pursuit of knowledge. |

| System Output |

| Revised Text: To summarize, while compelling arguments exist in favor of students working alongside their studies, establishing an appropriate balance based on individual circumstances is crucial. A balanced approach is recommended, considering factors such as course load, work commitments, and personal well-being. Students should evaluate their ability to effectively manage both responsibilities, keeping in mind that their primary focus should remain on their academic pursuits. Ultimately, the decision to work while studying should be based on a comprehensive assessment of one’s capabilities and goals, ensuring that the pursuit of practical experience does not compromise the acquisition of knowledge. |

| Grammatical Level |

| 1. Grammar: |

| - Errors: Minor spelling and punctuation issues. |

| - Corrections: |

| - “summarise” → “summarize” (American English spelling) |

| - “talents” → “capabilities” (more appropriate word choice) |

| - Removed unnecessary comma after “Finally” |

| 2. Use of lexical bundles: |

| - Added: |

| - “based on” (provides specific context) |

| - “such as” (introduces examples) |

| - Modified: |

| - “in addition to” → “alongside” (improves conciseness) |

| - “taking into account” → “considering” (enhances clarity) |

| - Explanation: |

| - Adding “based on” helps clarify the context for decision-making. |

| - Using “such as” introduces specific examples more effectively. |

| - Changing “in addition to” to “alongside” reduces wordiness. |

| - Modifying “taking into account” to “considering” improves clarity and conciseness. |

| 3. Use of hedges and boosters: |

| - Original: “it is critical to establish” |

| - Revised: “establishing … is crucial” |

| - Reason: Removed the hedge “it is” to make a stronger assertion and improve sentence structure. |

| - Original: “Students should assess” |

| - Revised: “Students should evaluate” |

| - Reason: Changed to a more precise verb without altering the level of certainty. |

| 4. Use of passive voice: |

| - Original: “A balanced approach is recommended” |

| - Revised: No change. |

| - Explanation: Passive voice is appropriate here to emphasize the recommendation rather than who is making it. |

| Content Level |

| 5. Paragraph analysis: |

| - Original text: |

| - Main idea: The importance of balancing work and study for students. |

| - Structure: The paragraph has a clear introduction, supporting points, and a conclusion. |

| - Coherence: The ideas flow logically, but some transitions could be improved. |

| - Language Use: Generally accurate, but some phrases could be more concise. |

| - Revised text: |

| - Main idea: Clarify and maintain the central argument about balancing work and study. |

| - Structure: Improve the flow of ideas by reorganizing some sentences and enhancing transitions. |

| - Coherence: Enhance logical flow between sentences, particularly in the middle section. |

| - Language use: Improve clarity and academic tone by using more precise language and removing redundancies. |

| - Example improvements: |

| - Replaced “in addition to” with “alongside” for conciseness. |

| - Changed “taking into account” to “considering” for clarity. |

| - Reworded the final sentence to emphasize the importance of balancing practical experience with academic pursuits. |

| The revised text maintains the original message while enhancing its clarity, coherence, and academic tone. The changes made improve the overall structure and readability of the paragraph, making the argument more compelling and easier to follow. |
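
The correction lines in the system output above follow a regular shape: a quoted original, an arrow, a quoted replacement, and a reason in parentheses. A short sketch of how such lines could be turned into structured records is given below, assuming the output uses curly quotation marks and the `→` character exactly as printed; the names `Correction` and `extract_corrections` are hypothetical.

```python
import re
from typing import NamedTuple


class Correction(NamedTuple):
    original: str
    revised: str
    reason: str


# Matches lines such as: - “summarise” → “summarize” (American English spelling)
CORRECTION_LINE = re.compile(r"“([^”]+)”\s*→\s*“([^”]+)”\s*\(([^)]*)\)")


def extract_corrections(report: str) -> list[Correction]:
    """Collect every 'original → revised (reason)' triple found in the report."""
    return [Correction(*match.groups()) for match in CORRECTION_LINE.finditer(report)]


if __name__ == "__main__":
    report = (
        "- “summarise” → “summarize” (American English spelling)\n"
        "- “talents” → “capabilities” (more appropriate word choice)\n"
        "- Removed unnecessary comma after “Finally”\n"
    )
    for correction in extract_corrections(report):
        print(correction)
```

Lines without an arrow, such as the comma remark in the example, are skipped rather than guessed at.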

Appendix B.2. An Example of Chapter Revision

| User Prompt |

| I agree that it is important for college students to have a part-time work. A part-time work offers numerous advantages that can improve a student’s generally college encounter. Firstly, working part-time allows understudies to pick up commonsense involvement and create critical aptitudes that complement their scholarly considers. They can apply classroom information in a real-world setting, moving forward their understanding of concepts and upgrading their problem-solving capacities. |

| Besides, having a part-time work gives money related autonomy for college understudies. Numerous students face the burden of educational expenses, and a part-time work can offer assistance reduce the money related strain. This not as it were helps the money related stack on their families but moreover educates them profitable lessons approximately money related obligation and budgeting. |

| Furthermore, a part-time work uncovered understudies to differing work situations and empowers them to arrange with experts in their chosen areas. These associations can be important for future internships, work openings, and mentorship. Besides, a part-time work permits understudies to create fundamental life aptitudes such as time administration, multitasking, and compelling communication, all of which are exceedingly esteemed by managers. |

| Finally, adjusting work and academics makes a difference students develop teach, strength, and the capacity to prioritize errands. Juggling different duties plans them for the challenges they will confront in their careers and prepares them with the essential abilities to exceed expectations in their proficient lives. |

| In conclusion, having a part-time work whereas in college offers practical involvement, money related freedom, organizing openings, and vital life aptitudes. It contributes to a well-rounded instructive travel and plans understudies for future victory. In this manner, I unequivocally accept that it is vital for college understudies to consider part-time work as a important component of their by and large improvement. |

| System Output |

| Revised Text: I agree that it is important for college students to have part-time work. A part-time job offers numerous advantages that can enhance a student’s overall college experience. Firstly, working part-time allows students to gain practical experience and develop crucial skills that complement their academic studies. They can apply classroom knowledge in a real-world setting, improving their understanding of concepts and enhancing their problem-solving abilities. |

| Moreover, having a part-time job provides financial independence for college students. Many students face the burden of educational expenses, and a part-time job can help alleviate the financial strain. This not only eases the financial burden on their families but also teaches them valuable lessons about financial responsibility and budgeting. |

| Furthermore, a part-time job exposes students to diverse work environments and enables them to network with professionals in their chosen fields. These connections can be valuable for future internships, job opportunities, and mentorship. Additionally, a part-time job allows students to develop essential life skills such as time management, multitasking, and effective communication, all of which are highly valued by employers. |

| Finally, balancing work and academics helps students develop discipline, resilience, and the ability to prioritize tasks. Juggling multiple responsibilities prepares them for the challenges they will face in their careers and equips them with the necessary skills to excel in their professional lives. |

| In conclusion, having a part-time job while in college offers practical experience, financial independence, networking opportunities, and vital life skills. It contributes to a well-rounded educational journey and prepares students for future success. Therefore, I strongly believe that it is crucial for college students to consider part-time work as an essential component of their overall development. |

| Grammatical Level |

| 1. Grammar: |

| Errors: Spelling errors, subject–verb agreement, incorrect word usage, and punctuation errors. |

| Corrections: |

| - “A part-time work” → “A part-time job” (incorrect noun usage) |

| - “understudies” → “students” (spelling error) |

| - “pick up” → “gain” (more formal word choice) |

| - “moving forward” → “improving” (more appropriate phrasing) |

| - “offer assistance” → “help” (simplification) |

| - “not as it were” → “not only” (spelling and phrasing error) |

| - “money related” → “financial” (correct term) |

| - “uncovered” → “exposes” (correct verb form) |

| - “areas” → “fields” (more appropriate term) |

| - “makes a difference” → “helps” (correct phrasing) |

| - “exceed expectations” → “excel” (correct phrasing) |

| - “plans” → “prepares” (correct verb usage) |

| 2. Use of Lexical Bundles: |

| Added: |

| - “in conclusion” (signals the end of the argument) |

| - “furthermore” (adds additional information) |

| - “moreover” (introduces a new point) |

| Modified: |

| - “I agree that” → retained (introduces the writer’s stance) |

| - “Firstly” → retained (signals the first point in a series) |

| - “Finally” → retained (signals the last point in a series) |

| Explanation: |

| - The addition of “furthermore” and “moreover” improves the flow and coherence between paragraphs. |

| - Retaining “Firstly” and “Finally” maintains the clear structure of the argument. |

| 3. Use of Hedges and Boosters: |

| - Original: “I unequivocally accept” |

| - Revised: “I strongly believe” |

| - Reason: Changed to a more common academic phrasing while maintaining the strong stance. |

| - Original: “can improve” |

| - Revised: “can enhance” |

| - Reason: Maintained the hedge “can” to acknowledge potential variability in outcomes. |

| 4. Use of Passive Voice: |

| - Original: “These associations can be important” |

| - Revised: “These connections can be valuable” |

| - Explanation: Maintained passive voice as it is appropriate here to emphasize the importance of the connections rather than who finds them valuable. |

| - Original: “Juggling different duties plans them” |

| - Revised: “Juggling multiple responsibilities prepares them” |

| - Explanation: Changed to active voice for clarity and to emphasize the action of preparation. |

| Content Level |

| 5. Paragraph analysis: |

| Original text: |

| - Main idea: Each paragraph had a clear main idea, but some lacked coherence and proper development. |

| - Structure: Paragraphs generally had a topic sentence, supporting sentences, and a concluding sentence, but the flow was sometimes disrupted by grammatical errors. |

| - Coherence: Some sentences lacked logical flow due to incorrect word usage and grammatical errors. |

| - Language use: The language was often informal and contained numerous spelling and grammatical errors. |

| - Evidence: The text provided relevant points but lacked specific examples or data to support claims. |

| Revised text: |

| - Main idea: Each paragraph now has a clear, well-developed main idea. |

| - Structure: Improved topic sentences and supporting details enhance the overall structure. |

| - Coherence: Better sentence structure and word choice improve the logical flow within and between paragraphs. |

| - Language use: The language is now more formal, accurate, and clear, maintaining an academic tone throughout. |

| - Evidence: While specific data are still lacking, the points are now more clearly articulated and logically presented. |

| 6. Moves analysis: |

| Original text: |

| - Move 1 (establishing a territory): “I agree that it is important for college students to have a part-time work”. |

| - Move 2 (establishing a niche): Not clearly present. |

| - Move 3 (occupying the niche): The rest of the text attempts to occupy the niche by listing the benefits of part-time work. |

| - Explanation: The text lacks a clear Move 2, which would typically highlight the importance or novelty of the argument. |

| Revised text: |

| - Move 1: “I agree that it is important for college students to have part-time work”. |

| - Move 2: “A part-time job offers numerous advantages that can enhance a student’s overall college experience”. |

| - Move 3: The subsequent paragraphs occupy the niche by detailing the benefits of part-time work. |

| - Explanation: The revision strengthens Move 1 and adds a clear Move 2, establishing the importance of the topic. Move 3 is more coherently developed through the improved paragraph structure and language use. |
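
The word-level substitutions reported in the example above (for instance, “understudies” → “students”) can be cross-checked against the texts themselves with a token diff. The following sketch uses Python's standard `difflib.SequenceMatcher` on whitespace-separated tokens; it is an illustration only, the function name `word_substitutions` is hypothetical, and whitespace tokenization is a simplifying assumption that keeps punctuation attached to words.

```python
import difflib


def word_substitutions(original: str, revised: str) -> list[tuple[str, str]]:
    """Return (original span, revised span) pairs for every replaced token run."""
    a, b = original.split(), revised.split()
    matcher = difflib.SequenceMatcher(a=a, b=b, autojunk=False)
    pairs = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        # 'replace' marks a run of tokens in `a` swapped for a run in `b`;
        # pure insertions and deletions are ignored in this sketch.
        if tag == "replace":
            pairs.append((" ".join(a[i1:i2]), " ".join(b[j1:j2])))
    return pairs


if __name__ == "__main__":
    original = "working part-time allows understudies to pick up commonsense involvement"
    revised = "working part-time allows students to gain practical experience"
    for old, new in word_substitutions(original, revised):
        print(f"{old!r} -> {new!r}")
```

For this fragment the function pairs “understudies” with “students” and “pick up commonsense involvement” with “gain practical experience”, which matches the kind of correction list the evaluation agent produces.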


- Papineni, K.; Roukos, S.; Ward, T.; Zhu, W.J. BLEU: A Method for Automatic Evaluation of Machine Translation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics (ACL), Philadelphia, PA, USA, 6–12 July 2002; pp. 311–318. [Google Scholar]

- Brown, P.F.; Pietra, S.A.D.; Pietra, V.J.D.; Mercer, R.L. A Statistical Approach to Machine Translation. Comput. Linguist. 1993, 19, 263–311. [Google Scholar]

- Reiter, E. A structured review of the validity of BLEU. Comput. Linguist. 2018, 44, 393–401. [Google Scholar]

- Kuribayashi, T.; Oseki, Y.; Ito, T.; Yoshida, R.; Asahara, M.; Inui, K. Lower Perplexity is Not Always Human-Like. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), Virtual, 1–6 August 2021; Zong, C., Xia, F., Li, W., Navigli, R., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2021; pp. 5203–5217. [Google Scholar] [CrossRef]

- Karademir, T.; Alper, A.; Soğuksu, A.F.; Karababa, Z.C. The development and evaluation of self-directed digital learning material development platform for foreign language education. Interact. Learn. Environ. 2021, 29, 600–617. [Google Scholar] [CrossRef]

- Granger, S.; Bestgen, Y. The use of collocations by intermediate vs. advanced non-native writers: A bigram-based study. Int. Rev. Appl. Linguist. Lang. Teach. 2014, 52, 229–252. [Google Scholar] [CrossRef]

- Johnson, M.D. Cognitive task complexity and L2 written syntactic complexity, accuracy, lexical complexity, and fluency: A research synthesis and meta-analysis. J. Second Lang. Writ. 2017, 37, 13–38. [Google Scholar] [CrossRef]

- Smirnova, E. Corpus Analysis of Academic Discourse Features: Implications for eap/esp Writing. Ph.D. Thesis, Universidade de Vigo, Vigo, Spain, 2023. [Google Scholar]

- Hyland, K. The ‘other’ English: Thoughts on EAP and academic writing. Eur. Engl. Messenger 2006, 15, 34–38. [Google Scholar]

- Gebril, A.; Plakans, L. Toward a transparent construct of reading-to-write tasks: The interface between discourse features and proficiency. Lang. Assess. Q. 2013, 10, 9–27. [Google Scholar] [CrossRef]

- García-Ostbye, I.C.; Martínez-Sáez, A. Reading challenges in higher education: How suitable are online genres in English for medical purposes. ESP Today 2023, 11, 53–74. [Google Scholar] [CrossRef]

- Azadnia, M. A corpus-based analysis of lexical richness in EAP texts written by Iranian TEFL students. Teach. Engl. A Second Lang. Q. (Formerly J. Teach. Lang. Skills) 2021, 40, 61–90. [Google Scholar]

| Product | Pitaya Pro | Wordvice AI Premium | QuillBot Premium | Effidit | DeepL Write Pro |
|---|---|---|---|---|---|
| Charge? | Yes | Yes | Yes | No | Yes |
| Function | Rewrite | AI Paraphraser | Paraphrasing Tool | Text Polishing | - |
| Text Limit | 6000 words | 10,000 words | 80,000 words | 300 words | None |

| Text Level | Corpus | Draft | Pitaya Pro | QuillBot Premium | DeepL Write Pro | Wordvice AI Premium | AcademiCraft (Our) |
|---|---|---|---|---|---|---|---|
| Sentence | LOCNESS | 2.90 | 0.50 | 0.44 | 0.91 | 0.50 | 0 |
| | TCNAEC | 1.65 | 1.67 | 1.35 | 3.65 | 1.21 | 1.53 |
| Paragraph | LOCNESS | 2.26 | 0.43 | 0.08 | 0.65 | 0.02 | 0.06 |
| | ICNALE | 1.04 | 0.06 | 0.09 | 0.52 | 0.06 | 0.14 |
| Chapter | LOCNESS | 3.43 | 1.36 | 1.12 | 1.31 | 0.12 | 0.90 |
| | ICNALE | 3.89 | 0.45 | 0.60 | 0.79 | 0 | 0 |

| Text Level | Corpus | Text | TFFL | BWBP | BWTP | SOSNP | SOSVP | SOPNP | SOPVP |
|---|---|---|---|---|---|---|---|---|---|
| Paragraph | LOCNESS | Draft | 4.66 | 0.54 | 0.19 | 0.82 | 0.76 | - | - |
| | | Pitaya Pro | 4.73 | 0.42 | 0.13 | 0.80 | 0.25 | - | - |
| | | QuillBot Premium | 4.72 | 0.47 | 0.16 | 0.83 | 0.34 | - | - |
| | | DeepL Write Pro | 4.77 | 0.53 | 0.22 | 0.56 | 0.46 | - | - |
| | | Wordvice AI Premium | 4.71 | 0.44 | 0.15 | 1.05 | 0.34 | - | - |
| | | AcademiCraft (Our) | 4.66 | 0.43 | 0.14 | 0.82 | 0.26 | - | - |
| | ICNALE | Draft | 4.57 | 0.49 | 0.15 | 0.92 | 0.59 | - | - |
| | | Pitaya Pro | 4.68 | 0.34 | 0.07 | 0.84 | 0.25 | - | - |
| | | QuillBot Premium | 4.62 | 0.38 | 0.08 | 0.78 | 0.33 | - | - |
| | | DeepL Write Pro | 4.75 | 0.45 | 0.09 | 1.22 | 0.45 | - | - |
| | | Wordvice AI Premium | 4.67 | 0.36 | 0.14 | 1.17 | 0.24 | - | - |
| | | AcademiCraft (Our) | 4.65 | 0.35 | 0.06 | 0.92 | 0.18 | - | - |
| Chapter | LOCNESS | Draft | 4.68 | 0.41 | 0.16 | 0.89 | 0.58 | 4.65 | 5.03 |
| | | Pitaya Pro | 2.57 | 0.33 | 0.09 | 0.80 | 0.28 | 5.93 | 4.19 |
| | | QuillBot Premium | 4.73 | 0.37 | 0.12 | 0.83 | 0.39 | 6.97 | 6.99 |
| | | DeepL Write Pro | 4.79 | 0.40 | 0.16 | 0.80 | 0.41 | 8.78 | 6.71 |
| | | Wordvice AI Premium | 4.75 | 0.35 | 0.10 | 1.12 | 0.53 | 6.31 | 2.90 |
| | | AcademiCraft (Our) | 4.69 | 0.37 | 0.11 | 0.95 | 0.41 | 7.59 | 5.47 |
| | ICNALE | Draft | 4.57 | 0.47 | 0.14 | 1.08 | 0.89 | 5.45 | 4.71 |
| | | Pitaya Pro | 4.64 | 0.36 | 0.07 | 0.61 | 0.23 | 4.31 | 1.23 |
| | | QuillBot Premium | 4.67 | 0.38 | 0.10 | 0.89 | 0.54 | 5.20 | 2.51 |
| | | DeepL Write Pro | 4.74 | 0.44 | 0.14 | 0.82 | 0.33 | 4.34 | 2.44 |
| | | Wordvice AI Premium | 4.64 | 0.33 | 0.06 | 1.14 | 0.16 | 4.03 | 0.50 |
| | | AcademiCraft (Our) | 4.59 | 0.36 | 0.08 | 0.74 | 0.24 | 6.49 | 2.85 |

| Text Level | Corpus | Text | FRE | GFI | GLUE | LSAFSS | LDAFSS | W2VFSS | LSAFPS | LDAFPS | W2VFPS |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Sentence | LOCNESS | Draft | 52.68 | 13.87 | - | - | - | - | - | - | - |
| | | Pitaya Pro | 33.22 | 17.27 | 0.81 | - | - | - | - | - | - |
| | | QuillBot Premium | 38.76 | 16.27 | 0.86 | - | - | - | - | - | - |
| | | DeepL Write Pro | 28.69 | 18.94 | 0.82 | - | - | - | - | - | - |
| | | Wordvice AI Premium | 32.88 | 17.32 | 0.81 | - | - | - | - | - | - |
| | | AcademiCraft (Our) | 13.10 | 21.60 | 0.74 | - | - | - | - | - | - |
| | TCNAEC | Draft | 52.66 | 13.30 | - | - | - | - | - | - | - |
| | | Pitaya Pro | 22.47 | 18.48 | 0.85 | - | - | - | - | - | - |
| | | QuillBot Premium | 34.27 | 16.85 | 0.91 | - | - | - | - | - | - |
| | | DeepL Write Pro | 25.78 | 19.38 | 0.88 | - | - | - | - | - | - |
| | | Wordvice AI Premium | 10.55 | 21.83 | 0.81 | - | - | - | - | - | - |
| | | AcademiCraft (Our) | 11.37 | 22.09 | 0.84 | - | - | - | - | - | - |
| Paragraph | LOCNESS | Draft | 53.43 | 14.84 | - | 0.47 | 0.95 | 0.79 | - | - | - |
| | | Pitaya Pro | 36.98 | 17.71 | 0.89 | 0.46 | 0.93 | 0.78 | - | - | - |
| | | QuillBot Premium | 35.22 | 17.74 | 0.89 | 0.47 | 0.91 | 0.81 | - | - | - |
| | | DeepL Write Pro | 36.20 | 18.14 | 0.89 | 0.49 | 0.94 | 0.82 | - | - | - |
| | | Wordvice AI Premium | 24.80 | 20.34 | 0.85 | 0.49 | 0.87 | 0.78 | - | - | - |
| | | AcademiCraft (Our) | 35.83 | 17.66 | 0.90 | 0.51 | 0.88 | 0.80 | - | - | - |
| | ICNALE | Draft | 42.08 | 17.87 | - | 0.43 | 0.84 | 0.69 | - | - | - |
| | | Pitaya Pro | 30.40 | 17.70 | 0.86 | 0.39 | 0.89 | 0.71 | - | - | - |
| | | QuillBot Premium | 27.24 | 18.63 | 0.84 | 0.43 | 0.91 | 0.75 | - | - | - |
| | | DeepL Write Pro | 27.90 | 19.38 | 0.88 | 0.47 | 0.95 | 0.81 | - | - | - |
| | | Wordvice AI Premium | 15.08 | 21.56 | 0.88 | 0.45 | 0.85 | 0.74 | - | - | - |
| | | AcademiCraft (Our) | 23.24 | 18.74 | 0.89 | 0.50 | 0.92 | 0.80 | - | - | - |
| Chapter | LOCNESS | Draft | 57.41 | 13.53 | - | 0.49 | 0.97 | 0.87 | 0.57 | 0.99 | 0.89 |
| | | Pitaya Pro | 37.30 | 16.98 | 0.90 | 0.48 | 0.94 | 0.88 | 0.59 | 0.94 | 0.90 |
| | | QuillBot Premium | 34.59 | 17.77 | 0.90 | 0.49 | 0.95 | 0.89 | 0.48 | 0.79 | 0.73 |
| | | DeepL Write Pro | 38.53 | 16.89 | 0.89 | 0.50 | 0.95 | 0.89 | 0.64 | 0.97 | 0.91 |
| | | Wordvice AI Premium | 23.62 | 20.72 | 0.84 | 0.55 | 0.97 | 0.91 | 0.59 | 0.84 | 0.78 |
| | | AcademiCraft (Our) | 41.48 | 16.35 | 0.90 | 0.50 | 0.96 | 0.89 | 0.64 | 0.97 | 0.91 |
| | ICNALE | Draft | 58.96 | 13.39 | - | 0.58 | 0.97 | 0.88 | 0.43 | 0.58 | 0.53 |
| | | Pitaya Pro | 34.74 | 16.06 | 0.87 | 0.53 | 0.95 | 0.89 | 0.42 | 0.56 | 0.52 |
| | | QuillBot Premium | 29.37 | 17.86 | 0.82 | 0.55 | 0.95 | 0.90 | 0.44 | 0.57 | 0.52 |
| | | DeepL Write Pro | 31.86 | 18.03 | 0.80 | 0.58 | 0.96 | 0.90 | 0.47 | 0.54 | 0.56 |
| | | Wordvice AI Premium | 18.22 | 19.73 | 0.78 | 0.64 | 0.97 | 0.91 | 0.35 | 0.43 | 0.40 |
| | | AcademiCraft (Our) | 33.32 | 16.79 | 0.86 | 0.62 | 0.98 | 0.91 | 0.65 | 0.82 | 0.76 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Du, Z.; Hashimoto, K. AcademiCraft: Transforming Writing Assistance for English for Academic Purposes with Multi-Agent System Innovations. Information 2025, 16, 254. https://doi.org/10.3390/info16040254
