Unpacking AI Chatbot Dependency: A Dual-Path Model of Cognitive and Affective Mechanisms

Abstract

1. Introduction

2. Literature Review

2.1. AI Chatbot Dependency

2.2. Theoretical Frameworks

2.2.1. Uses and Gratifications Theory

2.2.2. Compensatory Internet Use Theory

2.2.3. Attachment Theory

2.3. Conceptual Variables and Hypotheses Development

2.3.1. Cognitive Reliance

2.3.2. Emotional Attachment

2.3.3. Information-Seeking

2.3.4. Efficiency

2.3.5. Entertainment

2.3.6. Companionship

2.3.7. Loneliness

2.3.8. Anxiety

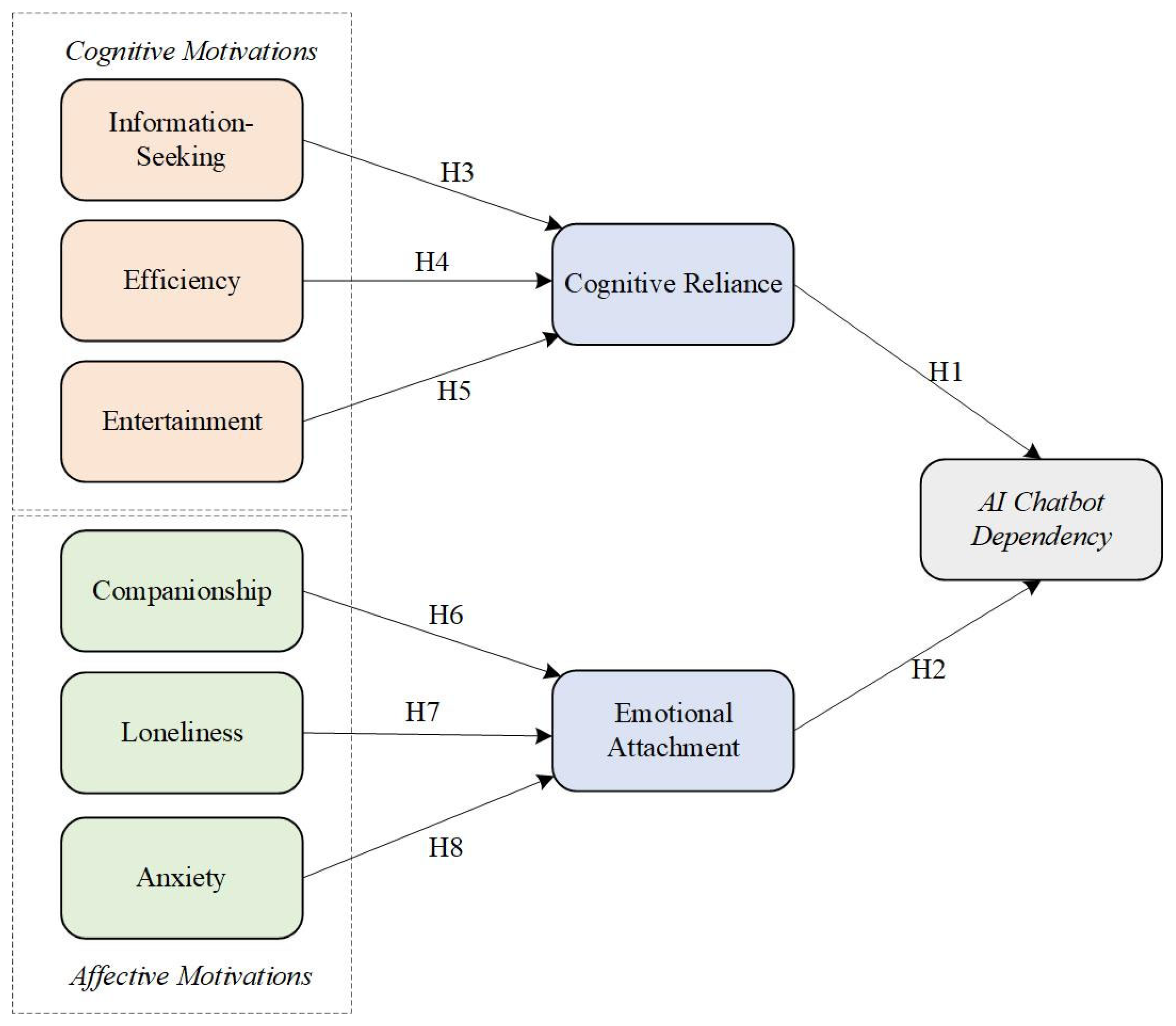

2.4. Conceptual Research Model

3. Methodology

3.1. Participants and Procedures

3.2. Instruments

3.3. Data Analysis

4. Results

4.1. The Measurement Model

4.2. The Structural Model and Hypotheses Testing

5. Discussion

6. Implications

6.1. Theoretical Implications

6.2. Practical Implications

7. Limitations and Future Research Directions

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Constructs | Items | Descriptions | References |
|---|---|---|---|
| Information-Seeking | IS1 | I use AI chatbots to obtain information quickly and accurately. | Papacharissi and Rubin [70] |
| Information-Seeking | IS2 | I rely on AI chatbots to learn new things that interest me. | Whiting and Williams [71] |
| Information-Seeking | IS3 | AI chatbots help me stay informed about topics I care about. | Xie et al. [72] |
| Efficiency | EF1 | Using AI chatbots saves me time in completing daily or work-related tasks. | LaRose and Eastin [73] |
| Efficiency | EF2 | AI chatbots make my work or study more efficient. | Venkatesh et al. [74] |
| Efficiency | EF3 | I use AI chatbots because they simplify complicated tasks. | Zhai and Ma [75] |
| Entertainment | EN1 | I use AI chatbots for fun or relaxation. | Whiting and Williams [71] |
| Entertainment | EN2 | Chatting with AI chatbots is enjoyable. | Sundar et al. [20] |
| Entertainment | EN3 | I use AI chatbots when I want to relieve boredom. | Seok et al. [76] |
| Companionship | CO1 | I use AI chatbots because they make me feel accompanied. | Wang et al. [77] |
| Companionship | CO2 | AI chatbots can act as my companions when I feel alone. | Cheng et al. [78] |
| Companionship | CO3 | Interacting with AI chatbots makes me feel cared for. | |
| Loneliness | LO1 | I use AI chatbots when I feel lonely. | Shen and Wang [79] |
| Loneliness | LO2 | I turn to AI chatbots when I lack someone to talk to. | Zhang et al. [80] |
| Loneliness | LO3 | When I am alone, I prefer chatting with AI chatbots. | |
| Anxiety | AN1 | I use AI chatbots to relieve my stress or anxiety. | Abd-Alrazaq et al. [81] |
| Anxiety | AN2 | Talking with AI chatbots helps calm me down when I feel nervous. | |
| Anxiety | AN3 | I turn to AI chatbots when I feel significant anxiety or emotional distress. | Kim et al. [82] |
| Cognitive Reliance | CR1 | I often depend on AI chatbots to make decisions for me. | LaRose and Eastin [73] |
| Cognitive Reliance | CR2 | I feel uneasy when I cannot access AI chatbots for help. | Xie et al. [83] |
| Cognitive Reliance | CR3 | I find myself checking AI chatbots even for simple questions. | |
| Emotional Attachment | EA1 | I feel emotionally connected to the AI chatbot I often use. | Heng and Zhang [68] |
| Emotional Attachment | EA2 | I miss interacting with AI chatbots when I cannot use them. | |
| Emotional Attachment | EA3 | I consider my favorite AI chatbot to be an important part of my daily life. | |
| AI Chatbot Dependency | AICD1 | I find it hard to control the amount of time I spend using AI chatbots. | Shawar and Atwell [84] |
| AI Chatbot Dependency | AICD2 | I feel restless or anxious when I cannot use AI chatbots. | Kwon et al. [85] |
| AI Chatbot Dependency | AICD3 | My use of AI chatbots sometimes interferes with my normal activities. | Montag and Elhai [86] |

References

1. Murtaza, Z.; Sharma, I.; Carbonell, P. Examining chatbot usage intention in a service encounter: Role of task complexity, communication style, and brand personality. Technol. Forecast. Soc. Change 2024, 209, 123806.
2. Badu, D.; Joseph, D.; Kumar, R.M.; Alexander, E.; Sasi, R.; Joseph, J. Emotional AI and the rise of pseudo-intimacy: Are we trading authenticity for algorithmic affection? Front. Psychol. 2025, 16, 1679324.
3. Richet, J.-L. AI companionship or digital entrapment? Investigating the impact of anthropomorphic AI-based chatbots. J. Innov. Knowl. 2025, 10, 100835.
4. Moylan, K.; Doherty, K. Expert and Interdisciplinary Analysis of AI-Driven Chatbots for Mental Health Support: Mixed Methods Study. J. Med. Internet Res. 2025, 27, e67114.
5. Ciechanowski, L.; Przegalinska, A.; Magnuski, M.; Gloor, P. In the shades of the uncanny valley: An experimental study of human–chatbot interaction. Future Gener. Comput. Syst. 2019, 92, 539–548.
6. Liao, Q.V.; Geyer, W.; Muller, M.; Khazaen, Y. Conversational interfaces for information search. In Understanding and Improving Information Search: A Cognitive Approach; Springer: Berlin/Heidelberg, Germany, 2020; pp. 267–287.
7. Hsu, P.-F.; Nguyen, T.; Wang, C.-Y.; Huang, P.-J. Chatbot commerce—How contextual factors affect Chatbot effectiveness. Electron. Mark. 2023, 33, 14.
8. Adam, M.; Wessel, M.; Benlian, A. AI-based chatbots in customer service and their effects on user compliance. Electron. Mark. 2021, 31, 427–445.
9. Følstad, A.; Brandtzæg, P.B. Chatbots and the new world of HCI. Interactions 2017, 24, 38–42.
10. Zhang, Y.; Yu, Z. Emotional attachment as the key mediator: Expanding UTAUT2 to examine how perceived anthropomorphism in intelligent agents influences the sustained use of DouBao (Cici) among EFL learners. Educ. Inf. Technol. 2025, 30, 1–23.
11. Huang, H.; Shi, L.; Pei, X. When AI Becomes a Friend: The “Emotional” and “Rational” Mechanism of Problematic Use in Generative AI Chatbot Interactions. Int. J. Hum.–Comput. Interact. 2025, 41, 1–19.
12. Yankouskaya, A.; Babiker, A.; Rizvi, S.; Alshakhsi, S.; Liebherr, M.; Ali, R. LLM-D12: A Dual-Dimensional Scale of Instrumental and Relational Dependencies on Large Language Models. ACM Trans. Web 2025, 19, 1–33.
13. Logg, J.M.; Minson, J.A.; Moore, D.A. Algorithm appreciation: People prefer algorithmic to human judgment. Organ. Behav. Hum. Decis. Process. 2019, 151, 90–103.
14. Glikson, E.; Woolley, A.W. Human trust in artificial intelligence: Review of empirical research. Acad. Manag. Ann. 2020, 14, 627–660.
15. Dietvorst, B.J.; Simmons, J.P.; Massey, C. Algorithm aversion: People erroneously avoid algorithms after seeing them err. J. Exp. Psychol. Gen. 2015, 144, 114.
16. Schuetzler, R.M.; Grimes, G.M.; Scott Giboney, J. The impact of chatbot conversational skill on engagement and perceived humanness. J. Manag. Inf. Syst. 2020, 37, 875–900.
17. Gaffney, H.; Mansell, W.; Tai, S. Conversational agents in the treatment of mental health problems: Mixed-method systematic review. JMIR Ment. Health 2019, 6, e14166.
18. Vaidyam, A.N.; Wisniewski, H.; Halamka, J.D.; Kashavan, M.S.; Torous, J.B. Chatbots and conversational agents in mental health: A review of the psychiatric landscape. Can. J. Psychiatry 2019, 64, 456–464.
19. Katz, E.; Blumler, J.G.; Gurevitch, M. Uses and gratifications research. Public Opin. Q. 1973, 37, 509–523.
20. Sundar, S.S.; Bellur, S.; Oh, J.; Jia, H.; Kim, H.-S. Theoretical importance of contingency in human-computer interaction: Effects of message interactivity on user engagement. Commun. Res. 2016, 43, 595–625.
21. Rubin, A.M. Uses-and-gratifications perspective on media effects. In Media Effects; Routledge: Abingdon-on-Thames, UK, 2009; pp. 181–200.
22. Ruggiero, T.E. Uses and gratifications theory in the 21st century. Mass Commun. Soc. 2000, 3, 3–37.
23. Chang, Y.; Lee, S.; Wong, S.F.; Jeong, S.-P. AI-powered learning application use and gratification: An integrative model. Inf. Technol. People 2022, 35, 2115–2139.
24. Biru, I.R.; Rahmawati, R.F.; Salsabila, B.A.D. Analysis of Motivation for Continued Use of Meta AI on WhatsApp: Uses and Gratification Theory Approach. J. Artif. Intell. Eng. Appl. (JAIEA) 2025, 4, 2162–2168.
25. Xie, C.; Wang, Y.; Cheng, Y. Does artificial intelligence satisfy you? A meta-analysis of user gratification and user satisfaction with AI-powered chatbots. Int. J. Hum.–Comput. Interact. 2024, 40, 613–623.
26. Pathak, A. AI Chatbots and Interpersonal Communication: A Study on Uses and Gratification amongst Youngsters. IIS Univ. J. Arts 2024, 13, 355–366.
27. Kardefelt-Winther, D. A conceptual and methodological critique of internet addiction research: Towards a model of compensatory internet use. Comput. Hum. Behav. 2014, 31, 351–354.
28. Boursier, V.; Gioia, F.; Musetti, A.; Schimmenti, A. Facing loneliness and anxiety during the COVID-19 isolation: The role of excessive social media use in a sample of Italian adults. Front. Psychiatry 2020, 11, 586222.
29. Servidio, R.; Soraci, P.; Pisanti, R.; Boca, S. From loneliness to problematic social media use: The mediating roles of fear of missing out, self-esteem, and social comparison. Psicol. Soc. 2025, 20, 89–112.
30. Meng, J.; Rheu, M.; Zhang, Y.; Dai, Y.; Peng, W. Mediated social support for distress reduction: AI Chatbots vs. Human. In Proceedings of the ACM on Human-Computer Interaction, Hamburg, Germany, 23–28 April 2023; Volume 7, pp. 1–25.
31. Yao, R.; Qi, G.; Sheng, D.; Sun, H.; Zhang, J. Connecting self-esteem to problematic AI chatbot use: The multiple mediating roles of positive and negative psychological states. Front. Psychol. 2025, 16, 1453072.
32. Bowlby, J. Attachment and Loss, 3rd ed.; The Hogarth Press and Institute of Psycho-Analysis: London, UK, 1969; Volume 1.
33. Hoffman, G.; Forlizzi, J.; Ayal, S.; Steinfeld, A.; Antanitis, J.; Hochman, G.; Hochendoner, E.; Finkenaur, J. Robot presence and human honesty: Experimental evidence. In Proceedings of the Tenth Annual ACM/IEEE International Conference on Human-Robot Interaction, Portland, OR, USA, 2–5 March 2015; pp. 181–188.
34. Złotowski, J.; Yogeeswaran, K.; Bartneck, C. Can we control it? Autonomous robots threaten human identity, uniqueness, safety, and resources. Int. J. Hum. Comput. Stud. 2017, 100, 48–54.
35. Skjuve, M.; Følstad, A.; Fostervold, K.I.; Brandtzaeg, P.B. My chatbot companion-a study of human-chatbot relationships. Int. J. Hum. Comput. Stud. 2021, 149, 102601.
36. Shah, T.R.; Purohit, S.; Das, M.; Arulsivakumar, T. Do I look real? Impact of digital human avatar influencer realism on consumer engagement and attachment. J. Consum. Mark. 2025, 42, 416–430.
37. Wetzels, R.; Wetzels, M.; Grewal, D.; Doek, B. Evoking trust in smart voice assistants. J. Serv. Manag. 2025, 36, 1–27.
38. Sokolova, K.; Perez, C. You follow fitness influencers on YouTube. But do you actually exercise? How parasocial relationships, and watching fitness influencers, relate to intentions to exercise. J. Retail. Consum. Serv. 2021, 58, 102276.
39. Parasuraman, R.; Riley, V. Humans and automation: Use, misuse, disuse, abuse. Hum. Factors 1997, 39, 230–253.
40. Truong, T.T.H.; Chen, J.S. When empathy is enhanced by human–AI interaction: An investigation of anthropomorphism and responsiveness on customer experience with AI chatbots. Asia Pac. J. Mark. Logist. 2025, 37, 1–18.
41. Sundar, S.S.; Limperos, A.M. Uses and grats 2.0: New gratifications for new media. J. Broadcast. Electron. Media 2013, 57, 504–525.
42. Rose, S. Towards an Integrative Theory of Human-AI Relationship Development; Association for Information Systems: Atlanta, GA, USA, 2024.
43. Raney, A.A.; Janicke-Bowles, S.H.; Oliver, M.B.; Dale, K.R. Introduction to Positive Media Psychology; Routledge: Abingdon-on-Thames, UK, 2020.
44. Hawkley, L.C.; Cacioppo, J.T. Loneliness matters: A theoretical and empirical review of consequences and mechanisms. Ann. Behav. Med. 2010, 40, 218–227.
45. Spielberger, C.D. Assessment of state and trait anxiety: Conceptual and methodological issues. South. Psychol. 1985, 2, 6–16.
46. Gnewuch, U.; Morana, S.; Maedche, A. Towards Designing Cooperative and Social Conversational Agents for Customer Service. In Proceedings of the 38th International Conference on Information Systems (ICIS), Seoul, Republic of Korea, 10–13 December 2017.
47. Küper, A.; Krämer, N. Psychological traits and appropriate reliance: Factors shaping trust in AI. Int. J. Hum.–Comput. Interact. 2025, 41, 4115–4131.
48. Liao, Q.V.; Gruen, D.; Miller, S. Questioning the AI: Informing design practices for explainable AI user experiences. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; pp. 1–15.
49. Romeo, G.; Conti, D. Exploring automation bias in human–AI collaboration: A review and implications for explainable AI. AI Soc. 2025, 40, 1–20.
50. Aghaziarati, A.; Rahimi, H. The Future of Digital Assistants: Human Dependence and Behavioral Change. J. Foresight Health Gov. 2025, 2, 52–61.
51. Alalwan, A.A.; Algharabat, R.; Abu El Samen, A.; Albanna, H.; Al-Okaily, M. Examining the impact of anthropomorphism and AI-chatbots service quality on online customer flow experience–exploring the moderating role of telepresence. J. Consum. Mark. 2025, 42, 448–471.
52. Hastuti, N.T. Redefining Emotional Presence: Lived Experiences of AI-Mediated Romantic Relationships with Chatbot-Based Virtual Companions. Humanexus J. Humanist. Soc. Connect. Stud. 2025, 1, 245–252.
53. Edalat, A.; Hu, R.; Patel, Z.; Polydorou, N.; Ryan, F.; Nicholls, D. Self-initiated humour protocol: A pilot study with an AI agent. Front. Digit. Health 2025, 7, 1530131.
54. Mei, K.-Q.; Chen, Y.-J.; Liu, S.-T. Navigating “Limerence”: Designing a Customized ChatGPT-Based Assistant System. In Proceedings of the International Conference on Human-Computer Interaction, Gothenburg, Sweden, 22–27 June 2025; pp. 162–172.
55. Hong, S.J. What drives AI-based risk information-seeking intent? Insufficiency of risk information versus (Un) certainty of AI chatbots. Comput. Hum. Behav. 2025, 162, 108460.
56. Liao, W.; Weisman, W.; Thakur, A. On the motivations to seek information from artificial intelligence agents versus humans: A risk information seeking and processing perspective. Sci. Commun. 2024, 46, 458–486.
57. Subaveerapandiyan, A.; Vijay Kumar, R.; Prabhu, S. Marine information-seeking behaviours and AI chatbot impact on information discovery. Inf. Discov. Deliv. 2025, 53, 206–216.
58. Zhou, T.; Li, S. Understanding user switch of information seeking: From search engines to generative AI. J. Librariansh. Inf. Sci. 2024, 56, 09610006241244800.
59. Pham, V.K.; Pham Thi, T.D.; Duong, N.T. A study on information search behavior using AI-powered engines: Evidence from chatbots on online shopping platforms. Sage Open 2024, 14, 21582440241300007.
60. Mei, Y. AI & entertainment: The revolution of customer experience. Lect. Notes Educ. Psychol. Public Media 2023, 30, 274–279.
61. Yang, H.; Lee, H. Understanding user behavior of virtual personal assistant devices. Inf. Syst. E-Bus. Manag. 2019, 17, 65–87.
62. Park, G.; Chung, J.; Lee, S. Effect of AI chatbot emotional disclosure on user satisfaction and reuse intention for mental health counseling: A serial mediation model. Curr. Psychol. 2023, 42, 28663–28673.
63. Shahab, H.; Mohtar, M.; Ghazali, E.; Rauschnabel, P.A.; Geipel, A. Virtual reality in museums: Does it promote visitor enjoyment and learning? Int. J. Hum.–Comput. Interact. 2023, 39, 3586–3603.
64. Pani, B.; Crawford, J.; Allen, K.-A. Can generative artificial intelligence foster belongingness, social support, and reduce loneliness? A conceptual analysis. In Applications of Generative AI; Lyu, Z., Ed.; Springer International Publishing: Cham, Switzerland, 2024; pp. 261–276.
65. Zou, W.; Liu, Z.; Lin, C. The influence of individuals’ emotional involvement and perceived roles of AI chatbots on emotional self-efficacy. Inf. Commun. Soc. 2025, 28, 1–21.
66. Peng, C.; Zhang, S.; Wen, F.; Liu, K. How loneliness leads to the conversational AI usage intention: The roles of anthropomorphic interface, para-social interaction. Curr. Psychol. 2025, 44, 8177–8189.
67. Yan, W.; Xiaowei, G.; Xiaolin, Z. From Para-social Interaction to Attachment: The Evolution of Human-AI Emotional Relationships. J. Psychol. Sci. 2025, 48, 948–961.
68. Heng, S.; Zhang, Z. Attachment Anxiety and Problematic Use of Conversational Artificial Intelligence: Mediation of Emotional Attachment and Moderation of Anthropomorphic Tendencies. Psychol. Res. Behav. Manag. 2025, 18, 1775–1785.
69. Wu, X.; Liew, K.; Dorahy, M.J. Trust, Anxious Attachment, and Conversational AI Adoption Intentions in Digital Counseling: A Preliminary Cross-Sectional Questionnaire Study. JMIR AI 2025, 4, e68960.
70. Papacharissi, Z.; Rubin, A.M. Predictors of Internet use. J. Broadcast. Electron. Media 2000, 44, 175–196.
71. Whiting, A.; Williams, D. Why people use social media: A uses and gratifications approach. Qual. Mark. Res. Int. J. 2013, 16, 362–369.
72. Xie, Y.; Zhao, S.; Zhou, P.; Liang, C. Understanding continued use intention of AI assistants. J. Comput. Inf. Syst. 2023, 63, 1424–1437.
73. LaRose, R.; Eastin, M.S. A social cognitive theory of Internet uses and gratifications: Toward a new model of media attendance. J. Broadcast. Electron. Media 2004, 48, 358–377.
74. Venkatesh, V.; Thong, J.Y.; Xu, X. Consumer acceptance and use of information technology: Extending the unified theory of acceptance and use of technology. MIS Q. 2012, 36, 157–178.
75. Zhai, N.; Ma, X. Automated writing evaluation (AWE) feedback: A systematic investigation of college students’ acceptance. Comput. Assist. Lang. Learn. 2022, 35, 2817–2842.
76. Seok, J.; Lee, B.H.; Kim, D.; Bak, S.; Kim, S.; Kim, S.; Park, S. What Emotions and Personalities Determine Acceptance of Generative AI?: Focusing on the CASA Paradigm. Int. J. Hum.–Comput. Interact. 2025, 41, 11436–11458.
77. Wang, X.; Lin, X.; Shao, B. How does artificial intelligence create business agility? Evidence from chatbots. Int. J. Inf. Manag. 2022, 66, 102535.
78. Cheng, X.; Bao, Y.; Zarifis, A.; Gong, W.; Mou, J. Exploring consumers’ response to text-based chatbots in e-commerce: The moderating role of task complexity and chatbot disclosure. Internet Res. 2022, 32, 496–517.
79. Shen, X.; Wang, J.-L. Loneliness and excessive smartphone use among Chinese college students: Moderated mediation effect of perceived stressed and motivation. Comput. Hum. Behav. 2019, 95, 31–36.
80. Zhang, Y.; Li, Y.; Xia, M.; Han, M.; Yan, L.; Lian, S. The relationship between loneliness and mobile phone addiction among Chinese college students: The mediating role of anthropomorphism and moderating role of family support. PLoS ONE 2023, 18, e0285189.
81. Abd-Alrazaq, A.A.; Rababeh, A.; Alajlani, M.; Bewick, B.M.; Househ, M. Effectiveness and safety of using chatbots to improve mental health: Systematic review and meta-analysis. J. Med. Internet Res. 2020, 22, e16021.
82. Kim, M.; Lee, S.; Kim, S.; Heo, J.-I.; Lee, S.; Shin, Y.-B.; Cho, C.-H.; Jung, D. Therapeutic potential of social chatbots in alleviating loneliness and social anxiety: Quasi-experimental mixed methods study. J. Med. Internet Res. 2025, 27, e65589.
83. Xie, T.; Pentina, I.; Hancock, T. Friend, mentor, lover: Does chatbot engagement lead to psychological dependence? J. Serv. Manag. 2023, 34, 806–828.
84. Shawar, B.A.; Atwell, E. Chatbots: Are they really useful? J. Lang. Technol. Comput. Linguist. 2007, 22, 29–49.
85. Kwon, S.K.; Shin, D.; Lee, Y. The application of chatbot as an L2 writing practice tool. Lang. Learn. Technol. 2023, 27, 1–19.
86. Montag, C.; Elhai, J.D. Introduction of the AI-Interaction Positivity Scale and its relations to satisfaction with life and trust in ChatGPT. Comput. Hum. Behav. 2025, 172, 108705.
87. Kline, R.B. Principles and Practice of Structural Equation Modeling; Guilford Publications: New York, NY, USA, 2023.
88. Hair, J.F.; Risher, J.J.; Sarstedt, M.; Ringle, C.M. When to use and how to report the results of PLS-SEM. Eur. Bus. Rev. 2019, 31, 2–24.
89. Fornell, C.; Larcker, D.F. Evaluating structural equation models with unobservable variables and measurement error. J. Mark. Res. 1981, 18, 39–50.
90. Nunnally, J.; Bernstein, I. Psychometric Theory, 3rd ed.; McGraw-Hill: New York, NY, USA, 1994.
91. Henseler, J.; Ringle, C.M.; Sarstedt, M. A new criterion for assessing discriminant validity in variance-based structural equation modeling. J. Acad. Mark. Sci. 2015, 43, 115–135.
92. Hu, L.T.; Bentler, P.M. Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Struct. Equ. Model. A Multidiscip. J. 1999, 6, 1–55.
93. Jöreskog, K.G.; Sörbom, D. LISREL 8: Structural Equation Modeling with the SIMPLIS Command Language; Scientific Software International: Chapel Hill, NC, USA, 1993.
94. Cohen, J. Statistical Power Analysis for the Behavioral Sciences; Routledge: Abingdon-on-Thames, UK, 2013.
95. Hair, J.F. A Primer on Partial Least Squares Structural Equation Modeling (PLS-SEM); Sage: Thousand Oaks, CA, USA, 2014.
96. Lim, J.; Hwang, W. Proactivity of chatbots, task types and user’s characteristics when interacting with artificial intelligence (AI) chatbots. Int. J. Hum.–Comput. Interact. 2025, 41, 11848–11866.
97. Chen, Y.; Ma, X.; Wu, C. The concept, technical architecture, applications and impacts of satellite internet: A systematic literature review. Heliyon 2024, 10, e33793.
98. Zhang, K.; Xie, Y.; Chen, D.; Ji, Z.; Wang, J. Effects of attractions and social attributes on peoples’ usage intention and media dependence towards chatbot: The mediating role of parasocial interaction and emotional support. BMC Psychol. 2025, 13, 986.
99. Yeh, S.-F.; Wu, M.-H.; Chen, T.-Y.; Lin, Y.-C.; Chang, X.; Chiang, Y.-H.; Chang, Y.-J. How to guide task-oriented chatbot users, and when: A mixed-methods study of combinations of chatbot guidance types and timings. In Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems, New Orleans, LA, USA, 30 April–5 May 2022; pp. 1–16.
100. Lin, Z.; Ng, Y.-L. Unraveling gratifications, concerns, and acceptance of generative artificial intelligence. Int. J. Hum.–Comput. Interact. 2025, 41, 10725–10742.
101. Lee, J.H.; Shin, D.; Hwang, Y. Investigating the capabilities of large language model-based task-oriented dialogue chatbots from a learner’s perspective. System 2024, 127, 103538.
102. Sánchez Cuadrado, J.; Pérez-Soler, S.; Guerra, E.; De Lara, J. Automating the Development of Task-oriented LLM-based Chatbots. In Proceedings of the 6th ACM Conference on Conversational User Interfaces, Luxembourg, 8–10 June 2024; pp. 1–10.
103. Zhang, N.; Xu, J.; Zhang, X.; Wang, Y. Social robots supporting children’s learning and development: Bibliometric and visual analysis. Educ. Inf. Technol. 2024, 29, 12115–12142.
104. Wyatt, Z. Talk to Me: AI Companions in the Age of Disconnection. Med. Clin. Sci. 2025, 7, 050.
105. Adewale, M.D.; Muhammad, U.I. From virtual companions to forbidden attractions: The seductive rise of artificial intelligence love, loneliness, and intimacy—A systematic review. J. Technol. Behav. Sci. 2025, 3, 1–18.
106. Liu, A.R.; Pataranutaporn, P.; Maes, P. The Heterogeneous Effects of AI Companionship: An Empirical Model of Chatbot Usage and Loneliness and a Typology of User Archetypes. In Proceedings of the AAAI/ACM Conference on AI, Ethics, and Society, Madrid, Spain, 20–22 October 2025; pp. 1585–1597.
107. Huang, Y.; Huang, H. Exploring the effect of attachment on technology addiction to generative AI chatbots: A structural equation modeling analysis. Int. J. Hum.–Comput. Interact. 2025, 41, 9440–9449.
108. Dinh, C.-M.; Park, S. How to increase consumer intention to use Chatbots? An empirical analysis of hedonic and utilitarian motivations on social presence and the moderating effects of fear across generations. Electron. Commer. Res. 2024, 24, 2427–2467.
109. Wang, Y. Emotional Dependence Path of Artificial Intelligence Chatbot Based on Structural Equation Modeling. Procedia Comput. Sci. 2024, 247, 1089–1094.
110. Biswas, M.; Murray, J. “Incomplete Without Tech”: Emotional Responses and the Psychology of AI Reliance. In Proceedings of the Annual Conference Towards Autonomous Robotic Systems, London, UK, 21–23 August 2024; pp. 119–131.
111. Lee, Y.-F.; Hwang, G.-J.; Chen, P.-Y. Technology-based interactive guidance to promote learning performance and self-regulation: A chatbot-assisted self-regulated learning approach. Educ. Technol. Res. Dev. 2025, 73, 1–26.
112. Guan, R.; Raković, M.; Chen, G.; Gašević, D. How educational chatbots support self-regulated learning? A systematic review of the literature. Educ. Inf. Technol. 2025, 30, 4493–4518.
113. Saracini, C.; Cornejo-Plaza, M.I.; Cippitani, R. Techno-emotional projection in human–GenAI relationships: A psychological and ethical conceptual perspective. Front. Psychol. 2025, 16, 1662206.
114. Shen, J.; DiPaola, D.; Ali, S.; Sap, M.; Park, H.W.; Breazeal, C. Empathy toward artificial intelligence versus human experiences and the role of transparency in mental health and social support chatbot design: Comparative study. JMIR Ment. Health 2024, 11, e62679.

| Group | Construct | Definition in This Study | Supporting Literature |
|---|---|---|---|
| Mediator | Cognitive Reliance | The extent to which individuals delegate cognitive tasks to external agents, such as chatbots, to simplify decision-making or problem-solving. | [39] |
| Mediator | Emotional Attachment | A user’s affective bond with a chatbot, marked by feelings of closeness, comfort, and emotional security. | [40] |
| Instrumental Motivation | Information-Seeking | The deliberate use of chatbots to acquire relevant or novel information to fulfill cognitive needs. | [41] |
| Instrumental Motivation | Efficiency | The desire to use chatbots to save time, reduce effort, and streamline tasks. | [42] |
| Instrumental Motivation | Entertainment | The use of chatbots for enjoyment, amusement, and emotional satisfaction. | [43] |
| Affective Motivation | Companionship | The desire to experience social connection or relational closeness via chatbot interaction. | [21] |
| Affective Motivation | Loneliness | A perceived gap between desired and actual social relationships, driving engagement with emotionally responsive chatbots. | [44] |
| Affective Motivation | Anxiety | A negative emotional state that motivates users to seek comfort, control, or predictability through chatbot interaction. | [45] |

| Variable | Category | Frequency (n) | Percentage (%) |
|---|---|---|---|
| Gender | Male | 161 | 45.48 |
| Gender | Female | 192 | 54.24 |
| Gender | Prefer not to say | 1 | 0.28 |
| Age (years) | 18–24 | 238 | 67.23 |
| Age (years) | 25–34 | 97 | 27.40 |
| Age (years) | 35–45 | 19 | 5.37 |
| Education Level | Undergraduate | 202 | 57.06 |
| Education Level | Master’s | 118 | 33.33 |
| Education Level | Doctoral | 12 | 3.39 |
| Education Level | Other | 22 | 6.22 |
| Occupation | Student | 218 | 61.58 |
| Occupation | Working professional | 123 | 34.75 |
| Occupation | Other | 13 | 3.67 |
| Chatbot Usage Frequency | Daily | 136 | 38.42 |
| Chatbot Usage Frequency | Several times per week | 151 | 42.65 |
| Chatbot Usage Frequency | Occasionally | 67 | 18.93 |
| AI Chatbot Used in the Past Month | DeepSeek R1 | 110 | 31.07 |
| AI Chatbot Used in the Past Month | Baidu Ernie Bot 4.0 | 82 | 23.16 |
| AI Chatbot Used in the Past Month | Doubao 9.0 | 70 | 19.77 |
| AI Chatbot Used in the Past Month | ChatGPT-4o (third-party access) | 40 | 11.30 |
| AI Chatbot Used in the Past Month | Tencent Hunyuan T1 | 25 | 7.15 |
| AI Chatbot Used in the Past Month | Others (e.g., iFlytek Spark 4.0, Kimi1.5, and Qwen3) | 27 | 7.55 |

| Construct/Items | Mean | Std. Dev. | Standardized Factor Loading (>0.70) | Cronbach’s α (>0.70) | Composite Reliability (>0.70) | Average Variance Extracted (>0.50) |
|---|---|---|---|---|---|---|
| AI Chatbot Dependency | | | | 0.875 | 0.913 | 0.777 |
| AICD1 | 4.221 | 0.687 | 0.895 | | | |
| AICD2 | 4.353 | 0.713 | 0.882 | | | |
| AICD3 | 4.271 | 0.664 | 0.867 | | | |
| Cognitive Reliance | | | | 0.879 | 0.922 | 0.797 |
| CR1 | 4.262 | 0.657 | 0.889 | | | |
| CR2 | 4.414 | 0.632 | 0.907 | | | |
| CR3 | 4.332 | 0.614 | 0.882 | | | |
| Emotional Attachment | | | | 0.868 | 0.906 | 0.763 |
| EA1 | 4.011 | 0.697 | 0.874 | | | |
| EA2 | 4.092 | 0.683 | 0.860 | | | |
| EA3 | 4.115 | 0.651 | 0.886 | | | |
| Information-Seeking | | | | 0.871 | 0.916 | 0.785 |
| IS1 | 4.313 | 0.650 | 0.892 | | | |
| IS2 | 4.237 | 0.682 | 0.861 | | | |
| IS3 | 4.370 | 0.701 | 0.905 | | | |
| Efficiency | | | | 0.884 | 0.919 | 0.790 |
| EF1 | 4.452 | 0.602 | 0.901 | | | |
| EF2 | 4.328 | 0.619 | 0.879 | | | |
| EF3 | 4.381 | 0.670 | 0.887 | | | |
| Entertainment | | | | 0.841 | 0.858 | 0.669 |
| EN1 | 3.892 | 0.782 | 0.823 | | | |
| EN2 | 4.003 | 0.747 | 0.831 | | | |
| EN3 | 3.951 | 0.723 | 0.799 | | | |
| Companionship | | | | 0.849 | 0.865 | 0.682 |
| CP1 | 3.943 | 0.731 | 0.812 | | | |
| CP2 | 3.893 | 0.763 | 0.837 | | | |
| CP3 | 3.981 | 0.700 | 0.828 | | | |
| Loneliness | | | | 0.881 | 0.909 | 0.769 |
| LO1 | 4.052 | 0.679 | 0.878 | | | |
| LO2 | 4.120 | 0.642 | 0.892 | | | |
| LO3 | 4.081 | 0.663 | 0.861 | | | |
| Anxiety | | | | 0.866 | 0.901 | 0.752 |
| AN1 | 4.176 | 0.721 | 0.869 | | | |
| AN2 | 4.104 | 0.703 | 0.855 | | | |
| AN3 | 4.158 | 0.712 | 0.878 | | | |
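The composite reliability and average variance extracted in the table above can be recomputed from the standardized loadings. The sketch below uses the standard Fornell and Larcker formulas, which is an assumption about how the reported values were obtained; the function names are illustrative and do not come from the source. With the three AI Chatbot Dependency loadings, it reproduces the reported 0.913 and 0.777.

```python
from math import fsum


def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized loadings."""
    return fsum(l * l for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """CR: (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances).

    Each item's error variance is taken as 1 - loading^2, which holds for
    standardized loadings.
    """
    total = fsum(loadings)
    error = fsum(1 - l * l for l in loadings)
    return total ** 2 / (total ** 2 + error)


# Standardized loadings for AICD1, AICD2, AICD3 as listed in the table.
aicd_loadings = [0.895, 0.882, 0.867]

print(round(average_variance_extracted(aicd_loadings), 3))  # 0.777
print(round(composite_reliability(aicd_loadings), 3))       # 0.913
```

The same two calls with the Entertainment loadings (0.823, 0.831, 0.799) give 0.669 and 0.858, again matching the table.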

| Constructs | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|
| 1. AI Chatbot Dependency | | | | | | | | | |
| 2. Cognitive Reliance | 0.763 | | | | | | | | |
| 3. Emotional Attachment | 0.792 | 0.721 | | | | | | | |
| 4. Information-Seeking | 0.692 | 0.749 | 0.664 | | | | | | |
| 5. Efficiency | 0.747 | 0.733 | 0.683 | 0.702 | | | | | |
| 6. Entertainment | 0.717 | 0.699 | 0.765 | 0.662 | 0.666 | | | | |
| 7. Companionship | 0.744 | 0.678 | 0.804 | 0.655 | 0.662 | 0.761 | | | |
| 8. Loneliness | 0.724 | 0.707 | 0.780 | 0.635 | 0.651 | 0.700 | 0.686 | | |
| 9. Anxiety | 0.713 | 0.705 | 0.770 | 0.626 | 0.648 | 0.713 | 0.714 | 0.730 | |
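The matrix above carries no caption in this extract. Assuming it reports heterotrait-monotrait (HTMT) ratios, as the empty diagonal and the citation of Henseler et al. suggest, discriminant validity is usually judged against a cutoff of 0.85 (conservative) or 0.90 (liberal). The sketch below scans the lower triangle for pairs at or above a chosen cutoff; the helper names are hypothetical.

```python
NAMES = [
    "AI Chatbot Dependency", "Cognitive Reliance", "Emotional Attachment",
    "Information-Seeking", "Efficiency", "Entertainment",
    "Companionship", "Loneliness", "Anxiety",
]

# Lower triangle copied from the table, one list per row for constructs 2-9.
LOWER_TRIANGLE = [
    [0.763],
    [0.792, 0.721],
    [0.692, 0.749, 0.664],
    [0.747, 0.733, 0.683, 0.702],
    [0.717, 0.699, 0.765, 0.662, 0.666],
    [0.744, 0.678, 0.804, 0.655, 0.662, 0.761],
    [0.724, 0.707, 0.780, 0.635, 0.651, 0.700, 0.686],
    [0.713, 0.705, 0.770, 0.626, 0.648, 0.713, 0.714, 0.730],
]


def construct_pairs():
    """Yield (row construct, column construct, value) for every pair."""
    for i, row in enumerate(LOWER_TRIANGLE, start=1):
        for j, value in enumerate(row):
            yield NAMES[i], NAMES[j], value


def pairs_at_or_above(cutoff):
    """Return the construct pairs whose value meets or exceeds the cutoff."""
    return [pair for pair in construct_pairs() if pair[2] >= cutoff]


largest = max(construct_pairs(), key=lambda pair: pair[2])
print(largest)                  # ('Companionship', 'Emotional Attachment', 0.804)
print(pairs_at_or_above(0.85))  # []
```

Under that assumption, every pair stays below the conservative 0.85 cutoff, with Companionship and Emotional Attachment the closest at 0.804.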

| Fit Index | Value | Recommended Threshold | Reference |
|---|---|---|---|
| χ²/df | 2.360 | <3.0 | Hair et al. [88] |
| CFI | 0.961 | >0.90 (good), >0.95 (excellent) | Hu and Bentler [92] |
| TLI | 0.948 | >0.90 | Hu and Bentler [92] |
| RMSEA | 0.047 | <0.08 (acceptable), <0.05 (excellent) | Kline [87] |
| SRMR | 0.041 | <0.08 | Hu and Bentler [92] |
| GFI | 0.931 | >0.90 | Jöreskog and Sörbom [93] |

| Hypothesis | Structural Path | Path Coefficient | R² | f² | Empirical Evidence |
|---|---|---|---|---|---|
| H1 | CR → AICD | 0.473 | 0.592 | 0.164 | Supported |
| H2 | EA → AICD | 0.360 | 0.592 | 0.098 | Supported |
| H3 | IS → CR | 0.422 | 0.523 | 0.168 | Supported |
| H4 | EF → CR | 0.349 | 0.523 | 0.084 | Supported |
| H5 | EN → CR | 0.071 | 0.523 | 0.031 | Not supported |
| H6 | CP → EA | 0.243 | 0.471 | 0.076 | Supported |
| H7 | LO → EA | 0.389 | 0.471 | 0.151 | Supported |
| H8 | AN → EA | 0.221 | 0.471 | 0.076 | Supported |
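The effect sizes in the table above can be read against Cohen's conventional benchmarks for f² (0.02 small, 0.15 medium, 0.35 large). The sketch below labels each reported value accordingly. The cutoffs are the usual convention and an assumption here, since this extract does not state which benchmarks the authors applied, and the function name is illustrative.

```python
def effect_size_label(f2):
    """Map an f-squared value to Cohen's conventional size label."""
    if f2 >= 0.35:
        return "large"
    if f2 >= 0.15:
        return "medium"
    if f2 >= 0.02:
        return "small"
    return "negligible"


# f-squared values copied from the structural-model table.
F_SQUARED = {
    "H1": 0.164, "H2": 0.098, "H3": 0.168, "H4": 0.084,
    "H5": 0.031, "H6": 0.076, "H7": 0.151, "H8": 0.076,
}

labels = {hypothesis: effect_size_label(value)
          for hypothesis, value in F_SQUARED.items()}

for hypothesis, label in labels.items():
    print(hypothesis, label)
```

By these benchmarks H1, H3, and H7 are medium effects and the remaining paths are small. H5 still clears the 0.02 floor even though its path is not supported, which shows that effect size and statistical support are separate questions.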

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhai, N.; Ma, X.; Ding, X. Unpacking AI Chatbot Dependency: A Dual-Path Model of Cognitive and Affective Mechanisms. Information 2025, 16, 1025. https://doi.org/10.3390/info16121025
