Understanding the Rise of Automated Machine Learning: A Global Overview and Topic Analysis

Abstract

1. Introduction

- a systematic overview of the existing literature on AutoML and its current applications;

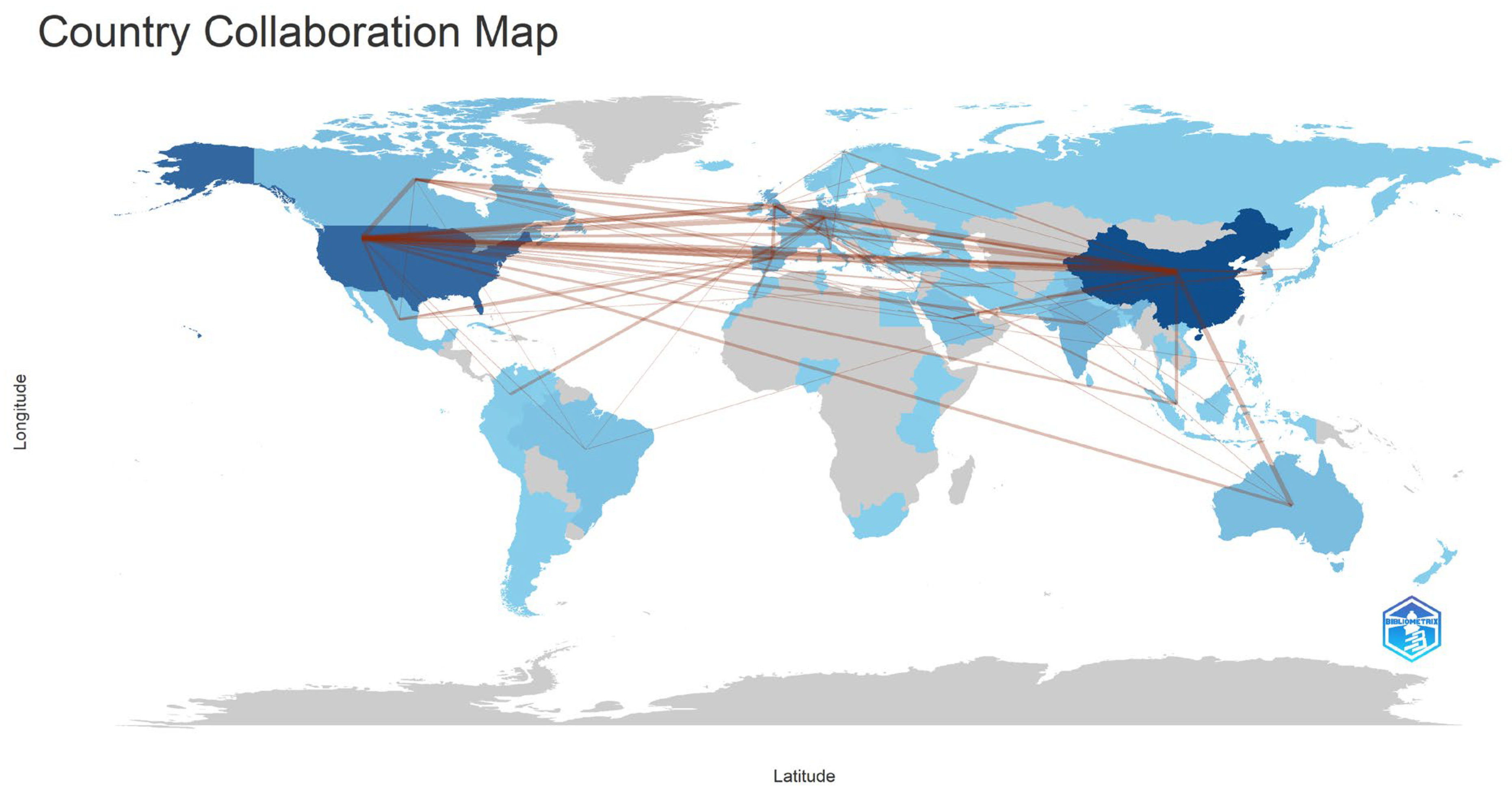

- identification of leading authors, institutions, and countries that drive innovation in this area, guiding new researchers in identifying potential collaborators;

- revealing the main themes and patterns in AutoML research, such as the growth rate of publications, shifts in focus areas, and emerging topics, which helps researchers align their studies with current and future research areas;

- facilitating interdisciplinary collaboration by mapping the connections between AutoML and other fields, leading to innovative approaches and solutions based on the expertise of researchers from different disciplines;

- establishing a framework for future studies by synthesizing the existing knowledge and by identifying key areas for future research.

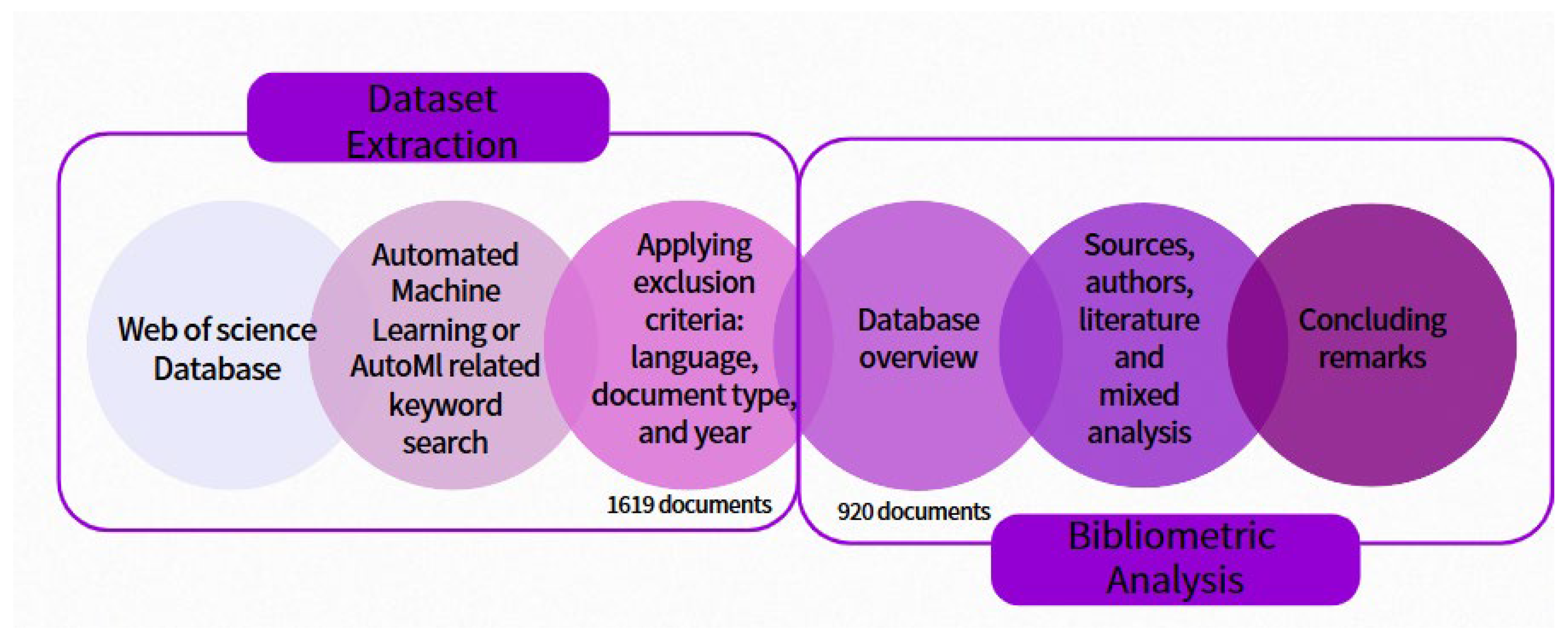

2. Materials and Methods

2.1. Dataset Extraction

- Science Citation Index Expanded (SCIE)—1900–present;

- Social Sciences Citation Index (SSCI) 1975–present;

- Arts & Humanities Citation Index (A&HCI)—1975–present;

- Emerging Sources Citation Index (ESCI) 2005–present;

- Conference Proceedings Citation Index—Science (CPCI-S)—1990–present;

- Conference Proceedings Citation Index—Social Sciences and Humanities (CPCI-SSH)—1990–present;

- Book Citation Index—Science (BKCI-S)—2010–present;

- Book Citation Index—Social Sciences and Humanities (BKCI-SSH)—2010–present;

- Current Chemical Reactions (CCR-Expanded)—2010–present;

- Index Chemicus (IC)—2010–present.

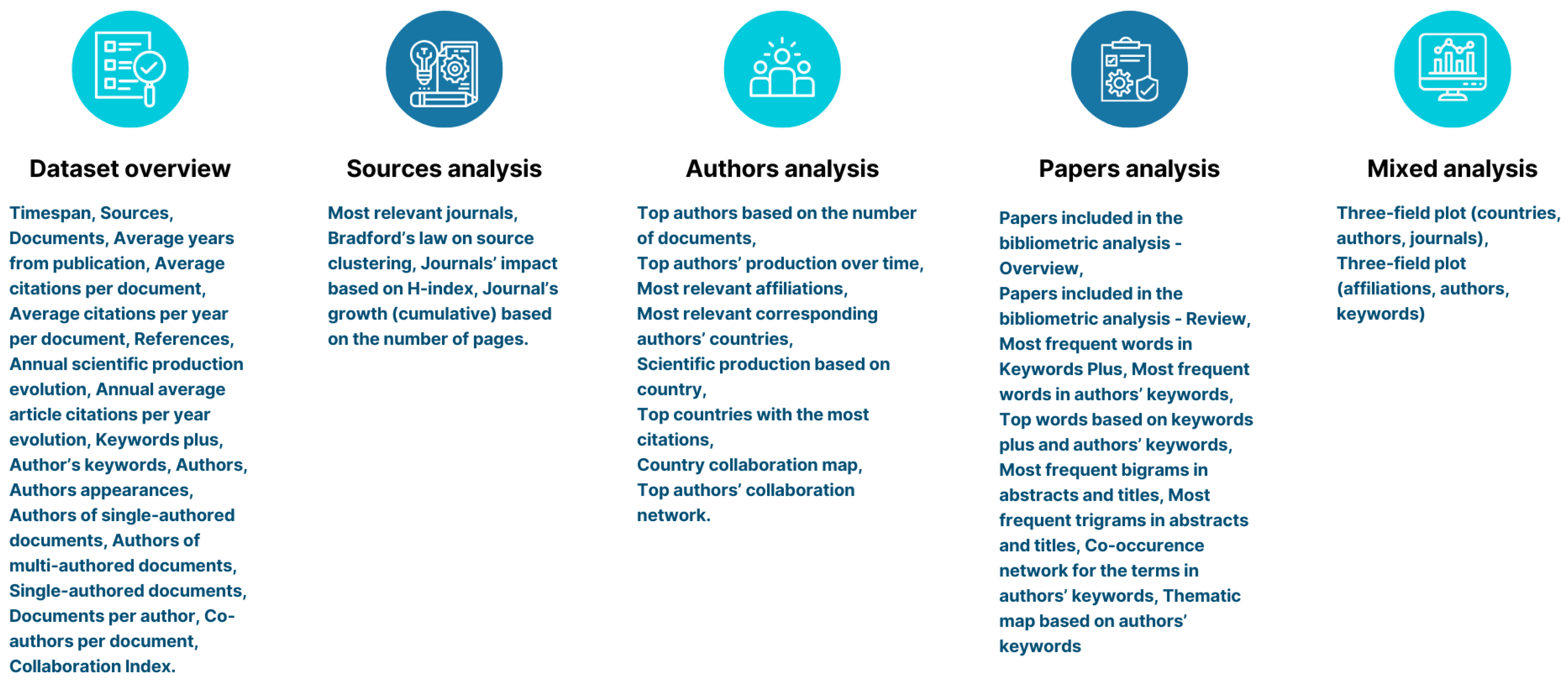

2.2. Bibliometric Analysis

2.3. Topic Analysis

3. Results

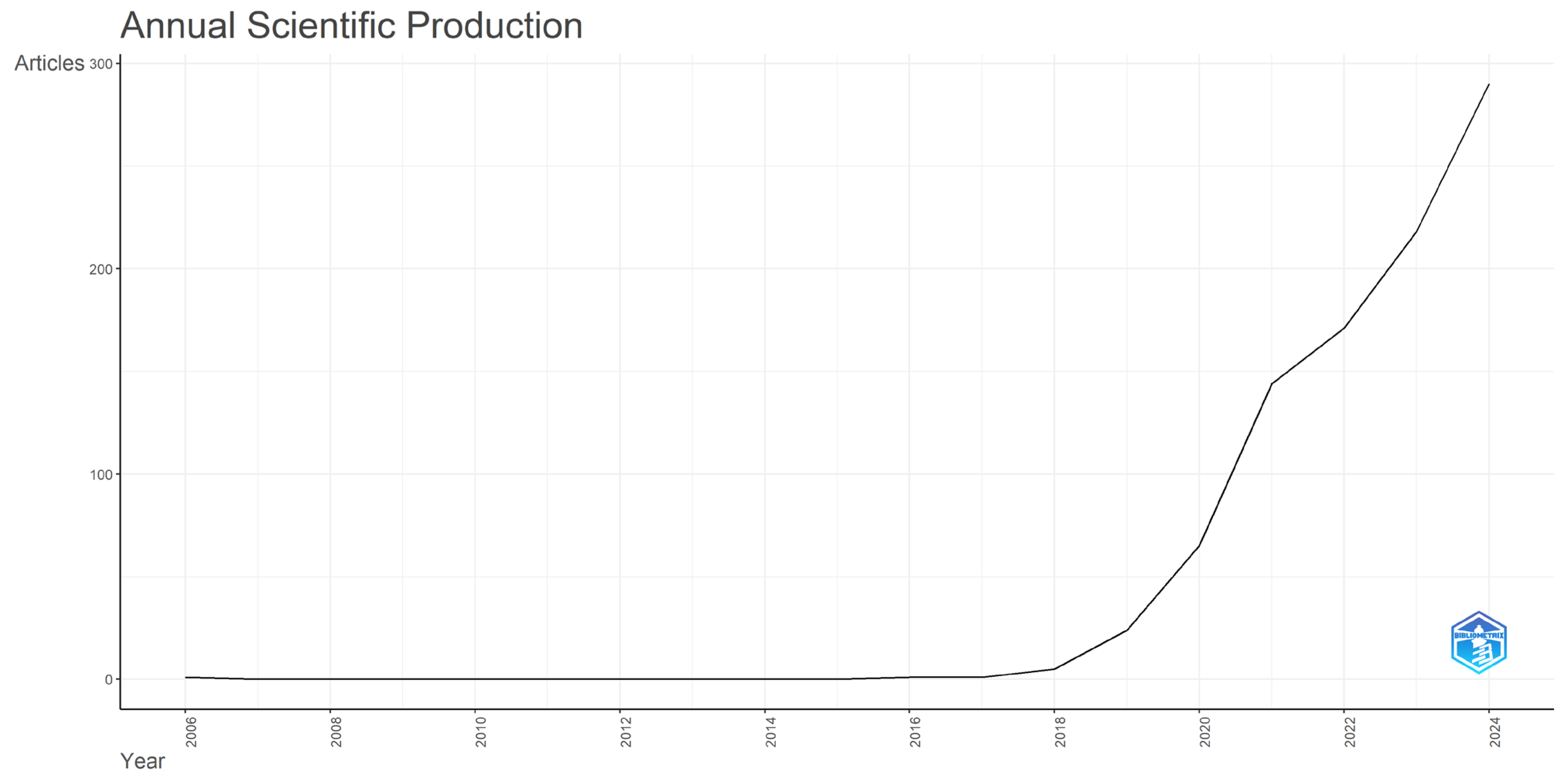

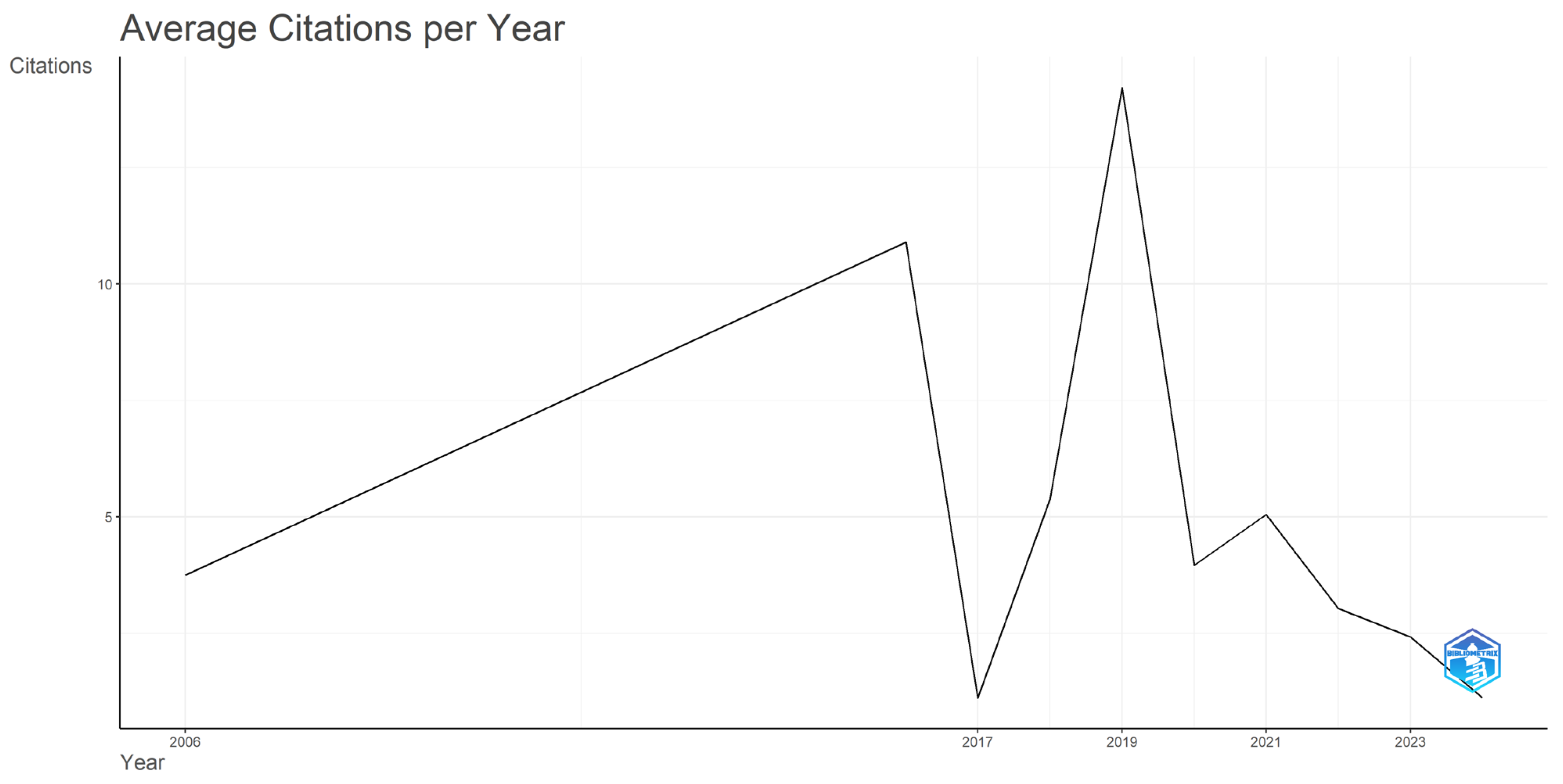

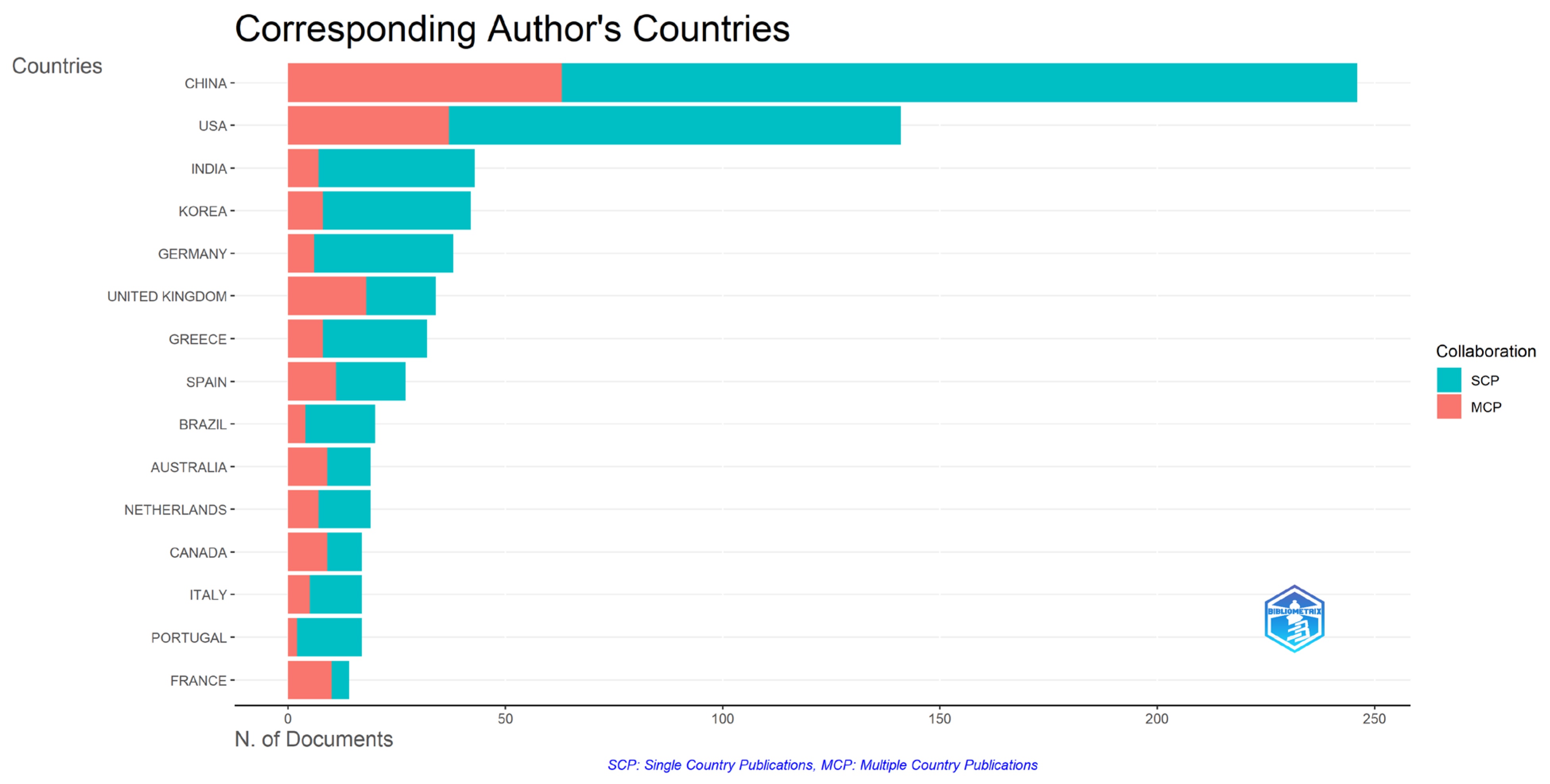

3.1. Dataset Overview

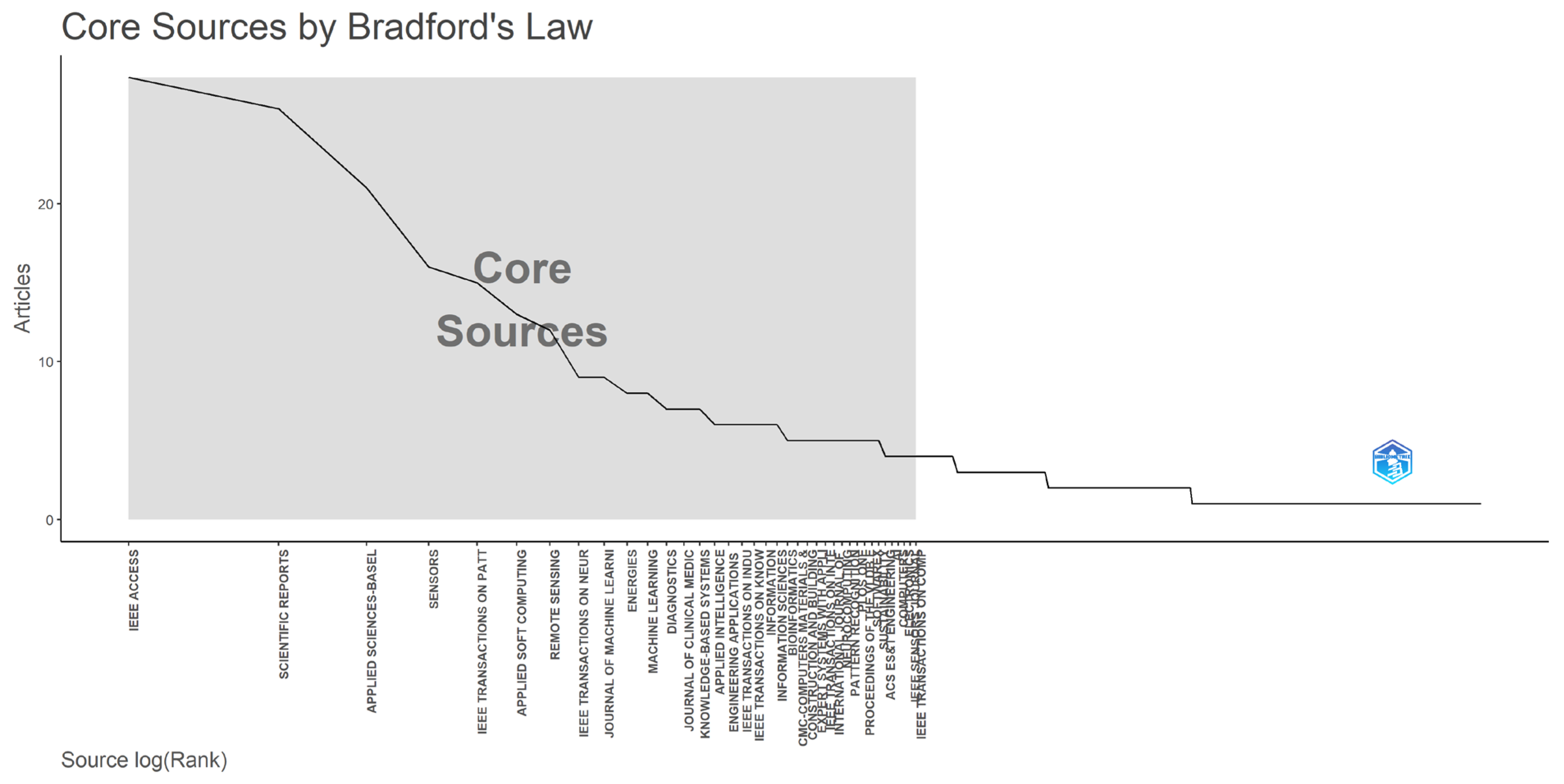

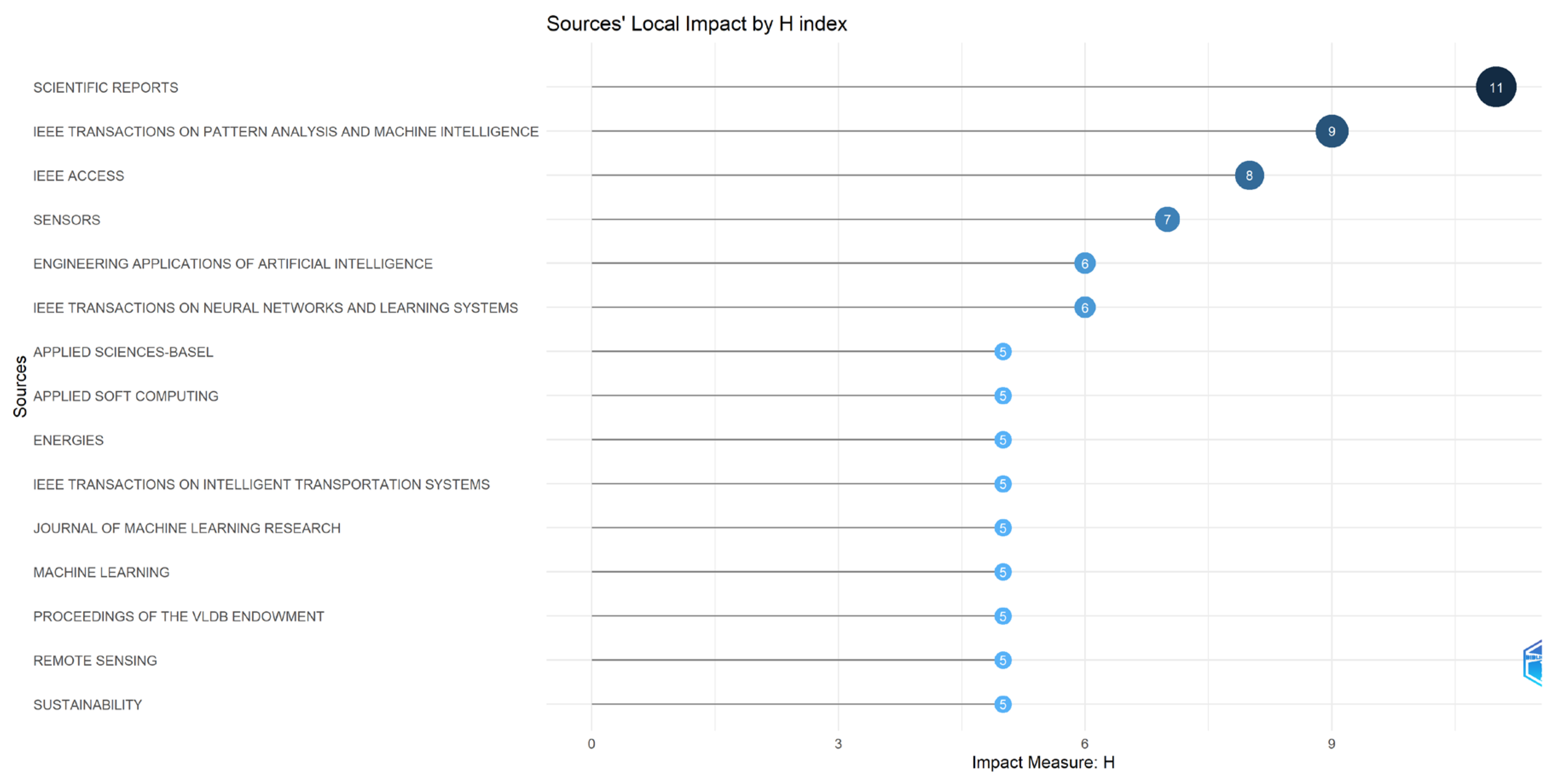

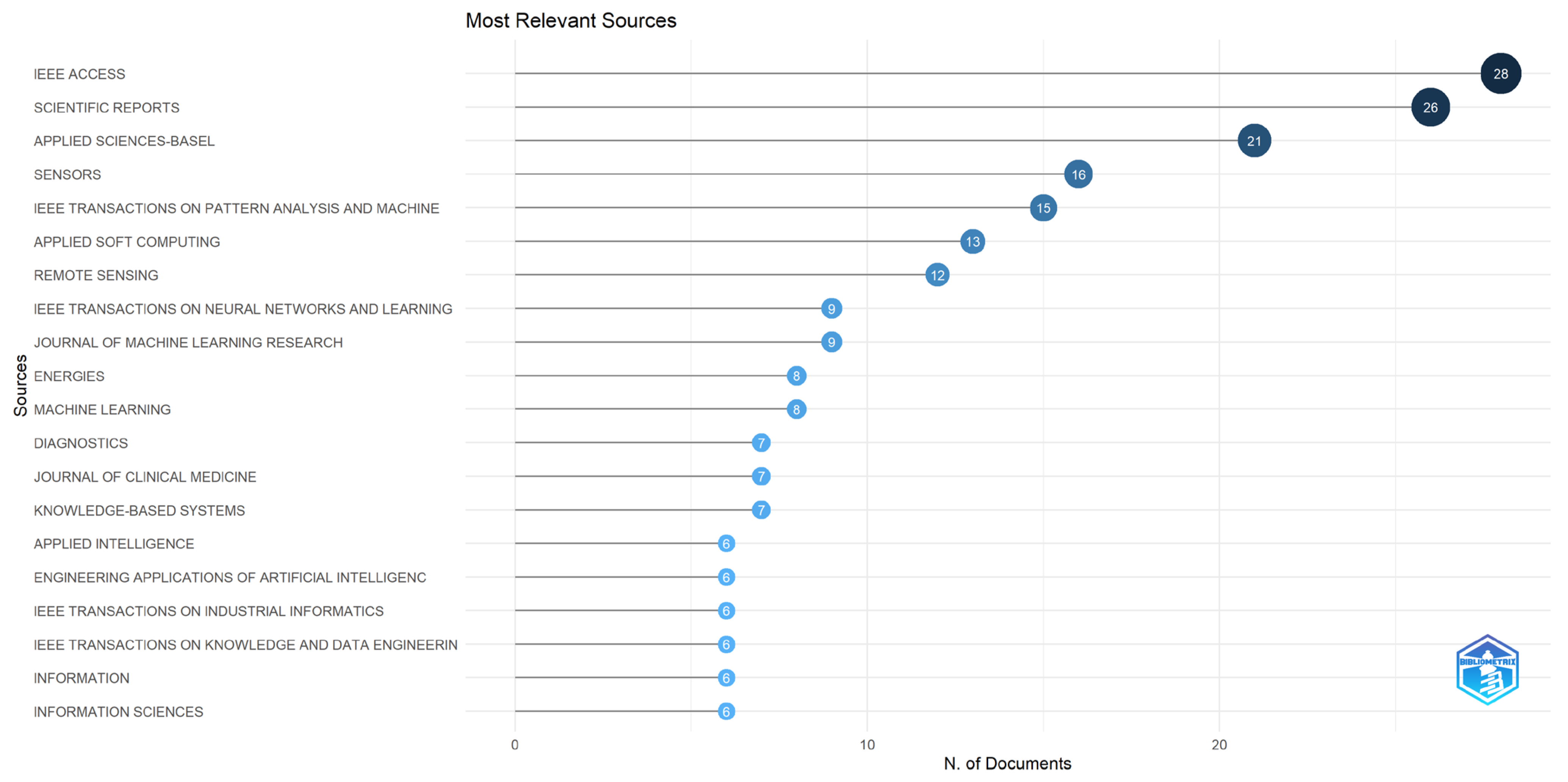

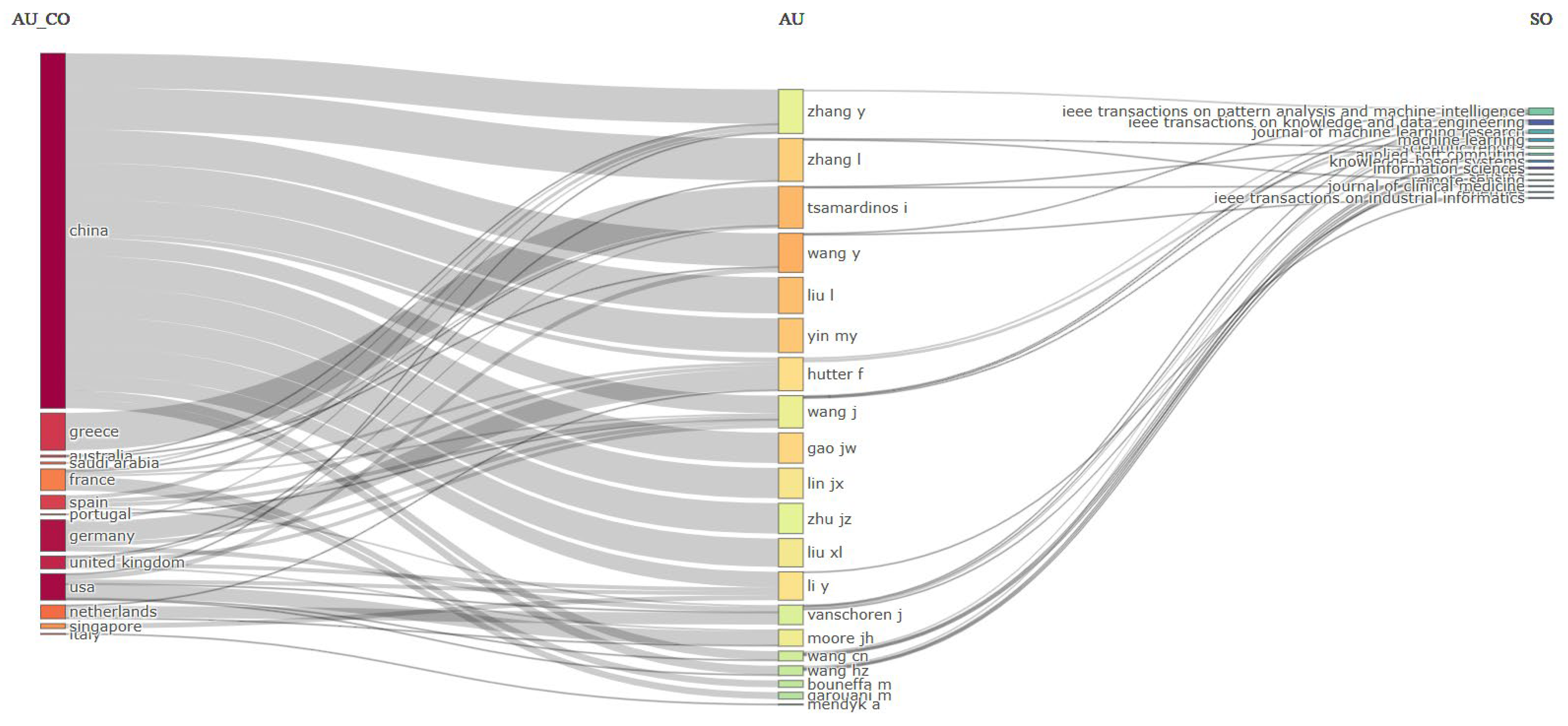

3.2. Sources

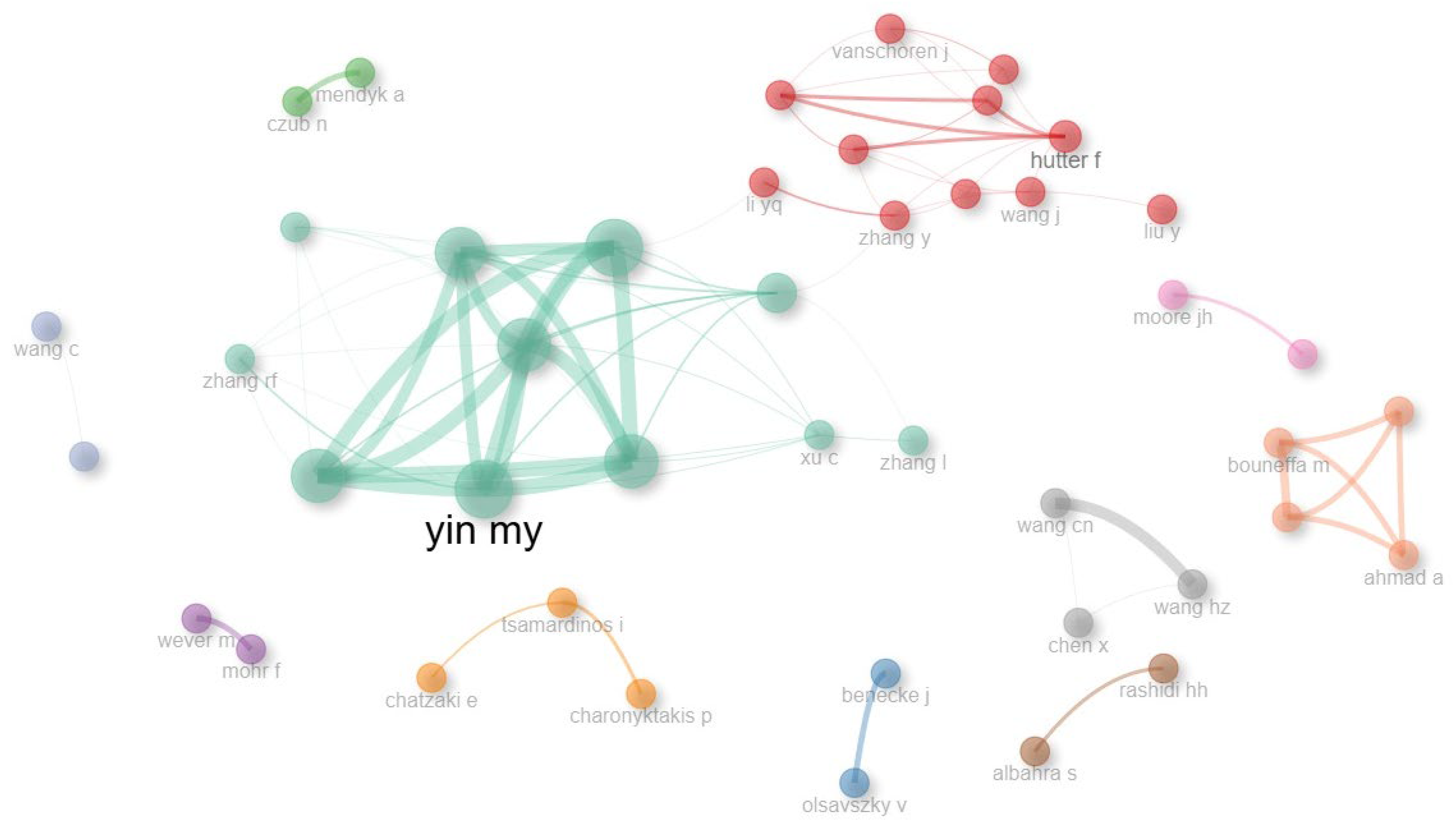

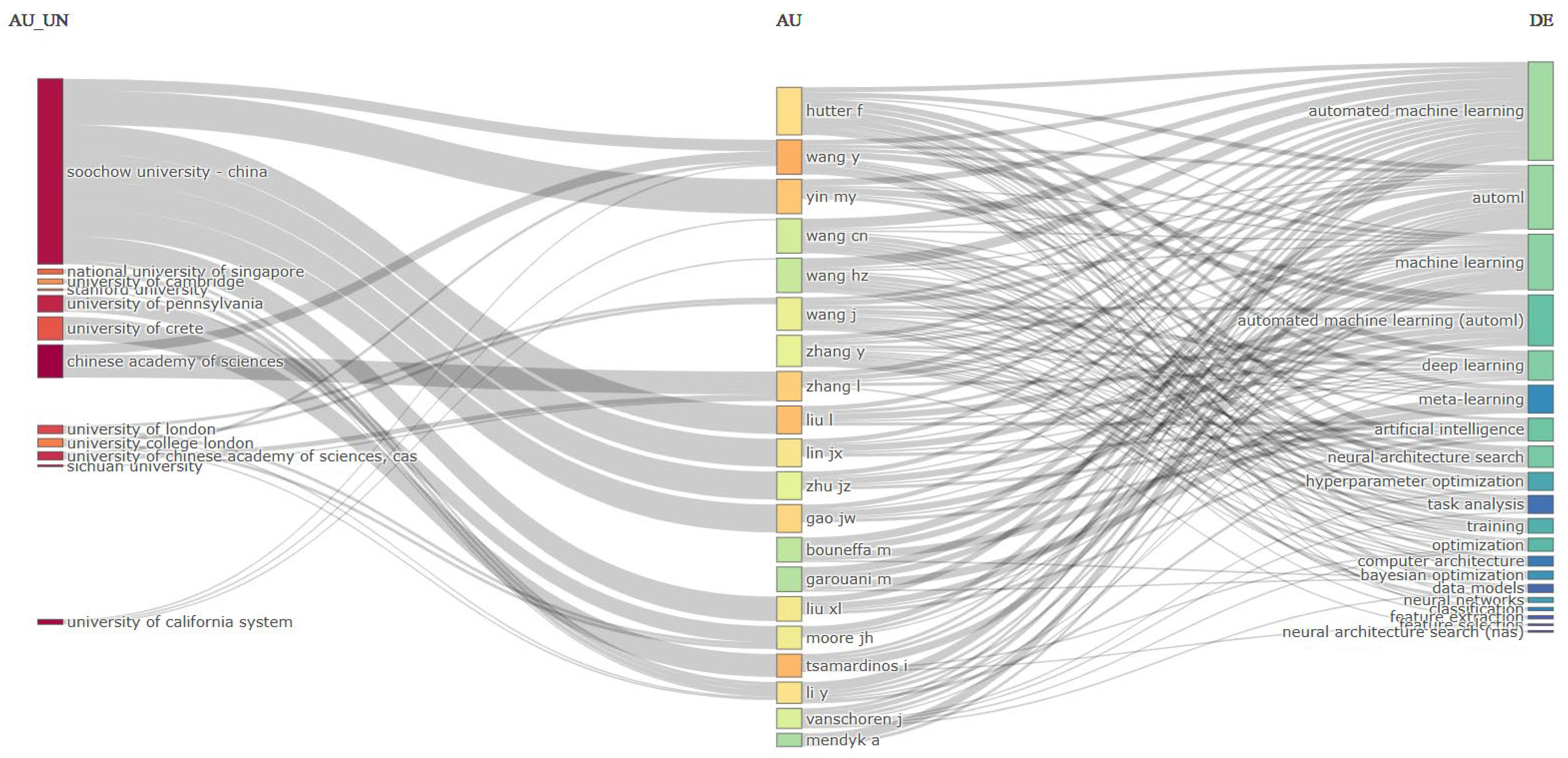

3.3. Authors

3.4. Analysis of the Literature

3.4.1. Top Ten Most Cited Papers—Overview

3.4.2. Top Ten Most Cited Papers—Review

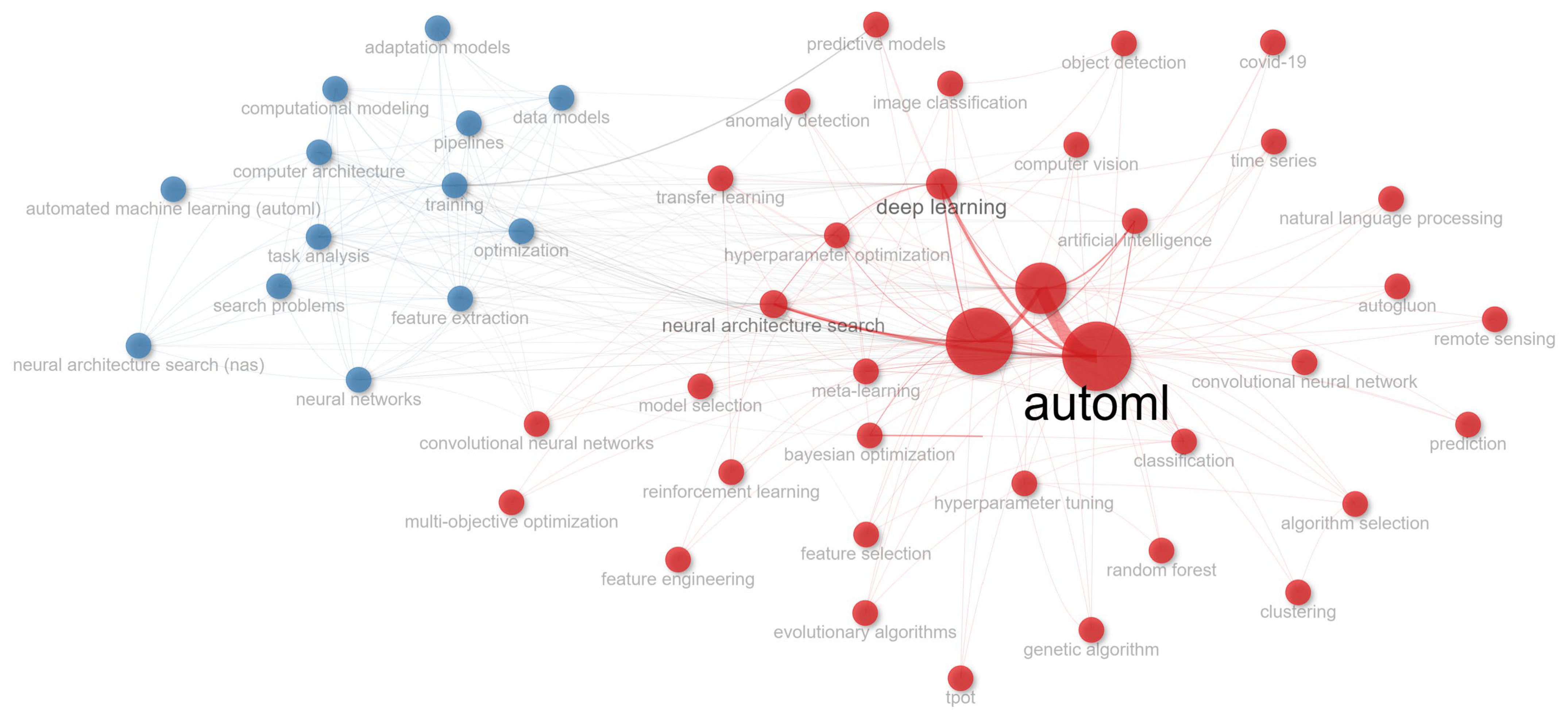

3.4.3. Words Analysis

3.5. Mixed Analysis

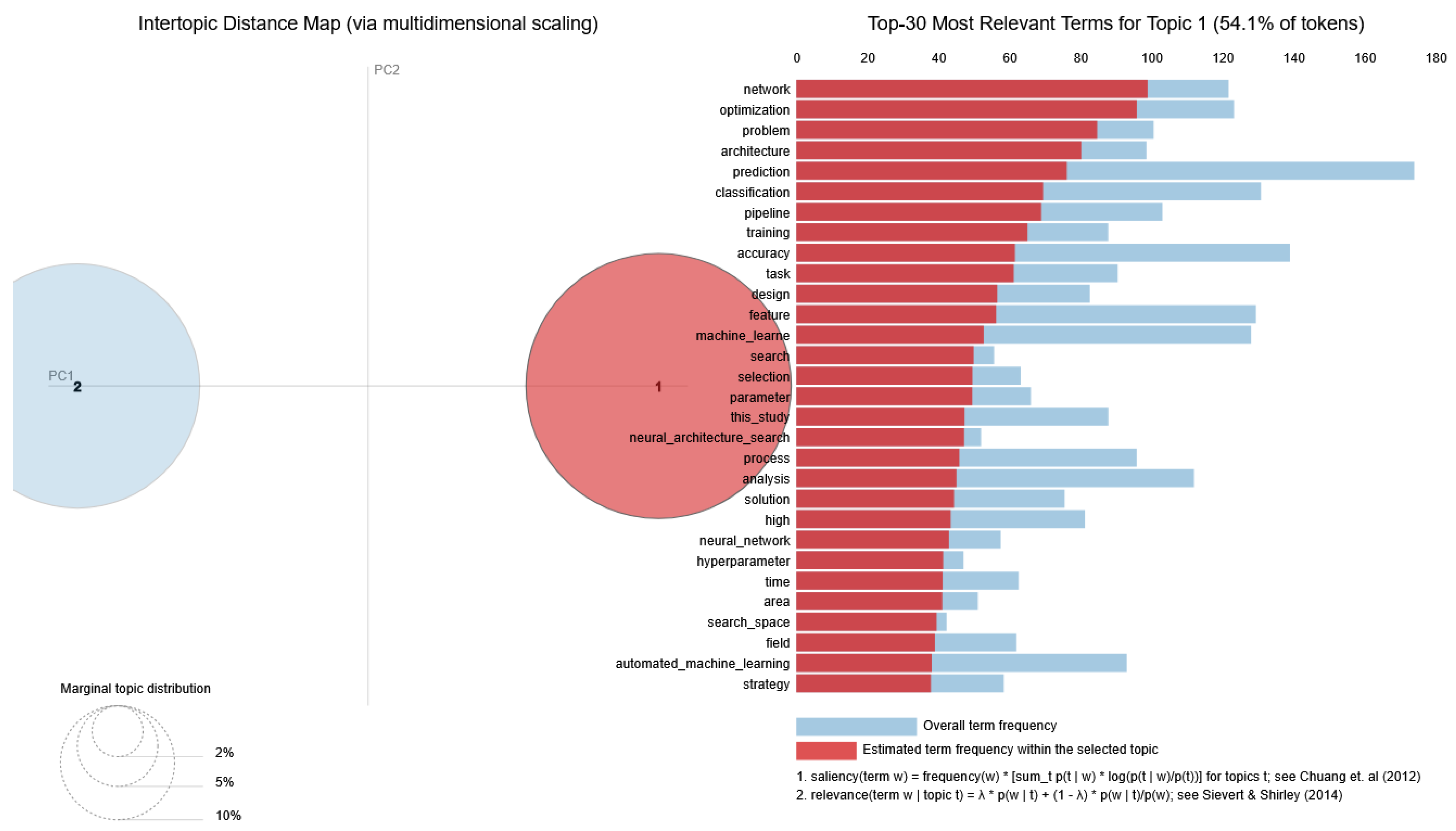

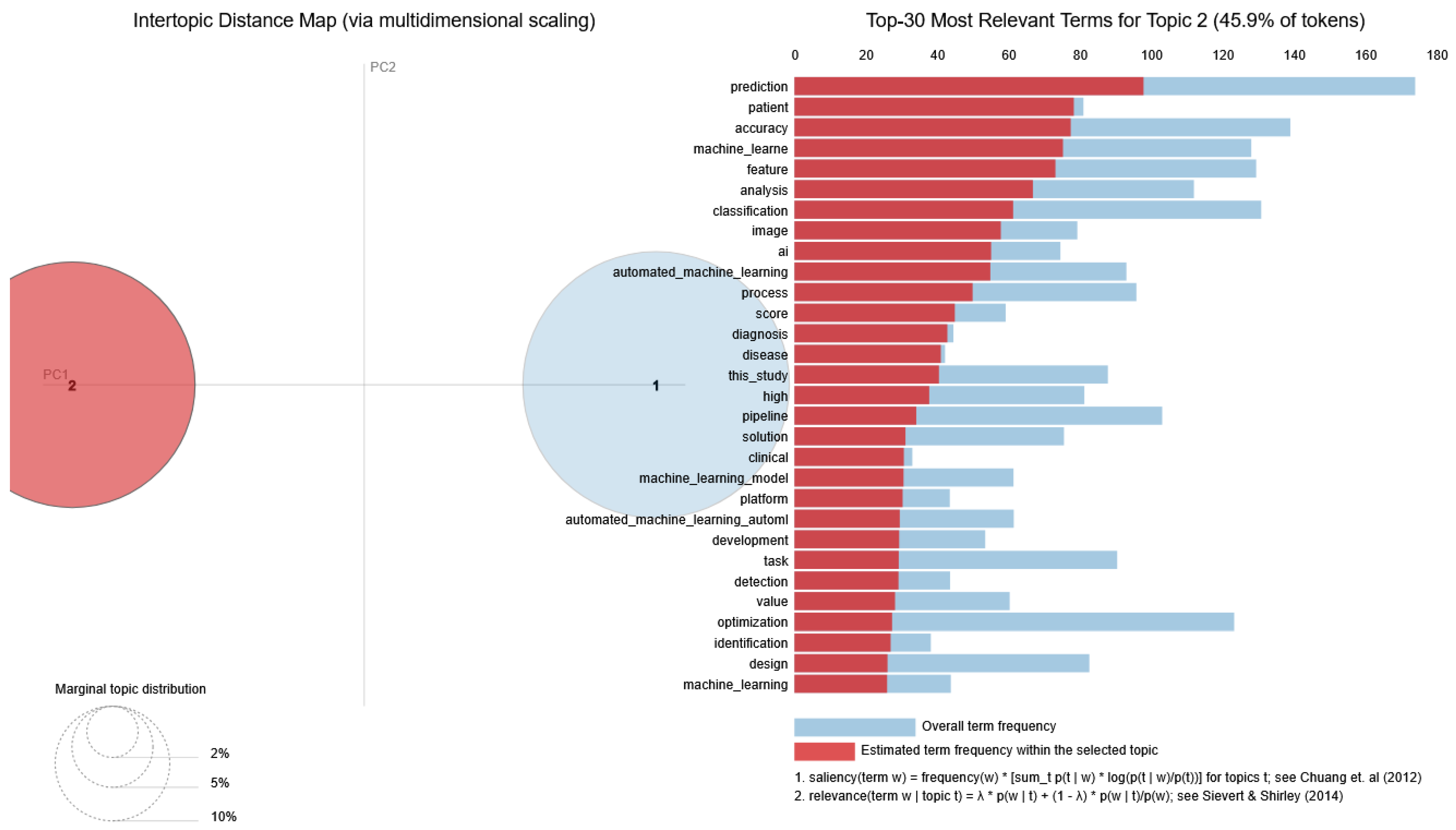

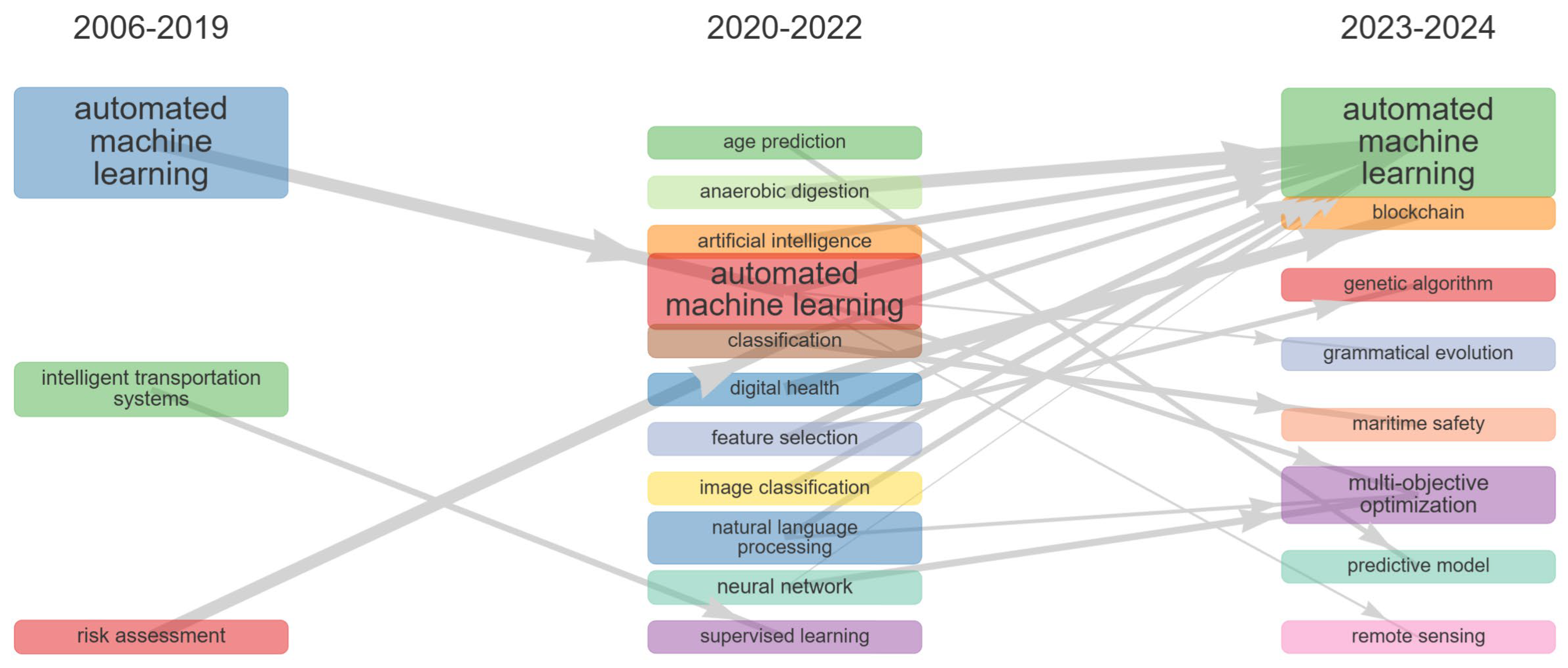

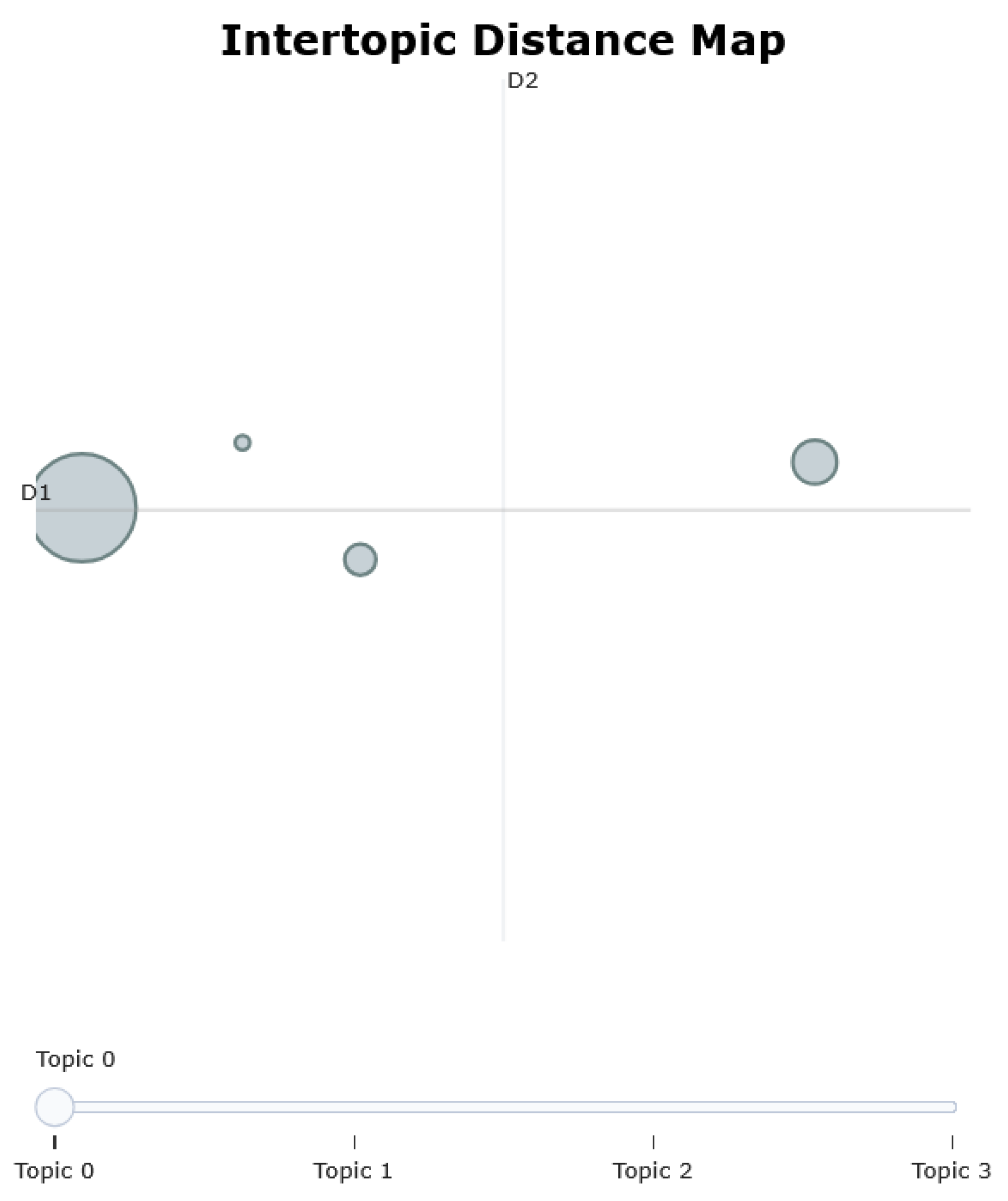

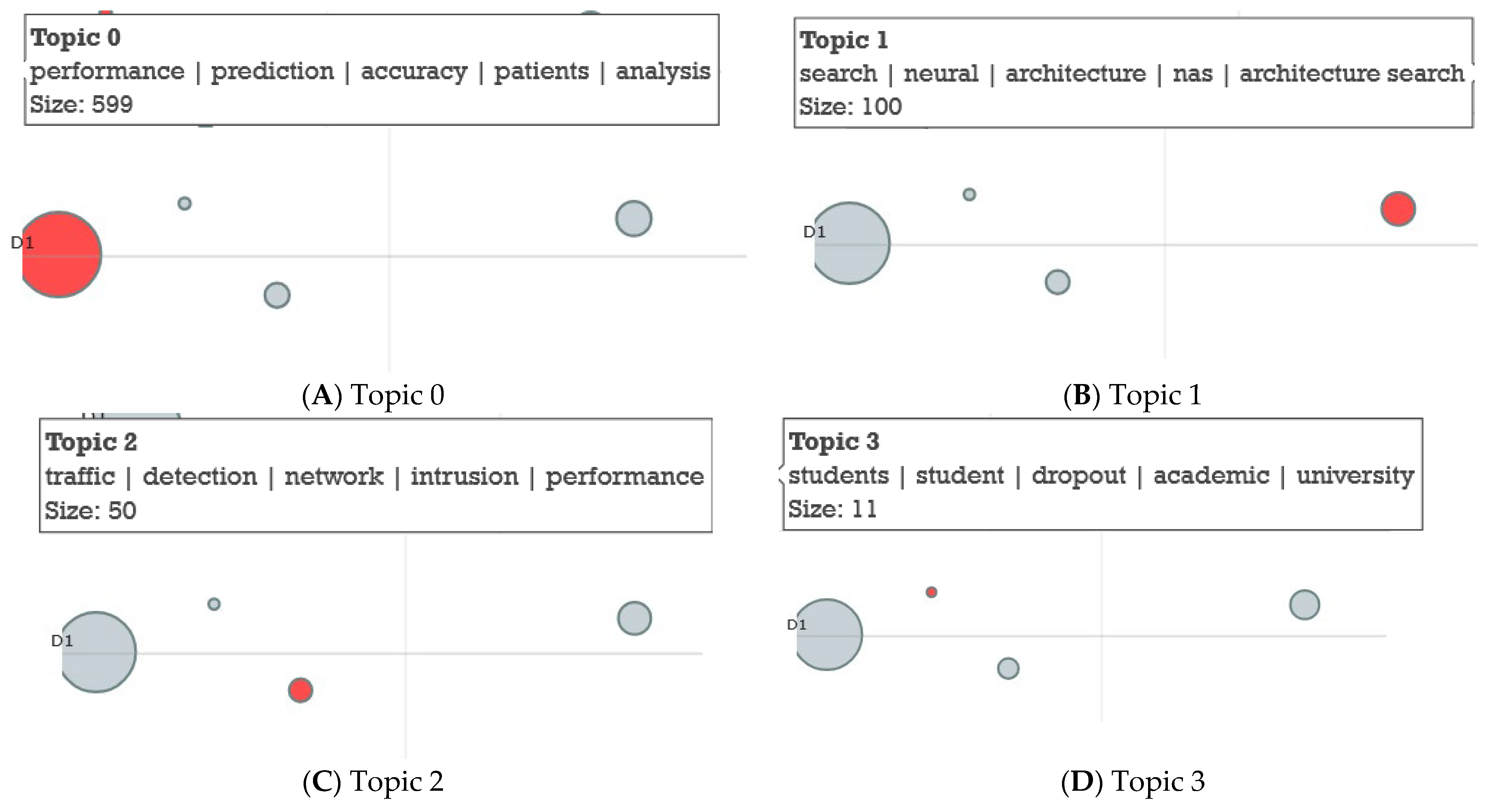

3.6. Topic Analysis

4. Discussions and Limitations

4.1. Bibliometric Analysis Results and Comparison with Other Studies

4.2. Topics Versus Themes Discussion

4.3. Discussions of Specific Themes

4.3.1. Implications of AutoML in Medicine

4.3.2. Implications of AutoML in Finance

4.4. Key Limitations to Applicability of AutoML

4.5. Limitations Related to Dataset Extraction and Used Database

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

Appendix A.1. Bradford’s Law on Source Clustering
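As an illustration of the idea behind Bradford's law, the sketch below ranks sources by article count and splits them into three zones that each hold roughly one-third of the articles. The function name `bradford_zones`, the zoning rule, and the journal counts are assumptions made for illustration; they are not taken from the dataset or the tooling used in this study.

```python
def bradford_zones(articles_per_source):
    """Split sources into three zones holding roughly a third of the articles each.

    Illustrative zoning rule (an assumption): a source is placed in the zone
    that contains the cumulative article share reached just before it.
    """
    # Rank sources from most to least productive.
    ranked = sorted(articles_per_source.items(), key=lambda kv: kv[1], reverse=True)
    total = sum(count for _, count in ranked)
    zones = {1: [], 2: [], 3: []}
    if total == 0:
        return zones
    cumulative = 0
    for name, count in ranked:
        zone = min(3, (3 * cumulative) // total + 1)
        zones[zone].append(name)
        cumulative += count
    return zones


# Hypothetical article counts per journal (not real data).
example_counts = {"A": 30, "B": 15, "C": 15, "D": 10, "E": 10, "F": 5, "G": 5}
zones = bradford_zones(example_counts)
for zone, sources in zones.items():
    print(zone, sources)
```

In this toy example the zones contain 1, 2, and 4 sources, which mirrors the 1 : n : n² pattern that Bradford's law describes, here with n = 2.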

Appendix A.2. Journals’ Impact Based on H-Index
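For readers unfamiliar with the indicator, the h-index of a source (or author) is the largest number h such that h of its papers have at least h citations each. A minimal sketch of that definition follows; the function name `h_index` and the citation counts are illustrative assumptions, not values from this study.

```python
def h_index(citations):
    """Return the largest h such that at least h items have >= h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank  # the top `rank` papers all have at least `rank` citations
        else:
            break
    return h


# Hypothetical citation counts for one journal's papers (not real data).
example_citations = [10, 8, 5, 4, 3]
print(h_index(example_citations))
```

A "local" h-index, as reported for the collection analyzed here, would apply the same rule to only the papers that fall inside the extracted dataset.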

Appendix A.3. Author Productivity Based on Lotka’s Law
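In its classic form, Lotka's law states that the number of authors with n publications is approximately proportional to 1/n², so that most authors contribute a single paper. The sketch below computes the expected author counts under that assumption; the function name `lotka_expected_authors`, the default exponent of 2, and the input of 1000 single-paper authors are illustrative choices, not figures from this study.

```python
def lotka_expected_authors(single_paper_authors, max_papers, exponent=2.0):
    """Expected number of authors with n = 1..max_papers papers under Lotka's law.

    Assumes the classic inverse-power form: authors(n) = authors(1) / n**exponent.
    """
    return {
        n: single_paper_authors / n ** exponent
        for n in range(1, max_papers + 1)
    }


# Hypothetical starting point: 1000 authors with exactly one paper.
expected = lotka_expected_authors(single_paper_authors=1000, max_papers=4)
for n, authors in expected.items():
    print(n, round(authors, 1))
```

Comparing such expected counts with the observed distribution is one simple way to judge how closely a corpus follows the law; the exponent can be treated as a parameter to fit rather than fixed at 2.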

Appendix A.4. Top Nine Authors’ Local Impact by H-Index

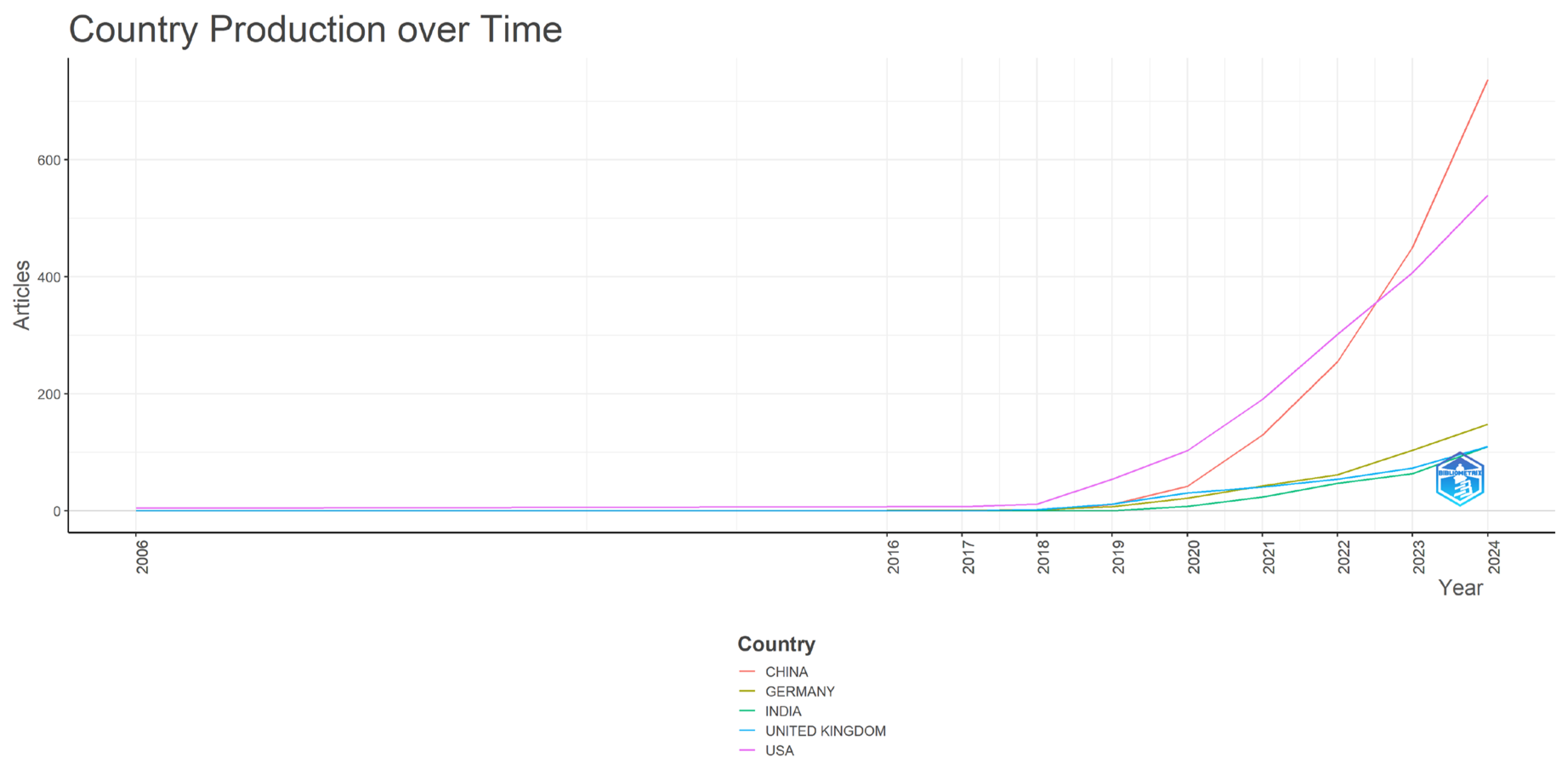

Appendix A.5. Top Five Countries’ Production over Time

Appendix A.6. Brief Summary of the Content of Top Ten Most Global Cited Documents

| No. | Paper (First Author, Year, Journal, Reference) | Title | Data | Purpose |
|---|---|---|---|---|
| 1 | Elsken T., 2019, Journal of Machine Learning Research [77] | Neural Architecture Search: A Survey | Authors did not use data; they explained the concepts in a theoretical manner | To provide an overview of existing work |
| 2 | He X., 2021, Knowledge-Based Systems [8] | AutoML: A Survey of the State-of-the-Art | Authors used the CIFAR-10 and ImageNet datasets to compare the algorithms | To present a comprehensive and up-to-date review of the state of the art (SOTA) in AutoML |
| 3 | Feurer M., 2019, The Springer Series on Challenges in Machine Learning [78] | Automated Machine Learning: Methods, Systems, Challenges | Authors did not use data; they explained the concepts in a theoretical manner | To provide an overview of general methods in AutoML |
| 4 | Le TT., 2020, Bioinformatics [79] | Scaling tree-based automated machine learning to biomedical big data with a feature set selector | Authors used both simulated datasets and a real-world RNA expression dataset | To implement two new features in TPOT that help increase the system’s scalability: Feature Set Selector (FSS) and Template |
| 5 | Alaa AM., 2019, PLoS ONE [80] | Cardiovascular disease risk prediction using automated machine learning: A prospective study of 423,604 UK Biobank participants | Authors used a dataset with real records from patients | To test whether ML techniques based on an automated ML framework could improve CVD risk prediction compared to traditional approaches |
| 6 | Zöller MA., 2021, Journal of Artificial Intelligence Research [81] | Benchmark and Survey of Automated Machine Learning Frameworks | Authors did not use data; they explained the concepts in a theoretical manner | To benchmark the 14 most popular AutoML and hyperparameter optimization (HPO) frameworks |
| 7 | Chen Z., 2021, Nucleic Acids Research [82] | iLearnPlus: a comprehensive and automated machine-learning platform for nucleic acid and protein sequence analysis, prediction and visualization | Authors used the datasets from a study by Han et al. [129] | To introduce a novel machine learning platform, iLearnPlus |
| 8 | Lindauer M., 2022, Journal of Machine Learning Research [83] | SMAC3: A Versatile Bayesian Optimization Package for Hyperparameter Optimization | Authors used the Letter Dataset for the hyperparameter optimization benchmark | To introduce SMAC3, an open-source Bayesian optimization package for hyperparameter optimization |
| 9 | Karmaker SK., 2021, ACM Computing Surveys (CSUR) [84] | AutoML to Date and Beyond: Challenges and Opportunities | Authors did not use data; they explained the concepts in a theoretical manner | To define a new classification system for AutoML |
| 10 | Xu H., 2023, Soft Computing [85] | A data-driven approach for intrusion and anomaly detection using automated machine learning for the Internet of Things | Authors used data generated by traditional networks, which they improved using the Synthetic Minority Oversampling Technique (SMOTE) algorithm | To present a data-driven approach for intrusion and anomaly detection |

Appendix A.7. Co-Occurrence Network for the Terms in Authors’ Keywords

Appendix A.8. Thematic Map Based on Authors’ Keywords

References

- Trabelsi, M.A. The Impact of Artificial Intelligence on Economic Development. J. Electron. Bus. Digit. Econ. 2024, 3, 142–155.

- Haleem, A.; Javaid, M.; Qadri, M.A.; Suman, R. Understanding the Role of Digital Technologies in Education: A Review. Sustain. Oper. Comput. 2022, 3, 275–285.

- Sun, Y.; Lee, H.; Simpson, O. Machine Learning in Communication Systems and Networks. Sensors 2024, 24, 1925.

- Imdadullah, K. The Role of Technology in the Economy. Bull. Bus. Econ. 2023, 12, 427–434.

- Oladimeji, D.; Gupta, K.; Kose, N.A.; Gundogan, K.; Ge, L.; Liang, F. Smart Transportation: An Overview of Technologies and Applications. Sensors 2023, 23, 3880.

- Chang, H.; Choi, J.-Y.; Shim, J.; Kim, M.; Choi, M. Benefits of Information Technology in Healthcare: Artificial Intelligence, Internet of Things, and Personal Health Records. Healthc. Inform. Res. 2023, 29, 323–333.

- Sun, S.; Cao, Z.; Zhu, H.; Zhao, J. A Survey of Optimization Methods from a Machine Learning Perspective. IEEE Trans. Cybern. 2020, 50, 3668–3681.

- He, X.; Zhao, K.; Chu, X. AutoML: A Survey of the State-of-the-Art. Knowl.-Based Syst. 2021, 212, 106622.

- Feurer, M.; Klein, A.; Eggensperger, K.; Springenberg, J.; Blum, M.; Hutter, F. Efficient and Robust Automated Machine Learning. In Advances in Neural Information Processing Systems; Cortes, C., Lawrence, N., Lee, D., Sugiyama, M., Garnett, R., Eds.; Curran Associates, Inc.: New York, NY, USA, 2015; Volume 28.

- Kotthoff, L.; Thornton, C.; Hoos, H.H.; Hutter, F.; Leyton-Brown, K. Auto-WEKA 2.0: Automatic Model Selection and Hyperparameter Optimization in WEKA. J. Mach. Learn. Res. 2017, 18, 1–5.

- Zoph, B.; Le, Q.V. Neural Architecture Search with Reinforcement Learning. arXiv 2016, arXiv:1611.01578.

- Salehin, I.; Islam, M.d.S.; Saha, P.; Noman, S.M.; Tuni, A.; Hasan, M.d.M.; Baten, M.d.A. AutoML: A Systematic Review on Automated Machine Learning with Neural Architecture Search. J. Inf. Intell. 2024, 2, 52–81.

- Tuggener, L.; Amirian, M.; Rombach, K.; Lorwald, S.; Varlet, A.; Westermann, C.; Stadelmann, T. Automated Machine Learning in Practice: State of the Art and Recent Results. In Proceedings of the 2019 6th Swiss Conference on Data Science (SDS), Bern, Switzerland, 14 June 2019; pp. 31–36.

- Romero, R.A.A.; Deypalan, M.N.Y.; Mehrotra, S.; Jungao, J.T.; Sheils, N.E.; Manduchi, E.; Moore, J.H. Benchmarking AutoML Frameworks for Disease Prediction Using Medical Claims. BioData Min. 2022, 15, 15.

- Rodríguez, M.; Leon, D.; Lopez, E.; Hernandez, G. Globally Explainable AutoML Evolved Models of Corporate Credit Risk. In Applied Computer Sciences in Engineering; Communications in Computer and Information Science; Figueroa-García, J.C., Franco, C., Díaz-Gutierrez, Y., Hernández-Pérez, G., Eds.; Springer Nature: Cham, Switzerland, 2022; Volume 1685, pp. 19–30. ISBN 978-3-031-20610-8.

- Padhi, D.K.; Padhy, N.; Panda, B.; Bhoi, A.K. AutoML Trading: A Rule-Based Model to Predict the Bull and Bearish Market. J. Inst. Eng. India Ser. B 2024, 105, 913–928.

- Khan, M.; Dey, R.; Kassim, S.; Mahajan, R.A.; Ghosh, A.; William, P. Machine Learning-Driven Trading Strategies: An Empirical Analysis of BSE Blue-Chip Stocks Using Advanced Algorithmic Approaches. In Proceedings of the 2025 International Conference on Quantum Photonics, Artificial Intelligence, and Networking (QPAIN), Rangpur, Bangladesh, 31 July–2 August 2025; pp. 1–5.

- Amberkhani, A.; Bolisetty, H.; Narasimhaiah, R.; Jilani, G.; Baheri, B.; Muhajab, H.; Muhajab, A.; Ghazinour, K.; Shubbar, S. Revolutionizing Cryptocurrency Price Prediction: Advanced Insights from Machine Learning, Deep Learning and Hybrid Models. In Advances in Information and Communication; Lecture Notes in Networks and Systems; Arai, K., Ed.; Springer Nature: Cham, Switzerland, 2025; Volume 1285, pp. 274–286. ISBN 978-3-031-84459-1.

- Yahia, A.; Mouhssine, Y.; El Alaoui, A.; El Alaoui, S.O. Exploring Machine Learning-Based Methods for Anomalies Detection: Evidence from Cryptocurrencies. Int. J. Data Sci. Anal. 2025, 20, 3951–3964.

- Hassan, M.; Kabir, M.E.; Islam, M.K.; Alam, E.; Rambe, A.H.; Jusoh, M.; Sameer, M. Mapping the Machine Learning Landscape in Autonomous Vehicles: A Scientometric Review of Research Trends, Applications, Challenges, and Future Directions. IEEE Access 2025, 13, 182036–182077.

- Rosário, A.T.; Boechat, A.C. How Automated Machine Learning Can Improve Business. Appl. Sci. 2024, 14, 8749.

- Ferreira, L.; Pilastri, A.; Martins, C.M.; Pires, P.M.; Cortez, P. A Comparison of AutoML Tools for Machine Learning, Deep Learning and XGBoost. In Proceedings of the 2021 International Joint Conference on Neural Networks (IJCNN), Shenzhen, China, 18–22 July 2021; pp. 1–8.

- Mahrishi, M.; Sharma, G.; Morwal, S.; Jain, V.; Kalla, M. Chapter 7 Data Model Recommendations for Real-Time Machine Learning Applications: A Suggestive Approach. In Machine Learning for Sustainable Development; Kant Hiran, K., Khazanchi, D., Kumar Vyas, A., Padmanaban, S., Eds.; De Gruyter: Berlin, Germany, 2021; pp. 115–128. ISBN 978-3-11-070251-4.

- Schuh, G.; Stroh, M.-F.; Benning, J. Case-Study-Based Requirements Analysis of Manufacturing Companies for Auto-ML Solutions. In Advances in Production Management Systems. Smart Manufacturing and Logistics Systems: Turning Ideas into Action; IFIP Advances in Information and Communication Technology; Kim, D.Y., Von Cieminski, G., Romero, D., Eds.; Springer Nature: Cham, Switzerland, 2022; Volume 663, pp. 43–50. ISBN 978-3-031-16406-4.

- Zhao, R.; Yang, Z.; Liang, D.; Xue, F. Automated Machine Learning in the Smart Construction Era: Significance and Accessibility for Industrial Classification and Regression Tasks. In Proceedings of the 28th International Symposium on Advancement of Construction Management and Real Estate; Lecture Notes in Operations Research; Li, D., Zou, P.X.W., Yuan, J., Wang, Q., Peng, Y., Eds.; Springer Nature: Singapore, 2024; pp. 2005–2020. ISBN 978-981-97-1948-8.

- Sandu, A.; Cotfas, L.-A.; Delcea, C.; Ioanăș, C.; Florescu, M.-S.; Orzan, M. Machine Learning and Deep Learning Applications in Disinformation Detection: A Bibliometric Assessment. Electronics 2024, 13, 4352.

- Innes, H.; Innes, M. De-Platforming Disinformation: Conspiracy Theories and Their Control. Inf. Commun. Soc. 2023, 26, 1262–1280.

- Oji, M. Conspiracy Theories, Misinformation, Disinformation and the Coronavirus: A Burgeoning of Post-Truth in the Social Media. J. Afr. Media Stud. 2022, 14, 439–453.

- Lewandowsky, S. Climate Change Disinformation and How to Combat It. Annu. Rev. Public Health 2021, 42, 1–21.

- Hassan, I.; Musa, R.M.; Latiff Azmi, M.N.; Razali Abdullah, M.; Yusoff, S.Z. Analysis of Climate Change Disinformation across Types, Agents and Media Platforms. Inf. Dev. 2024, 40, 504–516.

- Lanoszka, A. Disinformation in International Politics. Eur. J. Int. Secur. 2019, 4, 227–248.

- Mejias, U.A.; Vokuev, N.E. Disinformation and the Media: The Case of Russia and Ukraine. Media Cult. Soc. 2017, 39, 1027–1042.

- McKay, S.; Tenove, C. Disinformation as a Threat to Deliberative Democracy. Political Res. Q. 2021, 74, 703–717.

- Marín-Rodríguez, N.J.; González-Ruiz, J.D.; Valencia-Arias, A. Incorporating Green Bonds into Portfolio Investments: Recent Trends and Further Research. Sustainability 2023, 15, 14897.

- Tătaru, G.-C.; Domenteanu, A.; Delcea, C.; Florescu, M.S.; Orzan, M.; Cotfas, L.-A. Navigating the Disinformation Maze: A Bibliometric Analysis of Scholarly Efforts. Information 2024, 15, 742.

- Domenteanu, A.; Cotfas, L.-A.; Diaconu, P.; Tudor, G.-A.; Delcea, C. AI on Wheels: Bibliometric Approach to Mapping of Research on Machine Learning and Deep Learning in Electric Vehicles. Electronics 2025, 14, 378.

- Web of Science. Available online: https://www.webofscience.com/ (accessed on 18 March 2025).

- Sandu, A.; Cotfas, L.-A.; Stănescu, A.; Delcea, C. Guiding Urban Decision-Making: A Study on Recommender Systems in Smart Cities. Electronics 2024, 13, 2151.

- Domenteanu, A.; Delcea, C.; Florescu, M.-S.; Gherai, D.S.; Bugnar, N.; Cotfas, L.-A. United in Green: A Bibliometric Exploration of Renewable Energy Communities. Electronics 2024, 13, 3312.

- Cotfas, L.-A.; Sandu, A.; Delcea, C.; Diaconu, P.; Frăsineanu, C.; Stănescu, A. From Transformers to ChatGPT: An Analysis of Large Language Models Research. IEEE Access 2025, 13, 146889–146931.

- Singh, V.K.; Singh, P.; Karmakar, M.; Leta, J.; Mayr, P. The Journal Coverage of Web of Science, Scopus and Dimensions: A Comparative Analysis. Scientometrics 2021, 126, 5113–5142.

- Panait, M.; Cibu, B.R.; Teodorescu, D.M.; Delcea, C. European Fund Absorption and Contribution to Business Environment Development: Research Output Analysis Through Bibliometric and Topic Modeling Analysis. Businesses 2025, 5, 45.

- Domenteanu, A.; Cibu, B.; Delcea, C.; Cotfas, L.-A. The World of Agent-Based Modeling: A Bibliometric and Analytical Exploration. Complexity 2025, 2025, 2636704.

- Birkle, C.; Pendlebury, D.A.; Schnell, J.; Adams, J. Web of Science as a Data Source for Research on Scientific and Scholarly Activity. Quant. Sci. Stud. 2020, 1, 363–376.

- Iqbal, S.; Hassan, S.-U.; Aljohani, N.R.; Alelyani, S.; Nawaz, R.; Bornmann, L. A Decade of In-Text Citation Analysis Based on Natural Language Processing and Machine Learning Techniques: An Overview of Empirical Studies. Scientometrics 2021, 126, 6551–6599.

- Cobo, M.J.; Martínez, M.A.; Gutiérrez-Salcedo, M.; Fujita, H.; Herrera-Viedma, E. 25 years at Knowledge-Based Systems: A Bibliometric Analysis. Knowl.-Based Syst. 2015, 80, 3–13.

- Bakır, M.; Özdemir, E.; Akan, Ş.; Atalık, Ö. A Bibliometric Analysis of Airport Service Quality. J. Air Transp. Manag. 2022, 104, 102273.

- Valente, A.; Holanda, M.; Mariano, A.M.; Furuta, R.; Da Silva, D. Analysis of Academic Databases for Literature Review in the Computer Science Education Field. In Proceedings of the 2022 IEEE Frontiers in Education Conference (FIE), Uppsala, Sweden, 8–11 October 2022; pp. 1–7.

- Liu, W. The Data Source of This Study Is Web of Science Core Collection? Not Enough. Scientometrics 2019, 121, 1815–1824.

- Liu, F. Retrieval Strategy and Possible Explanations for the Abnormal Growth of Research Publications: Re-Evaluating a Bibliometric Analysis of Climate Change. Scientometrics 2023, 128, 853–859.

- Donner, P. Document Type Assignment Accuracy in the Journal Citation Index Data of Web of Science. Scientometrics 2017, 113, 219–236.

- Marius Profiroiu, C.; Cibu, B.; Delcea, C.; Cotfas, L.-A. Charting the Course of School Dropout Research: A Bibliometric Exploration. IEEE Access 2024, 12, 71453–71478.

- Camelia, D. Grey Systems Theory in Economics – Bibliometric Analysis and Applications’ Overview. Grey Syst. Theory Appl. 2015, 5, 244–262.

- Delcea, C.; Domenteanu, A.; Ioanăș, C.; Vargas, V.M.; Ciucu-Durnoi, A.N. Quantifying Neutrosophic Research: A Bibliometric Study. Axioms 2023, 12, 1083.

- Řehůřek, R.; Sojka, P. Software Framework for Topic Modelling with Large Corpora. In Proceedings of the LREC 2010 Workshop on New Challenges for NLP, Valletta, Malta, 22 May 2010.

- Grootendorst, M. BERTopic: Neural Topic Modeling with a Class-Based TF-IDF Procedure. arXiv 2022, arXiv:2203.05794.

- Montani, I.; Honnibal, M.; Boyd, A.; Landeghem, S.V.; Peters, H. Explosion/spaCy: V3.7.2: Fixes for APIs and Requirements 2023. Available online: https://zenodo.org/records/10009823 (accessed on 2 October 2025).

- Aria, M.; Cuccurullo, C. Bibliometrix: An R-Tool for Comprehensive Science Mapping Analysis. J. Informetr. 2017, 11, 959–975.

- Ofer, D.; Kaufman, H.; Linial, M. What’s next? Forecasting Scientific Research Trends. Heliyon 2024, 10, e23781.

- Vincent-Lancrin, S. What Is Changing in Academic Research? Trends and Futures Scenarios. Euro J. Educ. 2006, 41, 169–202.

- KeyWords Plus Generation, Creation, and Changes. Available online: https://support.clarivate.com/ScientificandAcademicResearch/s/article/KeyWords-Plus-generation-creation-and-changes?language=en_US (accessed on 17 November 2024).

- Brookes, B.C. Bradford’s Law and the Bibliography of Science. Nature 1969, 224, 953–956.

- Kawimbe, S. The H-Index Explained: Tools, Limitations and Strategies for Academic Success. Adv. Soc. Sci. Res. J. 2024, 11, 56–61.

- Ahmad, M.; Batcha, D.M.S.; Jahina, S.R. Testing Lotka’s Law and Pattern of Author Productivity in the Scholarly Publications of Artificial Intelligence. arXiv 2021, arXiv:2102.09182.

- Altarturi, H.H.M.; Nor, A.R.M.; Jaafar, N.I.; Anuar, N.B. A Bibliometric and Content Analysis of Technological Advancement Applications in Agricultural E-Commerce. Electron. Commer. Res. 2025, 25, 805–848.

- Feurer, M.; Eggensperger, K.; Falkner, S.; Lindauer, M.; Hutter, F. Auto-Sklearn 2.0: Hands-Free AutoML via Meta-Learning. arXiv 2020, arXiv:2007.04074.

- Yin, M.; Liang, X.; Wang, Z.; Zhou, Y.; He, Y.; Xue, Y.; Gao, J.; Lin, J.; Yu, C.; Liu, L.; et al. Identification of Asymptomatic COVID-19 Patients on Chest CT Images Using Transformer-Based or Convolutional Neural Network–Based Deep Learning Models. J. Digit. Imaging 2023, 36, 827–836.

- Romano, J.D.; Le, T.T.; Fu, W.; Moore, J.H. TPOT-NN: Augmenting Tree-Based Automated Machine Learning with Neural Network Estimators. Genet. Program. Evolvable Mach. 2021, 22, 207–227.

- Mohr, F.; Wever, M. Naive Automated Machine Learning. Mach. Learn. 2023, 112, 1131–1170.

- Garouani, M.; Ahmad, A.; Bouneffa, M.; Hamlich, M. AMLBID: An Auto-Explained Automated Machine Learning Tool for Big Industrial Data. SoftwareX 2022, 17, 100919.

- Wang, C.; Wang, H.; Zhou, C.; Chen, H. ExperienceThinking: Constrained Hyperparameter Optimization Based on Knowledge and Pruning. Knowl.-Based Syst. 2021, 223, 106602.

- Baratchi, M.; Wang, C.; Limmer, S.; Van Rijn, J.N.; Hoos, H.; Bäck, T.; Olhofer, M. Automated Machine Learning: Past, Present and Future. Artif. Intell. Rev. 2024, 57, 122.

- Benecke, J.; Benecke, C.; Ciutan, M.; Dosius, M.; Vladescu, C.; Olsavszky, V. Retrospective Analysis and Time Series Forecasting with Automated Machine Learning of Ascariasis, Enterobiasis and Cystic Echinococcosis in Romania. PLoS Negl. Trop. Dis. 2021, 15, e0009831.

- Gogas, P.; Papadimitriou, T.; Agrapetidou, A. Forecasting Bank Failures and Stress Testing: A Machine Learning Approach. Int. J. Forecast. 2018, 34, 440–455.

- Rashidi, H.H.; Makley, A.; Palmieri, T.L.; Albahra, S.; Loegering, J.; Fang, L.; Yamaguchi, K.; Gerlach, T.; Rodriquez, D.; Tran, N.K. Enhancing Military Burn- and Trauma-Related Acute Kidney Injury Prediction Through an Automated Machine Learning Platform and Point-of-Care Testing. Arch. Pathol. Lab. Med. 2021, 145, 320–326.

- Czub, N.; Pacławski, A.; Szlęk, J.; Mendyk, A. Curated Database and Preliminary AutoML QSAR Model for 5-HT1A Receptor. Pharmaceutics 2021, 13, 1711.

- Elsken, T.; Metzen, J.H.; Hutter, F. Neural Architecture Search: A Survey. J. Mach. Learn. Res. 2019, 20, 1–21.

- Feurer, M.; Klein, A.; Eggensperger, K.; Springenberg, J.T.; Blum, M.; Hutter, F. Auto-Sklearn: Efficient and Robust Automated Machine Learning. In Automated Machine Learning; The Springer Series on Challenges in Machine Learning; Hutter, F., Kotthoff, L., Vanschoren, J., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 113–134. ISBN 978-3-030-05317-8.

- Le, T.T.; Fu, W.; Moore, J.H. Scaling Tree-Based Automated Machine Learning to Biomedical Big Data with a Feature Set Selector. Bioinformatics 2020, 36, 250–256.

- Alaa, A.M.; Bolton, T.; Di Angelantonio, E.; Rudd, J.H.F.; Van Der Schaar, M. Cardiovascular Disease Risk Prediction Using Automated Machine Learning: A Prospective Study of 423,604 UK Biobank Participants. PLoS ONE 2019, 14, e0213653.

- Zöller, M.-A.; Huber, M.F. Benchmark and Survey of Automated Machine Learning Frameworks. J. Artif. Intell. Res. 2021, 70, 409–472.

- Chen, Z.; Zhao, P.; Li, C.; Li, F.; Xiang, D.; Chen, Y.-Z.; Akutsu, T.; Daly, R.J.; Webb, G.I.; Zhao, Q.; et al. iLearnPlus: A Comprehensive and Automated Machine-Learning Platform for Nucleic Acid and Protein Sequence Analysis, Prediction and Visualization. Nucleic Acids Res. 2021, 49, e60.

- Lindauer, M.; Eggensperger, K.; Feurer, M.; Biedenkapp, A.; Deng, D.; Benjamins, C.; Ruhopf, T.; Sass, R.; Hutter, F. SMAC3: A Versatile Bayesian Optimization Package for Hyperparameter Optimization. J. Mach. Learn. Res. 2022, 23, 1–9.

- Karmaker (“Santu”), S.K.; Hassan, M.M.; Smith, M.J.; Xu, L.; Zhai, C.; Veeramachaneni, K. AutoML to Date and Beyond: Challenges and Opportunities. ACM Comput. Surv. 2021, 54, 1–36.

- Xu, H.; Sun, Z.; Cao, Y.; Bilal, H. A Data-Driven Approach for Intrusion and Anomaly Detection Using Automated Machine Learning for the Internet of Things. Soft Comput. 2023, 27, 14469–14481.

- Salmani Pour Avval, S.; Eskue, N.D.; Groves, R.M.; Yaghoubi, V. Systematic Review on Neural Architecture Search. Artif. Intell. Rev. 2025, 58, 73.

- Li, Y. Deep Reinforcement Learning: An Overview. arXiv 2017, arXiv:1701.07274.

- Wu, J.; Chen, S.; Liu, X. Efficient Hyperparameter Optimization through Model-Based Reinforcement Learning. Neurocomputing 2020, 409, 381–393.

- Olson, R.S.; Moore, J.H. TPOT: A Tree-Based Pipeline Optimization Tool for Automating Machine Learning. In Automated Machine Learning; The Springer Series on Challenges in Machine Learning; Hutter, F., Kotthoff, L., Vanschoren, J., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 151–160. ISBN 978-3-030-05317-8.

- Chen, T.; Guestrin, C. XGBoost: A Scalable Tree Boosting System. arXiv 2016, arXiv:1603.02754.

- Bajaj, I.; Arora, A.; Hasan, M.M.F. Black-Box Optimization: Methods and Applications. In Black Box Optimization, Machine Learning, and No-Free Lunch Theorems; Springer Optimization and Its Applications; Pardalos, P.M., Rasskazova, V., Vrahatis, M.N., Eds.; Springer International Publishing: Cham, Switzerland, 2021; Volume 170, pp. 35–65. ISBN 978-3-030-66514-2.

- Alshinwan, M.; Abualigah, L.; Shehab, M.; Elaziz, M.A.; Khasawneh, A.M.; Alabool, H.; Hamad, H.A. Dragonfly Algorithm: A Comprehensive Survey of Its Results, Variants, and Applications. Multimed. Tools Appl. 2021, 80, 14979–15016.

- Falkner, S.; Klein, A.; Hutter, F. BOHB: Robust and Efficient Hyperparameter Optimization at Scale. In Proceedings of the 35th International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018.

- Li, L.; Jamieson, K.; DeSalvo, G.; Rostamizadeh, A.; Talwalkar, A. Hyperband: A Novel Bandit-Based Approach to Hyperparameter Optimization. arXiv 2016, arXiv:1603.06560.

- Andonie, R.; Florea, A.-C. Weighted Random Search for CNN Hyperparameter Optimization. Int. J. Comput. Commun. Control 2020, 15, 3868.

- Chawla, N.V.; Bowyer, K.W.; Hall, L.O.; Kegelmeyer, W.P. SMOTE: Synthetic Minority Over-Sampling Technique. arXiv 2011, arXiv:1106.1813.

- Chuang, J.; Manning, C.D.; Heer, J. Termite: Visualization Techniques for Assessing Textual Topic Models. In Proceedings of the International Working Conference on Advanced Visual Interfaces (AVI’12), Capri Island, Italy, 21–25 May 2012; pp. 74–77.

- Sievert, C.; Shirley, K. LDAvis: A Method for Visualizing and Interpreting Topics. In Proceedings of the Workshop on Interactive Language Learning, Visualization, and Interfaces, Baltimore, MD, USA, 27 June 2014; pp. 63–70.

- Wason, R. Deep Learning: Evolution and Expansion. Cogn. Syst. Res. 2018, 52, 701–708.

- Angarita-Zapata, J.S.; Maestre-Gongora, G.; Calderín, J.F. A Bibliometric Analysis and Benchmark of Machine Learning and AutoML in Crash Severity Prediction: The Case Study of Three Colombian Cities. Sensors 2021, 21, 8401.

- Ilić, L.; Šijan, A.; Predić, B.; Viduka, D.; Karabašević, D. Research Trends in Artificial Intelligence and Security—Bibliometric Analysis. Electronics 2024, 13, 2288.

- Álvarez-Bornstein, B.; Díaz-Faes, A.A.; Bordons, M. What Characterises Funded Biomedical Research? Evidence from a Basic and a Clinical Domain. Scientometrics 2019, 119, 805–825.

- Shi, J.; Duan, K.; Wu, G.; Zhang, R.; Feng, X. Comprehensive Metrological and Content Analysis of the Public–Private Partnerships (PPPs) Research Field: A New Bibliometric Journey. Scientometrics 2020, 124, 2145–2184.

- Jee, S.J.; Sohn, S.Y. Firms’ Influence on the Evolution of Published Knowledge When a Science-Related Technology Emerges: The Case of Artificial Intelligence. J. Evol. Econ. 2023, 33, 209–247.

- Wang, Z.; Zhu, G.; Li, S. Mapping Knowledge Landscapes and Emerging Trends in Artificial Intelligence for Antimicrobial Resistance: Bibliometric and Visualization Analysis. Front. Med. 2025, 12, 1492709.

- Potluru, A.; Arora, A.; Arora, A.; Aslam Joiya, S. Automated Machine Learning (AutoML) for the Diagnosis of Melanoma Skin Lesions From Consumer-Grade Camera Photos. Cureus 2024, 16, e67559.

- Elangovan, K.; Lim, G.; Ting, D. A Comparative Study of an on Premise AutoML Solution for Medical Image Classification. Sci. Rep. 2024, 14, 10483.

- Schmitt, M. Explainable Automated Machine Learning for Credit Decisions: Enhancing Human Artificial Intelligence Collaboration in Financial Engineering. arXiv 2024, arXiv:2402.03806.

- Jha, S.; Guillen, M.; Christopher Westland, J. Employing Transaction Aggregation Strategy to Detect Credit Card Fraud. Expert. Syst. Appl. 2012, 39, 12650–12657.

- Nnenna, I.O.; Olufunke, A.A.; Abbey, N.I.; Onyeka, C.O.; Chikezie, P.M.E. AI-Powered Customer Experience Optimization: Enhancing Financial Inclusion in Underserved Communities. Int. J. Appl. Res. Soc. Sci. 2024, 6, 2487–2511.

- Cheng, Y.; Zhou, G.; Zhu, Y. Model Selection via Automated Machine Learning. SSRN J. 2023.

- Pang, R.; Xi, Z.; Ji, S.; Luo, X.; Wang, T. On the Security Risks of AutoML. arXiv 2021, arXiv:2110.06018.

- Blanzeisky, W.; Cunningham, P. Algorithmic Factors Influencing Bias in Machine Learning. In Machine Learning and Principles and Practice of Knowledge Discovery in Databases; Communications in Computer and Information Science; Kamp, M., Koprinska, I., Bibal, A., Bouadi, T., Frénay, B., Galárraga, L., Oramas, J., Adilova, L., Krishnamurthy, Y., Kang, B., et al., Eds.; Springer International Publishing: Cham, Switzerland, 2021; Volume 1524, pp. 559–574. ISBN 978-3-030-93735-5.

- Xu, J.; Xiao, Y.; Wang, W.H.; Ning, Y.; Shenkman, E.A.; Bian, J.; Wang, F. Algorithmic Fairness in Computational Medicine. eBioMedicine 2022, 84, 104250.

- Bajracharya, A.; Khakurel, U.; Harvey, B.; Rawat, D.B. Recent Advances in Algorithmic Biases and Fairness in Financial Services: A Survey. In Proceedings of the Future Technologies Conference (FTC) 2022, Volume 1; Lecture Notes in Networks and Systems; Arai, K., Ed.; Springer International Publishing: Cham, Switzerland, 2023; Volume 559, pp. 809–822. ISBN 978-3-031-18460-4.

- Ashktorab, Z.; Hoover, B.; Agarwal, M.; Dugan, C.; Geyer, W.; Yang, H.B.; Yurochkin, M. Fairness Evaluation in Text Classification: Machine Learning Practitioner Perspectives of Individual and Group Fairness. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, Hamburg, Germany, 23–28 April 2023; ACM: Hamburg, Germany, 2023; pp. 1–20.

- Aksnes, D.W.; Langfeldt, L.; Wouters, P. Citations, Citation Indicators, and Research Quality: An Overview of Basic Concepts and Theories. Sage Open 2019, 9, 2158244019829575.

- Aiza, W.S.N.; Shuib, L.; Idris, N.; Normadhi, N.B.A. Features, Techniques and Evaluation in Predicting Articles’ Citations: A Review from Years 2010–2023. Scientometrics 2024, 129, 1–29.

- Bornmann, L.; Marx, W. The Wisdom of Citing Scientists. Asso Info Sci. Tech. 2014, 65, 1288–1292.

- Kochhar, S.K.; Ojha, U. Index for Objective Measurement of a Research Paper Based on Sentiment Analysis. ICT Express 2020, 6, 253–257.

- Basumatary, B.; Tripathi, M.; Verma, M.K. Does Altmetric Attention Score Correlate with Citations of Articles Published in High CiteScore Journals. DESIDOC J. Libr. Inf. Technol. 2023, 43, 432–440. [Google Scholar] [CrossRef]

- Pottier, P.; Lagisz, M.; Burke, S.; Drobniak, S.M.; Downing, P.A.; Macartney, E.L.; Martinig, A.R.; Mizuno, A.; Morrison, K.; Pollo, P.; et al. Title, Abstract and Keywords: A Practical Guide to Maximize the Visibility and Impact of Academic Papers. Proc. R. Soc. B. 2024, 291, 20241222. [Google Scholar] [CrossRef] [PubMed]

- Zhang, C.; Yan, X.; Zhao, L.; Zhang, Y. Enhancing Keyphrase Extraction from Academic Articles Using Section Structure Information. Scientometrics 2025, 130, 2311–2343. [Google Scholar] [CrossRef]

- Vallelunga, R.; Scarpino, I.; Martinis, M.C.; Luzza, F.; Zucco, C. Applications of Text Mining Techniques to Extract Meaningful Information from Gastroenterology Medical Reports. J. Comput. Sci. 2024, 83, 102458. [Google Scholar] [CrossRef]

- Moafa, K.M.Y.; Almohammadi, N.F.H.; Alrashedi, F.S.S.; Alrashidi, S.T.S.; Al-Hamdan, S.A.; Faggad, M.M.; Alahmary, S.M.; Al-Darwaish, M.I.A.; Al-Anzi, A.K. Artificial Intelligence for Improved Health Management: Application, Uses, Opportunities, and Challenges-A Systematic Review. Egypt. J. Chem. 2024, 67, 865–880. [Google Scholar] [CrossRef]

- Li, K.-Y.; Burnside, N.G.; De Lima, R.S.; Peciña, M.V.; Sepp, K.; Cabral Pinheiro, V.H.; De Lima, B.R.C.A.; Yang, M.-D.; Vain, A.; Sepp, K. An Automated Machine Learning Framework in Unmanned Aircraft Systems: New Insights into Agricultural Management Practices Recognition Approaches. Remote Sens. 2021, 13, 3190. [Google Scholar] [CrossRef]

- Gün, M. Machine Learning in Finance: Transformation of Financial Markets. In Machine Learning in Finance; Contributions to Finance and Accounting; Gün, M., Kartal, B., Eds.; Springer Nature: Cham, Switzerland, 2025; pp. 1–16. ISBN 978-3-031-83265-9. [Google Scholar]

- Sharma, V.; Sharma, D.; Kumar Punia, S. Algorithmic Approaches, Practical Implementations and Future Research Directions in Machine Learning. In Proceedings of the 2024 1st International Conference on Advances in Computing, Communication and Networking (ICAC2N), Greater Noida, India, 16–17 December 2024; pp. 121–126. [Google Scholar]

- Han, S.; Liang, Y.; Ma, Q.; Xu, Y.; Zhang, Y.; Du, W.; Wang, C.; Li, Y. LncFinder: An Integrated Platform for Long Non-Coding RNA Identification Utilizing Sequence Intrinsic Composition, Structural Information and Physicochemical Property. Brief. Bioinform. 2019, 20, 2009–2027. [Google Scholar] [CrossRef] [PubMed]

| Exploration Steps | Filters on WoS | Description | Query | Query Number | Count |
|---|---|---|---|---|---|
| 1 | Title/Authors’ Keywords | Contains specific keywords related to AutoML in titles | (TI = (“automated_ machine_learning”)) OR TI = (“AutoML”) | #1 | 953 |
|  |  | Contains specific keywords related to AutoML in authors’ keywords | (AK = (“automated_ machine_learning”)) OR AK = (“AutoML”) | #2 | 1147 |
|  |  | Contains specific keywords related to AutoML in titles or authors’ keywords | #1 OR #2 | #3 | 1619 |
| 2 | Language | Limit to English | (#3) AND LA = (English) | #4 | 1613 |
| 3 | Document Type | Limit to Article | (#4) AND DT = (Article) | #5 | 964 |
| 4 | Year Published | Exclude 2025 | (#5) NOT PY = (2025) | #6 | 920 |
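
The counts in the query table allow two quick consistency checks. The sketch below uses only the reported counts: it applies inclusion-exclusion to estimate how many records matched both the title query and the authors' keywords query, and it lists how many records each later filter removed. The variable names are illustrative and are not part of the Web of Science interface.

```python
# Counts copied from the query table (queries #1 to #6).
counts = {"#1": 953, "#2": 1147, "#3": 1619, "#4": 1613, "#5": 964, "#6": 920}

# Inclusion-exclusion: |A and B| = |A| + |B| - |A or B|.
# Records matching both the title query (#1) and the authors' keywords query (#2).
in_both = counts["#1"] + counts["#2"] - counts["#3"]

# Records removed by each successive filter (language, document type, year).
steps = ["#3", "#4", "#5", "#6"]
removed = {
    later: counts[earlier] - counts[later]
    for earlier, later in zip(steps, steps[1:])
}

print("Matched both title and keywords:", in_both)
for query, dropped in removed.items():
    print(f"Removed when applying {query}: {dropped}")
```

Under these counts, the document-type filter (#5) accounts for most of the reduction from 1619 to 920 records.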

| Indicator | Value |
|---|---|
| Timespan | 2006:2024 |
| Sources | 517 |
| Documents | 920 |
| Average years from publication | 2.56 |
| Average citations per document | 13.35 |
| Average citations per year per document | 2.976 |
| References | 37,214 |

| Indicator | Value |
|---|---|
| Keywords plus | 1493 |
| Authors’ keywords | 2661 |
| Authors | 3964 |
| Author appearances | 4894 |
| Authors of single-authored documents | 19 |
| Authors of multi-authored documents | 3945 |
| Single-authored documents | 19 |
| Documents per author | 0.232 |
| Authors per document | 4.31 |
| Co-authors per document | 5.32 |
| Collaboration index | 4.38 |
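
The four ratio indicators in the table can be reproduced from the raw counts. The following is a minimal sketch, assuming the definitions commonly used in bibliometric summaries (which this section does not spell out): documents per author is documents divided by authors, authors per document is the inverse, co-authors per document is author appearances divided by documents, and the collaboration index is the number of authors of multi-authored documents divided by the number of multi-authored documents. The variable names are illustrative.

```python
# Raw counts copied from the indicator tables.
documents = 920
authors = 3964
author_appearances = 4894
single_authored_documents = 19
authors_of_multi_authored_documents = 3945

# Multi-authored documents are not reported directly; derive them.
multi_authored_documents = documents - single_authored_documents

# Assumed definitions (see the lead-in); rounding matches the table.
indicators = {
    "Documents per author": round(documents / authors, 3),
    "Authors per document": round(authors / documents, 2),
    "Co-authors per document": round(author_appearances / documents, 2),
    "Collaboration index": round(
        authors_of_multi_authored_documents / multi_authored_documents, 2
    ),
}

for name, value in indicators.items():
    print(f"{name}: {value}")
```

Under these assumed definitions, the computed values match the reported 0.232, 4.31, 5.32, and 4.38.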

| No. | Paper (First Author, Year, Journal, Reference) | Number of Authors | Region | Total Citations (TC) | Total Citations per Year (TCY) | Normalized TC (NTC) |
|---|---|---|---|---|---|---|
| 1 | Elsken T., 2019, Journal of Machine Learning Research [77] | 3 | Germany | 1181 | 168.71 | 11.86 |
| 2 | He X., 2021, Knowledge-Based Systems [8] | 3 | China | 804 | 160.80 | 31.87 |
| 3 | Feurer M., 2019, The Springer Series on Challenges in Machine Learning [78] | 6 | Germany | 312 | 44.57 | 3.13 |
| 4 | Le TT., 2020, Bioinformatics [79] | 3 | USA | 244 | 40.67 | 10.26 |
| 5 | Alaa AM., 2019, PLoS ONE [80] | 5 | UK and USA | 231 | 33.00 | 2.32 |
| 6 | Zöller MA., 2021, Journal of Artificial Intelligence Research [81] | 2 | Germany | 168 | 33.60 | 6.66 |
| 7 | Chen Z., 2021, Nucleic Acids Research [82] | 12 | UK and China | 146 | 29.20 | 5.79 |
| 8 | Lindauer M., 2022, Journal of Machine Learning Research [83] | 9 | Germany | 146 | 36.50 | 12.06 |
| 9 | Karmaker SK., 2021, ACM Computing Surveys (CSUR) [84] | 6 | USA | 121 | 24.20 | 4.80 |
| 10 | Xu H., 2023, Soft Computing [85] | 4 | Canada | 109 | 36.33 | 15.03 |
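
The TCY column appears consistent with dividing total citations by the number of years a paper has been in print, counting both the publication year and a reference year of 2025. The sketch below illustrates that reading; the reference year and the inclusive year count are assumptions inferred from the table values, not definitions stated in this section, and the function name is hypothetical.

```python
# Assumption: citations were counted in 2025 and years are counted inclusively.
REFERENCE_YEAR = 2025


def citations_per_year(total_citations, publication_year,
                       reference_year=REFERENCE_YEAR):
    """Total citations divided by inclusive years since publication."""
    years_in_print = reference_year - publication_year + 1
    return round(total_citations / years_in_print, 2)


# (first author, publication year, total citations) copied from the table.
papers = [
    ("Elsken T.", 2019, 1181),
    ("He X.", 2021, 804),
    ("Feurer M.", 2019, 312),
    ("Le TT.", 2020, 244),
    ("Alaa AM.", 2019, 231),
    ("Zöller MA.", 2021, 168),
    ("Chen Z.", 2021, 146),
    ("Lindauer M.", 2022, 146),
    ("Karmaker SK.", 2021, 121),
    ("Xu H.", 2023, 109),
]

tcy = {author: citations_per_year(tc, year) for author, year, tc in papers}

for author, value in tcy.items():
    print(f"{author}: {value:.2f}")
```

With these assumptions, the computed values agree with the TCY column for all ten papers, which is why a more recent paper such as Lindauer M. (2022) can rank above older papers with the same citation total.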

| Words in Keywords Plus | Occurrences | Words in Authors’ Keywords | Occurrences |
|---|---|---|---|
| model | 56 | automated machine learning | 329 |
| classification | 55 | automl | 297 |
| prediction | 51 | machine learning | 215 |
| optimization | 45 | deep learning | 86 |
| algorithm | 36 | neural architecture search | 65 |
| selection | 28 | artificial intelligence | 59 |
| algorithms | 25 | automated machine learning (automl) | 42 |
| models | 25 | optimization | 31 |
| diagnosis | 24 | training | 30 |
| system | 24 | hyperparameter optimization | 28 |

| Bigrams in Abstracts | Occurrences | Bigrams in Titles | Occurrences |
|---|---|---|---|
| machine learning | 1315 | machine learning | 430 |
| automated machine | 499 | automated machine | 367 |
| learning automl | 288 | architecture search | 49 |
| learning ml | 160 | neural architecture | 47 |
| deep learning | 156 | learning approach | 46 |
| neural network | 141 | deep learning | 27 |
| learning models | 136 | machine learning-based | 26 |
| neural networks | 125 | learning model | 21 |
| ml models | 115 | neural network | 19 |
| architecture search | 110 | time series | 19 |

| Trigrams in Abstracts | Occurrences | Trigrams in Titles | Occurrences |
|---|---|---|---|
| automated machine learning | 496 | automated machine learning | 335 |
| machine learning automl | 285 | machine learning approach | 43 |
| machine learning ml | 157 | neural architecture search | 41 |
| machine learning models | 102 | automated machine learning-based | 23 |
| machine learning algorithms | 81 | machine learning model | 18 |
| neural architecture search | 76 | machine learning automl | 15 |
| architecture search nas | 51 | machine learning models | 12 |
| machine learning methods | 43 | machine learning tool | 10 |
| machine learning model | 42 | machine learning algorithms | 8 |
| receiver operating characteristic | 35 | machine learning framework | 8 |

| LDA Topics | BERTopics | Thematic Map Clusters | Overlap/Notes |
|---|---|---|---|
| LDA Topic 1—Algorithmic Foundations and Model Optimization (NAS, optimization, pipelines, hyperparameters) | BERTopic 1—NAS and Architectures | Cluster 4—Motor Themes (AutoML, deep learning, neural architecture search, optimization, hyperparameter tuning, meta-learning) | Strong alignment: all three approaches confirm AutoML’s algorithmic and methodological core. |
| LDA Topic 2—Applied AutoML in Healthcare and Prediction (patients, diagnosis, clinical, prediction, accuracy) | BERTopic 0—Performance and Healthcare Applications | Cluster 2—Motor Themes (prediction, diagnosis, autogluon, digital health); Cluster 3—Applied Themes (radiomics, breast cancer, predictive models) | Overlap across all methods: healthcare and prediction consistently emerge as key AutoML applications. |
| – | BERTopic 2—Intrusion Detection and Networks | – | Unique to BERTopic: cybersecurity applications (traffic, intrusion detection) do not surface in LDA or thematic map. |
| – | BERTopic 3—Student Performance and Academic Dropout Prediction | – | Unique to BERTopic: education-related applications absent from LDA and Thematic Map. |
| – | – | Cluster 1—Basic Themes (ML, AI, AutoML, Bayesian optimization, feature selection, COVID-19) | Identified only by thematic map: foundational concepts treated as background context in LDA and BERTopic. |
| – | – | Clusters 5 & 7—Niche Themes (SHAP, QSAR, 3D echocardiography) | Unique to thematic map: specialized subfields not detected by topic models. |
| – | – | Cluster 6—Emerging/Declining Themes (Blockchain) | Unique to thematic map: peripheral exploratory direction not visible in LDA or BERTopic. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tătaru, G.-C.; Cosac, A.; Ioanăș, I.; Florescu, M.-S.; Orzan, M.; Delcea, C.; Cotfas, L.-A. Understanding the Rise of Automated Machine Learning: A Global Overview and Topic Analysis. Information 2025, 16, 994. https://doi.org/10.3390/info16110994
