Efficient Transformer-Based Abstractive Urdu Text Summarization Through Selective Attention Pruning

Abstract

1. Introduction

This study addresses the following research questions:

- Can selective attention head pruning improve the performance and efficiency of transformer models for Urdu abstractive summarization?

- How do different transformer architectures (BART, T5, and GPT-2) respond to attention head pruning when adapted for Urdu?

- What is the optimal pruning threshold that balances performance and efficiency for Urdu text summarization?

- How do the optimized models generalize across different domains of Urdu text?

The main contributions of this work are as follows:

- Development of optimized transformer models (EBART, ET5, and EGPT-2) through strategic attention head pruning, specifically designed for Urdu abstractive summarization;

- Comprehensive investigation of leading transformer architectures (BART, T5, and GPT-2) for Urdu language processing;

- Extensive evaluation of the optimized models using ROUGE metrics and comparative analysis against their original counterparts.

2. Background and Literature Review

2.1. Single Document Summarization Approaches

2.2. Multiple Document Summarization and Hybrid Approaches

3. Methodology

3.1. Dataset Description and Preprocessing

- Tokenization. The input Urdu text was segmented into subword tokens using the pre-trained tokenizers specific to each model (e.g., GPT2Tokenizer, T5Tokenizer, and BartTokenizer) [25].

- Sequence Length Adjustment. All tokenized sequences were padded or truncated to a fixed length of 512 tokens to conform to the input size requirements of the models.

- Text Normalization. Basic text normalization was performed, which involved converting all text to lowercase and removing extraneous punctuation marks.

- Tensor Conversion. The final tokenized and adjusted sequences were converted into PyTorch (version 2.2.0, Meta AI, Menlo Park, CA, USA) tensors, which is the required data format for model inference and fine-tuning.
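The sequence length adjustment step can be sketched as follows. This is a minimal, library-free illustration rather than the authors' code: the function name `pad_or_truncate` and the padding id `0` are assumptions made for the example. With the Hugging Face tokenizers named above, the same effect is usually requested by passing `padding="max_length"`, `truncation=True`, `max_length=512`, and `return_tensors="pt"` when calling the tokenizer.

```python
from typing import List, Tuple

MAX_LEN = 512  # fixed sequence length stated in the text


def pad_or_truncate(token_ids: List[int], max_len: int = MAX_LEN,
                    pad_id: int = 0) -> Tuple[List[int], List[int]]:
    """Return (input_ids, attention_mask), both exactly max_len long.

    pad_id=0 is a placeholder; the real padding id depends on the tokenizer.
    """
    kept = token_ids[:max_len]                      # truncate long inputs
    n_pad = max_len - len(kept)                     # padding needed for short inputs
    input_ids = kept + [pad_id] * n_pad
    attention_mask = [1] * len(kept) + [0] * n_pad  # 1 = real token, 0 = padding
    return input_ids, attention_mask


# A 10-token input is padded; a 600-token input is truncated.
short_ids, short_mask = pad_or_truncate(list(range(1, 11)))
long_ids, long_mask = pad_or_truncate(list(range(1, 601)))
```

The attention mask lets the model ignore padded positions, so padding to a fixed length does not change which tokens are attended to.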

3.2. Efficient Transformer Summarizer Models by Pruning Attention Heads Based on Their Contribution

3.2.1. Training the Efficient Summarizer Models

- Fine-tuning: The pre-trained models are fine-tuned on Urdu text summarization so that they learn Urdu-specific linguistic structures and contextual dependencies. The computational cost of this step, per epoch, depends on the number of training samples N and the sequence length L. Fine-tuning was implemented in PyTorch (version 2.2.0, Meta AI, Menlo Park, CA, USA) using the AdamW optimizer (Loshchilov and Hutter).

- Pruning: This stage iteratively removes redundant attention heads according to their contribution to the ROUGE score, which reduces model complexity and concentrates attention on the salient features of Urdu text. Heads that contribute minimally to the task are the ones pruned. The iterative pruning process has a time complexity of $O(H \cdot E)$, where H is the number of attention heads and E is the evaluation cost per head.

- Evaluation: This step verifies that pruning does not degrade performance, so that the final model is both efficient and accurate. Validation was used to confirm that the pruned model could still generate good Urdu summaries while realizing the computational gains.
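The pruning and evaluation stages can be illustrated with a small greedy loop. This is a sketch under stated assumptions, not the paper's algorithm: the function `prune_heads`, its `max_ratio` and `tolerance` parameters, and the toy scoring function are all hypothetical. In a real setup, `evaluate` would mask the given heads and compute ROUGE on a validation set. Each round evaluates every remaining head once, and the loop repeats that pass for every head it removes.

```python
from typing import Callable, Dict, FrozenSet, List, Tuple

Head = Tuple[int, int]  # (layer index, head index)


def prune_heads(heads: List[Head],
                evaluate: Callable[[FrozenSet[Head]], float],
                max_ratio: float = 0.30,
                tolerance: float = 0.0) -> Tuple[FrozenSet[Head], float]:
    """Greedily remove the heads whose removal hurts the score least.

    evaluate(pruned) returns a validation score (e.g. ROUGE) for the model
    with the heads in `pruned` masked out.
    """
    pruned: FrozenSet[Head] = frozenset()
    best = evaluate(pruned)                                   # unpruned score
    budget = min(len(heads), int(max_ratio * len(heads)))     # max heads to drop
    while len(pruned) < budget:
        remaining = [h for h in heads if h not in pruned]
        # Score obtained when each remaining head is additionally masked.
        scores: Dict[Head, float] = {h: evaluate(pruned | {h}) for h in remaining}
        candidate = max(scores, key=scores.get)               # least harmful removal
        if scores[candidate] < best - tolerance:              # stop on degradation
            break
        pruned, best = pruned | {candidate}, scores[candidate]
    return pruned, best


# Toy stand-in for ROUGE evaluation: heads with negative weight add noise.
weights = {(0, 0): 0.05, (0, 1): -0.02, (0, 2): 0.03, (0, 3): -0.01}


def toy_score(pruned: FrozenSet[Head]) -> float:
    return 0.40 + sum(w for h, w in weights.items() if h not in pruned)


pruned_heads, final_score = prune_heads(list(weights), toy_score, max_ratio=0.5)
```

In the toy example the two noisy heads are removed and the score rises, which mirrors the claim that pruning low-contribution heads can improve quality as well as cost. The early exit on degradation plays the role of the evaluation stage.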

- Number of encoder/decoder layers: 6 (for BART and T5); 12 (for GPT-2).

- Number of attention heads: 12 (for BART, T5, and GPT-2).

- Embedding size: 768.

- Feed-forward network size: 3072.

- Dropout rate: 0.1.

- Learning rate:, using the AdamW optimizer.

- Batch size: 8.

- Sequence length: 512 tokens.

- Weight decay: 0.01.

- Training epochs: 200.
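For reference, the listed hyperparameters can be gathered into a single configuration object. The class and field names below are illustrative assumptions, not identifiers from the authors' code. The learning rate is left unset because its value is not given in the text above.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class SummarizerConfig:
    """Hyperparameters as listed in the text; field names are illustrative."""
    num_layers: int                        # 6 for BART and T5, 12 for GPT-2
    num_heads: int = 12
    embed_dim: int = 768
    ffn_dim: int = 3072
    dropout: float = 0.1
    learning_rate: Optional[float] = None  # not stated in the text; set explicitly
    batch_size: int = 8
    max_seq_len: int = 512
    weight_decay: float = 0.01
    epochs: int = 200

    @property
    def head_dim(self) -> int:
        """Per-head width: the embedding is split evenly across heads."""
        if self.embed_dim % self.num_heads:
            raise ValueError("embed_dim must be divisible by num_heads")
        return self.embed_dim // self.num_heads


bart_or_t5 = SummarizerConfig(num_layers=6)
gpt2 = SummarizerConfig(num_layers=12)
```

With an embedding size of 768 and 12 heads, each attention head operates on a 64-dimensional slice, which is the unit that head pruning removes.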

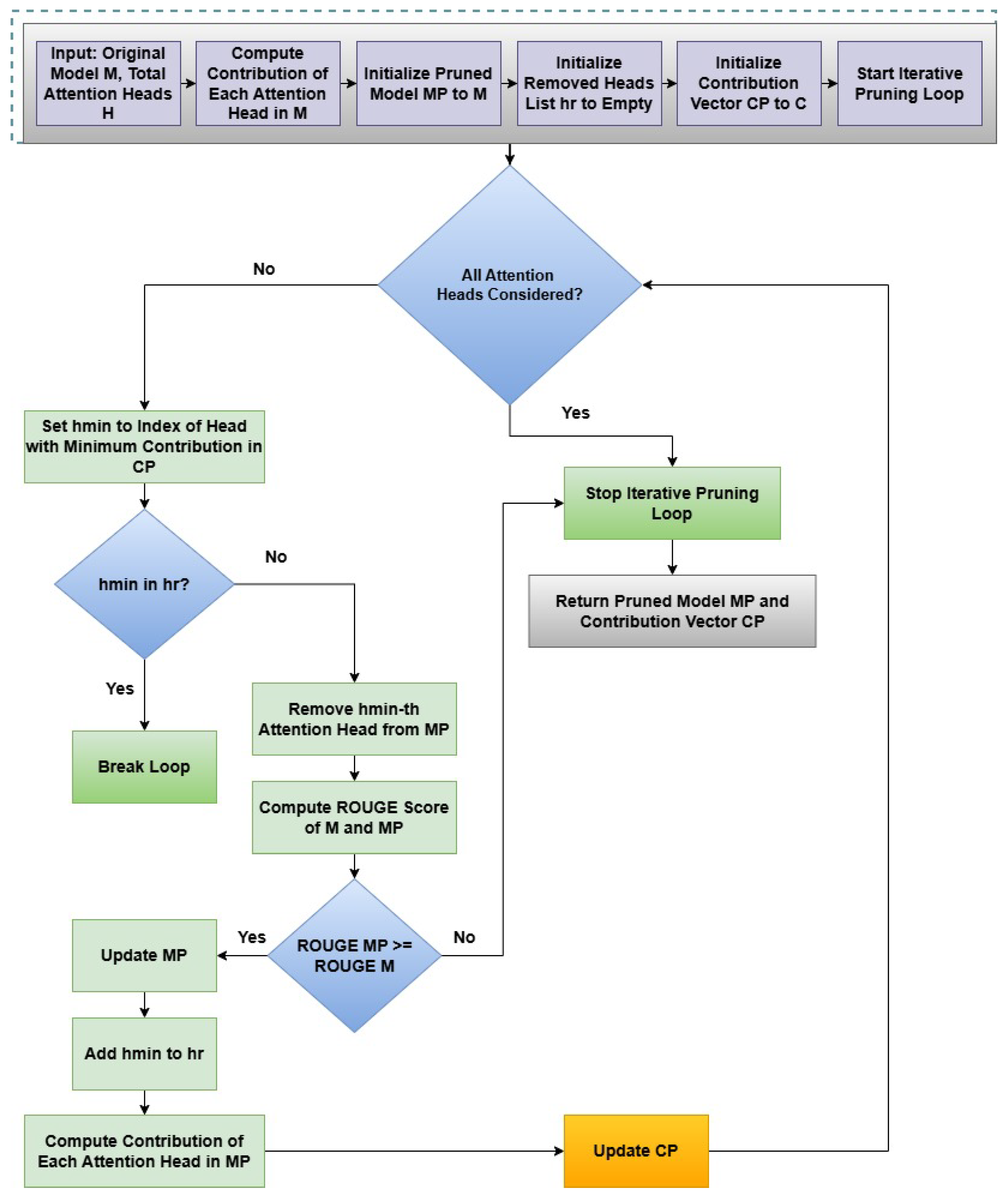

Algorithm 1. Pruning and Summarization with Efficient GPT-2, T5, and BART Models

Require:

- The number of transformer blocks (layers) in the encoder and decoder to balance model complexity and generalization.

- The number of attention heads in the multi-head attention mechanism to allow the model to focus on different parts of the input text simultaneously.

- The embedding size, chosen to capture sufficient information while maintaining computational efficiency.

- The size of the feed-forward neural network within each transformer block to adjust the model’s capacity and computational cost.

- The dropout rate applied to transformer layers to prevent overfitting by randomly dropping units during training.

- The learning rate used by the optimization algorithm (e.g., Adam, SGD) to ensure fast and stable convergence.

- The batch size, i.e., the number of sentences or paragraphs processed per training step, for stable gradient estimation.

- The maximum sequence length for input and output to capture adequate context while efficiently using computational resources.

3.2.2. Perform Iterative Attention Head Pruning with Individual Contribution Computation

Algorithm 2. Attention Head Pruning Based on Individual Contribution in Multi-Head Attention
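One common way to formalize the contribution of an individual head uses binary head masks, in the spirit of the head-masking analysis of Michel et al. listed in the references. The formulation below is a sketch: the mask variables $m_h$, the validation score $R$ (for example ROUGE), the contribution symbol $C_h$, and the threshold $\tau$ are notation assumed here for illustration and are not taken from Algorithm 2.

```latex
\begin{aligned}
\mathrm{head}_h &= \mathrm{softmax}\!\left(\frac{Q_h K_h^{\top}}{\sqrt{d_k}}\right) V_h, \\
\mathrm{MultiHead}(X) &= \mathrm{Concat}\big(m_1\,\mathrm{head}_1,\ \dots,\ m_H\,\mathrm{head}_H\big)\,W^{O},
\qquad m_h \in \{0, 1\}, \\
C_h &= R\big(m_1 = \dots = m_H = 1\big) \;-\; R\big(m_h = 0,\ m_{j \neq h} = 1\big), \\
\text{prune head } h &\iff C_h \le \tau .
\end{aligned}
```

Under this reading, a head with $C_h \le 0$ can be masked without lowering the validation score, and computing $C_h$ for all $H$ heads requires one evaluation per head.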

3.2.3. Generate Summaries Using the Fine-Tuned Pruned Models

3.3. Ablation Study and Pruning Threshold Analysis

3.4. Computational Cost Measurement

4. Results

4.1. Experimental Setup and Statistical Validation

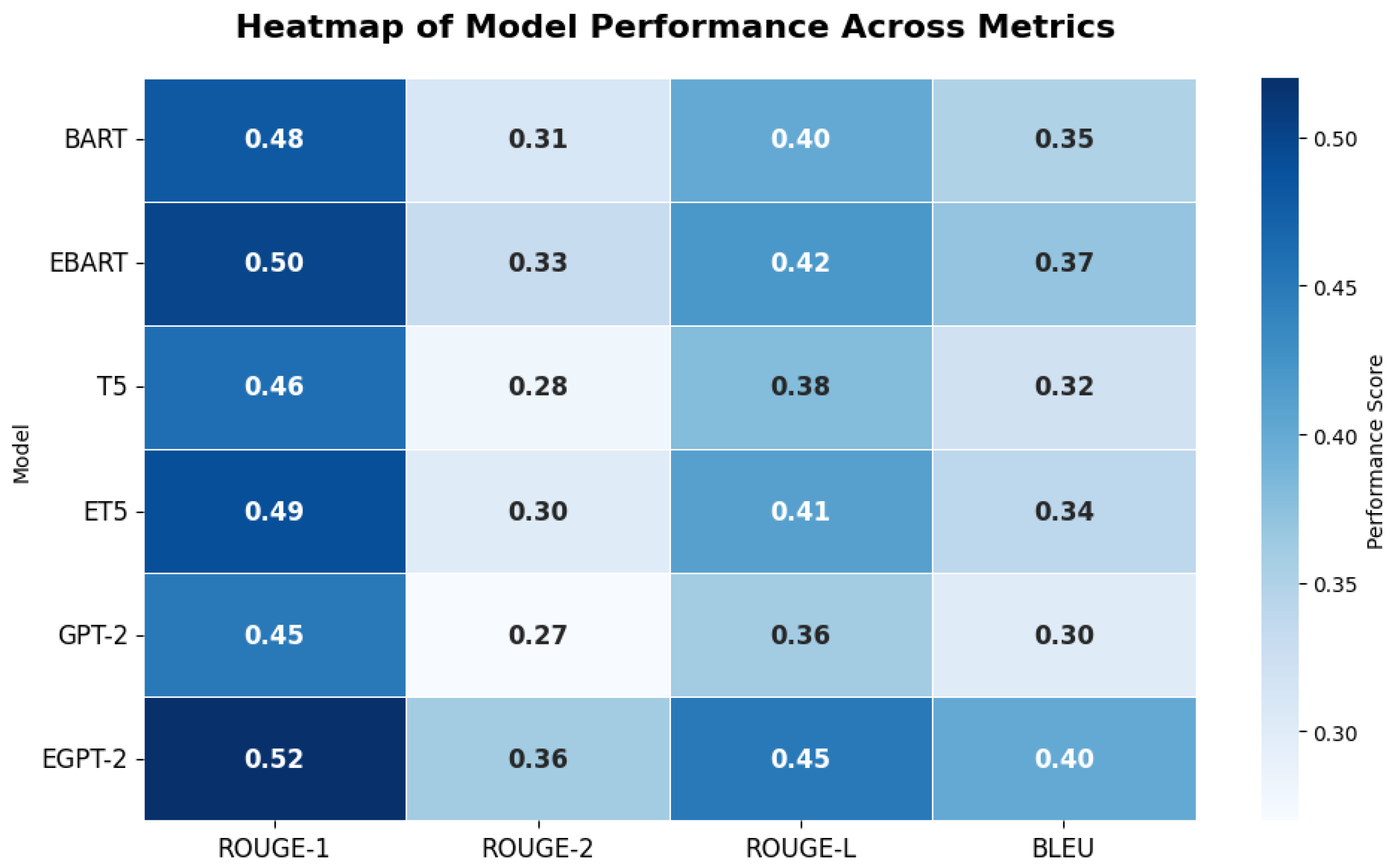

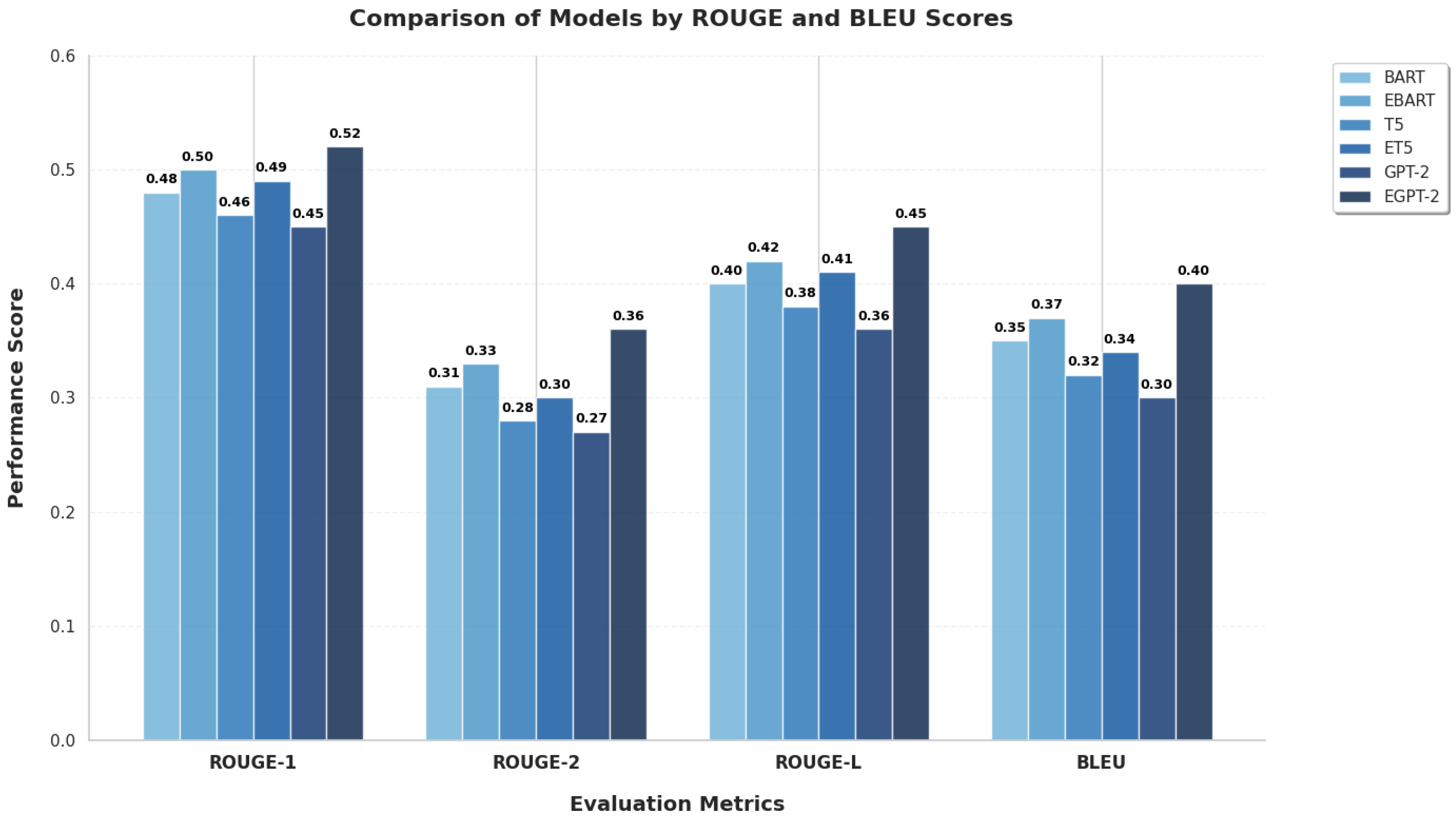

4.2. Overall Performance Analysis

4.3. Extended Ablation and Pruning Threshold Evaluation

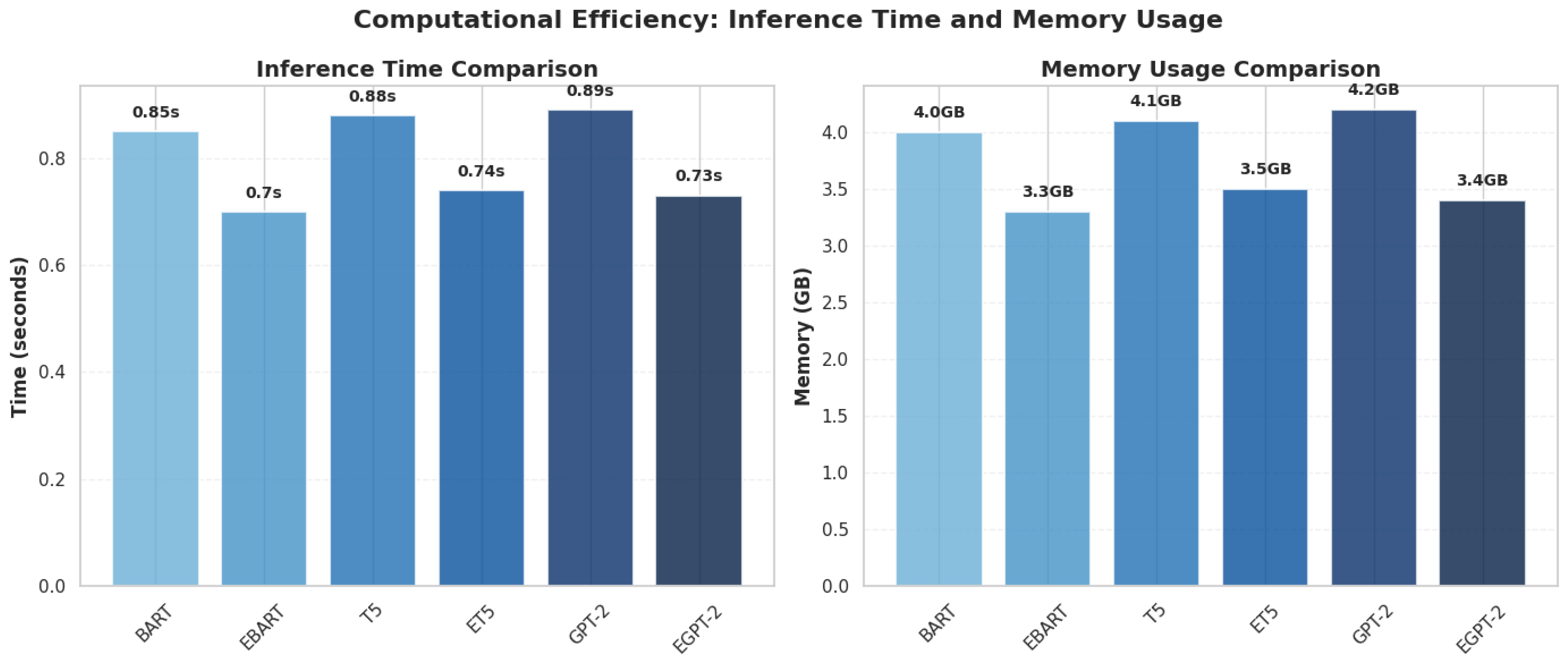

4.4. Computational Efficiency Analysis

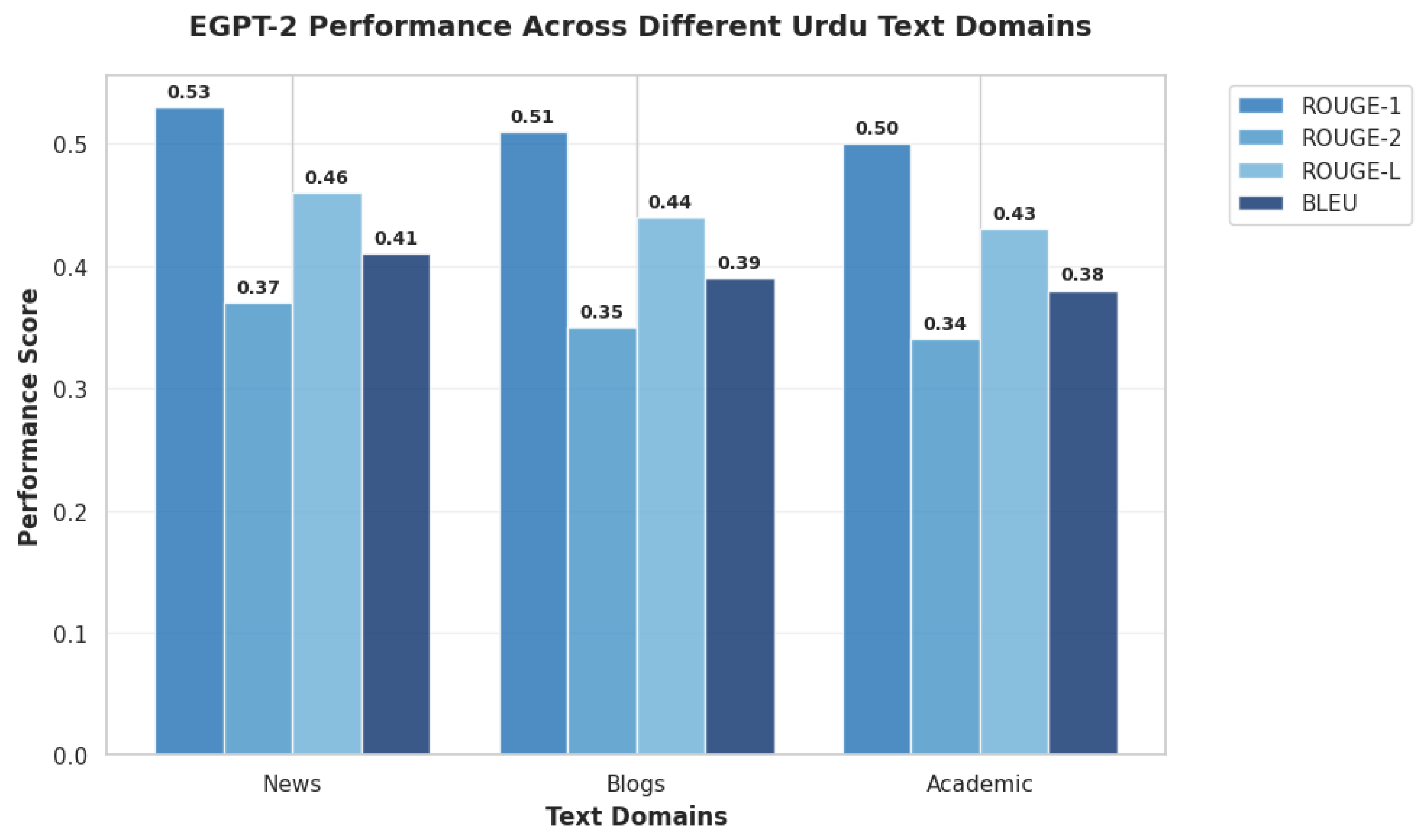

4.5. Cross-Domain Generalization

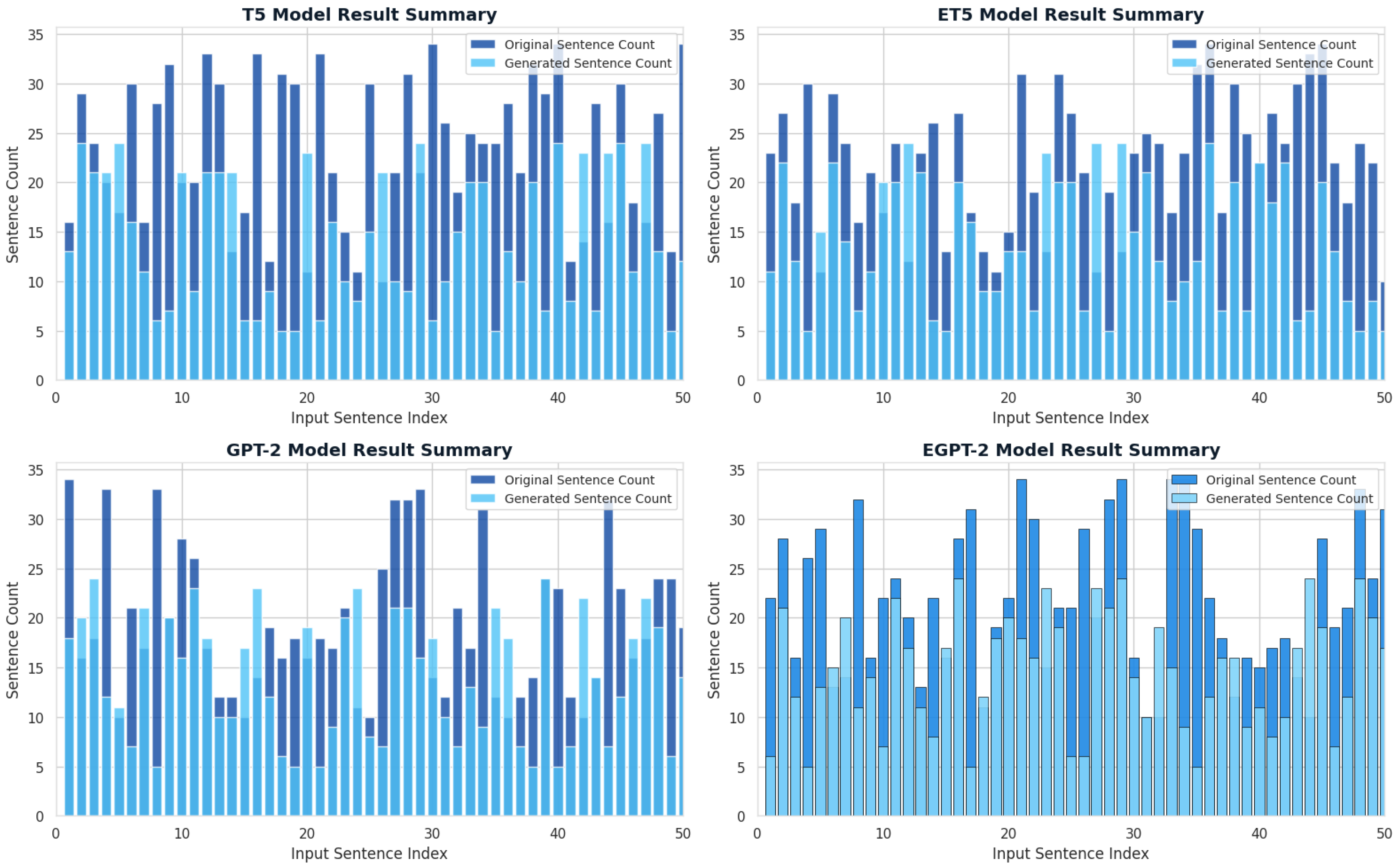

4.6. Impact of Input Sentence Count on Summary Generation

4.7. Qualitative Analysis and Model Comparison

5. Discussion

5.1. Interpretation of Results and Comparison with Prior Work

5.2. Answering Research Questions

- Can selective attention head pruning improve performance and efficiency? Yes, our results clearly show that all pruned models (EBART, ET5, and EGPT-2) outperform their original counterparts across all metrics while achieving significant reductions in inference time (16–22%) and memory usage (15–19%).

- How do different architectures respond to pruning? All three architectures benefited from pruning, but GPT-2 showed the greatest improvement, suggesting that decoder-only models may be particularly amenable to this optimization technique for generative tasks.

- What is the optimal pruning threshold? Our ablation study identified 30% as the optimal pruning ratio for EGPT-2, balancing performance gains with computational efficiency. Beyond this threshold, performance degradation occurs, validating our contribution-based iterative approach.

- How do optimized models generalize across domains? EGPT-2 maintained strong performance (ROUGE-1: 0.50–0.53) across news, blogs, and academic texts, demonstrating robust cross-domain generalization capabilities.

5.3. Theoretical and Practical Implications

5.4. Urdu vs. English Processing Considerations

6. Conclusions and Future Work

- Developing a novel attention head pruning framework specifically tailored for low-resource languages;

- Demonstrating that model optimization can simultaneously improve performance and computational efficiency;

- Providing a comprehensive evaluation methodology for Urdu abstractive summarization;

- Establishing transformer-based models as viable solutions for Urdu NLP tasks.

Future research directions include:

- Extending the pruning methodology to other low-resource languages with similar morphological complexity;

- Integrating Urdu-specific morphological embeddings to enhance semantic understanding and capture language-specific features;

- Investigating dynamic pruning techniques that adapt to input characteristics and domain requirements;

- Deploying and evaluating our models in real-time applications such as streaming news feeds and social media monitoring;

- Exploring multi-modal summarization approaches that combine text with other data modalities;

- Developing domain adaptation techniques to further improve performance in specialized domains.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Muhammad, M.; Jazeb, N.; Martinez-Enriquez, A.; Sikander, A. EUTS: Extractive Urdu Text Summarizer. In Proceedings of the 2018 17th Mexican International Conference on Artificial Intelligence (MICAI), Guadalajara, Mexico, 22–27 October 2018; pp. 39–44.

- Yu, Z.; Du, P.; Yi, L.; Luo, W.; Li, D.; Zhao, B.; Li, L.; Zhang, Z.; Zhang, J.; Zhang, J.; et al. Coastal Zone Information Model: A comprehensive architecture for coastal digital twin by integrating data, models, and knowledge. Fundam. Res. 2024.

- Vijay, S.; Rai, V.; Gupta, S.; Vijayvargia, A.; Sharma, D.M. Extractive text summarisation in Hindi. In Proceedings of the 2017 International Conference on Asian Language Processing (IALP), Singapore, 5–8 December 2017; pp. 318–321.

- Rahimi, S.R.; Mozhdehi, A.T.; Abdolahi, M. An overview on extractive text summarization. In Proceedings of the 2017 IEEE 4th International Conference on Knowledge-Based Engineering and Innovation (KBEI), Tehran, Iran, 22 December 2017; pp. 54–62.

- Daud, A.; Khan, W.; Che, D. Urdu language processing: A survey. Artif. Intell. Rev. 2016, 27, 279–311.

- Ali, A.R.; Ijaz, M. Urdu text classification. In Proceedings of the 2019 6th International Conference on Frontiers of Information Technology, Islamabad, Pakistan, 16–18 December 2009; pp. 1–4.

- Lewis, M.; Liu, Y.; Goyal, N.; Ghazvininejad, M.; Mohamed, A.; Levy, O.; Zettlemoyer, L. BART: Denoising sequence-to-sequence pre-training for natural language generation, translation, and comprehension. arXiv 2019, arXiv:1910.13461.

- Egomnwan, E.; Chali, Y. Transformer-based model for single documents neural summarization. In Proceedings of the 2019 3rd Workshop on Neural Generation and Translation, Hong Kong, China, 4 November 2019; pp. 70–79.

- Abolghasemi, M.; Dadkhah, C.; Tohidi, N. HTS-DL: Hybrid text summarization system using deep learning. In Proceedings of the 2022 27th International Computer Conference, Computer Society of Iran (CSICC), Tehran, Iran, 23–24 February 2022; pp. 1–6.

- Jiang, J.; Zhang, H.; Dai, C.; Zhao, Q.; Feng, H.; Ji, Z.; Li, Y. Enhancements of attention-based bidirectional LSTM for hybrid automatic text summarization. IEEE Access 2021, 9, 123660–123671.

- Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. RoBERTa: A Robustly Optimized BERT Pretraining Approach. arXiv 2019, arXiv:1907.11692.

- Chowdhury, S.A.; Abdelali, A.; Darwish, K.; Soon-Gyo, J.; Salminen, J.; Jansen, B.J. Improving Arabic Text Categorization Using Transformer Training Diversification. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 16–20 November 2020; pp. 226–236.

- Farooq, A.; Batool, S.; Noreen, Z. Comparing different techniques of Urdu text summarization. In Proceedings of the 2021 Mohammad Ali Jinnah University International Conference on Computing (MAJICC), Karachi, Pakistan, 15–16 December 2021; pp. 1–6.

- Asif, M.; Raza, S.A.; Iqbal, J.; Perwatz, N.; Faiz, T.; Khan, S. Bidirectional encoder approach for abstractive text summarization of Urdu language. In Proceedings of the 2022 International Conference on Business Analytics for Technology and Security (ICBATS), Dubai, United Arab Emirates, 16–17 February 2022; pp. 1–6.

- Khyat, J.; Lakshmi, S.S.; Rani, M.U. Hybrid Approach for Multi-Document Text Summarization by N-Gram and Deep Learning Models. J. Intell. Syst. 2021, 30, 123–135.

- Mujahid, K.; Bhatti, S.; Memon, M. Classification of URDU headline news using Bidirectional Encoder Representation from Transformer and Traditional Machine learning Algorithm. In Proceedings of the IMTIC 2021 - 6th International Multi-Topic ICT Conference: AI Meets IoT: Towards Next Generation Digital Transformation, Karachi, Pakistan, 24–25 November 2021; pp. 1–6.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is All You Need. In Proceedings of the 31st International Conference on Neural Information Processing Systems (NeurIPS), Long Beach, CA, USA, 4–9 December 2017; pp. 6000–6010.

- Lin, C.Y. ROUGE: A Package for Automatic Evaluation of Summaries. In Proceedings of the Text Summarization Branches Out, Barcelona, Spain, 25–26 July 2004; pp. 74–81.

- Wolf, T.; Debut, L.; Sanh, V.; Chaumond, J.; Delangue, C.; Moi, A.; Cistac, P.; Rault, T.; Louf, R.; Funtowicz, M.; et al. Transformers: State-of-the-Art Natural Language Processing. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing: System Demonstrations, Online, 16–20 November 2020; pp. 38–45.

- Michel, P.; Levy, O.; Neubig, G. Are Sixteen Heads Really Better than One? In Proceedings of the Advances in Neural Information Processing Systems 32 (NeurIPS 2019), Vancouver, BC, Canada, 8–14 December 2019; pp. 14014–14024.

- Cheema, A.S.; Azhar, M.; Arif, F.; Sohail, M.; Iqbal, A. EGPT-SPE: Story Point Effort Estimation Using Improved GPT-2 by Removing Inefficient Attention Heads. Appl. Intell. 2025, 55, 994.

- Savelieva, A.; Au-Yeung, B.; Ramani, V. Abstractive Summarization of Spoken and Written Instructions with BERT. arXiv 2020, arXiv:2008.09676.

- Xue, L.; Constant, N.; Roberts, A.; Kale, M.; Al-Rfou, R.; Siddhant, A.; Barua, A.; Raffel, C. mT5: A Massively Multilingual Pre-trained Text-to-Text Transformer. arXiv 2020, arXiv:2010.11934.

- Munaf, M.; Afzal, H.; Mahmood, K.; Iltaf, N. Low Resource Summarization Using Pre-trained Language Models. ACM Trans. Asian Low-Resour. Lang. Inf. Process. 2024, 23, 141.

- Salau, A.O.; Arega, K.L.; Tin, T.T.; Quansah, A.; Sefa-Boateng, K.; Chowdhury, I.J.; Braide, S.L. Machine learning-based detection of fake news in Afan Oromo language. Bull. Electr. Eng. Inform. 2024, 13, 4260–4272.

- Rauf, F.; Irfan, R.; Mushtaq, L.; Ashraf, M. Fake News Detection in Urdu Using Deep Learning. VFAST Trans. Softw. Eng. 2022, 10, 151–165.

- Azhar, M.; Amjad, A.; Dewi, D.A.; Kasim, S. A Systematic Review and Experimental Evaluation of Classical and Transformer-Based Models for Urdu Abstractive Text Summarization. Information 2025, 16, 784.

| Model | ROUGE-1 | ROUGE-2 | ROUGE-L | BLEU Score |
|---|---|---|---|---|
| BART | 0.48 | 0.31 | 0.40 | 0.35 |
| EBART | 0.50 | 0.33 | 0.42 | 0.37 |
| T5 | 0.46 | 0.28 | 0.38 | 0.32 |
| ET5 | 0.49 | 0.30 | 0.41 | 0.34 |
| GPT-2 | 0.45 | 0.27 | 0.36 | 0.30 |
| EGPT-2 | 0.52 | 0.36 | 0.45 | 0.40 |
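The ROUGE-1 and ROUGE-2 columns above are n-gram overlap scores between a generated summary and a reference summary. The sketch below shows how such a score can be computed in simplified form. It assumes whitespace tokenization, no stemming, and the F1 variant of the metric (the text does not state whether precision, recall, or F1 is reported). The function names are illustrative, and this is not the official ROUGE package.

```python
from collections import Counter
from typing import List


def ngrams(tokens: List[str], n: int) -> Counter:
    """Multiset of n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n_f1(candidate: str, reference: str, n: int = 1) -> float:
    """Simplified ROUGE-N F1 using whitespace tokens (no stemming)."""
    cand = ngrams(candidate.split(), n)
    ref = ngrams(reference.split(), n)
    overlap = sum((cand & ref).values())   # clipped n-gram matches
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


reference = "the cat sat on the mat"
candidate = "the cat lay on the mat"
r1 = rouge_n_f1(candidate, reference, n=1)  # 5 of 6 unigrams match
r2 = rouge_n_f1(candidate, reference, n=2)  # 3 of 5 bigrams match
```

Because the two example sentences have the same length, precision and recall coincide, giving 5/6 for unigrams and 3/5 for bigrams. Since whitespace splitting is language-agnostic, the same function can be applied to space-delimited Urdu text, although a proper Urdu tokenizer would handle word boundaries more reliably.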

| Pruning Ratio | ROUGE-1 | Inference Time (s) | Memory Usage (GB) |
|---|---|---|---|
| 0% (Original) | 0.45 | 0.89 | 4.2 |
| 15% | 0.48 | 0.78 | 3.7 |
| 30% | 0.52 | 0.73 | 3.4 |
| 45% | 0.49 | 0.70 | 3.1 |
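The choice of pruning ratio from this table can be expressed as a simple selection rule. The sketch below copies the table values and picks the row with the highest ROUGE-1, breaking ties in favor of lower inference time. The names `AblationRow` and `best_ratio` are hypothetical, and the tie-breaking rule is an assumption for the example.

```python
from typing import List, NamedTuple


class AblationRow(NamedTuple):
    ratio: float        # fraction of attention heads pruned
    rouge1: float
    inference_s: float
    memory_gb: float


# Values copied from the pruning-ratio table above.
ROWS: List[AblationRow] = [
    AblationRow(0.00, 0.45, 0.89, 4.2),
    AblationRow(0.15, 0.48, 0.78, 3.7),
    AblationRow(0.30, 0.52, 0.73, 3.4),
    AblationRow(0.45, 0.49, 0.70, 3.1),
]


def best_ratio(rows: List[AblationRow]) -> AblationRow:
    """Highest ROUGE-1 wins; ties go to the faster configuration."""
    return max(rows, key=lambda r: (r.rouge1, -r.inference_s))


selected = best_ratio(ROWS)
```

Under this rule the 30% row is selected. A different objective, such as the cheapest setting that still matches the unpruned ROUGE-1, would favor a more aggressive ratio, so the selection criterion should be stated alongside the chosen threshold.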

| Model | Inference Time (s) | Inference Time Reduction | Memory Usage (GB) | Memory Reduction |
|---|---|---|---|---|
| BART | 0.85 | - | 4.0 | - |
| EBART | 0.70 | 18% | 3.3 | 17% |
| T5 | 0.88 | - | 4.1 | - |
| ET5 | 0.74 | 16% | 3.5 | 15% |
| GPT-2 | 0.89 | - | 4.2 | - |
| EGPT-2 | 0.73 | 22% | 3.4 | 19% |
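A reduction percentage of this kind is conventionally the saving relative to the unpruned baseline. The helper below is a minimal sketch of that arithmetic. The function name is an assumption, and because the table entries are rounded, values recomputed from them need not match every reported percentage exactly.

```python
def relative_reduction(baseline: float, optimized: float) -> float:
    """Percentage saved relative to the baseline measurement."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return 100.0 * (baseline - optimized) / baseline


# Inference times (s) taken from the table above.
ebart_time_saving = relative_reduction(0.85, 0.70)  # BART -> EBART
et5_time_saving = relative_reduction(0.88, 0.74)    # T5 -> ET5
```

For example, the BART to EBART inference time gives (0.85 - 0.70) / 0.85, which is about 17.6% and rounds to the 18% shown in the table.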

| Domain | ROUGE-1 | ROUGE-2 | ROUGE-L | BLEU |
|---|---|---|---|---|
| News | 0.53 | 0.37 | 0.46 | 0.41 |
| Blogs | 0.51 | 0.35 | 0.44 | 0.39 |
| Academic | 0.50 | 0.34 | 0.43 | 0.38 |
| Average | 0.51 | 0.35 | 0.44 | 0.39 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Azhar, M.; Amjad, A.; Farid, G.; Dewi, D.A.; Batumalay, M. Efficient Transformer-Based Abstractive Urdu Text Summarization Through Selective Attention Pruning. Information 2025, 16, 991. https://doi.org/10.3390/info16110991