Abstract

The role of technology in translator training has become increasingly significant as machine translation (MT) evolves at a rapid pace. Beyond practical tool usage, training must now prepare students to engage critically with MT outputs and understand the socio-technical dimensions of translation. Traditional sentence-level MT evaluation, often conducted on isolated segments, can overlook discourse-level errors or produce misleadingly high scores for sentences that appear correct in isolation but are inaccurate within the broader discourse. Document-level MT evaluation has emerged as an approach that offers a more accurate perspective by accounting for context. This article presents the integration of context-aware MT evaluation into an MA-level translation module, in which students conducted a structured exercise comparing sentence-level and document-level methodologies, supported by reflective reporting. The aim was to familiarise students with context-aware evaluation techniques, expose the limitations of single-sentence evaluation, and foster a more nuanced understanding of translation quality. This study provides methodological insights for incorporating MT evaluation training into translator education and highlights how such exercises can develop critical awareness of MT’s contextual limitations. It also offers a framework for supporting students in building the analytical skills needed to evaluate MT output in professional and research settings.

1. Introduction

The role of technology in translator training has been a subject of study since technology became prominent in the wider field of translation. Teaching translation technology to translators is not only important for practical purposes but also for understanding the historical and socio-technical aspects of translation [1].

Research on translator training in relation to technical competencies has been extensive. Among the earliest initiatives to integrate Statistical Machine Translation (SMT) into translator education were those by Doherty et al. [2] and Doherty and Kenny [3]. At the time, SMT was not widely taught, and when it was, the role of translators in the SMT workflow was constructed in a limiting way, due to the tendency “to see post-editing as the only role for translators (who then morph into post-editors) in such workflows” [3] (pp. 277–287). Their findings demonstrated that when translators take an active rather than passive role in engaging with MT technologies, they gain empowerment through increased familiarity with and understanding of the developers’ perspective. These studies also advanced a translator-oriented pedagogical approach that positions translators as informed users and evaluators of MT, rather than as passive post-editors. Their results showed a significant increase in students’ SMT self-efficacy and critical awareness, highlighting the importance of integrating MT across the curriculum and foreshadowing the shift from SMT to NMT and AI-based approaches [4].

With the emergence of Neural MT (NMT) systems and the improvement in translation quality, particularly for languages where SMT was lacking, students have increasingly embraced MT and rely on it extensively [5]. Thus, it is crucial to educate translators in training about the capabilities and limitations of the available MT systems. As Winters et al. [6] note, “the shifting position of professional translators towards engagement with translation technologies, including MT, adds weight and urgency to the value of foregrounding the role of the translator in our research.” This broader shift underscores the importance of exploring pedagogical models that centre translators as critical users and expert evaluators of MT outputs, rather than as passive consumers of automated translations.

Evidently, there is widespread acknowledgment that education in translation technology must adapt to new technologies and the evolving nature of the translation sector [7]. Therefore, this article advocates for the importance of teaching MT evaluation from a perspective that integrates context-aware evaluation, which has gained increasing attention in MT research with the advent of NMT systems and Large Language Models (LLMs). While the role of context has long been recognised in translation studies, its systematic application in MT evaluation has only recently emerged as a critical area of research, driven by the need for more rigorous assessment methodologies [8]. With the goal of developing and testing a methodology in which context-aware MT evaluation can be taught in a way that advances the role of translators in training, giving them an active role as the experts in the evaluation of MT systems, we pose the following questions:

- Q1: How can context-aware translation evaluation activities be effectively implemented within the constraints of an MA translation technology lab setting?

- Q2: What is the impact of context-aware MT evaluation on students’ perceived ability to assess translation quality compared to sentence-level evaluation?

2. Related Work

2.1. MT Evaluation in the Classroom

Human evaluation of MT has been fundamental to the field since its inception. Assessing the quality of MT systems involves the use of either automatic or human evaluation methods, each possessing its own strengths and weaknesses. Scholars have long emphasised the importance of integrating MT education into translator training, ensuring that students can apply and critically select appropriate evaluation methods for different contexts [3] (p. 285). Over the past decade, various studies and curricular frameworks have explored how MT-related competencies—including post-editing, error analysis, and evaluation—can best be developed in translator education. Earlier surveys (e.g., [9]) already pointed to the need for stronger training in quality assessment methods and tools, a call that continues to resonate in more recent discussions of MT pedagogy [6,7,10]. Reflections on MT in translation studies have also consistently underscored the need for context in evaluation (e.g., [11,12,13]).

With the paradigm shift from SMT to NMT models, the importance of MT evaluation became even more pronounced as researchers sought to assess improvements in fluency and adequacy, as well as new types of errors introduced by NMT. Moreover, studies from the SMT-to-NMT transition period show that comparative evaluations played a key role in helping students understand MT quality [14]. A practical example of such an evaluation exercise is described in Moorkens [15], conducted within the same module as the present research. Students compared the quality of SMT and NMT systems through post-editing, adequacy, and error typology tasks, reporting increased confidence and interest in working with NMT. This experiment demonstrated how structured activities can empower students and enhance their critical engagement with machine translation. Although SMT has since been phased out, such early evaluation-based activities helped to establish the foundation for integrating MT evaluation training into translator education, equipping students with transferable skills for assessing evolving MT systems.

Various other studies have implemented MT in the classroom, not focusing on evaluation per se, but including post-editing training [16], task-based approaches (e.g., terminology, word order, passive voice) [14], post-editing training and its impact on creativity [17], and post-editing and pricing strategies [18], among others.

Building upon the argument in Kenny [1] that “curricula have to be updated to integrate technologies such as neural MT” and that “translation students and practitioners have to remain alert to the possibility of AI bias in MT” (p. 508), we argue here that teaching new methodologies for MT evaluation is as essential as teaching about the technology itself, particularly given the current stage of advancement in the translation field, with generative AI being used not only for translation but also for evaluation.

The following section reviews evaluation methods that incorporate document-level context to assess both translation quality and potential harms in MT outputs, the methodological basis for the classroom exercise described here. Although a number of studies have begun to address context-aware evaluation, the literature remains limited, and, to our knowledge, no prior work has examined how these insights can be systematically integrated into translator training.

2.2. The Role of Context in MT Evaluation

With the rise of NMT systems and the emergence of LLMs, assessing these systems has become increasingly challenging due to their high fluency, tendency to generate plausible yet inaccurate translations (hallucinations), and the necessity of discourse-level evaluation to capture coherence across longer texts. Differentiating between MT output and human translation has proven difficult using current human evaluation methods [19,20], as ‘segment-level scores may not provide a sufficiently comprehensive framework to address the intricate challenges introduced by context-aware translations’ [8]. As a result, there is a growing demand for assessment approaches that better account for these dimensions of context. One such approach, which has gained increasing attention in the MT community, is document-level human evaluation, as it allows for a more thorough assessment of suprasentential coherence and discourse-level phenomena.

Several studies have attempted to determine the amount of context necessary for translators [21], as well as various methodologies for incorporating context into MT evaluation [22,23,24]. (For a comprehensive survey on the role of context in MT evaluation, see Castilho and Knowles [25].) In the methodology presented in Castilho [22,23], sentences were evaluated in a (i) single-sentence random set-up and (ii) sentences-in-context set-up. The author found that, even though inter-annotator agreement was higher in the single-sentence condition (i), there was also a high number of misevaluation cases in that set-up. Moreover, the context-evaluation (ii) enabled translators to detect more harmful errors and increased their confidence in the evaluation process. The author notes that the high IAA scores in the single sentence setup are due to the fact that translators “tend to accept the translation when adequacy is ambiguous but the translation is correct, especially if it is fluent” (p. 42). Following this line of research, both the Conference on Machine Translation (WMT) and the Metrics Shared Task have incorporated context-aware evaluation methodologies since 2021. Building on this, Freitag et al. [24] conducted a large-scale study comparing professional translators and crowd workers in human evaluation of MT output. Using WMT’s evaluation framework (including MQM), they compared segment-level assessments with and without access to full document context. Their findings showed that crowd-worker evaluations correlated weakly with expert MQM judgments and often produced divergent system rankings. Moreover, access to document-level context led professionals to identify a wider range of discourse-level errors and to adjust quality scores accordingly. These results underscore the importance of context in reliable MT evaluation and highlight the need to train student translators, as future professional evaluators, to recognise such context-dependent errors, which motivates the pedagogical focus of the present study.

It is evident that the field of MT evaluation is dynamic, and the most effective methodologies for conducting context-aware human evaluations are yet to be identified. However, it is vital to show translators in training the fast-changing field, as they need to be able to identify the strengths, weaknesses, and possible harms of these advanced systems with a more rigorous evaluation. While there has been some research into context-aware MT evaluation, no work has yet explored how to bring these insights into the context of translator training. In light of this, the present classroom exercise sought to bridge this gap by introducing master’s students to emerging methodologies for MT evaluation, adapting the experimental framework proposed by Castilho [22,23] to a translator training context.

3. Context of This Study and Educational Setting

This evaluation task was administered to two cohorts of master’s students at Dublin City University as part of the Translation Technology module. This module is a mandatory component of both the MA in Translation Studies (for more information, refer to https://www.dcu.ie/courses/postgraduate/salis/ma-translation-studies (accessed on 4 November 2025)) and the MSc in Translation Technology (for more information, refer to https://www.dcu.ie/courses/postgraduate/salis/msc-translation-technology (accessed on 4 November 2025)) programmes.

3.1. The Translation Technology Module

As mentioned earlier, this task was conducted during the Translation Technology lab, a module designed to familiarize students with specialized technologies utilized in the translation field. The module carries a weight of 10 ECTS (European Credit Transfer System) credits, aligning with the standards set by the Irish higher education sector. This equates to one-sixth of the overall taught master’s programme. The primary objective of this module is to provide students with hands-on experience and promote critical reflection on the utility of these technologies. Some of the learning objectives of the module include the following:

- Understanding the principles underlying contemporary translation technologies;

- Enhancing texts for machine translation or human translation facilitated by a translation memory tool;

- Grasping the principles of contemporary machine translation;

- Critically assessing contemporary translation technologies and their outputs;

- Making informed decisions regarding the utilization of these technologies while considering associated risks;

- Understanding current professional, socio-technical, and ethical issues and trends related to technology in the profession, research, and industry/market.

Specifically, the MT evaluation exercise presented here supports the objectives of grasping the principles of contemporary MT, and critically assessing translation technologies and their outputs.

3.1.1. Lectures

The Translation Technology module comprises eleven two-hour lectures and eleven one-hour labs, with labs scheduled after the lectures. During the initial four weeks of the module, the focus is on Computer-Assisted Translation (CAT) tools, covering topics such as the evolution of the profession, technical aspects of tools, and socio-technical issues in translation technology. Correspondingly, the first four labs are dedicated to instructing students on the utilization of two CAT tools, explaining their features in alignment with the lectures. It is important to note that, at this stage, students work exclusively with translation memory functions, without any integration of MT into the CAT environment. This separation is pedagogically deliberate, allowing students to develop a foundational understanding of CAT principles before encountering MT as a distinct technology.

Beginning from week 5, the curriculum shifts towards MT, commencing with a brief historical overview followed by exploration of different paradigms (rule-based, statistical, and neural MT, and Large Language Models since 2023). Subsequently, the focus transitions to MT evaluation, encompassing human evaluation, automatic evaluation, and post-editing. The lab sessions in week 6 introduce students to the concept of human evaluation, particularly emphasising fluency, adequacy, and error annotation. At this stage, the goal is to familiarise students with the main MT evaluation criteria and methodologies rather than to produce robust assessment outcomes. Weeks 8 and 9 centre on learning to automatically evaluate and train an NMT system online, and using online platforms with automatic metrics.

The context-aware evaluation task was assigned to students during the week 10 lab session, subsequent to an extensive lecture on human evaluation.

3.1.2. Student Demographics

Approximately one-third of the students enrolled in the program are of Irish nationality, with English as their native language or, to a lesser extent, Irish. The remaining cohort primarily comprises students from other European Union countries where English is not the primary language, as well as individuals from regions including North and South America, Asia, and the Middle East. Their ages typically range from 22 to 50, with an average age of approximately 26. The majority of students hold undergraduate degrees in languages or combinations with other disciplines, while a minority possess undergraduate degrees in translation studies. Upon admission, students generally exhibit proficiency in standard office applications, though they typically lack experience in computer programming. Additionally, the majority have limited exposure to translation technologies, with only a handful having encountered technologies with basic interfaces such as online MT interfaces. In 2022, the module had 30 students enrolled, increasing to 38 students in 2023.

4. Methodological Setup: Sentence vs. Document Evaluation Lab

As previously mentioned, the lab comparing sentence- and document-level evaluation was conducted in week 10, following students’ exposure to human and automatic evaluation metrics. The activity was designed to align with students’ prior exposure to MT evaluation [26] and to make efficient use of lab resources and class time. The focus was on integrating context-aware evaluation into the lab in a way that is both feasible and pedagogically coherent.

Specifically, the lab aimed to expose students to the potential limitations of sentence-level MT evaluation by comparing it to document-level evaluation, which takes into account contextual information from surrounding sentences. This comparison was designed to help students understand how context-aware evaluation can lead to more accurate and nuanced assessments of MT output. We note that the quantitative component of this task was not designed for inferential statistical analysis but rather as a pedagogical tool to prompt critical reflection and support qualitative discussion.

4.1. Metrics

As previously mentioned, students had already received lectures on human evaluation metrics and were therefore familiar with the definitions. Nevertheless, each metric was defined in the lab instructions and within the spreadsheet.

A. Adequacy

Adequacy assessment pertains to the conveyed meaning of the translated text. It is measured using a Likert scale, with respondents answering the question: “How much of the meaning expressed in the source appears in the translation?”

- None of it

- Little of it

- Most of it

- All of it

B. Fluency

Fluency assessment concerns the grammatical aspects of the translated text. It is measured using a Likert scale, with respondents answering the question: “How fluent is the translation?”

- No fluency

- Little fluency

- Near native

- Native

C. Error Annotation

Error annotation comprises a simple taxonomy with four error types, plus a “No errors” option:

- Mistranslation: The target content does not accurately represent the source content.

- Untranslated: Content that should have been translated has been left untranslated.

- Word Form: There is a problem in the form of a word (e.g., the English target text has “becomed” instead of “became”).

- Word Order: The word order is incorrect (syntax) (e.g., “The house beautiful is” instead of “The house is beautiful”).

- No errors: The target content is flawless.

D. Ranking

Ranking evaluation requires the translator to select the best version of a translation (MT1 or MT2). If the translator believes that both versions exhibit the same quality, they can choose ‘Both’.
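As an illustration of how the drop-down labels above could be turned into numeric values for later analysis, the following is a minimal sketch rather than a description of the spreadsheet used in the lab. The 1 to 4 ordering of the labels, the `Judgement` record, and the `encode` helper are all assumptions introduced here for illustration.

```python
from dataclasses import dataclass

# Assumed numeric encoding of the drop-down labels (1 = lowest, 4 = highest).
ADEQUACY = {"None of it": 1, "Little of it": 2, "Most of it": 3, "All of it": 4}
FLUENCY = {"No fluency": 1, "Little fluency": 2, "Near native": 3, "Native": 4}

# The four error types plus the "No errors" option, kept as nominal labels.
ERROR_TYPES = ("Mistranslation", "Untranslated", "Word Form", "Word Order", "No errors")


@dataclass(frozen=True)
class Judgement:
    """One annotator's judgement of one MT segment (hypothetical record layout)."""
    segment_id: int
    adequacy: int
    fluency: int
    error: str


def encode(segment_id, adequacy_label, fluency_label, error_label):
    """Convert drop-down labels into a Judgement, rejecting unknown labels."""
    if error_label not in ERROR_TYPES:
        raise ValueError(f"unknown error type: {error_label!r}")
    # A KeyError is raised here if an adequacy or fluency label is misspelt.
    return Judgement(segment_id, ADEQUACY[adequacy_label], FLUENCY[fluency_label], error_label)
```

For example, `encode(1, "Most of it", "Native", "Mistranslation")` would yield a record with adequacy 3 and fluency 4. Keeping error types as labels rather than numbers reflects the fact that they are categories with no inherent order.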

4.2. Materials

The lab materials provided detailed instructions on conducting the evaluation, along with a spreadsheet featuring five distinct tabs:

- Tab 1: Sentence-level evaluation of fluency, adequacy, and error (Figure 1)

- Tab 2: Sentence-level evaluation of ranking (Figure 2)

- Tab 3: Inter-annotator agreement (Figure 3)

- Tab 4: Document-level evaluation of fluency, adequacy, and error (Figure 4)

- Tab 5: Document-level evaluation of ranking (Figure 5).

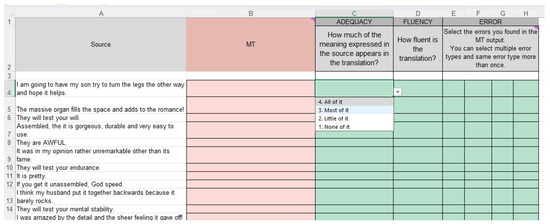

Figure 1.

Tab 1: Sentence-level evaluation of fluency, adequacy, and error. A drop-down menu was available for adequacy, fluency (Likert 1–4) and error (4 categories). The MT column was filled in by the 2022 cohort and pre-filled by the lecturer for the 2023 cohort.

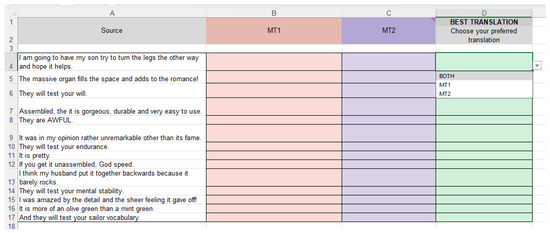

Figure 2.

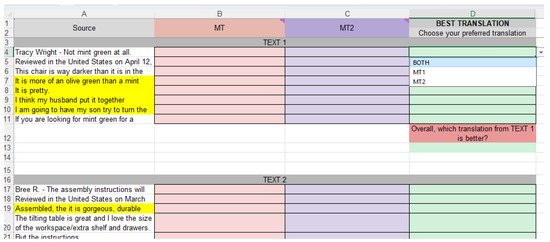

Tab 2: Sentence-level ranking evaluation was conducted using a drop-down menu featuring options for ‘MT1’, ‘MT2’, and ‘BOTH’. The MT1 column was filled in by the 2022 cohort and pre-filled by the lecturer for the 2023 cohort. The MT2 column was filled in by the students themselves.

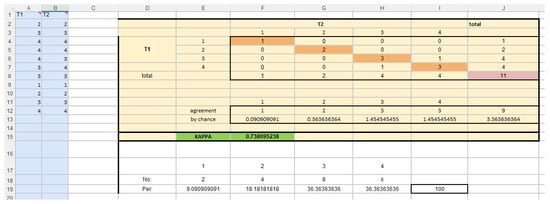

Figure 3.

Tab 3: Inter-annotator agreement calculation. Students filled in Column A and B with their own scores and the scores provided by a colleague.

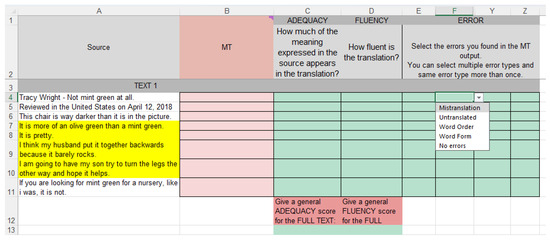

Figure 4.

Tab 4: Document-level evaluation of fluency, adequacy, and error. A drop-down menu was available for adequacy, fluency (Likert 1–4) and error (4 categories). The MT column was filled in by the 2022 cohort and pre-filled by the lecturer for the 2023 cohort. Note that the highlighted sentences were the ones students evaluated in the sentence-level set-up.

Figure 5.

Tab 5: Document-level ranking evaluation was conducted using a drop-down menu featuring options for ‘MT1’, ‘MT2’, and ‘BOTH’. The MT1 column was filled in by the 2022 cohort and pre-filled by the lecturer for the 2023 cohort. The MT2 column was filled in by the students themselves. Note that the highlighted sentences were the ones students evaluated in the sentence-level set-up.

4.3. Corpus

The evaluation tasks were based on the document-level DELA corpus [27], a test suite specifically designed to assess document-level machine translation quality. The corpus contains English-language source texts annotated for six types of context-related phenomena: lexical ambiguity, grammatical gender, grammatical number, terminology, ellipsis, and reference. These annotations identify instances where correct interpretation or translation of a segment depends on document-level context rather than sentence-level cues alone. The annotations were created in the English source texts, not in the translations, with the intention of highlighting context-sensitive challenges that are likely to cause errors in translation, particularly for MT systems that operate at the sentence level. Students were asked to evaluate existing MT outputs in their chosen language pair, but the source of difficulty remained the English-language context. It is important to note that while not every context issue necessarily triggers an error in all language pairs, the selected examples were known (from previous MT evaluations) to be problematic for at least some MT systems and language pairs. Thus, the purpose was not to guarantee an error per sentence, but rather to prompt students to examine context carefully and recognise when context might be necessary for proper evaluation.

For the 2022 evaluation, six full documents were selected from the DELA corpus: four from the Review domain and two from the News domain. From these, 21 context-annotated sentences were selected for the sentence-level evaluation (i.e., presented out of context), while the document-level evaluation included the full texts with the same 21 sentences highlighted. This dual presentation enabled comparison between evaluations performed with and without context. However, based on student feedback and observed time constraints, the 2023 evaluation adopted a lighter version. Only three shorter Review domain documents were used, and the number of context-annotated sentences selected for sentence-level evaluation was reduced to 15.

4.4. Translation Systems

In the 2022 lab session, students were instructed to use an online MT system of their preference to translate the source text into their target language. Specifically, one MT system served as their MT1 for the evaluation of fluency, adequacy, and error (tabs 1 and 4; see Section 4.2), while another MT system served as their MT2 for the ranking evaluation (tabs 2 and 5; see Section 4.2), as the ranking evaluation required two distinct MT systems.

However, for the 2023 lab, in order to streamline the process and save time, the MT1 columns were pre-populated with translations generated by Google Translate for all target languages across all tabs. Subsequently, students were tasked with selecting an online MT system or a large language model of their choice to serve as their MT2. Notably, some students opted to use ChatGPT (https://chat.openai.com/ (accessed on 4 November 2023)) as their MT2.

4.5. The Lab

The lab was designed to raise students’ awareness of how context can affect translation evaluation, and consisted of two sequential tasks:

- Task 1: Sentence-Level Evaluation—Students evaluated a selection of individual sentences for fluency, adequacy, error identification, and ranking, without access to surrounding context. They then collaborated with a peer working in the same language pair to compute inter-annotator agreement (IAA) scores.

- Task 2: Document-Level Evaluation—Students were presented with the full documents from which the same sentences had been extracted. They re-evaluated the same sentences, now situated in their original context, and again computed IAA and compared both sets of scores (from Task 1 and Task 2).

Students were instructed to review the lab instructions. They had the option to download the files and work with them locally or use them on the cloud platform. They were explicitly advised not to discuss their evaluations with peers in the same language pair, in order to minimise scoring influence and preserve the independence of IAA calculations.

We note that re-evaluating the same sentences in Task 2 may introduce a bias stemming from prior exposure. However, this design choice was intentional and pedagogically motivated. By working with the same sentences across both tasks, students were encouraged to reflect critically on how their judgments shifted with access to context. Rather than viewing the second evaluation as “correcting” the first, the aim was to stimulate meta-cognitive insight into the challenges of sentence-level evaluation and the role of document-level information in interpreting MT output. This design enabled students not only to quantify differences in IAA and quality scores across tasks but also to engage in qualitative reflection on the types of context-sensitive errors that are likely to go unnoticed in sentence-level evaluation. In post-lab debriefing and written reflections, several students explicitly commented on their surprise at how context changed their interpretation of MT quality—an intended outcome of the exercise.

4.5.1. Task 1: Sentence-Level Evaluation

To carry out Task 1, students were asked to open the first tab, “1-SENT: Adequacy, Fluency and Errors”, to conduct the human evaluation of a text at sentence level in terms of adequacy, fluency, and error annotation (see Section 4.1). All assessments were performed via a drop-down menu interface (see Figure 1).

Upon completion of tab 1, students moved to tab 2 “2-SENT: Ranking” to perform the ranking evaluation on the same set of sentences, selecting the best translation among MT1, MT2, or Both, using the drop-down menu (see Figure 2). Subsequently, the results of Task 1 were discussed in class.

Following the conclusion of Task 1, students moved to Tab 3 “IAA: Adequacy&Fluency” to compute the IAA for adequacy, fluency, and error. They were instructed to collaborate with another student sharing the same language pair (and using the same MT system, for the 2022 cohort). The tab already contained Cohen’s Kappa formula [28], only requiring students to input their scores as Translator 1 (T1) and their classmate’s scores as Translator 2 (T2) (see Figure 3). They then analysed their scores, and the results were discussed in class.
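To make the IAA step more concrete, the following is a minimal sketch of how Cohen’s Kappa can be computed for two annotators. It is not the spreadsheet formula used in the lab: it assumes the unweighted form of the coefficient, in which observed agreement is corrected by the agreement expected by chance from each annotator’s label frequencies, and the function name `cohen_kappa` and the example scores are hypothetical.

```python
from collections import Counter


def cohen_kappa(t1, t2):
    """Unweighted Cohen's kappa for two equally long lists of labels."""
    if len(t1) != len(t2) or not t1:
        raise ValueError("both annotators must score the same non-empty set of items")
    n = len(t1)
    # Proportion of items on which the two annotators chose the same label.
    observed = sum(a == b for a, b in zip(t1, t2)) / n
    # Agreement expected by chance, from each annotator's label frequencies.
    c1, c2 = Counter(t1), Counter(t2)
    expected = sum(c1[label] * c2[label] for label in c1) / (n * n)
    if expected == 1.0:
        # Both annotators used one identical label throughout: kappa is undefined.
        return float("nan")
    return (observed - expected) / (1 - expected)


# Hypothetical adequacy scores (1-4) from Translator 1 and Translator 2.
t1_scores = [4, 3, 3, 2, 4, 1]
t2_scores = [4, 3, 2, 2, 4, 2]
kappa = cohen_kappa(t1_scores, t2_scores)
```

In this invented example the two annotators agree on four of six items (observed agreement of about 0.67) while chance agreement is 0.25, which gives a kappa of about 0.56. Because the unweighted form treats a one-point and a three-point disagreement alike, a weighted variant may be preferable for ordinal scales such as adequacy and fluency.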

4.5.2. Task 2: Document-Level Evaluation

Finally, students proceeded to Task 2, opening Tab 4, “1-DOC: Adequacy, Fluency and Errors”. They were directed to assess the sentences highlighted (in yellow) within each text/document but were required to read the full document to understand the context in which these target sentences appeared. Students evaluated adequacy, fluency, and identified error types using the options provided in the drop-down menus (see Figure 4). (Due to time constraints during the lab session, students were asked to evaluate only the highlighted sentences rather than every sentence in the document, so they would have sufficient time to complete the IAA task; however, those who finished early were encouraged to assess the remaining sentences within the same documents.)

Next, students were instructed to add the MT2 output (the same version used during the sentence-level evaluation) to Tab 5, “2-DOC: Ranking,” and to evaluate the translation at the document level, this time focusing on ranking MT1 and MT2 in context (see Figure 5). As in the sentence-level ranking task, MT2 was used solely for comparative ranking purposes and was not independently evaluated for adequacy, fluency, or error type.

Upon completion of the evaluation, they were instructed to return to Tab 3, the IAA tab, and input their scores for the document-level evaluation as T1, while partnering with a classmate as T2 (see Figure 3).

Subsequently, students analysed their scores and discussed their findings, highlighting the disparities between evaluating at the sentence level and the document level. This discussion occurred both in pairs and in class with the lecturer.
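The comparison between the two set-ups can also be sketched programmatically. The example below is an illustration under stated assumptions, not part of the lab materials: it assumes that each student’s scores are stored as a mapping from a sentence identifier to a 1 to 4 score, and the function names `score_shifts` and `summarise`, as well as the scores themselves, are hypothetical.

```python
def score_shifts(sentence_scores, document_scores):
    """List (segment_id, sentence_score, document_score) for every changed score."""
    shared = sentence_scores.keys() & document_scores.keys()
    return [
        (seg, sentence_scores[seg], document_scores[seg])
        for seg in sorted(shared)
        if sentence_scores[seg] != document_scores[seg]
    ]


def summarise(shifts):
    """Count how many scores changed, and in which direction, once context was available."""
    lowered = sum(1 for _, sent, doc in shifts if doc < sent)
    raised = sum(1 for _, sent, doc in shifts if doc > sent)
    return {"changed": len(shifts), "lowered_in_context": lowered, "raised_in_context": raised}


# Hypothetical adequacy scores for four highlighted sentences.
sentence_level = {1: 4, 2: 3, 3: 4, 4: 2}
document_level = {1: 4, 2: 1, 3: 3, 4: 3}

shifts = score_shifts(sentence_level, document_level)
summary = summarise(shifts)
```

Sentences whose score drops once the full document is visible are natural candidates for discussion as possible misevaluations in the sentence-level set-up, although a changed score alone does not establish which judgement was correct.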

It is worth mentioning that the document-level tabs included an option for students to assign one single score for the entire translated documents (as shown in Figure 4 and Figure 5). However, due to time constraints, this task could not be completed during the lab session by most students. Students were advised to complete this task at home or in the lab if they finished the evaluation early. They were required to assign one single score for adequacy, fluency, and ranking to each full text, and to reflect on the rationale behind their scoring decisions. This was intended to illustrate the complexity and subjectivity involved in assigning a single quality score to an entire document, particularly when translation quality varies across segments. As reported in Castilho [22], assigning one score per full document tends to result in lower inter-annotator agreement for adequacy, ranking, and error identification when compared to sentence-level scoring, and sentence-in-context scoring. This is likely because full documents often contain a mix of “very good,” “reasonably good,” and “poorly translated” sentences, which makes the overall adequacy harder to pin down consistently. Translators may understand the document reasonably well overall, yet weigh different parts of it differently when forming a judgment, leading to divergent interpretations of what score best represents the text as a whole. This exercise was designed to help students recognise the challenges of holistic document-level assessment and to reflect critically on how translation quality is unevenly distributed across a text, affecting their ability to make consistent evaluative decisions.

5. Insights from Student Reflections

As part of the lab exercise, students were asked to submit a short Reflective Report (up to 500 words), articulating their thoughts on the two evaluation approaches: sentence-level and document-level assessment. We note that this reflection submission was not graded for correctness; rather, students who submitted reflections received participation points. To support their reflection, a structured questionnaire was provided, consisting of the following eight prompts:

- What were your expectations in terms of translation quality before doing this exercise?

- Were the results as you had expected?

- How did you approach evaluating the MT output(s) quality with single random sentences in contrast to evaluating sentences in context (with the full texts)?

- As a translator, how did you feel assessing the translation quality with the two different methodologies (single sentence vs. sentence in context) in terms of the following:

  a. effort

  b. how easy it was to understand the meaning of the source

  c. how easy it was to recognise translation errors.

  Give examples.

- Did you have any cases of misevaluation? If so, explain why you think they happened and give examples (source, target, gloss translation).

- Were the IAA results (if any) as you expected? Comment on the differences.

- With which methodology were you most satisfied and confident with the evaluation you provided? Why?

- Will this change how you use MT?

We note that these were intended purely as guidance; students were therefore not required to respond to each question individually, but could use them as a starting point for their own critical reflections. The qualitative comments were analysed thematically, and recurrent themes were identified inductively. The frequency of responses was noted informally but not quantified, and the themes were interpreted in light of the research questions. The submissions provided insight into students’ engagement with context-aware MT evaluation, and accordingly, RQ2 examines changes in students’ perceived evaluation abilities. What follows is a synthesis of the most relevant and revealing insights that emerged across the reflective reports.

5.1. Expectations

The purpose of this opening question was to prompt students to reflect on their prior knowledge and understanding of MT quality. As Seel et al. [26] argue, learning environments should motivate learners by fostering expectations that stimulate reflective thinking about the objects of learning. Framing the activity in this way encouraged students to articulate and critically examine their assumptions about MT performance before engaging with the evaluation task itself.

The responses revealed that the majority of students held specific expectations regarding the performance of the MT systems they were using prior to the evaluation. Interestingly, students with language pairs known to pose fewer challenges for MT, such as Romance languages, tended to have higher expectations of the system’s quality. Conversely, students dealing with language pairs recognised as more challenging for MT, such as low-resource languages, generally held lower expectations. It was noteworthy that several students, having previously experimented with multiple MT systems, opted to explore entirely different systems that they had not previously encountered, such as eTranslation and GPT.

5.2. Approach to Sentence vs. Document-Level Evaluation

This question aimed to make students reflect on their approaches to MT evaluation. Notably, this was a key focal point emphasised by the lecturer during the lab, encouraging students to consider potential adjustments or shifts in their evaluation strategies.

Several students indicated that their approach to sentence-level evaluation primarily centred on “focusing on fluency” (with some mentioning “grammar”) of individual translated sentences, without needing to “think of the context”, as ensuring adequacy would require additional contextual information. Students noted that during sentence-level evaluation, they only checked for “obvious errors” to determine their scores:

“I tackled each sentence individually, concentrating on their linguistic correctness”

“When assessing the translation quality, I found it is harder to evaluate the accuracy of a single random sentence than that of a sentence in full context, thus I was inclined to focus more on the assessment of fluency. For example, the word ‘they’ in the sentence ‘They will test your will’ can be translated as 他们 or 它们 depending on the context, the former one generally refers to human beings, while the latter refers to non-human things, animals or abstract concepts. In the absence of context, it can be assumed that both translations are correct.”

In contrast, students mentioned that in the document-level approach, they were able to assess both fluency and adequacy, as the full context facilitated a more comprehensive evaluation. Some students reported reading the entire source text before commencing their assessment, while others preferred to evaluate sentence by sentence, occasionally revisiting earlier judgments when encountering new contextual information. Rather than viewing this as a source of bias, the design of the task intentionally encouraged students to re-examine and potentially revise their initial sentence-level evaluations in light of new contextual insights. This reflective process was central to the pedagogical aim: to demonstrate how evaluative decisions are context-sensitive and to prompt students to critically assess their own evaluation strategies.

5.3. Perception of Difficulty

This question was intended to encourage students to contemplate the level of effort involved in various forms of human evaluation of MT across different metrics. Additionally, it encouraged students to consider how the two different methodologies (sentence-level vs. document-level) may facilitate or hinder the accurate assessment of errors, and how the ability to comprehend the source text can affect the evaluation.

Effort: In terms of perceived effort, students were divided over whether the sentence-level or the document-level evaluation was more demanding. Interestingly, students who mentioned that they struggled with “focusing on fluency” and/or “grammar” alone when evaluating the random sentences described the sentence-level methodology as requiring more effort, while students who tended to accept the source text’s ambiguity more easily stated that more effort was required in the document-level evaluation, since they had more text to read and had to make sure the translation was consistent.

“I felt less strict on my interpretation and evaluation and more indulgent with imprecision and ambiguities [for the sentence-level evaluation]—and this exactly because I did not have the context. My perception of the task was positive: the work seemed to flow more easily and to demand small cognitive effort. Evaluating sentences in context in its turn, was a much more intellectually demanding task, requiring rereadings and a heavier load of interpretation that made my approach to the sentences more subjective and the categorisation of mistakes more demanding. However, it gave me the lacking context and allowed me to perceive mistakes that were invisible before.”

Understanding the Source and Identifying Errors: All students mentioned that errors were much easier to identify when sentences were evaluated in context. The majority of students mentioned that source sentences containing some kind of ambiguity could only be fully understood in the document-level evaluation. Some of the most cited examples were sentences with ambiguous pronouns that could be translated in either gender (it, they), Named Entities (Chase Center), and lexical ambiguity examples (legs, juice). Interestingly, although acknowledging that it was easier to identify errors in contextualised sentences, some students mentioned that certain awkward sentences did not need the context to be evaluated:

“some of the mistakes were very obvious and in that sense it did not really matter if it was a single sentence or not, but for more complex or subtle mistakes having context surrounding the sentence that was being analysed did help to point them out.”

5.4. Misevaluation

The goal of this question was to guide students to critically reflect on instances of misevaluation, considering how they may have occurred and what measures could have prevented them. Encouraging students to identify and analyse their own misevaluations was one of the central aims of this exercise, as it develops their capacity to recognise the limitations of sentence-level assessment and the importance of contextual understanding. This focus also corroborates findings reported in Castilho [23], where cases of misevaluation in evaluations lacking discourse-level information were observed among professional evaluators. Given the diverse language pairs among our students (see Section 3), the responses encompassed a wide range of perspectives. A considerable number of students noted that scores assigned to sentences in the sentence-level evaluation tended to be higher, even when the sentences contained ambiguous elements:

“The initial confusion was caused by the lack of context which prevented me from identifying the gender. Nevertheless, there was no ground to mark down the MTs’ outputs as incorrect as there was no background information to prove this.”

Moreover, students reported instances where they adjusted the scores assigned for a translation in the sentence-level evaluation upon evaluating the same sentence with context, realising that the translation was, in fact, incorrect:

“The presence of the context influenced some of my decisions made on a sentence-based level. E.g., it was only after I had read the whole text, did I understand the full meaning of ‘I am going to have my son try to turn the legs the other way and hope it helps’ and, as a result, was able to adjust my final evaluation.”

These students’ reflections indicate that engaging students in identifying and correcting their own misevaluations can foster greater awareness and critical judgement, both of which are essential for developing the evaluative expertise required in professional translation practice.

The following are examples of misevaluation stemming from ambiguity that were pointed out in some students’ reflections:

Ambiguous pronouns (gender/reference):

- It is pretty.

- They will test your will.

- They are AWFUL.

- Bring it!

Lexical ambiguity:

- I am going to have my son try to turn the legs the other way and hope it helps.

- The massive organ fills the space and adds to the romance!

- Warriors hope fans’ return to Chase Center gives team juice

- Bring it!

Additionally, students with Japanese and Chinese language backgrounds observed that some of the error types included in the typology used for the task were not applicable or did not occur in their language pairs. After exploring the full MQM taxonomy [29], they identified instances in the MT outputs that they felt were context-related errors, but which did not clearly align with any of the categories provided. (While students discussed these observations in general terms in their reflective reports, they did not provide specific textual examples; as such, their reflections could not be analysed in detail but still point to important cross-linguistic considerations in MT evaluation.) This response demonstrated their critical engagement beyond the core task, as they pursued independent inquiry into the limitations of the error categories and their applicability across diverse linguistic systems.

5.5. Inter-Annotator Agreement (IAA)

The question about the IAA was designed to prompt students to compare the agreement results between themselves when conducting evaluations at the sentence-level and the document-level.

The majority of the students were able to pair up with a colleague who shared the same language pair (and the same MT systems) to calculate IAA at the sentence-level. However, due to time constraints, only a few were able to compare the IAA at the document-level.

Students who calculated IAA solely at the sentence-level commented on the discrepancies in evaluation between themselves and their colleague, particularly regarding the choice of scores and error identification. Furthermore, they acknowledged the impact of the chosen MT system and the challenges posed by their language pairs, indicating an awareness of these factors.

Those who computed IAA at both the sentence-level and document-level reflected on the differences in IAA scores between the two evaluation tasks. The majority observed that IAA scores at the sentence-level were higher compared to those at the document level.

“These results may be explained by the fact that translators do not stress the aspect of gender as much on a sentence-level, but they become duly aware of that aspect once the evaluation is carried out on a document-level.”
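For readers who wish to reproduce this kind of comparison outside the spreadsheet, the following is a minimal sketch of one common two-annotator agreement coefficient, Cohen's kappa. It is an assumption made for illustration: the formula used in the lab's IAA tab is not restated in this section, and the function name `cohen_kappa` and the example scores are hypothetical. T1 and T2 are the two students' scores for the same segments, in the same order.

```python
from collections import Counter
from typing import Sequence


def cohen_kappa(t1: Sequence[int], t2: Sequence[int]) -> float:
    """Cohen's kappa for two annotators (T1, T2) scoring the same segments."""
    if len(t1) != len(t2) or len(t1) == 0:
        raise ValueError("T1 and T2 must score the same non-empty set of segments")
    n = len(t1)
    # Observed agreement: share of segments that received identical scores.
    p_o = sum(a == b for a, b in zip(t1, t2)) / n
    # Chance agreement, estimated from each annotator's own score distribution.
    c1, c2 = Counter(t1), Counter(t2)
    p_e = sum(c1[k] * c2[k] for k in c1.keys() | c2.keys()) / (n * n)
    if p_e == 1.0:
        # Both annotators used one identical score throughout.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


# Illustrative (invented) adequacy scores for six segments.
sentence_level_kappa = cohen_kappa([4, 4, 3, 2, 4, 1], [4, 3, 3, 2, 4, 2])
```

Running the same function once on the sentence-level scores and once on the document-level scores gives two values that can be compared directly. Kappa treats the scores as unordered categories, so a weighted variant may be preferable when near-misses on an ordinal scale should count as partial agreement.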

5.6. Satisfaction

The question about which methodology left students most satisfied with, and most confident in, the evaluation they provided prompted them to consider the advantages and drawbacks of each approach. This allowed them to reflect both as translators, assessing their confidence in assigning scores, and from the perspective of researchers or project managers designing translation evaluations.

A few students mentioned that they were confident when assessing the translations on the sentence-level due to the reduced effort required to consider the full context:

“I was more confident evaluating translations on sentence-level because it has less emphasis on the gender aspect. The other methodology [document-level] required more attentiveness from the translator to catch translation errors that manifest as minor gender inflection issues, which can be daunting especially for big projects.”

However, the majority of students indicated greater satisfaction with the document-level evaluation methodology and expressed more confidence in their scores using this approach:

“I was confident and satisfied with document level because I knew exactly what was expected of me. I was confident at sentence level until I got to document level and context was brought in.”

“The methodology I am most satisfied with is the sentence-in-context assessment with the adequacy metric. This approach allows for a comprehensive understanding of the source text and a clearer evaluation of the referential relationship. It also reduces inaccurate evaluations.”

“Overall, I preferred the evaluation at the document level, as I was able to understand the context and be more critical of the quality of the MT output, whereas I was not confident when evaluating the output at the sentence-level since I had no context to understand fully the source sentences or to judge if there was a gender error.”

This aligns with one of the core objectives of the exercise, to prompt students to experience firsthand how context influences both the reliability and confidence of translation evaluation. By contrasting the two methodologies, the task was designed not only to highlight the contextual blind spots of MT systems but also to make students aware of how these blind spots affect their own judgments as evaluators. In general, there was a connection between satisfaction and the perceived effort required for each method, with students appreciating the greater reliability of document-level evaluation despite its higher cognitive demands.

5.7. Future Use of MT

Several reflective reports included insightful comments on how this activity might alter their approach to using MT. Some students noted that the exercise increased their awareness of MT’s limitations and the importance of defining quality criteria in evaluations. They expressed a newfound caution and understanding of the need for clear evaluation frameworks:

“I never considered the term “quality” to be so difficult to operationalise. It is now clear that when trying to measure quality one should always define what is perceived as “high” or “low” in their evaluation, as well as state the framework it takes place in.”

Others expressed a more nuanced view of MT, acknowledging its limitations and the necessity of human evaluation for handling nuances in grammar:

“This will change my thought on how to use MT. As translators, we should be more aware how to link different MT systems and evaluation methods to different situations.”

“In sum, this type of evaluation already makes me perceive MT with gentler eyes and understand it has limitations since it cannot always deal with nuances related to grammar errors properly, which is rather up for human evaluation.”

“I am more aware of the errors that are prevalent in machine translation regarding fluency and accuracy problems, and it will be interesting to see the progression of Irish in various translation engines in the future.”

Interestingly, many students highlighted the importance of providing context to MT systems for better outputs. This was an interesting finding, especially for the 2022 cohort, as at that time, apart from big MT systems (like Google and DeepL), most of the open MT systems were not able to recognise any context dependencies. That means if students pasted a single sentence or the full text in the MT systems, there would be no changes in the translation. However, for some MT systems, the change between pasting single sentences and the full documents did happen:

“This will change the way I use machine translation. When I use machine translation, I will put the entire text into the online machine translation system instead of copying and pasting sentence by sentence into the machine translation system.”

“In order to get better outputs from the online translation engine, I suppose it is better to input all the sentences with context rather than single random sentences extracted from a text, since the engine can recognize the context and then generate better translations.”

“I think the way I use MT in the future will change in the way that I will try to give the MT more context instead of just one sentence, since I saw that it can change the translation.”

“This highlights the importance of providing a MT engine with context to ensure a higher possibility of receiving accurate output.”

This finding prompted further experimentation and led to the publication of a paper with the students on how open MT systems handle context [30].

6. Insights from the Exercise

This exercise provided valuable insights into the nuances of teaching evaluation of MT output at different levels of granularity. The aim of this lab was to show students the variance in translation quality resulting from evaluations conducted at the sentence and document levels. Students were able to learn how to rigorously assess translations from EN into their respective languages, using state-of-the-art human evaluation metrics: adequacy, fluency, error annotation, and ranking. This pedagogical approach builds on prior work [22], reflects practices introduced in similar classroom settings [15], and aligns with broader findings in MT pedagogy that highlight the importance of context-aware evaluation and the empowerment of translators as critical users [6,10,11,12,13].

The study does not attempt to measure learning gains quantitatively. Rather, it focuses on demonstrating that such activities can be implemented effectively within the constraints of a translation lab, and that they promote reflective engagement with evaluation practices. As shown in prior research on translator training and MT evaluation, structured classroom exercises that combine hands-on experience with guided reflection can empower students and build critical awareness of translation technology [3,4,7,9].

In response to RQ1 (how can context-aware translation evaluation activities be effectively implemented within the constraints of an MA translation technology lab setting), the findings show that such an exercise can be successfully integrated into a lab setting using a carefully scaffolded structure: beginning with sentence-level evaluation, progressing to document-level assessment, and combining hands-on evaluation tasks with guided reflection. The selection of the DELA corpus proved pedagogically effective, as the context-related issues annotated in the English source texts (such as reference, grammatical gender, and lexical ambiguity) were relevant to many of the language pairs used by the students. While these issues did not manifest in the same way across all languages, they were sufficiently cross-cutting to stimulate meaningful reflection on context-sensitive translation challenges. The methodological decision to highlight specific sentences for sentence-level evaluation helped structure the task effectively: it enabled students to begin with a focused sentence-level assessment and later revisit those same examples within the full document context, thereby supporting a direct and pedagogically valuable comparison. These observations echo findings that segment-level human evaluation may obscure context-dependent errors, underscoring the value of document-level assessment for both pedagogical and research purposes [8,19,20].

The accessibility and utility of the spreadsheet-based evaluation tool extended beyond the lab itself, with many students incorporating it into subsequent assignments and dissertations, suggesting sustained engagement. This indicates that the exercise not only provided immediate pedagogical value but also effectively supported students’ critical engagement with MT evaluation, aligning with broader objectives in translator training to develop evaluative skills and reflective practice [6,10].

Regarding RQ2 (the impact of context-aware MT evaluation on students’ perceived ability to assess translation quality compared to sentence-level evaluation), the results show that the contrast between the two methodologies led students to recognise how sentence-level evaluations can obscure context-dependent issues such as reference, gender, or coherence. Students’ reflections not only shed light on the practical challenges and considerations faced during the evaluation process but also highlight the importance of context in determining translation quality. Most students expressed increased confidence and satisfaction when evaluating in context, noting that document-level assessment allowed for more accurate judgments and deeper insights into translation quality. This result corroborates the results from professional translators reported in [23]. The students’ shift in awareness highlights the pedagogical value of exposing students to the contextual blind spots of both MT systems and traditional evaluation practices. We note that, while a few students found it less complex to assess translations at the sentence level, others recognised the added value of considering the broader context provided by document-level evaluation.

By encouraging students to critically reflect on their evaluation methodologies and preferences, this exercise served a dual purpose: first, to illustrate how sentence-level evaluations may obscure important context-sensitive errors, and second, to highlight how MT systems often struggle to manage discourse-level phenomena such as reference, coherence, and ambiguity. Through this guided comparison, students became more attuned to the limitations of both evaluation practices and MT outputs, an awareness that is crucial for translators working in increasingly MT-integrated workflows. In doing so, the exercise supported several of the module’s learning outcomes, including understanding the principles underlying contemporary translation technologies, grasping the principles of contemporary MT, critically assessing translation technologies and their outputs, and making informed decisions regarding their use while considering associated risks. In this way, the lab not only fosters evaluation literacy but also contributes to broader translation pedagogy by foregrounding the role of context in both human and machine translation assessment, while engaging students with professional, socio-technical, and ethical considerations related to translation technology.

However, certain limitations of the exercise were noted. Some students appeared to misconstrue the lab’s primary objective, focusing on comparing the quality of the two MT systems used (MT1 and MT2), rather than comparing the two evaluation methodologies (sentence-level vs. document-level). In hindsight, this misunderstanding is understandable: while MT2 was included solely as a reference point for ranking, it was not intended to be evaluated independently for adequacy, fluency, or errors—a distinction that may not have been sufficiently emphasised. This highlights the need to communicate the pedagogical aims more explicitly in future iterations of the lab, particularly regarding the role of secondary MT outputs in the evaluation process.

Moreover, emphasising how different MT systems handle context is essential to helping students understand the source of certain translation errors. Some systems might struggle with context dependencies, particularly in areas such as reference, gender, or lexical ambiguity. Others may generate more accurate output when provided with full-document input, reducing the number of misevaluations students encounter due to missing contextual cues. In the current lab setup, the same MT outputs (typically generated from sentence-level input) were used in both evaluation tasks. However, this may have introduced bias, as document-level assessments were based on sentence-level MT, which does not reflect how the system might perform if fed the entire document as input. To address this, future iterations of the lab could generate two distinct MT outputs: one using sentence-level input (for the first task), and another using the entire document as input (for the document-level task). This would allow students to evaluate how MT quality changes based on input granularity and gain deeper insights into the relationship between system design and translation performance.
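The two-output design proposed above can be sketched as follows. This is a hedged illustration only, not the lab's actual setup: `Translator` stands for a hypothetical wrapper around whichever MT system is used, and the sketch assumes that the system returns one output line per input line when given a whole document, which real systems do not guarantee.

```python
from typing import Callable, List

# Hypothetical: a function that sends text to an MT system and returns its output.
Translator = Callable[[str], str]


def sentence_level_output(sentences: List[str], translate: Translator) -> List[str]:
    """Send each sentence separately, so the system sees no surrounding context."""
    return [translate(sentence) for sentence in sentences]


def document_level_output(sentences: List[str], translate: Translator) -> List[str]:
    """Send the whole document in one request, one sentence per line."""
    translated = translate("\n".join(sentences)).split("\n")
    if len(translated) != len(sentences):
        # Assumption violated: the output cannot be aligned back to the source.
        raise ValueError("MT output does not align line-by-line with the source")
    return translated


def differing_segments(first: List[str], second: List[str]) -> List[int]:
    """Indices of segments whose translation changed with input granularity."""
    return [i for i, (a, b) in enumerate(zip(first, second)) if a != b]
```

Segments returned by `differing_segments` are natural candidates for classroom discussion, since they show where the system itself, rather than the evaluator, made use of context.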

Ultimately, this study demonstrates that carefully structured, context-aware MT evaluation exercises allow students to develop the critical skills necessary to navigate complex translation technologies. By exposing students to both the strengths and limitations of MT systems and the impact of context on evaluation outcomes, the lab addressed the persistent gaps in awareness and preparedness identified in prior research [7], helping translators in training to engage confidently and critically with MT tools, make informed decisions regarding the evaluation of these systems, and adapt to the rapidly evolving landscape of translation technology. More broadly, this exercise tackles a recurring challenge in translator education: as Venkatesan [7] notes, trainees often demonstrate limited awareness and preparedness in leveraging existing translation technologies, with attitudes remaining relatively stable over time. This is partly due to the absence of systematic training and a discomfort with trade-offs between quality and efficiency, even when MT output is efficient and acceptable. By providing structured, hands-on experience with context-aware MT evaluation, the lab helps bridge that gap, equipping students with both the practical skills and the reflective understanding necessary for contemporary translation practice.

Funding

This research was funded by the School of Applied Languages and Intercultural Studies at DCU, and partially with the financial support of Science Foundation Ireland at ADAPT, the SFI Research Centre for AI-Driven Digital Content Technology at DCU [13/RC/2106 P2].

Institutional Review Board Statement

Ethical review and approval were waived for this study. The research was based on the author’s own teaching practices and involved the evaluation of standard educational activities with the aim of improving teaching and learning within an existing module. According to Dublin City University’s policy, ethical approval is not required for projects that constitute assessment or audit of standard practice—such as the evaluation of teaching methods—where the focus is on ongoing pedagogical practice and enhancement. https://www.dcu.ie/research/no-submission, accessed on 20 July 2025.

Informed Consent Statement

Informed consent for participation is not required as per Dublin City University’s policy https://www.dcu.ie/research/no-submission, accessed on 20 July 2025.

Data Availability Statement

The original data presented in the study are openly available on GitHub at https://github.com/SheilaCastilho/DELA-Project, accessed on 20 July 2025.

Conflicts of Interest

The author declares no conflicts of interest.

References

- Kenny, D. Technology and Translator Training. In The Routledge Handbook of Translation and Technology; O’Hagan, M., Ed.; Routledge: London, UK; New York, NY, USA, 2019; pp. 498–515. [Google Scholar]

- Doherty, S.; Kenny, D.; Way, A. Taking Statistical Machine Translation to the Student Translator. In Proceedings of the 10th Conference of the Association for Machine Translation in the Americas: Commercial MT User Program, San Diego, CA, USA, 28 October–1 November 2012. [Google Scholar]

- Doherty, S.; Kenny, D. The design and evaluation of a Statistical Machine Translation syllabus for translation students. Interpret. Transl. Train. 2014, 8, 295–315. [Google Scholar] [CrossRef]

- Mellinger, C.D. Translators and Machine Translation: Knowledge and Skills Gaps in Translator Pedagogy. Interpret. Transl. Train. 2017, 11, 280–293. [Google Scholar] [CrossRef]

- Tavares, C.; Tallone, L.; Oliveira, L.; Ribeiro, S. The Challenges of Teaching and Assessing Technical Translation in an Era of Neural Machine Translation. Educ. Sci. 2023, 13, 541. [Google Scholar] [CrossRef]

- Winters, M.; Deane-Cox, S.; Böser, U. Translation, Interpreting and Technological Change: Innovations in Research, Practice and Training; Bloomsbury Advances in Translation, Bloomsbury Publishing: London, UK, 2024. [Google Scholar]

- Venkatesan, H. Technology preparedness and translator training: Implications for curricula. Babel 2023, 69, 666–703. [Google Scholar] [CrossRef]

- Castilho, S.; Knowles, R. Editors’ foreword. Nat. Lang. Process. 2025, 31, 983–985. [Google Scholar] [CrossRef]

- Gaspari, F.; Almaghout, H.; Doherty, S. A Survey of Machine Translation Competences: Insights for Translation Technology Educators and Practitioners. Perspect. Stud. Transl. Theory Pract. 2015, 23, 333–358. [Google Scholar] [CrossRef]

- González Pastor, D.M. Introducing Machine Translation in the Translation Classroom: A Survey on Students’ Attitudes and Perceptions. Transl. Interpret. Stud. 2021, 16, 47–65. [Google Scholar] [CrossRef]

- Hatim, B. Translating Text in Context. In The Routledge Companion to Translation Studies; Munday, J., Ed.; Routledge: London, UK, 2009; pp. 50–67. [Google Scholar]

- Melby, A.K. Future of Machine Translation: Musings on Weaver’s Memo. In The Routledge Handbook of Translation and Technology; O’Hagan, M., Ed.; Routledge: London, UK, 2019; pp. 419–436. [Google Scholar]

- Killman, J. Machine Translation and Legal Terminology: Data-Driven Approaches to Contextual Accuracy. In Handbook of Terminology: Volume 3. Legal Terminology; Temmerman, R., Van Campenhoudt, M., Eds.; John Benjamins Publishing Company: Amsterdam, The Netherlands, 2023; pp. 485–510. [Google Scholar]

- Esqueda, M.D. Machine translation: Teaching and learning issues. Trab. Linguística Apl. 2021, 60, 282–299. [Google Scholar] [CrossRef]

- Moorkens, J. What to expect from Neural Machine Translation: A practical in-class translation evaluation exercise. Interpret. Transl. Train. 2018, 12, 375–387. [Google Scholar] [CrossRef]

- Guerberof-Arenas, A.; Moorkens, J. Machine Translation and Post-editing Training as Part of a Master’s Programme. J. Spec. Transl. 2019, 31, 217–238.

- Guerberof-Arenas, A.; Valdez, S.; Dorst, A.G. Does Training in Post-editing Affect Creativity? J. Spec. Transl. 2024, 41, 74–97.

- Girletti, S.; Lefer, M.A. Introducing MTPE pricing in translator training: A concrete proposal for MT instructors. Interpret. Transl. Train. 2024, 18, 1–18.

- Läubli, S.; Castilho, S.; Neubig, G.; Sennrich, R.; Shen, Q.; Toral, A. A Set of Recommendations for Assessing Human–Machine Parity in Language Translation. J. Artif. Intell. Res. 2020, 67, 653–672.

- Kocmi, T.; Avramidis, E.; Bawden, R.; Bojar, O.; Dvorkovich, A.; Federmann, C.; Fishel, M.; Freitag, M.; Gowda, T.; Grundkiewicz, R.; et al. Findings of the 2023 Conference on Machine Translation (WMT23): LLMs Are Here but Not Quite There Yet. In Proceedings of the Eighth Conference on Machine Translation, Singapore, 6–7 December 2023; pp. 1–42.

- Castilho, S.; Popović, M.; Way, A. On Context Span Needed for MT Evaluation. In Proceedings of the Twelfth International Conference on Language Resources and Evaluation (LREC’20), Marseille, France, 13–15 May 2020; pp. 3735–3742.

- Castilho, S. On the same page? Comparing inter-annotator agreement in sentence and document level human machine translation evaluation. In Proceedings of the Fifth Conference on Machine Translation, Online, 19–20 November 2020.

- Castilho, S. Towards Document-Level Human MT Evaluation: On the Issues of Annotator Agreement, Effort and Misevaluation. In Proceedings of the Workshop on Human Evaluation of NLP Systems. Association for Computational Linguistics, Online, 19–23 April 2021; pp. 34–45.

- Freitag, M.; Foster, G.; Grangier, D.; Ratnakar, V.; Tan, Q.; Macherey, W. Experts, Errors, and Context: A Large-Scale Study of Human Evaluation for Machine Translation. Trans. Assoc. Comput. Linguist. 2021, 9, 1460–1474.

- Castilho, S.; Knowles, R. A survey of context in neural machine translation and its evaluation. Nat. Lang. Process. 2024, 31, 986–1016.

- Seel, N.M.; Lehmann, T.; Blumschein, P.; Podolskiy, O.A. Instructional Design for Learning: Theoretical Foundations; Springer: Berlin/Heidelberg, Germany, 2017.

- Castilho, S.; Cavalheiro Camargo, J.L.; Menezes, M.; Way, A. DELA Corpus: A Document-Level Corpus Annotated with Context-Related Issues. In Proceedings of the Sixth Conference on Machine Translation. Association for Computational Linguistics, Online, 10–11 November 2021; pp. 571–582.

- Cohen, J. A coefficient of agreement for nominal scales. Educ. Psychol. Meas. 1960, 20, 37–46.

- Lommel, A.; Uszkoreit, H.; Burchardt, A. Multidimensional quality metrics (MQM): A framework for declaring and describing translation quality metrics. Tradumàtica 2014, 12, 455–463.

- Castilho, S.; Mallon, C.Q.; Meister, R.; Yue, S. Do online Machine Translation Systems Care for Context? What About a GPT Model? In Proceedings of the 24th Annual Conference of the European Association for Machine Translation, Tampere, Finland, 12–15 June 2023; pp. 393–417.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).