GSTGPT: A GPT-Based Framework for Multi-Source Data Anomaly Detection

Abstract

1. Introduction

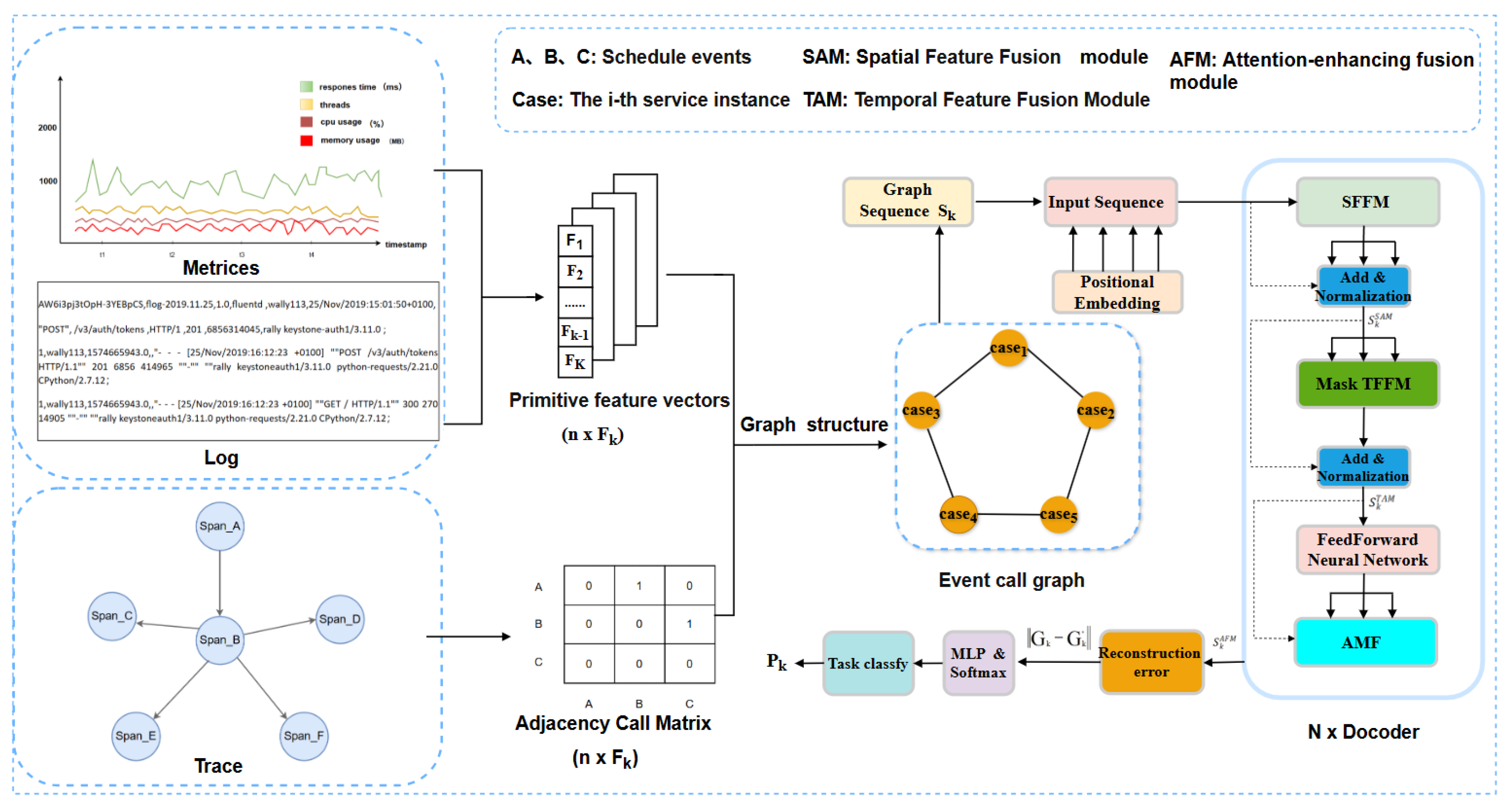

- We introduce GSTGPT, which represents heterogeneous sequences such as logs, traces, and performance metrics as directed graphs, capturing more structural information than previous methods.

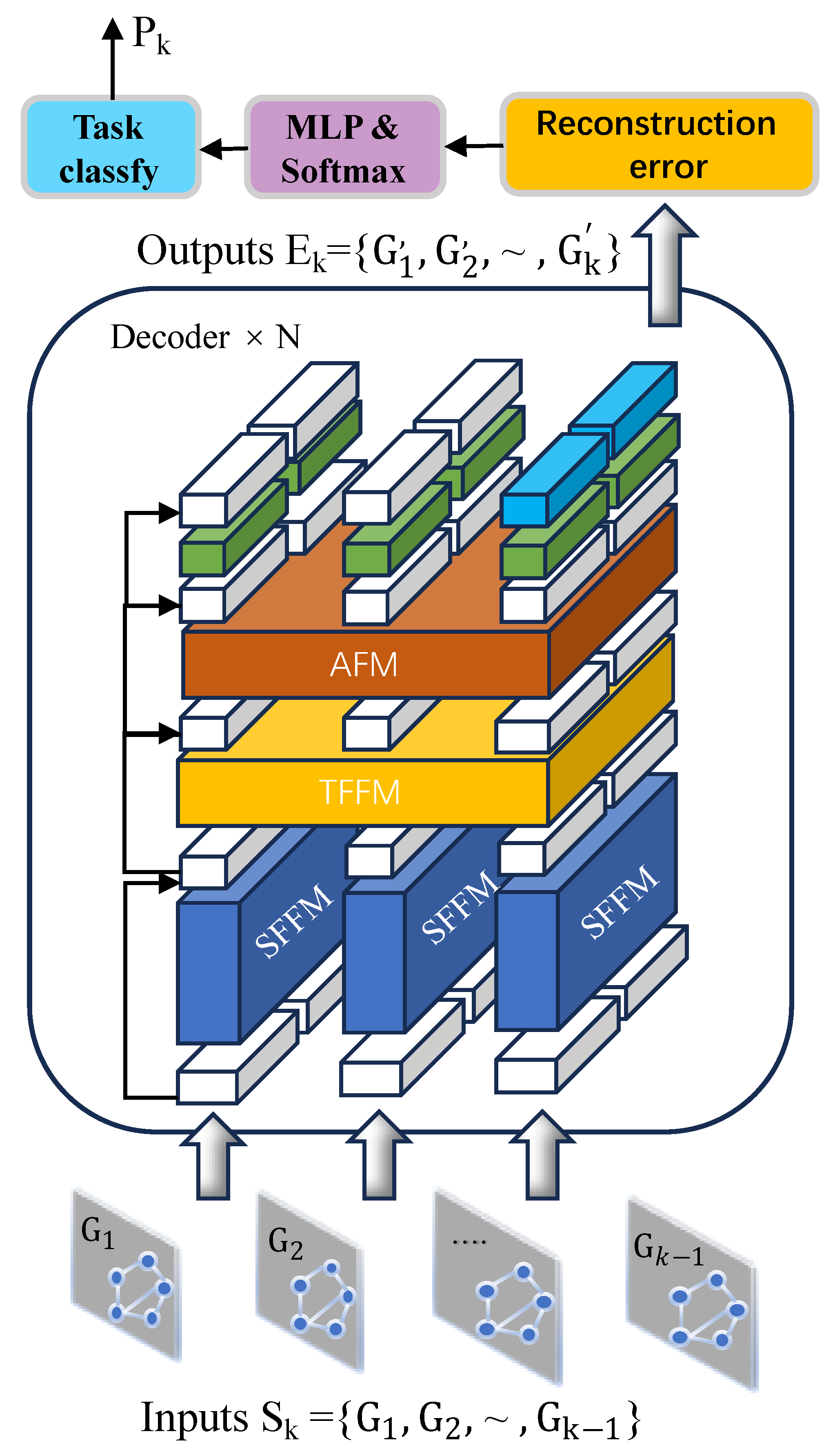

- We propose a neural network based on a generative pre-trained language model with spatial and temporal feature fusion, which captures both the interrelations among multimodal data features and the temporal dependencies among data points within a sliding window.

- We design an attention-based enhanced feature fusion module that strengthens the model’s ability to capture key information, allowing it to automatically identify and emphasize the features most critical to detection performance.

- We compare our method with five advanced anomaly detection methods on two real-world datasets under identical conditions. Ablation experiments show that the complete GSTGPT method yields a 9.55% performance improvement. On the two real-world datasets, GSTGPT achieves F1 scores of 0.957 and 0.967, outperforming all baseline models.

2. Related Work

2.1. Traditional-Based Classification Methods

2.2. Deep Learning-Based Methods

2.3. LLM-Based Methods

3. Preliminaries

3.1. Pre-Processing

3.1.1. Log Pre-Processing

3.1.2. Metrics Pre-Processing

3.1.3. Trace Pre-Processing

3.2. Multimodal Data Integration via Graph Structure
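
As a rough illustration of how logs, traces, and metrics can be merged into one directed graph, the sketch below assumes that nodes are services, that directed edges come from parent-to-child span relations in traces, and that each node's feature vector concatenates its metric values for the current window with counts of parsed log templates. The input formats and the function `build_service_graph` are hypothetical; this is a minimal sketch under those assumptions, not the construction procedure used by GSTGPT.

```python
from collections import defaultdict


def build_service_graph(spans, metrics, logs, num_metrics, num_templates):
    """Build a directed service-call graph with one feature vector per service.

    Hypothetical input formats (assumptions for illustration):
      spans:   list of (span_id, parent_span_id or None, service) tuples
      metrics: service -> list of num_metrics values for the current window
      logs:    service -> list of log template IDs (0..num_templates-1) in the window
    """
    service_of = {span_id: service for span_id, _, service in spans}

    # Directed edge caller -> callee, weighted by the number of calls in the window.
    edges = defaultdict(int)
    for _, parent_id, service in spans:
        caller = service_of.get(parent_id)
        if caller is not None and caller != service:  # skip root spans and intra-service spans
            edges[(caller, service)] += 1

    nodes = sorted(set(service_of.values()) | set(metrics) | set(logs))
    features = {}
    for service in nodes:
        template_counts = [0] * num_templates
        for template_id in logs.get(service, []):
            template_counts[template_id] += 1
        # Services with no metrics in this window get zeros (assumption).
        features[service] = list(metrics.get(service, [0.0] * num_metrics)) + template_counts
    return nodes, dict(edges), features


spans = [("s1", None, "frontend"), ("s2", "s1", "cart"), ("s3", "s1", "payment"), ("s4", "s2", "cart")]
metrics = {"frontend": [0.2, 0.5], "cart": [0.7, 0.1], "payment": [0.3, 0.3]}
logs = {"cart": [0, 0, 2], "payment": [1]}
nodes, edges, features = build_service_graph(spans, metrics, logs, num_metrics=2, num_templates=3)
print(nodes, edges, features, sep="\n")
```

In this toy input, span `s4` is a child of another `cart` span, so it adds no edge; the graph ends up with the two calls `frontend -> cart` and `frontend -> payment`.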

4. Approach

4.1. GPT with Spatial Feature Fusion Module
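
The comparison tables list GSTGPT's underlying models as "GPT & GAT", which suggests that graph attention is used to aggregate spatial features across neighboring nodes. The sketch below is a single-head graph attention layer in the standard GAT form, where the score e_ij = LeakyReLU(a^T [W h_i || W h_j]) is normalized with a softmax over each node's neighborhood. The projection `W`, attention vector `a`, and `neighbors` mapping are illustrative assumptions; no layer sizes, head counts, or coupling to the GPT backbone from the paper are assumed.

```python
import math


def leaky_relu(x, slope=0.2):
    return x if x > 0 else slope * x


def project(W, h):
    """Matrix-vector product W @ h using plain lists."""
    return [sum(w * x for w, x in zip(row, h)) for row in W]


def gat_layer(features, neighbors, W, a):
    """Single-head graph attention layer (output nonlinearity omitted).

    features:  node -> input vector h_i
    neighbors: node -> nodes whose messages node i aggregates (a self-loop is added)
    W:         d_out x d_in projection matrix
    a:         attention vector of length 2 * d_out
    """
    z = {node: project(W, h) for node, h in features.items()}
    out = {}
    for i in features:
        nbrs = [i] + [j for j in neighbors.get(i, []) if j != i]
        # e_ij = LeakyReLU(a^T [W h_i || W h_j]); list "+" concatenates the two projections.
        scores = [leaky_relu(sum(w * x for w, x in zip(a, z[i] + z[j]))) for j in nbrs]
        # Softmax over the neighborhood, shifted by the max for numerical stability.
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        alphas = [e / total for e in exps]
        # Attention-weighted sum of the projected neighbor features.
        out[i] = [sum(alpha * z[j][k] for alpha, j in zip(alphas, nbrs)) for k in range(len(W))]
    return out


feats = {"x": [1.0, 0.0], "y": [0.0, 1.0]}
identity = [[1.0, 0.0], [0.0, 1.0]]
print(gat_layer(feats, {"x": ["y"]}, identity, [0.0, 0.0, 0.0, 1.0]))
```

With an all-zero attention vector every neighbor receives equal weight, so the layer reduces to mean aggregation; a non-zero `a` lets the layer favor some neighbors over others.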

4.2. GPT with Temporal Feature Fusion Module
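
GPT-style models process a sequence with causal (masked) self-attention, so each position attends only to itself and earlier positions. The sketch below cuts per-timestep feature vectors into sliding windows and applies that masking within a window. It uses a single head and identity query/key/value projections for brevity, and the helpers `sliding_windows` and `causal_self_attention` are hypothetical illustrations, not components taken from GSTGPT.

```python
import math


def sliding_windows(series, window, stride=1):
    """Cut a list of per-timestep vectors into overlapping windows."""
    return [series[s:s + window] for s in range(0, len(series) - window + 1, stride)]


def causal_self_attention(x):
    """Single-head causal self-attention with identity Q/K/V projections.

    x: list of T vectors of length d. Position t attends only to positions 0..t,
    which is the masking used by GPT-style decoders.
    """
    d = len(x[0])
    out = []
    for t in range(len(x)):
        # Scaled dot-product scores against the current and all earlier positions.
        scores = [sum(q * k for q, k in zip(x[t], x[s])) / math.sqrt(d) for s in range(t + 1)]
        m = max(scores)
        weights = [math.exp(v - m) for v in scores]
        total = sum(weights)
        out.append([sum(weights[s] / total * x[s][k] for s in range(t + 1)) for k in range(d)])
    return out


series = [[0.0, 1.0], [1.0, 0.0], [1.0, 1.0], [0.5, 0.5]]
for win in sliding_windows(series, window=3):
    print(causal_self_attention(win))
```

Because of the mask, changing a later timestep never alters the outputs at earlier positions in the same window.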

4.3. Attention-Enhancing Fusion Module
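
One common way to realize attention-based fusion is to score each modality's embedding against a learned query vector and take the softmax-weighted sum, so that more informative modalities receive larger weights. The sketch below assumes one fixed-length embedding per modality (log, metric, trace) and a hypothetical `query` parameter; it illustrates the general idea rather than the exact design of GSTGPT's fusion module.

```python
import math


def attention_fusion(modal_embeddings, query):
    """Softmax-weighted fusion of per-modality embeddings.

    modal_embeddings: modality name -> embedding vector of length d
    query:            hypothetical learned scoring vector of length d
    Returns the fused vector and the attention weight of each modality.
    """
    names = list(modal_embeddings)
    d = len(query)
    # Scaled dot-product relevance score of each modality.
    scores = [sum(q * e for q, e in zip(query, modal_embeddings[n])) / math.sqrt(d) for n in names]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = {n: e / total for n, e in zip(names, exps)}
    # Weighted sum across modalities, dimension by dimension.
    fused = [sum(weights[n] * modal_embeddings[n][k] for n in names) for k in range(d)]
    return fused, weights


embeddings = {"log": [1.0, 0.0], "metric": [0.0, 1.0], "trace": [1.0, 1.0]}
fused, weights = attention_fusion(embeddings, query=[1.0, 0.0])
print(fused, weights)
```

In training, `query` would be learned jointly with the rest of the network; a zero query gives every modality the same weight.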

4.4. Reconstruction Error
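
Reconstruction-based detectors typically score each window by how poorly the model reconstructs it and flag windows whose error exceeds a threshold derived from normal data. The sketch below uses per-window mean squared error and a mean + k·std threshold on training errors; both the error measure and the threshold rule (with `k=3.0`) are assumptions for illustration, not GSTGPT's reported settings.

```python
from statistics import mean, pstdev


def reconstruction_errors(windows, reconstructions):
    """Mean squared error between each (flattened) input window and its reconstruction."""
    return [
        sum((x - y) ** 2 for x, y in zip(w, r)) / len(w)
        for w, r in zip(windows, reconstructions)
    ]


def flag_anomalies(train_errors, test_errors, k=3.0):
    """Flag test windows whose error exceeds mean + k * std of errors on normal training data."""
    threshold = mean(train_errors) + k * pstdev(train_errors)
    return [e > threshold for e in test_errors], threshold


train = reconstruction_errors([[1.0, 2.0], [0.5, 0.5]], [[1.1, 2.0], [0.5, 0.4]])
test = reconstruction_errors([[1.0, 2.0], [3.0, 0.0]], [[1.0, 2.1], [0.0, 0.0]])
flags, threshold = flag_anomalies(train, test)
print(flags, threshold)
```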

Algorithm 1. The GSTGPT training algorithm.

5. Experiments

5.1. Datasets

5.2. Baselines

5.3. Experiment Settings

5.4. Experimental Results

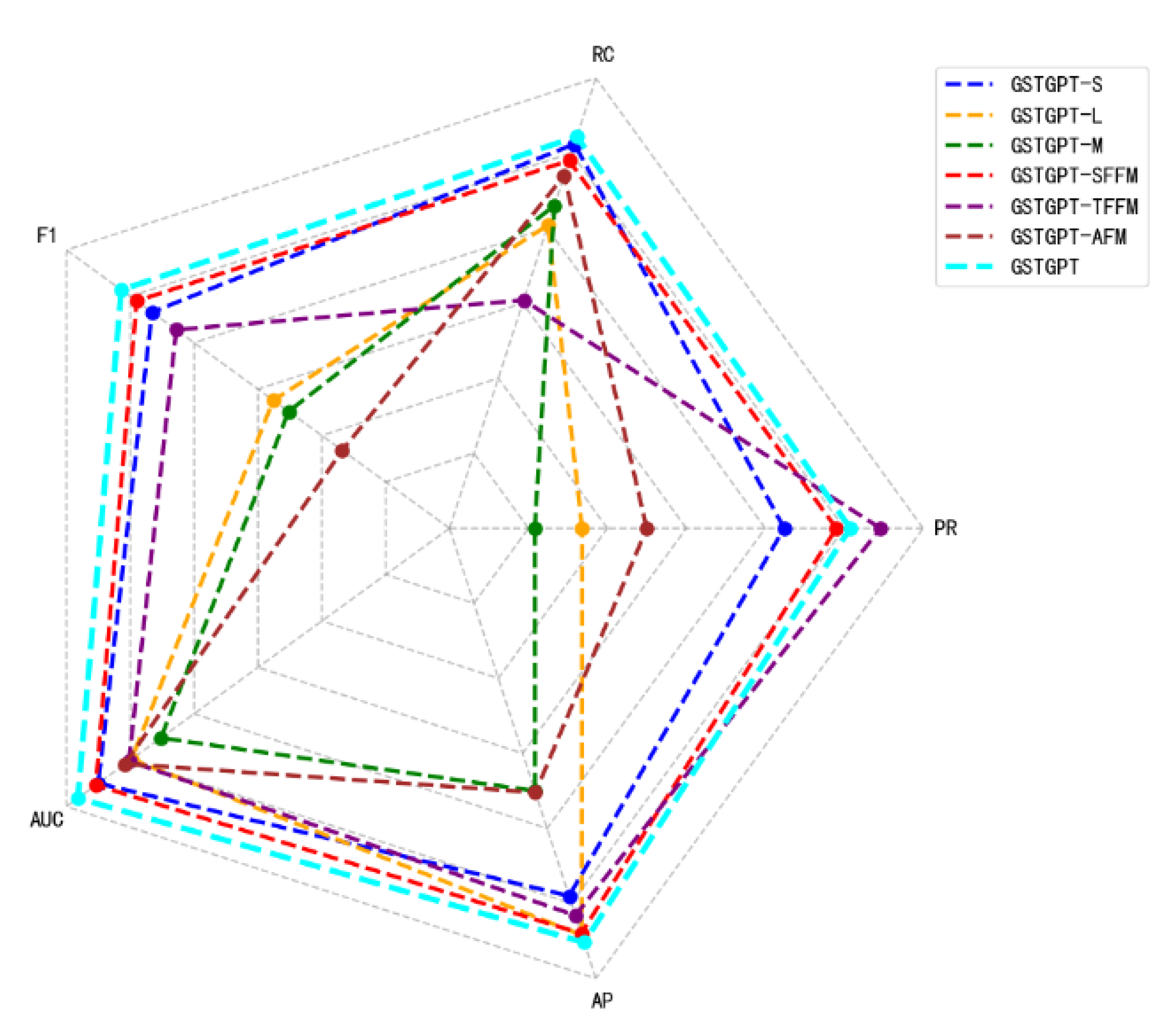

5.5. Ablation Studies

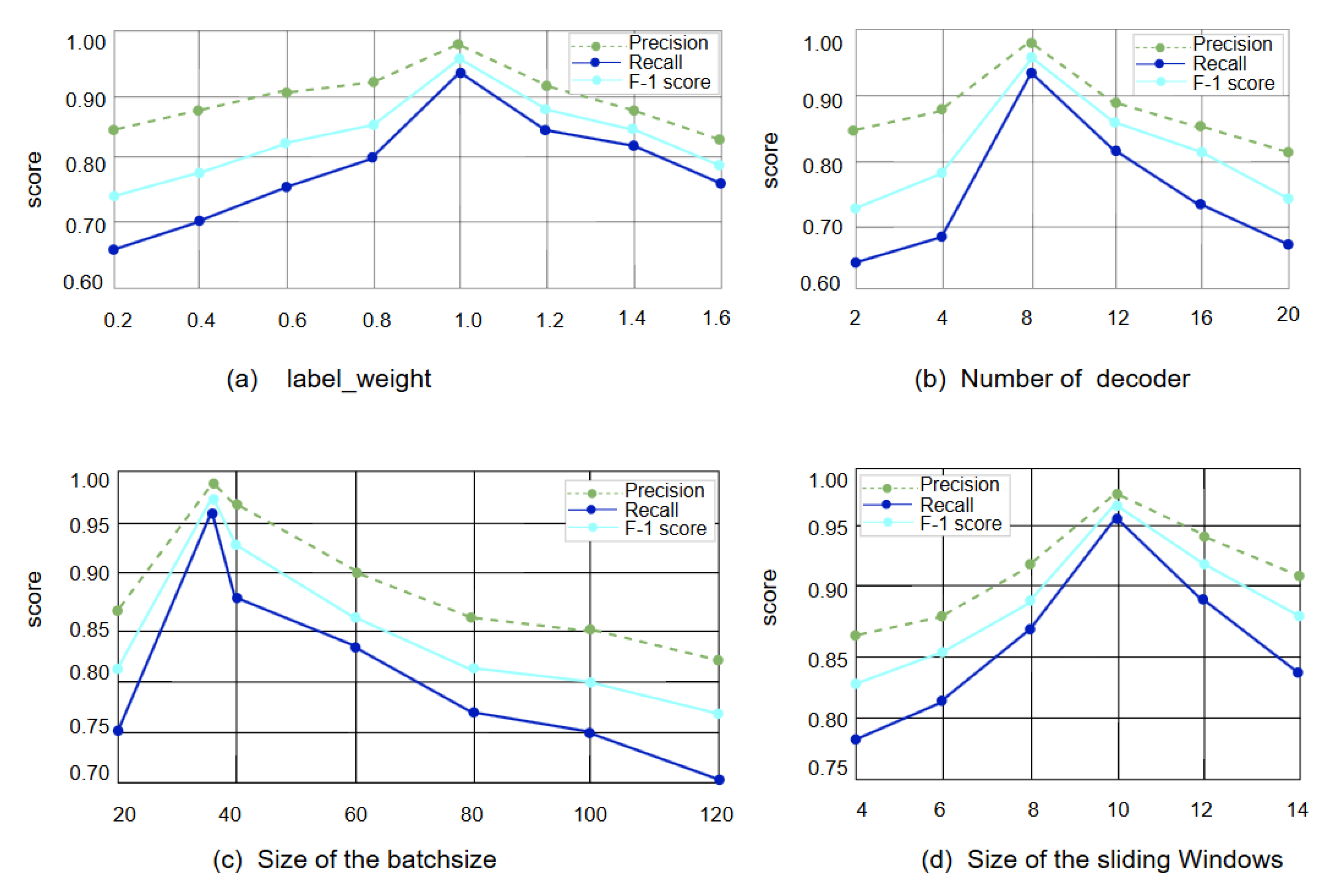

Parameter Analysis

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Hariri, S.; Kind, M.C.; Brunner, R.J. Extended isolation forest. IEEE Trans. Knowl. Data Eng. 2019, 33, 1479–1489.

- Hejazi, M.; Singh, Y.P. One-class support vector machines approach to anomaly detection. Appl. Artif. Intell. 2013, 27, 351–366.

- Nanduri, A.; Sherry, L. Anomaly detection in aircraft data using Recurrent Neural Networks (RNN). In Proceedings of the 2016 Integrated Communications Navigation and Surveillance (ICNS), Herndon, VA, USA, 19–21 April 2016; p. 5C2–1.

- Laghrissi, F.; Douzi, S.; Douzi, K.; Hssina, B. Intrusion detection systems using long short-term memory (LSTM). J. Big Data 2021, 8, 65.

- Xu, W.; Jang-Jaccard, J.; Singh, A.; Wei, Y.; Sabrina, F. Improving performance of autoencoder-based network anomaly detection on NSL-KDD dataset. IEEE Access 2021, 9, 140136–140146.

- Sabuhi, M.; Zhou, M.; Bezemer, C.P.; Musilek, P. Applications of generative adversarial networks in anomaly detection: A systematic literature review. IEEE Access 2021, 9, 161003–161029.

- Guo, H.; Yuan, S.; Wu, X. LogBERT: Log anomaly detection via BERT. In Proceedings of the 2021 International Joint Conference on Neural Networks (IJCNN), Shenzhen, China, 18–22 July 2021; pp. 1–8.

- Zhang, X.; Xu, Y.; Lin, Q.; Qiao, B.; Zhang, H.; Dang, Y.; Xie, C.; Yang, X.; Cheng, Q.; Li, Z.; et al. Robust log-based anomaly detection on unstable log data. In Proceedings of the 2019 27th ACM Joint Meeting on European Software Engineering Conference and Symposium on the Foundations of Software Engineering, Tallinn, Estonia, 26–30 August 2019; pp. 807–817.

- Lu, S.; Wei, X.; Li, Y.; Wang, L. Detecting anomaly in big data system logs using convolutional neural network. In Proceedings of the 2018 IEEE 16th Intl Conf on Dependable, Autonomic and Secure Computing, 16th Intl Conf on Pervasive Intelligence and Computing, 4th Intl Conf on Big Data Intelligence and Computing and Cyber Science and Technology Congress (DASC/PiCom/DataCom/CyberSciTech), Athens, Greece, 12–15 August 2018; pp. 151–158.

- Yang, L.; Chen, J.; Wang, Z.; Wang, W.; Jiang, J.; Dong, X.; Zhang, W. Semi-supervised log-based anomaly detection via probabilistic label estimation. In Proceedings of the 2021 IEEE/ACM 43rd International Conference on Software Engineering (ICSE), Madrid, Spain, 22–30 May 2021; pp. 1448–1460.

- Tuli, S.; Casale, G.; Jennings, N.R. TranAD: Deep transformer networks for anomaly detection in multivariate time series data. arXiv 2022, arXiv:2201.07284.

- Zhao, N.; Chen, J.; Yu, Z.; Wang, H.; Li, J.; Qiu, B.; Xu, H.; Zhang, W.; Sui, K.; Pei, D. Identifying bad software changes via multimodal anomaly detection for online service systems. In Proceedings of the 29th ACM Joint Meeting on European Software Engineering Conference and Symposium on the Foundations of Software Engineering, Athens, Greece, 23–28 August 2021; pp. 527–539.

- Lee, C.; Yang, T.; Chen, Z.; Su, Y.; Lyu, M.R. Eadro: An end-to-end troubleshooting framework for microservices on multi-source data. In Proceedings of the 2023 IEEE/ACM 45th International Conference on Software Engineering (ICSE), Melbourne, Australia, 14–20 May 2023; pp. 1750–1762.

- Zhao, C.; Ma, M.; Zhong, Z.; Zhang, S.; Tan, Z.; Xiong, X.; Yu, L.; Feng, J.; Sun, Y.; Zhang, Y.; et al. Robust multimodal failure detection for microservice systems. In Proceedings of the 29th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Long Beach, CA, USA, 6–10 August 2023; pp. 5639–5649.

- Zhang, S.; Jin, P.; Lin, Z.; Sun, Y.; Zhang, B.; Xia, S.; Li, Z.; Zhong, Z.; Ma, M.; Jin, W.; et al. Robust failure diagnosis of microservice system through multimodal data. IEEE Trans. Serv. Comput. 2023, 16, 3851–3864.

- Nedelkoski, S.; Bogatinovski, J.; Mandapati, A.K.; Becker, S.; Cardoso, J.; Kao, O. Multi-source distributed system data for AI-powered analytics. In Proceedings of the European Conference on Service-Oriented and Cloud Computing, Heraklion, Greece, 28–30 September 2020; Springer International Publishing: Cham, Switzerland, 2020; pp. 161–176.

- Liu, Y.; Tao, S.; Meng, W.; Yao, F.; Zhao, X.; Yang, H. LogPrompt: Prompt engineering towards zero-shot and interpretable log analysis. In Proceedings of the 2024 IEEE/ACM 46th International Conference on Software Engineering: Companion Proceedings, Lisbon, Portugal, 14–20 April 2024; pp. 364–365.

- Han, X.; Yuan, S.; Trabelsi, M. LogGPT: Log anomaly detection via GPT. In Proceedings of the 2023 IEEE International Conference on Big Data (BigData), Sorrento, Italy, 15–18 December 2023; pp. 1117–1122.

- Xu, W.; Huang, L.; Fox, A.; Patterson, D.; Jordan, M.I. Detecting large-scale system problems by mining console logs. In Proceedings of the ACM SIGOPS 22nd Symposium on Operating Systems Principles, Big Sky, MT, USA, 11–14 October 2009; pp. 117–132.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 5998–6008.

- Kurita, T. Principal component analysis (PCA). In Computer Vision: A Reference Guide; Springer International Publishing: Cham, Switzerland, 2021; pp. 1013–1016.

- Almodovar, C.; Sabrina, F.; Karimi, S.; Azad, S. LogFiT: Log anomaly detection using fine-tuned language models. IEEE Trans. Netw. Serv. Manag. 2024, 21, 1715–1723.

- Du, M.; Li, F.; Zheng, G.; Srikumar, V. DeepLog: Anomaly detection and diagnosis from system logs through deep learning. In Proceedings of the 2017 ACM SIGSAC Conference on Computer and Communications Security, Dallas, TX, USA, 30 October–3 November 2017; pp. 1285–1298.

- Meng, W.; Liu, Y.; Zhu, Y.; Zhang, S.; Pei, D.; Liu, Y.; Chen, Y.; Zhang, R.; Tao, S.; Sun, P.; et al. LogAnomaly: Unsupervised detection of sequential and quantitative anomalies in unstructured logs. Int. Jt. Conf. Artif. Intell. 2019, 19, 4739–4745.

- Liu, P.; Xu, H.; Ouyang, Q.; Jiao, R.; Chen, Z.; Zhang, S.; Yang, J.; Mo, L.; Zeng, J.; Xue, W.; et al. Unsupervised detection of microservice trace anomalies through service-level deep Bayesian networks. In Proceedings of the 2020 IEEE 31st International Symposium on Software Reliability Engineering (ISSRE), Coimbra, Portugal, 12–15 October 2020; pp. 48–58.

- Yang, Z.; Harris, I.G. LogLLaMA: Transformer-based log anomaly detection with LLaMA. arXiv 2025, arXiv:2503.14849.

- Gruver, N.; Finzi, M.; Qiu, S.; Wilson, A.G. Large language models are zero-shot time series forecasters. Adv. Neural Inf. Process. Syst. 2023, 36, 19622–19635.

- Zhou, T.; Niu, P.; Sun, L.; Jin, R. One fits all: Power general time series analysis by pretrained LM. Adv. Neural Inf. Process. Syst. 2023, 36, 43322–43355.

- Shi, X.; Xue, S.; Wang, K.; Zhou, F.; Zhang, J.; Zhou, J.; Tan, C.; Mei, H. Language models can improve event prediction by few-shot abductive reasoning. Adv. Neural Inf. Process. Syst. 2023, 36, 29532–29557.

- Liu, P.; Yuan, W.; Fu, J.; Jiang, Z.; Hayashi, H.; Neubig, G. Pre-train, prompt, and predict: A systematic survey of prompting methods in natural language processing. ACM Comput. Surv. 2023, 55, 1–35.

- Guan, W.; Cao, J.; Qian, S.; Gao, J.; Ouyang, C. LogLLM: Log-based anomaly detection using large language models. arXiv 2024, arXiv:2411.08561.

- Li, Z.; Zhao, N.; Zhang, S.; Sun, Y.; Chen, P.; Wen, X.; Ma, M.; Pei, D. Constructing large-scale real-world benchmark datasets for AIOps. arXiv 2022, arXiv:2208.03938.

- He, P.; Zhu, J.; Zheng, Z.; Lyu, M.R. Drain: An online log parsing approach with fixed depth tree. In Proceedings of the 2017 IEEE International Conference on Web Services (ICWS), Honolulu, HI, USA, 25–30 June 2017; pp. 33–40.

- Wang, Z.; Wu, Z.; Li, X.; Shao, H.; Han, T.; Xie, M. Attention-aware temporal–spatial graph neural network with multi-sensor information fusion for fault diagnosis. Knowl. Based Syst. 2023, 278, 110891.

- Huang, J.; Yang, Y.; Yu, H.; Li, J.; Zheng, X. Twin graph-based anomaly detection via attentive multi-modal learning for microservice system. In Proceedings of the 2023 38th IEEE/ACM International Conference on Automated Software Engineering (ASE), Luxembourg, 11–15 September 2023; pp. 66–78.

- Liu, Y.; Li, Z.; Pan, S.; Gong, C.; Zhou, C.; Karypis, G. Anomaly detection on attributed networks via contrastive self-supervised learning. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 2378–2392.

- Zhao, H.; Wang, Y.; Duan, J.; Huang, C.; Cao, D.; Tong, Y.; Xu, B.; Bai, J.; Tong, J.; Zhang, Q. Multivariate time-series anomaly detection via graph attention network. In Proceedings of the 2020 IEEE International Conference on Data Mining (ICDM), Sorrento, Italy, 17–20 November 2020; pp. 841–850.

- Xie, X.; Cui, Y.; Tan, T.; Zheng, X.; Yu, Z. FusionMamba: Dynamic feature enhancement for multimodal image fusion with Mamba. Vis. Intell. 2024, 2, 37.

- Chen, Q.; Huang, G.; Wang, Y. The weighted cross-modal attention mechanism with sentiment prediction auxiliary task for multimodal sentiment analysis. IEEE/ACM Trans. Audio Speech Lang. Process. 2022, 30, 2689–2695.

| Method | Data Used | Recall (RC) | Precision (PR) | F1 | Base Model |
|---|---|---|---|---|---|
| TraceAnomaly | Trace | 0.989 | 0.909 | 0.947 | GCN |
| TranAD | Metric | 0.754 | 0.814 | 0.782 | Transformer & GAN |
| SCWarn | Metric & Log | 0.440 | 0.354 | 0.392 | DyGAT |
| PLELog | Log | 0.664 | 0.724 | 0.692 | BERT |
| LogGPT | Log | 0.910 | 0.978 | 0.943 | GPT |
| GSTGPT | Metric & Log & Trace | 0.961 | 0.953 | 0.957 | GPT & GAT |

| Method | Data Used | Recall (RC) | Precision (PR) | F1 | Base Model |
|---|---|---|---|---|---|
| TraceAnomaly | Trace | 0.246 | 0.850 | 0.373 | GCN |
| TranAD | Metric | 0.812 | 0.654 | 0.724 | Transformer & GAN |
| SCWarn | Metric & Log | 0.645 | 0.865 | 0.738 | DyGAT |
| PLELog | Log | 0.218 | 0.752 | 0.338 | BERT |
| LogGPT | Log | 0.913 | 0.875 | 0.893 | GPT |
| GSTGPT | Metric & Log & Trace | 0.964 | 0.972 | 0.967 | GPT & GAT |
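
F1 is the harmonic mean of precision and recall, F1 = 2 · PR · RC / (PR + RC). Recomputing it from the rounded PR and RC values in the GSTGPT rows of the two comparison tables gives 0.957 and 0.968, which agrees with the reported 0.957 and 0.967 to within 0.001, the difference expected from rounding the underlying values to three decimals. The function name `f1_score` is chosen here for illustration.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# GSTGPT rows from the two comparison tables: (PR, RC, reported F1).
rows = [(0.953, 0.961, 0.957), (0.972, 0.964, 0.967)]
for pr, rc, reported in rows:
    print(f"PR={pr} RC={rc} recomputed F1={f1_score(pr, rc):.3f} reported F1={reported}")
```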

| Method | Precision (PR) | Recall (RC) | AP | AUC | F1 |
|---|---|---|---|---|---|
| GSTGPT-S | 0.912 | 0.956 | 0.945 | 0.975 | 0.933 |
| GSTGPT-L | 0.784 | 0.902 | 0.971 | 0.949 | 0.838 |
| GSTGPT-M | 0.754 | 0.915 | 0.875 | 0.926 | 0.826 |
| GSTGPT-SFFM | 0.945 | 0.945 | 0.970 | 0.977 | 0.945 |
| GSTGPT-TFFM | 0.973 | 0.852 | 0.958 | 0.951 | 0.914 |
| GSTGPT-AFM | 0.825 | 0.935 | 0.876 | 0.954 | 0.784 |
| GSTGPT | 0.953 | 0.961 | 0.976 | 0.991 | 0.957 |
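
The ablation results can be summarized as the absolute F1 gap between the complete GSTGPT model and each variant. The short computation below performs only that arithmetic on the reported F1 column; it does not assume what component each suffix (S, L, M, SFFM, TFFM, AFM) removes. On these values, GSTGPT-AFM shows the largest gap (0.173) and GSTGPT-SFFM the smallest (0.012).

```python
full_f1 = 0.957  # complete GSTGPT, F1 column of the ablation table

variant_f1 = {
    "GSTGPT-S": 0.933,
    "GSTGPT-L": 0.838,
    "GSTGPT-M": 0.826,
    "GSTGPT-SFFM": 0.945,
    "GSTGPT-TFFM": 0.914,
    "GSTGPT-AFM": 0.784,
}

# Absolute F1 gap between the full model and each variant, rounded to table precision.
gaps = {name: round(full_f1 - f1, 3) for name, f1 in variant_f1.items()}
for name, gap in sorted(gaps.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: F1 gap {gap:.3f}")
```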

| Dataset | Sampling Interval | # Logs | # Traces | # Instances × # Metrics |
|---|---|---|---|---|
| MSDS | 3 s | 174 | 16 | 7 × 4 |
| AIOps | 45 s | 6012 | 8265 | 40 × 25 |

| Dataset | Total Duration | Total Logs | Total Traces | Total Metrics |
|---|---|---|---|---|
| MSDS | 9965 s | 11,595 | 12,183 | 11,158 |
| AIOps | 20,115 s | 75,528 | 86,924 | 72,247 |

| Dataset | Preprocessing | Graph Construction | Detection | Total |
|---|---|---|---|---|
| MSDS | 0.027 s | 0.0012 s | 0.032 s | 0.0263 s |
| AIOps | 5.2554 s | 0.191 s | 0.248 s | 7.4324 s |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liu, J.; Fang, M.; Zhang, S.; Shan, F.; Li, J. GSTGPT: A GPT-Based Framework for Multi-Source Data Anomaly Detection. Information 2025, 16, 959. https://doi.org/10.3390/info16110959

Liu J, Fang M, Zhang S, Shan F, Li J. GSTGPT: A GPT-Based Framework for Multi-Source Data Anomaly Detection. Information. 2025; 16(11):959. https://doi.org/10.3390/info16110959

Chicago/Turabian Style: Liu, Jizhao, Mingyan Fang, Shuqin Zhang, Fangfang Shan, and Jun Li. 2025. "GSTGPT: A GPT-Based Framework for Multi-Source Data Anomaly Detection" Information 16, no. 11: 959. https://doi.org/10.3390/info16110959

APA Style: Liu, J., Fang, M., Zhang, S., Shan, F., & Li, J. (2025). GSTGPT: A GPT-Based Framework for Multi-Source Data Anomaly Detection. Information, 16(11), 959. https://doi.org/10.3390/info16110959