UniAD: A Real-World Multi-Category Industrial Anomaly Detection Dataset with a Unified CLIP-Based Framework

Abstract

1. Introduction

- Lack of Cross-Category Generalization:

- Incompatibility with Mixed-Category Training:

- We propose UniCLIP-AD, a unified anomaly detection framework built upon CLIP and prompt learning. It enables effective anomaly detection across multiple industrial categories using a single model, substantially improving generalization and deployment efficiency.

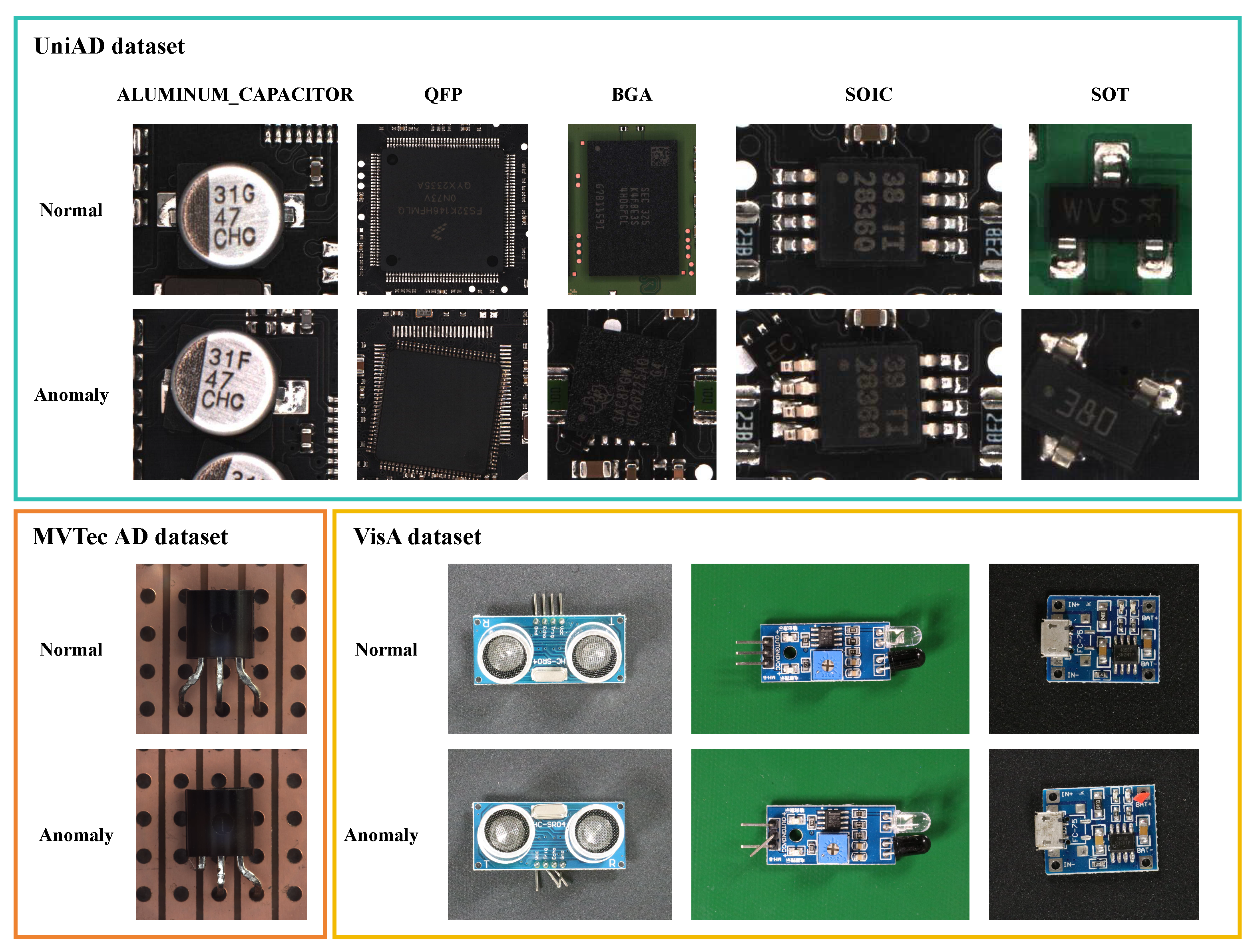

- We construct and release UniAD, a novel industrial anomaly detection dataset collected from actual production lines. It includes 25,000+ high-resolution images spanning 7 categories of electronic components, each with pixel-level and image-level annotations. UniAD offers authentic, diverse, and subtle defect instances, making it a strong benchmark for evaluating universal detection models.

- Extensive experiments demonstrate the superiority of our method. On UniAD, UniCLIP-AD achieves an average AU-ROC of 92.1% and an average F1-score of 89.8% in cross-category detection. It significantly outperforms leading baselines, particularly in F1-score, the metric most critical for practical deployment, which highlights its strong potential as a general-purpose solution for industrial quality inspection.

2. Related Work

2.1. Anomaly Detection Methods

2.2. Existing Datasets

3. Methods

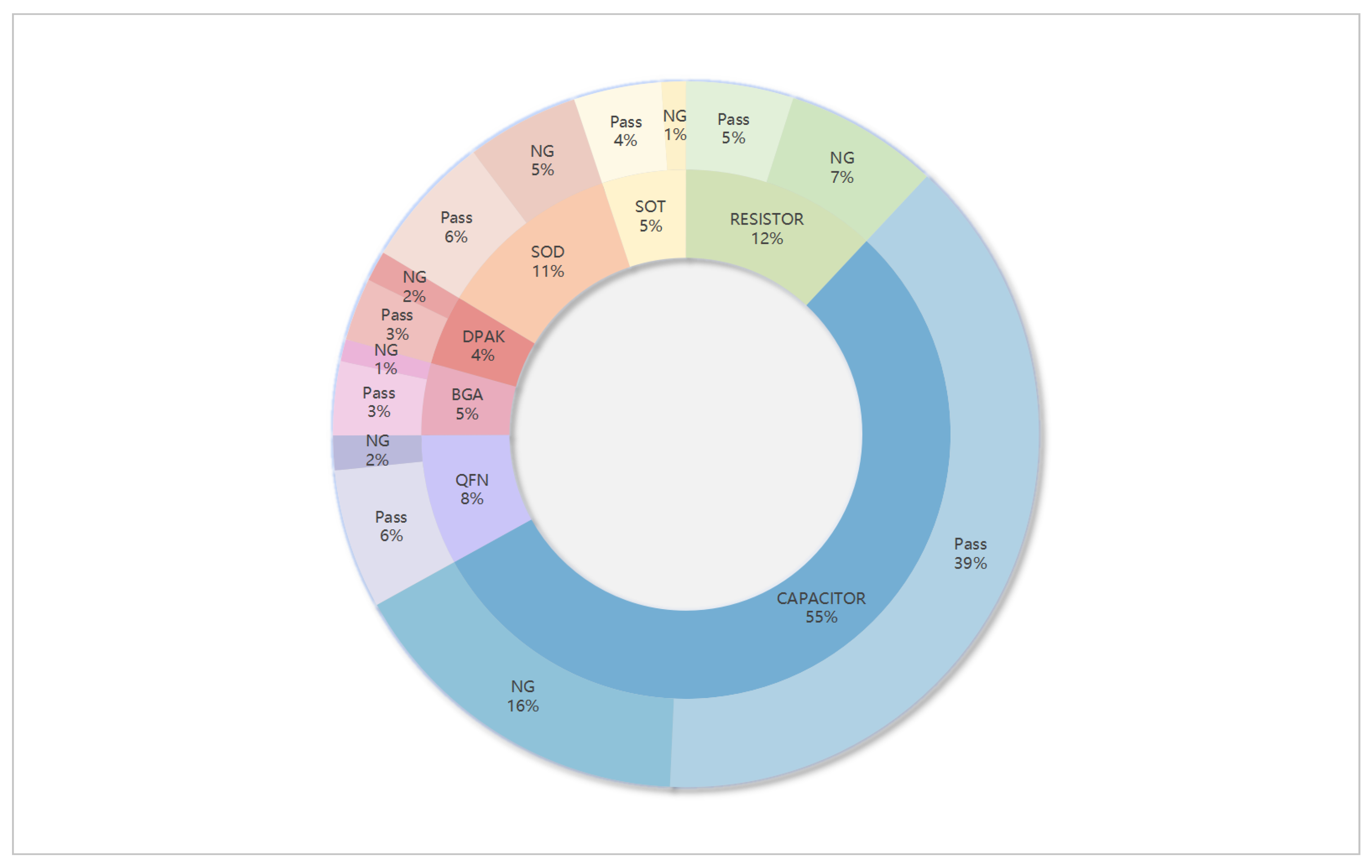

3.1. Dataset Analysis

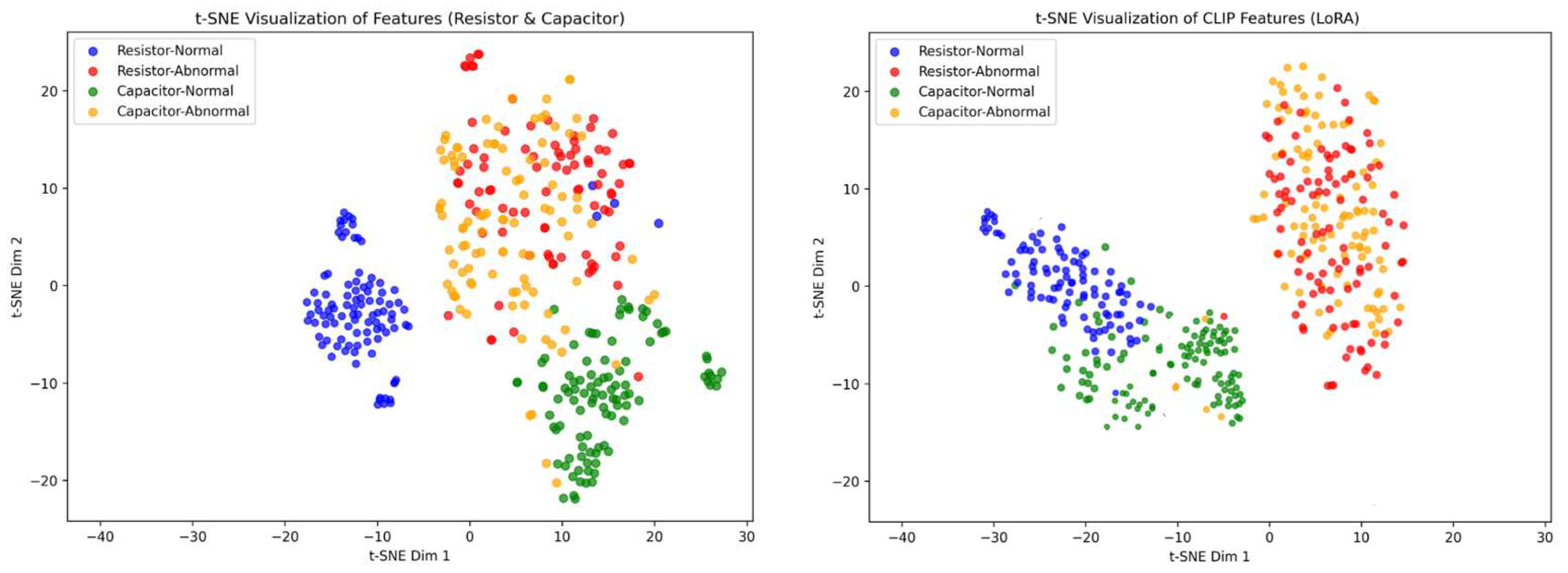

3.2. CLIP Backbone and LoRA-Based Fine-Tuning

3.3. Prompt Construction and Similarity-Based Classification

- Normal prompt: “A cropped industrial photo without defect for anomaly detection”

- Anomalous prompt: “A cropped industrial photo with damage for anomaly detection”
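As a minimal sketch of how the two prompts above could drive classification, assume each image and each prompt has already been encoded by CLIP into an embedding vector. The image is then assigned to whichever prompt it is more cosine-similar to, and a softmax turns the two similarities into probabilities. The random vectors stand in for real CLIP embeddings, and the temperature of 0.01 (a logit scale of 100) is an assumed value, not one reported in this article.

```python
import numpy as np

# The two prompts from Section 3.3 (in practice they would be encoded by CLIP's text encoder).
NORMAL_PROMPT = "A cropped industrial photo without defect for anomaly detection"
ANOMALOUS_PROMPT = "A cropped industrial photo with damage for anomaly detection"


def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Scale vectors to unit length along the last axis."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def prompt_probabilities(image_emb, normal_emb, anomalous_emb, temperature=0.01):
    """Return an array of shape (batch, 2) holding [p_normal, p_anomalous] per image."""
    img = l2_normalize(np.atleast_2d(np.asarray(image_emb, dtype=float)))
    text = l2_normalize(np.stack([normal_emb, anomalous_emb]).astype(float))
    logits = img @ text.T / temperature           # scaled cosine similarities
    logits -= logits.max(axis=1, keepdims=True)   # subtract row max for numerical stability
    exp = np.exp(logits)
    return exp / exp.sum(axis=1, keepdims=True)


# Illustration with random stand-in embeddings (hypothetical 512-dim CLIP space).
rng = np.random.default_rng(0)
normal_emb, anomalous_emb = rng.normal(size=(2, 512))
image_emb = anomalous_emb + 0.1 * rng.normal(size=512)  # constructed to lie near the anomalous prompt
probs = prompt_probabilities(image_emb, normal_emb, anomalous_emb)
label = ["normal", "anomalous"][int(probs.argmax(axis=1)[0])]
print(label, probs.round(3))
```

Because both prompts share the same template and differ only in "without defect" versus "with damage", the decision hinges on that semantic contrast rather than on any category name.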

3.4. Supervised Training with Cross-Entropy Loss

- It enables end-to-end training with clear labels, avoiding the need for reconstruction-based thresholds or latent feature distance heuristics.

- It generalizes across categories, since the model classifies based on prompt semantics rather than fixed class prototypes.

- It allows deployment in category-agnostic industrial settings, where defects are rare, unstructured, and continuously evolving.
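The supervised objective can be sketched as ordinary two-way cross-entropy over the prompt similarities. Each image gets one logit per prompt, and the ground-truth label (0 for normal, 1 for anomalous) selects which prompt the image should match. This NumPy sketch rests on our own assumptions (unit-normalized embeddings, a temperature of 0.01). In the actual framework, the gradient of this loss would flow into the trainable LoRA parameters rather than act on fixed vectors.

```python
import numpy as np


def prompt_logits(image_embs, text_embs, temperature=0.01):
    """Scaled cosine similarity between each image (rows) and each prompt (columns)."""
    img = image_embs / np.linalg.norm(image_embs, axis=1, keepdims=True)
    txt = text_embs / np.linalg.norm(text_embs, axis=1, keepdims=True)
    return img @ txt.T / temperature


def cross_entropy(logits, labels):
    """Mean cross-entropy; column 0 is the normal prompt, column 1 the anomalous one."""
    labels = np.asarray(labels)
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(labels)), labels].mean()


# Toy 2-D example: the two prompt embeddings point along the two axes.
text_embs = np.array([[1.0, 0.0],    # "normal" prompt embedding (stand-in)
                      [0.0, 1.0]])   # "anomalous" prompt embedding (stand-in)
image_embs = np.array([[0.9, 0.1],   # looks normal
                       [0.2, 0.8]])  # looks anomalous
logits = prompt_logits(image_embs, text_embs)
loss_correct = cross_entropy(logits, [0, 1])  # labels agree with the images
loss_wrong = cross_entropy(logits, [1, 0])    # labels swapped
print(f"{loss_correct:.4f} {loss_wrong:.4f}")
```

No threshold or distance heuristic appears anywhere. The decision is simply the larger of the two logits, which is what makes the objective category-agnostic.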

3.5. Generalization and Robustness

4. Experiments and Results

4.1. Experimental Setup

4.2. Benchmark Methods

- CutPaste [26] is a classic data augmentation-based self-supervised learning method. It generates synthetic anomalies by cutting and pasting image patches onto random locations of normal images. By training a binary classification model on both the original normal samples and these synthetic anomalies, CutPaste effectively enhances the model’s sensitivity to local inconsistencies and structural errors. However, this method suffers from an inherent limitation: a significant “domain gap” exists between its synthetic anomalies and real industrial defects. The generated patches, lacking diversity in morphology and texture, can only crudely simulate defect features and fail to accurately cover the complex and varied patterns of real-world anomalies.

- SimpleNet [27] is an efficient discriminative method that leverages powerful features extracted by deep neural networks pre-trained on large-scale datasets, demonstrating excellent performance in single-class anomaly detection tasks. To further enhance its discriminative power against anomalies, SimpleNet strategically injects Gaussian noise into the pre-trained feature space to generate diverse abnormal features. It also introduces shallow feature adapters to reduce the distributional shift between the pre-trained features and the target domain data. Although these strategies have led to high detection AUROC (Area Under the Receiver Operating Characteristic Curve) on benchmark datasets like MVTec AD, SimpleNet still relies on training independently with sufficient normal samples for each category, and its detection capability is confined to known, trained object classes.

- CFA [4] (Coupled-hypersphere-based Feature Adaptation) is a feature adaptation method based on transfer learning. It fine-tunes pre-trained features by introducing learnable target-oriented patch descriptors and a scalable memory bank. The core idea is to increase the separability between normal and abnormal features by optimizing the features of normal samples to be more compact in a hyperspherical space. CFA has shown excellent detection performance on single-target datasets, achieving high AUROC. However, it is inherently designed for specialized adaptation to a specific category’s dataset. Extending it to a multi-category scenario necessitates repeating the entire adaptation process for each class, thus lacking cross-category generalization capability.

- DSR [28] (Dual Subspace Re-projection network) is a generative model-based anomaly detection method. It proposes a dual-decoder reconstruction architecture and generates abnormal samples directly within the model’s latent space, thereby avoiding reliance on external anomaly datasets. This endogenous anomaly generation mechanism significantly improves detection performance on “near-distribution” anomalies. However, DSR’s architecture is relatively complex, and its performance depends heavily on how well anomalies are simulated in the latent space. It still cannot directly model large distributional differences between categories, nor generalize to completely unknown real-world defects that deviate substantially from the training data distribution.

- AnomalyCLIP [29] is a recent CLIP-based zero-shot anomaly detection framework that introduces an object-agnostic prompt learning strategy to enhance cross-domain generalization. By decoupling anomaly understanding from specific object semantics, it learns domain-invariant textual prompts for “normal” and “abnormal” patterns. During inference, anomaly scores are computed via the cosine similarity between image embeddings and the abnormal prompt embedding. In this study, AnomalyCLIP is included as a recent CLIP variant baseline, reproduced under identical preprocessing and evaluation settings for fair comparison.
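Two of the baselines above rely on synthetic negatives, and the contrast is easier to see in code. The following is a simplified sketch, not either method's reference implementation. CutPaste copies a random rectangle of a normal image to another location, while SimpleNet perturbs feature vectors with Gaussian noise. The patch-area and aspect-ratio ranges and the noise scale `sigma` are illustrative assumptions, and refinements of the original methods are omitted.

```python
import numpy as np


def cutpaste(image, rng, area_ratio=(0.02, 0.15), aspect=(0.3, 3.3)):
    """Copy a random rectangular patch of `image` (H, W, C) and paste it at another location.

    Returns a new array; the input image is not modified.
    """
    h, w = image.shape[:2]
    area = rng.uniform(*area_ratio) * h * w   # patch area as a fraction of the image
    ratio = rng.uniform(*aspect)              # patch height / width
    ph = int(np.clip(round(np.sqrt(area * ratio)), 1, h))
    pw = int(np.clip(round(np.sqrt(area / ratio)), 1, w))
    # Source and destination top-left corners are drawn independently.
    sy, sx = rng.integers(0, h - ph + 1), rng.integers(0, w - pw + 1)
    dy, dx = rng.integers(0, h - ph + 1), rng.integers(0, w - pw + 1)
    out = image.copy()
    out[dy:dy + ph, dx:dx + pw] = image[sy:sy + ph, sx:sx + pw]
    return out


def gaussian_feature_anomalies(features, rng, sigma=0.015):
    """SimpleNet-style negatives: add i.i.d. Gaussian noise to each feature vector."""
    return features + rng.normal(0.0, sigma, size=features.shape)


rng = np.random.default_rng(42)
normal_image = rng.random((64, 64, 3))                             # stand-in for a normal image
synthetic_anomaly = cutpaste(normal_image, rng)                    # image-space negative
normal_feats = rng.normal(size=(10, 256))                          # stand-in for pre-trained features
feature_anomalies = gaussian_feature_anomalies(normal_feats, rng)  # feature-space negatives
```

Both generators produce negatives from normal data alone. This is exactly why their coverage of real defect morphology is limited, as the paragraphs above point out.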

4.3. Evaluation Metrics

- High recall ensures that defective components are not mistakenly passed as normal.

- High precision reduces unnecessary alarms and manual inspections.
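The two requirements above correspond to recall and precision, and F1 is their harmonic mean. AU-ROC, by contrast, is threshold-free: it equals the probability that a randomly chosen anomalous sample receives a higher score than a randomly chosen normal one. A minimal sketch follows, treating anomalous (NG) as the positive class. The decision threshold of 0.5 in the usage lines is a hypothetical choice.

```python
import numpy as np


def precision_recall_f1(y_true, y_pred):
    """Binary metrics with 1 = anomalous (NG) as the positive class."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    tp = np.sum((y_pred == 1) & (y_true == 1))
    fp = np.sum((y_pred == 1) & (y_true == 0))
    fn = np.sum((y_pred == 0) & (y_true == 1))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def auroc(y_true, scores):
    """AU-ROC as the normalized Mann-Whitney U statistic (ties count one half)."""
    y_true, scores = np.asarray(y_true), np.asarray(scores, dtype=float)
    pos, neg = scores[y_true == 1], scores[y_true == 0]
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return (greater + 0.5 * ties) / (len(pos) * len(neg))


y_true = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
y_pred = [int(s >= 0.5) for s in scores]  # hypothetical threshold of 0.5
print(precision_recall_f1(y_true, y_pred), auroc(y_true, scores))
```

Here precision is 1.0 but recall is only 0.5 (one defect slips through), so F1 drops to about 0.67, while AU-ROC is 0.75. This illustrates how a method can post a respectable AU-ROC yet a weak F1 once a concrete operating threshold is fixed.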

4.4. Experimental Results and Analysis

4.4.1. Overall Cross-Category Performance

- Limitation of Baseline Methods—The Dilemma of Distribution Fitting: Traditional methods, whether based on data augmentation (CutPaste), feature discrimination (SimpleNet, CFA), or image reconstruction (DSR), all fundamentally attempt to learn a compact “normal” data distribution that represents all training samples. While this strategy is effective in single-category tasks, the target distribution in a unified detection scenario becomes exceedingly complex, multi-modal, and even internally discontinuous due to the mixture of normal samples from seven different categories. Forcing a single model to fit such a complex distribution inevitably leads to inter-class interference, causing the model to over-generalize in order to accommodate all “normal” variations. This results in a loss of sensitivity to the fine-grained features of individual categories, ultimately manifesting as performance degradation and ambiguous decision-making.

- Advantage of UniCLIP-AD—Adaptation Based on Prior Knowledge: Our method addresses this problem from a fundamentally different perspective. Instead of learning a complex distribution from scratch, we build on the strong foundation of CLIP. The CLIP vision encoder, whose pre-trained weights remain frozen, provides a powerful, semantically coherent feature space learned from massive image–text pairs, giving the model broad prior knowledge of what constitutes a “normal object.” The core task therefore shifts from difficult distribution fitting to efficient domain adaptation. By inserting lightweight LoRA modules and training only their parameters, we capture the domain-specific features of the UniAD dataset and “inject” them into CLIP’s general-purpose representations. This “universal prior + precise adaptation” strategy lets the model recognize both what “resistors” and “BGA chips” have in common as normal industrial components and how they subtly differ. The result is high-precision, high-confidence decisions in the unified detection task, reflected in our method leading on both AU-ROC and F1-Score.
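The LoRA mechanism described above can be sketched as follows. A pre-trained weight matrix W stays frozen, and a trainable low-rank product B·A, scaled by alpha/r, is added to its output. B starts at zero, so the adapted layer initially reproduces the pre-trained one exactly. Rank r = 8 mirrors the best setting in the LoRA rank ablation table. The value alpha = 16 and the 768-wide layer (the hidden width of a ViT-B/16 encoder) are our assumptions, and this article does not specify which CLIP layers receive LoRA.

```python
import numpy as np


class LoRALinear:
    """Frozen linear map W plus a trainable low-rank update (alpha / r) * B @ A."""

    def __init__(self, weight, rank=8, alpha=16.0, rng=None):
        if rng is None:
            rng = np.random.default_rng(0)
        d_out, d_in = weight.shape
        self.weight = weight                                # frozen pre-trained weights (never updated)
        self.A = rng.normal(0.0, 0.01, size=(rank, d_in))   # trainable down-projection
        self.B = np.zeros((d_out, rank))                    # trainable up-projection, zero-initialized
        self.scale = alpha / rank

    def __call__(self, x):
        """x: (batch, d_in) -> (batch, d_out)."""
        return x @ self.weight.T + self.scale * (x @ self.A.T) @ self.B.T

    def trainable_params(self):
        return self.A.size + self.B.size


rng = np.random.default_rng(0)
d = 768  # assumed hidden width (ViT-B/16)
layer = LoRALinear(rng.normal(size=(d, d)), rank=8, rng=rng)
x = rng.normal(size=(4, d))
print(layer.trainable_params(), layer.weight.size)  # trainable vs frozen parameter counts
```

For a 768 × 768 projection at rank 8, this trains 2 · 8 · 768 = 12,288 parameters against 589,824 frozen ones, about 2%. This is the sense in which adaptation touches “only the LoRA parts.”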

4.4.2. Per-Category Performance Breakdown

- Consistency and Overall Advantage of Our Method: Our model performs strongly and consistently across the subcategories. On the AU-ROC metric (Table 9), a key indicator of discriminative ability, it scores above 94% on all seven categories, reaching 99.5% on BGA and 98.0% on CAPACITOR. This indicates that the model has learned a discriminative criterion that generalizes across chip components with diverse morphologies, textures, and functions. On the more stringent and practically relevant F1-Score metric (Table 8), our method ranks first on BGA, CAPACITOR, and RESISTOR, scoring at least 98.9% on each, although it trails CutPaste on DPAK and QFN. Taken together, these results suggest that a single unified model can generalize across the chip inspection domain without per-category retraining.

- Performance Instability and Scenario-Dependency of Benchmark Methods: In stark contrast to the stable performance of our method, all baseline methods exhibited significant performance imbalances. Their effectiveness was highly correlated with the component category being processed, exposing the inherent limitations of their technical paradigms.

4.4.3. Result Analysis

4.4.4. Comparative Analysis with AnomalyCLIP and Cross-Domain Validation

4.4.5. Additional Comparison with Other Supervised Methods

4.5. Ablation Results

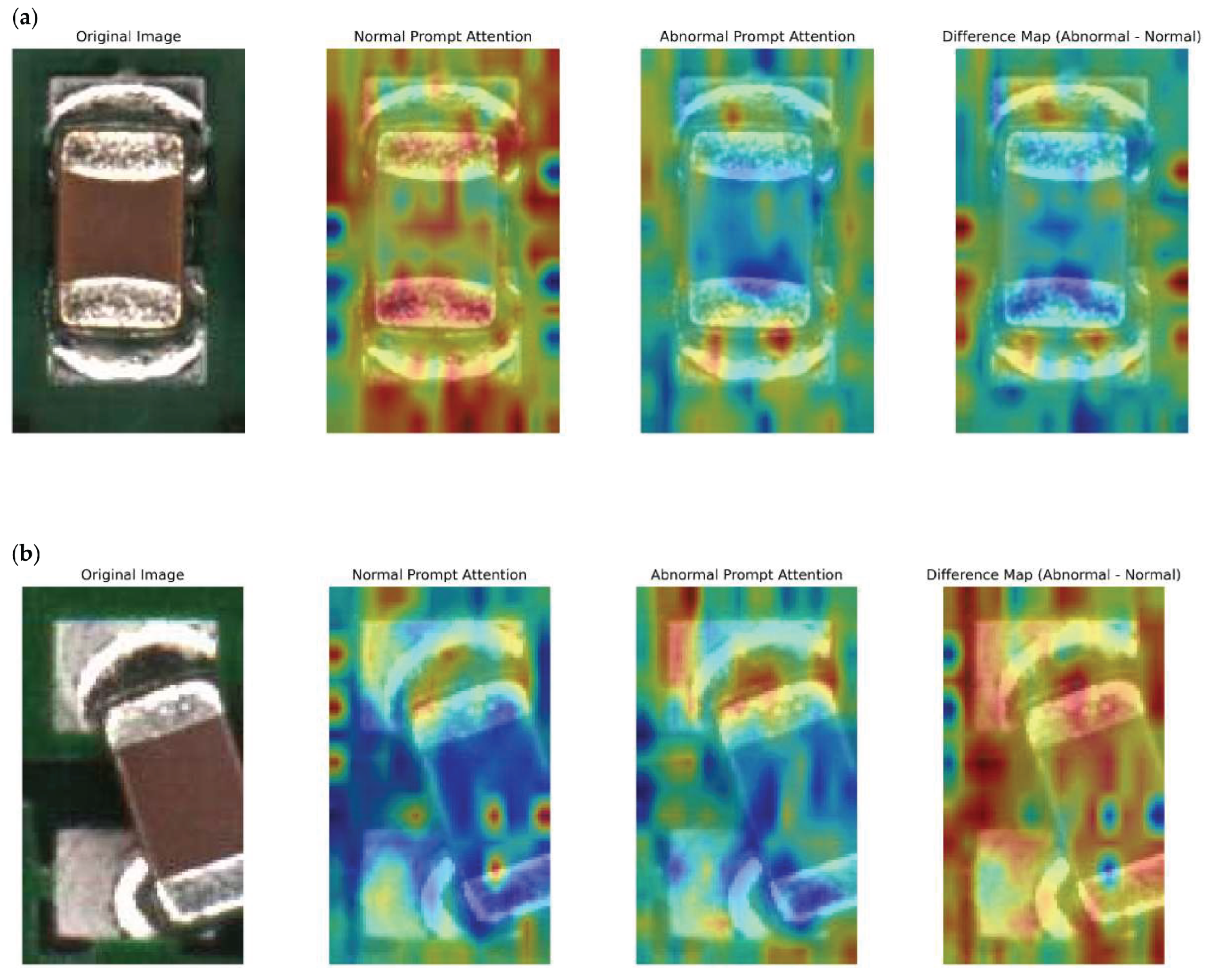

4.6. Visualization of LoRA Adaptation

- Normal Prompt Attention: Shows even attention across normal capacitor features.

- Abnormal Prompt Attention: Reveals focused attention on anomalous regions in abnormal images.

- Difference Map: Highlights significant attention shifts towards anomalies when comparing abnormal and normal prompts.
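One plausible way to obtain the three maps above, assuming per-patch CLIP embeddings are available, is a three-step procedure. First, score every patch by cosine similarity to each prompt’s text embedding. Next, min-max normalize each map. Finally, subtract the normal-prompt map from the abnormal-prompt map. This article does not state how its attention maps are computed, so the patch-text similarity is an assumption, and `prompt_attention` and `attention_difference` are hypothetical helpers.

```python
import numpy as np


def prompt_attention(patch_embs, text_emb, grid_hw):
    """Cosine similarity of each patch embedding to a prompt embedding, reshaped to the patch grid."""
    p = patch_embs / np.linalg.norm(patch_embs, axis=1, keepdims=True)
    t = text_emb / np.linalg.norm(text_emb)
    return (p @ t).reshape(grid_hw)


def normalize_map(m):
    """Min-max scale a map to [0, 1]; a constant map becomes all zeros."""
    m = np.asarray(m, dtype=float)
    span = m.max() - m.min()
    return (m - m.min()) / span if span > 0 else np.zeros_like(m)


def attention_difference(abnormal_attn, normal_attn, keep_positive=True):
    """Abnormal-prompt map minus normal-prompt map; optionally keep only positive shifts."""
    diff = normalize_map(abnormal_attn) - normalize_map(normal_attn)
    return np.clip(diff, 0.0, None) if keep_positive else diff


# Toy 2x2 patch grid in a 2-D embedding space: patch 3 (bottom-right) plays the defect.
normal_text, abnormal_text = np.array([1.0, 0.0]), np.array([0.0, 1.0])
patches = np.tile(normal_text, (4, 1))
patches[3] = abnormal_text
diff_map = attention_difference(prompt_attention(patches, abnormal_text, (2, 2)),
                                prompt_attention(patches, normal_text, (2, 2)))
print(diff_map)
```

Normalizing before subtracting keeps the two maps on a common scale, so the difference reflects where attention shifts rather than overall similarity magnitude.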

4.7. Robustness to Noisy and Imbalanced Data

- (1) Few-shot and Imbalanced Categories: We evaluated the model on categories with limited defect samples (BGA and SOT).

- (2) Noisy Labels: We randomly introduced 10% label corruption into the RESISTOR training set.
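The label-noise setting above can be approximated by a routine that flips a fixed fraction of binary labels chosen uniformly at random. Symmetric flipping is our assumption, since the text states only that 10% of the RESISTOR training labels were corrupted. The class counts come from the RESISTOR row of the train/test split table (890 normal and 1272 anomalous training images), and `corrupt_labels` is a hypothetical helper.

```python
import numpy as np


def corrupt_labels(labels, rate, rng):
    """Flip round(rate * N) randomly chosen binary labels (0 = normal, 1 = anomalous).

    Returns the corrupted copy and the indices that were flipped.
    """
    labels = np.asarray(labels).copy()
    n_flip = int(round(rate * len(labels)))
    idx = rng.choice(len(labels), size=n_flip, replace=False)  # distinct indices
    labels[idx] = 1 - labels[idx]
    return labels, idx


# RESISTOR training split: 890 normal + 1272 anomalous images.
clean = np.array([0] * 890 + [1] * 1272)
noisy, flipped = corrupt_labels(clean, rate=0.10, rng=np.random.default_rng(0))
print(len(flipped), (noisy != clean).sum())  # both 216 (10% of 2162, rounded)
```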

4.8. Generalization Evaluation

4.9. Practical Deployment Considerations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Zavrtanik, V.; Kristan, M.; Skočaj, D. DRAEM: A discriminatively trained reconstruction embedding for surface anomaly detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 11–17 October 2021; pp. 8330–8339.

- Qiang, Y.; Cao, J.; Zhou, S.; Yang, J.; Yu, L.; Liu, B. tGARD: Text-Guided Adversarial Reconstruction for Industrial Anomaly Detection. IEEE Trans. Ind. Inform. 2025.

- Roth, K.; Pemula, L.; Zepeda, J.; Schölkopf, B.; Brox, T.; Gehler, P. Towards total recall in industrial anomaly detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 21–24 June 2022; pp. 14318–14328.

- Lee, S.; Lee, S.; Song, B.C. CFA: Coupled-hypersphere-based feature adaptation for target-oriented anomaly localization. IEEE Access 2022, 10, 78446–78454.

- Bergmann, P.; Fauser, M.; Sattlegger, D.; Steger, C. MVTec AD: A comprehensive real-world dataset for unsupervised anomaly detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 9592–9600.

- Zou, Y.; Jeong, J.; Pemula, L.; Zhang, D.; Dabeer, O. Spot-the-difference self-supervised pre-training for anomaly detection and segmentation. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 392–408.

- Wang, C.; Zhu, W.; Gao, B.B.; Gan, Z.; Zhang, J.; Gu, Z.; Qian, S.; Chen, M.; Ma, L. Real-IAD: A real-world multi-view dataset for benchmarking versatile industrial anomaly detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 22883–22892.

- Zuo, Z.; Wu, Z.; Chen, B.; Zhong, X. A reconstruction-based feature adaptation for anomaly detection with self-supervised multi-scale aggregation. In Proceedings of the 2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 5840–5844.

- Wyatt, J.; Leach, A.; Schmon, S.M.; Willcocks, C.G. AnoDDPM: Anomaly detection with denoising diffusion probabilistic models using simplex noise. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 21–24 June 2022; pp. 650–656.

- Zhang, H.; Wang, Z.; Zeng, D.; Wu, Z.; Jiang, Y.G. DiffusionAD: Norm-guided one-step denoising diffusion for anomaly detection. IEEE Trans. Pattern Anal. Mach. Intell. 2025.

- Hyun, J.; Kim, S.; Jeon, G.; Kim, S.H.; Bae, K.; Kang, B.J. ReconPatch: Contrastive patch representation learning for industrial anomaly detection. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 1–6 January 2024; pp. 2052–2061.

- Yuan, J.; Gao, C.; Jie, P.; Xia, X.; Huang, S.; Liu, W. AFR-CLIP: Enhancing Zero-Shot Industrial Anomaly Detection with Stateless-to-Stateful Anomaly Feature Rectification. 2025. Available online: http://arxiv.org/abs/2503.12910 (accessed on 15 August 2025).

- Ma, W.; Zhang, X.; Yao, Q.; Tang, F.; Wu, C.; Li, Y.; Yan, R.; Jiang, Z.; Zhou, S.K. AA-CLIP: Enhancing zero-shot anomaly detection via anomaly-aware CLIP. In Proceedings of the Computer Vision and Pattern Recognition Conference, Nashville, TN, USA, 11–15 June 2025; pp. 4744–4754.

- Gao, H.; Qiu, B.; Barroso, R.J.D.; Hussain, W.; Xu, Y.; Wang, X. TSMAE: A novel anomaly detection approach for internet of things time series data using memory-augmented autoencoder. IEEE Trans. Netw. Sci. Eng. 2022, 10, 2978–2990.

- Huo, Y.; Cheng, X.; Lin, S.; Zhang, M.; Wang, H. Memory-augmented autoencoder with adaptive reconstruction and sample attribution mining for hyperspectral anomaly detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5518118.

- Li, W.; Shang, Z.; Zhang, J.; Gao, M.; Qian, S. A novel unsupervised anomaly detection method for rotating machinery based on memory augmented temporal convolutional autoencoder. Eng. Appl. Artif. Intell. 2023, 123, 106312.

- Wang, Y.; Peng, J.; Zhang, J.; Yi, R.; Wang, Y.; Wang, C. Multimodal industrial anomaly detection via hybrid fusion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 8032–8041.

- Yu, Z.; Dong, Z.; Yu, C.; Yang, K.; Fan, Z.; Chen, C.P. A review on multi-view learning. Front. Comput. Sci. 2025, 19, 197334.

- Mohammadi, M.; Berahmand, K.; Sadiq, S.; Khosravi, H. Knowledge tracing with a temporal hypergraph memory network. In Proceedings of the International Conference on Artificial Intelligence in Education, Palermo, Italy, 22–26 July 2025; Springer: Berlin/Heidelberg, Germany, 2025; pp. 77–85.

- Yang, E.; Xing, P.; Sun, H.; Guo, W.; Ma, Y.; Li, Z.; Zeng, D. 3CAD: A Large-Scale Real-World 3C Product Dataset for Unsupervised Anomaly Detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 25 February–4 March 2025; Volume 39, pp. 9175–9183.

- Jezek, S.; Jonak, M.; Burget, R.; Dvorak, P.; Skotak, M. Deep learning-based defect detection of metal parts: Evaluating current methods in complex conditions. In Proceedings of the 2021 13th International Congress on Ultra Modern Telecommunications and Control Systems and Workshops (ICUMT), Brno, Czech Republic, 25–27 October 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 66–71.

- Cheng, Y.; Cao, Y.; Chen, R.; Shen, W. RAD: A comprehensive dataset for benchmarking the robustness of image anomaly detection. In Proceedings of the 2024 IEEE 20th International Conference on Automation Science and Engineering (CASE), Bari, Italy, 28 August–1 September 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 2123–2128.

- Cao, Y.; Cheng, Y.; Xu, X.; Zhang, Y.; Sun, Y.; Tan, Y.; Zhang, Y.; Huang, X.; Shen, W. Visual Anomaly Detection under Complex View-Illumination Interplay: A Large-Scale Benchmark. arXiv 2025, arXiv:2505.10996.

- Li, W.; Xu, X.; Gu, Y.; Zheng, B.; Gao, S.; Wu, Y. Towards scalable 3D anomaly detection and localization: A benchmark via 3D anomaly synthesis and a self-supervised learning network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 22207–22216.

- Li, W.; Zheng, B.; Xu, X.; Gan, J.; Lu, F.; Li, X.; Ni, N.; Tian, Z.; Huang, X.; Gao, S.; et al. Multi-sensor object anomaly detection: Unifying appearance, geometry, and internal properties. In Proceedings of the Computer Vision and Pattern Recognition Conference, Nashville, TN, USA, 11–15 June 2025; pp. 9984–9993.

- Li, C.L.; Sohn, K.; Yoon, J.; Pfister, T. CutPaste: Self-supervised learning for anomaly detection and localization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 9664–9674.

- Liu, Z.; Zhou, Y.; Xu, Y.; Wang, Z. SimpleNet: A simple network for image anomaly detection and localization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 20402–20411.

- Zavrtanik, V.; Kristan, M.; Skočaj, D. DSR: A dual subspace re-projection network for surface anomaly detection. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 539–554.

- Zhou, Q.; Pang, G.; Tian, Y.; He, S.; Chen, J. AnomalyCLIP: Object-agnostic prompt learning for zero-shot anomaly detection. arXiv 2023, arXiv:2310.18961.

| Method | Adaptation Mechanism | Multi-Category Coverage | Data Requirement | Training Cost |
|---|---|---|---|---|
| UniCLIP-AD | Low-Rank Adaptation (LoRA) | Single model covering multiple industrial categories | Few auxiliary samples | Lightweight |
| AFR-CLIP | Cross-modal Feature Rectification + SP + MPFA | Partial zero-shot generalization | Requires auxiliary annotations | High |
| AA-CLIP | Two-stage text anchors + Residual adapters | Zero-shot generalization | Dependent on auxiliary annotations | Medium |

| Category | Total Samples | Pass | NG |
|---|---|---|---|
| RESISTOR | 3090 | 1272 | 1818 |
| CAPACITOR | 14,209 | 10,011 | 4198 |
| QFN | 2059 | 1650 | 409 |
| BGA | 1135 | 873 | 262 |
| DPAK | 1103 | 739 | 364 |
| SOD | 2911 | 1579 | 1332 |
| SOT | 1323 | 1035 | 288 |

| Defect Type | Sample Count |
|---|---|
| Missing Part | 324 |
| Component Misalignment | 173 |
| Tombstoning | 25 |
| Standing | 15 |
| Cold Solder | 7 |
| Short Circuit/Tilting/Body Warping | <10 |

| Width (px) | Height (px) | Count |
|---|---|---|
| 543 | 401 | 1713 |
| 87 | 46 | 1424 |
| 46 | 87 | 1415 |
| 87 | 47 | 982 |
| 47 | 87 | 751 |

| Comparison Dimension | MVTec AD | VisA | Real-IAD | UniAD |
|---|---|---|---|---|
| Annotation Granularity | Image-level/Pixel-level | Image-level + Region annotations | Image-level | Image-level + Pixel-level |
| Data Source | Lab-captured + synthetic | Industrial (partially synthetic) | Factory-collected | Real-world production line images |
| Defect Realism | Controlled/partially fake | Semi-realistic with some synthesis | Highly realistic | Highly realistic, with production-level detail |
| Task Coverage | Single-object, lab setting | Primarily PCB analysis | Multi-class electronic parts | Multi-type components + surface defect detection |
| Applicability | Limited to specific setups | Suitable for pattern/board analysis | Coarse-grained classification | Supports fine-grained localization + zero-shot detection |

| Category | Train Total | Train Normal | Train Anomalous | Test Total | Test Normal | Test Anomalous |
|---|---|---|---|---|---|---|
| RESISTOR | 2162 | 890 | 1272 | 928 | 382 | 546 |
| CAPACITOR | 9945 | 7007 | 2938 | 4264 | 3004 | 1260 |
| QFN | 1441 | 1155 | 286 | 618 | 495 | 123 |
| BGA | 794 | 611 | 183 | 341 | 262 | 79 |
| DPAK | 771 | 517 | 254 | 332 | 222 | 110 |
| SOD | 2037 | 1105 | 932 | 874 | 474 | 400 |
| SOT | 925 | 724 | 201 | 398 | 311 | 87 |
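The counts in the split table are consistent with a roughly 70/30 train/test split applied separately to the normal and anomalous images of each category. That stratification is our reading of the numbers, not a procedure stated in the text. The short check below recomputes the training share of each class directly from the table.

```python
# (train, test) image counts per category and class, copied from the split table.
SPLIT = {
    "RESISTOR":  {"normal": (890, 382),   "anomalous": (1272, 546)},
    "CAPACITOR": {"normal": (7007, 3004), "anomalous": (2938, 1260)},
    "QFN":       {"normal": (1155, 495),  "anomalous": (286, 123)},
    "BGA":       {"normal": (611, 262),   "anomalous": (183, 79)},
    "DPAK":      {"normal": (517, 222),   "anomalous": (254, 110)},
    "SOD":       {"normal": (1105, 474),  "anomalous": (932, 400)},
    "SOT":       {"normal": (724, 311),   "anomalous": (201, 87)},
}

# Fraction of each (category, class) pool that was assigned to training.
train_share = {
    (category, cls): train / (train + test)
    for category, classes in SPLIT.items()
    for cls, (train, test) in classes.items()
}

for (category, cls), share in train_share.items():
    print(f"{category:<10} {cls:<9} {share:.3f}")
```

Every share lands between 0.697 and 0.700, so the normal-to-anomalous ratio of each category is preserved between the training and test sets.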

| Method | Mean AU-ROC (%) | Mean F1-Score (%) |
|---|---|---|
| CutPaste | 74.1 | 66.0 |
| SimpleNet | 84.8 | 44.7 |
| CFA | 88.3 | 65.6 |
| DSR | 81.5 | 65.9 |
| Ours (UniCLIP-AD) | 92.1 | 89.8 |

| Category (F1-Score, %) | CutPaste | SimpleNet | CFA | DSR | UniCLIP-AD |
|---|---|---|---|---|---|
| BGA | 94.1 | 48.8 | 67.8 | 47.1 | 98.9 |
| CAPACITOR | 50.9 | 40.4 | 58.8 | 71.4 | 99.4 |
| DPAK | 88.3 | 45.7 | 67.6 | 37.9 | 62.9 |
| QFN | 95.4 | 55.6 | 55.1 | 60.5 | 82.9 |
| RESISTOR | 46.1 | 20.6 | 59.9 | 5.4 | 99.1 |

| Category (AU-ROC, %) | CutPaste | SimpleNet | CFA | DSR | UniCLIP-AD |
|---|---|---|---|---|---|
| BGA | 90.6 | 86.0 | 92.0 | 86.3 | 99.5 |
| CAPACITOR | 65.6 | 85.0 | 94.3 | 81.0 | 98.0 |
| DPAK | 85.0 | 85.0 | 89.3 | 78.0 | 97.4 |
| QFN | 68.6 | 84.0 | 75.8 | 86.8 | 96.3 |
| RESISTOR | 45.9 | 83.0 | 80.7 | 75.0 | 96.9 |

| Prompt Type | Description (Abnormal) | Params Added | AUROC |
|---|---|---|---|
| Hand-craft | “A cropped industrial photo with defect for anomaly detection” | 0 | 92.1 |
| Hand-craft | “Irregular shape, tilt, or incomplete connection.” | 0 | 92.0 |
| Hand-craft | “A soldered chip joint with visible misalignment, tilt or irregular welding defect in an industrial image.” | 0 | 92.4 |
| Soft prompt | Learnable tokens optimized during training | +0.1 M | 93.3 |

| Method | Dataset | AU-ROC (%) | F1-Score (%) | p-Value (vs. Ours) |
|---|---|---|---|---|
| AnomalyCLIP | UniAD | 90.8 ± 0.7 | 87.9 ± 0.8 | 0.011 |
| UniCLIP-AD (ours) | UniAD | 92.1 ± 0.4 | 89.8 ± 0.5 | - |
| AnomalyCLIP | MVTec AD | 95.8 ± 1.0 | 92.0 ± 0.7 | 0.012 |
| UniCLIP-AD (ours) | MVTec AD | 97.6 ± 0.6 | 94.5 ± 0.5 | - |

| Method | Training Type | AUROC | F1-Score | Multi-Category Generalization |
|---|---|---|---|---|
| ResNet-50 (Fine-tuned) | Fully Supervised | 83.7 | 79.1 | × (requires per-class training) |
| ViT-Supervised | Fully Supervised | 85.5 | 81.3 | × (requires per-class training) |
| UniCLIP-AD (Ours) | Lightly Supervised (Prompt + LoRA) | 92.1 | 89.8 | ✓ (single unified model) |

| Variant | AU-ROC | F1-Score |
|---|---|---|
| CLIP (zero-shot) | 63.2 | 67.4 |
| CLIP + LoRA | 92.1 | 89.8 |

| LoRA Rank (r) | Training Epochs | AUROC | F1 Score |
|---|---|---|---|
| 4 | 5 | 91.8 | 88.9 |
| 8 | 5 | 92.1 | 89.1 |
| 16 | 5 | 91.9 | 88.6 |
| 8 | 3 | 88.4 | 88.8 |
| 8 | 10 | 92.0 | 89.2 |

| Setting | Category | AUROC | F1-Score |
|---|---|---|---|
| Baseline (clean) | RESISTOR | 96.9 | 99.1 |
| 10% noisy labels | RESISTOR | 92.7 | 95.2 |
| Few-shot | BGA | 94.8 | 97.3 |
| Few-shot | SOT | 89.1 | 92.4 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yang, J.; Cao, J.; Duan, C. UniAD: A Real-World Multi-Category Industrial Anomaly Detection Dataset with a Unified CLIP-Based Framework. Information 2025, 16, 956. https://doi.org/10.3390/info16110956
