Prediction Multiscale Cross-Level Fusion U-Net with Combined Wavelet Convolutions for Thyroid Nodule Segmentation

Abstract

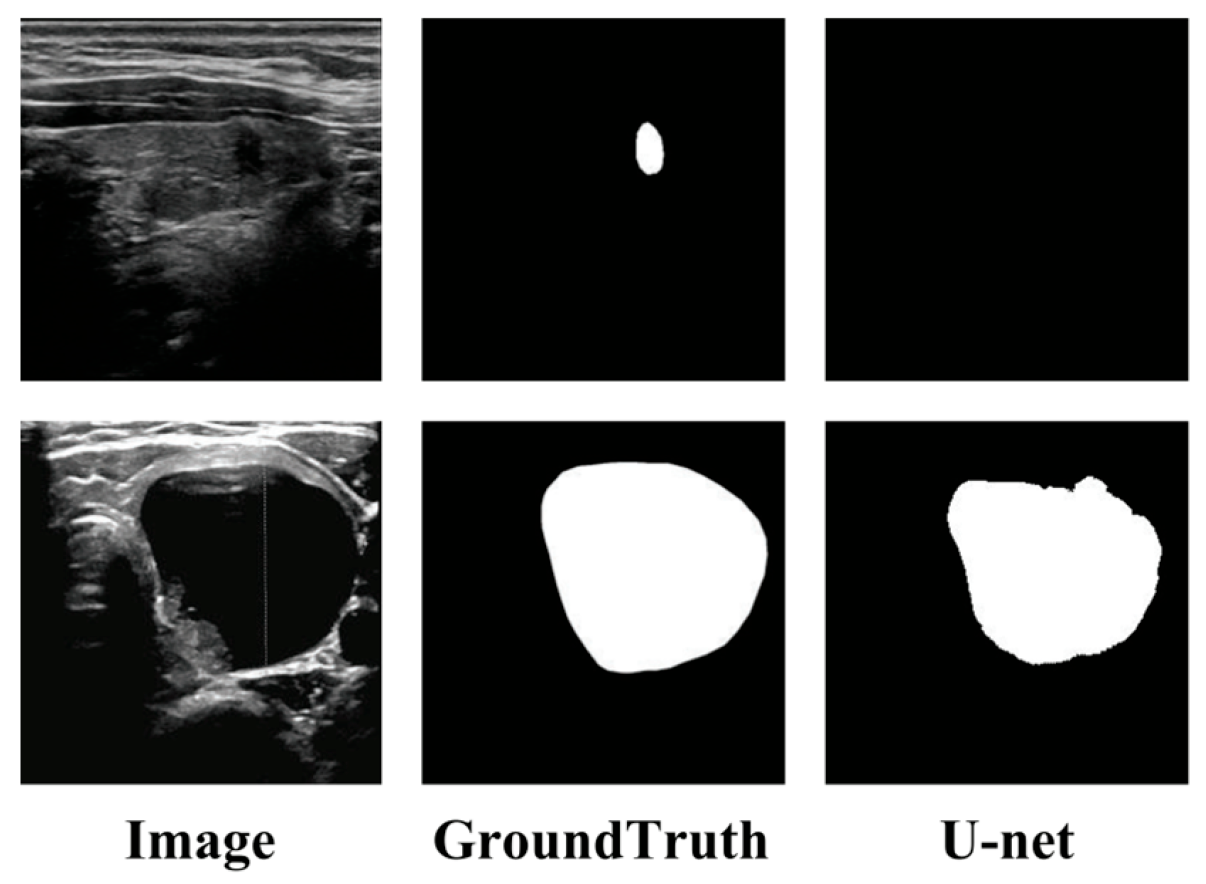

1. Introduction

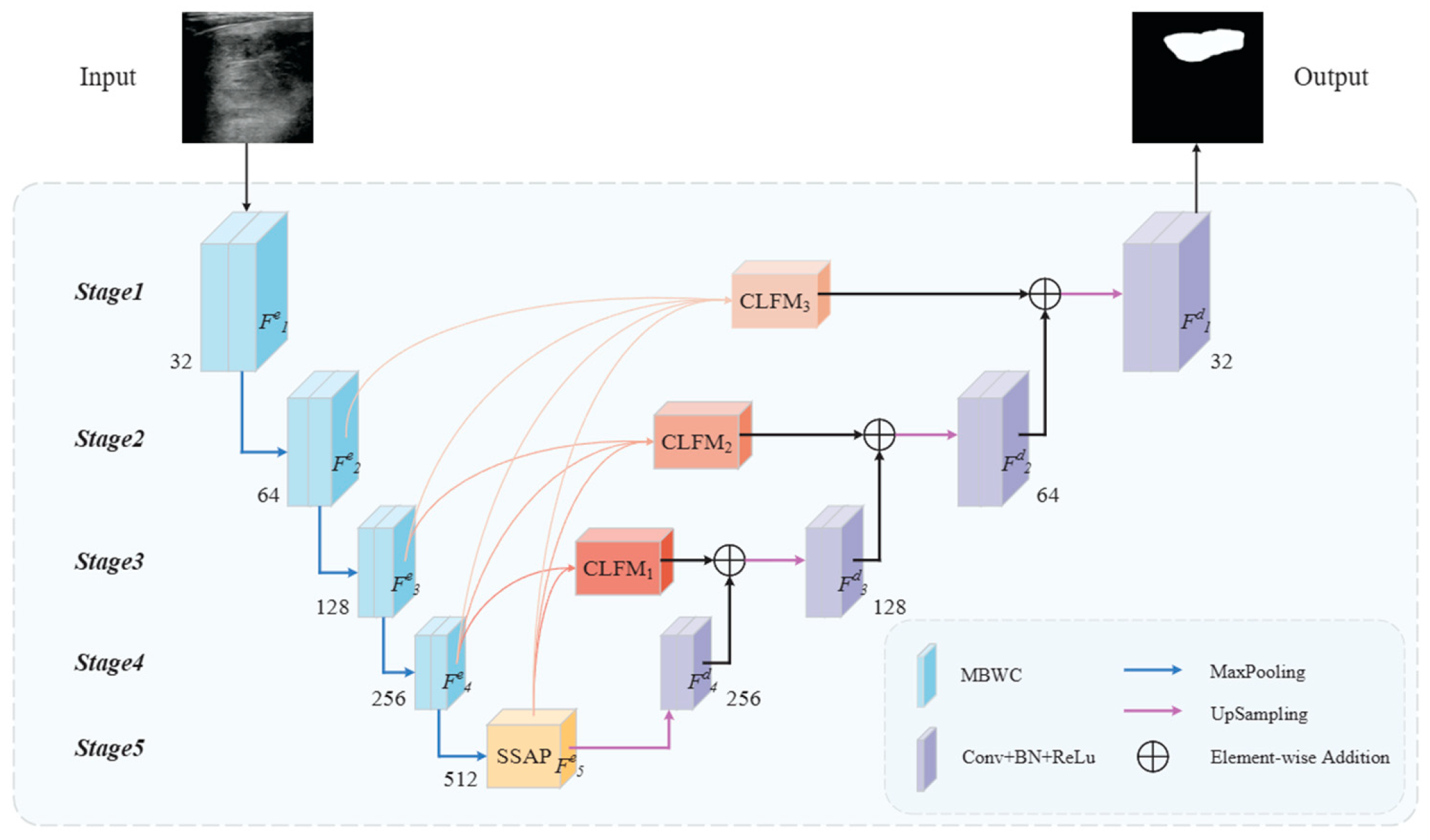

2. The Proposed Method

2.1. Overview

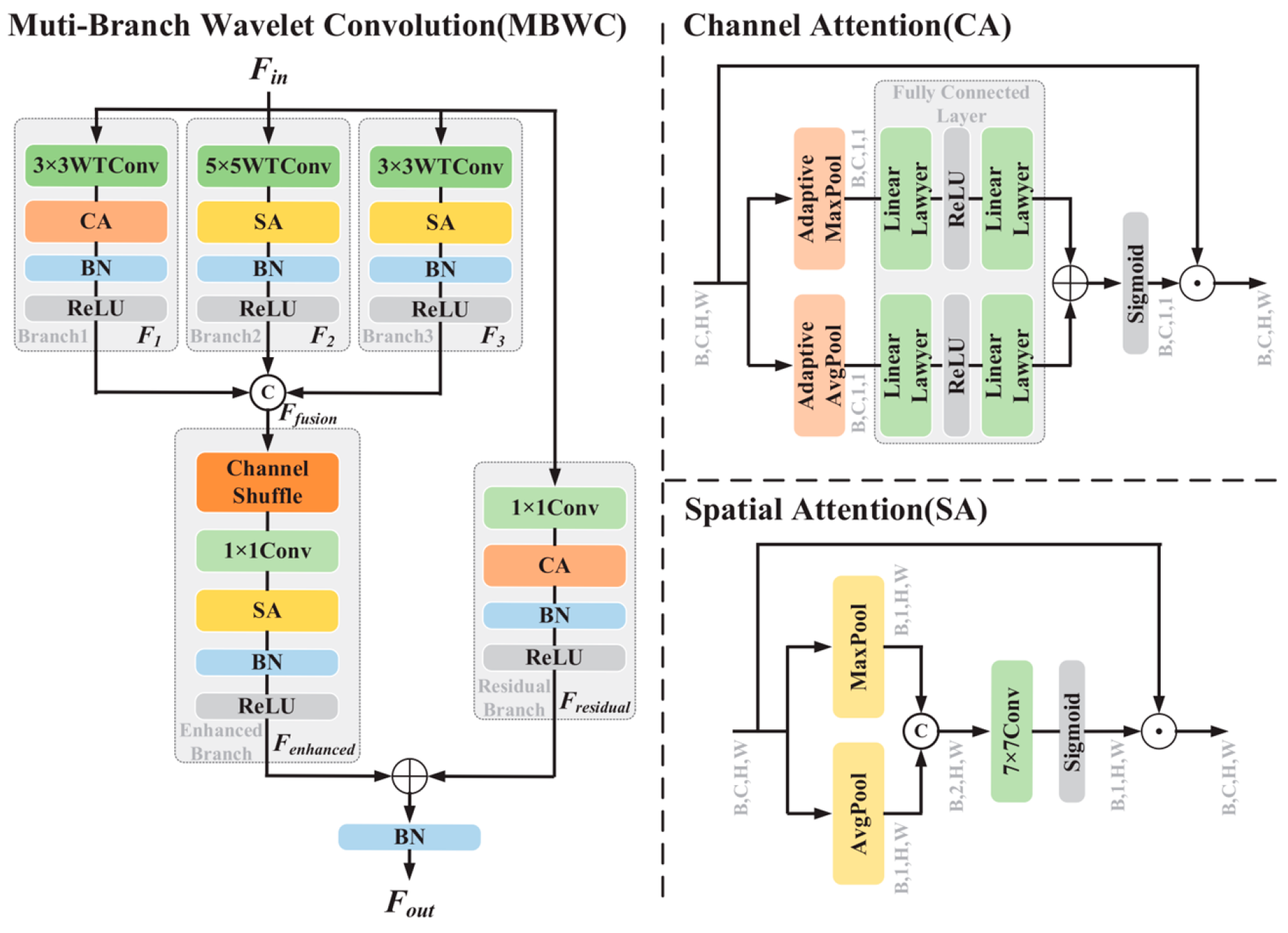

2.2. Multi-Branch Wavelet Convolution

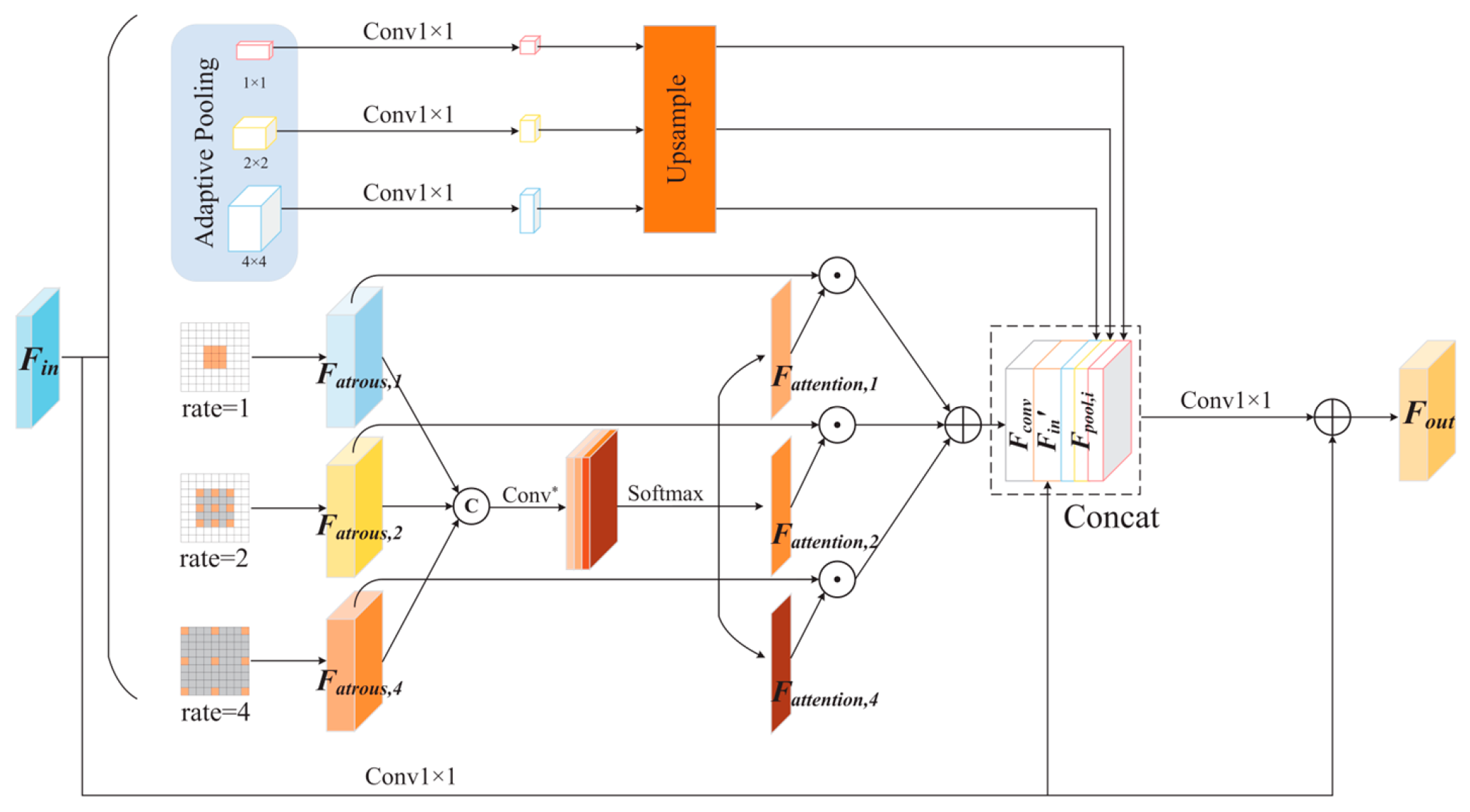

2.3. Scale Selection Atrous Pyramid

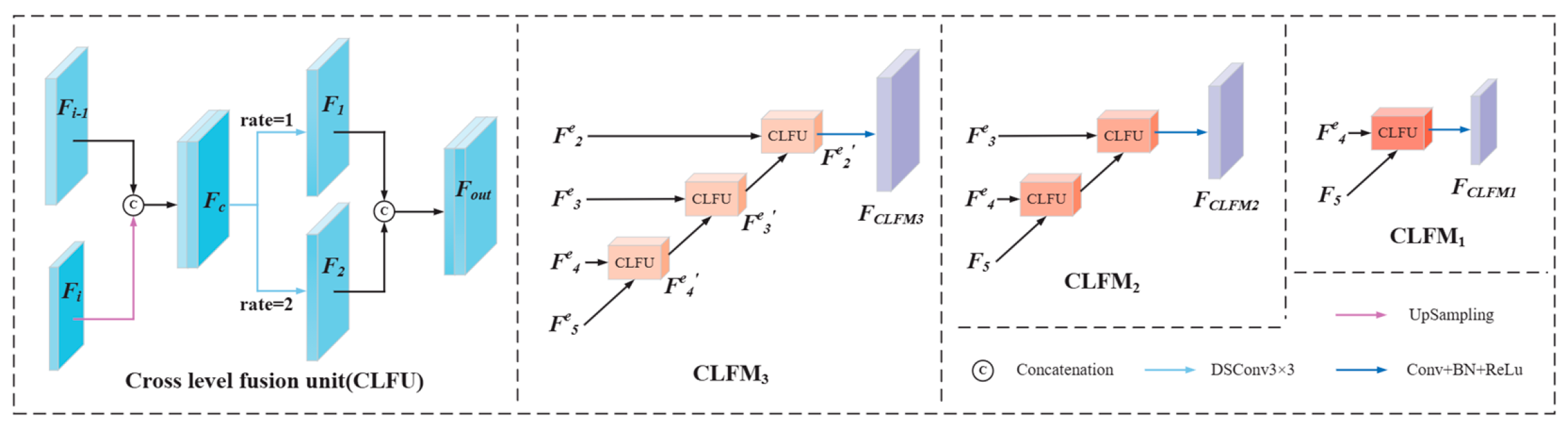

2.4. Cross-Level Fusion Module

2.5. Loss Function

3. Experiments

3.1. Datasets

3.2. Experimental Details

3.3. Evaluation Metrics

4. Experimental Results

4.1. Ablation Experiment

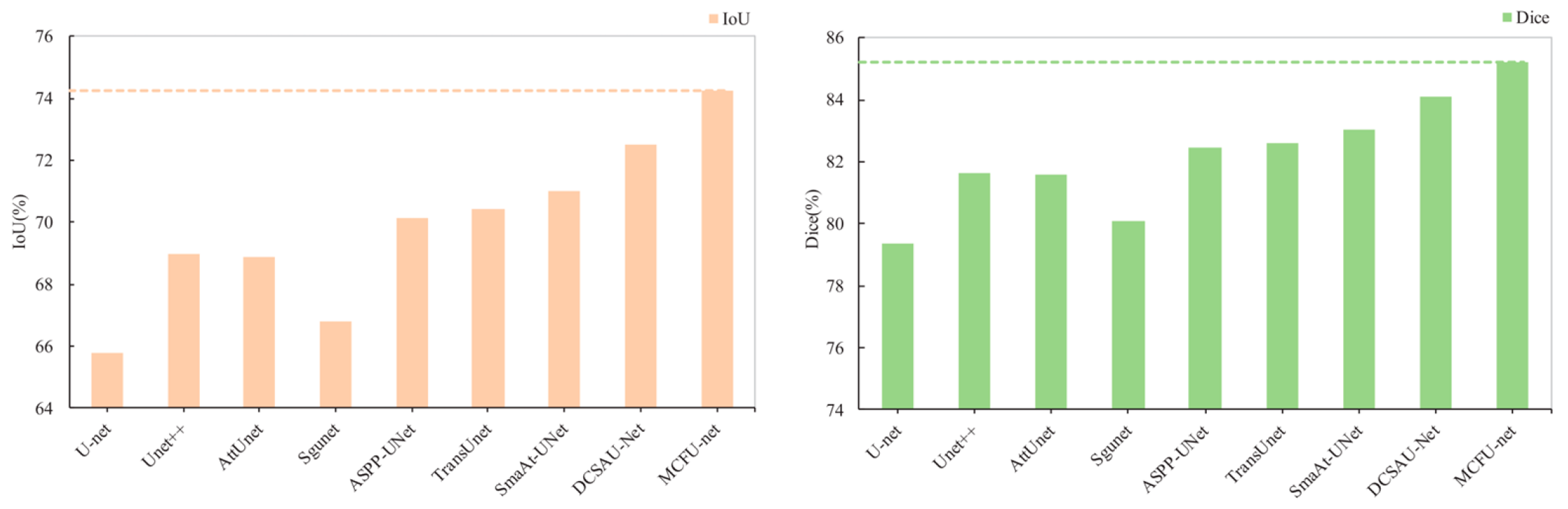

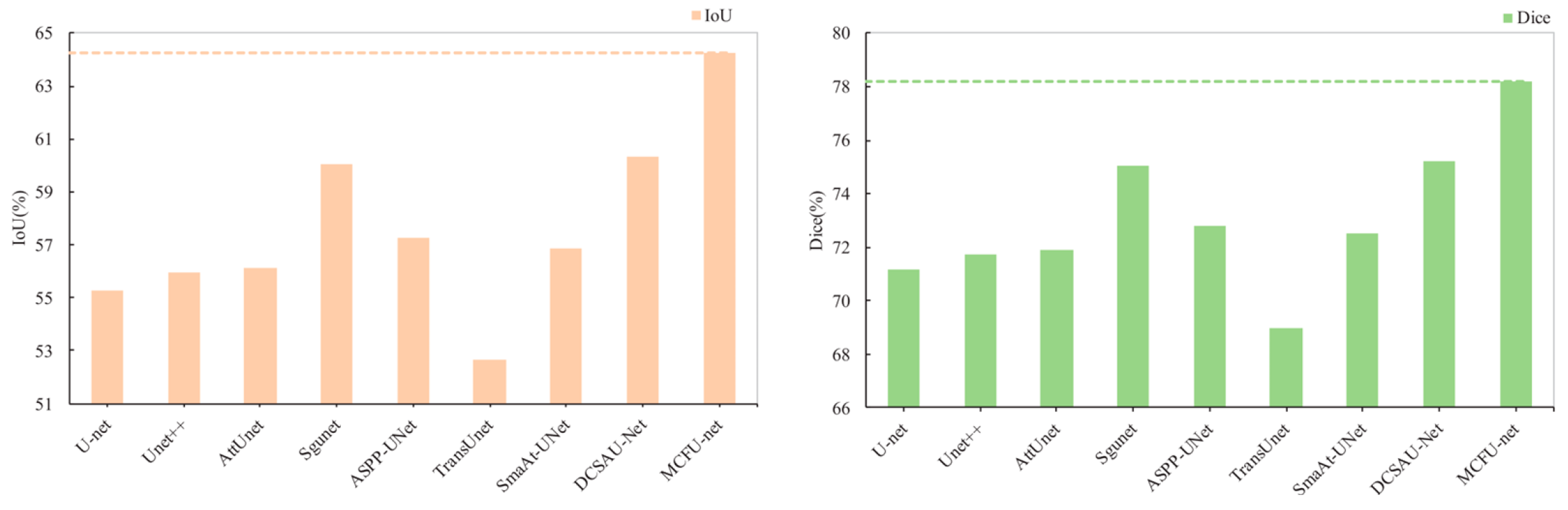

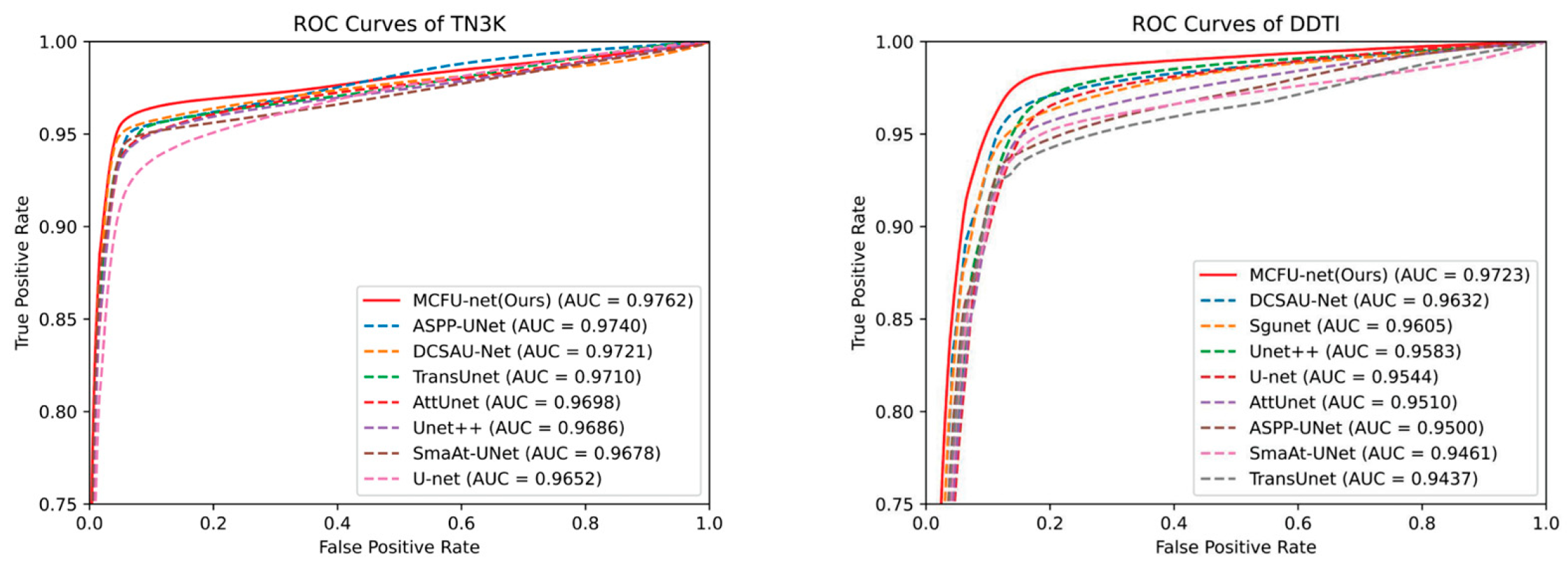

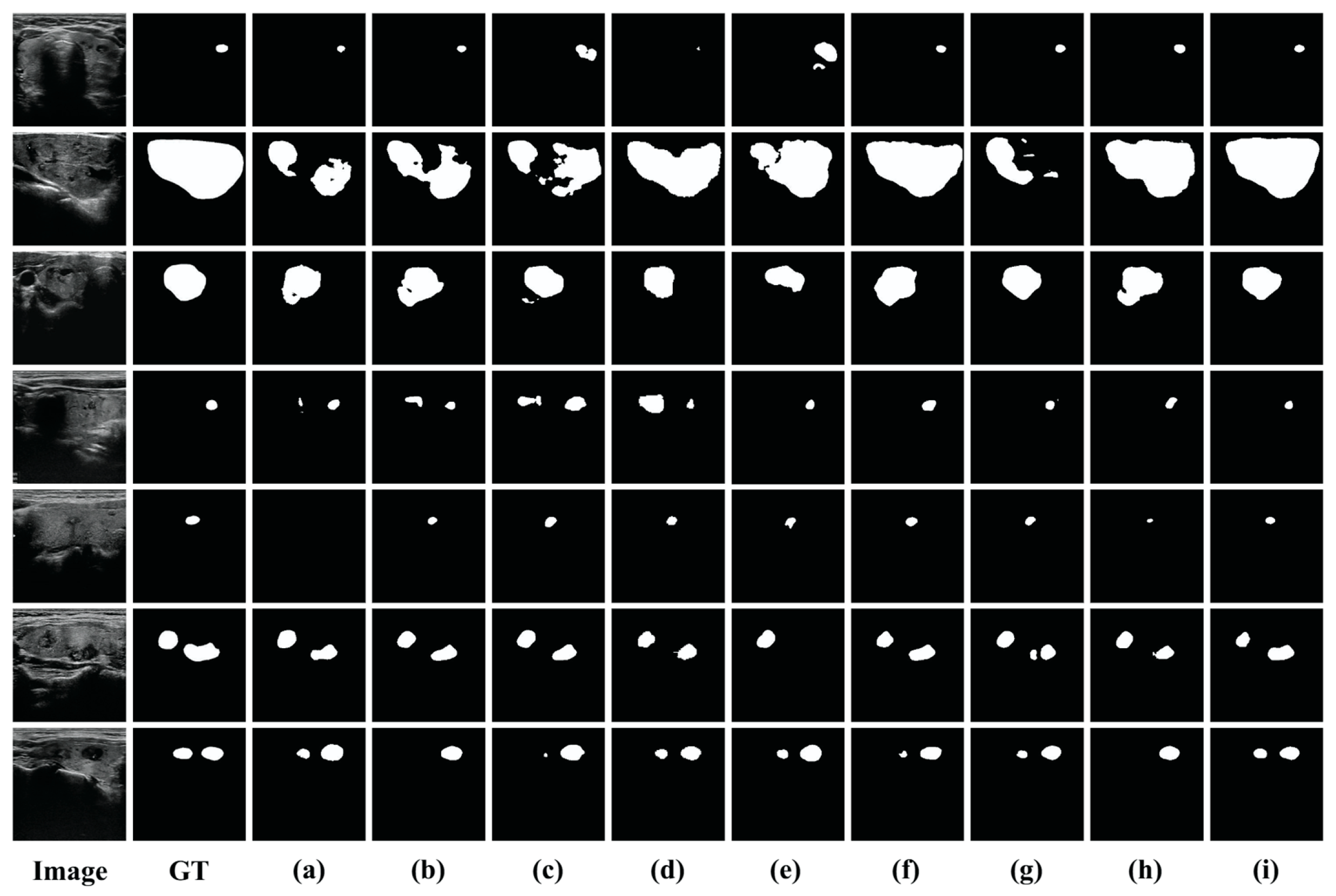

4.2. Comparison with the Other Methods

5. Discussion

5.1. The Impact of the MBWC Block Structure

5.2. The Impact of the Number of CLFMs

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Methods | Pre (%) | Recall (%) | Spe (%) | Acc (%) | IoU (%) | Dice (%) | HD95 |
|---|---|---|---|---|---|---|---|
| Baseline | 90.26 ± 2.67 | 70.98 ± 3.45 | 99.00 ± 0.34 | 95.82 ± 0.15 | 65.79 ± 1.79 | 79.35 ± 1.32 | 32.26 ± 1.69 |
| MBWC | 91.41 ± 1.46 | 73.66 ± 1.90 | 99.11 ± 0.18 | 96.22 ± 0.11 | 68.85 ± 1.09 | 81.55 ± 0.76 | 30.56 ± 2.15 |
| MBWC + SSAP | 91.42 ± 1.03 | 76.83 ± 0.50 | 99.04 ± 0.13 | 96.63 ± 0.07 | 72.12 ± 0.52 | 83.80 ± 0.35 | 25.67 ± 1.98 |
| MBWC + SSAP + CLFM (Ours) | 92.14 ± 0.66 | 79.86 ± 0.97 | 99.12 ± 0.14 | 96.85 ± 0.08 | 74.25 ± 0.53 | 85.22 ± 0.34 | 23.45 ± 1.65 |
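The overlap columns in the results tables (Pre, Recall, Spe, Acc, IoU, Dice) are all functions of the pixel-level confusion counts between a predicted mask and its ground truth. The following is a minimal sketch using the standard textbook definitions; the formulas actually used in Section 3.3 are not reproduced in this text, so the function names, the zero-denominator convention, and the toy masks are assumptions made for illustration only. HD95 is omitted because it depends on boundary distances rather than on these counts.

```python
def confusion_counts(pred, target):
    """Count TP, FP, FN, TN over two same-shaped binary masks (nested lists of 0/1)."""
    tp = fp = fn = tn = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            if p and t:
                tp += 1          # nodule pixel predicted as nodule
            elif p:
                fp += 1          # background pixel predicted as nodule
            elif t:
                fn += 1          # nodule pixel predicted as background
            else:
                tn += 1          # background pixel predicted as background
    return tp, fp, fn, tn


def _ratio(numerator, denominator):
    """Return numerator / denominator, or 0.0 when the denominator is zero (assumed convention)."""
    return numerator / denominator if denominator else 0.0


def segmentation_metrics(pred, target):
    """Standard pixel-wise metrics as fractions in [0, 1]; multiply by 100 for percentages."""
    tp, fp, fn, tn = confusion_counts(pred, target)
    return {
        "Pre": _ratio(tp, tp + fp),
        "Recall": _ratio(tp, tp + fn),
        "Spe": _ratio(tn, tn + fp),
        "Acc": _ratio(tp + tn, tp + fp + fn + tn),
        "IoU": _ratio(tp, tp + fp + fn),
        "Dice": _ratio(2 * tp, 2 * tp + fp + fn),
    }


# Hypothetical 2x4 masks, not taken from either dataset.
toy_pred = [[1, 1, 0, 0], [0, 1, 0, 0]]
toy_target = [[1, 0, 0, 0], [0, 1, 1, 0]]
toy_scores = segmentation_metrics(toy_pred, toy_target)
for name, value in toy_scores.items():
    print(f"{name}: {100 * value:.2f}%")
```

Because background pixels usually dominate an ultrasound frame, Spe and Acc tend to sit near their ceiling, which is one reason IoU and Dice are the more discriminating columns when comparing rows.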

| Methods | Pre (%) | Recall (%) | Spe (%) | Acc (%) | IoU (%) | Dice (%) | HD95 |
|---|---|---|---|---|---|---|---|
| Baseline | 69.70 ± 2.24 | 72.81 ± 2.36 | 94.65 ± 0.67 | 91.51 ± 0.42 | 55.25 ± 1.35 | 71.17 ± 1.13 | 37.77 ± 1.11 |
| MBWC | 75.28 ± 1.89 | 73.51 ± 2.26 | 95.92 ± 0.49 | 92.70 ± 0.22 | 59.15 ± 0.96 | 74.33 ± 0.76 | 33.74 ± 2.96 |
| MBWC + SSAP | 76.16 ± 1.42 | 74.11 ± 2.61 | 96.15 ± 0.40 | 93.00 ± 0.17 | 60.04 ± 1.23 | 75.03 ± 0.96 | 30.70 ± 2.97 |
| MBWC + SSAP + CLFM (Ours) | 78.58 ± 1.15 | 77.89 ± 1.75 | 96.42 ± 0.29 | 93.76 ± 0.20 | 64.23 ± 1.05 | 78.21 ± 0.77 | 24.78 ± 1.55 |
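For a single prediction, Dice and IoU are tied by the identity Dice = 2·IoU / (1 + IoU), so the two columns carry largely the same ranking information. The sketch below checks how closely the mean values in the two ablation tables above follow that identity. The (IoU, Dice) pairs are copied from those tables; the helper names are illustrative, and since the identity is exact only per prediction and not for averages, small gaps are to be expected.

```python
def dice_from_iou(iou_percent):
    """Convert an IoU percentage to the Dice percentage implied by Dice = 2*IoU / (1 + IoU)."""
    iou = iou_percent / 100.0
    return 100.0 * 2.0 * iou / (1.0 + iou)


# (row label, IoU %, Dice %) means copied from the two ablation tables above.
REPORTED_ROWS = [
    ("Baseline, first table", 65.79, 79.35),
    ("MBWC, first table", 68.85, 81.55),
    ("MBWC + SSAP, first table", 72.12, 83.80),
    ("MBWC + SSAP + CLFM, first table", 74.25, 85.22),
    ("Baseline, second table", 55.25, 71.17),
    ("MBWC, second table", 59.15, 74.33),
    ("MBWC + SSAP, second table", 60.04, 75.03),
    ("MBWC + SSAP + CLFM, second table", 64.23, 78.21),
]


def max_gap(rows):
    """Largest absolute difference between the reported Dice and the Dice implied by the IoU."""
    return max(abs(dice_from_iou(iou) - dice) for _, iou, dice in rows)


for label, iou, dice in REPORTED_ROWS:
    print(f"{label}: reported {dice:.2f}, implied {dice_from_iou(iou):.2f}")
print(f"largest gap: {max_gap(REPORTED_ROWS):.3f} percentage points")
```

The implied and reported values agree to within a few hundredths of a percentage point for these rows, which suggests the IoU and Dice columns can be read as two views of the same overlap measurement.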

| Methods | Pre (%) | Recall (%) | Spe (%) | Acc (%) | IoU (%) | Dice (%) | HD95 |
|---|---|---|---|---|---|---|---|
| U-net | 90.26 ± 2.67 | 70.98 ± 3.45 | 99.00 ± 0.34 | 95.82 ± 0.15 | 65.79 ± 1.79 | 79.35 ± 1.32 | 32.26 ± 1.69 |
| Unet++ | 90.87 ± 1.44 | 74.19 ± 2.21 | 99.04 ± 0.19 | 96.22 ± 0.10 | 68.99 ± 1.18 | 81.64 ± 0.84 | 30.10 ± 2.06 |
| AttUnet | 91.04 ± 1.48 | 73.96 ± 2.69 | 99.06 ± 0.20 | 96.21 ± 0.14 | 68.88 ± 1.57 | 81.56 ± 1.10 | 33.26 ± 1.98 |
| Sgunet | 91.13 ± 0.77 | 71.46 ± 2.06 | 99.08 ± 0.16 | 95.97 ± 0.15 | 66.78 ± 1.47 | 80.07 ± 1.06 | 32.66 ± 1.76 |
| ASPP-UNet | 89.70 ± 1.40 | 76.32 ± 2.05 | 98.87 ± 0.20 | 96.31 ± 0.10 | 70.13 ± 1.05 | 82.44 ± 0.73 | 29.15 ± 2.44 |
| TransUnet | 90.53 ± 1.55 | 76.07 ± 2.54 | 98.97 ± 0.22 | 96.37 ± 0.14 | 70.40 ± 1.42 | 82.62 ± 0.98 | 26.07 ± 2.44 |
| SmaAt-UNet | 89.98 ± 0.84 | 77.14 ± 2.33 | 98.90 ± 0.14 | 96.43 ± 0.15 | 71.00 ± 1.46 | 83.03 ± 0.99 | 25.66 ± 2.59 |
| DCSAU-Net | 91.51 ± 0.94 | 77.80 ± 1.68 | 99.07 ± 0.13 | 96.66 ± 0.08 | 72.53 ± 0.92 | 84.08 ± 0.62 | 25.94 ± 1.83 |
| MCFU-net (Ours) | 92.14 ± 0.66 | 79.86 ± 0.97 | 99.12 ± 0.14 | 96.85 ± 0.08 | 74.25 ± 0.53 | 85.22 ± 0.34 | 23.45 ± 1.65 |

| Methods | Pre (%) | Recall (%) | Spe (%) | Acc (%) | IoU (%) | Dice (%) | HD95 |
|---|---|---|---|---|---|---|---|
| U-net | 69.70 ± 2.24 | 72.81 ± 2.36 | 94.65 ± 0.67 | 91.51 ± 0.42 | 55.25 ± 1.35 | 71.17 ± 1.13 | 37.77 ± 1.11 |
| Unet++ | 71.51 ± 2.21 | 72.46 ± 6.65 | 95.09 ± 0.92 | 91.83 ± 0.32 | 55.97 ± 3.00 | 71.73 ± 2.47 | 37.48 ± 5.40 |
| AttUnet | 71.77 ± 1.41 | 72.06 ± 1.70 | 95.23 ± 0.32 | 91.90 ± 0.35 | 56.14 ± 1.47 | 71.90 ± 1.21 | 37.53 ± 3.77 |
| Sgunet | 76.24 ± 2.03 | 74.06 ± 3.75 | 96.09 ± 0.60 | 92.92 ± 0.12 | 60.05 ± 1.42 | 75.03 ± 1.11 | 31.86 ± 3.97 |
| ASPP-UNet | 73.91 ± 2.82 | 72.04 ± 3.54 | 95.67 ± 0.80 | 92.27 ± 0.22 | 57.27 ± 0.89 | 72.82 ± 0.73 | 31.31 ± 1.72 |
| TransUnet | 73.99 ± 2.00 | 64.79 ± 3.93 | 96.15 ± 0.59 | 91.63 ± 0.32 | 52.67 ± 2.15 | 68.98 ± 1.86 | 38.64 ± 2.86 |
| SmaAt-UNet | 71.41 ± 1.11 | 73.71 ± 2.38 | 95.03 ± 0.39 | 91.96 ± 0.20 | 56.88 ± 1.15 | 72.51 ± 0.93 | 36.16 ± 2.06 |
| DCSAU-Net | 74.07 ± 2.66 | 76.49 ± 3.09 | 95.48 ± 0.65 | 92.74 ± 0.60 | 60.31 ± 2.61 | 75.21 ± 2.04 | 30.88 ± 4.23 |
| MCFU-net (Ours) | 78.58 ± 1.15 | 77.89 ± 1.75 | 96.42 ± 0.29 | 93.76 ± 0.20 | 64.23 ± 1.05 | 78.21 ± 0.77 | 24.78 ± 1.55 |
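Every cell in these tables is reported as mean ± standard deviation. This text does not state how many runs or folds were aggregated, nor whether the sample or the population standard deviation was used, so the following is only a sketch of one common convention (sample standard deviation over repeated runs). The function name and the per-run scores are hypothetical and are not results from the paper.

```python
import statistics


def summarize(values, digits=2):
    """Format repeated-run scores as 'mean ± std' using the sample standard deviation (assumed)."""
    mean = statistics.mean(values)
    # A single run has no spread to estimate, so report 0.0 in that case.
    std = statistics.stdev(values) if len(values) > 1 else 0.0
    return f"{mean:.{digits}f} ± {std:.{digits}f}"


# Hypothetical Dice scores (%) from three repeated runs, for illustration only.
hypothetical_dice_runs = [84.9, 85.2, 85.6]
print(summarize(hypothetical_dice_runs))
```

When two rows differ by less than their combined spread, as several of the baseline methods do, the ordering between them should be read with caution.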

| Branches enabled (among Branch1, Branch2, Branch3) | IoU (%) | Dice (%) |
|---|---|---|
| √ | 70.97 ± 0.65 | 83.02 ± 0.44 |
| √ | 69.93 ± 0.59 | 82.30 ± 0.40 |
| √ | 70.46 ± 0.41 | 82.67 ± 0.31 |
| √ √ | 72.09 ± 1.17 | 83.78 ± 0.78 |
| √ √ | 72.80 ± 0.64 | 84.26 ± 0.43 |
| √ √ | 71.72 ± 0.91 | 83.53 ± 0.21 |
| √ √ √ | 74.25 ± 0.53 | 85.22 ± 0.34 |

| Branches enabled (among Branch1, Branch2, Branch3) | IoU (%) | Dice (%) |
|---|---|---|
| √ | 61.67 ± 0.95 | 76.00 ± 0.72 |
| √ | 59.72 ± 1.29 | 74.76 ± 0.84 |
| √ | 60.29 ± 0.91 | 75.22 ± 0.61 |
| √ √ | 62.38 ± 1.16 | 76.75 ± 0.87 |
| √ √ | 62.88 ± 1.07 | 77.21 ± 0.83 |
| √ √ | 61.30 ± 1.31 | 76.29 ± 1.01 |
| √ √ √ | 64.23 ± 1.05 | 78.21 ± 0.77 |

| Methods | TN3K IoU (%) | TN3K Dice (%) | DDTI IoU (%) | DDTI Dice (%) |
|---|---|---|---|---|
| Model | 72.12 ± 0.52 | 83.80 ± 0.35 | 60.04 ± 1.23 | 75.03 ± 0.96 |
| Model + CLFM1 | 72.23 ± 0.62 | 83.97 ± 0.42 | 60.83 ± 0.74 | 75.64 ± 0.57 |
| Model + CLFM1,2 | 73.08 ± 0.73 | 84.45 ± 0.49 | 61.55 ± 1.53 | 76.19 ± 1.18 |
| Model + CLFM1,2,3 | 73.40 ± 0.43 | 84.69 ± 0.28 | 63.09 ± 0.53 | 77.31 ± 0.41 |
| Model + CLFM1,2,3,4 | 73.05 ± 0.60 | 84.42 ± 0.40 | 61.90 ± 0.59 | 76.47 ± 0.45 |

| Methods | TN3K IoU (%) | TN3K Dice (%) | DDTI IoU (%) | DDTI Dice (%) |
|---|---|---|---|---|
| Model | 73.01 ± 0.86 | 84.40 ± 0.58 | 60.04 ± 1.40 | 75.02 ± 1.10 |
| Model + CLFM1 | 73.19 ± 1.52 | 84.51 ± 1.02 | 60.97 ± 1.23 | 75.74 ± 0.95 |
| Model + CLFM1,2 | 73.30 ± 1.10 | 84.59 ± 0.73 | 61.42 ± 1.79 | 76.09 ± 1.38 |
| Model + CLFM1,2,3 | 74.25 ± 0.53 | 85.22 ± 0.34 | 64.23 ± 1.05 | 78.21 ± 0.77 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, S.; Tang, H.; Zhao, J.; Liu, R.; Zheng, S.; Hou, K.; Zhang, X.; Liu, F.; Ding, C. Prediction Multiscale Cross-Level Fusion U-Net with Combined Wavelet Convolutions for Thyroid Nodule Segmentation. Information 2025, 16, 1013. https://doi.org/10.3390/info16111013
