DQMAF—Data Quality Modeling and Assessment Framework

Abstract

1. Introduction

2. Literature Review

2.1. Data Quality Frameworks

2.2. ML-Based Data Quality Assessment in Various Domains

- IoT Systems: Supervised ML frameworks employing ensemble Decision Trees and Bayesian classifiers have been proposed to assess the quality of high-frequency and volatile oceanographic sensor data [6]. Key quality dimensions identified include accuracy, timeliness, completeness, consistency, and uniqueness [14,18].

- Healthcare: Standardized DQ terminologies and frameworks for electronic health records (EHRs) have been developed, focusing on conformance, completeness, and plausibility to ensure reliable clinical analytics [30]. Other research highlights the importance of structured and accurate health data for computing clinical quality indicators and supporting medical research [14,21].

- Social Media: Frameworks targeting the heterogeneity and velocity of user-generated content have been proposed, emphasizing lifecycle-oriented quality assessment [19,31]. Further, ML techniques leveraging sentiment analysis and natural language processing have been applied to large-scale platforms such as Airbnb reviews to derive data quality attributes and customer-related insights [32].

2.3. User-Centric Perspectives on Data Quality

3. Aim and Objectives

3.1. The Aim

3.2. Objectives

- To develop a comprehensive data profiling mechanism that extracts hidden indicators of data trustworthiness, supporting both data quality evaluation and user protection;

- To design supervised machine learning models capable of classifying data into predefined categories (high, medium, and low quality), enabling downstream applications such as authentication, document classification, and anomaly detection to operate more reliably;

- To train, optimize, and validate the models for scalable deployment across diverse data domains, including social media, IoT, healthcare, and e-commerce platforms where user safety and service trust are critical;

- To provide a reusable and extensible machine learning–based framework that integrates data quality assessment with proactive governance, thereby enhancing the fairness, transparency, and reliability of analytics and AI-driven services.

4. Research Questions

- RQ1: How can profiling-driven validations (completeness, consistency, accuracy, structural conformity) be systematically aggregated into interpretable quality labels (high/medium/low) in a reproducible manner?

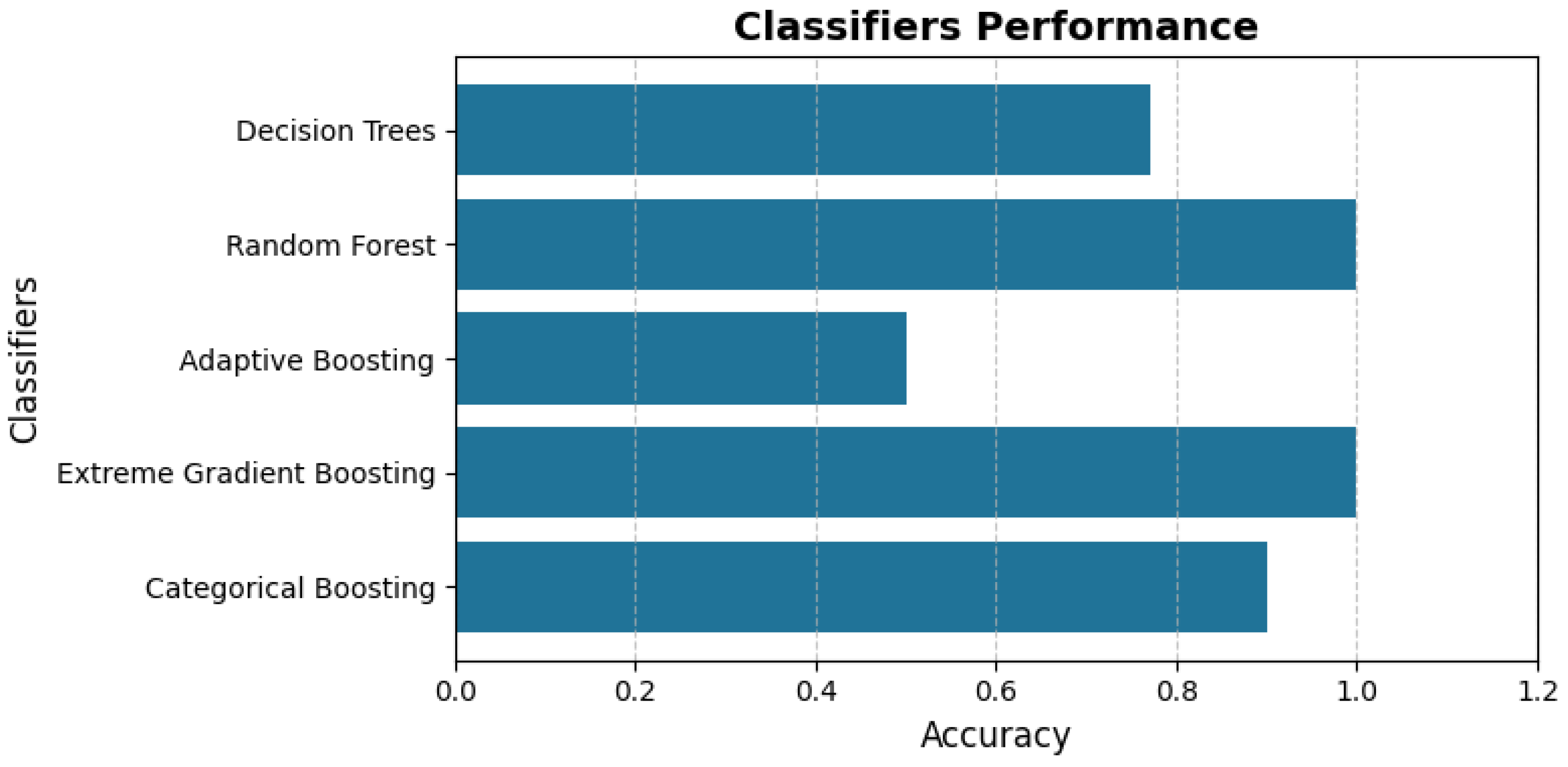

- RQ2: To what extent can supervised machine learning models (Decision Trees, Random Forest, AdaBoost, XGBoost, CatBoost) accurately classify records into quality tiers when trained on binary profiling features, without overfitting or leakage?

5. Methodology

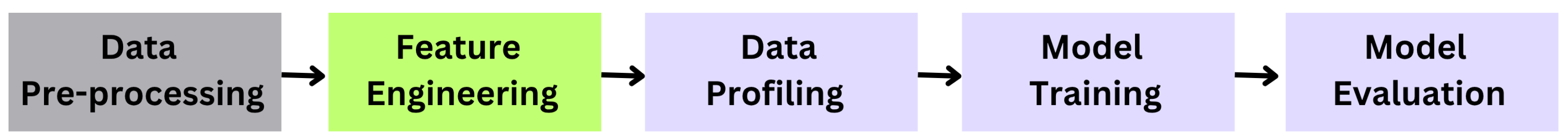

5.1. Data Quality Modeling and Assessment Framework (DQMAF)

- Data preprocessing;

- Feature engineering;

- Data profiling;

- Model training;

- Model evaluation.

5.2. Data Preprocessing

- Identifying and handling missing values: Missingness patterns are detected and addressed using several alternative imputation strategies (mean, median, and KNN-based imputation).

- Removing duplicates: Duplicate entries are identified and removed to avoid redundancy and bias.

- Data type conversions: Attributes are converted to their appropriate types (e.g., integer, float, categorical) to ensure type consistency.

- Filtering irrelevant features: Non-informative or error-prone features are removed to enhance model performance.

- Ensuring completeness and structural consistency: Logical checks are performed (e.g., validating that the number of beds is not less than the number of bedrooms).
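The preprocessing steps listed above can be sketched in a few lines of plain Python. This is a minimal illustration rather than the authors' implementation: the field names (`beds`, `bedrooms`, `price`), the helper names, and the choice of median imputation are assumptions made for the example.

```python
from statistics import median


def to_number(value):
    """Convert a raw value to float; return None if it is missing or unparseable."""
    try:
        return float(value)
    except (TypeError, ValueError):
        return None


def preprocess(records, numeric_fields):
    """Deduplicate, convert types, impute medians, and flag a logical check."""
    # 1. Remove exact duplicates while preserving the original order.
    seen, unique = set(), []
    for rec in records:
        key = tuple(sorted(rec.items()))
        if key not in seen:
            seen.add(key)
            unique.append(dict(rec))

    # 2. Data type conversion: numeric fields become floats (or None).
    for rec in unique:
        for field in numeric_fields:
            rec[field] = to_number(rec.get(field))

    # 3. Median imputation for missing numeric values (one of several options).
    for field in numeric_fields:
        observed = [rec[field] for rec in unique if rec[field] is not None]
        fill = median(observed) if observed else None
        for rec in unique:
            if rec[field] is None:
                rec[field] = fill

    # 4. Structural consistency: beds should not be fewer than bedrooms.
    for rec in unique:
        beds, bedrooms = rec.get("beds"), rec.get("bedrooms")
        rec["beds_ok"] = beds is not None and bedrooms is not None and beds >= bedrooms
    return unique


# Hypothetical listings used only to exercise the sketch.
raw = [
    {"beds": "2", "bedrooms": "1", "price": "100"},
    {"beds": "2", "bedrooms": "1", "price": "100"},   # exact duplicate
    {"beds": "1", "bedrooms": "3", "price": None},    # missing price, beds < bedrooms
    {"beds": None, "bedrooms": "2", "price": "300"},  # missing beds
]
clean = preprocess(raw, numeric_fields=["beds", "bedrooms", "price"])
```

Note that imputing before the logical check means an imputed value can itself fail the check; a stricter pipeline might record the check on the raw values instead.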

5.3. Feature Engineering

- Missingness Indicators: Binary flags represent the presence or absence of values for each feature.

- Cross-Field Validation: Logical dependencies between attributes (e.g., beds ≥ bedrooms) are verified to detect inconsistencies.

- Regular Expression Checks: Attributes such as postal codes, IDs, and emails are validated against expected formats.

- Data Type Consistency: Ensures uniformity in data formats within each column, detecting and flagging anomalies.

- Profiling Score Computation: Each validation produces binary outcomes and weights, which are aggregated into a profiling matrix.
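As an illustration of how these validations could yield binary profiling features, the sketch below evaluates a handful of checks on one record. The field names, the five-digit postal code pattern, and the function name `profile_record` are hypothetical choices for the example, not details taken from the framework.

```python
import re

# Assumed format for the example: a five-digit postal code.
ZIP_PATTERN = re.compile(r"\d{5}")


def is_number(value):
    """True for int/float values, excluding booleans."""
    return isinstance(value, (int, float)) and not isinstance(value, bool)


def profile_record(rec):
    """Return binary validation flags (1 = check passed, 0 = check failed)."""
    flags = {}

    # Missingness indicators: one flag per field.
    for field in ("beds", "bedrooms", "zipcode"):
        flags[f"{field}_present"] = int(rec.get(field) is not None)

    # Cross-field validation: beds >= bedrooms.
    beds, bedrooms = rec.get("beds"), rec.get("bedrooms")
    flags["beds_ge_bedrooms"] = int(
        is_number(beds) and is_number(bedrooms) and beds >= bedrooms
    )

    # Regular expression check on the postal code.
    zipcode = rec.get("zipcode")
    flags["zipcode_format"] = int(
        isinstance(zipcode, str) and ZIP_PATTERN.fullmatch(zipcode) is not None
    )

    # Data type consistency: beds must be numeric.
    flags["beds_is_number"] = int(is_number(beds))
    return flags


# Hypothetical records; each row of the profile matrix is one dict of flags.
listings = [
    {"beds": 2, "bedrooms": 1, "zipcode": "94110"},
    {"beds": 1, "bedrooms": 3, "zipcode": "9411A"},
    {"beds": None, "bedrooms": 2, "zipcode": None},
]
profile_matrix = [profile_record(rec) for rec in listings]
```

Each record is thereby reduced to a fixed-length vector of 0/1 outcomes, which is the form a downstream classifier would consume.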

5.4. Data Profiling

- Records with scores at or below the 25th percentile (Q1) were classified as Low quality.

- Records between the 25th and 50th percentiles (Q1–Q2) were classified as Medium quality.

- Records above the 50th percentile (Q2) were classified as High quality.
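A minimal sketch of this percentile-based labeling, using only the Python standard library, might look as follows. The function name is hypothetical, the inclusive quantile method is an assumption, and a score exactly equal to Q2 is treated as Medium here because the text does not state which side of the boundary it falls on.

```python
from statistics import quantiles


def label_by_quartiles(scores):
    """Assign low/medium/high labels from the 25th and 50th percentiles."""
    # quantiles(..., n=4) returns the three cut points Q1, Q2, Q3.
    q1, q2, _ = quantiles(scores, n=4, method="inclusive")
    labels = []
    for score in scores:
        if score <= q1:
            labels.append("low")
        elif score <= q2:  # assumption: Q2 itself counts as medium
            labels.append("medium")
        else:
            labels.append("high")
    return labels, (q1, q2)


# Hypothetical profiling scores.
scores = [10, 20, 30, 40, 50, 60, 70, 80]
labels, (q1, q2) = label_by_quartiles(scores)
```

With these thresholds roughly a quarter of the records fall into each of the Low and Medium tiers and about half into High, so the resulting classes are imbalanced by construction.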

5.5. Model Training

- Decision Trees: Provide hierarchical decision-making through interpretable if-else rules. They use criteria such as Information Gain (based on entropy) or the Gini Index for splitting. Entropy is computed as $H(S) = -\sum_{i=1}^{c} p_i \log_2 p_i$, where $p_i$ is the proportion of samples belonging to class $i$ and $c$ is the number of classes. Alternatively, the Gini Index is computed as $\mathrm{Gini}(S) = 1 - \sum_{i=1}^{c} p_i^2$. Lower Gini or entropy indicates a better split.

- Random Forest: An ensemble method that builds multiple Decision Trees on random subsets of data and features, and averages their predictions. This reduces variance and mitigates overfitting.

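- AdaBoost: A boosting algorithm that increases the weights of misclassified samples in each iteration. The weight of the weak classifier at iteration $t$ is written as $\alpha_t = \frac{1}{2}\ln\left(\frac{1-\epsilon_t}{\epsilon_t}\right)$, where $\epsilon_t$ is the classification error at iteration $t$. Sample weights are updated as $w_i^{(t+1)} = w_i^{(t)} \exp\left(-\alpha_t\, y_i\, h_t(x_i)\right)$, where $y_i$ is the true label and $h_t(x_i)$ is the weak classifier prediction.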

- XGBoost: An optimized gradient boosting framework. It minimizes an objective function comprising the loss and a regularization term, $\mathcal{L} = \sum_{i} l(y_i, \hat{y}_i) + \sum_{k} \Omega(f_k)$, where $l$ is a differentiable loss function (e.g., logistic loss), $f_k$ is the $k$-th tree, and $\Omega$ is a regularization term to control model complexity.

- CatBoost: Specifically designed for categorical features, CatBoost uses ordered boosting and target statistics to prevent overfitting and prediction shift. It efficiently handles categorical variables without explicit one-hot encoding.
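To make the splitting criteria and the AdaBoost update concrete, the following sketch computes entropy, the Gini Index, and one round of AdaBoost reweighting. It assumes the standard binary formulation with labels in {-1, +1}; the final normalization of the weights is a conventional extra step that the description above does not spell out, and all function names are hypothetical.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (base 2) of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def gini(labels):
    """Gini Index of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def adaboost_alpha(error):
    """Weight of a weak classifier with weighted error in (0, 1)."""
    return 0.5 * math.log((1.0 - error) / error)


def adaboost_reweight(weights, y_true, y_pred, alpha):
    """One sample-weight update for labels in {-1, +1}, then normalization."""
    raw = [w * math.exp(-alpha * y * h) for w, y, h in zip(weights, y_true, y_pred)]
    total = sum(raw)
    return [w / total for w in raw]


# Worked example: four equally weighted samples, one misclassified.
y_true = [1, 1, -1, -1]
y_pred = [1, 1, -1, 1]
weights = [0.25] * 4
error = sum(w for w, y, h in zip(weights, y_true, y_pred) if y != h)
alpha = adaboost_alpha(error)
new_weights = adaboost_reweight(weights, y_true, y_pred, alpha)
```

In this example the single misclassified sample ends up carrying half of the total weight, which is what forces the next weak learner to focus on it.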

5.6. Evaluation Metrics

- Accuracy: proportion of correctly classified samples.

- Precision: fraction of true positives among all predicted positives.

- Recall: fraction of true positives among all actual positives.

- F1-score: harmonic mean of precision and recall.

- Support: number of occurrences of each class label in the dataset.

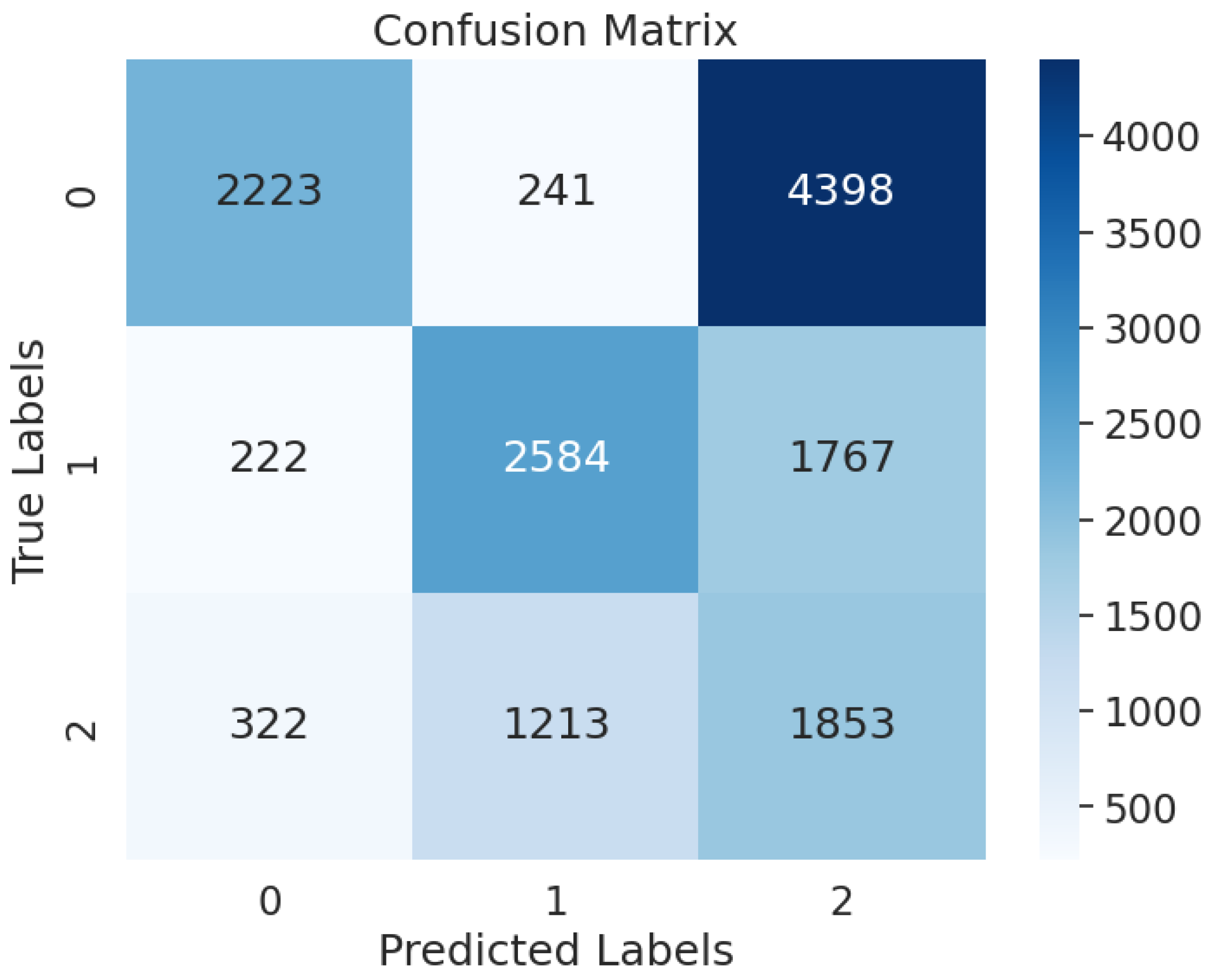

- Confusion Matrix: tabular representation of actual vs. predicted classes, providing insights into classification errors for each category.
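The metrics above all derive from the confusion matrix, as the following sketch shows. It is a plain-Python illustration with a hypothetical function name and toy labels; in practice a library routine would normally be used instead.

```python
def per_class_metrics(y_true, y_pred):
    """Compute accuracy, per-class precision/recall/F1/support, and the confusion matrix."""
    labels = sorted(set(y_true) | set(y_pred))
    # confusion[actual][predicted] = count
    confusion = {a: {p: 0 for p in labels} for a in labels}
    for actual, predicted in zip(y_true, y_pred):
        confusion[actual][predicted] += 1

    report = {}
    for c in labels:
        tp = confusion[c][c]
        predicted_c = sum(confusion[a][c] for a in labels)  # column total
        support = sum(confusion[c].values())                # row total
        precision = tp / predicted_c if predicted_c else 0.0
        recall = tp / support if support else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        report[c] = {"precision": precision, "recall": recall, "f1": f1, "support": support}

    accuracy = sum(confusion[c][c] for c in labels) / len(y_true)
    return accuracy, report, confusion


# Toy example with the three quality tiers.
y_true = ["high", "high", "high", "low", "low", "medium"]
y_pred = ["high", "high", "low", "low", "low", "low"]
accuracy, report, confusion = per_class_metrics(y_true, y_pred)
```

Here the medium class is never predicted, so its precision, recall, and F1 are all reported as zero, while accuracy alone (4 of 6 correct) hides that failure. This is why per-class metrics are reported alongside accuracy.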

6. Experimental Analysis

6.1. Implementation Environment

6.2. Dataset

6.3. Data Preprocessing and Imputation

- Data Inspection: Descriptive statistics and data type summaries were generated to understand the dataset structure (e.g., int64, float64, bool, object). Missingness patterns and anomalies were identified.

- Redundant Column Removal: Irrelevant, error-prone, or non-informative columns were eliminated to reduce noise and enhance model focus. In this context, we operationalize the relevance dimension as the suitability of data on two levels: (i) the extent to which data is actually used and accessed by users, and (ii) the degree to which the data produced aligns with user needs. Attributes that are rarely used, provide little utility to end-users, or fail to match their informational needs are considered less relevant, whereas attributes frequently accessed and directly supporting decision-making are deemed highly relevant.

- Shuffling: The dataset was randomized to mitigate ordering biases and improve model generalization.

- Categorical vs Numerical Classification: Columns were categorized into numerical and categorical features to enable tailored preprocessing strategies.

- Mean Imputation: For numerical attributes, missing values were replaced by the arithmetic mean, calculated as $\bar{x} = \frac{1}{n}\sum_{i=1}^{n} x_i$, where $\bar{x}$ denotes the mean, $x_i$ represents the $i$-th observation, and $n$ is the number of observed values.

- Median Imputation: Missing numerical values were replaced with the median of the respective attribute.

- KNN Imputation: Missing entries were estimated based on the k-nearest neighbors using the Euclidean distance, calculated as $d(p, q) = \sqrt{\sum_{i=1}^{n} (p_i - q_i)^2}$, where $p$ and $q$ denote two data points in an $n$-dimensional space.
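The three imputation strategies can be compared on a toy example. The sketch below is written in plain Python for illustration and is not the library-based implementation used in the experiments; it assumes that only the target column contains missing values (encoded as `None`) and that the KNN estimate is the mean of the neighbors' values.

```python
import math
from statistics import mean, median


def impute_column(values, strategy="mean"):
    """Replace None entries with the mean or median of the observed values."""
    observed = [v for v in values if v is not None]
    fill = mean(observed) if strategy == "mean" else median(observed)
    return [fill if v is None else v for v in values]


def euclidean(p, q):
    """Euclidean distance between two equal-length points."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


def knn_impute(rows, target, k=2):
    """Fill rows[i][target] from the k nearest complete rows (other columns complete)."""
    complete = [r for r in rows if r[target] is not None]
    others = [j for j in range(len(rows[0])) if j != target]
    result = []
    for r in rows:
        filled = list(r)
        if r[target] is None:
            point = [r[j] for j in others]
            nearest = sorted(
                complete, key=lambda c: euclidean(point, [c[j] for j in others])
            )[:k]
            filled[target] = mean(n[target] for n in nearest)
        result.append(filled)
    return result


# Toy data: the second column is missing in the last row.
rows = [[1.0, 10.0], [2.0, 20.0], [10.0, 100.0], [1.5, None]]
knn_filled = knn_impute(rows, target=1, k=2)
mean_filled = impute_column([r[1] for r in rows], strategy="mean")
```

On this data the KNN estimate (15.0) follows the two closest rows, whereas mean imputation is pulled towards the outlying value of 100.0, which illustrates why the choice of strategy can matter.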

6.4. Application of DQMAF on Airbnb Dataset

6.4.1. Feature Engineering

- Categorical Feature Encoding: Categorical columns (e.g., property_type, room_type, bed_type) were transformed using binary or one-hot encoding to facilitate machine learning.

- Statistical Summaries: Descriptive statistics for numerical attributes were computed to identify trends, variability, and outliers.

- Quality Labeling: A target variable, quality, was defined with three classes: high, medium, and low. This label was inferred from profiling scores and utilized for supervised classification.
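For readers unfamiliar with one-hot encoding, a minimal sketch is given below. The `room_type` values and the `prefix=category` column naming are illustrative assumptions, and the function name is hypothetical.

```python
def one_hot_encode(values, prefix):
    """Map each categorical value to a dict of 0/1 indicator columns."""
    categories = sorted(set(values))
    encoded = [
        {f"{prefix}={c}": int(v == c) for c in categories} for v in values
    ]
    return categories, encoded


# Illustrative room_type values.
room_types = ["Private room", "Entire home/apt", "Private room"]
categories, encoded = one_hot_encode(room_types, prefix="room_type")
```

Each encoded row contains exactly one 1, so the original category can always be recovered from the indicator columns.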

6.4.2. Data Profiling

- Completeness Check (Missingness Indicators): Each cell was examined for null values. Presence of data received a weight of 5, while absence was assigned 0.

- Consistency Checks:

  - Cross-Field Validation: Logical relationships between fields (e.g., beds ≥ bedrooms) were enforced; matches received a weight of 2.

  - Regular Expression Validation: Columns such as zipcode and IDs were checked against regex-based format rules.

  - Data Type Consistency: All column values were validated against their expected data types (e.g., integer, string); consistent entries received a weight of 1.

  - Range Consistency: Values for categorical attributes such as city and bed_type were verified against their expected sets of values.

- Profile Matrix Creation: Each column was transformed into a binary representation based on these checks, with cumulative scores computed.

- Quality Classification: Based on total profile weights, data quality was categorized as high, medium, or low.

- New Dataset Formation: The original dataset was transformed into a structured representation with 49 binary profile features and one quality label.
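The aggregation of weighted checks into a total profile score can be sketched as follows, using the weights reported for the five validation families (5, 2, 1, 1, and 2). The family keys, the representation of a record as a list of `(family, passed)` pairs, and the function names are assumptions made for this example.

```python
# Weights per validation family, as reported in the weighting table.
WEIGHTS = {
    "completeness": 5,
    "consistency": 2,
    "format": 1,
    "dtype": 1,
    "range": 2,
}


def profile_score(checks):
    """Sum the weights of all passed checks for one record."""
    return sum(WEIGHTS[family] * int(passed) for family, passed in checks)


def max_score(checks):
    """Highest score the record could reach if every check passed."""
    return sum(WEIGHTS[family] for family, _ in checks)


# Hypothetical outcomes for one record: (validation family, passed?).
checks = [
    ("completeness", 1),
    ("completeness", 0),  # one missing cell
    ("consistency", 1),
    ("format", 1),
    ("dtype", 1),
    ("range", 0),         # value outside the expected set
]
score = profile_score(checks)
ceiling = max_score(checks)
```

Because completeness carries the largest weight, a single missing cell lowers the score more than any other single failed check.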

6.5. Model Training and Evaluation

6.6. Generalizability Across Domains

7. Results and Discussion

7.1. Robustness and Sensitivity Analysis

7.2. Future Work

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Zeng, S.; Jin, W.; Sun, T. The Value of Data in the Digital Economy: Investigating the Economic Consequences of Personal Information Protection. 2025. Available online: https://ssrn.com/abstract=5147162 (accessed on 10 October 2025).

2. Cai, L.; Zhu, Y. The Challenges of Data Quality and Data Quality Assessment in the Big Data Era. Data Sci. J. 2015, 14, 2.

3. Li, G.; Zhou, X.; Cao, L. Machine Learning for Databases. In Proceedings of the AIMLSystems 2021: The First International Conference on AI-ML-Systems, Bangalore, India, 21–23 October 2021; ACM: New York, NY, USA, 2021; pp. 1–2.

4. Warwick, W.; Johnson, S.; Bond, J.; Fletcher, G.; Kanellakis, P. A Framework to Assess Healthcare Data Quality. Eur. J. Soc. Behav. Sci. 2015, 13, 92–98.

5. Priestley, M.; O’Donnell, F.; Simperl, E. A Survey of Data Quality Requirements That Matter in ML Development Pipelines. J. Data Inf. Qual. 2023, 15, 11.

6. Rahman, A.; Smith, D.V.; Timms, G. A Novel Machine Learning Approach Toward Quality Assessment of Sensor Data. IEEE Sens. J. 2014, 14, 1035–1047.

7. Cichy, C.; Rass, S. An Overview of Data Quality Frameworks. IEEE Access 2019, 7, 24634–24648.

8. Tencent Cloud Techpedia. What Is the Difference Between Data Availability and Data Quality? 2025. Available online: https://www.tencentcloud.com/techpedia/108108 (accessed on 2 September 2025).

9. Declerck, J.; Kalra, D.; Vander Stichele, R.; Coorevits, P. Frameworks, Dimensions, Definitions of Aspects, and Assessment Methods for the Appraisal of Quality of Health Data for Secondary Use: Comprehensive Overview of Reviews. JMIR Med. Inform. 2024, 12, e51560.

10. Data Reliability in 2025: Definition, Examples & Tools. 2024. Available online: https://atlan.com/what-is-data-reliability/#:~:text=Data%20reliability%20means%20that%20data,business%20analytics%2C%20or%20public%20policy (accessed on 2 September 2025).

11. The Three Critical Pillars of Data Reliability—Acceldata. 2025. Available online: https://www.acceldata.io/guide/three-critical-pillars-of-data-reliability (accessed on 2 September 2025).

12. He, D.; Liu, X.; Shi, Q.; Zheng, Y. Visual-language reasoning segmentation (LARSE) of function-level building footprint across Yangtze River Economic Belt of China. Sustain. Cities Soc. 2025, 127, 106439.

13. Randell, R.; Alvarado, N.; McVey, L.; Ruddle, R.A.; Doherty, P.; Gale, C.; Mamas, M.; Dowding, D. Requirements for a quality dashboard: Lessons from National Clinical Audits. AMIA Annu. Symp. Proc. 2020, 2019, 735–744.

14. Reimer, A.P.; Milinovich, A.; Madigan, E.A. Data quality assessment framework to assess electronic medical record data for use in research. Int. J. Med. Inform. 2016, 90, 40–47.

15. Janssen, M.; van der Voort, H.; Wahyudi, A. Factors influencing big data decision-making quality. J. Bus. Res. 2017, 70, 338–345.

16. Jerez, J.M.; Molina, I.; García-Laencina, P.J.; Alba, E.; Ribelles, N.; Martín, M.; Franco, L. Missing data imputation using statistical and machine learning methods in a real breast cancer problem. Artif. Intell. Med. 2010, 50, 105–115.

17. Mahdavinejad, M.S.; Rezvan, M.; Barekatain, M.; Adibi, P.; Barnaghi, P.; Sheth, A.P. Machine learning for internet of things data analysis: A survey. Digit. Commun. Netw. 2018, 4, 161–175.

18. Rahm, E.; Do, H.H. Data Cleaning: Problems and Current Approaches. IEEE Data Eng. Bull. 2000, 23, 3–13.

19. Immonen, A.; Paakkonen, P.; Ovaska, E. Evaluating the Quality of Social Media Data in Big Data Architecture. IEEE Access 2015, 3, 2028–2043.

20. Suzuki, K. Pixel-Based Machine Learning in Medical Imaging. Int. J. Biomed. Imaging 2012, 2012, 792079.

21. Dentler, K.; Cornet, R.; Teije, A.t.; Tanis, P.; Klinkenbijl, J.; Tytgat, K.; Keizer, N.d. Influence of data quality on computed Dutch hospital quality indicators: A case study in colorectal cancer surgery. BMC Med. Inform. Decis. Mak. 2014, 14, 32.

22. Marupaka, D. Machine Learning-Driven Predictive Data Quality Assessment in ETL Frameworks. Int. J. Comput. Trends Technol. 2024, 72, 53–60.

23. Frank, E. Machine Learning Models for Data Quality Assessment. EasyChair Preprint 13213, EasyChair. 2024. Available online: https://easychair.org/publications/preprint/cktz (accessed on 10 October 2025).

24. Nelson, G. Data Management Meets Machine Learning. In Proceedings of the SAS Global Forum, Denver, CO, USA, 8–10 April 2018; Available online: https://support.sas.com/resources/papers/proceedings18/ (accessed on 10 October 2025).

25. Zhou, Y.; Tu, F.; Sha, K.; Ding, J.; Chen, H. A Survey on Data Quality Dimensions and Tools for Machine Learning Invited Paper. In Proceedings of the 2024 IEEE International Conference on Artificial Intelligence Testing (AITest), Shanghai, China, 15–18 July 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 120–131.

26. Batini, C.; Scannapieco, M. Data Quality Dimensions. In Data and Information Quality; Springer International Publishing: Cham, Switzerland, 2016; pp. 21–51.

27. Gong, Y.; Liu, G.; Xue, Y.; Li, R.; Meng, L. A survey on dataset quality in machine learning. Inf. Softw. Technol. 2023, 162, 107268.

28. Taleb, I.; Serhani, M.A.; Dssouli, R. Big Data Quality Assessment Model for Unstructured Data. In Proceedings of the 2018 International Conference on Innovations in Information Technology (IIT), Al Ain, United Arab Emirates, 18–19 November 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 69–74.

29. Zhou, L.; Pan, S.; Wang, J.; Vasilakos, A.V. Machine learning on big data: Opportunities and challenges. Neurocomputing 2017, 237, 350–361.

30. Kahn, M.G.; Callahan, T.J.; Barnard, J.; Bauck, A.E.; Brown, J.; Davidson, B.N.; Estiri, H.; Goerg, C.; Holve, E.; Johnson, S.G.; et al. A Harmonized Data Quality Assessment Terminology and Framework for the Secondary Use of Electronic Health Record Data. EGEMs (Gener. Evid. Methods Improv. Patient Outcomes) 2016, 4, 18.

31. Reda, O.; Zellou, A. Assessing the quality of social media data: A systematic literature review. Bull. Electr. Eng. Inform. 2023, 12, 1115–1126.

32. Amat-Lefort, N.; Barravecchia, F.; Mastrogiacomo, L. Quality 4.0: Big data analytics to explore service quality attributes and their relation to user sentiment in Airbnb reviews. Int. J. Qual. Reliab. Manag. 2022, 40, 990–1008.

33. Papastergios, V.; Gounaris, A. Stream DaQ: Stream-First Data Quality Monitoring. arXiv 2025, arXiv:2506.06147.

34. Sarr, D. Towards Explainable Automated Data Quality Enhancement Without Domain Knowledge. arXiv 2024, arXiv:2409.10139.

35. Angelov, P.P.; Soares, E.A.; Jiang, R.; Arnold, N.I.; Atkinson, P.M. Explainable artificial intelligence: An analytical review. WIREs Data Min. Knowl. Discov. 2021, 11, e1424.

36. Costa e Silva, E.; Oliveira, O.; Oliveira, B. Enhancing Real-Time Analytics: Streaming Data Quality Metrics for Continuous Monitoring. In Proceedings of the ICoMS 2024: 2024 7th International Conference on Mathematics and Statistics, Amarante, Portugal, 23–25 June 2024; ACM: New York, NY, USA, 2024; pp. 97–101.

37. Google Colab. 2025. Available online: https://colab.research.google.com/ (accessed on 23 August 2025).

38. Pandas: Python Data Analysis Library. 2025. Available online: https://pandas.pydata.org/ (accessed on 23 August 2025).

39. Scikit-Learn: Machine Learning in Python. 2025. Available online: https://scikit-learn.org/ (accessed on 23 August 2025).

40. Impyute: Missing Data Imputation Library. 2025. Available online: https://impyute.readthedocs.io/en/master/ (accessed on 23 August 2025).

41. Airbnb Price Dataset on Kaggle. 2025. Available online: https://www.kaggle.com/datasets/rupindersinghrana/airbnb-price-dataset (accessed on 23 August 2025).

| Validation | Weight | Threshold / Rule | Rationale |
|---|---|---|---|
| Completeness | 5 | ≥90% non-missing values per attribute | Expert-driven: Completeness is the most critical determinant of reliability and fairness in downstream analytics. |
| Consistency | 2 | Logical rules hold (e.g., beds ≥ bedrooms) | Data-driven: Cross-field errors observed in the Airbnb dataset; weight tuned to reflect their moderate prevalence. |
| Format Validity | 1 | Regex match (e.g., postal codes, IDs) | Trade-off: Structural conformity is important, but syntactic errors alone rarely invalidate analytical utility. |
| Data Type Consistency | 1 | Values match expected types (int, float, categorical) | Expert-driven: Prevents schema drift and parsing errors; low impact on semantic meaning. |
| Range/Domain Validity | 2 | Values fall within expected sets (e.g., city, bed type) | Trade-off: Balances detection of invalid values with tolerance for new/unseen categories. |

| Class | Precision | Recall | F1-Score | Support |
|---|---|---|---|---|
| high | 0.92 | 0.92 | 0.92 | 6862 |
| low | 0.65 | 0.92 | 0.76 | 4573 |
| medium | 0.68 | 0.30 | 0.41 | 3388 |
| macro avg | 0.75 | 0.71 | 0.70 | 14,823 |
| weighted avg | 0.78 | 0.78 | 0.76 | 14,823 |

| Class | Precision | Recall | F1-Score | Support |
|---|---|---|---|---|
| high | 1.00 | 1.00 | 1.00 | 6862 |
| low | 1.00 | 1.00 | 1.00 | 4573 |
| medium | 1.00 | 1.00 | 1.00 | 3388 |
| macro avg | 1.00 | 1.00 | 1.00 | 14,823 |
| weighted avg | 1.00 | 1.00 | 1.00 | 14,823 |

| Class | Precision | Recall | F1-Score | Support |
|---|---|---|---|---|
| high | 1.00 | 1.00 | 1.00 | 6862 |
| low | 1.00 | 1.00 | 1.00 | 4573 |
| medium | 1.00 | 1.00 | 1.00 | 3388 |
| macro avg | 1.00 | 1.00 | 1.00 | 14,823 |
| weighted avg | 1.00 | 1.00 | 1.00 | 14,823 |

| Class | Precision | Recall | F1-Score | Support |
|---|---|---|---|---|
| high | 0.96 | 1.00 | 0.99 | 6862 |
| low | 0.99 | 0.92 | 0.95 | 4573 |
| medium | 0.91 | 0.97 | 0.94 | 3388 |
| macro avg | 0.96 | 0.96 | 0.96 | 14,823 |
| weighted avg | 0.97 | 0.97 | 0.97 | 14,823 |

| Class | Precision | Recall | F1-Score | Support |
|---|---|---|---|---|
| high | 0.80 | 0.32 | 0.46 | 6862 |
| low | 0.64 | 0.57 | 0.60 | 4573 |
| medium | 0.23 | 0.55 | 0.32 | 3388 |
| macro avg | 0.56 | 0.48 | 0.46 | 14,823 |
| weighted avg | 0.62 | 0.45 | 0.47 | 14,823 |

| Model | Baseline Accuracy | Accuracy Range Under ±20% Weight Variation | F1-Score Range |
|---|---|---|---|
| Random Forest | 1.00 | 0.99–1.00 | 0.99–1.00 |
| XGBoost | 1.00 | 0.99–1.00 | 0.99–1.00 |
| CatBoost | 0.97 | 0.96–0.98 | 0.96–0.97 |
| Decision Tree | 0.78 | 0.76–0.79 | 0.75–0.77 |
| AdaBoost | 0.47 | 0.45–0.49 | 0.44–0.46 |

| Model | Mean Accuracy (5 Splits) | Std. Deviation |
|---|---|---|
| Random Forest | 0.999 | 0.001 |
| XGBoost | 0.998 | 0.002 |
| CatBoost | 0.972 | 0.004 |
| Decision Tree | 0.776 | 0.006 |
| AdaBoost | 0.462 | 0.009 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Al-Toq, R.; Almaslukh, A. DQMAF—Data Quality Modeling and Assessment Framework. Information 2025, 16, 911. https://doi.org/10.3390/info16100911
