4.1. Experimental Design and Data Sources

This study systematically evaluates the instructional effectiveness and behavioral impacts of Generative AI in college English classrooms. A non-equivalent pretest–posttest control group design, a type of quasi-experimental method, was employed to approximate causal inference in authentic classroom settings while maintaining control over instructional variables.

Participants were undergraduates from the 2023 cohort (non-English majors) at a key provincial university in China, spanning science and engineering, economics and management, and the humanities. A total of 150 students were randomly assigned to the experimental or control group (n = 75 each) using stratified randomization based on gender and English pretest scores to enhance baseline comparability. Baseline equivalence was checked via independent-samples tests and standardized mean differences; no material imbalance was detected, and balance diagnostics are reported in Table 2. The experiment lasted six weeks, with two sessions per week (two class hours per session; 24 class hours in total). Course content, instructional objectives, and assessment structures were held constant across groups to minimize extraneous interference. All procedures were approved by the institutional ethics committee, and written informed consent was obtained from all participants.
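The stratified randomization and baseline balance check described above can be sketched as follows. This is a minimal illustration, not the study's actual procedure: the record keys (`id`, `gender`, `pretest`), the use of pretest tertiles as score bands, the fixed seed, and the pooled-SD form of the standardized mean difference are all assumptions.

```python
import random
import statistics
from collections import defaultdict


def stratified_assign(students, n_score_bands=3, seed=2023):
    """Randomly assign students to two arms within gender x pretest-band strata.

    Assumption: each student is a dict with hypothetical keys 'id', 'gender',
    and 'pretest'; score bands are quantile cut points of the full sample.
    """
    rng = random.Random(seed)
    scores = sorted(s["pretest"] for s in students)
    # Cut points that split the sorted pretest scores into roughly equal bands.
    cuts = [scores[len(scores) * k // n_score_bands] for k in range(1, n_score_bands)]

    strata = defaultdict(list)
    for s in students:
        band = sum(s["pretest"] >= c for c in cuts)
        strata[(s["gender"], band)].append(s)

    assignment = {}
    exp_first = True  # alternates which arm gets the extra member of odd-sized strata
    for key in sorted(strata):
        members = strata[key][:]
        rng.shuffle(members)
        arms = ("experimental", "control") if exp_first else ("control", "experimental")
        for i, s in enumerate(members):
            assignment[s["id"]] = arms[i % 2]
        if len(members) % 2 == 1:
            exp_first = not exp_first
    return assignment


def standardized_mean_difference(a, b):
    """SMD = (mean_a - mean_b) / sqrt((var_a + var_b) / 2), using sample variances."""
    pooled_sd = ((statistics.variance(a) + statistics.variance(b)) / 2) ** 0.5
    return (statistics.mean(a) - statistics.mean(b)) / pooled_sd
```

Because odd-sized strata alternate which arm receives the extra student, an even total such as 150 splits exactly in half. A common rule of thumb treats |SMD| < 0.1 as negligible imbalance; the section does not state which threshold was applied.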

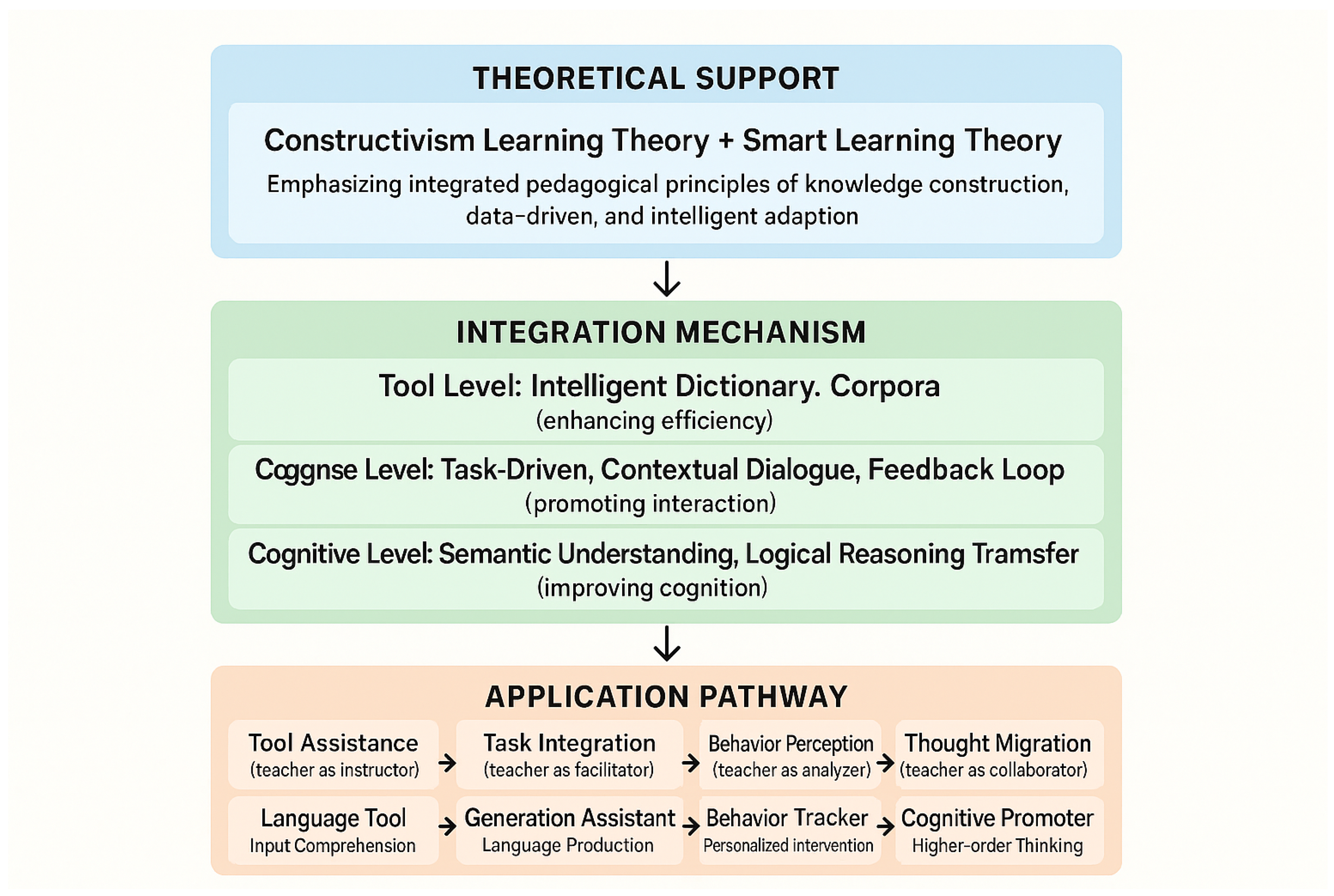

In terms of instructional strategy, the experimental group used a dual-platform Generative AI environment (ChatGPT 4.0 and DeepSeek 3.2, accessed via institutional accounts through the official web interfaces; no third-party plug-ins or external model add-ons were used) with embedded task-driven modules (e.g., AI-guided writing and discourse extension), real-time feedback mechanisms, and behavioral tracking systems.

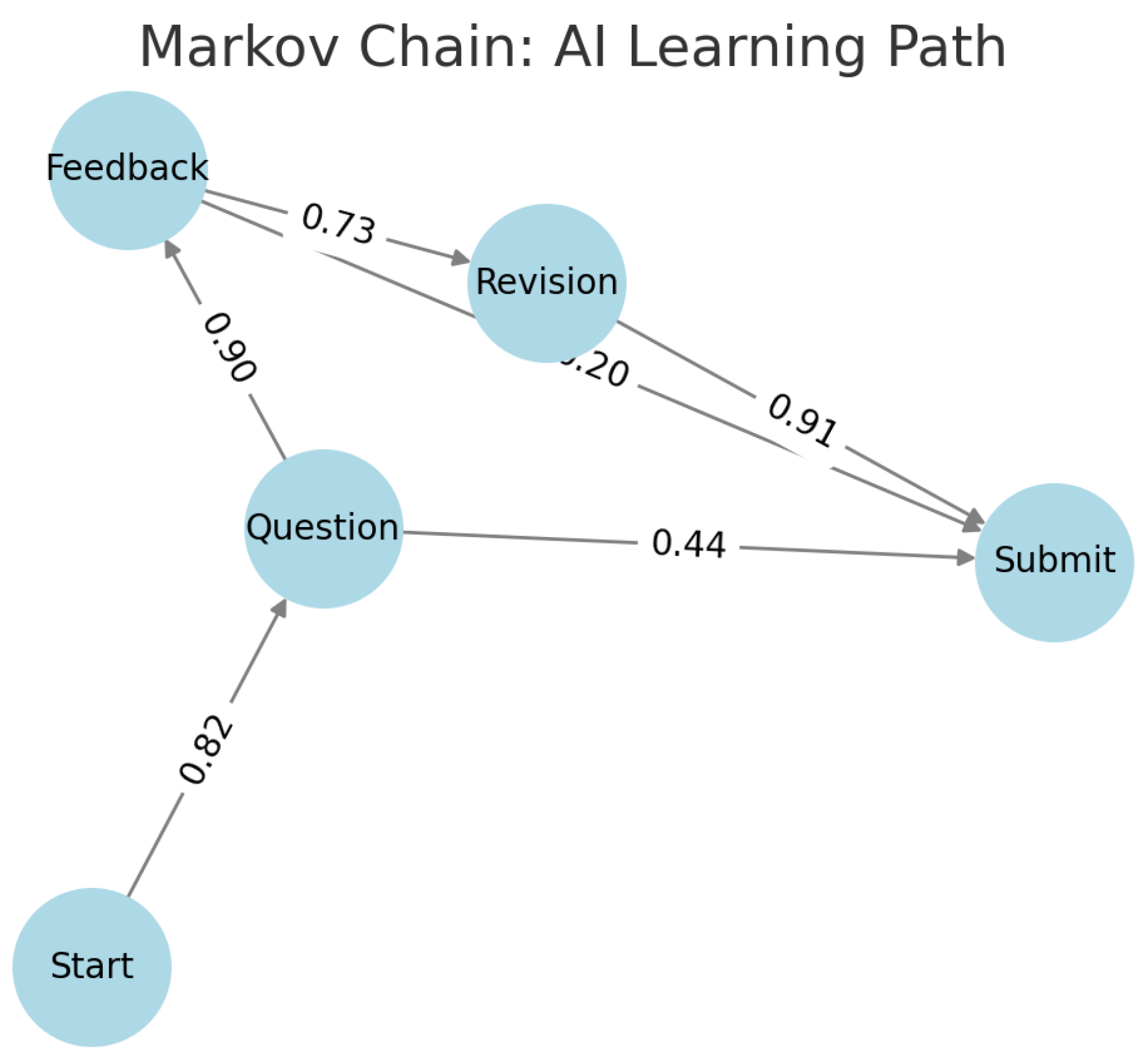

AI implementation details: Students engaged in a structured “draft → feedback → revision” loop: (i) submit a task-specific prompt and generate a first draft; (ii) request and document AI feedback on content, organization, and language form; (iii) revise the draft and append a short reflection note explaining which recommendations were adopted or rejected and why.

To support consistent use, a prompt template library was provided (e.g., genre-audience-purpose specification, style constraints, and content boundaries). Instructors received a short instructional facilitation protocol (pre-brief, live monitoring dashboard, and post-task debrief) to standardize coaching across classes. Responsible-use guidelines were communicated prior to the experiment: AI could be used for idea generation, language polishing, and structural suggestions; full outsourcing of assignments to AI was prohibited. Students were required to attach AI interaction summaries (prompt snapshots or paraphrased logs) to submissions, ensuring auditability and accountability.
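One entry in such a prompt template library might be represented as below. This is a hypothetical sketch: the class name, field names, and example values are illustrative assumptions rather than the study's actual templates, although the fields mirror the genre-audience-purpose specification, style constraints, and content boundaries named above, and the example boundary encodes the responsible-use rule against full outsourcing.

```python
from dataclasses import dataclass, field


@dataclass
class PromptTemplate:
    """Hypothetical entry in a prompt template library (field names are assumptions)."""
    genre: str
    audience: str
    purpose: str
    style_constraints: list[str] = field(default_factory=list)
    content_boundaries: list[str] = field(default_factory=list)

    def render(self, task: str) -> str:
        """Compose a task-specific prompt from the template fields."""
        lines = [
            f"Task: {task}",
            f"Genre: {self.genre}",
            f"Audience: {self.audience}",
            f"Purpose: {self.purpose}",
        ]
        # Optional sections are included only when the template defines them.
        if self.style_constraints:
            lines.append("Style constraints: " + "; ".join(self.style_constraints))
        if self.content_boundaries:
            lines.append("Content boundaries: " + "; ".join(self.content_boundaries))
        return "\n".join(lines)


opinion_essay = PromptTemplate(
    genre="opinion essay",
    audience="university classmates",
    purpose="argue for or against a campus policy",
    style_constraints=["formal register", "about 250 words"],
    content_boundaries=["give feedback and suggestions only; do not write the essay for me"],
)
print(opinion_essay.render("Review my first draft on mandatory library hours."))
```

Keeping the template as structured fields rather than free text makes it straightforward to log which template a student used alongside the AI interaction summary.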

In contrast, the control group followed a traditional teacher-centered approach combining PPT lectures and paper-based exercises, with feedback provided through manual grading and periodic assessments. All classes were taught by instructors with comparable qualifications to minimize teaching-style bias. To monitor treatment fidelity, periodic classroom observations were conducted using a standardized checklist; adherence notes are summarized in Table 3.

To capture both the outcomes and the mechanisms of Generative-AI-assisted instruction, a multi-source data collection design was implemented, integrating quantitative and qualitative, process and outcome, and objective and subjective data.

First, cognitive outcome data were obtained from pretest and posttest English proficiency assessments covering reading comprehension, language usage, and writing. All items and rubrics were developed by a professional test design team and aligned with CEFR levels B1–B2 and China's Standards of English Language Ability (CSE Levels 4–5). Inter-rater calibration was conducted before scoring, and each final score was the mean of three independent raters' scores, assigned under double-blind conditions, to enhance scoring reliability.
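The section states that three raters' scores were averaged but does not name the reliability index reported. As an assumption, the sketch below computes ICC(2,k), the two-way random-effects, absolute-agreement, average-measures intraclass correlation, which matches a design in which the final score is the mean of k raters. The input layout (one row per script, one column per rater) is illustrative.

```python
import statistics


def icc2k(ratings):
    """ICC(2,k): two-way random effects, absolute agreement, mean of k raters.

    `ratings` is a list of rows, one per script, each holding k rater scores.
    """
    n, k = len(ratings), len(ratings[0])
    grand = statistics.fmean(x for row in ratings for x in row)
    row_means = [statistics.fmean(row) for row in ratings]
    col_means = [statistics.fmean(col) for col in zip(*ratings)]

    # Two-way ANOVA sums of squares: scripts (rows), raters (columns), residual.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (ms_rows + (ms_cols - ms_error) / n)


def final_scores(ratings):
    """Final score per script = mean of the independent raters' scores."""
    return [statistics.fmean(row) for row in ratings]
```

Because the absolute-agreement form penalizes systematic rater offsets, a rater who scores every script one point higher lowers the ICC even when the rank order is identical, which is the behavior one wants when averaged scores are compared across groups.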

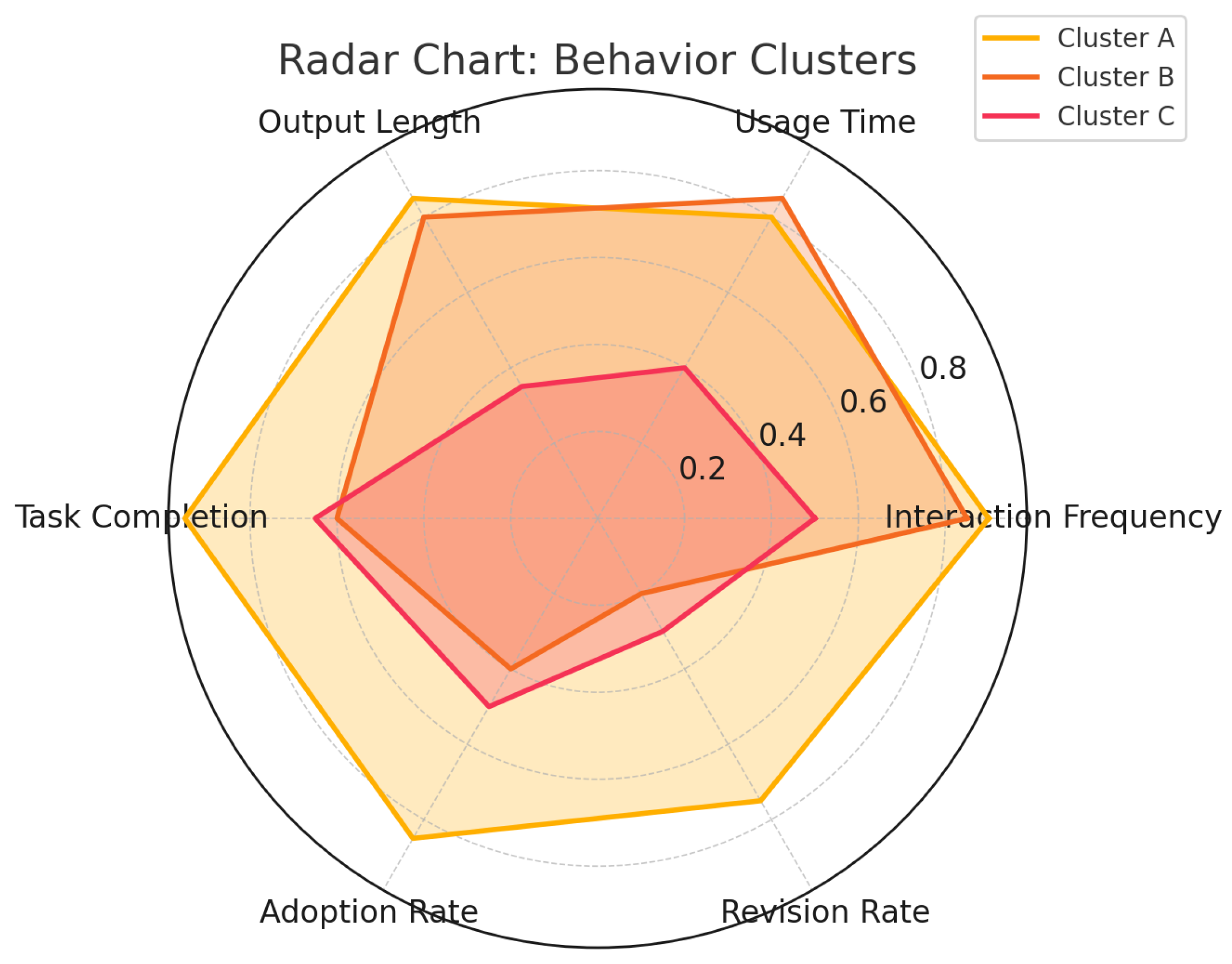

Second, AI platform log data (experimental group only) recorded the full sequence of student–AI interactions. Key indicators included weekly interaction frequency, average output length per task, revision count, and feedback adoption rate (defined as the proportion of actionable AI suggestions incorporated into the revised draft). Additional measures included weekly usage time, self-revision rate (operationalized as the edit distance between drafts), a pathway completeness index (whether the full "draft–feedback–revision–resubmission" cycle was completed), BLEU score, and a lexico–syntactic features index (type–token ratio and clause complexity). All raw logs were anonymized before export by removing personal identifiers such as names, IDs, and IP addresses. Data were stored on secure institutional servers with restricted access and daily backups, in accordance with university data protection policies.
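Several of the log indicators above can be computed with short functions. The sketch below is illustrative only: word-level edit distance normalized by the longer draft, the event names used for the pathway check, and the simple regex tokenizer are assumptions, not the study's documented operationalizations. BLEU and clause complexity are omitted because the section does not specify the reference texts or the parser used.

```python
import re


def levenshtein(a, b):
    """Edit distance (insertions, deletions, substitutions) between two sequences."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, 1):
        cur = [i]
        for j, y in enumerate(b, 1):
            cur.append(min(prev[j] + 1, cur[j - 1] + 1, prev[j - 1] + (x != y)))
        prev = cur
    return prev[-1]


def self_revision_rate(draft, revision):
    """Word-level edit distance normalized by the longer draft (assumed normalization)."""
    old, new = draft.split(), revision.split()
    return levenshtein(old, new) / max(len(old), len(new), 1)


def feedback_adoption_rate(adopted, actionable):
    """Share of actionable AI suggestions incorporated; None if there were none."""
    return adopted / actionable if actionable else None


# Hypothetical event names for the draft-feedback-revision-resubmission cycle.
STAGES = ("draft", "feedback", "revision", "resubmission")


def pathway_complete(events):
    """True if the logged events contain all four stages in order (gaps allowed)."""
    remaining = iter(events)  # `in` consumes the iterator, enforcing order
    return all(stage in remaining for stage in STAGES)


def type_token_ratio(text):
    """Distinct word forms divided by total word tokens (case-insensitive)."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return len(set(tokens)) / len(tokens) if tokens else 0.0
```

A per-task completeness flag like `pathway_complete` could then be averaged over a student's tasks to form an index; how the study aggregated it is not stated.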

Third, in-class behavioral observations were conducted using structured observation sheets to record behaviors such as question frequency, participation, and engagement. Observers rotated across sessions and cross-reviewed notes. Observation prompts were aligned with the facilitation protocol (e.g., evidence of feedback uptake and quality of revision planning) to ensure consistency between intended pedagogy and enacted practices.
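The section notes that observers cross-reviewed notes but does not name an agreement statistic. Assuming two observers coded the same sequence of classroom events into categories, Cohen's kappa is one standard way to quantify their agreement beyond chance; the sketch below uses hypothetical category codes such as "Q" (question), "P" (participation), and "E" (engagement).

```python
from collections import Counter


def cohens_kappa(codes_a, codes_b):
    """Cohen's kappa for two observers coding the same sequence of events."""
    if len(codes_a) != len(codes_b) or not codes_a:
        raise ValueError("need two non-empty code lists of equal length")
    n = len(codes_a)
    observed = sum(a == b for a, b in zip(codes_a, codes_b)) / n
    freq_a, freq_b = Counter(codes_a), Counter(codes_b)
    # Chance agreement from each observer's marginal category frequencies.
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a.keys() | freq_b.keys()) / n**2
    if expected == 1:
        return 1.0  # both observers used one identical category throughout
    return (observed - expected) / (1 - expected)


print(cohens_kappa(["Q", "P", "E", "Q"], ["Q", "P", "E", "Q"]))
```

Kappa corrects raw percent agreement for the agreement expected by chance, which matters when one behavior category dominates the observation sheets.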

Fourth, motivation and perception surveys were administered in Weeks 3 and 6 using a 5-point Likert-scale instrument titled "Generative AI Learning Experience and Motivation," which measures five dimensions. Scale items, factor structure, and reliability coefficients are reported in Table 4. Administration procedures were identical across groups to minimize measurement bias.
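Table 4 reports reliability coefficients without naming the index here; Cronbach's alpha computed per dimension is the usual choice for Likert subscales and is assumed in the sketch below. The reverse-scoring helper assumes some items are negatively worded, which the section does not state. Factor structure is not covered.

```python
import statistics


def reverse_score(x, scale_max=5, scale_min=1):
    """Reverse-code a negatively worded Likert item (5 -> 1, 4 -> 2, ...)."""
    return scale_max + scale_min - x


def cronbach_alpha(responses):
    """Cronbach's alpha for one dimension; one row of item scores per student."""
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Sum of the sample variances of each item (columns of the response matrix).
    item_variance = sum(statistics.variance(item) for item in zip(*responses))
    # Sample variance of each student's total score on the dimension.
    total_variance = statistics.variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_variance / total_variance)
```

Alpha rises as items covary more strongly relative to their individual variances; running it separately for the Week 3 and Week 6 administrations would show whether internal consistency held across time points.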

Finally, semi-structured interviews were conducted with instructors and with a purposive subsample of students selected via maximum-variation sampling across behavioral clusters. The interview protocol covered four domains: (i) usefulness and limitations of AI feedback; (ii) changes in writing process and self-regulation; (iii) experiences with the draft–feedback–revision cycle; and (iv) responsible AI use and academic integrity. Interviews lasted approximately 20–30 min, were audio-recorded with participants' consent, and were subsequently transcribed.
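Maximum-variation sampling within behavioral clusters can be approximated by taking students at both extremes of a chosen indicator in each cluster. The sketch below is hypothetical: the record keys (`id`, `cluster`), the choice of a single ranking metric such as feedback adoption rate, and the per-cluster quota are assumptions, since the section does not describe the selection rule in detail.

```python
from collections import defaultdict


def max_variation_sample(students, metric, per_cluster=2):
    """Within each cluster, alternately pick the lowest and highest scorers on `metric`.

    Assumption: each student is a dict with hypothetical keys 'id', 'cluster', and `metric`.
    """
    by_cluster = defaultdict(list)
    for s in students:
        by_cluster[s["cluster"]].append(s)

    chosen = []
    for cluster in sorted(by_cluster):
        ranked = sorted(by_cluster[cluster], key=lambda s: s[metric])
        lo, hi = 0, len(ranked) - 1
        picks, take_low = [], True
        # Work inward from both ends so each cluster contributes contrasting cases.
        while len(picks) < per_cluster and lo <= hi:
            if take_low:
                picks.append(ranked[lo]["id"])
                lo += 1
            else:
                picks.append(ranked[hi]["id"])
                hi -= 1
            take_low = not take_low
        chosen.extend(picks)
    return chosen
```

Drawing from both ends of each cluster keeps the interview pool from collapsing onto typical cases, which is the point of maximum-variation sampling.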

Academic integrity and ethical safeguards. Students signed an acceptable-use statement clarifying permitted and prohibited AI uses, and instructors verified consistency across drafts, AI summaries, and final submissions. Any concerns were addressed through formative feedback rather than punitive measures during the study. Informed consent was obtained prior to participation, and privacy safeguards for log data were strictly enforced, in line with COPE/APA ethical guidelines.

This five-source framework enabled a comprehensive assessment of both cognitive outcomes and behavioral–cognitive mechanisms under AI-supported learning, while ensuring transparency regarding platform implementation, task design, interview protocols, and ethical compliance.

4.2. Analysis Methods

To evaluate the effectiveness of Generative-AI-assisted instruction, this study employed a mixed-methods approach that integrated statistical inference, behavioral modeling, and structural modeling.