From Resilience to Cognitive Adaptivity: Redefining Human–AI Cybersecurity for Hard-to-Abate Industries in the Industry 5.0–6.0 Transition

Abstract

1. Introduction

- RQ1: How do human factors in cybersecurity evolve during the transition from Industry 5.0 to Industry 6.0, particularly in hard-to-abate industries?

- RQ2: What distinguishes cognitive adaptivity from traditional resilience approaches in addressing behavioral cybersecurity vulnerabilities?

- RQ3: How can organizations in hard-to-abate industries implement cognitive adaptivity frameworks to enhance both their cybersecurity posture and their operational sustainability?

2. Theoretical Background

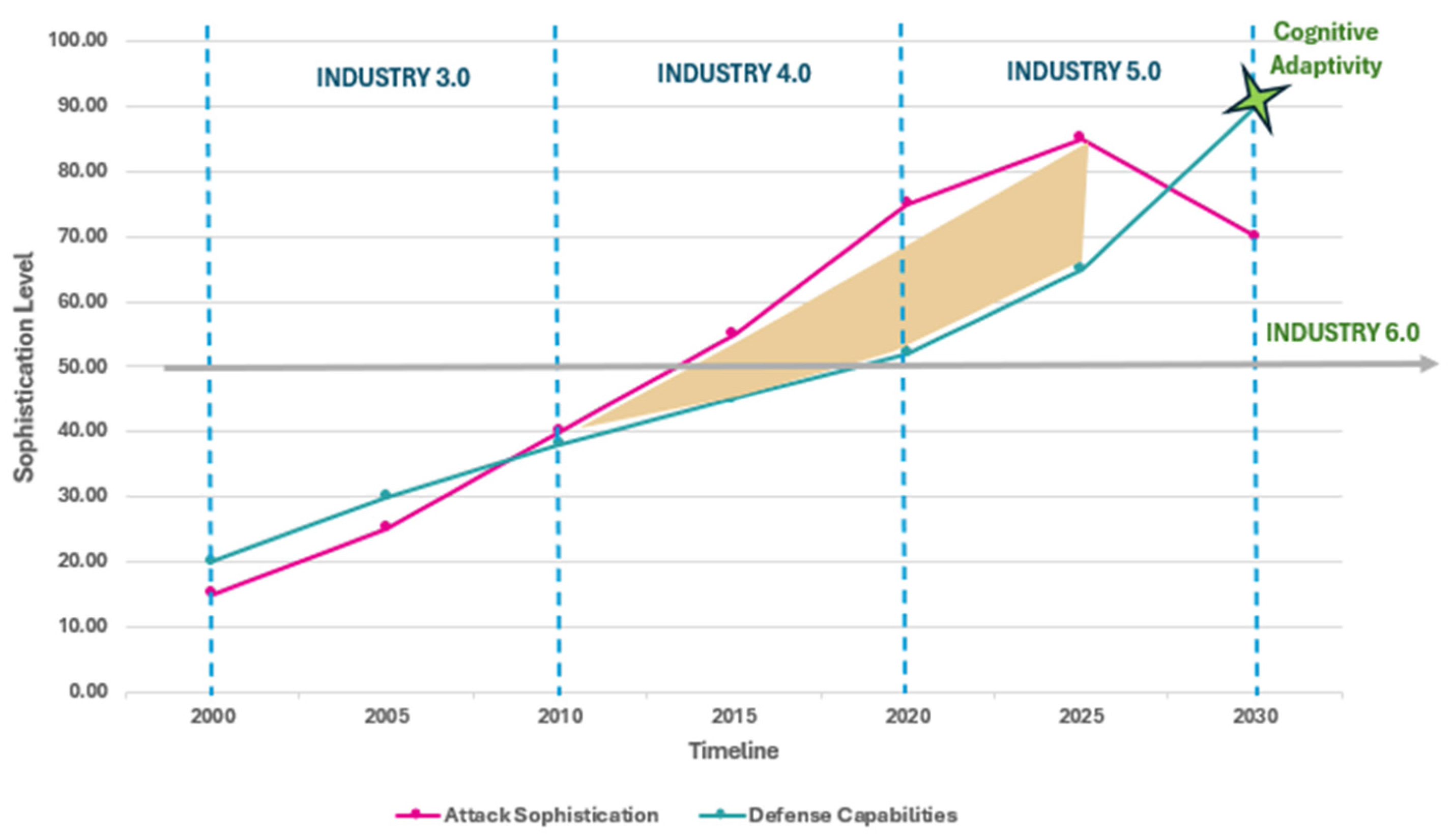

2.1. From Industry 3.0 to Industry 6.0: The Evolution of Human-Technology Interaction

2.2. Human Factors in Cybersecurity: Beyond Technical Vulnerabilities

2.3. From Resilience to Cognitive Adaptivity: A Theoretical Framework

3. Methodological Approach

3.1. Research Design and Philosophical Foundations

3.2. Data Collection Strategy

3.2.1. Primary Data Collection: Semi-Structured Interviews

Sampling Strategy and Participant Selection

- Tile Manufacturers (n = 67): Including production managers (n = 23), IT/cybersecurity personnel (n = 19), quality control managers (n = 15), and senior executives (n = 10)

- Raw Material Suppliers (n = 5): Supply chain managers and sustainability officers

- Glaze and Ink Producers (n = 6): R&D managers and production supervisors

- Machinery Manufacturers (n = 5): Technical sales managers and IoT implementation specialists

- Industry Associations (n = 3): Policy analysts and member services coordinators
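Summing the strata gives the overall sample size, which the list implies but does not state. The short Python check below totals the reported counts, expresses each stakeholder category as a share of the sample, and confirms that the four tile manufacturer roles add up to n = 67. The dictionary and function names are illustrative only.

```python
# Participant counts per stakeholder category, as reported in the sampling list.
SAMPLE = {
    "Tile Manufacturers": 67,
    "Raw Material Suppliers": 5,
    "Glaze and Ink Producers": 6,
    "Machinery Manufacturers": 5,
    "Industry Associations": 3,
}

# Role breakdown reported for the tile manufacturer stratum.
TILE_ROLES = {
    "Production managers": 23,
    "IT/cybersecurity personnel": 19,
    "Quality control managers": 15,
    "Senior executives": 10,
}


def sample_summary(sample: dict[str, int]) -> tuple[int, dict[str, float]]:
    """Return the total sample size and each category's share in percent (1 decimal)."""
    total = sum(sample.values())
    shares = {name: round(100 * n / total, 1) for name, n in sample.items()}
    return total, shares


total, shares = sample_summary(SAMPLE)
# Consistency check: the role breakdown should add up to the stratum size.
assert sum(TILE_ROLES.values()) == SAMPLE["Tile Manufacturers"]
print(total, shares["Tile Manufacturers"])  # 86 77.9
```

The tally makes the sample's composition explicit: tile manufacturers account for roughly 78% of the 86 participants, so findings for the other stakeholder categories rest on much smaller groups.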

Interview Protocol Development

- Organizational Context (10–15 min): Participant background, organizational structure, digital transformation timeline, and current cybersecurity posture

- Human Factors in Cybersecurity (20–25 min): Experiences with social engineering, behavioral vulnerabilities, human–AI interaction challenges, and training effectiveness

- Evolutionary Perspectives (15–20 min): Changes in cybersecurity threats and responses across the Industry 3.0–6.0 transition, adaptation strategies, and learning mechanisms

- Future Orientations (10–15 min): Expectations for Industry 6.0 development, cognitive adaptivity concepts, and implementation challenges
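Adding the section timings gives the planned length of a complete interview. The Python sketch below sums the lower and upper bounds separately, assuming every section is covered within its stated range; the names are illustrative.

```python
# Planned duration ranges (minutes) for each protocol section, as listed above.
SEGMENTS = {
    "Organizational Context": (10, 15),
    "Human Factors in Cybersecurity": (20, 25),
    "Evolutionary Perspectives": (15, 20),
    "Future Orientations": (10, 15),
}


def total_duration(segments: dict[str, tuple[int, int]]) -> tuple[int, int]:
    """Sum the lower and upper bounds separately to get the overall range."""
    low = sum(lo for lo, _ in segments.values())
    high = sum(hi for _, hi in segments.values())
    return low, high


print(total_duration(SEGMENTS))  # (55, 75)
```

On this basis a full interview was planned to last roughly 55 to 75 minutes, with the human factors section taking the largest single block.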

Interview Execution and Quality Assurance

- Bracketing techniques to minimize researcher bias through explicit acknowledgment of prior assumptions

- Member checking with 25% of participants to verify interpretation accuracy

- Peer debriefing sessions following each interview batch to identify emerging patterns and potential analytical blind spots

- Saturation monitoring, tracking the emergence of new themes and concepts to determine when data collection was sufficient
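The section does not specify the stopping rule used for saturation monitoring. One common operationalization, sketched below in Python as an assumption rather than the authors' procedure, declares saturation once a fixed number of consecutive interviews (the hypothetical `window` parameter) contribute no codes that have not already been seen. The example code labels are invented.

```python
from typing import Optional, Sequence, Set


def saturation_point(coded_interviews: Sequence[Set[str]], window: int = 3) -> Optional[int]:
    """Return the 1-based position of the interview at which saturation is
    declared, i.e. the last of `window` consecutive interviews that added no
    previously unseen codes; return None if that never happens."""
    if window < 1:
        raise ValueError("window must be at least 1")
    seen: Set[str] = set()
    run = 0  # consecutive interviews contributing no new codes
    for position, codes in enumerate(coded_interviews, start=1):
        new_codes = codes - seen
        seen |= codes
        run = 0 if new_codes else run + 1
        if run >= window:
            return position
    return None


interviews = [
    {"phishing", "training"},
    {"phishing", "automation bias"},
    {"trust calibration"},
    {"trust calibration", "phishing"},
    {"training"},
    {"automation bias"},
]
print(saturation_point(interviews))            # 6
print(saturation_point(interviews, window=4))  # None
```

The window size is a design choice: a larger window makes the saturation claim more conservative but requires more interviews before data collection can stop.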

Ethical Considerations and Data Protection

- Anonymization protocols removing all identifying information from transcripts

- Aggregate reporting ensuring that individual responses could not be traced to specific organizations

- Sensitive information exclusion, allowing participants to designate certain information as off-record

- Data retention limits, with automatic deletion of identifying information after two years
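The section states the data-protection rules but not how they were applied. The Python sketch below illustrates two of them under explicit assumptions: pseudonymization by replacing a hypothetical mapping of identifying strings (participant and company names) with neutral placeholders, and a two-year retention check counted from an assumed collection date. The function names and the `identifiers` mapping are illustrative, not part of the study's tooling; note also that string replacement alone does not remove indirect identifiers such as job titles combined with plant locations.

```python
import re
from datetime import date


def pseudonymize(text: str, identifiers: dict[str, str]) -> str:
    """Replace each identifying string (whole words, case-insensitive) with its placeholder.
    Longer identifiers are replaced first so that overlapping names are handled correctly."""
    for name in sorted(identifiers, key=len, reverse=True):
        placeholder = identifiers[name]
        pattern = r"\b" + re.escape(name) + r"\b"
        # A function replacement avoids backslash interpretation in the placeholder.
        text = re.sub(pattern, lambda _m, p=placeholder: p, text, flags=re.IGNORECASE)
    return text


def deletion_due(collected: date, years: int = 2) -> date:
    """Date on which identifying data must be deleted (same calendar day, `years` later)."""
    try:
        return collected.replace(year=collected.year + years)
    except ValueError:  # 29 February collected, non-leap target year
        return collected.replace(year=collected.year + years, day=28)


def must_delete(collected: date, today: date) -> bool:
    """True once the retention period has elapsed."""
    return today >= deletion_due(collected)


raw = "Maria (Acme Tiles) met Mariano; acme tiles agreed."
print(pseudonymize(raw, {"Maria": "[P-01]", "Acme Tiles": "[ORG-A]"}))
# [P-01] ([ORG-A]) met Mariano; [ORG-A] agreed.
print(deletion_due(date(2024, 2, 29)))  # 2026-02-28
```

Word-boundary matching keeps "Mariano" intact while "Maria" is replaced; a production pipeline would still need manual review for indirect identifiers.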

3.2.2. Secondary Data Collection

Industry Reports and White Papers

- Annual cybersecurity reports from major ceramics industry associations

- Technology adoption surveys from manufacturing consultancies

- Threat intelligence reports from industrial cybersecurity vendors

- Digital transformation case studies from leading ceramic manufacturers

Regulatory and Policy Documents

- European Union cybersecurity directives and implementation guidance

- Corporate Sustainability Reporting Directive (CSRD) requirements and industry-specific sustainability reporting standards

- National Industry 4.0 strategy documents from major ceramic-producing countries

- Insurance industry risk assessments for manufacturing cybersecurity

Academic and Technical Literature

- Peer-reviewed articles on industrial cybersecurity and human factors (2020–2024)

- Conference proceedings from manufacturing technology and cybersecurity conferences

- Technical standards documents for industrial IoT and cyber-physical systems

- Dissertation research on related topics from European technical universities

3.3. Data Analysis Approach

3.3.1. Qualitative Data Analysis

Multi-AI Collaborative Analysis Protocol

- Primary Thematic Analysis (ChatGPT-5): Custom prompts were developed to identify recurring themes, behavioral patterns, and cybersecurity vulnerabilities across transcripts. ChatGPT-5’s large context window enabled analysis of complete interviews while maintaining thematic consistency.

- Conceptual Validation (Claude-3.5): Claude was employed for deeper conceptual analysis, particularly for identifying theoretical connections and validating the emergence of the cognitive adaptivity framework. Its analytical capabilities proved valuable for connecting empirical observations to theoretical constructs.

- Comparative Analysis (Microsoft Copilot): Copilot’s integration with enterprise search capabilities enabled cross-referencing of interview findings with secondary data sources, identifying convergences and divergences between stakeholder perceptions and documented industry trends.

Quality Assurance and Human Oversight

- Inter-AI Validation: Each transcript underwent analysis by at least two different AI models, with outputs compared for consistency and completeness

- Human Verification: Two independent researchers validated all AI-generated codes, achieving 89% agreement on thematic categorizations

- Triangulation Protocol: AI-identified patterns were systematically cross-checked against secondary data sources and existing literature
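Two different agreement measures are implied here: overlap between the code sets produced by two AI models, and agreement between the two human validators. The Python sketch below computes Jaccard overlap for the former and both raw percent agreement and Cohen's kappa for the latter. If the reported 89% is raw percent agreement, note that it does not correct for agreement expected by chance, which kappa does. The category labels in the example are invented for illustration.

```python
from collections import Counter
from typing import Hashable, Sequence, Set


def jaccard(codes_a: Set[str], codes_b: Set[str]) -> float:
    """Overlap between two code sets (1.0 = identical, 0.0 = disjoint)."""
    if not codes_a and not codes_b:
        return 1.0
    return len(codes_a & codes_b) / len(codes_a | codes_b)


def percent_agreement(r1: Sequence[Hashable], r2: Sequence[Hashable]) -> float:
    """Share of items that two raters placed in the same category."""
    if len(r1) != len(r2) or not r1:
        raise ValueError("ratings must be non-empty and of equal length")
    return sum(a == b for a, b in zip(r1, r2)) / len(r1)


def cohens_kappa(r1: Sequence[Hashable], r2: Sequence[Hashable]) -> float:
    """Chance-corrected agreement between two raters."""
    p_o = percent_agreement(r1, r2)
    n = len(r1)
    c1, c2 = Counter(r1), Counter(r2)
    # Expected agreement if both raters assigned categories independently
    # at their observed marginal rates.
    p_e = sum(c1[k] * c2[k] for k in c1.keys() | c2.keys()) / (n * n)
    if p_e == 1.0:
        return 1.0  # both raters used one identical category throughout; kappa undefined
    return (p_o - p_e) / (1 - p_e)


coder_1 = ["trust", "training", "trust", "bias"]
coder_2 = ["trust", "training", "bias", "bias"]
print(percent_agreement(coder_1, coder_2))  # 0.75
print(round(cohens_kappa(coder_1, coder_2), 3))  # 0.636
print(round(jaccard({"phishing", "trust"}, {"trust", "automation bias"}), 3))  # 0.333
```

In the example the coders agree on three of four items (75%), but kappa is lower (about 0.64) because some of that agreement would be expected by chance given how often each coder used each category.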

Prompt Engineering for Domain Specificity

Custom prompts were developed for cybersecurity and industrial transition analysis, including:

- Industry-specific terminology recognition (ceramic manufacturing processes, cybersecurity threats)

- Behavioral pattern identification in human–AI interaction contexts

- Temporal analysis across the Industry 3.0–6.0 transitions
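The actual prompts are not reproduced in this section. As a purely hypothetical illustration of how the three prompt components listed above could be combined, the Python sketch below assembles a coding prompt from a domain glossary, a behavioral-pattern instruction, and the four industrial eras. The glossary entries, wording, and function name are assumptions, not the authors' prompts.

```python
# Hypothetical era labels and template; the authors' actual prompts are not published here.
ERAS = ("Industry 3.0", "Industry 4.0", "Industry 5.0", "Industry 6.0")


def build_coding_prompt(transcript: str, glossary: dict[str, str],
                        eras: tuple[str, ...] = ERAS) -> str:
    """Assemble a domain-specific qualitative coding prompt for one transcript."""
    glossary_lines = "\n".join(
        f"- {term}: {meaning}" for term, meaning in sorted(glossary.items())
    )
    return (
        "You are assisting with qualitative coding of an interview transcript "
        "from the ceramic tile value chain.\n\n"
        f"Domain glossary:\n{glossary_lines}\n\n"
        "Tasks:\n"
        "1. Tag ceramic process terms and cybersecurity threats using the glossary.\n"
        "2. Identify behavioral patterns in human-AI interaction "
        "(for example over-reliance or distrust of AI recommendations).\n"
        f"3. Assign each cybersecurity-relevant passage to one era: {', '.join(eras)}.\n"
        "4. Quote the supporting passage verbatim for every code.\n\n"
        f"Transcript:\n{transcript}"
    )


prompt = build_coding_prompt(
    "Operator: we followed the kiln AI setpoint even though the HMI looked odd.",
    {"kiln": "high-temperature firing furnace", "HMI": "human-machine interface"},
)
print(prompt)
```

Asking the model to quote supporting passages verbatim is one way to make AI-generated codes checkable by the human validators described above.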

Methodological Transparency

3.3.2. Mixed-Methods Integration

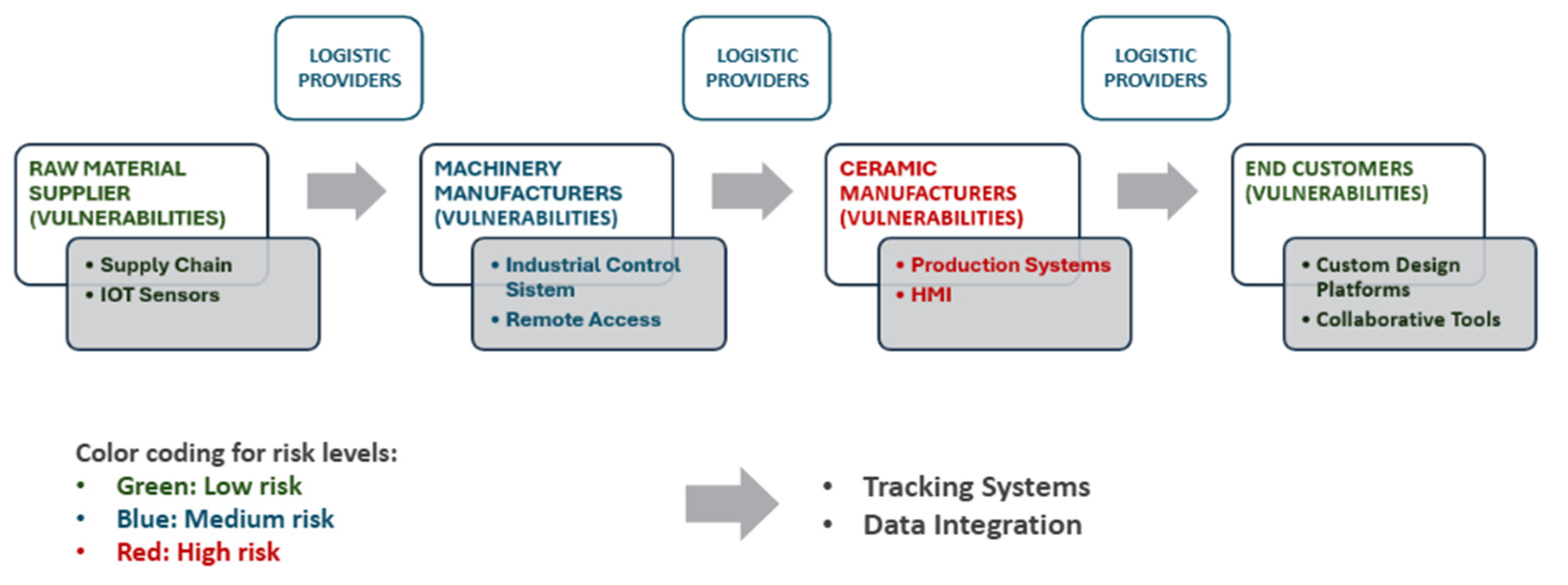

4. The Ceramic Value Chain and Cybersecurity Challenges

4.1. Industry 3.0–4.0: Foundation of Digital Vulnerabilities

4.2. Industry 5.0: Human-Centric Vulnerabilities and Collaborative Risks

4.3. Industry 6.0: Cognitive Ecosystems and Adaptive Threats

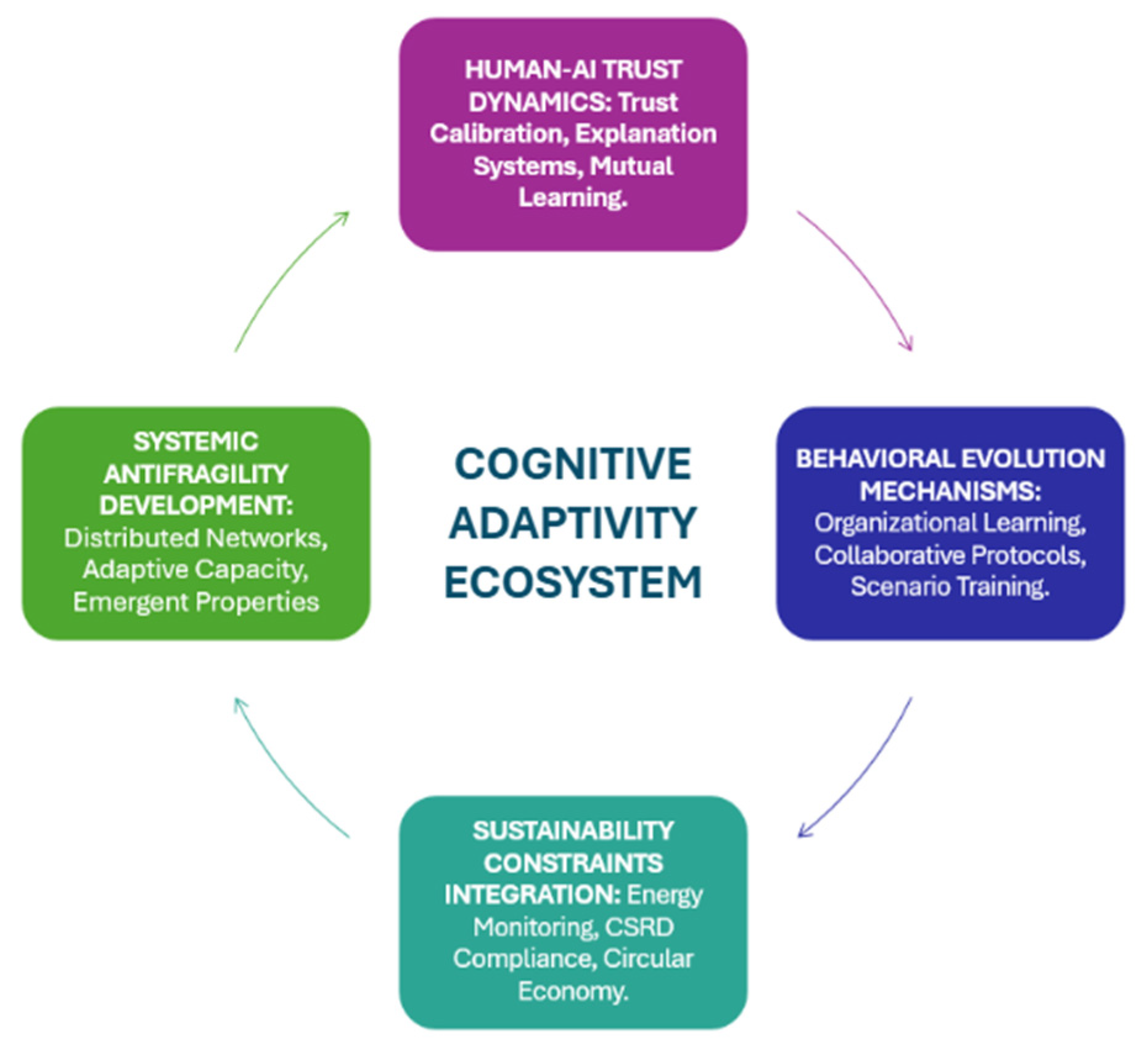

5. Conceptual Model: Cognitive Adaptivity in Hard-to-Abate Industries

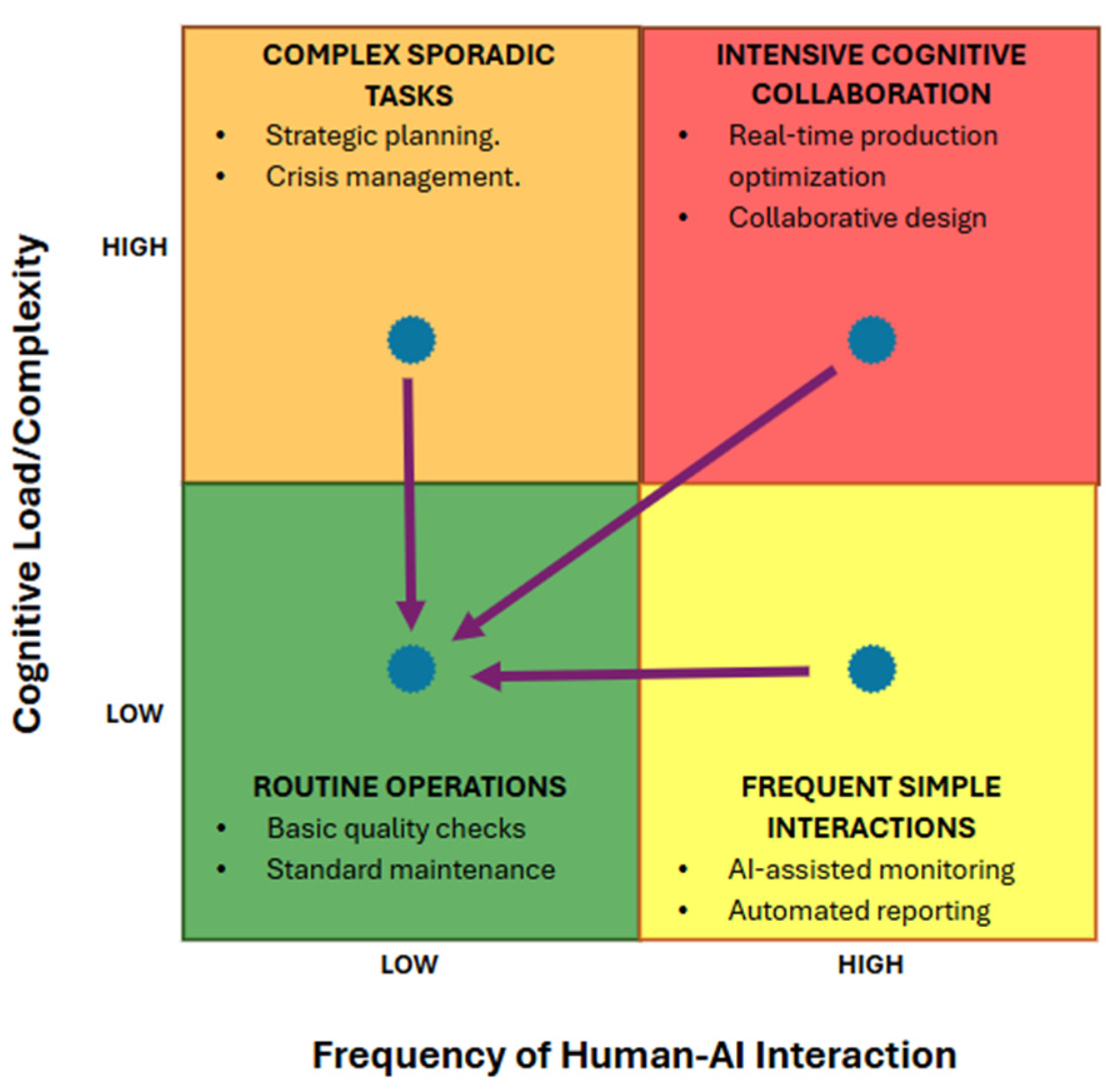

5.1. Human–AI Trust Dynamics

5.2. Behavioral Evolution Mechanisms

5.3. Sustainability Constraints Integration

5.4. Systemic Antifragility Development

5.5. Implementation in the Ceramic Value Chain

5.6. Quantitative Representation of Adaptive Dynamics

6. Discussion

6.1. Theoretical Contributions and Distinctions

6.2. Implications for Hard-to-Abate Industries

6.3. Practical Implementation Considerations

6.4. Limitations and Boundary Conditions

7. Conclusions and Future Research

7.1. Theoretical Contributions

7.2. Practical Implications

7.3. Limitations and Future Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| INDUSTRIAL ERA | PRIMARY THREAT CATEGORIES | HUMAN FACTOR VULNERABILITIES | DOMINANT RESPONSE APPROACH | ATTACK SUCCESS RATE (%) |
|---|---|---|---|---|
| Industry 3.0 (1970–2010) | Basic malware, unauthorized access, data theft | Limited interaction, operator error | Technical controls, access management | 15–20 |
| Industry 4.0 (2011–2020) | IoT exploitation, supply chain attacks, data manipulation | Cognitive overload, automation bias, complexity confusion | Hybrid technical-human controls | 25–35 |
| Industry 5.0 (2021–2025) | Advanced social engineering, AI-assisted phishing, collaborative system manipulation | Trust exploitation, human–AI miscalibration, collaborative vulnerabilities | Human-centric security, behavioral training | 40–50 |
| Industry 6.0 (2025+) | Deepfakes, cognitive manipulation, autonomous attack systems, learning system poisoning | Symbiotic dependencies, cognitive adaptation exploitation | Cognitive adaptivity frameworks | 20–30 |

| STAKEHOLDER CATEGORY | INDUSTRY 4.0 ERA (2015–2020) | INDUSTRY 5.0 ERA (2021–2024) | COGNITIVE LOAD IMPACT | RECOVERY IMPROVEMENT WITH ADAPTIVITY |
|---|---|---|---|---|
| Tile Manufacturers | Incidents: 24/year, Cost: €180K avg | Incidents: 16/year, Cost: €320K avg | High (complex HMI systems) | 65% faster recovery |
| Raw Material Suppliers | Incidents: 8/year, Cost: €45K avg | Incidents: 12/year, Cost: €85K avg | Medium (supply chain integration) | 45% faster recovery |
| Glaze/Ink Producers | Incidents: 6/year, Cost: €65K avg | Incidents: 10/year, Cost: €120K avg | High (R&D system targets) | 70% faster recovery |
| Machinery Manufacturers | Incidents: 12/year, Cost: €95K avg | Incidents: 8/year, Cost: €150K avg | Medium (technical expertise buffer) | 50% faster recovery |
| Industry Associations | Incidents: 3/year, Cost: €25K avg | Incidents: 5/year, Cost: €40K avg | Low (shared intelligence benefits) | 80% faster recovery |
| Total Industry Impact | €2.1M/year average | €3.8M/year average | - | Projected 60% cost reduction |
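The stakeholder table reports both incident frequency and average cost for each era; computing relative changes makes the pattern explicit. The Python sketch below takes the figures exactly as printed and makes no assumption about whether "Cost" is per incident or per year; the dictionary names are illustrative.

```python
# (Industry 4.0 era value, Industry 5.0 era value), taken from the stakeholder table.
INCIDENTS_PER_YEAR = {
    "Tile Manufacturers": (24, 16),
    "Raw Material Suppliers": (8, 12),
    "Glaze/Ink Producers": (6, 10),
    "Machinery Manufacturers": (12, 8),
    "Industry Associations": (3, 5),
}
AVG_COST_K_EUR = {
    "Tile Manufacturers": (180, 320),
    "Raw Material Suppliers": (45, 85),
    "Glaze/Ink Producers": (65, 120),
    "Machinery Manufacturers": (95, 150),
    "Industry Associations": (25, 40),
}


def pct_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, in percent."""
    if before == 0:
        raise ValueError("baseline must be non-zero")
    return 100 * (after - before) / before


for name, (inc_before, inc_after) in INCIDENTS_PER_YEAR.items():
    cost_before, cost_after = AVG_COST_K_EUR[name]
    print(f"{name}: incidents {pct_change(inc_before, inc_after):+.1f}%, "
          f"average cost {pct_change(cost_before, cost_after):+.1f}%")

# Total industry impact row: EUR 2.1M -> EUR 3.8M per year.
print(f"Total: {pct_change(2.1, 3.8):+.1f}%")  # Total: +81.0%
```

For tile manufacturers and machinery manufacturers, incidents fall by about a third while the average cost still rises (by roughly 78% and 58%, respectively), and the reported total impact grows by about 81% between the two eras.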

| FRAMEWORK DIMENSION | TRADITIONAL RESILIENCE | COGNITIVE ADAPTIVITY | KEY PERFORMANCE INDICATORS | IMPLEMENTATION TIMELINE |
|---|---|---|---|---|
| Learning Mechanism | Post-incident analysis, lessons learned documents | Continuous behavioral adaptation, real-time insight extraction | Learning velocity (insights/month), knowledge retention rate | 6–12 months |
| Threat Anticipation | Reactive detection, signature-based systems | Proactive behavioral modeling, pattern recognition | Prediction accuracy (%), early warning effectiveness | 12–18 months |
| Human–AI Interaction | Fixed roles, hierarchical decision-making | Dynamic collaboration, mutual adaptation | Trust calibration index, symbiotic efficiency score | 18–24 months |
| System Response | Recovery to baseline functionality | Performance enhancement through adversity | Adaptivity coefficient, capability growth rate | 24–36 months |
| Knowledge Management | Centralized documentation, training programs | Distributed learning networks, experiential knowledge | Knowledge diffusion speed, cross-organizational learning | 12–24 months |
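The framework table names KPIs such as learning velocity (insights/month) and a trust calibration index without giving their formulas in this section. As a hypothetical operationalization, not the authors' definition, the Python sketch below scores trust calibration as the rate of appropriate reliance: the share of logged decisions in which an operator followed a correct AI recommendation or overrode an incorrect one. The `Decision` record and function names are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Decision:
    ai_correct: bool    # was the AI recommendation correct in hindsight?
    relied_on_ai: bool  # did the operator follow the recommendation?


def appropriate_reliance_rate(decisions: Sequence[Decision]) -> float:
    """Hypothetical trust calibration index: share of decisions where the
    operator followed a correct AI recommendation or overrode an incorrect one."""
    if not decisions:
        raise ValueError("no decisions to score")
    appropriate = sum(d.relied_on_ai == d.ai_correct for d in decisions)
    return appropriate / len(decisions)


def learning_velocity(insights: int, months: float) -> float:
    """Insights extracted per month over an observation window."""
    if months <= 0:
        raise ValueError("observation window must be positive")
    return insights / months


log = [
    Decision(ai_correct=True, relied_on_ai=True),    # appropriate reliance
    Decision(ai_correct=True, relied_on_ai=False),   # under-reliance
    Decision(ai_correct=False, relied_on_ai=False),  # appropriate override
    Decision(ai_correct=False, relied_on_ai=True),   # over-reliance (automation bias)
]
print(appropriate_reliance_rate(log))  # 0.5
print(learning_velocity(18, 6))        # 3.0
```

Scoring both under-reliance and over-reliance as miscalibration matches the table's framing of human–AI miscalibration as a vulnerability in either direction; other operationalizations are possible.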

| IMPLEMENTATION PHASE | DURATION | KEY ACTIVITIES | SUCCESS METRICS | INVESTMENT RANGE (€) | RISK MITIGATION STRATEGIES |
|---|---|---|---|---|---|
| Foundation Building | 6–9 months | Baseline assessment, stakeholder alignment, initial training | Staff engagement > 80%, basic capability development | 150K–300K | Change management, pilot programs |
| Pilot Deployment | 9–12 months | Limited scope implementation, feedback collection, refinement | 25% reduction in incident response time | 300K–600K | Parallel legacy systems, gradual transition |
| Scaled Implementation | 12–18 months | Organization-wide deployment, integration optimization | 50% improvement in threat detection | 600K–1.2M | Phased rollout, continuous monitoring |
| Ecosystem Integration | 18–24 months | Supply chain extension, industry collaboration | Cross-organizational learning effectiveness | 400K–800K | Partnership agreements, data sharing protocols |
| Continuous Evolution | Ongoing | Adaptive refinement, capability enhancement | Sustained competitive advantage metrics | 200K–400K/year | Innovation investment, skill development |
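Summing the four time-bounded phases gives the overall budget envelope implied by the implementation table. The Python sketch below adds the lower and upper bounds separately; the duration total assumes, for illustration only, that the phases run strictly one after another (if they overlap, the programme is shorter). The ongoing Continuous Evolution cost is kept separate because it recurs annually.

```python
# (phase, duration range in months, investment range in EUR), from the implementation table.
PHASES = [
    ("Foundation Building", (6, 9), (150_000, 300_000)),
    ("Pilot Deployment", (9, 12), (300_000, 600_000)),
    ("Scaled Implementation", (12, 18), (600_000, 1_200_000)),
    ("Ecosystem Integration", (18, 24), (400_000, 800_000)),
]
ONGOING_EUR_PER_YEAR = (200_000, 400_000)  # Continuous Evolution, recurring


def plan_totals(
    phases: list[tuple[str, tuple[int, int], tuple[int, int]]],
) -> tuple[tuple[int, int], tuple[int, int]]:
    """Return ((min months, max months), (min EUR, max EUR)) assuming sequential phases."""
    months = (sum(d[0] for _, d, _ in phases), sum(d[1] for _, d, _ in phases))
    euros = (sum(c[0] for _, _, c in phases), sum(c[1] for _, _, c in phases))
    return months, euros


print(plan_totals(PHASES))  # ((45, 63), (1450000, 2900000))
```

Under these assumptions the one-off investment lies between €1.45M and €2.9M, spread over roughly 45 to 63 months, followed by €200K–400K per year of ongoing spending.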

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Fernández-Miguel, A.; Ortíz-Marcos, S.; Jiménez-Calzado, M.; Fernández del Hoyo, A.P.; García-Muiña, F.E.; Settembre-Blundo, D. From Resilience to Cognitive Adaptivity: Redefining Human–AI Cybersecurity for Hard-to-Abate Industries in the Industry 5.0–6.0 Transition. Information 2025, 16, 881. https://doi.org/10.3390/info16100881

Fernández-Miguel A, Ortíz-Marcos S, Jiménez-Calzado M, Fernández del Hoyo AP, García-Muiña FE, Settembre-Blundo D. From Resilience to Cognitive Adaptivity: Redefining Human–AI Cybersecurity for Hard-to-Abate Industries in the Industry 5.0–6.0 Transition. Information. 2025; 16(10):881. https://doi.org/10.3390/info16100881

Chicago/Turabian Style: Fernández-Miguel, Andrés, Susana Ortíz-Marcos, Mariano Jiménez-Calzado, Alfonso P. Fernández del Hoyo, Fernando Enrique García-Muiña, and Davide Settembre-Blundo. 2025. "From Resilience to Cognitive Adaptivity: Redefining Human–AI Cybersecurity for Hard-to-Abate Industries in the Industry 5.0–6.0 Transition" Information 16, no. 10: 881. https://doi.org/10.3390/info16100881

APA Style: Fernández-Miguel, A., Ortíz-Marcos, S., Jiménez-Calzado, M., Fernández del Hoyo, A. P., García-Muiña, F. E., & Settembre-Blundo, D. (2025). From Resilience to Cognitive Adaptivity: Redefining Human–AI Cybersecurity for Hard-to-Abate Industries in the Industry 5.0–6.0 Transition. Information, 16(10), 881. https://doi.org/10.3390/info16100881