1. Introduction

In machine learning, sufficient and representative training data are needed to tune model parameters and achieve good performance, so both the amount of data and the distribution of data features are important factors. Traditional machine learning assumes that the training and test data are independent and identically distributed, and most models work well under this assumption. In practical applications, however, it is usually difficult to obtain a large amount of annotated data for a specific task, and data annotation is time-consuming and laborious, which leaves machine learning models with insufficient training data. Moreover, the data distributions of different domains are often inconsistent, a problem known as sample selection bias [1] or covariate shift [2,3]. These issues reduce the robustness and reliability of traditional machine learning models and degrade their performance.
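For readers who want the distribution mismatch stated formally, the standard textbook definition of covariate shift is given below. The notation ($P_s$ and $P_t$ for the source and target distributions, $x$ for inputs, $y$ for labels) is introduced here for illustration and is not taken from this paper:

$$
P_s(x) \neq P_t(x), \qquad P_s(y \mid x) = P_t(y \mid x).
$$

That is, the input distributions of the two domains differ while the labeling rule is assumed to stay the same, which is why a model fitted only to source inputs can degrade on target inputs.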

To avoid repetitive data annotation and improve model performance, domain adaptation (DA) methods transfer knowledge between different domains, effectively addressing both the difference in data distributions and the lack of training data across domains [4,5,6]. Domain adaptation methods can be broadly divided into instance-based, feature representation-based, and classifier-based methods. This paper focuses on feature representation-based methods, which assume that data from related domains share implicit features [7]; that is, the marginal distributions of data in different domains are matched [8]. Feature space information is then used to align the domain data through a mapping, so as to find the features shared between domains. A reliable machine learning model can therefore be constructed using source domain data with sufficient labels [9]. By transferring features between the source and target domains, the features common to both are used to enrich the features of the target domain data, which relaxes the strict requirements of the model on the data and improves its performance [10].

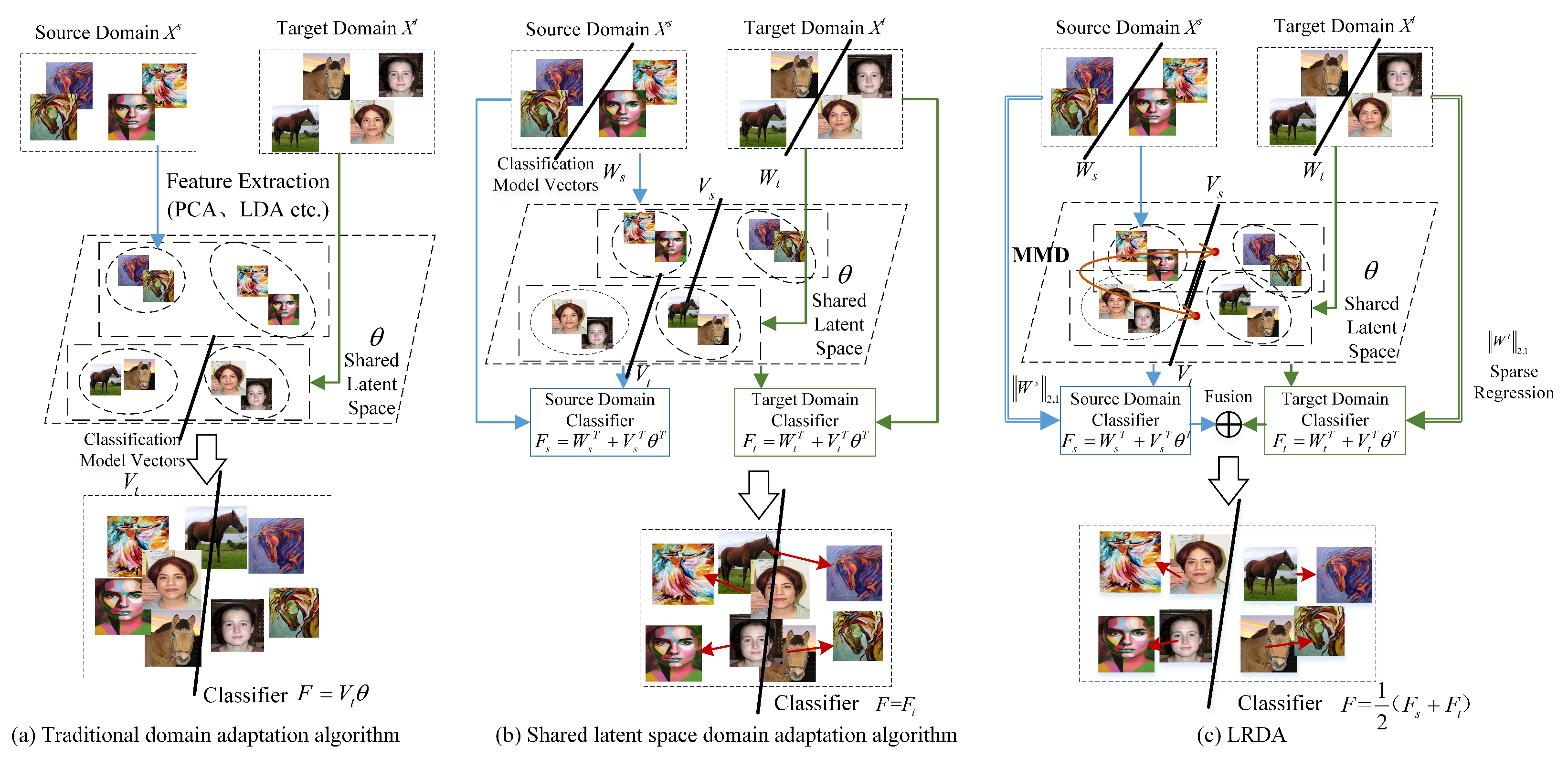

The initial domain adaptation methods based on feature representation transform the source domain data and target domain data into a latent space and construct a classifier from the latent feature representation of the target domain. The principle of these methods is summarized in Figure 1a: feature extraction is used to construct a feature-invariant latent space, and a classifier is then designed for the target domain [11]. Gheisari et al. [12] demonstrated that minimizing classification error while maximizing manifold consistency in a shared space led to improved classification accuracy on target domains compared to non-adaptive baselines. Zheng et al. [13] showed that finding a dimensionality reduction technique minimizing distribution distance in the latent space effectively enabled feature transfer, resulting in measurable performance gains. Blitzer et al. [14] established that modeling feature correlations across domains via structural correspondence learning identified pivot features crucial for cross-domain discrimination, enhancing adaptation robustness. Jiang et al. [15] extended this concept to multi-view data, showing that incorporating latent space features across views addressed the challenges posed by multi-perspective domain differences. Xu et al. [16] leveraged distributionally robust optimization for feature extraction under weak supervision, demonstrating enhanced robustness to distributional uncertainties and achieving competitive results.

Later methods incorporate the original space information, which can guide the construction of the target domain classifier when combined with the latent space features. This joint information is then used to build the classifier [17,18], as shown in Figure 1b. Dong et al. [19] showed that embedding a shared low-dimensional latent space into an SVM (support vector machine) framework, constrained by source-target latent space alignment, substantially improved SVM performance in domain adaptation tasks compared to standard SVMs. Yao et al. [20] extended this concept to the more complex multi-source adaptation scenario, showing that constraining the predicted label matrix across sources further enhanced adaptation effectiveness and robustness. Zhang et al. [21] demonstrated that combining latent space features with target pseudo-labels effectively mined richer domain-invariant information, yielding state-of-the-art results on several benchmarks. However, the above methods do not consider the latent space features from the perspective of distribution consistency, and differences in distribution reduce the effectiveness of the shared features, thereby reducing the performance of domain adaptation.

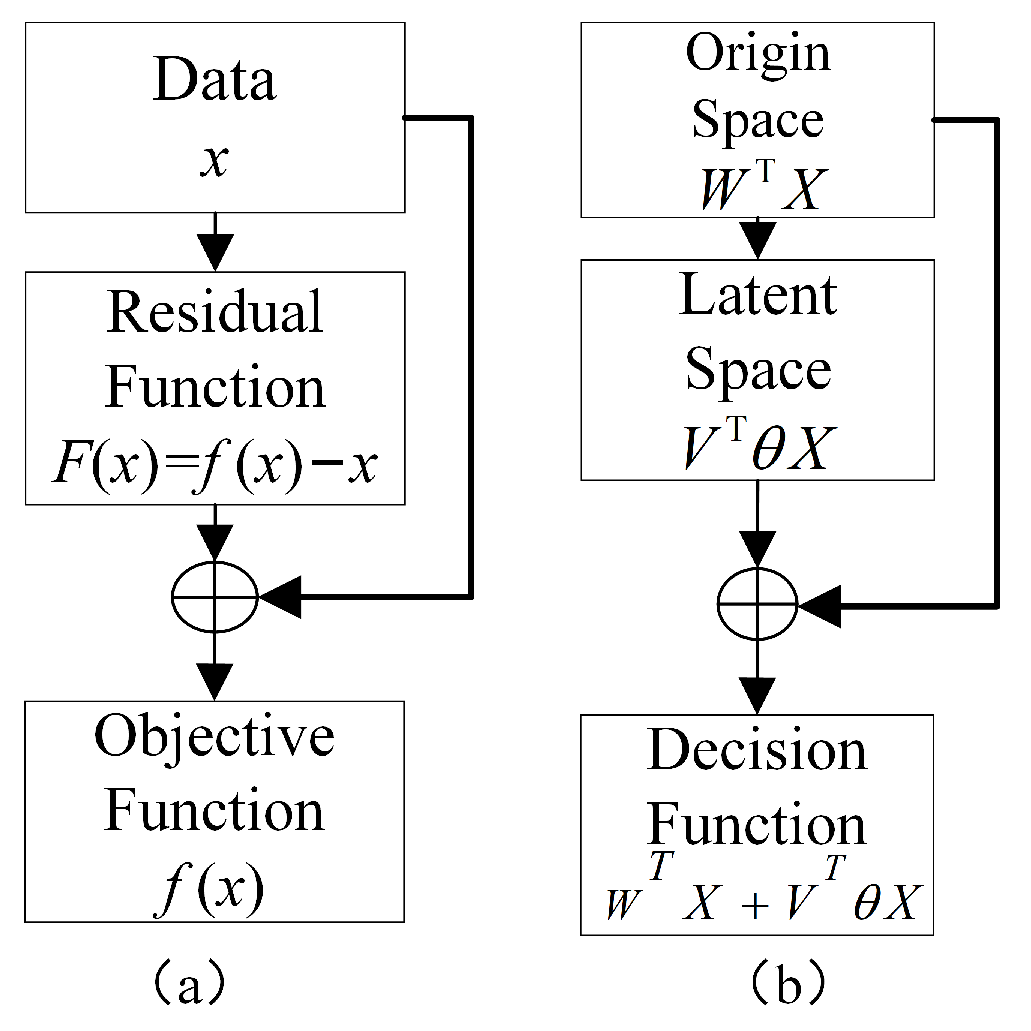

Inspired by the residual network [22], we propose a shared latent space domain adaptation with residual model (LRDA) that makes full use of the relationship between the original features and the latent space features of the domains, and addresses the problem of mismatched latent feature distributions in domain adaptation. Specifically, the source and target domain data are first mapped into a shared latent feature space to mine the features common to both domains, so that the source domain data can be fully used to enhance the feature representation of the target domain data. Next, the distribution difference between the source and target domains in the shared feature space is minimized, which gives the latent space features a more consistent distribution and reduces the performance degradation caused by inconsistent feature distributions. Finally, the original-space features are combined with the latent space features in both the source and target domains to form a residual model, which makes the model easier to fit and yields a better classifier. The principle of LRDA is shown in Figure 1c.
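The three steps described above can be illustrated with a small NumPy sketch. It is a toy reading of the description, not the authors' implementation: the linear projection `proj`, the weight matrices `w_orig` and `w_lat`, the additive form of the scoring function, and the use of a linear-kernel maximum mean discrepancy (MMD) as the distribution measure are all assumptions made here for illustration, and the data are synthetic.

```python
import numpy as np

def linear_mmd(zs, zt):
    """Squared MMD with a linear kernel: squared distance between the feature means."""
    diff = zs.mean(axis=0) - zt.mean(axis=0)
    return float(diff @ diff)

def residual_scores(x, w_orig, proj, w_lat):
    """Original-space term plus latent-space term (assumed additive residual form)."""
    return x @ w_orig + (x @ proj) @ w_lat

rng = np.random.default_rng(0)
xs = rng.normal(0.0, 1.0, size=(200, 5))  # toy source samples
xt = rng.normal(0.5, 1.0, size=(150, 5))  # toy target samples with a mean shift

# Step 1: map both domains into a shared latent space
# (a random projection here; it would be learned in practice).
proj = rng.normal(size=(5, 2))
zs, zt = xs @ proj, xt @ proj

# Step 2: measure the latent distribution gap that training would minimize.
gap = linear_mmd(zs, zt)

# Step 3: combine original and latent features in one scoring function.
w_orig = rng.normal(size=(5, 3))  # hypothetical weights on the original features
w_lat = rng.normal(size=(2, 3))   # hypothetical weights on the latent features
scores = residual_scores(xt, w_orig, proj, w_lat)
print(f"latent MMD^2: {gap:.4f}, score matrix shape: {scores.shape}")
```

In this reading, the latent term acts as a correction added to the original-space prediction: setting `w_lat` to zero recovers a plain linear model on the original features.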

The main contributions of this paper are:

1. We introduce a latent space shared by the source domain and target domain features and construct a latent space domain adaptation method. The difference between the latent feature distributions is measured and constrained so that the feature distributions are aligned in the shared latent space.

2. We build a residual model using the original feature space and the latent feature space, and optimize the residual function to reduce the difficulty of feature transfer and improve model performance.

3. We adopt the $\ell_{2,1}$-norm for feature selection to obtain a sparse representation of the original features, which increases the robustness of the model to the outliers and noise that naturally exist in datasets.

4. Experiments verify that our method achieves better performance, works effectively with both shallow and deep features, and improves performance on cross-domain visual recognition tasks.
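The third contribution relies on a sparsity-inducing norm for feature selection. Reference [26] uses the $\ell_{2,1}$-norm for this purpose, so the sketch below assumes that norm; the weight matrix `w` and the helper names are hypothetical and only illustrate how row sparsity translates into feature selection.

```python
import numpy as np

def l21_norm(w):
    """Sum of the Euclidean norms of the rows of w."""
    return float(np.sqrt((w ** 2).sum(axis=1)).sum())

def selected_features(w, tol=1e-8):
    """Indices of features (rows) whose weights are not all zero."""
    return np.flatnonzero(np.linalg.norm(w, axis=1) > tol)

# Toy weight matrix: one row per original feature, one column per class.
w = np.array([[3.0, 4.0],
              [0.0, 0.0],
              [1.0, 0.0]])

print(l21_norm(w))           # 6.0  (row norms 5 + 0 + 1)
print(selected_features(w))  # [0 2]
```

Because the norm sums unsquared row norms, penalizing it tends to drive entire rows to zero, which discards the corresponding features; this row-wise behavior is what makes it a feature selection device rather than an element-wise sparsity penalty.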

Author Contributions

Conceptualization, B.Z. and J.P.; data curation, B.Z.; formal analysis, B.Z. and J.P.; funding acquisition, B.Z. and J.P.; investigation, B.Z. and F.Y.; methodology, B.Z. and F.Y.; project administration, B.Z. and J.P.; resources, J.P. and Z.Z.; software, B.Z. and Z.Z.; supervision, F.Y.; validation, J.P.; visualization, Z.Z. and J.P.; writing—original draft, B.Z.; writing—review and editing, B.Z., J.P. and Z.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by the key projects of Ningbo Education Science Planning in 2025 no. 2025YZD023 and Ningbo Natural Science Foundation no. 2023J242.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are openly available in Office31, Caltech-256, Office-Home, PIE, MNIST-USPS and COIL20, reference numbers [27,29,31,32,34,35,36].

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Huang, J.; Smola, A.; Gretton, A.; Borgwardt, K.; Scholkopf, B. Correcting sample selection bias by unlabeled data. In Advances in Neural Information Processing Systems 19: Proceedings of the Twentieth Annual Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 4–7 December 2006; MIT Press: Cambridge, MA, USA, 2007.
2. Sugiyama, M.; Storkey, A.J. Mixture regression for covariate shift. In Advances in Neural Information Processing Systems 19: Proceedings of the Twentieth Annual Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 4–7 December 2006; MIT Press: Cambridge, MA, USA, 2007.
3. Sugiyama, M.; Nakajima, S.; Kashima, H.; Buenau, P.; Kawanabe, M. Direct importance estimation with model selection and its application to covariate shift adaptation. In Advances in Neural Information Processing Systems 20: Proceedings of the Twenty-First Annual Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 3–6 December 2007; Curran Associates, Inc.: Red Hook, NY, USA, 2008.
4. Ghifary, M.; Balduzzi, D.; Kleijn, W.B.; Zhang, M. Scatter component analysis: A unified framework for domain adaptation and domain generalization. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1414–1430.
5. Evgeniou, T.; Micchelli, C.A.; Pontil, M.; Shawe-Taylor, J. Learning multiple tasks with kernel methods. J. Mach. Learn. Res. 2005, 4, 615–637.
6. Duan, L.; Tsang, I.W.; Xu, D. Domain transfer multiple kernel learning. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 465–479.
7. Wang, S.; Wang, B.; Zhang, Z.; Heidari, A.A.; Chen, H. Class-aware sample reweighting optimal transport for multi-source domain adaptation. Neurocomputing 2024, 523, 213–223.
8. Rostami, M.; Rostami, M.; Bose, D.; Narayanan, S.; Galstyan, A. Domain adaptation for sentiment analysis using robust internal representations. In Findings of the Association for Computational Linguistics: EMNLP; Association for Computational Linguistics: Stroudsburg, PA, USA, 2023; pp. 11484–11498.
9. Ding, N.; Xu, Y.; Tang, Y.; Xu, C.; Wang, Y.; Tao, D. Source-free domain adaptation via distribution estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 7212–7222.
10. Ge, C.; Huang, R.; Xie, M.; Lai, Z.; Song, S.; Li, S.; Huang, G. Domain adaptation via prompt learning. IEEE Trans. Neural Netw. Learn. Syst. 2023, 36, 1160–1170.
11. Kay, J.; Haucke, T.; Stathatos, S.; Deng, S.; Young, E.; Perona, P.; Beery, S.; Van Horn, G. Align and distill: Unifying and improving domain adaptive object detection. arXiv 2024, arXiv:2403.12029.
12. Gheisari, M.; Baghshah, M.S. Unsupervised domain adaptation via representation learning and adaptive classifier learning. Neurocomputing 2015, 165, 300–311.
13. Zheng, V.W.; Pan, S.J.; Yang, Q.; Pan, J.J. Transferring Multi-device Localization Models using Latent Multi-task Learning. In Proceedings of the 23rd National Conference on Artificial Intelligence, Chicago, IL, USA, 13–17 July 2008; pp. 1427–1432.
14. Blitzer, J.; McDonald, R.; Pereira, F. Domain adaptation with structural correspondence learning. In Proceedings of the 2006 Conference on Empirical Methods in Natural Language Processing, Sydney, Australia, 22–23 July 2006; pp. 120–128.
15. Jiang, L.; Hauptmann, A.G.; Xiang, G. Leveraging high-level and low-level features for multimedia event detection. In Proceedings of the 20th ACM International Conference on Multimedia, Nara, Japan, 29 October–2 November 2012; pp. 449–458.
16. Xu, H.; Guo, H.; Yi, L.; Ling, C.; Wang, B.; Yi, G. Revisiting Source-Free Domain Adaptation: A New Perspective via Uncertainty Control. In Proceedings of the Thirteenth International Conference on Learning Representations, Vienna, Austria, 7–11 May 2024.
17. Tao, J.; Xu, H. Discovering domain-invariant subspace for depression recognition by jointly exploiting appearance and dynamics feature representations. IEEE Access 2019, 7, 186417–186436.
18. Wu, Y.; Li, Z.; Wang, C.; Zheng, H.; Zhao, S.; Li, B.; Tao, D. Domain re-modulation for few-shot generative domain adaptation. Adv. Neural Inf. Process. Syst. 2024, 36, 57099–57124.
19. Aimei, D.; Shitong, W. A Shared Latent Subspace Transfer Learning Algorithm Using SVM. Acta Autom. Sin. 2014, 40, 2276–2287.
20. Yao, Z.; Tao, J. Multi-source adaptation multi-label classification framework via joint sparse feature selection and shared subspace learning. Comput. Eng. Appl. 2017, 53, 88–96.
21. Zhang, Y.; Tao, J.; Yan, L. Domain-Invariant Label Propagation With Adaptive Graph Regularization. IEEE Access 2024, 12, 190728–190745.
22. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.
23. Shi, X.; Guo, Z.; Lai, Z.; Yang, Y.; Bao, Z.; Zhang, D. A framework of joint graph embedding and sparse regression for dimensionality reduction. IEEE Trans. Image Process. 2015, 24, 1341–1355.
24. Ma, Z.; Nie, F.; Yang, Y.; Uijlings, J.R.R.; Sebe, N. Web image annotation via subspace-sparsity collaborated feature selection. IEEE Trans. Multimed. 2012, 14, 1021–1030.
25. Gretton, A.; Borgwardt, K.M.; Rasch, M.J.; Bernhard, S.; Smola, A.J. A kernel method for the two-sample-problem. In Advances in Neural Information Processing Systems 19: Proceedings of the Twentieth Annual Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 4–7 December 2006; MIT Press: Cambridge, MA, USA, 2007.
26. Nie, F.; Huang, H.; Cai, X.; Ding, C.H.Q. Efficient and Robust Feature Selection via Joint L2,1-Norms Minimization. In Proceedings of the 24th International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 6–9 December 2010; pp. 1813–1821.
27. Saenko, K.; Kulis, B.; Fritz, M.; Darrell, T. Adapting visual category models to new domains. In Computer Vision – ECCV 2010: 11th European Conference on Computer Vision, Heraklion, Crete, Greece, 5–11 September 2010, Proceedings, Part IV 11; Springer: Berlin/Heidelberg, Germany, 2010; pp. 213–226.
28. Sun, B.; Feng, J.; Saenko, K. Return of frustratingly easy domain adaptation. In Proceedings of the Thirtieth AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016.
29. Griffin, G.; Holub, A.; Perona, P. Caltech-256 Object Category Dataset; California Institute of Technology: Pasadena, CA, USA, 2007.
30. Zhang, J.; Li, W.; Ogunbona, P. Joint geometrical and statistical alignment for visual domain adaptation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1859–1867.
31. Venkateswara, H.; Eusebio, J.; Chakraborty, S.; Panchanathan, S. Deep hashing network for unsupervised domain adaptation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5018–5027.
32. Sim, T.; Baker, S.; Bsat, M. The CMU pose, illumination and expression database. IEEE Trans. Pattern Anal. Mach. Intell. 2003, 25, 1615–1618.
33. Long, M.; Wang, J.; Ding, G.; Sun, J.; Yu, P.S. Transfer feature learning with joint distribution adaptation. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 2200–2207.
34. LeCun, Y.; Bottou, L. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.
35. Hull, J.J. A database for handwritten text recognition research. IEEE Trans. Pattern Anal. Mach. Intell. 1994, 16, 550–554.
36. Nene, S.A.; Nayar, S.K.; Murase, H. Columbia Object Image Library (Coil-20). Technical Report CUCS-005-96. 1996. Available online: https://www.cs.columbia.edu/CAVE/software/softlib/coil-20.php (accessed on 27 July 2025).
37. Gong, B.; Shi, Y.; Sha, F.; Grauman, K. Geodesic flow kernel for unsupervised domain adaptation. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 2066–2073.
38. Baktashmotlagh, M.; Harandi, M.T.; Lovell, B.C.; Salzmann, M. Unsupervised domain adaptation by domain invariant projection. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 769–776.
39. Luo, L.; Wang, X.; Hu, S.; Wang, C.; Tang, Y.; Chen, L. Close yet distinctive domain adaptation. arXiv 2017, arXiv:1704.04235.
40. Fernando, B.; Habrard, A.; Sebban, M.; Tuytelaars, T. Unsupervised visual domain adaptation using subspace alignment. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2014; pp. 2960–2967.
41. Busto, P.P.; Gall, J. Open set domain adaptation. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 754–763.
42. Liang, J.; He, R.; Sun, Z.; Tan, T. Aggregating randomized clustering-promoting invariant projections for domain adaptation. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 1027–1042.
43. Tzeng, E.; Hoffman, J.; Zhang, N.; Saenko, K.; Darrell, T. Deep domain confusion: Maximizing for domain invariance. arXiv 2014, arXiv:1412.3474.
44. Long, M.; Wang, J. Learning transferable features with deep adaptation networks. In Proceedings of the International Conference on Machine Learning, PMLR, Lille, France, 6–11 July 2015; pp. 97–105.
45. Ganin, Y.; Ustinova, E.; Ajakan, H.; Germain, P.; Larochelle, H.; Laviolette, F.; Marchand, M.; Lempitsky, V. Domain-adversarial training of neural networks. J. Mach. Learn. Res. 2016, 17, 2030–2096.
46. Ghifary, M.; Kleijn, W.B.; Zhang, M.; Balduzzi, D.; Li, W. Deep reconstruction-classification networks for unsupervised domain adaptation. In Computer Vision – ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016, Proceedings, Part IV 14; Springer: Cham, Switzerland, 2016; pp. 597–613.
47. Long, M.; Wang, J.; Jordan, M.I. Unsupervised domain adaptation with residual transfer networks. In Proceedings of the Advances in Neural Information Processing Systems 29: Annual Conference on Neural Information Processing Systems 2016, Barcelona, Spain, 5–10 December 2016.
48. Yan, H.; Ding, Y.; Li, P.; Wang, Q.; Xu, Y.; Zuo, W. Mind the class weight bias: Weighted maximum mean discrepancy for unsupervised domain adaptation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2272–2281.
49. Long, M.; Zhu, H.; Wang, J.; Jordan, M.I. Deep transfer learning with joint adaptation networks. In Proceedings of the International Conference on Machine Learning, PMLR, Sydney, Australia, 6–11 August 2017; pp. 2208–2217.
50. Tzeng, E.; Hoffman, J.; Saenko, K.; Darrell, T. Adversarial discriminative domain adaptation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7167–7176.
51. Kang, G.; Jiang, L.; Wei, Y.; Yang, Y.; Hauptmann, A. Contrastive adaptation network for single-and multisource domain adaptation. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 1793–1804.
52. Cui, S.; Wang, S.; Zhuo, J.; Su, C.; Tian, Q. Gradually vanishing bridge for adversarial domain adaptation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 12455–12464.
53. Hu, L.; Kan, M.; Shan, S.; Chen, X. Unsupervised domain adaptation with hierarchical gradient synchronization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 4043–4052.
54. Tang, H.; Chen, K.; Jia, K. Unsupervised domain adaptation via structurally regularized deep clustering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 8725–8735.
55. Luo, L.; Chen, L.; Hu, S.; Lu, Y.; Wang, X. Discriminative and geometry aware unsupervised domain adaptation. IEEE Trans. Cybern. 2020, 50, 3914–3927.
56. Tao, J.; Dan, Y.; Zhou, D.; He, S. Robust latent multi-source adaptation for encephalogram based emotion recognition. Front. Neurosci. 2022, 16, 850–906.


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).