A Real-Time Advisory Tool for Supporting the Use of Helmets in Construction Sites

Abstract

1. Introduction

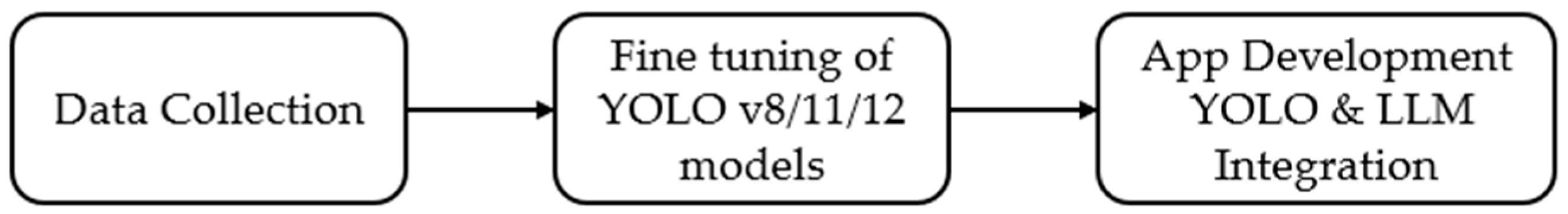

2. Materials and Methods

2.1. The Dataset

2.2. The Models

2.2.1. The You Only Look Once (YOLO) Model

2.2.2. Large Language Models

3. Results

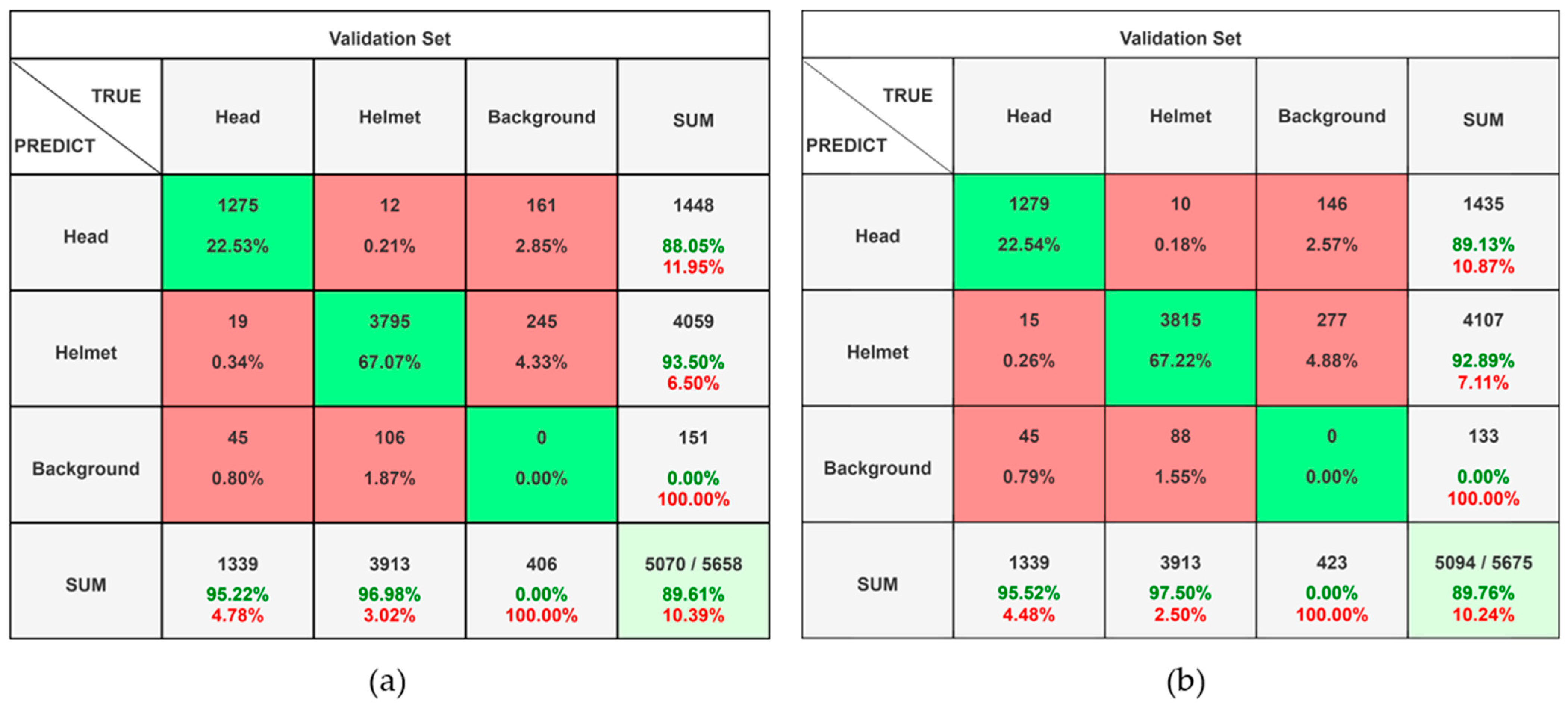

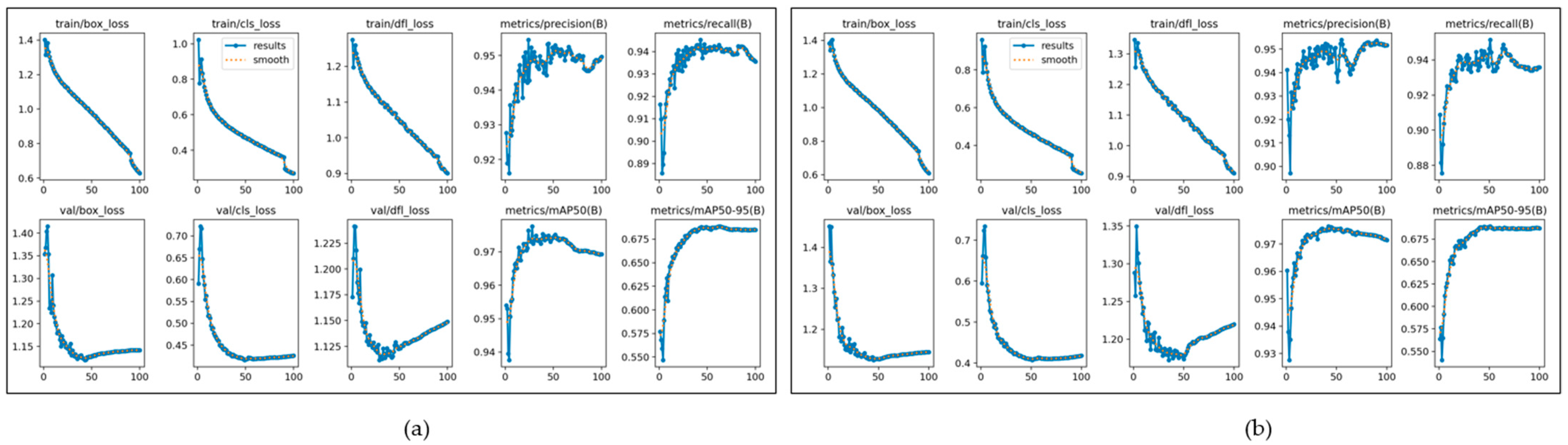

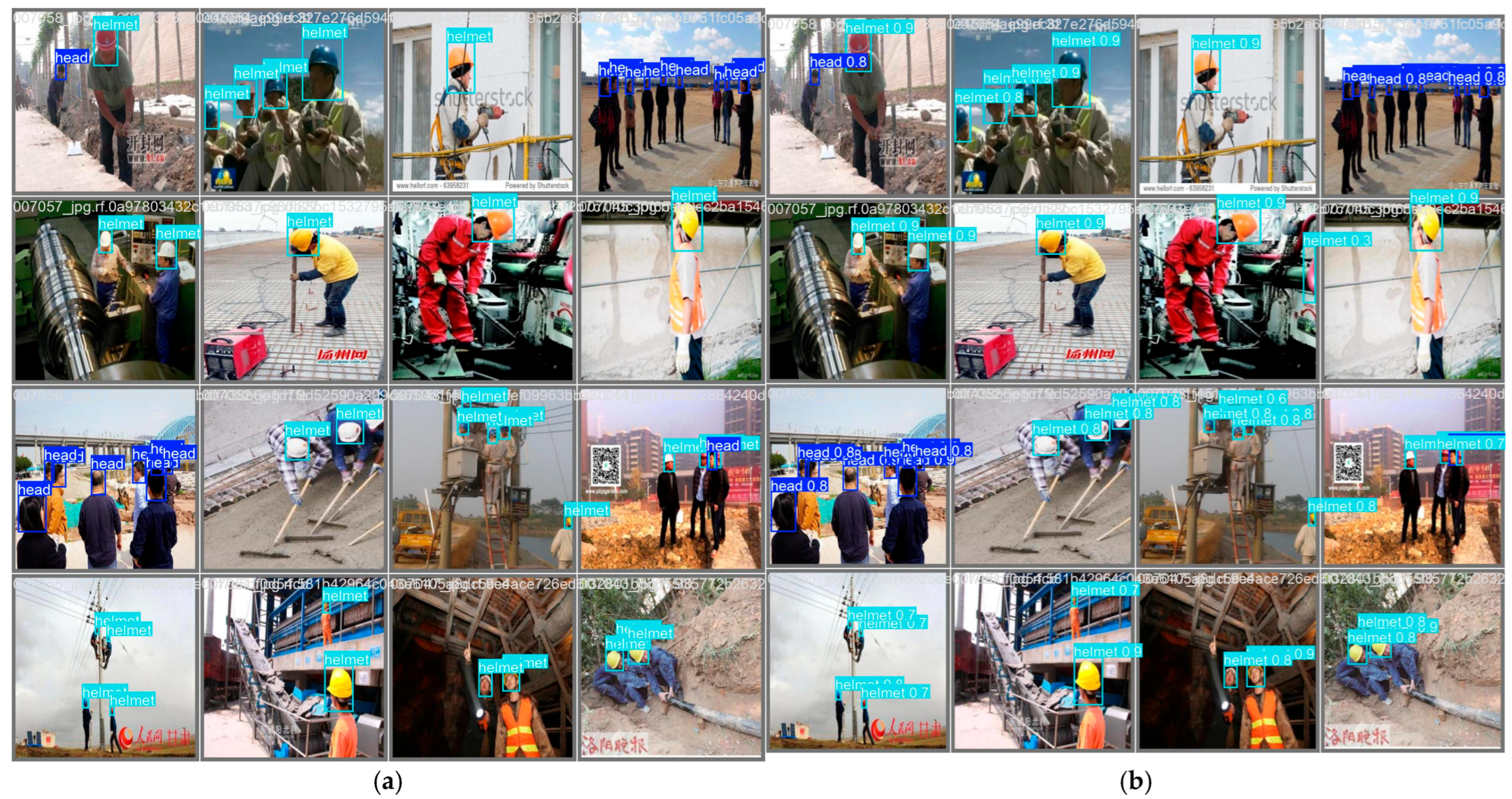

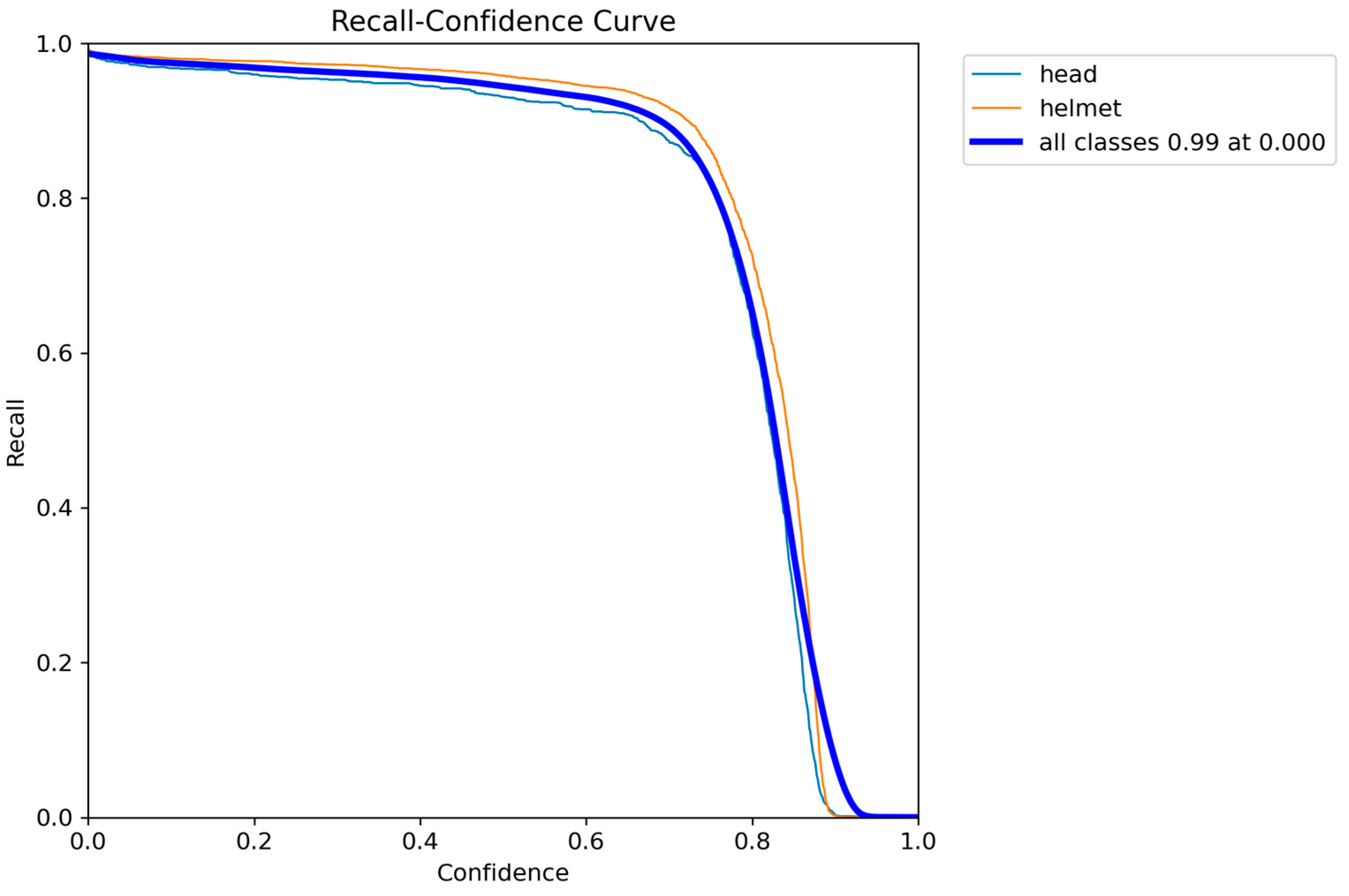

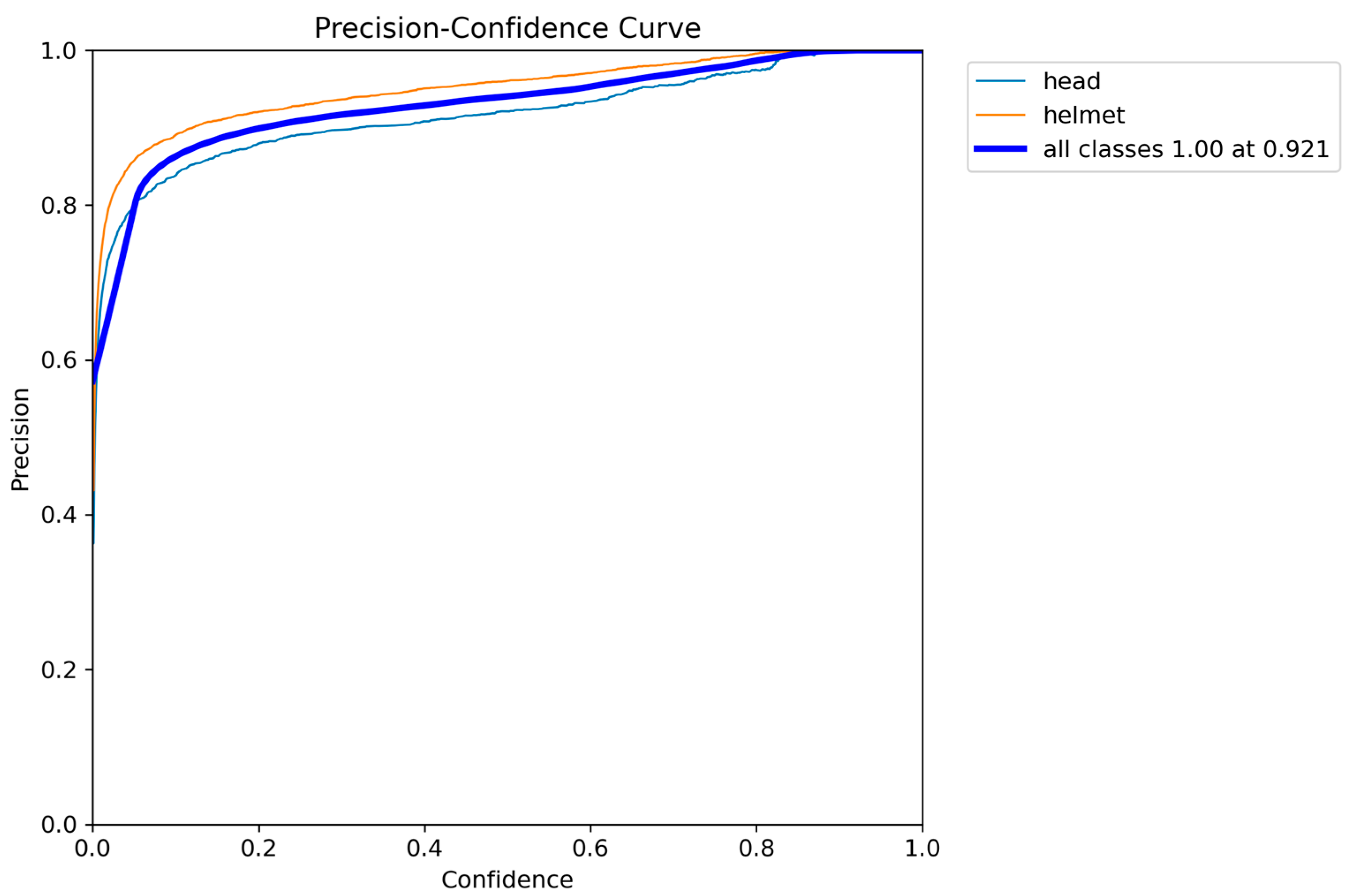

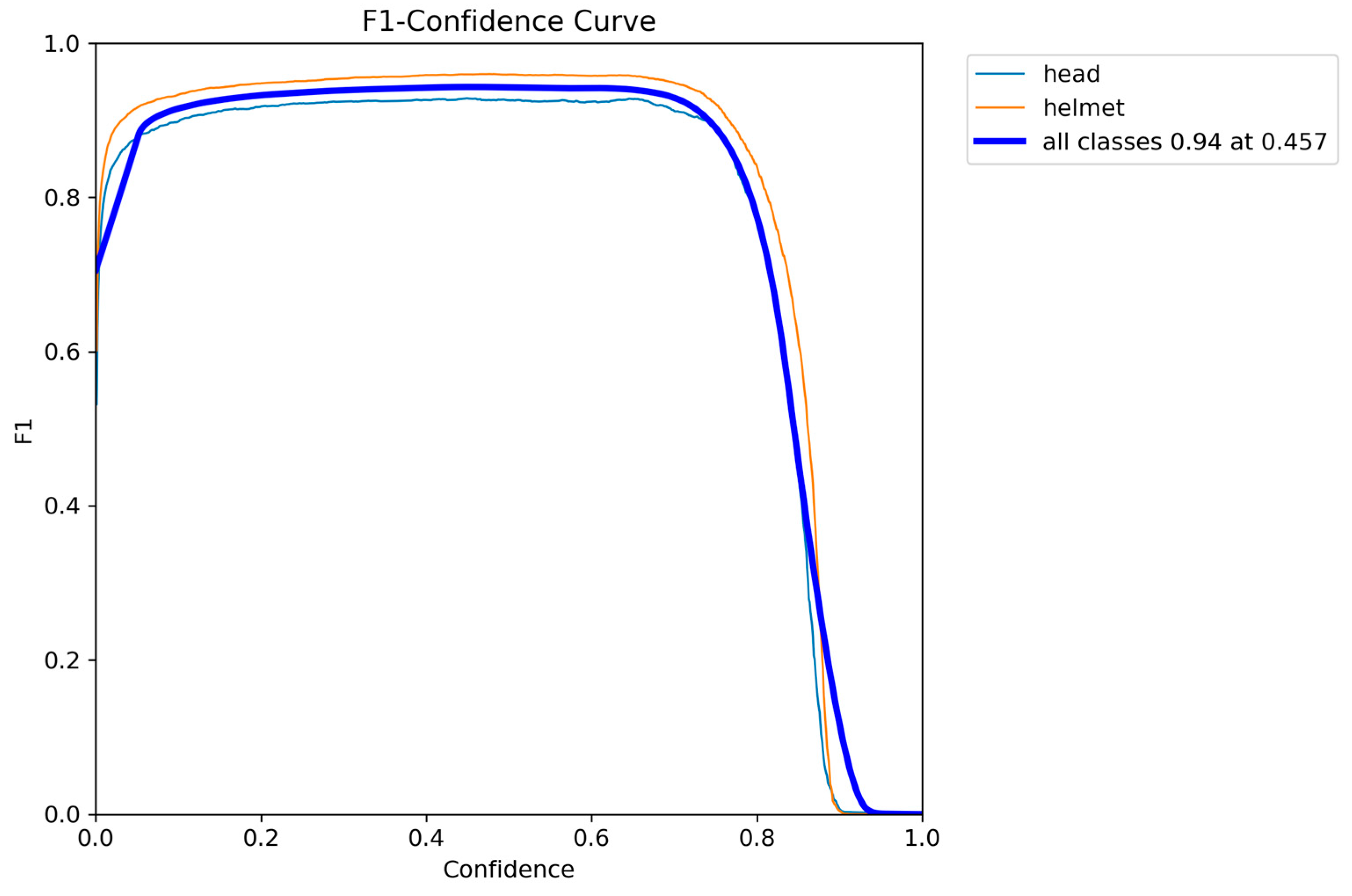

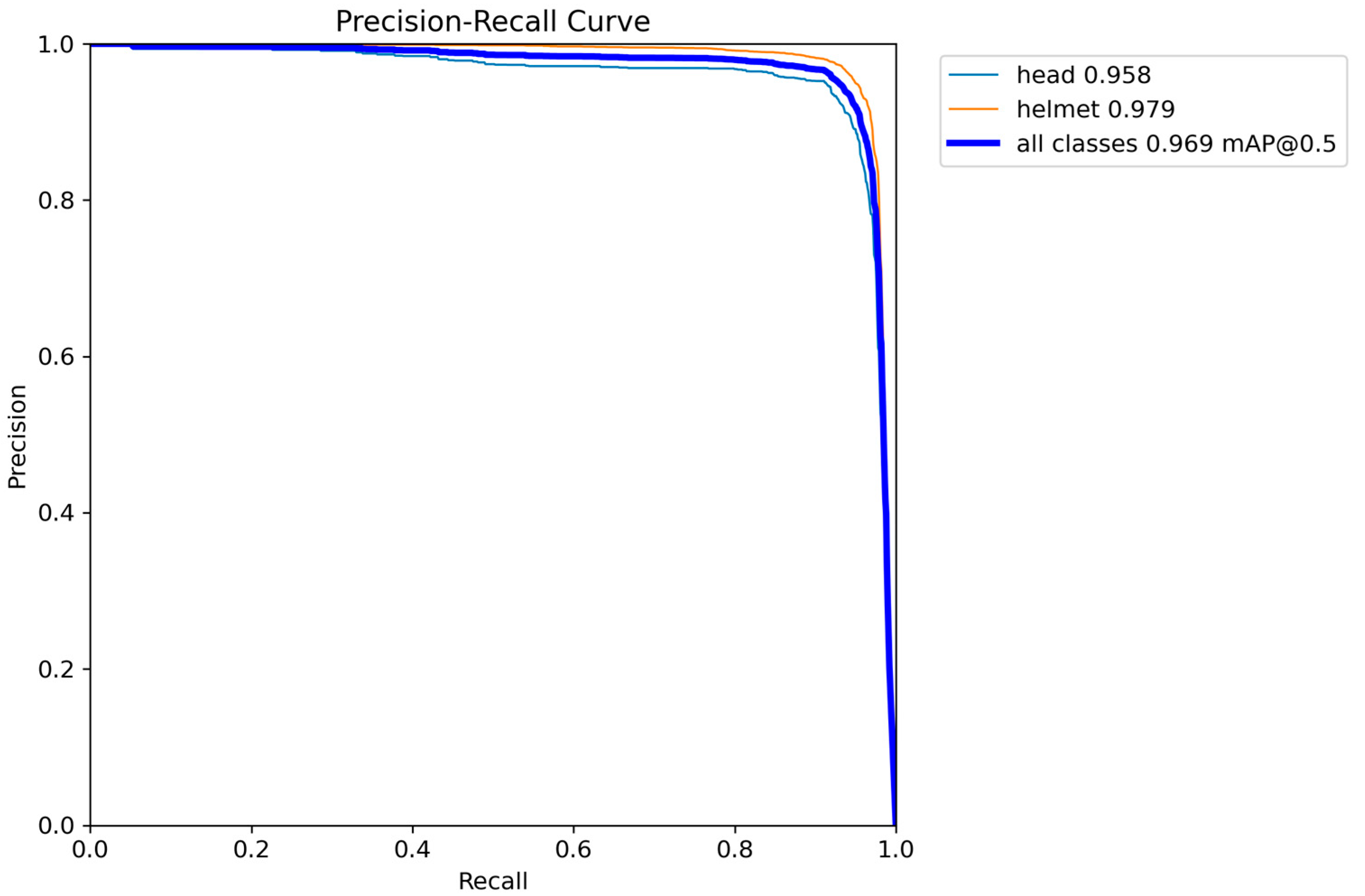

3.1. The Training Phase Outputs

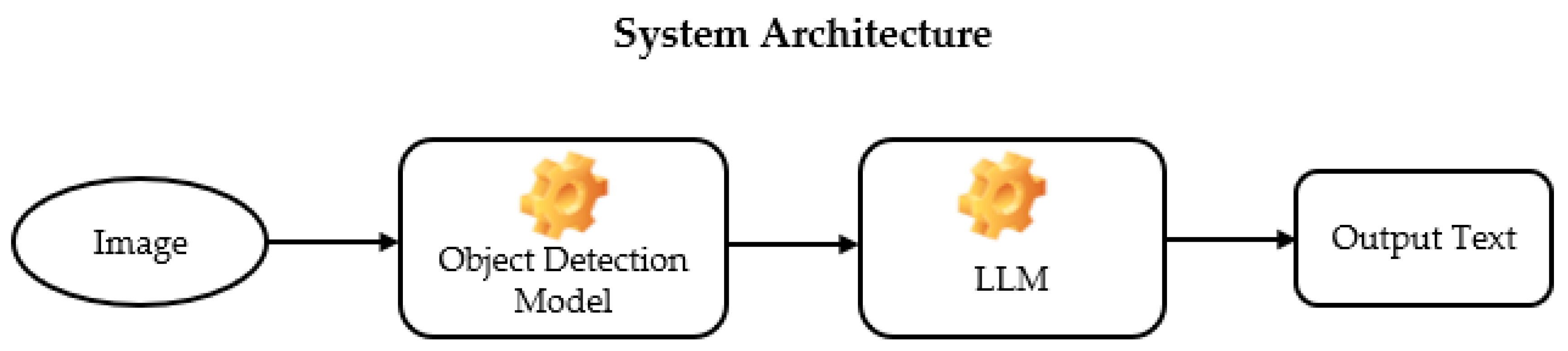

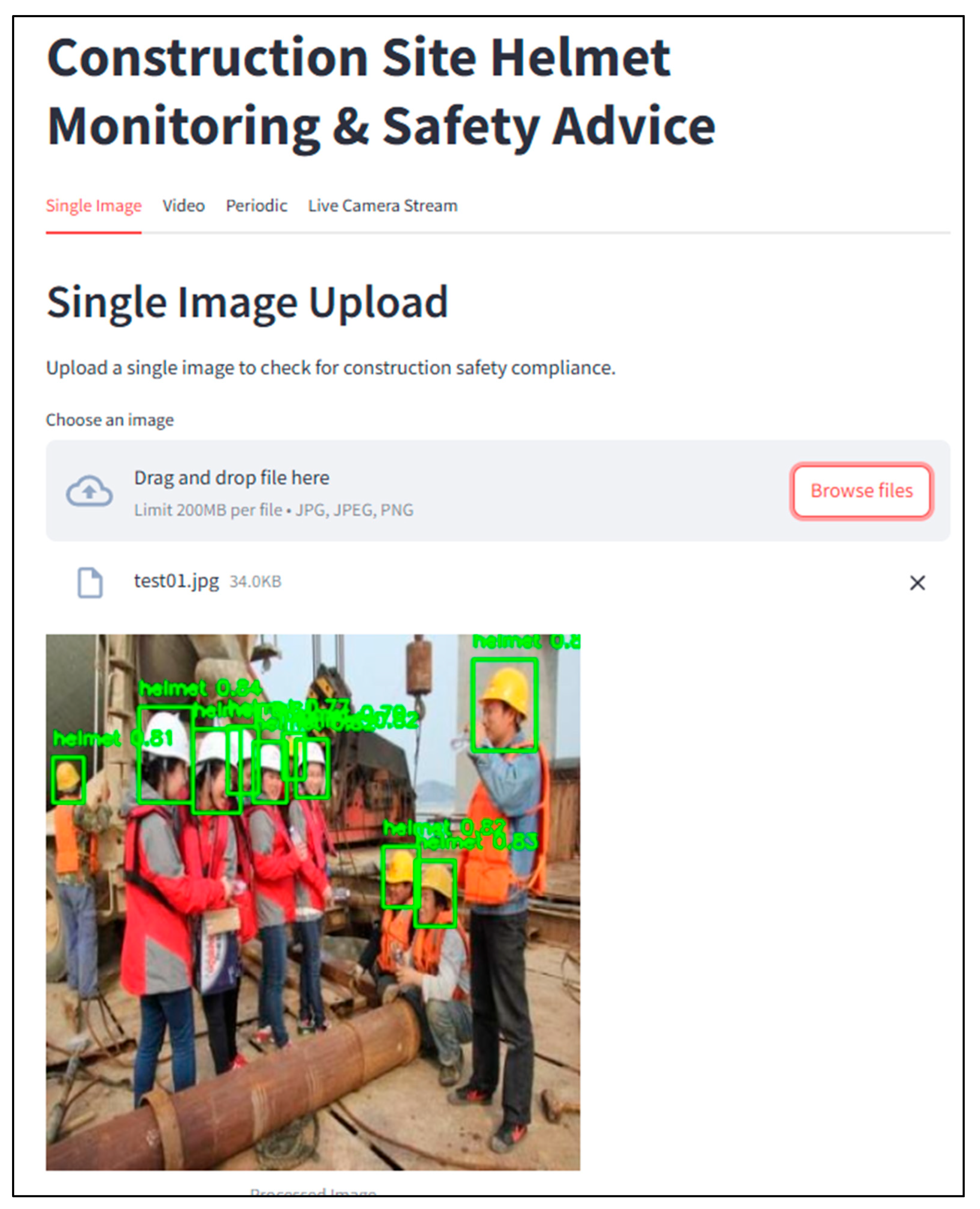

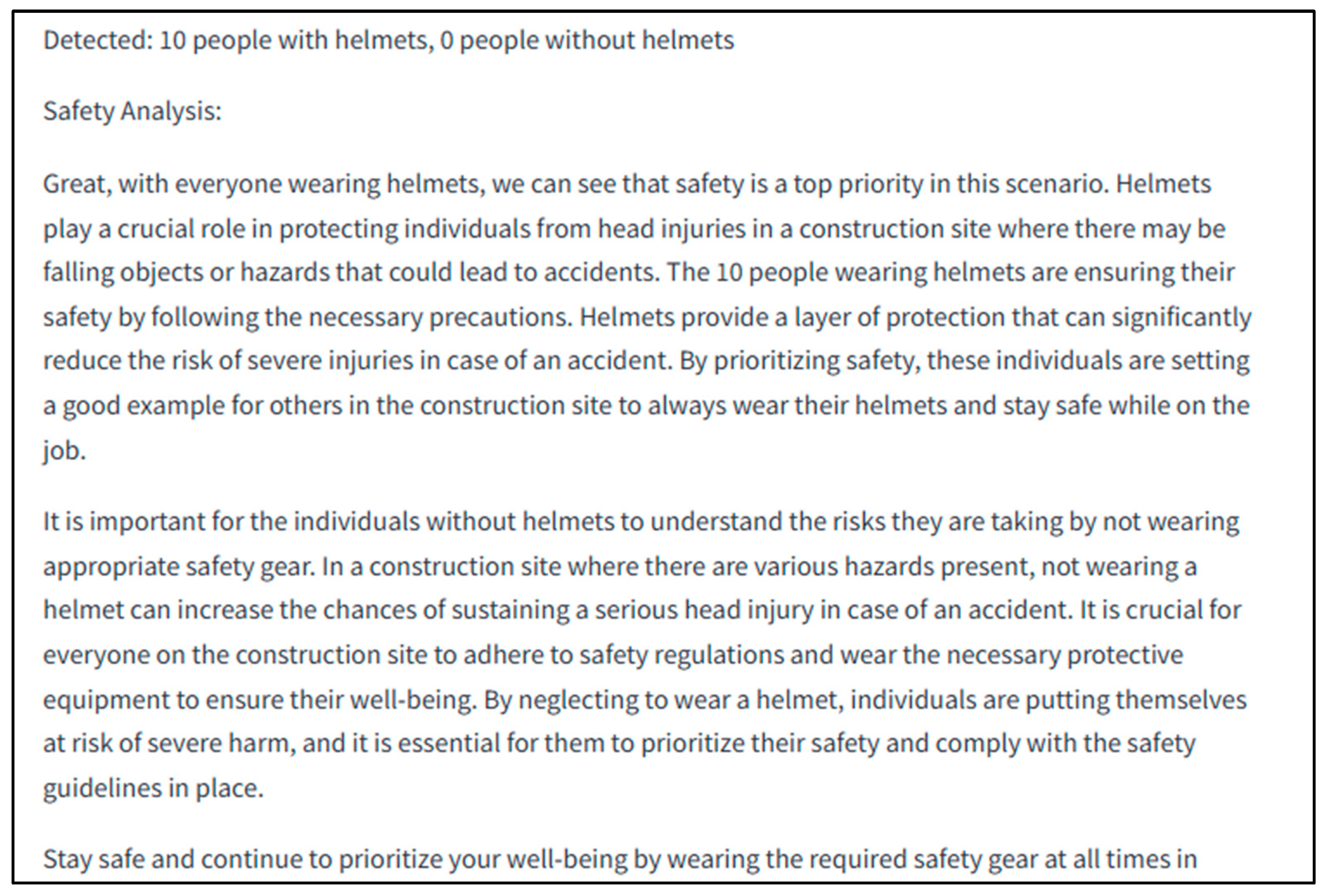

3.2. The Real-Time Advisory Tool

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Xu, S.; Wang, J.; Shou, W.; Ngo, T.; Sadick, A.-M.; Wang, X. Computer Vision Techniques in Construction: A Critical Review. Arch. Comput. Methods Eng. 2021, 28, 3383–3397.

- Tsirtsakis, P.; Zacharis, G.; Maraslidis, G.S.; Fragulis, G.F. Deep learning for object recognition: A comprehensive review of models and algorithms. Int. J. Cogn. Comput. Eng. 2025, 6, 298–312.

- Islam, S.U.; Zaib, S.; Ferraioli, G.; Pascazio, V.; Schirinzi, G.; Husnain, G. Enhanced Deep Learning Architecture for Rapid and Accurate Tomato Plant Disease Diagnosis. AgriEngineering 2024, 6, 375–395.

- Sun, Y.; Sun, Z.; Chen, W. The evolution of object detection methods. Eng. Appl. Artif. Intell. 2024, 133, 108458.

- Kineber, A.F.; Antwi-Afari, M.F.; Elghaish, F.; Zamil, A.M.A.; Alhusban, M.; Qaralleh, T.J.O. Benefits of Implementing Occupational Health and Safety Management Systems for the Sustainable Construction Industry: A Systematic Literature Review. Sustainability 2023, 15, 12697.

- Xiao, B.; Kang, S.-C. Development of an Image Data Set of Construction Machines for Deep Learning Object Detection. J. Comput. Civ. Eng. 2021, 35, 05020005.

- Araya-Aliaga, E.; Atencio, E.; Lozano, F.; Lozano-Galant, J. Automating Dataset Generation for Object Detection in the Construction Industry with AI and Robotic Process Automation (RPA). Buildings 2025, 15, 410.

- Kazaz, B.; Poddar, S.; Arabi, S.; Perez, M.A.; Sharma, A.; Whitman, J.B. Deep Learning-Based Object Detection for Unmanned Aerial Systems (UASs)-Based Inspections of Construction Stormwater Practices. Sensors 2021, 21, 2834.

- Seth, Y.; Sivagami, M. Enhanced YOLOv8 Object Detection Model for Construction Worker Safety Using Image Transformations. IEEE Access 2025, 13, 10582–10594.

- Barlybayev, A.; Amangeldy, N.; Kurmetbek, B.; Krak, I.; Razakhova, B.; Tursynova, N.; Turebayeva, R. Personal protective equipment detection using YOLOv8 architecture on object detection benchmark datasets: A comparative study. Cogent Eng. 2024, 11, 2333209.

- Bai, R.; Wang, M.; Zhang, Z.; Lu, J.; Shen, F. Automated Construction Site Monitoring Based on Improved YOLOv8-seg Instance Segmentation Algorithm. IEEE Access 2023, 11, 139082–139096.

- Jiao, X.; Li, C.; Zhang, X.; Fan, J.; Cai, Z.; Zhou, Z.; Wang, Y. Detection Method for Safety Helmet Wearing on Construction Sites Based on UAV Images and YOLOv8. Buildings 2025, 15, 354.

- Biswas, M.; Hoque, R. Construction Site Risk Reduction via YOLOv8: Detection of PPE, Masks, and Heavy Vehicles. In Proceedings of the 2024 IEEE International Conference on Computing, Applications and Systems (COMPAS), Chattogram, Bangladesh, 25–26 September 2024; pp. 1–6.

- El-Kafrawy, A.M.; Seddik, E.H. Personal Protective Equipment (PPE) Monitoring for Construction Site Safety using YOLOv12. In Proceedings of the 2025 International Conference on Machine Intelligence and Smart Innovation (ICMISI), Alexandria, Egypt, 10–12 May 2025; pp. 456–459.

- Zhong, J.; Qian, H.; Wang, H.; Wang, W.; Zhou, Y. Improved real-time object detection method based on YOLOv8: A refined approach. J. Real-Time Image Process. 2025, 22, 4.

- Nelson, J. Hard Hat Workers Computer Vision Project. Available online: https://universe.roboflow.com/joseph-nelson/hard-hat-workers (accessed on 28 February 2025).

- Ray, S.; Haque, M.; Rahman, M.; Sakib, N.; Al Rakib, K. Experimental investigation and SVM-based prediction of compressive and splitting tensile strength of ceramic waste aggregate concrete. J. King Saud Univ.-Eng. Sci. 2024, 36, 112–121.

- Omer, B.; Jaf, D.K.I.; Abdalla, A.; Mohammed, A.S.; Abdulrahman, P.I.; Kurda, R. Advanced modeling for predicting compressive strength in fly ash-modified recycled aggregate concrete: XGBoost, MEP, MARS, and ANN approaches. Innov. Infrastruct. Solut. 2024, 9, 61.

- Bekdaş, G.; Aydın, Y.; Isıkdağ, Ü.; Sadeghifam, A.N.; Kim, S.; Geem, Z.W. Prediction of Cooling Load of Tropical Buildings with Machine Learning. Sustainability 2023, 15, 9061.

- Aydın, Y.; Cakiroglu, C.; Bekdaş, G.; Geem, Z.W. Explainable Ensemble Learning and Multilayer Perceptron Modeling for Compressive Strength Prediction of Ultra-High-Performance Concrete. Biomimetics 2024, 9, 544.

- Kumar, P.; Pratap, B. Feature engineering for predicting compressive strength of high-strength concrete with machine learning models. Asian J. Civ. Eng. 2024, 25, 723–736.

- Cakiroglu, C.; Aydın, Y.; Bekdaş, G.; Geem, Z.W. Interpretable Predictive Modelling of Basalt Fiber Reinforced Concrete Splitting Tensile Strength Using Ensemble Machine Learning Methods and SHAP Approach. Materials 2023, 16, 4578.

- Bekdaş, G.; Aydın, Y.; Nigdeli, S.M.; Ünver, I.S.; Kim, W.-W.; Geem, Z.W. Modeling Soil Behavior with Machine Learning: Static and Cyclic Properties of High Plasticity Clays Treated with Lime and Fly Ash. Buildings 2025, 15, 288.

- Aydın, Y.; Işıkdağ, Ü.; Bekdaş, G.; Nigdeli, S.M.; Geem, Z.W. Use of Machine Learning Techniques in Soil Classification. Sustainability 2023, 15, 2374.

- Aydin, Y.; Bekdaş, G.; Işikdağ, Ü.; Nigdeli, S.M.; Geem, Z.W. Optimizing artificial neural network architectures for enhanced soil type classification. Geomech. Eng. 2024, 37, 263–277.

- Ji, Y.; Zhang, H.; Zhang, Z.; Liu, M. CNN-based encoder-decoder networks for salient object detection: A comprehensive review and recent advances. Inf. Sci. 2021, 546, 835–857.

- Mirzaei, B.; Nezamabadi-Pour, H.; Raoof, A.; Derakhshani, R. Small Object Detection and Tracking: A Comprehensive Review. Sensors 2023, 23, 6887.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Karim, J.; Nahiduzzaman; Ahsan, M.; Haider, J. Development of an early detection and automatic targeting system for cotton weeds using an improved lightweight YOLOv8 architecture on an edge device. Knowl.-Based Syst. 2024, 300, 112204.

- Fakhrurroja, H.; Fashihullisan, A.A.; Bangkit, H.; Pramesti, D.; Ismail, N.; Mahardiono, N.A. A Vision-Based System: Detecting Traffic Law Violation Case Study of Red-Light Running Using Pre-Trained YOLOv8 Model and OpenCV. In Proceedings of the 2024 IEEE International Conference on Smart Mechatronics (ICSMech), Yogyakarta, Indonesia, 19–21 November 2024; pp. 1–6.

- Rasheed, A.F.; Zarkoosh, M. YOLOv11 optimization for efficient resource utilization. J. Supercomput. 2025, 81, 1085.

- Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; pp. 740–755.

- Jocher, G.; Qiu, J. Ultralytics YOLO Docs. Available online: https://docs.ultralytics.com/ (accessed on 9 September 2025).

- Ayachi, R.; Said, Y.; Afif, M.; Alshammari, A.; Hleili, M.; Ben Abdelali, A. Assessing YOLO models for real-time object detection in urban environments for advanced driver-assistance systems (ADAS). Alex. Eng. J. 2025, 123, 530–549.

- Chicco, D.; Jurman, G. The advantages of the Matthews correlation coefficient (MCC) over F1 score and accuracy in binary classification evaluation. BMC Genom. 2020, 21, 6.

- He, L.-H.; Zhou, Y.-Z.; Liu, L.; Cao, W.; Ma, J.-H. Research on object detection and recognition in remote sensing images based on YOLOv11. Sci. Rep. 2025, 15, 14032.

- Tripathi, A.; Gohokar, V.; Kute, R. Comparative Analysis of YOLOv8 and YOLOv9 Models for Real-Time Plant Disease Detection in Hydroponics. Eng. Technol. Appl. Sci. Res. 2024, 14, 17269–17275.

- Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models are Few-Shot Learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 1–11.

- Perri, D.; Simonetti, M.; Gervasi, O. Synthetic Data Generation to Speed-Up the Object Recognition Pipeline. Electronics 2021, 11, 2.

| Model | mAP50-95 |
|---|---|
| YOLOv8n | 37.3 |
| YOLOv11n | 39.5 |
| YOLOv12n | 40.6 |
| YOLOv11m | 51.5 |
| YOLOv12m | 52.5 |

| Model | Class Name | Precision | 1-Precision | Recall | False Negative Rate (FNR) | F1 Score | Specificity (TNR) | False Positive Rate (FPR) | Accuracy | Misclassification Rate | Macro-F1 | Weighted-F1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| YOLOv11m | Head | 0.9522 | 0.0478 | 0.8805 | 0.1195 | 0.9150 | 0.9848 | 0.0152 | 0.8961 | 0.1039 | 0.6223 | 0.9172 |
| | Helmet | 0.9698 | 0.0302 | 0.9350 | 0.0650 | 0.9521 | 0.9262 | 0.0738 | | | | |
| | Background | 0.0000 | 1.0000 | 0.0000 | 1.0000 | 0.0000 | 0.9263 | 0.0737 | | | | |
| YOLOv12m | Head | 0.9552 | 0.0448 | 0.8913 | 0.1087 | 0.9221 | 0.9858 | 0.0142 | 0.8976 | 0.1024 | 0.6245 | 0.9217 |
| | Helmet | 0.9750 | 0.0250 | 0.9289 | 0.0711 | 0.9514 | 0.9375 | 0.0625 | | | | |
| | Background | 0.0000 | 1.0000 | 0.0000 | 1.0000 | 0.0000 | 0.9237 | 0.0763 | | | | |
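
The derived columns in the table above follow from precision and recall. As a minimal sketch (the function names are illustrative, not taken from the study), the F1 score is the harmonic mean of precision and recall, and Macro-F1 is the unweighted mean of the per-class F1 scores. Because the inputs below are the rounded table values, the recomputed figures should agree with the reported ones only to about three decimal places.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def macro_f1(per_class_f1: list[float]) -> float:
    """Unweighted mean of the per-class F1 scores."""
    return sum(per_class_f1) / len(per_class_f1)


# Precision and recall as reported for YOLOv11m in the table above
# (already rounded to four decimals in the source).
yolov11m = {
    "Head": (0.9522, 0.8805),
    "Helmet": (0.9698, 0.9350),
    "Background": (0.0, 0.0),
}

scores = {name: f1_score(p, r) for name, (p, r) in yolov11m.items()}
for name, score in scores.items():
    print(f"{name}: F1 = {score:.4f}")
print(f"Macro-F1 = {macro_f1(list(scores.values())):.4f}")
```

The Background class, with an F1 of zero, pulls the macro average down to roughly 0.62 even though both object classes score above 0.91. This is why Macro-F1 and Weighted-F1 differ so much in the table.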

| Model | Epoch | Time | Train/Box_loss | Train/Cls_loss | Train/Dfl_loss | Metrics/Precision(B) | Metrics/Recall(B) | Metrics/mAP50(B) | Metrics/mAP50-95(B) | Val/Box_loss | Val/Cls_loss | Val/Dfl_loss | lr/pg0 | lr/pg1 | lr/pg2 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| YOLOv8n | 1 | - | 1.4789 | 1.6877 | 1.2887 | 0.89417 | 0.87535 | 0.9313 | 0.49118 | 1.5729 | 0.94295 | 1.2703 | 0.00333 | 0.00333 | 0.00333 |
| | 50 | - | 1.1169 | 0.57672 | 1.0933 | 0.94847 | 0.93062 | 0.96811 | 0.66174 | 1.1725 | 0.45508 | 1.1052 | 0.005149 | 0.005149 | 0.005149 |
| | 100 | - | 0.92405 | 0.38676 | 1.0114 | 0.9519 | 0.93127 | 0.96793 | 0.66252 | 1.1737 | 0.44413 | 1.1072 | 0.000199 | 0.000199 | 0.000199 |
| YOLOv11n | 1 | 65.3311 | 1.47569 | 1.68443 | 1.33289 | 0.90634 | 0.86088 | 0.92357 | 0.49152 | 1.54324 | 0.95522 | 1.28459 | 0.00333 | 0.00333 | - |
| | 50 | 2773.16 | 1.12328 | 0.58735 | 1.09226 | 0.9461 | 0.93719 | 0.9714 | 0.67527 | 1.13352 | 0.44897 | 1.08096 | 0.005149 | 0.005149 | - |
| | 100 | 5670.2 | 0.92176 | 0.38898 | 1.00745 | 0.94832 | 0.93784 | 0.97037 | 0.68013 | 1.13031 | 0.43515 | 1.08172 | 0.000199 | 0.000199 | - |
| YOLOv12n | 1 | 223.192 | 1.46226 | 1.65184 | 1.30184 | 0.91392 | 0.87746 | 0.93669 | 0.53639 | 1.42353 | 0.88957 | 1.26034 | 0.00333 | 0.00333 | - |
| | 50 | 9078.57 | 1.10491 | 0.56182 | 1.14365 | 0.94452 | 0.9459 | 0.97142 | 0.67258 | 1.14483 | 0.43819 | 1.15867 | 0.005149 | 0.005149 | - |
| | 100 | 18220.3 | 0.88344 | 0.35808 | 1.03329 | 0.94337 | 0.9412 | 0.96677 | 0.67527 | 1.14635 | 0.42833 | 1.16127 | 0.000199 | 0.000199 | - |
| YOLOv11m | 1 | 199.879 | 1.40227 | 1.02382 | 1.27468 | 0.92764 | 0.91636 | 0.954 | 0.57687 | 1.35352 | 0.59099 | 1.17274 | 0.00333 | 0.00333 | - |
| | 50 | 10143.7 | 0.97952 | 0.47022 | 1.04803 | 0.95047 | 0.94135 | 0.97429 | 0.68721 | 1.1287 | 0.41706 | 1.12316 | 0.005149 | 0.005149 | - |
| | 100 | 33267.5 | 0.62764 | 0.26969 | 0.90073 | 0.94964 | 0.93551 | 0.96936 | 0.68489 | 1.14201 | 0.42561 | 1.14905 | 0.000199 | 0.000199 | - |
| YOLOv12m | 1 | 590.136 | 1.38181 | 0.96074 | 1.34625 | 0.94118 | 0.9088 | 0.96037 | 0.56379 | 1.45156 | 0.59458 | 1.28809 | 0.00333 | 0.00333 | - |
| | 50 | 24731.6 | 0.98494 | 0.46164 | 1.08454 | 0.93998 | 0.94636 | 0.97528 | 0.68883 | 1.12704 | 0.40958 | 1.17363 | 0.005149 | 0.005149 | - |
| | 100 | 37824.2 | 0.6086 | 0.25221 | 0.91 | 0.9517 | 0.93576 | 0.97147 | 0.68701 | 1.14465 | 0.41815 | 1.21958 | 0.000199 | 0.000199 | - |
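
The learning-rate columns (lr/pg0 to lr/pg2) in the table above take the same values for every model, which suggests a shared schedule. The sketch below is one possible reading, not a setting confirmed by the source. It assumes a linear decay from an initial rate `lr0 = 0.01` to a final fraction `lrf = 0.01` over 100 epochs, and ignores warm-up. Under those assumptions it reproduces the logged values at epochs 50 and 100 (0.005149 and 0.000199). The epoch 1 value (0.00333) falls in the warm-up phase and is not modelled.

```python
def linear_lr(epoch: int, total_epochs: int = 100,
              lr0: float = 0.01, lrf: float = 0.01) -> float:
    """Learning rate at a 1-indexed epoch under linear decay.

    The rate falls linearly from lr0 towards lr0 * lrf.
    lr0 and lrf are ASSUMED values; the source does not state them.
    Warm-up is deliberately not modelled.
    """
    x = epoch - 1  # 0-indexed position in the schedule
    factor = max(1 - x / total_epochs, 0.0) * (1.0 - lrf) + lrf
    return lr0 * factor


for epoch in (50, 100):
    print(f"epoch {epoch}: lr = {linear_lr(epoch):.6f}")
```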

| Model | Metrics/mAP50(B) (epoch no.) | Metrics/mAP50-95(B) (epoch no.) |
|---|---|---|
| YOLOv8n | 0.96936 (55) | 0.66398 (60) |
| YOLOv11n | 0.97198 (51) | 0.68013 (100) |
| YOLOv12n | 0.97142 (50) | 0.67527 (100) |
| YOLOv11m | 0.97766 (28) | 0.68853 (62) |
| YOLOv12m | 0.97648 (42) | 0.68919 (51) |
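
The table above pairs each metric value with an epoch number, which appears to be the epoch at which the metric peaked. As a hedged illustration of how such a pair can be read from a per-epoch training log, the sketch below scans a CSV log for the row with the highest value of a chosen metric. The column names and the helper `best_epoch` are assumptions made for illustration. The three sample rows are the YOLOv11n values at epochs 1, 50 and 100 from the training table, so the mAP50 peak reported at epoch 51 cannot appear in this reduced sample.

```python
import csv
import io

# Three rows copied from the YOLOv11n entries of the training table.
# A real log would hold one row per epoch; the column names are assumed.
SAMPLE_LOG = """epoch,metrics/mAP50(B),metrics/mAP50-95(B)
1,0.92357,0.49152
50,0.9714,0.67527
100,0.97037,0.68013
"""


def best_epoch(log_text: str, metric: str) -> tuple[int, float]:
    """Return (epoch, value) for the row where `metric` is highest."""
    rows = list(csv.DictReader(io.StringIO(log_text)))
    best = max(rows, key=lambda row: float(row[metric]))
    return int(best["epoch"]), float(best[metric])


for metric in ("metrics/mAP50(B)", "metrics/mAP50-95(B)"):
    epoch, value = best_epoch(SAMPLE_LOG, metric)
    print(f"{metric}: highest value {value} at epoch {epoch}")
```

On this sample, mAP50-95 is highest at epoch 100 with 0.68013, in line with the YOLOv11n entry in the table.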

| Study | Model | Dataset | Class Number | Metrics |
|---|---|---|---|---|
| Xiao and Kang [6] | YOLO-v3, Inception-SSD, R-FCN-ResNet101 and Faster-RCNN-ResNet101 | Alberta Construction Image Dataset (ACID) (10,000 images) | 10 | mAP = 89.2% |
| Araya-Aliaga et al. [7] | RetinaNet, Faster R-CNN and YOLOv5 | High-quality synthetic images | 4 | F1 score = 63.7%, precision = 66.8% |
| Kazaz et al. [8] | VGG-16 | 800 aerial images collected using unmanned aerial vehicles (UAVs) | - | accuracy = 100% |
| Seth and Sivagami [9] | YOLOv8 | Open-source dataset containing 5000 images | 3 | precision = 1 |
| Barlybayev et al. [10] | YOLOv8m | Color Helmet and Vest (CHV) and Safety HELmet dataset with 5K images (SHEL5K) | 4 | evaluation score = 0.929 |
| Bai et al. [11] | YOLOv8-seg | - | 8 | mAP = 0.866 |
| Jiao et al. [12] | YOLOv8 | 1584 images | 2 | mAP = 0.975 |
| Biswas and Hoque [13] | YOLOv8 | 1026 images | 7 | mAP = 95.4 |
| El-Kafrawy and Seddik [14] | YOLOv8, YOLOv9, YOLOv11 and YOLOv12 | 2047 images | 7 | box precision = 0.798, mAP50 = 0.553 |
| This study | YOLOv8, YOLOv11 and YOLOv12 (with the OpenAI GPT-3.5-turbo large language model and a Streamlit-based GUI) | Open-source dataset containing 7035 images [16] | 2 | mAP50 = 0.97766 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Işıkdağ, Ü.; Çemrek, H.A.; Sönmez, S.; Aydın, Y.; Bekdaş, G.; Geem, Z.W. A Real-Time Advisory Tool for Supporting the Use of Helmets in Construction Sites. Information 2025, 16, 824. https://doi.org/10.3390/info16100824
