Image Augmentation Using Both Background Extraction and the SAHI Approach in the Context of Vision-Based Insect Localization and Counting

Abstract

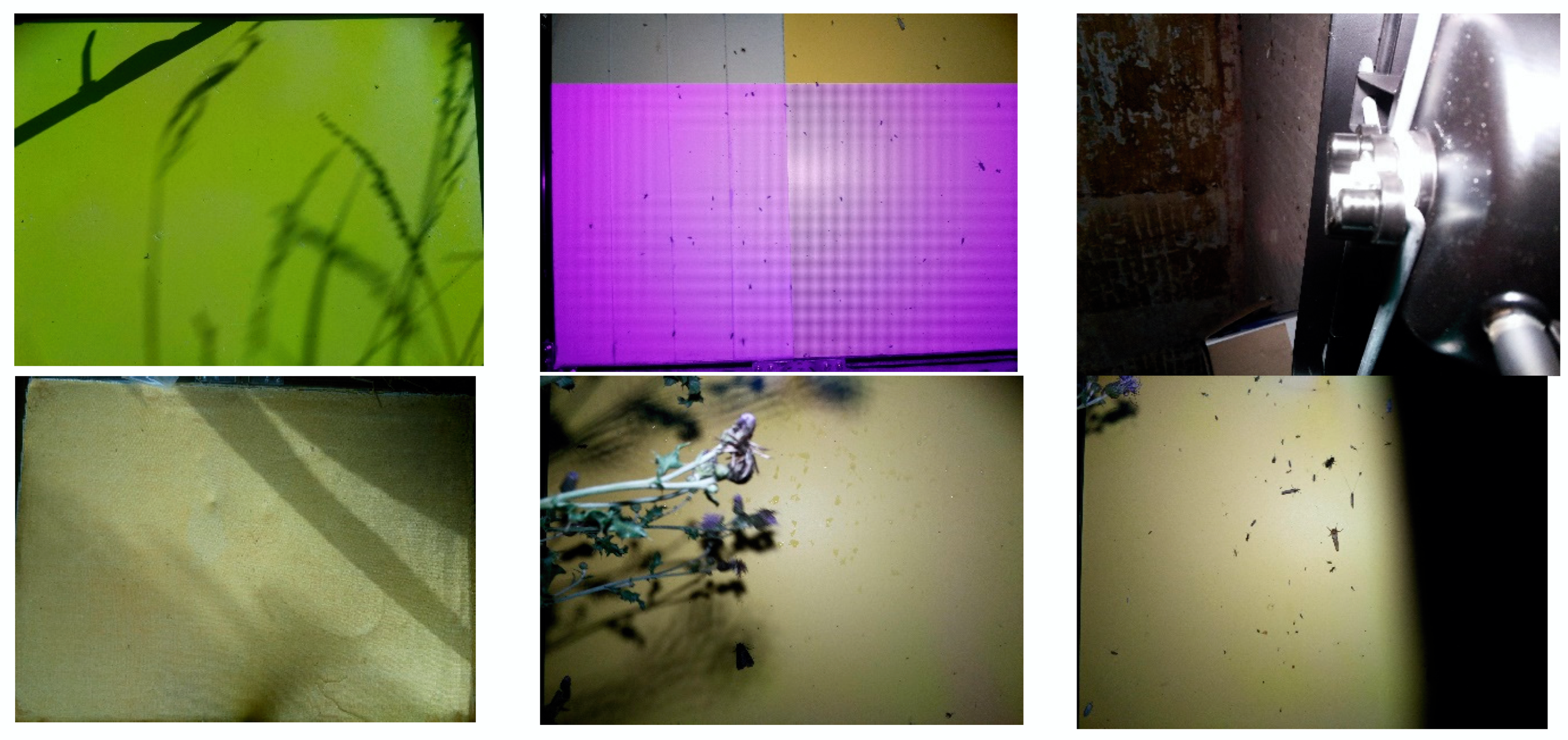

1. Introduction

2. Methods

2.1. Image Preprocessing

2.1.1. Train–Test Split

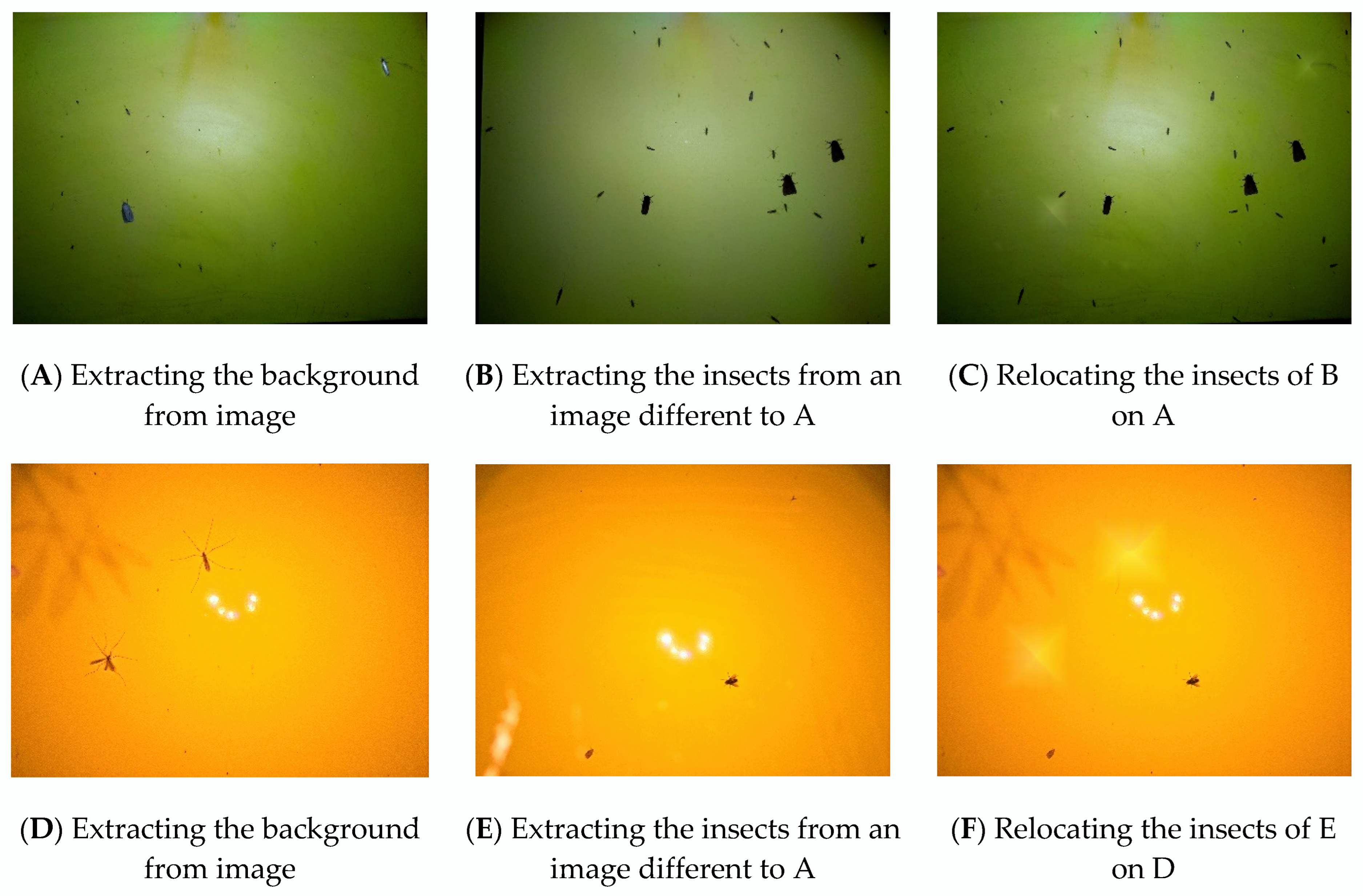

2.1.2. Augmentation

- Using the annotation file, we located the insects’ bounding boxes in the image.

- We created a copy of this selected area including the insect.

- The background within the bounding box was removed, turning it white.

- The copy was then converted to grayscale.

- We iterated through each pixel in the grayscale image, identifying pixels with values below 240 (with 255 representing pure white).

- For each such pixel, we copied the corresponding RGB value (all three channels) from the original insect image to the same location in the insect-free image.
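
The steps above can be sketched as follows. This is a minimal illustration, not the authors' code: the function name `paste_insect`, the `(x_min, y_min, x_max, y_max)` box format, the `top_left` placement argument and the luminance weights used for the grayscale conversion are all assumptions. It also assumes the background inside the bounding box has already been turned white, and it replaces the per-pixel loop with an equivalent boolean mask.

```python
import numpy as np

WHITE_THRESHOLD = 240  # from the text: grayscale values below 240 count as insect pixels


def paste_insect(source, target, box, top_left, threshold=WHITE_THRESHOLD):
    """Copy the non-white pixels of one bounding box from `source` into a copy of `target`.

    source, target: uint8 RGB arrays of shape (H, W, 3).
    box: (x_min, y_min, x_max, y_max) in source pixel coordinates (assumed format).
    top_left: (x, y) position of the patch in the target (assumed to fit inside it).
    """
    x0, y0, x1, y1 = box
    patch = source[y0:y1, x0:x1].copy()            # copy of the selected area

    # Grayscale conversion with standard luminance weights (an assumption).
    gray = patch @ np.array([0.299, 0.587, 0.114])

    mask = gray < threshold                        # True where the pixel is not near-white

    out = target.copy()                            # leave the insect-free image untouched
    tx, ty = top_left
    h, w = mask.shape
    region = out[ty:ty + h, tx:tx + w]             # view into the output image
    region[mask] = patch[mask]                     # transfer all three RGB channels
    return out
```

Calling `paste_insect(insect_image, background_image, box, (x, y))` returns a new image in which only the insect pixels have been transferred, so the near-white background of the patch does not overwrite the target.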

2.1.3. Application of the SAHI Approach

2.1.4. First Dataset

2.1.5. Second Dataset

2.1.6. Third Dataset

2.2. Hardware

2.3. Object Recognition Algorithm

3. Results

1. Performance Metrics Across Datasets

2. SAHI Processing Impact

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

mAP50 (%) by fold

| Fold | A | B | C | D | E | F | G |
|---|---|---|---|---|---|---|---|
| 1 | 63.9 | 33.6 | 66.7 | 28.0 | 67.3 | 57.7 | 71.8 |
| 2 | 63.6 | 33.6 | 70.1 | 34.3 | 67.0 | 55.5 | 72.7 |
| 3 | 64.8 | 39.5 | 66.7 | 32.9 | 69.8 | 55.4 | 73.2 |
| 4 | 62.7 | 35.8 | 66.0 | 36.9 | 67.1 | 54.7 | 72.5 |
| 5 | 62.8 | 38.0 | 67.4 | 33.2 | 67.8 | 57.5 | 73.5 |
| mean | 63.6 | 36.1 | 67.4 | 33.1 | 67.8 | 56.2 | 72.7 |
| std | 0.9 | 2.6 | 1.6 | 3.2 | 1.2 | 1.4 | 0.7 |
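
The mean and std rows appear consistent with the arithmetic mean and the sample standard deviation (n − 1 denominator) of the five folds. This is inferred from the numbers, not stated in the text. The short check below recomputes both for columns A and G; the helper name `summarise` is hypothetical.

```python
import statistics


def summarise(values):
    """Return (mean, sample standard deviation), each rounded to one decimal."""
    return round(statistics.mean(values), 1), round(statistics.stdev(values), 1)


# Per-fold mAP50 (%) values copied from columns A and G of the table.
column_a = [63.9, 63.6, 64.8, 62.7, 62.8]
column_g = [71.8, 72.7, 73.2, 72.5, 73.5]

print(summarise(column_a))  # (63.6, 0.9)
print(summarise(column_g))  # (72.7, 0.7)
```

Using the population standard deviation instead (`statistics.pstdev`) gives 0.8 for column A, which does not match the table, so the sample form is the more plausible reading.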

| Approach | mAP50 (%) | Time (s) |
|---|---|---|
| YOLOv10n | 67.3 | 5.15 |
| SAHI 640 × 640 | 57.7 | 103.34 |
| Combined YOLOv10n + SAHI 800 × 800 | 71.8 | 76.37 |
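
SAHI runs the detector on overlapping slices of an image and merges the per-slice detections, so each image requires several inferences, which is consistent with the longer times in the table. The sketch below only computes the slice windows. It is not the SAHI library's implementation, and the function name `slice_boxes`, the 20% overlap and the example image size are assumptions made for illustration.

```python
def slice_boxes(width, height, tile=640, overlap=0.2):
    """Return (x_min, y_min, x_max, y_max) windows that cover the whole image.

    tile: slice edge length in pixels (640 and 800 appear in the table).
    overlap: fraction of a tile shared with its neighbour (assumed value).
    """
    step = max(1, int(tile * (1 - overlap)))

    def starts(size):
        # A single window is enough when the image is not larger than a tile.
        if size <= tile:
            return [0]
        positions = list(range(0, size - tile, step))
        positions.append(size - tile)  # last window sits flush with the border
        return positions

    return [
        (x, y, min(x + tile, width), min(y + tile, height))
        for y in starts(height)
        for x in starts(width)
    ]


# Hypothetical 1280 x 640 image: three overlapping 640 x 640 windows.
for box in slice_boxes(1280, 640):
    print(box)
```

Each window would be passed to the detector separately, and the resulting boxes shifted back by the window's `(x_min, y_min)` offset before merging.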

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Saradopoulos, I.; Potamitis, I.; Rigakis, I.; Konstantaras, A.; Barbounakis, I.S. Image Augmentation Using Both Background Extraction and the SAHI Approach in the Context of Vision-Based Insect Localization and Counting. Information 2025, 16, 10. https://doi.org/10.3390/info16010010