Abstract

Word sense disambiguation (WSD) remains a major challenge in natural language processing, especially for low-resource languages, where linguistic variation and scarce data make model training and evaluation more difficult. The goal of this comprehensive review and meta-analysis of the literature is to summarize the body of knowledge regarding WSD techniques for low-resource languages, emphasizing the advantages and disadvantages of different strategies. A thorough search of several databases identified articles assessing WSD methods in low-resource languages. Effect sizes and performance measures were extracted from a subset of the studies. Pooled effect estimates were computed by meta-analysis, and heterogeneity among studies was evaluated. The Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines were used to select the relevant papers for extraction. The meta-analysis included 32 studies, encompassing a range of WSD methods and low-resourced languages. The overall pooled effect size indicated moderate effectiveness of WSD techniques. Heterogeneity among studies was high, with an I² value of 82.29%, suggesting substantial variability in WSD performance across studies. The τ² value of 5.819 further reflects the extent of between-study variance. This variability underscores the challenges in generalizing findings and highlights the influence of diverse factors such as language-specific characteristics, dataset quality, and methodological differences. The p-values from the meta-regression (0.454) and the meta-analysis (0.440) suggest that the variability in WSD performance is not statistically significantly associated with the investigated moderators, indicating that the performance differences may be influenced by factors not fully captured in the current analysis.
The absence of significant p-values raises the possibility that the models and techniques in use do not yet adequately address the problems posed by low-resource settings.

1. Introduction

Natural language processing researchers consider WSD among the thorniest open problems in the field. Natural languages contain many ambiguities, and researchers have tackled the problem in a range of languages in order to obtain good disambiguation results [1].

Word sense disambiguation is the ability to computationally determine a word’s meaning in context. WSD is a well-known NLP problem that has been described as AI-complete. Although WSD is frequently an intermediate step rather than an end task, it is crucial for numerous applications, including machine translation, information retrieval, and text summarization [2]. Many natural language processing (NLP) tasks, including sentiment analysis, machine translation, multilingual information extraction, and the Semantic Web, benefit from word sense disambiguation [3].

Regardless of language, the ambiguity of a polysemous word is its capacity to carry several meanings. Several word sense disambiguation (WSD) strategies have been applied to resolve this ambiguity. These methods determine a word’s correct meaning based on its specific context [4]. Word sense ambiguity is one of the main issues in natural language processing. Ambiguous words frequently have multiple meanings depending on the context, and WSD is the process of classifying an ambiguous word’s sense based on that context. It significantly affects a variety of applications, including text categorization, emotion analysis, speech recognition, machine translation, topic detection, and search engines [5].

Word sense disambiguation (WSD) is a fundamental component of natural language processing (NLP) systems that aim to comprehend and interpret human language contextually and semantically. Since lexical ambiguity is a fundamental aspect of natural language, resolving it is essential to raising the quality and precision of NLP applications such as sentiment analysis, text summarization, machine translation, and question answering systems [6].

There are several approaches to word sense disambiguation: supervised machine learning methods, which train a classifier such as a support vector machine or k-nearest neighbors on a large manually annotated corpus and are among the more effective approaches; dictionary-based methods, which take advantage of lexical knowledge bases; and fully unsupervised methods, which induce word senses from input text by clustering word occurrences [2,7,8].

Among these, the supervised learning strategy is the most effective [3]. The researchers [9] argue that a semi-supervised strategy can also be used for word sense disambiguation, since its precision is very close to that of a supervised approach. Moreover, the supervised method produces more accurate word sense disambiguation results when a neural network model is used as the classifier, and convolutional neural networks have also been adapted to natural language processing.

This review discusses the existing methods for word sense disambiguation in morphologically rich, low-resourced languages. Since the effectiveness of most WSD algorithms relies largely on the corpus available for the language, the problem becomes more difficult for a low-resourced language [10].

2. Related Work

The researchers [7] developed a word sense disambiguation system for the Marathi language using the Marathi WordNet and the Lesk technique. They employed synset verification, implemented with the Lesk algorithm, which also confirms the validity of the set; it produces accurate results in many cases but is not very effective in others. The similarity statistics are not particularly accurate because the morphology of Indian languages varies greatly. The method was tested only by the authors themselves, and a further disadvantage is that the algorithm considers only nouns.
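The gloss-overlap idea behind the Lesk technique can be illustrated with a minimal sketch. The English toy glosses, the stopword list, and the function names below are assumptions made for illustration only; they are not taken from the Marathi WordNet or from the system in [7].

```python
from typing import Dict, Set

# Hypothetical toy sense inventory: sense id -> gloss text (not from any real WordNet).
TOY_GLOSSES: Dict[str, str] = {
    "bank#finance": "an institution that accepts money deposits and gives loans",
    "bank#river": "the sloping land beside a river or stream",
}

# Small illustrative stopword list (an assumption, not a standard resource).
STOPWORDS: Set[str] = {"a", "an", "the", "that", "and", "or", "of", "to", "in", "on", "i", "we"}


def tokenize(text: str) -> Set[str]:
    """Lowercase, split on whitespace, and drop stopwords."""
    return {tok for tok in text.lower().split() if tok not in STOPWORDS}


def simplified_lesk(context: str, glosses: Dict[str, str]) -> str:
    """Return the sense whose gloss shares the most words with the context."""
    context_tokens = tokenize(context)
    best_sense, best_overlap = "", -1
    for sense, gloss in glosses.items():
        overlap = len(context_tokens & tokenize(gloss))
        if overlap > best_overlap:
            best_sense, best_overlap = sense, overlap
    return best_sense


print(simplified_lesk("I went to deposit money in the bank", TOY_GLOSSES))
print(simplified_lesk("we sat beside the river bank", TOY_GLOSSES))
```

Note that "deposit" in the first context does not match "deposits" in the gloss. Exact string overlap misses inflected forms, which is one reason overlap statistics become less reliable for morphologically rich languages.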

For supervised medical word sense disambiguation, the researchers [11] presented a novel deep neural network architecture based on a layered bidirectional LSTM network, on top of which max-pooling over many time steps produces a dense representation of the context. To determine the best input form for the max-pooling layer, four further variations were made to the LSTM’s output. Additionally, the researchers trained a “universal” network to jointly disambiguate all the target ambiguous terms. In the universal network, the ambiguous word’s embedding is concatenated to the max-pooled vector as a “hint” layer. According to the results, the universal network achieves a test accuracy of around 90% [11].
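The two operations described above, max-pooling over time steps and concatenating the target word's embedding as a hint, can be sketched in plain Python. The hidden-state values and function names are hypothetical; a real implementation in [11] would operate on tensors produced by a trained BiLSTM.

```python
from typing import List

Vector = List[float]


def max_pool_over_time(hidden_states: List[Vector]) -> Vector:
    """Take the element-wise maximum across all time steps."""
    return [max(column) for column in zip(*hidden_states)]


def add_hint(pooled: Vector, target_embedding: Vector) -> Vector:
    """Concatenate the ambiguous word's embedding to the pooled context vector."""
    return pooled + target_embedding


# Hypothetical BiLSTM outputs: three time steps, three hidden units each.
hidden = [
    [0.1, -0.5, 0.3],
    [0.7, 0.2, -0.1],
    [0.0, 0.9, 0.2],
]
pooled = max_pool_over_time(hidden)          # [0.7, 0.9, 0.3]
classifier_input = add_hint(pooled, [1.0, 0.0])
print(classifier_input)                      # [0.7, 0.9, 0.3, 1.0, 0.0]
```

The hint lets a single shared classifier know which ambiguous word it is being asked about, which is what makes joint training over all target terms possible.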

The researcher [10] proposed a creative strategy for addressing WSD for a low-resourced language with the assistance of a high-resource language. To enhance performance and increase the efficacy of WSD, researchers employed a modified version of the Lesk Algorithm driven by a Word2Vec model trained in a High Resource Language. Although the experiment was conducted using the low-resourced Assamese language, the technique works with other low-resourced languages as well. The agglutinative nature of Assamese, an Indian language with rich morphology, makes the problem hard. By creating and utilizing a word extractor for the Assamese language to provide the tokens during processing, researchers were able to significantly enhance performance. The results show that it is possible to distinguish between distinct senses of the same word using this unique approach.

The researchers [12] give an example of how Urdu semantic labeling techniques might be developed and assessed using the suggested corpus. The suggested corpus has 8000 tokens spread over the following genres or domains: Wikipedia, social media, news, and historical texts (each with 2000 tokens). Using the USAS (UCREL Semantic Analysis System) semantic taxonomy, which offers a comprehensive set of semantic fields for coarse-grained annotation, the corpus has been manually annotated with 21 major semantic fields and 232 sub-fields. From the suggested corpus, the researchers collected local, topical, and semantic features, and then used seven distinct supervised multi-target classifiers on them. Findings indicate that 94% of the suggested corpus semantic domains for coarse-grained annotation are accurate [12].

The method proposed by [10] follows a lexical dictionary-based approach to WSD: a Word2Vec model trained on a high-resource language powers a modified version of the Lesk algorithm. In addition to eliminating stopwords, the researcher improved performance by creating and using a Word-Extractor that supplies tokens with data from the Assamese WordNet, even in cases where the original word form is not present. The solution is fully automated. The experiment’s findings support the viability of this method for distinguishing between senses of the same term. When used independently as a plug-in alongside other natural language processing pipelines, this WSD paradigm can greatly enhance processing for other low-resourced languages.
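One way to read an embedding-driven Lesk variant is to replace exact word overlap with vector similarity between the context and each sense gloss. The sketch below is an illustration under that assumption, not the algorithm of [10]: the two-dimensional vectors are invented, and averaging word vectors is only one possible way to represent a gloss.

```python
import math
from typing import Dict, List

Vector = List[float]


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity; returns 0.0 when either vector is empty or all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def mean_vector(tokens: List[str], embeddings: Dict[str, Vector]) -> Vector:
    """Average the vectors of the tokens that have an embedding."""
    vectors = [embeddings[t] for t in tokens if t in embeddings]
    if not vectors:
        return []
    return [sum(component) / len(vectors) for component in zip(*vectors)]


def embedding_lesk(context: List[str],
                   sense_glosses: Dict[str, List[str]],
                   embeddings: Dict[str, Vector]) -> str:
    """Pick the sense whose averaged gloss vector is closest to the context vector."""
    context_vec = mean_vector(context, embeddings)
    scores = {
        sense: cosine(context_vec, mean_vector(gloss, embeddings))
        for sense, gloss in sense_glosses.items()
    }
    return max(scores, key=scores.get)


# Hypothetical 2-d embeddings standing in for a Word2Vec model.
TOY_EMBEDDINGS = {
    "money": [1.0, 0.0], "loan": [0.9, 0.1], "deposit": [0.8, 0.2],
    "river": [0.0, 1.0], "shore": [0.2, 0.8], "water": [0.1, 0.9],
}
TOY_SENSES = {"bank#finance": ["money", "loan"], "bank#river": ["river", "shore"]}

print(embedding_lesk(["deposit"], TOY_SENSES, TOY_EMBEDDINGS))
print(embedding_lesk(["water"], TOY_SENSES, TOY_EMBEDDINGS))
```

Neither context word appears in any gloss, so a purely overlap-based Lesk would score both senses zero; the vector comparison still separates them.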

The supervised WSD technique applies machine-learning algorithms to manually created sense-annotated data or semantically annotated corpora, inducing classification models that determine the appropriate sense for each specific context [13,14]. According to [15], supervised learning techniques handle structured information using an annotated training corpus. Their primary drawback is their reliance on a substantial amount of manually annotated data. Neural networks, K-Nearest Neighbors (KNN), Support Vector Machines, Decision Trees, and the Naïve Bayes classifier are among the computational intelligence approaches used for supervised classification. Sarmah and Sarma (2016) [16] employed the supervised Naïve Bayes classifier for an automatic disambiguation task. They created training data with sense-annotated features using 160 ambiguous terms from WordNet and the Assamese Corpus, and their results showed an accuracy of 71%.

The researchers [17] presented two deep learning-based models for supervised WSD: a model based on a bidirectional long short-term memory (BiLSTM) network and an attention model based on a self-attention architecture. The results show that, on the MSH WSD dataset, the BiLSTM neural network model with an appropriate upper-layer structure outperforms the existing state-of-the-art models, while the attention model ran three to four times faster than the BiLSTM model with good accuracy. Furthermore, the researchers [17] trained “universal” models to jointly disambiguate all ambiguous words. In the universal models, the target ambiguous word’s embedding is concatenated to the max-pooled vector as a “hint.” The universal BiLSTM neural network model achieved an accuracy of almost 90% [17].

Although they lack subjectivity and overlook the semantics of the underlying textual structures, automatic annotators automatically annotate the data to create the training set for the supervised classifier. The researchers [18] considered domain-specific aspects of the annotation process to create an automated annotation system that is both scalable and semantically rich. Using distributional semantic models (LSA and Word2Vec) to supplement the novel bootstrapping algorithm, the authors [18] developed an improved method for automatically annotating Tweets. The suggested algorithm was tested on 12,000 crowdsourced annotated Tweets, and it produced an accuracy of 68.56%, higher than the baseline.

The goal of the research in [19] is to determine the meaning of an ambiguous word, addressing word sense disambiguation in the Arabic language. To model the problem, the researchers [19] use supervised sequence-to-sequence learning. For Arabic word sense disambiguation, they presented recurrent neural network models, BERT-based models, and combined POS-BERT models. The POS-BERT method yields 96.33% accuracy [19].

The researchers [20,21] have demonstrated strong performance on this problem by thoroughly examining supervised machine-learning techniques. They examined four approaches that incorporate pre-trained word embeddings as features for training two supervised machine learning models: Naïve Bayes (NB) and Support Vector Machines (SVM). One of the training features is the context of the target abbreviation, which is applied to 500 sentences for each of the 13 abbreviations that were taken from public clinical note datasets from Fairview Health Services, a Twin Cities organization affiliated with the University of Minnesota (UMN). Their findings demonstrated that SVM outperforms NB in all four strategies; the model with the highest accuracy, which used embeddings pre-trained on texts from PubMed, Wikipedia, and PMC (PubMed Central), reached 97.08% accuracy.

The work in [22] addresses word sense disambiguation (WSD) in Bengali, one of the Indian languages with fewer resources. The work proceeds in two sequential phases. In the first phase, four popular approaches that are frequently used for word sense disambiguation, Decision Tree (DT), Support Vector Machine (SVM), Artificial Neural Network (ANN), and Naïve Bayes (NB), are examined using conventional methodologies, with appropriate adjustments made during application to obtain the intended results. In the second phase, the outcomes of the first experiments are used to propose a combined strategy. The accuracy rates of the baseline techniques were 63.84%, 76.9%, 76.23%, and 80.23%, in that order [22].

The Naïve Bayes supervised classifier has been used to disambiguate words in the Punjabi language. Building supervised machine learning models requires a thorough understanding of the feature extraction procedure. In the proposed Punjabi WSD system, Bag of Words (BoW) and collocation models are used independently to extract pertinent features. While the collocation model uses the two words before and the two words after the target word as features, the BoW model uses all words around the target word. The same training dataset was used to construct both models. The performance of the Naïve Bayes algorithm was found to be strongly influenced by the choice of smoothing parameter. Tests were conducted on 150 of the most ambiguous nouns taken from the Punjabi WordNet [23].
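The difference between the two feature models can be made concrete with a small sketch. The English sentence, the `<PAD>` marker, and the feature names such as `w-2` are illustrative assumptions rather than details of the system in [23].

```python
from typing import Dict, List


def bow_features(tokens: List[str], target_index: int) -> List[str]:
    """Bag of words: every distinct word in the sentence except the target itself."""
    others = tokens[:target_index] + tokens[target_index + 1:]
    return sorted(set(others))


def collocation_features(tokens: List[str], target_index: int, window: int = 2) -> Dict[str, str]:
    """Collocation: the words at fixed offsets before and after the target."""
    features = {}
    for offset in range(-window, window + 1):
        if offset == 0:
            continue
        position = target_index + offset
        inside = 0 <= position < len(tokens)
        features[f"w{offset:+d}"] = tokens[position] if inside else "<PAD>"
    return features


sentence = ["she", "sat", "on", "the", "bank", "of", "the", "river"]
target = sentence.index("bank")

print(bow_features(sentence, target))
# ['of', 'on', 'river', 'sat', 'she', 'the']
print(collocation_features(sentence, target))
# {'w-2': 'on', 'w-1': 'the', 'w+1': 'of', 'w+2': 'the'}
```

In this example the most informative word, "river", lies three positions from the target, so it is captured by the BoW features but falls outside the two-word collocation window.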

The NB algorithm is a probabilistic model that applies Bayes’ rule and assumes conditional independence of the features given the class label; it has been used with considerable success for WSD tasks [13,24]. The NB approach scores each candidate sense using two quantities: the probability of each sense (S_i) of a word (w) and the conditional probability of each feature (f_j) in the context given that sense [14]. The appropriate sense in the context is the one that maximizes the following expression [14]:

$$\hat{S} = \arg\max_{S_i} \; P(S_i) \prod_{j=1}^{m} P(f_j \mid S_i)$$

In the formula above, m is the number of features, P(S_i) is estimated from the frequency of sense S_i in the training set, and P(f_j | S_i) is estimated from the frequency of feature f_j in the presence of sense S_i.
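The decision rule described above, choosing the sense that maximizes the sense probability multiplied by the product of the feature likelihoods, can be sketched as follows. The class name, the toy training examples, and the use of additive (Laplace) smoothing with parameter `alpha` are assumptions for illustration; log-probabilities are summed instead of multiplying raw probabilities to avoid numerical underflow.

```python
import math
from collections import Counter, defaultdict
from typing import Iterable, List, Tuple


class NaiveBayesWSD:
    """Minimal Naive Bayes sense classifier with additive smoothing (illustrative sketch)."""

    def __init__(self, alpha: float = 1.0) -> None:
        self.alpha = alpha                      # smoothing parameter (assumed additive)
        self.sense_counts: Counter = Counter()
        self.feature_counts = defaultdict(Counter)
        self.vocabulary = set()

    def fit(self, examples: Iterable[Tuple[List[str], str]]) -> None:
        """Count sense frequencies and feature frequencies per sense."""
        for features, sense in examples:
            self.sense_counts[sense] += 1
            for feature in features:
                self.feature_counts[sense][feature] += 1
                self.vocabulary.add(feature)

    def predict(self, features: List[str]) -> str:
        """Return argmax over senses of log P(S_i) + sum_j log P(f_j | S_i)."""
        total = sum(self.sense_counts.values())
        best_sense, best_score = "", -math.inf
        for sense, count in self.sense_counts.items():
            score = math.log(count / total)
            denominator = (sum(self.feature_counts[sense].values())
                           + self.alpha * len(self.vocabulary))
            for feature in features:
                numerator = self.feature_counts[sense][feature] + self.alpha
                score += math.log(numerator / denominator)
            if score > best_score:
                best_sense, best_score = sense, score
        return best_sense


# Hypothetical sense-annotated contexts for the word "bank".
training = [
    (["money", "loan"], "finance"),
    (["deposit", "money"], "finance"),
    (["river", "water"], "river"),
    (["fishing", "river"], "river"),
]
model = NaiveBayesWSD(alpha=1.0)
model.fit(training)
print(model.predict(["money", "deposit"]))
print(model.predict(["water"]))
```

Without smoothing (`alpha = 0`), any feature unseen with a sense would give a zero likelihood and an undefined logarithm, which is why the choice of smoothing parameter matters in practice.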

Researchers [25] describe a supervised methodology, applied after the required adjustments, for word sense disambiguation in Bangla. When applied to a database of the 19 most used ambiguous Bangla words, the Naïve Bayes probabilistic model, selected as the baseline method for sense classification, initially produces modest results with 81% accuracy. Two modifications are made to the baseline method: (1) a lemmatization process is integrated into the system, and (2) the operational process is bootstrapped. As a result, the accuracy increases to 84%, which is encouraging for the disambiguation process overall, since the current method can be refined further to obtain better results.

Using supervised approaches, the researchers [26] investigated a WSD system for the Punjabi language. A sense-tagged corpus of 150 ambiguous Punjabi nouns was prepared manually. The work investigated the following techniques: Decision List, Decision Tree, Naïve Bayes, K-Nearest Neighbor (K-NN), Random Forest, and Support Vector Machines (SVM). The classifiers employed unigram, bigram, collocation, co-occurrence, and syntactic count-based features. Semantic features of Punjabi were derived from unlabeled Wikipedia text using word2vec CBOW and skip-gram shallow neural network models. Two additional deep-learning classifiers were also used for the WSD of Punjabi words: multilayer perceptrons and long short-term memory (LSTM). Using word embedding features, the LSTM classifier attained an accuracy of 84% [26].

Unsupervised methods process unstructured semantic information to organize vast amounts of textual material [15]. By using training data without exact annotation, they circumvent the main limitation of supervised techniques [15]. Dimension reduction and clustering techniques such as Latent Semantic Analysis (LSA), Latent Dirichlet Allocation (LDA), and PageRank are used to identify semantic concepts. One drawback of these techniques is that they do not demarcate context groups [15].

An innovative approach to identifying the latent meaning that connects a sentence’s words has been proposed by researchers [27]. They employ a graph to uncover this implicit information, which is then used to disambiguate the ambiguous word. The results demonstrate that the algorithm correctly identifies the sense of both homonyms and polysemous words. The technique achieved an accuracy of 79.6%, which is 2.5 percent better than the best unsupervised approach in SemEval-2007, and it outperformed the approaches submitted to the SemEval-2013 word sense disambiguation task.

The researchers [28,29] developed an unsupervised graph-based system for the Hindi word sense disambiguation challenge with an emphasis on word sense ambiguity. With the help of the suggested method, a weighted graph is produced, with nodes standing in for the meanings of words that occur in the context of ambiguous phrases and edges for the relationships between them. It employs a random walk-style approach to determine which sense of a polysemous word is more suited in a particular context and leverages semantic similarity computed from Hindi WordNet to provide weight to edges. Twenty polysemous nouns from a sense-annotated dataset were used for the evaluation. A higher overall accuracy of 63.39% was noted by the researchers [28,29] than in previously published studies using the same dataset.
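A random walk over a weighted sense graph can be sketched with a generic PageRank-style power iteration. This is an illustration of the general idea, not the specific algorithm of [28,29]: the sense labels and edge weights below are invented, whereas the cited work derives weights from Hindi WordNet similarity.

```python
from typing import Dict

Graph = Dict[str, Dict[str, float]]


def weighted_pagerank(graph: Graph, damping: float = 0.85, iterations: int = 50) -> Dict[str, float]:
    """Power iteration in which a walker follows edges in proportion to their weight.

    Assumes every node has at least one outgoing edge.
    """
    nodes = list(graph)
    rank = {node: 1.0 / len(nodes) for node in nodes}
    out_weight = {node: sum(graph[node].values()) for node in nodes}
    for _ in range(iterations):
        new_rank = {}
        for node in nodes:
            incoming = sum(
                rank[other] * graph[other][node] / out_weight[other]
                for other in nodes
                if node in graph[other]
            )
            new_rank[node] = (1.0 - damping) / len(nodes) + damping * incoming
        rank = new_rank
    return rank


def add_edge(graph: Graph, a: str, b: str, weight: float) -> None:
    """Insert an undirected weighted edge."""
    graph.setdefault(a, {})[b] = weight
    graph.setdefault(b, {})[a] = weight


# Hypothetical graph for "bank" in a context containing "water" and "fish".
sense_graph: Graph = {}
add_edge(sense_graph, "bank#river", "water#liquid", 0.8)
add_edge(sense_graph, "bank#river", "fish#animal", 0.6)
add_edge(sense_graph, "bank#finance", "water#liquid", 0.1)
add_edge(sense_graph, "bank#finance", "fish#animal", 0.1)
add_edge(sense_graph, "water#liquid", "fish#animal", 0.7)

ranks = weighted_pagerank(sense_graph)
candidates = ["bank#river", "bank#finance"]
print(max(candidates, key=ranks.get))
```

The sense of the target word with the highest stationary score is selected; senses that are strongly connected to the senses of the context words accumulate more of the walker's probability mass.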

An unsupervised graph-based method for Hindi word sense disambiguation has been implemented by the researchers [30]. To aid disambiguation, they use a random walk on a graph constructed specifically for each occurrence. The senses of the words that appear in the context of the ambiguous word are represented as nodes in the graph, and the edge weights are determined by the semantic similarity of the two nodes they connect. The researchers compare two path-based similarity metrics. According to the experiments, the Leacock–Chodorow similarity measure outperforms the shortest path measure. The researchers [30] reported an average accuracy of 72.09% for all five cases of polysemous nouns.
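The two path-based measures differ only in how they transform the shortest-path distance between two senses in the taxonomy. The sketch below uses one common formulation, in which the distance is counted in edges and incremented by one; the exact variant used in [30] is not stated here, so the formulas and the example depth are assumptions.

```python
import math


def path_similarity(distance: int) -> float:
    """Shortest-path measure: inverse of the path length counted in nodes."""
    return 1.0 / (distance + 1)


def lch_similarity(distance: int, max_depth: int) -> float:
    """Leacock-Chodorow measure: negative log of path length scaled by taxonomy depth."""
    return -math.log((distance + 1) / (2.0 * max_depth))


# Compare the two measures for increasing distances in a taxonomy of assumed depth 10.
for edges in (0, 1, 3, 6):
    print(edges, round(path_similarity(edges), 3), round(lch_similarity(edges, 10), 3))
```

Both scores fall as the distance grows, but the Leacock-Chodorow score also depends on the depth of the taxonomy, so the same distance counts as closer in a deeper hierarchy.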

Using pre-trained language models’ masked language model task, the researchers [31] present a novel unsupervised technique for HowNet-based Chinese WSD. In the studies, researchers construct a new and larger HowNet-based WSD dataset, considering the current evaluation dataset to be small and outdated. The model performs noticeably better than all the baseline techniques, according to experimental results.

The impact of word embedding on developing an unsupervised Arabic sense inventory is presented by the researchers [32]. To explore their impact on the resultant sense inventory and their effectiveness in word sense disambiguation for Arabic context, three pre-trained embeddings are examined. A fully unsupervised technique based on a graph-based word sense induction algorithm is used to create sense inventories. According to the findings, the Aravec-Twitter inventory obtains an accuracy of 0.47 for 50 neighbors, which is the best result; for 200 neighbors, it is almost as accurate as the Fasttext inventory [32].

The knowledge-based approach relies on diverse knowledge sources such as machine-readable dictionaries (e.g., WordNet) or sense inventories and uses information explicitly articulated in the form of rules or lexicons [15]. Knowledge-based schemes are categorized by the type of resource they use, such as Machine-Readable Dictionaries (MRDs), thesauri, and computational lexicons or lexical knowledge bases [13,15].

A knowledge-based method for word sense disambiguation (WSD) has been introduced for the Bengali language. The input dataset came from the Bengali Text Corpus, created as part of the Government of India’s Technology Development for Indian Languages (TDIL) initiative, and the knowledge base was the Bengali WordNet, created at ISI Kolkata [33]. The method determines the sense of an ambiguous Bengali word by finding the largest overlap between the dictionary definition of the word, the definitions of the terms that collocate with it in the sentence, and the synonyms of those collocating words. Nine frequently used ambiguous Bengali terms were selected to test the system, and 75% of the output was accurate [33].

To address the issue of inadequate usage of the current knowledge base, the researchers [34] presented a word sense disambiguation method based on graphs and knowledge bases. It builds the disambiguation graph by processing samples in the lexical knowledge base that have a strong sense differentiation capability and using dependency parsing to gather contextual knowledge. Subsequently, the disambiguation can be completed by merging the contextual and dependency disambiguation graphs. Tests conducted on the SemEval-2007 task #5 dataset reveal a disambiguation accuracy of 47%, surpassing that of the previously listed techniques.

The knowledge-based approach is a compromise between the supervised and unsupervised methods; it makes use of WordNet, ontologies, and manually built lexical databases. Research indicates that knowledge-based applications are a viable substitute for supervised systems [15]. Thanks to sophisticated graph-based methods, knowledge-based disambiguation has become more relevant in NLP [35].

A knowledge-based sense disambiguation (KSD) technique was proposed by researchers [36] to address lexical ambiguity in question-answering (QA) systems. Its main contribution is a process that combines several knowledge sources: the question’s metadata (date/GPS), context information, and a domain ontology are combined in a shallow NLP pipeline. The KSD approach was developed into a dedicated tool for a mobile question-answering application that seeks to determine pilgrims’ intended meanings when they ask questions. The experimental findings demonstrate that, within the pilgrimage domain, the approach achieved accuracy that was comparable or superior to the baselines.

A knowledge-based coarse-grained sense disambiguation technique based on selectional preferences defined by topic models is presented by the researchers [37]. The method’s overall accuracy of 83% is a substantial improvement over three competitive baselines [37]. To develop a knowledge base, the researchers [38] studied the acquisition of semantic class-level selectional preferences (SP). First, the noun taxonomy of the Semantic Knowledge-base of Contemporary Chinese (SKCC) is adapted for SP acquisition. Second, a tree-cut model based on MDL is implemented. Third, the SP annotated in SKCC serves as the gold-standard test set for assessing SP acquisition performance. The experiments investigate three types of predicate-argument relations: verb–object, verb–subject, and adjective–noun. For the verb–object relation, the top-three relaxed accuracy is 75.26%, while the top-one strict accuracy is 24.74% [38].

Researchers [21,39,40] offer a knowledge-based method for word sense disambiguation that identifies a term’s correct sense in a particular context by using a variety of semantic similarity metrics. The studies demonstrate that WordNet-based similarity measures achieved performance very close to that of semantic measures based on word embeddings. Additionally, using real-world data, the researchers created a small dataset in which the annotators’ feedback allowed them to distinguish between terms that were confusing and those that were unclear. Lastly, a state-of-the-art dataset was analyzed with respect to linguistic factors to help explain the effectiveness of the approach. The analysis showed that texts with high noun and adjective ratios and high lexical richness scores correlate with improved WSD performance.

Researchers [41] examined Hindi WSD using a knowledge-based methodology. To resolve words’ ambiguity, word knowledge from external knowledge sources is incorporated. Using the Hindi WordNet, the researchers built a WSD tool that employs the knowledge-based Lesk algorithm. The accuracy of the suggested approach is roughly 71.4%.

For Persian WSD, the researchers [42] suggest a new knowledge-based technique. The topics of each document are retrieved using a pre-trained LDA model, and each ambiguous content word is assigned to a topic. For each possible sense s of a particular word w, the method measures how similar the words of the topic assigned to w are to the gloss of s in FarsNet (the Persian WordNet). The sense with the highest score is then selected as the most likely one. An evaluation on a Persian all-words WSD dataset indicates that the method attains state-of-the-art performance compared with other knowledge-based methods [42].

Based on distributional semantic space, the researchers [43] suggested a hybrid supervised and unsupervised method for Amharic sense disambiguation. Ten ambiguous words in total are used to evaluate each strategy and the combined approach. The supervised technique obtained an F1-score of 82.3% and 70% accuracy, whereas the unsupervised approach obtained an F1-score of 85.7% and 60% accuracy. The combined method attained an accuracy of 86% and an F1-score of 92.5%.

Utilizing additional context-based information on unclear terms, researchers [44] offer a scoring system that allows for knowledge-based resolution of ambiguity. To achieve this, the researchers assembled two lists of terms: they chose words around the target word from the corpus and extracted associated words of a sense from WordNet. Ultimately, 80.1% accuracy was attained using the suggested strategy on the TWA corpus, which is encouraging when compared to the outcomes of previous studies using comparable techniques.

Using the public MSH WSD dataset as the test set, researchers [45] apply knowledge-based techniques that take advantage of recent developments in neural word/concept embeddings to outperform the state of the art in biomedical WSD. To obtain concept vectors, they use MetaMap 2016V2, an existing concept mapping program. The accuracy of the linear-time technique is 92.24%, a 3% gain over unsupervised methods. Their more costly method, which uses a nearest-neighbor structure, achieves an accuracy of 94.34%, effectively halving the error rate. The work demonstrates that biomedical WSD is no exception to the current trend of language processing tasks benefiting from dense vector representations learned from unlabeled free text.

A new word sense disambiguation method based on the semantic relations of the lexical database PolyWordNet was created by researchers [46]. Unlike contextual overlap count knowledge-based word sense disambiguation methods, the algorithm does not count word overlaps between the glosses of context and sense bags. Rather, the algorithm looks for connections between the senses of the target word and the routes or links of context terms. It records every path or link that joins a sense of the target word with a context term. Next, for every linked sense, the program counts the number of paths, links, or connections. The technique that uses PolyWordNet has an accuracy of 96.11%, which is higher than the other contextual overlap count WSD approach that uses Princeton WordNet, which has an accuracy of 58.33%.
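The contrast with gloss-overlap counting can be shown with a sketch that scores each sense by the number of context terms it is linked to. The link table below is a hypothetical stand-in with direct links only; the actual structure of PolyWordNet and the path types used in [46] are not reproduced here.

```python
from typing import Dict, List, Set

# Hypothetical semantic links: sense id -> terms directly connected to that sense.
TOY_LINKS: Dict[str, Set[str]] = {
    "bank#finance": {"money", "loan", "account"},
    "bank#river": {"river", "water", "shore"},
}


def count_links(sense_links: Dict[str, Set[str]], context: List[str]) -> Dict[str, int]:
    """For each sense, count how many context terms are connected to it."""
    return {
        sense: sum(1 for term in context if term in linked)
        for sense, linked in sense_links.items()
    }


def pick_sense(sense_links: Dict[str, Set[str]], context: List[str]) -> str:
    """Select the sense with the largest number of connections to the context."""
    counts = count_links(sense_links, context)
    return max(counts, key=counts.get)


context_terms = ["opened", "account", "money"]
print(count_links(TOY_LINKS, context_terms))   # {'bank#finance': 2, 'bank#river': 0}
print(pick_sense(TOY_LINKS, context_terms))    # bank#finance
```

Because the score depends on recorded relations rather than on shared words in glosses, a sense can be selected even when its gloss has no word in common with the context.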

A deep learning-based methodology for constructing an RDF-based ontology from unstructured text was proposed by researchers [47]. Using databases of newspaper articles, the researchers aim to assess the suggested model by developing a general knowledge ontology. The model includes a relation extraction model and a novel implementation of the RDF mapping technique, and it is built on the transformer architecture and natural language processing. The model’s main strength is its capacity to resolve the word sense disambiguation problem. The model demonstrated strong performance and achieved exceptionally high accuracy.

ShotgunWSD is an unsupervised knowledge-based algorithm for global word sense disambiguation (WSD). It is modeled on the Shotgun sequencing technique, a popular whole genome sequencing method. ShotgunWSD 2.0 is an enhanced version that keeps the fundamental process of building local sense configurations in place but computes the relatedness score between two word senses using a new method. To create a sense bag for every sense, researchers [48] gather all the words from the associated WordNet synset, gloss, and related synsets. Then, they use a common word embedding framework to embed all the words from all the sense bags across the document in a vector space. To determine the sense embedding for a specific word sense, researchers [48] use the median of all the remaining word embeddings in that sense bag. On six benchmarks, SemEval 2007, Senseval-2, Senseval-3, SemEval 2013, SemEval 2015, and overall (uniform), the researchers compare the enhanced ShotgunWSD algorithm (version 2.0) with its prior version (1.0) as well as with numerous state-of-the-art unsupervised WSD techniques. They show that ShotgunWSD 2.0 outperforms ShotgunWSD 1.0 as well as several other recent unsupervised or knowledge-based methods. According to the study’s paired McNemar’s significance tests, conducted at a significance level of 0.01, the improvements of ShotgunWSD 2.0 over ShotgunWSD 1.0 are, for the most part, statistically significant.
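The sense-embedding step can be sketched as follows. The source states only that the median of the word embeddings in a sense bag is used; taking the median separately for each vector component is an assumption of this sketch, and the toy vectors and function name are hypothetical.

```python
from statistics import median
from typing import Dict, List

Vector = List[float]


def sense_embedding(sense_bag: List[str], embeddings: Dict[str, Vector]) -> Vector:
    """Component-wise median of the embeddings of the words in a sense bag.

    Words without an embedding are skipped.
    """
    vectors = [embeddings[word] for word in sense_bag if word in embeddings]
    if not vectors:
        raise ValueError("no word in the sense bag has an embedding")
    return [median(component) for component in zip(*vectors)]


# Hypothetical 2-d embeddings for words gathered from a synset, its gloss, and related synsets.
toy_embeddings = {
    "money": [1.0, 0.0],
    "loan": [0.8, 0.2],
    "cash": [0.9, 0.4],
}
bag = ["money", "loan", "cash", "unseen-word"]
print(sense_embedding(bag, toy_embeddings))   # [0.9, 0.2]
```

Compared with a mean, a median is less affected by a single outlying word in the bag, which may be useful when related synsets contribute loosely related vocabulary.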

To categorize WSD algorithms, the researchers [49] looked at machine learning and knowledge-based approaches. Every category is thoroughly analyzed, and the algorithms that correspond with it are explained. The research study examines a range of WSD strategies and resources. The study covers publications from a variety of journals and talks about current research directions as well as field competitions and trends.

The related literature review discusses knowledge-based, supervised, and unsupervised approaches’ efficacy in general. Table 1 lists particular methods or algorithms in each of these categories, along with information about how well they worked and in what situations.

Table 1.

Summary of performance benchmarks of WSD techniques for low-resourced languages.

Models can be adapted to low-resource languages using WSD approaches that take advantage of transfer learning from high-resource languages. Despite having little data, methods like cross-lingual models and multilingual embeddings help transfer knowledge between languages and improve performance. Low-resource languages can benefit from the fine-tuning of pre-trained models on high-resource languages (e.g., multilingual BERT), which provides a foundation and increases accuracy with fewer data. In environments with limited resources, methods that make use of both labeled and unlabeled data can be successful. WSD performance can be improved using semi-supervised learning by using large amounts of unlabeled data to speed up the learning process. One of the main obstacles to training and evaluating WSD models in low-resource languages is the absence of substantial, high-quality annotated corpora. Multiple dialects in low-resource languages make data collecting and model training even more difficult.

The training of complex models necessitates large computational resources, which are scarce in environments centered around low-resource languages. The lack of common assessment datasets and standards for low-resource languages makes evaluating and contrasting the effectiveness of various WSD techniques challenging. Low-resource languages may have distinct or sophisticated linguistic characteristics (such as morphology or syntax) that are poorly represented in current models, which could have an impact on WSD performance. By addressing these flaws and capitalizing on the advantages, WSD for low-resource languages can improve significantly, resulting in more inclusive and efficient natural language processing systems.

3. Materials and Methods

The method included choosing the literature using a methodical technique. Transparency and impartiality were promoted by the systematic literature selection, which made it possible to use a structured search strategy with precise inclusion and exclusion criteria. Because potential biases in the literature selection may be reduced and freely acknowledged, this eventually improves reliability [55]. The PRISMA methodology was applied when conducting the systematic literature review [56].

The research objective was to retrieve publications and analyze them from various databases related to word sense disambiguation for low-resourced languages. The descriptive nature of the research study allows it to map the features of the scientific output of articles about WSD for morphologically rich, low-resourced languages. It achieves this by presenting the observation, classification, analysis, and interpretation of articles. The study also offers meta-analysis, which is a quantitative method used to examine a topic’s scholarly output. Peer-reviewed articles that appear in pertinent journals and conference proceedings between 2014 and 2024 are examined.

The goal of our systematic literature review (SLR) is to carefully find, evaluate, and compile pertinent works on WSD for low-resourced languages. The following sub-questions served as a guide for the research:

RQ1: What is the nature and extent of the polysemy problem in the Sesotho sa Leboa language?

RQ2: What are the existing methods for word sense disambiguation (WSD) relevant to low-resource languages?

RQ3: How can an automated WSD scheme for the Sesotho sa Leboa language be developed using corpus-based ensemble methods and transformer-based architecture?

PRISMA standards served as the framework for our systematic review, which we conducted in an organized manner to guarantee thorough coverage and methodical examination of the literature. To ensure relevance and reduce bias, we established explicit inclusion and exclusion criteria to select the studies [57].

3.1. Search Strategy

The researchers conducted a comprehensive systematic literature review for this study on 23 June 2024, by methodically examining the IEEE Xplore, Springer, and SCOPUS databases. To find a wider variety of material that might not be indexed in conventional databases, we also performed a search on Google Scholar. Each database was specifically targeted with a different search string: ((word sense disambiguation) OR (“Morphologically rich”) OR (“low-resourced languages”)) AND ((“Natural Language Processing”) OR (“Word Embedding”) OR (“Word Vector Space”) OR (“Lexical Ambiguity”) OR (“Polysemy”) OR (“Language Models”) OR (“Semantic Space”) OR (“Semantic Similarity”)).
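
A Boolean query of this shape can be assembled programmatically, which keeps the term lists easy to audit and reuse. The sketch below is only an illustration: the helper names are hypothetical, every phrase is wrapped in straight quotes (a simplification), and each database may require its own field syntax.

```python
def or_group(terms):
    """Join quoted phrases with OR and wrap the group in parentheses."""
    return "(" + " OR ".join(f'("{term}")' for term in terms) + ")"


def build_query(topic_terms, context_terms):
    """Combine two OR-groups with AND, mirroring the structure of the search string."""
    return f"{or_group(topic_terms)} AND {or_group(context_terms)}"


topic_terms = [
    "word sense disambiguation",
    "Morphologically rich",
    "low-resourced languages",
]
context_terms = [
    "Natural Language Processing",
    "Word Embedding",
    "Word Vector Space",
    "Lexical Ambiguity",
    "Polysemy",
    "Language Models",
    "Semantic Space",
    "Semantic Similarity",
]

query = build_query(topic_terms, context_terms)
print(query)
```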

These databases were chosen based on their renown in the computer science and engineering domains, guaranteeing a comprehensive and pertinent body of peer-reviewed research [57]. Table 2 summarizes the search results, including the quantity of pertinent documents found in each database and useful information on the sources’ characteristics. The efficacy of the search technique and the resulting corpus of literature, which serves as the basis for our systematic review, are summarized in this table.

Table 2.

Summary of database search results for WSD.

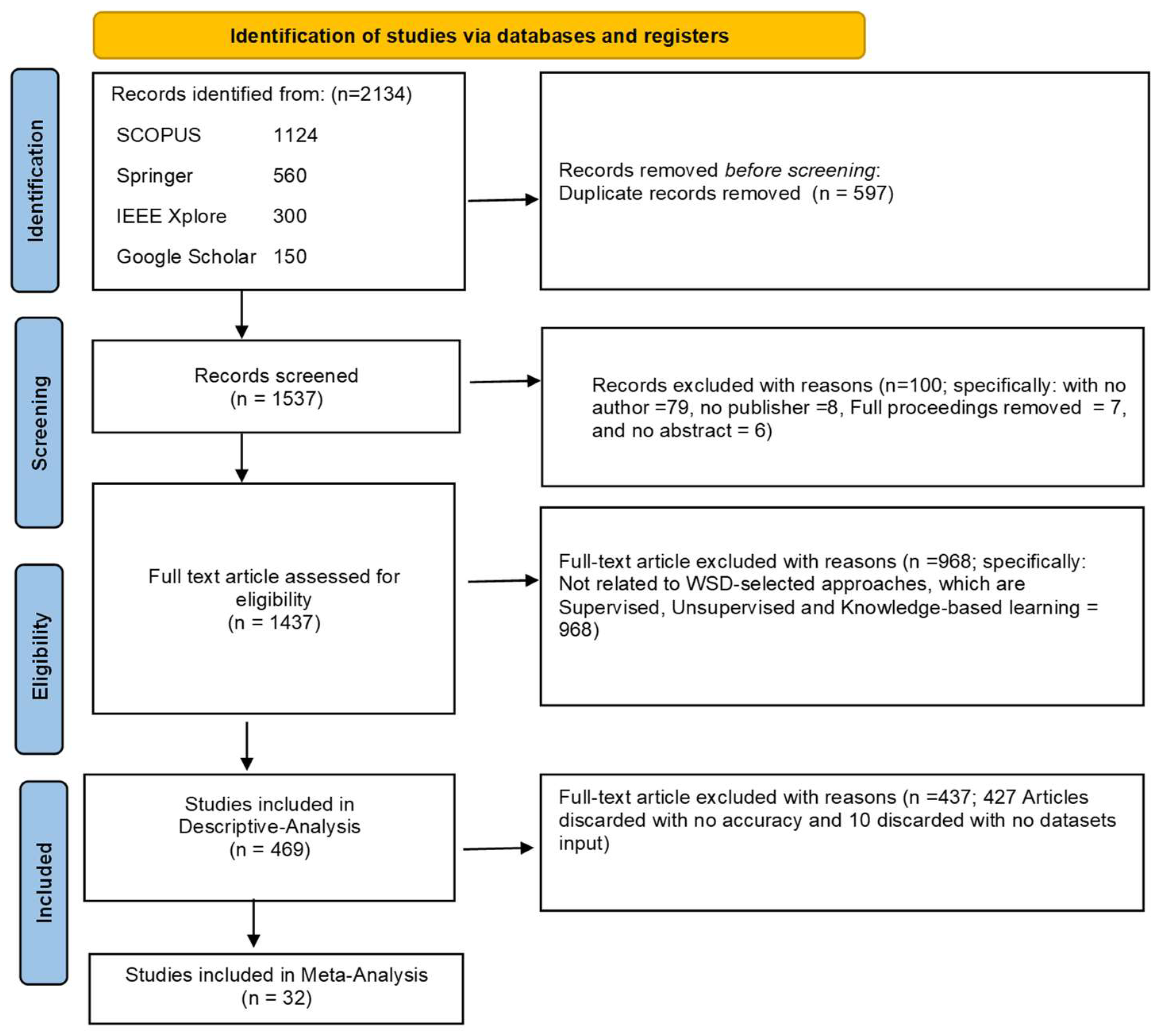

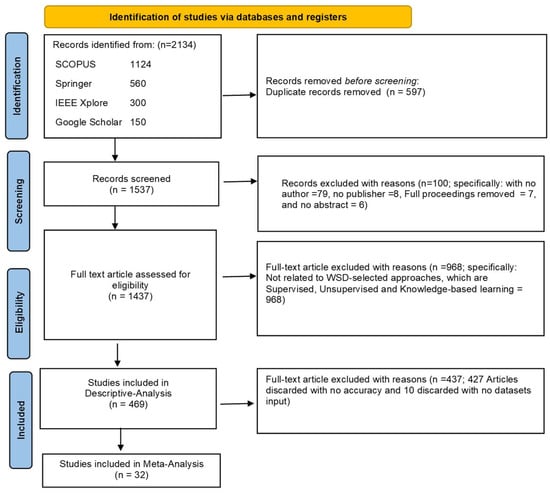

A flow diagram of the different phases of our systematic review is depicted in Figure 1.

Figure 1.

PRISMA flow diagram for the WSD research [15].

3.2. Inclusion Criteria and Exclusion Criteria

The type of study was the basis for the inclusion criteria used to choose pertinent studies. The keywords were chosen with the intended outcome in mind, but on their own they did not narrow the results sufficiently. Thousands of potentially pertinent articles were found in the first stage of the search when the keywords were applied, with the keywords appearing in the headers, content, abstracts, and reference lists. As shown in Table 3, we therefore established precise inclusion and exclusion criteria in addition to the chosen keywords.

Table 3.

Inclusion and exclusion criteria.

The process of extracting data was methodical, entailing the identification of employed approaches and the extraction of findings. The methodical technique made it easier to analyze recurring themes and trends, which in turn gave researchers insights into word sense disambiguation bias in low-resourced languages [58].

3.3. Data Synthesis and Statistical Analysis

The data were entered into an Excel spreadsheet to prepare them for statistical analysis. Next, these data were loaded into RStudio and STATA, two statistical analysis programs. The effect size of each included primary study and the overall pooled effect size were used to summarize the results of the primary investigations. The foundation of our investigation was the random-effects model [59]. The abstracts of the collected literature were processed and analyzed to obtain an overview of the research content of the collected data. Since not every article was consistent with the research objectives, we screened out irrelevant articles and manually extracted related ones. This resulted in 2134 articles after multiple rounds of screening and extraction, and we then performed a manual check to ensure a more accurate inclusion.

Additionally, to investigate the relationship between various WSD techniques, the authors went back to the collected literature to understand their potential meanings and used such additional information to classify the articles that could not be clearly categorized.

Furthermore, subgroup analysis and meta-regression are often used when performing a moderator analysis in a systematic review with meta-analysis. Subgroup analysis splits participant data into smaller groups for comparison purposes. As a result, this study conducted a subgroup analysis centered on research performance evaluation metrics, or the accuracy, of the included studies to pinpoint the cause of study heterogeneity. The subcategories were established based on the methods applied (supervised learning, unsupervised learning, transformer architecture for word sense disambiguation, knowledge base, deep learning, ensemble deep learning, ensemble machine learning, hybrid). Furthermore, meta-regression analyses were performed to ascertain whether any subsets of the included studies were able to capture the pooled effect size [59].

4. Results

The search turned up 2134 publications from several databases, including Google Scholar, IEEE Xplore, Springer Link, and SCOPUS. Some articles were excluded because they were not in English, and 597 articles were excluded because they were duplicates. A total of 100 papers were eliminated due to incomplete author identification in some cases and incomplete publisher identification in others. Whole proceedings, including pieces without abstracts, were also eliminated. A further 968 articles were excluded because they lacked clear WSD approaches, and 437 more were excluded because they lacked evaluation metrics. The final meta-analysis included 32 studies.
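
The screening arithmetic can be checked with a few lines of code. The sketch below uses only the counts stated in the text; separate counts for non-English articles and whole proceedings are not reported, and the four stated exclusion counts already account for the full difference between 2134 and 32. The dictionary labels are paraphrases chosen for illustration.

```python
# Records identified across all databases.
identified = 2134

# Exclusion counts as stated in the text (labels are paraphrased).
exclusions = {
    "duplicates": 597,
    "incomplete author or publisher identification": 100,
    "no clear WSD approach": 968,
    "no evaluation metrics": 437,
}

remaining = identified
for reason, count in exclusions.items():
    remaining -= count
    print(f"after excluding {count:>4} ({reason}): {remaining} remain")

included = remaining
print(f"studies included in the meta-analysis: {included}")
```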

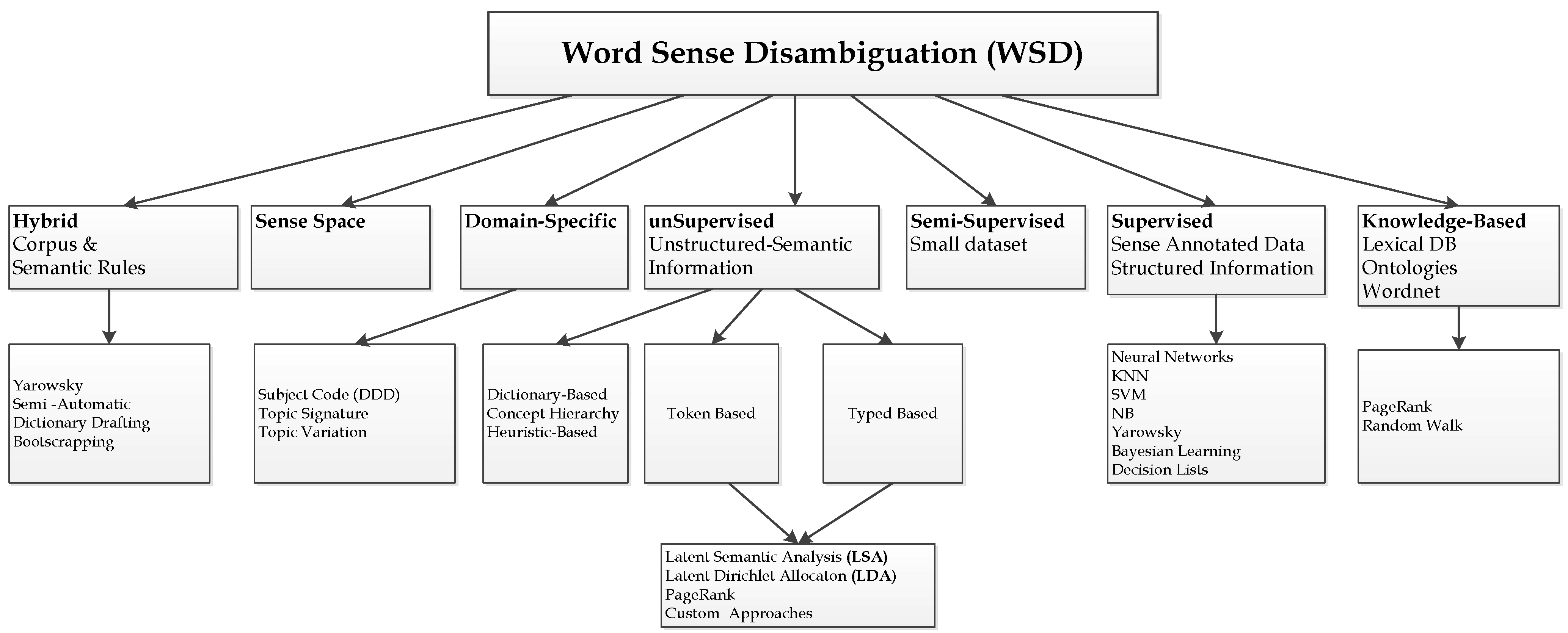

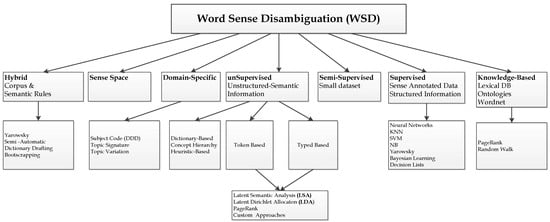

The diagram in Figure 2 is a proposed WSD Taxonomy based on the review of the literature.

Figure 2.

Proposed WSD Taxonomy.

4.1. Meta-Analysis Summary

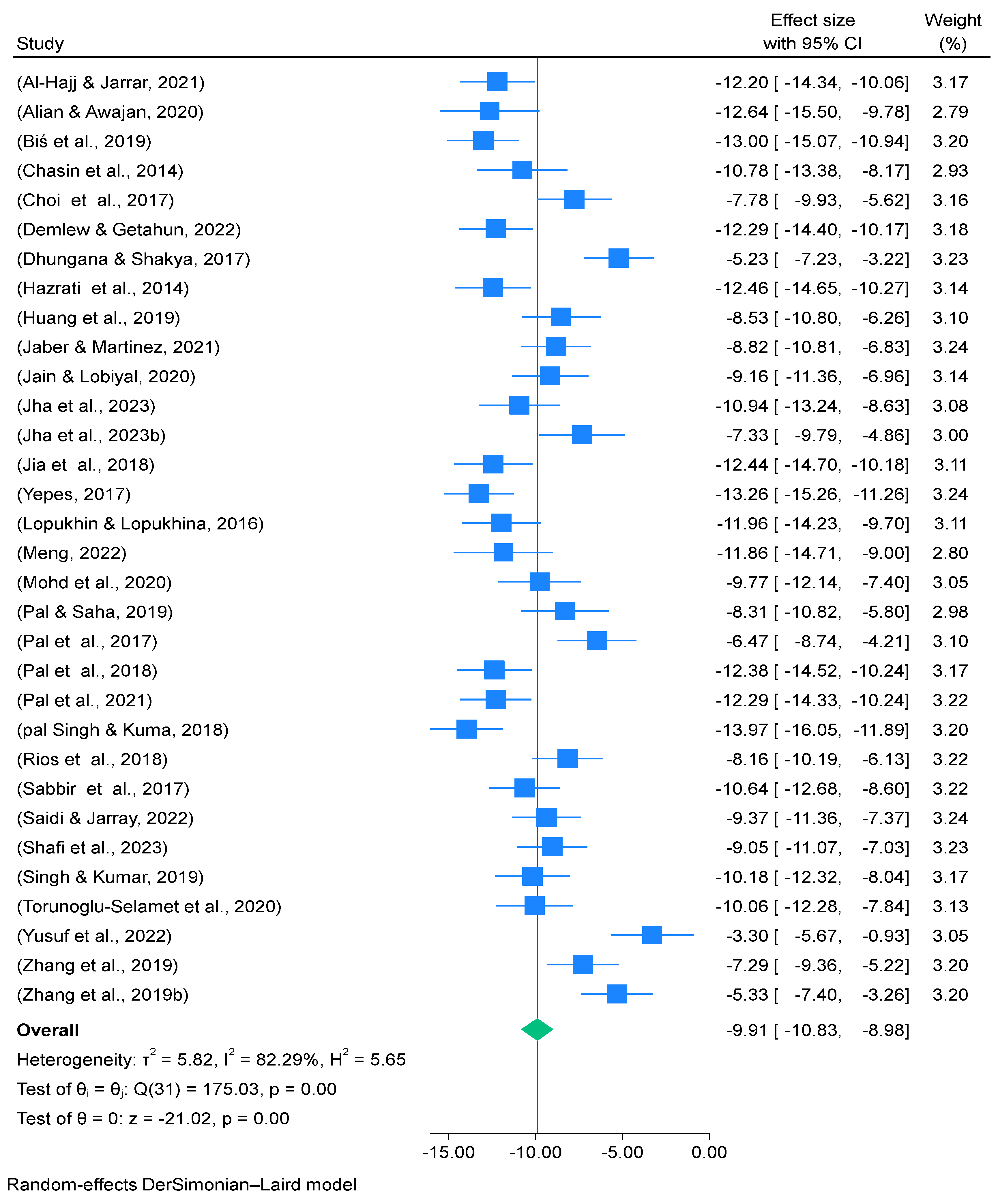

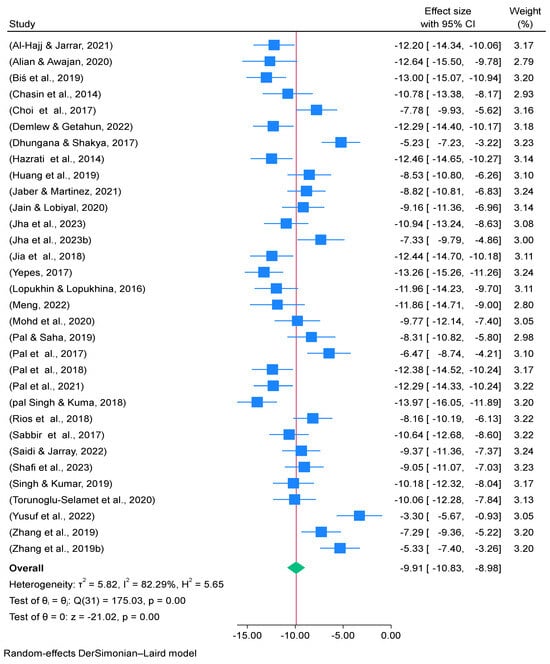

The forest plot in Figure 3 is a graphical representation used in meta-analyses to show the results of the individual WSD studies together with the overall pooled effect. To carry out this meta-analysis, the researchers used Stata and R. The effect sizes of individual studies are plotted as squares on the graph, and the overall pooled effect size is displayed as a diamond, with a line representing the confidence interval of the pooled effect.

Figure 3.

Forest plot for distribution of effect size of meta-analysis summary of WSD in Table 3 [8,11,12,17,18,19,20,21,25,26,27,28,29,30,32,33,34,37,38,43,44,45,46,50,51,53,54,60].

Weights were selected to account for the volume of data included in each study. To assess the accuracy of word sense disambiguation techniques, random-effects meta-analyses were carried out using the sample size and accuracy, from which the effect size and its standard error were derived. The chi-square test of homogeneity, Q = 175.03, provides further evidence of heterogeneity in effect sizes. The overall random-effects pooled effect size was −9.906, with a 95% confidence interval (CI) ranging from −10.830 to −8.983. Table 4 and Figure 3 reveal that variability between studies was high, as reflected by the I2 statistic of 82.29%. The proportion of actual heterogeneity to total observed variation (p ≤ 0.05) was substantially high (25%, 50%, and 75% are considered low, moderate, and high levels, respectively), demonstrating that the variability is due to heterogeneity [59]. The meta-analysis summary is shown graphically in the forest plot in Figure 3. The I2 statistic gives an idea of the heterogeneity of the studies; since I2 exceeds the 75% threshold, the researchers conclude that the studies shown in the forest plot are inconsistent and questionable.
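
To make the quantities in this paragraph concrete, the following is a minimal sketch of the DerSimonian–Laird random-effects computation (Cochran's Q, τ2, I2, pooled effect, and 95% CI). It is not the Stata or R code used in the study; the function names and the three-study toy input are hypothetical. The last lines apply the standard formula I2 = (Q − df)/Q to the reported Q = 175.03 with 32 studies, which reproduces the reported 82.29%.

```python
def i_squared(q, k):
    """I^2 in percent from Cochran's Q and the number of studies k."""
    if q <= 0:
        return 0.0
    return max(0.0, (q - (k - 1)) / q) * 100.0


def dersimonian_laird(effects, std_errors):
    """Random-effects pooling with the DerSimonian-Laird tau^2 estimator."""
    k = len(effects)
    if k < 2 or k != len(std_errors):
        raise ValueError("need at least two studies with matching standard errors")
    variances = [se ** 2 for se in std_errors]
    w = [1.0 / v for v in variances]  # inverse-variance (fixed-effect) weights
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w
    # Cochran's Q: weighted squared deviations from the fixed-effect estimate.
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    df = k - 1
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0
    # Random-effects weights add the between-study variance to each study.
    w_star = [1.0 / (v + tau2) for v in variances]
    pooled = sum(wi * yi for wi, yi in zip(w_star, effects)) / sum(w_star)
    se_pooled = (1.0 / sum(w_star)) ** 0.5
    return {
        "q": q,
        "tau2": tau2,
        "i2": i_squared(q, k),
        "pooled": pooled,
        "ci95": (pooled - 1.96 * se_pooled, pooled + 1.96 * se_pooled),
    }


# Hypothetical toy input: three studies with equal standard errors.
toy = dersimonian_laird([0.2, 0.5, 0.8], [0.1, 0.1, 0.1])
print(toy)

# Consistency check on the reported statistics: Q = 175.03 from 32 studies.
reported_i2 = i_squared(175.03, 32)
print(round(reported_i2, 2))
```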

Table 4.

Meta-analysis summary on WSD: random-effects model (DerSimonian–Laird).

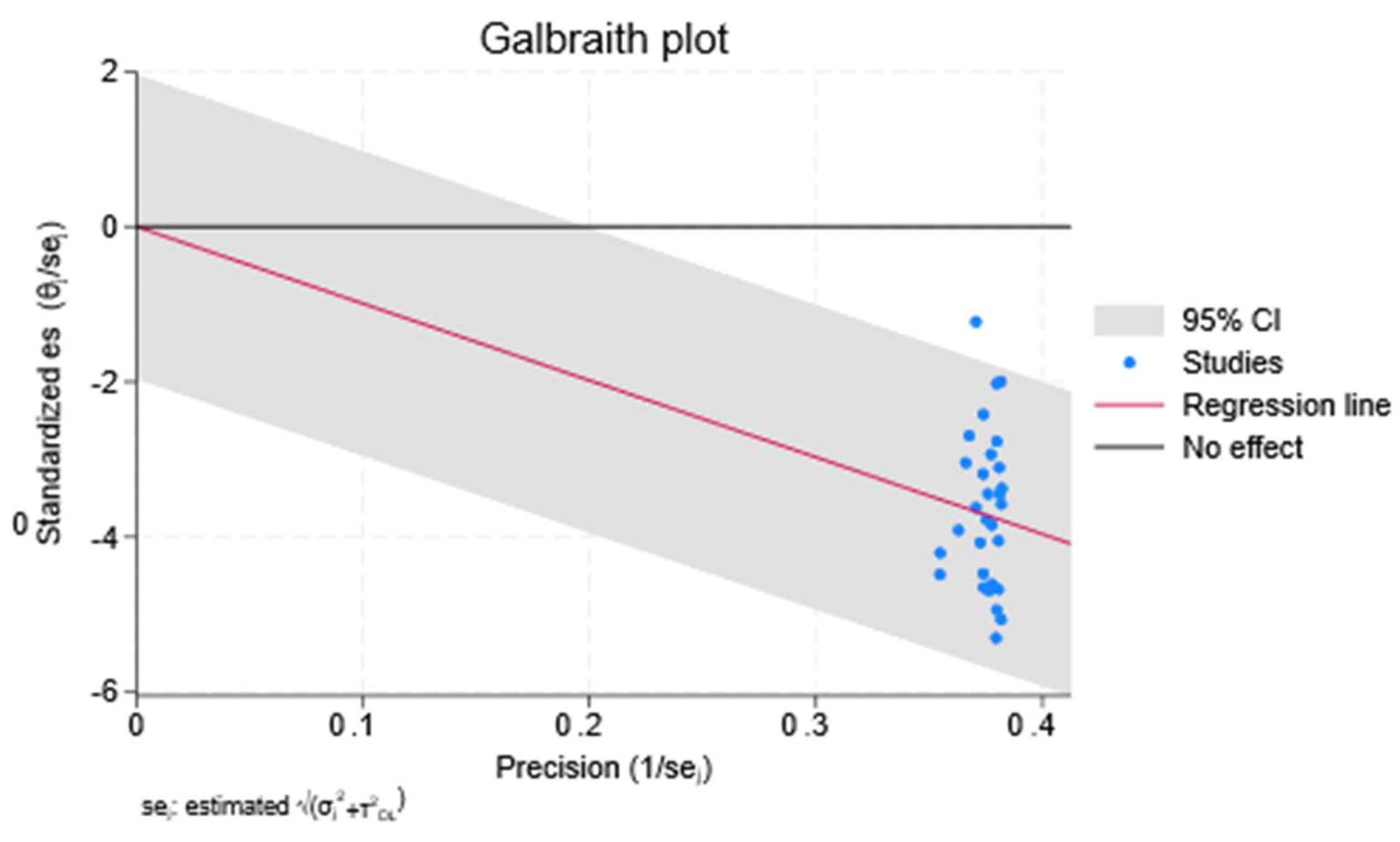

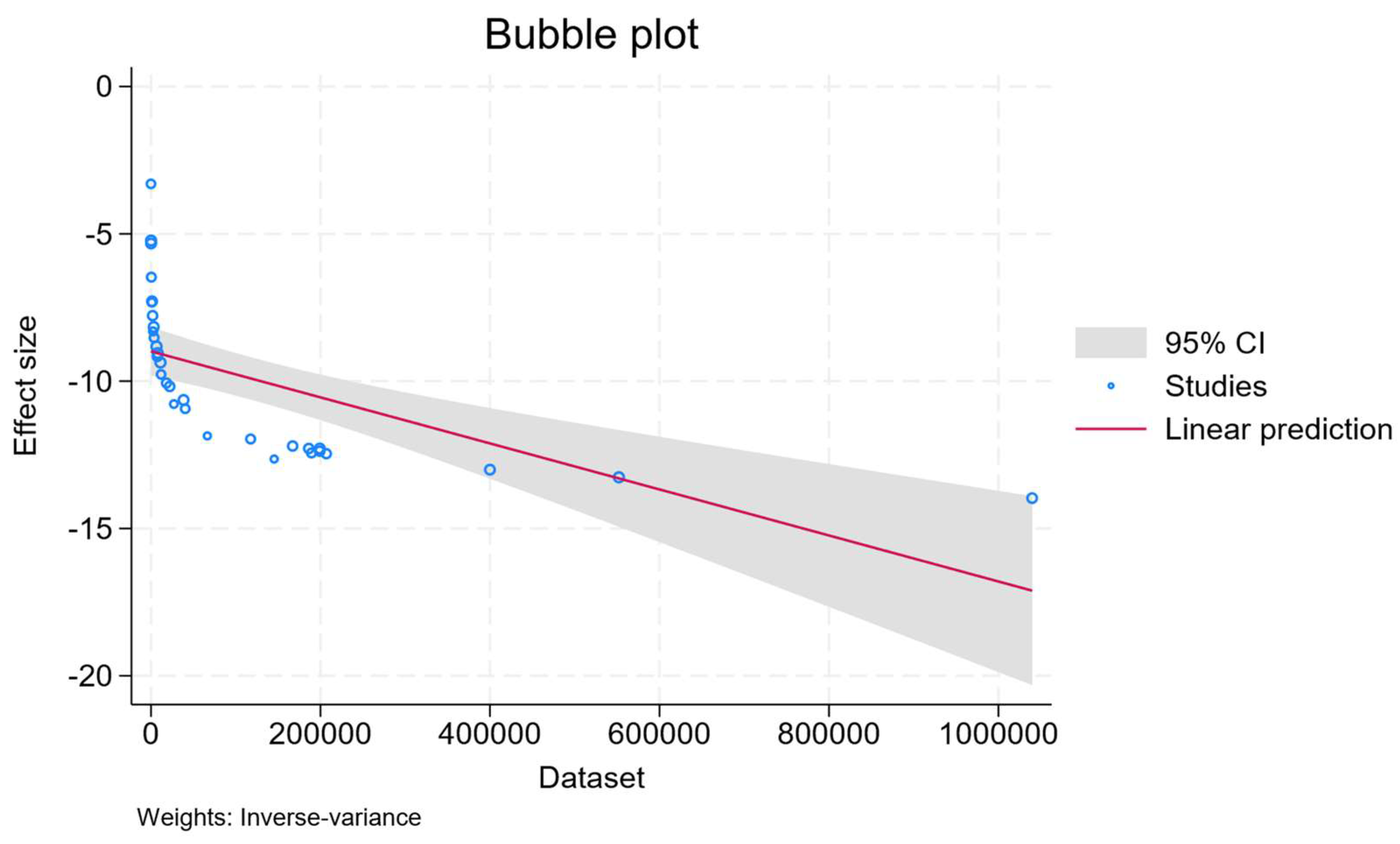

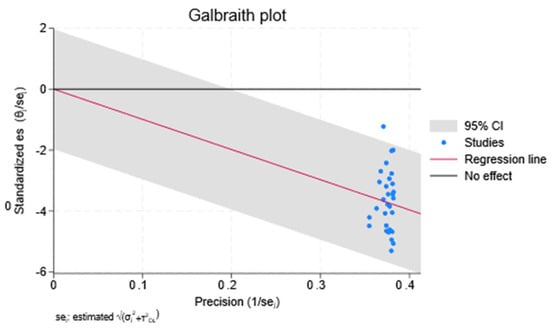

Figure 4’s Galbraith plot demonstrates a substantial correlation between the accuracy produced by the model and the sample size; the regression line’s negative slope further suggests that accuracy decreases with increasing sample size. High heterogeneity is also supported by the fact that only one study deviates from the 95% CI. In a Galbraith plot, the confidence interval lines bound the region expected to contain 95% of the studies.
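
For readers unfamiliar with the construction, a Galbraith (radial) plot places each study at precision (1/SE) on the horizontal axis and standardized effect (effect/SE) on the vertical axis, with a line through the origin whose slope is the inverse-variance pooled estimate. The sketch below computes these coordinates and flags studies outside the approximate 95% band; it uses hypothetical toy values, not the study data, and the function name is an assumption.

```python
def galbraith_points(effects, std_errors, band=1.96):
    """Return the pooled slope and (precision, z, outside_band) per study."""
    weights = [1.0 / se ** 2 for se in std_errors]
    # The slope of the central line is the inverse-variance pooled estimate.
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    points = []
    for y, se in zip(effects, std_errors):
        precision = 1.0 / se          # x-coordinate
        z = y / se                    # y-coordinate (standardized effect)
        residual = z - pooled * precision
        points.append((precision, z, abs(residual) > band))
    return pooled, points


# Hypothetical toy input: three studies with equal standard errors.
slope, points = galbraith_points([0.2, 0.5, 0.8], [0.1, 0.1, 0.1])
outside = [flag for _, _, flag in points]
print(slope, outside)
```

Studies flagged as outside the band are the ones that contribute most to heterogeneity under this construction.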

Figure 4.

Galbraith Plot.

4.2. Publication Bias and Meta-Regression

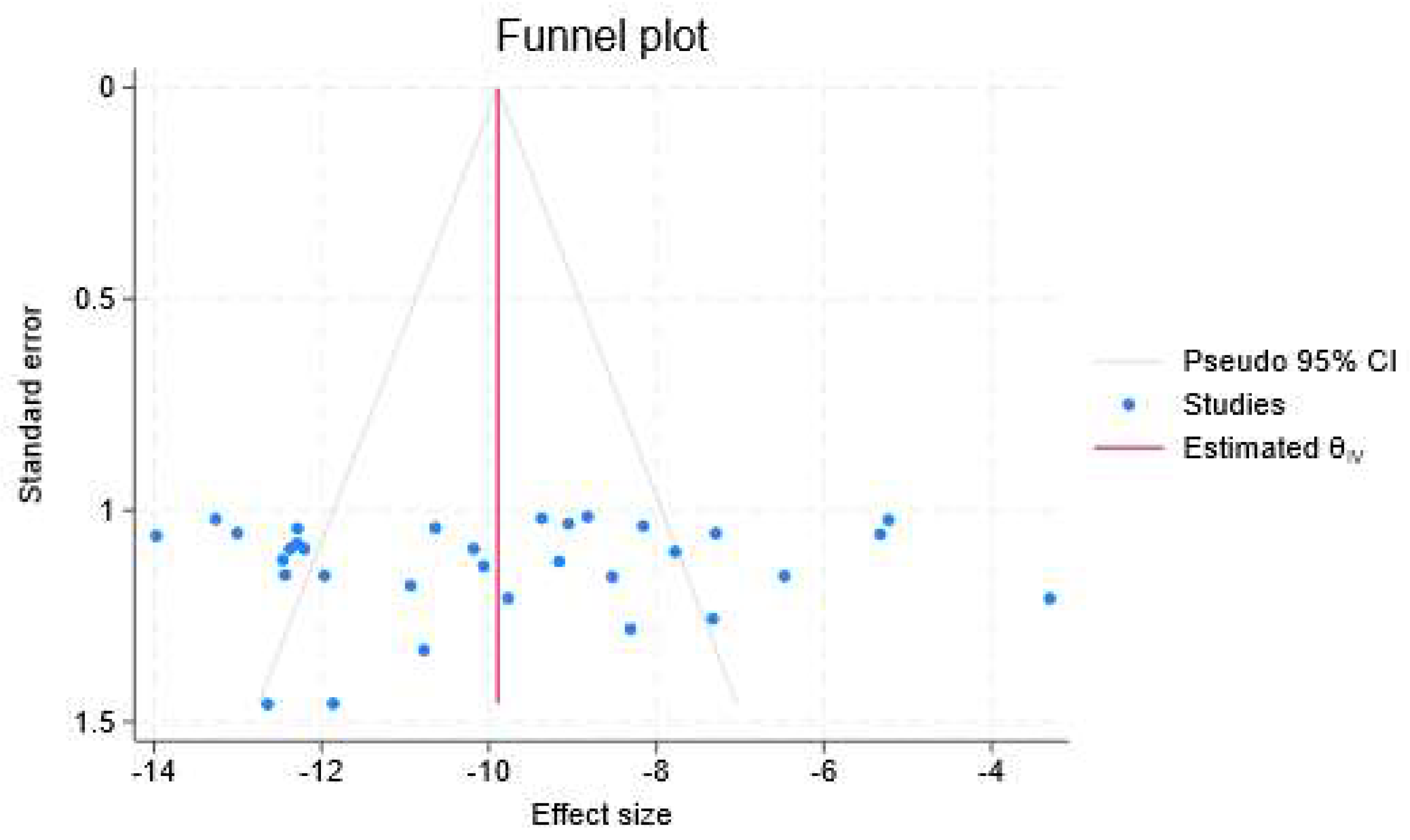

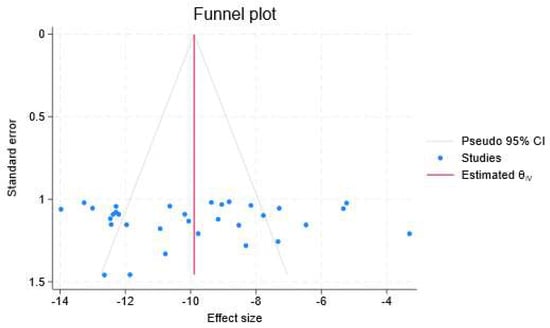

The funnel plot in Figure 5 is used to show and identify publication bias or heterogeneity based on the standard error distribution of each individual study in a meta-analysis. In systematic reviews and meta-analyses, publication bias is unavoidable. However, research indicates that this bias should be assessed to draw valid conclusions about how much it may affect the generalizability of findings. A funnel plot was used to visually assess the publication bias in this research [59]. The symmetrical distribution of studies within the triangular region of Figure 5 suggests that this bias is negligible. Because a funnel plot relies on visual assessment, its interpretation can be subjective. Thus, as a quantifiable indicator of publication bias, meta-regression was carried out. Given that the p-values from the meta-regression and the meta-analysis are 0.454 and 0.440, respectively, Table 5 indicates that there is no substantial bias and that the meta-analytic effect is not statistically significant.

Figure 5.

Funnel plot.

Table 5.

Meta-regression per publication year.

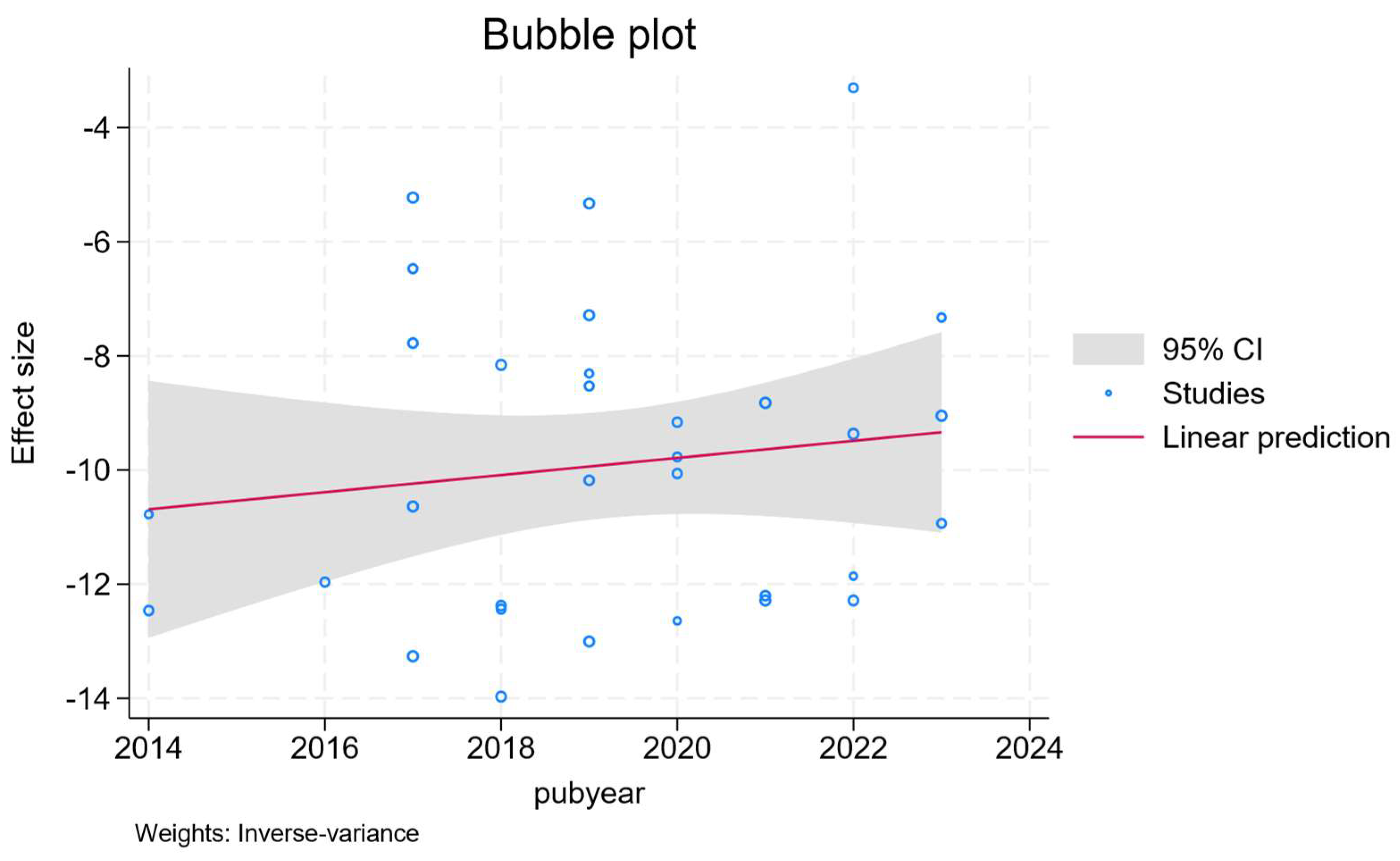

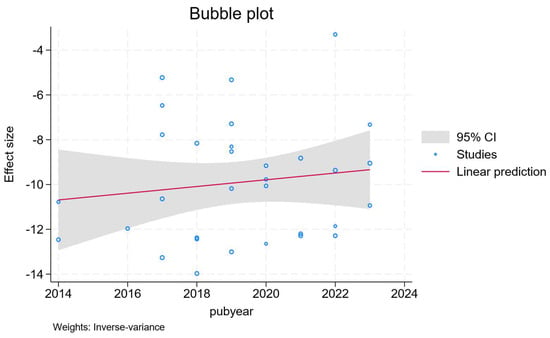

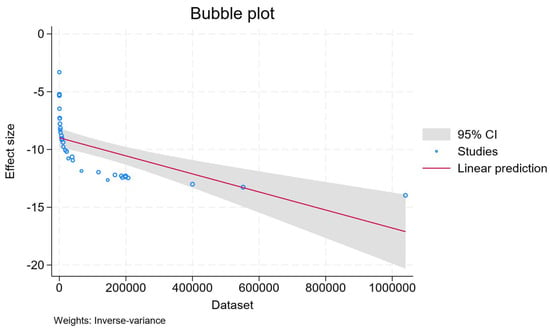

The publication year characteristics shown in Table 5 and the dataset shown in Table 6 were used as moderators in a meta-regression analysis to investigate the sources of heterogeneity. Table 6’s results show that the dataset is a significant source of heterogeneity, with p = 0.00. Figure 6’s bubble plot per year and Figure 7’s bubble plot per dataset, which show studies spread widely across years and datasets, provide more evidence for this.
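
A meta-regression with a single moderator can be sketched as weighted least squares in which each study is weighted by the inverse of its total variance. The code below is an illustrative assumption rather than the Stata or R routine used in the study: the function name, the toy effect sizes, and the fixed `tau2` argument are hypothetical, and a full random-effects meta-regression would estimate τ2 from the data.

```python
def weighted_meta_regression(effects, std_errors, moderator, tau2=0.0):
    """Weighted least-squares fit of effect size on one moderator."""
    if not (len(effects) == len(std_errors) == len(moderator)):
        raise ValueError("inputs must have the same length")
    # Weight = 1 / (within-study variance + between-study variance).
    w = [1.0 / (se ** 2 + tau2) for se in std_errors]
    sum_w = sum(w)
    x_bar = sum(wi * xi for wi, xi in zip(w, moderator)) / sum_w
    y_bar = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w
    sxx = sum(wi * (xi - x_bar) ** 2 for wi, xi in zip(w, moderator))
    if sxx == 0:
        raise ValueError("moderator has no variation")
    sxy = sum(wi * (xi - x_bar) * (yi - y_bar)
              for wi, xi, yi in zip(w, moderator, effects))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    se_slope = (1.0 / sxx) ** 0.5     # valid when the variances are treated as known
    return {
        "slope": slope,
        "intercept": intercept,
        "se_slope": se_slope,
        "z": slope / se_slope,
    }


# Hypothetical toy input: effect size rising by 1.0 per publication year.
result = weighted_meta_regression(
    effects=[1.0, 2.0, 3.0],
    std_errors=[0.5, 0.5, 0.5],
    moderator=[2019, 2020, 2021],
)
print(result)
```

A slope whose z statistic is close to zero indicates that the moderator explains little of the between-study variation.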

Table 6.

Meta-regression per the dataset.

Figure 6.

Bubble plot per publication year.

Figure 7.

Bubble plot for the dataset.

Table 7 shows the trim-and-fill analysis of publication bias, with P > |z| = 0.7459, indicating that there is no substantial bias in the observed studies. Trim-and-fill analysis is a statistical method frequently applied in meta-analyses to mitigate publication bias. To make the funnel plot symmetric, it imputes hypothetical missing studies to account for this bias. The outcome is an improved approximation of the actual effect magnitude. A high p-value (such as 0.7459) in the trim-and-fill context indicates that there is no significant evidence of publication bias and that the observed data are insufficient to reject the null hypothesis.

Table 7.

Meta trim-and-fill analysis of publication bias.

4.3. Implications of Heterogeneity for WSD Based on Forest Plot in Figure 3

In the context of a word sense disambiguation (WSD) meta-analysis, the measures of heterogeneity provided by I2 = 82.29% and Tau-squared (τ2) = 5.819 suggest a high degree of heterogeneity among the studies. In this instance, an I2 of 82.29% indicates that differences between studies, as opposed to random fluctuation, may account for a significant amount of the diversity in research findings. The high I2 value suggests that the study’s findings are inconsistent and highly varied. This variation may result from variations in the study populations, approaches, or environments. There are other factors that could be responsible for this variation, including datasets, experimental circumstances, and WSD approaches.

In essence, tau-squared (τ2) measures the degree of unpredictability in study results by estimating the variance of the true effect sizes across studies. A larger τ2 indicates greater variability among study effects. Here, τ2 = 5.819, which indicates large variability in the true effects across studies. This means that the actual effects of the WSD techniques under consideration are inconsistent, with different studies producing rather varied findings. The variation is substantial enough to imply that different studies reflect different levels of WSD technique effectiveness.

Overall, the combination of high I2 and high τ2 indicates that there is substantial variation in the efficacy of the WSD techniques. To make meaningful inferences, it is crucial to comprehend the reasons for this variation.

4.4. Significance

A p-value of 0.454 means that, if the null hypothesis is correct, there is a 45.4% chance of observing the current results or more extreme ones; a p-value of 0.440 corresponds to a 44.0% chance. These values are comparatively high, suggesting that no statistically meaningful relationship or effect has been found. As both p-values exceed the standard significance level of 0.05, the null hypothesis cannot be rejected. This indicates that not enough evidence is available to conclude that there is a meaningful relationship or effect. If the null value lies within the 95% confidence interval (CI) of the corresponding estimate, this is in line with a p-value showing no statistical significance. In essence, according to the p-values and the confidence interval, the evidence is not strong enough to assert a statistically significant effect or association in the context of this study.
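
The decision rule described here can be written out in a few lines. The sketch below assumes a two-sided test on a standard normal statistic; the function names are hypothetical, and only the two reported p-values (0.454 and 0.440) come from the study.

```python
import math


def two_sided_p_value(z):
    """Two-sided p-value for a standard normal test statistic z."""
    return math.erfc(abs(z) / math.sqrt(2))


def is_significant(p_value, alpha=0.05):
    """True when the null hypothesis is rejected at level alpha."""
    return p_value < alpha


# |z| = 1.96 sits at the conventional 5% threshold.
p_at_threshold = two_sided_p_value(1.96)
print(round(p_at_threshold, 3))

# The two p-values reported in the text, compared with alpha = 0.05.
reported = {"meta-regression": 0.454, "meta-analysis": 0.440}
decisions = {name: is_significant(p) for name, p in reported.items()}
print(decisions)
```

Both reported values fall well above 0.05, so neither test rejects the null hypothesis.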

4.5. Descriptive Statistics of Primary Studies

To support the research, a dataset containing texts or documents relevant to word sense disambiguation for low-resourced languages from 2014 to early 2024 was obtained. The information was gathered using academic journals, research papers, and conference proceedings from IEEE Xplore, SCOPUS, and other sources. The scientists ensured that all documents in the dataset included metadata, including the timestamp, accuracy, word sense disambiguation techniques, and year of publication.

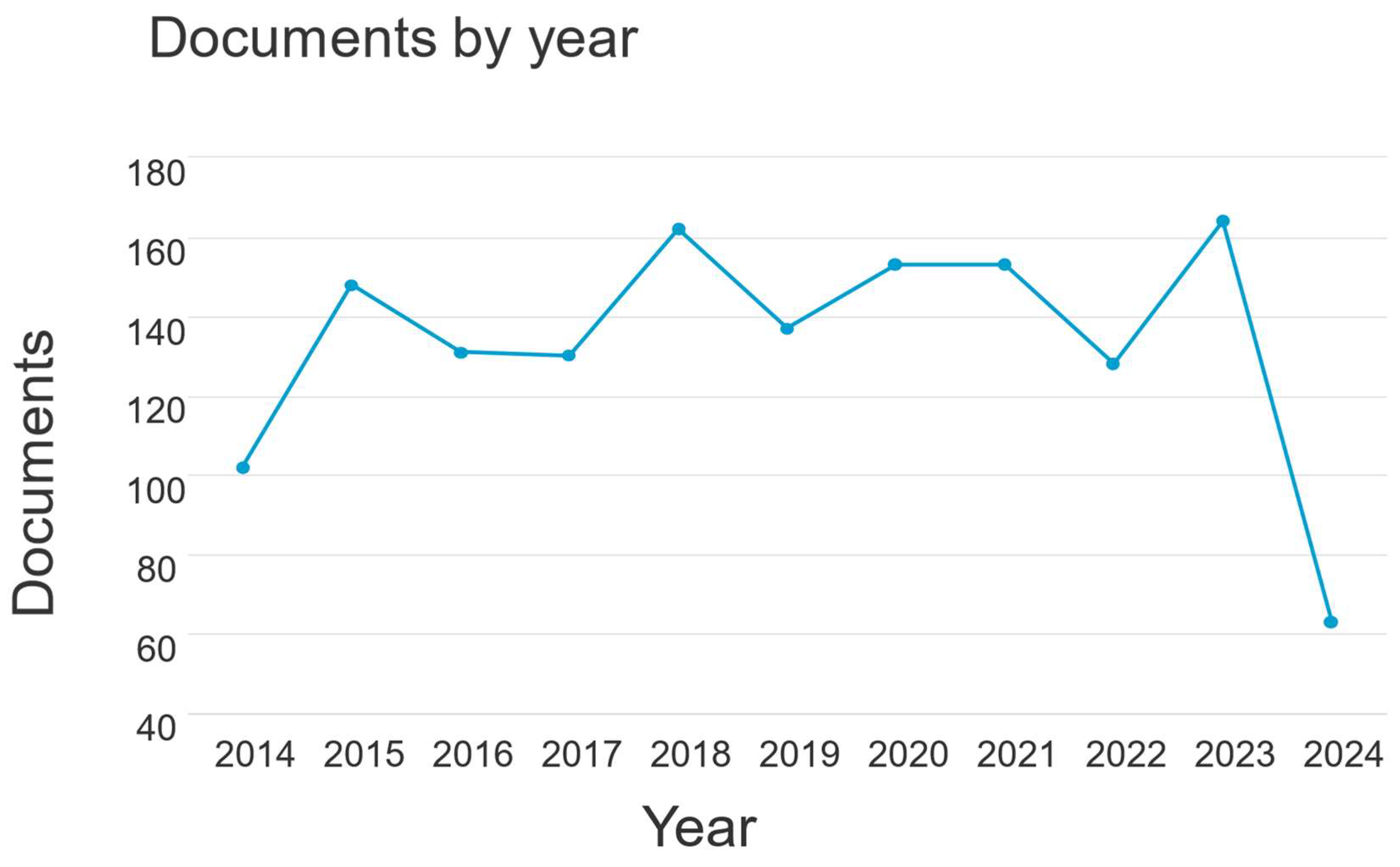

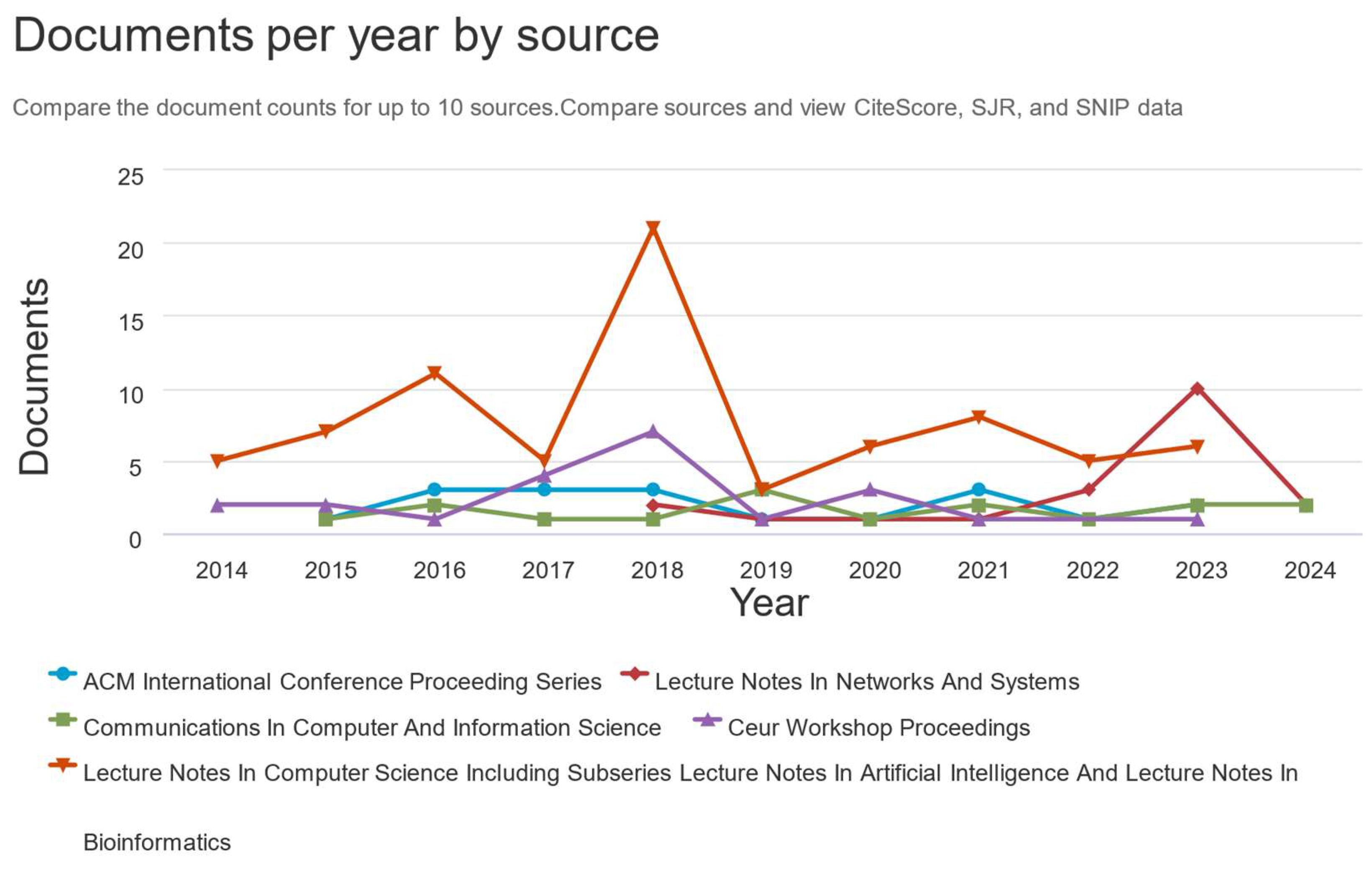

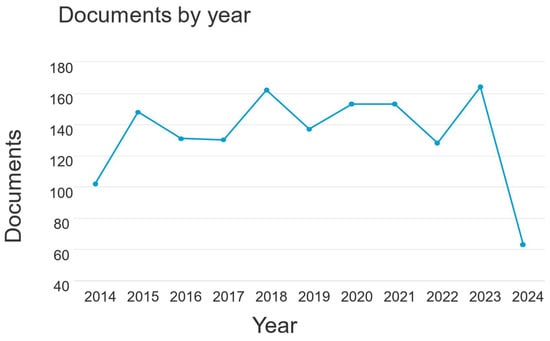

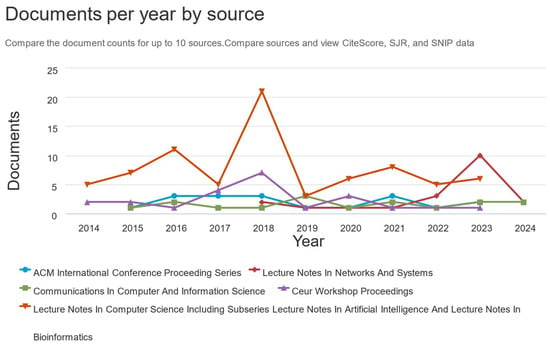

Figure 8 shows the publishing patterns for the years 2014 to 2024. Over 100 articles from 2015 to 2021 demonstrate substantial growth in interest in word sense disambiguation techniques. Nevertheless, there was a drop in publications in other years. Figure 9 shows a rise in publications in Lecture Notes in Computer Science, including the subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics. It should be mentioned that the research was conducted using data gathered as of early 2024. As a result, there is a good chance that more publications will appear in journals published in the later months of 2024.

Figure 8.

Publications by Year.

Figure 9.

Publication per year by source.

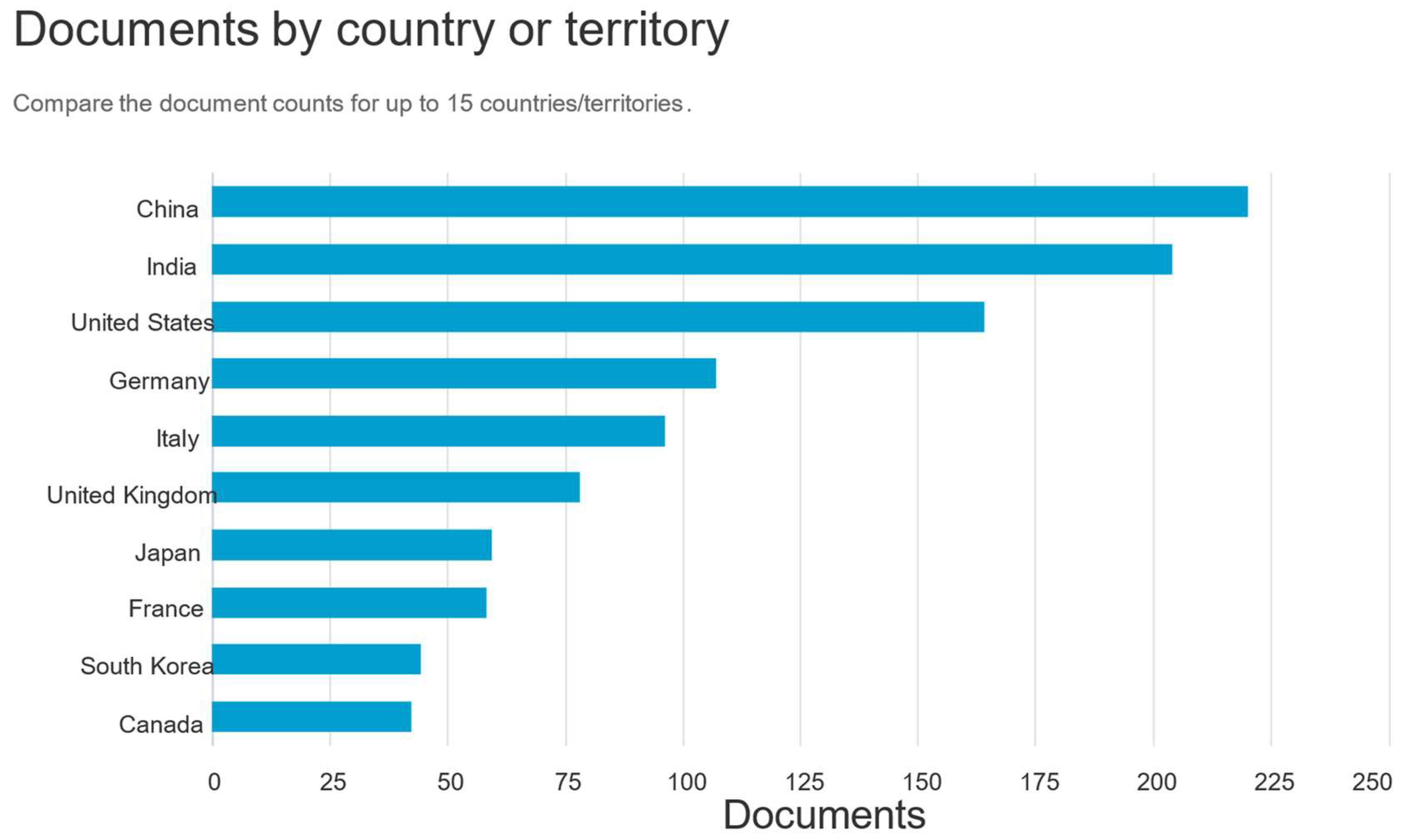

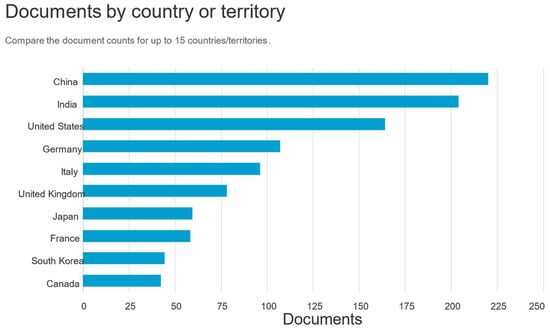

The researchers also methodically studied or looked at subjects within text data from different countries, as seen in Figure 10, providing insights into the discourse and thematic patterns particular to each country. The visual presentation demonstrates how research has progressed in word sense disambiguation for low-resourced languages. This analysis indicates that India is currently leading the way, followed by China and Spain.

Figure 10.

Word sense disambiguation by country.

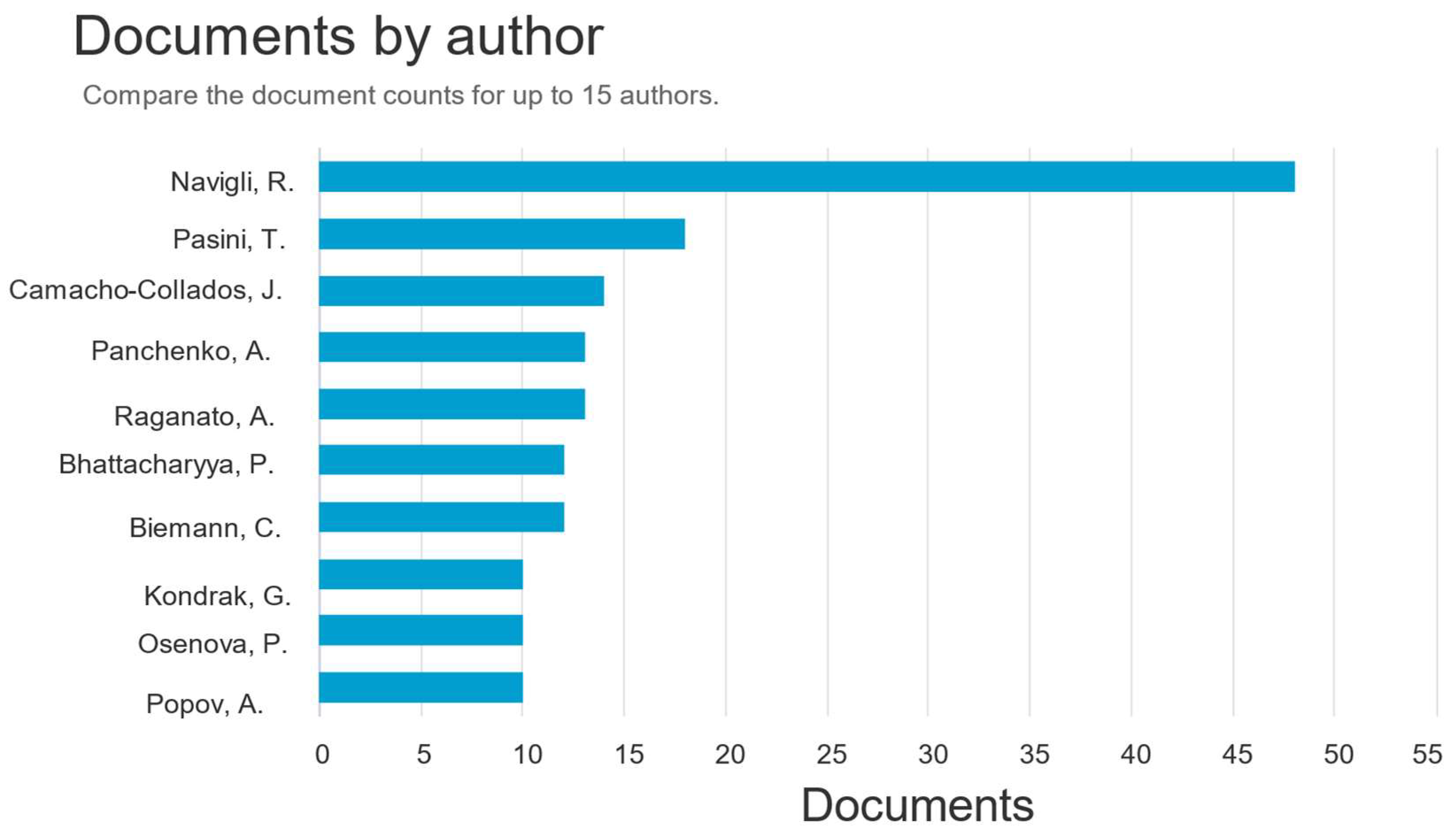

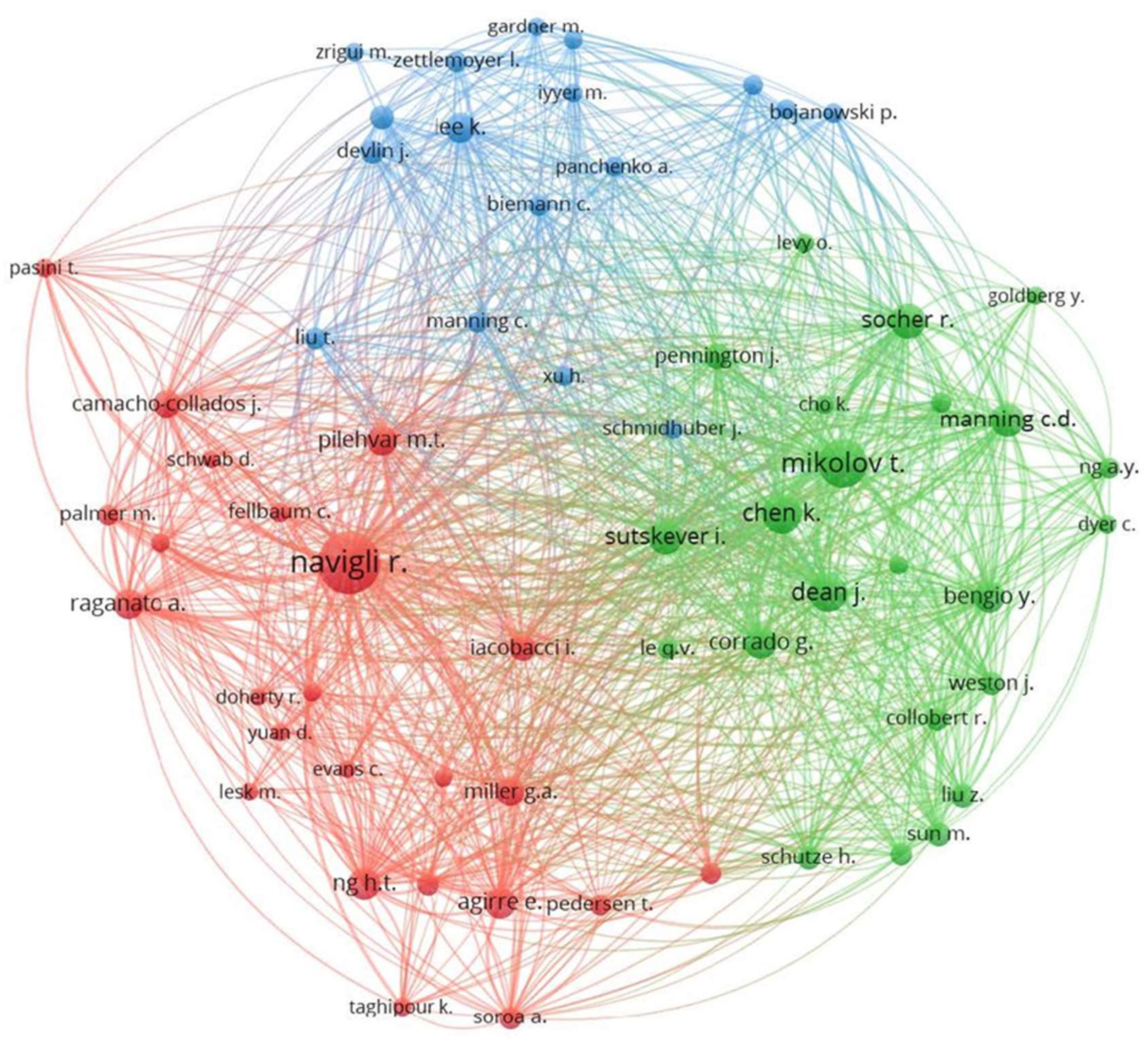

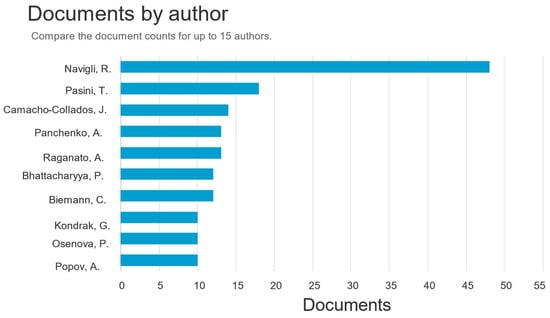

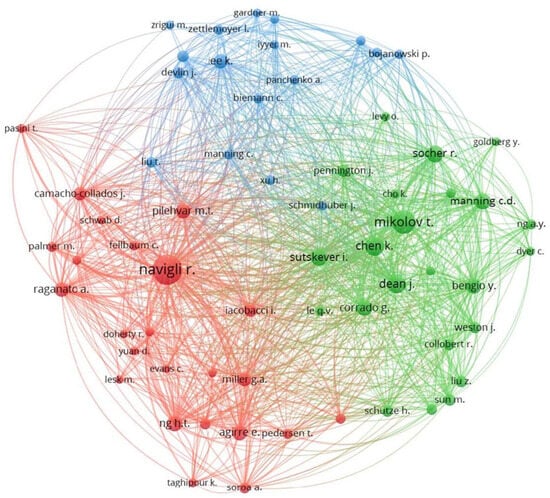

Figure 11 and Figure 12 demonstrate publications by authors for WSD in low-resourced languages. Professor Roberto Navigli has published more than 40 articles in Natural Language Processing, including word sense disambiguation for low-resourced languages. Professor Roberto Navigli is the creator of BabelNet, the largest multilingual encyclopedic computational dictionary. His research lies in Natural Language Processing, with a particular focus on multilingualism, lexical semantics (word sense disambiguation and induction), and sentence-level semantics (Semantic Role Labeling and Semantic Parsing). Professor Tommaso Pasini received his PhD in multilingual techniques to autonomously induce word sense distributions and build datasets for word sense disambiguation in many languages while working as a post-doc at the Sapienza NLP lab in Rome. To improve word sense disambiguation for languages with limited resources, Professor Tommaso Pasini and Professor Roberto Navigli co-authored several research articles.

Figure 11.

Word sense disambiguation by author.

Figure 12.

Bibliometric analysis by authors.

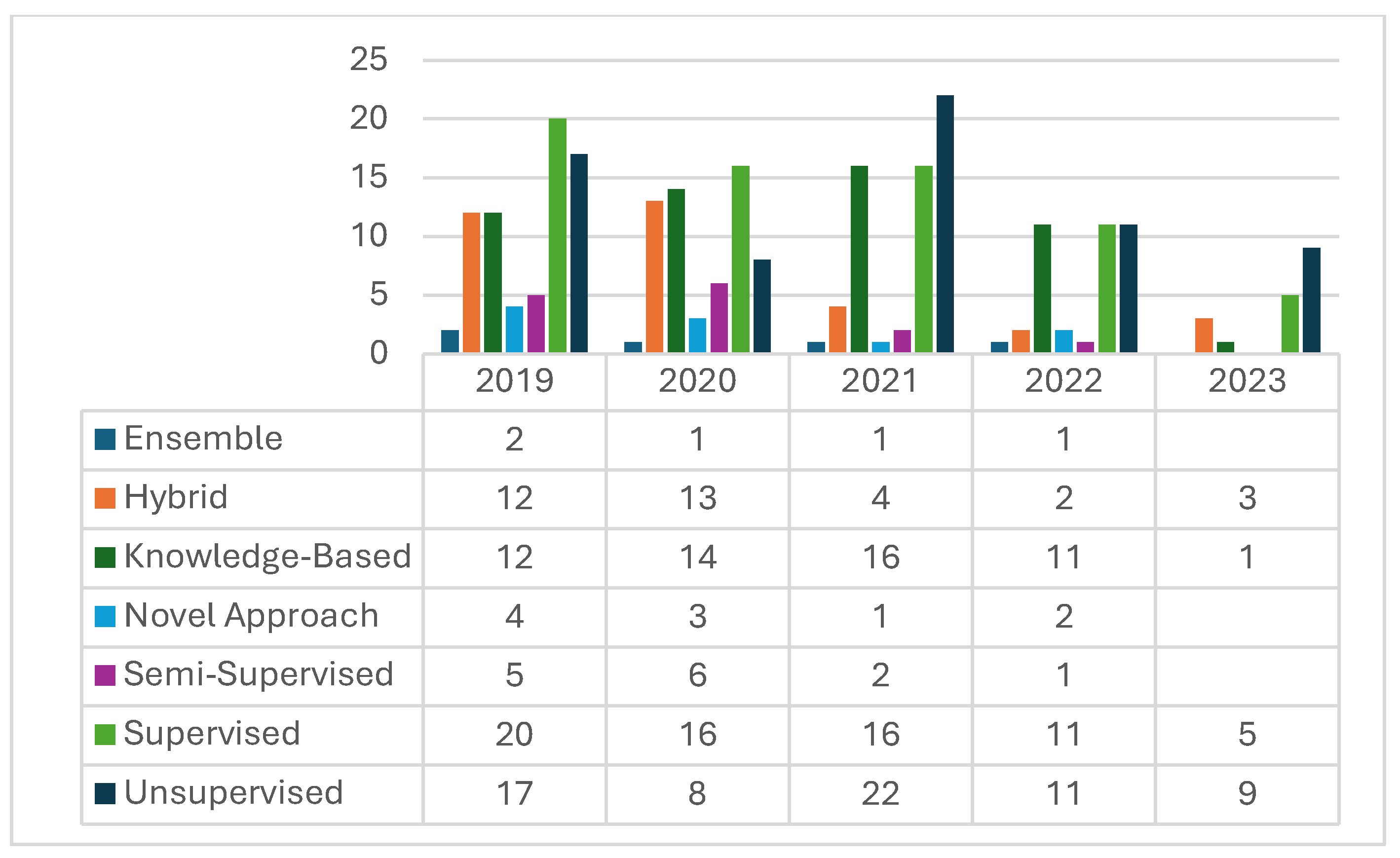

Figure 13 shows the publishing patterns for the years 2019 to 2023. There was a large increase in interest in word sense disambiguation methods, from two publications in 2019 to a little over 20 publications in 2021. The greatest number of studies is reported for the year 2021. The most generally employed techniques are those that combine a supervised and unsupervised approach; Figure 13 illustrates the growing popularity of this strategy over the years under investigation. It should be mentioned that the study was conducted using data that were gathered up until 2023. As a result, there is a good chance that more publications will appear in journals later in 2023.

Figure 13.

Approaches per year.

In 2019, the research articles retrieved from the Springer, IEEE Xplore, SCOPUS, and Google Scholar databases dealt primarily with supervised learning approaches for word sense disambiguation in low-resourced languages, with unsupervised learning coming in second. The number of publications produced by hybrid and knowledge-based approaches was comparable, but researchers in this discipline were not drawn to ensemble, innovative, or semi-supervised approaches. Though supervised learning remained at the forefront, the trend had changed from 2020 onward, with publications now advancing knowledge-based approaches and hybrid techniques. Unsupervised learning articles peaked in 2021 and appear to continue their upward trend in 2023. Articles published between 2019 and 2023 do not cover much deep learning or transformer architecture.

5. Conclusions

To statistically assess word sense disambiguation techniques based on supervised, unsupervised, and knowledge-based learning for low-resourced languages, this study used a systematic review and meta-analysis methodology. The meta-analysis was based on a database constructed from thirty-two scientific publications and covering several factors associated with these techniques. Effect sizes, heterogeneity, meta-regression analysis, and publication bias were all taken into consideration for the included research. The observed heterogeneity resulted from the varying sample sizes and methodologies that the earlier studies employed. Three basic approaches (supervised, unsupervised, and knowledge-based learning) were applied in the literature.

The following deductions are a result of the findings:

- The most popular approaches for WSD for languages with limited resources were the supervised and unsupervised approaches;

- Each study’s sample size for determining the accuracy of the WSD differed greatly, and the sample size was a significant factor in the heterogeneity. There is a significant negative correlation between the sample size and the WSD method’s accuracy: accuracy tends to decline as the sample size increases. This emphasizes how important it is to test WSD algorithms with many samples. There could be multiple reasons for this inverse relationship, including (1) overfitting and complexity: larger samples lessen overfitting, but they also increase variability, and accuracy may suffer if the model is not modified to accommodate this complexity; (2) data quality and diversity: expanding the sample size may introduce noisier or more diverse data, which could have a detrimental effect on accuracy if the model is unable to handle the extra variability; (3) model adaptation: the WSD approach may be unsuited to larger datasets; (4) evaluation sensitivity: larger datasets may reveal performance problems that are hidden in smaller datasets. Understanding these factors can aid in the development of methods, such as improved data preparation, model tuning, and handling of data variability, to increase WSD accuracy with larger samples;

- The study’s conclusions demonstrated the usefulness of the inclusion and exclusion criteria in minimizing bias by revealing the presence of heterogeneity and a negligible publication bias;

- Ultimately, the results of the meta-analysis demonstrated that the effectiveness of the many strategies put forth in the main research that was included was adequate to explore word sense disambiguation strategies for languages with limited resources.

This work’s meta-analysis helped to draw attention to the advancements in word sense disambiguation methods, with a focus on supervised, unsupervised, deep learning, and knowledge-based techniques. The significance of knowledge-based, supervised, unsupervised, and deep learning approaches is further supported by review findings. The meta-analysis makes it possible to provide clear, objective, and replicable summaries of word sense disambiguation methods. Considering the results, the study recognizes the significant correlation between sample size and word sense disambiguation accuracy for low-resourced languages. This meta-analysis makes it easier to understand the most current advancements in the field of study. It is thought to showcase state-of-the-art techniques now in use and, more significantly, to offer guidance for future research into cutting-edge techniques for word sense disambiguation.

The study may have been limited by the fact that the review was conducted on techniques that depend on supervised, unsupervised, knowledge-based learning models, semi-supervised, and transformer-based architecture models. Furthermore, as this study relies on literature from the SCOPUS, Springer, IEEE Xplore, and Google Scholar databases, it is recommended to do additional research using a variety of databases and performance evaluations other than accuracy.

Numerous prior research works on WSD in low-resource languages concentrate on surveying WSD and related fundamental issues, like the lack of annotated data, the efficacy of different approaches, and the requirement for language-specific modifications without meta-analysis. In addition to addressing the same issues as earlier research, the current systematic review and meta-analysis study offers a meta-analysis of how various WSD techniques function in low-resource scenarios. Both the current meta-analysis and earlier research assess a variety of WSD techniques, such as supervised, unsupervised, and semi-supervised methods. Consistent patterns or observations can be seen in the effectiveness of various strategies in low-resource environments. With the addition of more recent studies, the present meta-analysis reveals updated patterns, such as novel techniques that perform better across languages.

Because low-resource languages have limitations, previous research relied on conventional methodologies. Improved results are obtained from the current study since it uses more sophisticated methods, such as transformer-based architecture models and deep learning models. By using transformer-based designs, transfer learning, or domain adaptation techniques, the research builds on earlier investigations and may produce new or improved results.

Author Contributions

Conceptualization, H.D.M. and M.A.M.; methodology H.D.M. and M.A.M.; formal analysis H.D.M. and M.A.M.; data curation, H.D.M. and M.A.M.; writing—original draft preparation, H.D.M. and M.A.M.; writing—review and editing H.D.M. and M.A; visualization, H.D.M. and M.A.M.; supervision, S.O.O., F.G. and P.A.O.; project administration, H.D.M.; funding acquisition, M.A.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Research Foundation (NRF), grant number BAAP2204052075-PR-2023. Tshwane University of Technology has also availed funds for the research project.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The material used in this study is publicly available from the sourced databases (Accessed on 23 June 2024).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Farouk, G.M.; Ismail, S.S.; Aref, M.M. Transformer-Based Word Sense Disambiguation: Advancements, Impact, and Future Directions. In Proceedings of the 11th IEEE International Conference on Intelligent Computing and Information Systems, ICICIS 2023, Cairo, Egypt, 21–23 November 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 140–146.

- Srivastav, A.; Tayal, D.K.; Agarwal, N. A Novel Fuzzy Graph Connectivity Measure to Perform Word Sense Disambiguation Using Fuzzy Hindi WordNet. In Proceedings of the 3rd IEEE 2022 International Conference on Computing, Communication, and Intelligent Systems, ICCCIS 2022, Greater Noida, India, 4–5 November 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 648–654.

- Abdelaali, B.; Tlili-Guiassa, Y. Swarm optimization for Arabic word sense disambiguation based on English pre-trained word embeddings. In Proceedings of the ISIA 2022: International Symposium on Informatics and Its Applications, M’sila, Algeria, 29–30 November 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–6.

- Sert, B.; Elma, E.; Altinel, A.B. Enhancing the Performance of WSD Task Using Regularized GNNs With Semantic Diffusion. IEEE Access 2023, 11, 40565–40578.

- Zhang, C.X.; Shao, Y.L.; Gao, X.Y. Word Sense Disambiguation Based on RegNet With Efficient Channel Attention and Dilated Convolution. IEEE Access 2023, 11, 130733–130742.

- Nascimento, C.H.D.; Garcia, V.C.; Araújo, R.d.A. A Word Sense Disambiguation Method Applied to Natural Language Processing for the Portuguese Language. IEEE Open J. Comput. Soc. 2024, 5, 268–277.

- Gahankari, A.; Kapse, A.S.; Atique, M.; Thakare, V.; Kapse, A.S. Hybrid approach for Word Sense Disambiguation in Marathi Language. In Proceedings of the 2023 4th IEEE Global Conference for Advancement in Technology, GCAT 2023, Bangalore, India, 6–8 October 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–4.

- Huang, X.; Zhang, E.; Koh, Y.S. Supervised Clinical Abbreviations Detection and Normalisation Approach. In Proceedings of the 16th Pacific Rim International Conference on Artificial Intelligence, Proceedings, Part III, Cuvu, Yanuca Island, Fiji, 26–30 August 2019; pp. 691–703.

- Kokane, C.D.; Babar, S.D.; Mahalle, P.N. Word Sense Disambiguation for Large Documents Using Neural Network Model. In Proceedings of the 2021 12th International Conference on Computing Communication and Networking Technologies, ICCCNT 2021, Kharagpur, India, 6–8 July 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–5.

- Boruah, P. A Novel Approach to Word Sense Disambiguation for a Low-Resource Morphologically Rich Language. In Proceedings of the 2022 IEEE 6th Conference on Information and Communication Technology, CICT 2022, Gwalior, India, 18–20 November 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–6.

- Bis, D.; Zhang, C.; Liu, X.; He, Z. Layered Multistep Bidirectional Long Short-Term Memory Networks for Biomedical Word Sense Disambiguation. In Proceedings of the 2018 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Madrid, Spain, 3–6 December 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 313–320.

- Shafi, J.; Nawab, R.M.A.; Rayson, P. Semantic Tagging for the Urdu Language: Annotated Corpus and Multi-Target Classification Methods. ACM Trans. Asian Low Resour. Lang. Inf. Process. 2023, 22, 175. [Google Scholar] [CrossRef]

- Bakx, G.E. Machine Learning Techniques for Word Sense Disambiguation. Universitat Politècnica de Catalunya. 2006. Available online: https://www.lsi.upc.edu/~escudero/wsd/06-tesi.pdf (accessed on 27 June 2024).

- Pal, A.R.; Saha, D. Word Sense Disambiguation: A Survey. Int. J. Control Theory Comput. Model. 2015, 5, 1–16. [Google Scholar] [CrossRef]

- Hladek, D.; Stas, J.; Pleva, M.; Ondas, S.; Kovacs, L. Survey of the Word Sense Disambiguation and Challenges for the Slovak Language. In Proceedings of the 17th IEEE International Symposium on Computational Intelligence and Informatics, Budapest, Hungary, 23–26 May 2023; IEEE: Piscataway, NJ, USA, 2016; pp. 225–230. [Google Scholar] [CrossRef]

- Sarmah, J.; Sarma, S.K. Word Sense Disambiguation for Assamese. In Proceedings of the 2016 IEEE 6th International Conference on Advanced Computing (IACC), Bhimavaram, India, 27–28 February 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 146–151. [Google Scholar] [CrossRef]

- Zhang, C.; Biś, D.; Liu, X.; He, Z. Biomedical word sense disambiguation with bidirectional long short-term memory and attention-based neural networks. In Proceedings of the International Conference on Bioinformatics and Biomedicine 2018, Madrid, Spain, 3–6 December 2018; IEEE: Piscataway, NJ, USA, 2019; pp. 1–15. [Google Scholar] [CrossRef]

- Mohd, M.; Jan, R.; Hakak, N. Enhanced Bootstrapping Algorithm for Automatic Annotation of Tweets. Int. J. Cogn. Inform. Nat. Intell. 2021, 14, 35–60. [Google Scholar] [CrossRef]

- Jarray, F.; Saidi, R. Combining BERT Representation and POS Tagger for Arabic Word Sense Disambiguation. Intell. Syst. Des. Appl. ISDA 2021 2023, 418, 1–11. [Google Scholar] [CrossRef]

- Jaber, A.; Martínez, P. Disambiguating Clinical Abbreviations using Pre-trained Word Embeddings. Healthinf 2021, 5, 501–508. [Google Scholar] [CrossRef]

- Rios, A.; Müller, M.; Sennrich, R. The Word Sense Disambiguation Test Suite at WMT18. In Proceedings of the Third Conference on Machine Translation: Shared Task Papers 2018, Brussels, Belgium, 27 July 2018; pp. 588–596. [Google Scholar] [CrossRef]

- Ranjan, A.; Diganta, S.; Naskar, K.S.; Sekhar, N. In search of a suitable method for disambiguation of word senses in Bengali. Int. J. Speech Technol. 2021, 24, 439–454. [Google Scholar] [CrossRef]

- Singh, V.P.; Bhatia, P. Naive Bayes Classifier for Word Sense Disambiguation of Punjabi Language. Malays. J. Comput. Sci. 2018, 31, 188–199. [Google Scholar] [CrossRef]

- Aliwy, A.H.; Taher, H.A. Word Sense Disambiguation: Survey study. J. Comput. Sci. 2019, 15, 1004–1011. [Google Scholar] [CrossRef]

- Ranjan, A.; Diganta, S.; Sekhar, D.N.; Pal, A. Word Sense Disambiguation in Bangla Language Using Supervised Methodology with Necessary Modifications. J. Inst. Eng. Ser. B 2018, 99, 519–526. [Google Scholar] [CrossRef]

- Singh, V.P.; Kumar, P. Sense disambiguation for Punjabi language using supervised machine learning techniques. Sādhanā 2019, 44, 2269. [Google Scholar] [CrossRef]

- Jain, G.; Lobiyal, D.K. Word sense disambiguation using implicit information. Nat. Lang. Eng. 2020, 26, 413–432. [Google Scholar] [CrossRef]

- Jha, P.; Agarwal, S.; Abbas, A.; Siddiqui, T.J. A Novel Unsupervised Graph-Based Algorithm for Hindi Word Sense Disambiguation. SN Comput. Sci. 2023, 4, 675. [Google Scholar] [CrossRef]

- Chasin, R.; Rumshisky, A.; Uzuner, O.; Szolovits, P. Word sense disambiguation in the clinical domain: A comparison of knowledge-rich and knowledge-poor unsupervised methods. J. Am. Med. Inf. Assoc. 2014, 21, 792–800. [Google Scholar] [CrossRef] [PubMed]

- Jha, P.; Agarwal, S.; Abbas, A.; Siddiqui, T. Comparative Analysis of Path-based Similarity Measures for Word Sense Disambiguation. In Proceedings of the 2023 3rd International Conference on Artificial Intelligence and Signal Processing (AISP), Vijayawada, India, 18–20 March 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–5. [Google Scholar] [CrossRef]

- Hou, B.; Qi, F.; Zang, Y.; Zhang, X.; Liu, Z.; Sun, M. Try to Substitute: An Unsupervised Chinese Word Sense Disambiguation Method Based on HowNet. In Proceedings of COLING 2020, the 28th International Conference on Computational Linguistics, Barcelona, Spain, 8–13 December 2020; International Committee on Computational Linguistics: New York, NY, USA, 2020; pp. 1752–1757. [Google Scholar] [CrossRef]

- Alian, M.; Awajan, A. Sense Inventories for Arabic Texts. In Proceedings of the 21st International Arab Conference on Information Technology (ACIT), Giza, Egypt, 6–8 December 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 3–6. [Google Scholar] [CrossRef]

- Pal, A.R.; Saha, D.; Naskar, S.K. Word Sense Disambiguation in Bengali: A Knowledge based Approach using Bengali WordNet. In Proceedings of the 2017 Second International Conference on Electrical, Computer and Communication Technologies (ICECCT), Coimbatore, India, 22–24 February 2017; IEEE: Piscataway, NJ, USA, 2017. [Google Scholar] [CrossRef]

- Meng, F. Word Sense Disambiguation Based on Graph and Knowledge Base. In Proceedings of the 4th EAI International Conference on Robotic Sensor Networks, online, 21–22 November 2022; Springer: Cham, Switzerland, 2022; pp. 31–41. [Google Scholar] [CrossRef]

- Neeraja, K.; Rani, B.P. Approaches for Word Sense Disambiguation: Current State of The Art. Int. J. Electron. Commun. Comput. Eng. 2015, 6, 197–201. Available online: https://www.ijecce.org (accessed on 27 June 2024).

- Arbaaeen, A.; Shah, A. A knowledge-based sense disambiguation method to semantically enhanced NL question for restricted domain. Information 2021, 12, 452. [Google Scholar] [CrossRef]

- Choi, Y.; Wiebe, J.; Mihalcea, R. Coarse-Grained +/−Effect Word Sense Disambiguation for Implicit Sentiment Analysis. IEEE Trans. Affect. Comput. 2017, 8, 471–479. [Google Scholar] [CrossRef]

- Jia, Y.; Li, Y.; Zan, H. Acquiring Selectional Preferences for Knowledge Base. CLSW 2018, 10709, 275–283. [Google Scholar] [CrossRef]

- Godinez, E.V.; Szlávik, Z.; Contempré, E.; Sips, R.J. What do you mean, doctor? A knowledge-based approach for word sense disambiguation of medical terminology. In Proceedings of the 14th International Conference on Health Informatics, Vienna, Austria, 11–13 February 2021; Volume 5, pp. 273–280. [Google Scholar] [CrossRef]

- Popov, A.; Simov, K.; Osenova, P. Know your graph. State-of-the-art knowledge-based WSD. In Proceedings of the International Conference Recent Advances in Natural Language Processing, RANLP, Varna, Bulgaria, 4–6 September 2023; ACL Anthology: Shoumen, Bulgaria, 2019; pp. 949–958. [Google Scholar] [CrossRef]

- Sharma, P.; Joshi, N. Knowledge-Based Method for Word Sense Disambiguation by Using Hindi WordNet. Eng. Technol. Appl. Sci. Res. 2019, 9, 3985–3989. [Google Scholar] [CrossRef]

- Rouhizadeh, H.; Shamsfard, M.; Rouhizadeh, M. Knowledge Based Word Sense Disambiguation with Distributional. In Proceedings of the 10th International Conference on Computer and Knowledge Engineering (ICCKE2020), Mashhad, Iran, 29–30 October 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 329–335. [Google Scholar] [CrossRef]

- Demlew, G.; Yohannes, D. Resolving Amharic Lexical Ambiguity using Neural Word Embedding. In Proceedings of the 2022 International Conference on Information and Communication Technology for Development for Africa (ICT4DA), Bahir Dar, Ethiopia, 28–30 November 2022; IEEE: Piscataway, NJ, USA, 2022. [Google Scholar] [CrossRef]

- Fard, M.H.; Fakhrahmad, S.M.; Sadreddini, M. Word Sense Disambiguation based on Gloss Expansion. In Proceedings of the 2014 6th Conference on Information and Knowledge Technology (IKT), Shahrood, Iran, 28–30 May 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 7–10. [Google Scholar] [CrossRef]

- Sabbir, A.K.M.; Jimeno-Yepes, A.; Kavuluru, R. Knowledge-Based Biomedical Word Sense Disambiguation with Neural Concept Embeddings. In Proceedings of the IEEE 17th International Conference on Bioinformatics and Bioengineering (BIBE), Washington, DC, USA, 23–25 October 2017; IEEE: Piscataway, NJ, USA, 2018; pp. 63–170. [Google Scholar] [CrossRef]

- Dhungana, U.R.; Shakya, S. Word sense disambiguation using PolyWordNet. In Proceedings of the 2016 International Conference on Inventive Computation Technologies (ICICT), Coimbatore, India, 26–27 August 2016; IEEE: Piscataway, NJ, USA, 2017; pp. 1–6. [Google Scholar] [CrossRef]

- Hari, A.; Kumar, P. WSD based Ontology Learning from Unstructured Text using Transformer. In Procedia Computer Science; Elsevier: Amsterdam, The Netherlands, 2022; pp. 367–374. [Google Scholar] [CrossRef]

- Butnaru, A.M.; Ionescu, R.T. ShotgunWSD 2.0: An Improved Algorithm for Global Word Sense Disambiguation. IEEE Access 2019, 7, 120961–120975. [Google Scholar] [CrossRef]

- Karnik, M.; Mishra, V.; Gaikwad, V.; Wankhede, D.; Chougale, P.; Pophale, V.; Zope, A.; Maski, C. State of the Art Analysis of Word Sense Disambiguation (ICICSD). Int. Conf. Intell. Comput. Sustain. Dev. 2024, 2122, 55–70. [Google Scholar] [CrossRef]

- Al-Hajj, M.; Jarrar, M. ArabGlossBERT: Fine-Tuning BERT on Context-Gloss Pairs for WSD. Comput. Lang. 2022, 40–48. [Google Scholar] [CrossRef]

- Yusuf, M.; Surana, P.; Sharma, C. HindiWSD: A Package for Word Sense Disambiguation in Hinglish & Hindi. In Proceedings of the WILDRE-6 Workshop @LREC2022; Girish Nath Jha, A.K.O., Sobha, L., Bali, K., Eds.; European Language Resources Association (ELRA): Marseille, France, 2022; pp. 18–23. Available online: https://aclanthology.org/2022.wildre-1.4/ (accessed on 27 June 2024).

- Gujjar, V.; Mago, N.; Kumari, R.; Patel, S.; Chintalapudi, N.; Battineni, G. A Literature Survey on Word Sense Disambiguation for the Hindi Language. Information 2023, 14, 495. [Google Scholar] [CrossRef]

- Pal, A.R.; Saha, D. Word Sense Disambiguation in Bengali language using unsupervised methodology with modifications. Sādhanā 2019, 44, 168. [Google Scholar] [CrossRef]

- Torunoğlu-Selamet, D.; İnceoğlu, A.; Eryiğit, G. Preliminary Investigation on Using Semi-Supervised Contextual Word Sense Disambiguation for Data Augmentation. In Proceedings of the 2020 5th International Conference on Computer Science and Engineering (UBMK), Diyarbakir, Turkey, 9–11 September 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 4–9. [Google Scholar] [CrossRef]

- Alessio, I.D.; Quaglieri, A.; Burrai, J.; Pizzo, A.; Mari, E.; Aitella, U.; Lausi, G.; Tagliaferri, G.; Cordellieri, P.; Giannini, A.M.; et al. ‘Leading through Crisis’: A Systematic Review of Institutional Decision-Makers in Emergency Contexts. Behav. Sci. 2024, 14, 481. [Google Scholar] [CrossRef]

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71. [Google Scholar] [CrossRef]

- Necula, S.C.; Dumitriu, F.; Greavu-Șerban, V. A Systematic Literature Review on Using Natural Language Processing in Software Requirements Engineering. Electronics 2024, 13, 2055. [Google Scholar] [CrossRef]

- Albaroudi, E.; Mansouri, T.; Alameer, A. A Comprehensive Review of AI Techniques for Addressing Algorithmic Bias in Job Hiring. AI 2024, 5, 383–404. [Google Scholar] [CrossRef]

- Thompson, R.C.; Joseph, S.; Adeliyi, T.T. A Systematic Literature Review and Meta-Analysis of Studies on Online Fake News Detection. Information 2022, 13, 527. [Google Scholar] [CrossRef]

- Iomdin, B.; Lopukhina, A.; Lopukhin, K.; Nosyrev, G. Word sense frequency of similar polysemous words in different languages. In Computational Linguistics and Intellectual Technologies: Proceedings of the International Conference “Dialogue 2016”, Moscow, Russia, 1–4 June 2016; Association for Computational Linguistics: Stroudsburg, PA, USA, 2016; pp. 214–22564742146. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).