AquaVision: AI-Powered Marine Species Identification

Abstract

1. Introduction

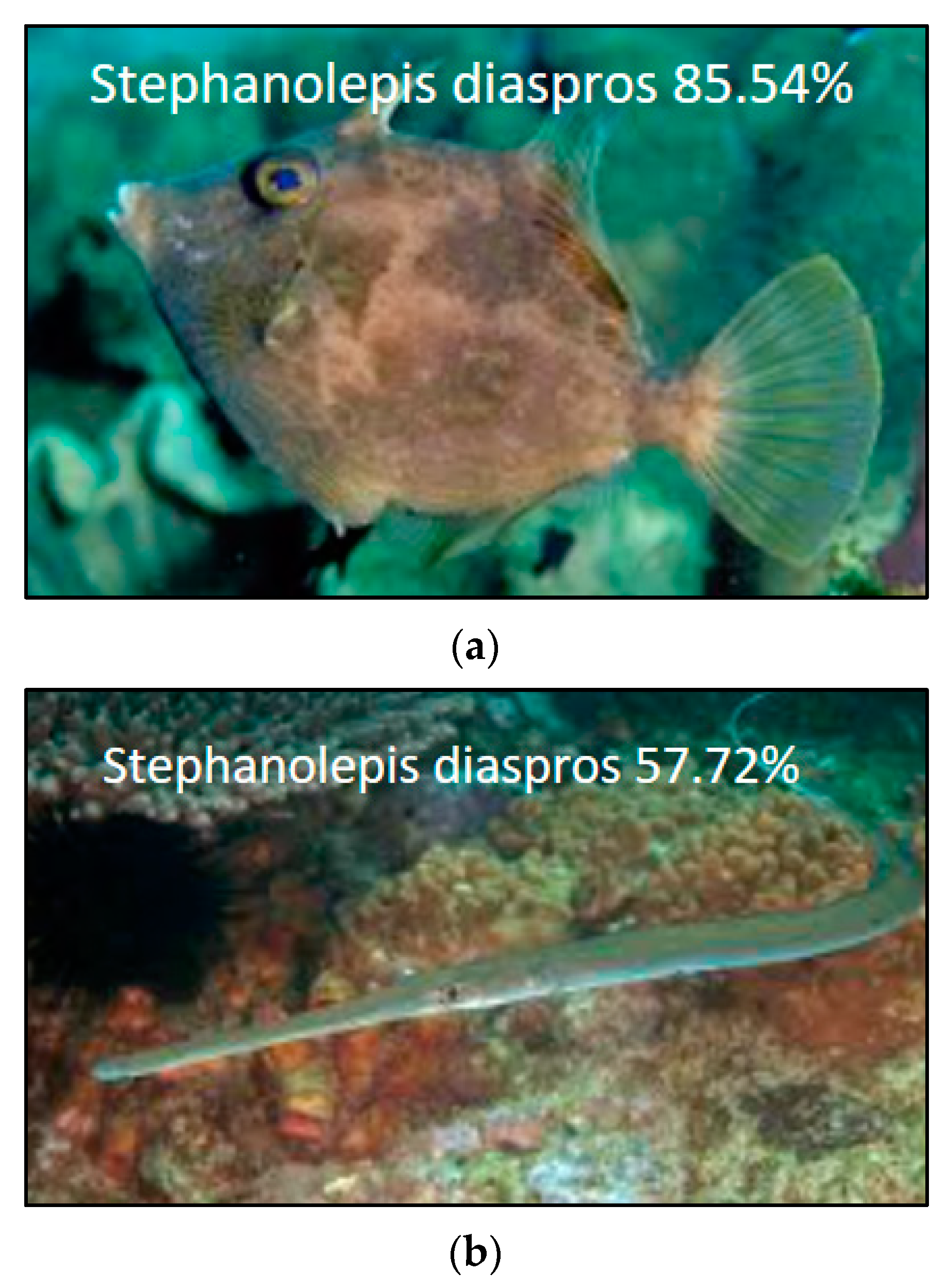

2. Materials and Methods

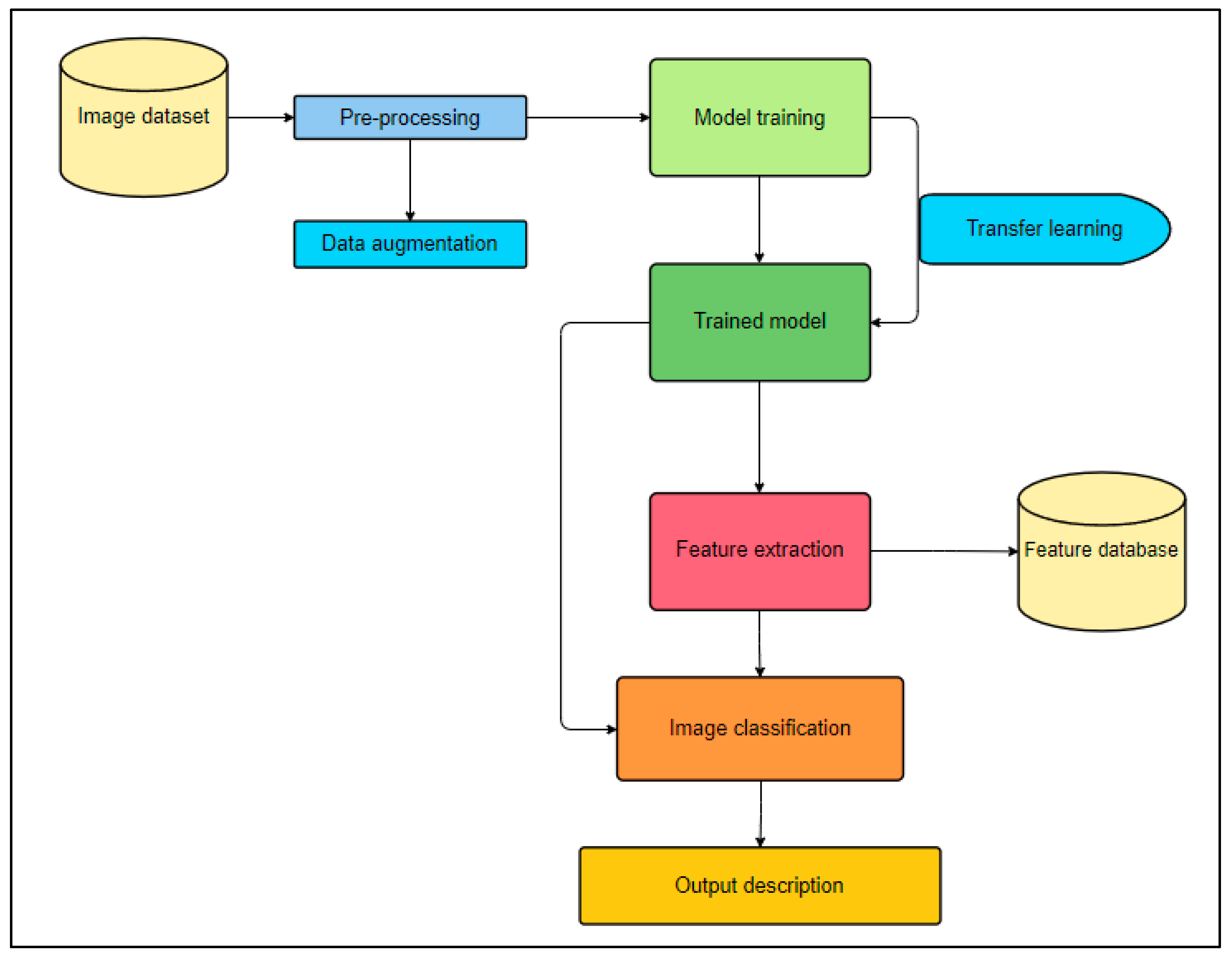

2.1. Framework

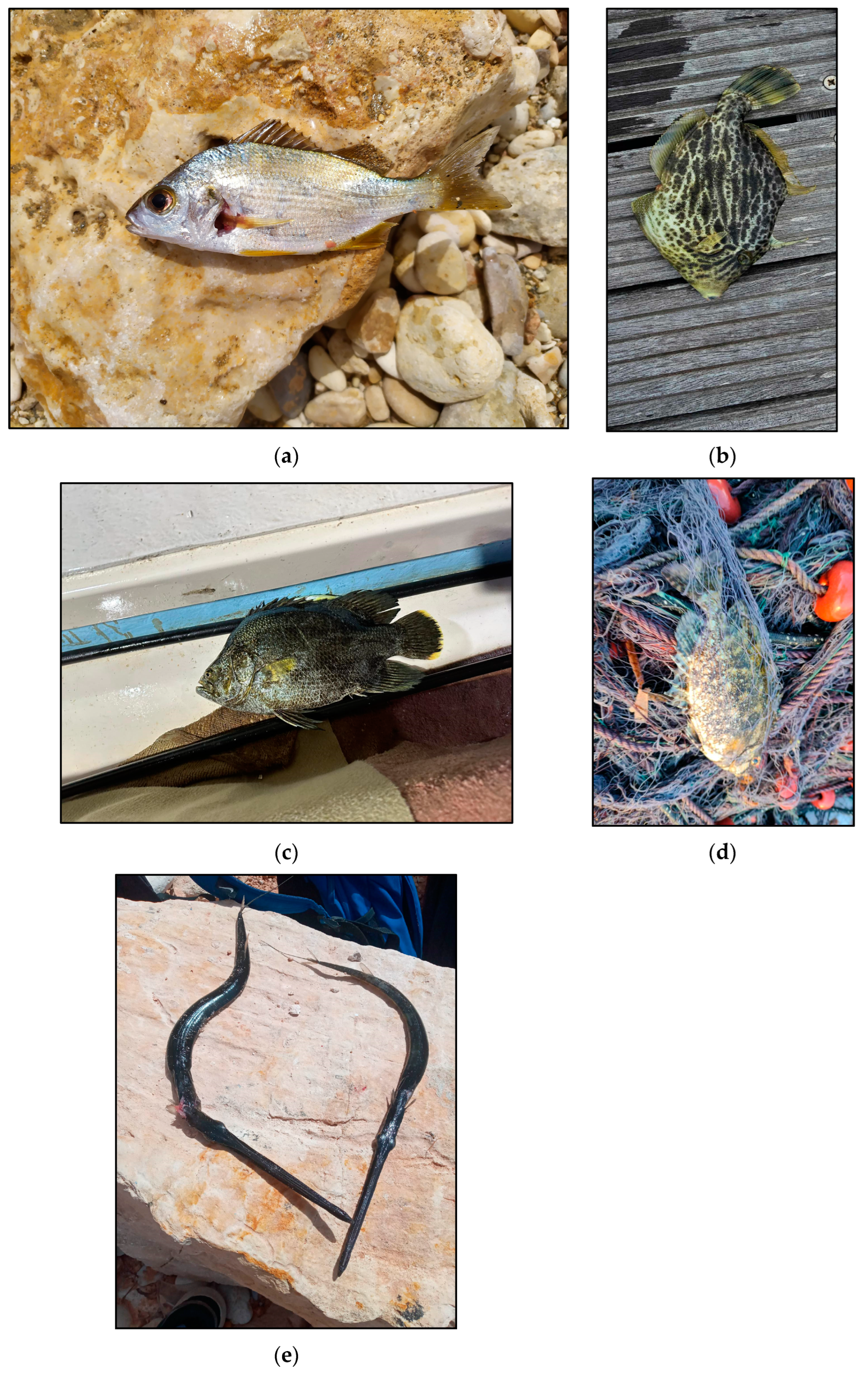

2.2. Generation of Image Dataset

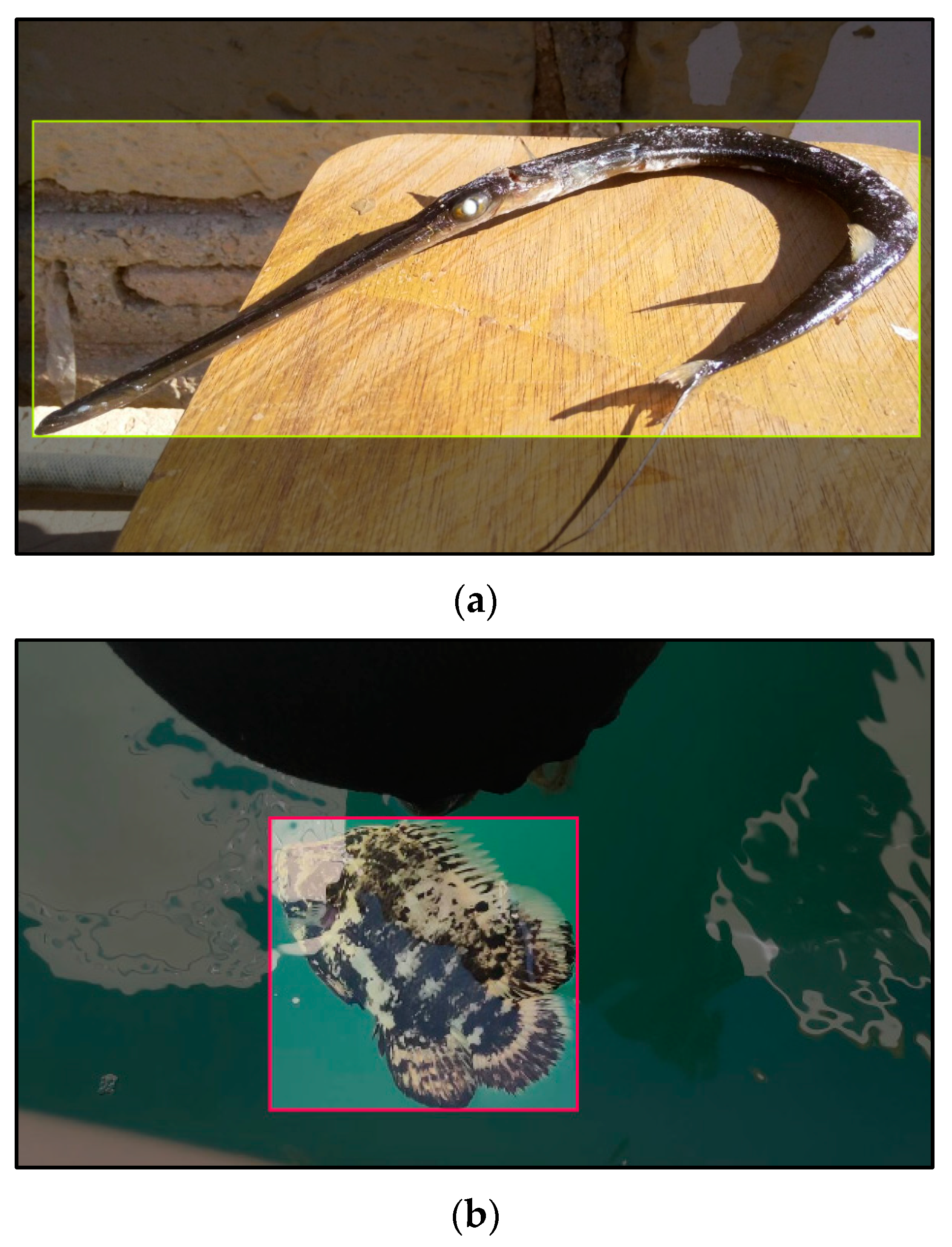

2.3. Development of the Classification Models

3. Results

3.1. Confusion Matrices

3.2. Error Metrics

3.3. Model Performance Metrics

3.4. Discussion

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Fleuré, V.; Magneville, C.; Mouillot, D.; Sébastien, V. Automated identification of invasive rabbitfishes in underwater images from the Mediterranean Sea. Aquat. Conserv. 2024, 34, e4073.

- Azzurro, E.; Smeraldo, S.; D’Amen, M. Spatio-temporal dynamics of exotic fish species in the Mediterranean Sea: Over a century of invasion reconstructed. Glob. Change Biol. 2022, 28, 6268–6279.

- Shaltout, M.; Omstedt, A. Recent sea surface temperature trends and future scenarios for the Mediterranean Sea. Oceanologia 2014, 56, 411–443.

- Ovalle, J.C.; Vilas, C.; Antelo, L.T. On the use of deep learning for fish species recognition and quantification on board fishing vessels. Mar. Policy 2022, 139, 105015.

- Evans, J.; Barbara, J.; Schembri, P.J. Updated review of marine alien species and other “newcomers” recorded from the Maltese Islands (Central Mediterranean). Mediterr. Mar. Sci. 2015, 16, 225.

- Li, J.; Gray, R.M.; Olshen, R.A. Multiresolution image classification by hierarchical modeling with two-dimensional hidden Markov models. IEEE Trans. Inf. Theory 2000, 46, 1826–1841.

- Riley, S. Preventing Transboundary Harm From Invasive Alien Species. Rev. Eur. Community Int. Environ. Law 2009, 18, 198–210.

- Occhipinti-Ambrogi, A.; Galil, B. Marine alien species as an aspect of global change. Adv. Oceanogr. Limnol. 2010, 1, 199–218.

- Galil, B.S. Loss or gain? Invasive aliens and biodiversity in the Mediterranean Sea. Mar. Pollut. Bull. 2007, 55, 314–322.

- Galanidi, M.; Zenetos, A.; Bacher, S. Assessing the socio-economic impacts of priority marine invasive fishes in the Mediterranean with the newly proposed SEICAT methodology. Mediterr. Mar. Sci. 2018, 19, 107.

- Azzurro, E.; Soto, S.; Garofalo, G.; Maynou, F. Fistularia commersonii in the Mediterranean Sea: Invasion history and distribution modelling based on presence-only records. Biol. Invasions 2012, 15, 977–990.

- Deidun, A.; Vella, P.; Sciberras, A.; Sammut, R. New records of Lobotes surinamensis (Bloch, 1790) in Maltese coastal waters. Aquat. Invasions 2010, 5 (Suppl. 1), S113–S116.

- Pešić, A.; Marković, O.; Joksimović, A.; Ćetković, I.; Jevremović, A. Invasive Marine Species in Montenegro Sea Waters. In The Handbook of Environmental Chemistry; Springer: Berlin/Heidelberg, Germany, 2020; pp. 547–572.

- Xu, L.; Bennamoun, M.; An, S.; Sohel, F.; Boussaid, F. Deep Learning for Marine Species Recognition. In Smart Innovation, Systems and Technologies; Springer: Berlin/Heidelberg, Germany, 2019; pp. 129–145.

- Villon, S.; Mouillot, D.; Chaumont, M.; Darling, E.S.; Subsol, G.; Claverie, T.; Villéger, S. A Deep learning method for accurate and fast identification of coral reef fishes in underwater images. Ecol. Inform. 2018, 48, 238–244.

- Catalán, I.A.; Álvarez-Ellacuría, A.; Lisani, J.-L.; Sánchez, J.; Vizoso, G.; Heinrichs-Maquilón, A.E.; Hinz, H.; Alós, J.; Signarioli, M.; Aguzzi, J.; et al. Automatic detection and classification of coastal Mediterranean fish from underwater images: Good practices for robust training. Front. Mar. Sci. 2023, 10, 1151758.

- Gauci, A.; Deidun, A.; Abela, J. Automating Jellyfish Species Recognition through Faster Region-Based Convolution Neural Networks. Appl. Sci. 2020, 10, 8257.

- Rum, S.N.M.; Az, F. FishDeTec: A Fish Identification Application using Image Recognition Approach. Int. J. Adv. Comput. Sci. Appl. 2021, 12, 102–106.

- Barbedo, J.G.A. A Review on the Use of Computer Vision and Artificial Intelligence for Fish Recognition, Monitoring, and Management. Fishes 2022, 7, 335.

- Hassoon, M.I. Fish Species Identification Techniques: A Review. Al-Nahrain J. Sci. 2022, 25, 39–44.

- Ma, Y.-X.; Zhang, P.; Tang, Y. Research on Fish Image Classification Based on Transfer Learning and Convolutional Neural Network Model. In Proceedings of the 2018 14th International Conference on Natural Computation, Fuzzy Systems and Knowledge Discovery (ICNC-FSKD), Huangshan, China, 28–30 July 2018.

- Wäldchen, J.; Mäder, P. Machine learning for image based species identification. Methods Ecol. Evol. 2018, 9, 2216–2225.

- Fawzi, A.; Samulowitz, H.; Turaga, D.; Frossard, P. Adaptive data augmentation for image classification. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 3688–3692.

- Marom, N.D.; Rokach, L.; Shmilovici, A. Using the confusion matrix for improving ensemble classifiers. In Proceedings of the 2010 IEEE 26th Convention of Electrical and Electronics Engineers in Israel, Eilat, Israel, 17–20 November 2010; pp. 555–559.

- Villon, S.; Iovan, C.; Mangeas, M.; Claverie, T.; Mouillot, D.; Villéger, S.; Vigliola, L. Automatic underwater fish species classification with limited data using few-shot learning. Ecol. Inform. 2021, 63, 101320.

| Species | Number of Training Images | Number of Validation Images | Number of Testing Images |
|---|---|---|---|
| Fistularia commersonii | 277 | 79 | 41 |
| Lobotes surinamensis | 203 | 58 | 30 |
| Pomadasys incisus | 123 | 35 | 19 |
| Siganus luridus | 177 | 50 | 26 |
| Stephanolepis diaspros | 153 | 43 | 23 |
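Every count in the table above is reproduced by a per-species 70/20/10 split in which the training and validation sizes are rounded down and the testing set takes the remainder (for Fistularia commersonii, 397 images give 277, 79 and 41). The extracted text does not state the ratio or the splitting procedure, so the Python sketch below is an assumption rather than the authors' pipeline: `split_counts` and `stratified_split` are hypothetical helpers, and the shuffle seed is arbitrary.

```python
import random
from collections import defaultdict


def split_counts(n, train_pct=70, val_pct=20):
    """Return (train, val, test) sizes for n images of one species.

    Assumed rule: train and val are floored percentages of n, test gets the remainder.
    Integer arithmetic avoids floating-point rounding surprises.
    """
    n_train = n * train_pct // 100
    n_val = n * val_pct // 100
    return n_train, n_val, n - n_train - n_val


def stratified_split(labelled_paths, seed=0):
    """Split (path, species) pairs separately for each species (hypothetical helper)."""
    by_species = defaultdict(list)
    for path, species in labelled_paths:
        by_species[species].append(path)

    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    splits = {"train": [], "val": [], "test": []}
    for species, paths in sorted(by_species.items()):
        paths = sorted(paths)  # deterministic order before shuffling
        rng.shuffle(paths)
        n_train, n_val, _ = split_counts(len(paths))
        splits["train"] += [(p, species) for p in paths[:n_train]]
        splits["val"] += [(p, species) for p in paths[n_train:n_train + n_val]]
        splits["test"] += [(p, species) for p in paths[n_train + n_val:]]
    return splits


# Per-species totals taken from the table (training + validation + testing).
totals = {
    "Fistularia commersonii": 397,
    "Lobotes surinamensis": 291,
    "Pomadasys incisus": 177,
    "Siganus luridus": 253,
    "Stephanolepis diaspros": 219,
}
for species, n in totals.items():
    print(species, split_counts(n))
```

Splitting within each species, rather than over the pooled image list, keeps the class proportions of the three subsets the same, which matters here because the smallest class (Pomadasys incisus, 177 images) has fewer than half the images of the largest (Fistularia commersonii, 397).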

| Model | Species | Actual: Fistularia commersonii | Actual: Lobotes surinamensis | Actual: Pomadasys incisus | Actual: Siganus luridus | Actual: Stephanolepis diaspros |
|---|---|---|---|---|---|---|
| TF.Keras | Fistularia commersonii | 24 | 2 | 8 | 4 | 3 |
| | Lobotes surinamensis | 4 | 20 | 3 | 1 | 2 |
| | Pomadasys incisus | 3 | 2 | 11 | 1 | 2 |
| | Siganus luridus | 4 | 2 | 3 | 12 | 5 |
| | Stephanolepis diaspros | 3 | 0 | 3 | 5 | 12 |
| ResNet18 | Fistularia commersonii | 40 | 0 | 0 | 1 | 0 |
| | Lobotes surinamensis | 1 | 28 | 0 | 0 | 1 |
| | Pomadasys incisus | 0 | 0 | 18 | 1 | 0 |
| | Siganus luridus | 1 | 1 | 1 | 21 | 2 |
| | Stephanolepis diaspros | 1 | 2 | 0 | 0 | 20 |
| YOLO v8 * | Fistularia commersonii | 32 | 0 | 0 | 0 | 0 |
| | Lobotes surinamensis | 2 | 17 | 0 | 4 | 1 |
| | Pomadasys incisus | 1 | 0 | 9 | 1 | 0 |
| | Siganus luridus | 1 | 0 | 6 | 20 | 0 |
| | Stephanolepis diaspros | 1 | 1 | 0 | 0 | 15 |
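The per-class metrics reported in the performance table can be derived from these confusion matrices. Although the header places "Actual" over the columns, each row of the TF.Keras and ResNet18 blocks sums to the testing count for that species (41, 30, 19, 26, 23), so the Python sketch below assumes rows are the true species and columns the predictions. Under that assumption it reproduces the published TF.Keras precision, recall, F1 and accuracy; `per_class_metrics` is a hypothetical helper written for illustration.

```python
import numpy as np

SPECIES = [
    "Fistularia commersonii",
    "Lobotes surinamensis",
    "Pomadasys incisus",
    "Siganus luridus",
    "Stephanolepis diaspros",
]

# TF.Keras block of the confusion matrix, copied row by row.
# Assumption: rows = true species, columns = predicted species.
tf_keras_cm = np.array([
    [24, 2, 8, 4, 3],
    [4, 20, 3, 1, 2],
    [3, 2, 11, 1, 2],
    [4, 2, 3, 12, 5],
    [3, 0, 3, 5, 12],
])


def per_class_metrics(cm):
    """Return per-class precision, recall, F1 and overall accuracy.

    Assumes every class is predicted at least once (no zero column sums).
    """
    tp = np.diag(cm).astype(float)  # correct predictions per class
    predicted = cm.sum(axis=0)      # column sums: how often each class was predicted
    actual = cm.sum(axis=1)         # row sums: test images belonging to each class
    precision = tp / predicted
    recall = tp / actual
    f1 = 2 * precision * recall / (precision + recall)  # harmonic mean
    accuracy = tp.sum() / cm.sum()
    return precision, recall, f1, accuracy


precision, recall, f1, accuracy = per_class_metrics(tf_keras_cm)
for name, p, r, f in zip(SPECIES, precision, recall, f1):
    print(f"{name:24s} P={p:.2f} R={r:.2f} F1={f:.2f}")
print(f"accuracy={accuracy:.2f}")
```

Applied to the YOLO v8 block, the same function also matches the published values (for example, 93 correct out of 111 test images gives an accuracy of about 0.84). For the ResNet18 block it returns the published precision and recall swapped (for Siganus luridus, 21/23 ≈ 0.91 as precision and 21/26 ≈ 0.81 as recall), which suggests that block was scored with the opposite orientation.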

| Model | Species | Precision | Recall | F1 Score | Accuracy |
|---|---|---|---|---|---|
| TF.Keras | Fistularia commersonii | 0.63 | 0.59 | 0.61 | 0.57 |
| | Lobotes surinamensis | 0.77 | 0.67 | 0.71 | |
| | Pomadasys incisus | 0.39 | 0.58 | 0.47 | |
| | Siganus luridus | 0.52 | 0.46 | 0.49 | |
| | Stephanolepis diaspros | 0.50 | 0.52 | 0.51 | |
| ResNet18 | Fistularia commersonii | 0.98 | 0.93 | 0.95 | 0.91 |
| | Lobotes surinamensis | 0.93 | 0.90 | 0.92 | |
| | Pomadasys incisus | 0.95 | 0.95 | 0.94 | |
| | Siganus luridus | 0.81 | 0.91 | 0.86 | |
| | Stephanolepis diaspros | 0.87 | 0.87 | 0.87 | |
| YOLO v8 | Fistularia commersonii | 0.86 | 1.00 | 0.93 | 0.84 |
| | Lobotes surinamensis | 0.94 | 0.71 | 0.81 | |
| | Pomadasys incisus | 0.60 | 0.82 | 0.69 | |
| | Siganus luridus | 0.80 | 0.74 | 0.77 | |
| | Stephanolepis diaspros | 0.94 | 0.88 | 0.91 | |
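The Accuracy column counts every test image equally, so the larger classes (41 Fistularia commersonii test images against 19 Pomadasys incisus) weigh more in it. A macro average, the unweighted mean over species, is a common complementary summary. It is not reported in the extracted text, so the Python sketch below computes it from the already rounded per-class F1 values in the table, and the results inherit that rounding; `macro_average` is a hypothetical helper.

```python
# Per-class F1 scores copied from the table, in species order:
# F. commersonii, L. surinamensis, P. incisus, S. luridus, S. diaspros.
f1_scores = {
    "TF.Keras": [0.61, 0.71, 0.47, 0.49, 0.51],
    "ResNet18": [0.95, 0.92, 0.94, 0.86, 0.87],
    "YOLO v8": [0.93, 0.81, 0.69, 0.77, 0.91],
}


def macro_average(values):
    """Unweighted mean over classes: each species counts equally, whatever its test size."""
    return sum(values) / len(values)


for model, scores in f1_scores.items():
    print(f"{model:8s} macro-F1={macro_average(scores):.3f}")
```

With these values the macro-averaged F1 is about 0.91 for ResNet18, 0.82 for YOLO v8 and 0.56 for TF.Keras, the same ranking as the Accuracy column.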

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Mifsud Scicluna, B.; Gauci, A.; Deidun, A. AquaVision: AI-Powered Marine Species Identification. Information 2024, 15, 437. https://doi.org/10.3390/info15080437
