Abstract

Acoustic management is very important for detecting possible events in the context of a smart environment (SE). In previous works, we proposed a reflective middleware for acoustic management (ReM-AM) and its autonomic cycles of data analysis tasks, along with its ontology-driven architecture. In this work, we aim to develop an emotion-recognition system for ReM-AM that uses sound events, rather than speech, as its main focus. The system is based on a sound pattern for emotion recognition and the autonomic cycle of intelligent sound analysis (ISA), defined by three tasks: variable extraction, sound data analysis, and emotion recommendation. We include a case study to test our emotion-recognition system in a simulation of a smart movie theater, with different situations taking place. The implementation and verification of the tasks show a promising performance in the case study, with 80% accuracy in sound recognition, and its general behavior shows that it can contribute to improving the well-being of the people present in the environment.

1. Introduction

As noted in Ref. [1], emotion recognition has experienced exponential growth in recent years due to its extensive application in human–computer interaction. In the broad field of multimodal emotion recognition, described in Ref. [2], the data sources for an emotion recognition system can be very diverse (images, audio, text, physical state), and their contributions must be combined into an overall model that integrates the emotional knowledge acquired from each source to improve the quality of emotion prediction [3].

Most research on emotion recognition in audio is related to speech. In Ref. [4], the authors developed new techniques for an automated speech emotion recognition (SER) system, based on features such as spectral entropy and on classifiers based on deep neural networks. The work of Ref. [5] also addresses SER, using neural networks for recognition, with its main concern being the noise that interferes with speech recognition. Regarding music, Li et al. [6] propose an improved SER approach that uses music theory to study the acoustics of both vocal and auditory expressions. Zhang et al. [7], in turn, perform SER with complementary acoustic representations, using data augmentation based on vocal tract length perturbation, to predict the frequency distribution of emotions.

A related challenge arises in movie dubbing (visual voice cloning, V2C), which involves generating speech that matches the timing and emotion of the video. Current V2C models align phonemes with video frames, but this causes incomplete pronunciation and identity instability. To address this, StyleDubber [8] shifts dubbing learning to the phoneme level. It includes (1) a multimodal style adaptor that learns pronunciation style and incorporates video emotion, (2) an utterance-level style learning module that enhances style expression, and (3) a phoneme-guided lip aligner for better lip-sync alignment. To address variations in emotion, pace, and background in movie dubbing, Ref. [9] proposes a two-stage dubbing method. First, a phoneme encoder is pre-trained on a large text–speech corpus to learn clear pronunciation. Second, a prosody consistency learning module bridges emotional expression with phoneme-level attributes, while a duration consistency module ensures lip-sync alignment. Finally, Ref. [10] proposes a dubbing architecture that uses hierarchical prosody modeling to link visual cues (lip movement, facial expressions, and scenes) to speech prosody: lip movements are aligned with speech duration, while facial expressions influence energy and pitch.

While these works represent a significant contribution to emotion recognition, their focus is mainly on speech; methods for emotion recognition from sound have traditionally processed speech. In this work, our main motivation is to build an emotion recognition system that does not rely on speech and instead uses the random sound events of the context as its main input. Its practical application lies in environments where speech is difficult to process or extract from the prevailing sounds, but where it is still necessary to determine the emotional state of the context. As Ref. [11] explains from an artistic perspective, a sound object is an acoustic action that corresponds to the intention of listening; in acoustics, this definition corresponds to a sound event. Sound event detection (SED) is a well-researched field whose techniques are often transferred from system to system, as noted in Ref. [12], where the authors trained a sound event detection algorithm and examined several of the technical choices involved.

In this paper, we propose an emotion-recognition system for smart environments (ERSSE) that uses acoustic information, specifically, sound events. This paper builds on the ReM-AM system [13], a reflective middleware with an acoustic management layer that contains three autonomic cycles of data analysis tasks: general acoustic management (GAM), intelligent sound analysis (ISA), and artificial sound perception (ASP). Ref. [13] proposes a generic design of a sound management system in an SE; this work builds on its ISA autonomic cycle to develop a system that recognizes emotions without direct speech information. The main contribution of this work is the design and implementation of an emotion-recognition system based on sound events that can be used in any SE capable of capturing ambient sounds. The emotions to recognize are the six basic emotions proposed in Ref. [14] (anger, disgust, fear, happiness, sadness, and surprise), with the addition of the neutral emotion. Specifically, this contribution comprises the description of a sound pattern for emotion recognition, based on a set of descriptors, and the definition of an autonomic cycle that exploits this information for emotion recognition. The case study tests the system in a smart movie theater in which different situations take place, as the system recognizes emotions and provides recommendations according to what is happening in the environment.

The organization of this paper is as follows. Section 2 presents ReM-AM, its ontology-driven architecture (ODA) and its autonomic cycle of intelligent sound analysis (ISA), along with the sound pattern for emotion recognition. Section 3 describes the specification of the emotion-recognition system, which includes the autonomic cycle of ERSSE. Section 4 presents the case study and an analysis of the general behavior of the autonomic cycle. Section 5 shows a comparison with similar works, and finally, the conclusions are presented in Section 6.

2. Theoretical Framework

This section describes ReM-AM, with its ontology and autonomic cycles, along with the sound pattern for emotion recognition used to develop ERSSE.

2.1. ReM-AM

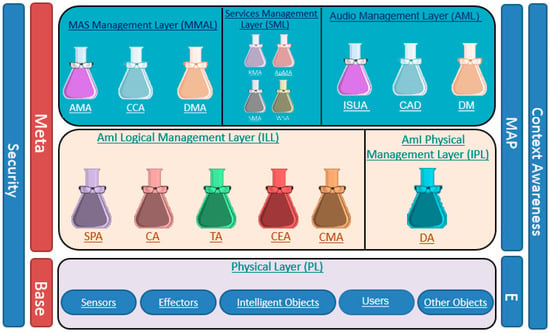

As explained in Ref. [13], ReM-AM (Figure 1) is an adaptation of the middleware AmICL [15] that includes an acoustic management layer, making the system suitable for acoustic recognition, among other tasks. This layer, called the audio management layer (AML), has three components. The first characterizes and categorizes sound events (collecting audio data, CAD), creating their metadata and defining their properties. The second performs sound recognition and smart analysis, using information from the interactions in the environment (interaction system–user–agent, ISUA). The last one supports the decision-making (DM) process to adapt and optimize an SE according to its acoustic needs or deficiencies.

Figure 1.

ReM-AM (from [13]).

ReM-AM exhibits three autonomic cycles: (i) general acoustic management (GAM) to improve an SE on the basis of its acoustic features, with more absorption, noise-canceling, etc.; (ii) intelligent sound analysis (ISA), for identifying acoustic features and determining the required tasks according to what is taking place in the environment, such as sending a warning or an alert signal; and (iii) artificial sound perception (ASP) to achieve a virtual representation of the soundscape in an SE.

ERSSE is based on the ISA autonomic cycle because it provides an acoustic overview of the SE by identifying the acoustic characteristics that determine the sound events present, from which emotions can be recognized.

2.1.1. ODA for ReM-AM

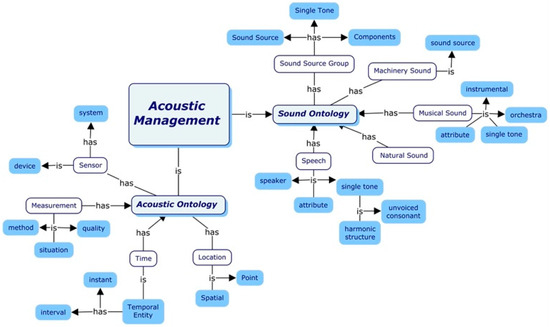

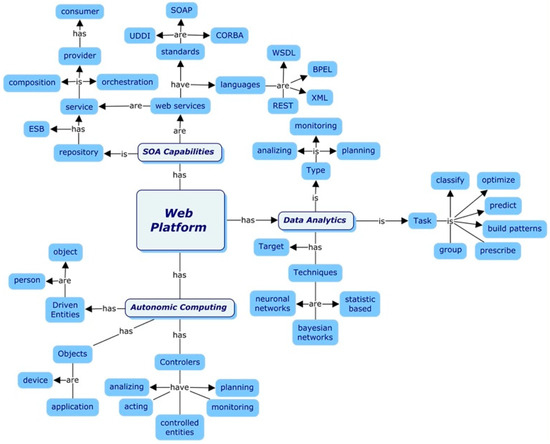

Ref. [16] presents an ontology-driven architecture (ODA) for ReM-AM, defined by two ontology layers. The computation-independent model (CIM) layer (Figure 2) uses a sound ontology and an acoustic ontology as domain information to integrate the terms and aspects required for acoustic management. The platform-independent model (PIM) layer (Figure 3) contains ontologies related to the computational implementation, such as SOA capabilities, a task description of data analytics [15], and autonomic computing [17].

Figure 2.

CIM layer (from Ref. [16]).

Figure 3.

PIM layer (from Ref. [16]).

As mentioned previously, the CIM layer (see Figure 2) contains all the relevant information for sound management in an environment. In particular, it considers two types of information: a sound ontology that describes all types of sounds (natural, machine, speech, etc.), and an acoustic ontology that describes sound events, defined by the time, site, sensor, and metric with which the occurrence of the event is measured. From the sound ontology, the concepts relevant for ERSSE are the sound source group, the machinery sound, the musical sound, and the natural sound. From the acoustic ontology, the time and the location are relevant for estimating a sound event. In the PIM layer, the relevant elements are the tasks and techniques of data analytics and the controllers and driven entities of autonomic computing.
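As a rough illustration of how the CIM concepts above could be represented in code, the sketch below models a sound event by the time, site, sensor, and metric that measured it, together with its source group from the sound ontology. It is a minimal sketch under our own assumptions: the class names, the `value` field, and the `relevant_for_ersse` helper are hypothetical and are not part of the ontology in Ref. [16].

```python
from dataclasses import dataclass
from datetime import datetime
from enum import Enum


class SourceGroup(Enum):
    """Hypothetical subset of the sound ontology's source groups."""
    MACHINERY = "machinery"
    MUSICAL = "musical"
    NATURAL = "natural"
    SPEECH = "speech"


@dataclass(frozen=True)
class SoundEvent:
    """A sound event described by when, where, with which sensor, and with
    which metric its occurrence was measured (acoustic ontology concepts)."""
    time: datetime
    site: str
    sensor_id: str
    metric: str            # hypothetical: name of the measured quantity
    value: float           # hypothetical: measured value of that metric
    source_group: SourceGroup


def relevant_for_ersse(event: SoundEvent) -> bool:
    """ERSSE works from non-speech sound events, so speech is filtered out."""
    return event.source_group is not SourceGroup.SPEECH
```

Freezing the dataclass keeps an event immutable once the CAD module has produced it, which suits events that are only stored and classified afterwards.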

The PIM layer (see Figure 3), in turn, describes the paradigms on which ReM-AM is based; those relevant for ERSSE are autonomic computing (the basis of autonomic cycles) and data analysis (since ISA is defined as a set of data analysis tasks, in our case, on sound data).

2.1.2. Autonomic Cycle of Intelligent Sound Analysis (ISA)

The autonomic cycle of ISA, as presented in Ref. [13], identifies the acoustic features of an SE and the possible tasks that can be executed in its context. The SE will be provided with acoustic sensors, located according to the dimension and distribution of the space, to collect as much acoustic information as possible with high accuracy. ISA exhibits three phases:

- Observation (called the CAD module): the sensors obtain the acoustic information, allowing for the precise perception of the sound field in the SE.

- Analysis (called the ISUA module): the information is classified and stored for the next phase.

- Decision Making (called the DM module): determines the necessities of the current environment and identifies the tasks that can be executed in the given context.

The ISA autonomic cycle is better suited to ERSSE than either GAM or ASP because of its recognition capacity. Its adaptation to ERSSE is explained in the next section.
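The three ISA phases can be read as a simple observe, analyze, decide loop. The sketch below is one minimal way to express that loop in Python; the `ISACycle` class and its callables are hypothetical names, and a real deployment would read from acoustic sensors rather than from an in-memory source.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, List


@dataclass
class ISACycle:
    """Hypothetical skeleton of the ISA autonomic cycle."""
    observe: Callable[[], Any]       # Observation (CAD): read acoustic information
    analyze: Callable[[Any], Any]    # Analysis (ISUA): classify the observation
    decide: Callable[[Any], Any]     # Decision making (DM): choose a task for the context
    history: List[Any] = field(default_factory=list)

    def step(self) -> Any:
        """Run one pass of observation, analysis, and decision making."""
        observation = self.observe()
        result = self.analyze(observation)
        self.history.append(result)  # store the analysis result for later phases
        return self.decide(result)

    def run(self, iterations: int) -> List[Any]:
        """Run the cycle a fixed number of times, returning each decision."""
        return [self.step() for _ in range(iterations)]
```

For example, with `observe` drawing sound levels from a list and a threshold-based `analyze`, `run(3)` returns one decision per iteration while `history` keeps the intermediate classifications.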

2.2. Sound Pattern for Emotion Recognition

The recognition of emotions from audio signals shows growing potential for human–computer interaction, as noted in Ref. [18]. Audio is a more appealing source for emotion recognition research than physiological signals such as electroencephalograms or electrocardiograms, since it can be captured without attaching sensors to the body. This work defines a sound event pattern, based on a set of descriptors, as an initial ERSSE approach to detecting emotions from the influence of sound. Table 1 shows the descriptors that can be derived from sound events to obtain the information needed for emotion recognition.

Table 1.

Descriptors of the sound events pattern.

The descriptors allow the system to identify the features required to recognize emotions from random sound events rather than from speech. For ERSSE, we used the Toronto Emotional Speech Set (TESS) [19], a dataset of audio recordings labeled with emotions, for training. Based on our pattern and this dataset, the system recognizes the values of the descriptors in the audio from the context and associates them with the emotion metadata of the dataset in order to attribute emotions to newly perceived events.
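Training on TESS requires an emotion label for each recording. The sketch below assumes the file-naming convention `<speaker>_<word>_<emotion>.wav` found in commonly redistributed copies of TESS (for example `OAF_back_angry.wav`, with `ps` standing for pleasant surprise); that convention, the `TESS_TO_ERSSE` mapping, and the `label_from_filename` helper are our assumptions and should be checked against the copy of the dataset actually used.

```python
from pathlib import Path

# Hypothetical mapping from TESS file-name suffixes to the seven ERSSE labels.
# Assumes "ps" denotes pleasant surprise, mapped here to "surprise".
TESS_TO_ERSSE = {
    "angry": "anger",
    "disgust": "disgust",
    "fear": "fear",
    "happy": "happiness",
    "sad": "sadness",
    "ps": "surprise",
    "neutral": "neutral",
}


def label_from_filename(path: str) -> str:
    """Return the ERSSE emotion label encoded in a TESS-style file name."""
    stem = Path(path).stem.lower()   # e.g. "oaf_back_angry"
    parts = stem.split("_", 2)       # speaker, word, emotion suffix
    if len(parts) != 3:
        raise ValueError(f"unexpected TESS file name: {path}")
    suffix = parts[2]
    if suffix not in TESS_TO_ERSSE:
        raise ValueError(f"unknown emotion suffix {suffix!r} in {path}")
    return TESS_TO_ERSSE[suffix]
```

Raising an error on unknown names, rather than silently skipping them, makes a mismatch between the assumed convention and the real files visible immediately.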

3. Specification of ERSSE

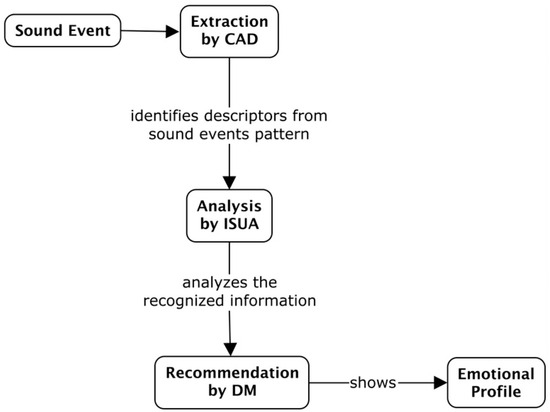

Recognizing emotions in an SE can improve the general experience of its users, which is also essential for architects and engineers when conceiving a space [20]. Additionally, building an emotional profile of an SE from acoustic information is less invasive than other ways of obtaining this information. The autonomic cycle of ISA is well suited to acquiring information from sound events, classifying and analyzing it, and then identifying the pertinent emotion (see Figure 4).

Figure 4.

Diagram of the Autonomic Cycle ISA for ERSSE.

As shown in Figure 4, when a sound event is identified, the CAD component extracts its information and identifies the descriptors explained in Table 1. That information is sent to the ISUA module for classification and analysis, which passes the result to the DM module, where the system determines the prevalent emotion in the SE. The tasks in the autonomic cycle are as follows:

- Extraction: When a sound event is perceived by the system, the CAD component extracts the acoustic descriptors of the hierarchical pattern shown in Table 1, along with the associated emotion metadata from the TESS dataset.

- Analysis: This task performs the emotional classification of the sound events by analyzing the information previously prepared by the CAD module. To do so, it applies a classification model, previously trained on the TESS dataset using the values of the sound descriptors shown in Table 1, to the new sound events. Specifically, sound events are classified as exhibiting one of the following seven emotions [21,22,23]:

  - Anger: Response to interference with the pursuit of a goal.

  - Disgust: Repulsion by something.

  - Fear: Response to the threat of harm.

  - Happiness: Feelings that are enjoyed.

  - Sadness: Response to the loss of something.

  - Surprise: Response to a sudden unexpected event. This is the briefest emotion.

  - Neutral: No reaction.

- Recommendation: The system generates a response based on the combination of sound events detected in the SE. The pertinent action to recommend is given in Table 2, which lists, for each emotion and subject in the SE, the response actions, either shown in the system or deployed in the SE.

Table 2. Actions recommended by the DM module.


In this way, ERSSE can consider the six basic emotions, as well as neutrality, where something can happen, but there is no reaction.

Specifically, ERSSE recognizes emotions in real time in an SE using the acoustic descriptors of Table 1. The sound source group, the machinery sound, the musical sound, and the natural sound of the sound ontology in the CIM layer of ODA help to identify the nature of the sound event and thus to recognize the associated emotion defined in the TESS dataset. With this information, and employing the tasks and techniques of data analytics defined by the PIM layer of ODA, ERSSE builds the classification model for the analysis task from the TESS dataset.
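Since the extraction task computes descriptors from each sound event, a sketch of that step may help. The descriptors of Table 1 are not reproduced in the text, so the sketch uses a few generic frame-level descriptors as stand-ins: RMS energy, zero-crossing rate, and two Fourier-based features (spectral centroid and dominant frequency), plus the raw real and imaginary parts of the spectrum in the spirit of the Fourier-transform techniques of Ref. [24]. The function name and the choice of descriptors are hypothetical; only NumPy is assumed.

```python
import numpy as np


def frame_descriptors(frame, sample_rate):
    """Compute hypothetical stand-in descriptors for one audio frame."""
    x = np.asarray(frame, dtype=float)
    # Energy: root-mean-square amplitude of the frame
    rms = float(np.sqrt(np.mean(x ** 2)))
    # Zero-crossing rate: fraction of adjacent sample pairs whose sign differs
    signs = np.signbit(x)
    zcr = float(np.mean(signs[1:] != signs[:-1]))
    # Fourier transform of the Hann-windowed frame (non-negative frequencies only)
    spectrum = np.fft.rfft(x * np.hanning(len(x)))
    magnitude = np.abs(spectrum)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / sample_rate)
    total = magnitude.sum()
    # Spectral centroid: magnitude-weighted mean frequency
    centroid = float((freqs * magnitude).sum() / total) if total > 0 else 0.0
    return {
        "rms": rms,
        "zcr": zcr,
        "spectral_centroid_hz": centroid,
        "dominant_freq_hz": float(freqs[np.argmax(magnitude)]),
        # Raw real and imaginary parts, kept as separate feature streams
        "fft_real": spectrum.real,
        "fft_imag": spectrum.imag,
    }
```

A loud, high-pitched scream and a low rumble would differ mainly in `rms` and `spectral_centroid_hz`; whether such stand-ins carry enough emotional information is exactly what the descriptors of Table 1 are meant to address.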

Table 3 shows the tasks of the autonomic cycle of ERSSE. The extraction task identifies the descriptors in the SE. This information is used by the analysis task to detect the emotion with a classification model. Then, using the controllers and driven entities from the autonomic computing ontology of the PIM layer of ODA, the recommendation task defines the action to take in the SE according to the emotion identified by the system.

Table 3.

Tasks description.

4. Case Study

In this section, we test the system in a smart movie theater, an environment with high occupancy and a variety of subjects. In this context, different situations may occur depending on the community, the film projected, its genre, etc. We describe the case study, the tasks of the autonomic cycle, and the general behavior of ERSSE.

4.1. Description of Case Study

In a smart movie theater, people watch a comedy film. The system recognizes the general background noises such as couples whispering or people eating popcorn. None of this noise provides any information about the emotions in the SE. Next, we describe several sound events that could occur.

Event 1: When people laugh, the system recognizes the sound events and, using its classification model and the descriptors from the sound pattern, categorizes them as adult, child, or elder laughs (happiness). Since a comedy film is playing in this SE, the system does not raise an alert in response to the laughs; it only adds them to the stable soundscape.

Event 2: Unexpectedly, there is a problem with the movie projector, and the film stops; people start to yell, children are complaining, and some others stand up and go to talk to the person in charge to ask about the situation. The system identifies that the background noise changes: there are louder voices, the children are perhaps even crying, and those who stood up to go out are walking with fast steps (all this information is extracted by the CAD task). The analysis task (ISUA module) uses this information, and its classification model detects anger in the environment. Finally, the recommendation task (DM module) defines the pertinent action according to the different sound events and emotions recognized.

Event 3: At another moment, a child starts to cry because his/her bag of popcorn is empty. The system identifies the crying as a sound event distinct from the background noise and recognizes the emotion of disgust. Then, a recommendation is shown as an alert to the child's parents or the person/authority in charge.

Event 4: When one of the parents goes out to buy more popcorn, he or she falls down the stairs and people start screaming. The system identifies the screams as a sound event distinct from the soundscape, recognizes the emotion of fear, and produces an alarm notifying what is happening.

This example shows how the system could handle different situations, e.g., different film genres or different conditions in the cinema. In each case, ERSSE would be able to identify the emotion and recommend relevant solutions in real time.

4.2. Tasks of the Autonomic Cycle of ERSSE

Each task of the autonomic cycle was implemented and verified as follows:

- Extraction: The CAD component extracts the information available in the SE for the audio descriptors defined in Table 1, using techniques from Ref. [24], in particular the Fourier transform as applied there to noise-robust and dysarthric speech recognition. The information is then separated and pre-processed for the subsequent analysis.

- Analysis: First, this task trains a classification model on the TESS dataset, using the descriptors/features defined in Table 1 and the emotions associated with their values. This dataset includes 2800 audio files, each between 1 and 4 s long, portraying different emotions. Once the model is trained, the ISUA module receives the information from the previous task and classifies it. The classification model was built using the random forest method, similar to the model presented in Ref. [3]. The dataset was divided into 80% for training, 10% for validation, and 10% for testing, following a fivefold cross-validation scheme. Initially, only 33% of the dataset was used, yielding an accuracy of 60% on the test set and 66% on the validation set. The data subset was then expanded to 66% and finally to 85% to see whether the results improved. With this last subset, the system achieved an accuracy of 88% on the test set and 92% on the validation set.

- Recommendation: The recommendation system is based on the information in Table 2. Based on the data coming from the other modules (emotion and subject), it determines the action to take. For this SE (a smart movie theater) and the events detected by the previous tasks, it defines the actions to be carried out. Table 4 lists the recommendations for the events described in the previous section.
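The training protocol of the analysis task (a data subset of increasing size, an 80/10/10 split, and a random forest classifier) can be sketched with scikit-learn as follows. This is an illustrative reconstruction, not the authors' code: the helper names, the stratified splits, `n_estimators=100`, and the fixed random seed are our assumptions, and the sketch does not reproduce the fivefold cross-validation mentioned in the text.

```python
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split


def subset_and_split(X, y, fraction, seed=0):
    """Keep a stratified `fraction` of the data, then split it 80/10/10."""
    if fraction < 1.0:
        X, _, y, _ = train_test_split(
            X, y, train_size=fraction, stratify=y, random_state=seed)
    # 80% training, 20% held out
    X_train, X_rest, y_train, y_rest = train_test_split(
        X, y, test_size=0.2, stratify=y, random_state=seed)
    # Split the held-out 20% in half: 10% validation, 10% test
    X_val, X_test, y_val, y_test = train_test_split(
        X_rest, y_rest, test_size=0.5, stratify=y_rest, random_state=seed)
    return (X_train, y_train), (X_val, y_val), (X_test, y_test)


def evaluate_fraction(X, y, fraction, seed=0):
    """Train a random forest on one data subset and report its accuracies."""
    (X_tr, y_tr), (X_va, y_va), (X_te, y_te) = subset_and_split(X, y, fraction, seed)
    model = RandomForestClassifier(n_estimators=100, random_state=seed)
    model.fit(X_tr, y_tr)
    return {
        "validation_accuracy": accuracy_score(y_va, model.predict(X_va)),
        "test_accuracy": accuracy_score(y_te, model.predict(X_te)),
    }
```

Calling `evaluate_fraction` with `fraction` set to 0.33, 0.66, and 0.85 mirrors the three experiments reported above; the accuracies it returns depend entirely on the descriptors placed in `X`.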

Table 4. Responses to detected events.


These results show the ability of the system to follow the events in the environment by considering only the emotions recognized in it. For example, when the child is upset because he/she has no more popcorn, the system detects the emotion and the subject (Disgust + Children) and indicates that a warning signal should be sent to the parents/person/authority in charge.
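Because Table 2 is keyed by emotion and subject, the recommendation task can be sketched as a rule lookup with a fallback for rules that apply to any subject. The rules below are illustrative and only paraphrase the case-study narrative for this smart movie theater (a warning to the parents for Disgust + Children, an alarm for fear, no alert for laughter during a comedy); the anger rule is a hypothetical placeholder, since the text does not spell out that action, and the names `RULES` and `recommend` are our own.

```python
from typing import Dict, Tuple

# Illustrative rules keyed by (emotion, subject); "any" matches every subject.
RULES: Dict[Tuple[str, str], str] = {
    ("disgust", "children"): "send a warning to the parents or person in charge",
    ("fear", "any"): "raise an alarm notifying what is happening",
    ("happiness", "any"): "no alert; add the events to the stable soundscape",
    ("anger", "any"): "notify the staff in charge",  # hypothetical placeholder
}


def recommend(emotion: str, subject: str,
              rules: Dict[Tuple[str, str], str] = RULES) -> str:
    """Return the action for an (emotion, subject) pair, falling back to the
    emotion's "any" rule and finally to no action."""
    emotion, subject = emotion.lower(), subject.lower()
    if (emotion, subject) in rules:
        return rules[(emotion, subject)]
    return rules.get((emotion, "any"), "no action")
```

Keeping the rules in a table rather than in code matches the paper's observation that the recommendation module must be calibrated for each SE: only the table would change.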

4.3. Analysis of the Behavior of the Autonomic Cycle

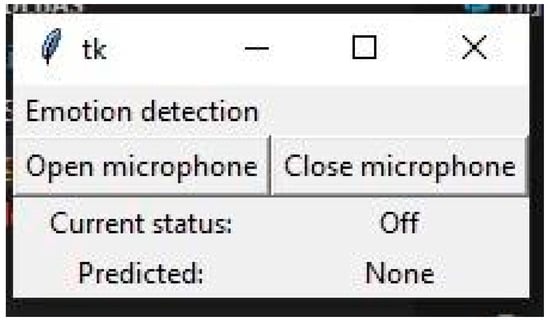

The interface of the system is very simple (see Figure 5): it only displays whether the emotion detection system is available (i.e., whether the audio input is open or closed), along with the predicted emotion. Specifically, the interface allows the user to open the microphone to listen to ambient sound by setting the Current Status button to “on”. The system then captures information about the sound events based on the descriptors shown in Table 1. When an emotion is identified, the status of the Predicted field changes from “None” to “Predicted” (the predicted information, with its descriptors, is stored in a .csv file called “predicted-emotion”).
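Storing each prediction with its descriptors, as the interface does with the “predicted-emotion” file, amounts to appending one row per prediction to a CSV file. The sketch below uses Python's standard `csv` module; the function name, the column layout, and the assumption that every call passes the same descriptor names are ours, not details given in the text.

```python
import csv
from datetime import datetime, timezone
from pathlib import Path
from typing import Dict, Optional


def log_prediction(path: Path, emotion: str, descriptors: Dict[str, float],
                   timestamp: Optional[datetime] = None) -> None:
    """Append one predicted emotion and its descriptors to a CSV file,
    writing the header only when the file does not exist yet.
    Assumes every call uses the same descriptor names."""
    path = Path(path)
    timestamp = timestamp or datetime.now(timezone.utc)
    fieldnames = ["timestamp", "emotion", *sorted(descriptors)]
    write_header = not path.exists()
    with path.open("a", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(handle, fieldnames=fieldnames)
        if write_header:
            writer.writeheader()
        writer.writerow({"timestamp": timestamp.isoformat(),
                         "emotion": emotion, **descriptors})
```

Appending instead of rewriting keeps the log cheap to update while the microphone stays open, and the file can later serve as an emotion profile of the SE.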

Figure 5.

Simple interface.

The system shows promising behavior and potential in the field of emotion recognition from random sound events in an SE. This machine learning model can create an emotion profile of an SE from audio inputs. The extraction process was successful, and the classification method exhibited acceptable performance. However, the 88% test accuracy obtained with the 85% data subset should be improved in future experiments. The recommendation module requires calibration for different SEs, which would make it more widely applicable and produce richer results.

5. Comparison with Similar Works

To compare ERSSE with similar systems, common criteria must be established. Table 5 uses three: the first is emotion recognition from sound events rather than from speech, the second is the use of smart agents, and the third is implementation in specific SEs.

Table 5.

Comparison with similar works.

An X indicates that the reference meets the criterion.

Ref. [4] introduces a very interesting innovation in emotion recognition from speech, which means that the main focus of that research is speech. The researchers use different features for emotion classification, but the implementation is not intended for SEs. In Ref. [5], the focus is also on sound events, in addition to speech, mainly because background noises can interfere with emotion recognition from speech. Its implementation is not related to an SE either.

Ref. [6] presents an innovative application of music theory to emotion recognition. Its computational acoustic representation, inspired by music theory, is used with different classifiers to predict emotions from speech. In Ref. [7], the authors improve speech emotion recognition using complementary acoustic representations and data augmentation. However, their approach is evaluated in a speaker-independent setting and neither uses smart technology nor operates autonomously.

The potential of ERSSE lies in the combination of these criteria: ERSSE places its main focus on random sound events, so speech does not need to be present in an SE for ERSSE to be effective. It employs a pattern of sound events with acoustic and sociological descriptors, including the features necessary to identify and classify the obtained data, to achieve high emotion recognition accuracy. Moreover, ERSSE is conceived directly for use in SEs to improve the experience of their users.

6. Conclusions

The relevance of this work lies in its exclusive use of sound events. The autonomic cycle of ERSSE uses the set of descriptors necessary to achieve its tasks, and the system can be implemented in different SEs. Each task performs a specific activity necessary to recognize emotions and to adapt the SE based on them: the first task collects acoustic information, ignoring speech; the second task uses this information to classify the sound event emotionally; and the third task recommends a precise action based on the identified emotion. The results of the classification model, with an accuracy of 88% on the test set, are promising. However, the main limitation is the lack of data available to train the system.

The case study presented a specific context in which the system is capable of reacting to multiple sound events occurring in the environment, a context in which systems that only recognize speech cannot effectively identify emotions. The system worked correctly, recognizing adults, children, and elders, as well as separating the new sound events from the soundscape. It was also able to focus on the required action when it recognized disgust and fear.

For future research, the goal is to combine our previous work on sound event recognition (without emotions) with ERSSE to achieve a more general acoustic management system. It will also be necessary to create a customized dataset with self-recorded emotions to improve the training of the system. Moreover, it could be interesting to go beyond the basic emotions to include other reactions such as jealousy, grief, and hope.

Author Contributions

Conceptualization, J.A.; Methodology, J.A. and R.G.; Software, G.S.; Validation, G.S. and J.A.; Formal analysis, G.S. and J.A.; Data curation, G.S. and R.G.; Writing – original draft, G.S.; Writing – review & editing, J.A. and R.G.; Supervision, J.A.; Funding acquisition, R.G. All authors have read and agreed to the published version of the manuscript.

Funding

The APC was funded by Universidad del Sinú.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Acknowledgments

We would like to thank Jose Maria Mira Barrientos, Samuel Cadavid Pérez, and Juan José Parra Díaz from the University of EAFIT, Colombia, for providing insight and expertise that assisted with this research.

Conflicts of Interest

The authors declare no conflicts of interest. The content of this publication does not reflect the official opinion of the European Union. The responsibility for the information and views expressed herein lies entirely with the author(s).

References

- Zhang, S.; Yang, Y.; Chen, C.; Zhang, X.; Leng, Q.; Zhao, X. Deep learning-based multimodal emotion recognition from audio, visual, and text modalities: A systematic review of recent advancements and future prospects. Expert Syst. Appl. 2023, 237, 121692.

- Ahmed, N.; Al Aghbari, Z.; Girija, S. A systematic survey on multimodal emotion recognition using learning algorithms. Intell. Syst. Appl. 2023, 17, 200171.

- Das, S.; Imtiaz, S.; Neom, N.H.; Siddique, N.; Wang, H. A hybrid approach for Bangla sign language recognition using deep transfer learning model with random forest classifier. Expert Syst. Appl. 2023, 213, 118914.

- Mishra, S.P.; Warule, P.; Deb, S. Variational mode decomposition based acoustic and entropy features for speech emotion recognition. Appl. Acoust. 2023, 212, 109578.

- Bhangale, K.; Kothandaraman, M. Speech Emotion Recognition Based on Multiple Acoustic Features and Deep Convolutional Neural Network. Electronics 2023, 12, 839.

- Li, X.; Shi, X.; Hu, D.; Li, Y.; Zhang, Q.; Wang, Z.; Unoki, M.; Akagi, M. Music Theory-Inspired Acoustic Representation for Speech Emotion Recognition. IEEE/ACM Trans. Audio Speech Lang. Process. 2023, 31, 2534–2547.

- Zhang, X.; Zhang, F.; Cui, X.; Zhang, W. Speech Emotion Recognition with Complementary Acoustic Representations. In Proceedings of the 2022 IEEE Spoken Language Technology Workshop (SLT), Doha, Qatar, 9–12 January 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 846–852.

- Cong, G.; Qi, Y.; Li, L.; Beheshti, A.; Zhang, Z.; Hengel, A.; Yang, M.; Yan, C.; Huang, Q. StyleDubber: Towards Multi-Scale Style Learning for Movie Dubbing. arXiv 2024, arXiv:2402.12636.

- Zhang, Z.; Li, L.; Cong, G.; Yin, H.; Gao, Y.; Yan, C.; van den Hengel, A.; Qi, Y. From Speaker to Dubber: Movie Dubbing with Prosody and Duration Consistency Learning. ACM Multimedia 2024. Available online: https://openreview.net/pdf?id=QHRNR64J1m (accessed on 5 September 2024).

- Cong, G.; Li, L.; Qi, Y.; Zha, Z.; Wu, Q.; Wang, W.; Jiang, B.; Yang, M.; Huang, Q. Learning to Dub Movies via Hierarchical Prosody Models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 14687–14697.

- Godøy, R.I. Perceiving Sound Objects in the Musique Concrète. Front. Psychol. 2021, 12, 672949.

- Turpault, N.; Serizel, R. Training sound event detection on a heterogeneous dataset. arXiv 2020, arXiv:2007.03931.

- Santiago, G.; Aguilar, J. Integration of ReM-AM in smart environments. WSEAS Trans. Comput. 2019, 18, 97–100.

- Liu, C.; Wang, Y.; Sun, X.; Wang, Y.; Fang, F. Decoding six basic emotions from brain functional connectivity patterns. Sci. China Life Sci. 2023, 66, 835–847.

- Aguilar, J.; Jerez, M.; Exposito, E.; Villemur, T. CARMiCLOC: Context Awareness Middleware in Cloud Computing. In Proceedings of the Latin American Computing Conference (CLEI), Arequipa, Peru, 19–23 October 2015.

- Santiago, G.; Aguilar, J. Ontological model for the acoustic management in intelligent environments. Appl. Comput. Inform. 2022.

- Sánchez, M.; Exposito, E.; Aguilar, J. Implementing self-* autonomic properties in self-coordinated manufacturing processes for the Industry 4.0 context. Comput. Ind. 2020, 121, 103247.

- Chalapathi, M.M.V.; Kumar, M.R.; Sharma, N.; Shitharth, S. Ensemble Learning by High-Dimensional Acoustic Features for Emotion Recognition from Speech Audio Signal. Secur. Commun. Netw. 2022, 2022, 8777026.

- Pichora-Fuller, M.K.; Dupuis, K. Toronto Emotional Speech Set (TESS); Version 1.0; Borealis: Toronto, Canada, 2020.

- Zou, Z.; Semiha, E. Towards emotionally intelligent buildings: A Convolutional neural network based approach to classify human emotional experience in virtual built environments. Adv. Eng. Inform. 2023, 55, 101868.

- Cordero, J.; Aguilar, J.; Aguilar, K.; Chávez, D.; Puerto, E. Recognition of the Driving Style in Vehicle Drivers. Sensors 2020, 20, 2597.

- Salazar, C.; Aguilar, J.; Monsalve-Pulido, J.; Montoya, E. Affective recommender systems in the educational field. A systematic literature review. Comput. Sci. Rev. 2021, 40, 100377.

- Ekman, P.; Cordaro, D. What is Meant by Calling Emotions Basic. Emot. Rev. 2011, 3, 364–370.

- Loweimi, E.; Yue, Z.; Bell, P.; Renals, S.; Cvetkovic, Z. Multi-Stream Acoustic Modelling Using Raw Real and Imaginary Parts of the Fourier Transform. IEEE/ACM Trans. Audio Speech Lang. Process. 2023, 31, 876–890.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).