Abstract

Automated Machine Learning (AutoML) is a subdomain of machine learning that seeks to expand the usability of traditional machine learning methods to non-expert users by automating various tasks which normally require manual configuration. Prior benchmarking studies on AutoML systems, whose aim is to compare and evaluate their capabilities, have mostly focused on tabular or structured data. In this study, we evaluate AutoML systems on the task of object detection by curating three commonly used object detection datasets (Open Images V7, Microsoft COCO 2017, and Pascal VOC2012) in order to benchmark three different AutoML frameworks: Google’s Vertex AI, NVIDIA’s TAO, and AutoGluon. We reduced the datasets to only include images with a single object instance in order to understand the effect of class imbalance, as well as dataset and object size. We evaluated performance using average precision (AP) and mean average precision (mAP). Solely in terms of accuracy, our results indicate AutoGluon as the best-performing framework, with a mAP of 0.8901, 0.8972, and 0.8644 for the Pascal VOC2012, COCO 2017, and Open Images V7 datasets, respectively. NVIDIA TAO achieved a mAP of 0.8254, 0.8165, and 0.7754 for those same datasets, while Google’s Vertex AI scored 0.855, 0.793, and 0.761. We found the dataset size had an inverse relationship to mAP across all the frameworks, and there was no clear relationship between class size or imbalance and accuracy. Furthermore, we discuss each framework’s relative benefits and drawbacks from the standpoint of ease of use. This study also points out the issues found as we examined the labels of a subset of each dataset. Labeling errors in the datasets appear to have a substantial negative effect on accuracy that is not resolved by larger datasets. Overall, this study provides a platform for future development and research in this nascent field of machine learning.

1. Introduction

Machine learning (ML) is a branch of artificial intelligence concerned with the development and use of computational models that possess the capacity to improve their performance at some specified task through experience. A more formal definition was provided by computer scientist Tom Mitchell [1]:

“A computer program is said to learn from experience E with respect to some class of tasks T and performance measure P, if its performance at tasks in T, as measured by P, improves with experience E.”

Machine learning has been applied to a broad class of problems like sentiment analysis [2], recommender systems [3], medical diagnoses [4], fraud detection [5], autonomous vehicle control [6], and agricultural yield predictions [7]. The ML task with which we are concerned and which forms the basis of this study is referred to as “Object Detection.” Object detection is a sub-domain of computer vision and a good problem definition is provided by Zhao et al. [8]: “Object detection is to determine where objects are located in a given image (object localization) and which category each object belongs to (object classification).” Object detection falls under the category of supervised learning, as it involves the use of training data that are supplied with annotated bounding boxes. These bounding boxes indicate the location of the object in an image and are labeled with the corresponding object class.

Lin et al. [9] provide a demonstration of hierarchies for tasks in computer vision, illustrating the distinctions between object detection (i.e., object localization) and some higher and lower order tasks, as shown in Figure 1. With object detection, a model should provide the class of the object, a bounding box which circumscribes all pixels belonging to that object, and a confidence score showing how confident the detector is about that particular prediction [10]. Object detection is a higher order task than image classification, where classes of objects are identified, but no localization information is provided. Semantic segmentation is a higher order task than object detection, since it must make determinations about the object class and location at the more precise pixel level. Instance segmentation builds upon this further by distinguishing individual instances of each class and localizing them at the pixel level. With object detection, it is possible that bounding boxes of different classes or objects will overlap.

Figure 1.

Computer vision tasks from left to right: image classification, object detection, semantic segmentation, and instance segmentation.

Object detection has a history dating back to the 1990s, but it was in 2001 with the “Viola-Jones” detector that this subdiscipline of computer vision saw considerable progress [11]. Early object detection methods relied on handcrafted features, and the performance of such algorithms plateaued in the early 2010s. In 2014, Girshick et al. [12] proposed applying high-capacity Convolutional Neural Networks (CNNs) to bottom-up region proposals for localization and segmentation, a method they called R-CNN. This method increased the mean average precision on a common benchmark dataset by over 30%. Since that time, deep learning methods have come to dominate the field of object detection and most modern computer vision algorithms are now rooted in the use of CNNs [13].

In the traditional machine learning pipeline, there are a variety of tasks that require manual configuration on the user’s part. This includes data collection and cleanup (like missing data imputation), feature selection and transformation, model discovery, explanation, training, the allocation of compute resources, hyperparameter optimization, the configuration of architectures, as well as model deployment and monitoring [14]. Traditional machine learning techniques often require technical expertise, statistical knowledge, and subject-matter expertise in order to generate a model that is generalizable and predictive. As has been pointed out [15,16], this makes traditional machine learning techniques inaccessible to many individuals. Automated Machine Learning (AutoML) has emerged in the last decade as a means of overcoming this need for highly configured machine learning techniques requiring manual optimization [14,17].

This study seeks to evaluate the efficacy of AutoML tools for object detection tasks. To the best of our knowledge, no previous research has compared the performance of various AutoML frameworks in the realm of object detection. To address this gap in the literature, our study involves the implementation and comparison of three AutoML tools: Google’s Vertex AI, NVIDIA’s TAO, and AutoGluon. These tools were evaluated using three different datasets: Pascal VOC2012, COCO 2017, and Open Images V7.

2. Background and Related Works

2.1. Automated Machine Learning (AutoML)

The ultimate goal of AutoML would be to automate all the aspects of the machine learning pipeline from end to end, although no such framework currently exists. Recent efforts have focused on hyperparameter optimization and algorithm selection. AutoML refers broadly to the large-scale automation of various machine learning processes [18], and may include Hyperparameter Optimization (HPO), meta-learning, and Combined Algorithm Selection and Hyperparameter Optimization (CASH) [14]. Machine learning frameworks that incorporate some of these aspects are generally referred to as “AutoML,” even though these are, in reality, only a sub-domain of automated machine learning.

Hyperparameter optimization is the problem of optimizing a loss function over a graph-structured configuration space [19]. A hyperparameter is a variable in an ML model that controls the learning process. A simple example would be the “learning rate” variable which determines the iteration step size in an optimization problem—like gradient descent—and provides a balance between the convergence rate and overshooting the loss function’s minima. Another example is the batch size hyperparameter that determines how many training examples an ML algorithm evaluates before updating the node weights. A CNN may include anywhere from ten to fifty such hyperparameters, based upon the particularities of how a model is parametrized. Some common methods employed for HPO include a random search, grid search, gradient-based optimization methods, and Bayesian optimization. While many AutoML tools may perform hyperparameter tuning, each framework may implement different optimization algorithms with a different number of hyperparameters to tune.
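As an illustration of the simplest HPO strategy mentioned above, the following is a minimal sketch of a random search over a learning rate and a batch size. The function `toy_validation_loss` is a hypothetical stand-in for training a model and measuring its validation loss; it is not taken from any of the frameworks in this study, and the search ranges are assumptions chosen only for the example.

```python
import math
import random


def toy_validation_loss(learning_rate: float, batch_size: int) -> float:
    """Hypothetical stand-in for 'train a model, return validation loss'.

    The minimum is placed near learning_rate=1e-3 and batch_size=32 purely
    for illustration.
    """
    return (math.log10(learning_rate) + 3.0) ** 2 + (math.log2(batch_size) - 5.0) ** 2


def random_search(n_trials: int, seed: int = 0):
    """Sample configurations at random and keep the one with the lowest loss."""
    rng = random.Random(seed)
    best_config, best_loss = None, float("inf")
    for _ in range(n_trials):
        config = {
            # Learning rates are sampled log-uniformly between 1e-5 and 1e-1.
            "learning_rate": 10 ** rng.uniform(-5, -1),
            "batch_size": rng.choice([8, 16, 32, 64]),
        }
        loss = toy_validation_loss(**config)
        if loss < best_loss:
            best_config, best_loss = config, loss
    return best_config, best_loss


best_config, best_loss = random_search(n_trials=50)
print(best_config, best_loss)
```

Grid search would replace the random sampling with an exhaustive loop over fixed values, while Bayesian optimization would use the losses observed so far to choose the next configuration.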

Meta-learning seeks to alleviate one of the limitations of traditional deep learning-based approaches, which rely on large-scale datasets, by creating models that gain experience over multiple training episodes of related tasks [20]. Such methods use information about the training process in one instance to optimize training in another. For this reason, it is sometimes referred to as “learning to learn.” Algorithm selection can also be an automated feature of a machine learning framework. An AutoML framework can encode a variety of algorithms (Nearest neighbor, SVM, random forest, etc.) as tunable hyperparameters, allowing for the framework itself to select the best performing algorithm.

Instances where the framework performs both the task of hyperparameter optimization as well as the algorithm selection are referred to as Combined Algorithm Selection and Hyperparameter Optimization (CASH) [20]. Karmaker et al. [21] proposed a six-tiered classification system for AutoML tools based on their level of autonomy. The lowest level (1) corresponds to implementing a machine learning algorithm from scratch in a programming language, while the highest level (6) automates everything from task formulation (TF) to prediction engineering (PE), feature engineering (FE), machine learning (ML), alternative model exploration, and result summarizing. No AutoML framework currently operates at level 6. This classification system is summarized in Table 1.

Table 1.

AutoML classification. Adapted from Karmaker et al. [21].

2.2. Review of Benchmarking AutoML Frameworks and Object Detection

Ferreira et al. broadly classify three major categories of publications which compare AutoML tools: (1) publications in which a novel AutoML tool is compared with existing tools, (2) publications in which no novel AutoML tool is proposed but comparisons are made between distinct tools, and (3) publications which compare the characteristics of each tool without a focus on predictive performance. There is a growing amount of literature on benchmarking AutoML tools; however, its focus is almost entirely on tabular or text data. Nagarajah et al. review a variety of automated and semi-automated machine learning algorithms used for tabular data, providing a qualitative overview of their respective features [18]. Ferreira et al. examined 8 open-source tools on 12 different datasets spanning regression, binary, and multi-class classification tasks [22]. Gijsbers et al. developed a benchmarking framework that utilized 4 AutoML tools and 39 classification datasets [23]. This was later extended to 9 frameworks and 104 datasets, including both classification and regression datasets [24]. Zoller et al. benchmarked 14 different machine learning frameworks (including both AutoML and HPO) on 137 classification datasets [25]. Shi et al. compared AutoGluon-tabular and H2O variants for 18 multimodal datasets [26]. LeDell et al. focused mainly on the H2O AutoML framework, but included a comparison to three other frameworks from the Open ML benchmark, extending the datasets to 44 [27]. Borji et al. produced a benchmarking study on the object detection of 41 different models and 7 datasets, but did not include AutoML frameworks [28]. Lenkala et al. compared three different AutoML frameworks on three different time-series datasets for epileptic seizure detection [29]. Westergaard et al. performed a similar study with the same AutoML frameworks and three new time-series datasets [30]. Paladino et al. similarly examined three AutoML tools on three datasets, but in this case, they were tabular datasets [31]. Since our task of interest is object detection, these studies have only limited relevance to the scope of our study. AutoML frameworks for structured data are much more developed and the options are more extensive.

Gijsbers et al. [24] compare 9 well-known AutoML frameworks against 71 classification tasks and 33 regression tasks and develop an extensible benchmark for AutoML frameworks that allows more valid comparisons between frameworks. They point out some common issues with benchmarking AutoML frameworks, such as selection bias in the datasets and failing to use comparable resource budgets and compute resources.

Ferreira et al. [22] examine 8 open-source AutoML tools with 12 popular OpenML datasets on classification and regression tasks. Furthermore, they make three comparison scenarios between General Machine Learning (GML), which utilizes traditional ML algorithms; Deep Learning (DL), focusing on a Neural Architecture Search; and XGBoost (XGB), considering a single case of XGB algorithm hyperparameter tuning. The best tools are selected first on the basis of the best average predictive score and then the lowest computational effort.

Papers that benchmark machine learning algorithms for object detection tend to do so for specific use cases like remote sensing images [32] or salient object detection [28]. These studies also do not evaluate automated machine learning. In one study [33], similar AutoML frameworks were compared, but for image classification rather than object detection. That study also did not evaluate the frameworks’ dependence on class imbalance and dataset size.

2.3. Metrics for Object Detection

In order to determine the validity of any object detection model, some evaluation criteria must be utilized. Over the past decade, several primary metrics have been employed for object detection tasks [10]. However, this presents challenges in benchmarking studies as these evaluation metrics have yet to be fully standardized [34]. One important metric is the Mean Average Precision (mAP), which is the mean value of the Average Precision (AP) over all object categories:

$$\mathrm{mAP} = \frac{1}{n}\sum_{k=1}^{n} \mathrm{AP}_k$$

In this equation, n represents the number of classes and AP_k is the average precision of class k.

Average Precision (AP) is itself based on two important metrics in Machine Learning, namely, Precision and Recall, which are contingent on True Positive (TP), False Positive (FP), and False Negative (FN) counts:

$$\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad \mathrm{Recall} = \frac{TP}{TP + FN}$$

From the definition, we can see that a model can have a high recall and low precision by predicting many bounding boxes, even if many of those predictions are wrong. A model could, on the other hand, have low recall and high precision by only predicting bounding boxes with high confidence scores, since precision does not take into account false negatives. For this reason, precision and recall are often combined into another metric, the F1 score, which is the harmonic mean of precision and recall:

$$F_1 = 2 \cdot \frac{\mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$
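The trade-off described here can be made concrete with a short sketch that computes precision, recall, and F1 from raw counts. The two sets of counts below are invented for illustration only and do not come from our experiments.

```python
def precision_recall_f1(tp: int, fp: int, fn: int):
    """Return (precision, recall, F1) from true/false positive and false negative counts."""
    precision = tp / (tp + fp) if (tp + fp) > 0 else 0.0
    recall = tp / (tp + fn) if (tp + fn) > 0 else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# A detector that predicts many boxes: high recall, low precision.
print(precision_recall_f1(tp=90, fp=210, fn=10))

# A detector that only predicts when very confident: high precision, low recall.
print(precision_recall_f1(tp=30, fp=0, fn=70))
```

In both hypothetical cases the F1 score stays below 0.5, because the harmonic mean penalizes whichever of the two quantities is low.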

By plotting precision against recall, we generate a “precision-recall curve”, as in Figure 2. The curve is generated by calculating the precision and recall across a number of confidence thresholds. The area underneath this curve represents the average precision. This is also referred to as the Area Under Curve (AUC) and abbreviated to PRAUC when it refers to the area under the Precision–Recall curve. One such curve can be generated for each object class, and the resultant values can be then averaged to generate the mAP. The mAP is a measurement of classification accuracy, but since object detection is also contingent on localization, we must use another parameter to determine what constitutes a true positive. For that, we utilize Intersection Over Union (IOU), which measures the overlap between the predicted bounding box and the ground-truth bounding box.
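A minimal sketch of the IOU computation follows, assuming boxes are given as `(xmin, ymin, xmax, ymax)` tuples in pixel coordinates. That box convention is an assumption for this example; individual frameworks may store boxes differently.

```python
def iou(box_a, box_b) -> float:
    """Intersection over union of two (xmin, ymin, xmax, ymax) boxes."""
    # Corners of the overlapping rectangle, if there is one.
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])

    inter_w = max(0.0, ix_max - ix_min)
    inter_h = max(0.0, iy_max - iy_min)
    intersection = inter_w * inter_h

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


ground_truth = (0, 0, 10, 10)
prediction = (5, 0, 15, 10)
print(iou(ground_truth, prediction))  # overlap of 50 over a union of 150
```

A prediction is then counted as a true positive only if its class matches the ground truth and its IOU with the ground-truth box meets the chosen threshold.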

Figure 2.

Precision–Recall Curve.

This can be a point of contention for benchmarking models since the threshold value of IOU, with which we consider what is “successfully detected” and “missed”, can be arbitrarily set, although 0.5 has become a commonly used threshold value [34]. The threshold value is usually reported in benchmark studies along with the Average Precision as “AP@50” to reflect the metric and IOU threshold at which it is determined. In instances where multiple IOU thresholds are used for an average AP, the metric is provided with an interval and step-size [10,34] such as AP@50:5:95. In this instance, AP is being determined with an IOU interval from 0.5 to 0.95 with a step-size of 0.05 and averaged.
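The interval notation can be unpacked with a short sketch that generates the ten IOU thresholds and averages one AP value per threshold. The per-threshold AP values used below are placeholders invented for the example; in practice each would come from re-evaluating the detections at that threshold.

```python
def iou_thresholds(start: float = 0.50, stop: float = 0.95, step: float = 0.05):
    """Return the IOU thresholds from start to stop (inclusive) in increments of step."""
    count = int(round((stop - start) / step)) + 1
    return [round(start + i * step, 2) for i in range(count)]


def average_over_thresholds(ap_by_threshold: dict) -> float:
    """Average the AP values obtained at each IOU threshold."""
    return sum(ap_by_threshold.values()) / len(ap_by_threshold)


thresholds = iou_thresholds()
print(thresholds)

# Placeholder AP values that fall as the IOU threshold becomes stricter.
hypothetical_ap = {t: 1.0 - t for t in thresholds}
print(average_over_thresholds(hypothetical_ap))
```

AP@50 corresponds to keeping only the first of these thresholds, which is why it is typically higher than the averaged metric for the same model.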

3. Research Methodology

Traditional deep learning methods often require technical expertise and may involve a lengthy trial-and-error process in order to generate a good model [35] which might need significant computational resources depending on the size of the dataset and the particularities of the ML architecture that is being leveraged. AutoML seeks to alleviate this developmental burden by automating the ML pipeline [25], allowing novice users to create useful machine learning models [23]. Automation can be applied to any number of processes within the ML pipeline, including data preparation, feature engineering, model generation, and model evaluation [8,17]. The code used in this study has been made publicly available on GitHub [36].

The goal of this study was to test AutoML tools by benchmarking their performance on various object detection datasets. We have evaluated these AutoML tools “as is,” and are not comparing differences in the data pre-processing state, or feature engineering capabilities, etc. Since there can be substantial differences in terms of configurability for each AutoML framework, we try to make as few changes to the default or standard configurations as possible.

In our study, we presumed that the creators of each tool had meticulously selected the most effective hyperparameters to maximize their tool’s functionality. We chose not to alter these settings, believing that the preset configurations were already fine-tuned for optimal results. Our evaluation was conducted using each tool in its unmodified, default form, reflecting the typical usage scenario for users who may not have extensive knowledge in machine learning. This method allowed us to gain insightful information about the performance of each tool under standard conditions, which is particularly useful for individuals not well-versed in the complexities of algorithms or specific adjustment possibilities. Given that AutoML tools offer a wide array of customizable features, trying to uniformly align them using the same algorithms would be overly complex and often impractical.

3.1. Selected AutoML Frameworks

The number of automated machine learning frameworks available which are capable of performing object detection tasks is somewhat limited currently. For this project, there were a few considerations for selection. First, we wanted to use frameworks for which there was extensive documentation and support. The two proprietary frameworks (Google’s Vertex AI Vision and NVIDIA TAO) were clear choices for this project due to the considerable institutional backing that they receive. Secondly, we wanted to compare these proprietary options to an open-source option, in order to provide a kind of cost–benefit analysis for each framework. For this reason, we selected AutoGluon. Finally, we wanted frameworks that provided a fairly high degree of automation. All the frameworks selected perform at least hyper-parameter optimization and an architecture search, which are particularly challenging aspects of the machine learning pipeline.

AutoML options like VEGA [37] and DetNAS [38] were considered, but excluded from the study since, in the case of the former, there were security concerns from the development group, and in the latter, the scope of its AutoML functionalities was too limited. Roboflow [39] was also considered, but since this is also a proprietary framework there were more enticing options from larger companies.

3.1.1. TAO (NVIDIA)

TAO (Train, Adapt, Optimize) by NVIDIA (version 5.2.0) is a free-to-use proprietary framework built on TensorFlow and PyTorch [40]. TAO offers over 100 model architectures across various computer vision tasks and utilizes transfer learning for improved model performance. To improve efficiency on edge devices and reduce the memory footprint [41], TAO also has the ability to prune a trained model before it is deployed. For object detection, TAO utilizes the KITTI dataset format where each object instance is one line of a TXT file [42]. Each text file is named after the corresponding image.
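To illustrate the label layout, the sketch below parses one KITTI-style line. It assumes the usual KITTI layout of 15 whitespace-separated fields, with the class name first and the 2D bounding box (left, top, right, bottom) in fields 5 to 8; the remaining fields describe truncation, occlusion, and 3D properties and are ignored here. The sample line and the `KittiBox` type are invented for the example.

```python
from typing import NamedTuple


class KittiBox(NamedTuple):
    """Class name and 2D bounding box read from one KITTI label line."""
    label: str
    xmin: float
    ymin: float
    xmax: float
    ymax: float


def parse_kitti_line(line: str) -> KittiBox:
    """Parse one object instance from a KITTI-format label file."""
    fields = line.split()
    if len(fields) < 15:
        raise ValueError(f"expected 15 fields, got {len(fields)}")
    xmin, ymin, xmax, ymax = (float(value) for value in fields[4:8])
    return KittiBox(fields[0], xmin, ymin, xmax, ymax)


# Invented example line: one 'dog' instance with a 2D box and zeroed 3D fields.
sample = "dog 0.00 0 0.00 48.0 240.0 195.0 371.0 0.00 0.00 0.00 0.00 0.00 0.00 0.00"
print(parse_kitti_line(sample))
```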

3.1.2. AutoGluon

AutoGluon (version 0.6.2) [43] is a framework designed to automate machine learning on tabular, text, and image data, as well as perform time-series forecasting and multimodal prediction. AutoGluon has over 70 pre-trained models in its model zoo, all utilizing some type of neural network. AutoGluon allows for the manual configuration of hyperparameters like the learning rate, batch size, and model architecture, but can perform these tasks automatically as well with a user-specified strategy, like a random search, grid search, Bayesian optimization, or reinforcement learning. AutoGluon evaluates models with mAP, but allows the addition of custom metrics as well as those metrics which are already a part of the scikit-learn library. AutoGluon is a popular machine learning framework whose automated features are well described and benchmarked in non-object detection tasks. AutoGluon can utilize the COCO or VOC dataset format [9,44], and in our case, we opted to use the VOC format. As with the KITTI format, each XML label file is named after the corresponding image and includes the class name and bounding box of each object instance.
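For comparison with the KITTI layout, the following sketch reads a VOC-style XML annotation with the Python standard library. The element names (`size`, `object`, `name`, `bndbox`, `xmin`, and so on) follow the Pascal VOC annotation layout; the sample annotation itself is invented, and the returned dictionary structure is only one possible choice.

```python
import xml.etree.ElementTree as ET


def parse_voc_annotation(xml_text: str) -> dict:
    """Extract image size and labeled boxes from a VOC-style XML annotation."""
    root = ET.fromstring(xml_text.strip())
    size = root.find("size")
    objects = []
    for obj in root.iter("object"):
        box = obj.find("bndbox")
        # Coordinates are read as floats first in case they are stored as "48.0".
        coords = tuple(
            int(float(box.findtext(tag))) for tag in ("xmin", "ymin", "xmax", "ymax")
        )
        objects.append({"name": obj.findtext("name"), "bbox": coords})
    return {
        "filename": root.findtext("filename"),
        "width": int(size.findtext("width")),
        "height": int(size.findtext("height")),
        "objects": objects,
    }


# Invented single-instance annotation for illustration.
sample_xml = """
<annotation>
  <filename>example_0001.jpg</filename>
  <size><width>500</width><height>375</height><depth>3</depth></size>
  <object>
    <name>dog</name>
    <bndbox><xmin>48</xmin><ymin>240</ymin><xmax>195</xmax><ymax>371</ymax></bndbox>
  </object>
</annotation>
"""
print(parse_voc_annotation(sample_xml))
```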

3.1.3. VertexAI (Google)

Google’s Vertex AI [45] is an AutoML platform designed for image, video, text, and tabular data, allowing users to train and deploy models through a unified API, client library, and web interface. Vertex AI utilizes AuPRC (Area under Precision–Recall Curve) as its main object detection evaluation metric, as well as log-loss, and a confusion matrix. However, this can be easily converted to mAP based on the number of classes it predicts. Vertex AI utilizes Bayesian optimization for hyperparameter tuning, but allows for user configuration of hyperparameters as well. Vertex AI has received a significant amount of attention in the ML community. Although Vertex AI includes extensive documentation on how to use its framework, much of the underlying implementation is hidden from the user. Nonetheless, with its extensive automated capabilities, this framework falls squarely within the scope of this project. For the dataset labels, VertexAI provides an easy-to-use but non-standard format.

3.2. Datasets

We selected datasets that are commonly used in other benchmarks and analyses. MS COCO is referenced and utilized extensively in the literature, and Pascal VOC is seen commonly in computer vision challenges. This also provides the benefit of having datasets which are well-organized, well-annotated, and overall high-quality. We also wanted datasets which could be manipulated to explore some of the challenges in object detection related to dataset size and class imbalance. For this reason, Open Images was a very good candidate for the study given its size and class-breadth. Furthermore, we curated our dataset to those images containing only single class instances. This makes object occlusion in the images less likely and provides an overall more manageable dataset. The curation of the datasets was done using the FiftyOne [46] toolset, by Voxel51.

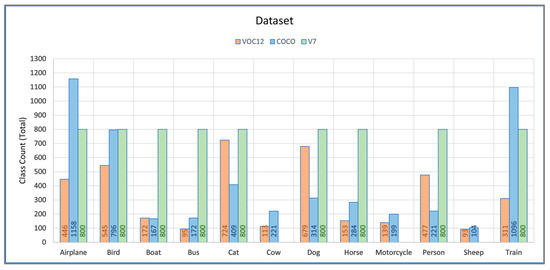

Although Open Images V7 is a substantial dataset, it still did not provide enough single-instance images for 3 of the classes (“Cow”, “Motorcycle”, “Sheep”) to keep them in parity with the 9 other classes, each of which had 800 such images. As we wanted to keep V7 as a balanced dataset, we chose to eliminate those 3 classes from the dataset.

The datasets were shuffled and randomized from their original train, validation, and test splits and reorganized into a train, validation, and test split of 70%, 15%, and 15%, respectively. Figure 3 shows the final datasets and class distributions.
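The reshuffling step can be sketched as follows. This is a simplified illustration and not the FiftyOne-based code used in the study; the file names and the fixed seed are assumptions made so the example is reproducible.

```python
import random


def split_dataset(items, train: float = 0.70, val: float = 0.15, seed: int = 0):
    """Shuffle items and split them into train, validation, and test lists.

    The test split receives whatever remains after the train and validation
    portions are taken, so the three parts always cover every item once.
    """
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train)
    n_val = round(len(shuffled) * val)
    return (
        shuffled[:n_train],
        shuffled[n_train:n_train + n_val],
        shuffled[n_train + n_val:],
    )


# Hypothetical file names for one class with 800 images.
image_ids = [f"image_{i:04d}.jpg" for i in range(800)]
train_set, val_set, test_set = split_dataset(image_ids)
print(len(train_set), len(val_set), len(test_set))
```

Splitting each class separately in this way, rather than the pooled dataset, would keep the class proportions identical across the three splits.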

Figure 3.

Image count by class.

We also considered using ImageNet [47] as a dataset, but since this dataset is organized according to the WordNet hierarchy, there were too many classes to make it manageable and comparable to the other datasets we use. Other datasets like the LARA Traffic Light Detection dataset and Exclusively Dark Images dataset were considered, but these are for specific use cases of object detection and we are seeking results that are more generalizable. CIFAR-100 and Caltech-101 are also popular datasets in computer vision, but these are only suited for image classification.

Next, we describe the details of the selected datasets, MS COCO, Pascal VOC2012, and Open Images V7.

3.2.1. COCO

MS COCO [9] is a large-scale dataset designed for a number of computer vision tasks like class and instance segmentation, object detection, and classification. The images also include scene descriptions. MS COCO is composed of over 330,000 images, 1.5 million object instances, 80 object categories, and 91 stuff categories. The scale of this dataset and its ubiquity in computer vision benchmarks make it particularly attractive for this project.

3.2.2. Pascal VOC2012

The Pascal VOC [48] dataset is another commonly used dataset to benchmark object detection algorithms. From 2005 to 2012, it saw subsequent updates with the latest dataset being used for image classification, object detection, segmentation, and action classification. The dataset (VOC12) is composed of 11,530 images containing 27,450 bounding boxes across 20 categories. This is a much smaller dataset than the aforementioned MS COCO, but is another dataset of interest since it is frequently used in object detection challenges.

3.2.3. Open Images V7

Open Images [49] is a dataset developed by Google that has used crowdsourcing to generate an enormous trove of annotated images. The dataset contains roughly 9 million images, of which 1.9 million are annotated with bounding boxes covering 600 object classes. That subset contains 16 million bounding boxes, which makes it by far the largest object detection dataset available. The dataset is also utilized for segmentation tasks, localized narratives, and visual relationships. Given the scope and size of this dataset, we used only a small portion of it to bring it into parity with the other datasets we have selected.

4. Results

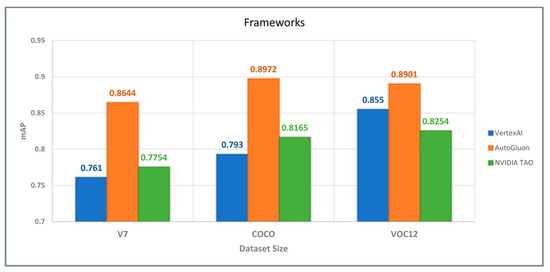

All the results were obtained on Google Colab Pro+ utilizing NVIDIA A100 Tensor Core GPUs (40 GB) and an Intel Xeon CPU (2.2 GHz). Figure 4 shows the mAP for each AutoML framework across each dataset. In terms of accuracy, our results indicate AutoGluon as the best-performing framework with a mAP of 0.8901, 0.8972, and 0.8644 for the Pascal VOC2012, COCO 2017, and Open Images V7 datasets, respectively. NVIDIA TAO achieved a mAP of 0.8254, 0.8165, and 0.7754 for those same datasets. Aside from the VOC12 dataset, Google’s VertexAI performed the worst overall, with scores of 0.855, 0.793, and 0.761.

Figure 4.

mAP for each framework by dataset.

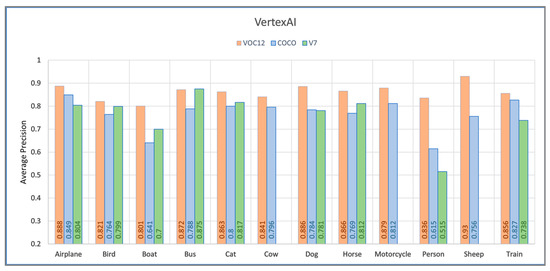

4.1. Vertex AI

In Figure 5, we show the AP for each class for each dataset when trained using Vertex AI. The models were trained until no further improvement in the average precision could be achieved. The node hours utilized by each dataset were 61.1, 41.5, and 33.9 for the V7, COCO, and VOC12 datasets, respectively. VertexAI was the worst-performing framework of the three, only outperforming NVIDIA TAO on the VOC12 dataset. VertexAI does not appear to provide information about the hyperparameters or the neural network architecture, so we do not include that information here.

Figure 5.

AP by class for VertexAI.

4.2. AutoGluon

Overall, AutoGluon was the best-performing framework across all datasets. The training time for VOC12 was 50.6 min. For COCO, it was 63.2 min and for V7 it was 150.4 min. The base network selected was ResNet50. We used a preset value for the hyperparameter selection and tuning which was called on the fit() method. The batch size was eight. The number of epochs was 50, and the learning rate was 0.0001. In Figure 6, we provide the AP for each class.

Figure 6.

AP by class for AutoGluon.

4.3. NVIDIA TAO

TAO outperformed VertexAI (except on the VOC12 dataset), but underperformed AutoGluon across all datasets when comparing their mAP. As with AutoGluon, we trained our models using the Google Colab Pro+ runtime environment with NVIDIA A100 GPUs. In this case, the base network was YOLOv4 with a ResNet18 backbone. The batch size was eight and the number of epochs was 80. The learning rate had a minimum of 1 × 10⁻⁷ and a maximum of 1 × 10⁻⁴. The VOC12 training time was 286 min, the COCO training time was 147 min, and the V7 training time was 336 min. In Figure 7, we provide the AP across each class.

Figure 7.

AP by class for TAO.

5. Discussion

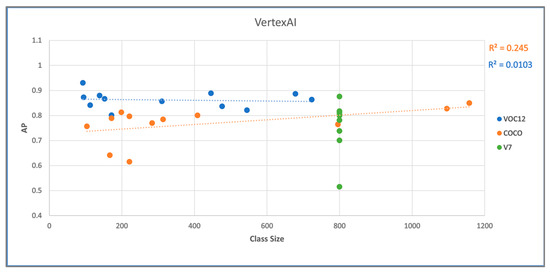

Datasets designed for classification may have particular classes over- or under-represented, which affects the ability of the model to recognize edge cases [50]. In this study, we also explore the effects of class imbalance and dataset size on performance by observing model accuracy as a function of class size. In Figure 8, we plot the AP of each class as a function of its size for the VertexAI framework. Figure 9 plots the AP by class size for the AutoGluon framework and Figure 10 plots the AP by class size for the TAO framework. Figures 8, 9, and 10 show no clear relationship between the class size and AP.

Figure 8.

AP by class size for VertexAI.

Figure 9.

AP by class size for AutoGluon.

Figure 10.

AP by class size for TAO.

Previous research has focused on the effects of object occlusion, image resolution, and object size with regard to accuracy [51,52,53,54]. Hoiem et al. [55] found that AP was most sensitive to object size, with occlusion, truncation, and aspect ratio also having a considerable effect. We include in this research another metric which we refer to as the “bounding box ratio”. This is the ratio of the object bounding box size to the overall image size. Since the bounding box corresponds to the height and width of the object in the image, and we have selected our dataset images to only include single object instances, this can be used as a proxy for the apparent object size. We can then evaluate the average precision for each class as a function of the average bounding box ratio for that class. The evaluation was done using the Pascal VOC AP standard, where the IOU threshold is set to 0.50 and the PRAUC is interpolated continuously. VertexAI provided the AP and mAP metrics directly after training, but in the case of NVIDIA TAO and AutoGluon, we used the set of predicted labels provided by each trained model and a Python library to calculate AP and mAP [10].
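The AP calculation can be sketched as below, assuming that "interpolated continuously" refers to the all-point interpolation of the later Pascal VOC protocol. This is an illustrative re-implementation and not the library cited above. Each detection is represented by a hypothetical `(confidence, is_true_positive)` pair, where the true-positive flag is assumed to have been set already by matching against the ground truth at an IOU threshold of 0.50.

```python
def pr_points(scored_hits, n_ground_truth: int):
    """Build recall and precision lists by sweeping detections in confidence order."""
    ordered = sorted(scored_hits, key=lambda hit: hit[0], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for _, is_true_positive in ordered:
        if is_true_positive:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / n_ground_truth)
        precisions.append(tp / (tp + fp))
    return recalls, precisions


def average_precision(recalls, precisions) -> float:
    """Area under the precision-recall curve with all-point interpolation."""
    mrec = [0.0] + list(recalls) + [1.0]
    mpre = [0.0] + list(precisions) + [0.0]
    # Replace each precision by the maximum precision at any higher recall.
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum rectangle areas wherever recall increases.
    area = 0.0
    for i in range(1, len(mrec)):
        if mrec[i] != mrec[i - 1]:
            area += (mrec[i] - mrec[i - 1]) * mpre[i]
    return area


# Invented detections for one class with four ground-truth objects.
detections = [(0.9, True), (0.8, False), (0.7, True), (0.6, True)]
recalls, precisions = pr_points(detections, n_ground_truth=4)
print(average_precision(recalls, precisions))
```

Averaging the resulting per-class values then gives the mAP for a dataset.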

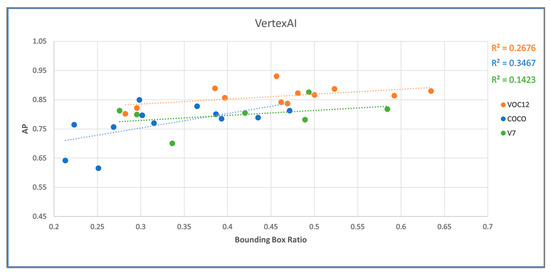

We explored the relationship between AP and the size of the object relative to the overall image. In Figure 11, we plot the AP as a function of the bounding box ratio, which is the ratio of the ground truth bounding box size to the overall size of the image, for VertexAI.
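A minimal sketch of the bounding box ratio is given below. It assumes that "size" means area, so the ratio is the box area divided by the image area, and that boxes are `(xmin, ymin, xmax, ymax)` tuples in pixels. The sample records are invented for the example.

```python
from collections import defaultdict


def bounding_box_ratio(box, image_width: int, image_height: int) -> float:
    """Fraction of the image area covered by an (xmin, ymin, xmax, ymax) box."""
    xmin, ymin, xmax, ymax = box
    box_area = max(0, xmax - xmin) * max(0, ymax - ymin)
    return box_area / (image_width * image_height)


def mean_ratio_by_class(samples) -> dict:
    """Average the bounding box ratio over all images of each class.

    samples is an iterable of (class_name, box, image_width, image_height),
    one entry per single-instance image.
    """
    ratios = defaultdict(list)
    for class_name, box, width, height in samples:
        ratios[class_name].append(bounding_box_ratio(box, width, height))
    return {name: sum(values) / len(values) for name, values in ratios.items()}


# Invented single-instance images.
samples = [
    ("dog", (0, 0, 320, 240), 640, 480),
    ("dog", (0, 0, 640, 240), 640, 480),
    ("bird", (100, 100, 164, 148), 640, 480),
]
print(mean_ratio_by_class(samples))
```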

Figure 11.

AP as a function of bounding box ratio for VertexAI.

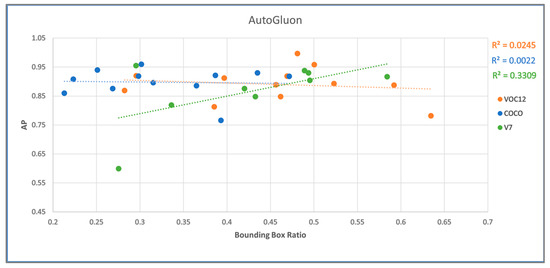

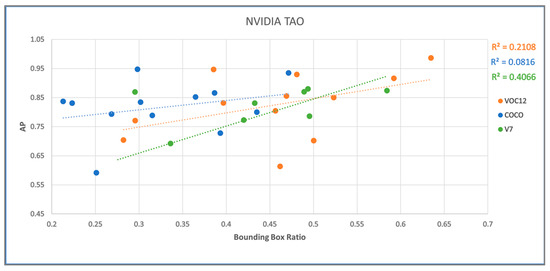

In Figure 12, we provide AP as a function of the bounding box ratio for the AutoGluon framework. Figure 13 shows AP as a function of the bounding box ratio for the TAO AutoML framework. In the top right of each figure, an R² value (coefficient of determination) is provided for each dataset, as an indication of the proportion of variance in AP which can be accounted for by the average object size for each class [56]. It is important to note that limiting our dataset to single object instances does not preclude an object from being occluded or truncated in the image. The results here indicate that, overall, the AP has a weak correlation to the bounding box ratio.
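The coefficient of determination can be sketched as follows, assuming it is obtained from a simple least-squares line fitted to per-class AP against the mean bounding box ratio. For a straight-line fit, this value equals the squared Pearson correlation. The per-class values below are placeholders and not results from this study.

```python
def r_squared(x, y) -> float:
    """Coefficient of determination for a least-squares line fitted to (x, y)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    if sxx == 0 or syy == 0:
        return 0.0  # no variance to explain
    return (sxy * sxy) / (sxx * syy)


# Placeholder per-class values: mean bounding box ratio and AP.
mean_ratios = [0.20, 0.35, 0.40, 0.55, 0.60]
ap_values = [0.84, 0.90, 0.82, 0.91, 0.86]
print(r_squared(mean_ratios, ap_values))
```

A value near 0 means the bounding box ratio explains little of the variation in AP between classes, while a value near 1 would indicate a strong linear relationship.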

Figure 12.

AP as a function of bounding box ratio for AutoGluon.

Figure 13.

AP as a function of bounding box ratio for TAO.

We looked at a small subset (5%) of each dataset and observed substantial differences in the dataset labeling quality. The V7 dataset had significant issues, including instances of obvious missed labels. Examples of these issues are shown in Figure 14, Figure 15 and Figure 16. In Figure 14, there is a ‘person’, but the label of the image is ‘dog’. In some cases, a classification label was used inappropriately to refer to too many objects in the image, such as the ‘person’ label for the entire image in Figure 15.

Figure 14.

Missed dog label—V7 dataset.

Figure 15.

The entire image is labeled “person”—V7 dataset.

Figure 16.

Improper false positive. Only class “dog” was identified in the original label—V7 dataset.

Of the 360 images from the V7 dataset we reviewed, we observed 35 labeling issues. For the COCO dataset, we looked at 258 images and noted five labeling issues. Of the 197 random images we reviewed in the VOC12 dataset, no labeling issues were observed. Instances of images with missed labels sometimes led to properly identified classes and localized objects being treated as false positives. For example, only the class “dog” was identified in the original label for the image shown in Figure 16.
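Because the number of reviewed images differs between datasets, the counts above are easier to compare as rates. The short calculation below uses only the figures reported in the preceding paragraph; the variable names are illustrative.

```python
# (images reviewed, labeling issues observed), as reported in the text
reviewed = {
    "V7": (360, 35),
    "COCO": (258, 5),
    "VOC12": (197, 0),
}

# Labeling issues per reviewed image, expressed as a percentage
issue_rate_percent = {
    name: 100.0 * issues / images for name, (images, issues) in reviewed.items()
}

for name, rate in issue_rate_percent.items():
    print(f"{name}: {rate:.1f}% of reviewed images")
# V7: 9.7% of reviewed images
# COCO: 1.9% of reviewed images
# VOC12: 0.0% of reviewed images
```

In the reviewed samples, the V7 rate is therefore roughly five times the COCO rate.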

Based on the reviewed samples, the V7 dataset exhibited the highest number of labeling issues. As illustrated in Figure 4, AutoML frameworks demonstrated their poorest performance with the V7 dataset. Conversely, these frameworks achieved their best average performance on the VOC12 dataset, where no labeling issues were observed.

This study is focused on accuracy. However, there are other important factors to consider when choosing an AutoML framework for object detection, such as cost, ease of use, and inference speed. Although VertexAI was the worst-performing framework in terms of accuracy, it was the easiest to use. There were no runtime errors during training, and no debugging was needed. It was also the framework that provided the least information about the actual training process. AutoGluon and TAO, meanwhile, had a number of issues to be resolved before a model was successfully trained, but these frameworks provide a substantial amount of information about the training process itself.

Normalizing compute resources is, in many cases, difficult to do, since we are exploring the use of proprietary frameworks which may not disclose that information entirely. Furthermore, proprietary frameworks do not always offer the choice of using a local runtime. In such cases, we simply disclose what computational resources were leveraged during training. Two of our frameworks (TAO and AutoGluon) were run in a hosted environment on Google Colab Pro+ utilizing NVIDIA A100 Tensor Core GPUs (40 GB) and an Intel Xeon CPU (2.2 GHz) with 51 GB of system RAM. We could not confirm all the compute resources utilized by VertexAI in our own training, since those resources are entirely managed by the framework, but based on the training cost, it also appears to have used NVIDIA A100 GPUs.

6. Conclusions and Future Work

Despite using less training time, AutoGluon outperformed TAO and VertexAI across all datasets, as measured by mAP. TAO outperformed VertexAI on the V7 and COCO datasets but was outperformed on the VOC12 dataset. We also investigated the relationship between class size and imbalance and average precision, and there seemed to be no clear correlation. The bounding box ratio was used as a proxy for object size and was investigated as another possible reason for differences in accuracy, but there appeared to be no clear relationship there either. Objects could still be occluded in our datasets, even though the images were reduced to single object instances, and this could also be a point of investigation for future work. A more expansive benchmarking study could also include other AutoML frameworks, such as Microsoft’s Azure and Amazon’s SageMaker.

While assessing a portion of the datasets for labeling correctness, we found that the V7 dataset presented considerable issues, such as mislabeling and improper classification. In contrast, the COCO dataset exhibited fewer issues, and no labeling issues were observed in the reviewed VOC12 images. This disparity in the labeling quality might have influenced the performance of the AutoML frameworks, leading to the poorest outcomes with the error-prone V7 dataset and the best average performance with the VOC12 dataset.

The varying data standards required by different AutoML frameworks currently pose a challenge, particularly for inexperienced users, due to their complexity. Adapting dataset labels and file structures to meet the specific input needs of each AutoML framework is a tedious process. Therefore, developers of these frameworks should focus on offering more comprehensive and clearly documented dataset format options to enhance user accessibility and efficiency.

The advent of AutoML represents a great opportunity for novice users or domain experts without a technical programming background to leverage machine learning for their own purposes. In this sense, AutoML represents a democratization of machine learning, in which it can be applied to wider use cases by a greater number of people. Through this project, we hope to present a starting point for novice data analysts, educators, medical-imaging specialists, or statisticians who may be interested in a more general computer vision task to understand their AutoML options in the context of a common machine learning task like object detection. This study provides a platform for future development and research in this nascent field of machine learning.

Author Contributions

Investigation, Software, Writing Original Draft, Validation, Methodology: S.d.O.; Conceptualization, Methodology, Supervision and Writing—Review and Editing: O.T. (Oguzhan Topsakal); Validation and Writing—Review and Editing: O.T. (Onur Toker). All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Mitchell, T. Machine Learning; McGraw Hill: New York, NY, USA, 1997.

- Ahmad, M.; Aftab, S.; Muhammad, S.S.; Ahmad, S. Machine Learning Techniques for Sentiment Analysis: A Review. Int. J. Multidiscip. Sci. Eng. 2017, 8, 226.

- Zheng, R.; Qu, L.; Cui, B.; Shi, Y.; Yin, H. Automl for Deep Recommender Systems: A Survey. arXiv 2022, arXiv:2203.13922.

- Kononenko, I. Machine Learning for Medical Diagnosis: History, State of the Art and Perspective. Artif. Intell. Med. 2001, 23, 89–109.

- Garg, V.; Chaudhary, S.; Mishra, A. Analysing Auto ML Model for Credit Card Fraud Detection. Int. J. Innov. Res. Comput. Sci. Technol. 2021, 9, 2347–5552.

- Shi, X.; Wong, Y.D.; Chai, C.; Li, M.Z.-F. An Automated Machine Learning (AutoML) Method of Risk Prediction for Decision-Making of Autonomous Vehicles. IEEE Trans. Intell. Transp. Syst. 2020, 22, 7145–7154.

- Liakos, K.G.; Busato, P.; Moshou, D.; Pearson, S.; Bochtis, D. Machine Learning in Agriculture: A Review. Sensors 2018, 18, 2674.

- Zhao, Z.-Q.; Zheng, P.; Xu, S.; Wu, X. Object Detection with Deep Learning: A Review. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 3212–3232.

- Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft Coco: Common Objects in Context. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Proceedings, Part V 13. Springer: Berlin/Heidelberg, Germany, 2014; pp. 740–755.

- Padilla, R.; Passos, W.L.; Dias, T.L.; Netto, S.L.; Da Silva, E.A. A Comparative Analysis of Object Detection Metrics with a Companion Open-Source Toolkit. Electronics 2021, 10, 279.

- Zou, Z.; Chen, K.; Shi, Z.; Guo, Y.; Ye, J. Object Detection in 20 Years: A Survey. Proc. IEEE 2023, 111, 256–276.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 24–27 June 2014; pp. 580–587.

- Yamashita, R.; Nishio, M.; Do, R.K.G.; Togashi, K. Convolutional Neural Networks: An Overview and Application in Radiology. Insights Imag. 2018, 9, 611–629.

- Hutter, F.; Kotthoff, L.; Vanschoren, J. Automated Machine Learning: Methods, Systems, Challenges; Springer: Berlin/Heidelberg, Germany, 2019.

- Doke, A.; Gaikwad, M. Survey on Automated Machine Learning (AutoML) and Meta Learning. In Proceedings of the 2021 IEEE 12th International Conference on Computing Communication and Networking Technologies (ICCCNT), Kharagpur, India, 6–8 July 2021; pp. 1–5.

- Shang, Z.; Zgraggen, E.; Buratti, B.; Kossmann, F.; Eichmann, P.; Chung, Y.; Binnig, C.; Upfal, E.; Kraska, T. Democratizing Data Science through Interactive Curation of Ml Pipelines. In Proceedings of the 2019 International Conference on Management of Data, Amsterdam, The Netherlands, 30 June–5 July 2019; pp. 1171–1188.

- Yao, Q.; Wang, M.; Chen, Y.; Dai, W.; Li, Y.-F.; Tu, W.-W.; Yang, Q.; Yu, Y. Taking Human out of Learning Applications: A Survey on Automated Machine Learning. arXiv 2018, arXiv:1810.13306.

- Nagarajah, T.; Poravi, G. A Review on Automated Machine Learning (AutoML) Systems. In Proceedings of the 2019 IEEE 5th International Conference for Convergence in Technology (I2CT), Bombay, India, 29–31 March 2019; pp. 1–6.

- Bergstra, J.; Bardenet, R.; Bengio, Y.; Kégl, B. Algorithms for Hyper-Parameter Optimization. Adv. Neural Inf. Process. Syst. 2011, 24, 014008.

- Hospedales, T.; Antoniou, A.; Micaelli, P.; Storkey, A. Meta-Learning in Neural Networks: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 5149–5169.

- Karmaker, S.K.; Hassan, M.M.; Smith, M.J.; Xu, L.; Zhai, C.; Veeramachaneni, K. Automl to Date and beyond: Challenges and Opportunities. ACM Comput. Surv. CSUR 2021, 54, 1–36.

- Ferreira, L.; Pilastri, A.; Martins, C.M.; Pires, P.M.; Cortez, P. A Comparison of AutoML Tools for Machine Learning, Deep Learning and XGBoost. In Proceedings of the IEEE 2021 International Joint Conference on Neural Networks (IJCNN), Shenzhen, China, 18–22 July 2021; pp. 1–8.

- Gijsbers, P.; LeDell, E.; Thomas, J.; Poirier, S.; Bischl, B.; Vanschoren, J. An Open Source AutoML Benchmark. arXiv 2019, arXiv:1907.00909.

- Gijsbers, P.; Bueno, M.L.; Coors, S.; LeDell, E.; Poirier, S.; Thomas, J.; Bischl, B.; Vanschoren, J. Amlb: An Automl Benchmark. arXiv 2022, arXiv:2207.12560.

- Zöller, M.-A.; Huber, M.F. Benchmark and Survey of Automated Machine Learning Frameworks. J. Artif. Intell. Res. 2019, 70, 409–472.

- Shi, X.; Mueller, J.W.; Erickson, N.; Li, M.; Smola, A.J. Benchmarking Multimodal AutoML for Tabular Data with Text Fields. arXiv 2021, arXiv:2111.02705.

- LeDell, E.; Poirier, S. H2o Automl: Scalable Automatic Machine Learning. In Proceedings of the AutoML Workshop at ICML, Vienna, Austria, 12–18 July 2020; ICML: San Diego, CA, USA, 2020; Volume 2020.

- Borji, A.; Cheng, M.-M.; Jiang, H.; Li, J. Salient Object Detection: A Benchmark. IEEE Trans. Image Process. 2015, 24, 5706–5722.

- Lenkala, S.; Marry, R.; Gopovaram, S.R.; Akinci, T.C.; Topsakal, O. Comparison of Automated Machine Learning (AutoML) Tools for Epileptic Seizure Detection Using Electroencephalograms (EEG). Computers 2023, 12, 197.

- Westergaard, G.; Erden, U.; Mateo, O.A.; Lampo, S.M.; Akinci, T.C.; Topsakal, O. Time Series Forecasting Utilizing Automated Machine Learning (AutoML): A Comparative Analysis Study on Diverse Datasets. Information 2024, 15.

- Paladino, L.M.; Hughes, A.; Perera, A.; Topsakal, O.; Akinci, T.C. Evaluating the Performance of Automated Machine Learning (AutoML) Tools for Heart Disease Diagnosis and Prediction. AI 2023, 4, 1036–1058.

- Li, K.; Wan, G.; Cheng, G.; Meng, L.; Han, J. Object Detection in Optical Remote Sensing Images: A Survey and a New Benchmark. ISPRS J. Photogramm. Remote Sens. 2020, 159, 296–307.

- Berg, G. Image Classification with Machine Learning as a Service: A Comparison between Azure, SageMaker, and Vertex AI. Bachelor’s Thesis, Linnaeus University, Växjö, Sweden, 2022.

- Padilla, R.; Netto, S.L.; da Silva, E.A.B. A Survey on Performance Metrics for Object-Detection Algorithms. In Proceedings of the 2020 International Conference on Systems, Signals and Image Processing (IWSSIP), Niteroi, Brazil, 1–3 July 2020; pp. 237–242.

- He, X.; Zhao, K.; Chu, X. AutoML: A Survey of the State-of-the-Art. Knowl. Based Syst. 2021, 212, 106622.

- De Oliveira, S. AutoML-Study. Available online: https://github.com/Telephos/AutoML-study/tree/main (accessed on 18 January 2024).

- Wang, B.; Xu, H.; Zhang, J.; Chen, C.; Fang, X.; Xu, Y.; Kang, N.; Hong, L.; Jiang, C.; Cai, X.; et al. Vega: Towards an End-to-End Configurable Automl Pipeline. arXiv 2020, arXiv:2011.01507.

- Chen, Y.; Yang, T.; Zhang, X.; Meng, G.; Xiao, X.; Sun, J. Detnas: Backbone Search for Object Detection. In Proceedings of the Advances in Neural Information Processing Systems 32 (NeurIPS 2019), Vancouver, BC, Canada, 8–12 December 2019; Volume 32.

- Alexandrova, S.; Tatlock, Z.; Cakmak, M. RoboFlow: A Flow-Based Visual Programming Language for Mobile Manipulation Tasks. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 5537–5544.

- Shah, C.; Sinha, D.; Wang, Y.; Cha, S.; Radhakrishnan, S. Access the Latest in Vision AI Model Development Workflows with NVIDIA TAO Toolkit, 5.0; NVIDIA: Santa Clara, CA, USA, 2023.

- Ghosh, S.; Srinivasa, S.K.K.; Amon, P.; Hutter, A.; Kaup, A. Deep Network Pruning for Object Detection. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 3915–3919.

- Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. Vision Meets Robotics: The Kitti Dataset. Int. J. Robot. Res. 2013, 32, 1231–1237.

- AutoGluon. Available online: https://auto.gluon.ai/0.6.2/install.html (accessed on 18 January 2024).

- Hoiem, D.; Divvala, S.K.; Hays, J.H. Pascal VOC 2008 Challenge. In World Literature Today; University of Illinois: Chicago, IL, USA, 2009; Volume 24.

- Vertex AI—Train and Use Your Own Models. Available online: https://cloud.google.com/vertex-ai/docs/training-overview (accessed on 18 January 2024).

- FiftyOne 2022. Available online: https://github.com/voxel51/fiftyone (accessed on 18 January 2024).

- Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Fei-Fei, L. ImageNet: A Large-Scale Hierarchical Image Database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Everingham, M.; Eslami, S.A.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes Challenge: A Retrospective. Int. J. Comput. Vis. 2015, 111, 98–136.

- Open Images Dataset V7. Available online: https://storage.googleapis.com/openimages/web/factsfigures_v7.html (accessed on 18 January 2024).

- Oksuz, K.; Cam, B.C.; Kalkan, S.; Akbas, E. Imbalance Problems in Object Detection: A Review. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 3388–3415.

- Hao, Y.; Pei, H.; Lyu, Y.; Yuan, Z.; Rizzo, J.-R.; Wang, Y.; Fang, Y. Understanding the Impact of Image Quality and Distance of Objects to Object Detection Performance. arXiv 2022, arXiv:2209.08237.

- Chen, C.; Liu, M.-Y.; Tuzel, O.; Xiao, J. R-CNN for Small Object Detection. In Proceedings of the Computer Vision–ACCV 2016: 13th Asian Conference on Computer Vision, Revised Selected Papers, Part V 13. Taipei, Taiwan, 20–24 November 2016; Springer: Berlin/Heidelberg, Germany, 2017; pp. 214–230.

- Vedaldi, A.; Zisserman, A. Structured Output Regression for Detection with Partial Truncation. In Proceedings of the Neural Information Processing Systems, Vancouver, BC, Canada, 7–10 December 2009.

- Wang, X.; Han, T.X.; Yan, S. An HOG-LBP Human Detector with Partial Occlusion Handling. In Proceedings of the 2009 IEEE 12th International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2009; pp. 32–39.

- Hoiem, D.; Chodpathumwan, Y.; Dai, Q. Diagnosing Error in Object Detectors. In Proceedings of the European Conference on Computer Vision–ECCV 2012, Florence, Italy, 7–13 October 2012; Fitzgibbon, A., Lazebnik, S., Perona, P., Sato, Y., Schmid, C., Eds.; Springer: Berlin/Heidelberg, Germany, 2012; pp. 340–353.

- Nagelkerke, N.J. A Note on a General Definition of the Coefficient of Determination. Biometrika 1991, 78, 691–692.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).