Detecting Abnormal Behaviors in Dementia Patients Using Lifelog Data: A Machine Learning Approach

Abstract

1. Introduction

2. Related Works

2.1. Research on Detection of Abnormal Behavior Based on Machine Learning

2.2. Machine Learning Algorithms

2.2.1. K-Nearest Neighbors (KNN)

- Principle: KNN classifies a data point based on the majority class of its k-nearest neighbors in the feature space.

- Pros: Easy to implement, works well with small datasets, and requires no explicit training phase (the training samples are simply stored and consulted at prediction time).

- Cons: Computationally expensive for large datasets, sensitive to noise and irrelevant features, and performance decreases as the dimensionality of the feature space increases.
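The majority-vote principle above can be sketched in a few lines of plain Python. This is a minimal illustration, not the implementation used in the study: the function name `knn_predict`, the Euclidean distance, and the toy points are assumptions made for the example.

```python
from collections import Counter
import math

def knn_predict(train_points, train_labels, query, k=5):
    """Return the majority label among the k training points closest to query."""
    # Rank training indices by Euclidean distance to the query point.
    order = sorted(range(len(train_points)),
                   key=lambda i: math.dist(train_points[i], query))
    # Count the labels of the k nearest neighbors and keep the most common one.
    votes = Counter(train_labels[i] for i in order[:k])
    return votes.most_common(1)[0][0]

# Hypothetical toy data: two features per sample, 0 = normal, 1 = abnormal.
points = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (5.0, 5.0), (5.0, 6.0), (6.0, 5.0)]
labels = [0, 0, 0, 1, 1, 1]

print(knn_predict(points, labels, (0.5, 0.5), k=3))  # 0
print(knn_predict(points, labels, (5.5, 5.5), k=3))  # 1
```

Every prediction scans the whole training set, which is why the cost grows with dataset size as noted in the cons.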

2.2.2. Random Forest (RF)

- Principle: Combines the predictions of multiple decision trees, which are trained on different subsets of the dataset, to make a final decision.

- Pros: Reduces overfitting, handles missing values well, and provides good feature importance estimates.

- Cons: Can be slow to train and predict, particularly with many trees, and may struggle with very high-dimensional datasets.
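The two ingredients named in the principle, training each tree on a different subset and combining the predictions, can be sketched as follows. This is a simplified illustration under stated assumptions: each "tree" is a one-feature threshold rule (`fit_stump`, a hypothetical helper), the data are a toy example, and the per-split random feature selection of a full random forest is omitted.

```python
import random
from collections import Counter

def fit_stump(xs, ys):
    """Fit a one-feature threshold rule; a stand-in for a full decision tree.

    Assumes class 1 tends to have larger feature values than class 0.
    """
    zeros = [x for x, y in zip(xs, ys) if y == 0]
    ones = [x for x, y in zip(xs, ys) if y == 1]
    if not zeros or not ones:
        # The bootstrap sample contains a single class: always predict it.
        only = ys[0]
        return lambda x: only
    threshold = (max(zeros) + min(ones)) / 2
    return lambda x: int(x > threshold)

def fit_forest(xs, ys, n_trees=25, seed=0):
    """Train n_trees stumps, each on a bootstrap sample drawn with replacement."""
    rng = random.Random(seed)
    n = len(xs)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]
        forest.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return forest

def predict_forest(forest, x):
    """Combine the individual predictions by majority vote."""
    votes = Counter(tree(x) for tree in forest)
    return votes.most_common(1)[0][0]

# Hypothetical toy data: small values are class 0, large values are class 1.
xs = list(range(10))
ys = [0] * 5 + [1] * 5
forest = fit_forest(xs, ys)
print(predict_forest(forest, 0), predict_forest(forest, 9))
```

Because each stump sees a different resample, their thresholds differ slightly; averaging the votes is what dampens the variance of any single tree.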

2.2.3. Logistic Regression (LR)

- Principle: Models the probability of the outcome using the logistic function applied to a linear combination of input features.

- Pros: Easy to implement, fast to train, and provides interpretable feature coefficients.

- Cons: Assumes a linear relationship between features and the log-odds of the outcome and may struggle with complex decision boundaries.
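As a minimal sketch of the principle above, the logistic function can be applied to a linear combination of features as follows. The weights, bias, and feature values are hypothetical numbers chosen for illustration, not fitted coefficients from the study.

```python
import math

def sigmoid(z):
    """Logistic function: maps any real number to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(weights, bias, features):
    """Probability of the positive class for one sample."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return sigmoid(z)

# Hypothetical coefficients for a two-feature model.
weights = [0.8, -0.4]
bias = -0.2

p = predict_proba(weights, bias, [1.0, 2.0])
log_odds = math.log(p / (1.0 - p))
print(round(p, 4), round(log_odds, 4))
```

The recovered log-odds equal the linear score `bias + w·x`, which is the linearity assumption listed in the cons and also the reason the coefficients are directly interpretable.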

2.2.4. Decision Tree (DT)

- Principle: Splits the data into subsets based on feature values, recursively constructing a tree-like structure with decision nodes and leaf nodes.

- Pros: Easy to understand and interpret, can handle both numerical and categorical data, and requires little preprocessing.

- Cons: Prone to overfitting, sensitive to small changes in the data, and may produce biased trees if some classes dominate.
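The recursive splitting described above repeatedly picks the feature threshold that makes the resulting subsets as pure as possible. The sketch below shows one such step on a single feature using Gini impurity; the function names and the toy values are assumptions made for illustration.

```python
from collections import Counter

def gini(labels):
    """Gini impurity: 0.0 for a pure subset, larger for mixed subsets."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())

def best_split(values, labels):
    """Return (threshold, weighted_gini) of the best binary split on one feature."""
    best = (None, float("inf"))
    n = len(values)
    for t in sorted(set(values)):
        left = [y for x, y in zip(values, labels) if x <= t]
        right = [y for x, y in zip(values, labels) if x > t]
        if not left or not right:
            continue  # a split must leave samples on both sides
        score = (len(left) * gini(left) + len(right) * gini(right)) / n
        if score < best[1]:
            best = (t, score)
    return best

# Hypothetical toy feature: higher values are labeled abnormal (1).
values = [0, 3, 7, 10, 17, 37]
labels = [0, 0, 0, 0, 1, 1]
print(best_split(values, labels))  # (10, 0.0)
```

A full tree would apply `best_split` to every feature, keep the best one, and recurse on the two subsets until a stopping rule is met; growing until every leaf is pure is what makes unpruned trees prone to overfitting.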

2.2.5. Support Vector Machines (SVM)

- Principle: Finds the optimal hyperplane that maximizes the margin between different classes in the feature space.

- Pros: Effective in high-dimensional spaces, versatile due to the use of kernel functions, and provides good generalization performance.

- Cons: Can be slow to train for large datasets, requires parameter tuning, and may struggle with noisy or overlapping classes.
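For the linear case, the margin-maximization principle above is usually written as the optimization problem below. The notation is an assumption for illustration: $\mathbf{x}_i$ are the feature vectors, $y_i \in \{-1, +1\}$ the class labels, $\mathbf{w}$ and $b$ the hyperplane parameters, $\xi_i$ the slack variables, and $C$ the regularization constant.

```latex
% Hard margin: the margin width is 2 / ||w||, so maximizing it
% is equivalent to minimizing ||w||^2.
\min_{\mathbf{w},\, b} \ \frac{1}{2}\lVert \mathbf{w} \rVert^{2}
\quad \text{subject to} \quad
y_i\,(\mathbf{w}^{\top}\mathbf{x}_i + b) \ge 1, \qquad i = 1, \dots, n

% Soft margin: slack variables allow some margin violations,
% penalized by C.
\min_{\mathbf{w},\, b,\, \boldsymbol{\xi}} \ \frac{1}{2}\lVert \mathbf{w} \rVert^{2} + C \sum_{i=1}^{n} \xi_i
\quad \text{subject to} \quad
y_i\,(\mathbf{w}^{\top}\mathbf{x}_i + b) \ge 1 - \xi_i, \quad \xi_i \ge 0
```

Kernel functions replace the inner products between samples in the dual of this problem, which is how a non-linear boundary is obtained without changing the formulation.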

2.2.6. Multi-Layer Perceptron (MLP)

- Principle: Consists of an input layer, one or more hidden layers, and an output layer, with each layer containing a set of interconnected nodes (neurons). The network is trained using backpropagation and gradient descent.

- Pros: Can learn complex non-linear relationships between input and output and can be used for a wide range of applications.

- Cons: Can be sensitive to hyperparameter choices, prone to overfitting, and may require significant computational resources for large networks.
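The layer structure described above can be illustrated with a forward pass through one hidden layer. This sketch covers inference only (backpropagation is not shown), and the layer sizes, weights, ReLU activation, and softmax output are assumptions chosen to keep the example small.

```python
import math

def dense(inputs, weights, biases):
    """Fully connected layer: one weight row and one bias per output neuron."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def relu(values):
    """Element-wise rectified linear activation."""
    return [max(0.0, v) for v in values]

def softmax(values):
    """Turn raw output scores into class probabilities."""
    m = max(values)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def forward(x, w_hidden, b_hidden, w_out, b_out):
    """Input layer -> hidden layer (ReLU) -> output layer (softmax)."""
    hidden = relu(dense(x, w_hidden, b_hidden))
    return softmax(dense(hidden, w_out, b_out))

# Hypothetical weights: 2 inputs -> 3 hidden neurons -> 2 output classes.
w_hidden = [[1.0, -1.0], [0.5, 0.5], [-1.0, 1.0]]
b_hidden = [0.0, 0.0, 0.0]
w_out = [[1.0, 0.0, -1.0], [-1.0, 0.0, 1.0]]
b_out = [0.0, 0.0]

probs = forward([2.0, 1.0], w_hidden, b_hidden, w_out, b_out)
print([round(p, 4) for p in probs])
```

Training would adjust `w_hidden`, `b_hidden`, `w_out`, and `b_out` by propagating the prediction error backwards through these same layers.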

3. Design and Implementation of Machine-Learning-Based Dementia Patient Abnormal Behavior Detection System

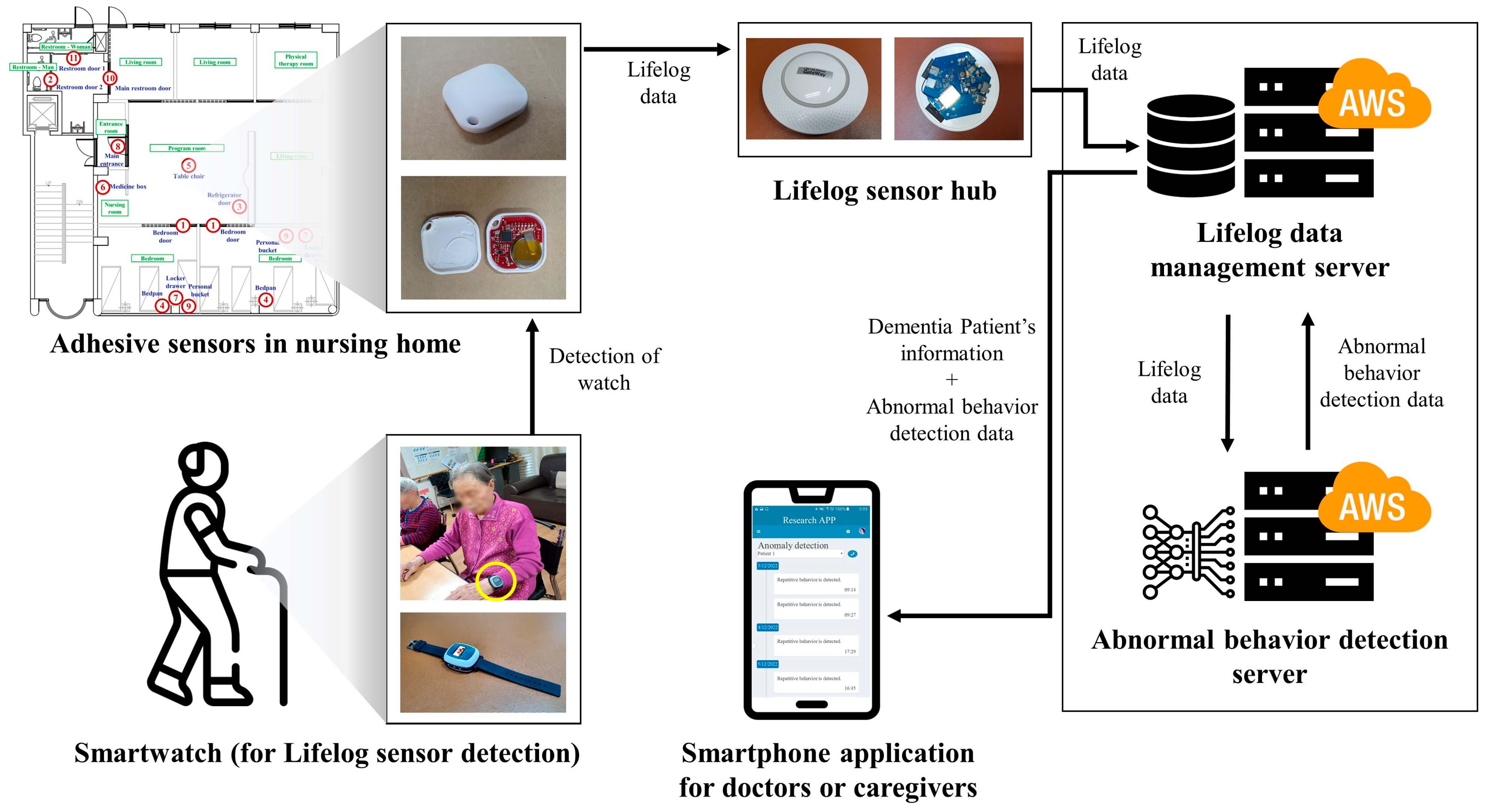

3.1. System Structure and Description

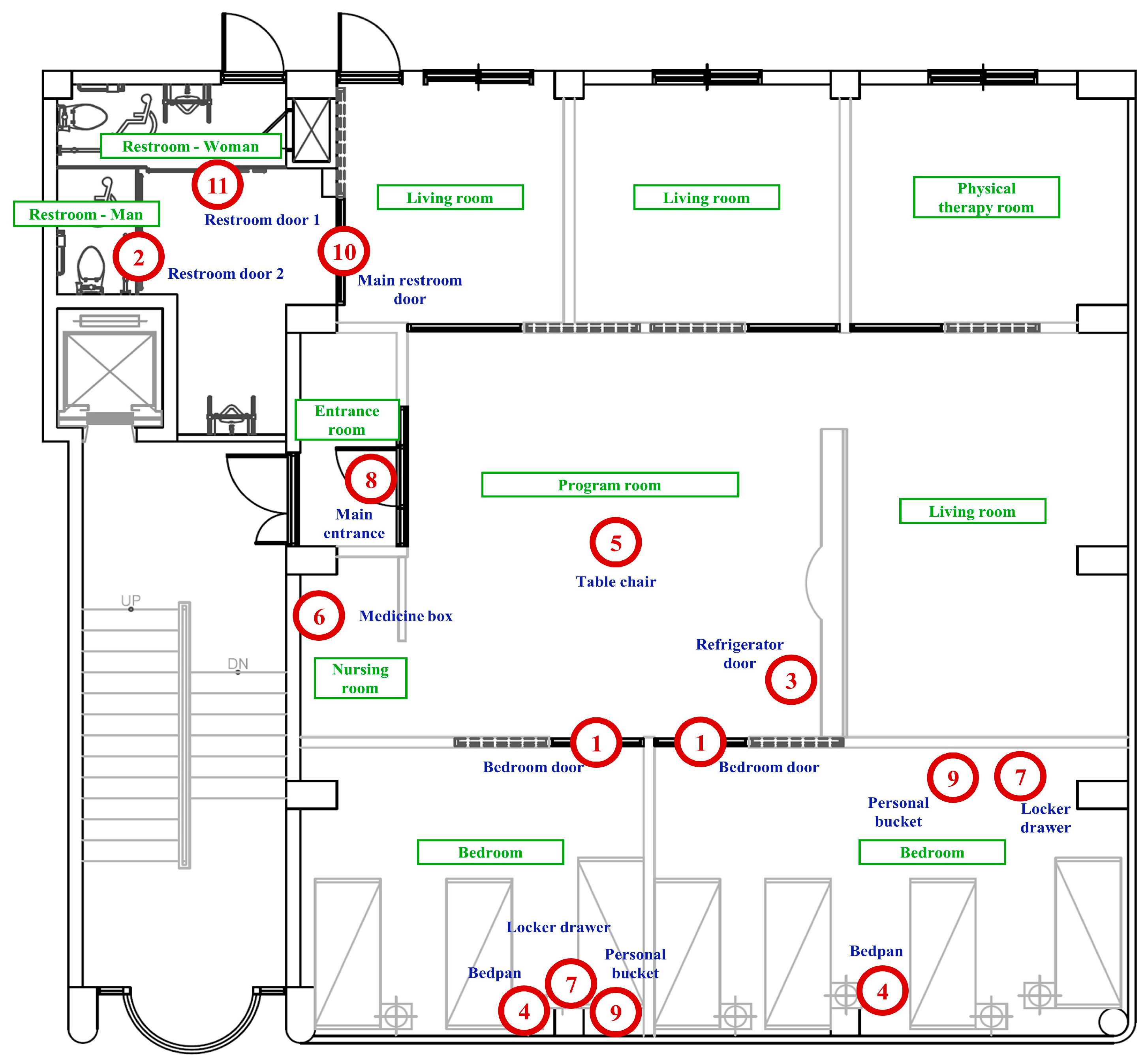

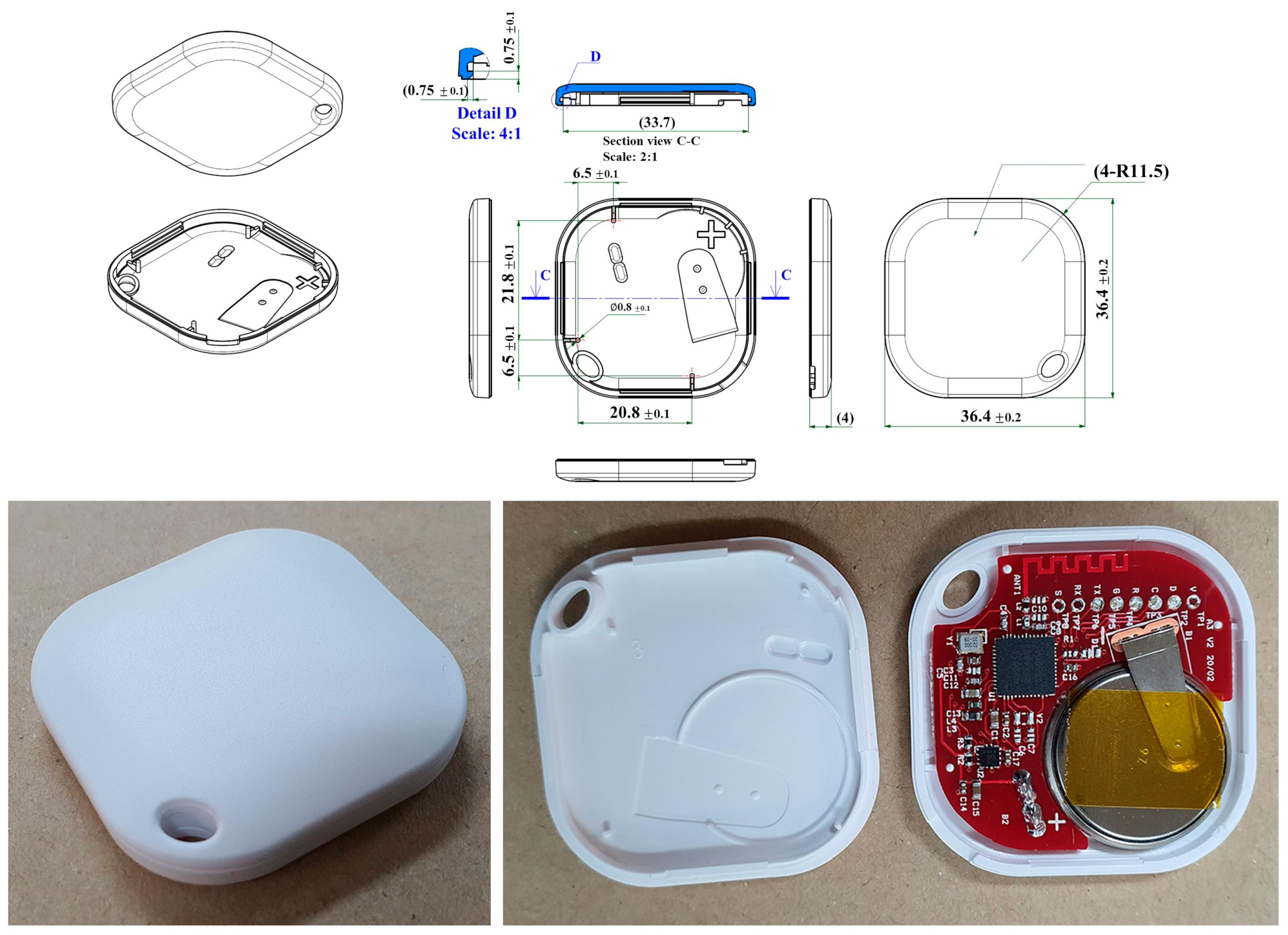

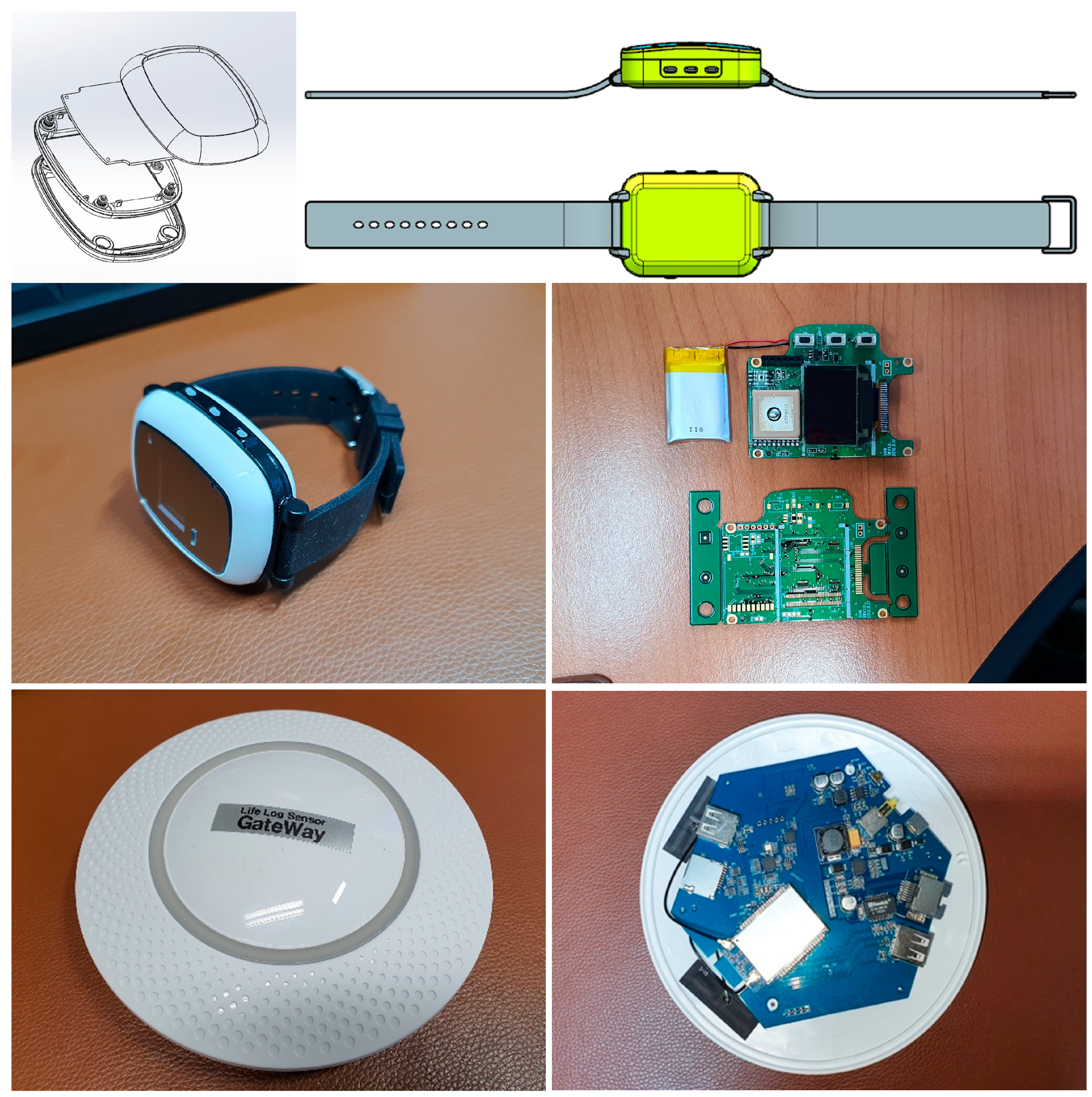

3.2. Lifelog Sensor Device

3.3. Lifelog Dataset

4. Experiment and Experimental Results

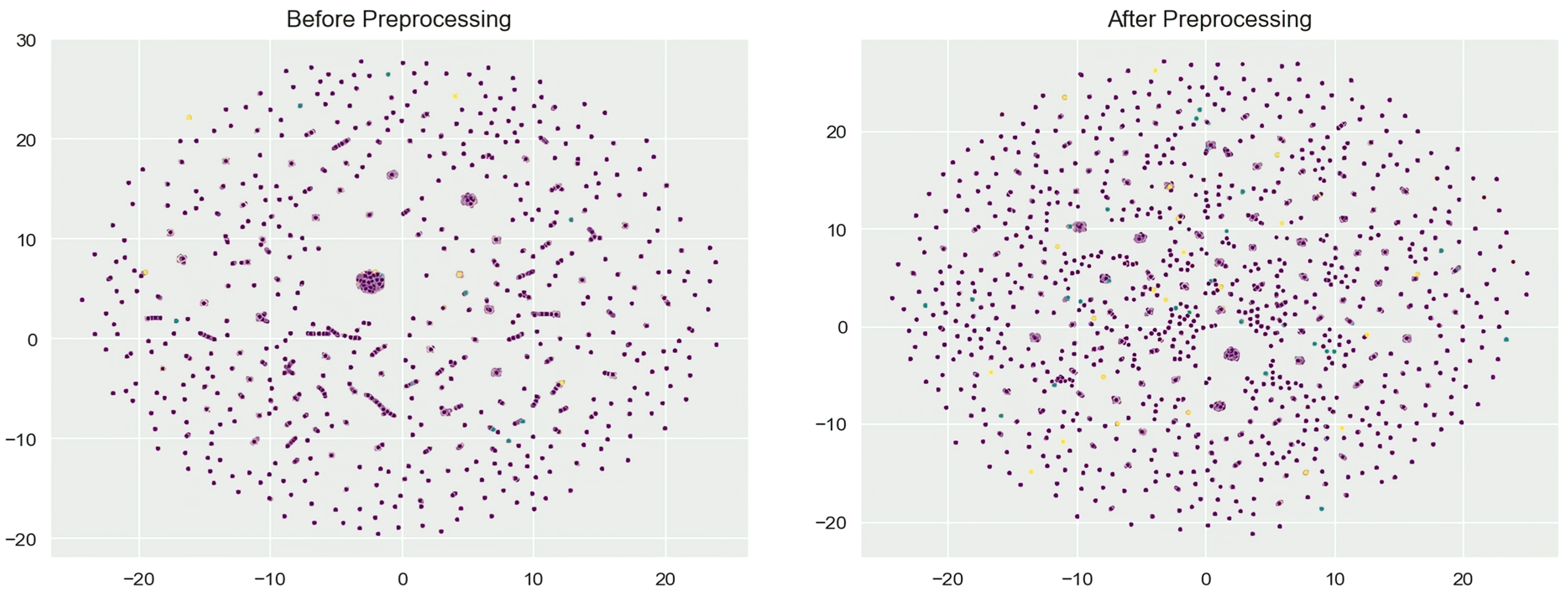

4.1. Data Preprocessing

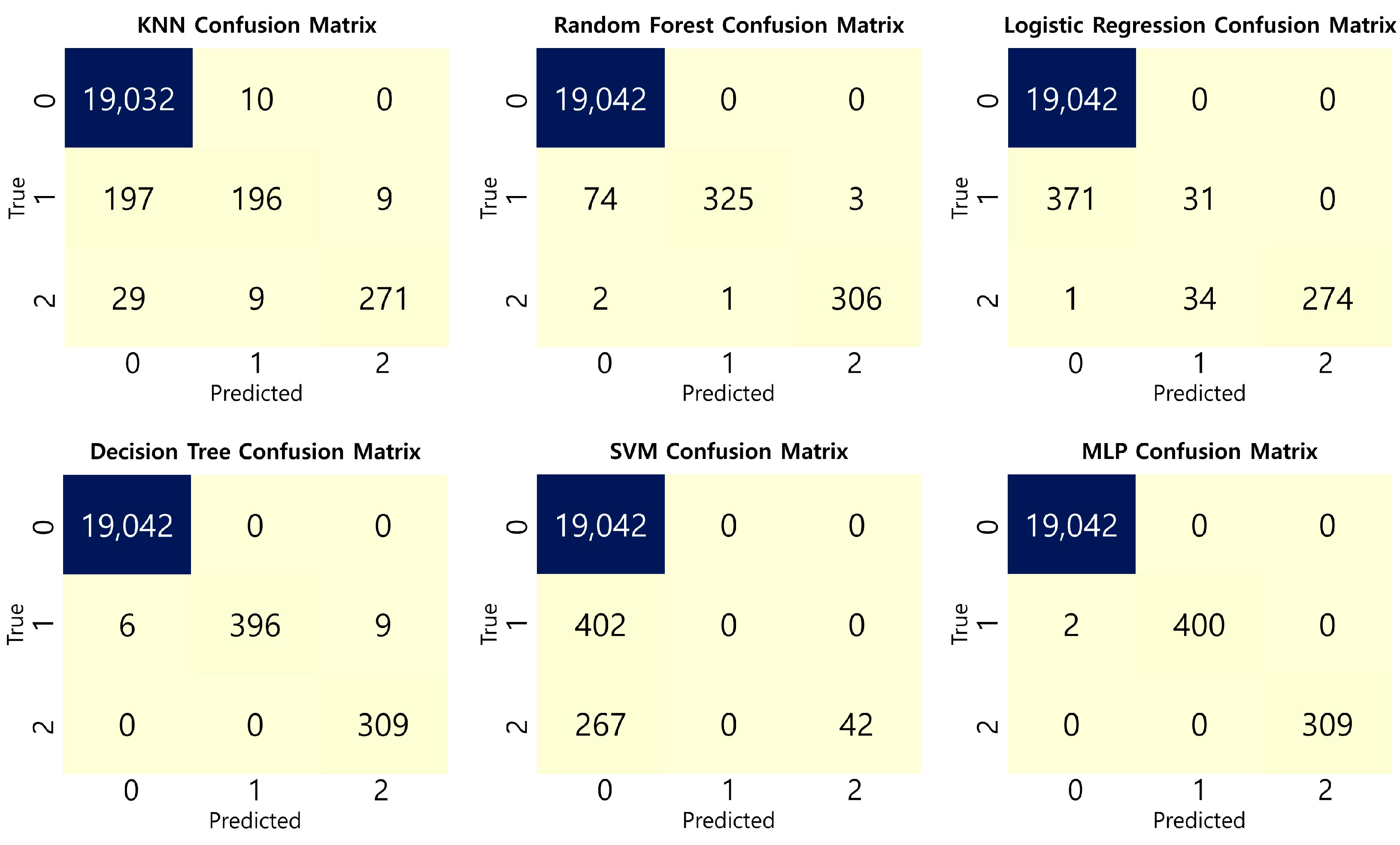

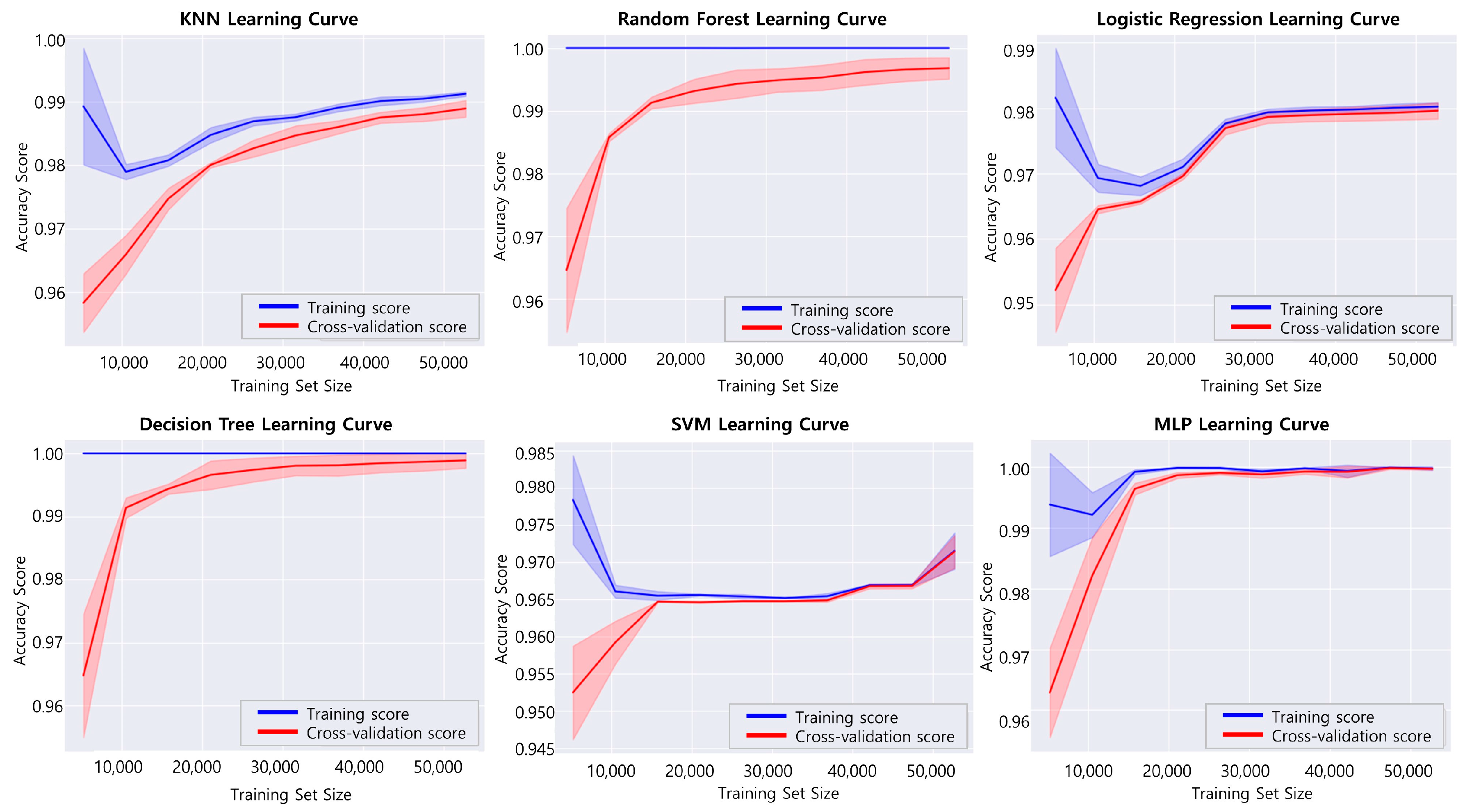

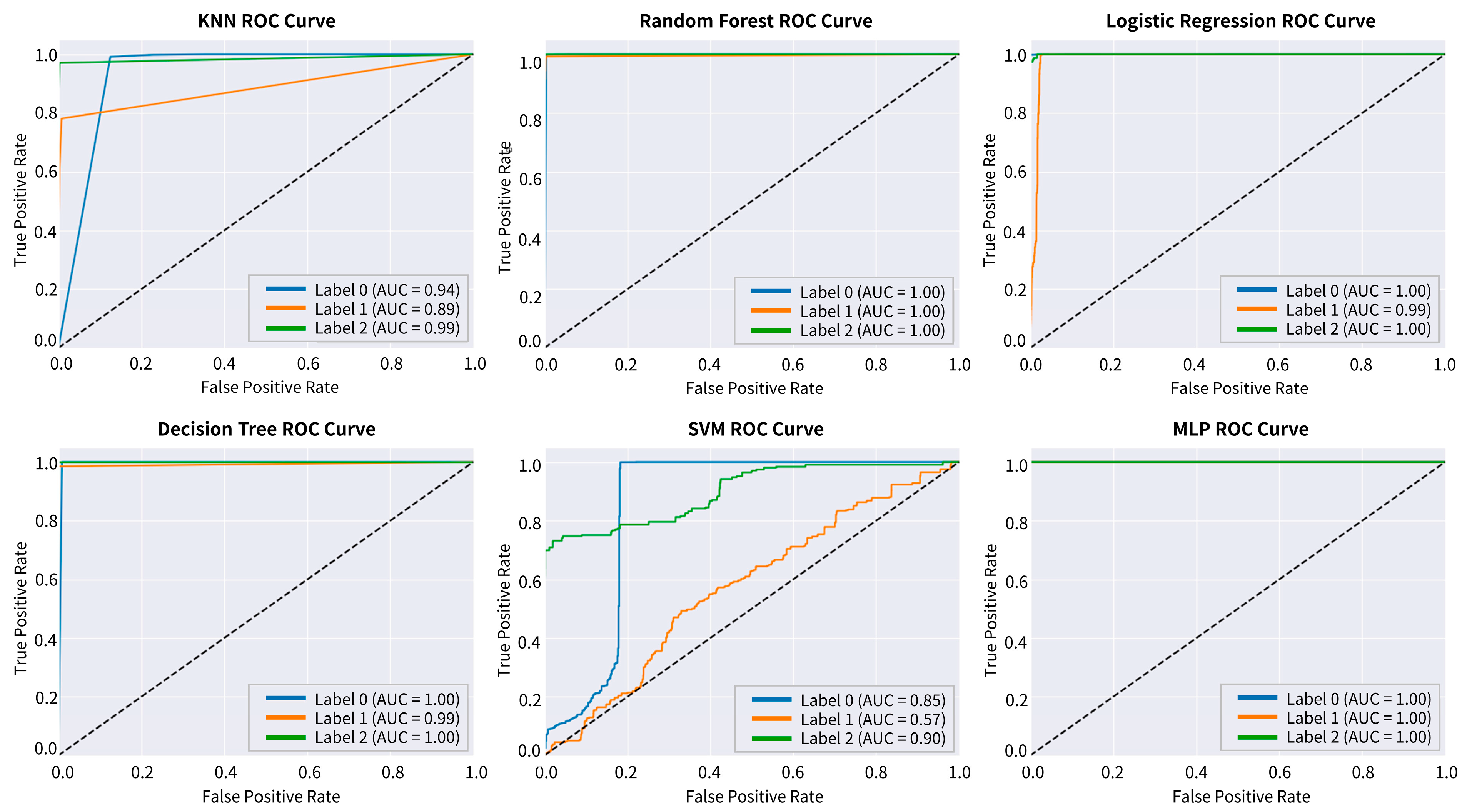

4.2. Parameters and Performance Evaluation of Models

- Accuracy: The proportion of all samples for which the model's prediction matches the actual value. It is the most basic indicator of classification performance, but it can be misleading when the classes are imbalanced, because a model can score highly by predicting only the majority class. Accuracy is calculated as shown in Equation (1).

- Precision: The proportion of samples predicted as positive that are actually positive. A high precision means that few negative samples are wrongly flagged as positive. Precision is calculated as shown in Equation (2).

- Recall: The proportion of actual positive samples that the model predicts as positive. A high recall means that the model misses few positive samples. Recall is calculated as shown in Equation (3).

- F1 Score: The harmonic mean of precision and recall. Because the harmonic mean is dominated by the smaller of the two values, a high F1 score requires both precision and recall to be high. F1 score is calculated as shown in Equation (4).
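In terms of the confusion-matrix counts (TP: true positives, FP: false positives, FN: false negatives, TN: true negatives), the four metrics take the standard forms below. The numbering follows the equation references in the list above.

```latex
\begin{align}
\text{Accuracy}  &= \frac{TP + TN}{TP + TN + FP + FN} \tag{1} \\
\text{Precision} &= \frac{TP}{TP + FP} \tag{2} \\
\text{Recall}    &= \frac{TP}{TP + FN} \tag{3} \\
\text{F1 Score}  &= 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}} \tag{4}
\end{align}
```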

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Gauthier, S.; Rosa-Neto, P.; Morais, J.; Webster, C. World Alzheimer Report 2021: Journey through the Diagnosis of Dementia; Alzheimer’s Disease International: London, UK, 2021.

- Gulisano, W.; Maugeri, D.; Baltrons, M.A.; Fà, M.; Amato, A.; Palmeri, A.; D’Adamio, L.; Grassi, C.; Devanand, D.P.; Honig, L.S.; et al. Role of Amyloid-β and Tau Proteins in Alzheimer’s Disease: Confuting the Amyloid Cascade. J. Alzheimers Dis. 2018, 64, S611–S631.

- Prince, M.; Wimo, A.; Guerchet, M.; Ali, G.-C.; Wu, Y.-T.; Prina, M. World Alzheimer Report 2015, the Global Impact of Dementia: An Analysis of Prevalence, Incidence, Cost and Trends; Alzheimer’s Disease International: London, UK, 2015; p. 87.

- Saha, S.; Gerdtham, U.-G.; Toresson, H.; Minthon, L.; Jarl, J. Economic Evaluation of Management of Dementia Patients—A Systematic Literature Review; Lund University: Lund, Sweden, 2018.

- Prince, M.; Guerchet, M.; Prina, M. World Alzheimer Report 2013; Alzheimer’s Disease International: London, UK, 2013.

- Kuppusamy, P.; Bharathi, V. Human abnormal behavior detection using CNNs in crowded and uncrowded surveillance—A survey. Meas. Sens. 2022, 24, 100510.

- University of Central Florida. UCF-Crime Dataset. Available online: https://www.v7labs.com/open-datasets/ucf-crime-dataset (accessed on 25 March 2023).

- UCSD Anomaly Detection Dataset. Available online: http://www.svcl.ucsd.edu/projects/anomaly/dataset.html (accessed on 25 March 2023).

- Lawrence, S.; Giles, C.L.; Tsoi, A.C.; Back, A.D. Face recognition: A convolutional neural-network approach. IEEE Trans. Neural Netw. 1997, 8, 98–113.

- Niu, Z.; Guo, W.; Wang, Y.; Kong, Z.; Huang, L.; Xue, J. A Novel Anomaly Detection Approach based on Ensemble Semi-Supervised Active Learning (ADESSA). Comput. Secur. 2023, 129, 103190.

- Canadian Institute for Cybersecurity. NSL-KDD Dataset. Available online: http://www.unb.ca/cic/datasets/nsl.html (accessed on 25 March 2023).

- Tavallaee, M.; Bagheri, E.; Lu, W.; Ghorbani, A.A. A detailed analysis of the KDD CUP 99 data set. In Proceedings of the IEEE Symposium on Computational Intelligence for Security and Defense Applications (CISDA), Ottawa, ON, Canada, 8–10 July 2009; pp. 1–6.

- Wang, J.; Xia, L. Abnormal behavior detection in videos using deep learning. Clust. Comput. 2019, 22, 9229–9239.

- Graves, A. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

- Han, R.; Kim, K.; Choi, B.; Jeong, Y. A Study on Detection of Malicious Behavior Based on Host Process Data Using Machine Learning. Appl. Sci. 2023, 13, 4097.

- He, H.; Bai, Y.; Garcia, E.A.; Li, S. ADASYN: Adaptive synthetic sampling approach for imbalanced learning. In Proceedings of the 2008 IEEE International Joint Conference on Neural Networks (IEEE World Congress on Computational Intelligence), Hong Kong, China, 1–8 June 2008; pp. 1322–1328.

- Chawla, N.V.; Bowyer, K.W.; Hall, L.O.; Kegelmeyer, W.P. SMOTE: Synthetic minority over-sampling technique. J. Artif. Intell. Res. 2002, 16, 321–357.

- Peterson, L.E. K-nearest neighbor. Scholarpedia 2009, 4, 1883.

- Murphy, K.P. Naive bayes classifiers. Univ. Br. Columbia 2006, 18, 1–8.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Gaddam, A.; Mukhopadhyay, S.C.; Gupta, G.S. Elder care based on cognitive sensor network. IEEE Sens. J. 2011, 11, 574–581.

- Gong, D.; Liu, L.; Le, V.; Saha, B.; Mansour, M.R.; Venkatesh, S.; van den Hengel, A. Memorizing normality to detect anomaly: Memory-augmented deep autoencoder for unsupervised anomaly detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1705–1714.

- Huang, C.; Wu, Z.; Wen, J.; Xu, Y.; Jiang, Q.; Wang, Y. Abnormal event detection using deep contrastive learning for intelligent video surveillance system. IEEE Trans. Ind. Inform. 2021, 18, 5171–5179.

- Mahadevan, V.; Li, W.X.; Bhalodia, V.; Vasconcelos, N. Anomaly Detection in Crowded Scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 1975–1981.

- Lu, C.; Shi, J.; Jia, J. Abnormal Event Detection at 150 FPS in Matlab. In Proceedings of the 2013 IEEE International Conference on Computer Vision (ICCV), Sydney, NSW, Australia, 1–8 December 2013.

- Zhang, Y.; Zhou, D.; Chen, S.; Gao, S.; Yi, M. Single-Image Crowd Counting via Multi-Column Convolutional Neural Network. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 27–30 June 2016; pp. 589–597.

- Nawaratne, R.; Alahakoon, D.; De Silva, D.; Yu, X. Spatiotemporal Anomaly Detection Using Deep Learning for Real-Time Video Surveillance. IEEE Trans. Ind. Inform. 2020, 16, 393–402.

- Luo, W.; Liu, W.; Lian, D.; Tang, J.; Duan, L.; Peng, X.; Gao, S. Video anomaly detection with sparse coding inspired deep neural networks. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 1070–1084.

- Chen, G.; Liu, P.; Liu, Z.; Tang, H.; Hong, L.; Dong, J.; Conradt, J.; Knoll, A. Neuroaed: Towards efficient abnormal event detection in visual surveillance with neuromorphic vision sensor. IEEE Trans. Inf. Forensics Secur. 2020, 16, 923–936.

- Wright, J.; Ma, Y.; Mairal, J.; Sapiro, G.; Huang, T.S.; Yan, S. Sparse Representation for Computer Vision and Pattern Recognition. Proc. IEEE 2010, 98, 1031–1044.

- Aharon, M.; Elad, M.; Bruckstein, A. K-SVD: An algorithm for designing overcomplete dictionaries for sparse representation. IEEE Trans. Signal Process. 2006, 54, 4311–4322.

- Li, G.; Jung, J.J. Deep learning for anomaly detection in multivariate time series: Approaches, applications, and challenges. Inf. Fusion 2022, 91, 93–102.

- DeMaris, A. A tutorial in logistic regression. J. Marriage Fam. 1995, 57, 956–968.

- Gladwin, C.H. Ethnographic Decision Tree Modeling; Sage Publication: Thousand Oaks, CA, USA, 1989.

- Wang, L. Support Vector Machines: Theory and Applications; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2005; Volume 177.

- Gardner, M.W.; Dorling, S. Artificial neural networks (the multilayer perceptron)—A review of applications in the atmospheric sciences. Atmos. Environ. 1998, 32, 2627–2636.

- Moore, J. Acrylonitrile-butadiene-styrene (ABS)—A review. Composites 1973, 4, 118–130.

- Van der Maaten, L.; Hinton, G. Visualizing data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

- McInnes, L.; Healy, J.; Melville, J. Umap: Uniform manifold approximation and projection for dimension reduction. arXiv 2018, arXiv:1802.03426.

| Study (Year) | Dataset | Model | Accuracy |
|---|---|---|---|
| Kuppusamy, P. et al. [6] (2022) | UCF-Crime [7], UCSD [8] | CNN [9] | 99.5% |
| Niu, Z. et al. [10] (2023) | NSL-KDD [11], KDD Cup 99 [12] | ADESSA [10] | 99.2% |
| Wang, J. et al. [13] (2019) | Human behavior video dataset [13] | CNN [9], LSTM [14] | 92.5% |
| Han, R. et al. [15] (2023) | Host behavior data [15] | KNN [18], NB [19], RF [20], AE [21], MemAE [22] | 98.3% |
| Huang, C. et al. [23] (2021) | UCSD [24], CUHK Avenue [25], ShanghaiTech [26] | MemAE [22], TAC-Net [23], ISTL [27], sRNN-AE [28] | 98.1% |
| Chen, G. et al. [29] (2020) | NeuroAED [29] | EMST [29], SR [30], K-SVD [31] | 95.8% |
| Li, G. et al. [32] (2022) | Time series dataset [32] | LSTM [14], AE [21] | 91.7% |

| Sensor Number | Location | Furniture or Appliances |
|---|---|---|
| 1 | Bedroom | Bedroom door |
| 2 | Restroom—Man | Restroom door 2 |
| 3 | Program room | Refrigerator door |
| 4 | Bedroom | Bedpan |
| 5 | Program room | Table chair |
| 6 | Nursing room | Medicine box |
| 7 | Bedroom | Locker drawer |
| 8 | Main entrance | Main entrance |
| 9 | Bedroom | Personal bucket |
| 10 | Restroom | Main restroom door |
| 11 | Restroom—Woman | Restroom door 1 |

| Abnormal Behaviors | Description |
|---|---|
| Wandering | Walking aimlessly and getting lost, especially in familiar surroundings |
| Falls | Losing balance and falling, often leading to injury |
| Sleep disturbances | Difficulty falling asleep or staying asleep |
| Delusions/Hallucinations | Seeing, hearing, or believing things that are not real |
| Agitation and aggression | Verbal or physical outbursts, including hitting, biting, or kicking, often in response to frustration or confusion |
| Repetitive questioning/behaviors | Asking the same question or doing the same action repeatedly |
| Eating disturbances (overeating, pica, anorexia) | Eating too much or too little, or eating non-food items, such as paper or dirt |
| Medication mismanagement | Taking too much medication or taking medication improperly |
| Hoarding/Inappropriate behaviors | Collecting or hoarding items or engaging in behavior that is socially inappropriate or unhygienic |

| Date | Time | Sensor 1 | Sensor 2 | Sensor 3 | Sensor 4 | Sensor 5 | Sensor 6 | Sensor 7 | Sensor 8 | Sensor 9 | Sensor 10 | Sensor 11 | Label |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 15 November 2022 | 07:10 | 0 | 0 | 0 | 0 | 0 | 0 | 3 | 0 | 37 | 0 | 0 | 1 |
| 15 November 2022 | 07:11 | 0 | 0 | 0 | 0 | 0 | 10 | 7 | 0 | 5 | 0 | 0 | 0 |
| 15 November 2022 | 07:12 | 0 | 0 | 0 | 0 | 0 | 0 | 17 | 0 | 9 | 0 | 0 | 0 |
| 15 November 2022 | 07:14 | 0 | 0 | 6 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Datetime | Sensor 1 | … | Sensor 11 | Label | TA | MaxA | MeanA | SA |
|---|---|---|---|---|---|---|---|---|
| 15 November 2022 07:10 | 0 | … | 0 | 1 | 21.0 | 15.0 | 3.818 | 7.723 |
| 15 November 2022 07:11 | 0 | … | 0 | 0 | 24.0 | 24.0 | 4.364 | 10.854 |
| 15 November 2022 07:12 | 0 | … | 0 | 0 | 40.0 | 37.0 | 7.363 | 17.023 |
| 15 November 2022 07:15 | 0 | … | 0 | 0 | 22.0 | 10.0 | 4.000 | 6.842 |
| 15 November 2022 07:16 | 0 | … | 0 | 0 | 26.0 | 17.0 | 4.727 | 9.234 |

| Model | Parameters |
|---|---|
| KNN | n_neighbors: 5; weights: 'uniform'; algorithm: 'auto' |
| RF | n_estimators: 100; max_depth: None; min_samples_split: 2; min_samples_leaf: 1; max_features: 'auto'; bootstrap: True |
| LR | C: 1.0; penalty: 'l2'; solver: 'lbfgs'; max_iter: 100 |
| DT | criterion: 'gini'; splitter: 'best'; max_depth: None; min_samples_split: 2; min_samples_leaf: 1 |
| SVM | C: 1.0; kernel: 'rbf'; gamma: 'scale'; decision_function_shape: 'ovr'; max_iter: -1 |
| MLP | hidden_layer_sizes: (100), i.e., one hidden layer of 100 neurons; activation: 'relu'; solver: 'adam'; alpha: 0.0001; batch_size: 'auto'; learning_rate: 'constant'; max_iter: 200; random_state: 42 |
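The settings in the table can be collected into a single configuration, sketched below. The parameter names match those of scikit-learn's classifiers, so the hypothetical `build_models` helper assumes that library; the text here does not state which implementation was used. One value is changed on purpose: `max_features` is written as `'sqrt'`, because recent scikit-learn releases no longer accept `'auto'`, which older releases treated as `'sqrt'` for classifiers.

```python
# Hyperparameters copied from the table above (see the note on max_features).
MODEL_PARAMS = {
    "KNN": {"n_neighbors": 5, "weights": "uniform", "algorithm": "auto"},
    "RF": {"n_estimators": 100, "max_depth": None, "min_samples_split": 2,
           "min_samples_leaf": 1, "max_features": "sqrt", "bootstrap": True},
    "LR": {"C": 1.0, "penalty": "l2", "solver": "lbfgs", "max_iter": 100},
    "DT": {"criterion": "gini", "splitter": "best", "max_depth": None,
           "min_samples_split": 2, "min_samples_leaf": 1},
    "SVM": {"C": 1.0, "kernel": "rbf", "gamma": "scale",
            "decision_function_shape": "ovr", "max_iter": -1},
    "MLP": {"hidden_layer_sizes": (100,), "activation": "relu", "solver": "adam",
            "alpha": 0.0001, "batch_size": "auto", "learning_rate": "constant",
            "max_iter": 200, "random_state": 42},
}

def build_models(params=MODEL_PARAMS):
    """Instantiate one classifier per table row (assumes scikit-learn)."""
    # Imported lazily so the configuration can be inspected without scikit-learn.
    from sklearn.ensemble import RandomForestClassifier
    from sklearn.linear_model import LogisticRegression
    from sklearn.neighbors import KNeighborsClassifier
    from sklearn.neural_network import MLPClassifier
    from sklearn.svm import SVC
    from sklearn.tree import DecisionTreeClassifier

    classes = {
        "KNN": KNeighborsClassifier,
        "RF": RandomForestClassifier,
        "LR": LogisticRegression,
        "DT": DecisionTreeClassifier,
        "SVM": SVC,
        "MLP": MLPClassifier,
    }
    return {name: classes[name](**kwargs) for name, kwargs in params.items()}
```

Calling `build_models()` returns a dictionary of unfitted estimators keyed by the model abbreviations used in the table; each can then be trained with `fit(X, y)`.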

| | Actual Positive | Actual Negative |
|---|---|---|
| Predicted Positive | TP | FP |
| Predicted Negative | FN | TN |
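As a worked illustration of how the four confusion-matrix cells turn into evaluation metrics, the sketch below computes accuracy, precision, recall, and F1 score from raw counts. The counts are hypothetical, and returning 0.0 when a denominator is zero is a convention chosen for this example.

```python
def binary_metrics(tp, fp, fn, tn):
    """Compute the standard metrics from the four confusion-matrix counts."""
    total = tp + fp + fn + tn
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {
        "accuracy": (tp + tn) / total,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }

# Hypothetical counts: 100 samples, of which 12 are actually positive.
m = binary_metrics(tp=8, fp=2, fn=4, tn=86)
print({name: round(value, 4) for name, value in m.items()})
```

With these counts the accuracy is 0.94 while the recall is only about 0.67, which shows why accuracy alone is not sufficient when positive samples are rare.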

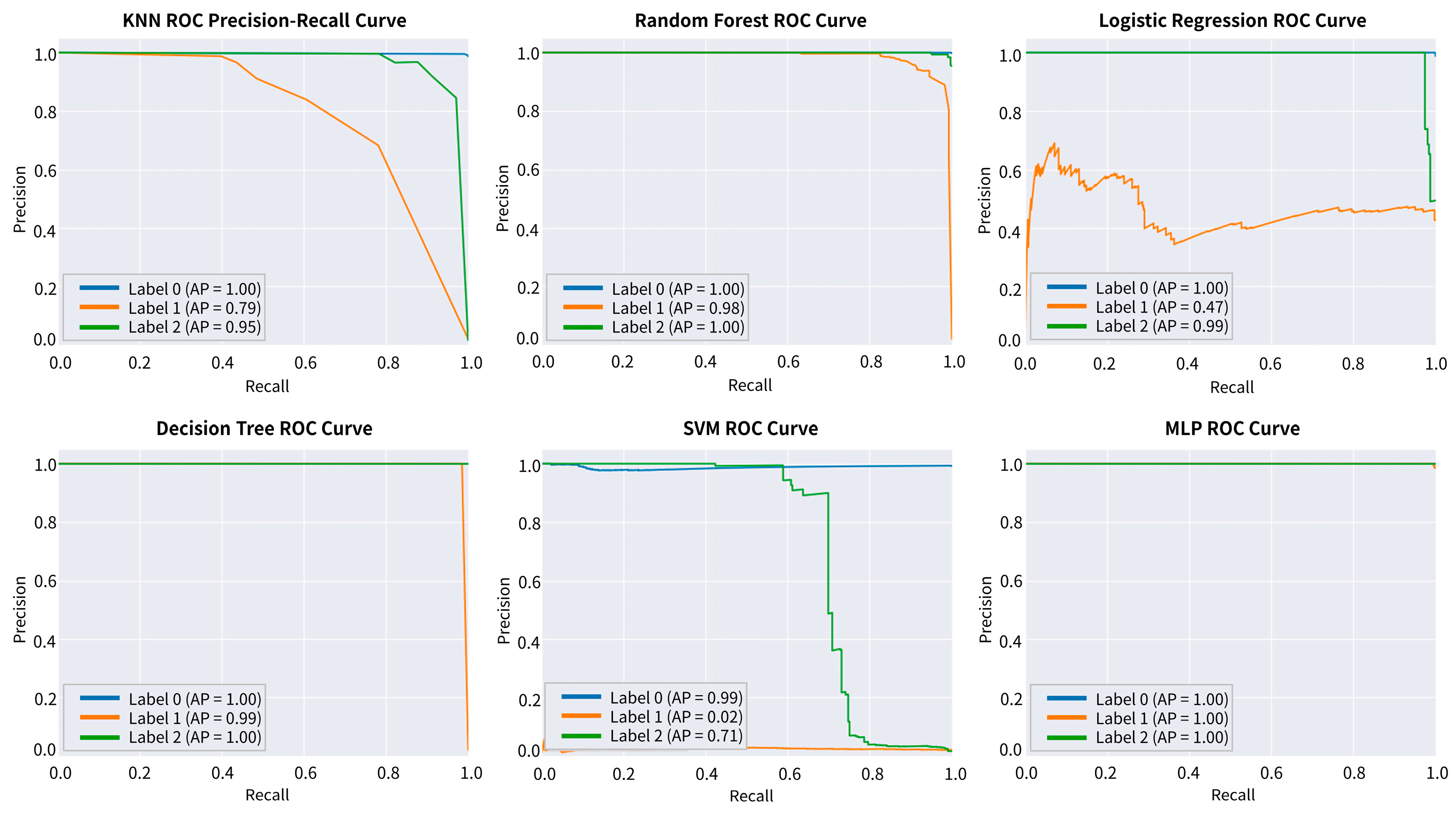

| Model | Accuracy | Precision (0) | Precision (1) | Precision (2) | Recall (0) | Recall (1) | Recall (2) | F1 Score (0) | F1 Score (1) | F1 Score (2) |
|---|---|---|---|---|---|---|---|---|---|---|
| LR | 0.9794 | 0.98 | 0.48 | 1 | 1 | 0.08 | 0.89 | 0.99 | 0.13 | 0.94 |
| DT | 0.9997 | 1 | 1 | 1 | 1 | 0.99 | 1 | 1 | 0.99 | 1 |
| RF | 0.9959 | 1 | 1 | 0.99 | 1 | 0.81 | 0.99 | 1 | 0.89 | 0.99 |
| SVM | 0.9661 | 0.97 | 0 | 1 | 1 | 0 | 0.14 | 0.98 | 0 | 0.24 |
| KNN | 0.9871 | 0.99 | 0.91 | 0.97 | 1 | 0.49 | 0.88 | 0.99 | 0.64 | 0.92 |
| MLP | 0.9999 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
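The per-class columns in the table above can be obtained by treating each class in turn as the positive class (one-vs-rest). The following sketch shows that calculation on hypothetical labels; the function name `per_class_report` and the toy label lists are assumptions, not data from the experiment.

```python
from collections import Counter

def per_class_report(y_true, y_pred):
    """Return overall accuracy and {class: (precision, recall, f1)}."""
    classes = sorted(set(y_true) | set(y_pred))
    pairs = Counter(zip(y_true, y_pred))  # (actual, predicted) -> count
    report = {}
    for c in classes:
        tp = pairs[(c, c)]
        fp = sum(n for (t, p), n in pairs.items() if p == c and t != c)
        fn = sum(n for (t, p), n in pairs.items() if t == c and p != c)
        precision = tp / (tp + fp) if (tp + fp) else 0.0
        recall = tp / (tp + fn) if (tp + fn) else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        report[c] = (precision, recall, f1)
    accuracy = sum(pairs[(c, c)] for c in classes) / len(y_true)
    return accuracy, report

# Hypothetical labels for three classes; class 0 is the majority class.
y_true = [0, 0, 0, 0, 1, 1, 2, 2]
y_pred = [0, 0, 0, 0, 0, 1, 2, 2]

accuracy, report = per_class_report(y_true, y_pred)
print(accuracy)
for label, (precision, recall, f1) in report.items():
    print(label, round(precision, 2), round(recall, 2), round(f1, 2))
```

In this toy case a single misclassified minority sample halves the recall of class 1 while overall accuracy stays at 0.875, the same pattern visible in the table for models whose accuracy is high but whose class 1 recall is low.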

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kim, K.; Jang, J.; Park, H.; Jeong, J.; Shin, D.; Shin, D. Detecting Abnormal Behaviors in Dementia Patients Using Lifelog Data: A Machine Learning Approach. Information 2023, 14, 433. https://doi.org/10.3390/info14080433
