1. Introduction

Assessment plays an important role in the learning process [1]. It is the process of evaluating test takers’ knowledge, understanding, and skill achievement [2]. Assessment is used to measure students’ abilities for several purposes: selecting students for admission, measuring how well they understand material after it has been taught, and determining graduation. Assessment results also serve as a reference for determining student learning flows, for example by selecting material that matches students’ abilities [3] and determining the next material they need to study [4]. In addition, student assessments can streamline the allocation of resources needed to increase student learning competencies [5].

As test makers, we often do not know whether students’ answers are valid, that is, whether students are taking the test seriously or cheating. As students, we also sometimes come across very difficult questions and answer them anyway in the hope of obtaining the best grades, even though we do not know the answers. This behaviour is called rapid-guessing behaviour. According to ref. [6], rapid-guessing behaviour occurs when test takers answer questions quicker than usual in a speeded test. Assessment results can therefore be invalid because students cheated or rapidly guessed the answer to a question [6]. Ref. [7] states that, to obtain ideal assessment results, it is necessary to differentiate responses based on student behaviour: whether students answer by guessing (rapid-guessing behaviour) or answer seriously (solution behaviour). Rapid-guessing behaviour causes biased scores and unreliable tests, so such responses should be disregarded.

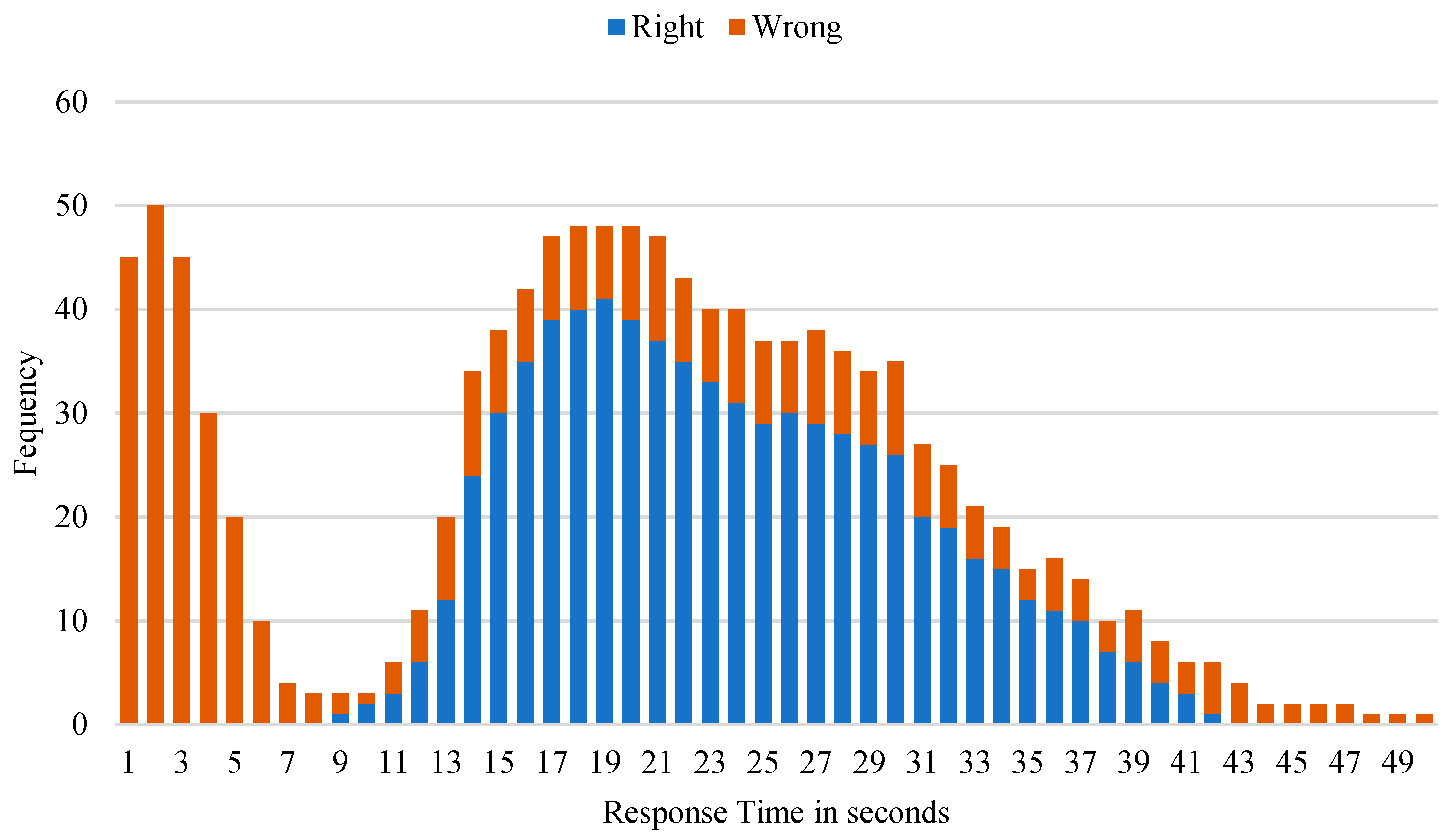

Schnipke was the first to identify rapid-guessing behaviour, when mapping the response times of the Graduate Record Examination Computer-Based Test (GRE-CBT). In her research, each question was mapped to its response time distribution, as shown in Figure 1. Response time (RT) is the time a student takes to read and answer a question. In practice, to distinguish rapid-guessing behaviour from solution behaviour, we need to determine a threshold time.

Several studies have investigated how to determine the threshold time. Schnipke used visual inspection to determine the threshold and distinguish the two behaviours. A similar approach was taken by DeMars [8], Setzer et al. [9], and Pastor et al. [10]. However, detecting rapid-guessing behaviour with this approach becomes more difficult when the RT distributions share the same peak, that is, when the response times of students who guessed and of students who answered seriously overlap. Other researchers used the k-second method to determine the RT threshold, in which a fixed threshold value is generally set between three and five seconds [7]. K-second is the simplest threshold method. It does not require information about each item’s surface features or response time distribution and is particularly useful with large item pools. Its one-size-fits-all nature, however, will often result in variations in misclassification across items [7,8,9,10].
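The fixed-threshold idea behind the k-second method can be illustrated with a minimal sketch. The function name, the label strings, and the choice of a strict "less than" comparison are assumptions made for illustration; the cited studies may define the boundary case differently.

```python
from typing import List


def classify_k_second(response_times: List[float], k: float = 3.0) -> List[str]:
    """Label each response time using one fixed threshold k (in seconds).

    Assumption: a response strictly faster than k counts as rapid guessing.
    """
    return ["rapid_guess" if rt < k else "solution" for rt in response_times]


# Illustrative response times in seconds (made-up values, not study data).
labels = classify_k_second([1.2, 2.9, 3.0, 14.5, 40.0], k=3.0)
print(labels)
```

Because the same `k` is applied to every item, a long reading passage and a one-line question are judged by the same cut-off, which is where the item-to-item variation in misclassification comes from.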

Other researchers use the surface features method to distinguish between the two behaviours. This method determines the RT threshold from several item features. Silm et al. [11] considered the test subject and item length in determining the RT threshold. Wise and Kong [12] considered the number of characters and whether the item contained tables or images. However, neither study explicitly detailed the results of evaluating students’ rapid-guessing behaviour. In contrast to methods that use time thresholds, Lin [13] uses the student’s ability score (l) and the item difficulty index (i), based on the Rasch model, to determine guessing behaviour. Lin argues that a large difference between the student’s ability and the item difficulty index indicates rapid-guessing behaviour.
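To make the ability-versus-difficulty idea concrete, the sketch below uses the standard Rasch probability of a correct response and flags a response when the item is much harder than the student's ability. The decision rule, the direction of the difference, and the `gap` value are assumptions for illustration only; the text above does not state Lin's exact criterion.

```python
import math


def rasch_p_correct(ability: float, difficulty: float) -> float:
    """Rasch model: probability that a student answers an item correctly."""
    return 1.0 / (1.0 + math.exp(-(ability - difficulty)))


def likely_guess(ability: float, difficulty: float, gap: float = 2.0) -> bool:
    """Hypothetical rule: flag when difficulty exceeds ability by more than `gap` logits."""
    return (difficulty - ability) > gap


# Illustrative values on the logit scale (made-up, not study data).
print(rasch_p_correct(ability=0.0, difficulty=0.0))
print(likely_guess(ability=-1.5, difficulty=1.0))
print(likely_guess(ability=0.5, difficulty=1.0))
```

Unlike the threshold methods, this rule needs no response time at all, only estimates of ability and difficulty.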

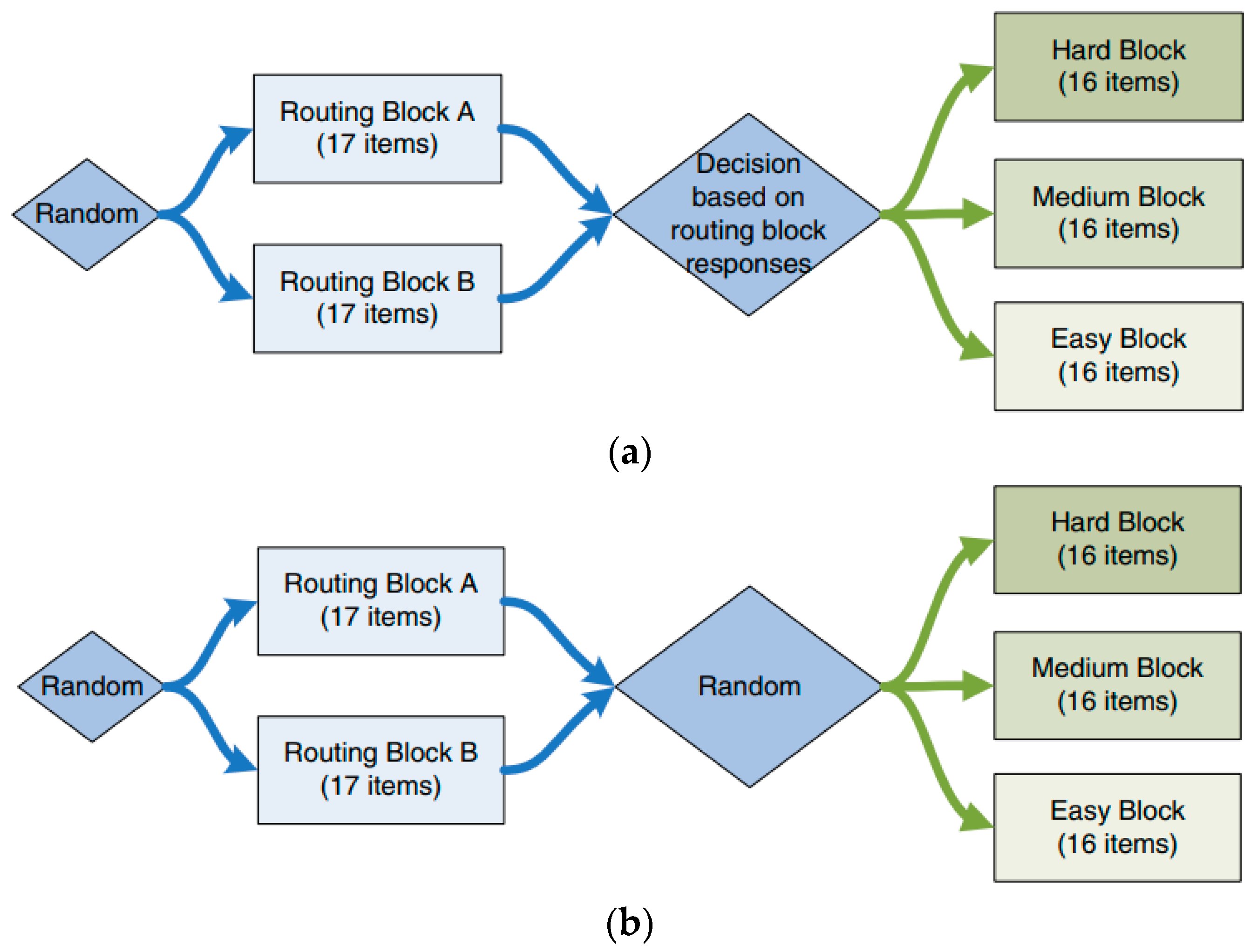

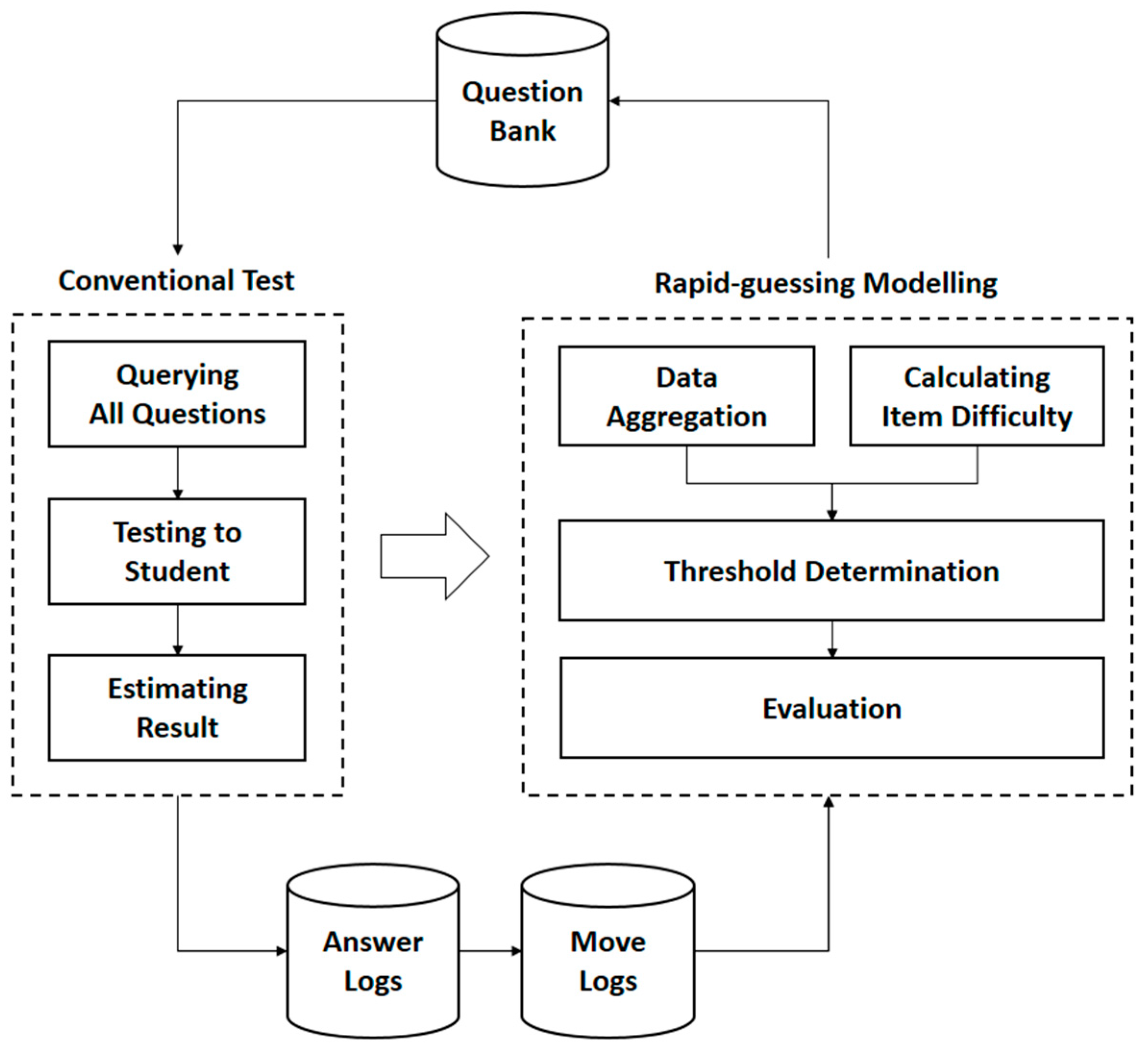

This study proposes a correction to how time thresholds are determined when identifying rapid-guessing behaviour in assessment. Our argument is that determining the threshold is not simply a matter of choosing the right number; it also requires attention to how difficult the question is and to how the data are processed. We tried several data aggregation techniques, such as sum, average, and maximum. We adopted the concept of k-seconds and combined it with features of item response theory (IRT) to create a new approach for determining the time threshold for each item category. The questions were divided into three categories based on their difficulty according to IRT features. Data were obtained from online exams held during lectures on campus. Response time was measured as how long students worked on a question (from opening the question to answering it). The expected benefit of this research is that question makers can know which answers students gave seriously and which they gave fraudulently, so that the scores can be differentiated. This research is part of our larger research on computer adaptive assessment.
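As a rough illustration of a threshold per item category, the sketch below looks up a different cut-off depending on the difficulty label of the item. The category names and the threshold values are hypothetical placeholders chosen from the three-to-five-second range mentioned earlier; they are not the thresholds derived in this study.

```python
from typing import Dict

# Hypothetical per-category thresholds in seconds (placeholders, not study results).
CATEGORY_THRESHOLDS: Dict[str, float] = {"easy": 3.0, "medium": 4.0, "hard": 5.0}


def is_rapid_guess(response_time: float, category: str,
                   thresholds: Dict[str, float] = CATEGORY_THRESHOLDS) -> bool:
    """Flag a response as rapid guessing using the threshold of its item category."""
    return response_time < thresholds[category]


# The same 3.5-second response is judged differently depending on item difficulty.
print(is_rapid_guess(3.5, "easy"))
print(is_rapid_guess(3.5, "hard"))
```

The design choice here is simply that the cut-off becomes a function of item difficulty rather than one constant for the whole test.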

4. Results and Discussion

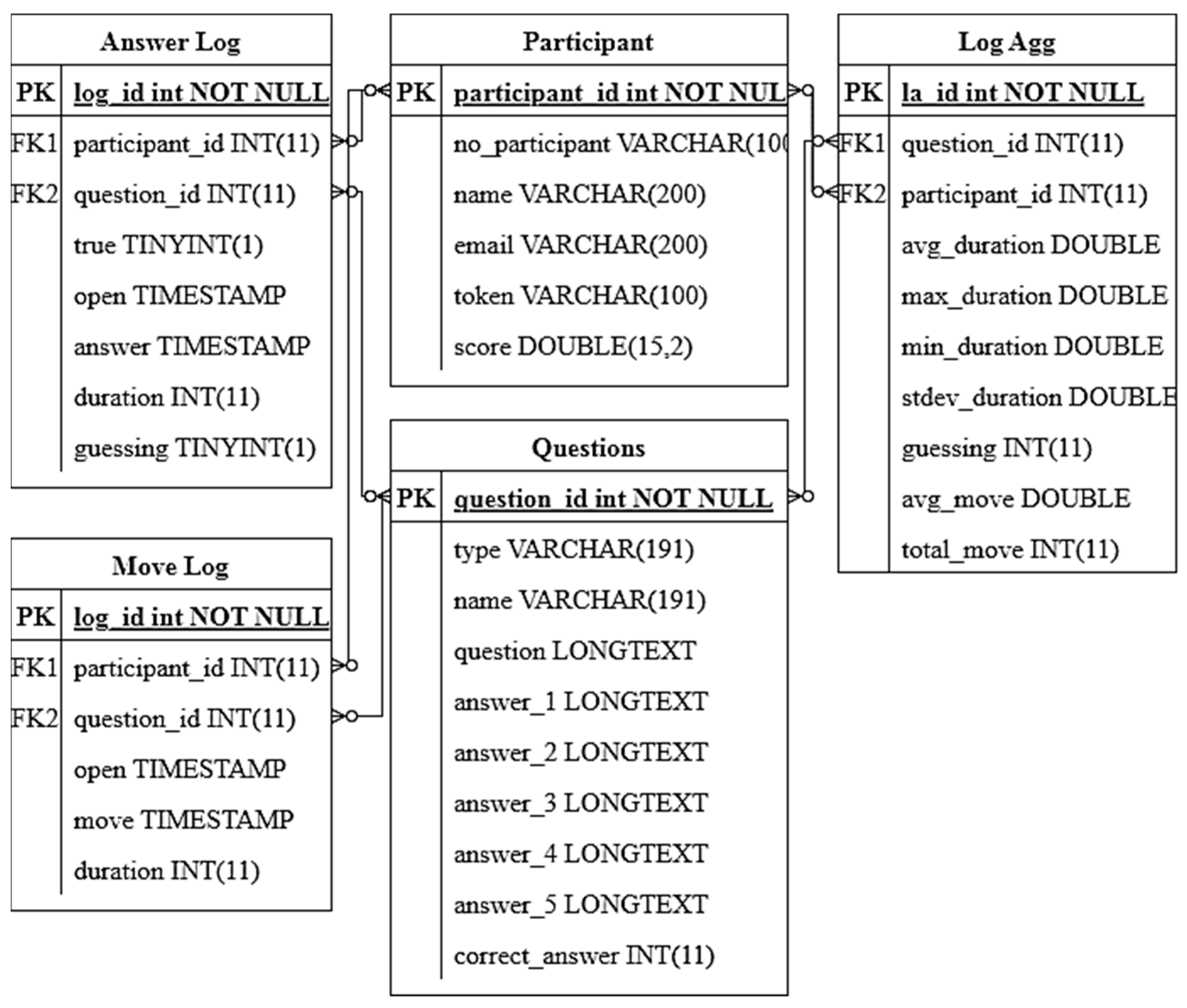

We conducted experiments on students during lecture hours. The main steps were data aggregation, item difficulty calculation, threshold determination, and evaluation. The first step is data aggregation. We collected data from the Answer Log and Move Log tables and aggregated them in an aggregation table according to the design. The parameters we use here are only time-related parameters: avg_duration, max_duration, min_duration, stdev_duration, avg_move, and total_move. Considering the processing time, we chose three main parameters to compare, namely avg_duration, max_duration, and total_move.
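The aggregation step can be sketched as follows for the duration-based parameters. The row layout `(student_id, item_id, duration)` and the choice to aggregate per item are assumptions, since the table design is not reproduced in this section; the move-based parameters would presumably be computed in the same way from the Move Log.

```python
from collections import defaultdict
from statistics import mean, stdev
from typing import Dict, Iterable, List, Tuple


def aggregate_durations(
    answer_log: Iterable[Tuple[str, str, float]]
) -> Dict[str, Dict[str, float]]:
    """Aggregate answer durations (seconds) per item from (student, item, duration) rows."""
    by_item: Dict[str, List[float]] = defaultdict(list)
    for _student_id, item_id, duration in answer_log:
        by_item[item_id].append(duration)

    table: Dict[str, Dict[str, float]] = {}
    for item_id, durations in by_item.items():
        table[item_id] = {
            "avg_duration": mean(durations),
            "max_duration": max(durations),
            "min_duration": min(durations),
            # The sample standard deviation needs at least two observations.
            "stdev_duration": stdev(durations) if len(durations) > 1 else 0.0,
        }
    return table


# Made-up rows for illustration only.
log = [("s1", "q1", 10.0), ("s2", "q1", 20.0), ("s3", "q1", 30.0), ("s1", "q2", 5.0)]
table = aggregate_durations(log)
print(table["q1"])
```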

The second step is to calculate item_difficulty. We use the equation from IRT to calculate the item difficulty, and then assign labels to it using the inference method of fuzzy logic.

After the students completed the conventional tests, the answer log was used in the RT-based guessing model. The guessing model proposed in this study uses only one parameter, namely time. Further analysis of the answer log data indicated several different behaviours in how students responded to the presented items. These behaviours occurred because of the test duration (90 min), which is long for a multiple-choice test of 40 items. First, several students used the remaining time to reconsider doubtful responses after they had responded to all the items. Second, several students spent a lot of time reading items that they deemed difficult and then skipped them without responding; after responding to the other items, they came back to the skipped items and gave a quick response.

Because of these behaviours, we investigated several parameters to define RT in the proposed guessing model. The first parameter, named duration, was the time a student spent initially reading an item and giving a response. The second, named total move, was the accumulated time spent on an item, including time spent after giving a response. The last, named max time, was derived from the duration parameter: it was the longest time any student spent initially reading an item and giving a response. We compared the performance of the guessing model with each of these parameters.
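The item difficulty step mentioned at the start of this section can be sketched as below. This assumes, based on the notation list, that difficulty is the share of submitted responses that were incorrect; the exact equation is not reproduced in this section. The crisp cut-offs are a simplified stand-in for the fuzzy inference used for labelling, and both cut-off values are hypothetical.

```python
def item_difficulty(n_submitted: int, n_incorrect: int) -> float:
    """Assumed difficulty index: proportion of submitted responses that were incorrect."""
    if n_submitted <= 0:
        raise ValueError("n_submitted must be positive")
    return n_incorrect / n_submitted


def difficulty_label(difficulty: float, easy_max: float = 0.3, hard_min: float = 0.7) -> str:
    """Crisp three-way labelling (a stand-in for fuzzy inference; cut-offs are hypothetical)."""
    if difficulty < easy_max:
        return "easy"
    if difficulty >= hard_min:
        return "hard"
    return "medium"


# Made-up counts for a class of 40 students.
for wrong in (10, 20, 30):
    d = item_difficulty(40, wrong)
    print(wrong, d, difficulty_label(d))
```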

Table 3 shows the evaluation matrix of threshold determination for the SPM course and the SE course. At a glance, the accuracy of the SE course is higher than that of the SPM course. We attribute this difference to the unequal number of students taking each exam: there are more students in the SPM course than in the SE course. This affects the accuracy of the model, because the more samples there are, the greater the potential for outlier behaviour. Therefore, outlier detection [20] is necessary to reduce bias.

Each table displays the evaluation matrix of our proposed method compared with other threshold determination methods, across the various aggregation parameters. In general, the guessing model that used the modified k-seconds method to determine the RT threshold outperformed the other models in terms of accuracy. In the SPM course, using the avg_duration aggregation parameter, the accuracy was 68%, outperforming the other methods. Meanwhile, in the SE course, the accuracy was 85%, again outperforming the other methods. Further analysis of the modified k-seconds method revealed that the model performed better with the total move and max time parameters. With the total move parameter, the model achieved a higher accuracy. However, this model obtained a recall value of 0, which indicates that it was unable to detect rapid-guessing behaviour. Because recall was 0, the precision and F1 score could not be calculated.
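The combination of high accuracy, zero recall, and undefined precision and F1 can be reproduced with a small sketch. It assumes binary labels where 1 means rapid guessing and 0 means solution behaviour, and it uses made-up labels rather than the study data. In this toy case the model never predicts class 1, so the precision denominator is zero.

```python
from typing import Dict, List, Optional


def evaluate(y_true: List[int], y_pred: List[int]) -> Dict[str, Optional[float]]:
    """Compute accuracy, precision, recall, and F1; undefined metrics are returned as None."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)

    accuracy = (tp + tn) / len(y_true)
    recall = tp / (tp + fn) if (tp + fn) else None
    precision = tp / (tp + fp) if (tp + fp) else None
    if precision is None or recall is None or (precision + recall) == 0:
        f1 = None
    else:
        f1 = 2 * precision * recall / (precision + recall)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Imbalanced toy data: one guesser among ten responses, and a model that flags nobody.
y_true = [0] * 9 + [1]
y_pred = [0] * 10
scores = evaluate(y_true, y_pred)
print(scores)
```

Here accuracy is 0.9 even though the single rapid-guessing response is missed, which is why accuracy alone can be misleading when one class is rare.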

Furthermore, the evaluation of the models based on the F1 score metric revealed that the guessing model that used the surface features method along with the guessing model that used the normative method to determine the RT threshold achieved the best performance. Further analysis of these two models revealed that the performance of both models was more stable with the use of the duration parameter.

Our experiments show that our proposed method, modified k-second, achieves higher accuracy than the other methods in both courses. In addition, this study shows that accuracy differs with the aggregation technique. Aggregation using total_move yields higher accuracy than using the avg_duration or max_duration parameters. Therefore, further research should try other aggregation parameters, one of which is sum_duration. However, in terms of F1 score, the best method is surface features. Although modified k-second recorded the highest accuracy, it has a very low recall value because very few students guessed (data imbalance). This biases the model, so that it cannot properly accommodate the class with little data [21]. In further research, techniques for handling data imbalance should be applied, such as modifying preprocessing techniques, algorithmic approaches, cost sensitivity, and ensemble learning [21].

Author Contributions

Conceptualization, U.L.Y. and A.N.P.B.; methodology, U.L.Y. and A.N.P.B.; software, A.N.P.B.; validation, A.N.P.B. and M.A.; formal analysis, M.A. and E.P.; investigation, A.N.P.B.; resources, A.N.P.B.; data curation, M.A. and E.P.; writing—original draft preparation, M.A.; writing—review and editing, E.P.; visualization, M.A.; supervision, U.L.Y. and E.P.; project administration, U.L.Y.; funding acquisition, U.L.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Institut Teknologi Sepuluh Nopember (ITS) for WCP-Like Grant Batch 2, grant number 1855/IT2/T/HK.00.01/2022.

Data Availability Statement

The data that support the findings of this study are available from the corresponding author, M.A., upon reasonable request.

Acknowledgments

This work is part of the “i-assessment project”, an adaptive testing-based test application.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

| Notation and Acronym | Description |
| --- | --- |
| RA | Response accuracy |
| RT | Response time |
| RTE | Response time effort |
| IRT | Item response theory |
| SE | Software engineering |
| SPM | Software project management |
|  | Item difficulty |
|  | The number of examinees that submitted a response to item i |
|  | The number of examinees that were unable to submit (false answer) a response to item i |
| l | Student’s ability |
| i | Rasch model |

References

1. Scottish Qualifications Authority. Guide to Assessment; Scottish Qualifications Authority: Glasgow, UK, 2017; pp. 3–9.
2. Kennedy, K.J.; Lee, J.C.K. The changing role of schools in Asian societies: Schools for the knowledge society. In The Changing Role of Schools in Asian Societies: Schools for the Knowledge Society; Routledge: Oxfordshire, UK, 2007; pp. 1–228.
3. Hwang, G.J.; Sung, H.Y.; Chang, S.C.; Huang, X.C. A fuzzy expert system-based adaptive learning approach to improving students’ learning performances by considering affective and cognitive factors. Comput. Educ. Artif. Intell. 2020, 1, 100003.
4. Hwang, G.-J. A conceptual map model for developing intelligent tutoring systems. Comput. Educ. 2003, 40, 217–235.
5. Peng, S.S.; Lee, C.K.J. Educational Evaluation in East Asia: Emerging Issues and Challenges; Nova Science Publishers: Hauppauge, NY, USA, 2009.
6. Schnipke, D.L. Assessing Speededness in Computer-Based Tests Using Item Response Times. Ph.D. Thesis, Johns Hopkins University, Baltimore, MD, USA, 1995.
7. Wise, S.L. Rapid-Guessing Behavior: Its Identification, Interpretation, and Implications. Educ. Meas. Issues Pract. 2017, 36, 52–61.
8. DeMars, C.E. Changes in Rapid-Guessing Behavior Over a Series of Assessments. Educ. Assess. 2007, 12, 23–45.
9. Setzer, J.C.; Wise, S.L.; van den Heuvel, J.R.; Ling, G. An Investigation of Examinee Test-Taking Effort on a Large-Scale Assessment. Appl. Meas. Educ. 2013, 26, 34–49.
10. Pastor, D.A.; Ong, T.Q.; Strickman, S.N. Patterns of Solution Behavior across Items in Low-Stakes Assessments. Educ. Assess. 2019, 24, 189–212.
11. Silm, G.; Must, O.; Täht, K. Test-taking effort as a predictor of performance in low-stakes tests. Trames 2013, 17, 433–448.
12. Wise, S.L.; Kong, X. Response time effort: A new measure of examinee motivation in computer-based tests. Appl. Meas. Educ. 2005, 18, 163–183.
13. Lin, C.K. Effects of Removing Responses With Likely Random Guessing Under Rasch Measurement on a Multiple-Choice Language Proficiency Test. Lang. Assess. Q. 2018, 15, 406–422.
14. Wise, S.L.; Ma, L.; Kingsbury, G.G.; Hauser, C. An investigation of the relationship between time of testing and test-taking effort. Natl. Counc. Meas. Educ. 2010, 1–18. Available online: https://eric.ed.gov/?id=ED521960 (accessed on 12 June 2023).
15. Vermunt, J. Latent Class Modeling with Covariates: Two Improved Three-Step Approaches. Political Anal. 2017, 18, 450–469.
16. Bakk, Z.; Vermunt, J.K. Robustness of stepwise latent class modeling with continuous distal outcomes. Struct. Equ. Model. 2016, 23, 20–31.
17. Lee, Y.H.; Jia, Y. Using response time to investigate students’ test-taking behaviors in a NAEP computer-based study. Large-Scale Assess. Educ. 2014, 2, 8.
18. Ebel, R.L.; Frisbie, D.A. Essentials of Educational Measurement, 5th ed.; Prentice-Hall of India Private Limited: New Delhi, India, 1991; ISBN 0-87692-700-2.
19. Purushothama, G. Introduction to Statistics. In Nursing Research and Statistics; Jaypee Brothers Medical Publishers (P) Ltd.: New Delhi, India, 2015; p. 218.
20. Singh, K.; Upadhyaya, S. Outlier Detection: Applications And Techniques. IJCSI Int. J. Comput. Sci. Issues 2012, 9, 307.
21. Ali, H.; Najib, M.; Salleh, M.; Saedudin, R.; Hussain, K. Imbalance class problems in data mining: A review. Indones. J. Electr. Eng. Comput. Sci. 2019, 14, 1552–1563.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).