A Study of Machine Learning Regression Techniques for Non-Contact SpO2 Estimation from Infrared Motion-Magnified Facial Video

Abstract

1. Introduction

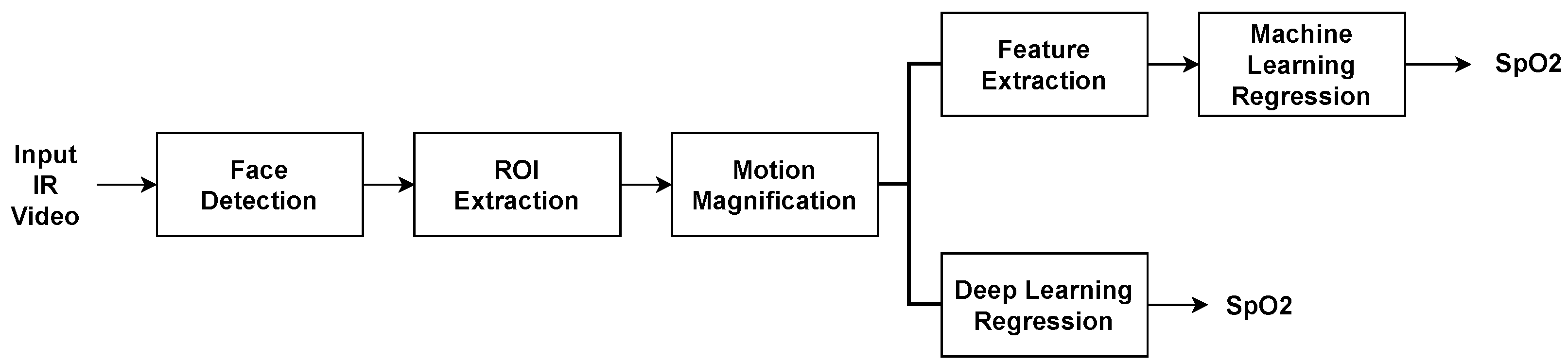

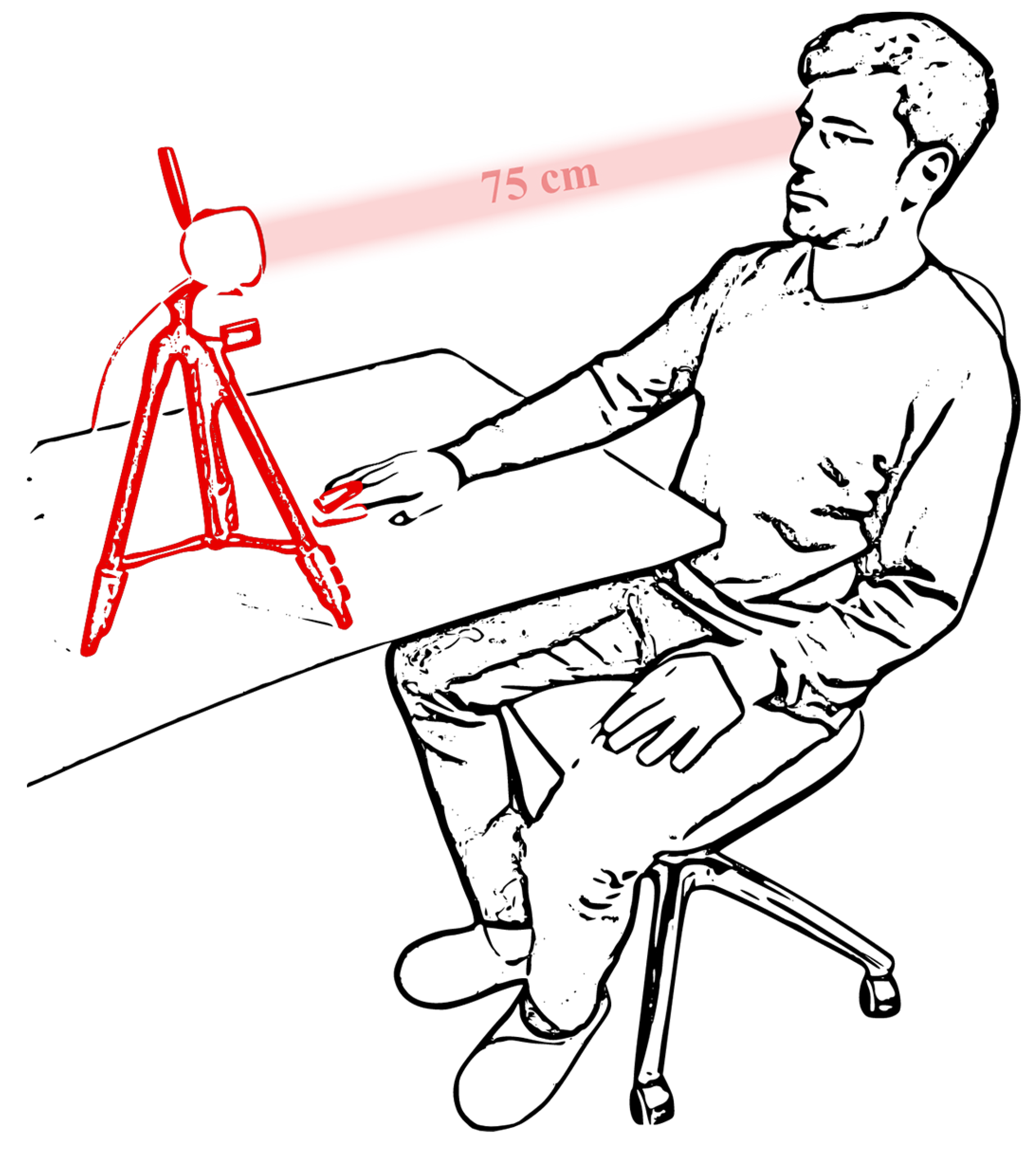

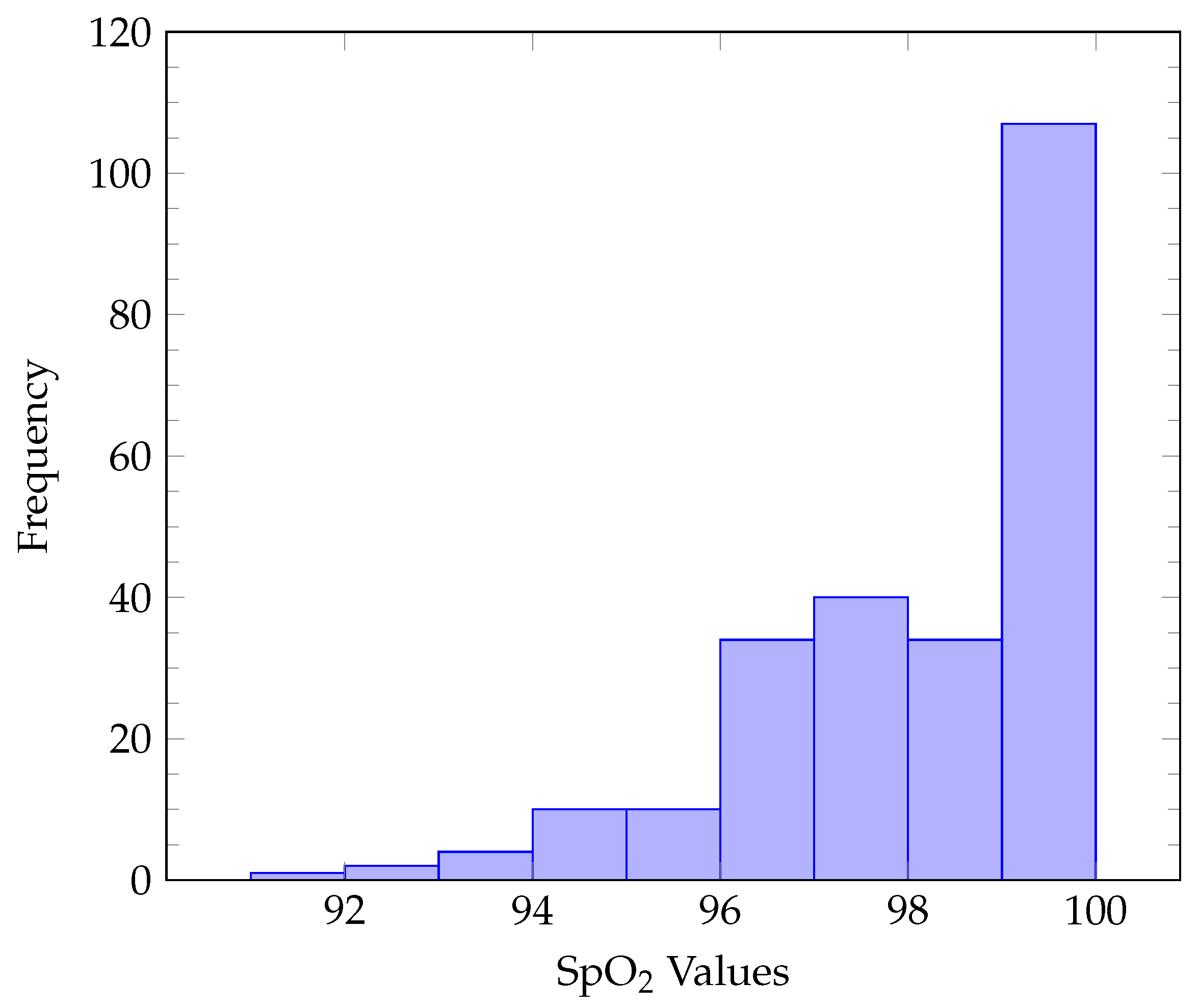

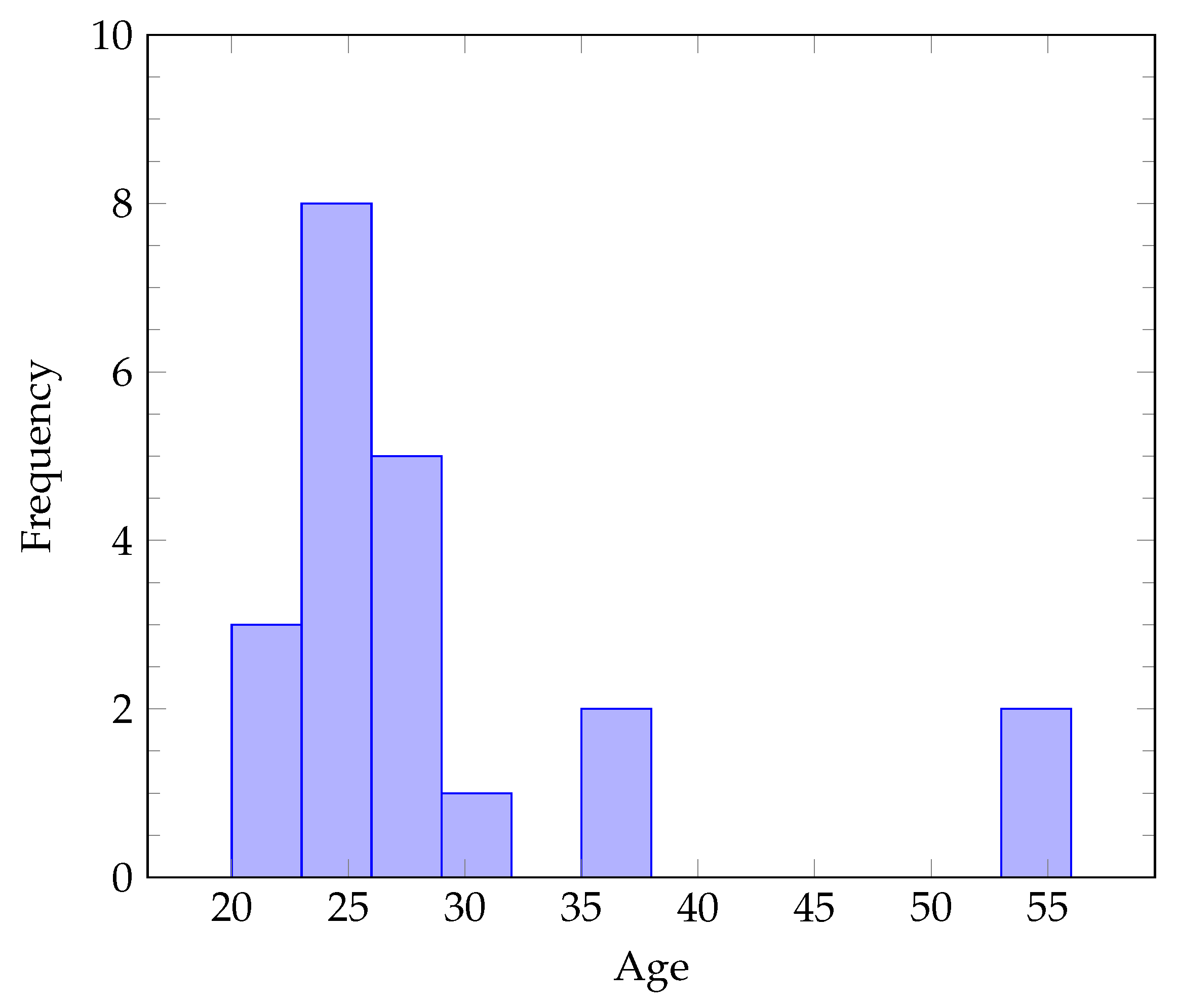

2. Dataset Protocol and Equipment

3. Proposed Methodology

- Detect the face and extract the desired facial areas for the procedure.

- Apply motion magnification.

- Extract the proposed features from the desired facial regions.

- Feed the extracted features, or the extracted regions themselves, to a traditional machine learning or deep learning regressor that predicts the SpO2 value.
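The four steps above can be read as a simple function composition. The sketch below is only an illustration of that data flow: the function name `estimate_spo2`, the stage names, and the toy stand-ins used in the usage lines are all hypothetical and are not taken from the study. It covers the feature-based branch; in the deep learning branch the regressor would receive the magnified regions directly.

```python
from typing import Callable, List, Sequence

Frame = List[List[float]]  # one grayscale frame as rows of pixel intensities
Clip = List[Frame]         # a video clip as a list of frames


def estimate_spo2(
    video: Clip,
    crop_face_region: Callable[[Frame], Frame],
    magnify_motion: Callable[[Clip], Clip],
    extract_features: Callable[[Clip], Sequence[float]],
    regressor: Callable[[Sequence[float]], float],
) -> float:
    """Chain the four stages; every stage is supplied by the caller."""
    region_clip = [crop_face_region(frame) for frame in video]  # step 1
    magnified = magnify_motion(region_clip)                      # step 2
    features = extract_features(magnified)                       # step 3
    return regressor(features)                                   # step 4


def mean_intensity(clip: Clip) -> List[float]:
    """Toy feature extractor: a single feature, the mean of all pixels."""
    pixels = [p for frame in clip for row in frame for p in row]
    return [sum(pixels) / len(pixels)]


# Toy stand-ins (hypothetical) that only demonstrate the data flow.
video = [
    [[10.0, 20.0], [30.0, 40.0]],
    [[12.0, 22.0], [32.0, 42.0]],
]
result = estimate_spo2(
    video,
    crop_face_region=lambda frame: [row[:1] for row in frame],  # keep first column
    magnify_motion=lambda clip: clip,                            # identity placeholder
    extract_features=mean_intensity,
    regressor=lambda feats: 90.0 + 0.1 * feats[0],               # made-up linear map
)
```

Passing the stages in as callables keeps the skeleton independent of any particular face detector, magnification method, or regression model.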

3.1. Facial Segmentation

3.2. Motion Magnification

3.3. Feature Extraction

- The mean, across all frames, of the per-frame average intensity.

- The standard deviation, across all frames, of the per-frame average intensity.

- The mean, across all frames, of the per-frame intensity standard deviation.

- The standard deviation, across all frames, of the per-frame intensity standard deviation.
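The four statistics listed above can be sketched as follows. This is a minimal illustration under stated assumptions: each frame of a facial region is a 2D grid of grayscale intensities, the average and standard deviation are first computed per frame and then summarized across frames, and the population standard deviation is used. The name `region_features` is hypothetical.

```python
from statistics import fmean, pstdev
from typing import Sequence, Tuple

Frame = Sequence[Sequence[float]]  # rows of pixel intensities


def region_features(frames: Sequence[Frame]) -> Tuple[float, float, float, float]:
    """Return (mean of means, std of means, mean of stds, std of stds)."""
    frame_means = []
    frame_stds = []
    for frame in frames:
        pixels = [p for row in frame for p in row]
        frame_means.append(fmean(pixels))   # average intensity of this frame
        frame_stds.append(pstdev(pixels))   # intensity spread within this frame
    return (
        fmean(frame_means),
        pstdev(frame_means),
        fmean(frame_stds),
        pstdev(frame_stds),
    )


# Toy two-frame clip (made-up values, not data from the study).
clip = [
    [[1.0, 3.0], [1.0, 3.0]],  # per-frame mean 2, std 1
    [[2.0, 6.0], [2.0, 6.0]],  # per-frame mean 4, std 2
]
features = region_features(clip)
```

For this toy clip the per-frame means are 2 and 4 and the per-frame standard deviations are 1 and 2, so the four features are 3, 1, 1.5 and 0.5.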

4. Machine Learning Regression

4.1. Traditional Techniques

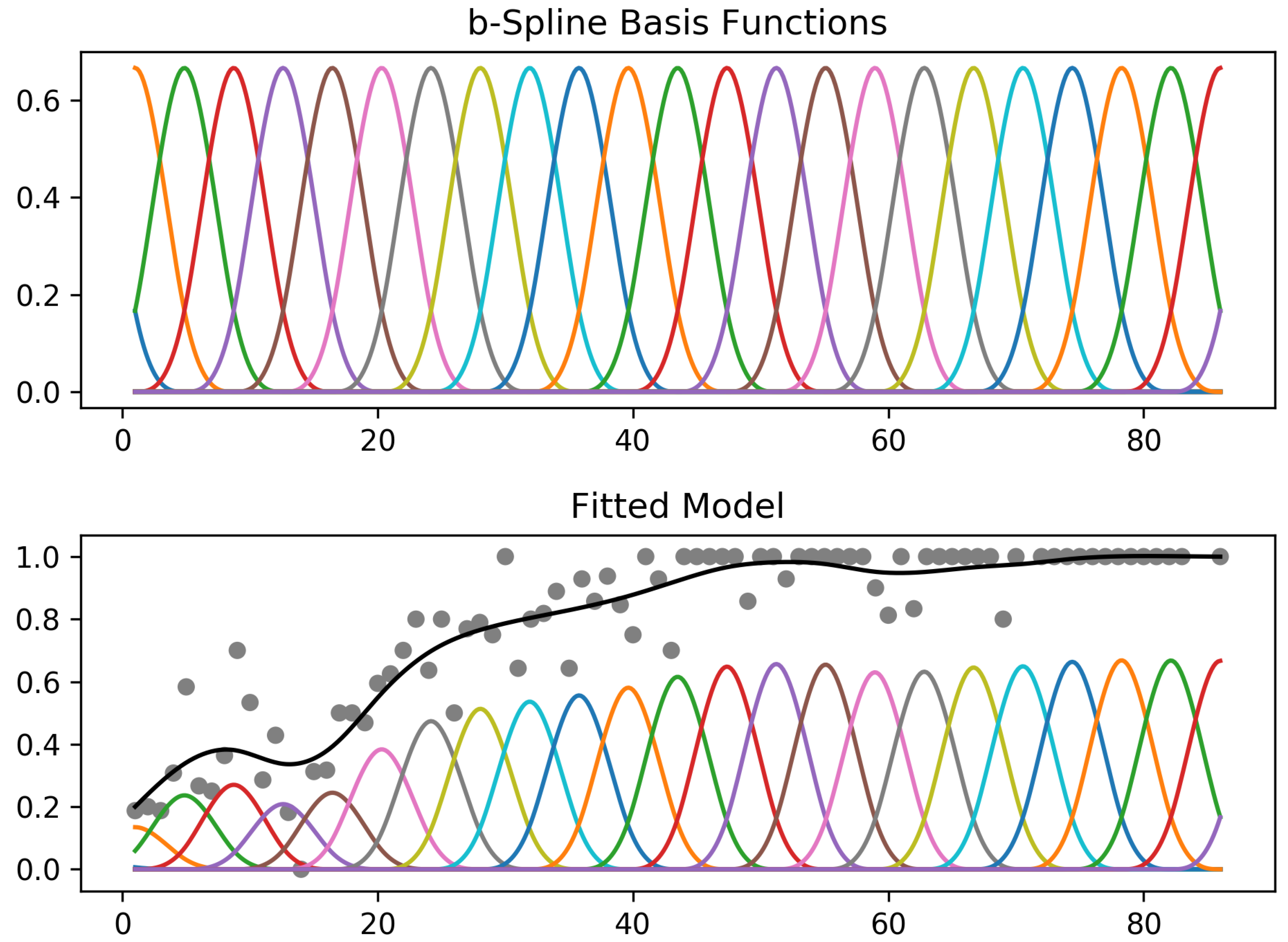

4.2. Generalized Additive Model

4.3. Extremely Randomized Trees

5. Deep Learning Regression

5.1. Multilayer Perceptron

5.2. Spatial 3D Convolutional Neural Network

5.3. Video Vision Transformer—ViViT

6. Results

6.1. Implementation

6.2. Accuracy

6.3. Model Comparison and Selection

Algorithm 1. Selection algorithm

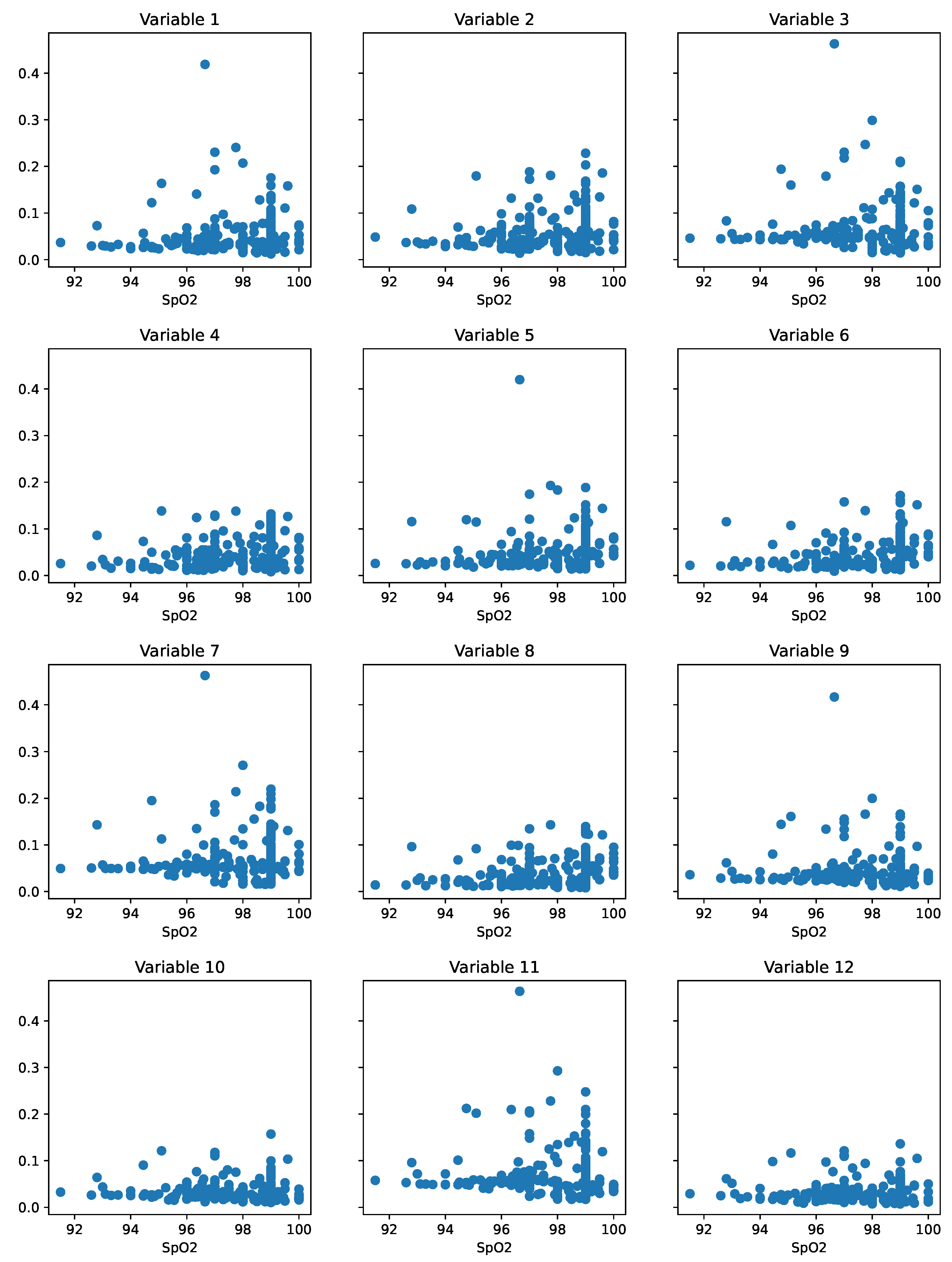

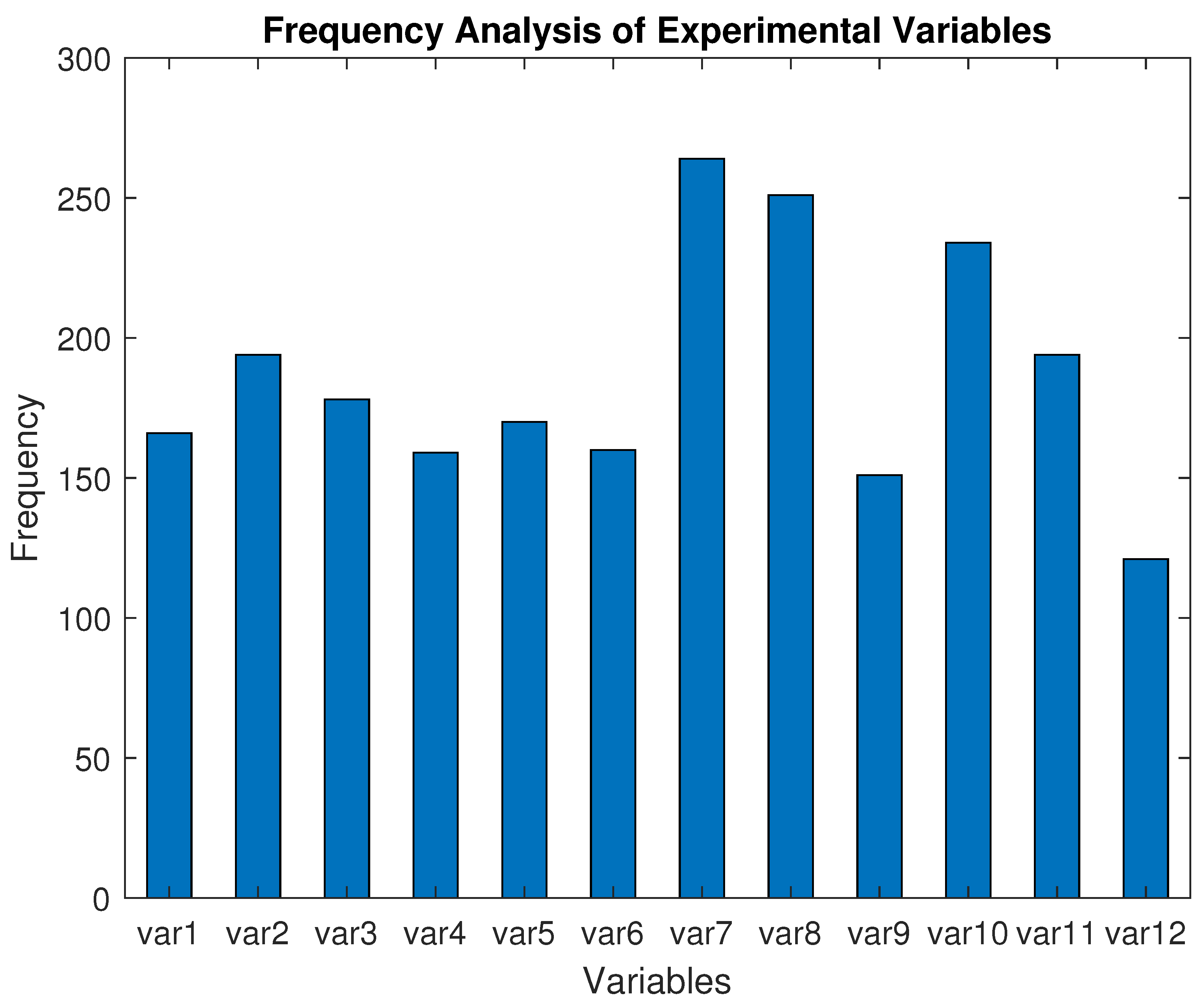

6.4. Impact and Relevance of Extracted Variables

6.5. Parameters and Potential Biases

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Regression Algorithm | Average RMSE | Minimum RMSE | MAE | MAPE | Score | No. Features |
|---|---|---|---|---|---|---|
| Linear Regression | 1.565 | 1.481 | 1.206 | 0.012 | 0.155 | 5 |
| Ridge | 1.599 | 1.582 | 1.304 | 0.013 | 0.024 | 11 |
| SGD Regressor | 1.919 | 1.863 | 1.651 | 0.017 | −0.424 | 3 |
| ElasticNet | 1.615 | 1.612 | 1.318 | 0.014 | −0.009 | 5 |
| Lars | 1.574 | 1.483 | 1.213 | 0.013 | 0.126 | 4 |
| Lasso (alpha = ) | 1.612 | 1.604 | 1.316 | 0.014 | 0.000 | 7 |
| LassoLars (alpha = ) | 1.565 | 1.523 | 1.260 | 0.014 | 0.000 | 7 |
| Huber Regressor | 1.581 | 1.498 | 1.179 | 0.012 | 0.149 | 6 |
| Quantile Regressor | 1.753 | 1.730 | 1.272 | 0.013 | −0.158 | 3 |
| RANSAC Regressor | 1.880 | 1.840 | 1.279 | 0.013 | −0.198 | 5 |
| TheilSen Regressor | 1.674 | 1.512 | 1.268 | 0.013 | 0.106 | 4 |
| Poisson Regressor | 1.615 | 1.612 | 1.318 | 0.014 | 0.000 | 6 |
| Tweedie Regressor | 1.615 | 1.612 | 1.318 | 0.014 | 0.000 | 8 |
| Gamma Regressor | 1.615 | 1.612 | 1.318 | 0.014 | 0.000 | 11 |
| AdaBoost Regressor (5, 50, 0.1) ¹ | 1.352 | 1.235 | 0.956 | 0.010 | 0.386 | 5 |
| AdaBoost Regressor (5, 100, 0.1) ¹ | 1.343 | 1.226 | 0.957 | 0.010 | 0.411 | 5 |
| AdaBoost Regressor (10, 50, 0.1) ¹ | 1.410 | 1.245 | 0.927 | 0.010 | 0.428 | 7 |
| AdaBoost Regressor (10, 100, 0.1) ¹ | 1.401 | 1.246 | 0.923 | 0.010 | 0.398 | 6 |
| Bagging Regressor (5, 50) ² | 1.355 | 1.230 | 0.980 | 0.010 | 0.374 | 5 |
| Bagging Regressor (10, 50) ² | 1.391 | 1.189 | 0.927 | 0.010 | 0.427 | 8 |
| Bagging Regressor (5, 100) ² | 1.346 | 1.212 | 0.975 | 0.010 | 0.393 | 9 |
| Bagging Regressor (10, 100) ² | 1.379 | 1.202 | 0.920 | 0.010 | 0.406 | 6 |
| Extra Trees Regressor (50) ³ | 1.337 | 1.184 | 0.964 | 0.010 | 0.465 | 6 |
| Extra Trees Regressor (100) ³ | 1.331 | 1.171 | 0.960 | 0.010 | 0.465 | 5 |
| HistGradientBoosting Regressor | 1.386 | 1.234 | 1.019 | 0.011 | 0.410 | 5 |
| Gradient Boosting Regressor (100, 0.01, 3) ⁴ | 1.412 | 1.339 | 1.097 | 0.011 | 0.315 | 6 |
| Gradient Boosting Regressor (100, 0.01, 5) ⁴ | 1.401 | 1.277 | 1.063 | 0.011 | 0.375 | 10 |
| Gradient Boosting Regressor (100, 0.1, 3) ⁴ | 1.424 | 1.243 | 1.038 | 0.011 | 0.378 | 8 |
| Gradient Boosting Regressor (100, 0.1, 5) ⁴ | 1.444 | 1.256 | 1.033 | 0.011 | 0.372 | 8 |
| Gradient Boosting Regressor (500, 0.01, 3) ⁴ | 1.391 | 1.258 | 1.023 | 0.011 | 0.393 | 5 |
| Gradient Boosting Regressor (500, 0.01, 5) ⁴ | 1.422 | 1.248 | 1.019 | 0.010 | 0.383 | 7 |
| Gradient Boosting Regressor (500, 0.1, 3) ⁴ | 1.469 | 1.272 | 1.073 | 0.011 | 0.362 | 8 |
| Gradient Boosting Regressor (500, 0.1, 5) ⁴ | 1.448 | 1.265 | 1.037 | 0.011 | 0.395 | 10 |
| Random Forest Regressor (100, 3) ⁵ | 1.380 | 1.292 | 1.044 | 0.011 | 0.345 | 8 |
| Random Forest Regressor (100, 5) ⁵ | 1.355 | 1.254 | 0.997 | 0.010 | 0.412 | 4 |
| OxygeNN | 1.711 | 1.532 | 1.356 | 0.014 | 0.006 | 6 |
| Generalized Additive Model (4 splines) | 1.573 | 1.526 | 1.170 | 0.012 | −0.008 | 12 |
| Generalized Additive Model (6 splines) | 1.464 | 1.413 | 1.071 | 0.011 | 0.120 | 12 |
| Generalized Additive Model (8 splines) | 1.513 | 1.407 | 1.092 | 0.011 | 0.061 | 12 |
| Generalized Additive Model (10 splines) | 1.534 | 1.383 | 1.093 | 0.011 | 0.118 | 12 |
| Generalized Additive Model (12 splines) | 1.491 | 1.435 | 1.085 | 0.011 | 0.043 | 12 |
| Generalized Additive Model (14 splines) | 1.491 | 1.427 | 1.079 | 0.011 | 0.118 | 12 |
| 3D-CNN (single-source model) | 1.592 | 1.570 | 1.296 | 0.013 | 0.000 | - |
| 3D-CNN (multi-source model) | 1.594 | 1.564 | 1.298 | 0.013 | −0.002 | - |
| ViViT | 1.685 | 1.615 | 1.330 | 0.013 | −0.040 | - |
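The error columns in the table above can be computed as in the following sketch. Two points are assumptions rather than statements from the source: the "Score" column is taken to be the coefficient of determination R², and MAPE is expressed as a fraction rather than a percentage. The function name `regression_metrics` and the sample values are illustrative only.

```python
from math import sqrt
from typing import Dict, Sequence


def regression_metrics(y_true: Sequence[float], y_pred: Sequence[float]) -> Dict[str, float]:
    """RMSE, MAE, fractional MAPE and R^2 for paired reference/predicted values."""
    n = len(y_true)
    errors = [p - t for t, p in zip(y_true, y_pred)]
    ss_res = sum(e * e for e in errors)              # residual sum of squares
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)  # total sum of squares
    return {
        "rmse": sqrt(ss_res / n),
        "mae": sum(abs(e) for e in errors) / n,
        "mape": sum(abs(e) / abs(t) for e, t in zip(errors, y_true)) / n,
        "r2": 1.0 - ss_res / ss_tot,  # assumed meaning of the "Score" column
    }


# Made-up SpO2 readings in percent, not data from the study.
y_true = [96.0, 98.0, 94.0, 100.0]
y_pred = [97.0, 97.0, 95.0, 99.0]
metrics = regression_metrics(y_true, y_pred)
```

Because SpO2 readings lie close to 100, an MAE of about 1 corresponds to a fractional MAPE of about 0.01, which matches the relationship between the MAE and MAPE columns. Under the R² assumption, a score of 0 means the model does no better than always predicting the mean of the reference values, and a negative score means it does worse.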

| Layer/Stride | Contents | Output Size |
|---|---|---|
| Input Features | - | |
| FC1 | 128 | |
| FC2 | 256 | |
| FC3 | 128 | |
| FC4 | 64 | |
| Output | 1 | |
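As a rough size check on the fully connected network in the table above, the number of trainable parameters can be counted from the layer widths. This sketch assumes that the numbers in the "Contents" column are unit counts and that every layer is a dense layer with a bias term; the input width of 6 in the usage line is purely illustrative, since the table does not state it.

```python
from typing import Sequence


def dense_parameter_count(n_inputs: int, layer_widths: Sequence[int]) -> int:
    """Weights plus biases of a stack of fully connected layers."""
    total = 0
    fan_in = n_inputs
    for width in layer_widths:
        total += fan_in * width + width  # weight matrix + bias vector
        fan_in = width
    return total


LAYER_WIDTHS = [128, 256, 128, 64, 1]  # FC1..FC4 and the output unit, from the table
n_features = 6                          # hypothetical input width
n_params = dense_parameter_count(n_features, LAYER_WIDTHS)
```

Under these assumptions the count is 128·n + 74,369 for n input features, so the hidden layers dominate the model size regardless of how many features are selected.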

| Layer/Stride | Contents | Output Size (H × W × D × C) |
|---|---|---|
| Input Clip | - | 64 × 128 × 300 × 1 |
| Conv3D | | 20 × 41 × 98 × 16 |
| Conv3D | | 5 × 12 × 31 × 32 |
| Flatten | - | 59,520 |
| FC1 | 128 | |
| FC2 | 128 | |
| Output | 1 | |
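The sizes in the table above are mutually consistent, which the following sketch checks. The kernel size and stride are not given in the table, so the values used here (kernel 7, stride 3, no padding, applied to both layers) are only one hypothetical configuration that happens to reproduce the listed shapes. Other designs, such as a convolution followed by pooling, could produce the same sizes.

```python
from math import prod


def conv_output_size(size: int, kernel: int, stride: int) -> int:
    """Output length of an unpadded ('valid') convolution along one axis."""
    return (size - kernel) // stride + 1


INPUT_HWD = (64, 128, 300)  # H x W x D of the input clip, from the table
KERNEL, STRIDE = 7, 3       # hypothetical values, not stated in the source

after_conv1 = tuple(conv_output_size(s, KERNEL, STRIDE) for s in INPUT_HWD)
after_conv2 = tuple(conv_output_size(s, KERNEL, STRIDE) for s in after_conv1)

# The second layer has 32 output channels, so the flattened length is H*W*D*32.
flattened = prod(after_conv2) * 32
```

With these assumed settings the intermediate shapes are 20 × 41 × 98 and 5 × 12 × 31, and 5 · 12 · 31 · 32 = 59,520, matching the Flatten row.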

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Stogiannopoulos, T.; Cheimariotis, G.-A.; Mitianoudis, N. A Study of Machine Learning Regression Techniques for Non-Contact SpO2 Estimation from Infrared Motion-Magnified Facial Video. Information 2023, 14, 301. https://doi.org/10.3390/info14060301