Abstractive Summary of Public Opinion News Based on Element Graph Attention

Abstract

1. Introduction

2. Related Technologies

2.1. Abstractive Summarization Methods

2.2. Graph-Based Summarization Methods

2.3. Multi-Document Summarization Methods

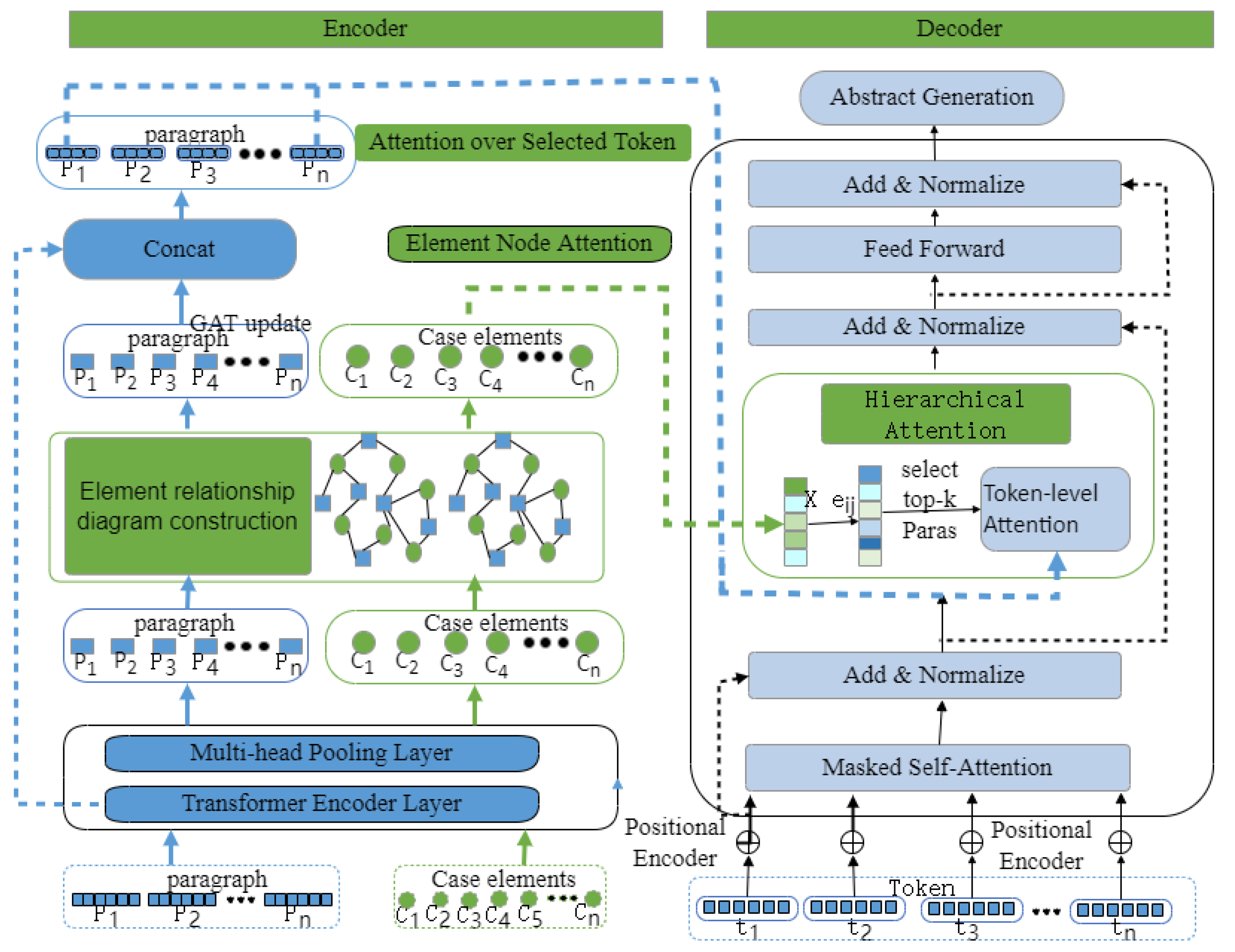

3. Materials and Methods

3.1. Element Relation Graph Construction Module

| Algorithm 1: Algorithm for constructing the element relation graph |
|---|
| Input: input text. Output: element relation graph. |
| 1. Collect the case data sets …; |
| 2. Take the document paragraph nodes as the initial nodes, …; |
| 3. for … in … do |
| 4. for … in … do |
| 5. if … contains … then |
| 6. …; |
| 7. end if |
| 8. end for |
| 9. end for |
| 10. for … in … do |
| 11. for … in … do |
| 12. if … and … contains … then |
| 13. …; |
| 14. end if |
| 15. end for |
| 16. end for |
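A minimal Python sketch of the graph construction in Algorithm 1, under stated assumptions: paragraphs and case elements are both graph nodes, a paragraph is linked to every element whose string it contains (steps 3–9), and two elements are linked when at least one paragraph contains both (an assumed reading of steps 10–16, whose symbols are not recoverable here). The function name `build_element_graph`, the `P{i}` node labels, and plain substring matching are hypothetical illustrative choices, not the paper's implementation.

```python
from itertools import combinations


def build_element_graph(paragraphs: list[str], elements: list[str]) -> dict[str, set[str]]:
    """Build an undirected paragraph/element graph as an adjacency map.

    Assumed reading of Algorithm 1 (hypothetical helper): a paragraph node is
    linked to each element it mentions, and two elements are linked when some
    paragraph mentions both of them.
    """
    graph: dict[str, set[str]] = {}

    def add_edge(u: str, v: str) -> None:
        graph.setdefault(u, set()).add(v)
        graph.setdefault(v, set()).add(u)

    # Step 2: paragraph nodes are the initial nodes of the graph.
    for i in range(len(paragraphs)):
        graph.setdefault(f"P{i}", set())

    # Steps 3-9: paragraph-element edges, using simple substring matching.
    for i, text in enumerate(paragraphs):
        for element in elements:
            if element in text:
                add_edge(f"P{i}", element)

    # Steps 10-16 (assumed): element-element edges for co-occurrence
    # within the same paragraph.
    for a, b in combinations(elements, 2):
        if any(a in text and b in text for text in paragraphs):
            add_edge(a, b)

    return graph


# Usage on two short paragraphs built from the case exemplars.
paragraphs = [
    "On January 22, 2003, Du Shaoping killed Deng Shiping.",
    "Luo Guangzhong was sentenced to death with a reprieve.",
]
elements = ["Deng Shiping", "Du Shaoping", "Luo Guangzhong"]
graph = build_element_graph(paragraphs, elements)
```

Substring matching is the simplest possible "contains" test; a real system working on Chinese text would more likely match against the output of an element extractor.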

3.2. Document Encoder

3.3. Graph Encoder

3.4. Element Decoder Based on Two-Layer Attention

3.5. Parameter Training

4. Results

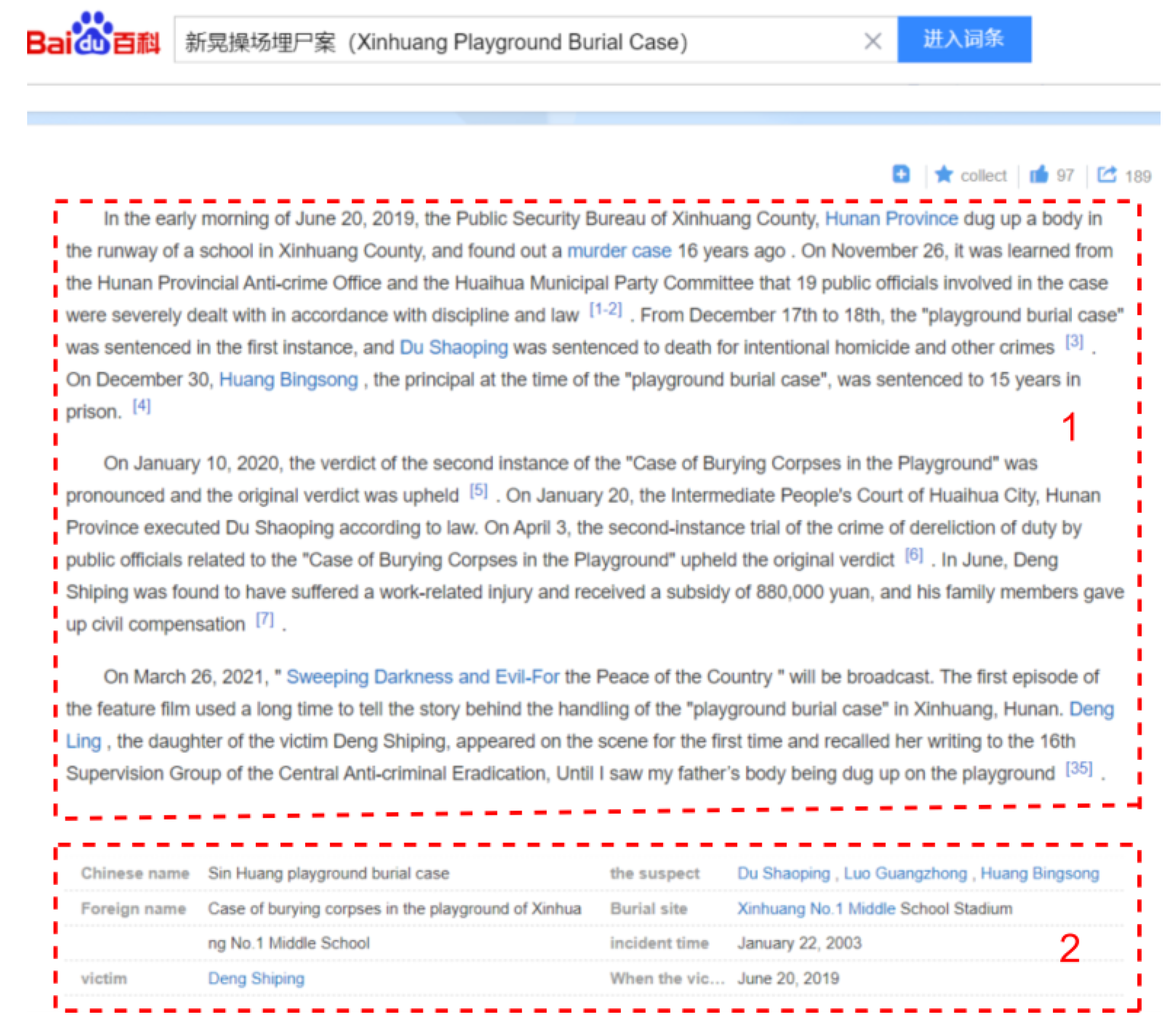

4.1. Case–Public Opinion Multi-Document Summary Dataset

4.2. Experimental Parameter Settings

4.3. Baseline Model Settings

5. Discussion

5.1. Analysis of Experimental Results

5.2. Analysis of Ablation Experiments

5.3. Comparative Experimental Analysis of Different Case Element Extraction Methods

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

References

- Veličković, P.; Cucurull, G.; Casanova, A.; Romero, A.; Liò, P.; Bengio, Y. Graph attention networks. arXiv 2017, arXiv:1710.10903.

- Barzilay, R.; McKeown, K.R. Sentence fusion for multidocument news summarization. Comput. Linguist. 2005, 31, 297–328.

- Filippova, K.; Strube, M. Sentence fusion via dependency graph compression. In Proceedings of the 2008 Conference on Empirical Methods in Natural Language Processing, Honolulu, HI, USA, 25–27 October 2008; pp. 177–185.

- Banerjee, S.; Mitra, P.; Sugiyama, K. Multi-document abstractive summarization using ILP based multi-sentence compression. In Proceedings of the Twenty-Fourth International Joint Conference on Artificial Intelligence, Buenos Aires, Argentina, 25–31 July 2015.

- Li, W. Abstractive multi-document summarization with semantic information extraction. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, Lisbon, Portugal, 17–21 September 2015; pp. 1908–1913.

- Bing, L.; Li, P.; Liao, Y.; Lam, W.; Guo, W.; Passonneau, R.J. Abstractive multi-document summarization via phrase selection and merging. arXiv 2015, arXiv:1506.01597.

- Cohn, T.A.; Lapata, M. Sentence compression as tree transduction. J. Artif. Intell. Res. 2009, 34, 637–674.

- Wang, L.; Cardie, C. Domain-independent abstract generation for focused meeting summarization. In Proceedings of the 51st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Sofia, Bulgaria, 4–9 August 2013; pp. 1395–1405.

- Pighin, D.; Cornolti, M.; Alfonseca, E.; Filippova, K. Modelling events through memory-based, open-ie patterns for abstractive summarization. In Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Baltimore, MD, USA, 22–27 June 2014; pp. 892–901.

- Paulus, R.; Xiong, C.; Socher, R. A deep reinforced model for abstractive summarization. arXiv 2017, arXiv:1705.04304.

- Gehrmann, S.; Deng, Y.; Rush, A.M. Bottom-up abstractive summarization. arXiv 2018, arXiv:1808.10792.

- Li, W.; Xiao, X.; Lyu, Y.; Wang, Y. Improving neural abstractive document summarization with structural regularization. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; pp. 4078–4087.

- Zhang, X.; Wei, F.; Zhou, M. HIBERT: Document level pre-training of hierarchical bidirectional transformers for document summarization. arXiv 2019, arXiv:1905.06566.

- Raffel, C.; Shazeer, N.; Roberts, A.; Lee, K.; Narang, S.; Matena, M.; Liu, P.J. Exploring the limits of transfer learning with a unified text-to-text transformer. J. Mach. Learn. Res. 2020, 21, 1–67.

- Lewis, M.; Liu, Y.; Goyal, N.; Ghazvininejad, M.; Mohamed, A.; Levy, O.; Stoyanov, V.; Zettlemoyer, L. BART: Denoising Sequence-to-Sequence Pre-training for Natural Language Generation, Translation, and Comprehension. arXiv 2019, arXiv:1910.13461.

- Zhang, J.; Zhao, Y.; Saleh, M.; Liu, P. PEGASUS: Pre-training with extracted gap-sentences for abstractive summarization. In Proceedings of the 37th International Conference on Machine Learning, PMLR 119, 2020; pp. 11328–11339. Available online: http://proceedings.mlr.press/v119/zhang20ae/zhang20ae.pdf (accessed on 1 January 2023).

- Zou, Y.; Zhang, X.; Lu, W.; Wei, F.; Zhou, M. Pre-training for abstractive document summarization by reinstating source text. arXiv 2020, arXiv:2004.01853.

- Grail, Q.; Perez, J.; Gaussier, E. Globalizing BERT-based transformer architectures for long document summarization. In Proceedings of the 16th Conference of the European Chapter of the Association for Computational Linguistics: Main Volume, Online, 19–23 April 2021; pp. 1792–1810.

- Erkan, G.; Radev, D.R. LexRank: Graph-based lexical centrality as salience in text summarization. J. Artif. Intell. Res. 2004, 22, 457–479.

- Wan, X. An exploration of document impact on graph-based multi-document summarization. In Proceedings of the 2008 Conference on Empirical Methods in Natural Language Processing, Honolulu, HI, USA, 25–27 October 2008; pp. 755–762.

- Christensen, J.; Soderland, S.; Etzioni, O. Towards coherent multi-document summarization. In Proceedings of the 2013 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Atlanta, GA, USA, 9–14 June 2013; pp. 1163–1173.

- Tan, J.; Wan, X.; Xiao, J. Abstractive document summarization with a graph-based attentional neural model. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Vancouver, BC, Canada, 30 July–4 August 2017; pp. 1171–1181.

- Yasunaga, M.; Zhang, R.; Meelu, K.; Pareek, A.; Srinivasan, K.; Radev, D. Graph-based neural multi-document summarization. arXiv 2017, arXiv:1706.06681.

- Fan, A.; Gardent, C.; Braud, C.; Bordes, A. Using local knowledge graph construction to scale seq2seq models to multi-document inputs. arXiv 2019, arXiv:1910.08435.

- Huang, L.; Wu, L.; Wang, L. Knowledge graph-augmented abstractive summarization with semantic-driven cloze reward. arXiv 2020, arXiv:2005.01159.

- Wang, D.; Liu, P.; Zheng, Y.; Qiu, X.; Huang, X. Heterogeneous graph neural networks for extractive document summarization. arXiv 2020, arXiv:2004.12393.

- Song, Z.; King, I. Hierarchical Heterogeneous Graph Attention Network for Syntax-Aware Summarization. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 22 February–1 March 2022; pp. 11340–11348.

- Jin, H.; Wang, T.; Wan, X. Multi-granularity interaction network for extractive and abstractive multi-document summarization. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 6244–6254.

- Liu, Y.; Lapata, M. Hierarchical transformers for multi-document summarization. arXiv 2019, arXiv:1905.13164.

- Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language models are unsupervised multitask learners. OpenAI blog 2019, 1, 9.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30.

- Lin, C.Y. ROUGE: A package for automatic evaluation of summaries. In Text Summarization Branches Out; Barcelona, Spain, 2004; pp. 74–81. Available online: https://aclanthology.org/W04-1013.pdf (accessed on 1 January 2023).

- Liu, P.J.; Saleh, M.; Pot, E.; Goodrich, B.; Sepassi, R.; Kaiser, L.; Shazeer, N. Generating Wikipedia by summarizing long sequences. arXiv 2018, arXiv:1801.10198.

- Li, W.; Xiao, X.; Liu, J.; Wu, H.; Wang, H.; Du, J. Leveraging graph to improve abstractive multi-document summarization. arXiv 2020, arXiv:2005.10043.

| Component | Content |
|---|---|
| Exemplar 1 | The reporter learned from the Anti-gangland Office of Hunan Province and the Huaihua Municipal Party Committee that the long-unresolved Xinhuang “playground burial case” (the murder of Deng Shiping) has been fully investigated. Du Shaoping and his accomplice Luo Guangzhong were arrested according to law and prosecuted on suspicion of intentional homicide; Huang Bingsong and 19 other public officials involved in the case received corresponding Party and government sanctions, such as expulsion from the Party and dismissal from public office. |
| Exemplar 2 | The Intermediate People’s Court of Huaihua City, Hunan Province, held a public first-instance hearing of defendant Du Shaoping’s intentional homicide case and the case of a vicious criminal group, and pronounced the verdict in court. Deng Shiping and Yao Benying (deceased), from the General Affairs Office of Xinhuang No. 1 Middle School, supervised the quality of the project. During construction, Du Shaoping came into conflict with Deng Shiping over issues such as project quality and held a grudge against him. On January 22, 2003, Du Shaoping and Luo Guangzhong killed Deng Shiping in the office of the engineering headquarters. |
| Exemplar 3 | The Huaihua Intermediate People’s Court held that defendant Du Shaoping, together with defendant Luo Guangzhong, deliberately and unlawfully deprived another person of life, resulting in one death; intentionally injured another person, causing minor injuries to one person; and organized and led a criminal group of evil forces that picked quarrels and provoked trouble, carried out illegal detention, and committed other offenses. Defendant Luo Guangzhong was convicted of intentional homicide and sentenced to death with a two-year reprieve and deprivation of political rights for life. |
| Case elements (element name: element information) | Case name: Xinhuang “playground burial case” (the murder of Deng Shiping); Victim: Deng Shiping; Suspects: Du Shaoping, Luo Guangzhong, Huang Bingsong; Burial site: Xinhuang No. 1 Middle School stadium; Time of the incident: January 22, 2003; Time the victim was found: June 20, 2019 |
| Summary | In the early morning of June 20, 2019, the Public Security Bureau of Xinhuang County, Hunan Province, dug up a body beneath the running track of Xinhuang No. 1 Middle School, uncovering a murder committed 16 years earlier. On December 30, the then-principal Huang Bingsong was sentenced to 15 years in prison for the “playground burial case”. On January 20, the Intermediate People’s Court of Huaihua City, Hunan Province, executed Du Shaoping in accordance with the law. In June 2020, Deng Shiping’s death was recognized as a work-related injury, his family received a subsidy of 880,000 yuan, and they gave up their claim for civil compensation. |

| Dataset | Number of Documents | Number of Sentences | Average Sentence Length | Summary Length |
|---|---|---|---|---|
| Training set | 3969 | 50.78 | 1255 | 192.21 |
| Validation set | 300 | 48.25 | 1122 | 190.88 |
| Test set | 300 | 47.66 | 1107 | 189.07 |

| Parameter Name | Parameter Value |
|---|---|
| Training steps | 200,000 |
| Beam size | 5 |
| Learning rate | 0.002 |
| Warm-up steps | 20,000 |
| Hyperparameter | |
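The parameter table gives a peak learning rate (0.002) and a warm-up length (20,000 steps) but not the schedule itself. Assuming the common Transformer-style recipe (linear warm-up followed by inverse-square-root decay, as in Vaswani et al.), the learning rate at a given training step could be computed as in this sketch. The function name `learning_rate` and the decay form are assumptions for illustration, not details confirmed by the paper.

```python
import math


def learning_rate(step: int, peak_lr: float = 0.002, warmup_steps: int = 20_000) -> float:
    """Hypothetical schedule: linear warm-up to peak_lr, then 1/sqrt(step) decay.

    The decay form is an assumption; only peak_lr and warmup_steps come from
    the parameter table.
    """
    step = max(step, 1)  # treat step 0 as step 1 so the rate is never zero
    if step <= warmup_steps:
        # Linear ramp from ~0 up to peak_lr at the end of warm-up.
        return peak_lr * step / warmup_steps
    # Inverse-square-root decay, continuous with the ramp at warmup_steps.
    return peak_lr * math.sqrt(warmup_steps / step)
```

With these settings the rate is 0.001 halfway through warm-up, peaks at 0.002 at step 20,000, and has fallen back to 0.001 by step 80,000.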

| Model | ROUGE-1 | ROUGE-2 | ROUGE-L |
|---|---|---|---|
| FT | 30.28 | 14.12 | 26.78 |
| T-DMCA | 31.22 | 15.22 | 26.94 |
| HT | 31.94 | 15.76 | 26.57 |
| GraphSum | 32.52 | 15.96 | 26.40 |
| Our Model | 32.81 | 16.78 | 27.19 |
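The RG-1, RG-2, and RG-L columns are ROUGE F-scores (Lin, 2004) scaled to percentages. A minimal sketch of how ROUGE-N (clipped n-gram overlap) and ROUGE-L (longest common subsequence) F1 are computed, assuming pre-tokenized input and no stemming; the paper does not state its tokenization (for Chinese text it may be character-level), and the official ROUGE package has further options, so these helpers are illustrative only.

```python
from collections import Counter


def rouge_n(candidate: list[str], reference: list[str], n: int = 1) -> float:
    """ROUGE-N F1 from clipped n-gram overlap between two token lists."""
    def ngrams(tokens: list[str]) -> Counter:
        return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

    cand, ref = ngrams(candidate), ngrams(reference)
    overlap = sum((cand & ref).values())  # & keeps the minimum count per n-gram
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


def rouge_l(candidate: list[str], reference: list[str]) -> float:
    """ROUGE-L F1 based on the longest common subsequence (LCS)."""
    m, n = len(candidate), len(reference)
    dp = [[0] * (n + 1) for _ in range(m + 1)]  # dp[i][j] = LCS of prefixes
    for i in range(m):
        for j in range(n):
            if candidate[i] == reference[j]:
                dp[i + 1][j + 1] = dp[i][j] + 1
            else:
                dp[i + 1][j + 1] = max(dp[i][j + 1], dp[i + 1][j])
    lcs = dp[m][n]
    if lcs == 0:
        return 0.0
    precision, recall = lcs / m, lcs / n
    return 2 * precision * recall / (precision + recall)


candidate = "the court sentenced du shaoping".split()
reference = "the court executed du shaoping".split()
scores = {
    "ROUGE-1": 100 * rouge_n(candidate, reference, 1),
    "ROUGE-2": 100 * rouge_n(candidate, reference, 2),
    "ROUGE-L": 100 * rouge_l(candidate, reference),
}
```

Multiplying by 100 puts the scores on the same scale as the table.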

| Model | ROUGE-1 | ROUGE-2 | ROUGE-L |
|---|---|---|---|
| Our Model | 32.81 | 16.78 | 27.19 |
| w/o graph encoder | 29.88 | 13.99 | 20.12 |
| w/o two-level attention | 30.15 | 14.12 | 22.21 |
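The effect of each ablation can be read as the absolute drop from the full model. This small Python sketch computes those differences from the ablation table; the scores are copied from the table and the variable names are illustrative.

```python
full = {"ROUGE-1": 32.81, "ROUGE-2": 16.78, "ROUGE-L": 27.19}
ablations = {
    "w/o graph encoder": {"ROUGE-1": 29.88, "ROUGE-2": 13.99, "ROUGE-L": 20.12},
    "w/o two-level attention": {"ROUGE-1": 30.15, "ROUGE-2": 14.12, "ROUGE-L": 22.21},
}

# Absolute drop (in ROUGE points) relative to the full model, rounded to 2 dp
# to remove floating-point noise from the subtraction.
drops = {
    name: {metric: round(full[metric] - score, 2) for metric, score in scores.items()}
    for name, scores in ablations.items()
}

for name, drop in drops.items():
    print(name, drop)
```

Removing the graph encoder costs 2.93, 2.79, and 7.07 points on ROUGE-1, ROUGE-2, and ROUGE-L, while removing the two-level attention costs 2.66, 2.66, and 4.98 points; in both cases ROUGE-L drops the most.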

| Method | ROUGE-1 | ROUGE-2 | ROUGE-L |
|---|---|---|---|
| NER | 31.24 | 15.27 | 25.65 |
| TFIDF | 31.37 | 15.49 | 25.69 |
| TextRank | 32.15 | 16.33 | 26.87 |
| Our Model | 32.81 | 16.78 | 27.19 |
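Of the alternative case element extraction methods compared above, TextRank scores closest to the proposed model. As a rough illustration of that baseline, here is a generic TextRank-style keyword ranker (Mihalcea and Tarau, 2004): words that co-occur within a small window are linked, and PageRank is iterated over the resulting graph. The window size, damping factor, iteration count, and the function name `textrank_keywords` are assumptions; the paper does not specify its TextRank configuration or tokenization.

```python
from collections import defaultdict


def textrank_keywords(tokens: list[str], window: int = 2, damping: float = 0.85,
                      iterations: int = 50, top_k: int = 5) -> list[str]:
    """Rank words by PageRank over a word co-occurrence graph (TextRank-style)."""
    # Undirected edge between distinct words that co-occur within `window` tokens.
    neighbors: dict[str, set[str]] = defaultdict(set)
    for i, word in enumerate(tokens):
        for other in tokens[i + 1:i + window]:
            if other != word:
                neighbors[word].add(other)
                neighbors[other].add(word)

    # Iterate the PageRank update; each neighbor shares its score evenly
    # across its own edges.
    scores = {word: 1.0 for word in neighbors}
    for _ in range(iterations):
        scores = {
            word: (1 - damping) + damping * sum(
                scores[nb] / len(neighbors[nb]) for nb in neighbors[word]
            )
            for word in neighbors
        }

    # Highest score first; ties broken alphabetically for a stable order.
    return sorted(scores, key=lambda w: (-scores[w], w))[:top_k]


tokens = ["court", "verdict", "court", "appeal", "court", "sentence"]
top_words = textrank_keywords(tokens, top_k=4)
```

Here "court" co-occurs with every other word, so it ends up ranked first, and the three words that each link only to "court" tie behind it.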

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Huang, Y.; Hou, S.; Li, G.; Yu, Z. Abstractive Summary of Public Opinion News Based on Element Graph Attention. Information 2023, 14, 97. https://doi.org/10.3390/info14020097


Chicago/Turabian style: Huang, Yuxin, Shukai Hou, Gang Li, and Zhengtao Yu. 2023. "Abstractive Summary of Public Opinion News Based on Element Graph Attention" Information 14, no. 2: 97. https://doi.org/10.3390/info14020097
