An Efficient Two-Stage Network Intrusion Detection System in the Internet of Things

Abstract

1. Introduction

- (1)

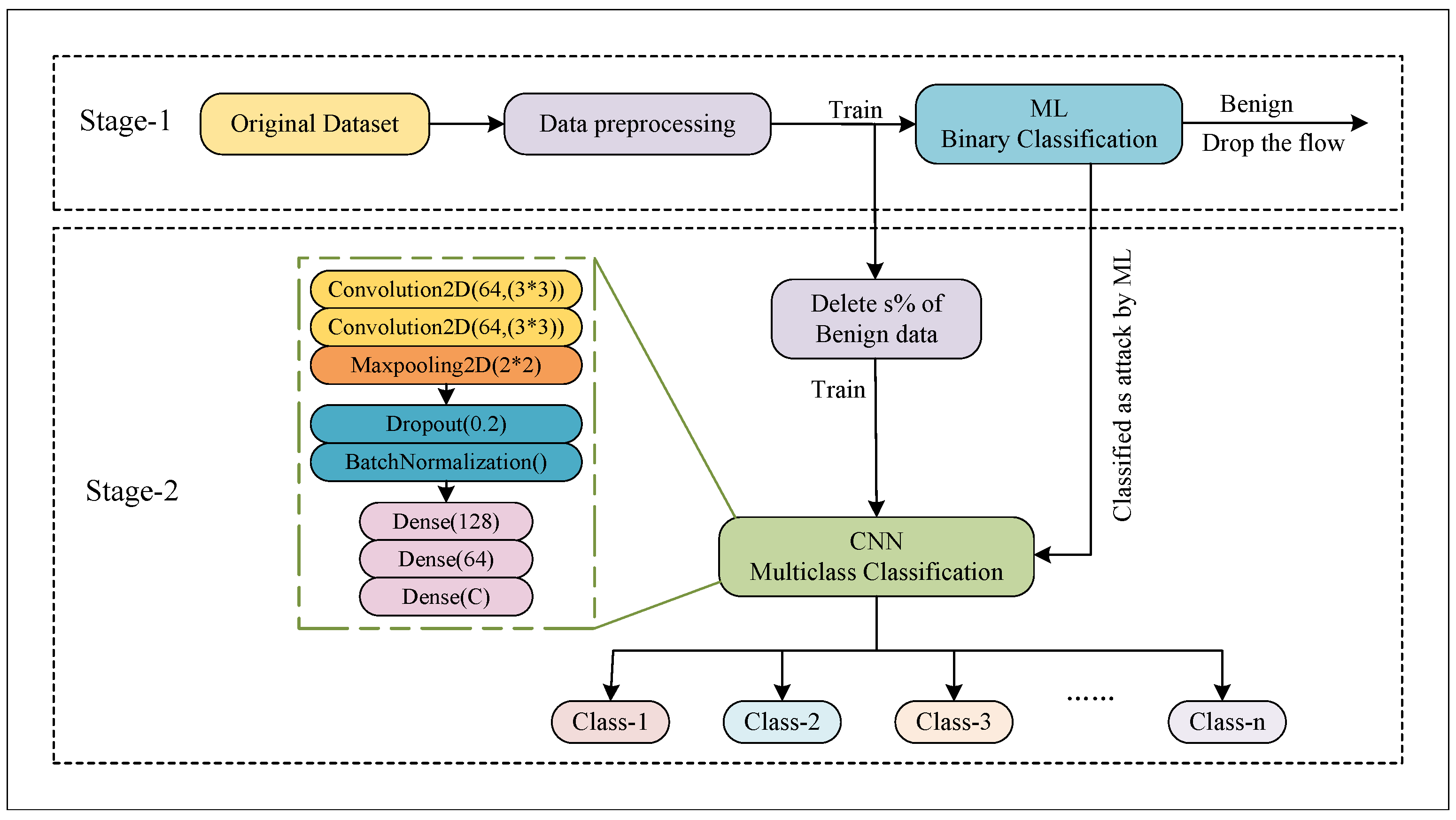

- For the intrusion detection of large-scale flow data, we propose a two-stage fine-grained network intrusion detection model that combines LightGBM algorithm and CNN. The model improves detection accuracy and efficiency while making full use of large-scale data.

- (2)

- In Stage-1, we use the LightGBM algorithm to identify normal and abnormal network traffic and compare six other classic machine learning classification algorithms, including RF, DT, LR, KNN, AdaBoost, and XGBoost. The experimental results show that the LightGBM algorithm has advantages in accuracy and time cost.

- (3)

- In Stage-2, we use CNN to perform fine-grained attack class detection on the samples predicted to be abnormal in Stage-1. Aiming at the problem of class imbalance, we use the improved SMOTE based on the imbalance ratio (IR-SMOTE) to study the effect of different class imbalance ratios in training set on the performance of the model. Experimental results show that the two-stage intrusion detection model can adapt well to imbalanced large-scale network flow data.
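The routing between the two stages described in the contributions above can be sketched as follows. This is a minimal illustration, not the authors' implementation: `two_stage_predict`, `toy_stage1`, and `toy_stage2` are hypothetical names, and the toy rules merely stand in for a trained LightGBM detector and a trained CNN.

```python
import numpy as np

def two_stage_predict(X, stage1_is_abnormal, stage2_attack_class, benign_label=0):
    """Route samples through a binary detector, then a fine-grained classifier."""
    X = np.asarray(X)
    # Stage-1: boolean mask, True where traffic is predicted abnormal.
    abnormal = np.asarray(stage1_is_abnormal(X), dtype=bool)
    # Every sample starts as benign; only flagged rows reach Stage-2.
    labels = np.full(len(X), benign_label, dtype=int)
    if abnormal.any():
        labels[abnormal] = stage2_attack_class(X[abnormal])
    return labels

# Hypothetical stand-ins for the trained models (assumptions for illustration).
def toy_stage1(X):
    return X[:, 0] > 0.5

def toy_stage2(X):
    # 6 and 1 are example class labels (DoS attacks-Hulk, SSH-Bruteforce).
    return np.where(X[:, 1] > 0.5, 6, 1)

X = np.array([[0.1, 0.9], [0.9, 0.9], [0.8, 0.2]])
print(two_stage_predict(X, toy_stage1, toy_stage2))  # prints [0 6 1]
```

Because Stage-2 only sees the rows flagged by Stage-1, the more expensive classifier runs on a small fraction of the traffic. Stage-2 may still return the benign label for a flagged row, which gives false alarms from Stage-1 a second chance to be corrected.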

2. Related Work

3. Proposed Solution

3.1. Benchmark Dataset

3.2. Stage-1

3.2.1. Data Preprocessing

3.2.2. Baseline Methods

3.3. Stage-2

3.3.1. IR-SMOTE

| Algorithm 1 IR-SMOTE |
|---|
| Input: Training set , where C is the total number of classes and is the number of samples in class i |
| Output: Resampled training set ; |
| 1: |
| 2: Oversampling coefficient |
| 3: |
| 4: for to C do |
| 5: if then |
| 6: for do |
| 7: Choose a sample from |
| 8: Randomly select a sample from the K nearest neighbor sample of |
| 9: r = random.random (0, 1) |
| 10: Synthetic sample |
| 11: Add the Synthetic sample to |
| 12: end for |
| 13: end if |
| 14: |
| 15: end for |
| 16: return |
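Since the symbols of Algorithm 1 did not survive in the listing above, the following is only a sketch of one plausible reading, not the authors' exact procedure. It assumes that a class is oversampled when the ratio of the largest class count to its own count exceeds the oversampling coefficient `ir`, that it is grown until this ratio is roughly `ir`, and that synthetic samples follow the standard SMOTE interpolation between a sample and one of its K nearest same-class neighbours. The function name `ir_smote` and its parameters are hypothetical.

```python
import numpy as np

def ir_smote(X, y, ir=10.0, k=5, seed=0):
    """Oversample classes whose imbalance ratio exceeds `ir` (assumed rule)."""
    rng = np.random.default_rng(seed)
    X, y = np.asarray(X, dtype=float), np.asarray(y)
    classes, counts = np.unique(y, return_counts=True)
    n_max = counts.max()
    X_parts, y_parts = [X], [y]
    for c, n_c in zip(classes, counts):
        # Assumption: skip classes that are already within the target ratio.
        if n_c < 2 or n_max / n_c <= ir:
            continue
        n_new = int(np.ceil(n_max / ir)) - n_c   # samples to synthesize
        X_c = X[y == c]
        kk = min(k, n_c - 1)
        # Brute-force pairwise distances; fine for small minority classes only.
        d = np.linalg.norm(X_c[:, None, :] - X_c[None, :, :], axis=2)
        np.fill_diagonal(d, np.inf)              # a sample is not its own neighbour
        nn = np.argsort(d, axis=1)[:, :kk]       # kk nearest neighbours per sample
        base = rng.integers(0, n_c, size=n_new)  # randomly chosen seed samples
        neigh = nn[base, rng.integers(0, kk, size=n_new)]
        r = rng.random((n_new, 1))               # interpolation factor in [0, 1)
        X_parts.append(X_c[base] + r * (X_c[neigh] - X_c[base]))
        y_parts.append(np.full(n_new, c))
    return np.vstack(X_parts), np.concatenate(y_parts)

# Toy data: 200 majority samples and 4 minority samples.
rng = np.random.default_rng(1)
X = np.vstack([rng.normal(0, 1, (200, 3)), rng.normal(5, 1, (4, 3))])
y = np.array([0] * 200 + [1] * 4)
X_res, y_res = ir_smote(X, y, ir=10.0, k=3)
print(np.bincount(y_res))  # prints [200  20]
```

With `ir=10`, the minority class grows from 4 to 20 samples, so the largest class is ten times its size instead of fifty times. A larger `ir` generates fewer synthetic samples, which is the knob that trades resampling cost against balance.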

3.3.2. Convolutional Neural Network

4. Experiments and Evaluation

4.1. Evaluation Metrics

4.2. Stage-1: Normal and Abnormal Recognition

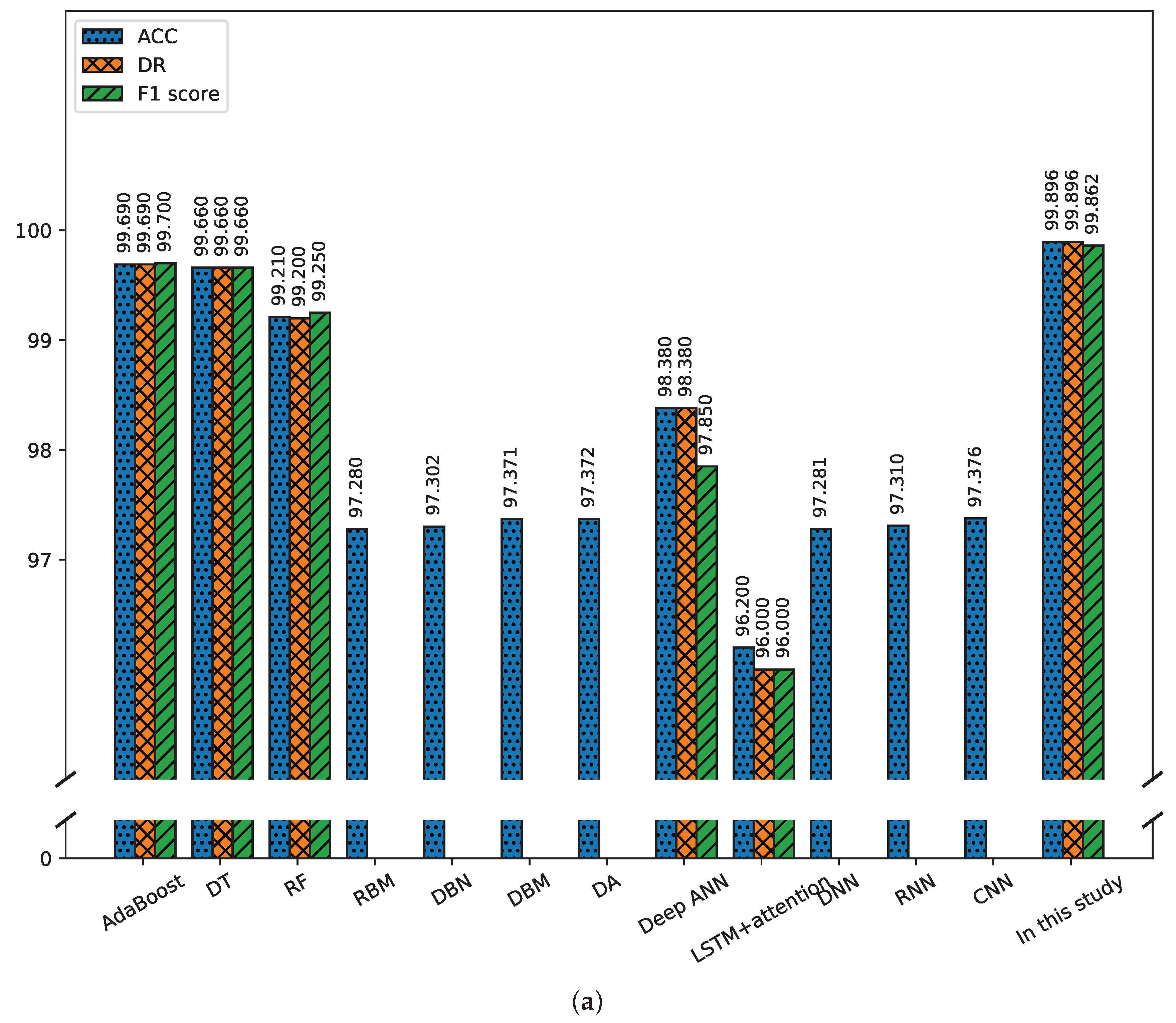

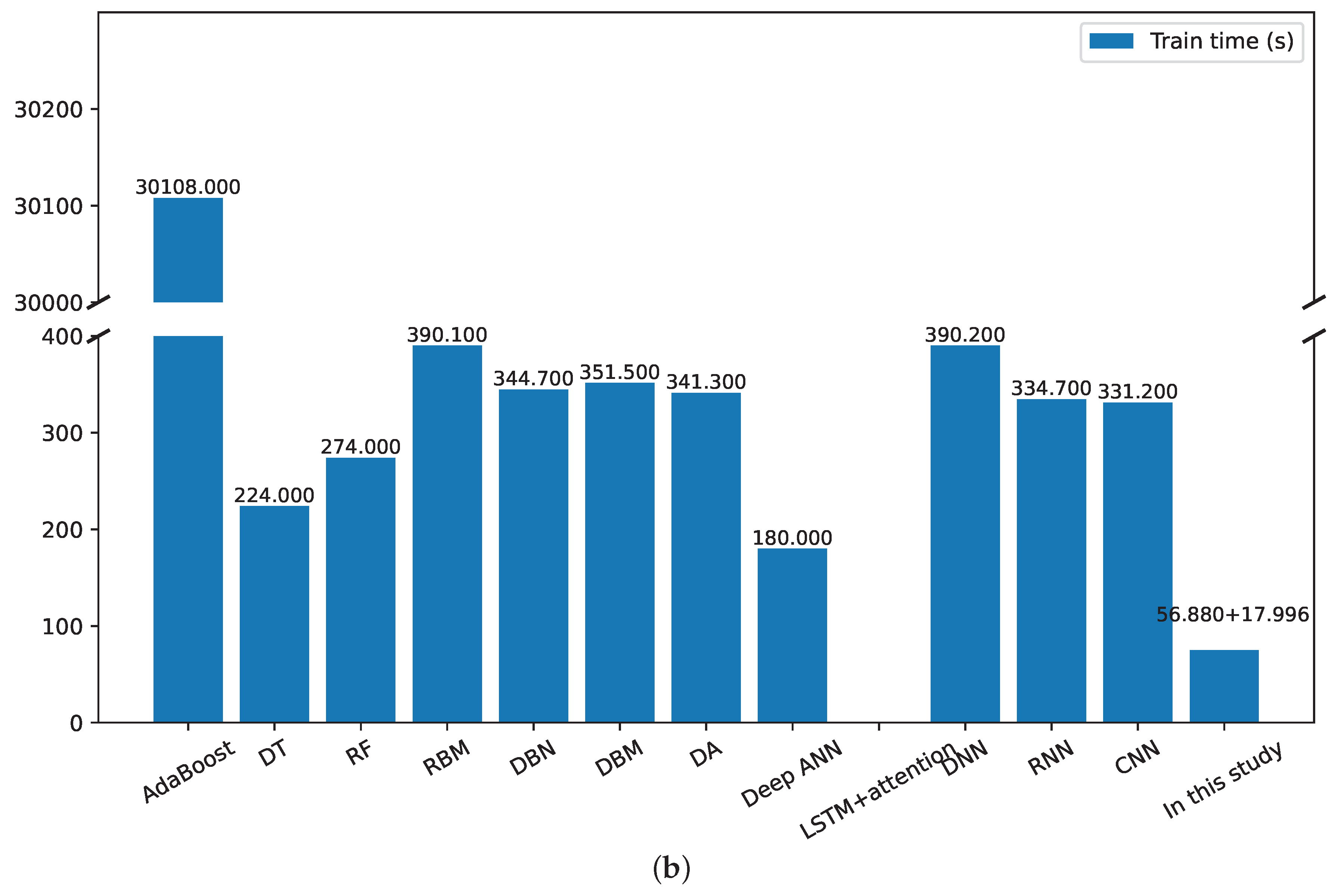

4.3. Stage-2: Fine-Grained Classification

4.4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Atzori, L.; Iera, A.; Morabito, G. The internet of things: A survey. Comput. Netw. 2010, 54, 2787–2805.

2. Vinayakumar, R.; Alazab, M.; Srinivasan, S.; Pham, Q.; Padannayil, S.K.; Simran, K. A visualized botnet detection system based deep learning for the internet of things networks of smart cities. IEEE Trans. Ind. Appl. 2020, 56, 4436–4456.

3. Vasan, D.; Alazab, M.; Venkatraman, S.; Akram, J.; Qin, Z. MTHAEL: Cross-architecture IoT malware detection based on neural network advanced ensemble learning. IEEE Trans. Comput. 2020, 69, 1654–1667.

4. Rehman, A.; Paul, A.; Yaqub, M.A.; Rathore, M.M.U. Trustworthy Intelligent Industrial Monitoring Architecture for Early Event Detection by Exploiting Social IoT. In Proceedings of the 35th Annual ACM Symposium on Applied Computing, SAC ’20, Virtual, 30 March–3 April 2020; pp. 2163–2169.

5. Liu, H.; Lang, B. Machine learning and deep learning methods for intrusion detection systems: A survey. Appl. Sci. 2019, 9, 4396.

6. Mahfouz, A.M.; Venugopal, D.; Shiva, S.G. Comparative analysis of ML classifiers for network intrusion detection. In Proceedings of the Fourth International Congress on Information and Communication Technology, London, UK, 27–28 February 2019; pp. 193–207.

7. Tesfahun, A.; Bhaskari, D.L. Intrusion detection using random forests classifier with SMOTE and feature reduction. In Proceedings of the 2013 International Conference on Cloud & Ubiquitous Computing & Emerging Technologies, Pune, India, 15–16 November 2013; pp. 127–132.

8. Bhavani, T.T.; Rao, M.K.; Reddy, A.M. Network intrusion detection system using random forest and decision tree machine learning techniques. In Proceedings of the First International Conference on Sustainable Technologies for Computational Intelligence, Jaipur, India, 29–30 March 2019; Springer: Singapore, 2020; pp. 637–643.

9. Pajouh, N.H.; Javidan, R.; Khayami, R.; Dehghantanha, A.; Choo, K.K.R. A two-layer dimension reduction and two-tier classification model for anomaly-based intrusion detection in IoT backbone networks. IEEE Trans. Emerg. Top. Comput. 2019, 7, 314–323.

10. Cavusoglu, U. A new hybrid approach for intrusion detection using machine learning methods. Appl. Intell. 2019, 49, 2735–2761.

11. Karatas, G.; Demir, O.; Sahingoz, O.K. Increasing the performance of machine learning-based IDSs on an imbalanced and up-to-date dataset. IEEE Access 2020, 8, 32150–32162.

12. Koroniotis, N.; Moustafa, N.; Sitnikova, E.; Slay, J. Towards developing network forensic mechanism for botnet activities in the IoT based on machine learning techniques. Mob. Netw. Manag. 2018, 235, 30–44.

13. Dhaliwal, S.S.; Nahid, A.A.; Abbas, R. Effective Intrusion Detection System Using XGBoost. Information 2018, 9, 149.

14. D’hooge, L.; Wauters, T.; Volckaert, B.; De Turck, F. Inter-dataset generalization strength of supervised machine learning methods for intrusion detection. J. Inf. Secur. Appl. 2020, 54, 102564.

15. Zhang, J.; Gardner, R.; Vukotic, I. Anomaly detection in wide area network meshes using two machine learning algorithms. Futur. Gener. Comp. Syst. 2019, 93, 418–426.

16. Zhang, H.; Huang, L.; Wu, C.Q.; Li, Z. An effective convolutional neural network based on SMOTE and gaussian mixture model for intrusion detection in imbalanced dataset. Comput. Netw. 2020, 177, 107315.

17. Bu, S.J.; Cho, S.B. A convolutional neural-based learning classifier system for detecting database intrusion via insider attack. Inf. Sci. 2020, 512, 123–136.

18. Nguyen, M.T.; Kim, K. Genetic convolutional neural network for intrusion detection systems. Future Gener. Comput. Syst. 2020, 113, 418–427.

19. Vasan, D.; Alazab, M.; Wassan, S.; Safaei, B.; Zheng, Q. Image-based malware classification using ensemble of CNN architectures (IMCEC). Comput. Secur. 2020, 92, 101748.

20. Almiani, M.; AbuGhazleh, A.; Al-Rahayfeh, A.; Atiewi, S.; Razaque, A. Deep recurrent neural network for IoT intrusion detection system. Simul. Model. Pract. Theory 2020, 101, 102031.

21. Zhang, H.; Wu, C.Q.; Gao, S.; Wang, Z.; Xu, Y.; Liu, Y. An effective deep learning based scheme for network intrusion detection. In Proceedings of the 2018 24th International Conference on Pattern Recognition (ICPR), Beijing, China, 20–24 August 2018; pp. 682–687.

22. Kanimozhi, V.; Jacob, T.P. Artificial intelligence based network intrusion detection with hyper-parameter optimization tuning on the realistic cyber dataset CSE-CIC-IDS2018 using cloud computing. In Proceedings of the 2019 International Conference on Communication and Signal Processing (ICCSP), Melmaruvathur, India, 4–6 April 2019; pp. 33–36.

23. Galar, M.; Fernandez, A.; Barrenechea, E.; Bustince, H.; Herrera, F. A review on ensembles for the class imbalance problem: Bagging-, boosting-, and hybrid-based approaches. IEEE Trans. Syst. Man Cybern. Part C 2012, 42, 463–484.

24. Elazhary, H. Internet of things (IoT), mobile cloud, cloudlet, mobile IoT, IoT cloud, fog, mobile edge, and edge emerging computing paradigms: Disambiguation and research directions. J. Netw. Comput. Appl. 2019, 128, 105–140.

25. Tahsien, S.M.; Karimipour, H.; Spachos, P. Machine learning based solutions for security of internet of things (IoT): A survey. J. Netw. Comput. Appl. 2020, 161, 18.

26. Lopez-Martin, M.; Carro, B.; Sanchez-Esguevillas, A.; Lloret, J. Conditional variational autoencoder for prediction and feature recovery applied to intrusion detection in IoT. Sensors 2017, 17, 1967.

27. Rathore, M.M.; Saeed, F.; Rehman, A.; Paul, A.; Daniel, A. Intrusion Detection Using Decision Tree Model in High-Speed Environment. In Proceedings of the 2018 International Conference on Soft-computing and Network Security (ICSNS), Coimbatore, India, 14–16 February 2018; pp. 1–4.

28. Hosseini, S.; Zade, B.M.H. New hybrid method for attack detection using combination of evolutionary algorithms, SVM, and ANN. Comput. Netw. 2020, 173, 15.

29. Almaiah, M.A.; Almomani, O.; Alsaaidah, A.; Al-Otaibi, S.; Bani-Hani, N.; Hwaitat, A.K.A.; Al-Zahrani, A.; Lutfi, A.; Awad, A.B.; Aldhyani, T.H.H. Performance Investigation of Principal Component Analysis for Intrusion Detection System Using Different Support Vector Machine Kernels. Electronics 2022, 11, 3571.

30. Alzaqebah, A.; Aljarah, I.; Al-Kadi, O.; Damaševičius, R. A Modified Grey Wolf Optimization Algorithm for an Intrusion Detection System. Mathematics 2022, 10, 999.

31. Gamage, S.; Samarabandu, J. Deep learning methods in network intrusion detection: A survey and an objective comparison. J. Netw. Comput. Appl. 2020, 169, 102767.

32. Abdulhammed, R.; Musafer, H.; Alessa, A.; Faezipour, M.; Abuzneid, A. Features dimensionality reduction approaches for machine learning based network intrusion detection. Electronics 2019, 8, 322.

33. Huang, S.; Lei, K. IGAN-IDS: An imbalanced generative adversarial network towards intrusion detection system in ad-hoc networks. Ad Hoc Netw. 2020, 105, 102177.

34. Lin, P.; Ye, K.; Xu, C.Z. Dynamic network anomaly detection system by using deep learning techniques. In Proceedings of the International Conference on Cloud Computing, San Diego, CA, USA, 25–30 June 2019; Volume 11513, pp. 161–176.

35. CSE-CIC-IDS2018 Dataset. Available online: https://www.unb.ca/cic/datasets/ids-2018.html (accessed on 27 November 2022).

36. Sharafaldin, I.; Lashkari, A.H.; Ghorbani, A.A. Toward generating a new intrusion detection dataset and intrusion traffic characterization. In Proceedings of the 4th International Conference on Information Systems Security and Privacy, Funchal, Portugal, 22–24 January 2018.

37. Ke, G.L.; Meng, Q.; Finley, T.; Wang, T.F.; Chen, W.; Ma, W.D.; Ye, Q.W.; Liu, T.Y. LightGBM: A highly efficient gradient boosting decision tree. In Advances in Neural Information Processing Systems 30; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Neural Information Processing Systems (NIPS): La Jolla, CA, USA, 2017; Volume 30.

38. Chawla, N.V.; Bowyer, K.W.; Hall, L.O.; Kegelmeyer, W.P. SMOTE: Synthetic minority over-sampling technique. J. Artif. Intell. Res. 2002, 16, 321–357.

39. Protas, E.; Bratti, J.D.; Gaya, J.F.O.; Drews, P.; Botelho, S.S.C. Visualization methods for image transformation convolutional neural networks. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 2231–2243.

40. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2015, arXiv:1409.1556v6.

41. Chicco, D.; Jurman, G. The advantages of the Matthews correlation coefficient (MCC) over F1 score and accuracy in binary classification evaluation. BMC Genom. 2020, 21, 13.

42. Ferrag, M.A.; Maglaras, L.; Moschoyiannis, S.; Janicke, H. Deep learning for cyber security intrusion detection: Approaches, datasets, and comparative study. J. Inf. Secur. Appl. 2020, 50, 102419.

| Article | Classifiers | Datasets | Evaluation Metric | Techniques | Findings |
|---|---|---|---|---|---|
| Koroniotis et al. [12] | DT, ANN, NB, ARM | UNSW-NB15 | ACC, FAR | Information gain and Weka tool | Uses four classification techniques (DT C4.5, ARM, ANN, and NB) to define and investigate botnets. |
| Zhang et al. [16] | CNN | CICIDS2017, UNSW-NB15 | ACC, DR, FAR, PRE, F1-score | SMOTE and GMM | A flow-based NID model that fuses SGM and 1D-CNN to detect highly imbalanced network traffic. |
| Almiani et al. [20] | RNN | NSL-KDD | ACC, PRE, Recall, F1-score, FPR, FNR | RNN | An IDS composed of cascaded filtering stages to detect specific types of attacks in IoT environments. |
| Zhang et al. [21] | MLP | UNSW-NB15 | ACC, PRE, Recall, F1-score | DAE and MLP | An effective IDS based on the deep learning techniques DAE and MLP. |
| Kanimozhi et al. [22] | ANN | CSE-CIC-IDS2018 | ACC, PRE, Recall, F1-score, AUC | GridSearchCV | An experimental ANN approach with hyper-parameter optimization on a realistic new IDS cyber dataset. |
| Lopez-Martin et al. [26] | ID-CVAE | NSL-KDD | Frequency, ACC, PRE, Recall, F1-score, FPR, NPV | CVAE | An anomaly-based supervised ML method based on the CVAE. |
| Rathore et al. [27] | C4.5 DT | KDD99 | ACC | FSR and BER | Proposes a real-time IDS for high-speed environments that uses fewer features. |
| Hosseini et al. [28] | SVM, ANN | NSL-KDD | PRE, ACC, F1-score, MCC, AUC | MGA-SVM and HGS-PSO-ANN | SVM is used to select relevant features; MGA-SVM is then combined with HGS-PSO-ANN. |
| Almaiah et al. [29] | SVM | KDD Cup’99, UNSW-NB15 | ACC, Sensitivity, F1-score | PCA and SVM | Studies the impact of different SVM kernel functions on classification performance. |
| Alzaqebah et al. [30] | ELM | UNSW-NB15 | ACC, F1-score, G-mean measures | GWO and IG | Optimizes the Grey Wolf Optimization algorithm (GWO) using information gain. |
| Abdulhammed et al. [32] | RF | CICIDS2017 | ACC, F1-score, FPR, TPR, PRE, Recall | UDBB | UDBB approach for imbalanced classes. |
| Huang et al. [33] | DNN | NSL-KDD, UNSW-NB15, CICIDS2017 | ACC, F1-score, AUC | IGAN | IGAN-IDS uses IGAN to generate representative samples for minority classes and counter class imbalance in intrusion detection. |

| Category | Attack Type | Class Label | Number | Volume (%) |
|---|---|---|---|---|
| Benign | / | 0 | 13,484,708 | 83.070 |
| Brute-force | SSH-Bruteforce | 1 | 187,589 | 1.156 |
| | FTP-Bruteforce | 2 | 193,360 | 1.191 |
| | Brute Force –XSS | 3 | 230 | 0.001 |
| | Brute Force –Web | 4 | 611 | 0.004 |
| Web attack | SQL Injection | 5 | 87 | 0.001 |
| DoS attack | DoS attacks-Hulk | 6 | 461,912 | 2.846 |
| | DoS attacks-SlowHTTPTest | 7 | 139,890 | 0.862 |
| | DoS attacks-Slowloris | 8 | 10,990 | 0.068 |
| | DoS attacks-GoldenEye | 9 | 41,508 | 0.256 |
| DDoS attack | DDOS attack-HOIC | 10 | 686,012 | 4.226 |
| | DDOS attack-LOIC-UDP | 11 | 1730 | 0.011 |
| | DDOS attack-LOIC-HTTP | 12 | 576,191 | 3.550 |
| Botnet | Bot | 13 | 286,191 | 1.763 |
| Infilteration | Infilteration | 14 | 161,934 | 0.998 |
| Total | / | / | 16,232,943 | 100 |

| | Predicted Negative | Predicted Positive |
|---|---|---|
| Actual Negative | TN | FP |
| Actual Positive | FN | TP |
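The metrics reported in the result tables can all be derived from the four cells of this confusion matrix. The sketch below uses the standard textbook definitions, which is an assumption here because the paper's own formulas are not reproduced in this text: DR is taken to be recall, TP / (TP + FN), and FAR is taken to be FP / (FP + TN). The function name `binary_metrics` is hypothetical.

```python
import math

def binary_metrics(tn, fp, fn, tp):
    """Compute common binary classification metrics from confusion-matrix counts."""
    acc = (tp + tn) / (tp + tn + fp + fn)
    dr = tp / (tp + fn)          # detection rate (recall), assumed definition
    far = fp / (fp + tn)         # false alarm rate, assumed definition
    precision = tp / (tp + fp)
    f1 = 2 * precision * dr / (precision + dr)
    # Matthews correlation coefficient; defined as 0 when the denominator vanishes.
    mcc_den = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / mcc_den if mcc_den else 0.0
    return {"ACC": acc, "DR": dr, "FAR": far,
            "Precision": precision, "F1": f1, "MCC": mcc}

# Toy counts chosen only for illustration.
metrics = binary_metrics(tn=90, fp=10, fn=5, tp=95)
for name, value in metrics.items():
    print(f"{name}: {100 * value:.3f}")
```

On heavily imbalanced traffic, accuracy is dominated by the benign class, so DR, FAR, and MCC are the more informative columns when comparing detectors.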

| Method | ACC | DR | FAR | Precision | F1-score | MCC | Train-Time (s) | Test-Time (s) |
|---|---|---|---|---|---|---|---|---|
| LR | 95.337 | 77.423 | 1.012 | 93.975 | 84.900 | 82.712 | 2849.512 | 0.188 |
| DT | 98.716 | 95.747 | 0.678 | 96.640 | 96.192 | 95.421 | 1581.116 | 0.704 |
| RF | 98.897 | 95.404 | 0.391 | 98.028 | 96.698 | 96.049 | 1001.335 | 3.583 |
| KNN | 99.027 | 95.437 | 0.241 | 98.774 | 97.077 | 96.514 | 51,138.024 | 10,967.225 |
| AdaBoost | 98.540 | 94.419 | 0.620 | 96.878 | 95.633 | 94.768 | 6018.889 | 35.698 |
| XGBoost | 99.149 | 95.087 | 0.023 | 99.883 | 97.426 | 96.959 | 3989.552 | 3.786 |
| LightGBM | 99.135 | 95.009 | 0.024 | 99.878 | 97.383 | 96.909 | 56.880 | 1.286 |

| Class | Class Label | Train | Val | Test | Total |
|---|---|---|---|---|---|
| Benign | 0 | 485,449 | 53,939 | 638 | 540,026 |
| SSH-Bruteforce | 1 | 135,064 | 15,007 | 37,518 | 187,589 |
| FTP-Bruteforce | 2 | 139,219 | 15,469 | 38,672 | 193,360 |
| Brute Force –XSS | 3 | 166 | 18 | 37 | 221 |
| Brute Force –Web | 4 | 440 | 49 | 66 | 555 |
| SQL Injection | 5 | 63 | 7 | 7 | 77 |
| DoS attacks-Hulk | 6 | 332,577 | 36,953 | 92,382 | 461,912 |
| DoS attacks-SlowHTTPTest | 7 | 100,721 | 11,191 | 27,978 | 139,890 |
| DoS attacks-Slowloris | 8 | 7913 | 879 | 2172 | 10,964 |
| DoS attacks-GoldenEye | 9 | 29,885 | 3321 | 8302 | 41,508 |
| DDOS attack-HOIC | 10 | 493,928 | 54,881 | 137,203 | 686,012 |
| DDOS attack-LOIC-UDP | 11 | 1245 | 139 | 346 | 1730 |
| DDOS attack-LOIC-HTTP | 12 | 414,858 | 46,095 | 115,208 | 576,161 |
| Bot | 13 | 206,058 | 22,895 | 57,213 | 286,166 |
| Infilteration | 14 | 116,592 | 12,955 | 5112 | 134,659 |
| Total | / | 2,464,178 | 273,798 | 522,854 | 3,260,830 |
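To make the degree of imbalance in the Train column concrete, the following sketch computes per-class imbalance ratios for a few classes from the table. It assumes the usual definition, the largest class count divided by the class count, and uses a hypothetical target ratio of 100 to show which classes an imbalance-ratio threshold would select and what size they would be grown to. The helper names and the value 100 are illustrative assumptions, not settings from the paper.

```python
import math

# Train counts copied from the table above (a subset of classes).
# DDOS attack-HOIC is the largest class in the Train column.
train_counts = {
    "DDOS attack-HOIC": 493_928,
    "DoS attacks-Slowloris": 7_913,
    "DDOS attack-LOIC-UDP": 1_245,
    "Brute Force -Web": 440,
    "Brute Force -XSS": 166,
    "SQL Injection": 63,
}

def imbalance_ratios(counts):
    """Ratio of the largest class count to each class count."""
    n_max = max(counts.values())
    return {name: n_max / n for name, n in counts.items()}

def oversampling_targets(counts, ir):
    """Target size for every class whose imbalance ratio exceeds `ir`."""
    n_max = max(counts.values())
    return {name: math.ceil(n_max / ir)
            for name, n in counts.items() if n_max / n > ir}

ratios = imbalance_ratios(train_counts)
targets = oversampling_targets(train_counts, ir=100)  # 100 is an arbitrary example
for name, ratio in ratios.items():
    target = targets.get(name, train_counts[name])
    print(f"{name:24s} ratio={ratio:10.2f} target={target}")
```

Under these assumptions, SQL Injection is outnumbered by the largest class by a factor of several thousand, while DoS attacks-Slowloris stays below the example threshold and would be left untouched.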

| Class | SMOTE | | | | |
|---|---|---|---|---|---|
| Benign | 17.712 | 14.890 | 16.144 | 16.458 | 17.398 |
| SSH-Bruteforce | 99.984 | 99.984 | 99.984 | 99.984 | 99.984 |
| FTP-Bruteforce | 100 | 100 | 100 | 100 | 100 |
| Brute Force –XSS | 75.676 | 100 | 91.892 | 97.297 | 100 |
| Brute Force –Web | 98.485 | 68.182 | 93.939 | 90.909 | 89.394 |
| SQL Injection | 57.143 | 71.429 | 71.429 | 71.429 | 71.429 |
| DoS attacks-Hulk | 100 | 100 | 100 | 100 | 100 |
| DoS attacks-SlowHTTPTest | 100 | 100 | 100 | 100 | 100 |
| DoS attacks-Slowloris | 100 | 100 | 100 | 100 | 100 |
| DoS attacks-GoldenEye | 99.988 | 99.988 | 99.988 | 99.988 | 99.976 |
| DDOS attack-HOIC | 100 | 100 | 100 | 100 | 100 |
| DDOS attack-LOIC-UDP | 100 | 100 | 100 | 100 | 100 |
| DDOS attack-LOIC-HTTP | 100 | 100 | 100 | 100 | 100 |
| Bot | 100 | 100 | 100 | 100 | 100 |
| Infilteration | 99.980 | 99.980 | 99.980 | 99.980 | 100 |
| DR | 99.896 | 99.890 | 99.894 | 99.895 | 99.896 |
| ACC | 99.896 | 99.890 | 99.894 | 99.895 | 99.896 |
| Precision | 99.902 | 99.897 | 99.900 | 99.901 | 99.903 |
| F1-score | 99.862 | 99.853 | 99.859 | 99.860 | 99.862 |
| MCC | 95.922 | 95.922 | 95.913 | 95.922 | 95.836 |
| Train-time (s) | 17.996 | 17.475 | 17.691 | 19.890 | 51.050 |
| Test-time (s) | 8.790 | 8.083 | 8.851 | 8.470 | 9.057 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, H.; Zhang, B.; Huang, L.; Zhang, Z.; Huang, H. An Efficient Two-Stage Network Intrusion Detection System in the Internet of Things. Information 2023, 14, 77. https://doi.org/10.3390/info14020077
