1. Introduction

Programming ability is widely seen as a highly useful skill in our modern technological society. The need, therefore, arises to examine the variables and tools that can influence students’ success in acquiring this skill [1]. Learning to code involves a variety of factors, including the educational process, instructional materials, the technology employed, and metacognitive aspects. It is clear that engaging lessons and activities only have value when they have an impact on the pupils [2]. To this end, teaching aids and innovative teaching techniques may increase students’ feelings of success [3] and aid in their development of confidence, which is consistent with constructivist teaching methods and the theories of Piaget and Vygotsky [4,5,6,7,8].

Many students find fundamental programming concepts abstract and complicated upon first encountering them and experience challenges and misconceptions as a result [9]. Teachers could benefit from guidance on how to teach these students effectively, since problems can result from absent or inadequate teaching methodologies [10,11]. Numerous strategies have been used to address these challenges, for instance, leveraging mobile technology [12] and pair programming [13]. Competitive programming challenges and contests are also popular [14], as is the use of automatic graders [15] in assessment. Research has also been carried out into the most effective sequence in which to introduce new concepts to students [16].

Visual Execution Environments (VEEs) have been shown to be effective in introducing programming concepts to students taking introductory programming courses at the university level (commonly referred to as CS1 students) [17] as well as to younger students [16]. Learning through game programming [18] and using a combination of VEEs and game programming [19] have also been found to be effective approaches.

The contribution of this research is a rigorous investigation into how to teach fundamental programming concepts at the CS1 level. In this research, a Java Visual Execution Environment (JVEE), created specifically for CS1 students, was evaluated as a teaching, learning, and practice tool for computer programming. The study aimed to address two research questions (RQs):

RQ1: Can this cohort of CS1 students benefit from the use of a Java Visual Execution Environment to enhance their understanding of programming concepts?

RQ2: Which programming concepts are typically easier to understand, and which are more challenging?

The programming concepts covered here can be found in a typical “Introduction to Programming” course, including input and output structures, conditionals, loops, arrays, functions, recursion, and files.

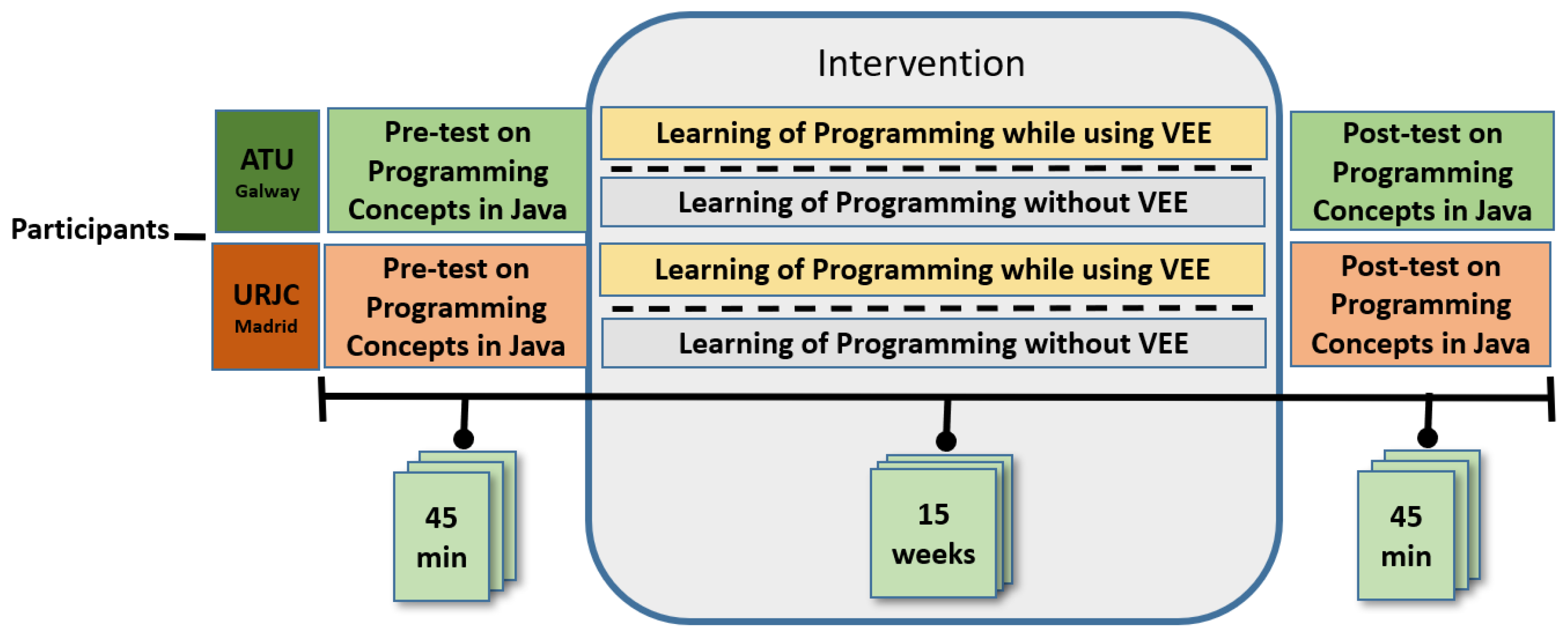

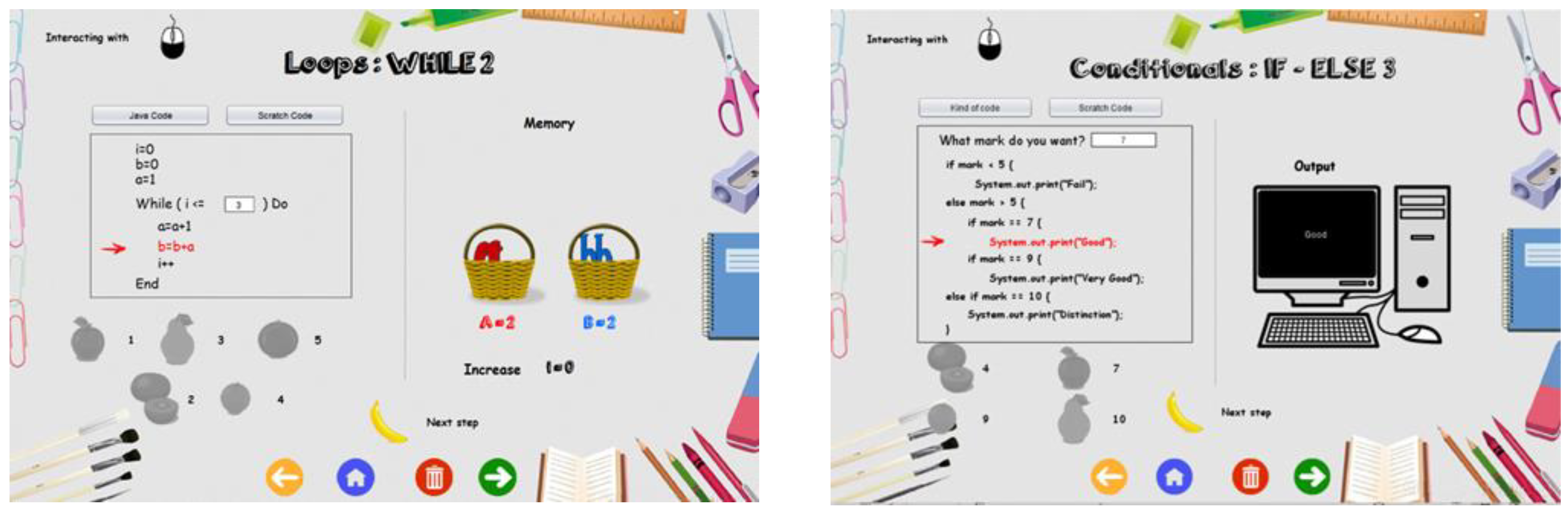

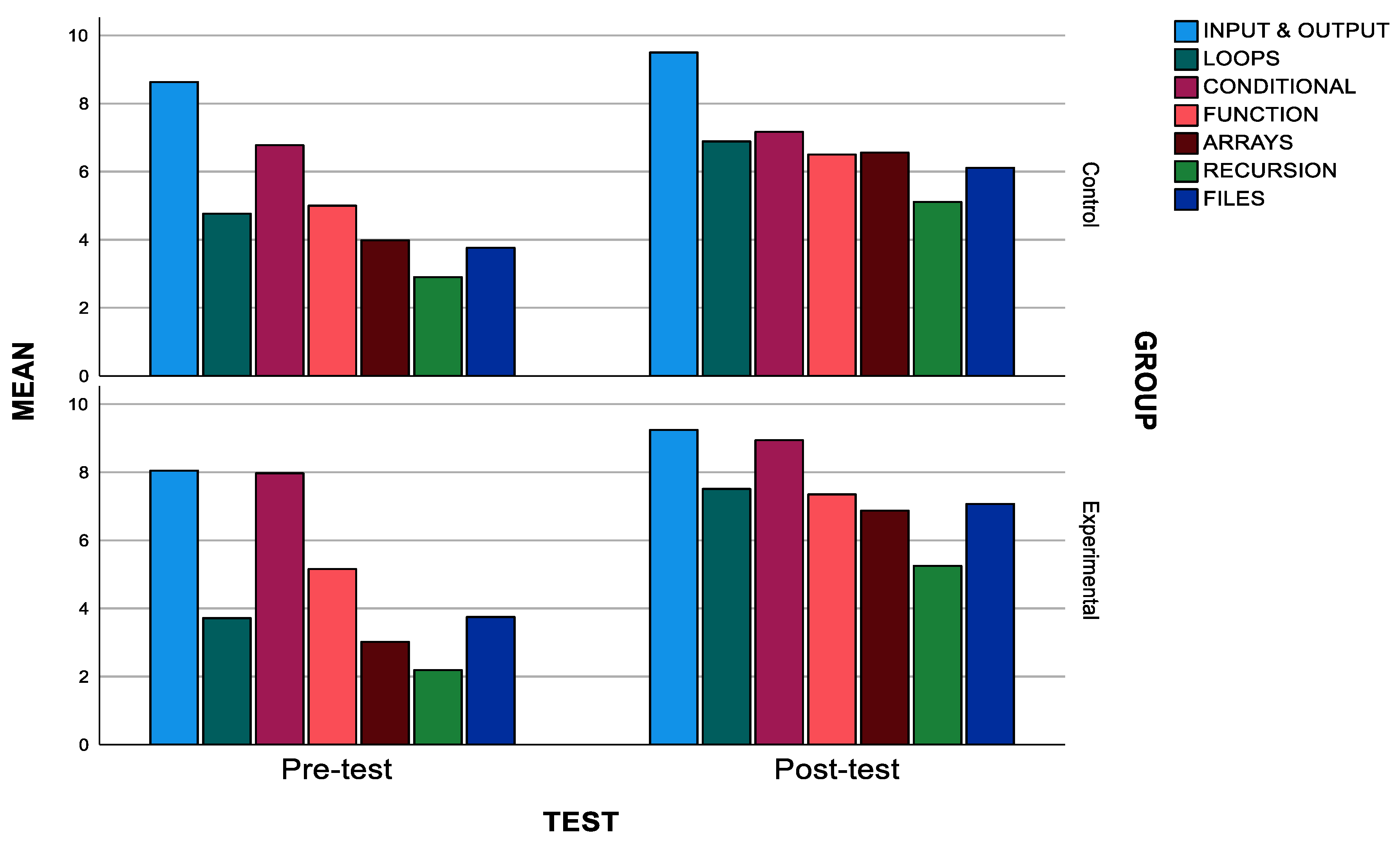

This paper examined whether a group of 63 CS1 undergraduate students, registered for a Java-based introductory programming course at one of two universities (in Galway, Ireland, and Madrid, Spain), could develop their programming skills under the guidance of the JVEE. It also looked into whether the benefit of the JVEE was topic-dependent, i.e., whether some topics could be taught more effectively with it than others. The same lessons and order of concepts were followed at both universities, and four very experienced and coordinated tutors taught the module to the CS1 students in each university. The student cohort was divided into four groups: two were designated as experimental groups and the other two as control groups. The JVEE was used in the instruction of the experimental groups, whereas the control groups received the usual instruction given to CS1 students at the participating sites. For the experimental groups, each concept was first introduced using the JVEE. The JVEE provided interactive exercises with visuals illustrating the execution of the code in steps. It also gave context for the concepts through pre-made, on-the-spot exercises based on metaphors for each idea, and offered practice through suggested activities in Java.
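To give a rough sense of what showing the execution of code in steps can convey, the following minimal sketch records the state of a loop at each iteration. It is a plain-text stand-in written for this illustration and is not the JVEE itself; the class name `LoopTrace`, the method `traceSum`, and the sample array are all hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical text-only stand-in for a step-by-step execution view; not the JVEE. */
public class LoopTrace {

    /** Sums the array and records one line per loop iteration describing the state. */
    public static List<String> traceSum(int[] values) {
        List<String> steps = new ArrayList<>();
        int sum = 0;
        for (int i = 0; i < values.length; i++) {
            sum += values[i];
            // Capture the loop index, the element just read, and the running total.
            steps.add("step " + (i + 1) + ": i=" + i
                    + ", values[i]=" + values[i] + ", sum=" + sum);
        }
        return steps;
    }

    public static void main(String[] args) {
        // Sample input chosen only for illustration.
        for (String step : traceSum(new int[] {3, 5, 2})) {
            System.out.println(step);
        }
    }
}
```

Each recorded line corresponds to one iteration, so a learner can follow how the index and the running total change together.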

The JVEE was used for the whole of the first semester of 2021–2022 in Madrid, from 13 September to 22 December, and then in Galway, Ireland, in the second semester of 2022–2023, from 23 January to 5 May. The improvement in the students’ learning was evaluated with a test of 28 multiple-choice questions covering the programming fundamentals, administered both before and after the course. The findings revealed that the use of the JVEE resulted in a significant improvement in students’ programming ability in all topics except conditionals. The improvement was particularly pronounced for loops, recursion, files, arrays, and functions.

This paper is organized as follows: Section 2 examines the theoretical framework for enhancing programming learning, complementary methods for teaching programming, and Visual Execution Environments. Section 3 describes the research design, pedagogical strategy, research participants, and measurement instruments. Section 4 presents the experiment’s findings, both overall and by programming concept. Section 5 discusses the limitations of the study. Section 6 summarizes the results and suggests areas for further research.

5. Discussion and Conclusions

The contribution of this research is a rigorous investigation into how to teach fundamental CS1-level programming topics and how such teaching affects students’ learning gains. In this research, a Java Visual Execution Environment (JVEE), created for CS1 students, was evaluated as a teaching, learning, and practice tool for computer programming. The study addressed two research questions: whether this cohort of CS1 students could benefit from using the JVEE to enhance their understanding of programming concepts (RQ1), and which programming concepts are easier or more challenging to understand (RQ2).

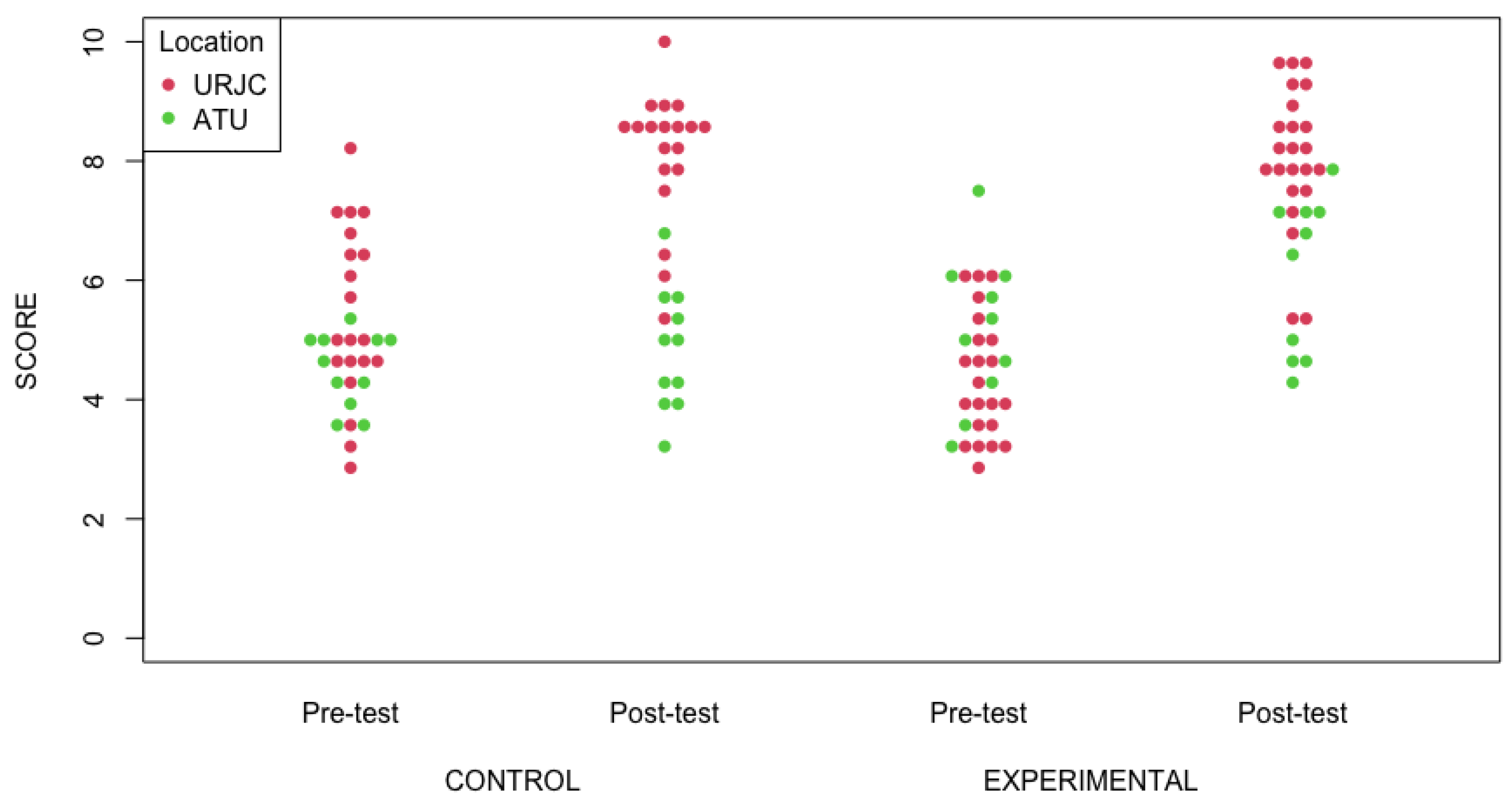

As discussed in the preceding section, statistically significant improvements in programming knowledge were seen in both the control and experimental groups after the course of instruction. Since the question was whether the use of the JVEE had an impact on the students’ learning, it was necessary to compare the control group (which did not use the JVEE) directly with the experimental group (which did). The pre-test results of the two groups were homogeneous, so the groups started from the same conditions and their post-tests could be compared directly. This comparison showed a statistically significant difference between the post-tests of the control and experimental groups.

To account for all of these factors together rather than separately, a more advanced statistical model, ANCOVA, was used. In this model, the pre-test result was the covariate, and the factors were location (Spain or Ireland) and group (control or experimental). Before applying the model, it was verified that the necessary conditions were met, namely, normality of the data and homoscedasticity.
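One common way to write a model of this kind is sketched below. It is an illustration under assumptions, not the exact specification reported by the authors. The symbols are introduced here only for this purpose: $Y_{ijk}$ is the post-test score of student $k$ at location $i$ in group $j$, $X_{ijk}$ is that student's pre-test score, and $\bar{X}$ is the overall pre-test mean.

```latex
Y_{ijk} = \mu + \alpha_i + \beta_j + (\alpha\beta)_{ij}
        + \gamma\,(X_{ijk} - \bar{X}) + \varepsilon_{ijk},
\qquad \varepsilon_{ijk} \sim \mathcal{N}(0, \sigma^2)
```

Here $\alpha_i$ is the location effect, $\beta_j$ the group effect, $(\alpha\beta)_{ij}$ the location-by-group interaction, and $\gamma$ the slope of the pre-test covariate. The normality and homoscedasticity checks correspond to the assumptions on $\varepsilon_{ijk}$: normally distributed errors with a common variance $\sigma^2$.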

It was observed that the pre-test grade did not influence the model, whereas the influence of the students’ location was statistically significant (on average, grades were better in Spain than in Ireland), as was that of the group to which they belonged (the experimental group’s post-test scores were better than those of the control group in both locations). The interaction of location and group was not statistically significant. The partial eta-squared values allowed the effect size of each significant factor to be measured. Both the location factor (Spain or Ireland) and the group factor (experimental or control) corresponded to a large effect, though the value was twice as large for the group factor.
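For reference, partial eta-squared for a factor is conventionally defined from the model's sums of squares as shown below. The symbols $SS_{\text{effect}}$ and $SS_{\text{error}}$ are notation assumed here and are not taken from the paper.

```latex
\eta_p^2 = \frac{SS_{\text{effect}}}{SS_{\text{effect}} + SS_{\text{error}}}
```

A frequently cited rule of thumb, usually attributed to Cohen, treats values of roughly 0.01, 0.06, and 0.14 as small, medium, and large effects, respectively. These thresholds are a convention rather than something this study reports.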

The study then examined each dimension separately: input and output, loops, conditionals, functions, arrays, recursion, and files. As shown in the previous sections, the mean value of every dimension increased or was maintained between the pre- and post-tests, in both the experimental and control groups. This was complemented by identifying the dimensions in which the experimental group’s mean values rose the most.

We examined these per-concept changes between the pre- and post-tests to determine whether they were statistically significant, using Student’s t-test for paired samples. For the control group, there was a statistically significant improvement between the pre- and post-tests for all the concepts (input and output, loops, functions, arrays, recursion, and files) except conditionals (p-value > 0.05). The same pattern occurred in the experimental group, where the mean values increased even more for, in this order, loops, recursion, files, arrays, and functions; again, conditionals was the only concept for which the improvement was not statistically significant.
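As a minimal sketch of how a paired-samples t statistic is computed, the Java example below takes each student's pre- and post-test scores and works from the per-student differences. It is not the authors' analysis code: the class name `PairedTTest`, the method `tStatistic`, and the scores in `main` are hypothetical and chosen only for illustration.

```java
/** Minimal paired-samples t statistic; the data in main are hypothetical, not the study's. */
public class PairedTTest {

    /** Returns t = mean(d) / (sd(d) / sqrt(n)) for the differences d = post - pre. */
    public static double tStatistic(double[] pre, double[] post) {
        if (pre.length != post.length || pre.length < 2) {
            throw new IllegalArgumentException("need two equal-length samples with n >= 2");
        }
        int n = pre.length;

        // Mean of the per-student differences.
        double mean = 0.0;
        for (int i = 0; i < n; i++) {
            mean += (post[i] - pre[i]) / n;
        }

        // Sum of squared deviations of the differences from their mean.
        double sumSq = 0.0;
        for (int i = 0; i < n; i++) {
            double dev = (post[i] - pre[i]) - mean;
            sumSq += dev * dev;
        }

        double sampleVariance = sumSq / (n - 1); // unbiased estimate, n - 1 degrees of freedom
        return mean / Math.sqrt(sampleVariance / n);
    }

    public static void main(String[] args) {
        double[] pre = {10, 12, 9, 14};   // hypothetical pre-test scores
        double[] post = {13, 14, 13, 16}; // hypothetical post-test scores
        System.out.printf("t = %.4f with %d degrees of freedom%n",
                tStatistic(pre, post), pre.length - 1);
    }
}
```

The statistic is then compared against a Student's t distribution with n − 1 degrees of freedom to obtain the p-value. That step is omitted here because it needs the distribution's cumulative distribution function, which the Java standard library does not provide.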

Author Contributions

Conceptualization, R.H.-N., C.P., J.F., P.P.-B. and M.D.; methodology, R.H.-N., C.P., J.F., P.P.-B. and M.D.; software, R.H.-N.; validation, R.H.-N., C.P., J.F., P.P.-B. and M.D.; formal analysis, C.P.; investigation, R.H.-N., C.P., J.F., P.P.-B. and M.D.; resources, R.H.-N., C.P., J.F., P.P.-B. and M.D.; data curation, R.H.-N.; writing—original draft preparation, R.H.-N., C.P. and J.F.; writing—review and editing, R.H.-N., C.P. and J.F.; visualization, R.H.-N. and C.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Spanish Ministry of Universities’ “José Castillejo” Program for Mobility Stays Abroad for Young Doctors, grant number CAS21/00413 to Raquel Hijón-Neira.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to ethical reasons.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Lau, W.W.; Yuen, A.H. Modelling programming performance: Beyond the influence of learner characteristics. Comput. Educ. 2011, 57, 1202–1213. [Google Scholar] [CrossRef]

- Astin, W.A. College Retention Rates Are Often Misleading; Chronicle of Higher Education: Washington, DC, USA, 1993. [Google Scholar]

- Stuart, V.B. Math Course or Math Anxiety? Natl. Counc. Teach. Math. 2000, 6, 330. [Google Scholar]

- Piaget, J. The Moral Judgement of the Child; Penguin Books: New York, NY, USA, 1932. [Google Scholar]

- Piaget, J. Origins of Intelligence in Children; International Universities Press: New York, NY, USA, 1952. [Google Scholar]

- Vygotsky, L.S. Thought and Language, 2nd ed.; MIT Press: Cambridge, MA, USA, 1962. [Google Scholar]

- Vygotsky, L.S. Mind in Society: The Development of Higher Psychological Process; Harvard University Press: Cambridge, MA, USA, 1978. [Google Scholar]

- Vygotsky, L.S. The Genesis of Higher Mental Functions. In Cognitive Development to Adolescence; Richardson, K., Sheldon, S., Eds.; Erlbaum: Hove, UK, 1988. [Google Scholar]

- Renumol, V.; Jayaprakash, S.; Janakiram, D. Classification of Cognitive Difficulties of Students to Learn Computer Programming; Indian Institute of Technology: New Delhi, India, 2009; p. 12. [Google Scholar]

- Barker, L.J.; McDowell, C.; Kalahar, K. Exploring factors that influence computer science introductory course students to persist in the major. ACM SIGCSE Bull. 2009, 41, 153–157. [Google Scholar] [CrossRef]

- Coull, N.J.; Duncan, I. Emergent Requirements for Supporting Introductory Programming. Innov. Teach. Learn. Inf. Comput. Sci. 2011, 10, 78–85. [Google Scholar] [CrossRef]

- Maleko, M.; Hamilton, M.; D’Souza, D. Novices’ Perceptions and Experiences of a Mobile Social Learning Environment for Learning of Programming. In Proceedings of the 12th International Conference on Innovation and Technology in Computer Science Education (ITiCSE), Haifa, Israel, 3–5 July 2012. [Google Scholar]

- Williams, L.; Wiebe, E.; Yang, K.; Ferzli, M.; Miller, C. In Support of Pair Programming in the Introductory Computer Science Course. Comput. Sci. Educ. 2002, 12, 197–212. [Google Scholar] [CrossRef]

- Combéfis, S.; Beresnevičius, G.; Dagienė, V. Learning Programming through Games and Contests: Overview, Characterisation and Discussion. Olymp. Inform. 2016, 10, 39–60. [Google Scholar] [CrossRef]

- Combéfis, S. Automated Code Assessment for Education: Review, Classification and Perspectives on Techniques and Tools. Software 2022, 1, 3–30. [Google Scholar] [CrossRef]

- Hijón-Neira, R.; Pérez-Marin, D.; Pizarro, C.; Connolly, C. The Effects of a Visual Execution Environment and Makey Makey on Primary School Children Learning Introductory Programming Concepts. IEEE Access 2020, 8, 217800–217815. [Google Scholar] [CrossRef]

- Hijón-Neira, R.; Connolly, C.; Palacios-Alonso, D.; Borrás-Gené, O. A Guided Scratch Visual Execution Environment to Introduce Programming Concepts to CS1 Students. Information 2021, 12, 378. [Google Scholar] [CrossRef]

- Ouahbi, I.; Kaddari, F.; Darhmaoui, H.; Elachqar, A.; Lahmine, S. Learning Basic Programming Concepts by Creating Games with Scratch Programming Environment. Procedia-Soc. Behav. Sci. 2015, 191, 1479–1482. [Google Scholar] [CrossRef]

- Hijon-Neira, R.B.; Velázquez-Iturbide, Á.; Pizarro-Romero, C.; Carriço, L. Game programming for improving learning experience. In Proceedings of the 2014 Conference on Innovation & Technology in Computer Science Education, Uppsala, Sweden, 21–25 June 2014; pp. 225–230. [Google Scholar] [CrossRef]

- Jenkins, T. The motivation of students of programming. ACM SIGCSE Bull. 2001, 33, 53–56. [Google Scholar] [CrossRef]

- Kurland, D.M.; Pea, R.D.; Lement, C.C.; Mawby, R. A Study of the Development of Programming Ability and Thinking Skills in High School Students. J. Educ. Comput. Res. 1986, 2, 429–458. [Google Scholar] [CrossRef]

- Alshaigy, B.; Ott, L. Novice programming students: Common difficulties and misconceptions. In Proceedings of the 43rd ACM Technical Symposium on Computer Science Education, Raleigh, NC, USA, 29 February–3 March 2012. [Google Scholar]

- Luxton-Reilly, A.; Simon; Albluwi, I.; Becker, B.A.; Giannakos, M.; Kumar, A.N.; Ott, L.; Paterson, J.; Scott, M.; Sheard, J.; et al. Introductory programming: A systematic literature review. In Proceedings of the ITiCSE 2018 Companion: Proceedings Companion of the 23rd Annual ACM Conference on Innovation and Technology in Computer Science Education, Larnaca, Cyprus, 2–4 July 2018; Association for Computing Machinery (ACM): New York, NY, USA, 2018; pp. 55–106. [Google Scholar] [CrossRef]

- Robins, A.; Rountree, J.; Rountree, N. Learning and teaching programming: A review and discussion. Comput. Sci. Educ. 2003, 13, 137–172. [Google Scholar] [CrossRef]

- Brooks, F.P. No Silver Bullet: Essence and Accidents of Software Engineering. In Proceedings of the Tenth World Computing Conference, Dublin, Ireland, 1–5 September 1986; pp. 1069–1076. [Google Scholar]

- Mishra, D.; Ostrovska, S.; Hacaloglu, T. Exploring and expanding students’ success in software testing. Inf. Technol. People 2017, 30, 927–945. [Google Scholar] [CrossRef]

- Corney, M.; Hanks, B.; McCauley, R. ‘Explain in Plain English’ Questions Revisited: Data Structures Problems. In Proceedings of the 45th ACM Technical Symposium on Computer Science Education, Atlanta, GA, USA, 5–8 March 2014. [Google Scholar]

- Clancy, M.J.; Linn, M.C. Case studies in the classroom. ACM SIGCSE Bull. 1992, 24, 220–224. [Google Scholar] [CrossRef]

- Arto, V.; Luukkainen, M.; Kurhila, J. Multi-faceted support for learning computer programming. In Proceedings of the 42nd ACM Technical Symposium on Computer Science Education, Dallas, TX, USA, 9–12 March 2011. [Google Scholar]

- Denny, P.; Luxton-Reilly, A.; Harmer, J. Students use of the PeerWise system. In Proceedings of the 13th Annual SIGCSE Conference on Innovation and Technology in Computer Science Education, Madrid, Spain, 30 June–2 July 2008; ACM: New York, NY, USA; pp. 73–77. [Google Scholar]

- Ragonis, N.; Uri, L. Factors explaining success in an introductory computer science course. ACM Trans. Comput. Educ. (TOCE) 2018, 19, 1–21. [Google Scholar]

- Chandramouli, M.; Zahraee, M.; Winer, C. A fun-learning approach to programming: An adaptive Virtual Reality (VR) platform to teach programming to engineering students. In Proceedings of the IEEE International Conference on Electro/Information Technology, Milwaukee, WI, USA, 5–7 July 2014. [Google Scholar]

- Silapachote, P.; Srisuphab, A. Teaching and learning computational thinking through solving problems in Artificial Intelligence: On designing introductory engineering and computing courses. In Proceedings of the 2016 IEEE International Conference on Teaching, Assessment, and Learning for Engineering (TALE), Bangkok, Thailand, 7–9 December 2016. [Google Scholar]

- Liu, C.-C.; Cheng, Y.-B.; Huang, C.-W. The effect of simulation games on the learning of computational problem solving. Comput. Educ. 2011, 57, 1907–1918. [Google Scholar] [CrossRef]

- Kazimoglu, C.; Kiernan, M.; Bacon, L.; Mackinnon, L. A Serious Game for Developing Computational Thinking and Learning Introductory Computer Programming. Procedia-Soc. Behav. Sci. 2012, 47, 1991–1999. [Google Scholar] [CrossRef]

- Garcia-Iruela, M.; Hijón-Neira, R. Experiencia de Juegos Serios en el aula de Formación Profesional. In Proceedings of the V Congreso Internacional de Videojuegos y Educación, (CIVE, 2017), La Laguna, Spain, 7–9 June 2017; Available online: https://riull.ull.es/xmlui/bitstream/handle/915/6682/CIVE17_paper_17.pdf?sequence=1 (accessed on 18 October 2023).

- Saad, A.; Shuff, T.; Loewen, G.; Burton, K. Supporting undergraduate computer science education using educational robots. In Proceedings of the ACMSE 2018 Conference, Tuscaloosa, AL, USA, 29–31 March 2012. [Google Scholar]

- Weintrop, W.; Wilensky, U. Comparing Block-Basedand Text-Based Programming in High School Computer Science Classrooms. ACM Trans. Comput. Educ. 2017, 18, 1. [Google Scholar] [CrossRef]

- Martínez-Valdés, J.A.; Velázquez-Iturbide, J.; Neira, R.H. A (Relatively) Unsatisfactory Experience of Use of Scratch in CS1. In Proceedings of the 5th International Conference on Technological Ecosystems for Enhancing Multiculturality, Cadiz, Spain, 18–20 October 2017. [Google Scholar]

- Fitzgerald, S.; Lewandowski, G.; Mccauley, R.; Murphy, L.; Simon, B.; Thomas, L.; Zander, C. Debugging: Finding, fixing and flailing, a multi-institutional study of novice debuggers. Comput. Sci. Educ. 2008, 18, 93–116. [Google Scholar] [CrossRef]

- Salguero, A.; Griswold, W.G.; Alvara Cdo Porter, L. Understanding Sources of Student Struggle in Early Computer Science Courses. In Proceedings of the 17th ACM Conference on International Computing Education Research (ICER 2021), Virtual Event, 16–19 August 2021; ACM: New York, NY, USA, 2021; pp. 319–333. [Google Scholar]

- Fogg, B.J. A behavior model for persuasive design. In Proceedings of the 4th International Conference on Persuasive Technology, Claremont, CA, USA, 26–29 April 2009; pp. 1–7. [Google Scholar]

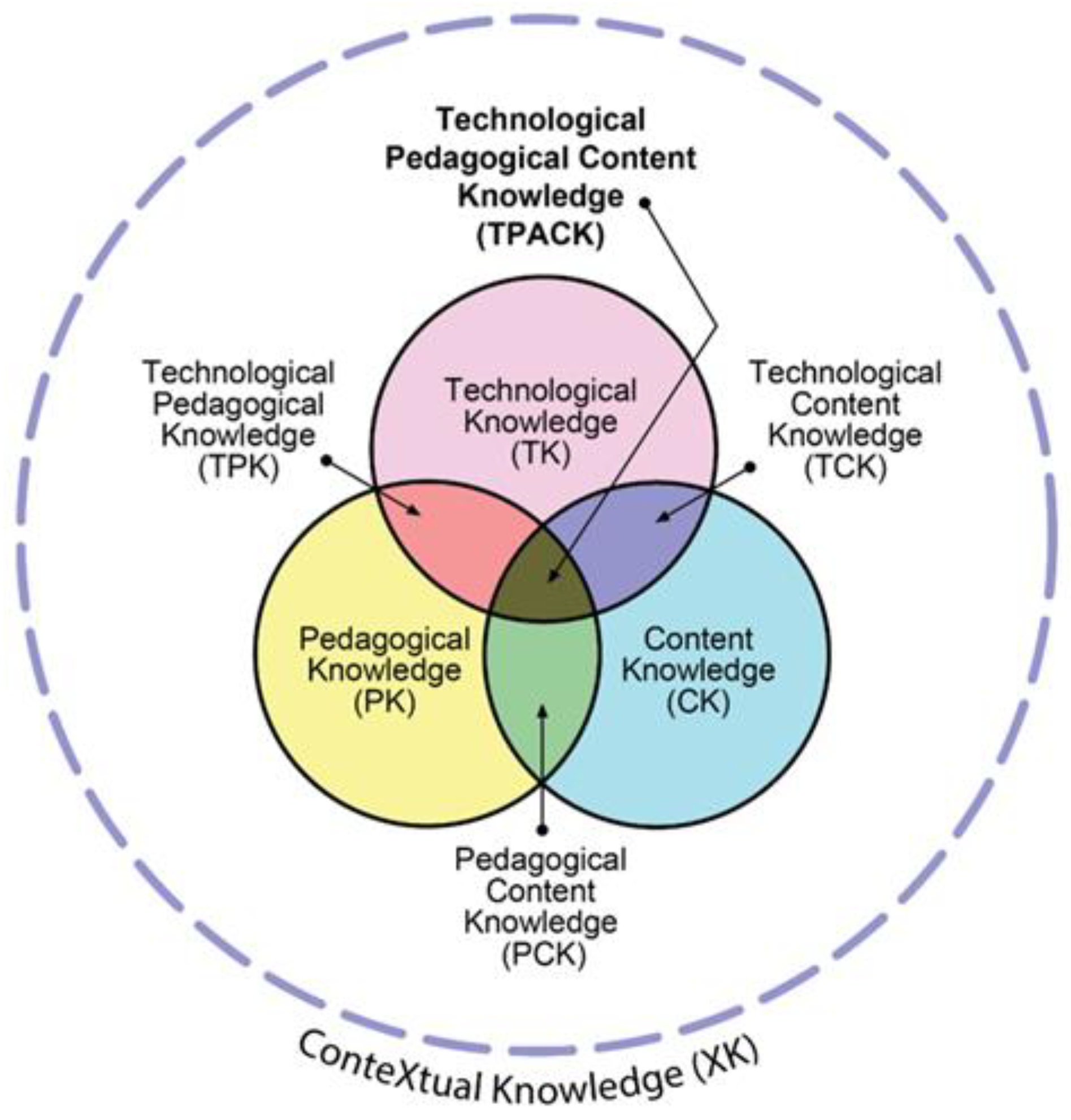

- Mishra, P.; Koehler, M. Technological Pedagogical Content Knowledge: A Framework for Teacher Knowledge. Teach. Coll. Rec. 2006, 108, 1017–1054. [Google Scholar] [CrossRef]

- Mishra, P.; Koehler, M.J. Introducing Technological Pedagogical Content Knowledge; American Educational Research Association: Vancouver, BC, Canada, 2008; pp. 1–16. [Google Scholar]

- Hijón-Neira, R.; Connolly, C.; Pizarro, C.; Pérez-Marín, D. Prototype of a Recommendation Model with Artificial Intelligence for Computational Thinking Improvement of Secondary Education Students. Computers 2023, 12, 113. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).