Abstract

The purpose of this study is to examine the impact of educational software characteristics on software performance through the mediating role of user acceptance. Our approach allows for a deeper understanding of the factors that contribute to the effectiveness of educational software by bridging the fields of educational technology, psychology, and human–computer interaction, offering a holistic perspective on software adoption and performance. This study is based on a sample collected from public and private education institutes in Northern Greece and on data obtained from 236 users. The statistical method employed is structural equation modeling (SEM), estimated with SPSS AMOS. The findings of this study suggest that user acceptance and performance appraisal are strongly interrelated in educational applications. The study argues that user acceptance is positively related to the performance of educational software and constitutes the central mediating construct between educational software characteristics and performance. Additional findings indicate that familiarity with computers and experience in choral music are positively related to the perceived performance of the educational software. Our conclusions help in understanding the psychological and behavioral aspects of technology adoption in the educational setting. Findings are discussed in terms of their practical usefulness in education and further research.

1. Introduction

Technological advances in the field of human–computer interaction (HCI) have been fruitful when it comes to educational systems or educational software (Edu S/W). Among the grand challenges listed by Stephanidis et al. [1], learning and creativity “is not a new concept, and many types of technologies are already used in schools today”. Yet, the effectiveness and feasibility of such systems are an issue concerning the teachers, who need to evaluate an educational computer software product prior to its selection for the curriculum [2]. Therefore, a variety of evaluation frameworks have been developed for these purposes. The earliest framework that is most widely accepted among both communities of researchers and teachers is the systematic evaluation of computer-based education [3]. This framework introduces 14 pedagogical dimensions and 10 human–computer interaction (HCI) dimensions to be evaluated. Each dimension analyzes a feature of the educational technology (e.g., goal orientation, motivation, user activity) and has its topic positioned on a spectrum with two poles. More specifically, the motivation dimension constituted the main factor for students to engage the technology and was situated between the poles of extrinsic and intrinsic motivation.

Several evaluation approaches have been based on this framework, introducing new adaptations [4,5,6,7,8,9]. These adaptations derive from the necessities that arise from the technological advances that inevitably support novelties in education. Therefore, the evaluative dimensions need to be regularly adjusted and enriched. Additionally, certain rubrics have been developed aiming at the evaluation of real-time interactive systems of theoretical knowledge [10,11]. Although real-time interactive systems give educational applications a creative tool, since they are able to transmit more than mere theoretical knowledge, few dimensions or rubrics have been proposed that cover the acquisition of practical skills [12]. Dimensions that focus on the evaluation of vocal skills learning are even scarcer and take the early approach of Carole [13] as their benchmark.

Taking this gap into account, the current study focuses on the assessment of a developed self-learning environment for individuals interested in learning Byzantine music. The educational system captures multimodal data that consist of sound and gestures, and by using ML algorithms (i.e., hidden Markov models (HMM) and dynamic time warping (DTW) algorithms included in the “Gesture Follower” software developed at IRCAM [14]), it interprets the information contained in the input data. The music genre on which the interface was trained is Byzantine music, which presents a peculiarity in that, for each note, the absolute frequency value is not essential. Regarding the dimensions of the assessment and their relationship, the approach was to evaluate the software characteristics, performance, and user acceptance separately.

The rest of the paper is organized as follows. In Section 2, the necessary theoretical background is provided along with related works, in terms of the different assessment dimensions and the domain under study. In Section 3, we present our methodology, whereas in Section 4, we outline the results. In Section 5, we discuss the results by providing implications to researchers and practitioners. Finally, Section 6 reports the threats to validity, and Section 7 concludes the paper.

2. Related Work and Literature Review

In this section, we present related and background work, which is vital for the understanding of this paper and study. First of all, we present Edu S/W specifically in the domain of vocal music in order to provide some related work in the aspect of vocal music. Moreover, for each of the main pillars of the study (user acceptance, software characteristics, and software performance), we present some background information and the relationships between them.

2.1. Edu S/W in Vocal Music

Although the work related to the use of Edu S/W or information and communications technology (ICT) in the teaching of vocal music is limited, some publications can be found. Tejada et al. [15] examine the use of an Edu S/W called Cantus in the teaching of vocal music to students aged 11–15 during the first four courses of elementary music studies. They remarked that of the 21 music teachers who participated in the evaluation of their software, 16 used technology when teaching music. They found that the software was positively evaluated by the participating teachers for its technical elements and pedagogical value. A larger scale study was conducted in Italy with 22 different Edu S/Ws, which are widely used in Europe [16]. The study focused on teachers and found that the majority of them were not able to evaluate the systems, but those who were able to do so rated the educational systems highly.

Wang [17] conducted research on an artificial intelligence-based vocal teaching system that enables students to practice vocal music independently while simultaneously allowing teachers to keep track of the students’ learning through reasoning and analysis. During their research, Wang conducted a survey where 82% of the students that participated preferred computer-based education to traditional music education. Similar approaches for different teaching methodologies, by utilizing the newer technological advantages, have been used in other studies as well. VR Edu S/Ws have been proposed in order to be able to capture the facial expressions of the students in order to help with the approaches of the instructors [18,19]. Moreover, Jun et al. [20] created an Edu S/W based on Android in order to help professional singers who want to improve their skills or teach others how to sing.

As these studies pave the way for further exploration and implementation of technology in music education, it becomes evident that the fusion of Edu S/W and innovative approaches holds great potential for shaping the future of vocal music instruction. Finally, with the limited related work in this specific domain, we can see the value of this study in the domain of vocal music.

2.2. User Acceptance

A user’s attitude towards a new technology significantly affects its acceptance. Any negative perception the user has of the technology creates difficulties for its adoption [21]. User acceptance of an Edu S/W is a complicated topic that is described by the technology acceptance model (TAM) and is also influenced by other social conditions [22]. The technology acceptance model proposes that two primary factors influence a user’s intention to use a technology: perceived usefulness and perceived ease of use [23]. Users are more likely to accept and use a technology if they perceive it as useful and believe that it can enhance their productivity or help them achieve their goals. In the context of Edu S/W, students and educators are more likely to adopt software if they see it as beneficial for their learning process. Perceived usefulness can be influenced by factors such as improved learning outcomes and increased efficiency in educational tasks. Furthermore, users are also more likely to accept and use a technology if they perceive it as easy to use and user-friendly. For Edu S/W, this means that students and educators are more inclined to use software that is intuitive, requires minimal effort to use and navigate, and does not involve a steep learning curve. According to Ibrahim et al. [24], a major part of the perceived ease of use of software also has to do with the user’s computer self-efficacy, that is, whether they believe they are capable of using the software. In addition, perceived ease of use significantly affects the user’s intention to use the Edu S/W. Combining the above, we see that user acceptance is influenced by the software itself but also by the attitude and confidence of its user. Finally, since the literature points to a connection between user acceptance and other aspects of the software, this study can pave the way for connecting the three pillars, especially in a specific domain.

2.3. Evaluation and Characteristics

Since the early stages of Edu S/W development, numerous processes have been proposed to evaluate Edu S/Ws. These processes were generally based on specific structures with relevant evaluative dimensions aimed at assessing the quality of instructional applications (e.g., Jareno et al., 2016; Lee and Sloan, 2015).

Additionally, other types of evaluation have been employed to assess instructional applications. Lewis [25] and Vlachogianni and Tselios [26] have utilized the System Usability Scale (SUS) approach, while Papadakis et al. [27] used the Evaluation Tool for Educational Apps (E.T.E.A.), which constitutes a thirteen-item assessment instrument with psychometric properties. Considering that, according to TAM, perceived usability is a major construct in evaluations, Lewis [28] investigated the similarities of widely used questionnaires designed to measure perceived usability, such as the Computer System Usability Questionnaire (CSUQ) and the System Usability Scale (SUS). These questionnaires were later extended by Alhadreti [29].

Widespread internet accessibility led to dynamic developments in e-learning platforms and massive open online courses (MOOCs), leading to the proposal of several software evaluation frameworks for these products. Aiming at evaluating university courses using technologically enhanced education, 43 usability heuristics were categorized into eight distinct factors based on their empirical validation. The eight identified factors are ease of navigation, design quality, information architecture, credibility, functionality quality, content quality, simplicity, and learnability. According to [30], these factors exhibit strong psychometric properties. In practice, narrowing down the aforementioned factors, Hasan [31] concluded that in educational sites, content and navigation were the most considered evaluation criteria, while the organization or architecture was the least considered one. Therefore, dimensions that describe user satisfaction and how the information is provided [32] are currently considered essential for evaluating S/W applications.

In summary, most Edu S/W evaluation methods utilize a common approach that involves the use of dimensions to measure student motivation and engagement (the latter through user activity), the effectiveness of the application based on user experience with the study topic, and the design, which is expected to accommodate individual preferences. These dimensions are also used in the operational model of this study.

In this study, we argue that the quality of the software characteristics should be evaluated according to their reflection on the acceptance of the users. When the user’s experiences are positive, such as satisfaction from the use of the software, commitment to learning from the software, and motivation to use the software again, the quality of the software characteristics is acceptable. In support of this argument, Torkzadeh et al. [33] and Park and Lee [34] found that there is a positive relationship between positive users’ attitudes and the acceptable quality of software characteristics, and Huang [35] concluded that user satisfaction encourages the continued use of cognitive applications. This evidence underlines the importance of conducting our study.

2.4. User Evaluation and Performance

A summative assessment is generally the most common process for student evaluation. The student’s performance is translated into a numerical quantity, which is provided as feedback at the end of the learning session [36].

Conole [37] proposed a 12-dimensional system to evaluate both the platform and students in e-learning environments. The dimensions include openness, scalability, use of multimedia, level of communication, degree of collaboration, learning pathway, quality assurance, amount of reflection, certification, formality of learning, autonomy, and diversity. Building upon this framework, several approaches to extending evaluation have been suggested [38]. In addition, Franke et al. [39] introduced the affinity for technology interaction (ATI) scale, which is an interaction style rooted in the need for cognition construct (NFC). This scale has shown promise, having been studied in more than 1500 instances. Albelbisi et al. [32] argue that user satisfaction is an essential dimension of self-learning applications. After examining the relationship between training, user attitude, and tool self-efficacy, by implementing an Internet self-efficacy scale and a computer user attitude, Torkzadeh and Dyke [40] concluded that training improved the self-efficacy.

Furthermore, various evaluation approaches have targeted specific groups, including kindergarten children [27], utilizing different structures such as usability and efficiency. Some methodologies combine inspection techniques with evaluation patterns that describe the activities to be performed during user testing [41]. Regarding MOOCs, Zaharias and Poylymenakou [42] developed a questionnaire that links web and instructional design parameters to intrinsic motivation for learning, introducing a new usability measure. Additionally, new theories on pedagogy assessment are being developed, which need to be implemented in practice [43]. In summary, concerning software performance, its effectiveness, efficiency, and quality are considered important evaluative dimensions.

Regardless of the evaluation approach or the field to which the provided knowledge belongs, the question of whether a relationship exists between user acceptance, software (S/W) performance, and S/W characteristics emerges in several evaluation surveys. Jawahar et al. [44] suggest that “user performance can be significantly enhanced by shaping end users’ acceptance or predisposition toward working with computers, teaching them to set specific and challenging goals, and enhancing their beliefs in effectively learning and using computing technology”. With similar reasoning, Hale [45] concluded that “acceptance towards singing voice were related to singing participation and interest in singing activities”. Furthermore, Huang [35] supports the idea that user satisfaction is an essential concept in Edu S/W evaluations.

3. Case Study

3.1. Research Questions

In order to study the relationship between Edu S/W characteristics, performance, and user acceptance of our system, we have formulated and set the following research questions:

RQ1: Are Edu S/W characteristics positively related to user acceptance?

RQ2: Is user acceptance positively related to Edu S/W performance?

RQ3: Are Edu S/W characteristics positively related to Edu S/W performance?

In this study, we argue that the quality of the software characteristics should be evaluated according to their reflection on user acceptance, which is addressed by RQ1. When the user acceptance of the given system is positive, such as satisfaction with the use of the software, the quality of the software characteristics is acceptable. Moreover, with RQ2, we plan to shed some light on the effect that user acceptance has on the performance of the educational system. This is going to help us better understand the performance of the educational system and know which aspects of the system should change in order to improve it. Finally, taken together, RQ1 and RQ2 test whether user acceptance mediates the relationship between Edu S/W characteristics and performance. RQ3 was added to test whether this mediation is of the “partial type” rather than full, that is, whether the characteristics are also related to performance directly.

3.2. Sample

The research was conducted in the city of Thessaloniki in Greece in April of 2021. The sample that participated in the evaluation study consisted of 236 participants from various Greek cities, studying at two institutes. The data were collected from the Department of Music Science and Art of the University of Macedonia (UoM) and from “Agios Therapontas” Orthodox Church’s art workshop, which provides Byzantine music lessons. All participants came from these two organizations: 168 of the respondents were studying at UoM and 68 at the church’s art workshop. The participants came from various learning grades of music, and in terms of educational level, most of them were undergraduate or postgraduate students. After using the music learning application for approximately 45 min, they were asked to fill in a questionnaire. A total of 260 questionnaires were distributed, of which 236 were answered, giving a response rate of 90.77 percent.

The participant group consisted of 69.9 percent male and 30.1 percent female attendees. The sample may seem to be unbalanced gender-wise in terms of percentages; however, in reality, it reflects the actual percentage of gender participation in Orthodox Church chanting, where female chanters are significantly less common [46]. Furthermore, 10.1 percent were domain experts, while the rest of the participants were simple users. The participants’ ages ranged from 19 to 25 years, while their rate of familiarity with computer systems was 74.2 percent. In general, most of the participants were choristers and cantors, with a lot of experience in Byzantine, choral, or European music, or in all of these genres.

3.3. Measures

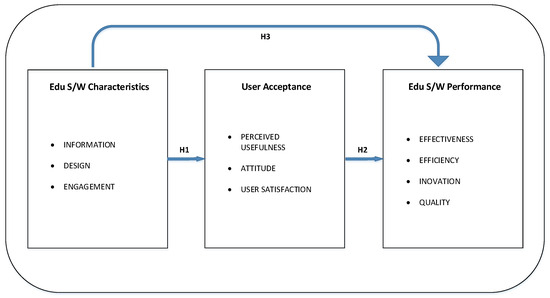

The proposed operational model of the study is presented in Figure 1. This model assumes that the user acceptance and predisposition of the individuals who use the Edu S/W mediate in the relationship between the characteristics and the performance of the Edu S/W.

Figure 1. The operational model of the study.

The construct of Edu S/W characteristics consists of three key dimensions, adapted from Lee and Sloan [47]. First is “Instruction” (renamed to “Information”), which is composed of three items: (a) rigor, (b) value of errors, and (c) level of material. Secondly, “Design” is composed of the following: (a) screen design, (b) ease of use, (c) goal orientation, and (d) cultural sensitivity. Finally, “Engagement” is composed of three items: (a) learner control, (b) aesthetics, and (c) utility. All of the aforementioned items were measured using a 5-level Likert scale (1 = completely disagree to 5 = completely agree). Sample items include “RIGOR: The objectives of the Edu S/W are explicitly stated”; “SCREEN DESIGN: The Edu S/W interface is well-organized”; and “LEARNER CONTROL: The Edu S/W allows users to select the level where they will begin engaging”.

The construct of Edu S/W performance consists of four items, reflecting the architecture of Katou and Budhwar [48]. These items are the following: “Effectiveness”, which describes whether the application achieves its objectives; “Efficiency”, which describes whether the user strives to achieve the objectives of each task provided by the application; “Innovation”, which evaluates the application as a product and the experience it provides; and “Quality”, which describes whether the application as a product is of high quality. These items were also measured using a 5-level ordinal scale (1 = very bad to 5 = very good).

The construct of user acceptance consists of six key dimensions, adapted from Park [49], of which we used three. These key dimensions are “Perceived usefulness”, “Attitude”, and “User Satisfaction”. The items were measured using a 5-level Likert scale (1 = completely disagree to 5 = completely agree).

Several additional individual variables were used as demographic controls in order to rule out alternative explanations of the findings [50]. More specifically, the demographic measures included the following: (a) gender (1 = male, 2 = female); (b) age (in years); and (c) responder’s ID (1 = expert, 2 = simple user). Furthermore, the following were measured on a 5-level scale: (a) educational level; (b) computer familiarity; (c) role in Byzantine music; (d) experience in Byzantine music; (e) experience in choral music; (f) experience in European music; and (g) experience in stringed musical instruments.

3.4. Consistency of the Survey

Well-accepted and validated items, reviewed in Section 2, were used. A validation of ergonomic criteria for the evaluation of human–computer interaction was also commissioned to determine content validity. Internal consistency was investigated by computing Cronbach’s alphas [51]. Table 1 shows that the scales are reliable, since all Cronbach alphas are higher than 0.70 [52]. Additionally, a confirmatory factor analysis (CFA) using SPSS AMOS was applied to obtain the average variance extracted (AVE) per dimension. The AVE values reported in Table 1 are higher than 0.50, an indication of an acceptable level of convergent validity [53].
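The two reliability measures named above can be illustrated with a minimal sketch. It assumes the textbook definitions (Cronbach's alpha from item and total-score variances, AVE as the mean of squared standardized loadings); the function names, item scores, and loadings are hypothetical and are not the study's data or the SPSS AMOS procedure.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a scale.

    items: one list of scores per item, all of equal length
    (one entry per respondent).
    """
    k = len(items)
    if k < 2:
        raise ValueError("at least two items are required")
    # Total score per respondent across all items.
    totals = [sum(row) for row in zip(*items)]
    sum_item_var = sum(variance(col) for col in items)
    return k / (k - 1) * (1 - sum_item_var / variance(totals))


def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized factor loadings."""
    return sum(l * l for l in loadings) / len(loadings)


# Hypothetical 5-level Likert responses: 3 items, 6 respondents.
scores = [
    [4, 5, 3, 4, 2, 5],
    [4, 4, 3, 5, 2, 5],
    [5, 5, 2, 4, 3, 4],
]
alpha = cronbach_alpha(scores)

# Hypothetical standardized loadings for one dimension.
ave = average_variance_extracted([0.80, 0.75, 0.70])

print(f"alpha = {alpha:.3f} (reliable if > 0.70)")
print(f"AVE   = {ave:.3f} (convergent validity if > 0.50)")
```

With real data, each dimension's item columns would be passed to `cronbach_alpha`, and the standardized loadings from the CFA output would be passed to `average_variance_extracted`.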

Table 1. Means, Standard Deviations, Consistency and Reliability Measures, and Bivariate Correlations for all Variables.

Construct discriminant validity was assessed by examining two factors: firstly, if the correlation coefficients between pairs of constructs are significantly different from unity and, secondly, whether the square root of each factor’s AVE is larger than its correlations with other factors. Table 1 presents the correlation coefficients of all constructs used in the study, and it is visible that correlation coefficients differ significantly from unity, and they are smaller than the square root of each factor’s AVE.
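The second of these checks, comparing each correlation with the square roots of the AVEs, can be sketched as follows. The construct names mirror the study, but every numeric value is hypothetical, and the function name is an illustrative choice. The first check (difference from unity) requires standard errors and is not covered here.

```python
import math


def discriminant_violations(ave, correlations):
    """Return construct pairs whose correlation is NOT smaller than
    the square root of the AVE of both constructs.

    ave: dict mapping construct name -> AVE
    correlations: dict mapping (construct_a, construct_b) -> correlation
    """
    violations = []
    for (a, b), r in correlations.items():
        threshold = min(math.sqrt(ave[a]), math.sqrt(ave[b]))
        if abs(r) >= threshold:
            violations.append((a, b))
    return violations


# Hypothetical AVE values and inter-construct correlations.
ave = {"characteristics": 0.70, "acceptance": 0.72, "performance": 0.68}
correlations = {
    ("characteristics", "acceptance"): 0.78,
    ("acceptance", "performance"): 0.74,
    ("characteristics", "performance"): 0.76,
}

problems = discriminant_violations(ave, correlations)
print("violations:", problems if problems else "none")
```

An empty result means every correlation is below the square root of the AVE of both constructs in the pair, which is the condition described above.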

3.5. Statistical Analysis

The methodology of structural equation models (SEMs) or latent variable models [49] was used, via SPSS AMOS, to test the research questions of the proposed framework. SEM can be effective when testing models that are path analytic with mediating variables and include latent constructs that are being measured with multiple items [54]. Moreover, this method is regarded as the most appropriate for testing mediation. Following Bollen’s [55] recommendation, the overall model fit was assessed using multiple indices, since a model can be adequate on one fit index but inadequate on others. Specifically, the following indices were used: the chi-square test (with critical significance level of p > 0.05), the normed chi-square ratio (with critical level of no more than 3), the goodness-of-fit index (GFI; with critical level not lower than 0.80), the normed fit index (NFI; with critical level not lower than 0.90), the comparative fit index (CFI; with critical level not lower than 0.90), and the root-mean-squared error of approximation (RMSEA; with critical level not more than 0.08) [56].
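The cutoffs listed above can be applied mechanically, as in the following sketch. The names `CUTOFFS` and `check_fit` are illustrative and not part of SPSS AMOS. The example values are the structural-model indices reported in Section 4.3; GFI is omitted because it is not reported there, and the chi-square p-value criterion is left out of the sketch.

```python
# Cutoffs as stated in the text: ("max", x) means the index must not
# exceed x; ("min", x) means it must not fall below x.
CUTOFFS = {
    "normed_chi_square": ("max", 3.0),
    "GFI": ("min", 0.80),
    "NFI": ("min", 0.90),
    "CFI": ("min", 0.90),
    "RMSEA": ("max", 0.08),
}


def check_fit(indices):
    """Map each known fit index to True (meets cutoff) or False."""
    results = {}
    for name, value in indices.items():
        if name not in CUTOFFS:
            continue  # ignore indices without a stated cutoff
        kind, limit = CUTOFFS[name]
        results[name] = value <= limit if kind == "max" else value >= limit
    return results


# Structural model indices as reported in Section 4.3.
structural = {
    "normed_chi_square": 145.299 / 61,
    "NFI": 0.934,
    "CFI": 0.960,
    "RMSEA": 0.077,
}

for name, ok in check_fit(structural).items():
    print(f"{name}: {'meets cutoff' if ok else 'outside cutoff'}")
```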

4. Results

4.1. Data Properties

The means and the standard deviations of all the constructs used in the study are displayed in Table 1, along with the bivariate correlation coefficients between all constructs used in the study. It is visible that there are strong, positive, and significant correlations between Edu S/W characteristics, Edu S/W performance, and user acceptance, which can be used to answer the research questions of the study. However, due to interactions among several variables, the results that are based on correlations, although interesting, may be misleading. Thus, estimating and examining the measurement and structural models was essential in order to isolate the possible links between the variables involved in the operational model.

4.2. Measurement Model

While testing the hypothesized structure, the analyses indicate an acceptable fit (chi-square = 111.128, df = 32, p = 0.000, normed chi-square = 3.473, RMSEA = 0.103, CFI = 0.962, NFI = 0.948, TLI = 0.947, RMR = 0.018). Furthermore, all factor loadings and their squares for evaluating indicator reliability were examined, leading to the conclusion that all measures are meaningfully related to their proposed latent dimensions.

Next, the fit of the proposed measurement model was compared to an alternative, more restrictive model, with all items loading on a single factor. This model fits worse than the hypothesized model (chi-square = 299.502, df = 35, p = 0.000, normed chi-square = 8.557, RMSEA = 0.179, CFI = 0.873, NFI = 0.859, TLI = 0.836, RMR = 0.032), supporting the proposed factor structure of the constructs used in this study. Moreover, the comparison of the results of these two models (i.e., Δchi-square = 299.502 − 111.128 = 188.374, Δdf = 35 − 32 = 3, Δratio = Δchi-square/Δdf = 188.374/3 = 62.791) denotes that the latent factors represent distinct constructs and that common method bias is limited, because the Δratio = 62.791 is much larger than the critical value of 3.84 per degree of freedom [57].
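The model comparison above reduces to simple arithmetic, reproduced in the sketch below with the chi-square and df values reported in the text. The function name is illustrative, and the decision rule (ratio compared with 3.84 per degree of freedom) follows the text rather than a formal p-value computation.

```python
def chi_square_difference(chi2_single, df_single, chi2_hyp, df_hyp):
    """Chi-square difference between the single-factor model and the
    hypothesized model, with the difference per degree of freedom."""
    delta_chi2 = chi2_single - chi2_hyp
    delta_df = df_single - df_hyp
    if delta_df <= 0:
        raise ValueError("the single-factor model must have more df")
    return delta_chi2, delta_df, delta_chi2 / delta_df


# Values reported for the single-factor and hypothesized models.
delta_chi2, delta_df, ratio = chi_square_difference(299.502, 35, 111.128, 32)

CRITICAL_PER_DF = 3.84  # chi-square critical value at p = 0.05, 1 df
factors_distinct = ratio > CRITICAL_PER_DF

print(f"delta chi-square = {delta_chi2:.3f}, delta df = {delta_df}")
print(f"ratio = {ratio:.3f}, exceeds {CRITICAL_PER_DF}: {factors_distinct}")
```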

4.3. The Structural Model

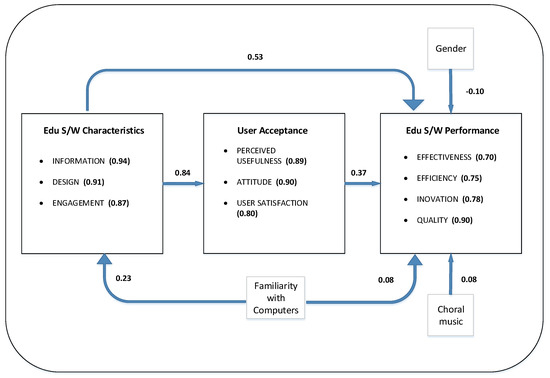

Applying SEM for estimating the operational model signifies a very good fit (chi-square = 145.299, df = 61, p = 0.000, normed chi-square = 2.382, RMSEA = 0.077, NFI = 0.934, CFI = 0.960, TLI = 0.949, RMR = 0.023). Accordingly, the estimated operational model displayed in Figure 2 is valid. It is notable, however, that the significant chi-square suggests that the proposed model is not an adequate representation of the entire set of relationships. Because the chi-square statistic may be inflated by large sample sizes, the value of the normed chi-square (i.e., the value of chi-square/degrees of freedom) was used instead. This value is less than 3, supporting the validity of the model [58]. In Figure 2, the squares refer to the actually measured variables, and the bold arrows denote the structural relationships between the corresponding variables. The numerical values assigned to each arrow are the estimated standardized coefficients. All coefficients are significant at the 0.001 level, except for the coefficient linking individual contingencies with gender, which is significant at the 0.10 level.

Figure 2. Estimated Operational Model of the study.

4.4. Testing the Hypothesis

From Figure 2, we can see that the Edu S/W characteristics are positively (β = 0.84) related to user acceptance, a fact that answers RQ1. Moreover, the user acceptance is positively (β = 0.37) related to Edu S/W performance, answering RQ2. Additionally, Edu S/W characteristics are positively (β = 0.53) related to Edu S/W performance, answering RQ3. Combining this information, i.e., that all the research questions are positively answered, it is safe to conclude that user acceptance positively and partially mediates the relationship between Edu S/W characteristics and Edu S/W performance.
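To make the partial mediation concrete, the following sketch decomposes the effect of Edu S/W characteristics on performance using the three standardized coefficients reported above. It assumes the standard product-of-coefficients rule from path analysis; the derived quantities (indirect effect, total effect, proportion mediated) are illustrative calculations that are not reported in the study, and no significance test of the indirect effect is performed here.

```python
def mediation_effects(a, b, c_direct):
    """Decompose a simple mediation model.

    a: path from predictor to mediator
    b: path from mediator to outcome
    c_direct: direct path from predictor to outcome
    """
    indirect = a * b
    total = c_direct + indirect
    return {
        "direct": c_direct,
        "indirect": indirect,
        "total": total,
        "proportion_mediated": indirect / total,
    }


# Standardized coefficients reported for RQ1, RQ2, and RQ3.
effects = mediation_effects(a=0.84, b=0.37, c_direct=0.53)

for name, value in effects.items():
    print(f"{name}: {value:.4f}")
```

Because both the direct path and the indirect path are nonzero and positive, the decomposition is consistent with partial rather than full mediation.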

Concerning the controls used in estimation, it is noteworthy that only three of the controls produced significant results (Figure 2). In particular, considering the negative standardized coefficient of gender (β = −0.10), it is supported that women perceive the performance of the Edu S/W to be lower compared to men. The users of the Edu S/W who are familiar with computers find that both the characteristics (β = 0.23) and the performance (β = 0.08) of the Edu S/W are higher compared to users who are not so familiar with computers. Finally, the users who have experience in choral music (β = 0.08) perceive the performance of the Edu S/W to be higher compared to the users with lower experience in choral music.

5. Discussion

5.1. Implications for Theory

The theoretical significance of this study is threefold. Firstly, existing empirical evidence concerning the relationship between the Edu S/W characteristics, user acceptance, and Edu S/W performance is confirmed and extended. This contribution relies on a major finding that user acceptance, such as user satisfaction, commitment, and motivation, constitutes a partial mediating mechanism of the relationship under study.

Secondly, the introduction of the specific constructs in the study extends the relevant literature on evaluating Edu S/W in Byzantine music. In particular, the construct concerning the performance of Edu S/W in Byzantine music makes a real contribution, which is based on the concepts of the dimensions used. Apart from the innovation and quality dimensions, effectiveness emphasizes whether the Edu S/W achieves its objectives, and efficiency considers whether these objectives are achieved with the least possible resources from the users.

Thirdly, the mediating mechanism of user acceptance has been examined using structural equation modeling. It is supported that this constitutes a contribution, because this estimation methodology highlights the importance of the dimensions of each construct in tracing performance. In the current study, by considering the values of the standardized coefficients within each construct, it is supported that it is the information of the software that influences the commitment of the user, which in turn indicates the quality of the software.

5.2. Implications for Practice

This study has clear implications for developers that create Edu S/W in Byzantine music. First of all, the characteristics of the software (i.e., information, design, engagement) have a direct impact on the performance of the software. Therefore, software developers should emphasize building software with acceptable characteristics. Secondly, the acceptance of the users (i.e., satisfaction, commitment, motivation) highlights how users perceive the characteristics of the software and accordingly express their opinion about the performance of the software.

Educators should carefully consider the characteristics of educational software when selecting tools for their classrooms. Our study finds that software features and design can impact user acceptance and therefore performance. Educators should prioritize software that aligns with their instructional goals and resonates with their students. Furthermore, according to our findings, computer-familiar users tend to rate the characteristics and the performance of educational software more highly, which highlights the importance of training or support for users who may not have computer skills. Educators can offer workshops or resources to help students become more comfortable with software and computers in general.

6. Threats to Validity

In this section, we present the construct, reliability, and external threats of our study according to Runeson et al. [59].

Construct validity refers to the extent to which the phenomenon under study represents what is investigated according to the research questions. To mitigate this threat, we established a research protocol to guide the case study, which was thoroughly reviewed by two experienced researchers in the domain of empirical studies. Another threat is the fact that the tool has been evaluated without long-term usage or extended training. Therefore, the users probably faced usability issues compared to an evaluation that would have been performed after some training. So, we believe that the presented results are in line with the worst-case scenario of usage.

External validity deals with possible threats when generalizing the findings derived from the sample to a broader population. First, the investigated genre is Byzantine music, and we cannot generalize the results to other music genres, given how much they differ. Second, we cannot generalize to every Edu S/W the result that user acceptance positively mediates the relationship between Edu S/W characteristics and performance. However, given the large number of participants (236), we consider the results reliable for the software that was evaluated.

Finally, the reliability of the case study is related to whether the data are collected and the analysis is conducted in a way that can be replicated. To minimize potential reliability threats during the data collection process, we did not ask open questions, which can lead to different interpretations, and we limited our questionnaire to a 5-point Likert scale. In addition, to ensure correct data analysis, two researchers collaborated in the analysis phase.

7. Conclusions

To summarize, this paper examines how the characteristics of educational software impact the software’s performance and presents the evaluation results of Edu S/W characteristics on Edu S/W performance through the prism of user acceptance. To this end, a sample of 236 users from public and private education institutes was collected, and the statistical method employed was SEMs, via SPSS—AMOS estimation. The Edu S/W specializes in Byzantine music, a distinctive genre for which learning applications are lacking. Despite certain limitations of the sample, the findings of the study suggest, through three hypotheses, that user acceptance, software characteristics, and performance are strongly interrelated in educational applications. In more detail, the Edu S/W characteristics are positively (β = 0.84) related to user acceptance, user acceptance is positively (β = 0.37) related to Edu S/W performance, and Edu S/W characteristics are positively (β = 0.53) related to Edu S/W performance. Thus, the hypothesis that user acceptance positively mediates the relationship between Edu S/W characteristics and performance is statistically supported. Finally, the study’s findings have practical implications for the design and development of educational software. Understanding the factors that influence user acceptance and performance can guide software developers and educators in creating and choosing more effective learning tools.
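
As a worked illustration of the mediation result, the reported coefficients can be combined using the standard product-of-coefficients decomposition from path analysis. This is only a sketch under assumptions that are not stated in this section: that the three β values are standardized estimates from a single model, and that β = 0.53 is the direct effect estimated with user acceptance included. The symbols a, b, and c′ are introduced here for illustration only.

```latex
\begin{align*}
a  &= 0.84 && \text{(Edu S/W characteristics} \rightarrow \text{user acceptance)} \\
b  &= 0.37 && \text{(user acceptance} \rightarrow \text{Edu S/W performance)} \\
c' &= 0.53 && \text{(Edu S/W characteristics} \rightarrow \text{Edu S/W performance, assumed direct)} \\[4pt]
\text{indirect effect} &= a \times b = 0.84 \times 0.37 \approx 0.31 \\
\text{total effect}    &= c' + a \times b \approx 0.53 + 0.31 = 0.84
\end{align*}
```

Under these assumptions, roughly 0.31/0.84 ≈ 37% of the total association would run through user acceptance, which would be consistent with partial rather than full mediation.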

Several directions for future work could extend this study and improve the understanding of HCI. We could conduct further studies to validate these findings in different educational contexts and geographical locations and with a larger and more diverse sample. For example, we could extend the research to different cultural and educational contexts to determine whether the observed relationships vary across regions or demographics. A step further would be to compare the findings across different subject domains and investigate whether the observed relationships hold for software applications beyond Byzantine music. Finally, we could conduct longitudinal studies to explore how these relationships evolve over time, since longitudinal data would provide insights into the dynamics of user acceptance and software performance over an extended period. All of the above would help confirm the robustness of the observed relationships.

Author Contributions

Conceptualization, K.-H.K.; methodology, K.-H.K.; software, K.-H.K.; validation, K.-H.K., S.K.G. and G.P.; analysis, K.-H.K. and G.P.; writing—original draft preparation, K.-H.K. and G.P.; writing—review and editing, N.N. and T.M.; funding acquisition, K.-H.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research was carried out as part of the project «Recognition and direct characterization of cultural items for the education and promotion of Byzantine Music using artificial intelligence» (Project code: KMP6-0078938) under the framework of the Action «Investment Plans of Innovation» of the Operational Program «Central Macedonia 2014–2020», which is co-funded by the European Regional Development Fund and Greece.

Data Availability Statement

Data are available upon reasonable request to the corresponding authors.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Stephanidis, C.; Salvendy, G.; Antona, M.; Chen, J.Y.C.; Dong, J.; Duffy, V.G.; Fang, X.; Fidopiastis, C.; Fragomeni, G.; Fu, L.P.; et al. Seven HCI Grand Challenges. Int. J. Hum.-Comput. Interact. 2019, 35, 1229–1269.

- Winslow, J.; Dickerson, J.; Lee, C.-Y. Applied Technologies for Teachers, 1st ed.; Kendall Hunt Publishing: Dubuque, IA, USA, 2013.

- Reeves, T.C.; Harmon, S.W. Systematic Evaluation Procedures for Interactive Multimedia for Education and Training. In Multimedia Computing: Preparing for the 21st Century; Reisman, S., Ed.; IGI Global: Hershey, PA, USA, 1996.

- Cronjé, J. Paradigms Regained: Toward Integrating Objectivism and Constructivism in Instructional Design and the Learning Sciences. Educ. Technol. Res. Dev. 2006, 54, 387–416.

- Ehlers, U.-D.; Pawlowski, J.M. Handbook on Quality and Standardisation in E-Learning; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2006.

- Georgiadou, E.; Economides, A. An Evaluation Instrument for Hypermedia Courseware. Educ. Technol. Soc. 2003, 6, 31–33.

- Phillips, R. Challenging The Primacy Of Lectures: The Dissonance Between Theory And Practice In University Teaching. J. Univ. Teach. Learn. Pract. 2005, 2, 4–15.

- Schibeci, R.; Lake, D.; Phillips, R.; Lowe, K.; Cummings, R.; Miller, E. Evaluating the Use of Learning Objects in Australian and New Zealand Schools. Comput. Educ. 2008, 50, 271–283.

- Coughlan, J.; Morar, S.S. Development of a Tool for Evaluating Multimedia for Surgical Education. J. Surg. Res. 2008, 149, 94–100.

- Roblyer, M.D.; Wiencke, W.R. Design and Use of a Rubric to Assess and Encourage Interactive Qualities in Distance Courses. Am. J. Distance Educ. 2003, 17, 77–98.

- Albakri, A.; Abdulkhaleq, A. An Interactive System Evaluation of Blackboard System Applications: A Case Study of Higher Education. In Fostering Communication and Learning With Underutilized Technologies in Higher Education; IGI Global: Hershey, PA, USA, 2020; pp. 123–136.

- Chang, A.; Breazeal, C.; Knight, H.; Dan Stiehl, W.; Toscano, R.; Wang, Y.; Breazeal Personal Robots Group, C. Robot Design Rubrics for Social Gesture Categorization and User Studies with Children. In Proceedings of the HRI Workshop on Acceptance of Robots in Society, San Diego, CA, USA, 11–13 March 2009.

- Carole, P.J. Alternative Assessment in Music Education. 1995. Available online: https://eric.ed.gov/?id=ED398141 (accessed on 1 July 2023).

- Bevilacqua, F.; Guédy, F.; Schnell, N.; Fléty, E.; Leroy, N. Wireless Sensor Interface and Gesture-Follower for Music Pedagogy. In Proceedings of the 7th International Conference on New Interfaces for Musical Expression, NIME ’07, New York, NY, USA, 6–10 June 2007; pp. 124–129.

- Pérez-Gil, M.; Tejada, J.; Morant, R.; Pérez-González De Martos, A. Cantus: Construction and Evaluation of a Software Solution for Real-Time Vocal Music Training and Musical Intonation Assessment. J. Music. Technol. Educ. 2016, 9, 125–144.

- Tomczyk, Ł.; Limone, P.; Guarini, P. Evaluation of modern educational software and basic digital competences among teachers in Italy. Innov. Educ. Teach. Int. 2023, 60, 1–15.

- Wang, X. Design of Vocal Music Teaching System Platform for Music Majors Based on Artificial Intelligence. Wirel. Commun. Mob. Comput. 2022, 2022, 1–11.

- Proceedings of the 2023 2nd International Conference on Artificial Intelligence, Internet and Digital Economy (ICAID 2023); Hemachandran, K.; Boddu, R.S.K.; Alhasan, W. (Eds.) Springer: Berlin/Heidelberg, Germany, 2023; Volume 9.

- Han, X. Design of Vocal Music Education System Based on VR Technology. Procedia Comput. Sci. 2022, 208, 5–11.

- Ma, J.; Jiang, Z. Design of Vocal Music Self-Study Assistant System based on Android Technology. In Proceedings of the 2023 IEEE International Conference on Integrated Circuits and Communication Systems (ICICACS), Raichur, India, 24–25 February 2023; pp. 1–6.

- Bergmann, J.H.M.; McGregor, A.H. Body-Worn Sensor Design: What Do Patients and Clinicians Want? Ann. Biomed. Eng. 2011, 39, 2299–2312.

- Venkatesh, V.; Davis, F.D. A Theoretical Extension of the Technology Acceptance Model: Four Longitudinal Field Studies. Manag. Sci. 2000, 46, 186–204.

- Davis, F.D. A Technology Acceptance Model for Empirically Testing New End-User Information Systems; Massachusetts Institute of Technology: Cambridge, MA, USA, 1986.

- Ibrahim, R.; Leng, N.S.; Yusoff, R.C.M.; Samy, G.N.; Masrom, S.; Rizman, Z.I. E-Learning Acceptance Based on Technology Acceptance Model (TAM). J. Fundam. Appl. Sci. 2017, 9, 871–889.

- Lewis, J.R. The System Usability Scale: Past, Present, and Future. Int. J. Hum.-Comput. Interact. 2018, 34, 577–590.

- Vlachogianni, P.; Tselios, N. Perceived Usability Evaluation of Educational Technology Using the System Usability Scale (SUS): A Systematic Review. J. Res. Technol. Educ. 2021, 54, 392–409.

- Papadakis, S.; Vaiopoulou, J.; Kalogiannakis, M.; Stamovlasis, D. Developing and Exploring an Evaluation Tool for Educational Apps (E.T.E.A.) Targeting Kindergarten Children. Sustainability 2020, 12, 4201.

- Lewis, J.R. Measuring Perceived Usability: The CSUQ, SUS, and UMUX. Int. J. Hum.-Comput. Interact. 2018, 34, 1148–1156.

- Alhadreti, O. Assessing Academics’ Perceptions of Blackboard Usability Using SUS and CSUQ: A Case Study during the COVID-19 Pandemic. Int. J. Hum.-Comput. Interact. 2021, 37, 1003–1015.

- Singla, B.S.; Aggarwal, H. A Set of Usability Heuristics and Design Recommendations for Higher Education Institutions’ Websites. Int. J. Inf. Syst. Model. Des. 2020, 11, 58–73. Available online: https://services.igi-global.com/resolvedoi/resolve.aspx?doi=10.4018/IJISMD.2020010104 (accessed on 1 July 2023).

- Hasan, L. Evaluating the Usability of Educational Websites Based on Students’ Preferences of Design Characteristics. Int. Arab. J. e-Technol. 2014, 3, 179–193.

- Albelbisi, N.A.; Al-Adwan, A.S.; Habibi, A. Self-Regulated Learning and Satisfaction: A Key Determinants of MOOC Success. Educ. Inf. Technol. 2021, 26, 3459–3481.

- Torkzadeh, R.; Pflughoeft, K.; Hall, L. Computer Self-Efficacy, Training Effectiveness and User Attitudes: An Empirical Study. Behav. Inf. Technol. 2010, 18, 299–309.

- Park, C.H.; Lee, S.B. A Study on the Effect of SW Education Training Characteristics on Personal Characteristics and Educational Outcomes. J. Inst. Internet Broadcast. Commun. 2020, 20, 247–259.

- Huang, Y.M. Students’ Continuance Intention Toward Programming Games: Hedonic and Utilitarian Aspects. Int. J. Hum.-Comput. Interact. 2019, 36, 393–402.

- Race, P. The Lecturer’s Toolkit: A Practical Guide to Assessment, Learning and Teaching; Routledge: Oxfordshire, UK, 2019.

- Conole, G. A New Classification Schema for MOOCs. Int. J. Innov. Qual. Learn. 2014, 2, 65–77.

- Yousef, A.M.F.; Chatti, M.A.; Schroeder, U.; Wosnitza, M. A Usability Evaluation of a Blended MOOC Environment: An Experimental Case Study. Int. Rev. Res. Open Distrib. Learn. 2015, 16, 69–93.

- Franke, T.; Attig, C.; Wessel, D. A Personal Resource for Technology Interaction: Development and Validation of the Affinity for Technology Interaction (ATI) Scale. Int. J. Hum.-Comput. Interact. 2018, 35, 456–467.

- Torkzadeh, G.; Van Dyke, T.P. Effects of Training on Internet Self-Efficacy and Computer User Attitudes. Comput. Human Behav. 2002, 18, 479–494.

- Lanzilotti, R.; Ardito, C.; Costabile, M.F.; De Angeli, A. ELSE Methodology: A Systematic Approach to the e-Learning Systems Evaluation. Educ. Technol. Soc. 2006, 9, 42–53.

- Zaharias, P.; Poylymenakou, A. Developing a Usability Evaluation Method for E-Learning Applications: Beyond Functional Usability. Int. J. Hum.-Comput. Interact. 2009, 25, 75–98.

- Kukulska-Hulme, A.; Beirne, E.; Conole, G.; Costello, E.; Coughlan, T.; Ferguson, R.; FitzGerald, E.; Gaved, M.; Herodotou, C.; Holmes, W.; et al. Innovating Pedagogy 2020: Open University Innovation Report 8. Альманах Научных Рабoт Мoлoдых Ученых Университета Итмo 2020, 3, 144–147.

- Jawahar, I.M.; Elango, B. The Effect of Attitudes, Goal Setting and Self-Efficacy on End User Performance. J. Organ. End User Comput. 2001, 13, 40–45.

- Hale, C.L. Primary Students’ Attitudes towards Their Singing Voice and the Possible Relationship to Gender, Singing Skill and Participation in Singing Activities; Kansas State University: Manhattan, KS, USA, 2006.

- Salapatas, D. The role of women in the orthodox church. Z. Des Inst. Für Orthodox. Theol. Der Univ. München 2015, 2, 177–194.

- Lee, C.-Y.; Cherner, T.S. A Comprehensive Evaluation Rubric for Assessing Instructional Apps. J. Inf. Technol. Educ. Res. 2015, 14, 21–53.

- Katou, A.A.; Budhwar, P.S. The Effect of Human Resource Management Policies on Organizational Performance in Greek Manufacturing Firms. Thunderbird Int. Bus. Rev. 2007, 49, 1–35.

- Park, S.Y. An Analysis of the Technology Acceptance Model in Understanding University Students’ Behavioral Intention to Use e-Learning. Educ. Technol. Soc. 2009, 12, 150–162.

- Turnley, W.H.; Feldman, D.C. Re-Examining the Effects of Psychological Contract Violations: Unmet Expectations and Job Dissatisfaction as Mediators. J. Organ Behav. 2000, 21, 25–42.

- Cronbach, L.J. Coefficient Alpha and the Internal Structure of Tests. Psychometrika 1951, 16, 297–334.

- Nunnally, J.C. Psychometric Theory, 2nd ed.; McGraw-Hill: New York, NY, USA, 1978.

- Hair, J., Jr.; Anderson, R.; Tatham, R.; Black, W. Multivariate Data Analysis, 5th ed.; Prentice Hall: Upper Saddle River, NJ, USA, 1998.

- Luna-Arocas, R.; Camps, J. A Model of High Performance Work Practices and Turnover Intentions. Pers. Rev. 2008, 37, 26–46.

- Bollen, K.A. Structural Equations with Latent Variables. In Structural Equations with Latent Variables; John Wiley & Sons: Hoboken, NJ, USA, 2014; pp. 1–514.

- Bentler, P.M. Comparative Fit Indexes in Structural Models. Psychol. Bull. 1990, 107, 238–246.

- Brown, T.A. Confirmatory Factor Analysis. Handbook of Structural Equation Modeling, 361, 379; Guilford Publications: New York, NY, USA, 2015.

- Pedhazur, E.J.; Schmelkin Pedhazur, L. Measurement, Design, and Analysis: An Integrated Approach, 1st ed.; Psychology Press: New York, NY, USA, 1993.

- Runeson, P.; Höst, M.; Rainer, A.; Regnell, B. Case Study Research in Software Engineering: Guidelines and Examples; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2012.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).