1. Introduction

What if, with the help of methods from the artificial intelligence family, new insights into the condition of insurers can be gained? Data from financial statements might hide similarities among composite insurance companies that indicate a path toward the deterioration of financial health. To uncover them, novel approaches are needed. The financial innovation era changed the insurers’ ecosystem. The suptechs could, thus, enable supervisors to act in a novel manner, and to have an ongoing investigation of the insurer’s financial behaviour free from biases due to expectations, anchoring, or fixed convictions. In this way, new discoveries can establish grounds for early interventions, preventing financial deterioration.

Stability and sustainability are essential for the global financial systems and economy [1]. Any warnings of financial distress or even insolvency among insurance market participants are important to investors, policyholders, regulators (i.e., standards-setters), competitors, and others [2]. The authors elaborate on the point that there is an interest in limiting excessive insolvency risk to avoid potential contagion to other insurers in the market due to a spike in insurer insolvencies. Warnings, especially early warnings, enable supervisors to investigate quickly and, if necessary, to act in a timely manner and take adequate preventive measures [1]. The earlier the warning, the wider the range of available responses [1,3].

There is a plethora of past research on predicting an entity’s financial distress. Nevertheless, the lesson from past failure models is that they rarely include predictors that send early warning signals [1]. While numerous studies deal with the banking sector or with non-financial companies, success in predicting an insurer’s financial distress remains modest. Common limitations of these studies, and drawbacks in their applicability to the current environment, are: the use of time periods of relative economic stability [2]; the use of matched-pair sampling techniques in early studies [4]; the reliance of most studies on US data samples [1]; and the prediction of insolvency as the critical event, e.g., [5,6]. De Bandt and Overton [7] include an overview according to four considerations: (i) efficient management and corporate governance, (ii) industrial organization, (iii) the macroeconomic environment, risk appetite, and portfolio choices, and (iv) profitability. With respect to methodology, examples of more advanced methods are support vector machines, genetic algorithms, and simulated annealing [6,8]. Back-propagation and learning vector quantization, both from the neural network family, were applied to insurer insolvency prediction on US data by Brockett et al. [5,9,10].

Our study is devoted to the European insurance markets. While the US market is studied most often in the literature, Europe and its regions have been studied less often, with most of the focus on selected countries [1]: the Netherlands [11,12], Germany [13,14], Spain [2,6,8,15], the United Kingdom [16], France and the United Kingdom [7], Denmark, Germany, the United Kingdom, Italy, and Switzerland [17], and Croatia, Hungary, and Poland [18].

Insurance studies differ in sample sizes, time periods under study, and the types of insurers studied. Studies used data for life insurers, e.g., [10,19,20]; for non-life insurers, e.g., [4,6,13,21,22,23]; and for composite insurers, e.g., [1,2,7,15,16,24,25,26,27,28,29]. Restricting the analysis to one type of insurer is necessary because specialised insurers have singular characteristics that may affect their solvency [15]. Also, financial strength assessment is more difficult for insurers with specific characteristics (e.g., smaller insurers, insurers with a history of reserving errors, insurers that use less reinsurance, and stock insurers) [26].

In the literature, a broad definition of an insurer’s various failure events is utilised. The term retirement is used by [30] to encompass all occurrences of insurers leaving the market (i.e., liquidation, runoff, dissolution, portfolio transfer, acquisition, and other events that lead to ceased operations of an insurance firm). This classification also covers insurers that halted operations for other, non-distress reasons (e.g., mergers and acquisitions). In contrast to this term, an insurer’s financial distress describes the period from the initial failure symptoms until the occurrence of the failure event [1]. As the discussion in the literature shows, it is a challenge to the field that an insurer’s financial distress or insolvency is a rare event [14], especially in the European Union, which makes insolvency less useful for prediction; the characteristics of a low-default portfolio arise [29]. More importantly, supervision is about identifying wrong directions much earlier. Therefore, in our study we had to find another characteristic of insurance companies that could serve as a flag for a critical state. A valuable insight into the thoughts of European national supervisory authorities is offered by qualitative studies on causes and early identification of failures and near misses in insurance conducted by the European Insurance and Occupational Pensions Authority [31,32].

This study addresses the shortcomings of common applications in the literature and provides novel solutions capable of dealing with current issues in insurance markets, as the changing insurance market landscape affects business behaviour. The study offers a different way of examining insurance companies, namely, exploring their behaviour with the aim of identifying early a movement toward deterioration in their financial position. In doing so, we build on the idea that expectations and knowledge founded on standard methods should not bias the outcomes of research activities and, thus, hinder new discoveries. Therefore, we consider the size and characteristics of the financial data of insurance companies and use a method from the artificial intelligence family, namely, Kohonen’s self-organizing maps (SOM) from the neural network methods. In the application, benefits over standard econometric methods become apparent and reveal a novel perspective on the path and the state of insurance companies with respect to their expected future financial condition.

Our approach is original in several ways; besides the chosen methodological application to insurance companies, novelties have been introduced in the development process of the model. In contrast to common studies where a variable is chosen as a dependent one, we do not choose such a variable and, thus, the model explores the data in a way which is unbiased by our expectations. Also, we introduced a bootstrap technique-based routine in the learning process on randomly split data samples. Finally, the outcome is a generated map of insurance companies that allows us to study the structural properties of the market in an original way. What was beyond our first expectations, and in the end most interesting about the results, is that the model generated new theoretical findings on the properties of European composite insurance companies. An expanded model built on the idea presented here could, thus, result in a suptech tool supporting early detection of insurance companies for which a closer examination of the business behaviour or a form of early intervention could prevent their financial deterioration and contribute to financial stability.

The paper is structured as follows. In the introduction a literature overview introduces a relevant theoretical background on the insurance companies. In the second section, material and methods, the data sources, and the characteristics are presented, together with the description of the methodological approach. The third section gives the results. Finally, a discussion and conclusions are presented.

2. Materials and Methods

The studied base consists of European composite insurance companies. Micro data on insurance companies were obtained from the rating agency A.M. Best. We decided on a single data source to ensure complete data consistency. The original database consisted of more than 50,000 observations, from which we built our database. First, only composite insurance companies are included. Next, for each included insurance company, financial statement data for at least two consecutive years must be available, whereby the first of each pair of consecutive years serves as data for the model groupings, while the next year’s data serve as an observation. The time frame is set from 2012 to 2021 due to the properties of the available database. In the end, the final sample is considerably smaller and includes 4058 individual observations. An observation is an insurance company in a single year. Some insurance companies are included with several observations, which later enabled us to follow their path through the map. For companies with fewer observations, the path is rather short.

The learning process of the model is based on financial data consisting of 71 financial indicators calculated from an individual insurance company’s current and past financial statement data (Table 1). The selection of variables is based on theoretical considerations and a literature overview. We also considered the properties of the dataset. In the first studies in the field, a small number of variables was used (for example, approximately 6 or 7 variables; see the research overview in [30]). Since then, the number of variables used in studies has grown (see, e.g., [1,4,16,25]). We group the 71 financial variables into six categories that depict insurers’ financial performance: change in insurers’ profile (growth), size and financial position, profitability, liquidity, debt management, and insurers’ business segments performance.

We selected the final list of financial indicators with special attention to ensuring that neither the SCR ratio nor indicators close to it are included. In this way, we ensured an unbiased learning process that looks for similarities among insurance companies irrespective of their SCR values. The first dataset should, thus, remain uncontaminated, which will be important in the second phase, where we give a flag to insurance companies.

Next, we had to decide upon the criteria for marking the occurrence of a “critical” event, and, based on it, we flagged the insurance companies into two groups. As discussed earlier, we looked for an indicator which would reflect a state of financial deterioration much earlier than, e.g., insolvency. Additionally, we also had to consider the available data, namely its structure and the time frame. As explained by EIOPA [31,32], deteriorating capital strength and/or a low solvency margin relative to the firm’s risks is the key early identification indicator for near failures in the insurance business, for life, non-life, and composite insurers of all sizes. The lagged solvency ratio can be used to predict the future regulatory solvency ratio, regardless of the economic conditions [14]. Accordingly, we decided on an SCR ratio measure. For its calculation, we used the simplified version of the Solvency I rule, which was prescribed in the Directive of the European Parliament and of the Council (2002/13/EC) on solvency margin requirements for non-life insurance undertakings and the Directive of the European Parliament and of the Council (2002/12/EC) on solvency margin requirements for life assurance undertakings. Unlike Solvency II, Solvency I was not a risk-based measure for capital requirements. It is insensitive to market and credit risks and arbitrarily defines capital requirements for underwriting risk based on volume measures such as premiums or provisions. In our case, the available data only allowed for a simplified calculation of capital requirements under the Solvency I rule. On the other hand, the SCR ratio according to the EU’s Solvency II rules is only available from 2016 onwards. In order to use the widest possible set of data, we used a simplified version of the Solvency I rules to calculate the SCR. This simplified approach reduces the practical usefulness of the developed model at the moment but indicates its applicability to Solvency II data.

The calculation of the SCR ratio was performed as follows. The SCR ratio equals the ratio of the available solvency margin to the solvency capital requirement (SCR). The sum of capital and surplus was used as a proxy for the available solvency margin. The sum of capital and surplus is calculated as [33]: “the sum of paid-in capital, retained earnings, free reserves, statutory reserves, any voluntary contingency reserves and claims equalisation (or catastrophe) reserve”.

For a composite insurance company, the solvency capital requirement equals the sum of the capital requirement for the non-life part of an insurance company and the capital requirement for the life part of an insurance company.

The capital requirement for the non-life part of the insurance company equals the higher of two results: the one calculated on the premium basis and the one calculated on the claims basis.

For the calculation of the solvency capital requirement on the premium basis, net earned premium (NEP) was used. The net earned premium was divided into two portions. The first portion, extending up to EUR 50.3 million, was multiplied by 18%, and the second, comprising the excess, was multiplied by 16%.

For the capital requirement on the claims basis, net claims incurred (NCI) during the year were used. The net claims incurred were divided into two portions. The first portion, extending up to EUR 37.2 million, was multiplied by 26%, and the second, comprising the excess, was multiplied by 23%.

The capital requirement for the life part of the insurance company was calculated separately for assurance linked to investment funds and for other life assurance, and the two were added together. The solvency capital requirement for assurance linked to investment funds was calculated as a 1% fraction of gross provisions for linked liabilities (GMPUL) and for other life assurance as a fraction of 4% of provisions for long-term business (GMPOther).

For assurance linked to investment funds, the assumption was that the assurance undertaking is not bearing any investment risk, the allocation to cover management expenses is fixed for the period exceeding five years, and the assurance undertaking is not covering a death risk. Calculation of capital requirements for other life assurance excludes the second result, which is a 3% fraction of non-negative capital at risk.
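The simplified calculation described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function and argument names are hypothetical, all amounts are assumed to be in EUR, and negative inputs are clamped to zero, which the text does not specify.

```python
def tiered(amount, threshold, rate_low, rate_high):
    """Apply rate_low up to the threshold and rate_high to the excess."""
    amount = max(amount, 0.0)  # assumption: negative volumes are treated as zero
    first = min(amount, threshold)
    return first * rate_low + (amount - first) * rate_high


def non_life_requirement(nep, nci):
    """Higher of the premium-basis and the claims-basis result."""
    premium_basis = tiered(nep, 50.3e6, 0.18, 0.16)
    claims_basis = tiered(nci, 37.2e6, 0.26, 0.23)
    return max(premium_basis, claims_basis)


def life_requirement(gmp_ul, gmp_other):
    """1% of linked provisions plus 4% of other long-term provisions."""
    return 0.01 * gmp_ul + 0.04 * gmp_other


def scr_ratio(capital_and_surplus, nep, nci, gmp_ul, gmp_other):
    """Available solvency margin (proxy) divided by the simplified SCR."""
    scr = non_life_requirement(nep, nci) + life_requirement(gmp_ul, gmp_other)
    if scr <= 0:
        raise ValueError("SCR must be positive to form a ratio")
    return capital_and_surplus / scr
```

For a hypothetical insurer with NEP of EUR 100 million and NCI of EUR 60 million, the premium basis (about EUR 17.0 million) exceeds the claims basis (about EUR 14.9 million), so the premium basis determines the non-life requirement.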

After the SCR ratio of the following year was calculated for each insurance company, a flag was given. If the SCR ratio was under 130%, the insurance company was marked as critical (positive). If the value was above 130%, the flag was negative, denoting an unproblematic insurance company. In this way, we created two groups of insurance companies: 454 critical (positive) cases and 3604 normal (negative) cases. The total sample was then divided into a training sample and a test sample in an 80:20 ratio. The division was performed as a random split. We decided against an out-of-time test sample and purposely ignored the time component when splitting the data. The model should detect general rules of behaviour without any specific reactions of insurance companies to specific time events, such as the COVID-19 pandemic.
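The flagging rule and the random 80:20 split can be illustrated as follows. This is a minimal sketch with hypothetical function names; the seed is arbitrary, and treating a ratio of exactly 130% as negative is an assumption, since the text only covers values under and above the threshold.

```python
import random


def flag_critical(next_year_scr_ratio, threshold=1.30):
    """True (positive) if next year's SCR ratio is under the threshold."""
    # Assumption: a ratio of exactly 130% is treated as negative.
    return next_year_scr_ratio < threshold


def random_split(observations, train_share=0.8, seed=0):
    """Shuffle the observations, ignoring time, and cut them 80:20."""
    shuffled = list(observations)
    random.Random(seed).shuffle(shuffled)
    cut = int(round(train_share * len(shuffled)))
    return shuffled[:cut], shuffled[cut:]
```

With the 4058 observations of the final sample, such a split yields 3246 training and 812 test observations.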

Next, all data entering the modelling process went through a pre-processing stage. The pre-processing included cleaning the data by removing observations with missing data and removing observations where unreasonable values for individual indicators were detected. Pre-processing also included scaling the data to the range between 0 and 1, which is recommended in order to make the learning process more efficient and reliable. Thereby, we addressed the disadvantages of the method used, as explained later on.
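A minimal sketch of the two pre-processing steps, dropping incomplete observations and min-max scaling each indicator to the range between 0 and 1, might look as follows. The function names are hypothetical, missing values are assumed to be encoded as `None` or NaN, and a constant indicator is mapped to 0 by assumption. The check for unreasonable values is not shown because its criteria are not specified.

```python
import math


def drop_incomplete(rows):
    """Keep only observations without missing indicator values."""
    def is_missing(v):
        return v is None or (isinstance(v, float) and math.isnan(v))
    return [row for row in rows if not any(is_missing(v) for v in row)]


def min_max_scale(rows):
    """Scale every indicator (column) to the range [0, 1]."""
    columns = list(zip(*rows))
    lows = [min(col) for col in columns]
    highs = [max(col) for col in columns]
    scaled = [
        [
            (v - lo) / (hi - lo) if hi > lo else 0.0  # constant column -> 0
            for v, lo, hi in zip(row, lows, highs)
        ]
        for row in rows
    ]
    return scaled, lows, highs
```

The function also returns the column minima and maxima so that the same scaling could be reused on new observations.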

In the training process, the model formed a Kohonen self-organizing map, which is a type of unsupervised neural network (hereafter, artificial intelligence model, AI model). Unsupervised learning does not require data to be labelled, and there are no notions of the output during the learning process [34]. A characteristic of the majority of unsupervised learning-based techniques is relatively high computational complexity [35]. Since Kohonen’s introduction of the method in the early 1980s, great progress has been made in developing the structure and learning algorithm, resulting in many effective applications, among which there are also highly complex ones [35].

Importantly for our case, the unsupervised learning manner meant that the information on the SCR ratio was not given to the AI model during the learning process, which differs from classical approaches where the chosen dependent variable is part of the training dataset. SOM’s main objective is to organize input vectors according to how they are positioned in the input space. The main difference between competitive layers and SOM is in the capability of neighbouring neurons to identify neighbouring segments of the input space. This property enables it to learn not only the distribution of input vectors, as in the case of competitive layers, but also the topology [36,37]. Despite a variety of SOM modifications, we have chosen the conventional SOM version since it suits the purpose of the research and the dataset characteristics. However, in the application of the method, we adjusted the research approach to address our main goal. Also, most studies that report the vitality of the method relate to core SOMs [35]. Some applications have been in the broad financial field; however, the research scope, and especially the goals, differ considerably, from studying the banking sector, e.g., [38,39,40], up to financial studies of the corporate sector [41]. The insurance industry’s sectoral properties and European integration were studied in [42]. To our knowledge, SOM has not yet been applied to insolvency prediction. SOM was used to study the claims frequency of a non-life insurance portfolio, namely motorcycle insurance [43].

Next, we turn to the network architecture. The physical distribution of neurons in SOM directly influences the properties of the network and, therefore, of the final model; among other things, it includes the size and shape. The topology function determines the position of neurons. In our case, these generate hexagonal neighbourhoods, as we chose the order of neurons to be hexagonal. For the learning process, the Euclidean distance function was applied, and the learning process was divided into an ordering and a tuning phase. By applying the Euclidean distance function, distances between neurons are calculated for the positions they take according to the topology function. An important property of the model is the network’s size, which is reflected by the number of neurons. As there is no theoretically justified guidance on the optimal grid size, we have taken into account the empirical evidence on decisions regarding the grid size, our own experience, the size of the available database, and our initial testing of the database. We chose the size by looking for the optimal size using the following criteria:

- The number of neurons should be such that there is only a small number of neurons without hits after the learning process.

- Sufficient resolution of the final topology must be achieved in order to make the separation process efficient.

- We used our previous experience in modelling the European banking and insurance sectors as guidance [39,42].

Finally, the network size was set as a 15 × 15 grid of neurons. All our calculations, including the model’s training, were performed in Matlab environment.

In the learning process, we applied the Kohonen learning rule. In each step, a winning neuron is identified by applying the same steps as in a competitive layer. The main difference from the competitive network is in the importance of the neighbourhood. In a SOM network, the change in weights is applied to all neurons within the active neighbourhood of the winning neuron (determined by a radius). The following formula is used to update the weights of active neurons when an input vector is presented to the network in a given step [36,37]:

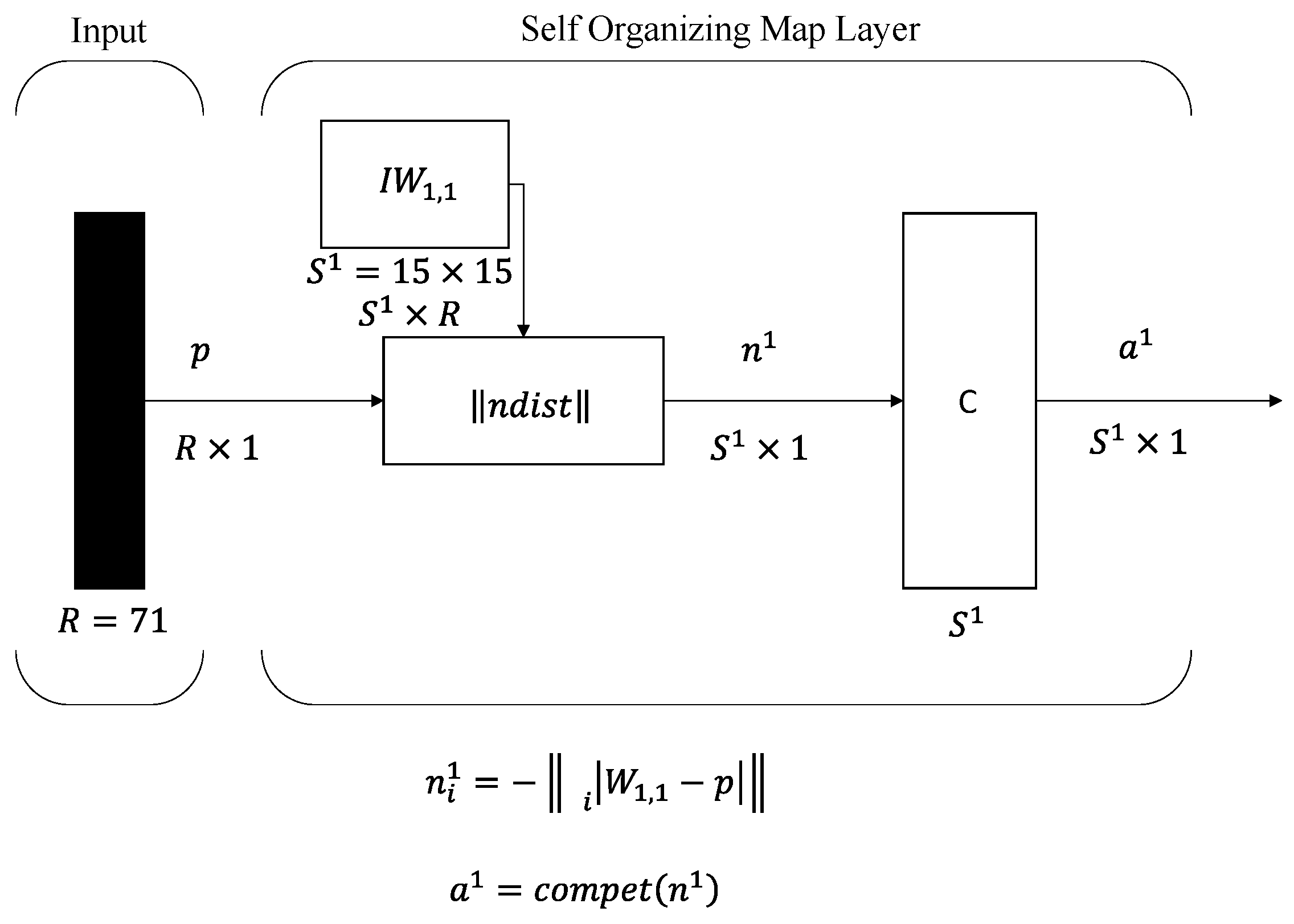
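In a commonly used notation, the Kohonen rule can be written as shown here. The symbols are assumptions introduced for illustration and may differ from those in the formula above: $i^*$ is the winning neuron, $N_{i^*}(d)$ its neighbourhood of radius $d$, $w_i$ the weight vector of neuron $i$, $p(q)$ the input vector presented in step $q$, and $\alpha$ the learning rate.

```latex
w_i(q) = w_i(q-1) + \alpha \bigl( p(q) - w_i(q-1) \bigr),
\qquad i \in N_{i^*}(d),
\qquad N_{i^*}(d) = \{\, j : d_{i^* j} \le d \,\}
```

Neurons outside the neighbourhood keep their weights, $w_i(q) = w_i(q-1)$, so each step pulls only the winner and its neighbours toward the presented input.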

Based on the principle for graphical representations of neural networks used by MathWorks [37] in the Matlab environment, the architecture of our SOM network is shown in Figure 1. The figure is based on The MathWorks (2023), with adjustments to the characteristics of our case. Its elements are the weight matrix, the Euclidean distance function, the resulting matrix of the distance function, the competitive function, and the response matrix, which contains the value 1 for the winning neuron and the value 0 for all other neurons. Since we have 71 input variables for each observation, the resulting input vector has the dimension 71 × 1. As seen, the main difference from the standard competitive network architecture is the absence of a bias element [37].

As pointed out by Caballé-Cervigón et al. [34], a disadvantage of SOM is that results vary significantly in the presence of outliers and that handling categorical attributes causes a problem, while an advantage is that it is a simple clustering approach, the number of clusters is not required in advance, and it is an efficient clustering method.

The unsupervised learning method was integrated into our methodological approach because its disadvantages could be handled and its advantages offer the potential to derive a solution needed in the suptech era.

3. Results

After the learning process, the model was capable of generating a map with all observations on it. Examining the map first as a whole reveals structural properties of the European insurance market. Figure 2 shows these structural properties using a colour scale, where darker colours represent large differences between neighbouring insurance companies. Neighbouring insurance companies are those which are located in neighbouring neurons. This type of topographic map can next be used to explain how each of the 71 financial indicators behaves according to the position on the map if the map is examined separately for each of them. Such a principle of analysis, along with programming code, was developed to directly associate the position on the map with the corresponding value of the selected input variable [44].

Next, the examination’s focus can be placed on individual insurance companies. Since the map includes all insurance companies in all observed years, for an individual insurance company, its path of changing positions can be followed. Thereby, a company could remain stably located in the neuron or the same region, while it could significantly change location to regions with different properties. The location itself, and the path in particular, can, therefore, reveal information about changes, together with their gravity.

The positions of the insurance companies on the map are presented in Figure 3. Numbers in the figure show how many insurance companies are positioned on an individual neuron for the development sample. We can see that there are areas with no hits, meaning no insurance companies are located on a particular neuron (blank spaces with no numbers), which can be interpreted as borders between more homogeneous groups of insurance companies. We can also see that the hits are well distributed over the whole map. The map structure also has to be checked for the presence of border effects, which means the positioning of neurons with large numbers of hits only on the borders of the map. In our case, the border effects do not seem to be strong for the presented map.

Up to this point in the model development, the data for calculating the SCR ratio was not presented to the AI model. Only after the learning process was finished and the map was generated (as seen in Figure 3) did we use the SCR ratio in order to identify areas with a high probability of containing an insurance company which will have an SCR ratio below 130% next year. The calculated value of the SCR ratio is used as an indicator that an unfavourable financial deterioration is to be expected.

Figure 4 shows the three-dimensional map with a Z-axis that represents the probability of an insurer’s financial deterioration. The bars with heights between 0 and 1 indicate probabilities of insurers’ financial deterioration, measured as the SCR ratio being below 130% in the next year. In Figure 4, it is apparent that there are compact regions with high probabilities. In the next step, we use this information to create the classification rules for the application of the model. To find the optimal threshold value, the characteristics of the model are studied. Within the optimization procedure of the model, we came to the conclusion that the following should be used as the threshold rule:

- If the estimated position of an insurance company on the map falls on a neuron with a probability of more than 0.1, then such a company is classified as problematic (positive): the SCR ratio will probably be under 130% next year.

- If the estimated position is on a neuron with an associated probability under 0.1, then such a company is classified as unproblematic (negative): the SCR ratio will probably be over 130% next year.
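The per-neuron probabilities and the threshold rule can be sketched as follows. The names are hypothetical, and two details are assumptions because the text does not specify them: a neuron without training hits is given probability 0, and a probability of exactly 0.1 is classified as negative.

```python
from collections import defaultdict


def neuron_probabilities(train_neurons, train_flags):
    """Share of critical (positive) training observations on each neuron."""
    hits = defaultdict(int)
    positives = defaultdict(int)
    for neuron, flag in zip(train_neurons, train_flags):
        hits[neuron] += 1
        positives[neuron] += int(bool(flag))
    return {neuron: positives[neuron] / hits[neuron] for neuron in hits}


def classify(neuron, probabilities, threshold=0.1):
    """True (problematic) if the neuron's probability exceeds the threshold."""
    # Assumption: neurons with no training hits have probability 0.
    return probabilities.get(neuron, 0.0) > threshold
```

Here `train_neurons` holds the index of the winning neuron for each training observation, and `train_flags` holds the flag derived from the following year's SCR ratio, which enters only at this stage and not during training.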

The model was tested on the test sample. The result of the AI model is binary information on whether a red flag of prospective financial distress should be given for a selected insurance company. The AI model does not take into account information on the SCR ratio or closely related data but uses current and past financial data. Based on the financial properties considered in the learning phase, the AI model classifies the insurance company as being in an area for which the model expects the SCR ratio for the following year to be under 130%.

Next, we used the test sample, which represents 20% of the total final dataset, and on it we estimated the following widely used measures of accuracy:

- true positive ratio (TPR): the number of insurance companies which the model correctly classified to be problematic next year (TP) divided by all problematic insurance companies (P) in the test sample;

- false positive ratio (FPR): the number of insurance companies which the model falsely classified to be problematic next year (FP) divided by all normal insurance companies (N) in the test sample;

- true negative ratio (TNR): the number of insurance companies which the model correctly classified to be normal next year (TN) divided by all normal insurance companies (N) in the test sample;

- false negative ratio (FNR): the number of insurance companies which the model falsely classified to be normal next year (FN) divided by all problematic insurance companies (P) in the test sample.
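The four measures follow directly from the confusion counts, as the short sketch below shows. The function name is hypothetical, and the inputs are assumed to be sequences of booleans (or 0/1 values) for the actual and predicted flags.

```python
def classification_rates(actual, predicted):
    """Return TPR, FPR, TNR and FNR for binary actual/predicted flags."""
    pairs = [(bool(a), bool(p)) for a, p in zip(actual, predicted)]
    tp = sum(1 for a, p in pairs if a and p)
    fn = sum(1 for a, p in pairs if a and not p)
    fp = sum(1 for a, p in pairs if not a and p)
    tn = sum(1 for a, p in pairs if not a and not p)
    positives, negatives = tp + fn, fp + tn
    if positives == 0 or negatives == 0:
        raise ValueError("both classes must be present in the sample")
    return {
        "TPR": tp / positives,
        "FNR": fn / positives,
        "FPR": fp / negatives,
        "TNR": tn / negatives,
    }
```

By construction, TPR and FNR sum to 1, as do FPR and TNR, so reporting the TP and TN ratios is sufficient to recover all four.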

The obtained measures of accuracy are presented in Table 2.

Considering the classification results for the test sample, the model seems to perform well. To test the model’s robustness, we applied a bootstrapping method. We created 100 development and test bootstrap samples. For each pair of samples, we re-estimated the model, resulting in 100 iterations. For each of them, we measured the model’s performance based on the TP and TN ratios. The results are presented in Figure 5. We can observe that none of the developed models has a TP ratio below 0.73 or a TN ratio below 0.72. The histograms in the lower part of Figure 5 show results for TPR and TNR ratio pairs for each of the 100 tested models, sorted using the values of TP ratios. The lower left graph shows the distribution of the TP ratios, and the lower right graph shows the distribution of the TN ratios.
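The robustness routine can be outlined as a loop over repeated random splits. This is a sketch under assumptions: `fit_and_score` is a hypothetical callable that retrains the model on the development indices and returns the (TPR, TNR) pair for the test indices, and the splits are drawn without replacement, which may differ from the exact resampling scheme used in the study.

```python
import random


def repeated_split_check(n_obs, fit_and_score, n_rounds=100,
                         train_share=0.8, seed=0):
    """Re-split, re-estimate and score the model n_rounds times."""
    rng = random.Random(seed)
    results = []
    for _ in range(n_rounds):
        indices = list(range(n_obs))
        rng.shuffle(indices)
        cut = int(round(train_share * n_obs))
        # fit_and_score is user supplied: train on the first part,
        # evaluate on the second, and return (TPR, TNR).
        results.append(fit_and_score(indices[:cut], indices[cut:]))
    return results
```

The minimum of each ratio over the returned list corresponds to the lower bounds read from the results, and sorting the list by the first element reproduces the ordering by TP ratio.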

4. Discussion and Conclusions

The implications of the results are theoretical and practical. First, from the model development, we can learn that there exist similarities and resemblances in the behaviour of groups of economic subjects, among which some patterns can be recognized to lead to less favourable capital strength positions. It is interesting that similar observations were made for the banking sector [39]. It would be worth discussing the possible reasons for this.

The developed model can be used in practice on new data as a tool to detect a path toward unfavourable locations on the map. The supervisor, and potentially also the investors or rating agencies, can use the result to gain information on expected financial deterioration for the future based on current data. As the company is, based on current data, not yet in financial distress, but only potentially could become so, the insurance sector’s supervisor can, with a closer investigation, examine why the model has placed an individual insurance company in an undesirable location on the map. In this way, the proposed approach has the potential to become a suptech tool in the field of early warning systems.

Using this tool for supervision purposes contributes to the ability of insurance market supervisors to identify earlier the behaviour of an individual insurance company which might lead to financial deterioration in the future. In this way, earlier intervention is possible to prevent financial instability. Contributing to financial stability is of great public interest and safeguards households’ social security in their investments in financial markets and as policyholders of insurance companies.

The development of the model had several originalities, and these are the resulting characteristics of the development process:

(1) The main particularity is the exclusion of SCR data from the development process as it is not presented to the model in the learning phase.

(2) A routine for a robustness check was introduced. It is based on a bootstrap technique where the model training was repeated 100 times on randomly split samples. The analysis shows remarkable robustness.

(3) The neural network model places the insurance companies on a generated map, which reveals structural properties of the European insurance market.

The methodological approach to the research question in this study is non-traditional and includes the usage of neural network technologies. In the methodological approach, the variable which expresses financial deterioration was not used in the training (estimation) of the model, which differs from a traditional approach. Also, financial deterioration is defined with the help of the SCR ratio in a manner aimed at using the model as a very early warning system, in contrast to the bankruptcy or insolvency events often used. Similar yet different studies might have used specialized types of insurance companies, which, together with differences in regulation, can lead to characteristics of the researched database that have an important influence on the results. Therefore, a direct (numerical) benchmark of our results against studies which are similar in their research question but differ importantly from our study would be inappropriate and misleading.

Even though a single source of data was used for model development and testing (i.e., A.M. Best insurers’ yearly financial statements’ data), the analysis of data quality during pre-processing showed that there is room for improvement. Also, the data of higher frequency (for example, quarterly data such as that used in the banking supervision process), can make the model more interesting. Additional data would also make it possible to apply Solvency II SCR ratios for classifying insurance companies into groups and more detailed classification can be made.

Further improvements can also be made in coding and analysis of the results. The movement of individual insurance companies on the map through time can be traced, and direction trajectories can be estimated. This would allow us to identify insurance companies with possible future financial distress even earlier.

The same model can also be used to study differences between companies operating in different countries/markets. Homogeneity measures can be developed to trace the effect of common regulation in EU.

Finally, other AI methods can also be tested to check whether the classification results can be improved further. By applying OSS-SOM [45], the impact of border effects on the normal SOM can be studied. Additionally, besides the unsupervised methods, supervised methods can also be applied. Depending on the properties of an enhanced dataset, more complex types of neural networks can be used. Probably the most promising further development would be to switch to data of a higher frequency, such as quarterly data, which are available to supervisors. It would lead to a true online model.