RTAD: A Real-Time Animal Object Detection Model Based on a Large Selective Kernel and Channel Pruning

Abstract

1. Introduction

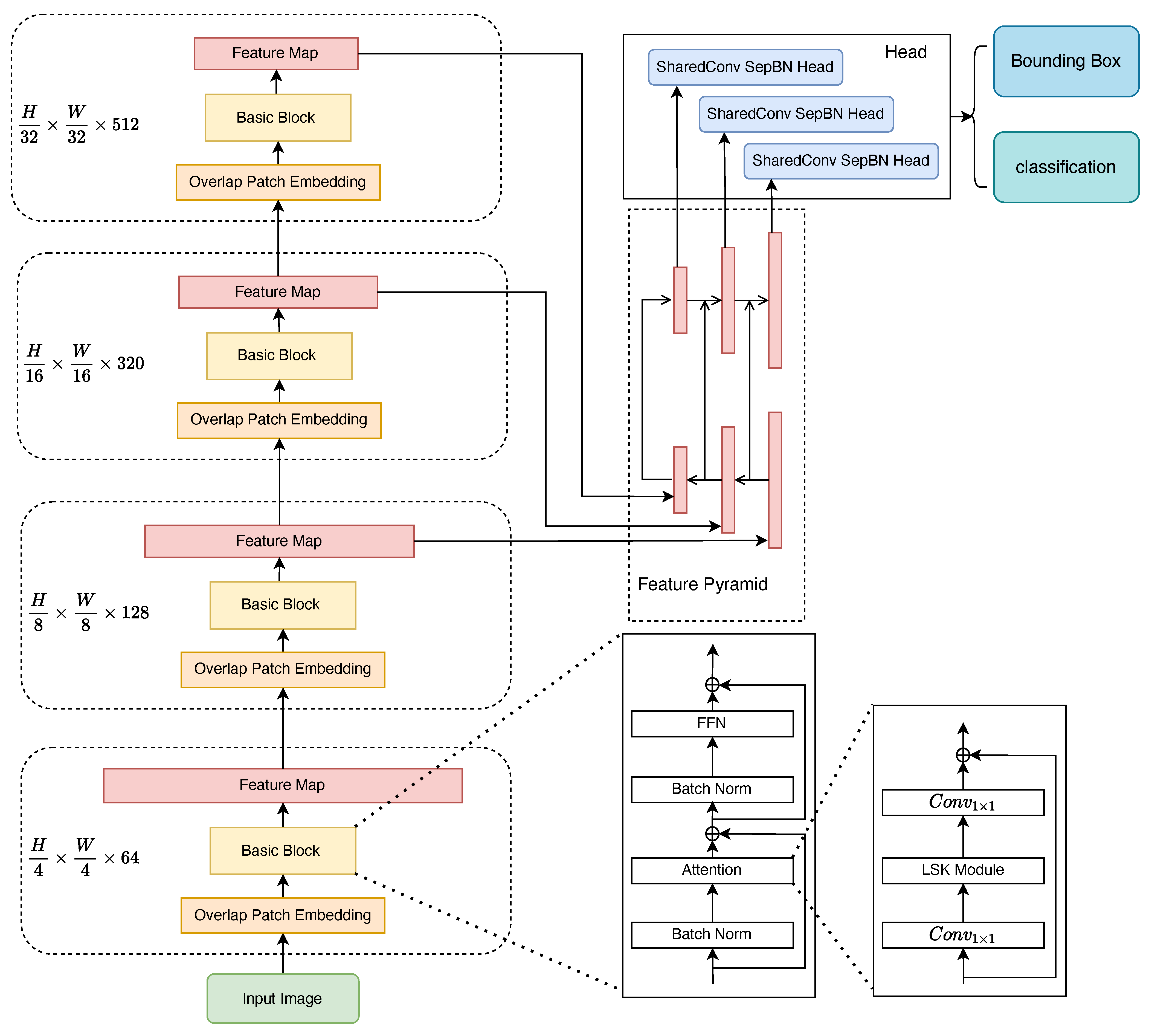

- Building on the basic structure of the Transformer model and on the LSK module, which offers a larger receptive field, we constructed a backbone network with strong representational ability.

- To further reduce parameters and computational cost, we introduced channel pruning based on Fisher information to remove low-importance channels.

- Our RTAD has fewer than half the parameters of YOLOv8-s (4.53 M vs. 11.16 M) and surpasses it by 6.2 AP (71.7 vs. 65.5).
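Group Fisher Pruning [21] scores a channel by how much the loss is expected to rise if that channel is masked out, using a Fisher-information approximation built from the channel's activations and their gradients. The sketch below computes this per-channel score for a single layer in plain Python. It is an illustration under stated assumptions, not RTAD's code: the function name and the nested-list tensor layout are hypothetical, and the grouping of coupled channels across layers that Group Fisher Pruning performs is omitted.

```python
def fisher_channel_importance(activations, gradients):
    """Fisher-style importance score for each channel of one layer.

    activations[n][c][h][w]: the layer's output feature map for sample n.
    gradients[n][c][h][w]:   dLoss/d(feature map) for the same sample.
    Score for channel c: (1 / 2N) * sum_n (sum_{h,w} a * g)^2, i.e. half the
    mean squared gradient of the loss w.r.t. a virtual per-channel mask.
    """
    n_samples = len(activations)
    n_channels = len(activations[0])
    scores = [0.0] * n_channels
    for a_n, g_n in zip(activations, gradients):
        for c in range(n_channels):
            # dLoss/d(mask_c) for this sample: activation * gradient summed over space.
            mask_grad = sum(
                a * g
                for a_row, g_row in zip(a_n[c], g_n[c])
                for a, g in zip(a_row, g_row)
            )
            scores[c] += mask_grad ** 2
    return [s / (2 * n_samples) for s in scores]


# Toy example: one sample, two channels, 1 x 2 feature maps.
acts = [[[[1.0, 2.0]], [[0.5, 0.5]]]]
grads = [[[[0.1, 0.1]], [[0.0, 0.2]]]]
scores = fisher_channel_importance(acts, grads)
prune_first = min(range(len(scores)), key=scores.__getitem__)  # lowest score
```

In practice the scores would be accumulated over many mini-batches, and the lowest-scoring channels become the pruning candidates.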

2. Methods

2.1. Backbone

2.2. Basic Block

Algorithm 1. LSK module
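The LSK module originates in LSKNet [20]. To make its selection mechanism concrete, the following minimal pure-Python sketch mirrors the LSKNet reference block: a 5×5 depthwise convolution followed by a 7×7 depthwise convolution with dilation 3 yields two branches with increasing receptive fields; each branch is reduced by a 1×1 convolution; channel-wise average and max pooling summarize both; a 7×7 convolution with a sigmoid produces one spatial weight map per branch; and the weighted sum, projected back by a 1×1 convolution, gates the input. Assumptions: RTAD keeps LSKNet's kernel sizes; biases, normalization, and batching are omitted; the helper names and the weight dictionary `p` are hypothetical.

```python
import math
import random


def conv2d(x, weight, dilation=1):
    """'Same'-size 2D convolution with zero padding and no bias.

    x: list of C_in feature maps, each an H x W list of lists.
    weight: weight[o][c] is a k x k kernel (k odd) from input c to output o.
    """
    h, w = len(x[0]), len(x[0][0])
    k = len(weight[0][0])
    pad = dilation * (k - 1) // 2
    out = []
    for kernels in weight:
        o = [[0.0] * w for _ in range(h)]
        for fmap, ker in zip(x, kernels):
            for i in range(h):
                for j in range(w):
                    for u in range(k):
                        r = i + u * dilation - pad
                        if not 0 <= r < h:
                            continue
                        for v in range(k):
                            col = j + v * dilation - pad
                            if 0 <= col < w:
                                o[i][j] += ker[u][v] * fmap[r][col]
        out.append(o)
    return out


def depthwise(x, kernels, dilation=1):
    # One k x k kernel per channel; channels are not mixed.
    return [conv2d([m], [[ker]], dilation)[0] for m, ker in zip(x, kernels)]


def lsk_forward(x, p):
    """LSK block: two depthwise branches with growing receptive fields,
    fused by spatial selection weights, then used to gate the input."""
    h, w = len(x[0]), len(x[0][0])
    a1 = depthwise(x, p["dw5"])               # 5x5 depthwise
    a2 = depthwise(a1, p["dw7"], dilation=3)  # 7x7 depthwise, dilation 3
    b1 = conv2d(a1, p["pw1"])                 # 1x1 conv, C -> C/2
    b2 = conv2d(a2, p["pw2"])                 # 1x1 conv, C -> C/2
    cat = b1 + b2                             # concatenate along channels
    avg = [[sum(m[i][j] for m in cat) / len(cat) for j in range(w)] for i in range(h)]
    mx = [[max(m[i][j] for m in cat) for j in range(w)] for i in range(h)]
    # 7x7 conv maps the pooled 2-channel descriptor to one weight map per branch.
    sel = conv2d([avg, mx], p["squeeze"])
    sel = [[[1.0 / (1.0 + math.exp(-z)) for z in row] for row in m] for m in sel]
    mixed = [
        [[b1[c][i][j] * sel[0][i][j] + b2[c][i][j] * sel[1][i][j] for j in range(w)]
         for i in range(h)]
        for c in range(len(b1))
    ]
    attn = conv2d(mixed, p["pw3"])            # 1x1 conv, C/2 -> C
    return [[[x[c][i][j] * attn[c][i][j] for j in range(w)] for i in range(h)]
            for c in range(len(x))]


def random_params(c, rng):
    """Hypothetical random weights for a block with c (even) channels."""
    def ker(k):
        return [[rng.uniform(-0.1, 0.1) for _ in range(k)] for _ in range(k)]
    return {
        "dw5": [ker(5) for _ in range(c)],
        "dw7": [ker(7) for _ in range(c)],
        "pw1": [[ker(1) for _ in range(c)] for _ in range(c // 2)],
        "pw2": [[ker(1) for _ in range(c)] for _ in range(c // 2)],
        "squeeze": [[ker(7) for _ in range(2)] for _ in range(2)],
        "pw3": [[ker(1) for _ in range(c // 2)] for _ in range(c)],
    }


rng = random.Random(0)
x = [[[rng.uniform(-1, 1) for _ in range(8)] for _ in range(8)] for _ in range(4)]
y = lsk_forward(x, random_params(4, rng))  # same shape as x: 4 x 8 x 8
```

Because the selection weights are computed per pixel, each location can lean on the small-kernel branch or the dilated large-kernel branch, which is what gives the module its adaptive receptive field.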

2.3. Shared Parameter Object Detector

2.4. Pruning Algorithm Based on Fisher Information

2.5. Implementation Details

2.5.1. Label Assignment

2.5.2. Data Augmentation

2.5.3. Two-Stage Training Approach

2.6. Experiment

2.7. Dataset

2.8. Experimental Setup

2.9. Evaluation Metrics

3. Experimental Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| ViT | Vision Transformer |

| LSK | Large Selective Kernel |

| SPP | Spatial Pyramid Pooling |

| ACMix | Self-Attention and Convolution Mixed Module |

References

- Díaz, S.; Fargione, J.; Chapin, F.S.; Tilman, D. Biodiversity Loss Threatens Human Well-Being. PLoS Biol. 2006, 4, e277.

- Ukwuoma, C.C.; Qin, Z.; Yussif, S.B.; Happy, M.N.; Nneji, G.U.; Urama, G.C.; Ukwuoma, C.D.; Darkwa, N.B.; Agobah, H. Animal species detection and classification framework based on modified multi-scale attention mechanism and feature pyramid network. Sci. Afr. 2022, 16, e01151.

- Neethirajan, S. Recent advances in wearable sensors for animal health management. Sens. Bio-Sens. Res. 2017, 12, 15–29.

- Zheng, Z.; Li, J.; Qin, L. YOLO-BYTE: An efficient multi-object tracking algorithm for automatic monitoring of dairy cows. Comput. Electron. Agric. 2023, 209, 107857.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. arXiv 2022, arXiv:2207.02696.

- Pan, X.; Ge, C.; Lu, R.; Song, S.; Chen, G.; Huang, Z.; Huang, G. On the Integration of Self-Attention and Convolution. arXiv 2022, arXiv:2111.14556.

- Qiao, Y.; Guo, Y.; He, D. Cattle body detection based on YOLOv5-ASFF for precision livestock farming. Comput. Electron. Agric. 2023, 204, 107579.

- Liu, S.; Huang, D.; Wang, Y. Learning Spatial Fusion for Single-Shot Object Detection. arXiv 2019, arXiv:1911.09516.

- Yang, Q.; Xiao, D.; Cai, J. Pig mounting behaviour recognition based on video spatial–temporal features. Biosyst. Eng. 2021, 206, 55–66.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2015; Volume 28.

- Riekert, M.; Klein, A.; Adrion, F.; Hoffmann, C.; Gallmann, E. Automatically detecting pig position and posture by 2D camera imaging and deep learning. Comput. Electron. Agric. 2020, 174, 105391.

- Sha, J.; Zeng, G.L.; Xu, Z.F.; Yang, Y. A light-weight and accurate pig detection method based on complex scenes. Multimed. Tools Appl. 2023, 82, 13649–13665.

- Ocepek, M.; Žnidar, A.; Lavrič, M.; Škorjanc, D.; Andersen, I.L. DigiPig: First Developments of an Automated Monitoring System for Body, Head and Tail Detection in Intensive Pig Farming. Agriculture 2021, 12, 2.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- Shao, H.; Pu, J.; Mu, J. Pig-Posture Recognition Based on Computer Vision: Dataset and Exploration. Animals 2021, 11, 1295.

- Maheswari, M.; Josephine, M.; Jeyabalaraja, V. Customized deep neural network model for autonomous and efficient surveillance of wildlife in national parks. Comput. Electr. Eng. 2022, 100, 107913.

- Ulhaq, A.; Adams, P.; Cox, T.E.; Khan, A.; Low, T.; Paul, M. Automated Detection of Animals in Low-Resolution Airborne Thermal Imagery. Remote Sens. 2021, 13, 3276.

- Roy, A.M.; Bhaduri, J.; Kumar, T.; Raj, K. WilDect-YOLO: An efficient and robust computer vision-based accurate object localization model for automated endangered wildlife detection. Ecol. Inform. 2023, 75, 101919.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer Using Shifted Windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, BC, Canada, 11–17 October 2021; pp. 10012–10022.

- Li, Y.; Hou, Q.; Zheng, Z.; Cheng, M.M.; Yang, J.; Li, X. Large Selective Kernel Network for Remote Sensing Object Detection. arXiv 2023, arXiv:2303.09030.

- Liu, L.; Zhang, S.; Kuang, Z.; Zhou, A.; Xue, J.H.; Wang, X.; Chen, Y.; Yang, W.; Liao, Q.; Zhang, W. Group Fisher Pruning for Practical Network Compression. arXiv 2021, arXiv:2108.00708.

- Yu, H.; Xu, Y.; Zhang, J.; Zhao, W.; Guan, Z.; Tao, D. AP-10K: A Benchmark for Animal Pose Estimation in the Wild. arXiv 2021, arXiv:2108.12617.

- Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Alvarez, J.M.; Luo, P. SegFormer: Simple and Efficient Design for Semantic Segmentation with Transformers. arXiv 2021, arXiv:2105.15203.

- Dong, X.; Bao, J.; Chen, D.; Zhang, W.; Yu, N.; Yuan, L.; Chen, D.; Guo, B. CSWin Transformer: A General Vision Transformer Backbone with Cross-Shaped Windows. arXiv 2022, arXiv:2107.00652.

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. arXiv 2017, arXiv:1612.03144.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. arXiv 2016, arXiv:1506.02640.

- Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. YOLOX: Exceeding YOLO Series in 2021. arXiv 2021, arXiv:2107.08430.

- Lyu, C.; Zhang, W.; Huang, H.; Zhou, Y.; Wang, Y.; Liu, Y.; Zhang, S.; Chen, K. RTMDet: An Empirical Study of Designing Real-Time Object Detectors. arXiv 2022, arXiv:2212.07784.

- Zhang, H.; Cisse, M.; Dauphin, Y.N.; Lopez-Paz, D. mixup: Beyond Empirical Risk Minimization. arXiv 2018, arXiv:1710.09412.

- Ghiasi, G.; Cui, Y.; Srinivas, A.; Qian, R.; Lin, T.Y.; Cubuk, E.D.; Le, Q.V.; Zoph, B. Simple Copy-Paste is a Strong Data Augmentation Method for Instance Segmentation. arXiv 2021, arXiv:2012.07177.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, L. Microsoft COCO: Common Objects in Context. In Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014, Proceedings, Part V 13; Springer International Publishing: Berlin/Heidelberg, Germany, 2014.

- Jocher, G.; Chaurasia, A.; Qiu, J. YOLO by Ultralytics. 2023. Available online: https://github.com/ultralytics/ultralytics (accessed on 11 September 2022).

- Feng, C.; Zhong, Y.; Gao, Y.; Scott, M.R.; Huang, W. TOOD: Task-aligned One-stage Object Detection. arXiv 2021, arXiv:2108.07755.

- Zhang, H.; Wang, Y.; Dayoub, F.; Sünderhauf, N. VarifocalNet: An IoU-aware Dense Object Detector. arXiv 2021, arXiv:2008.13367.

| Item | Configuration |
|---|---|
| Framework | PyTorch 1.12 |
| GPU | NVIDIA GeForce RTX 3090 |
| Optimizer | AdamW |
| Data augmentation | Mosaic, MixUp (first 280 epochs), LSJ (last 20 epochs) |
| Operating system | Ubuntu 18.04 |
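The data-augmentation row above, together with the two-stage training approach of Section 2.5.3, amounts to an epoch-based switch between augmentation pipelines. The following is a minimal sketch, assuming 300 epochs in total (280 + 20) and that Mosaic, like MixUp, is active only in the first stage; the function names and the 640-pixel base size are hypothetical. The LSJ resize range of 0.1 to 2.0 follows Ghiasi et al. [30], where the resized image is then cropped or padded back to the fixed training size.

```python
import random

TOTAL_EPOCHS = 300   # assumption: 280 + 20 epochs, as implied by the table
STAGE2_EPOCHS = 20   # final stage with LSJ only


def augmentations_for_epoch(epoch, total=TOTAL_EPOCHS, stage2=STAGE2_EPOCHS):
    """Names of the augmentations active at a 0-based epoch index."""
    if not 0 <= epoch < total:
        raise ValueError(f"epoch must be in [0, {total})")
    if epoch < total - stage2:
        return ["Mosaic", "MixUp"]   # stage 1: strong mixing augmentations
    return ["LSJ"]                   # stage 2: large-scale jittering only


def lsj_target_size(base_size, rng, low=0.1, high=2.0):
    """Side length after LSJ resizing; the image would then be cropped or
    padded back to base_size."""
    return max(1, round(base_size * rng.uniform(low, high)))


rng = random.Random(0)
print(augmentations_for_epoch(279), augmentations_for_epoch(280))  # ['Mosaic', 'MixUp'] ['LSJ']
print(lsj_target_size(640, rng))
```

Ending with a milder, more realistic pipeline lets the model adapt to undistorted image statistics before training stops.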

| Retention ratio | AP | FPS |
|---|---|---|
| 1 | 70.8 | 75.1 |
| 0.9 | 71.7 | 75.2 |
| 0.8 | 71.3 | 75.3 |
| 0.7 | 70.5 | 75.1 |
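The retention ratio is the fraction of channels kept after pruning; in the table above, keeping 90% of the channels gives the highest AP (71.7). A minimal sketch of turning per-channel importance scores into a kept-channel set at a given ratio follows. It assumes one-shot, per-layer selection with rounding to the nearest channel count; the paper may instead prune iteratively or rank channels globally, and the function name and toy scores are hypothetical.

```python
def channels_to_keep(scores, retention_ratio):
    """Indices of channels kept in one layer at a given retention ratio.

    scores: per-channel importance (higher means more important), e.g.
            Fisher scores. retention_ratio: fraction in (0, 1] to keep.
    """
    if not 0 < retention_ratio <= 1:
        raise ValueError("retention_ratio must be in (0, 1]")
    # Assumption: round to the nearest channel count, keeping at least one.
    n_keep = max(1, round(retention_ratio * len(scores)))
    ranked = sorted(range(len(scores)), key=lambda c: scores[c], reverse=True)
    return sorted(ranked[:n_keep])  # restore original channel order


scores = [0.9, 0.1, 0.5, 0.7, 0.3, 0.05, 0.8, 0.6, 0.2, 0.4]
for ratio in (1.0, 0.9, 0.8, 0.7):
    print(ratio, channels_to_keep(scores, ratio))
```

Returning indices in their original order makes it straightforward to slice the weights of the pruned layer and the matching input channels of the layer that follows.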

| Model | AP | AP50 | AP75 | | | | | | FPS | GFLOPs | Param# (M) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| RTMDet-m [28] | 69.3 | 89.3 | 78 | 33.6 | 70.3 | 64.5 | 79.8 | 81.1 | 74.1 | 39.16 | 24.69 |
| RTMDet-s [28] | 68.1 | 89 | 76.9 | 30 | 69.1 | 63.5 | 78.5 | 79.8 | 74.6 | 14.81 | 8.88 |
| YOLOv8-s [32] | 65.5 | 86.2 | 73.6 | 27.4 | 66.6 | 62.9 | 76.8 | 77.3 | 97.9 | 14.33 | 11.16 |
| YOLOX-m [27] | 67.5 | 88 | 76.2 | 34.9 | 68.5 | 62.2 | 74.4 | 74.6 | 55.2 | 36.84 | 25.31 |
| YOLOX-s [27] | 61.2 | 84.6 | 69.5 | 32.6 | 62.1 | 58.6 | 70.2 | 70.4 | 68.4 | 13.38 | 8.96 |
| TOOD-Res101 [33] | 66.5 | 87 | 74.7 | 25.2 | 67.6 | 64.4 | 74.8 | 74.8 | 28 | 103.65 | 50.91 |
| VFNet-Res101 [34] | 63 | 83.6 | 70.3 | 19.6 | 64.2 | 62 | 72.6 | 72.7 | 31.4 | 107.03 | 51.6 |
| Ours (w/o pruning) | 70.8 | 91.3 | 79.9 | 35.5 | 71.7 | 64.8 | 79.8 | 81.1 | 75.1 | 10.54 | 4.73 |
| Ours (with pruning) | 71.7 | 92.1 | 80.5 | 35.4 | 72.5 | 64.9 | 80.0 | 81.3 | 75.3 | 9.57 | 4.53 |
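The headline comparison in the Introduction can be recomputed from this table. The short script below copies the relevant AP, GFLOPs, and Param# values (Param# read as millions of parameters, an assumption) and derives the AP gain over YOLOv8-s, the parameter ratio, and the savings obtained by pruning; the variable names are illustrative.

```python
# Values copied from the comparison table (AP, GFLOPs, Param# in millions).
yolov8_s = {"ap": 65.5, "gflops": 14.33, "params_m": 11.16}
ours_unpruned = {"ap": 70.8, "gflops": 10.54, "params_m": 4.73}
ours_pruned = {"ap": 71.7, "gflops": 9.57, "params_m": 4.53}

ap_gain = round(ours_pruned["ap"] - yolov8_s["ap"], 1)
param_ratio = ours_pruned["params_m"] / yolov8_s["params_m"]
flops_cut = 1 - ours_pruned["gflops"] / ours_unpruned["gflops"]
param_cut = 1 - ours_pruned["params_m"] / ours_unpruned["params_m"]

print(f"AP gain over YOLOv8-s: {ap_gain}")              # AP gain over YOLOv8-s: 6.2
print(f"Param ratio vs. YOLOv8-s: {param_ratio:.2f}")   # Param ratio vs. YOLOv8-s: 0.41
print(f"Pruning: -{flops_cut:.1%} GFLOPs, -{param_cut:.1%} params")  # Pruning: -9.2% GFLOPs, -4.2% params
```

The ratio of about 0.41 is what supports the "fewer than half the parameters" statement, while pruning trims roughly 9% of the computation of the unpruned model.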

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liu, S.; Fan, Q.; Zhao, C.; Li, S. RTAD: A Real-Time Animal Object Detection Model Based on a Large Selective Kernel and Channel Pruning. Information 2023, 14, 535. https://doi.org/10.3390/info14100535
