1. Introduction

Recommender systems act as experts on a matter, helping users find items that are tailored to their tastes. Most recommender systems rely on a user's past activity to generate recommendations. For example, a system may use the user's previous ratings of similar items to recommend further similar items (content-based methods), or it may find similar users, take the items that those users rated most positively, and recommend them to the target user (collaborative filtering).

However, a user who is new to the system has no previous data, so tailored recommendations cannot be generated. This situation is well known in the recommender systems field and is called the cold start problem. The most common ways to mitigate it are to ask users about their interests and tastes, or to ask them directly to rate some items, so that the recommender system has some data to work with. The main drawback of these methods is that they require time and effort from users, who therefore often ignore them.

The methods proposed in this work take a slightly different approach: instead of asking users to provide explicit data, the recommender system collects implicit data, so the user does not have to actively provide any information. More precisely, these implicit data are taken from the user’s social media stream. They are used to generate a user profile with a series of features that can then be used to classify users and, in turn, to generate the predictions on which a recommender system is built.

One of the main difficulties when building algorithms that aim to alleviate the cold start problem is finding a dataset with user profiles rich enough to overcome it. Although MovieLens (https://grouplens.org/datasets/movielens/, accessed on 20 July 2022) has become the standard for evaluating and benchmarking recommender system models, for the cold start problem there is no clear dataset that allows the evaluation of a user-feature-aware algorithm design such as the one described above.

This work introduces a new dataset designed for alleviating the cold start problem. The dataset has three tables:

Movies: contains information about the items (movies in this case) from which features can be extracted.

User profiles: contains information about the users. Some fields are already feature-like, and others can be used to derive features.

Ratings: contains the ratings given by the users to the items.
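As a rough illustration of how the three tables relate, the following sketch builds toy stand-ins with pandas and joins them through the ratings table. All column names and values here are hypothetical and chosen only for illustration; they are not taken from the published dataset files.

```python
import pandas as pd

# Toy stand-ins for the three tables. Column names and values are
# hypothetical, not the actual schema of the dataset.
movies = pd.DataFrame(
    {"movie_id": [100072, 100408], "genre": ["A", "B"], "duration_min": [110, 15]}
)
user_profiles = pd.DataFrame(
    {"user_id": [1, 2], "night_owl": [1, 0], "heavy_tweeter": [0, 1]}
)
ratings = pd.DataFrame(
    {"user_id": [1, 1, 2], "movie_id": [100072, 100408, 100072], "rating": [7, 4, 8]}
)

# Ratings acts as the link table: attach the user features and the
# item features to every individual rating.
joined = ratings.merge(user_profiles, on="user_id", how="left").merge(
    movies, on="movie_id", how="left"
)
print(joined)
```

Each row of `joined` then carries a rating together with the behavioral features of the user who gave it and the features of the rated movie, which is the kind of user-item connection the dataset is meant to support.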

This dataset was built from two sources: Filmaffinity (https://www.filmaffinity.com, accessed on 19 April 2022) for the ratings and movies tables, and Twitter (https://twitter.com, accessed on 19 April 2022) for the user profiles table. Because the dataset covers both user and item data, it is well suited to building recommender system models for cold start situations, making it easier to connect user profiles with item ratings.

Although several public datasets are available for recommender systems, as indicated in Section 2.2, they lack quality user data such as the behavioral user data that CSP provides. Some of these datasets include demographic data about the users, such as sex or age (this is the case of the LDOS–CoMoDa dataset), but the remaining variables are either item variables or contextual data (e.g., the date of the rating or the weather at the time of the rating). Contextual data can be a good asset, but user-related features are required to obtain more tailored recommendations. CSP is therefore well suited to cold start situations: it provides up to 12 behavioral variables that enable more powerful and accurate decision-making models built on implicit user data, which is a key aspect of models that aim to alleviate the cold start problem.

This work also describes an example use of the dataset, covering data cleaning, feature selection, and the design and evaluation of a recommender system model built on it.

This model follows a hybrid approach that combines collaborative filtering and content-based methods. In the evaluation, the recommended items had an average rating of 8.6 out of 10, compared with an average user rating of 7.38 (Table 8), which suggests that the model is accurate.

The rest of this work is organized as follows.

Section 2 presents the main concepts of recommender systems, with a focus on solutions to the cold start problem.

Section 3 provides a description of the dataset and the designed algorithm.

Section 4 provides the results from the previously mentioned model.

Section 5 provides the conclusion of this work.

5. Discussion

The main limitation of the dataset, the algorithm itself, and any models that leverage the dataset is that, to be applied to real users, those users must have a Twitter account (or some other kind of social stream data) from which their behavioral profile can be built. This could be seen as a drawback. However, it is the backbone of the whole work: the use of social media data to mitigate the cold start problem.

The main benefit of CSP-Dataset is that it offers two different tables for the same individual, representing, on one side, the behavior of the user and, on the other side, the user's movie ratings. Moreover, the dataset also includes a comprehensive table with the characteristics of the items (movies). Therefore, accurate predictions could be produced by extrapolating from the features in one table (behavioral data from Twitter) to the other (rating data), establishing correlations between behavior features and item features.

The presented dataset can therefore be used to build models for operational applications. For example, streaming applications such as Netflix or Spotify could ask their users to grant temporary access to their social stream (e.g., Twitter) and then provide instant, tailored recommendations in a matter of seconds, without requiring users to rate items or enter profile data manually.

This work provides a dataset of high interest, given the scarcity of datasets with extensive item and user behavioral features. The dataset will enable researchers to build future models with cutting-edge algorithms (e.g., neural networks) and, because it contains a large amount of user behavioral data, it is a good candidate to become the standard dataset for the cold start problem.

Moreover, the results of the experiments support the hypothesis that the presented dataset can be used to generate recommendations for users without any previous rating information from them. They also support the extrapolation of user classification data to rating behavior as a valid approach. The dataset can thus be used by other researchers in further studies on alleviating the cold start problem and, because of its combined user and item information, it is a candidate to become one of the standard datasets for that problem.

The algorithm used produced good results in this evaluation, although many other techniques could be applied to further improve the accuracy of the recommendations. Moreover, other features could be derived from the raw data in future work.

Author Contributions

Conceptualization, J.H.-Z., C.P. and E.H.-V.; creation and curation of the dataset, J.H.-Z., Á.T.-L. and J.B.-M.; conceived, designed, and performed the experiment, J.H.-Z., Á.T.-L. and J.B.-M.; analyzed and interpreted the data, J.H.-Z., C.P., Á.T.-L. and J.B.-M.; wrote the manuscript, J.H.-Z. and C.P.; co-supervised the analysis, reviewed and edited the manuscript, and contributed to the discussion, J.H.-Z., C.P. and E.H.-V. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partially supported by the Spanish State Research Agency through the project PID2019-103880RB-I00 and the Andalusian Agency project P20_00673.

Data Availability Statement

Conflicts of Interest

The authors declare no conflict of interest.


Figure 1.

Movie country distribution.

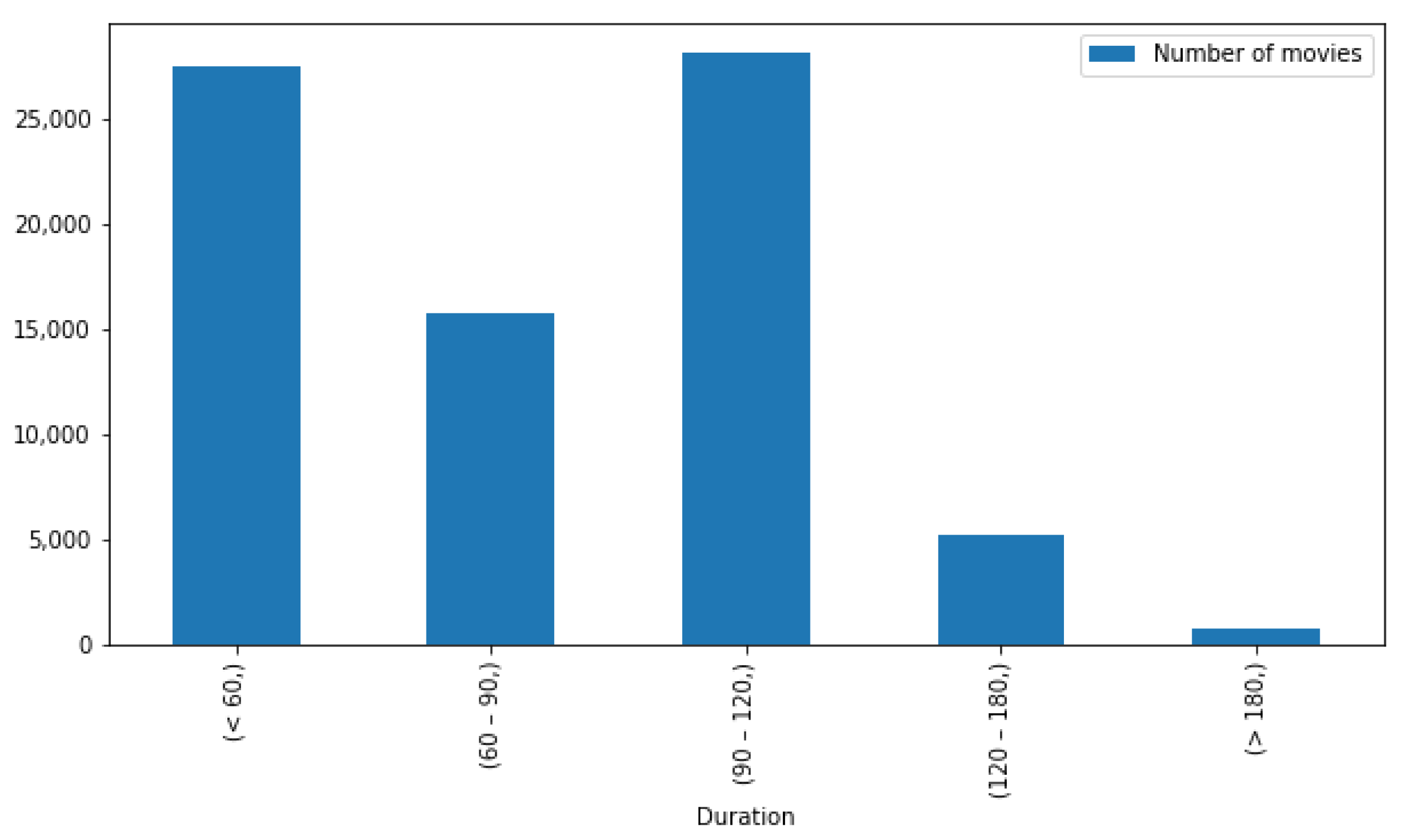

Figure 2.

Movie duration distribution.

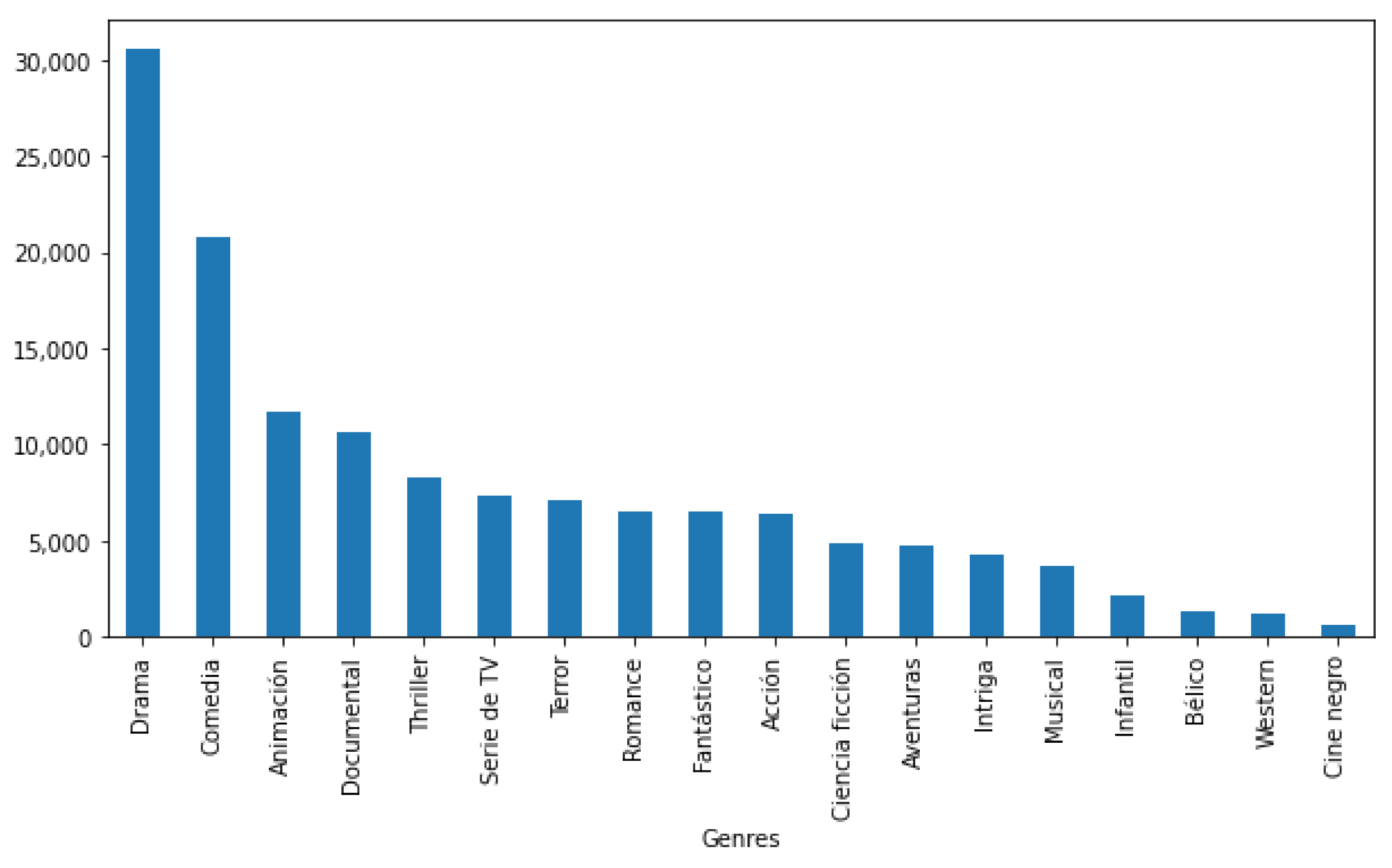

Figure 3.

Movie genre distribution.

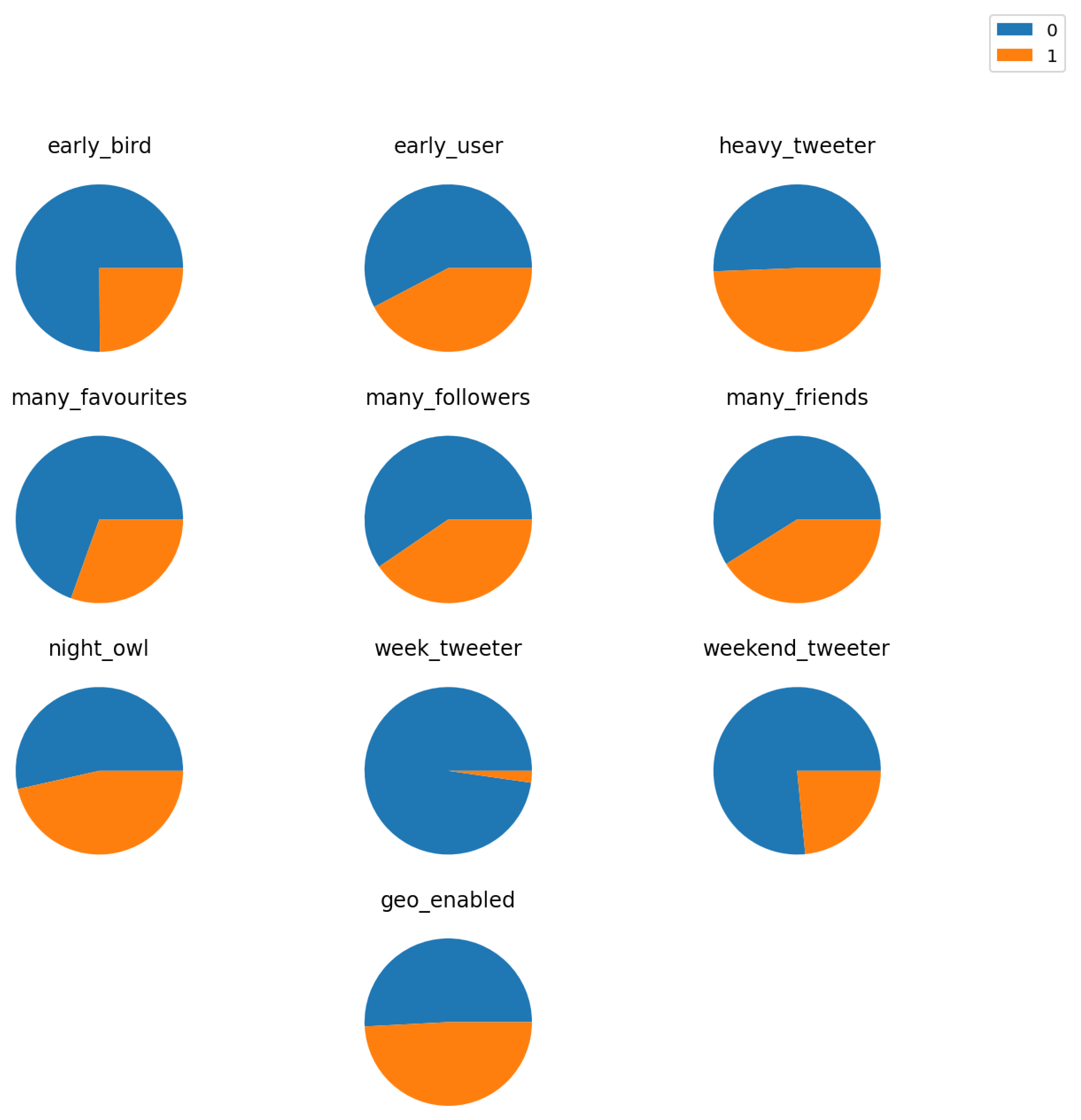

Figure 4.

All user feature distribution.

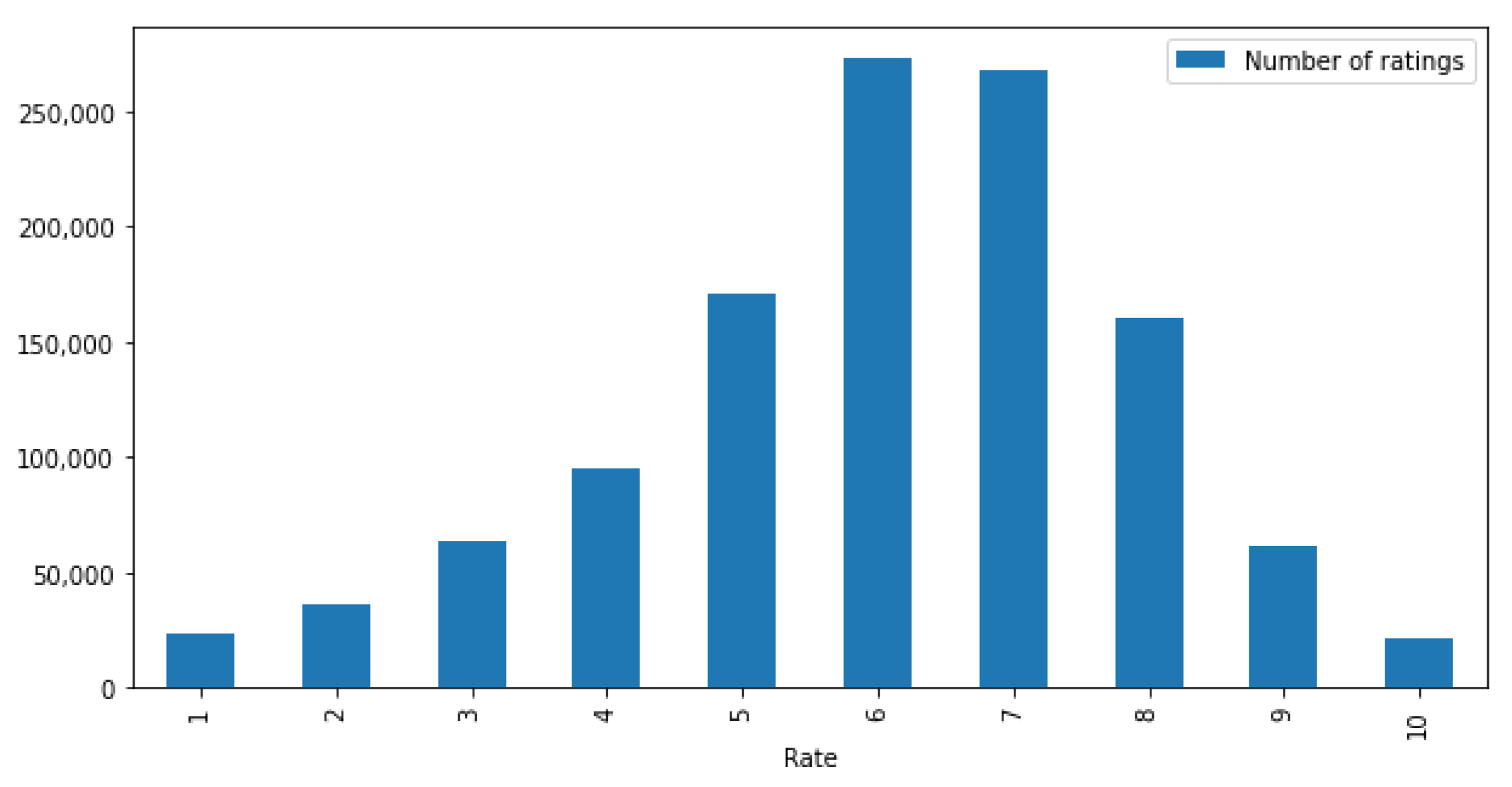

Figure 5.

Rating distribution.

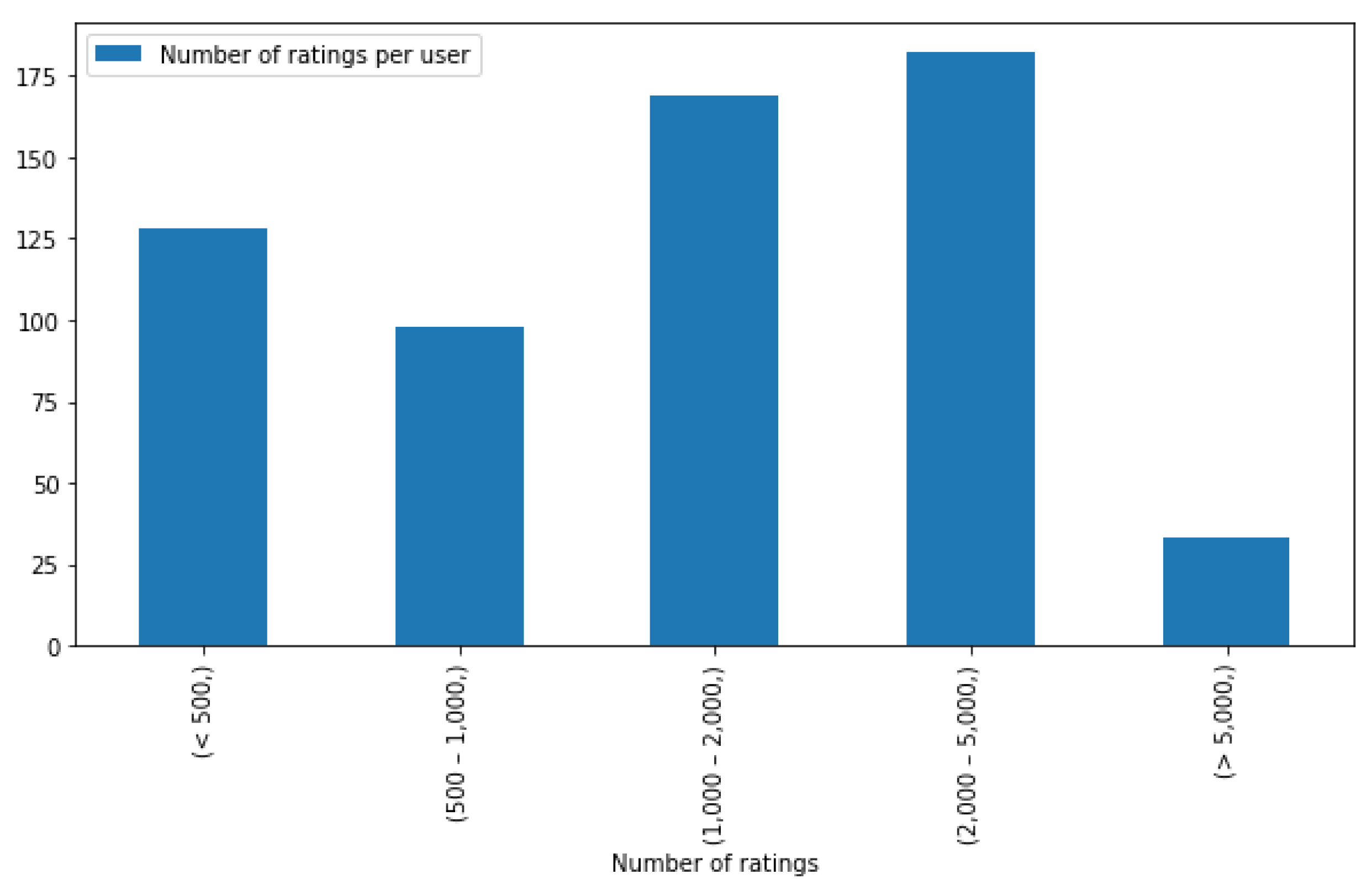

Figure 6.

Number of rating per user distribution.

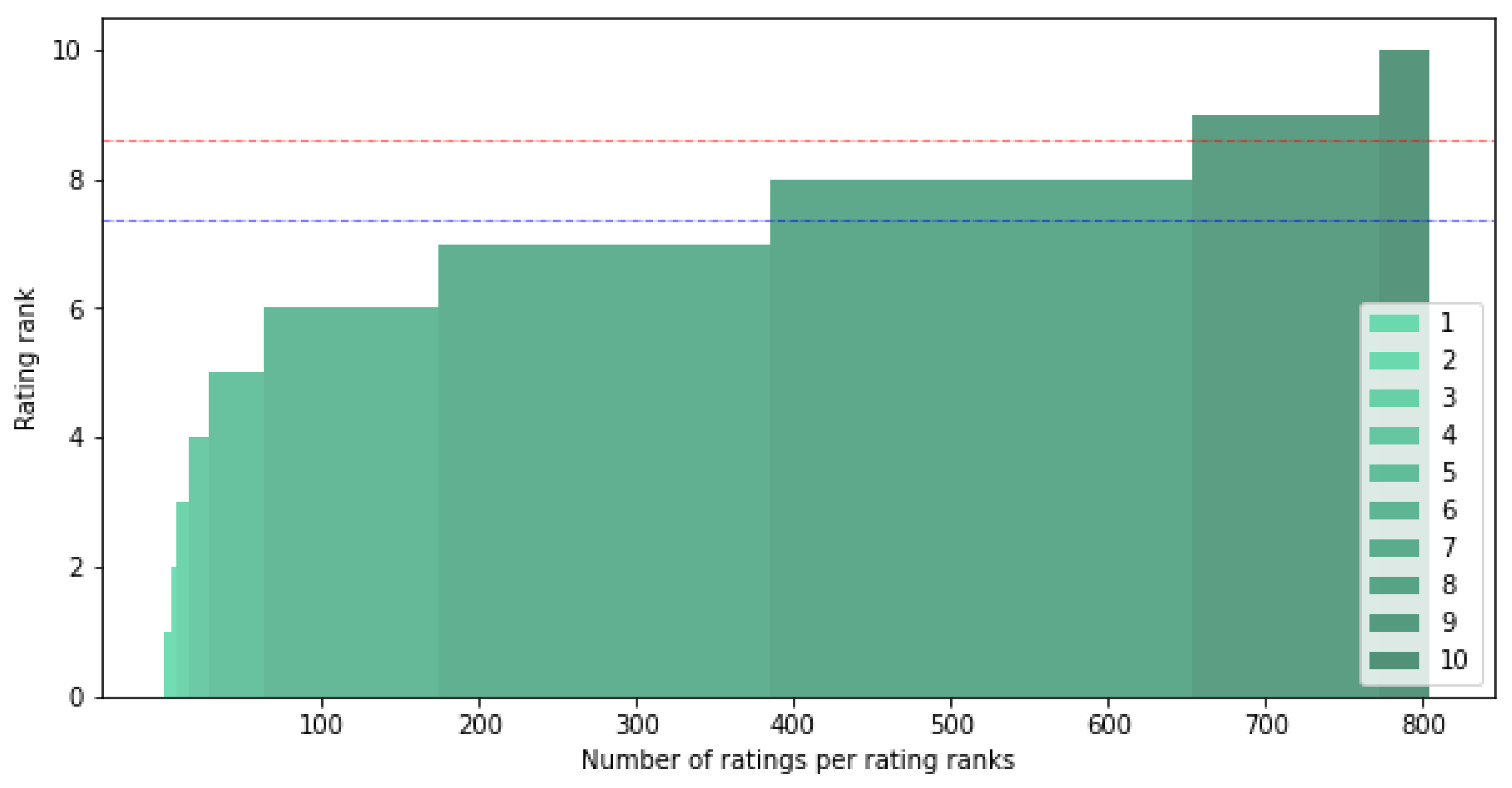

Figure 7.

User rating distribution in comparison with the average of the recommended items (red) and the item rating average from the user (blue).

Table 1. Dataframe feature ratings matrix, including the average rating per movie for all different user feature values. The shape of the matrix is (20, 2831), where 20 is the number of distinct user feature values, and 2831 is the number of movies after filtering out movies without enough ratings. Columns are indexed by Movie_id.

| Feature_key | Feature_value | 100072 | 100408 | 100958 | … |
|---|---|---|---|---|---|
| early_bird | 0 | 6.463918 | 3.902174 | 8.345865 | … |
| | 1 | 6.545455 | 4.000000 | 8.550000 | … |
| early_user | 0 | 6.486111 | 3.957143 | 8.401869 | … |
| | 1 | 6.482759 | 3.863636 | 8.378788 | … |
| geo_enabled | 0 | 6.349206 | 4.166667 | 8.379310 | … |
| | 1 | 6.611940 | 3.700000 | 8.406977 | … |
| heavy_tweeter | 0 | 6.555556 | 4.000000 | 8.218391 | … |
| | 1 | 6.396552 | 3.839286 | 8.569767 | … |
| many_favourites | 0 | 6.521739 | 3.860759 | 8.362069 | … |
| | 1 | 6.394737 | 4.057143 | 8.456140 | … |
| many_followers | 0 | 6.629630 | 3.840000 | 8.336735 | … |
| | 1 | 6.244898 | 4.076923 | 8.466667 | … |
| many_friends | 0 | 6.600000 | 4.060606 | 8.377551 | … |
| | 1 | 6.327273 | 3.729167 | 8.413333 | … |
| night_owl | 0 | 6.470588 | 3.746032 | 8.384615 | … |
| | 1 | 6.500000 | 4.137255 | 8.402439 | … |
| week_tweeter | 0 | 6.488372 | 3.911504 | 8.390533 | … |
| | 1 | 6.000000 | 5.000000 | 8.500000 | … |
| weekend_tweeter | 0 | 6.395833 | 3.962500 | 8.411348 | … |
| | 1 | 6.735294 | 3.823529 | 8.312500 | … |
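A matrix with the layout of Table 1 can be obtained by averaging ratings per movie within each user feature value. The following is a minimal sketch of one way to do this with pandas, assuming long-format `ratings` and wide-format `profiles` frames; the frame names, column names, and toy values are assumptions made for illustration and are not the authors' code.

```python
import pandas as pd

# Invented toy data. Feature names mirror Table 1, values are made up.
profiles = pd.DataFrame(
    {"user_id": [1, 2, 3], "night_owl": [0, 1, 1], "geo_enabled": [1, 1, 0]}
)
ratings = pd.DataFrame(
    {
        "user_id": [1, 2, 3, 1, 3],
        "movie_id": [100072, 100072, 100072, 100408, 100408],
        "rating": [6, 8, 7, 4, 2],
    }
)

# Long format: one row per (user, feature_key, feature_value).
long_profiles = profiles.melt(
    id_vars="user_id", var_name="feature_key", value_name="feature_value"
)

# Attach every rating to each feature value of the user who gave it,
# then average per (feature_key, feature_value) row and movie column.
feature_ratings = ratings.merge(long_profiles, on="user_id").pivot_table(
    index=["feature_key", "feature_value"],
    columns="movie_id",
    values="rating",
    aggfunc="mean",
)
print(feature_ratings)
```

With 10 binary features, as in Table 1, the same steps would yield 20 rows, one per feature value.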

Table 2. Dataframe movies, including all the movies with all movie features. The shape is (2831, 412), where 2831 is the number of movies, and 412 is the number of features.

| id | Thriller | Drama | Romance | … | 20 s | Short | Long |
|---|---|---|---|---|---|---|---|
| 100072 | 0 | 1 | 0 | … | 0 | 0 | 0 |
| 100408 | 0 | 0 | 0 | … | 0 | 1 | 0 |
| 100958 | 0 | 0 | 0 | … | 0 | 0 | 1 |
| … | … | … | … | … | … | … | … |

Table 3. Dataframe matrix resulting from filtering Table 1 by the feature values of the selected user. The resulting shape is (10, 2831), where 10 is the number of feature values of the selected user, and 2831 is the number of movies. Columns are indexed by Movie_id.

| Feature_key | Feature_value | 100072 | 100408 | 100958 | … |
|---|---|---|---|---|---|
| early_bird | 0 | 6.463918 | 3.902174 | 8.345865 | … |
| early_user | 1 | 6.482759 | 3.863636 | 8.378788 | … |
| geo_enabled | 1 | 6.611940 | 3.700000 | 8.406977 | … |
| heavy_tweeter | 0 | 6.555556 | 4.000000 | 8.218391 | … |
| many_favourites | 0 | 6.521739 | 3.860759 | 8.362069 | … |
| many_followers | 0 | 6.629630 | 3.840000 | 8.336735 | … |
| many_friends | 0 | 6.600000 | 4.060606 | 8.377551 | … |
| night_owl | 1 | 6.500000 | 4.137255 | 8.402439 | … |
| week_tweeter | 0 | 6.488372 | 3.911504 | 8.390533 | … |
| weekend_tweeter | 1 | 6.735294 | 3.823529 | 8.312500 | … |
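The filtering step that leads from Table 1 to Table 3 amounts to keeping, for each feature, only the row that matches the selected user's value. The sketch below shows this on a small slice of Table 1 (two features, two movies). The final column-wise mean is only one possible way to aggregate the selected rows and is an assumption; this section does not state how the rows are combined into a prediction.

```python
import pandas as pd

# A slice of Table 1: two features and two movies, values copied from the table.
index = pd.MultiIndex.from_tuples(
    [("early_bird", 0), ("early_bird", 1), ("night_owl", 0), ("night_owl", 1)],
    names=["Feature_key", "Feature_value"],
)
feature_ratings = pd.DataFrame(
    {
        100072: [6.463918, 6.545455, 6.470588, 6.500000],
        100408: [3.902174, 4.000000, 3.746032, 4.137255],
    },
    index=index,
)

# Feature values of the selected user, as they appear in Table 3.
selected_user = {"early_bird": 0, "night_owl": 1}

# Keep only the rows whose (key, value) pair matches the user's profile.
user_rows = feature_ratings.loc[list(selected_user.items())]

# Assumed aggregation: average the matching rows for each movie.
feature_based_score = user_rows.mean(axis=0)
print(user_rows)
print(feature_based_score)
```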

Table 4. Dataframe matrix for affinity showing the ratings from the users with high affinity with the selected user. The shape is (15, 2831), where 15 is the number of users with high affinity and 2831 is the number of movies. Columns are indexed by Movie_id.

| User_id | 100072 | 100408 | … | 998393 | 999360 |
|---|---|---|---|---|---|
| 122203 | NaN | NaN | … | NaN | NaN |
| 175298 | NaN | NaN | … | 0.67 | 0.50 |
| 204280 | NaN | 0.50 | … | 0.83 | 1.00 |
| … | … | … | … | … | … |
| 825186 | 1.00 | NaN | … | 0.83 | 0.50 |
| 871105 | 0.50 | 0.50 | … | 0.50 | NaN |
| 976346 | 0.50 | NaN | … | 1.00 | 0.50 |

Table 5. Array with movie predictions for the selected user according to users with high affinity. The shape is (2831), where 2831 is the number of movies.

| Movie_id | Rate |
|---|---|
| 100408 | 0.733333 |
| 100958 | 0.666667 |
| 101022 | 0.833333 |
| … | … |
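One plausible way to collapse an affinity matrix like Table 4 into a per-movie array like Table 5 is to average, for each movie, the ratings of the high-affinity users who rated it. The sketch below uses three rows copied from Table 4. The use of a NaN-skipping mean is an assumption, since the aggregation is not spelled out in this section, and the outputs are not expected to match Table 5, which is based on all 15 users.

```python
import numpy as np
import pandas as pd

# Three of the high-affinity users from Table 4 (visible columns only).
affinity = pd.DataFrame(
    {
        100072: [1.00, 0.50, 0.50],
        100408: [np.nan, 0.50, np.nan],
        998393: [0.83, 0.50, 1.00],
        999360: [0.50, np.nan, 0.50],
    },
    index=pd.Index([825186, 871105, 976346], name="User_id"),
)
affinity.columns.name = "Movie_id"

# Assumption: the prediction for a movie is the mean of the ratings that
# are available for it, ignoring users who did not rate it (NaN).
yhat_affinity = affinity.mean(axis=0, skipna=True).rename("Rate")
print(yhat_affinity)
```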

Table 6. Dataframe predictions for a user where the predictions for the selected user are displayed. The shape is (585, 4), where 585 is the number of movies rated by the user from the movie set, and 4 is the number of columns added for predictions.

| Movie_id | y | yhat | yhat_affinity | yhat_total |
|---|---|---|---|---|
| 107060 | 7.0 | 0.566104 | 0.887597 | 0.726850 |
| 108145 | 7.0 | 0.606314 | 0.790698 | 0.698506 |
| 109220 | 7.0 | 0.712769 | 0.848837 | 0.780803 |
| … | … | … | … | … |

Table 7. Dataframe predictions for the user where the predictions for the selected user are displayed sorted by prediction (highest prediction rank on top). The shape is (585, 4), where 585 is the number of movies rated by the user from the movie set, and 4 is the number of columns added for predictions.

| Movie_id | y | yhat | yhat_affinity | yhat_total |
|---|---|---|---|---|
| 745751 | 10.0 | 0.963304 | 0.915282 | 0.939293 |
| 655275 | 8.0 | 0.917696 | 0.939276 | 0.928486 |
| 624827 | 9.0 | 0.834351 | 0.998339 | 0.916345 |
| 459936 | 9.0 | 0.950638 | 0.872689 | 0.911664 |
| 252628 | 7.0 | 0.926104 | 0.887597 | 0.906850 |
| 370639 | 9.0 | 0.923677 | 0.876586 | 0.900131 |
| … | … | … | … | … |
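The `yhat_total` values shown in Tables 6 and 7 are consistent with the arithmetic mean of `yhat` and `yhat_affinity`; for example, (0.566104 + 0.887597) / 2 ≈ 0.726850. Assuming that this is how the two predictions are combined, the following sketch reproduces the combination and the ranking step on the three rows visible in Table 6.

```python
import pandas as pd

# Rows copied from Table 6.
predictions = pd.DataFrame(
    {
        "y": [7.0, 7.0, 7.0],
        "yhat": [0.566104, 0.606314, 0.712769],
        "yhat_affinity": [0.887597, 0.790698, 0.848837],
    },
    index=pd.Index([107060, 108145, 109220], name="Movie_id"),
)

# Assumption inferred from the tabulated values: the combined score is
# the plain average of the two individual predictions.
predictions["yhat_total"] = predictions[["yhat", "yhat_affinity"]].mean(axis=1)

# Rank movies by combined score, highest first, as in Table 7.
ranked = predictions.sort_values("yhat_total", ascending=False)
print(ranked)
```

A weighted average would be a natural variation if one of the two predictors turned out to be more reliable than the other.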

Table 8. Results of the recommended item average.

| Average Rating of Recommended Items | Average Rating from User | Improvement over Average Rating |
|---|---|---|
| 8.6 (out of 10) | 7.38 (out of 10) | 16.53% |
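The improvement figure in Table 8 matches the relative difference between the two averages. A short check, assuming the improvement is defined as the relative gain over the user's average rating:

```python
avg_recommended = 8.6  # average rating of recommended items (Table 8)
avg_user = 7.38        # average rating from the user (Table 8)

# Relative improvement of the recommended items over the user's average.
improvement_pct = (avg_recommended - avg_user) / avg_user * 100
print(f"{improvement_pct:.2f}%")
```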


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).