An Interactive Virtual Home Navigation System Based on Home Ontology and Commonsense Reasoning

Abstract

:1. Introduction

- We proposed a method for presenting candidate guides from ambiguous requests based on home ontology and common-sense reasoning.

- We proposed a method for constructing home ontology from a VirtualHome environment graph semi-automatically.

- To evaluate the proposed method, we created a dataset consisting of pairs of “utterances about ambiguous requests in the home” and “objects or rooms in VirtualHome associated with those requests.”

2. Related Works

2.1. Object Navigation

2.2. Embodied Question Answering

2.3. Vision-Dialog Navigation

2.4. Common-Sense Knowledge Graph

2.5. Home Ontology

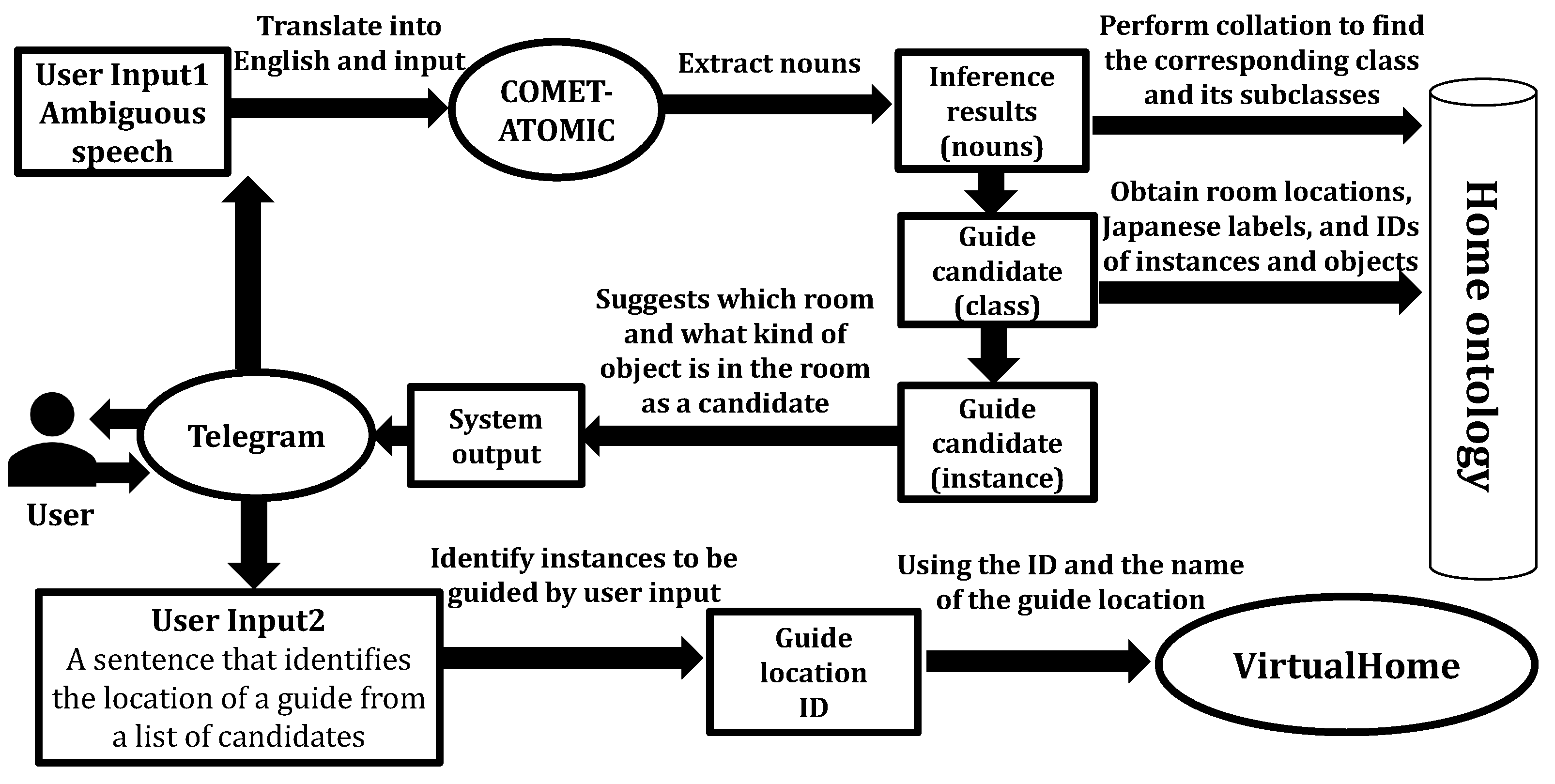

3. Proposed System

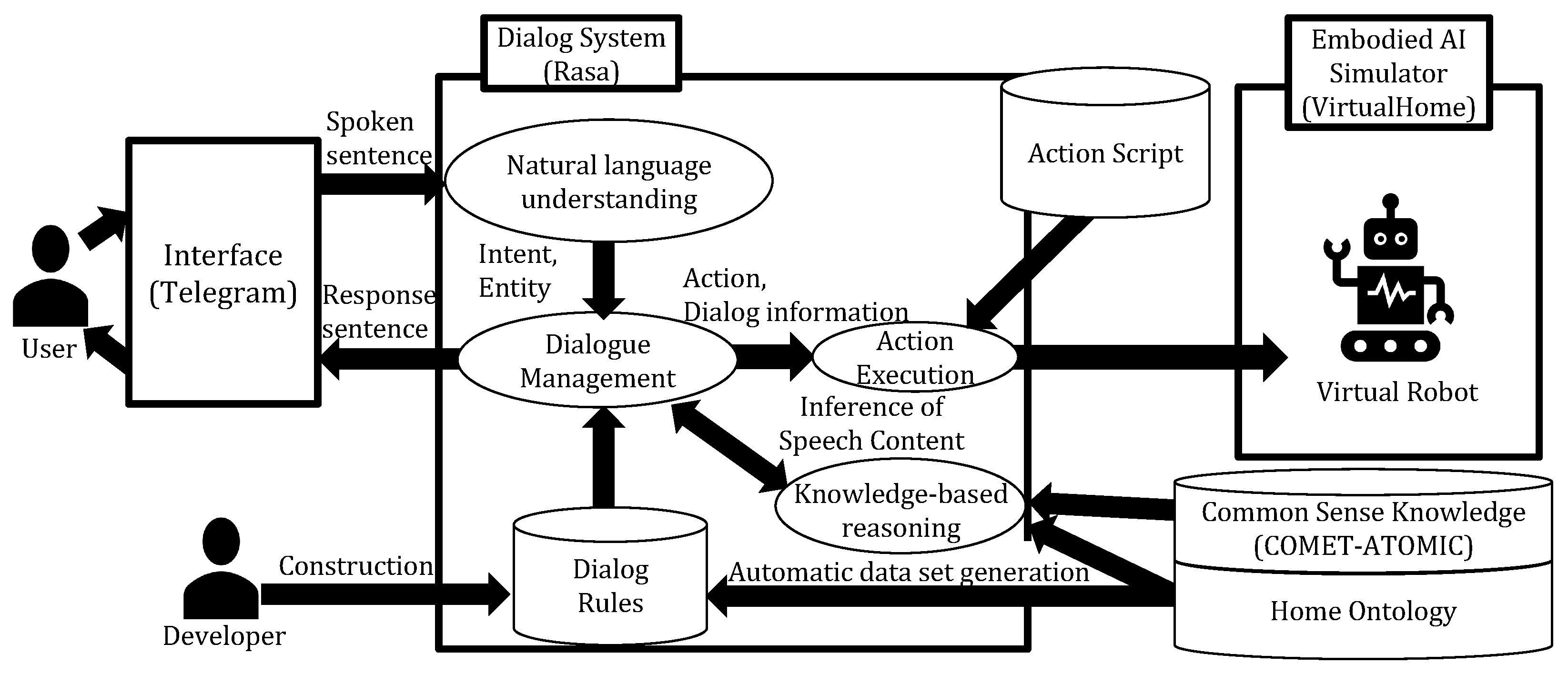

3.1. System Configuration

- It can extract intentions and entities from user input sentences;

- Its ability to configure dialogue rules and slots;

- Capable of executing programs created by the user based on dialogue rules;

- Can be integrated with existing interfaces;

- The development scale is large, and the framework is well documented and easy to use.

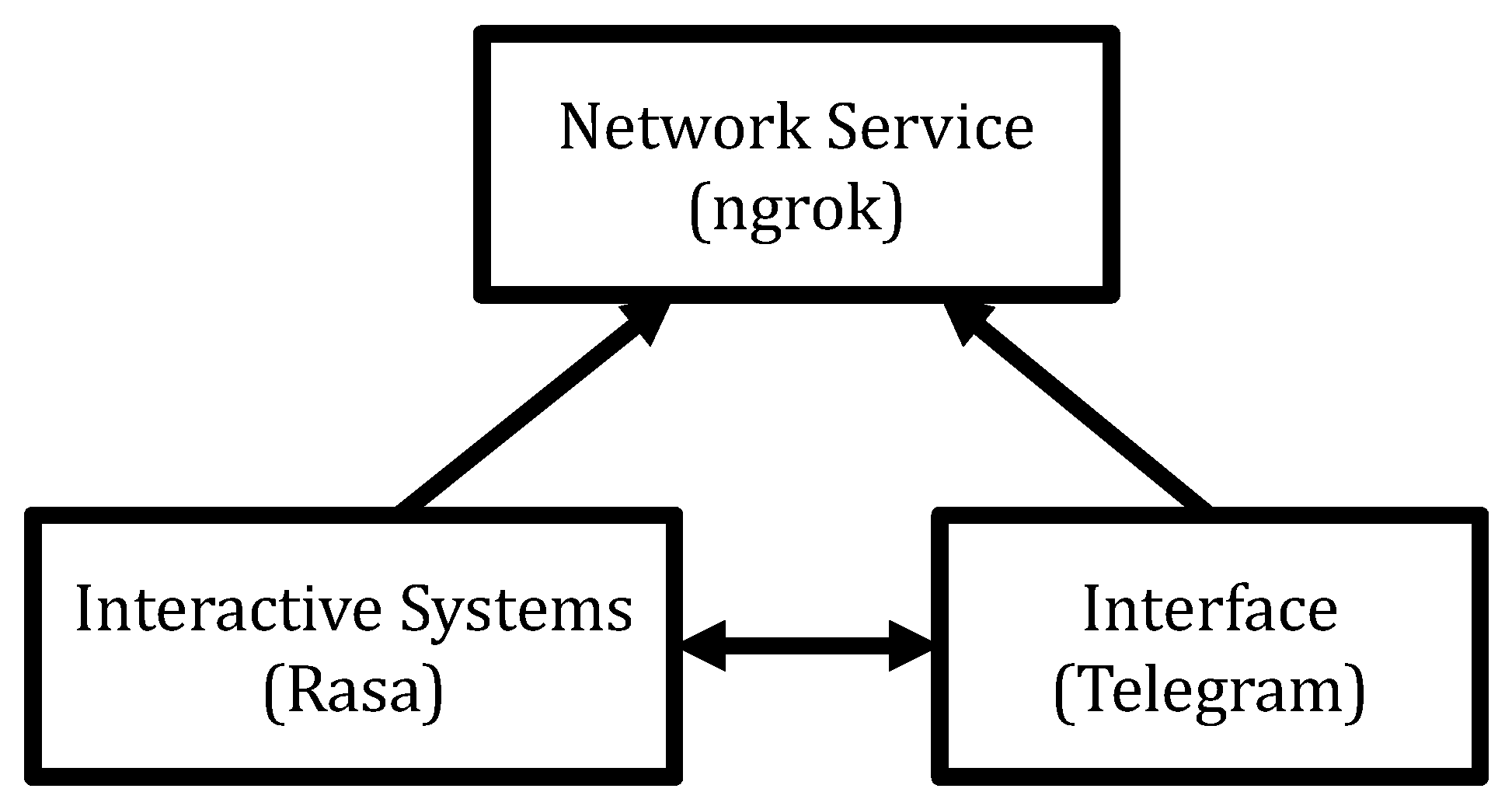

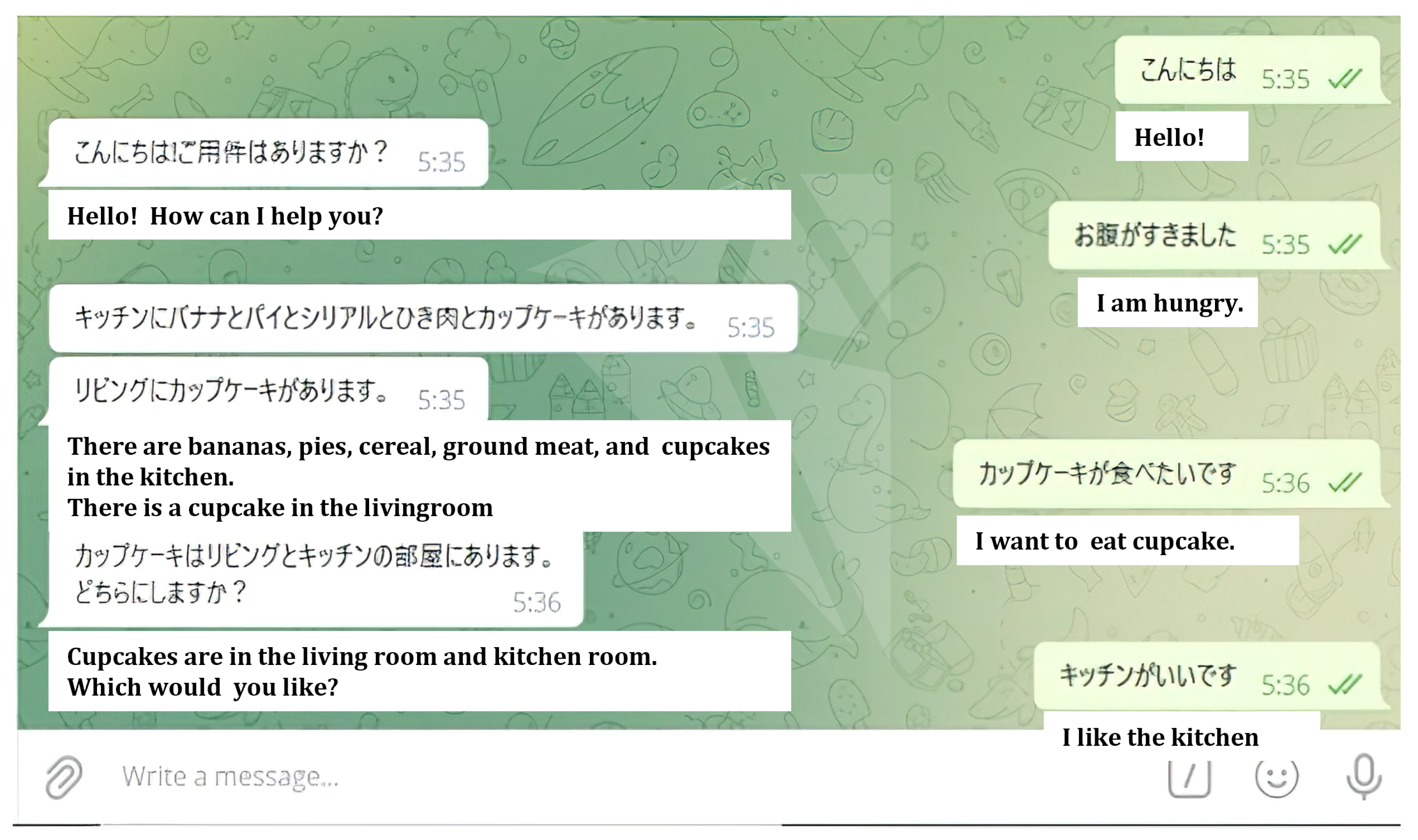

3.2. Interface

3.3. Dialogue System

3.3.1. Overview

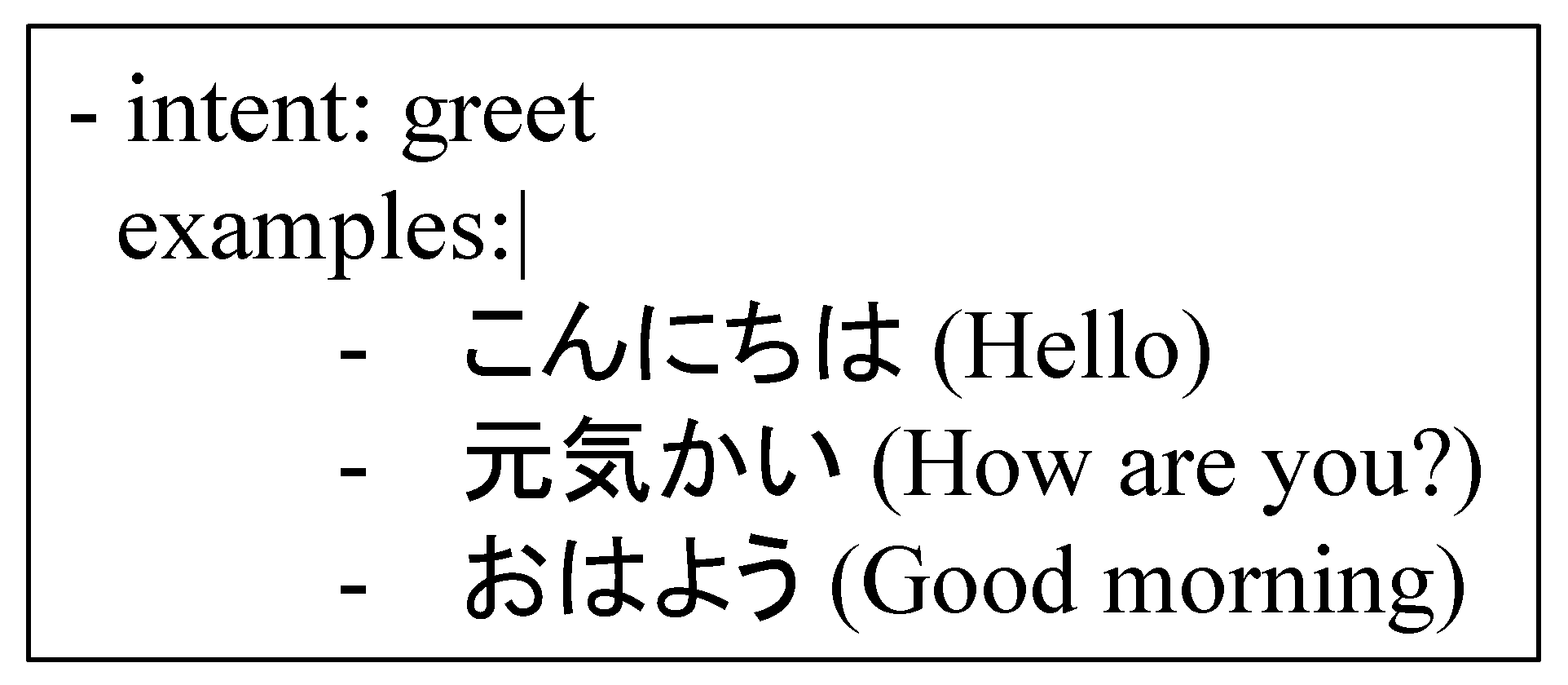

3.3.2. Natural Language Understanding Module

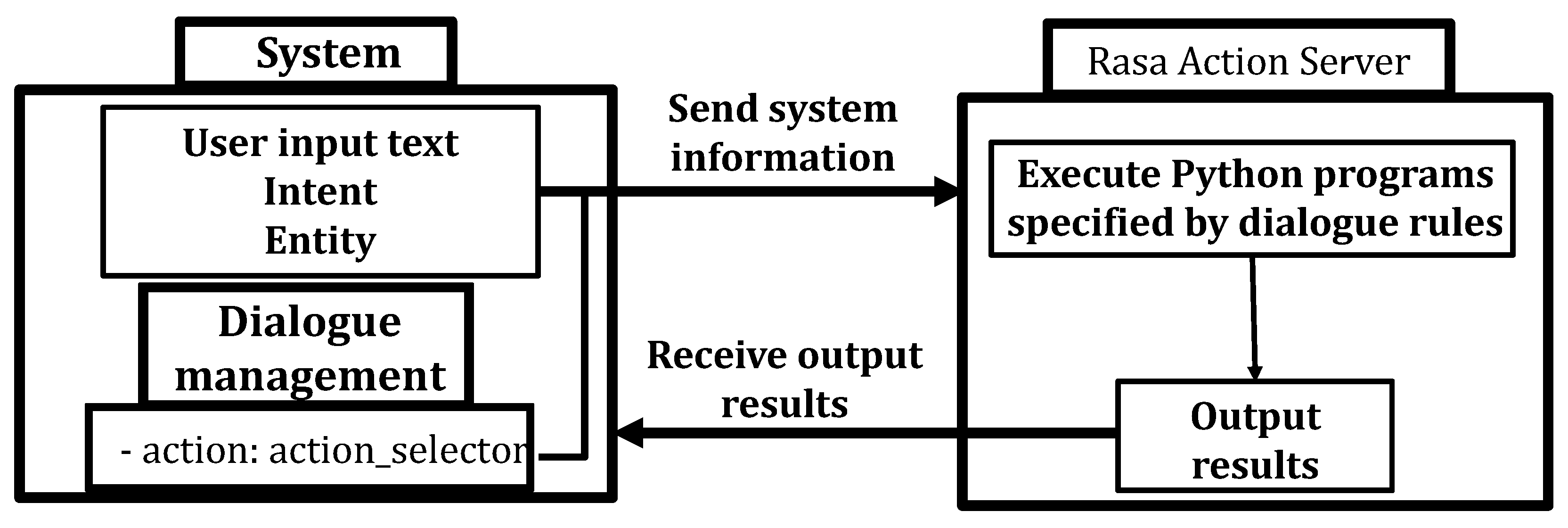

3.3.3. Dialogue Management Module

- Rules for greeting rule:When the intention “greet” is extracted, the robot’s behavior is to return the greeting.

- Rules for farewell greeting:When the intention “goodbye” is extracted, the robot’s behavior returns the greeting.

- Rules for the case when the classification of intentions is not possible:When the intention classification score is low in the natural language understanding component, i.e., the intention cannot be extracted from the user’s speech, the intention “nlu_fallback” is returned, and the system responds with content that does not understand the user’s speech.

- Rules for greeting the user when the system starts up:The system greets the user when the system starts up.

- Rules for ambiguous speech:This rule is used when an ambiguous utterance such as “I am thirsty”. is made, and “ambiguous” is extracted by the Natural Language Understanding module. This rule uses the reasoning module to find the best guidance candidate for the ambiguous utterance and suggests it to the user. Then, after multiple conversations with the user, the system identifies the guidance location and guides the user on VirtualHome. This sequence of events is written in Python and executed through the Rasa Action Server.

- Rules for cases where the name of the location is included and there is no need for inference:If the name of the location is extracted as an Entity in the speech, it is used to identify the location by class matching with the home ontology, and if the Entity is not extracted well, the location is inferred using the inference module.

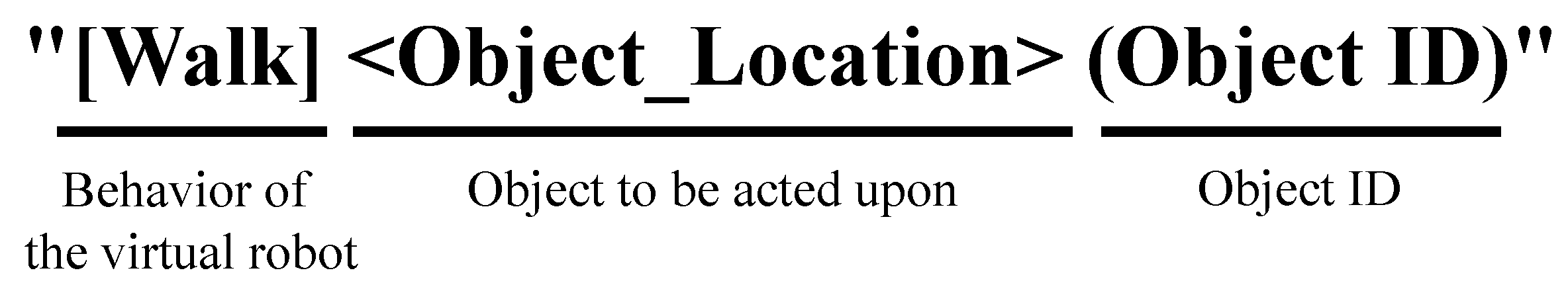

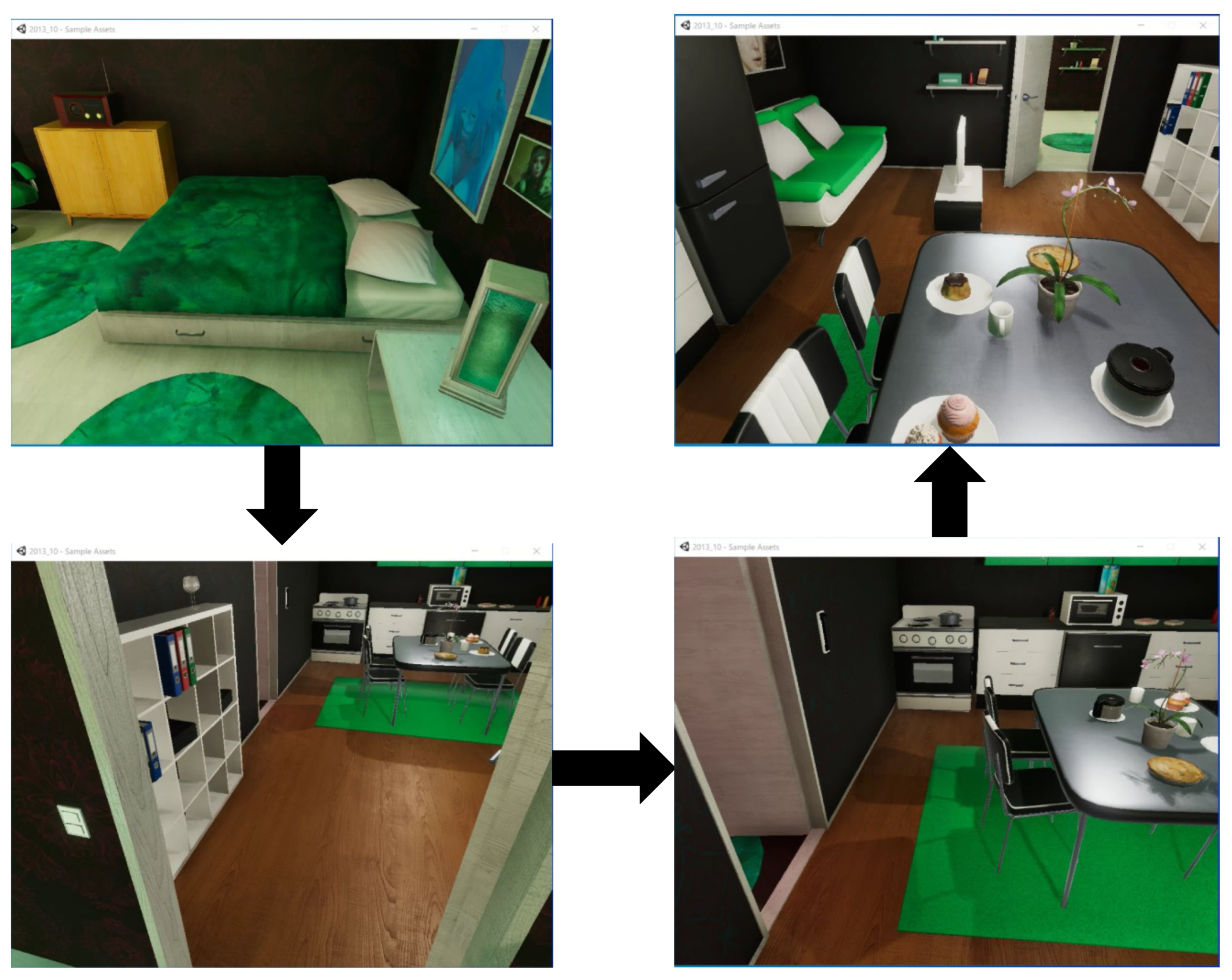

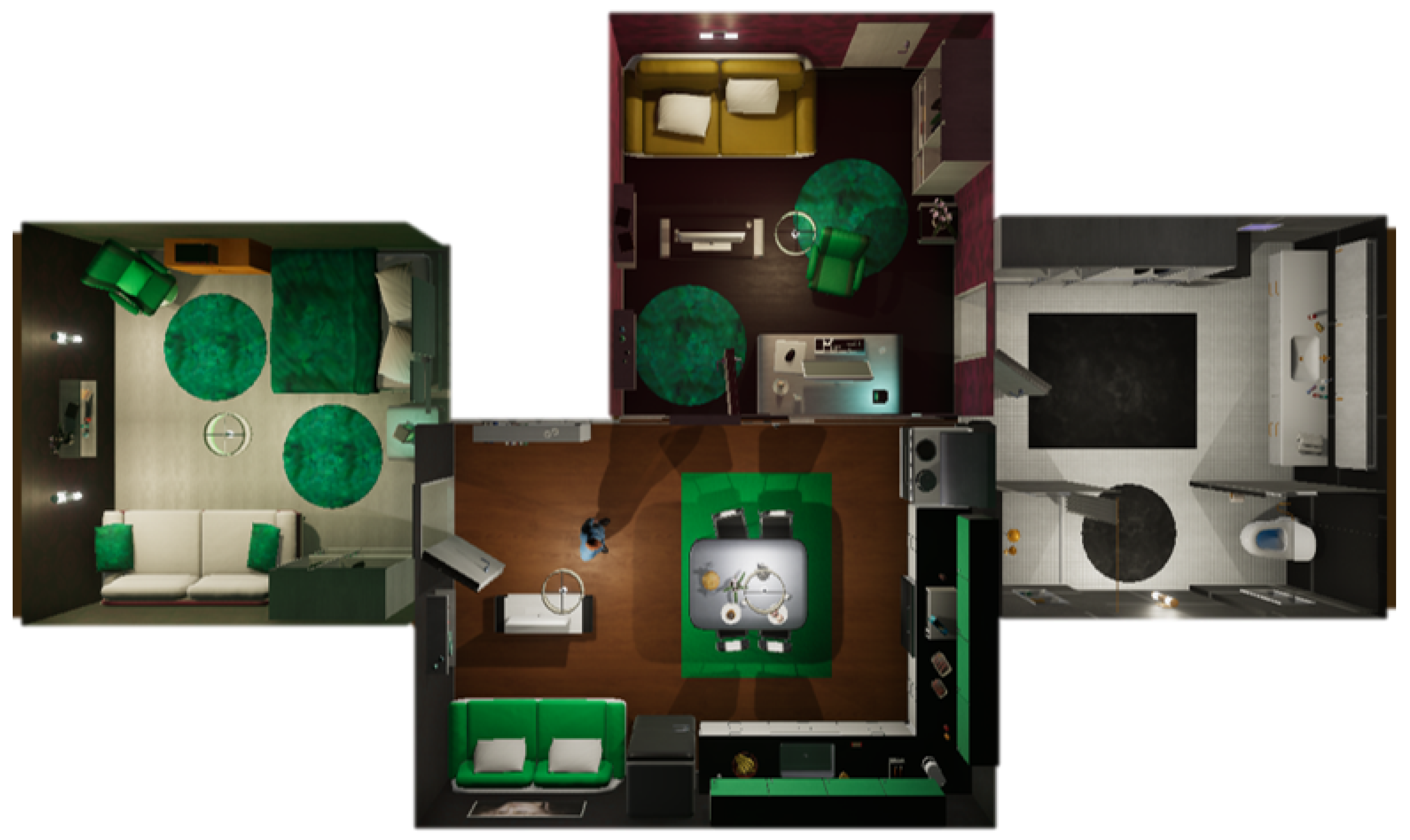

3.4. Embodied AI Simulator

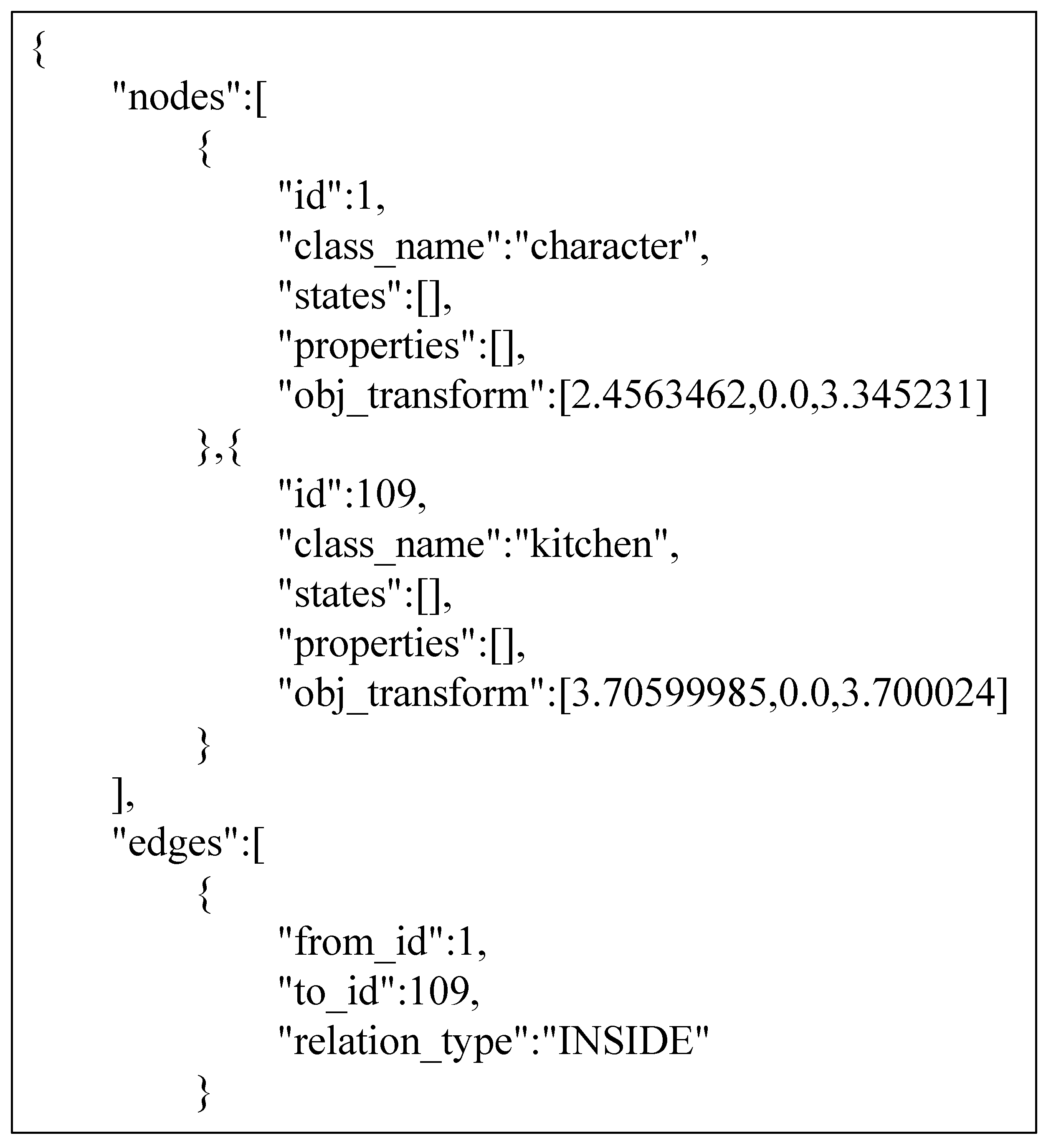

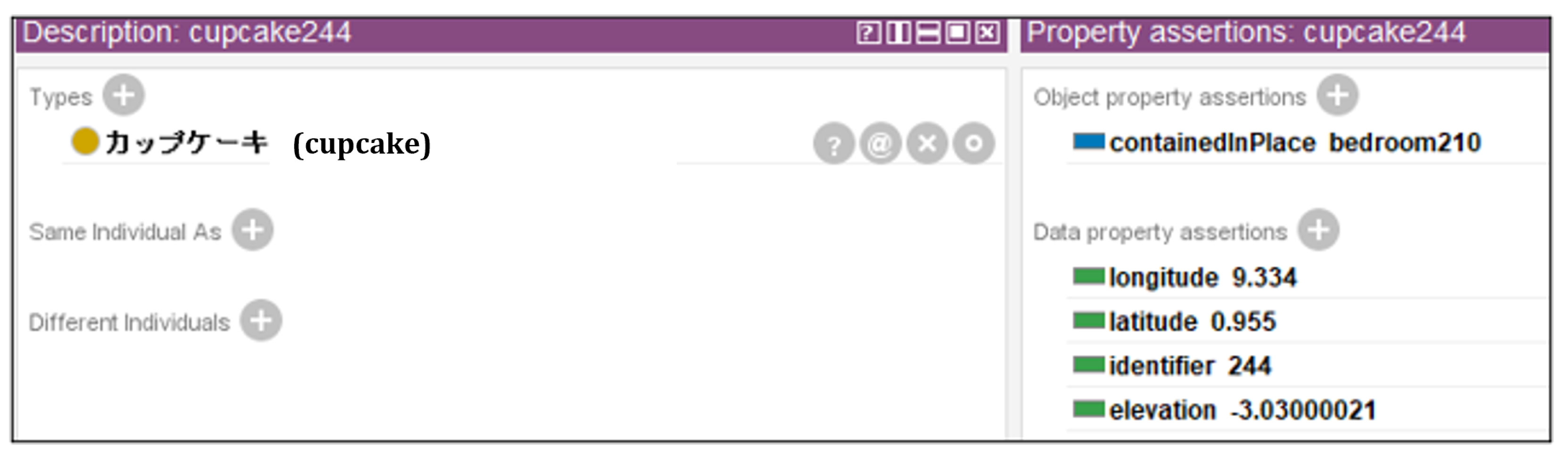

3.5. Home Ontology

3.6. Knowledge-Based Reasoning

- xNeedThis relationship means “actions that humans need to take in response to certain events”;

- xEffectThis relationship means “events that humans cause in response to certain events”;

- AtLocationThis relationship means “places where certain objects may be found”.

3.7. Execution Example of the Proposed System

4. Evaluation

4.1. Outline of the Evaluation Experiment

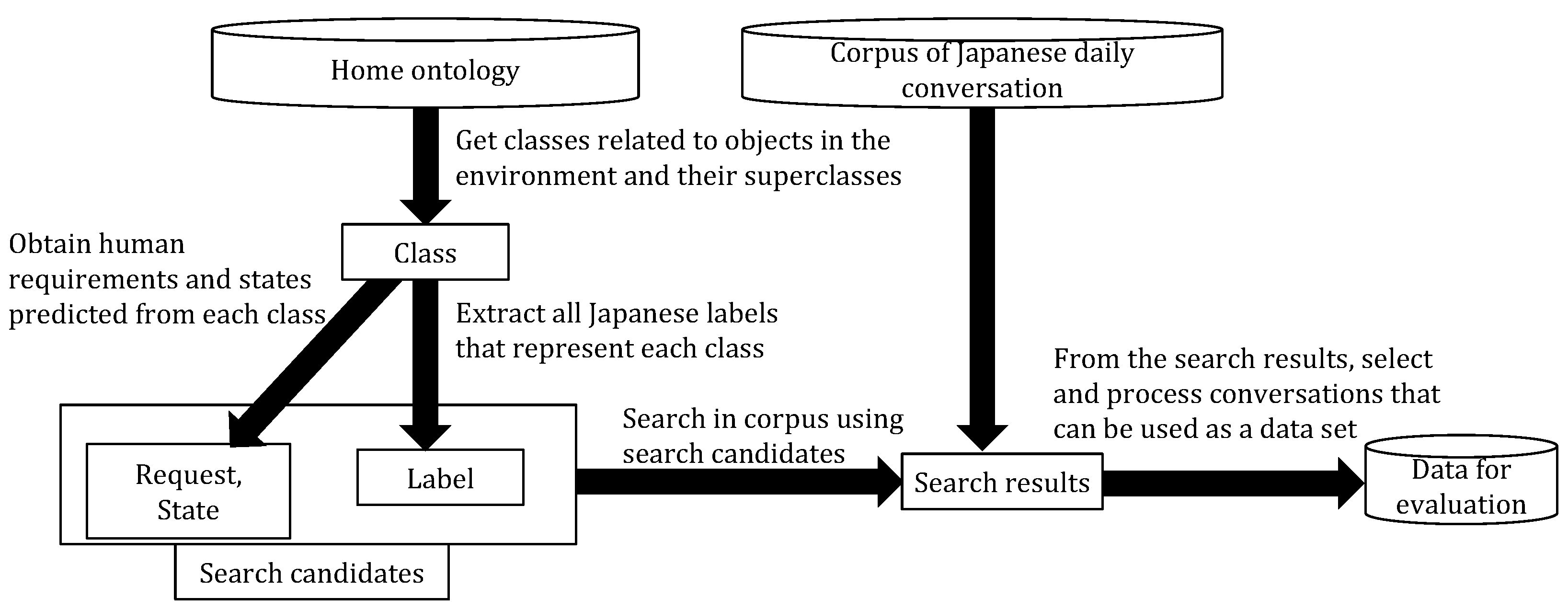

4.2. Data for Evaluation

4.3. Evaluation Method

4.4. Experimental Results

4.5. Discussion

5. Conclusions

5.1. Summary

5.2. Future Works

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Example of Speech | Correct Answer Data | System Output | Precision | Recall | F-Value |

|---|---|---|---|---|---|

| I’m hungry. | kitchen, fridge, pies, cereals, kitchen table, minced meat, cutlets, bananas, pudding, bread slices, cupcakes | There is a banana, a slice of bread, a cutlet, ground meat, cereal, pudding, plates, cupcakes, pie, refrigerator, and kitchen table in the kitchen. There are plates and cupcakes and a desk in the living room. | 0.75 | 1.00 | 0.86 |

| I need somewhere to sit | sofa, chair | There is a chair and a couch and a desk in the living room. There is a chair and a sofa and a kitchen table in the kitchen. There is a chair and a sofa in the bedroom. | 0.75 | 1.00 | 0.86 |

| I’m feeling a little sleepy. | bed, bedroom | There is a bed in the bedroom. There is a toilet in the bathroom. | 0.50 | 1.00 | 0.67 |

| It’s so cold. | fireplace | No candidate for guidance found. | 0.00 | 0.00 | 0.00 |

| It’s awfully bright | electric switch, dable lamp, wall lamp | I will take you to the bathroom in the bathroom. | 0.00 | 0.00 | 0.00 |

| I’m a little thirsty. | kitchen, refrigerator, milkshake | I will take you to the refrigerator in the kitchen. | 1.00 | 0.67 | 0.80 |

| I will read a book. | books | There are books and a TV in the living room. There are books in the bedroom. There is a TV in the kitchen. | 0.50 | 1.00 | 0.67 |

| My hair is in the way. | hair products | No candidate for directions found. | 0.00 | 0.00 | 0.00 |

| I will have to do a little cooking from now on | kitchen, refrigerator | I will take you to the refrigerator in the kitchen. | 1.00 | 1.00 | 1.00 |

| I’m gonna wipe my nose. | towels | There is a cupboard in the bedroom. There is a kitchen cabinet in the kitchen. There is a cabinet and a toilet in the bathroom. | 0.00 | 0.00 | 0.00 |

| I have a tummy ache. | toilet, painkiller | There is a cabinet and a toilet in the bathroom. There is a cupboard in the bedroom. There is a kitchen cabinet in the kitchen. | 0.25 | 0.50 | 0.33 |

| I just want to play. | no correct answer | I will take you to the bathroom in the bathroom. | 0.00 | 0.00 | 0.00 |

| I’d like to do some printing, too. | books, computer | There are books and a desk in the living room. There are books in the bedroom. | 0.67 | 0.50 | 0.57 |

| It’s cold without a jacket. | closet | I take you to the bed in the bedroom. | 0.00 | 0.00 | 0.00 |

| Shall we sit down? | sofa, chair | There is a chair, a sofa, and a desk in the living room. There is a chair and a sofa and a kitchen table in the kitchen. There is a chair and a sofa in the bedroom. | 0.75 | 1.00 | 0.86 |

| I want to watch TV. | tv | There is a TV in the living room and in the kitchen room. Which would you like? | 1.00 | 1.00 | 1.00 |

| Do you have a kitchen? | kitchen | There is a banana, a slice of bread, a cutlet, ground meat, cereal, pudding, cupcakes and pie in the kitchen. There are cupcakes in the living room. | 0.09 | 1.00 | 0.17 |

| I do not have any pictures or anything. | picture frames, wall picture frames | There are books, wall picture frames and picture frames in the living room. There are wall picture frames and picture frames in the kitchen. There are books, wall picture frames and picture frames in the bedroom. There is a wall picture frame in the bathroom. | 0.78 | 1.00 | 0.88 |

| Maybe you could play the guitar for me. | no correct answer | No candidate for guidance found. | 1.00 | 1.00 | 1.00 |

| It’s not very warm in here, is it? | fireplace | I will take you to the bathroom in the bathroom. | 0.00 | 0.00 | 0.00 |

| I will leave the door open. | door | Doors are in the kitchen, bedroom and bathroom room. Which one would you like? | 1.00 | 1.00 | 1.00 |

| I’m going to bed. | bed, bedroom | There is a bed in the bedroom. There is a toilet in the bathroom. | 0.50 | 1.00 | 0.67 |

| When you make something, you need tools. | no correct answer | I will take you to the box in the kitchen. | 0.00 | 0.00 | 0.00 |

| Where do you keep all your T-shirts? | y-shirt, closet drawer | There is a closet drawer and a Y-shirt in the living room. There is a closet drawer and a Y-shirt in the bedroom. There is a closet drawer, a towel rack, and a Y-shirt in the bathroom. | 0.86 | 1.00 | 0.92 |

| I was gonna put out the hand towels. | towel | There is a toilet in the bathroom. There is a desk in the living room. | 0.00 | 0.00 | 0.00 |

| Is there, like, a smoking room or something? | no correct answer | I will take you to the bathroom in the bathroom. | 0.00 | 0.00 | 0.00 |

| You’d have to light the stove or something. | fireplace | I will take you to the fireplace in the kitchen. | 1.00 | 1.00 | 1.00 |

| It’s cold in here. | y-shirt, closet, pile of clothes | The closets are in the living room, the bedroom and the bathroom room. Which one would you like? | 1.00 | 0.67 | 0.80 |

| Do you have some kind of bowl? | bottles, mugs, coffee pot, cooking pot | There are bottles and mugs in the living room. There is a bottle, a coffee pot, a cooking pot and mugs in the kitchen. There is a bottle in the bathroom. | 1.00 | 0.80 | 0.89 |

| I like cold milk on a hot day. | refrigerator, milkshake | I will take you to the refrigerator in the kitchen. | 1.00 | 0.50 | 0.67 |

| I’m thirsty. | kitchen, refrigerator, milkshake | There is a toilet in the bathroom. There is a refrigerator in the kitchen. | 0.50 | 0.67 | 0.57 |

| Heat it up in the microwave. | microwave oven | There is a microwave, a banana, a slice of bread, a cutlet, ground meat, cereal, pudding, cupcakes, pie, and a refrigerator in the kitchen. There are cupcakes in the living room. | 0.09 | 1.00 | 0.17 |

| Where do you keep your T-shirts? | y-shirts, closet drawers | There is a closet drawer and a Y-shirt in the living room. There is a closet drawer and a y-shirt in the bedroom. There is a closet drawer, a towel rack, and a Y-shirt in the bathroom. | 0.86 | 1.00 | 0.92 |

| I feel awfully sleepy. | bed, bedroom | I will take you to the bed in the bedroom. | 1.00 | 1.00 | 1.00 |

| I’m kind of out of reach. | tv stand | There are books and a cell phone in the living room. There is a cell phone in the kitchen. There is a book in the bedroom. | 0.00 | 0.00 | 0.00 |

| I will call you in a bit. | cell phone | There is a book and a cell phone in the living room. There is a cell phone in the kitchen. There are books in the bedroom. | 0.50 | 1.00 | 0.67 |

| Let me take a picture. | picture frames, wall picture frames | There is a wall picture frame and a picture frame in the living room. There is a wall picture frame and a picture frame in the kitchen. There is a wall photo frame and a photo frame in the bedroom. There is a booth and a wall picture frame in the bathroom. | 0.88 | 1.00 | 0.93 |

| You want a banana? | banana | I will take you to the refrigerator in the kitchen. | 0.00 | 0.00 | 0.00 |

| Where is my phone? | portable | There are books and a cell phone in the living room. There is a cell phone in the kitchen. There are books in the bedroom. There is a booth in the bathroom. | 0.40 | 1.00 | 0.57 |

| It’s in the way. | boxes, wall shelves, cupboards, bathroom shelves | Doors are in the kitchen, the bedroom and the bathroom room. Which one do you want? | 0.00 | 0.00 | 0.00 |

| Is it getting dark? | electric switch, dable lamp, wall lamp | I will take you to the bathroom in the bath room. | 0.00 | 0.00 | 0.00 |

| I have to go to the bathroom. | toilet | There is a booth, a toilet and a door in the bathroom. There is a door in the kitchen. There is a door to the bedroom. | 0.20 | 1.00 | 0.33 |

| Your hands are dirty. | toilet, sink, detergent | There is a closet and a toilet in the bathroom. There is a closet in the living room. There is a closet in the bedroom. | 0.25 | 0.33 | 0.29 |

| I will get you a glass of water. | refrigerator | I will take you to the refrigerator in the kitchen. | 1.00 | 1.00 | 1.00 |

| Wash the dishcloth properly, too. | sink, detergent | There is a closet drawer and a desk in the living room. There is a kitchen table in the kitchen. There is a closet drawer in the bedroom. There is a closet drawer in the bathroom. | 0.00 | 0.00 | 0.00 |

| Is there somewhere I can relax? | bedroom, living room, bathroom, bed, sofa | There is a desk and closet drawers in the living room. There is a closet drawer in the bedroom. There is a closet drawer in the bathroom. | 0.43 | 0.60 | 0.50 |

| Is there somewhere I can sit? | sofa, chair | There is a desk, sofa and chairs in the living room. There is a kitchen table, sofa and chairs in the kitchen. There is a sofa and chair in the bedroom. | 0.75 | 1.00 | 0.86 |

| I want to wash up | bathroom, sequins, towels | In the bathroom there is toilet paper, towels, toilet, and a sceen. There is detergent in the kitchen. | 0.57 | 1.00 | 0.73 |

| I want to take a bath | bathroom | I will take you to the toilet in the bathroom. | 0.50 | 1.00 | 0.67 |

| I need to cook | kitchen, bowls, cooking pot, refrigerator, kitchen shelves, minced meat, cutlet, banana, bread cutter | I will take you to the refrigerator in the kitchen. | 1.00 | 0.22 | 0.36 |

| I need to get my period in order | cabinets, cupboards, kitchen cabinets, closets, piles of clothes, folders | There is a toilet and a cabinet in the bathroom. There is a cupboard in the bedroom. There is a kitchen cabinet in the kitchen. | 0.75 | 0.50 | 0.60 |

| I need to do my hair | toilet, hair products | I will take you to the toilet in the bathroom. | 1.00 | 0.50 | 0.67 |

| I want to read a book | books | The books are in the living room and the bedroom room. Which would you like? | 1.00 | 1.00 | 1.00 |

| I want to get out of this room | doors | There is a toilet and door in the bathroom. There is a door in the kitchen. There is a door and a bed in the bedroom. | 0.60 | 1.00 | 0.75 |

| I want to hang up my pictures | wall picture frames, picture frames | There is a wall picture frame and a book and picture frame in the living room. There is a wall picture frame and a picture frame in the kitchen. There is a wall picture frame, a book and a picture frame in the bedroom. There is a wall picture frame in the bathroom. | 0.78 | 1.00 | 0.88 |

| I’m getting sleepy. | bedroom, bed | There is a bed in the bedroom. There is a toilet in the bathroom. | 0.50 | 1.00 | 0.67 |

| I want to make coffee | coffee makers | I will take you to the fireplace in the kitchen. | 0.00 | 0.00 | 0.00 |

| Do you have anything to hang clothes on? | closets, hangers | There is a closet in the living room. There is a closet in the bedroom. There is a toilet and a closet in the bathroom. | 0.75 | 0.50 | 0.60 |

References

- Duan, J.; Yu, S.; Tan, H.L.; Zhu, H.; Tan, C. A Survey of Embodied AI: From Simulators to Research Tasks. IEEE Trans. Emerg. Top. Comput. Intell. 2022, 6, 230–244. [Google Scholar] [CrossRef]

- Chaplot, D.S.; Gandhi, D.P.; Gupta, A.; Salakhutdinov, R.R. Object Goal Navigation using Goal-Oriented Semantic Exploration. In Advances in Neural Information Processing Systems; Larochelle, H., Ranzato, M., Hadsell, R., Balcan, M., Lin, H., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2020; Volume 33, pp. 4247–4258. [Google Scholar]

- Liu, X.; Muise, C. A Neural-Symbolic Approach for Object Navigation. In Proceedings of the 2nd Embodied AI Workshop (CVPR 2021), Virtual, 19–25 June 2021. [Google Scholar]

- Ye, J.; Batra, D.; Das, A.; Wijmans, E. Auxiliary Tasks and Exploration Enable ObjectGoal Navigation. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, BC, Canada, 11–17 October 2021; pp. 16117–16126. [Google Scholar]

- Ramrakhya, R.; Undersander, E.; Batra, D.; Das, A. Habitat-Web: Learning Embodied Object-Search Strategies from Human Demonstrations at Scale. In Proceedings of the CVPR, New Orleans, LA, USA, 19–24 June 2022. [Google Scholar]

- Das, A.; Datta, S.; Gkioxari, G.; Lee, S.; Parikh, D.; Batra, D. Embodied Question Answering. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 1–10. [Google Scholar] [CrossRef] [Green Version]

- Gordon, D.; Kembhavi, A.; Rastegari, M.; Redmon, J.; Fox, D.; Farhadi, A. IQA: Visual Question Answering in Interactive Environments. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4089–4098. [Google Scholar]

- Yu, L.; Chen, X.; Gkioxari, G.; Bansal, M.; Berg, T.L.; Batra, D. Multi-Target Embodied Question Answering. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; IEEE Computer Society: Los Alamitos, CA, USA, 2019; pp. 6302–6311. [Google Scholar] [CrossRef] [Green Version]

- Tan, S.; Xiang, W.; Liu, H.; Guo, D.; Sun, F. Multi-Agent Embodied Question Answering in Interactive Environments. In Proceedings, Part XIII, Proceedings of the Computer Vision—ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 663–678. [Google Scholar] [CrossRef]

- Zhu, F.; Zhu, Y.; Chang, X.; Liang, X. Vision-Language Navigation With Self-Supervised Auxiliary Reasoning Tasks. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 10009–10019. [Google Scholar] [CrossRef]

- Thomason, J.; Murray, M.; Cakmak, M.; Zettlemoyer, L. Vision-and-Dialog Navigation. In Proceedings of Machine Learning Research, Proceedings of the Conference on Robot Learning, Virtual, 16–18 November 2020; Kaelbling, L.P., Kragic, D., Sugiura, K., Eds.; PMLR: Boulder, CO, USA, 2020; Volume 100, pp. 394–406. [Google Scholar]

- Zhu, Y.; Zhu, F.; Zhan, Z.; Lin, B.; Jiao, J.; Chang, X.; Liang, X. Vision-Dialog Navigation by Exploring Cross-Modal Memory. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 10727–10736. [Google Scholar] [CrossRef]

- Kolve, E.; Mottaghi, R.; Han, W.; VanderBilt, E.; Weihs, L.; Herrasti, A.; Gordon, D.; Zhu, Y.; Gupta, A.K.; Farhadi, A. AI2-THOR: An Interactive 3D Environment for Visual AI. arXiv 2017, arXiv:1712.05474. [Google Scholar]

- Batra, D.; Gokaslan, A.; Kembhavi, A.; Maksymets, O.; Mottaghi, R.; Savva, M.; Toshev, A.; Wijmans, E. ObjectNav Revisited: On Evaluation of Embodied Agents Navigating to Objects. arXiv 2020, arXiv:2006.13171. [Google Scholar]

- Wu, Y.; Wu, Y.; Gkioxari, G.; Tian, Y. Building generalizable agents with a realistic and rich 3D environment. arXiv 2018, arXiv:1801.02209. [Google Scholar]

- Pejsa, T.; Kantor, J.; Benko, H.; Ofek, E.; Wilson, A. Room2Room: Enabling Life-Size Telepresence in a Projected Augmented Reality Environment. In Proceedings of the 19th ACM Conference on Computer-Supported Cooperative Work & Social Computing; Association for Computing Machinery (CSCW ’16), New York, NY, USA, 27 February–2 March 2016; pp. 1716–1725. [Google Scholar] [CrossRef]

- Hwang, J.D.; Bhagavatula, C.; Bras, R.L.; Da, J.; Sakaguchi, K.; Bosselut, A.; Choi, Y. COMET-ATOMIC 2020: On Symbolic and Neural Commonsense Knowledge Graphs. In Proceedings of the AAAI, Virtual, 2–9 February 2021. [Google Scholar]

- Vassiliades, A.; Bassiliades, N.; Gouidis, F.; Patkos, T. A Knowledge Retrieval Framework for Household Objects and Actions with External Knowledge. In Proceedings of the Semantic Systems; Blomqvist, E., Groth, P., de Boer, V., Pellegrini, T., Alam, M., Käfer, T., Kieseberg, P., Kirrane, S., Meroño-Peñuela, A., Pandit, H.J., Eds.; In the Era of Knowledge Graphs. Springer International Publishing: Cham, Switzerland, 2020; pp. 36–52. [Google Scholar]

- Egami, S.; Nishimura, S.; Fukuda, K. A Framework for Constructing and Augmenting Knowledge Graphs using Virtual Space: Towards Analysis of Daily Activities. In Proceedings of the 2021 IEEE 33rd International Conference on Tools with Artificial Intelligence (ICTAI), Washington, DC, USA, 1–3 November 2021; pp. 1226–1230. [Google Scholar] [CrossRef]

- Zhang, Z.; Takanobu, R.; Zhu, Q.; Huang, M.; Zhu, X. Recent advances and challenges in task-oriented dialog systems. Sci. China Technol. Sci. 2020, 63, 2011–2027. [Google Scholar] [CrossRef]

- Burtsev, M.; Seliverstov, A.; Airapetyan, R.; Arkhipov, M.; Baymurzina, D.; Bushkov, N.; Gureenkova, O.; Khakhulin, T.; Kuratov, Y.; Kuznetsov, D.; et al. DeepPavlov: Open-Source Library for Dialogue Systems. In Proceedings of the ACL 2018, System Demonstrations; Association for Computational Linguistics: Melbourne, PA, Australia, 2018; pp. 122–127. [Google Scholar] [CrossRef]

- Ultes, S.; Rojas-Barahona, L.M.; Su, P.H.; Vandyke, D.; Kim, D.; Casanueva, I.; Budzianowski, P.; Mrkšić, N.; Wen, T.H.; Gašić, M.; et al. PyDial: A Multi-domain Statistical Dialogue System Toolkit. In Proceedings of the ACL 2017, System Demonstrations; Association for Computational Linguistics: Vancouver, BC, Canada, 2017; pp. 73–78. [Google Scholar]

- Chen, H.; Liu, X.; Yin, D.; Tang, J. A Survey on Dialogue Systems: Recent Advances and New Frontiers. SIGKDD Explor. Newsl. 2017, 19, 25–35. [Google Scholar] [CrossRef]

- Puig, X.; Ra, K.; Boben, M.; Li, J.; Wang, T.; Fidler, S.; Torralba, A. VirtualHome: Simulating Household Activities Via Programs. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; IEEE Computer Society: Los Alamitos, CA, USA, 2018; pp. 8494–8502. [Google Scholar] [CrossRef] [Green Version]

- Savva, M.; Kadian, A.; Maksymets, O.; Zhao, Y.; Wijmans, E.; Jain, B.; Straub, J.; Liu, J.; Koltun, V.; Malik, J.; et al. Habitat: A Platform for Embodied AI Research. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Long Beach, CA, USA, 15–20 June 2019. [Google Scholar]

- Shen, B.; Xia, F.; Li, C.; Martín-Martín, R.; Fan, L.; Wang, G.; Pérez-D’Arpino, C.; Buch, S.; Srivastava, S.; Tchapmi, L.; et al. iGibson 1.0: A Simulation Environment for Interactive Tasks in Large Realistic Scenes. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; pp. 7520–7527. [Google Scholar] [CrossRef]

- Beattie, C.; Leibo, J.Z.; Teplyashin, D.; Ward, T.; Wainwright, M.; Küttler, H.; Lefrancq, A.; Green, S.; Valdés, V.; Sadik, A.; et al. Stig DeepMind Lab. arXiv 2016, arXiv:1612.03801. [Google Scholar]

- Yan, C.; Misra, D.K.; Bennett, A.; Walsman, A.; Bisk, Y.; Artzi, Y. CHALET: Cornell House Agent Learning Environment. CoRR 2018, arXiv:1801.07357. [Google Scholar]

- Gao, X.; Gong, R.; Shu, T.; Xie, X.; Wang, S.; Zhu, S. VRKitchen: An Interactive 3D Virtual Environment for Task-oriented Learning. arXiv 2019, arXiv:1903.05757. [Google Scholar]

- Xiang, F.; Qin, Y.; Mo, K.; Xia, Y.; Zhu, H.; Liu, F.; Liu, M.; Jiang, H.; Yuan, Y.; Wang, H.; et al. SAPIEN: A SimulAted Part-Based Interactive ENvironment. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11094–11104. [Google Scholar] [CrossRef]

- Gan, C.; Schwartz, J.; Alter, S.; Schrimpf, M.; Traer, J.; Freitas, J.D.; Kubilius, J.; Bhandwaldar, A.; Haber, N.; Sano, M.; et al. ThreeDWorld: A Platform for Interactive Multi-Modal Physical Simulation. arXiv 2020, arXiv:2007.04954. [Google Scholar]

- Bocklisch, T.; Faulkner, J.; Pawlowski, N.; Nichol, A. Rasa: Open Source Language Understanding and Dialogue Management. arXiv 2017, arXiv:1712.05181. [Google Scholar]

- Morita, T.; Fukuta, N.; Izumi, N.; Yamaguchi, T. DODDLE-OWL: Interactive Domain Ontology Development with Open Source Software in Java. IEICE Trans. Inf. Syst. 2008, E91.D, 945–958. [Google Scholar] [CrossRef]

- Miller, G.A. WordNet: A Lexical Database for English. Commun. ACM 1995, 38, 39–41. [Google Scholar] [CrossRef]

- Lamy, J.B. Owlready: Ontology-oriented programming in Python with automatic classification and high level constructs for biomedical ontologies. Artif. Intell. Med. 2017, 80, 11–28. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Koiso, H.; Den, Y.; Iseki, Y.; Kashino, W.; Kawabata, Y.; Nishikawa, K.; Tanaka, Y.; Usuda, Y. Construction of the Corpus of Everyday Japanese Conversation: An interim report. In Proceedings of the 11th International Conference on Language Resources and Evaluation (LREC 2018), Miyazaki, Japan, 7–12 May 2018; pp. 4259–4264. [Google Scholar]

- Zhu, Q.; Zhang, Z.; Fang, Y.; Li, X.; Takanobu, R.; Li, J.; Peng, B.; Gao, J.; Zhu, X.; Huang, M. ConvLab-2: An Open-Source Toolkit for Building, Evaluating, and Diagnosing Dialogue Systems. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics: System Demonstrations, Online, 5–10 July 2020; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020; pp. 142–149. [Google Scholar] [CrossRef]

| Intention | Intention Details | Speech Examples | Number of Speech Examples |

|---|---|---|---|

| greet | User greeting utterances | Hello | 10 |

| goodbye | User’s utterances about farewell speech | Goodbye | 10 |

| guide | Intentions about utterances from which entities can be extracted and from which no inference needs to be made | Please guide me to [kitchen] {“entity”: “guidename”} | 15 |

| ambiguous | Intentions about ambiguous speech | I’m hungry I’m thirsty I’m a little tired I’m getting sleepy I need somewhere to sit | 200 |

| Property | Domain | Range | Description |

|---|---|---|---|

| schema:identifier | Matter, Object, Room | xsd:int | object ID |

| schema:longitude | Matter, Object, Room | xsd:double | x coordinate |

| schema:latitude | Matter, Object, Room | xsd:double | y coordinate |

| schema:elevation | Matter, Object, Room | xsd:double | z coordinate |

| schema:object | Object | xsd:string | object state |

| schema:containedInPlace | Matter, Object | Room | location of the room with the object |

| Types Present in the Home Environment | Name |

|---|---|

| Room | bathroom, bedroom, kitchen, livingroom |

| Object | cupcake, plate, toilet paper, towels, keyboard, washing machine, mouse, sewing kit, etc. |

| Evaluation Data Set | Precision | Recall | F-Value |

|---|---|---|---|

| Japanese Corpus of Everyday Conversation | 0.48 | 0.58 | 0.47 |

| Examples of utterances created by subjects | 0.48 | 0.62 | 0.49 |

| Example of Speech | Correct Answer Data | System Output | Precision | Recall | F-Value |

|---|---|---|---|---|---|

| I’m feeling really sleepy. | bedroom, bed | I will take you to your bed in your bedroom. | 1.00 | 1.00 | 1.00 |

| Example of Speech | Commonsense Relation | COMET-ATOMIC Output Result | Class Matching Results with Home Ontology |

|---|---|---|---|

| I’m feeling really sleepy. | xNeed | ”go to bed”, ”early sleep”, ”drink a lot” | bed |

| xEffect | ”falls asleep”, ”gets tired”, ”gets sleepy” | None | |

| AtLocation | ”bed”, ”house”, ”hospital”, ”bedroom”, ”rest” | bedroom |

| Example of Speech | Correct Answer Data | System Output | Precision | Recall | F-Value |

|---|---|---|---|---|---|

| It’s so cold, is not it? | Fireplace | I could not find a candidate for the guide. | 0.00 | 0.00 | 0.00 |

| Example of Speech | Commonsense Relation | COMET-ATOMIC Output Result | Class Matching Results with Home Ontology |

|---|---|---|---|

| It’s really cold, is not it? | xNeed | ”to go outside.”, ”none” | None |

| xEffect | ”gets cold”, ”get” wet” | None | |

| AtLocation | ”park”, ”car”, ”city”, ”cold”, ”lake” | bedroom |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Schalkwijk, A.; Yatsu, M.; Morita, T. An Interactive Virtual Home Navigation System Based on Home Ontology and Commonsense Reasoning. Information 2022, 13, 287. https://doi.org/10.3390/info13060287

Schalkwijk A, Yatsu M, Morita T. An Interactive Virtual Home Navigation System Based on Home Ontology and Commonsense Reasoning. Information. 2022; 13(6):287. https://doi.org/10.3390/info13060287

Chicago/Turabian StyleSchalkwijk, Alan, Motoki Yatsu, and Takeshi Morita. 2022. "An Interactive Virtual Home Navigation System Based on Home Ontology and Commonsense Reasoning" Information 13, no. 6: 287. https://doi.org/10.3390/info13060287

APA StyleSchalkwijk, A., Yatsu, M., & Morita, T. (2022). An Interactive Virtual Home Navigation System Based on Home Ontology and Commonsense Reasoning. Information, 13(6), 287. https://doi.org/10.3390/info13060287