Abstract

Tactile technology in mobile devices makes mediated social touch (MST) possible. MST with vibrotactile stimuli can be applied in future online social communication applications. However, senders and receivers may use different gestures to trigger the vibrotactile stimuli. In this study, we compared senders with gestures and receivers without gestures to identify differences in perceiving MST with vibrotactile stimuli. We conducted a user study to explore differences between senders and receivers in the likelihood of vibrotactile stimuli being understood as social touch. The results showed that, for most MST, participants did not differ in their understanding of MST with vibrotactile stimuli whether they acted as senders (actively perceiving with gestures) or as receivers (passively perceiving without gestures). Researchers or designers could therefore apply the same vibrotactile stimuli to senders’ and receivers’ phones in future designs.

1. Introduction

Mediated social touch (MST) is a new form of remote communication [1]. Recent haptic technology makes it possible to transmit MST over mobile devices. For example, ComTouch [2], POKE [3], CheekTouch [4], and the KUSUGURI interface [5] have used haptic actuators to design vibrations that transmit MST such as patting, poking, tapping, and tickling. Bendi [6] used a shape-changing device and the Internet to transmit MST with tactile feedback.

The abovementioned studies mainly developed prototypes for MST. They presented how MST could be transmitted between senders and receivers and described the haptic feedback on the receivers’ or the senders’ devices. However, they did not test whether senders and receivers could both understand the haptic feedback, even though senders and receivers might use different gestures when triggering haptic feedback on mobile devices. For example, Rantala et al. [1] noted that during remote communication with mobile devices, a sender actively manipulated the device while a receiver held it passively.

In this study, we aim to test the differences between a sender and a receiver in perceiving MST with vibrotactile stimuli. The conditions are:

- The sender uses specific gestures to trigger and perceive the vibrotactile stimuli, while the receiver only presses the touchscreen once to trigger them.

- For the sender, we test the likelihood of the vibrotactile stimuli being understood as social touch (LUMST) when actively perceiving them with gestures; for the receiver, we test the LUMST when passively perceiving them without gestures.

Vibrotactile stimuli were tested for receivers in our previous study [7]. Beyond the receiver, some researchers have shown that it is also necessary for the sender to confirm the expected vibrotactile stimuli before sending. For example, Ramos et al. [8] noted that additional feedback on the sender’s phone made it easier for a sender to achieve specific target forces. Park et al. [4] found a self-checking process necessary for transmitting social touch to the receiver during phone calls. Chang et al. [2] noted that local feedback on a sender’s device helped users estimate the intensity of the signal to be transmitted, so that users were aware of the vibrations they sent in remote communication.

In this study, we compare a sender with gestures and a receiver without gestures in perceiving MST with vibrotactile stimuli. First, we introduce vibrotactile stimuli for MST based on a previous study [7]. Then, we describe the gestures of MST for the sender and the receiver, based on the user-defined gestures for MST in [9].

We selected 11 MSTs (nuzzle, poke, press, pull, rock, rub, shake, tap, tickle, toss, and tremble) from a Touch Dictionary [9,10]. We excluded MST with multi-point touch, since designing user experiences for multi-touch interfaces poses many challenges [11]. For example, complex gestures with more than one finger may not always be possible on mobile devices because of different handheld positions and usage [11]. Individual differences, ergonomics, and differences between manufacturers also pose challenges for multi-point touch [11]. Restricting the study to single-point touch therefore kept the interface easier to use.

The research questions are as follows:

- Are there significant differences between a sender with gestures and a receiver without gestures in perceiving MST with vibrotactile stimuli?

- What are the implications for designing and applying MST with vibrotactile stimuli for mobile communication?

2. Related Work

2.1. Haptic Feedback on Receivers’ Mobile Devices When Transmitting MST

Many researchers have described how a sender’s finger motion or gestures can be captured by sensors and transmitted over the Internet to activate haptic feedback on a receiver’s device. For example, Park et al. [4] provided CheekTouch, a bidirectional communication diagram, in which one user’s finger motion was rendered in real time as vibrotactile stimuli on the other user’s mobile device. Furukawa et al. [5] provided the KUSUGURI interface, which offered tickling sensations for dyads. The interface was designed based on the theory that “Prediction of one’s behavior suppresses the perception brought about by the behavior” [12]. The vibrations were mainly described for the receivers’ devices. Hashimoto et al. [13] provided a novel tactile display for emotional and tactile experiences in remote communication on mobile devices. Through this display, receivers could feel different touch gestures, such as tapping, tickling, pushing, and caressing, via vibrations. Due to limitations of the prototype, only the receivers’ devices could produce vibrations. Hemmert et al. [14] proposed intimate mobiles, which could transmit grasping during remote communication on mobile phones. A sender grasped the device, and the force detected by embedded sensors was transmitted to a receiver’s device, where the receiver felt the touch as pressure through tightness actuation.

Based on the above, we found that:

- Most studies have mainly provided haptic feedback to receivers, and haptic feedback for senders has received little attention. This may make users’ manipulation of the touchscreen less precise [15]. Meanwhile, Ramos et al. [8] showed that local feedback on a sender’s phone was necessary when transmitting MST, since it helped the sender control the intended force.

- Most studies have mainly designed and provided haptic feedback for prototypes, without considering whether senders and receivers could both understand the designed haptic feedback.

Therefore, in this study, we test whether senders and receivers can both understand the vibrotactile stimuli for MST.

2.2. Haptic Feedback on Senders’ Mobile Devices When Transmitting MST

In addition to haptic feedback on receivers’ phones, some studies have considered applying local feedback on senders’ phones. For example, Hoggan et al. [16] proposed Pressages, which transmitted a user’s input pressure to a receiver via vibrations during phone calls. The researchers also provided local feedback for pressure input on the sender’s phone, so senders and receivers could both feel the vibrations on their phones during message transmission. Rantala et al. [1] provided a mobile prototype for communicating emotional intention via vibrotactile stimuli. Senders could sense the vibrotactile stimuli on their devices while manipulating them, because the stimuli were triggered on senders’ and receivers’ devices simultaneously; senders could therefore feel exactly the vibrotactile stimuli they wanted to transmit to receivers. Chang et al. [2] designed ComTouch, a vibrotactile communication device in which local feedback on the sender’s device helped users estimate the intensity of the signal to be transmitted, making users aware of the vibrations they sent during remote communication. Park et al. [6] provided Bendi, a shape-changing device for tactile-visual phone conversations. When senders moved a joystick to transmit social touch, their own devices also bent up or down like the receivers’ devices, so a sender could see what had been sent to a receiver.

Some prototypes have provided self-checking functions that let a sender check the MST before sending it, to ensure that the haptic feedback on the sender’s and the receiver’s devices was the same. For example, Park et al. [3] provided POKE, whose inflatable surface could transmit social touch to a receiver. During a phone call, a sender applied pressure with the index finger on the back of the device. POKE had a self-checking function, which helped the sender check whether the tactile sensation to be sent was correct.

Based on the above, we observed that:

- Some researchers have let senders check the feedback before sending it, but this may cause unnecessary delay and workload in communication, and the extra step may be inconvenient for some people. Therefore, we move this checking step into the design and research process, rather than the application, to ensure that both senders and receivers can understand the haptic feedback before it is applied in real applications.

- A receiver receiving haptic feedback and a sender self-checking haptic feedback are different situations. A receiver feels the haptic feedback without gestures, whereas in self-checking, the sender feels the haptic feedback while performing the gestures that trigger it. Therefore, MST with vibrotactile stimuli may be perceived differently when gestures differ, especially when complex gestures are applied. For example, in repetitive gestures such as “shake”, a sender moves their fingers back and forth on the touchscreen to send the touch [9], and the vibrotactile stimuli accompany these finger movements. A receiver, in contrast, may press the button once to trigger the vibrotactile stimuli and makes no finger movements while feeling them.

In this study, we check whether senders and receivers can both understand the vibrotactile stimuli for MST. Senders actively perceive the stimuli with gestures, while receivers passively perceive them without gestures.

2.3. The Differences between Actively and Passively Perceiving Haptic Feedback

Many engineering studies have explored the differences between actively and passively perceiving haptic feedback. For example, perceived roughness in active and passive touch of surface textures has received considerable attention. Lederman [17] investigated perceived roughness under active and passive touch and found no significant differences between the two conditions in the perceived magnitude of surface roughness or in the consistency of such judgments. Hatzfeld [18] also described a duplex theory of roughness perception, under which active and passive touch conditions did not affect perceived roughness.

Other haptic devices have also been used in different applications to explore perceptual differences between active and passive touch. For example, Symmons et al. [19] used a Phantom force-feedback device to explore virtual three-dimensional geometric shapes and found that active exploration had significantly shorter latencies than passive exploration. Vitello et al. [20] examined tactile suppression in active and passive touch. Their results indicated that active movements led to a significant decrease in tactile sensitivity, while passive movements seemed to have only a minor effect on tactile performance [20].

Ahmaniemi et al. [21] concluded that passive tactile messages were more suitable for system alerts and feedback on discrete user actions, whereas active feedback is more natural for interaction with a physical interface and helps increase the user’s feeling of control.

Based on the above, the differences between actively and passively perceiving haptic stimuli form an interesting research field. However, there are some gaps, as follows:

- The above studies explored physical perceptions, such as perceived surface roughness, with vibrotactile stimuli. MST with vibrotactile stimuli has not yet been examined in this way.

- For actively perceiving vibrotactile stimuli, in addition to dynamically stroking a surface or object [18], more gestures could also be considered, such as pressing the touchscreen with different repeat times, rhythms, or speeds.

Therefore, in this study, we compare the differences between actively and passively perceiving MST, considering more types of MST in active sensation.

3. Gesture Data Collected from Our Previous Study

We explored user-defined gestures for MST on the touchscreen of smartphones in our previous study [9].

In [9], we proposed classifications based on movement forms. We mentioned in [9] that “Movement forms indicate the trajectory and dynamics of hands/fingers movement” [22]. We also described the spatial relations between the hands/fingers and the touchscreen. In this study, the movement forms applied are straight gestures on the touchscreen (SOT), straight gestures from the air (SFA), and repetitive gestures (RPT) on the touchscreen [9]. The detailed definitions of each movement form are given in [9]. We chose related gestures to trigger the vibrotactile stimuli for MST based on movement forms.

We also recorded the pressure and duration of user-defined gestures for each MST from [9]. These data were applied in the design of vibrotactile stimuli in [7].

4. Design of MST with Vibrotactile Stimuli

In this study, we compare the sender with gestures and the receiver without gestures in perceiving MST with vibrotactile stimuli. We apply the typical vibrotactile stimuli designed in [7] and compare the two conditions.

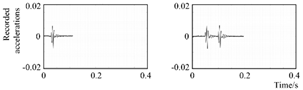

We designed the vibrotactile stimuli based on movement form groups [7,9]. Table 1 shows typical recorded accelerations of the vibrotactile stimuli for MST in each group (four types of “tap”, two types of “shake”, and one type each of “pull” and “toss”). The detailed design process is described in [7]. Because the vibrotactile stimuli for MST within each group have similar forms, only typical examples of recorded accelerations are listed in Table 1.

Table 1. Properties of vibrotactile stimuli for MST in each movement form group.

In the SFA group, all the vibrotactile stimuli for MST are similar to “tap” in Table 1, with different durations and frequencies. In the RPT group, all the vibrotactile stimuli for MST are similar to “shake”, with different numbers of repeats, durations, and frequencies. Table 1 shows the vibrotactile stimuli for “pull” and “toss” in the SOT group. The detailed values of physical parameters and accelerations of vibrotactile stimuli are in [7].
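To make the group descriptions above more concrete, the following is a minimal Python sketch of how a drive signal for such stimuli could be synthesized: a single sine burst approximates a “tap”-like SFA stimulus, and a train of bursts separated by silent gaps approximates a “shake”-like RPT stimulus. The function name `burst_train`, the 8 kHz sample rate, and every numeric parameter are illustrative assumptions; the actual physical parameters and recorded accelerations are reported in [7], and how the study's wideband LRA was driven is not reproduced here.

```python
from __future__ import annotations

import math


def burst_train(carrier_hz: float, burst_ms: float, gap_ms: float,
                repeats: int, amplitude: float = 1.0,
                sample_rate: int = 8000) -> list[float]:
    """Return a sampled drive signal of `repeats` sine bursts separated by silence.

    Hypothetical helper: one burst approximates a "tap"-like (SFA) stimulus,
    several bursts approximate a "shake"-like (RPT) stimulus. All values are
    illustrative assumptions, not the stimuli designed in [7].
    """
    burst_len = int(sample_rate * burst_ms / 1000)  # samples per burst
    gap_len = int(sample_rate * gap_ms / 1000)      # silent samples between bursts
    samples: list[float] = []
    for i in range(repeats):
        for n in range(burst_len):
            t = n / sample_rate
            samples.append(amplitude * math.sin(2 * math.pi * carrier_hz * t))
        if i < repeats - 1:  # no trailing gap after the last burst
            samples.extend([0.0] * gap_len)
    return samples
```

For example, `burst_train(170, 20, 0, 1)` yields a single 20 ms burst, while `burst_train(170, 120, 80, 4)` yields four 120 ms bursts with 80 ms gaps. Varying `repeats`, `burst_ms`, and `carrier_hz` mirrors how the RPT stimuli differ in number of repeats, durations, and frequencies.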

5. Gestures

5.1. Gestures to Trigger Vibrotactile Stimuli

Researchers have shown that it is necessary to provide vibrotactile stimuli on both the sender’s and the receiver’s phone for remote communication [5,8,16]. In this study, we explore the LUMST of vibrotactile stimuli in two conditions:

- Participants act as a receiver, passively perceiving vibrotactile stimuli without gestures. In this condition, participants were told to imagine themselves as receivers during a virtual online communication and were asked to press a button once to activate the MST with vibrotactile stimuli sent by the sender. As in [7,23], the vibrotactile stimuli were triggered with buttons.

- Participants act as a sender, actively perceiving vibrotactile stimuli with specific gestures. In this condition, participants were told to imagine themselves as senders during an online mobile communication and were asked to press the button using the user-defined gestures of MST [9]. We used the user-defined gestures to mimic the real situation of sending MST.

When participants acted as senders, we told them how to perform each motion, based on [9], so that their gestures were controlled. The detailed gesture information is as follows:

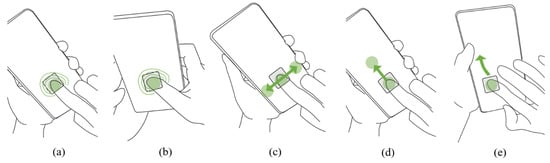

- Participants were asked to press a button for MST in the SFA group (i.e., poke, press, tap, tickle).

- For “poke”, “tap”, and “tickle”, participants were asked to press the button with their right index finger to trigger the vibrotactile stimuli while holding the phone in their left hand (Figure 1a). Participants could press the button with different rhythms, speeds, or repeat times. For example, senders could “poke” the other person many times rather than once, according to their habits, and felt the vibrotactile stimuli each time they did so.

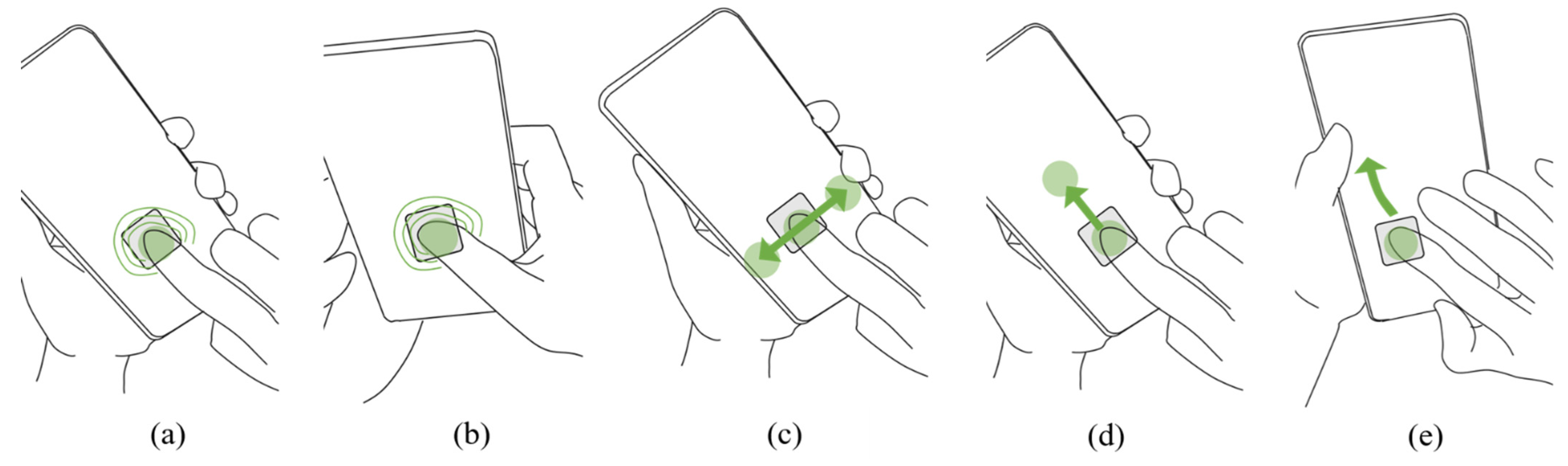

Figure 1. Gestures to trigger vibrotactile stimuli for MST. (a) the gesture for “poke”, “tap”, and “tickle”; (b) the gesture for “press”; (c) the gesture for “nuzzle”, “rub”, “rock”, “shake”, and “tremble”; (d) the gesture for “pull”; (e) the gesture for “toss”. These gesture figures were selected from [9].

- For “press”, participants were asked to use their right thumb to press the button to trigger the vibrotactile stimuli (Figure 1b).

- For gestures in the RPT group (nuzzle, rub, rock, shake, tremble), participants were asked to touch the button to activate the vibrotactile stimuli, move their right index finger back and forth (Figure 1c), and actively sense the repetitive changes in the vibrotactile stimuli until the stimuli stopped.

- For gestures in the SOT group (pull and toss), we considered different directions, described as follows:

- For “pull”, participants moved their right index finger from top to bottom with a strong force, as if pulling someone closer (Figure 1d).

- For “toss”, participants moved their right index finger from bottom to top and then lifted it away from the touchscreen, similar to tossing something away (Figure 1e).

The grey square in Figure 1 represents a graphic button on the touchscreen. Participants started their gestures by pressing the button to trigger the vibrotactile stimuli and continued their gestures until the vibrotactile stimuli stopped.
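The sender and receiver conditions described above can be summarized as a lookup table. The Python sketch below is a hypothetical encoding (the names `MovementForm`, `SENDER_GESTURES`, `instruction`, and `gestures_in` are illustrative and not part of the study software): each of the 11 MSTs maps to its movement form group and a paraphrase of the sender gesture, while every receiver simply presses the button once.

```python
from __future__ import annotations

from enum import Enum


class MovementForm(Enum):
    """Movement form groups from the user-defined gesture study [9]."""
    SFA = "straight gesture from the air"
    SOT = "straight gesture on the touchscreen"
    RPT = "repetitive gesture on the touchscreen"


# Paraphrased sender instructions (Section 5.1, Figure 1); wording is illustrative.
INDEX_PRESS = "press the button with the right index finger (Figure 1a)"
REPETITIVE = "move the right index finger back and forth until the stimulus stops (Figure 1c)"

SENDER_GESTURES: dict[str, tuple[MovementForm, str]] = {
    "poke": (MovementForm.SFA, INDEX_PRESS),
    "tap": (MovementForm.SFA, INDEX_PRESS),
    "tickle": (MovementForm.SFA, INDEX_PRESS),
    "press": (MovementForm.SFA, "press the button with the right thumb (Figure 1b)"),
    "nuzzle": (MovementForm.RPT, REPETITIVE),
    "rub": (MovementForm.RPT, REPETITIVE),
    "rock": (MovementForm.RPT, REPETITIVE),
    "shake": (MovementForm.RPT, REPETITIVE),
    "tremble": (MovementForm.RPT, REPETITIVE),
    "pull": (MovementForm.SOT, "move the right index finger from top to bottom with strong force (Figure 1d)"),
    "toss": (MovementForm.SOT, "move the right index finger from bottom to top, then lift it off (Figure 1e)"),
}

RECEIVER_INSTRUCTION = "press the button once, without further gestures"


def instruction(mst: str, role: str) -> str:
    """Return the gesture instruction for an MST in the sender or receiver condition."""
    if mst not in SENDER_GESTURES:
        raise KeyError(f"unknown MST: {mst!r}")
    if role == "receiver":
        return RECEIVER_INSTRUCTION
    if role == "sender":
        return SENDER_GESTURES[mst][1]
    raise ValueError(f"unknown role: {role!r}")


def gestures_in(form: MovementForm) -> list[str]:
    """List the MSTs belonging to one movement form group, alphabetically."""
    return sorted(m for m, (f, _) in SENDER_GESTURES.items() if f is form)
```

Keeping the receiver instruction independent of the MST reflects the design of the study: only the sender condition varies the gesture, so any perceptual difference can be attributed to active versus passive perception.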

5.2. Gestures and Displayed Vibrotactile Stimuli

The following explains, for each gesture group, how a sender’s gestures were matched with the displayed vibrotactile stimuli:

- Gestures in the SFA group, such as poke, press, tap, and tickle, had a short duration [9], and the vibrotactile stimuli of these MST were also very short [7]. The vibrotactile stimuli finished when participants completed the quick press, so users’ gestures could easily catch the displayed vibrotactile stimuli.

- For repetitive gestures such as nuzzle, rub, rock, shake, and tremble, catching the vibrotactile stimuli seemed harder, since these gestures lasted longer and the vibrotactile stimuli varied (Table 1). The rhythms of the vibrotactile stimuli in this group were extracted from our previous study on user-defined gestures for MST [9], in which we explored how people performed these repetitive gestures and recorded the average number of repeats, durations, and frequencies. We designed the vibrotactile stimuli based on these user-defined gestures [7]. Because these parameters came from users, it was not difficult for users to understand the number of repeats, durations, and frequencies of the vibrotactile stimuli. In the user study, we told participants how to perform the repetitive gestures and asked them to catch the vibrotactile stimuli. Participants were allowed to feel the vibrotactile stimuli in this group several times before filling in the questionnaire.

- Gestures in the SOT group included pull and toss. “Pull” had a long duration [9], and its vibrotactile stimuli were long and constant [7]. Participants touched the button to trigger the vibrotactile stimuli and lifted their fingers from the touchscreen when the stimuli stopped, so it was easy for them to catch the displayed vibrotactile stimuli for “pull”. For “toss”, the duration was short [9]. If participants touched the button and performed the gesture immediately, they could catch the displayed vibrotactile stimuli. The duration and changing trend of the vibrotactile stimuli were also set based on user-defined gestures [9], using parameter values averaged across users, so it was easy for participants to understand them. In the user study, participants were also allowed to feel the vibrotactile stimuli several times before filling in the questionnaire.

6. User Study

6.1. Experiment Setup

We used the same experimental smartphone setup as the one used in [7,9,23]. The smartphone had a wideband linear resonant actuator (LRA) motor to display vibrotactile stimuli [23]. The detailed technical information about this smartphone is in [23].

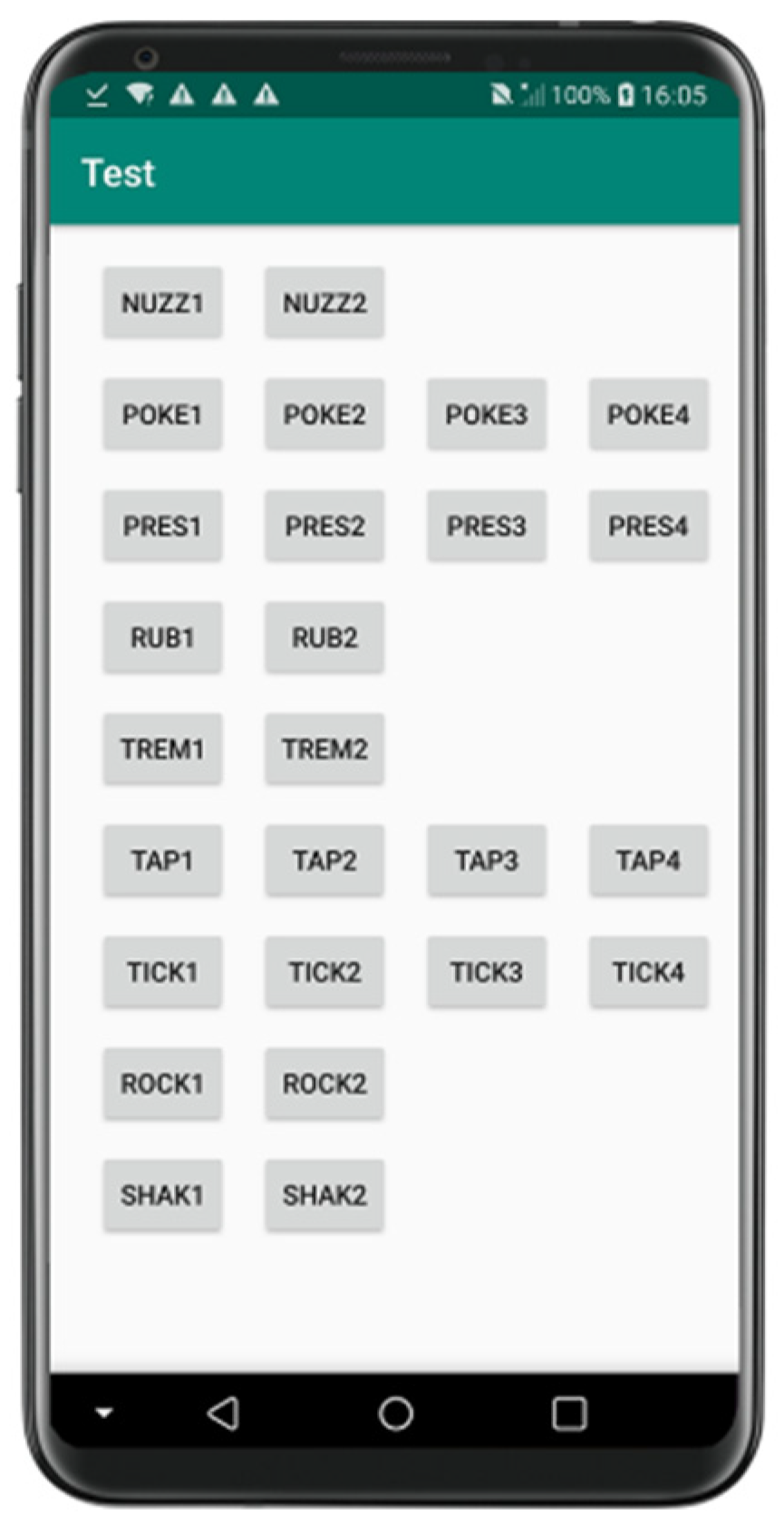

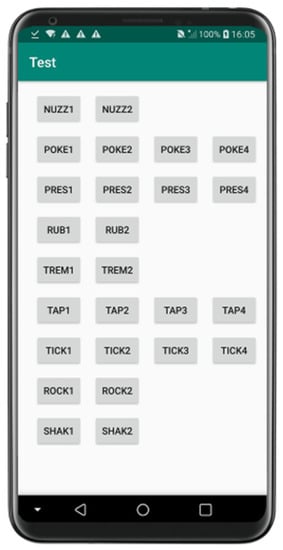

Figure 2. Test interface. This figure was extracted from [7].

6.2. Participants

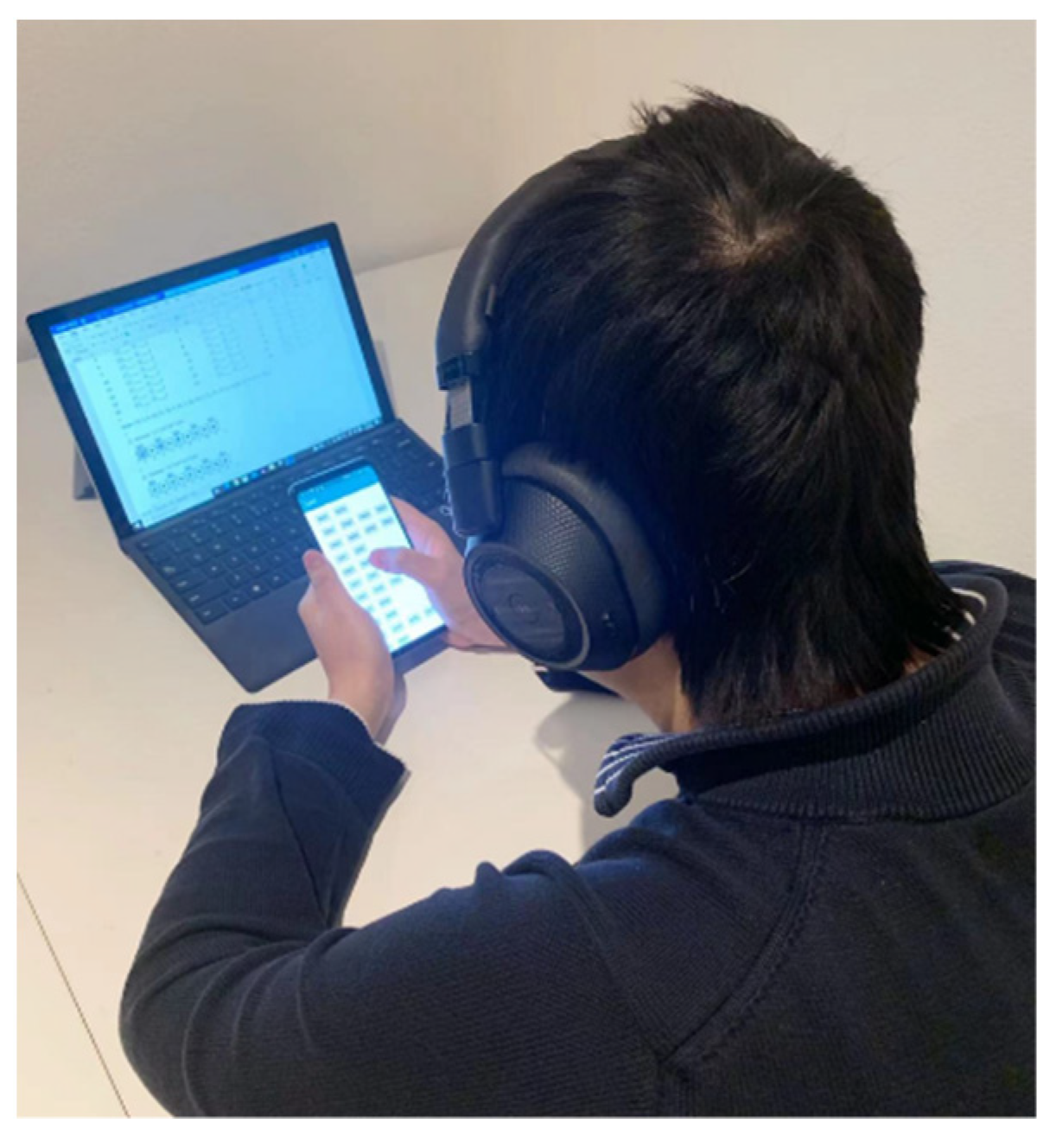

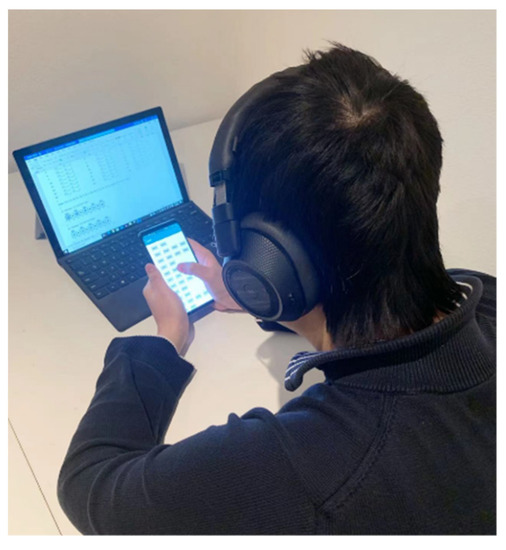

Twenty participants (eight males and twelve females) aged between 23 and 36 took part in this study. Participants were recruited from the local university. All participants had experience with smartphones and online social communication and had no physical constraints on sensing touch [7,23]. Noise-canceling headphones were provided to block out the sound produced by the vibrotactile stimuli [7,23]. Figure 3 shows the test environment.

Figure 3. Test environment.

6.3. Procedure

We introduced the test and handed out the consent forms and questionnaires before the experiment.

Table 2 shows the variables and test conditions. The two conditions were as follows:

Table 2. Variables and test conditions.

- Participants were told to act as a receiver to feel the MST with vibrotactile stimuli. In this situation, participants only pressed the button on the touchscreen once.

- Participants were told to act as a sender to feel the MST with vibrotactile stimuli. In this situation, participants pressed the button with gestures. Participants were asked to use the gestures mentioned above (Section 5). Participants were allowed to try the vibrotactile stimuli several times before filling in the questionnaire.

As a receiver and as a sender (within-subjects), participants rated the LUMST of the vibrotactile stimuli on a 7-point Likert scale from 1 (very unlikely) to 7 (very likely).

Before testing, we gave each participant a randomized order of MST on the questionnaire, and participants felt the MST one by one in that order. We obtained the randomized orders with a random function in Python [7,9,23].
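A minimal sketch of how such per-participant orders could be generated, assuming one order over the 11 MST labels per participant. The study's actual script is not described beyond its use of a random function in Python, so `randomized_orders` is a hypothetical helper and the fixed seed is an assumption added for reproducibility. Several MSTs had more than one stimulus variant in the study (e.g., four types of “tap”), which this sketch does not model.

```python
from __future__ import annotations

import random

# The 11 MSTs selected from the Touch Dictionary [9,10].
MST_TYPES = ["nuzzle", "poke", "press", "pull", "rock", "rub",
             "shake", "tap", "tickle", "toss", "tremble"]


def randomized_orders(n_participants: int, seed: int | None = None) -> list[list[str]]:
    """Return one independently shuffled presentation order per participant."""
    rng = random.Random(seed)  # a dedicated, optionally seeded generator
    # Random.sample with k == len(population) returns a shuffled copy,
    # leaving MST_TYPES itself unchanged.
    return [rng.sample(MST_TYPES, k=len(MST_TYPES)) for _ in range(n_participants)]
```

Calling `randomized_orders(20, seed=42)` produces twenty orders that can be printed on the questionnaires; rerunning with the same seed reproduces them exactly.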

7. Results

We used SPSS 23.0 to conduct a one-way ANOVA. The descriptive data are given in Table 3, and more detailed results for receivers can be found in [7].

Table 3. Mean LUMST with vibrotactile stimuli.

Table 4 shows the comparison between senders and receivers. We did not find significant differences in perceiving vibrotactile stimuli between senders and receivers for most MST (p > 0.05). These results suggest that participants understood the MST with vibrotactile stimuli similarly whether they acted as senders actively perceiving with gestures or as receivers passively perceiving without gestures.

Table 4. Results of differences in perceiving between a receiver (R) and a sender (S).

For actively perceived MST such as “poke”, “press”, “tap”, and “tickle”, participants’ different rhythms, speeds, or repeat times might have affected the results. We provided different types of vibrotactile stimuli for these MST. Table 4 shows no significant differences for these vibrotactile stimuli (p > 0.05), suggesting that, within the range tested, users’ active behavior did not lead to significant perceptual changes compared with passively perceiving the vibrotactile stimuli.
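For readers who want to reproduce this kind of sender versus receiver comparison outside SPSS, the following Python sketch computes the standard one-way ANOVA F statistic for two or more groups of Likert ratings. It illustrates the textbook formula rather than the authors' analysis script; `one_way_anova_f` is a hypothetical helper, and the ratings used below are made up rather than taken from Tables 3 or 4.

```python
from __future__ import annotations

from statistics import fmean


def one_way_anova_f(*groups: list[float]) -> tuple[float, int, int]:
    """Return (F, df_between, df_within) for k independent groups of ratings."""
    if len(groups) < 2:
        raise ValueError("need at least two groups")
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = fmean([x for g in groups for x in g])
    group_means = [fmean(g) for g in groups]
    # Between-group sum of squares: spread of the group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Within-group sum of squares: spread of each rating around its own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    df_between, df_within = k - 1, n_total - k
    if df_within <= 0 or ss_within == 0:
        raise ValueError("F is undefined without within-group variance")
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within
```

With hypothetical receiver ratings `[4, 5, 6]` and sender ratings `[5, 6, 7]`, `one_way_anova_f` returns F = 1.5 with (1, 4) degrees of freedom. Obtaining the p-value requires the F distribution; `scipy.stats.f_oneway(receiver, sender)` returns both the statistic and the p-value, which can then be compared against the 0.05 threshold used above.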

These results can guide application design: researchers or designers could apply the same vibrotactile stimuli for MST on senders’ and receivers’ phones, that is, for both actively perceiving with gestures and passively perceiving without gestures.
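One way to realize this implication is to ship a single stimulus table to every phone, so the sender's local feedback and the receiver's playback come from the same entry. The Python sketch below is hypothetical throughout: `VibrationPattern`, `PATTERNS`, `Phone`, and `send_mst` are illustrative names, the timing values are placeholders rather than the stimuli from [7], and `Phone.play` only records what would be sent to the actuator.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class VibrationPattern:
    """Hypothetical pattern: alternating off/on durations in milliseconds."""
    timings_ms: tuple[int, ...]


# One shared table bundled identically on every phone (placeholder values).
PATTERNS: dict[str, VibrationPattern] = {
    "tap": VibrationPattern((0, 20)),
    "shake": VibrationPattern((0, 120, 80, 120, 80, 120, 80, 120)),
}


@dataclass
class Phone:
    name: str
    played: list[VibrationPattern] = field(default_factory=list)

    def play(self, pattern: VibrationPattern) -> None:
        # Stand-in for driving the actuator; here we only record the pattern.
        self.played.append(pattern)


def send_mst(mst: str, sender: Phone, receiver: Phone) -> str:
    """Play the stimulus locally for the sender and deliver the MST label to the receiver."""
    pattern = PATTERNS[mst]
    sender.play(pattern)              # local feedback while the sender gestures
    message = mst                     # only the MST label needs to travel
    receiver.play(PATTERNS[message])  # the receiver looks up the same entry
    return message
```

Because only the MST label crosses the network, the sender and receiver play identical stimuli as long as both phones hold the same table, which is the configuration the results above support.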

8. Discussion and Limitations

8.1. Considering Specific Demands and Context in Future Designs

Existing studies show that the effects of active and passive perception differ across contexts. For example, no significant differences were found in perceived roughness [17,18], active exploration had shorter latencies for virtual three-dimensional geometric shapes [19], and active movements led to a significant decrease in tactile sensitivity [20].

This study focused on a different context, i.e., MST with vibrotactile stimuli. We also considered more gestures, such as pressing the touchscreen with different repeat times, rhythms, or speeds.

This study showed no significant differences in the LUMST of vibrotactile stimuli between the two conditions, indicating that senders and receivers could both understand the vibrotactile stimuli we designed for MST. The same vibrotactile stimuli could therefore be applied for senders and receivers in the future.

The effects may vary in different contexts, so specific demands and context need to be considered in future designs. Although the LUMST did not differ between the two conditions for the selected input gestures, other factors, such as preference, complexity [8], or feeling of control [21], may differ between them. These factors are also important in applications.

For future applications, we should consider the interface demands in a specific context and try to make MST with vibrotactile stimuli more suitable for the interface and user’s needs.

8.2. Multimodal MST for Online Social Applications

Haptic feedback is a compensatory channel for information transmission [24], and users find it difficult to recognize vibrotactile stimuli without other information [25]. In the user study, we told participants what the vibrotactile stimuli represented [7]; an application should likewise indicate what the vibrotactile stimuli represent.

This study observed no significant differences in perception when participants actively or passively perceived the vibrotactile stimuli for most MST, so researchers or designers could apply the same vibrotactile stimuli for both senders and receivers. However, users may not be able to tell similar vibrotactile stimuli apart. The vibrotactile stimuli within each movement form group have similar forms [7]; for example, the vibrotactile stimuli of “poke” and “press” are both short pulses. Multimodal MST could help differentiate them, for example by pairing them with visual stickers or emoji of “poke” and “press”.

In addition, if multi-point gestures are considered in the future, the visual channel will be needed to compensate for the original complex gestures [26] so that MST with vibrotactile stimuli is better understood.

8.3. Limitations

The vibrotactile stimuli in this study were fixed, including the rhythm of the vibrotactile stimuli in the RPT group. When participants imagined themselves as senders and performed repetitive gestures to feel the vibrotactile stimuli, they sometimes could not keep up with the rhythm, and their first impression of the rhythm may have affected the LUMST. In the user study, participants could try the vibrotactile stimuli several times to catch the rhythm better. In the future, real-time transmission could help solve this problem.

In addition, we did not consider MST with multi-point touch. Although designing user experiences of multi-touch interfaces poses many challenges [11], users may have different insights into MST with multi-point touch. Considering such MST in the future may lead to a more thorough understanding of active and passive MST with vibrotactile stimuli.

9. Conclusions and Future Work

In this study, we compared senders with gestures and receivers without gestures to identify differences in perceiving MST with vibrotactile stimuli. We introduced vibrotactile stimuli for selected MST based on [7]. In [7], we mainly presented the design process of MST with vibrotactile stimuli and tested whether receivers could understand the designed vibrotactile stimuli, but we did not examine whether senders could understand them. Because the way a sender actively perceives the stimuli may affect the LUMST, we conducted a user study to check and compare the LUMST for senders and receivers.

This study showed that, for most MST, participants did not understand the MST with vibrotactile stimuli differently whether they acted as senders actively perceiving with gestures or as receivers passively perceiving without gestures.

Future studies should focus on applications, in which receivers and senders must be considered together. This study bridges the previous study [7] and such applications.

Combined with [7], the results of this study also provide implications for future applications. Researchers or designers could apply the same vibrotactile stimuli for both senders’ and receivers’ phones in future application designs.

In the future, we plan to consider the specific context and multimodal interfaces when applying MST with vibrotactile stimuli, and to consider multimodal stimuli when designing MST for better understanding.

Author Contributions

Methodology, Q.W.; software, Q.W. and M.L.; validation, Q.W.; formal analysis, Q.W.; investigation, Q.W.; resources, J.H. and M.L.; writing—original draft preparation, Q.W.; writing—review and editing, J.H.; supervision, J.H. and M.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the China Scholarship Council (201807000107).

Institutional Review Board Statement

This study was conducted according to the guidelines of the local Ethical Review Board from the Industrial Design Department (protocol code: ERB2020ID137; date of approval: June 2020).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Rantala, J.; Salminen, K.; Raisamo, R.; Surakka, V. Touch Gestures in Communicating Emotional Intention via Vibrotactile Stimulation. Int. J. Hum. Comput. Stud. 2013, 71, 679–690.

- Chang, A.; O’Modhrain, S.; Jacob, R.; Gunther, E.; Ishii, H. ComTouch: Design of a vibrotactile communication device. In Proceedings of the 4th Conference on Designing Interactive Systems: Processes, Practices, Methods, and Techniques (DIS’02), London, UK, 25–28 June 2002; pp. 312–320.

- Park, Y.W.; Baek, K.M.; Nam, T.J. The roles of touch during phone conversations: Long-distance couples’ use of POKE in their homes. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI), Paris, France, 27 April–2 May 2013; pp. 1679–1688.

- Park, Y.W.; Bae, S.H.; Nam, T.J. How do couples use CheekTouch over phone calls? In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI’12), Austin, TX, USA, 5–10 May 2012; pp. 763–766.

- Furukawa, M.; Kajimoto, H.; Tachi, S. KUSUGURI: A shared Tactile Interface for bidirectional tickling. In Proceedings of the 3rd Augmented Human International Conference (AH’12), Megève, France, 8–9 March 2012; pp. 1–8.

- Park, Y.W.; Park, J.; Nam, T.J. The trial of bendi in a coffeehouse: Use of a shape-changing device for a tactile-visual phone conversation. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems (CHI’15), Seoul, Korea, 18–23 April 2015; pp. 2181–2190.

- Wei, Q.; Hu, J.; Li, M. Creating Mediated Social Touch with Vibrotactile Stimuli on Touchscreens, Internal Technical Report; Department of Industrial Design, Eindhoven University of Technology: Eindhoven, The Netherlands, 2021.

- Ramos, G.; Boulos, M.; Balakrishnan, R. Pressure widgets. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI’04), Vienna, Austria, 24–29 April 2004; pp. 487–494.

- Wei, Q.; Hu, J.; Li, M. User-defined gestures for mediated social touch on touchscreens. Pers. Ubiquitous Comput. 2021; in press.

- Yohanan, S.; MacLean, K.E. The Role of Affective Touch in Human-Robot Interaction: Human Intent and Expectations in Touching the Haptic Creature. Int. J. Soc. Robot. 2012, 4, 163–180.

- Bachl, S.; Tomitsch, M.; Wimmer, C.; Grechenig, T. Challenges for Designing the User Experience of Multi-touch Interfaces. In Proceedings of the Engineering Patterns for Multi-Touch Interfaces workshop (MUTI’10) of the ACM SIGCHI Symposium on Engineering Interactive Computing Systems, Berlin, Germany, 19–23 June 2010.

- Blakemore, S.J.; Wolpert, D.M.; Frith, C.D. Central cancellation of self-produced tickle sensation. Nat. Neurosci. 1998, 1, 635–640.

- Hashimoto, Y.; Nakata, S.; Kajimoto, H. Novel tactile display for emotional tactile experience. In Proceedings of the International Conference on Advances in Computer Entertainment Technology (ACE’09), Athens, Greece, 29–31 October 2009; pp. 124–131.

- Hemmert, F.; Gollner, U.; Löwe, M.; Wohlauf, A.; Joost, G. Intimate mobiles: Grasping, kissing and whispering as a means of telecommunication in mobile phones. In Proceedings of the 13th International Conference on Human Computer Interaction with Mobile Devices and Services (MobileHCI’11), Stockholm, Sweden, 30 August–2 September 2011; pp. 21–24.

- Kyung, K.; Lee, J.; Srinivasan, M.A. Precise Manipulation of GUI on a Touch Screen with Haptic Cues. In Proceedings of the World Haptics Conference (WHC’09), Salt Lake City, UT, USA, 18–20 March 2009; pp. 202–207.

- Hoggan, E.; Stewart, C.; Haverinen, L.; Jacucci, G.; Lantz, V. Pressages: Augmenting phone calls with non-verbal messages. In Proceedings of the 25th Annual ACM Symposium on User Interface Software and Technology (UIST’12), Cambridge, MA, USA, 7–10 October 2012; pp. 555–562.

- Lederman, S.J. The perception of surface roughness by active and passive touch. Bull. Psychon. Soc. 1981, 18, 253–255.

- Hatzfeld, C. Haptics as an Interaction Modality. In Engineering Haptic Devices; Hatzfeld, C., Kern, T.A., Eds.; Springer: London, UK, 2014; pp. 29–100.

- Symmons, M.A.; Richardson, B.L.; Wuillemin, D.B.; Vandoorn, G.H. Active versus passive touch in three dimensions. In Proceedings of the World Haptics Conference (WHC’05), Pisa, Italy, 18–20 March 2005; pp. 108–113.

- Vitello, M.; Ernst, M.; Fritschi, M. An instance of tactile suppression: Active exploration impairs tactile sensitivity for the direction of lateral movement. In Proceedings of the EuroHaptics Conference (EH’06), Paris, France, 2 July 2006; pp. 351–355.

- Ahmaniemi, T.; Marila, J.; Lantz, V. Design of dynamic vibrotactile textures. IEEE Trans. Haptics 2010, 3, 245–256.

- Lausberg, H.; Sloetjes, H. Coding gestural behavior with the NEUROGES-ELAN system. Behav. Res. Methods 2009, 41, 841–849.

- Wei, Q.; Li, M.; Hu, J.; Feijs, L. Perceived depth and roughness of buttons with touchscreens. IEEE Trans. Haptics, 2021; in press.

- Lam, T.M.; Boschloo, H.W.; Mulder, M.; Van Paassen, M.M. Artificial force field for haptic feedback in UAV teleoperation. IEEE Trans. Syst. Man Cybern. Part A Syst. Hum. 2009, 39, 1316–1330.

- Burke, J.L.; Prewett, M.S.; Gray, A.A.; Yang, L.; Stilson, F.R.B.; Coovert, M.D.; Elliot, L.R.; Redden, E. Comparing the effects of visual-auditory and visual-tactile feedback on user performance: A meta-analysis. In Proceedings of the 8th International Conference on Multimodal Interfaces (ICMI’06), Banff, AB, Canada, 2–4 November 2006; pp. 108–117.

- Ernst, M.O.; Banks, M.S. Humans Integrate Visual and Haptic Information in a Statistically Optimal Fashion. Nature 2002, 415, 429–433.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).