Cow Rump Identification Based on Lightweight Convolutional Neural Networks

Abstract

1. Introduction

2. Materials and Methods

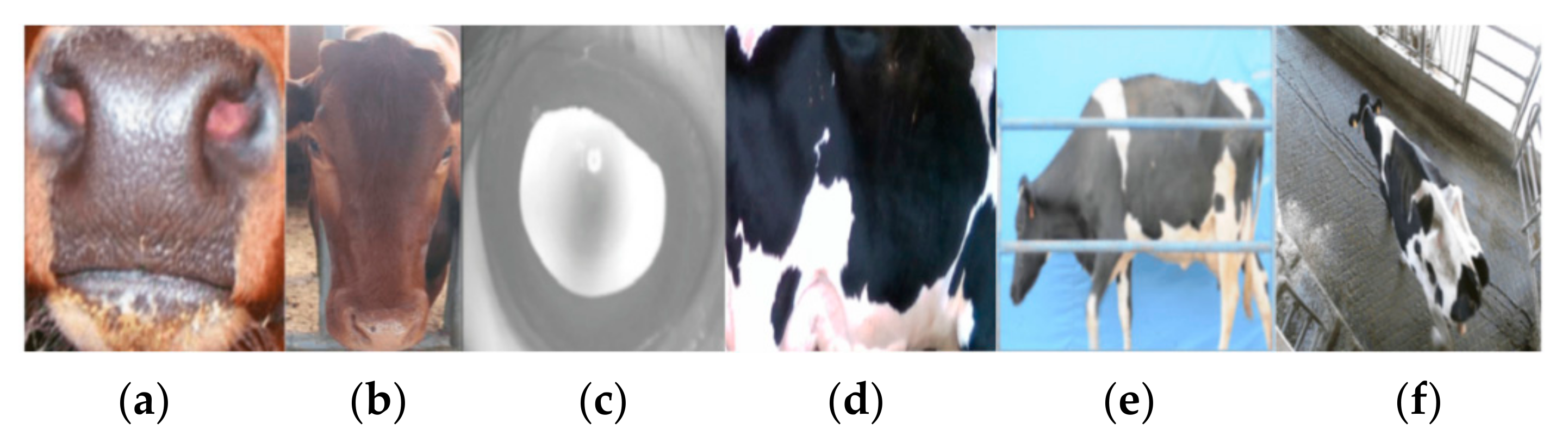

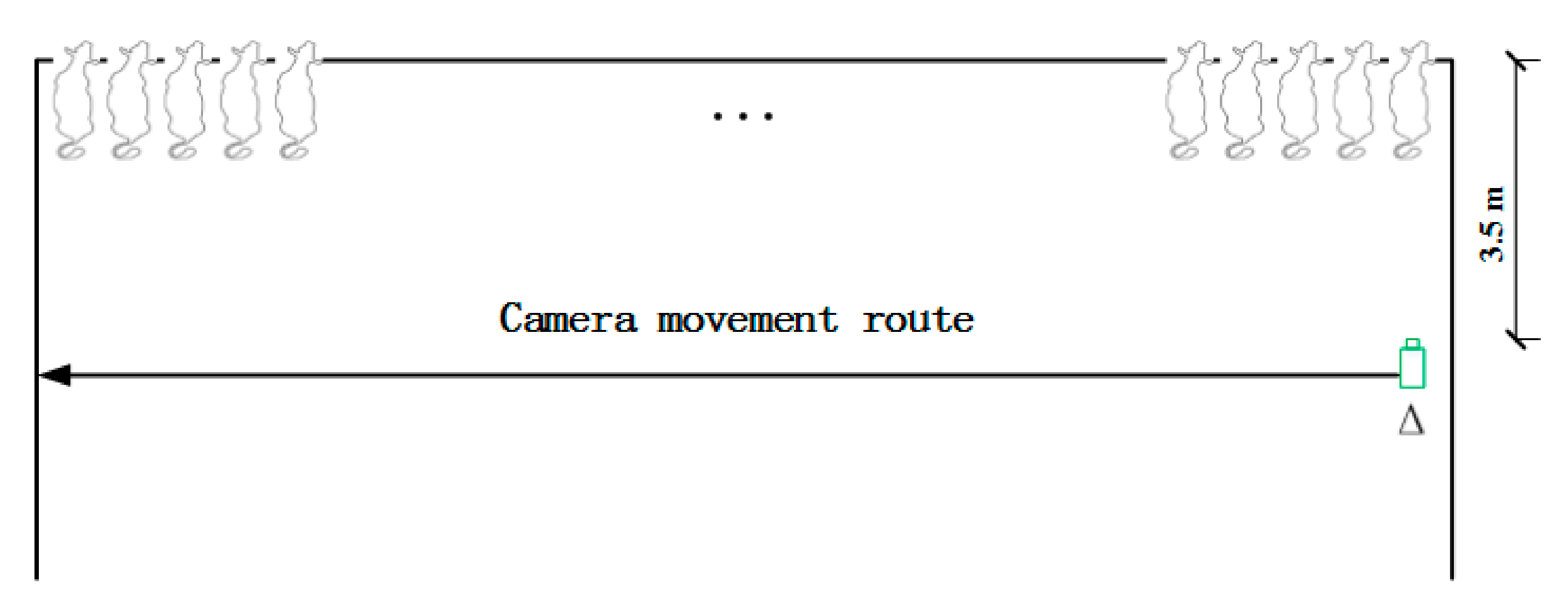

2.1. Image Acquisition

2.2. Experimental Data

2.3. Individual Identification

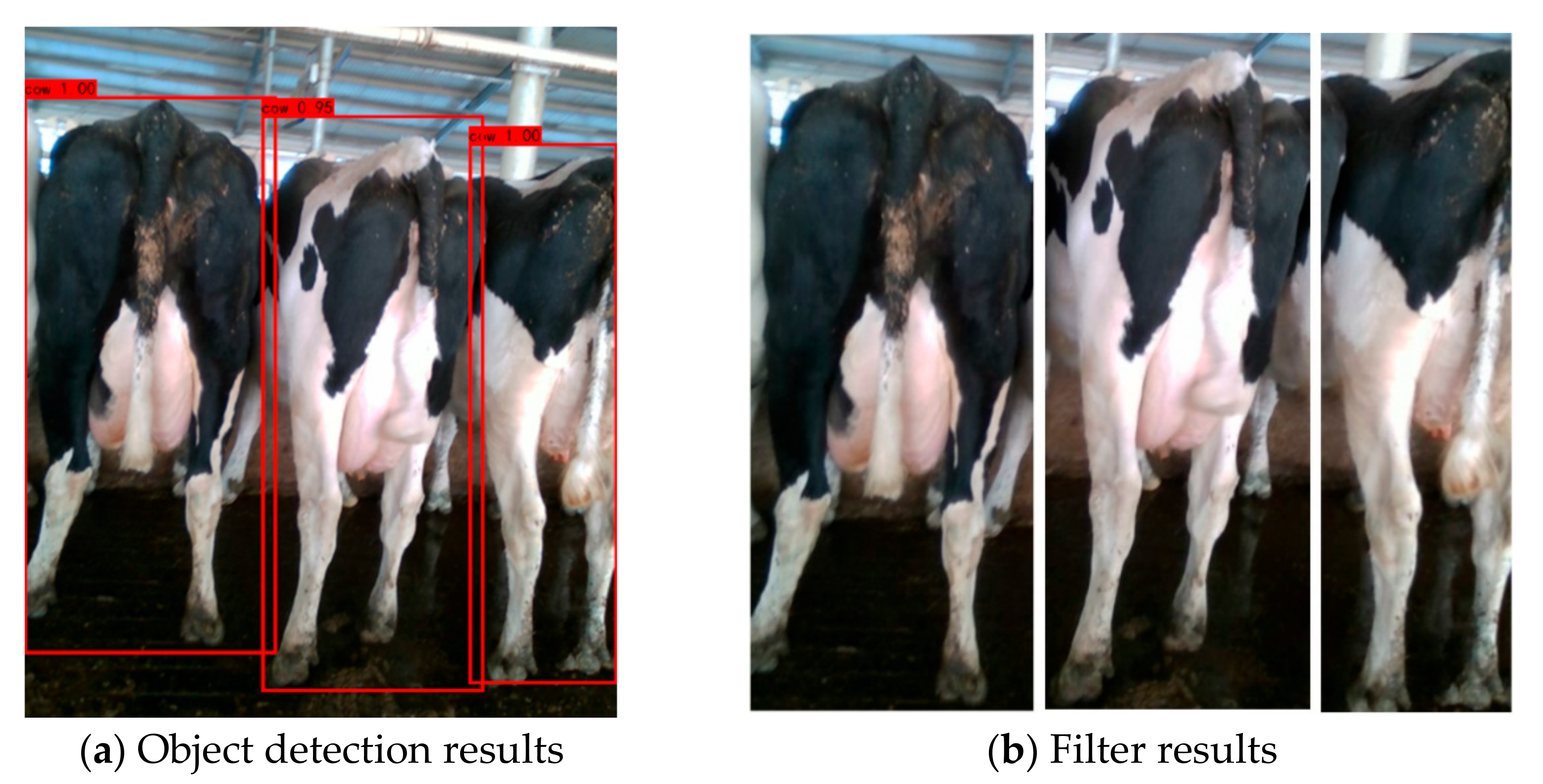

2.3.1. Object Detection

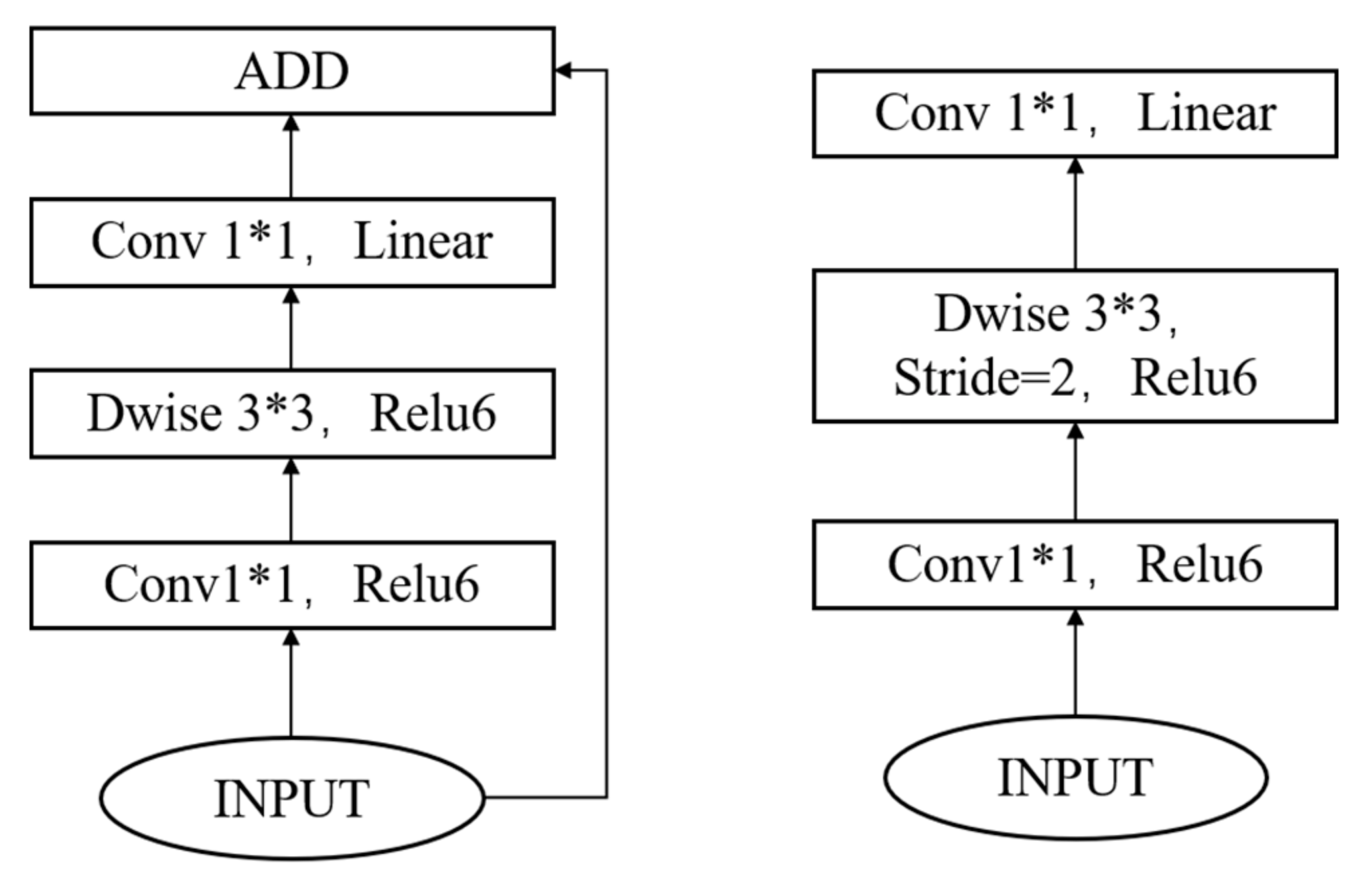

2.3.2. Cow Identification Model Based on Convolutional Neural Networks

3. Experimental Results and Analysis

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Adell, N.; Puig, P.; Rojas-Olivares, A.; Caja, G.; Carné, S.; Salama, A.A.K. A bivariate model for retinal image identification in lambs. Comput. Electron. Agric. 2012, 87, 108–112.

- Kumar, S.; Pandey, A.; Satwik, K.S.R.; Kumar, S.; Singh, S.K.; Singh, A.K.; Mohan, A. Deep learning framework for identification of cattle using muzzle point image pattern. Measurement 2018, 116, 1–17.

- Zin, T.T.; Phyo, C.N.; Tin, P.; Hama, H.; Kobayashi, I. Image Technology Based Cow Identification System using Deep Learning. In Proceedings of the International MultiConference of Engineers and Computer Scientists, Hong Kong, China, 14–16 March 2018; p. 1.

- Li, W.; Ji, Z.; Wang, L.; Sun, C.; Yang, X. Automatic individual identification of Holstein dairy cows using tailhead images. Comput. Electron. Agric. 2017, 142, 622–631.

- Drach, U.; Halachmi, I.; Pnini, T.; Izhaki, I.; Degani, A. Automatic herding reduces labour and increases milking frequency in robotic milking. Biosyst. Eng. 2017, 155, 134–141.

- Phyo, C.N.; Zin, T.T.; Hama, H.; Kobayashi, I. A Hybrid Rolling Skew Histogram-Neural Network Approach to Dairy Cow Identification System. In Proceedings of the 2018 International Conference on Image and Vision Computing New Zealand (IVCNZ), Auckland, New Zealand, 19–21 November 2018; pp. 1–5.

- Gaber, T.; Tharwat, A.; Hassanien, A.E.; Snasel, V. Biometric cattle identification approach based on Weber’s Local Descriptor and AdaBoost classifier. Comput. Electron. Agric. 2016, 122, 55–66.

- Wei, G.; Dongping, Q. Techniques of Radio Frequency Identification and Anti-collision in Digital Feeding Dairy Cattle. In Proceedings of the 2009 Second International Conference on Information and Computing Science, Manchester, UK, 21–22 May 2009; Volume 1, pp. 216–219.

- Awad, A.I. From classical methods to animal biometrics: A review on cattle identification and tracking. Comput. Electron. Agric. 2016, 123, 423–435.

- Ng, M.L.; Leong, K.S.; Hall, D.M.; Cole, P.H. A small passive UHF RFID tag for livestock identification. In Proceedings of the IEEE International Symposium on Microwave, Antenna, Propagation and EMC Technologies for Wireless Communications, Beijing, China, 8–12 August 2005; Volume 1, pp. 67–70.

- Tikhov, Y.; Kim, Y.; Min, Y.H. A novel small antenna for passive RFID transponder. In Proceedings of the 2005 European Microwave Conference, Paris, France, 4–6 October 2005; Volume 1, p. 4.

- Jin, G.; Lu, X.; Park, M.S. An indoor localization mechanism using active RFID tag. In Proceedings of the IEEE International Conference on Sensor Networks, Ubiquitous, and Trustworthy Computing (SUTC’06), Taichung, Taiwan, 5–7 June 2006; Volume 1, p. 4.

- Trevarthen, A.; Michael, K. The RFID-enabled dairy farm: Towards total farm management. In Proceedings of the 2008 7th International Conference on Mobile Business, Barcelona, Spain, 7–8 July 2008; pp. 241–250.

- Voulodimos, A.S.; Patrikakis, C.Z.; Sideridis, A.B.; Ntafis, V.A.; Xylouri, E.M. A complete farm management system based on animal identification using RFID technology. Comput. Electron. Agric. 2010, 70, 380–388.

- Gygax, L.; Neisen, G.; Bollhalder, H. Accuracy and validation of a radar-based automatic local position measurement system for tracking dairy cows in free-stall barns. Comput. Electron. Agric. 2007, 56, 23–33.

- Kuan, C.Y.; Tsai, Y.C.; Hsu, J.T.; Ding, S.T.; Te Lin, T. An Imaging System Based on Deep Learning for Monitoring the Feeding Behavior of Dairy Cows. In Proceedings of the 2019 ASABE Annual International Meeting, American Society of Agricultural and Biological Engineers, Boston, MA, USA, 7–10 July 2019; p. 1.

- Kuan, C.Y.; Tsai, Y.C.; Hsu, J.T.; Ding, S.T.; Te Lin, T. An Improved Single Shot Multibox Detector Method Applied in Body Condition Score for Dairy Cows. Animals 2019, 9, 470.

- Wu, D.; Wu, Q.; Yin, X.; Jiang, B.; Wang, H.; He, D.; Song, H. Lameness detection of dairy cows based on the YOLOv3 deep learning algorithm and a relative step size characteristic vector. Biosyst. Eng. 2020, 189, 150–163.

- Kumar, S.; Singh, S.K. Cattle identification: A New Frontier in Visual Animal Biometrics Research. Proc. Natl. Acad. Sci. India Sect. A Phys. Sci. 2019, 90, 689–708.

- Cai, C.; Li, J. Cattle face identification using local binary pattern descriptor. In Proceedings of the 2013 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference, Kaohsiung, Taiwan, 29 October–1 November 2013; pp. 1–4.

- Lu, Y.; He, X.; Wen, Y.; Wang, P.S. A new cow identification system based on iris analysis and recognition. Int. J. Biomet. 2014, 6, 18.

- Zhao, K.; Jin, X.; Ji, J.; Wang, J.; Ma, H.; Zhu, X. Individual identification of Holstein dairy cows based on detecting and matching feature points in body images. Biosyst. Eng. 2019, 181, 128–139.

- Lv, F.; Zhang, C.; Lv, C. Image identification of individual cow based on SIFT in Lαβ color space. Proc. MATEC Web Conf. EDP Sci. 2018, 176, 01023.

- Okura, F.; Ikuma, S.; Makihara, Y.; Muramatsu, D.; Nakada, K.; Yagi, Y. RGB-D video-based individual identification of dairy cows using gait and texture analyses. Comput. Electron. Agric. 2019, 165, 104944.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2015.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In CVPR; IEEE Computer Society: Los Alamitos, CA, USA, 2017; Volume 1, p. 3.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In CVPR; IEEE Computer Society: Los Alamitos, CA, USA, 7–12 June 2015.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.-C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

- Abadi, M.; Agarwal, A.; Barham, P.; Brevdo, E.; Chen, Z.; Citro, C.; Corrado, G.S.; Davis, A.; Dean, J.; Devin, M.; et al. TensorFlow: Large-Scale Machine Learning on Heterogeneous Distributed Systems. arXiv 2016, arXiv:1603.04467.

- Hu, H.; Dai, B.; Shen, W.; Wei, X. Cow identification based on fusion of deep parts features. Biosyst. Eng. 2020, 192, 245–256.

| Input | Operator | t | c | n | s |
|---|---|---|---|---|---|
| 224² × 3 | Conv2d | - | 32 | 1 | 2 |
| 112² × 32 | Bottleneck | 1 | 16 | 1 | 1 |
| 112² × 16 | Bottleneck | 6 | 24 | 2 | 2 |
| 56² × 24 | Bottleneck | 6 | 32 | 3 | 2 |
| 28² × 32 | Bottleneck | 6 | 64 | 4 | 2 |
| 14² × 64 | Bottleneck | 6 | 96 | 3 | 1 |
| 14² × 96 | Bottleneck | 6 | 160 | 3 | 2 |
| 7² × 160 | Bottleneck | 6 | 320 | 1 | 1 |
| 7² × 320 | Conv2d 1 × 1 | - | 1280 | 1 | 1 |
| 7² × 1280 | Avgpool 7 × 7 | - | - | 1 | - |
| 1 × 1 × 1280 | Conv2d 1 × 1 | - | 195 | - | - |
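The layer table above follows the MobileNetV2 layout of Sandler et al., in whose notation t is the expansion factor, c the number of output channels, n the number of times a block is repeated, and s the stride of the first block in each group. As a minimal sketch, assuming padding is chosen so that every stride-2 layer halves the spatial size exactly, the following Python traces the feature-map shape through the convolutional rows and reproduces the Input column. The names `LAYERS`, `trace_shapes`, and `count_bottlenecks` are illustrative and do not come from the paper.

```python
# Rows of the layer table up to the last 7 x 7 feature map: (operator, t, c, n, s).
# None stands for a "-" entry. All names here are illustrative assumptions.
LAYERS = [
    ("Conv2d", None, 32, 1, 2),
    ("Bottleneck", 1, 16, 1, 1),
    ("Bottleneck", 6, 24, 2, 2),
    ("Bottleneck", 6, 32, 3, 2),
    ("Bottleneck", 6, 64, 4, 2),
    ("Bottleneck", 6, 96, 3, 1),
    ("Bottleneck", 6, 160, 3, 2),
    ("Bottleneck", 6, 320, 1, 1),
    ("Conv2d 1x1", None, 1280, 1, 1),
]


def trace_shapes(size=224, channels=3, layers=LAYERS):
    """Return (spatial size, channels) at the input of each row, then the final output."""
    shapes = [(size, channels)]
    for _op, _t, c, _n, s in layers:
        # Only the first block in a group applies stride s; repeats use stride 1,
        # so each row divides the spatial size by s exactly once.
        size = size // s
        channels = c
        shapes.append((size, channels))
    return shapes


def count_bottlenecks(layers=LAYERS):
    """Total number of bottleneck blocks, summing the n column."""
    return sum(n for op, _t, _c, n, _s in layers if op == "Bottleneck")


if __name__ == "__main__":
    for size, ch in trace_shapes():
        print(f"{size} x {size} x {ch}")
    print("bottleneck blocks:", count_bottlenecks())
```

Run as a script, this prints one shape per row, starting at 224 x 224 x 3 and ending at 7 x 7 x 1280, which is the tensor the 7 × 7 average pooling reduces to 1 × 1 × 1280 before the final 195-way 1 × 1 convolution.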

| Base Network | Accuracy (%), Original Dataset | Accuracy (%), 20 Times Augmented Dataset | Model Size (M) | Inference Speed (images/s) |
|---|---|---|---|---|
| MobileNet v2 | 97.28 | 99.76 | 9.25 | 193 |
| AlexNet | 96.85 | 99.60 | 226.02 | 92 |
| GoogLeNet | 95.29 | 99.68 | 40.97 | 95 |
| VGG-16 | 97.70 | 99.80 | 519.10 | 88 |
| ResNet-50 | 95.22 | 98.89 | 18.01 | 109 |
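The trade-off in the comparison table can be made explicit with a little arithmetic. The sketch below uses only the numbers reported in the table (accuracy on the augmented dataset, model size, and images per second) and expresses each network relative to a chosen baseline. The names `RESULTS` and `relative_to` are hypothetical helpers introduced for illustration.

```python
# Values copied from the comparison table:
# (accuracy on the 20x augmented dataset in %, model size, images per second).
RESULTS = {
    "MobileNet v2": (99.76, 9.25, 193),
    "AlexNet": (99.60, 226.02, 92),
    "GoogLeNet": (99.68, 40.97, 95),
    "VGG-16": (99.80, 519.10, 88),
    "ResNet-50": (98.89, 18.01, 109),
}


def relative_to(baseline, results=RESULTS):
    """Compare every network against `baseline`.

    accuracy_gap: network accuracy minus baseline accuracy (percentage points)
    size_ratio:   network size divided by baseline size
    speed_ratio:  baseline throughput divided by network throughput
    """
    base_acc, base_size, base_speed = results[baseline]
    comparison = {}
    for name, (acc, size, speed) in results.items():
        comparison[name] = {
            "accuracy_gap": round(acc - base_acc, 2),
            "size_ratio": round(size / base_size, 2),
            "speed_ratio": round(base_speed / speed, 2),
        }
    return comparison


if __name__ == "__main__":
    for name, row in relative_to("MobileNet v2").items():
        print(name, row)
```

Taking MobileNet v2 as the baseline, VGG-16 is only 0.04 percentage points more accurate on the augmented dataset, while its model is roughly 56 times larger and MobileNet v2 processes roughly 2.2 times as many images per second.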

| Method | Accuracy (%) | Object Categories | Region of Interest (ROI) |
|---|---|---|---|
| Cai and Li (2013) [20] | 95.30 | 30 | Face |
| Lu et al. (2014) [21] | 98.33 | 6 | Iris |
| Zhao et al. (2019) [22] | 96.72 | 66 | Body |
| Lv et al. (2018) [23] | 98.33 | 60 | Cow’s side |
| CNN for cow rump | 99.76 | 195 | Rump |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hou, H.; Shi, W.; Guo, J.; Zhang, Z.; Shen, W.; Kou, S. Cow Rump Identification Based on Lightweight Convolutional Neural Networks. Information 2021, 12, 361. https://doi.org/10.3390/info12090361
